Keywords: humanoid robots, large AI models, reinforcement learning, multimodal AI, AI Agents, Figure 03 data bottleneck, GPT-5 Pro mathematical proof, EmbeddingGemma on-device RAG, GraphQA graph analysis conversations, NVIDIA Blackwell inference performance

🔥 Spotlight

Figure 03 graces the cover of TIME's Best Inventions list, CEO says "all we need now is data" : Figure CEO Brett Adcock said the biggest bottleneck for the humanoid robot Figure 03 is now data, not architecture or compute. He believes data can solve almost every remaining problem and drive the robot's large-scale deployment. Figure 03 appeared on the cover of TIME magazine's Best Inventions of 2025 list, prompting discussion about the relative importance of data, compute, and architecture in robot development. Adcock said Figure's goal is robots that perform human tasks in homes and commercial settings, stressed that safety is a top priority, and predicted that humanoid robots may one day outnumber humans. (Source: QbitAI)

Terence Tao takes on a cross-disciplinary challenge with GPT-5 Pro! A problem unsolved for 3 years, a complete proof in 11 minutes : Renowned mathematician Terence Tao worked with GPT-5 Pro on a differential geometry problem that had remained unsolved for three years and reached a solution in just 11 minutes. GPT-5 Pro not only carried out the complex calculations but also produced a complete proof, and even helped Tao correct his initial intuition. Tao concluded that AI performs very well on "small-scale" problems and also helps with "large-scale" understanding of a problem, but on "medium-scale" strategy it may reinforce incorrect intuitions. He stressed that AI should act as a mathematician's "co-pilot" that speeds up experimentation, rather than replacing the creativity and intuition of human work. (Source: QbitAI)

🎯 Trends

Yunpeng Technology releases new AI+Health products : Yunpeng Technology partnered with Shuaikang and Skyworth to release new AI+Health products, including a "Digitalized Future Kitchen Lab" and a smart refrigerator equipped with an AI health large model. The model is used to optimize kitchen design and operation, while the refrigerator provides personalized health management through "Health Assistant Xiaoyun." The launch is presented as a breakthrough for AI in everyday health management, delivering personalized health services through smart devices, and is expected to advance home health technology and improve residents' quality of life. (Source: 36Kr)

Progress in Humanoid Robots and Embodied AI: From Household Chores to Industrial Applications : Multiple social media posts showcase the latest advances in humanoid robots and embodied AI. Reachy Mini was named one of TIME magazine's Best Inventions of 2025, demonstrating the potential of open-source collaboration in robotics. An AI-powered bionic prosthesis lets a 17-year-old control it by thought, and humanoid robots are shown handling household chores with ease. On the industrial side, Yondu AI released a wheeled humanoid robot solution for warehouse picking, AgiBot launched the Lingxi X2 with near-human mobility, and China unveiled a high-speed spherical police robot. Boston Dynamics' robots now double as versatile cinematographers, and the LocoTouch quadruped robot uses tactile sensing to carry objects. (Source: Ronald_vanLoon, Ronald_vanLoon, ClementDelangue, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, johnohallman, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

Large Model Capability Breakthroughs and New Developments in Code Benchmarking : GPT-5 Pro and Gemini 2.5 Pro achieved gold-medal performance at the International Olympiad on Astronomy and Astrophysics (IOAA), demonstrating strong capabilities in frontier physics. GPT-5 Pro also showed exceptional ability to search and verify scientific literature, solving Erdős problem #339 and identifying significant flaws in published papers. In coding, KAT-Dev-72B-Exp became the top open-source model on the SWE-Bench Verified leaderboard with a 74.6% resolve rate. The SWE-Rebench project avoids data contamination by evaluating models only on GitHub issues opened after each model's release. Sam Altman is optimistic about the future of Codex. On whether AGI can be reached purely through LLMs, the prevailing view in the AI research community is that an LLM core alone is not enough. (Source: gdb, karminski3, gdb, SebastienBubeck, karminski3, teortaxesTex, QuixiAI, sama, OfirPress, Reddit r/LocalLLaMA, Reddit r/ArtificialInteligence)
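The contamination guard described for SWE-Rebench comes down to a date filter. The sketch below assumes a hypothetical `Task` record carrying the issue's creation date and uses the model's release date as the cutoff; the repository names, issue numbers, and dates are made up for illustration, and this is not SWE-Rebench's actual pipeline.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Task:
    """A benchmark task derived from a GitHub issue (hypothetical schema)."""
    repo: str
    issue_number: int
    created: date


def uncontaminated(tasks, model_release):
    """Keep only tasks whose issue was opened after the model's release date,
    so neither the issue nor its fix can have appeared in the training data."""
    return [t for t in tasks if t.created > model_release]


tasks = [
    Task("org/lib", 101, date(2025, 3, 1)),
    Task("org/lib", 205, date(2025, 9, 15)),
    Task("org/app", 42, date(2025, 10, 2)),
]
fresh = uncontaminated(tasks, model_release=date(2025, 8, 7))
print([t.issue_number for t in fresh])  # [205, 42]
```

Comparing against the release date is a conservative stand-in for the training-data cutoff, which is usually earlier and not always published.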

Performance Innovation and Challenges in AI Hardware and Infrastructure : NVIDIA's Blackwell platform led in inference performance and efficiency in SemiAnalysis's InferenceMAX benchmarks, and Together AI now offers NVIDIA GB200 NVL72 and HGX B200 systems. Groq is reshaping the economics of open-source LLM infrastructure with lower latency and competitive pricing, built on its ASIC and vertical-integration strategy. Community discussions addressed how removing Python's GIL (the free-threaded build introduced experimentally in Python 3.13) could affect AI/ML engineering, mainly by enabling true multi-threaded parallelism for CPU-bound Python code. LLM enthusiasts also shared their hardware setups and compared large quantized models with smaller unquantized ones across quantization levels, noting that 2-bit quantization may be acceptable for dialogue, but coding tasks need at least Q5. (Source: togethercompute, arankomatsuzaki, code_star, MostafaRohani, jeremyphoward, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)
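To see what GIL removal changes in practice, one can time CPU-bound pure-Python work on a thread pool. This is a minimal sketch: `busy_sum` and `run_threads` are illustrative names, and the check relies on `sys._is_gil_enabled()`, which only exists on Python 3.13 and later. On a standard build the four threads take turns holding the GIL, so the elapsed time should be close to running the tasks one after another; on a free-threaded build they can run on separate cores.

```python
import sys
import time
from concurrent.futures import ThreadPoolExecutor


def busy_sum(n: int) -> int:
    """CPU-bound work: pure-Python arithmetic with no I/O to release the GIL."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def run_threads(workers: int, n: int):
    """Run `workers` copies of busy_sum on a thread pool and time the batch."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(busy_sum, [n] * workers))
    return results, time.perf_counter() - start


# sys._is_gil_enabled() exists on Python 3.13+; older versions always have the GIL.
gil_check = getattr(sys, "_is_gil_enabled", None)
gil_on = gil_check() if gil_check else True
results, elapsed = run_threads(workers=4, n=200_000)
print(f"GIL enabled: {gil_on}, 4 threads took {elapsed:.2f}s")
```

Comparing the timing against `workers=1` on both builds makes the difference visible.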

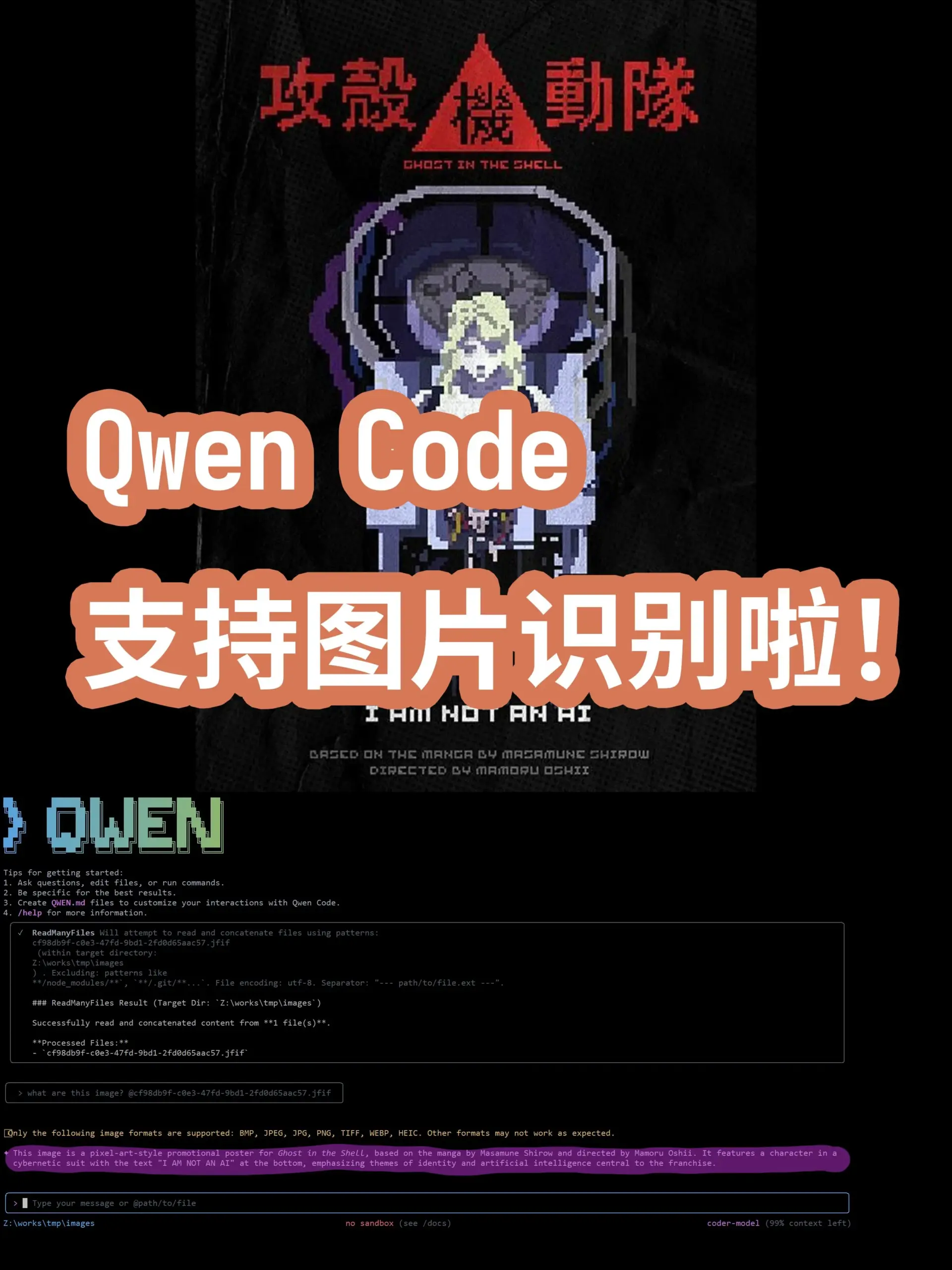

Frontier Dynamics of AI Models and Applications: From General Models to Vertical Domains : New AI models and features continue to emerge. The Turkish large language model Kumru-2B is gaining traction on Hugging Face, and Replit shipped several updates this week. Sora 2 has removed watermarks, pointing to broader uses of video generation. Rumors suggest Gemini 3.0 will be released on October 22. AI continues to expand in healthcare: digital pathology uses AI to assist cancer diagnosis, and label-free microscopy combined with AI promises new diagnostic tools. Autoregressive (AR) models reached SOTA on the ImageNet FID leaderboard. The Qwen Code command-line coding Agent added support for image recognition via Qwen-VL models. Stanford University proposed Agentic Context Engineering (ACE), a method that lets models get smarter without fine-tuning. The DeepSeek V3 series continues to iterate, and the deployment types of AI Agents and AI's reshaping of professional services remain industry focal points. (Source: mervenoyann, amasad, scaling01, npew, kaifulee, Ronald_vanLoon, scaling01, TheTuringPost, TomLikesRobots, iScienceLuvr, NerdyRodent, shxf0072, gabriberton, Ronald_vanLoon, karminski3, Ronald_vanLoon, teortaxesTex, demishassabis, Dorialexander, yoheinakajima, 36Kr)

🧰 Tools

GraphQA: Transforming Graph Analysis into Natural Language Conversations : LangChainAI launched the GraphQA framework, which combines NetworkX and LangChain to turn complex graph analysis into natural-language conversations. Users ask questions in plain English, and GraphQA automatically selects and runs the appropriate algorithms, handling graphs with more than 100,000 nodes. This lowers the barrier to graph data analysis and makes it accessible to non-expert users. (Source: LangChainAI)
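GraphQA's core idea, mapping a plain-English question to a graph algorithm and running it, can be illustrated without an LLM. In this hypothetical sketch a keyword match stands in for the model's tool selection, and hand-written BFS and degree counting stand in for the NetworkX algorithms GraphQA would call; none of these names come from GraphQA's API, and the graph is toy data.

```python
from collections import deque

# Toy undirected graph as an adjacency list (hypothetical data).
GRAPH = {
    "alice": ["bob", "carol"],
    "bob": ["alice", "dave"],
    "carol": ["alice", "dave"],
    "dave": ["bob", "carol", "erin"],
    "erin": ["dave"],
}


def shortest_path(graph, src, dst):
    """Breadth-first search; returns the node list of one shortest path."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in graph[node]:
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


def most_connected(graph):
    """Degree centrality reduced to 'the node with the most neighbours'."""
    return max(graph, key=lambda n: len(graph[n]))


def answer(question: str, graph=GRAPH):
    """Route a plain-English question to an algorithm.
    A keyword match stands in for the LLM's tool-selection step."""
    q = question.lower()
    if "path" in q:
        names = [w.strip("?.,") for w in q.split() if w.strip("?.,") in graph]
        return shortest_path(graph, names[0], names[1])
    if "most connected" in q or "central" in q:
        return most_connected(graph)
    raise ValueError("no matching algorithm")


print(answer("What is the shortest path from alice to erin?"))
print(answer("Who is the most connected person?"))
```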

Top Agentic AI Tool for VS Code : Visual Studio Magazine rated a certain tool as one of the top Agentic AI tools for VS Code, signaling a paradigm shift in development from “assistants” to “true Agents” that can think, act, and build alongside developers. This reflects the evolution of AI tools in software development from auxiliary functions to deeper intelligent collaboration, enhancing developer efficiency and experience. (Source: cline)

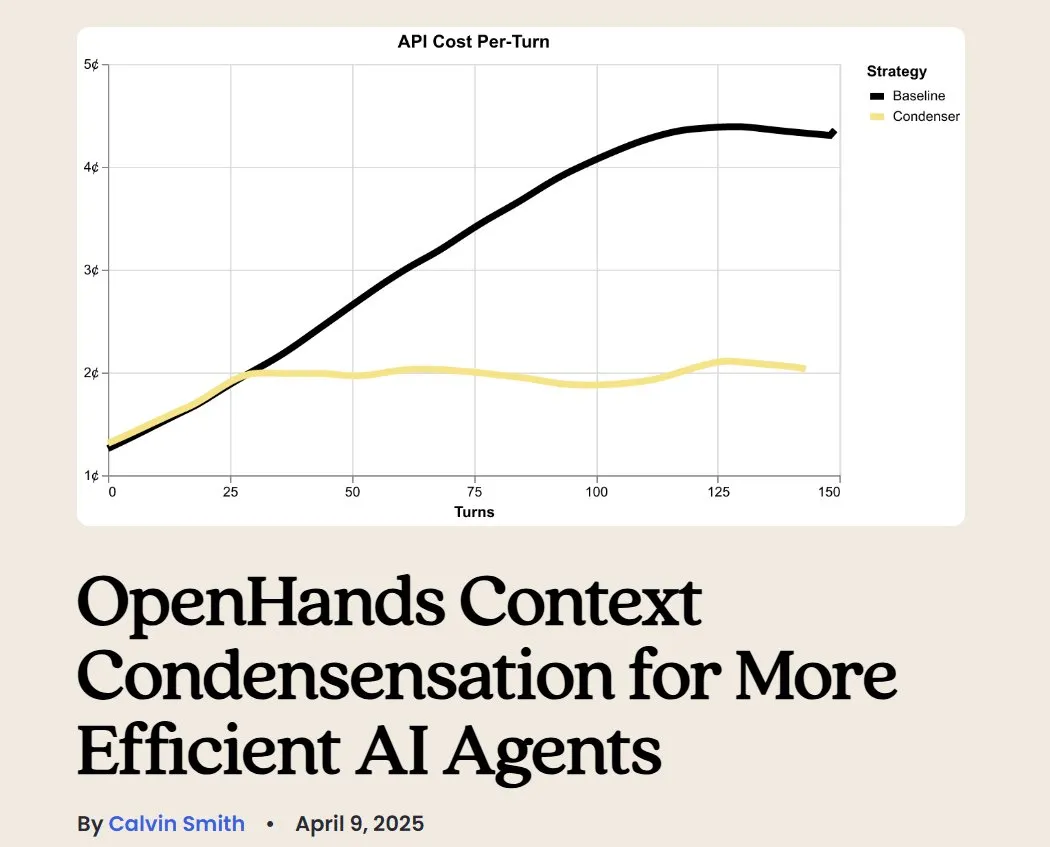

OpenHands: Context Management in an Open-Source Agent Framework : The open-source OpenHands project provides several context condensers for managing LLM context in Agentic applications, including basic history pruning, extraction of the "most important events," and compression of browser output. Condensing the history keeps long-running Agent sessions within the model's context window and reduces token costs, helping LLMs stay efficient and consistent in complex, multi-step tasks. (Source: gneubig)
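Basic history pruning, the simplest of these condensers, can be sketched in a few lines. The function below is hypothetical and is not OpenHands' implementation: it keeps the first event (usually the task statement) and the most recent events, and replaces everything in between with a single marker so the Agent knows history was dropped.

```python
def prune_history(events, keep_first=1, keep_last=4):
    """Keep the first `keep_first` events and the last `keep_last` events,
    replacing the middle of the history with one marker event."""
    if len(events) <= keep_first + keep_last:
        return list(events)  # short enough: nothing to condense
    dropped = len(events) - keep_first - keep_last
    marker = {"role": "system", "content": f"[{dropped} earlier events condensed]"}
    return events[:keep_first] + [marker] + events[-keep_last:]


history = [{"role": "user", "content": "Fix the failing test"}]
history += [{"role": "tool", "content": f"step {i}"} for i in range(10)]
condensed = prune_history(history)
for event in condensed:
    print(event["role"], "|", event["content"])
```

Keeping the opening task statement matters because it is the early event the Agent almost always still needs; a summarizing condenser would replace the marker with an LLM-written summary of the dropped span.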

BLAST: AI Web Browser Engine : LangChainAI released BLAST, a high-performance AI web browser engine designed to provide web browsing capabilities for AI applications. BLAST offers an OpenAI-compatible interface, supporting automatic parallelization, intelligent caching, and real-time streaming, efficiently integrating web information into AI workflows, greatly expanding the ability of AI Agents to acquire and process real-time web data. (Source: LangChainAI)
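Two of the features listed for BLAST, parallel fetching and caching, can be illustrated with the standard library. This sketch is not BLAST's API: `fetch_page` is a hypothetical stub that returns fake HTML instead of driving a browser, and `functools.lru_cache` stands in for an engine's cache.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache


@lru_cache(maxsize=256)
def fetch_page(url: str) -> str:
    """Hypothetical stub: a real engine would load and render the page here."""
    return f"<html>content of {url}</html>"


def fetch_all(urls, workers=4):
    """Fetch pages concurrently; pool.map preserves input order, and URLs seen
    before are answered from the cache without calling the fetcher again."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch_page, urls))


urls = ["https://example.com/a", "https://example.com/b", "https://example.com/c"]
first = fetch_all(urls)   # three cache misses
second = fetch_all(urls)  # three cache hits
print(fetch_page.cache_info())
```

Two concurrent requests for the same uncached URL may both run the fetch, because `lru_cache` does not hold a lock while the wrapped function runs; a production engine would deduplicate in-flight requests.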

Opik: Open-Source LLM Evaluation Tool : Opik is an open-source LLM evaluation tool used for debugging, evaluating, and monitoring LLM applications, RAG systems, and Agentic workflows. It provides comprehensive tracing, automated evaluation, and production-ready dashboards, helping developers better understand model behavior, optimize performance, and ensure application reliability in real-world scenarios. (Source: dl_weekly)

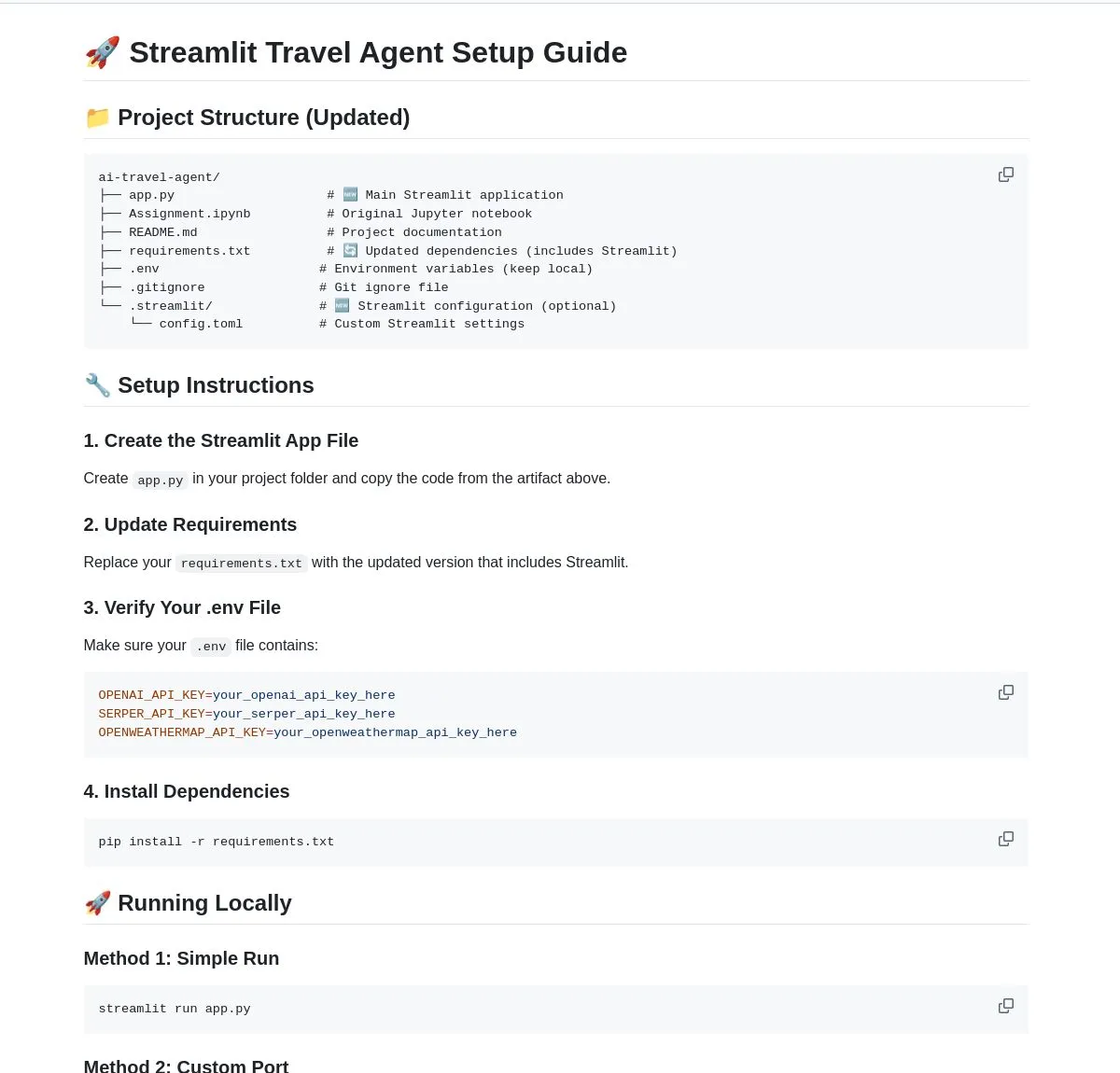

AI Travel Agent: Intelligent Planning Assistant : LangChainAI showcased an intelligent AI Travel Agent that integrates real-time weather, search, and travel information, leveraging multiple APIs to simplify the entire process from weather updates to currency exchange. This Agent aims to provide one-stop travel planning and assistance, enhancing user travel experience, and is a typical example of LLMs empowering Agents in vertical application scenarios. (Source: LangChainAI)

Envisioning an AI Advertiser Prompt Building Tool : A perspective suggests an urgent market need for an AI tool to help marketers build “advertiser prompts.” This tool should assist in establishing an evaluation system (covering brand safety, prompt adherence, etc.) and testing mainstream models. With OpenAI launching various native ad units, the importance of marketing prompts is increasingly highlighted, and such tools will become a key component in the advertising creative and distribution process. (Source: dbreunig)

Qwen Code Update: Supports Qwen-VL Model for Image Recognition : The Qwen Code command-line coding Agent recently updated, adding support for switching to the Qwen-VL model for image recognition. User tests show good results, and it is currently available for free. This update greatly expands Qwen Code’s capabilities, allowing it to not only handle coding tasks but also perform multimodal interactions, improving the efficiency and accuracy of coding Agents when dealing with tasks involving visual information. (Source: karminski3)

Hosting a Personal Chatbot Server with LibreChat : A blog post explains how to host a personal chatbot server with LibreChat and connect it to multiple Model Context Protocol (MCP) servers. This lets users flexibly manage and switch between different LLM backends for a customized chatbot experience, illustrating the flexibility and control that open-source solutions offer in AI application deployment. (Source: Reddit r/artificial)

AI Generator: Bringing Avatars to Life : A user sought the best AI generator to “bring their brand image (including real-person videos and avatars) to life” for a YouTube channel, aiming to reduce filming and recording time and focus on editing. The user hoped AI could enable avatars to converse, play games, dance, etc. This reflects content creators’ high demand for AI tools in avatar animation and video generation to improve production efficiency and content diversity. (Source: Reddit r/artificial)

Local LLMs Combat Email Spam: A Private Solution : A blog post shared practical experience on how to privately identify and combat spam on one’s own mail server using local LLMs. This solution combines Mailcow, Rspamd, Ollama, and a custom Python agent, providing an AI-based spam filtering method for users of self-hosted mail servers, highlighting the potential of local LLMs in privacy protection and customized applications. (Source: Reddit r/LocalLLaMA)
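The LLM step of such a setup can be as small as one HTTP call to a local Ollama server. This is a minimal sketch under stated assumptions: it uses Ollama's default endpoint `http://localhost:11434/api/generate` with streaming disabled, the model name `llama3.2` is a placeholder for whatever model is pulled locally, and the helper names are hypothetical. Wiring the verdict into Rspamd or Mailcow is not shown.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_prompt(subject: str, body: str) -> str:
    """Ask for a one-word verdict so the reply is easy to parse; the body is
    truncated to keep the prompt small."""
    return (
        "Classify the following email as SPAM or HAM. "
        "Answer with exactly one word.\n\n"
        f"Subject: {subject}\n\n{body[:2000]}"
    )


def parse_verdict(reply: str) -> bool:
    """Return True for spam; anything that is not clearly SPAM counts as ham."""
    return reply.strip().upper().startswith("SPAM")


def classify(subject: str, body: str, model: str = "llama3.2") -> bool:
    """Send one non-streaming request to a local Ollama server."""
    payload = json.dumps({
        "model": model,
        "prompt": build_prompt(subject, body),
        "stream": False,
    }).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return parse_verdict(json.loads(resp.read())["response"])
```

Treating any unclear reply as ham is a deliberate fail-open choice, so a confused model does not bury legitimate mail.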

📚 Learning

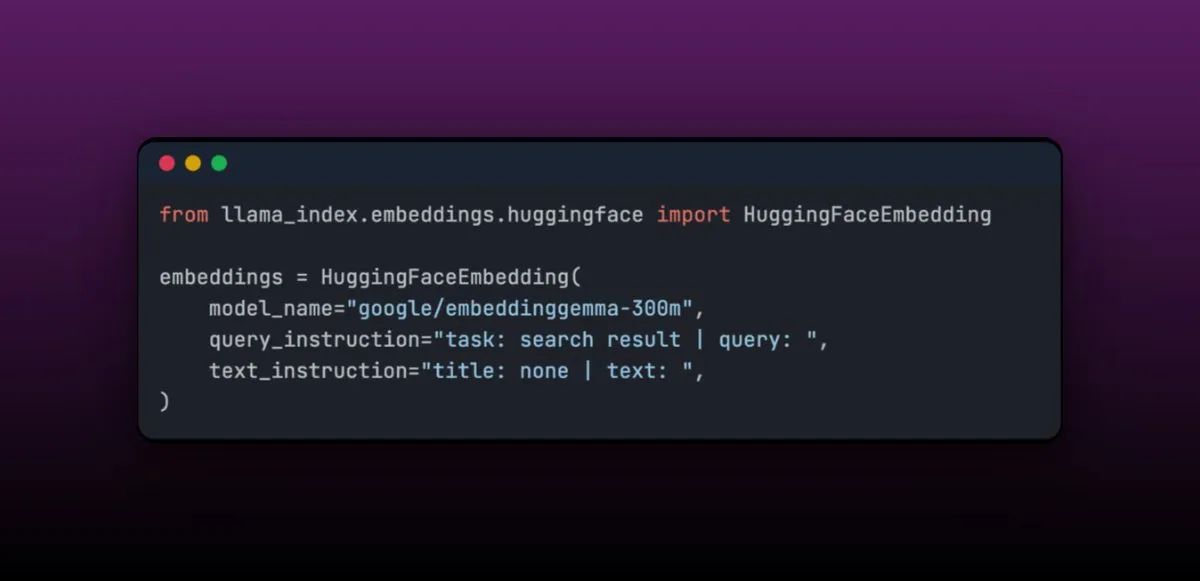

EmbeddingGemma: A Multilingual Embedding Model for On-Device RAG Applications : EmbeddingGemma is a compact multilingual embedding model with only 308M parameters, well suited to on-device RAG applications and easy to integrate with LlamaIndex. It ranks highly on the Massive Text Embedding Benchmark (MTEB) while remaining small enough for mobile devices. Because it is easy to fine-tune, it can outperform larger models once fine-tuned on a specific domain (e.g., medical data). (Source: jerryjliu0)
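The retrieval step of an on-device RAG pipeline ranks stored document embeddings by cosine similarity to the query embedding. In this sketch the three-dimensional vectors are hypothetical stand-ins for the embeddings EmbeddingGemma would produce; the ranking logic is unchanged with real vectors.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vec, doc_vecs, k=2):
    """Return the indices of the k documents most similar to the query."""
    ranked = sorted(range(len(doc_vecs)),
                    key=lambda i: cosine(query_vec, doc_vecs[i]),
                    reverse=True)
    return ranked[:k]


# Toy vectors standing in for real embeddings (hypothetical values).
docs = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1], [0.8, 0.2, 0.1]]
query = [1.0, 0.0, 0.0]
print(retrieve(query, docs))  # [0, 2]
```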

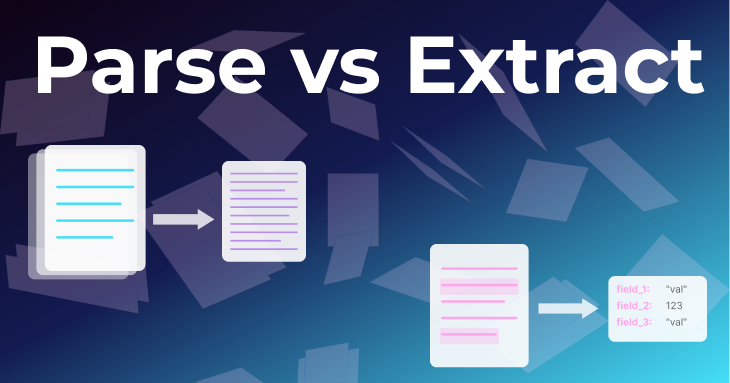

Two Fundamental Approaches to Document Processing: Parsing and Extraction : An article by the LlamaIndex team examines two fundamental methods in document processing: "parsing" and "extraction." Parsing converts an entire document into structured Markdown or JSON while retaining all of its information, which suits RAG, deep research, and summarization. Extraction uses an LLM to produce structured output that maps documents onto a common schema, which suits database ETL, automated Agent workflows, and metadata extraction. Understanding the distinction is crucial for building efficient document Agents. (Source: jerryjliu0)
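The extraction side can be made concrete with a target schema and a validation step for the LLM's JSON reply. The `Invoice` schema, its fields, and the sample reply are hypothetical; a parsing pipeline would instead keep the whole document (for example as Markdown) rather than reduce it to one record.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Invoice:
    """Target schema for extraction (hypothetical fields)."""
    vendor: str
    invoice_number: str
    total: float


def extract(llm_json: str) -> Invoice:
    """Validate an LLM's JSON reply against the schema: missing keys raise,
    extra keys are ignored, and `total` is coerced to float."""
    data = json.loads(llm_json)
    missing = [f.name for f in fields(Invoice) if f.name not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return Invoice(vendor=str(data["vendor"]),
                   invoice_number=str(data["invoice_number"]),
                   total=float(data["total"]))


reply = ('{"vendor": "Acme Corp", "invoice_number": "INV-0042", '
         '"total": "1250.50", "notes": "net 30"}')
print(extract(reply))
```

Every document that passes validation yields a record with the same columns, which is what makes extraction a natural fit for loading into a database.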

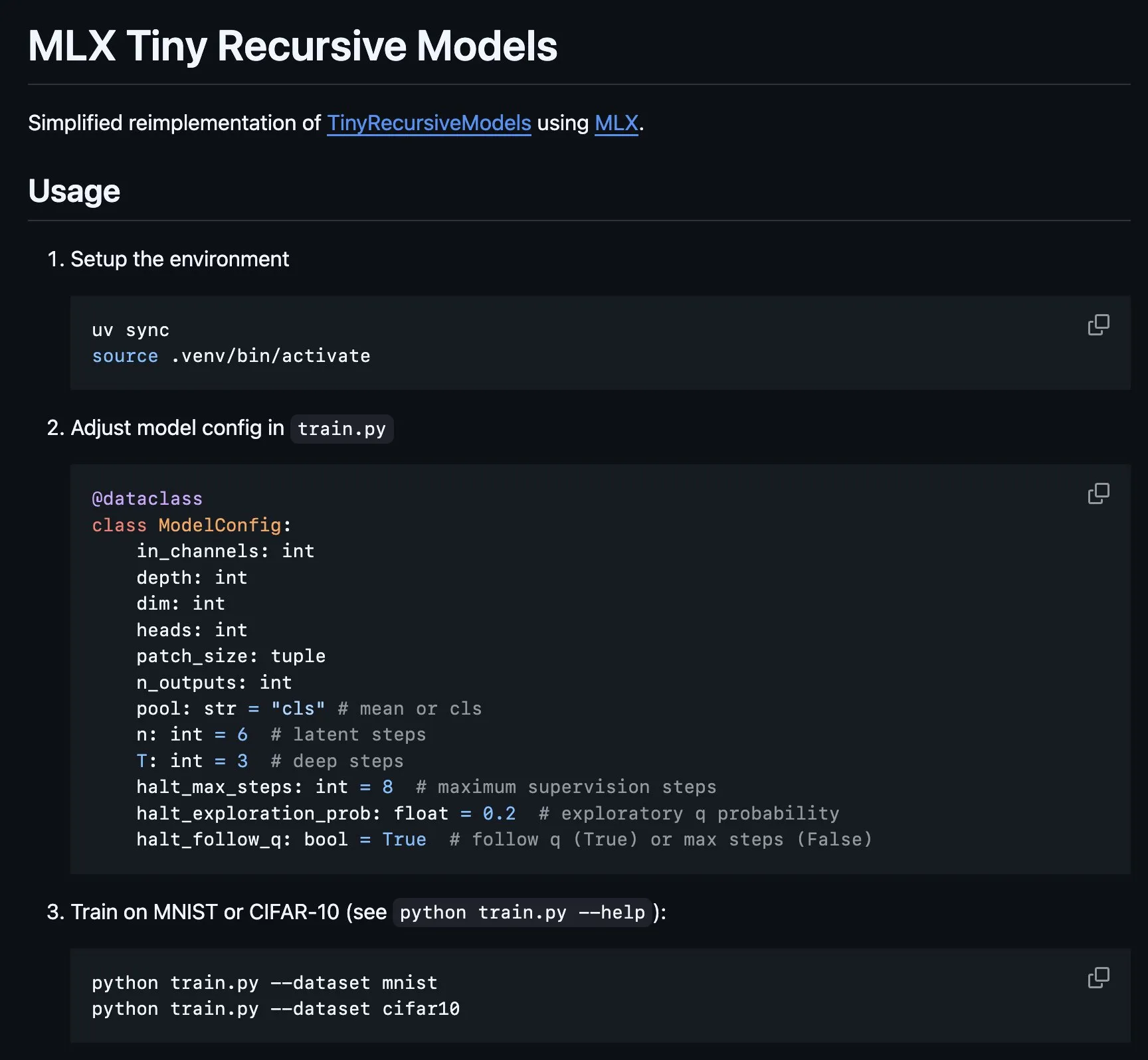

Implementation of Tiny Recursive Model (TRM) on MLX : The core of the Tiny Recursive Model (TRM), proposed by Alexia Jolicoeur-Martineau, has been implemented in MLX. TRM aims to achieve high performance with a tiny 7M-parameter network by reasoning recursively. The MLX implementation enables local experimentation on Apple Silicon laptops with reduced complexity and covers deep supervision, recursive reasoning steps, and EMA, making it easier to develop and study small, efficient models. (Source: awnihannun, ImazAngel)
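EMA keeps a smoothed copy of the weights that is used for evaluation while training continues to update the raw weights. This sketch uses plain Python lists instead of MLX arrays, and the decay values are illustrative; with `decay=0.5` the average moves toward the current weights quickly, which keeps the arithmetic easy to follow.

```python
def ema_update(ema_params, params, decay=0.999):
    """Exponential moving average of weights: ema <- decay*ema + (1-decay)*param."""
    return [decay * e + (1.0 - decay) * p for e, p in zip(ema_params, params)]


ema = [0.0, 0.0]
for step in range(3):
    params = [1.0, 2.0]  # pretend the optimizer produced these weights
    ema = ema_update(ema, params, decay=0.5)
print(ema)  # [0.875, 1.75]
```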

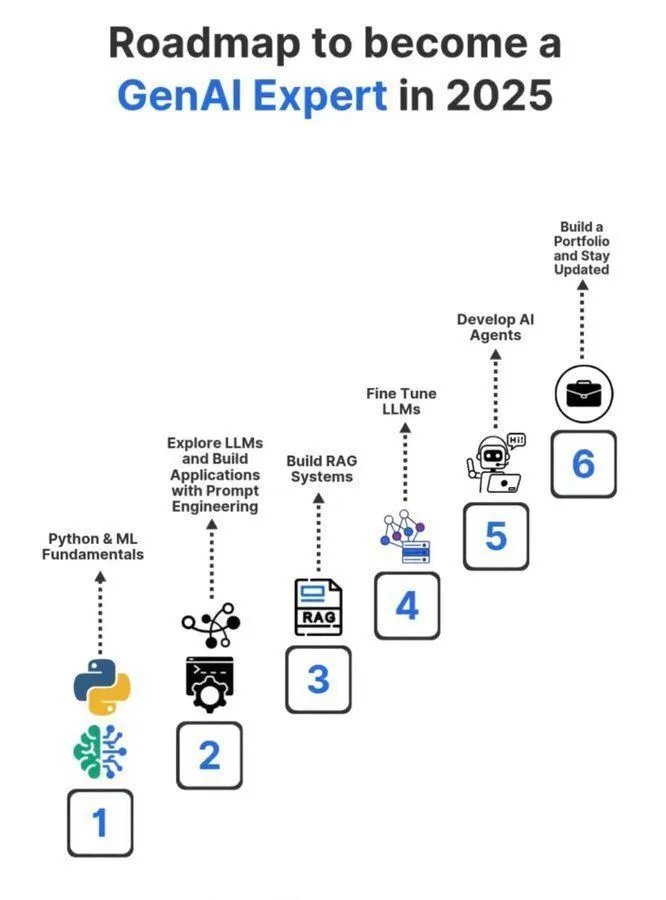

2025 Generative AI Expert Learning Roadmap : A detailed 2025 Generative AI Expert Learning Roadmap was shared on social media, covering the key knowledge and skills required to become a professional in the generative AI field. This roadmap aims to guide aspiring individuals to systematically learn core concepts such as artificial intelligence, machine learning, and deep learning, to adapt to the rapidly evolving GenAI technology trends. (Source: Ronald_vanLoon)

Sharing Machine Learning PhD Study Experience : A user re-shared a series of tweets about pursuing a PhD in machine learning, aiming to provide guidance and experience for those interested in ML PhD studies. These tweets likely cover application processes, research directions, career development, and personal experiences, serving as valuable AI learning resources within the community. (Source: arohan)

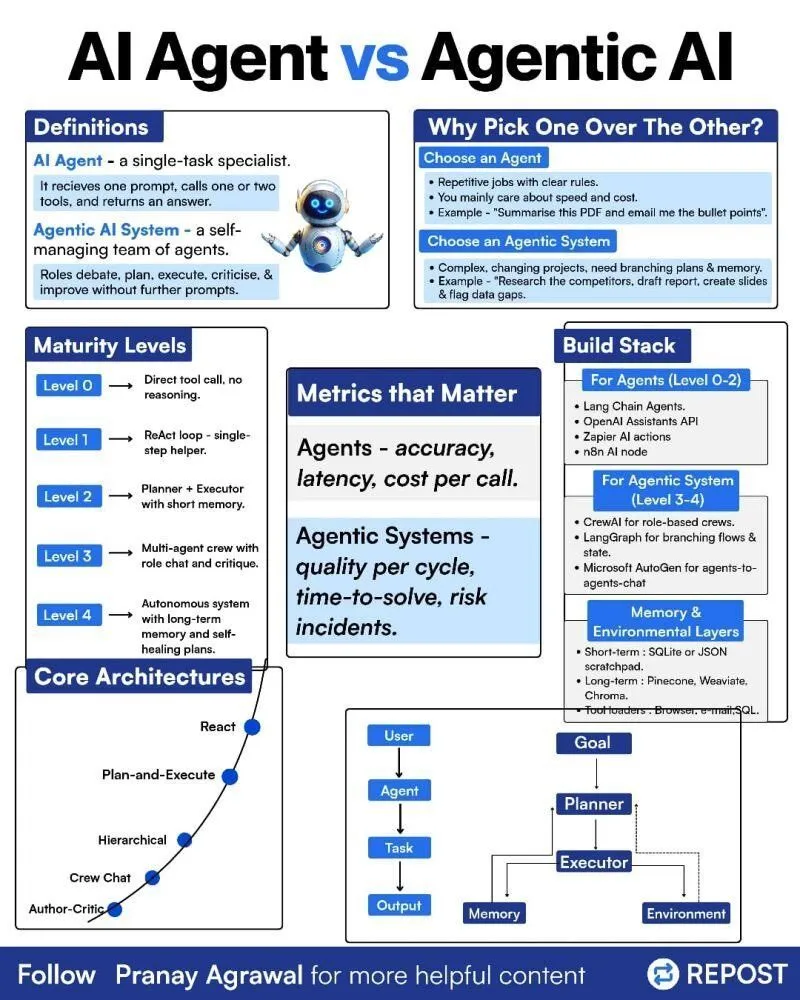

The Difference Between AI Agents and Agentic AI : An infographic explaining the difference between “AI Agents” and “Agentic AI” was shared on social media, aiming to clarify these two related but distinct concepts. This helps the community better understand the deployment types of AI Agents, their level of autonomy, and the role of Agentic AI in broader artificial intelligence systems, fostering more precise discussions on Agent technology. (Source: Ronald_vanLoon)

Reinforcement Learning and Weight Decay in LLM Training : Social media discussions explored why weight decay may be a poor choice in reinforcement learning (RL) training of LLMs. One argument is that weight decay can make the network forget much of its pre-training knowledge: in GRPO updates where the advantage is zero, the policy gradient vanishes, so weight decay is the only force acting and it steadily pulls the weights toward zero. Researchers therefore need to weigh the effect of weight decay carefully when designing RL training strategies for LLMs to avoid degrading model performance. (Source: lateinteraction)
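The argument can be checked with a one-parameter calculation. With decoupled (AdamW-style) weight decay and a zero gradient, each step multiplies a weight by (1 - lr·wd), so after k steps w_k = w_0 · (1 - lr·wd)^k ≈ w_0 · exp(-lr·wd·k). The sketch assumes Adam's moment buffers are empty, so only the decay term acts; the learning rate, decay coefficient, and step count are hypothetical.

```python
import math


def decay_only(w0: float, lr: float, wd: float, steps: int) -> float:
    """Apply decoupled weight decay with a zero gradient:
    each step is w <- w - lr*wd*w, i.e. w <- w*(1 - lr*wd)."""
    w = w0
    for _ in range(steps):
        w -= lr * wd * w
    return w


w = decay_only(1.0, lr=1e-5, wd=0.1, steps=10_000)
print(round(w, 4), round(math.exp(-1e-5 * 0.1 * 10_000), 4))  # 0.99 0.99
```

With these values a weight loses about 1% of its magnitude over 10,000 zero-gradient steps; larger learning rates or decay coefficients, or long runs in which many groups have zero advantage, compound the shrinkage.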

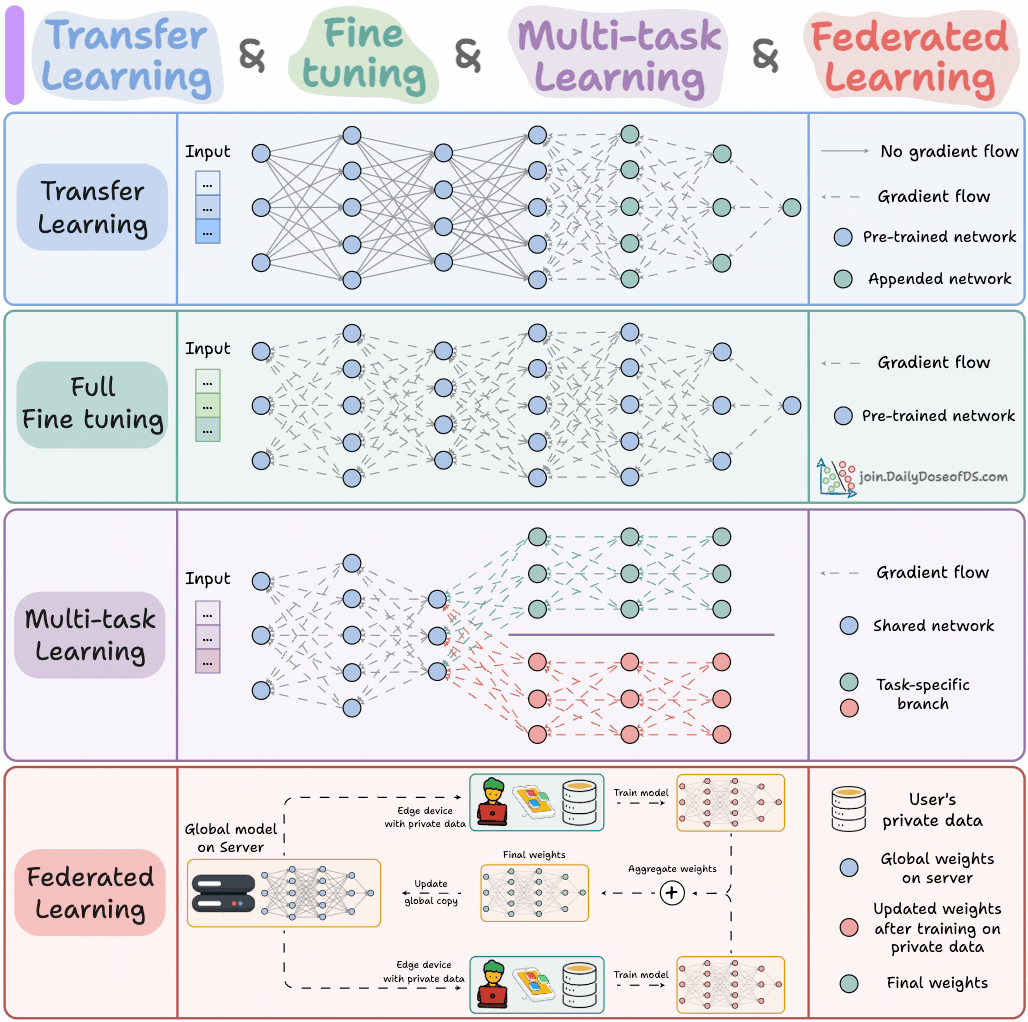

AI Model Training Paradigms : An expert shared four model training paradigms that ML engineers must understand, aiming to provide key theoretical guidance and practical frameworks for machine learning engineers. These paradigms likely cover supervised learning, unsupervised learning, reinforcement learning, and self-supervised learning, helping engineers better understand and apply different model training methods. (Source: _avichawla)

Curriculum Learning-Based Reinforcement Learning to Enhance LLM Capabilities : A study found that reinforcement learning (RL) combined with curriculum learning can teach LLMs new capabilities that are hard to obtain with other methods. This points to the potential of curriculum learning for improving LLMs' long-horizon reasoning and suggests that combining RL with curriculum learning may be a key path to unlocking new AI skills. (Source: sytelus)
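One common way to implement a curriculum is to sample from the easiest difficulty bucket until the model succeeds often enough, then unlock the next bucket. The generator below is a hypothetical sketch of that general idea, not the study's method; the buckets, window size, and threshold are illustrative.

```python
import random


def curriculum_sampler(buckets, threshold=0.8, window=20, seed=0):
    """Yield (level, task) from the current difficulty bucket. The caller sends
    back whether the task was solved; once the success rate over the last
    `window` attempts reaches `threshold`, the next bucket unlocks."""
    rng = random.Random(seed)
    level, recent = 0, []
    while True:
        solved = yield level, rng.choice(buckets[level])
        recent = (recent + [bool(solved)])[-window:]
        if (len(recent) == window and sum(recent) / window >= threshold
                and level + 1 < len(buckets)):
            level, recent = level + 1, []  # move up and reset the statistics


buckets = [["2+2"], ["12*13"], ["integrate x^2"]]
gen = curriculum_sampler(buckets, threshold=0.75, window=4)
level, task = next(gen)
for _ in range(4):
    level, task = gen.send(True)  # pretend every attempt succeeds
print(level, task)  # 1 12*13
```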

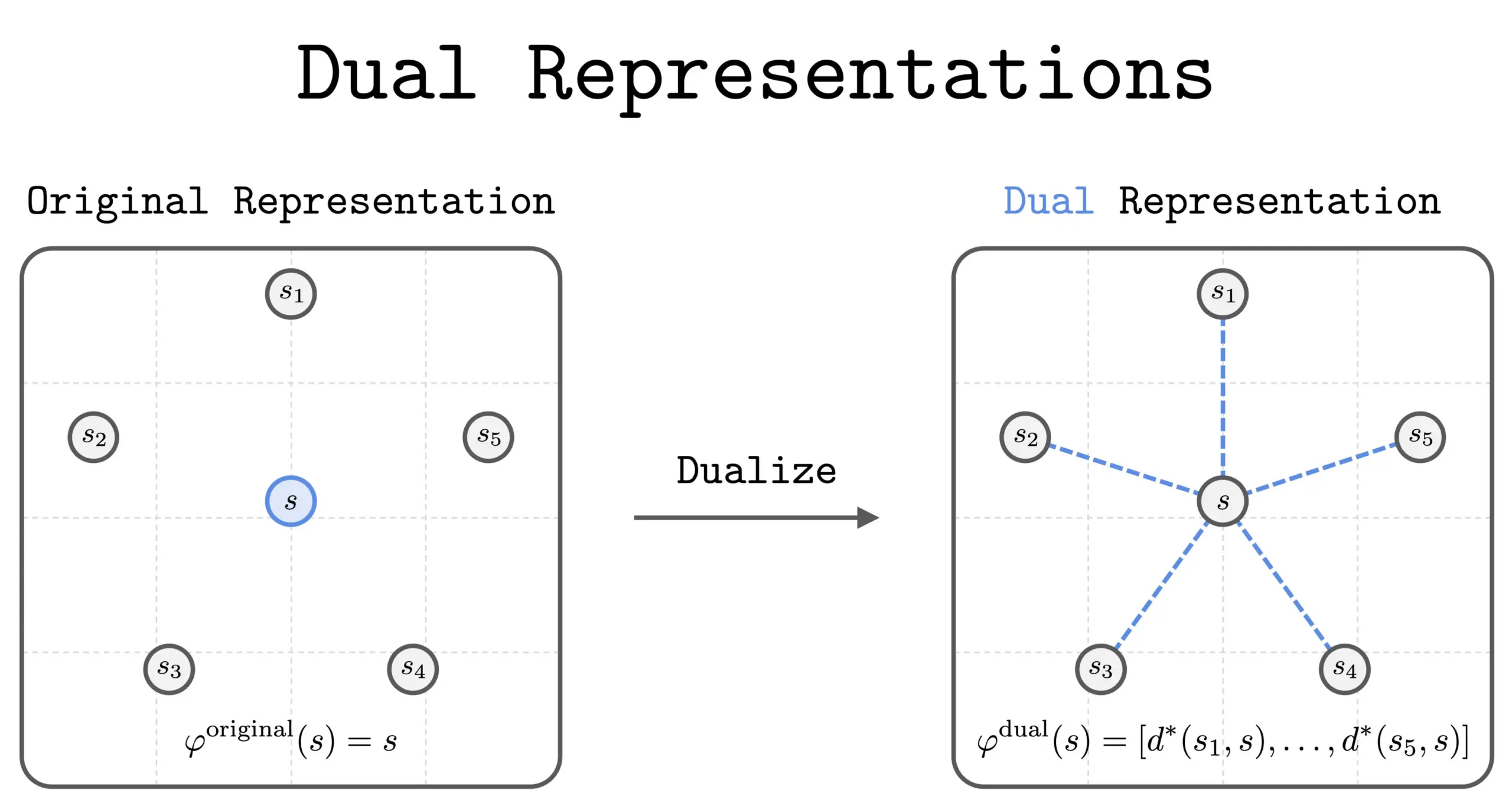

New Dual Representation Method in RL : A new study introduced a “dual representation” method in Reinforcement Learning (RL). This method offers a new perspective by representing states as a “set of similarities” with all other states. This dual representation has good theoretical properties and practical benefits, expected to enhance RL performance and understanding. (Source: dilipkay)

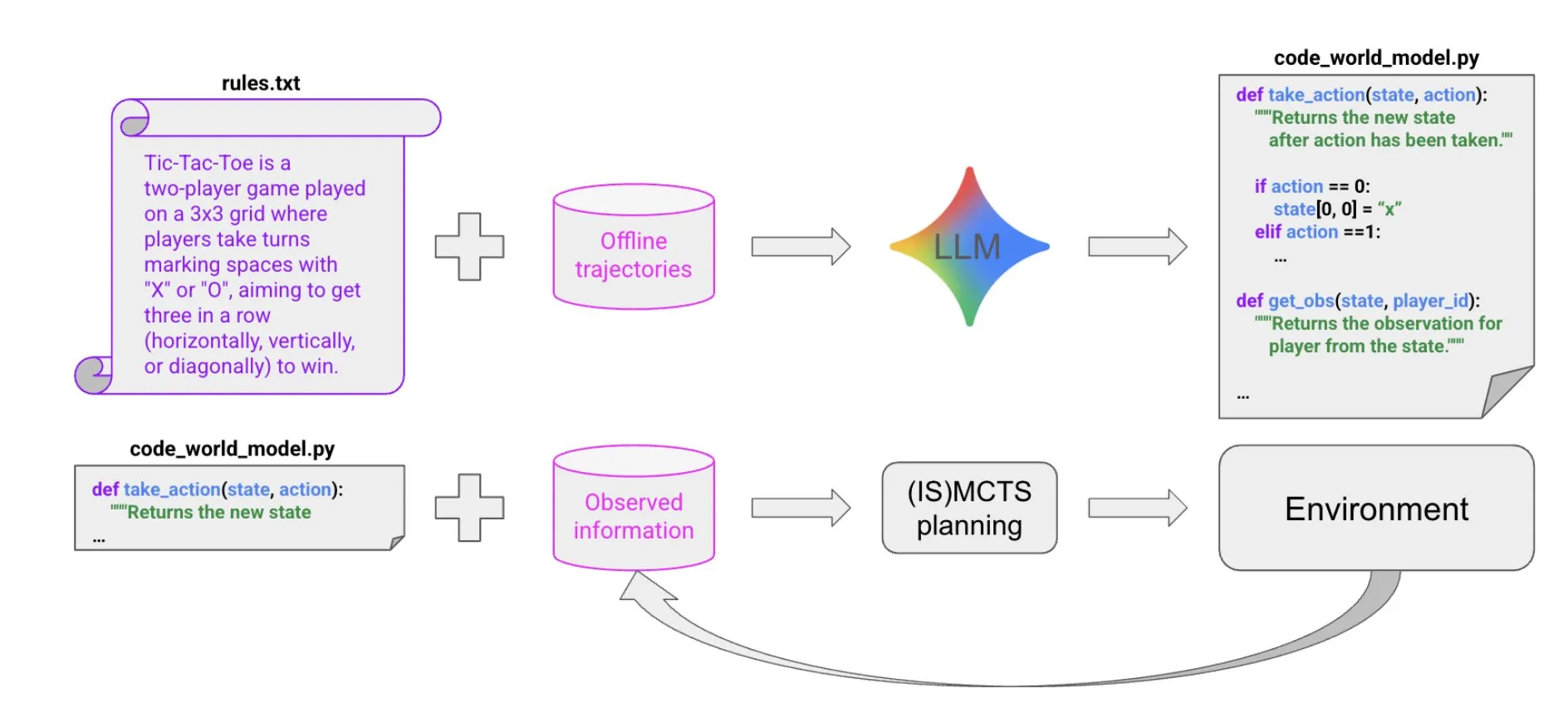

LLM-Driven Code Synthesis for Building World Models : A new paper proposes an extremely sample-efficient method for creating Agents that perform well in multi-agent, partially observable symbolic environments through LLM-driven code synthesis. This method learns a code world model from a small amount of trajectory data and background information, then passes it to existing solvers (like MCTS) to select the next action, offering new ideas for building complex Agents. (Source: BlackHC)

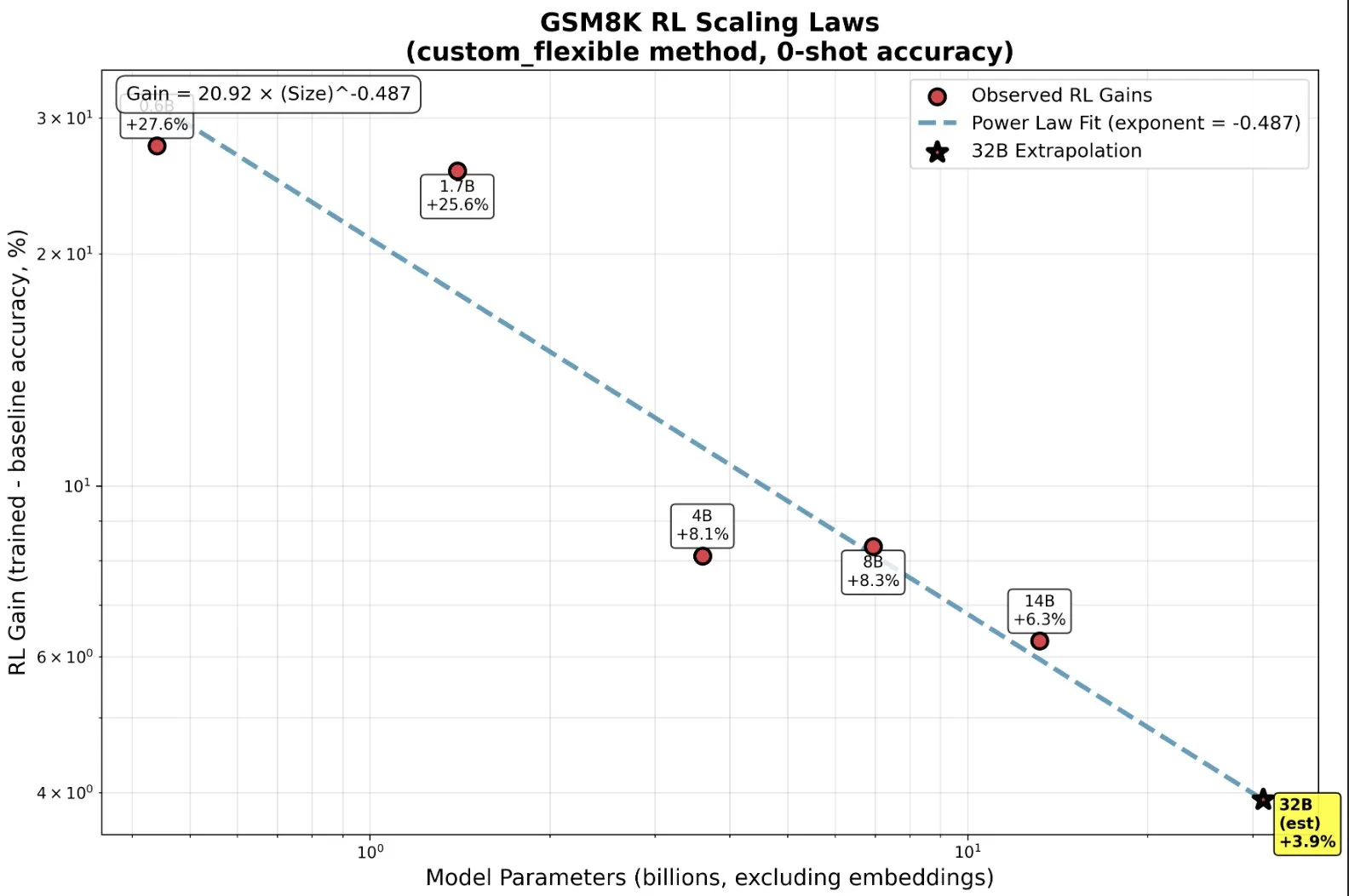

RL Training Small Models: Emergent Capabilities Beyond Pre-training : Research found that in Reinforcement Learning (RL), small models can disproportionately benefit and even exhibit “emergent” capabilities, challenging the traditional intuition of “bigger is better.” For smaller models, RL might be more computationally efficient than more pre-training. This finding has significant implications for AI labs’ decisions on when to stop pre-training and when to start RL when scaling RL, revealing new scaling laws between model size and performance improvement in RL. (Source: ClementDelangue, ClementDelangue)

AI vs. Machine Learning vs. Deep Learning: A Simple Explanation : A video resource explains the differences between Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning (DL) in an easy-to-understand way. This video aims to help beginners quickly grasp these core concepts, laying the foundation for further in-depth study in the AI field. (Source: )

Prompt Template Management in Deep Learning Model Experiments : The deep learning community discussed how to manage and reuse prompt templates in model experiments. In large projects, especially when modifying architectures or datasets, tracking the effects of different prompt variations becomes complex. Users shared experiences using tools like Empromptu AI for prompt version control and categorization, emphasizing the importance of prompt versioning and aligning datasets with prompts to optimize model products. (Source: Reddit r/deeplearning)
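Versioning prompts by content hash is one lightweight way to tie each experiment run to the exact template it used. The `PromptRegistry` class below is a hypothetical sketch built on `hashlib` and `string.Template`; it is not Empromptu AI's API.

```python
import hashlib
from string import Template
from typing import Optional


class PromptRegistry:
    """Minimal prompt-template registry: each distinct template text gets a
    short content hash, so experiment logs can record which version ran."""

    def __init__(self) -> None:
        # name -> list of (version_id, Template), oldest first
        self._versions = {}

    def register(self, name: str, text: str) -> str:
        """Store a template and return its content hash as the version id."""
        version_id = hashlib.sha256(text.encode("utf-8")).hexdigest()[:8]
        history = self._versions.setdefault(name, [])
        if all(v != version_id for v, _ in history):  # identical text: same version
            history.append((version_id, Template(text)))
        return version_id

    def render(self, name: str, version_id: Optional[str] = None, **values) -> str:
        """Fill a template; defaults to the latest version unless one is pinned."""
        history = self._versions[name]
        template = history[-1][1] if version_id is None else dict(history)[version_id]
        return template.substitute(**values)


reg = PromptRegistry()
v1 = reg.register("summarize", "Summarize: $text")
v2 = reg.register("summarize", "Summarize in one sentence: $text")
print(reg.render("summarize", text="GIL removal"))      # latest version (v2)
print(reg.render("summarize", v1, text="GIL removal"))  # pinned to v1
```

Logging the returned version id next to each run's metrics is what makes prompt variations comparable after the architecture or dataset changes.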

Code Completion (FIM) Model Selection Guide : The community discussed key factors for choosing Code Completion (FIM) models. Speed is considered an absolute priority, recommending models with fewer parameters that run solely on GPU (targeting >70 t/s). Additionally, “base” models and instruction models perform similarly in FIM tasks. The discussion also listed recent and older FIM models like Qwen3-Coder and KwaiCoder, and explored how tools like nvim.llm support non-code-specific models. (Source: Reddit r/LocalLLaMA)
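A FIM prompt splits the editor buffer at the cursor into a prefix and a suffix and asks the model to generate the middle. This sketch assumes Qwen2.5-Coder-style special tokens (`<|fim_prefix|>`, `<|fim_suffix|>`, `<|fim_middle|>`); other model families use different tokens, so check the model's tokenizer configuration. Trimming the context to the text nearest the cursor also helps keep latency low, in line with the emphasis on speed.

```python
# Qwen2.5-Coder-style FIM tokens (an assumption; other families differ).
FIM_PREFIX, FIM_SUFFIX, FIM_MIDDLE = "<|fim_prefix|>", "<|fim_suffix|>", "<|fim_middle|>"


def fim_prompt(code: str, cursor: int, max_chars: int = 4000) -> str:
    """Arrange the buffer in prefix-suffix-middle order; the model's
    completion is the text that belongs at the cursor."""
    prefix = code[:cursor][-max_chars:]  # keep the text closest to the cursor
    suffix = code[cursor:][:max_chars]
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


src = "def add(a, b):\n    return \n"
print(fim_prompt(src, cursor=src.index("return ") + len("return ")))
```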

Quantized Model Performance Trade-offs: Large Models vs. Low Precision : The community discussed the trade-offs between large quantized models and smaller unquantized models, as well as how the quantization level affects performance. The general view is that 2-bit quantization may be acceptable for writing or dialogue, but tasks like coding need at least Q5. Some users noted that Gemma3-27B degrades significantly at low quantization levels, while some newer models are trained at FP4 precision, so running them at higher precision brings little benefit. Quantization effects therefore vary by model and task and need to be tested case by case. (Source: Reddit r/LocalLLaMA)
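A back-of-the-envelope calculation shows why the trade-off arises: weight memory is roughly parameters × bits per weight / 8. The sketch ignores the KV cache, activations, and the extra bits that real formats such as the Q5 variants spend on scales, so treat the numbers as lower bounds; the model sizes are illustrative.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB: billions of parameters x bits / 8.
    Ignores KV cache, activations, and per-block quantization overhead."""
    return params_billion * bits_per_weight / 8


for label, params, bits in [("27B @ 16-bit", 27, 16), ("27B @ 5-bit", 27, 5),
                            ("27B @ 2-bit", 27, 2), ("8B @ 16-bit", 8, 16)]:
    print(f"{label}: {weight_memory_gb(params, bits):.1f} GB")
```

A 27B model at 2 bits (about 6.8 GB) fits where an 8B model at 16 bits (16 GB) does not, which is exactly the trade-off being debated.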

Why MissForest in R Fails in Prediction Tasks : An analysis article examines why the R MissForest imputation algorithm fails in prediction tasks: during imputation it subtly breaks the crucial principle of keeping training and test data separate. The article explains MissForest's limitations in this setting and introduces newer methods such as MissForestPredict, which address the issue by applying the imputation learned on the training data unchanged to new data. This offers important guidance for machine learning practitioners handling missing values in predictive models. (Source: Reddit r/MachineLearning)
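The train/test principle at stake can be shown with the simplest possible imputer. This sketch swaps MissForest for mean imputation to stay short, but the rule it illustrates is the same: learn the fill values from the training data only, then apply them unchanged to new data. Fitting on the pooled training and test rows here would give a fill value of about 56.7 instead of 40, leaking information from the test set.

```python
from statistics import mean


def fit_mean_imputer(rows, col):
    """Learn the fill value from training rows only."""
    return mean(r[col] for r in rows if r[col] is not None)


def apply_imputer(rows, col, fill):
    """Fill missing values with a previously learned value; no refitting."""
    return [{**r, col: fill if r[col] is None else r[col]} for r in rows]


train = [{"age": 30}, {"age": 50}, {"age": None}]
test = [{"age": None}, {"age": 90}]

fill = fit_mean_imputer(train, "age")           # 40, learned from training data only
train_filled = apply_imputer(train, "age", fill)
test_filled = apply_imputer(test, "age", fill)  # the test row 90 does not influence the fill
print(test_filled)  # [{'age': 40}, {'age': 90}]
```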

Seeking Multimodal Machine Learning Resources : Community users sought learning resources for multimodal machine learning, especially theoretical and practical materials on how to combine different data types (text, images, signals, etc.) and understand concepts like fusion, alignment, and cross-modal attention. This reflects the growing demand for learning multimodal AI technologies. (Source: Reddit r/deeplearning)

Seeking Video Resources on Training Reasoning Models with Reinforcement Learning : The machine learning community sought the best scientific lecture videos on using reinforcement learning (RL) to train reasoning models, including overviews and in-depth explanations of specific methods. Users wanted high-quality academic content rather than superficial influencer videos, to get up to speed on the literature quickly and decide on further research directions. (Source: Reddit r/MachineLearning)

11-Month AI Coding Journey: Tools, Tech Stack, and Best Practices : A developer shared an 11-month AI coding journey, detailing experiences, failures, and best practices using tools like Claude Code. He emphasized that in AI coding, upfront planning and context management are far more important than writing the code itself. Although AI lowers the barrier to code implementation, it does not replace architectural design and business insights. This experience sharing covered multiple projects from frontend to backend, mobile application development, and recommended auxiliary tools like Context7 and SpecDrafter. (Source: Reddit r/ClaudeAI)

💼 Business

JPMorgan Chase: $2 Billion Investment Annually, Transforming into an “All-AI Bank” : JPMorgan Chase CEO Jamie Dimon announced an annual investment of $2 billion in AI, aiming to transform the company into an “all-AI bank.” AI has been deeply integrated into core businesses such as risk control, trading, customer service, compliance, and investment banking, not only saving costs but, more importantly, accelerating work pace and changing the nature of roles. JPMorgan Chase, through its self-developed LLM Suite platform and large-scale deployment of AI Agents, views AI as the underlying operating system for the company, and emphasizes data integration and cybersecurity as the biggest challenges in its AI strategy. Dimon believes AI is a real long-term value, not a short-term bubble, and will redefine banking. (Source: 36Kr)

Apple from Musk : (The original text is incomplete here.)