Keywords: OpenAI Sora, AI video generation, Tiny Recursive Model, AI toys, AI chips, Sora 2 video recreation, TRM inference efficiency, AI toy market growth, AMD and OpenAI chip partnership, AI content copyright disputes

🔥 Spotlight

The Rise and Challenges of OpenAI Sora App : OpenAI’s AI-generated video application, Sora, quickly gained popularity, topping the App Store. Its free, unlimited video generation capabilities have raised widespread concerns about operational costs, copyright infringement (especially regarding the use of existing IP and the likenesses of deceased celebrities), and the misuse of deepfake technology. Sam Altman admitted the need to consider a monetization model and plans to offer more refined copyright controls. The app’s impact on the content creation ecosystem and the perception of reality has sparked discussions about whether AI-generated videos will surpass ‘real’ videos. (Source: MIT Technology Review, rowancheung, fabianstelzer, nptacek, paul_cal, BlackHC)

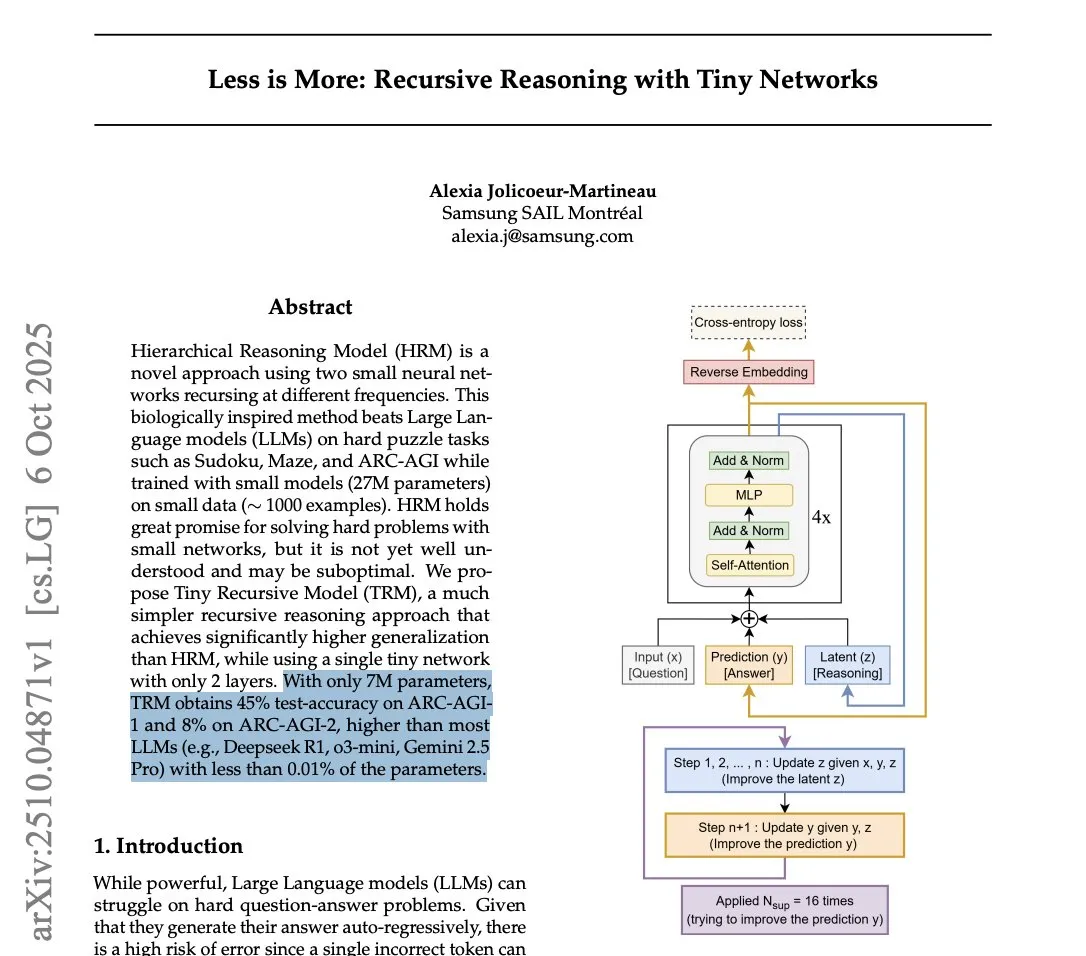

Samsung Unveils Tiny Recursive Model (TRM) to Challenge LLM Inference Efficiency : Samsung has released the Tiny Recursive Model (TRM), a small neural network with only 7 million parameters that performed exceptionally well on the ARC-AGI benchmark, even surpassing large LLMs such as DeepSeek-R1 and Gemini 2.5 Pro. TRM takes a recursive reasoning approach, refining its answer over multiple internal ‘thoughts’ and rounds of self-critique. The result has sparked discussion about whether ‘smaller models can be smarter’ and suggests that architectural innovation may matter more than sheer model scale for reasoning tasks, potentially cutting the computational cost of SOTA reasoning significantly. (Source: HuggingFace Daily Papers, fchollet, cloneofsimo, ecsquendor, clefourrier, AymericRoucher, ClementDelangue, Dorialexander)

Tsinghua’s Yao Shunyu Jumps from Anthropic to Google DeepMind, Citing Value Discrepancies as Main Reason : Yao Shunyu, a special scholarship recipient from Tsinghua University’s Department of Physics, announced his departure from Anthropic to join Google DeepMind as a Senior Research Scientist. He stated that 40% of his reason for leaving was due to ‘fundamental value disagreements’ with Anthropic, believing the company was unfriendly towards Chinese researchers and employees with neutral stances. Yao Shunyu worked at Anthropic for a year, contributing to the reinforcement learning theory behind Claude 3.7 Sonnet and the Claude 4 series. He noted the astonishing pace of AI development but felt it was time to move forward. (Source: ZhihuFrontier, 量子位)

Arduino Acquired by Qualcomm, Signaling New Direction for Embedded AI : Arduino has been acquired by Qualcomm and has launched its first co-developed board, UNO Q, featuring the Qualcomm Dragonwing QRB2210 processor with integrated AI solutions. This marks Arduino’s shift from traditional low-power microcontrollers towards medium-power, AI-integrated edge computing. This move could drive widespread adoption of AI in IoT and embedded devices, providing developers with more powerful AI computing capabilities and signaling a new transformation in the embedded AI hardware ecosystem. (Source: karminski3)

Meta Superintelligence Releases REFRAG: A New Breakthrough in RAG Efficiency : Meta Superintelligence has published its first paper, REFRAG, proposing a novel RAG (Retrieval Augmented Generation) method aimed at significantly boosting efficiency. The method converts most retrieved document chunks into compact, LLM-friendly ‘chunk embeddings’ that the LLM consumes directly, and uses a lightweight strategy to expand selected chunk embeddings back to full tokens on demand, within a budget. This significantly reduces KV cache and attention costs and improves time-to-first-token and throughput while maintaining accuracy, opening new paths for real-time RAG applications. (Source: Reddit r/deeplearning, Reddit r/LocalLLaMA)
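The budgeted expansion idea can be illustrated with a toy planner. This is only a sketch under stated assumptions, not the paper's method: the `Chunk` class, its `score` field, the greedy selection rule, and whitespace token counting are all hypothetical stand-ins for the lightweight strategy the summary mentions.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str     # retrieved passage
    score: float  # hypothetical "worth expanding" score (assumption)


def plan_context(chunks, token_budget):
    """Mark each chunk as full 'tokens' or a single compressed 'embedding'."""
    expanded = set()
    remaining = token_budget
    # Greedy stand-in for the selection strategy: best score first,
    # skipping any chunk whose token cost no longer fits the budget.
    order = sorted(range(len(chunks)), key=lambda i: chunks[i].score, reverse=True)
    for i in order:
        cost = len(chunks[i].text.split())  # whitespace tokens as a proxy
        if cost <= remaining:
            expanded.add(i)
            remaining -= cost
    # Preserve the original retrieval order in the final plan.
    return [("tokens" if i in expanded else "embedding", c.text)
            for i, c in enumerate(chunks)]


def decoder_positions(plan):
    """Sequence positions used: one per token, one per compressed chunk."""
    return sum(len(text.split()) if kind == "tokens" else 1
               for kind, text in plan)


chunks = [Chunk("a b c d", 0.9), Chunk("e f g", 0.2), Chunk("h i", 0.7)]
plan = plan_context(chunks, token_budget=6)
print([kind for kind, _ in plan])
print(decoder_positions(plan))
```

In this toy run the low-scoring middle chunk stays compressed, so the decoder sees 7 positions instead of the 9 it would see with every chunk expanded; fewer positions is where the KV cache and attention savings would come from.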

🎯 Trends

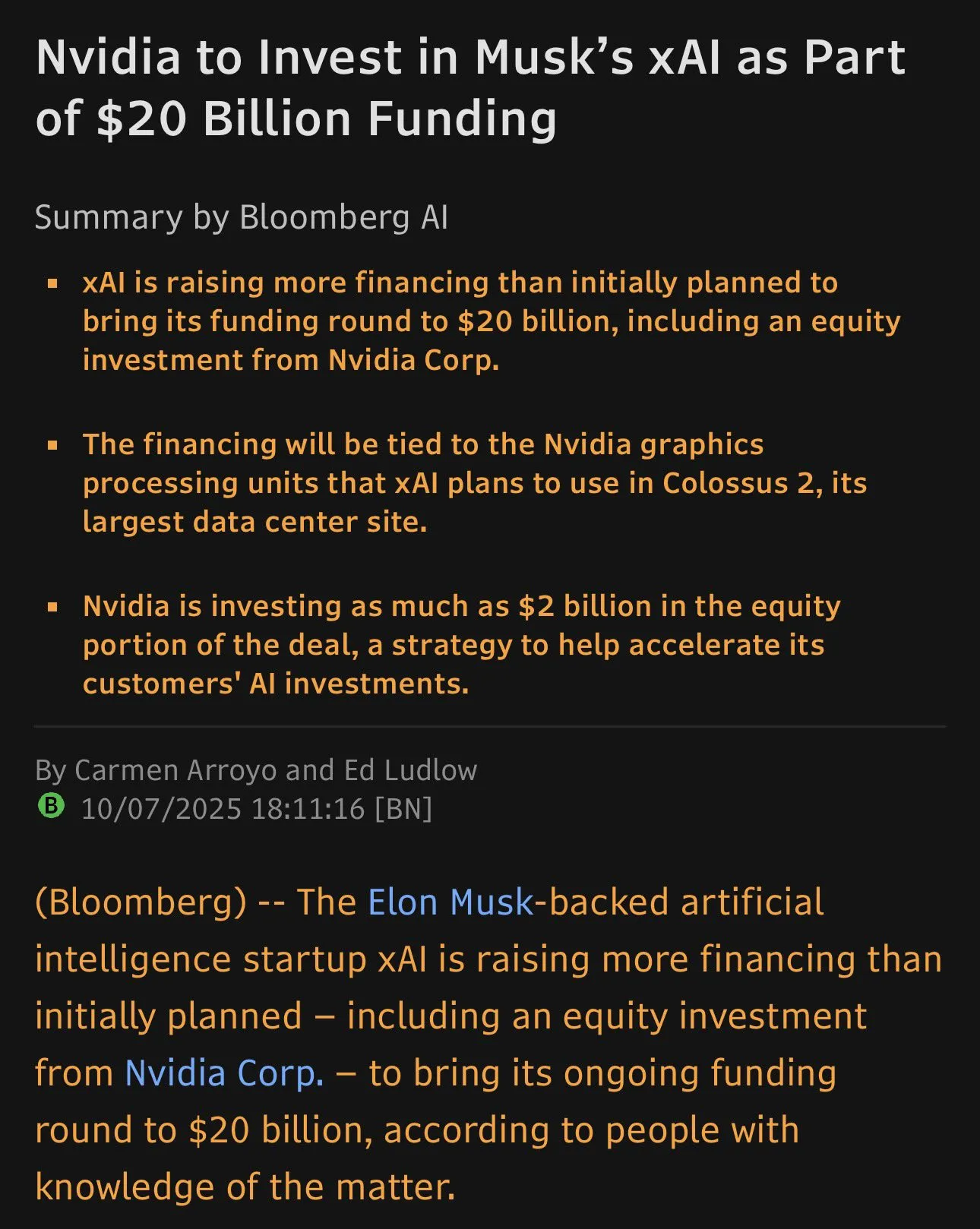

xAI Secures $20 Billion Funding, NVIDIA Invests $2 Billion : Elon Musk’s xAI company has successfully completed a $20 billion funding round, including a direct investment of $2 billion from NVIDIA. This capital, channeled through a Special Purpose Vehicle (SPV), will be used to procure NVIDIA GPUs to support the construction of its Memphis Colossus 2 data center. This unique funding structure aims to provide hardware assurance for xAI’s large-scale expansion in AI computing, further intensifying competition in the AI chip market. (Source: scaling01)

AI Toys Emerge in Chinese and US Markets : AI toys equipped with chatbots and voice assistants are becoming a new trend, growing rapidly in the Chinese market and expanding to international markets like the US. Products from companies like BubblePal and FoloToy aim to reduce children’s screen time. However, parents report that AI features are sometimes unstable, with lengthy responses or delayed speech recognition, leading to decreased child interest. US companies like Mattel are also collaborating with OpenAI to develop AI toys. (Source: MIT Technology Review)

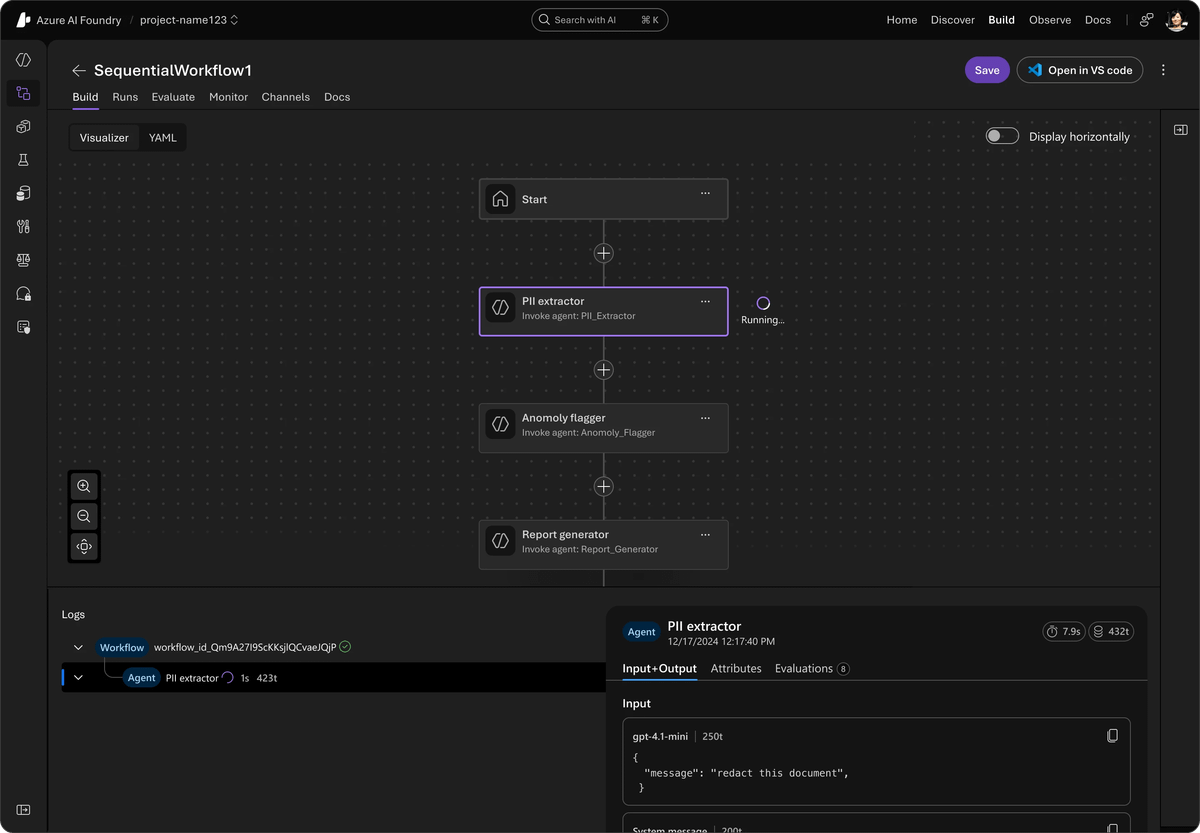

Microsoft Launches Unified Open-Source Agent Framework, Integrating AutoGen and Semantic Kernel : Microsoft has released the Agent Framework, a unified open-source SDK designed to integrate AutoGen and Semantic Kernel for building enterprise-grade multi-agent AI systems. Supported by Azure AI Foundry, the framework simplifies orchestration and observability and is compatible with various APIs. It introduces private previews for multi-agent workflows, cross-framework tracing with OpenTelemetry, real-time voice agent capabilities with the Voice Live API, and responsible AI tools, all aimed at enhancing the safety and efficiency of agent systems. (Source: TheTuringPost)

AI21 Labs Releases Jamba 3B, Small Model Outperforms Competitors : AI21 Labs has launched Jamba 3B, an MoE model with only 3 billion parameters, which excels in both quality and speed, especially in long-context processing. The model maintains a generation speed of approximately 40 t/s on Mac, even with contexts exceeding 32K, far surpassing Qwen 3 4B and Llama 3.2 3B. Jamba 3B scores higher on intelligence indices than Gemma 3 4B and Phi-4 Mini, and maintains full inference capabilities at 256K context, demonstrating the immense potential of small models for edge AI and on-device deployment. (Source: Reddit r/LocalLLaMA)

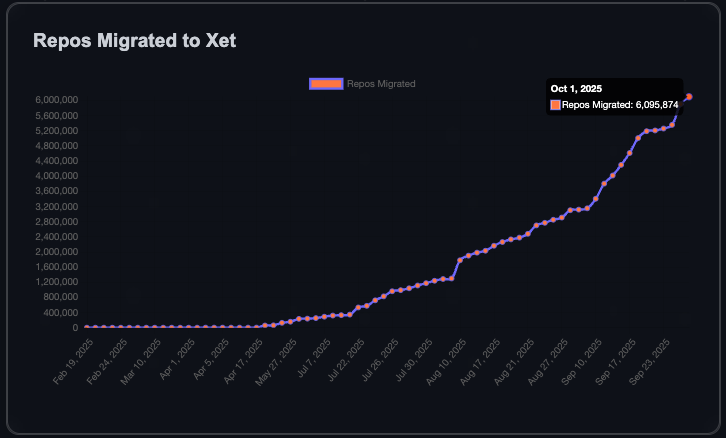

HuggingFace Community Sees Rapid Growth, Adds One Million Repositories in 90 Days : The HuggingFace community has added one million new model, dataset, and Space repositories in the past 90 days, a pace of roughly one new repository every 8 seconds. By comparison, reaching the first million took six years. The growth is attributed to more efficient data transfer enabled by Xet technology, and the fact that 40% of repositories are private points to a trend of enterprises using HuggingFace for internal model and data sharing. The community’s goal is to reach 10 million repositories, signaling the flourishing development of the open-source AI ecosystem. (Source: Teknium1, reach_vb)
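The "every 8 seconds" figure follows directly from the numbers quoted above, as this short calculation shows. The function name is illustrative, and six years is approximated as 6 × 365 days (an assumption that ignores leap days).

```python
SECONDS_PER_DAY = 24 * 60 * 60


def seconds_per_repo(new_repos: int, days: int) -> float:
    """Average time between repository creations over a period."""
    return days * SECONDS_PER_DAY / new_repos


recent = seconds_per_repo(1_000_000, 90)              # last 90 days
first_million = seconds_per_repo(1_000_000, 6 * 365)  # first six years (approx.)

print(f"recent pace: one repo every {recent:.1f} s")
print(f"first million: one repo every {first_million:.1f} s")
print(f"speed-up: about {first_million / recent:.1f}x")
```

The recent pace works out to about 7.8 seconds per repository, which rounds to the 8 seconds cited, and is roughly 24 times faster than the average pace over the first six years.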

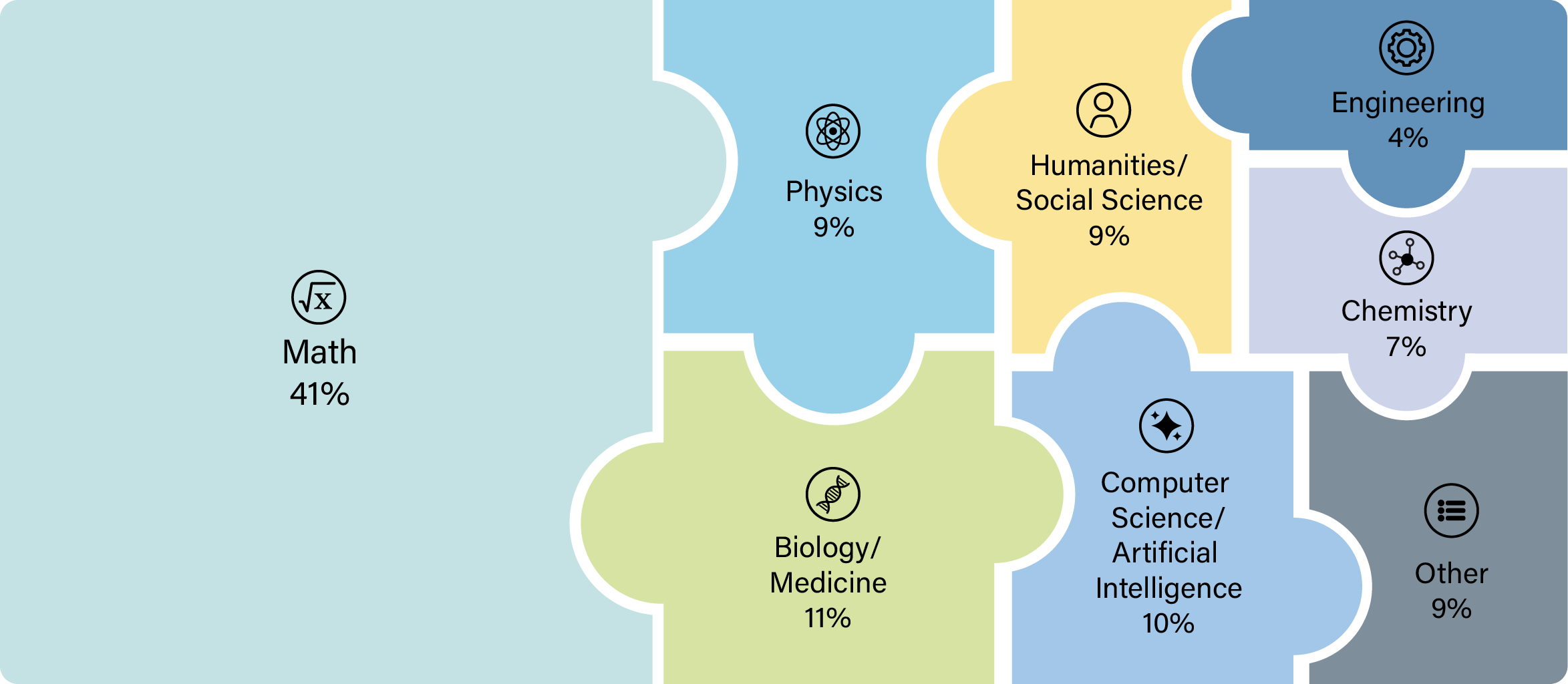

OpenAI GPT-5 Demonstrates Breakthrough Capabilities in Scientific Research : OpenAI’s GPT-5 model has crossed a significant threshold, with scientists successfully using it for original research in fields such as mathematics, physics, biology, and computer science. This advancement indicates that GPT-5 can not only answer questions but also guide and execute complex scientific explorations, greatly accelerating the research process. Some researchers have stated that after the release of GPT-5 Thinking and GPT-5 Pro, it is no longer reasonable to conduct scientific research without consulting them. (Source: tokenbender)

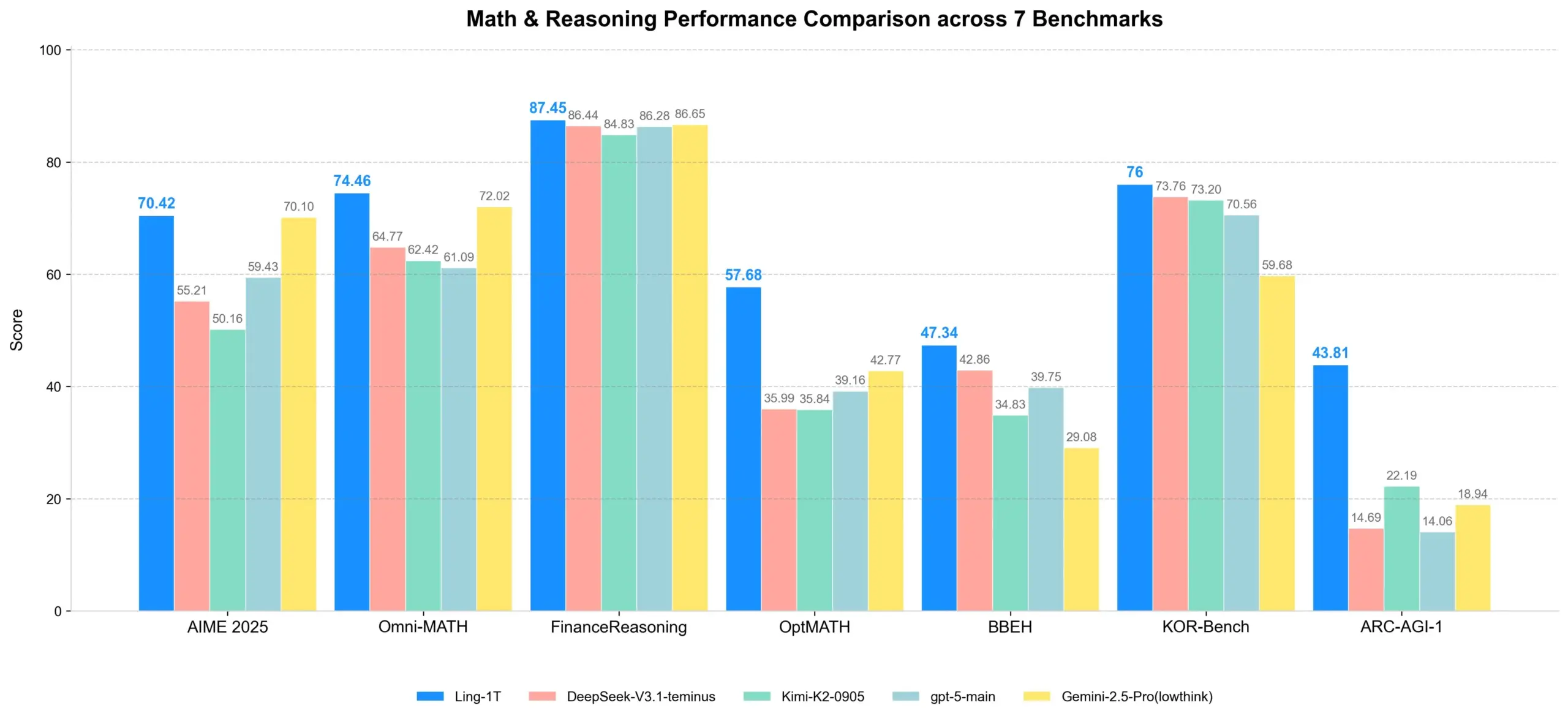

Ling-1T: Trillion-Parameter Open-Source Reasoning Model Released : Ling-1T, the flagship model of the Ling 2.0 series, has 1 trillion total parameters, of which approximately 50 billion are active per token, and was trained on more than 20 trillion reasoning-dense tokens. The model achieves scalable reasoning through an Evo-CoT curriculum and Linguistics-Unit RL, striking a strong efficiency-accuracy balance on complex reasoning tasks. It also features advanced visual understanding and frontend code generation capabilities and reaches a tool-use success rate of approximately 70%, marking a new milestone for open-source trillion-scale intelligence. (Source: scaling01, TheZachMueller)

Meta Ray-Ban Display Redefines Human-Computer Interaction and Learning : Meta Ray-Ban Display smart glasses integrate learning and translation features into daily wear, offering ‘invisible translation’ and ‘instant visual learning’ experiences. Conversation subtitles are displayed directly in the lenses, allowing users to get information simply by looking at landmarks or artworks. Furthermore, gesture control is enabled via the Neural Band, allowing operation without a phone. This technology promises to reshape how we interact with the world, learn, and connect, marking a new beginning for human-centric computing. (Source: Ronald_vanLoon)

🧰 Tools

Synthesia Launches Copilot, Empowering Professional AI Video Editing : Synthesia has released Copilot, a professional AI video editor. This tool can quickly write scripts, connect knowledge bases, and intelligently recommend visual assets, much like having a colleague who deeply understands the business and the Synthesia platform. Copilot aims to simplify the video production process, lower the barrier to professional video creation, and provide efficient, personalized AI video solutions for businesses and content creators. (Source: synthesiaIO, synthesiaIO)

GLIF Agent Uses Sora 2 to Recreate and Customize Viral Videos : GLIF has developed an Agent capable of recreating any viral video using the Sora 2 model. The Agent first analyzes the original video, then generates detailed prompts based on the analysis. Users can collaborate with the Agent to customize these prompts, creating highly personalized AI-generated videos. This technology is expected to provide powerful video production and re-creation capabilities in content creation and marketing. (Source: fabianstelzer)

Cloudflare AI Search Partners with GroqInc to Launch ‘Document Chat’ Template : Cloudflare AI Search (formerly AutoRAG) has partnered with GroqInc to release a new open-source ‘document chat’ template. This template combines Groq’s inference engine with AI Search, enabling users to more easily add conversational AI capabilities to documents for real-time Q&A and interaction with document content. This integration will enhance the efficiency of document retrieval and information access. (Source: JonathanRoss321)

HuggingFace Introduces In-Browser GGUF Editing Feature : HuggingFace now supports direct editing of GGUF model metadata within the browser, without needing to download the full model. This feature, enabled by Xet technology, supports partial file updates, greatly simplifying model management and iteration processes, and improving developer efficiency on the HuggingFace platform. (Source: reach_vb)

LangChain and LangGraph Release v1.0 Alpha, Soliciting Developer Feedback : LangChain and LangGraph have released v1.0 Alpha, introducing new Agent middleware APIs, standard output/content blocks, and significant API updates. The team is actively inviting developers to test the new version and provide feedback to further refine its AI agent development framework and drive the creation of more powerful AI applications. (Source: LangChainAI)

NeuML Releases ColBERT Nano Series of Micro Models, Under One Million Parameters : NeuML has released the ColBERT Nano series of models, all with fewer than 1 million parameters (250K, 450K, 950K). These micro models demonstrate astonishing performance in ‘Late interaction’ mode, proving that even extremely small-scale models can achieve good results on specific tasks, providing efficient solutions for AI deployment in resource-constrained environments. (Source: lateinteraction, lateinteraction)

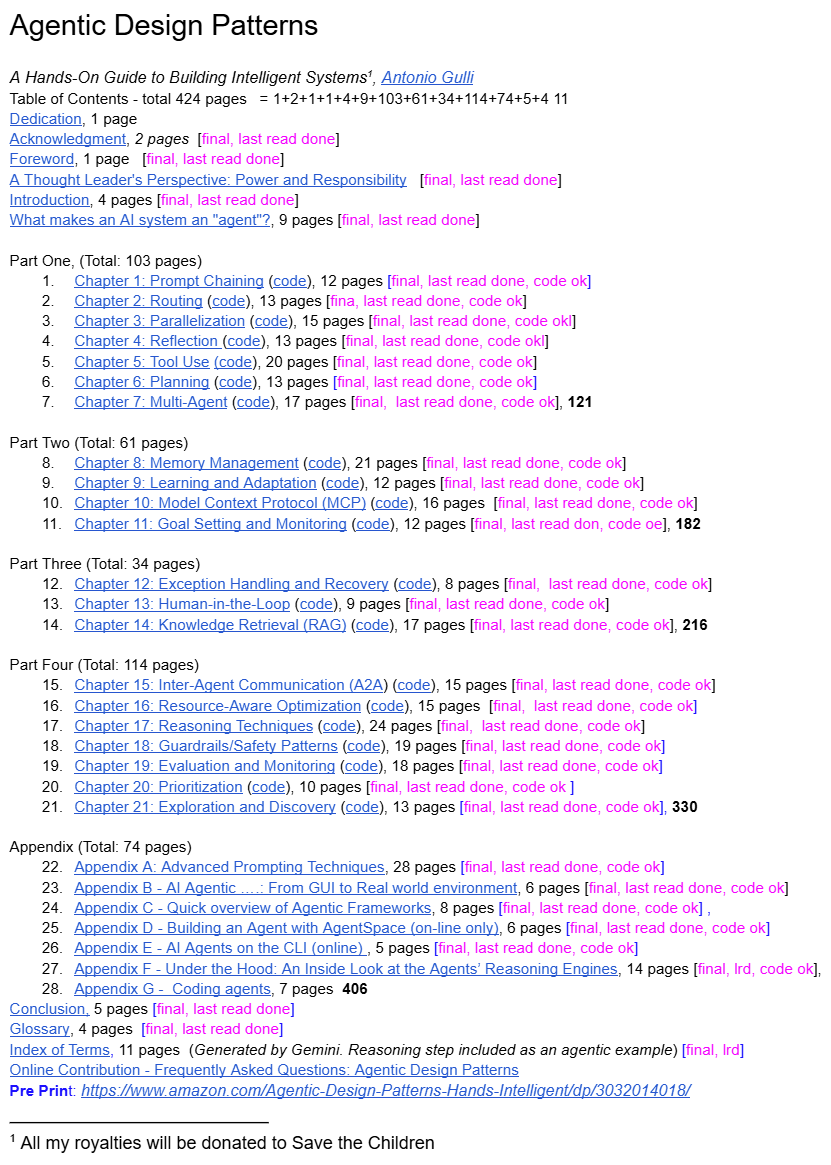

Google Senior Engineering Director Shares ‘Agent Design Patterns’ : A Google Senior Engineering Director has freely shared ‘Agent Design Patterns,’ bringing the first systematic set of design principles and best practices to the booming AI Agent field. This resource aims to help developers better understand and build AI agents, filling a gap in systematic guidance for the field and potentially becoming an important reference for AI Agent developers. (Source: dotey)

📚 Learning

Research on LLM Hallucinations and Safety Alignment Mechanisms: From Internal Origins to Mitigation Strategies : Three recent papers examine where these failures originate and how to mitigate them. One finds that LLM reasoning models may exhibit a ‘refusal cliff,’ in which refusal intent drops sharply just before the final output is generated. Another uses the DST framework to show that hallucinations become inevitable at a model-specific ‘commitment layer.’ A third proposes HalluGuard, a small reasoning model that integrates clinical signals and data optimization to mitigate hallucinations in RAG. Together they offer mechanistic explanations and practical strategies for LLM safety alignment and hallucination mitigation. (Source: HuggingFace Daily Papers)

ASPO Optimizes LLM Reinforcement Learning, Addressing IS Ratio Mismatch Issues : ASPO (Asymmetric Importance Sampling Policy Optimization) is a novel LLM post-training method that addresses a fundamental flaw in traditional reinforcement learning: the mismatch of importance sampling (IS) ratios for positive advantage tokens. By flipping the IS ratio for positive advantage tokens and introducing a soft double-clipping mechanism, ASPO can more stably update low-probability tokens, alleviate premature convergence, and significantly improve performance on coding and mathematical reasoning benchmarks. (Source: HuggingFace Daily Papers)

Fathom-DeepResearch: An Agent System for Long-Horizon Information Retrieval and Synthesis : Fathom-DeepResearch is an agent system composed of Fathom-Search-4B and Fathom-Synthesizer-4B, designed specifically for complex, open-ended information retrieval tasks. Fathom-Search-4B achieves real-time web search and webpage querying through multi-agent self-play datasets and reinforcement learning optimization. Fathom-Synthesizer-4B, in turn, transforms multi-round search results into structured reports. The system performs exceptionally well across multiple benchmarks and demonstrates strong generalization capabilities for reasoning tasks like HLE and AIME-25. (Source: HuggingFace Daily Papers)

AgentFlow: In-Flow Agent System Optimization for Efficient Planning and Tool Use : AgentFlow is a trainable in-flow agent framework that directly optimizes its planner through multi-round interactive loops by coordinating four modules: planner, executor, verifier, and generator. It employs Flow-based Group Refined Policy Optimization to address the credit assignment problem in long-horizon, sparse reward scenarios. Across ten benchmarks, AgentFlow, with a 7B backbone model, surpasses SOTA baselines, showing significant improvements in average accuracy across search, agent, mathematics, and science tasks, even outperforming GPT-4o. (Source: HuggingFace Daily Papers)

Systematic Study on the Impact of Code Data on LLM Reasoning Capabilities : Research explores how code data enhances LLM reasoning capabilities through a systematic, data-centric framework. By constructing parallel instruction datasets in ten programming languages and applying structural or semantic perturbations, it was found that LLMs are more sensitive to structural perturbations than semantic ones, especially in mathematics and coding tasks. Pseudocode and flowcharts are as effective as code, and syntactic style also influences task-specific gains (Python benefits natural language inference, Java/Rust benefits mathematics). (Source: HuggingFace Daily Papers)

DeepEvolve: An Agent for Scientific Algorithm Discovery Fusing Deep Research and Algorithmic Evolution : DeepEvolve is an agent that combines deep research with algorithmic evolution, discovering scientific algorithms through external knowledge retrieval, cross-file code editing, and systematic debugging in a feedback-driven iterative loop. It not only proposes new hypotheses but also refines, implements, and tests them, avoiding superficial improvements and ineffective over-refinement. Across nine benchmarks in chemistry, mathematics, biology, materials, and patents, DeepEvolve continuously improves initial algorithms, generating executable new algorithms and achieving sustained gains. (Source: HuggingFace Daily Papers)

AI/ML Learning Roadmap and Core Concepts : The community has shared a comprehensive learning path for AI, Machine Learning, and Deep Learning, covering various aspects from fundamental concepts to advanced techniques (such as Agentic AI and LLM generation parameters). These resources aim to provide structured learning guidance for professionals looking to enter or deepen their knowledge in the AI/ML field, helping them master end-to-end skills from model development to deployment and operations, and understand how AI drives industry transformation. (Source: Ronald_vanLoon)

AI Benchmarks and Learning Resources: HLE, Conferences, and GPU Cost Management : The community discussed several AI learning and practical resources. CAIS released the dynamically updated ‘Humanity’s Last Exam’ benchmark to adapt to improving model performance. Additionally, a guide for attending machine learning conferences and strategies for low-cost LLM development, including pay-as-you-go GPUs and running small models locally, were provided. Furthermore, the GPU Mode Hackathon offered a platform for developers to learn and exchange ideas. (Source: clefourrier, Reddit r/MachineLearning, Reddit r/MachineLearning, Reddit r/MachineLearning, danielhanchen)

OneFlow: Concurrent Mixed-Modality and Interleaved Generative Model : OneFlow is the first non-autoregressive multimodal model that supports variable-length and concurrent mixed-modality generation. It combines an in-filling editing flow for discrete text tokens with flow matching in the image latent space. OneFlow achieves concurrent text-image synthesis through hierarchical sampling, prioritizing content over grammar. Experiments show that OneFlow outperforms autoregressive baselines on both generation and understanding tasks, reducing training FLOPs by up to 50%, and unlocking new capabilities for concurrent generation, iterative refinement, and natural inference-style generation. (Source: HuggingFace Daily Papers)

Equilibrium Matching: A Generative Modeling Framework Based on Implicit Energy Models : Equilibrium Matching (EqM) is a new generative modeling framework that discards the non-equilibrium, time-conditioned dynamics of traditional diffusion and flow models and instead learns the equilibrium gradient of an implicit energy landscape. Sampling is optimization-based: EqM runs gradient descent on the learned landscape. It achieves SOTA performance with an FID of 1.90 on ImageNet 256×256 and naturally handles tasks such as partial denoising, OOD detection, and image composition. (Source: HuggingFace Daily Papers)
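Sampling by gradient descent on a time-independent landscape can be shown with a toy example. This is a minimal sketch, not the EqM implementation: the quadratic energy, the `TARGET` point, and the step settings are all assumptions, and `energy_grad` stands in for what would be a learned network.

```python
import random

# Hypothetical single "data point" at which the toy energy is minimal.
TARGET = (1.0, -2.0)


def energy_grad(x):
    """Gradient of the toy energy E(x) = 0.5 * ||x - TARGET||^2.

    Stand-in for a learned gradient field. Note that it takes no time
    or noise-level argument, unlike a diffusion or flow model.
    """
    return tuple(xi - ti for xi, ti in zip(x, TARGET))


def sample(steps=200, step_size=0.1, seed=0):
    """Start from Gaussian noise and descend the energy landscape."""
    rng = random.Random(seed)
    x = tuple(rng.gauss(0.0, 1.0) for _ in TARGET)
    for _ in range(steps):
        g = energy_grad(x)
        x = tuple(xi - step_size * gi for xi, gi in zip(x, g))
    return x


print(sample())
```

Because the gradient field does not depend on a timestep, the number of steps and the step size become free choices of the optimizer rather than a fixed schedule, and in this toy case the sample simply converges to the energy minimum.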

💼 Business

OpenAI and AMD Strike Chip Partnership, Challenging NVIDIA’s Dominance : OpenAI has signed a five-year, multi-billion dollar chip partnership agreement with AMD, aiming to challenge NVIDIA’s dominance in the AI chip market. This move is part of OpenAI’s strategy to diversify its chip supply, having previously also partnered with NVIDIA. This agreement highlights the immense demand for high-performance computing hardware in the AI industry and the pursuit of supply chain diversity. (Source: MIT Technology Review)

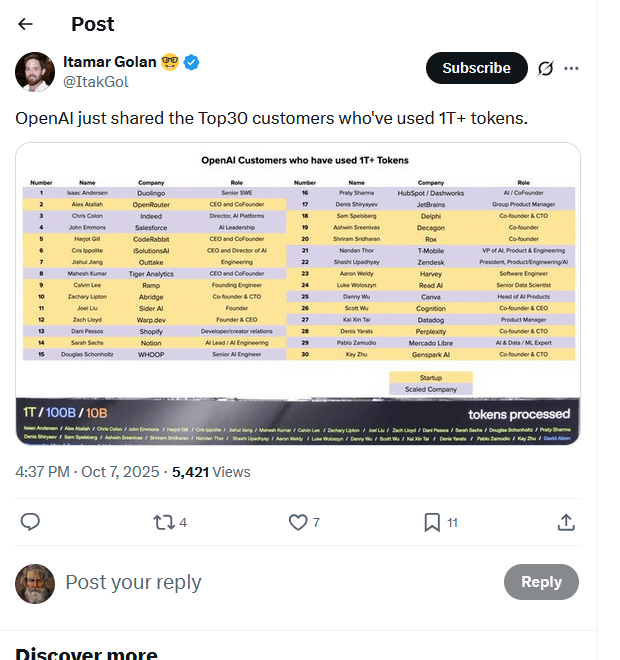

OpenAI Top Customer List Leaked, 30 Companies Consume Trillions of Tokens : A list, purportedly of OpenAI’s top customers, has circulated online, showing 30 companies that have each processed over a trillion tokens through its models. The list (which includes Duolingo, OpenRouter, Salesforce, Canva, and Perplexity, among others) reveals the rapid formation of an AI inference economy and points to four main customer types: AI-native builders, AI integrators, AI infrastructure providers, and vertical AI solution providers. Token consumption is increasingly seen as a new benchmark for measuring the true value and business progress of AI applications. (Source: Reddit r/ArtificialInteligence, 量子位)

Singapore Becomes Copyright Safe Harbor for AI Development, Attracting Global AI Companies : Singapore has amended its Copyright Act, introducing a computational analysis defense clause that explicitly exempts computational data analysis performed to improve AI systems from copyright infringement, even preventing contractual overrides. This move aims to position Singapore as the most attractive global hub for AI model development, drawing investment and innovation, in stark contrast to the cautious approach to AI copyright in regions like Europe and the US. Although the protection is limited to Singapore, it provides significant safeguards for foundational model development. (Source: Reddit r/ArtificialInteligence)

🌟 Community

AI Industry Funding Deals and Bubble Concerns : Social media users are questioning AI industry funding deals, with many arguing that numerous transactions look more like attempts to artificially inflate stock prices than investments based on actual value. Some commenters note that many AI products have yet to deliver practical results in local or regional markets, and that businesses are instead complaining the products do not work. They read this as market speculation rather than genuine capital formation with spillover effects. A separate thread debates whether AI digital twins in marketing are ‘hype or future.’ (Source: Reddit r/ArtificialInteligence)

ChatGPT Content Moderation and User Experience Controversy : ChatGPT users complain that the platform’s content moderation is overly strict, even flagging simple recipe requests or hugs between characters as ‘suggestive content,’ while being less sensitive to violent content. Users believe ChatGPT has become ‘garbage’ and ‘overprotective,’ questioning whether OpenAI has lost its top talent. Additionally, users have reported issues with LaTeX rendering in the ChatGPT App. This has led some users to cancel subscriptions, calling on OpenAI to stop stifling creativity. (Source: Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ChatGPT, jeremyphoward)

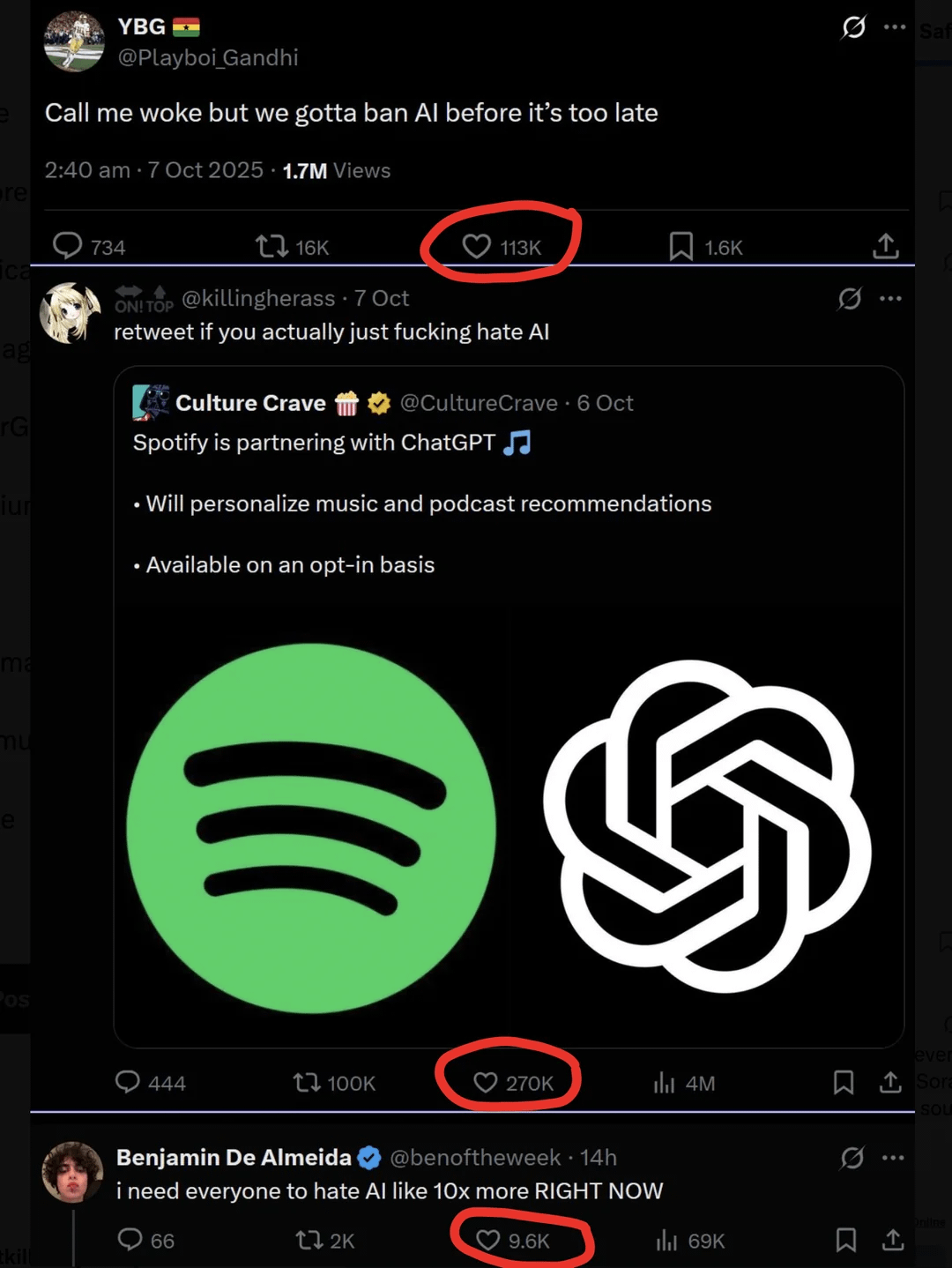

AI Content Backlash and Creator Concerns : As AI-generated content becomes increasingly prevalent, strong anti-AI sentiment has emerged in society, particularly in the arts and creative fields. Prominent creators like YouTuber MrBeast have expressed concerns that AI videos could threaten the livelihoods of millions of creators. Taylor Swift’s fans also criticized her AI-generated promotional video as ‘cheap and rough.’ This backlash reflects creators’ anxiety about AI technology impacting traditional industries, as well as concerns about content quality and authenticity. (Source: Reddit r/artificial, MIT Technology Review)

AI’s Application in Gaming: Challenges and Prospects : The community discussed the powerful, creative, interesting, and dynamic characteristics of AI technology, but questioned why no popular game has widely adopted AI yet. Some believe AI is already widely used in development, but running AI models locally is costly, and game developers tend to control the narrative. Others suggest that developers only focus on traditional blockbusters, neglecting new creative ideas. This reflects the technical, cost, and creative challenges AI faces in being implemented in the gaming sector. (Source: Reddit r/artificial)

Perplexity’s Utility in AI Search Highlighted by Cristiano Ronaldo’s Use Case : Football superstar Cristiano Ronaldo used the AI search tool Perplexity while preparing his Prestige Globe Award speech. He stated that Perplexity helped him understand the award’s significance and overcome nervousness. This event was widely publicized by Perplexity officially and on social media, highlighting the practical value of AI search in providing fast, accurate information, and its promotional potential through celebrity endorsement. (Source: AravSrinivas, AravSrinivas)

Google AI Research Wins Nobel Prizes Amidst Related Controversies : Google-affiliated researchers have garnered three Nobel Prizes within two years, with laureates including Demis Hassabis (AlphaFold) and Geoff Hinton (AI). The achievement is seen as a testament to Google’s long-term research investment and ambition. However, Jürgen Schmidhuber has accused the work behind the 2024 Nobel Prize in Physics of plagiarism, arguing that its findings overlap heavily with earlier research that was not properly cited, sparking discussion of academic ethics and attribution in the AI field. (Source: Yuchenj_UW, SchmidhuberAI)

Philosophical Debate on AI Computing Demands and the Path to AGI/ASI : Regarding the vast computing resources required for AI video generation, some argue that such enormous computational investment, driven by real demand, paradoxically indicates that Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI) remain distant fantasies. This discussion reflects the industry’s contemplation of AI development paths: whether current AI successes in specific applications might divert resources and delay the achievement of grander goals. Concurrently, Richard Sutton emphasizes that the essence of learning is active agent behavior, not passive training. (Source: fabianstelzer, Plinz, dwarkesh_sp)

AI’s Impact on the Labor Market: PhD Data Labelers See Hourly Wages Decline : Social media discussions indicate that due to an oversupply of PhD data labelers, their hourly wages have dropped from $100/hour to $50/hour. Previously, OpenAI had hired PhDs for data labeling at $100/hour. This phenomenon reflects intensified competition in the AI data labeling market and shifts in the demand for high-quality data labeling talent. (Source: teortaxesTex)

AI-Assisted Software Engineer Tools Secure Funding and Their Impact on Careers : Relace, a startup focused on providing tools for AI-driven software engineers, secured $23 million in Series A funding led by Andreessen Horowitz. This signifies that the AI toolchain is extending into deeper realms of AI-driven autonomous development. Concurrently, engineers discussed how AI coding tools are changing their workflows, believing that while proficiency with AI tools is important, human creativity and problem-solving abilities remain core values. (Source: steph_palazzolo, kylebrussell)

The Rise and Fall of the Vibe Coding Cultural Phenomenon : Social media discussions have explored the rise and fall of the ‘Vibe Coding’ concept. Some consider Vibe Coding a way to program in a relaxed atmosphere, while others believe it has ‘died out.’ Related discussions also mentioned AI-generated content like ‘Bob Ross vibe coding,’ reflecting the developer community’s exploration and reflection on programming culture and AI-assisted coding methods. (Source: arohan, Ronald_vanLoon, nptacek)

💡 Other

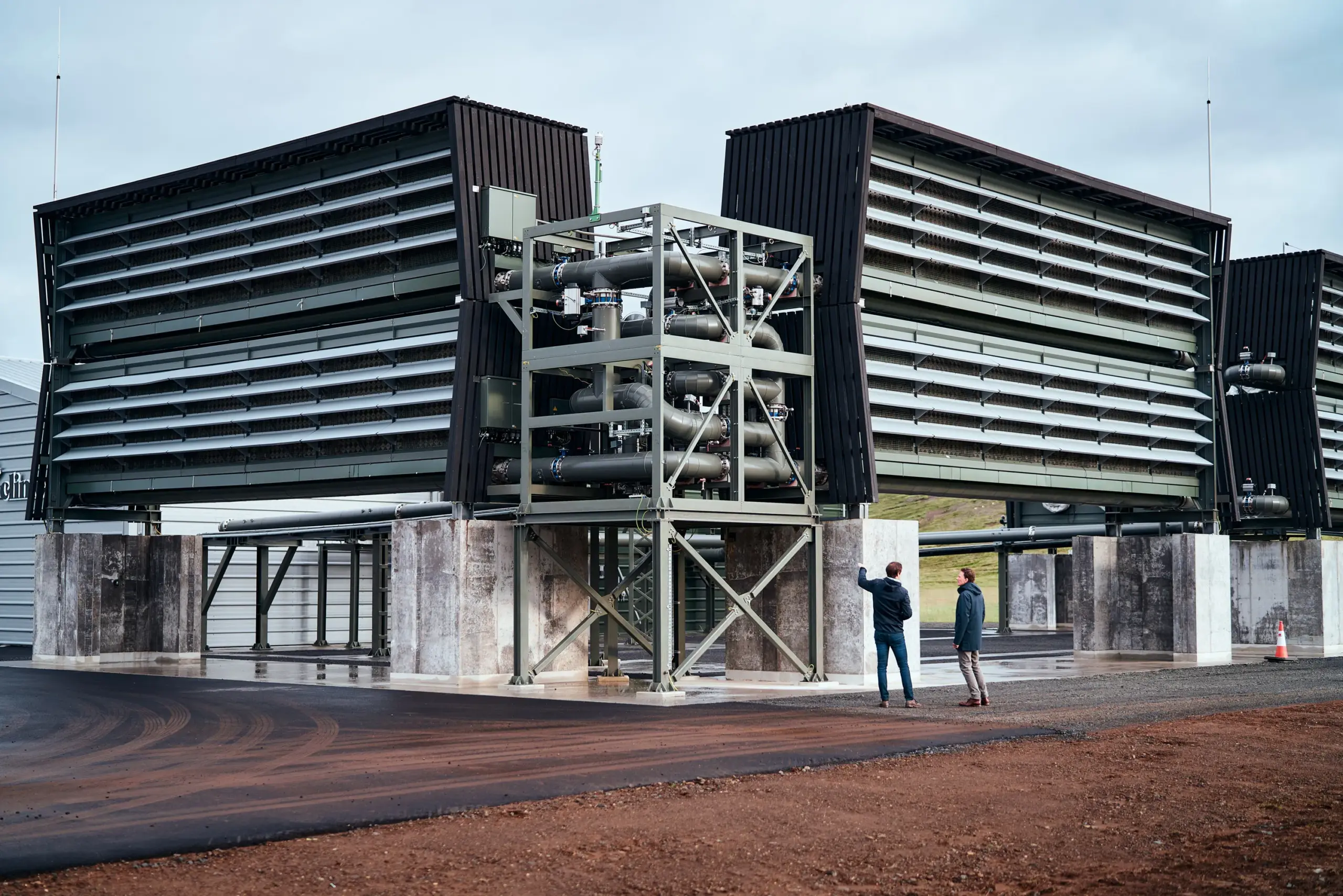

US Government May Cancel Billions in Funding for Carbon Capture Plants : The US Department of Energy may terminate billions of dollars in funding for two large direct air carbon capture plants. These projects were originally slated to receive over $1 billion in government grants but are currently marked for ‘termination.’ The Department of Energy says no final decision has been made, though it previously terminated over 200 projects to save $7.5 billion. The uncertainty has raised industry concerns about US climate technology development and international competitiveness. (Source: MIT Technology Review)

New Advances in Robotics: From Flexible Wrists to Bionic Beetles and Humanoid Robots : Several advancements have been made in the field of robotics. A new parallel robotic wrist achieves flexible, human-like movements in confined spaces, enhancing operational precision. Concurrently, researchers are developing bionic robotic beetles with backpacks, intended for disaster search and rescue. Furthermore, reports have shown humanoid robots interacting with motorcycles, demonstrating the capabilities of bionic technology in simulating human behavior. These advancements collectively push the boundaries of robotic technology in terms of adaptability to complex environments and human-robot interaction. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)