Keywords: OpenAI, xAI, AI Models, Trade Secrets, Talent Competition, Sora 2, Data Centers, NVIDIA, OpenAI and xAI Trade Secret Lawsuit, AI-Written Academic Papers, Sora 2 Cross-Modal Reasoning Ability, Stargate Data Center Project, NVIDIA Market Cap Surpasses $4 Trillion

🔥 Focus

OpenAI and xAI Trade Secret Lawsuit Escalates: OpenAI has forcefully rejected the trade-secret theft allegations brought by Elon Musk’s xAI, calling them “bullying tactics” meant to intimidate employees. OpenAI denies stealing any trade secrets and says that employees leaving xAI reflect xAI’s own internal issues rather than poaching. The case involves former xAI engineers such as Xuechen Li and Jimmy Fraiture, who are accused of leaking secrets, as well as a dispute over the move of a former finance executive, and it exposes the fierce competition for talent and technology among AI giants. (Source: 量子位, mckbrando)

AI Autonomously Completes 30-Page Academic Paper, Including Experiments and Analysis: An AI system named “Virtuous Machines” autonomously produced a 30-page cognitive psychology paper in 17 hours at a cost of $114. The system automated the entire process, from topic selection and experimental design (recruiting 288 human participants) to data analysis and drafting, citing more than 40 real references and following APA format. Although the paper still has minor flaws, such as some theoretical misunderstandings, the result shows AI’s growing autonomy and collaborative capability in complex research tasks. (Source: 量子位)

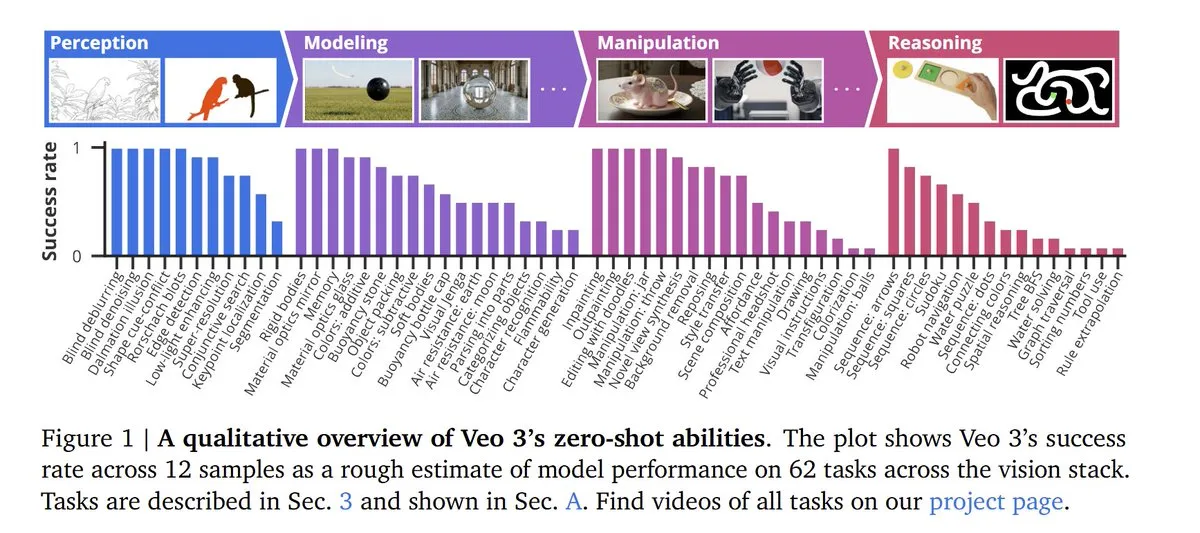

OpenAI Sora 2 Demonstrates Cross-Modal Reasoning and Strict Account Policies: OpenAI released Sora 2, a flagship video and audio generation model that also shows surprising cross-modal reasoning ability, scoring 55% on LLM benchmark questions. This suggests that training on massive amounts of video data can give a model emergent visual reasoning abilities it was never explicitly trained for. Sora 2’s updated terms of use emphasize strict account linking: a ban on Sora results in a permanent ban of the linked ChatGPT account. (Source: dl_weekly, NerdyRodent, BlackHC, menhguin, Teknium1, Reddit r/LocalLLaMA)

OpenAI, Oracle, and SoftBank Launch Trillion-Dollar “Stargate” Data Center Plan: OpenAI, together with Oracle and SoftBank, announced the “Stargate” data center construction plan, costing up to $1 trillion and aiming to deploy 20 gigawatts of capacity worldwide. Oracle will handle construction, while NVIDIA will supply 31,000 GPUs and has committed to invest $100 billion in OpenAI. This represents an unprecedented scale of investment and expansion in AI infrastructure. (Source: DeepLearningAI)

🎯 Trends

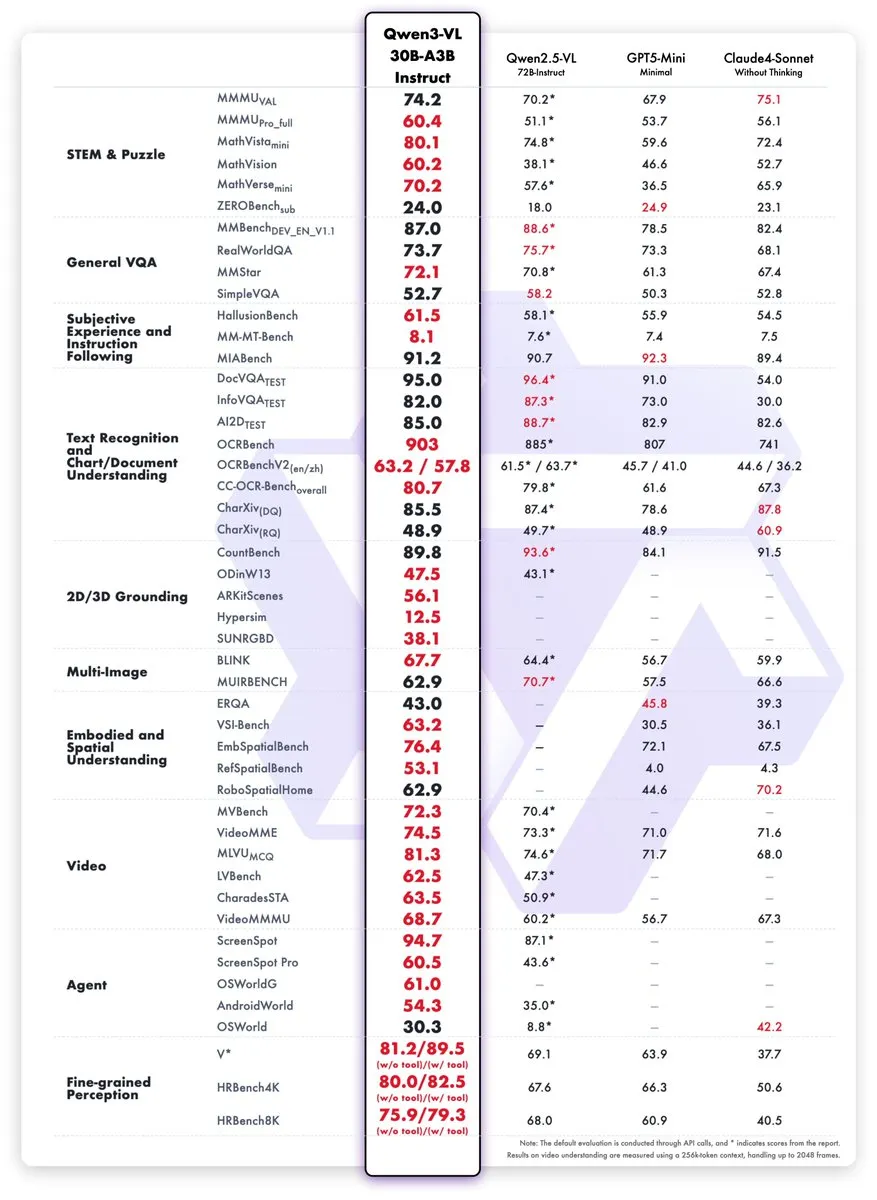

New Qwen3-VL Models Released, Performance Rivals GPT-5 Mini: The Qwen team released the Qwen3-VL-30B-A3B-Instruct and Thinking models. These small models (3B active parameters) match or even surpass GPT-5 Mini and Claude Sonnet 4 on STEM, VQA, OCR, video, and Agent tasks, and FP8 versions are available to make multimodal AI applications more efficient to run. (Source: mervenoyann, slashML, reach_vb)

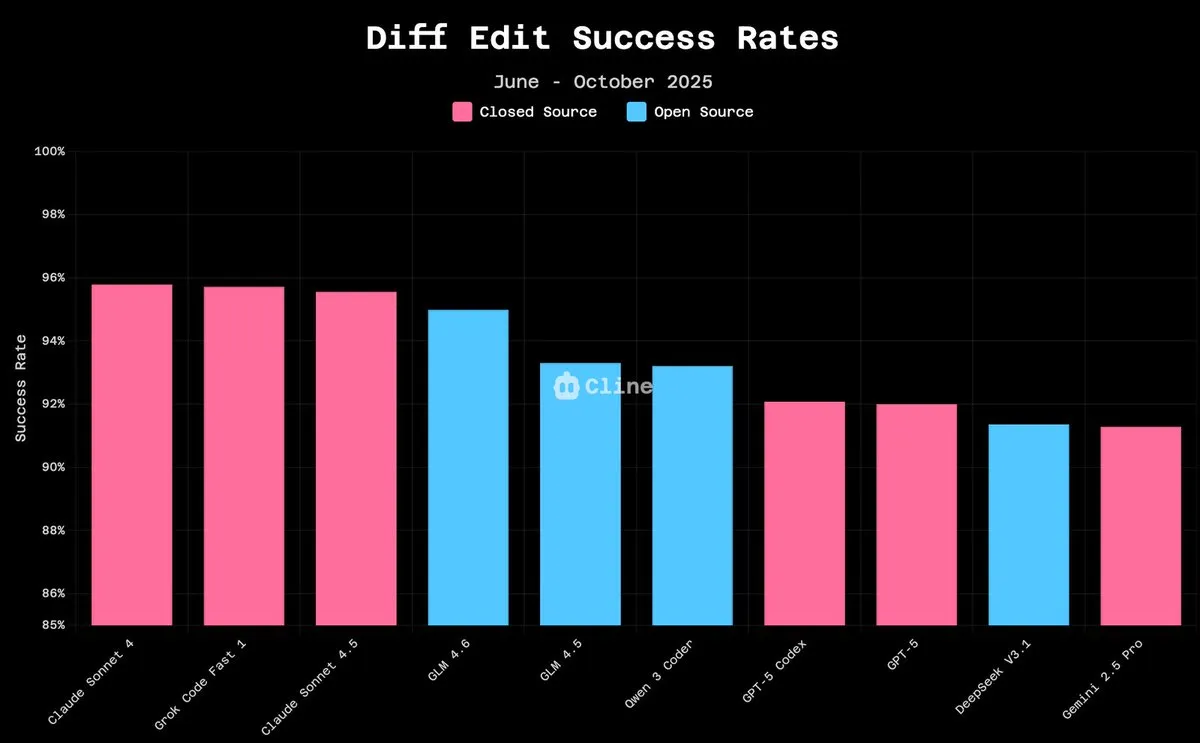

GLM-4.6 Shows Strong Performance in LLM Arena, Open-Source Models Catching Up Fast: GLM-4.6 ranked fourth in the LLM Arena and rises to second place when style control is removed. In code editing tasks, its success rate is close to Claude 4.5’s (94.9% vs. 96.2%) at only 10% of the cost. Open-source models such as Qwen3 Coder and GLM-4.5-Air can now run on consumer-grade hardware, showing that open-source AI models are quickly improving in performance while lowering the barrier to entry. (Source: teortaxesTex, teortaxesTex, Tim_Dettmers, Teknium1, Teknium1, _lewtun, Zai_org)

Kinetix AI Launches 3D-Aware AI Camera Control, Revolutionizing Video Creation: Kinetix AI has introduced 3D-aware AI camera control, offering pans, close-ups, and dynamic shots while keeping depth, physics, and continuity consistent. This lets creators achieve cinematic storytelling without professional crews or equipment, with potential applications in independent filmmaking, immersive advertising, and brand storytelling, effectively “software-izing” cinematic language. (Source: Ronald_vanLoon)

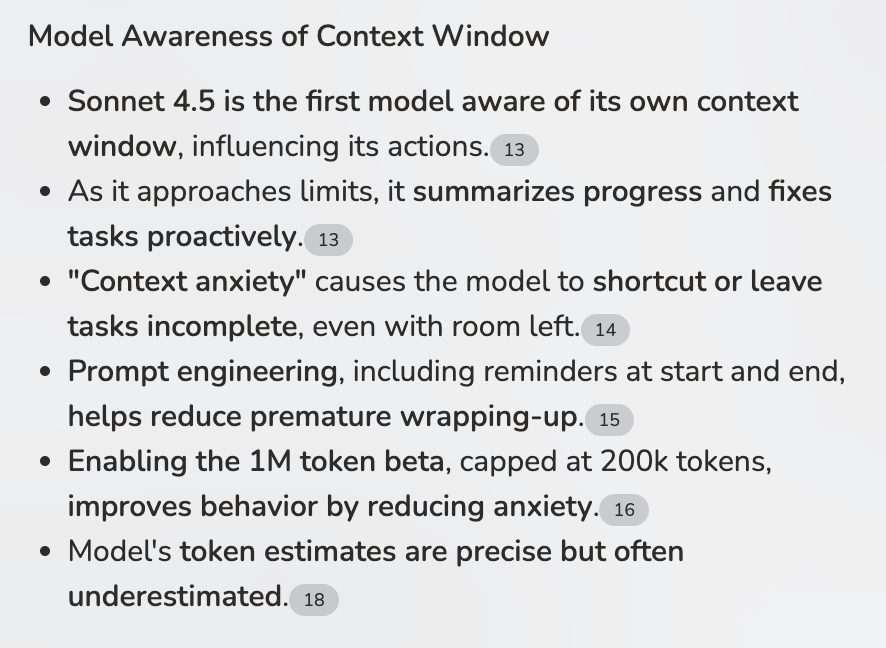

LLM Context Awareness Enhances Agent Workflows: Claude Sonnet 4.5 shows awareness of its own context window, which is crucial for MCP (Model Context Protocol) workflows. By summarizing intelligently to avoid context overload, Sonnet 4.5 is poised to become the first LLM capable of handling context-intensive MCP tasks and executing complex agentic steps, significantly improving Agent efficiency and robustness. (Source: Reddit r/ClaudeAI)
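The summarize-to-stay-within-budget behavior can be illustrated with a toy context buffer. This is a hypothetical sketch, not Anthropic’s mechanism: it assumes a crude word-count proxy for tokens (`count_tokens`) and a stub `summarize` function standing in for an LLM call, and it folds the oldest messages into a summary whenever the budget is exceeded.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    # Crude proxy: whitespace-separated words (a real agent would use the model's tokenizer).
    return len(text.split())


def summarize(messages: list[str]) -> str:
    # Hypothetical stand-in for an LLM call that condenses older turns.
    return f"[summary of {len(messages)} earlier messages]"


@dataclass
class ContextBuffer:
    budget: int  # hypothetical token budget the agent allows itself
    messages: list[str] = field(default_factory=list)

    def total(self) -> int:
        return sum(count_tokens(m) for m in self.messages)

    def add(self, message: str) -> None:
        self.messages.append(message)
        # While over budget, fold the two oldest entries into one summary,
        # always keeping the newest message verbatim.
        while self.total() > self.budget and len(self.messages) > 2:
            self.messages = [summarize(self.messages[:2])] + self.messages[2:]
```

Folding keeps the most recent turn intact while older history shrinks to a short summary; a real agent would also carry key facts forward inside that summary.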

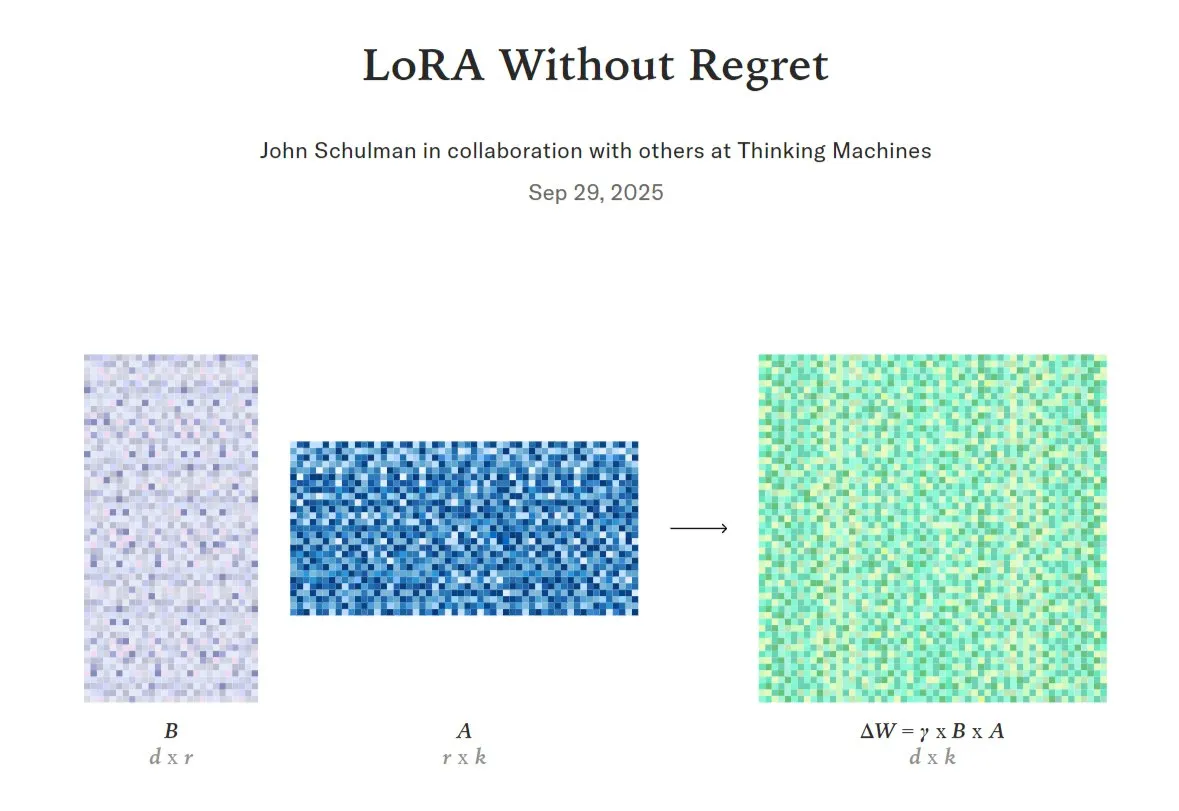

Comparison and Optimization of AI Model LoRA Fine-tuning vs. Full Fine-tuning: Researchers are studying when LoRA (Low-Rank Adaptation), an efficient LLM fine-tuning technique, can match or even surpass full fine-tuning in quality and data efficiency. They have proposed the concept of “low regret zones”; some projects have already implemented LoRA support internally, while others hope to verify whether LoRA can reproduce the performance of models such as DeepSeek-R1-Zero, pointing to LoRA’s considerable potential for model optimization. (Source: TheTuringPost, johannes_hage, iScienceLuvr)
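For readers new to LoRA, the core idea fits in a few lines: the pretrained weight W stays frozen and only a low-rank update B·A, scaled by alpha/r, is trained. The sketch below is a minimal pure-Python illustration of that standard formulation; the class and function names are hypothetical, and training itself is omitted.

```python
import random


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    # (n x k) @ (k x m) -> (n x m)
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


class LoRALinear:
    """y = x W^T + (alpha / r) * x A^T B^T, where W is frozen and only A, B are trained."""

    def __init__(self, weight, r, alpha, seed=0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight                                                   # frozen, (d_out, d_in)
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # (r, d_in)
        self.B = [[0.0] * r for _ in range(d_out)]                             # (d_out, r), zero-init
        self.scale = alpha / r

    def forward(self, x):
        # x has shape (batch, d_in)
        base = matmul(x, transpose(self.weight))
        delta = matmul(matmul(x, transpose(self.A)), transpose(self.B))
        return [[b + self.scale * d for b, d in zip(rb, rd)] for rb, rd in zip(base, delta)]


def trainable_params(d_out, d_in, r):
    # Full fine-tuning updates d_out * d_in weights; LoRA trains only r * (d_in + d_out).
    return d_out * d_in, r * (d_in + d_out)
```

Because B starts at zero, the adapted layer initially reproduces the base layer exactly. For a 4096 x 4096 projection with r = 8, `trainable_params(4096, 4096, 8)` gives 16,777,216 full weights versus 65,536 LoRA weights, about 0.4%.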

Huawei Launches Open-Source SINQ Technology to Significantly Compress LLMs and Reduce Resource Consumption: Huawei has released SINQ, an open-source quantization technique designed to substantially shrink Large Language Models (LLMs) so that they run efficiently on fewer hardware resources while preserving model performance. It is expected to further lower the barrier to deploying LLMs, letting more users and devices take advantage of advanced AI capabilities. (Source: Reddit r/LocalLLaMA)
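The post gives no algorithmic detail, and SINQ’s own method is not reproduced here. As background only, the sketch below shows plain per-row round-to-nearest 4-bit quantization, the simple baseline that more advanced quantizers improve on. Storing 4-bit integers plus one scale per row instead of 16-bit floats is where a roughly 4x size reduction comes from.

```python
def quantize_rows(weight, bits=4):
    """Symmetric per-row (absmax) round-to-nearest quantization; a generic baseline, not SINQ."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit integers
    codes, scales = [], []
    for row in weight:
        amax = max(abs(v) for v in row) or 1.0  # avoid dividing by zero on all-zero rows
        scale = amax / qmax
        scales.append(scale)
        # Round each weight to the nearest integer code and clamp to the signed range.
        codes.append([max(-qmax - 1, min(qmax, round(v / scale))) for v in row])
    return codes, scales


def dequantize_rows(codes, scales):
    # Reconstruct approximate weights: code * per-row scale.
    return [[c * s for c in row] for row, s in zip(codes, scales)]
```

Each reconstructed weight is within half a quantization step (scale / 2) of the original, which is the error that smarter scaling schemes try to shrink.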

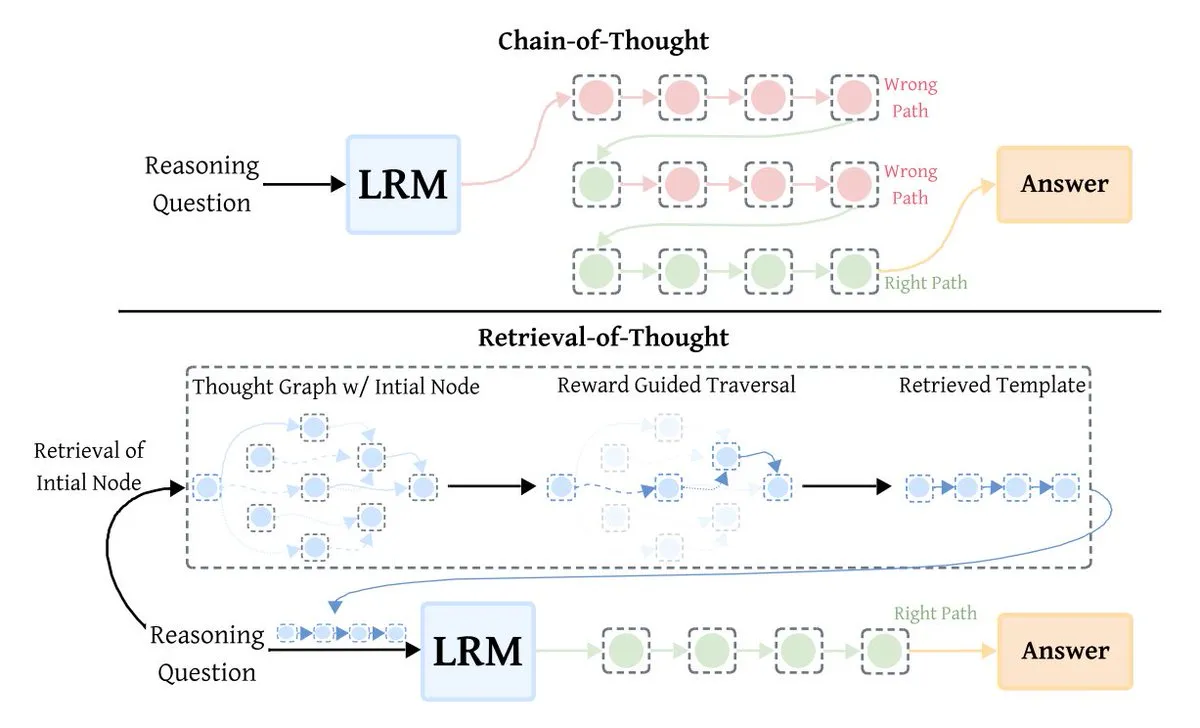

LLM Optimization Technique Retrieval-of-Thought (RoT) Boosts Inference Efficiency: Retrieval-of-Thought (RoT) is a new LLM inference optimization technique that speeds up inference by reusing earlier reasoning steps as templates. RoT reportedly reduces output tokens by up to 40%, inference latency by 82%, and cost by 59% without sacrificing accuracy, a substantial efficiency gain for large-model inference. (Source: TheTuringPost)
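The actual RoT retrieval and template-assembly pipeline is not reproduced here. As a toy illustration of the core idea, reusing stored reasoning steps when a new problem resembles an old one, the sketch below uses bag-of-words cosine similarity as a stand-in for a real embedding model; `ThoughtStore`, `build_prompt`, and the 0.3 similarity threshold are all hypothetical.

```python
import math
from collections import Counter


def embed(text):
    # Bag-of-words vector; a stand-in for a real sentence-embedding model.
    return Counter(text.lower().split())


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class ThoughtStore:
    def __init__(self):
        self.entries = []  # (embedding, problem text, reasoning steps)

    def add(self, problem, steps):
        self.entries.append((embed(problem), problem, steps))

    def retrieve(self, query, min_sim=0.3):
        # Return the steps of the most similar stored problem, if it is similar enough.
        q = embed(query)
        scored = [(cosine(q, e), steps) for e, _, steps in self.entries]
        if not scored:
            return None
        best_sim, best_steps = max(scored, key=lambda t: t[0])
        return best_steps if best_sim >= min_sim else None


def build_prompt(store, query):
    template = store.retrieve(query)
    if template is None:
        return f"Solve step by step: {query}"
    steps = "\n".join(f"{i}. {s}" for i, s in enumerate(template, 1))
    return f"Reuse this reasoning template where it applies:\n{steps}\nProblem: {query}"
```

When no stored problem is similar enough, the sketch falls back to an ordinary prompt and the model reasons from scratch.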

Tesla Optimus Robot Learns Kung Fu, Demonstrating AI-Driven Capabilities: Tesla released a video of its Optimus robot learning Kung Fu, with Elon Musk stressing that the robot was entirely AI-driven rather than teleoperated. This points to significant progress in both Optimus’s software and hardware and to the potential of humanoid robots for learning complex movements autonomously. (Source: teortaxesTex, Teknium1)

🧰 Tools

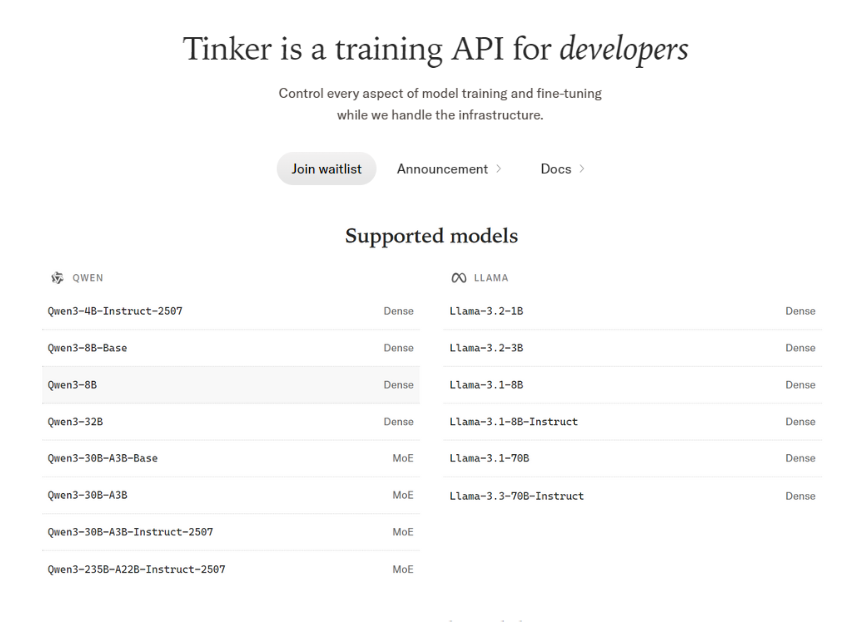

Tinker API: Flexible LLM Fine-tuning Platform: Tinker provides a flexible API for fine-tuning large language models, running training loops on distributed GPUs and supporting open models such as Llama and Qwen, including large MoE models. Users keep full control over training loops, algorithms, and loss functions while Tinker handles scheduling, resource allocation, and fault recovery; it also uses LoRA fine-tuning so that compute resources can be shared efficiently. (Source: TheTuringPost)

Codex Supports Custom Prompt Templates, Enhancing Prompt Engineering Flexibility: Codex (version 0.44 and later) now supports custom prompt templates: variables can be defined inside a template and filled in by passing parameters. This makes prompt engineering considerably more flexible and efficient, letting developers customize and reuse prompts for specific needs. (Source: dotey)
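The general mechanism, placeholders in a saved prompt filled in from parameters at call time, can be sketched with Python’s standard `string.Template`. This is an illustration only: the `$NAME` placeholder style, the `KEY=value` argument format, and the names `REVIEW_PROMPT`, `parse_args`, and `render` are assumptions, not Codex’s documented syntax.

```python
from string import Template

# Hypothetical saved prompt; Codex's real placeholder syntax may differ.
REVIEW_PROMPT = Template(
    "Review the changes in $FILE.\n"
    "Focus on: $FOCUS.\n"
    "Reply in $LANGUAGE."
)


def parse_args(args):
    # Turn ["KEY=value", ...] into {"KEY": "value", ...}.
    params = {}
    for arg in args:
        key, sep, value = arg.partition("=")
        if not sep:
            raise ValueError(f"expected KEY=value, got {arg!r}")
        params[key] = value
    return params


def render(template, args):
    # substitute() raises KeyError for a missing variable, which surfaces typos early.
    return template.substitute(parse_args(args))
```

Usage: `render(REVIEW_PROMPT, ["FILE=src/app.py", "FOCUS=error handling", "LANGUAGE=English"])` fills all three placeholders, so one saved prompt can be reused across files and review goals.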

vLLM: Open-Source Engine for Efficient LLM Inference: vLLM has quickly become the go-to open-source engine for efficient Large Language Model inference, striking a good balance between performance and developer experience. Companies such as NVIDIA contribute to it directly to advance open-source AI infrastructure, making it a key component of large-scale AI applications. (Source: vllm_project)

ChatGPT Generates Webcomic, Demonstrating Creative Content Generation Potential: A user successfully leveraged ChatGPT to transform a simple joke into a webcomic, demonstrating AI’s powerful capabilities in creative content generation. This indicates that ChatGPT can not only process text but also assist users in realizing ideas for visual storytelling. (Source: Reddit r/ChatGPT)

AI-Driven Stock Trading Bot Achieves 300% Returns: Working with ChatGPT, Claude, and Grok, a user spent four months developing an AI-driven stock trading bot named “News_Spread_Engine.” The bot identifies credit-spread trades using real-time market data and news filtering; the author claims returns of roughly 300% and a 70-80% win rate. The code is open-source. (Source: Reddit r/ChatGPT)
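The post does not explain how News_Spread_Engine picks trades. As background on the instrument itself, the sketch below computes the expiration payoff of a put credit spread (sell a higher-strike put, buy a lower-strike put for protection), one common kind of credit spread. The class name is hypothetical and all values are per share.

```python
from dataclasses import dataclass


@dataclass
class PutCreditSpread:
    short_strike: float  # higher-strike put that is sold
    long_strike: float   # lower-strike put that is bought as protection
    credit: float        # net premium received per share when opening

    def max_profit(self) -> float:
        # Both puts expire worthless: keep the full credit.
        return self.credit

    def max_loss(self) -> float:
        # Price ends below the long strike: lose the strike width minus the credit.
        return (self.short_strike - self.long_strike) - self.credit

    def breakeven(self) -> float:
        return self.short_strike - self.credit

    def pnl_at_expiry(self, price: float) -> float:
        short_put = max(self.short_strike - price, 0.0)  # owed on the sold put
        long_put = max(self.long_strike - price, 0.0)    # recovered from the bought put
        return self.credit - short_put + long_put
```

For a made-up 100/95 spread opened for a 1.50 credit, max profit is 1.50, max loss is 3.50, and breakeven is 98.50 per share (US equity option contracts usually cover 100 shares).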

Chutes CLI/Python SDK Supports Private Token Management: The command-line interface (CLI) and Python SDK for the AI tool Chutes now natively support management of private tokens (secrets). This makes it much simpler to use Hugging Face or other private tokens securely in Chutes deployments, improving both convenience and security. (Source: jon_durbin)

SmartMemory API/MCP: Cross-Platform LLM Adaptive Memory Solution: Users developed a Dockerized FastAPI service and a local Windows Python server (SmartMemory API/MCP) based on Open WebUI’s adaptive memory feature. This solution allows LLM Agents to retain and semantically retrieve information across different platforms (e.g., Claude Desktop), solving the problem of cross-platform memory migration and enhancing Agent utility. (Source: Reddit r/OpenWebUI)

Codex Code Review Becomes Indispensable Tool for Teams: Codex has proven highly valuable for code review and has become indispensable for some teams. Thanks to its strong engineering ergonomics, teams rate its feedback highly, some even making a Codex review mandatory before merging a PR, which significantly improves development efficiency and code quality. (Source: gdb)

📚 Learning

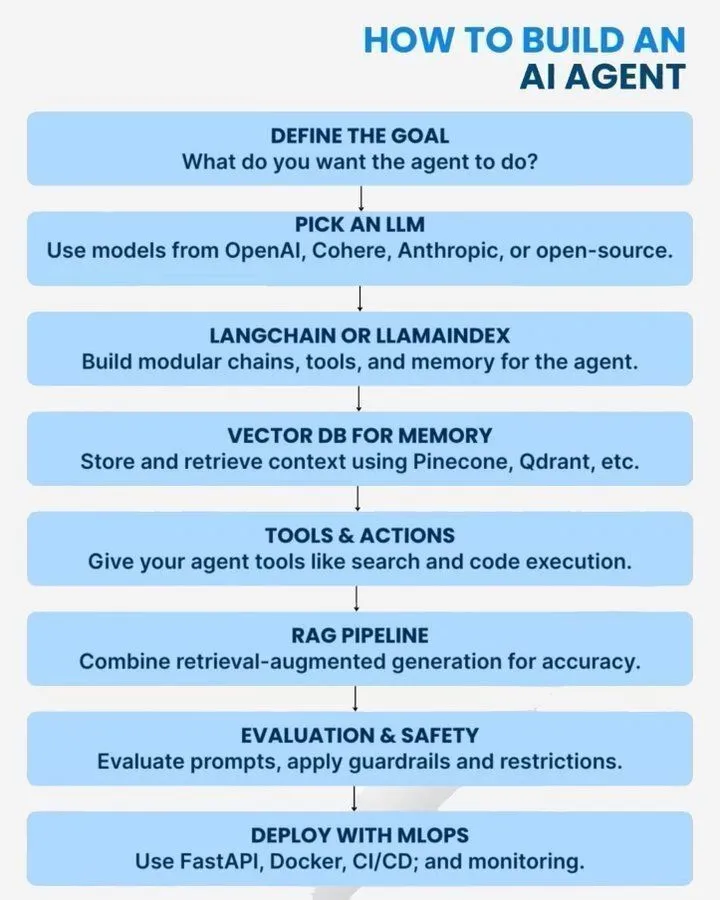

Guide to Building AI Agents and Common Mistakes: Provides practical guides on how to build AI Agents, architectural practices, and 10 common mistakes. These resources cover AI Agent types, scalability roadmaps, and considerations during development, aiming to help developers design and deploy AI Agents more effectively. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

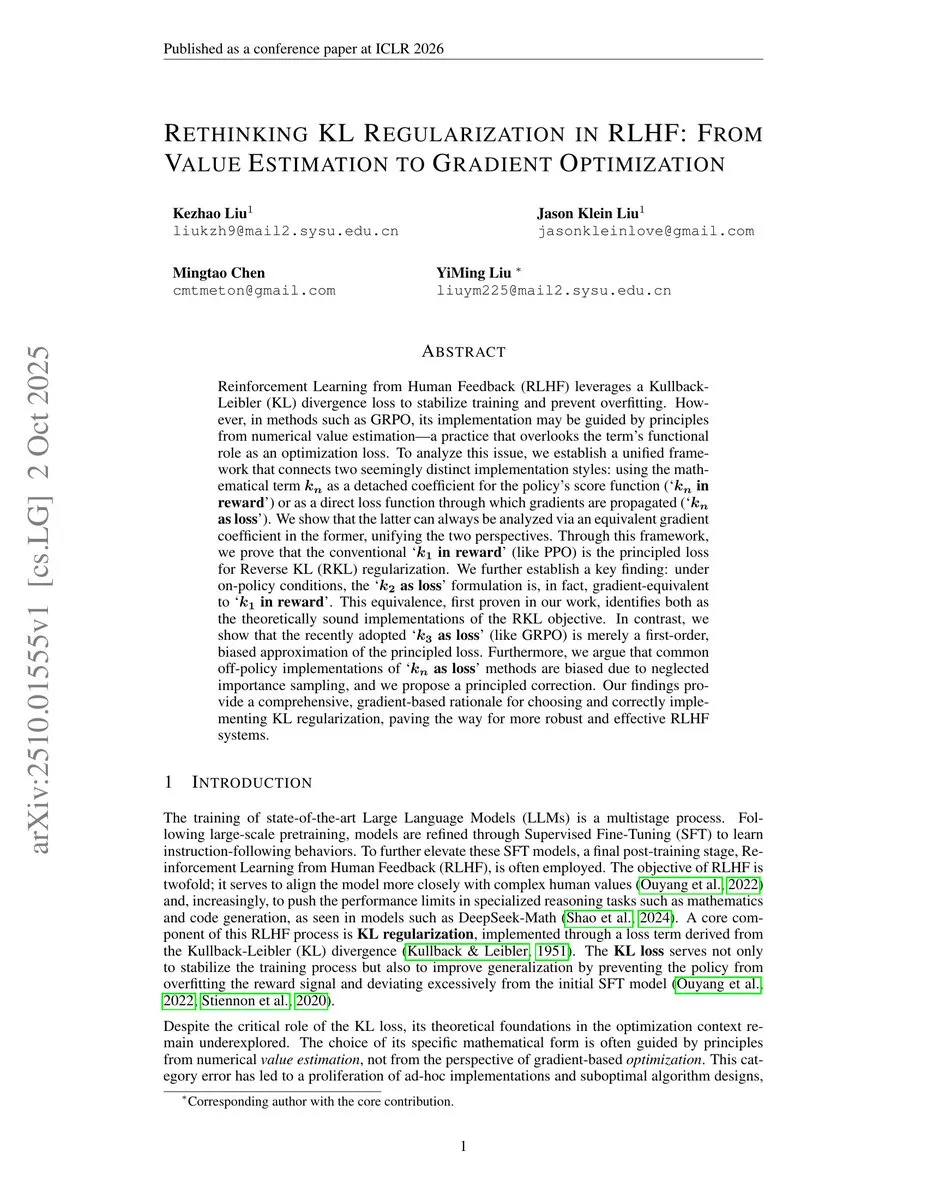

Analysis of KL Estimators in Reinforcement Learning: An in-depth analysis of the KL estimators k1, k2, and k3 and of their use as a reward term or as a loss in reinforcement learning. The discussion notes that the RLHF (Reinforcement Learning from Human Feedback) framing can be misleading and that, in practice, the setting is closer to RLVR (Reinforcement Learning with Verifiable Rewards), offering technical insights for RL research. (Source: menhguin)
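The k1/k2/k3 naming follows John Schulman’s note on approximating KL divergence. For a sample x drawn from q (in RLHF-style training, the current policy) and the ratio r = p(x)/q(x) with p as the reference policy, the three per-sample estimators of KL(q || p) can be sketched as follows; the helper names are illustrative.

```python
import math


def kl_estimators(log_q: float, log_p: float) -> tuple[float, float, float]:
    """Per-sample estimators of KL(q || p) for x ~ q, with log r = log p(x) - log q(x)."""
    log_r = log_p - log_q
    k1 = -log_r                       # unbiased, high variance, can be negative
    k2 = 0.5 * log_r ** 2             # biased, lower variance, always >= 0
    k3 = math.expm1(log_r) - log_r    # (r - 1) - log r: unbiased and always >= 0
    return k1, k2, k3


def expected_estimates(q, p):
    # Exact expectations under q for small discrete distributions (no sampling noise).
    totals = [0.0, 0.0, 0.0]
    for qx, px in zip(q, p):
        for i, k in enumerate(kl_estimators(math.log(qx), math.log(px))):
            totals[i] += qx * k
    return totals


def exact_kl(q, p):
    return sum(qx * math.log(qx / px) for qx, px in zip(q, p))
```

On a small discrete example, the expectations of k1 and k3 match the exact KL, while k2 trades a small bias for lower variance and guaranteed non-negativity.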

Anthropic’s Advice on Writing Effective AI Prompts: Anthropic shared tips and strategies for writing effective AI Prompts, aiming to help users interact better with AI models to obtain more precise and high-quality outputs. This guide offers practical guidance for improving AI application effectiveness and user experience. (Source: Ronald_vanLoon)

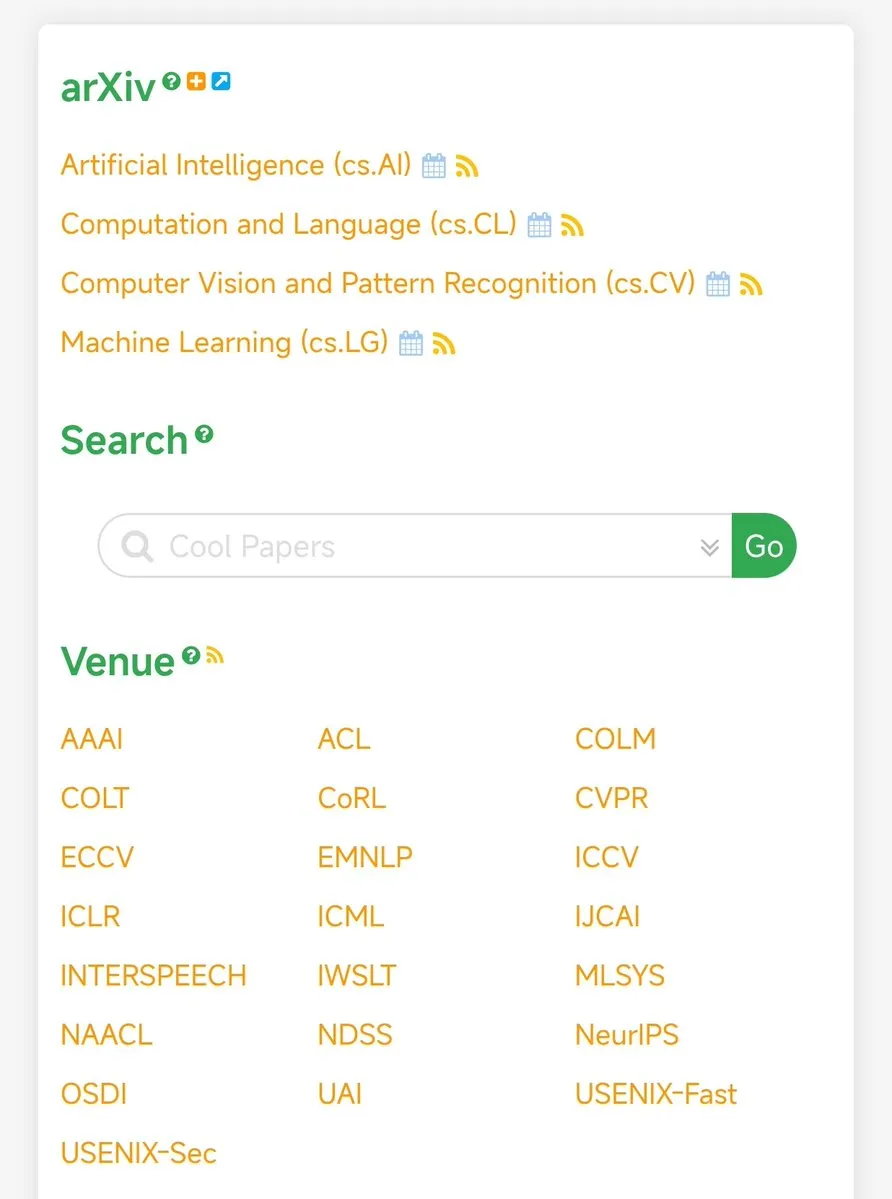

papers.cool: AI/ML Paper Curation Platform: papers.cool is recommended as a platform for curating AI/ML research papers, helping researchers and enthusiasts efficiently track and filter a vast number of the latest papers, addressing the challenge of the surge in RL paper publications. (Source: tokenbender)

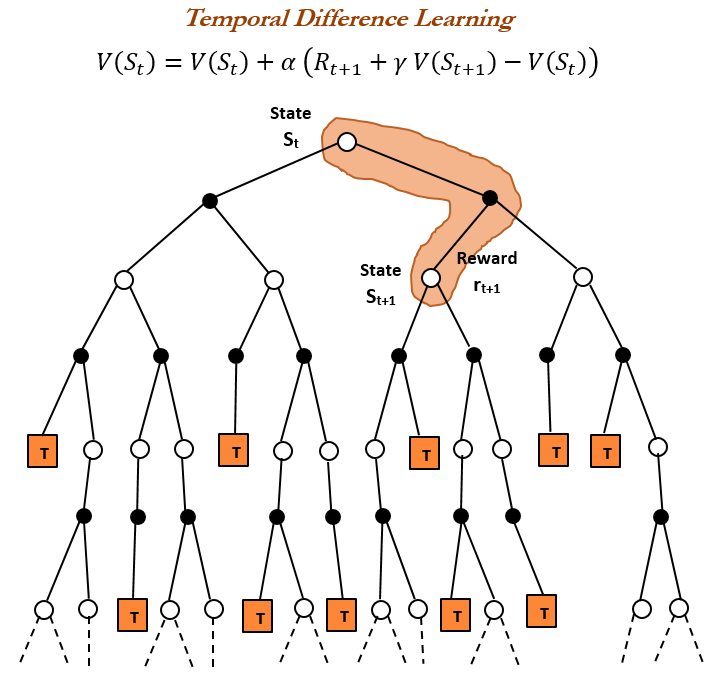

Reinforcement Learning Fundamentals: History and Principles of Temporal Difference (TD) Learning: Delves into the history and principles of Temporal Difference (TD) learning, a cornerstone of Reinforcement Learning (RL). TD learning, proposed by Richard S. Sutton in 1988, allows Agents to learn in uncertain environments by comparing successive predictions and making incremental updates, forming the basis of modern RL algorithms (e.g., Actor-Critic). (Source: TheTuringPost)
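The TD(0) rule at the heart of this idea moves a state’s value toward the one-step target r + γ·V(s'): V(s) ← V(s) + α[r + γ·V(s') − V(s)]. The minimal sketch below applies it to a made-up three-state chain; the environment and hyperparameters are illustrative.

```python
def td0_update(V, s, r, s_next, alpha, gamma, terminal):
    # One TD(0) step: bootstrap from the next state's current estimate.
    target = r if terminal else r + gamma * V[s_next]
    td_error = target - V[s]
    V[s] += alpha * td_error
    return td_error


def run_chain(episodes=500, alpha=0.1, gamma=0.9):
    # Deterministic chain A -> B -> C -> end; only the final step pays reward 1.
    transitions = [("A", 0.0, "B", False), ("B", 0.0, "C", False), ("C", 1.0, None, True)]
    V = {"A": 0.0, "B": 0.0, "C": 0.0}
    for _ in range(episodes):
        for s, r, s_next, terminal in transitions:
            td0_update(V, s, r, s_next, alpha, gamma, terminal)
    return V
```

With γ = 0.9 the true values are V(C) = 1, V(B) = 0.9, and V(A) = 0.81; each update uses only the next state’s current estimate rather than the episode’s final return, yet the values converge to these targets.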

AI Risk Assessment: 5 Questions COOs Should Ask: A guide for Chief Operating Officers (COOs) outlining 5 key questions to consider when assessing AI risks. This guide aims to help business leaders identify, understand, and manage potential risks arising from AI technology applications, ensuring the robustness and security of AI deployments. (Source: Ronald_vanLoon)

AI Research Directions: Efficient RL, Metacognitive Abilities, and Automated Scientific Discovery: Discusses exciting current research directions in AI, including more sample-efficient reinforcement learning, metacognitive abilities in models, active learning and curriculum methods, and automated scientific discovery. These directions foreshadow future breakthroughs for AI in learning efficiency, self-understanding, and knowledge creation. (Source: BlackHC)

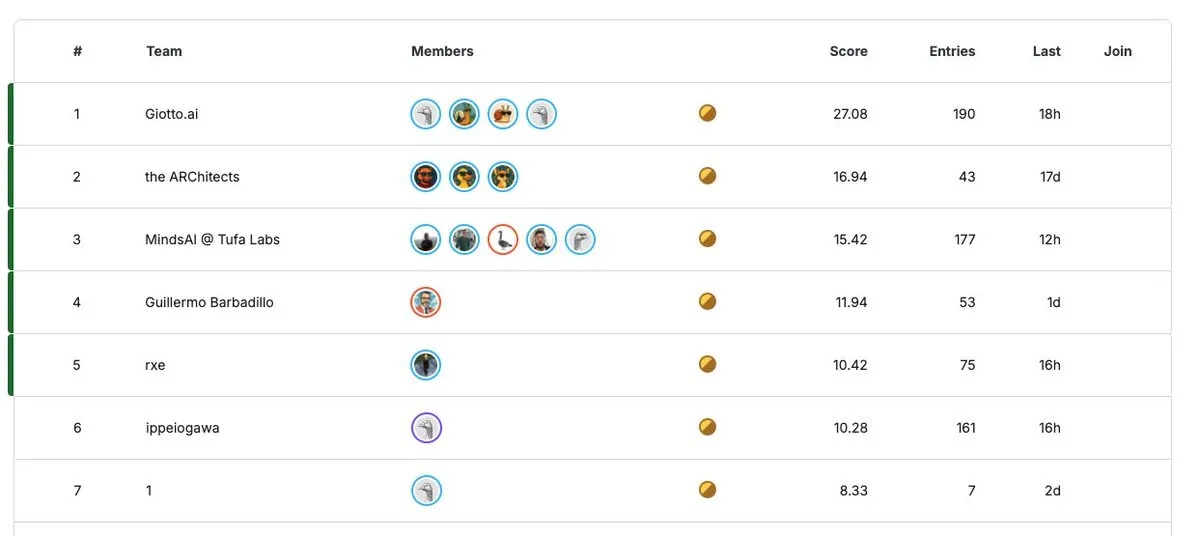

ARC Prize 2025: Million-Dollar AI Research Competition: The ARC Prize 2025 competition has entered its final 30 days, with a total prize pool of up to $1 million ($125,000 guaranteed this year). The competition aims to encourage innovation in AI research and draws researchers worldwide to submit breakthrough work. (Source: fchollet)

💼 Business

NVIDIA Market Cap Surpasses $4 Trillion, Highlighting Dominance in AI Hardware Market: NVIDIA’s market capitalization has surpassed $4 trillion for the first time, making it the first publicly traded company in the world to reach this milestone. This underscores NVIDIA’s dominance in AI hardware: its market cap now exceeds the combined value of the entire large pharmaceutical industry, reflecting the enormous market value created by demand for AI compute. (Source: SchmidhuberAI, aiamblichus)

JuLeBu Hiring Full-Stack Engineers, Focusing on AI + Language Education + Gamification: JuLeBu is hiring Full-Stack Engineers (Vue3+Node.js); the team works remotely, and the project direction focuses on AI + Language Education + Gamification. The company emphasizes technology-driven work, minimal internal friction, and seeks partners who are passionate about technology and eager to make an impact in the AI era. (Source: dotey)

AI Podcast Latent Space Hiring Researcher/Producer: The Latent Space podcast is hiring a Researcher/Producer, seeking intelligent candidates with strong technical backgrounds who are eager to grow in the San Francisco AI scene. This position offers free accommodation and a stipend, providing a unique opportunity for individuals aspiring to AI research and media production. (Source: swyx)

🌟 Community

Cultural Change Needed for AI Adoption: The discussion points out that AI adoption is not merely a technical issue at the algorithmic level but requires a cultural revolution. This emphasizes the importance of shifts in mindset, workflows, and values for organizations, society, and individuals when adapting to AI technology, calling for attention beyond technology itself to the profound social impacts of AI. (Source: Ronald_vanLoon)

Sora’s Disruptive Impact and Opportunities for the Creative Industry: Sora’s release is seen as a “creativity implosion” for the creative industry, as users can already produce high-quality short films at virtually zero cost. This heralds a sharp drop in the barriers to filmmaking, enabling even people in third-tier cities to create high-quality content for a global audience, while also disrupting existing industries and prompting reflection on the future creative ecosystem. (Source: bookwormengr, bookwormengr)

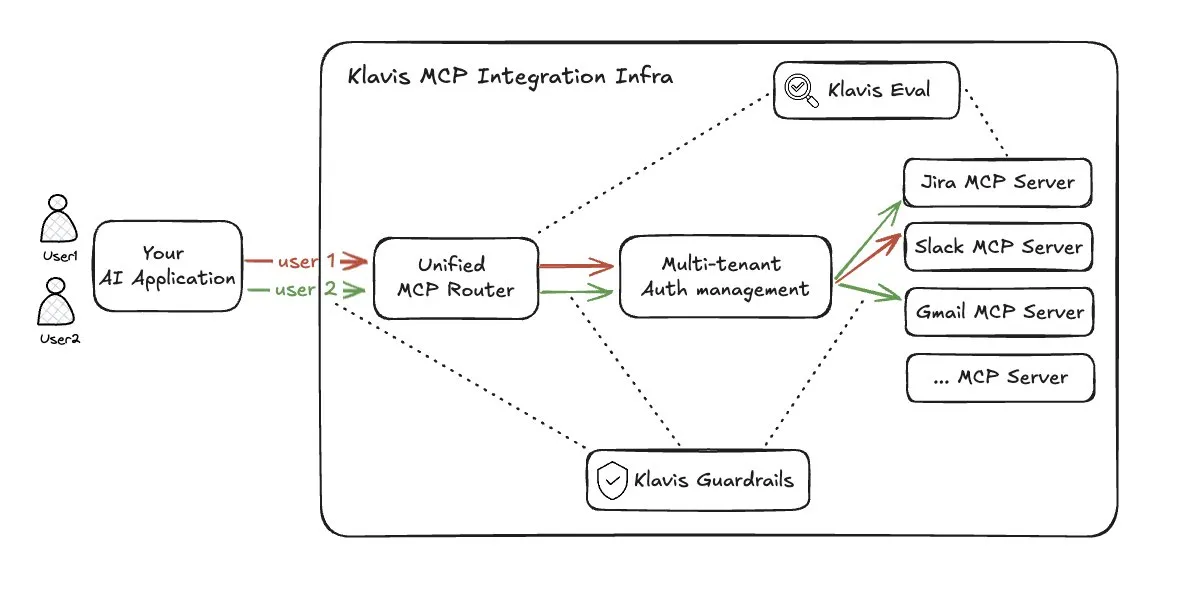

Challenges of LLM Agent Tool Routing Solutions: To address the index overload and context explosion caused by connecting too many MCP (Model Context Protocol) tools, Klavis_AI’s Strata proposes a tool-routing approach. However, this approach raises concerns about prompt-cache utilization, how transparent the available tool capabilities remain to the LLM, and the context limits of the routing model, suggesting it is not an ideal solution. (Source: dotey)
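Strata’s actual routing mechanism is not described in the post. The sketch below is a hypothetical keyword-overlap router that exposes only the top-k most relevant tool descriptions to the model instead of every connected tool; `Tool`, `route`, and the stopword list are illustrative assumptions. Because the exposed tool list changes from request to request, the prompt prefix changes too, which is exactly the prompt-cache concern raised about this kind of design.

```python
from dataclasses import dataclass

STOPWORDS = {"a", "an", "the", "to", "of", "and", "in", "for", "on", "my", "me"}


@dataclass(frozen=True)
class Tool:
    name: str
    description: str


def tokenize(text):
    # Lowercased words with punctuation and stopwords removed.
    return {w.strip(".,:;!?").lower() for w in text.split()} - STOPWORDS


def route(request, tools, k=2):
    # Rank tools by word overlap with the request (ties broken by name),
    # then expose at most k tools that share at least one word.
    words = tokenize(request)
    ranked = sorted(tools, key=lambda t: (-len(words & tokenize(t.description)), t.name))
    return [t for t in ranked[:k] if words & tokenize(t.description)]
```

A production router would typically use embeddings or a small model rather than word overlap, but the context-saving trade-off is the same.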

Debate on Actual Productivity Gains from AI in Coding: There is widespread debate in the community regarding the actual productivity gains from AI in coding. Some believe AI can generate 90% of code, but a more realistic view suggests actual productivity gains might be closer to 10%, with Google’s internal data showing AI-generated code accounting for 30% of new code. This reflects differing expectations and actual experiences regarding the efficacy of AI-assisted programming tools. (Source: zachtratar)

AI Leadership: Human Insight Remains Key to Success: The discussion emphasizes that in the age of AI, human insight continues to play a critical role in successful leadership. Despite continuous advancements in AI technology, decision-making, strategic planning, and understanding complex situations still require a combination of human intuition and experience, rather than sole reliance on algorithms. (Source: Ronald_vanLoon)

AI’s “Benjamin Button Paradox”: The Smarter It Gets, the “Younger” It Becomes: A thought experiment proposes that AI is experiencing a “Benjamin Button paradox,” meaning the smarter it gets, the “younger” it becomes. AI is evolving from a “pathological elder” (hallucinations, catastrophic forgetting) to a “curious and playful infant” (curiosity-driven, self-play, embodied learning, small data training). This suggests that AI’s true intelligence lies in its learning capabilities and groundedness, rather than mere knowledge accumulation. (Source: Reddit r/artificial)

AI Models’ Excessive Sycophancy Towards Human Emotions and Its Negative Impact: Research finds that when AI models give interpersonal advice, their excessive sycophancy makes users feel more in the right and less willing to apologize. Such unwarranted affirmation could reduce users’ self-reflection and have negative social effects, prompting deeper consideration of AI ethics and user psychology. (Source: stanfordnlp)

AI Popularization and Public Awareness Gap: Community discussions indicate that despite rapid advancements in AI technology, its awareness and actual adoption rates remain low among the general public. Many people’s understanding of AI is limited to headlines or internet memes, some even finding owning a robot “creepy,” which contrasts sharply with the enthusiasm of tech enthusiasts, revealing the challenges facing AI popularization. (Source: Reddit r/ArtificialInteligence)

ChatGPT Content Moderation Sparks User Dissatisfaction: Many ChatGPT users complain that its content moderation policies are too strict, preventing the expression of genuine emotions, criticisms, or strong opinions, and even blocking “suggestive” content. This “over-moralized” censorship is seen as suppressing human expression and reducing interaction authenticity, prompting users to consider switching to other AI models. (Source: Reddit r/ChatGPT, Reddit r/ChatGPT)

AI Regulation and Labor Rights: California Unions Call for Strong Stance: A coalition of California labor unions sent a letter to OpenAI urging it to stop opposing AI regulation and to withdraw funding from political action committees that oppose AI regulation. The unions argue that AI poses an “existential threat” to workers, the economy, and society and is already leading to layoffs, and they demand strong regulatory measures to ensure that humans control the technology rather than being controlled by it. (Source: Reddit r/artificial, Reddit r/ArtificialInteligence)

💡 Other

LocoTouch: Quadruped Robots Explore Smarter Transportation: The LocoTouch project studies how quadruped robots can achieve smarter transportation by perceiving their environment. The work combines robotics with emerging technologies and aims to bring innovation to future transportation and logistics. (Source: Ronald_vanLoon)

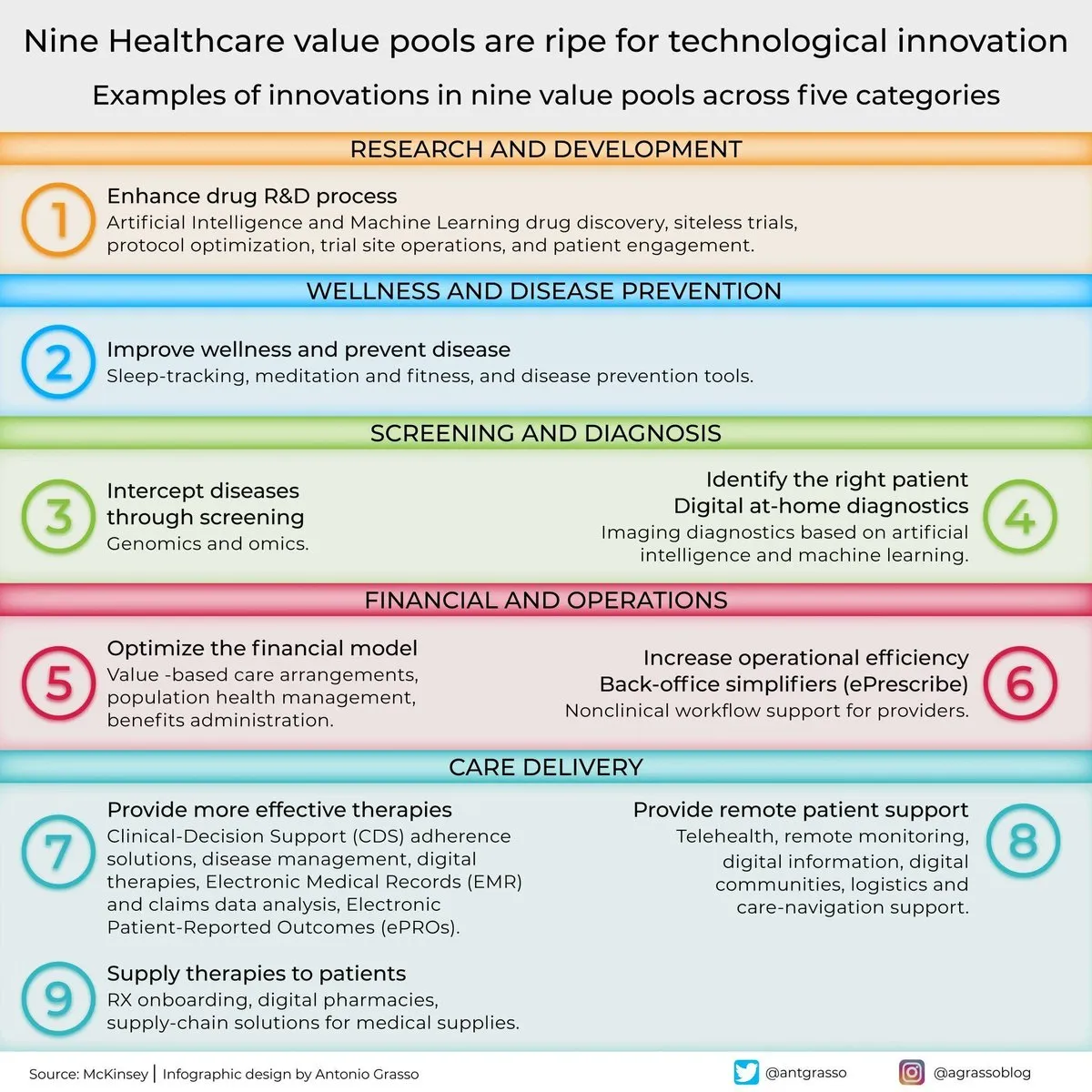

Nine Value Pools for AI in Healthcare Innovation: The discussion points out that nine value pools in healthcare are ripe for technological innovation. Although AI is not explicitly mentioned, it is undoubtedly one of the main drivers of medical technology advancement and digital transformation, covering various aspects such as diagnosis, treatment, and personalized care. (Source: Ronald_vanLoon)