Keywords: GPT-5, Quantum computing, AI materials design, Reinforcement learning, Large language models, AI infrastructure, Multimodal models, AI Agent, Quantum NP-hard problems, CGformer crystal graph neural network, RLMT reinforcement learning framework, DeepSeek Sparse Attention (DSA), UniVid unified visual task framework

🔥 Spotlight

GPT-5 Conquers “Quantum NP Problem”: Quantum computing expert Scott Aaronson has, for the first time, published a paper in which GPT-5 played a breakthrough assistive role in quantum complexity theory research. GPT-5 helped solve a critical derivation step concerning the “quantum version of the NP problem” within 30 minutes, a task that typically takes humans 1-2 weeks. The result marks AI’s entry into core scientific discovery and signals a significant leap in its potential for research. (Source: arXiv, scottaaronson.blog)

New Material AI Design Model CGformer: A team led by Professors Li Jinjin and Huang Fuqiang from Shanghai Jiao Tong University developed the new AI material design model CGformer. By innovatively integrating Graphormer’s global attention mechanism with CGCNN, and incorporating centrality encoding and spatial encoding, it successfully breaks through the limitations of traditional crystal graph neural networks. The model can fully capture global information of complex crystal structures, significantly improving the prediction accuracy and screening efficiency of new materials like high-entropy sodium-ion solid-state electrolytes. (Source: Matter)
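
To make the two encodings concrete: in Graphormer, centrality encoding is derived from each node's degree, and spatial encoding from the shortest-path distance between node pairs. The sketch below computes both raw quantities for a toy four-node graph. The graph and function names are hypothetical, and how CGformer adapts these encodings to crystal graphs is not described here, so treat this as an illustration of the inputs only, not of the model.

```python
from collections import deque

def degree_centrality(adj):
    """Degree of each node: the raw quantity a centrality encoding embeds."""
    return {node: len(neighbors) for node, neighbors in adj.items()}

def shortest_path_hops(adj, source):
    """Breadth-first hop counts from `source`: the raw quantity a spatial
    encoding turns into a per-pair attention bias. Unreachable nodes are omitted."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

# Hypothetical toy graph: a simple chain a - b - c - d.
adj = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}

print(degree_centrality(adj))
print(shortest_path_hops(adj, "a"))
```

In a full model these integers would index learned embedding tables; a global attention layer can then relate atoms that are many hops apart, which purely local message passing struggles to do.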

UniVid Unified Visual Task Framework: UniVid is an innovative framework that adapts to diverse image and video tasks without task-specific modifications, achieved by fine-tuning a pre-trained video diffusion Transformer. This method represents tasks as visual sentences, defining tasks and expected output modalities through contextual sequences, demonstrating the immense potential of pre-trained video generation models as a unified foundation for visual modeling. (Source: HuggingFace Daily Papers)

RLMT Revolutionizes Post-Training for Large Models: A team led by Associate Professor Danqi Chen at Princeton University proposed the “Reinforcement Learning with Model-rewarded Thinking” (RLMT) framework. The LLM generates a long chain of thought before responding, and is optimized with online RL against a preference-based reward model. This method significantly enhances LLMs’ reasoning capabilities and generalization on open-ended tasks, even enabling an 8B model to outperform GPT-4o in chat and creative writing. (Source: arXiv)

CHURRO Historical Text Recognition Model: CHURRO is a 3B-parameter open-source Visual Language Model (VLM) specifically designed for high-accuracy, low-cost historical text recognition. Trained on the CHURRO-DS dataset, comprising 99,491 pages of historical documents spanning 22 centuries and 46 languages, its performance surpasses existing VLMs like Gemini 2.5 Pro, significantly enhancing the efficiency of cultural heritage research and preservation. (Source: HuggingFace Daily Papers)

🎯 Trends

Altman Predicts AI Superintelligence and Pulse Feature: Sam Altman predicts that AI will fully surpass human intelligence by 2030, citing the astonishing speed of AI development. OpenAI also launched Pulse, a “proactive mode” for ChatGPT that signals AI’s shift from passive response to actively thinking for users. It surfaces relevant information based on a user’s conversations, delivers highly personalized services, and foreshadows AI becoming an outsourced human subconscious. (Source: 36氪)

Jensen Huang Refutes AI Bubble Theory and NVIDIA’s Strategy: In an exclusive interview, Jensen Huang refuted the “AI bubble empire” theory, emphasizing AI’s critical role in the economy and predicting that NVIDIA could become the first $10 trillion company. He pointed to the immense computing power demand behind AI inference. Through extreme co-design, NVIDIA launches a new architecture every year and opens up its system ecosystem. Huang said the company is unafraid of the in-house development trend and aims to shape the AI economic system and promote “sovereign AI” as a new consensus. (Source: 36氪)

DeepSeek Open-Sources V3.2-Exp and DSA Mechanism: DeepSeek open-sourced the experimental V3.2-Exp version with 685B parameters and simultaneously released a paper detailing its new sparse attention mechanism, DeepSeek Sparse Attention (DSA). DSA aims to explore and validate optimizations for training and inference efficiency in long-context scenarios, significantly improving long-context processing efficiency while maintaining model output quality. (Source: 36氪, HuggingFace)
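
As a rough illustration of the general idea behind sparse attention, the sketch below lets a single query attend to only its `k` highest-scoring keys rather than to every key. This is generic top-k sparse attention written in plain Python, not DeepSeek's DSA: the item above does not describe how DSA selects tokens, and the function name and toy vectors are hypothetical.

```python
import math

def topk_sparse_attention(query, keys, values, k):
    """Attend from one query to only its k highest-scoring keys.

    Returns the attention output and the sorted indices of the kept keys.
    """
    scale = math.sqrt(len(query))
    # Scaled dot-product score of the query against every key.
    scores = [sum(q * x for q, x in zip(query, key)) / scale for key in keys]
    k = max(1, min(k, len(keys)))
    # Keep only the k best-scoring positions; all others get zero weight.
    keep = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Numerically stable softmax restricted to the kept positions.
    top = max(scores[i] for i in keep)
    weights = {i: math.exp(scores[i] - top) for i in keep}
    total = sum(weights.values())
    dim = len(values[0])
    output = [sum(weights[i] / total * values[i][d] for i in keep) for d in range(dim)]
    return output, sorted(keep)

query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [2.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [0.0, 2.0]]

output, kept = topk_sparse_attention(query, keys, values, k=2)
print(kept, output)
```

Note that this sketch still scores every key before discarding most of them, so it only saves work in the weighted sum. A practical long-context scheme needs a selection step that is much cheaper than full attention.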

GLM-4.6 Forthcoming: Zhipu AI’s GLM-4.6 model is expected to be released soon, with its Z.ai official website already labeling GLM-4.5 as the “previous generation flagship model,” suggesting potential improvements in context length and other aspects in the new version, drawing community attention and anticipation. (Source: Reddit r/LocalLLaMA, karminski3)

Apple’s AI Strategy and Internal Chatbot Veritas: Apple’s internally developed AI chatbot, codenamed “Veritas,” has been revealed. It serves as a sparring partner for Siri and possesses the ability to perform in-app actions. Despite this, Apple remains committed to not launching a consumer-facing chatbot, focusing instead on system-level AI integration. It plans to deepen third-party model integration through an AI answer engine and the universal MCP interface, rather than developing its own chatbot. (Source: 36氪)

AI PC Market Growth and Technical Bottlenecks: AI PC shipments are projected to grow strongly in 2025-2026, but the growth is driven primarily by the end of life of Windows 10 and PC replacement cycles rather than by disruptive AI technology. Most current AI features merely complement the traditional PC and face challenges such as insufficient local computing power, passive interaction, and closed ecosystems. True AI devices need to achieve “local computing power as primary, cloud as supplementary” and proactive sensing. (Source: 36氪)

AI Influx into Power Trading Market: AI is being widely applied in the power trading market, with companies like Qingpeng Smart using time-series large models to forecast wind and solar power generation and electricity demand, assisting trading decisions. AI’s advantage in processing massive data is expected to amplify profits, but it could also lead to losses due to immature models and market complexity; the industry is still in an exploratory phase. (Source: 36氪)

Alibaba Tongyi Large Model Update and Full-Stack AI Services: Alibaba Cloud heavily upgraded its full-stack AI system at the Apsara Conference, releasing 6 new models including Qwen3-MAX and Qwen3-Omni, positioning itself as a “full-stack AI service provider.” Alibaba Cloud is committed to building “Android for the AI era” and “the next-generation computer,” offering full-stack AI cloud services from foundational models to infrastructure, to address the evolution of AI Agents from “intelligent emergence” to “autonomous action.” (Source: 36氪)

In-depth Analysis of NVIDIA Blackwell Architecture: An in-depth analysis event for the NVIDIA Blackwell architecture will explore its architecture, optimizations, and implementation in GPU clouds. The event, led by SemiAnalysis and NVIDIA experts, aims to reveal how Blackwell GPUs, as “the GPU of the next decade,” will drive AI computing power development and the future of GPU clouds. (Source: TheTuringPost)

🧰 Tools

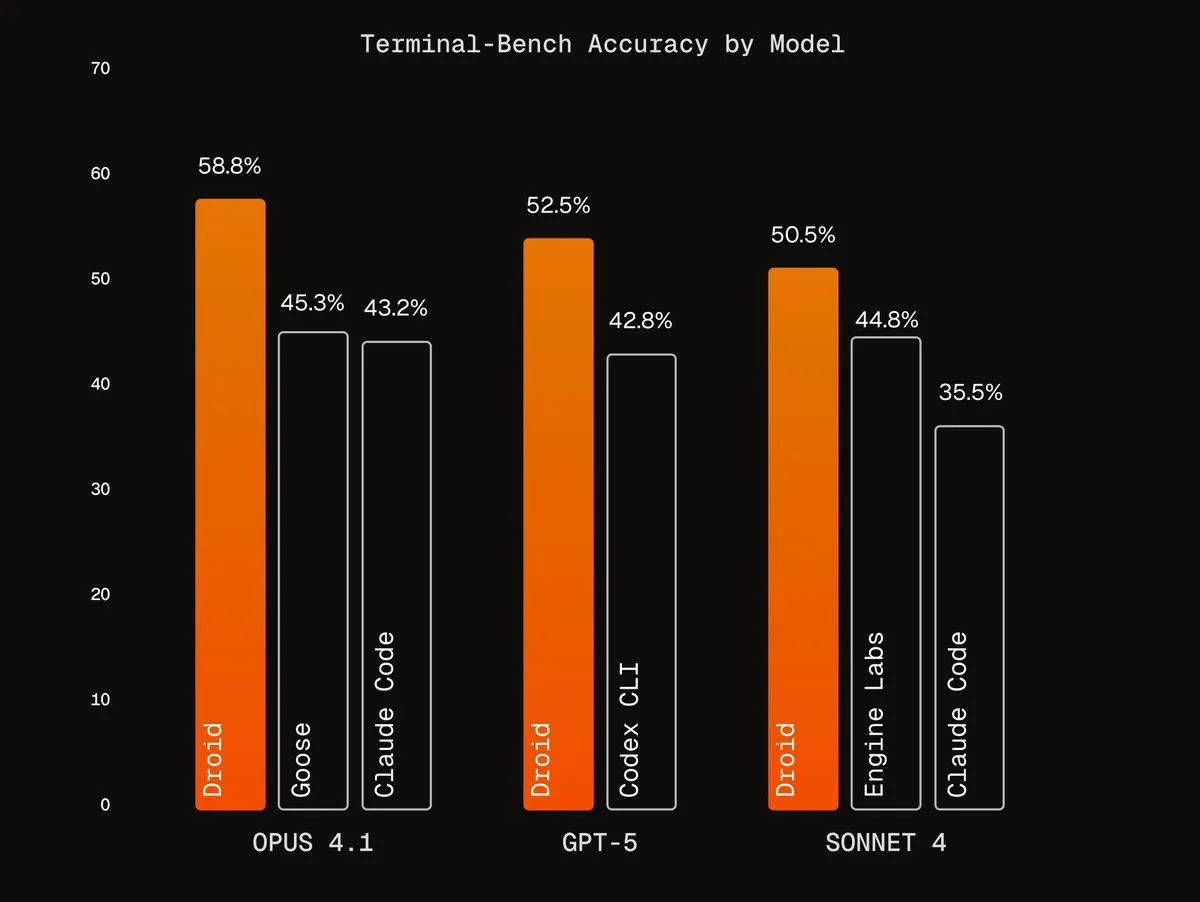

Factory AI’s Agentic Harnesses: Factory AI has built agentic harnesses that significantly boost the performance of existing models, particularly on coding tasks; users have dubbed them “cheat codes.” Its Droids agent ranks first on Terminal-Bench and achieves reliable code refactoring through a multi-agent verification workflow. (Source: Vtrivedy10, matanSF)

RAGLight Open-Source RAG Library: LangChainAI released RAGLight, a lightweight Python library for building production-grade RAG systems. The library features LangGraph-driven agent pipelines, multi-provider LLM support, built-in GitHub integration, and CLI tools, aiming to simplify the development and deployment of RAG systems. (Source: LangChainAI, hwchase17)

ArgosOS Semantic Operating System: ArgosOS is a desktop application that enables intelligent document search and content integration through a tag-based architecture rather than a vector database. It leverages LLMs to create relevant tags stored in an SQLite database, intelligently processing queries such as analyzing shopping bills, offering an accurate and efficient document management solution for small-scale applications. (Source: Reddit r/MachineLearning)
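
A minimal sketch of tag-based retrieval over SQLite, in the spirit of the approach described above. The schema, function names, and sample documents are hypothetical rather than ArgosOS's actual design, and the LLM tagging step is replaced by hand-written tags.

```python
import sqlite3

def build_index(docs):
    """Create an in-memory SQLite index from {document name: [tags]}.

    In a real system the tags would come from an LLM; here they are given.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE tags (doc_id INTEGER, tag TEXT)")
    for name, tags in docs.items():
        cur = conn.execute("INSERT INTO documents (name) VALUES (?)", (name,))
        conn.executemany(
            "INSERT INTO tags (doc_id, tag) VALUES (?, ?)",
            [(cur.lastrowid, tag.lower()) for tag in tags],
        )
    return conn

def search(conn, query_tags):
    """Return document names ranked by how many query tags they match."""
    wanted = [tag.lower() for tag in query_tags]
    if not wanted:
        return []
    placeholders = ",".join("?" for _ in wanted)
    rows = conn.execute(
        "SELECT d.name, COUNT(DISTINCT t.tag) AS hits "
        "FROM tags t JOIN documents d ON d.id = t.doc_id "
        f"WHERE t.tag IN ({placeholders}) "
        "GROUP BY d.id ORDER BY hits DESC, d.name",
        wanted,
    ).fetchall()
    return [name for name, _ in rows]

docs = {
    "grocery_march.pdf": ["shopping", "bill", "groceries"],
    "rent.pdf": ["bill", "housing"],
    "notes.txt": ["ideas"],
}
conn = build_index(docs)
print(search(conn, ["shopping", "bill"]))
```

Matching on exact tags avoids an embedding model and a vector store, which fits the small-scale use case, but retrieval quality then depends entirely on how consistently the tags are assigned.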

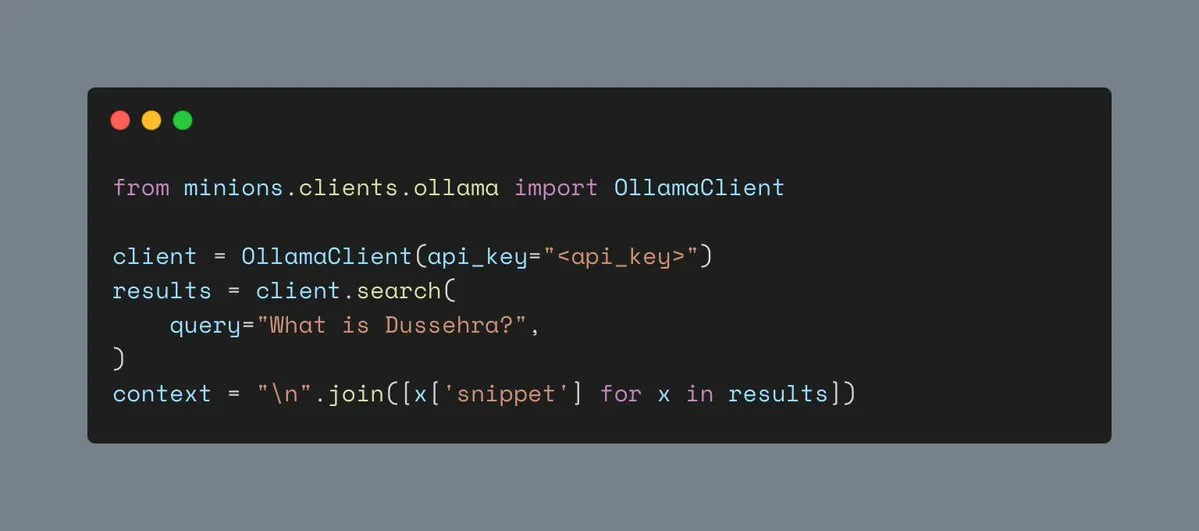

Ollama’s Web Search Tool: Ollama now supports web search tools, allowing users to integrate web search into Minions workloads, which enriches the context available to AI applications and improves their ability to handle complex tasks. (Source: ollama)

Hyperlink Local Multimodal RAG: Hyperlink offers local multimodal RAG capabilities, allowing users to search and summarize screenshot/photo libraries offline. Through OCR and embedding technologies, this tool converts unstructured image data into searchable content, enabling fully private, on-device document management and information extraction. (Source: Reddit r/LocalLLaMA)

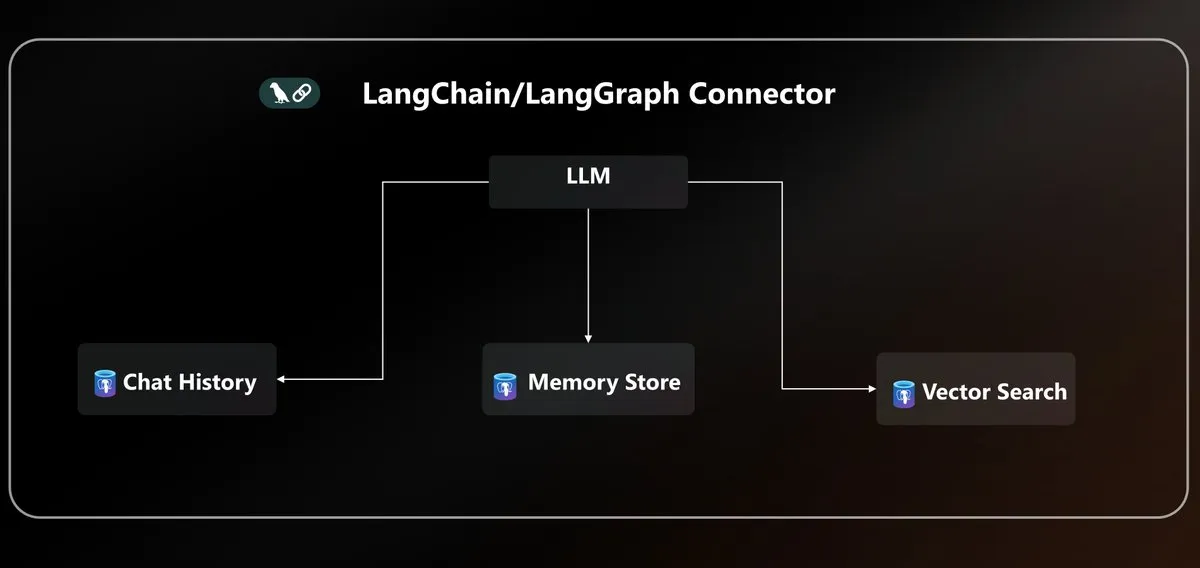

Azure PostgreSQL LangChain Connector: Microsoft launched a native Azure PostgreSQL connector to unify agent persistence for the LangChain ecosystem. This connector provides enterprise-grade vector storage and state management, simplifying the complexity of building and deploying AI agents in the Azure environment. (Source: LangChainAI)

LLM API Standardization and MCP Protocol: The community discussed the fragmentation of LLM APIs, pointing out incompatibilities in message structures, tool calling patterns, and inference field names across providers, and calling for an industry-standard JSON API protocol. Concurrently, the introduction of MCP (Model Context Protocol) has sparked discussion about its impact on agent development. (Source: AAAzzam, charles_irl)

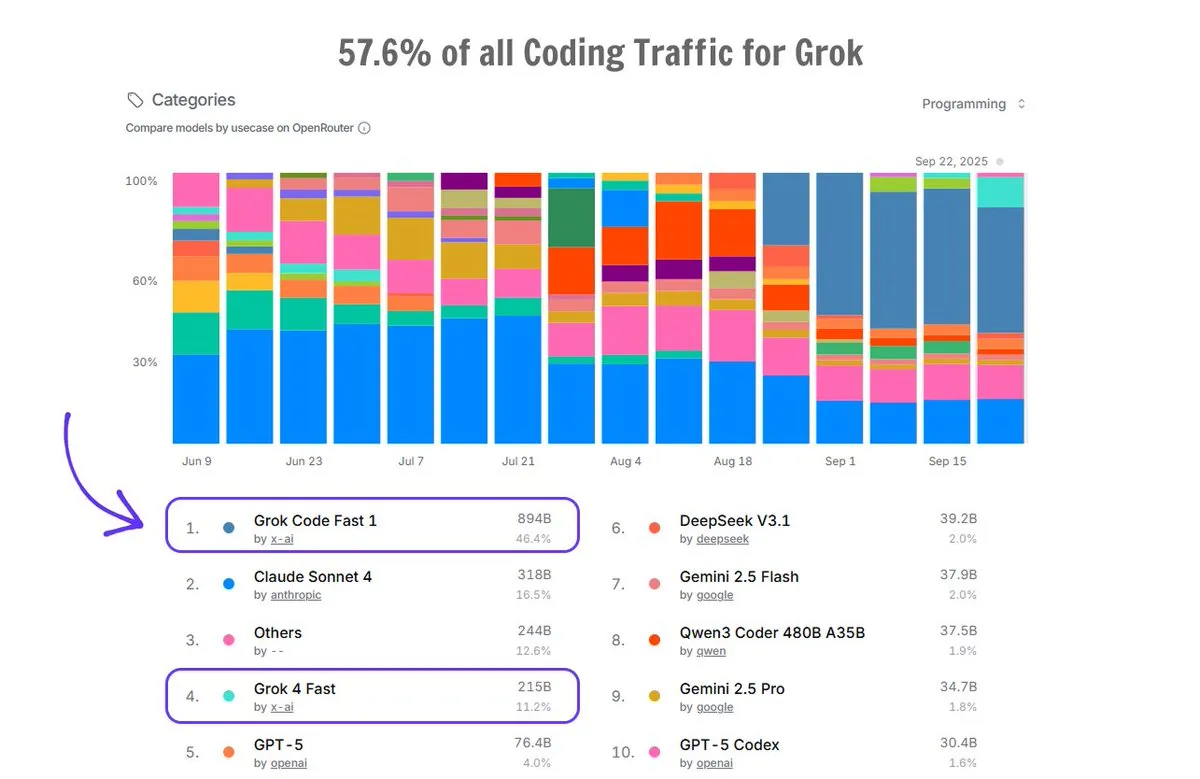

Grok Code’s Application on OpenRouter: Grok Code accounts for 57.6% of coding traffic on the OpenRouter platform, surpassing the combined total of all other AI code generators, with Grok Code Fast 1 ranking first, demonstrating its strong market performance and user preference in code generation. (Source: imjaredz)

📚 Learn

AI Fundamentals Course: Cursor Learn: Lee Robinson launched Cursor Learn, a free six-part video series designed to help beginners grasp fundamental AI concepts such as tokens, context, and agents. The course, approximately 1 hour long, offers quizzes and AI model trials, serving as a convenient resource for learning AI fundamentals. (Source: crystalsssup)

Free Book on Python Data Structures: Donald R. Sheehy released a free book titled “A First Course on Data Structures in Python,” covering data structures, algorithmic thinking, complexity analysis, recursion/dynamic programming, and search methods, providing a solid foundation for learners in AI and machine learning. (Source: TheTuringPost)

dots.ocr Multilingual OCR Model: Xiaohongshu Hi Lab released dots.ocr, a powerful multilingual OCR model supporting 100 languages. It can parse text, tables, formulas, and layouts end-to-end (outputting Markdown) and is free for commercial use. The model is compact (1.7B VLM) yet achieves SOTA performance on OmniDocBench and dots.ocr-bench. (Source: mervenoyann)

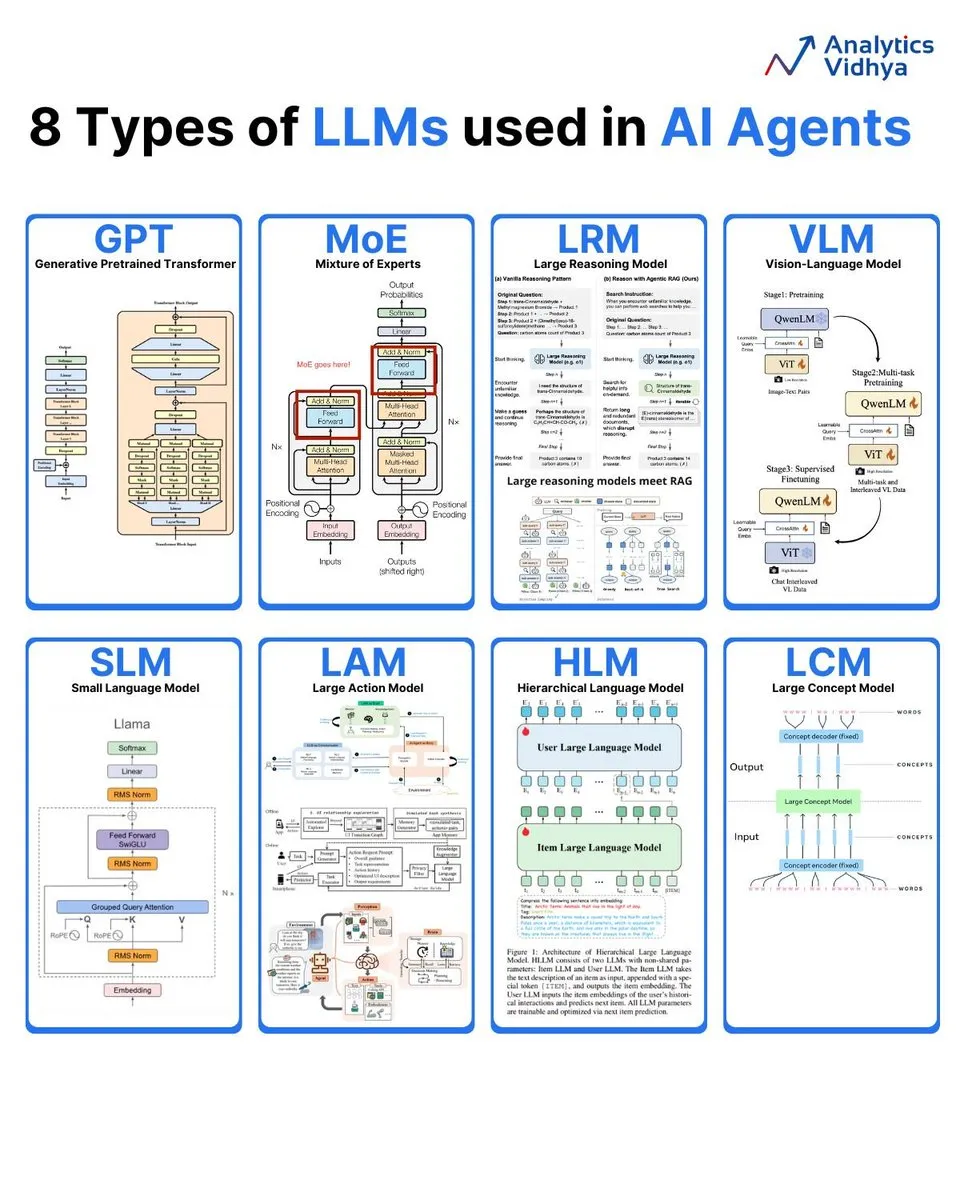

8 Types of Large Language Models Explained: Analytics Vidhya summarized 8 mainstream large language model types, including GPT (Generative Pre-trained Transformer), MoE (Mixture of Experts), LRM (Large Reasoning Model), VLM (Vision Language Model), SLM (Small Language Model), LAM (Large Action Model), HLM (Hierarchical Language Model), and LCM (Large Concept Model), providing detailed interpretations of their architectures and applications. (Source: karminski3)

AI Weekly: Latest Paper Summary: DAIR.AI released this week’s AI paper selection (September 22-28), covering multiple cutting-edge research areas: “ATOKEN,” “LLM-JEPA,” “Code World Model,” “Teaching LLMs to Plan,” “Agents Research Environments,” “Language Models that Think, Chat Better,” and “Embodied AI: From LLMs to World Models,” providing AI researchers with the latest updates. (Source: dair_ai)

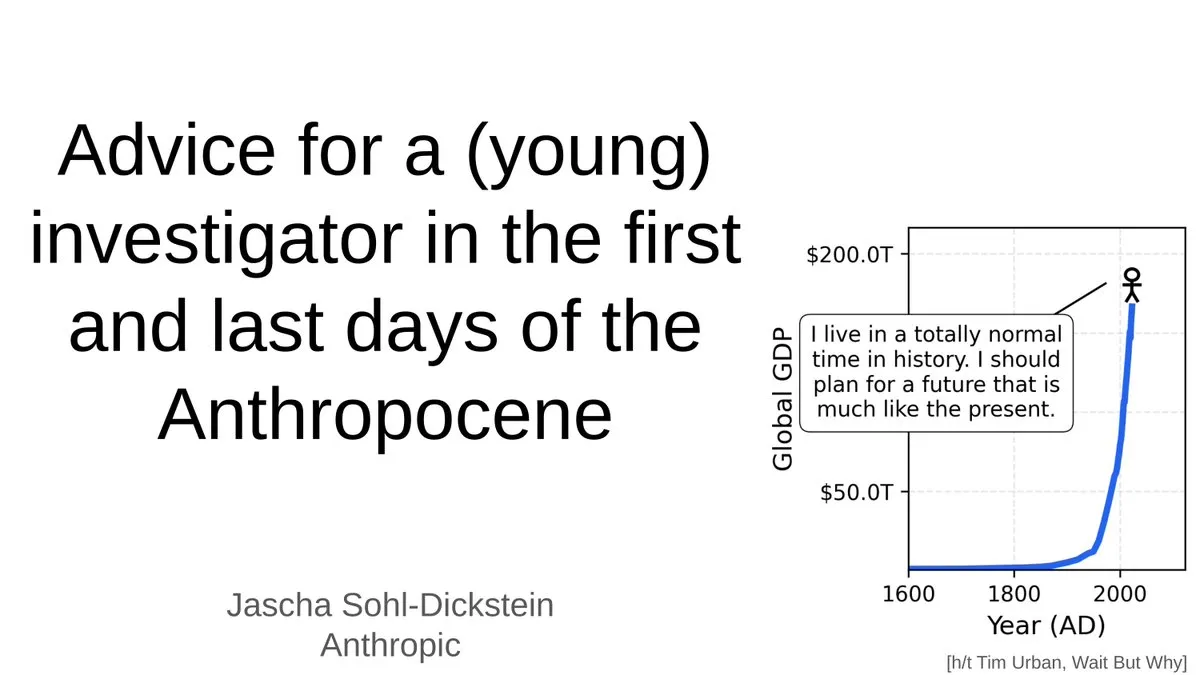

Advice for Young Researchers in the AI Era: Jascha Sohl-Dickstein shared practical advice for young researchers on how to choose research projects and make career decisions in the final stages of the “Anthropocene.” He discussed the profound impact of AGI on academic careers and emphasized the need to rethink research directions and professional development in the context of AI systems surpassing human intelligence. (Source: mlpowered)

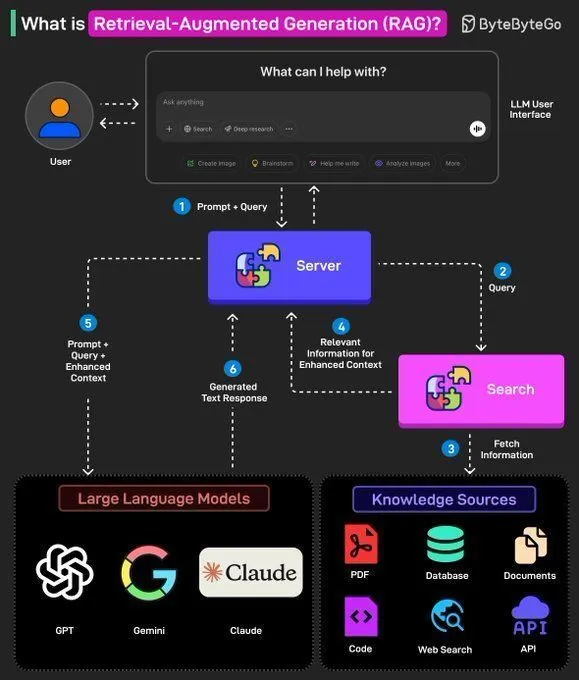

RAG Concepts and AI Agent Construction: Ronald van Loon shared the fundamental concepts of RAG (Retrieval-Augmented Generation) and its importance for LLMs, along with 8 key steps for building AI Agents. The content covers AI Agent concepts, the agent stack, advantages, and how to evaluate agents through frameworks, giving AI developers guidance from theory to practice. (Source: Ronald_vanLoon)

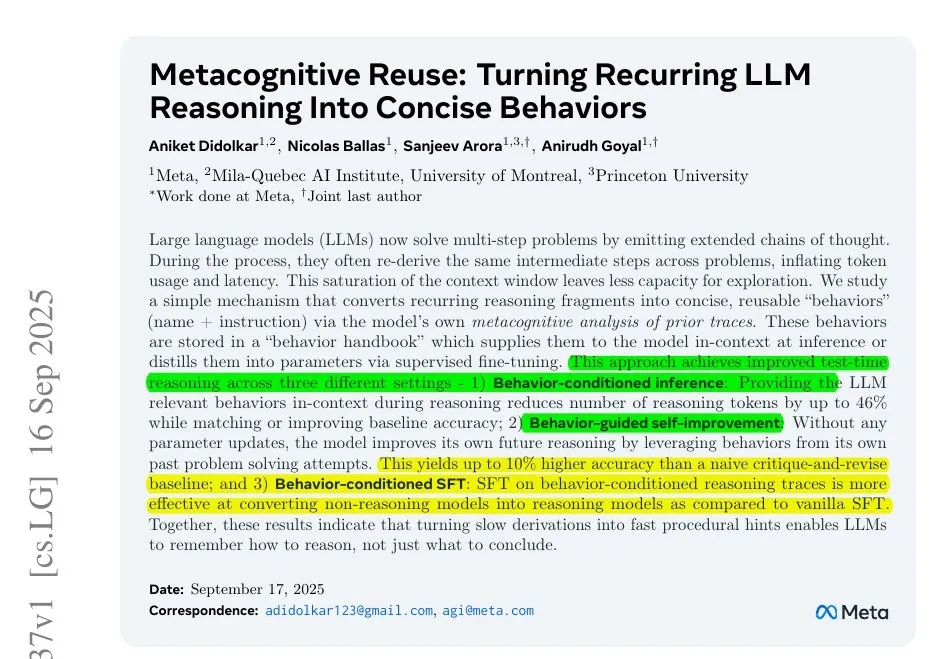

Meta Addresses LLM Inference Inefficiency: Meta’s research reveals an issue of inference inefficiency in LLMs due to repetitive work in long chains of thought. They propose compressing repetitive steps into small, named actions, allowing the model to call these actions instead of re-deriving them, thereby reducing token consumption and improving inference efficiency and accuracy, offering a new approach to optimize LLM inference processes. (Source: ylecun)

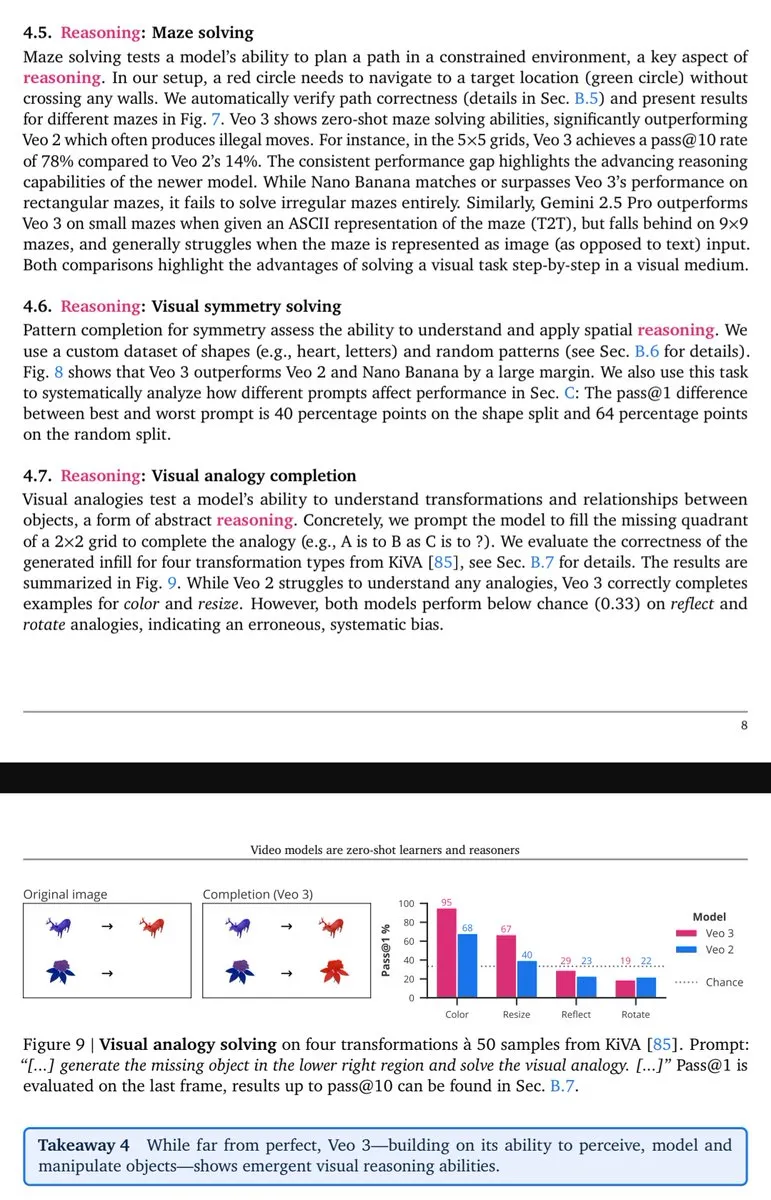

Veo-3 Demonstrates Visual Reasoning Capabilities: Lisan al Gaib noted that the Veo-3 video model exhibits emergent (visual) reasoning capabilities similar to GPT-3, suggesting that native multimodal models, once their full potential is realized, will bring more comprehensive visual understanding and reasoning benefits. (Source: scaling01)

💼 Business

OpenAI’s Hundred-Billion Dollar Bet and AI Infrastructure Bubble: OpenAI is rapidly building a colossal network spanning chips, cloud computing, and data centers, including a $100 billion investment from Nvidia and a $300 billion “Stargate” partnership with Oracle. Despite projected revenue of only $13 billion in 2025, OpenAI’s management views AI infrastructure investment as a “once-in-a-century opportunity,” sparking debate over whether AI infrastructure is in a bubble like that of the early internet era. (Source: 36氪)

Musk Sues OpenAI for the Sixth Time: Elon Musk’s xAI company has sued OpenAI for the sixth time, accusing it of systematically poaching employees, illegally stealing Grok large model source code, and data center strategic plans, among other trade secrets. This lawsuit marks the intensifying competition between the two AI giants. Musk believes OpenAI has deviated from its non-profit origins, while OpenAI denies the allegations, calling them “persistent harassment.” (Source: 36氪)

Top AI Scientist Steven Hoi Joins Alibaba Tongyi: Steven Hoi, a world-renowned AI scientist and IEEE Fellow, has joined Alibaba Tongyi Lab, where he will pivot to foundational and cutting-edge R&D for multimodal large models. With over 20 years of experience in AI industry, academia, and research, Steven Hoi previously served as VP at Salesforce and founded HyperAGI. His joining signifies Alibaba’s renewed significant investment in multimodal large models to accelerate model iteration efficiency and multimodal innovation breakthroughs. (Source: 36氪)

🌟 Community

ChatGPT 4o Performance Decline and User Sentiment: Numerous ChatGPT users reported a decline in the 4o model’s performance, describing “dumbing down” and “safety routing” issues that left them frustrated and feeling deceived. Many neurodivergent users were particularly saddened, as they considered 4o a “lifeline” for communication and self-understanding. Users widely questioned OpenAI’s lack of transparency, called on it to uphold its promise of “treating users as adults,” and opposed vague censorship mechanisms. (Source: Reddit r/ChatGPT)

AI Era Employment and Layoff Controversies: The community debated AI’s impact on the job market, including a significant decrease in entry-level positions, companies laying off staff while investing in AI, and whether AI is the real reason behind layoffs attributed to it. Discussions highlighted the trend of “those who understand AI replacing those who don’t” and called on companies to redesign entry-level jobs rather than simply eliminate them, in order to cultivate scarce talent adapted to the AI era. (Source: 36氪, Reddit r/artificial)

Challenges and Barriers in LLM Research: The community debated the rising barriers to machine learning research, where individual researchers struggle to compete with large tech companies. Faced with an overwhelming volume of papers, expensive computing power, and complex mathematical theory, many find it difficult to get started and achieve breakthroughs, raising concerns about the field’s sustainability. (Source: Reddit r/MachineLearning)

Impact of MoE Models on Local Hosting: The community discussed the pros and cons of MoE models for local LLM hosting. The view is that while MoE models consume more VRAM because all experts must be kept in memory, only a subset of experts is active for each token, so they are computationally efficient and larger models can be run through CPU offloading. This makes them particularly suitable for consumer-grade hardware with ample RAM but limited GPU capacity, and an effective way to improve local LLM performance. (Source: Reddit r/LocalLLaMA)
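
The trade-off can be sketched with back-of-the-envelope arithmetic: weight memory scales with total parameters, while per-token compute scales with active parameters (using the common approximation of about 2 FLOPs per active parameter for a forward pass). The model sizes below are hypothetical and chosen only for illustration.

```python
def weight_memory_gb(total_params_billions, bytes_per_param):
    """Approximate memory for the weights alone, in decimal GB.

    Ignores KV cache, activations, and runtime overhead.
    """
    return total_params_billions * bytes_per_param

def forward_flops_per_token(active_params_billions):
    """Rough forward-pass cost: about 2 FLOPs per active parameter per token."""
    return 2.0 * active_params_billions * 1e9

# Hypothetical MoE: 30B total parameters, 3B active per token, 4-bit weights.
moe_memory = weight_memory_gb(30, 0.5)
moe_flops = forward_flops_per_token(3)

# Hypothetical dense model with the same 30B total parameters.
dense_flops = forward_flops_per_token(30)

print(f"MoE weights: {moe_memory:.1f} GB")
print(f"Dense / MoE compute per token: {dense_flops / moe_flops:.0f}x")
```

Under these assumptions the MoE model needs the same weight memory as a dense 30B model but roughly a tenth of the compute per token, which is why keeping weights in system RAM and offloading to the CPU can remain usable.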

Rapid Development and Applications of AI Agents: The community discussed the rapid development of AI Agents, whose capabilities have quickly improved from “almost unusable” to “performing well” in narrow scenarios, and even “general agents starting to be useful” in less than a year, exceeding expectations. However, some also believe that current coding agents are highly homogenized, lacking significant differentiation. (Source: nptacek, HamelHusain)

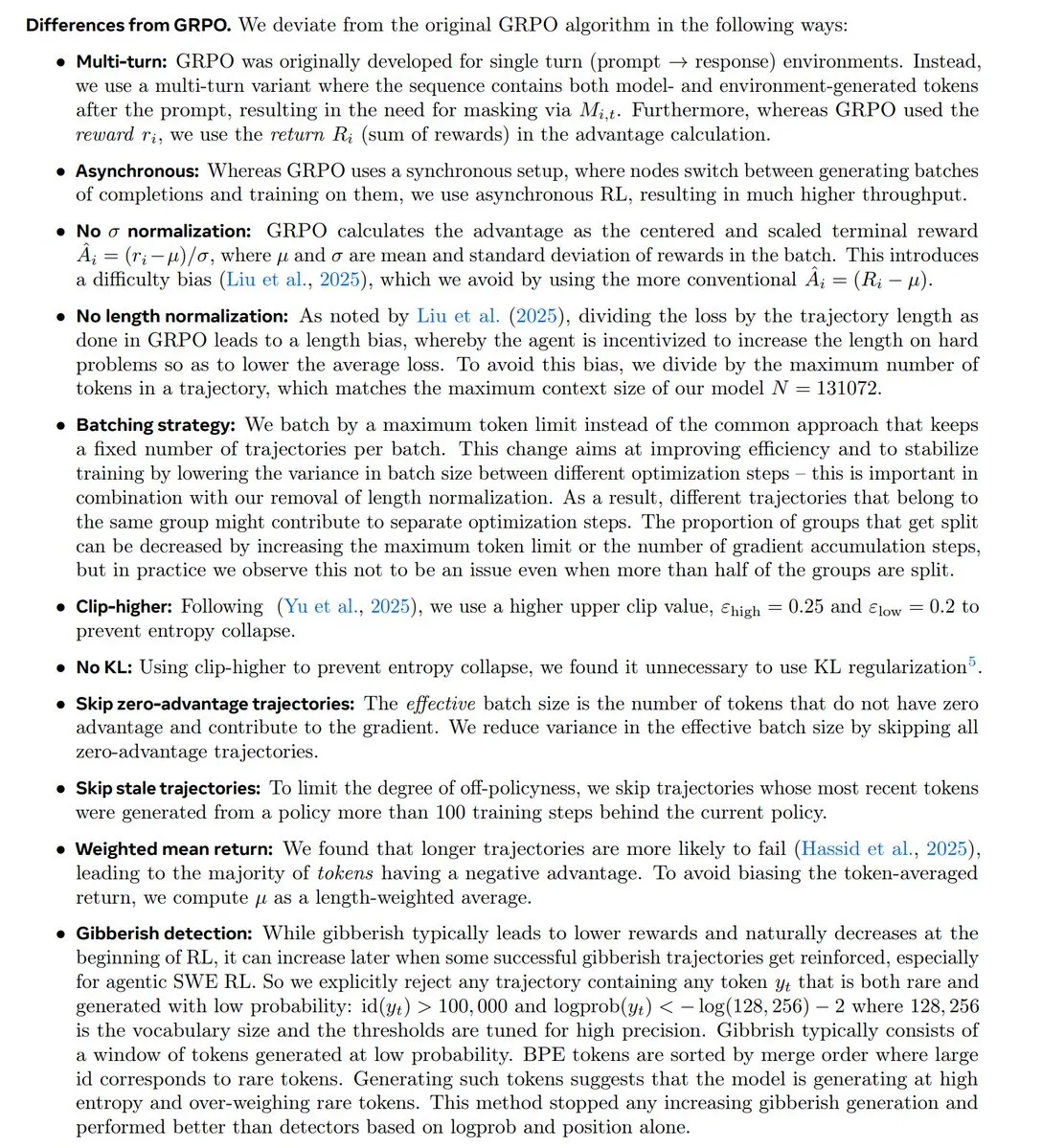

RL Research Trends and GRPO Controversy: The community deeply discussed the latest trends in Reinforcement Learning (RL) research, particularly the status and controversy surrounding the GRPO algorithm. Some views suggest RL research is shifting towards pre-training/modeling, and GRPO is a significant open-source advancement. However, some OpenAI employees believe it lags significantly behind cutting-edge technology, sparking intense debate about algorithmic innovation versus practical performance. (Source: natolambert, MillionInt, cloneofsimo, jsuarez5341, TheTuringPost)

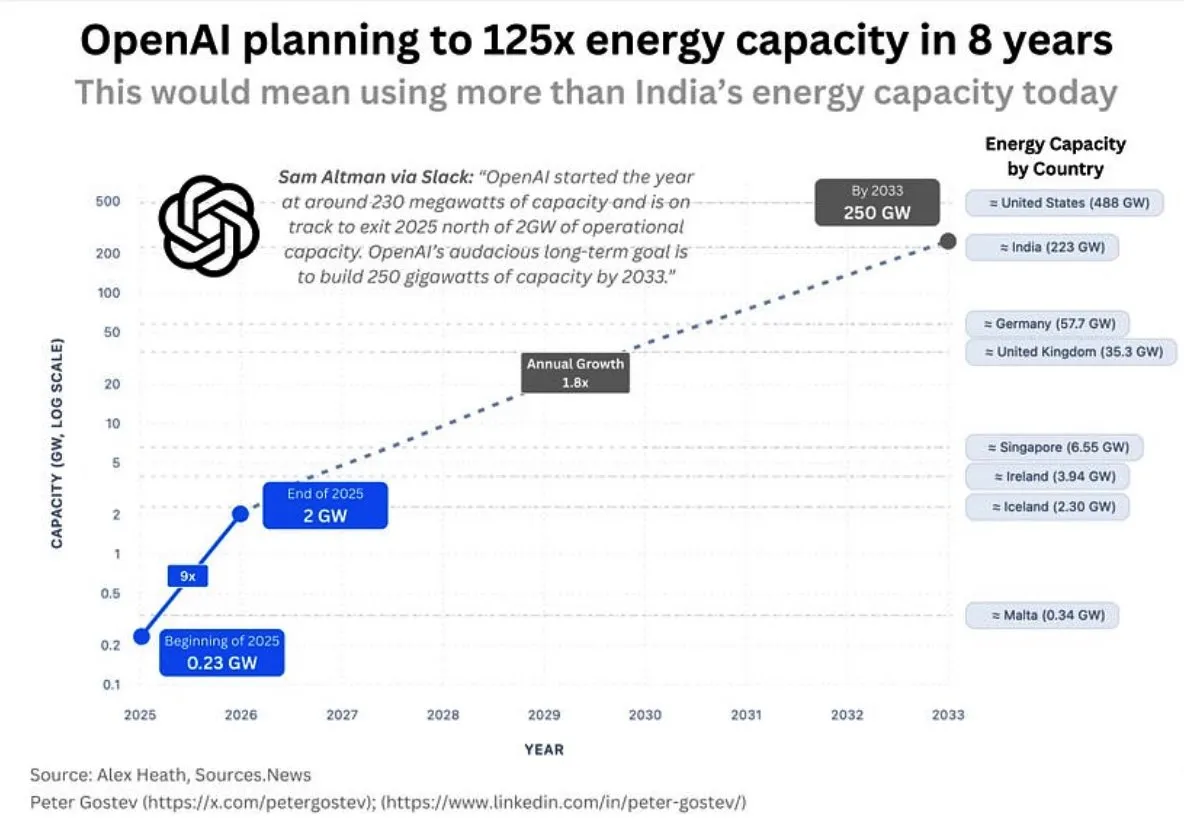

OpenAI’s Energy Consumption and AI Infrastructure: The community discussed OpenAI’s enormous future energy demands, projecting it to consume more energy than the UK or Germany within five years, and more than India within eight years, raising concerns about the scale of AI infrastructure construction, energy supply, and environmental impact. Concurrently, Google’s data center site selection has faced opposition from local residents due to water consumption issues. (Source: teortaxesTex, brickroad7)

Sutton’s Bitter Lesson and AI Development: The community discussed Richard Sutton’s “Bitter Lesson” and its implications for AI research, emphasizing the superiority of general computational methods over human prior knowledge. The discussion revolved around the relationship between “imitation and world models,” suggesting that mere imitation can lead to “cargo cults,” and imitation lacking real experience has fundamental limitations. (Source: rao2z, jonst0kes)

💡 Other

BionicWheelBot Biomimetic Robot: The BionicWheelBot robot achieves versatile navigation on complex terrains by mimicking the rolling motion of the wheel spider. This innovation demonstrates the potential of biomimetics in robot design, offering new solutions for future robots to tackle diverse environments. (Source: Ronald_vanLoon)

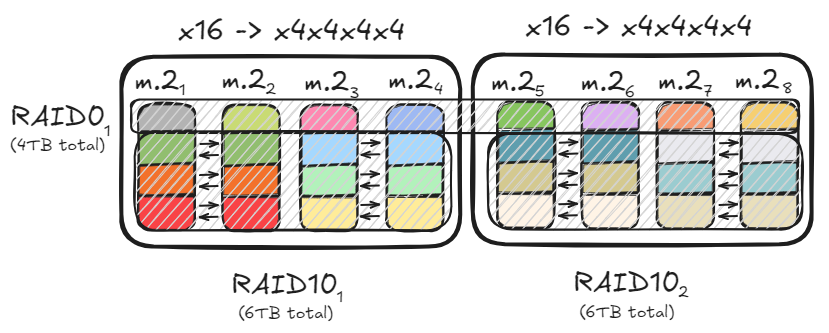

PC Storage Optimization and RAID Configuration: Users shared how to achieve data throughput of up to 47GB/s using RAID0 and RAID10 configurations with multiple PCIe lanes and M.2 drives, to accelerate large model loading. This optimization scheme balances high-speed read/write requirements with storage capacity and data redundancy, providing an efficient hardware foundation for local AI model deployment. (Source: TheZachMueller)
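
A short sketch of the arithmetic behind such a setup, assuming ideal striping. The drive counts, drive sizes, and the 470 GB model size are hypothetical; only the 47GB/s figure comes from the item above, and real arrays fall short of these ideals because of controller, PCIe lane, and filesystem limits.

```python
def usable_capacity_tb(level, drives, drive_tb):
    """Usable capacity for RAID0 (pure striping) or RAID10 (striped mirrors)."""
    if level == "raid0":
        return drives * drive_tb
    if level == "raid10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID10 needs an even number of drives, at least 4")
        # Every block is stored twice, so half the raw capacity is usable.
        return drives * drive_tb / 2
    raise ValueError(f"unsupported RAID level: {level}")

def load_seconds(model_gb, throughput_gb_per_s):
    """Best-case time to read a model from disk at a sustained throughput."""
    return model_gb / throughput_gb_per_s

# Hypothetical array of four 2 TB M.2 drives.
print(usable_capacity_tb("raid0", 4, 2.0))   # striping only, no redundancy
print(usable_capacity_tb("raid10", 4, 2.0))  # mirrored, half the capacity

# Hypothetical 470 GB checkpoint read at the reported 47 GB/s.
print(load_seconds(470.0, 47.0))
```

RAID0 maximizes capacity and throughput but loses the whole array if one drive fails, while RAID10 gives up half the capacity in exchange for redundancy.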

Liangzhu “Digital Habitat Bay AI+ Industrial Community” Opens: Hangzhou Liangzhu “Digital Habitat Bay AI+ Industrial Community” officially opened, focusing on cutting-edge fields such as artificial intelligence, digital nomad economy, and cultural creativity. Through the “Digital Habitat Eight Articles” special policy and “Four Fields” spatial layout, the community provides full-cycle support for AI explorers, from idea inception to ecosystem leadership, aiming to create an innovative ecosystem where technology and humanities are deeply integrated. (Source: 36氪)