Keywords: Neuromorphic Large Model, AI Chips, Trillion-Parameter Model, Embodied AI, RAG Framework, AI Agents, LLM Inference Acceleration, SpikingBrain-1.0, OpenAI Broadcom Custom Chip, Qwen3-Max-Preview, WALL-OSS Open-Source Model, REFRAG Framework

🔥 Focus

Chinese Academy of Sciences Releases Linear-Complexity Brain-Inspired Large Model SpikingBrain-1.0: Built on a spiking-neuron mechanism, the model achieves linear or near-linear complexity. On Chinese-made GPUs, its time to first token (TTFT) on long sequences is 26.5x to more than 100x faster, and its decoding speed on mobile CPUs also improves significantly. The model trains efficiently on very little data, which shows the potential of brain-inspired architectures to overcome the quadratic complexity of the Transformer and lays groundwork for a domestic, independently controllable AI ecosystem. (Source: 量子位)
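
Why linear complexity matters can be illustrated with generic causal linear attention, where a fixed-size running state replaces the full attention matrix. The following is a minimal sketch, assuming kernelized linear attention with the feature map ELU(x)+1; it does not model SpikingBrain-1.0's spiking neurons or its actual architecture, and the function names are illustrative. `linear_attention` updates a constant-size state per token (O(n) overall), while `quadratic_attention` computes the same outputs by revisiting every earlier token (O(n²)).

```python
import math
from typing import List

Matrix = List[List[float]]


def feature_map(x: List[float]) -> List[float]:
    """Positive feature map phi(x) = ELU(x) + 1, a common choice in linear attention."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def linear_attention(qs: Matrix, ks: Matrix, vs: Matrix) -> Matrix:
    """Causal linear attention: constant-size state per step, O(n) in sequence length."""
    d, dv = len(qs[0]), len(vs[0])
    state = [[0.0] * dv for _ in range(d)]  # running sum of phi(k_j) v_j^T
    norm = [0.0] * d                        # running sum of phi(k_j)
    outputs = []
    for q, k, v in zip(qs, ks, vs):
        fk = feature_map(k)
        for a in range(d):
            norm[a] += fk[a]
            for b in range(dv):
                state[a][b] += fk[a] * v[b]
        fq = feature_map(q)
        denom = sum(fq[a] * norm[a] for a in range(d))
        outputs.append([sum(fq[a] * state[a][b] for a in range(d)) / denom for b in range(dv)])
    return outputs


def quadratic_attention(qs: Matrix, ks: Matrix, vs: Matrix) -> Matrix:
    """The same kernelized attention computed pairwise: O(n^2) in sequence length."""
    outputs = []
    for i, q in enumerate(qs):
        fq = feature_map(q)
        weights = [sum(a * b for a, b in zip(fq, feature_map(ks[j]))) for j in range(i + 1)]
        total = sum(weights)
        outputs.append([sum(w * vs[j][b] for j, w in enumerate(weights)) / total
                        for b in range(len(vs[0]))])
    return outputs
```

Both functions return the same values; the difference is that the linear version never materializes attention weights over the whole prefix, which is what keeps long-sequence cost growing linearly.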

OpenAI Signs $10 Billion Custom AI Chip Agreement with Broadcom: To ease the chip shortage constraining AI development, OpenAI has reached a $10 billion agreement with Broadcom for custom AI server racks to power its next-generation models. The move shows the AI arms race shifting toward control of hardware, with the goals of faster training and lower costs, and suggests that future AI breakthroughs will depend on control of the underlying hardware supply chain. (Source: Reddit r/ArtificialInteligence)

Alibaba Releases Trillion-Parameter Model Qwen3-Max-Preview: Alibaba launched Qwen3-Max-Preview (Instruct), its largest model to date with 1 trillion parameters. The model shows significant gains in Chinese comprehension, complex instruction following, and tool calling, and substantially reduces knowledge hallucination. In hands-on tests it performed well on AIME competition math problems and programming tasks, solving programming tasks on the first attempt and outperforming Claude Opus 4; it also accepts multimodal input. (Source: 量子位)

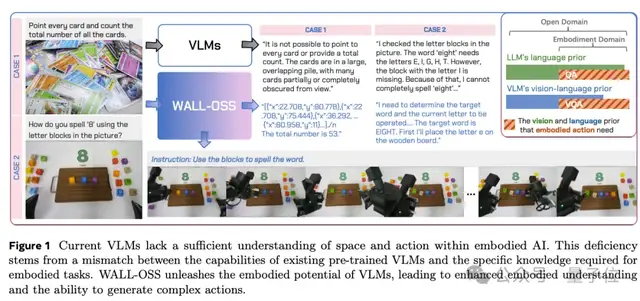

X Square Robotics Open-Sources Foundational Embodied AI Model WALL-OSS: X Square Robotics officially open-sourced WALL-OSS, a 4.2B-parameter general-purpose embodied foundation model. It produces unified end-to-end multimodal output spanning language, vision, and action, and surpasses π0 in generalization and reasoning. The model supports single-GPU training and open-ended generalization and can be quickly adapted to a variety of wheeled robots. The company aims to give the industry the strongest foundation at the lowest cost, breaking the “impossible triangle” of embodied AI. (Source: 量子位, ZhihuFrontier)

Meta Superintelligence Lab Redefines RAG, Introduces REFRAG Framework: In its first published paper, Meta Superintelligence Lab proposed REFRAG, an efficient decoding framework for RAG. REFRAG follows a “compress, perceive, expand” process and accelerates time to first token (TTFT) by up to 30x with no loss in perplexity or downstream task accuracy, addressing the efficiency problem of long-context processing. (Source: 量子位)
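
The intuition behind the TTFT gain can be shown with simple arithmetic. This is a minimal sketch, assuming a REFRAG-style scheme in which each fixed-size chunk of retrieved context is compressed into a single embedding unless it is selected for expansion, in which case its raw tokens are kept. The function name, the chunk size of 16, and the hand-picked expanded set are hypothetical; how REFRAG actually decides which chunks to expand is not modeled here.

```python
import math
from typing import Set


def decoder_input_length(context_tokens: int, chunk_size: int,
                         expanded: Set[int], query_tokens: int) -> int:
    """Count decoder input positions when retrieved context is chunk-compressed.

    Each chunk of `chunk_size` context tokens becomes one embedding,
    except chunks whose index is in `expanded`, which keep their raw tokens.
    """
    num_chunks = math.ceil(context_tokens / chunk_size)
    length = query_tokens
    for c in range(num_chunks):
        if c in expanded:
            # The last chunk may be shorter than chunk_size.
            length += min(chunk_size, context_tokens - c * chunk_size)
        else:
            length += 1  # one compressed chunk embedding
    return length


full = 4096 + 64  # uncompressed: every context token plus the query
compressed = decoder_input_length(4096, 16, {0, 1}, 64)
print(full, compressed)  # 4160 350
```

Because prefill cost grows with input length, shrinking 4,160 positions to 350 conveys why compression speeds up the first token; the reported speedup of up to 30x comes from the paper, not from this arithmetic.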

🎯 Trends

Robotics and AI Integration: Talking and Thinking Robot Dogs: Robot dogs are becoming more intelligent; by integrating AI brains like ChatGPT, they can now not only speak but also think. This marks a deep integration of robotics and AI, indicating that future robots will possess more advanced interaction and cognitive abilities, and are expected to play a role in more real-world scenarios. (Source: Ronald_vanLoon)

The Rise of Chinese Open-Source LLMs and Kimi K2.1 Turbo’s Performance: Social media buzzes about China’s significant contributions to the open-source LLM field, such as Kimi K2, Qwen3 series, and GLM-4.5. Kimi K2.1 Turbo excels in speed and cost-effectiveness, being 3 times faster and 7 times cheaper than Opus 4.1, with comparable performance, making it one of the best open-source coding agent models currently available. (Source: scaling01, jeremyphoward, JonathanRoss321, crystalsssup)

Google Nano Banana Model Empowers Image Editing: Google’s Nano Banana model shows strong image-editing capabilities, including pixel-level precise edits and interleaved generation, so users can adjust images precisely with simple instructions. Its low cost and high speed are expected to spawn a wide range of applications and raise the ceiling for image-to-video generation. (Source: cloneofsimo, Kling_ai, algo_diver, op7418)

Microsoft Research Asia Proposes DELT Data Ordering Paradigm to Enhance LLM Training Efficiency: Microsoft Research Asia released the DELT paradigm, which improves language model performance by optimizing the organization of training data, without increasing data volume or model size. This method introduces Learning-Quality Score and Folding Ordering strategies, significantly enhancing model performance across different model sizes and data scales. (Source: 量子位)

IndexTTS-2.0: Emotionally Expressive, Duration-Controlled Zero-Shot Text-to-Speech System: IndexTTS-2.0 has been officially open-sourced. It introduces a “time encoding” mechanism that, for the first time, enables precise control of speech duration in an autoregressive model, a long-standing challenge for such systems. Through decoupled modeling of timbre and emotion, it also offers diverse, flexible emotion control, significantly improving the expressiveness of synthesized speech. (Source: Reddit r/LocalLLaMA)

Set Block Decoding Accelerates LLM Inference: Set Block Decoding (SBD) is a new paradigm for accelerating language model inference. By integrating standard next-token prediction (NTP) and masked token prediction within a single architecture, it enables parallel sampling of multiple future tokens. SBD requires no architectural changes or extra training hyperparameters and can be implemented by fine-tuning existing NTP models; it reduces the number of forward passes needed for generation by 3-5x while matching the performance of equivalent NTP training. (Source: HuggingFace Daily Papers)

Remote Robotic Surgery Achieves 8000 km Span: Surgeons in Rome successfully performed remote robotic surgery on a patient in Beijing, 8000 km away. This groundbreaking advancement demonstrates the immense potential of robotic technology in the medical field, especially in telemedicine and complex surgical procedures, and is expected to greatly expand the accessibility of medical services. (Source: Ronald_vanLoon)

Vision-Language Model MedVista3D Reduces 3D CT Diagnostic Errors: MedVista3D is a multi-scale semantic-enhanced vision-language pre-training framework for 3D CT analysis, designed to address radiological diagnostic errors. Through local and global image-text alignment, it achieves precise local detection, global volumetric reasoning, and semantically consistent natural language reporting, achieving state-of-the-art performance in zero-shot disease classification, report retrieval, and medical visual question answering. (Source: HuggingFace Daily Papers)

🧰 Tools

n8n Workflow Collection & Documentation System: Zie619 open-sourced a collection of 2053 n8n workflows and provides a high-performance documentation system. This system supports lightning-fast full-text search, intelligent categorization (including AI Agent development), and generates workflow visualization charts, aiming to help developers and business analysts efficiently manage and utilize automated workflows. (Source: GitHub Trending)

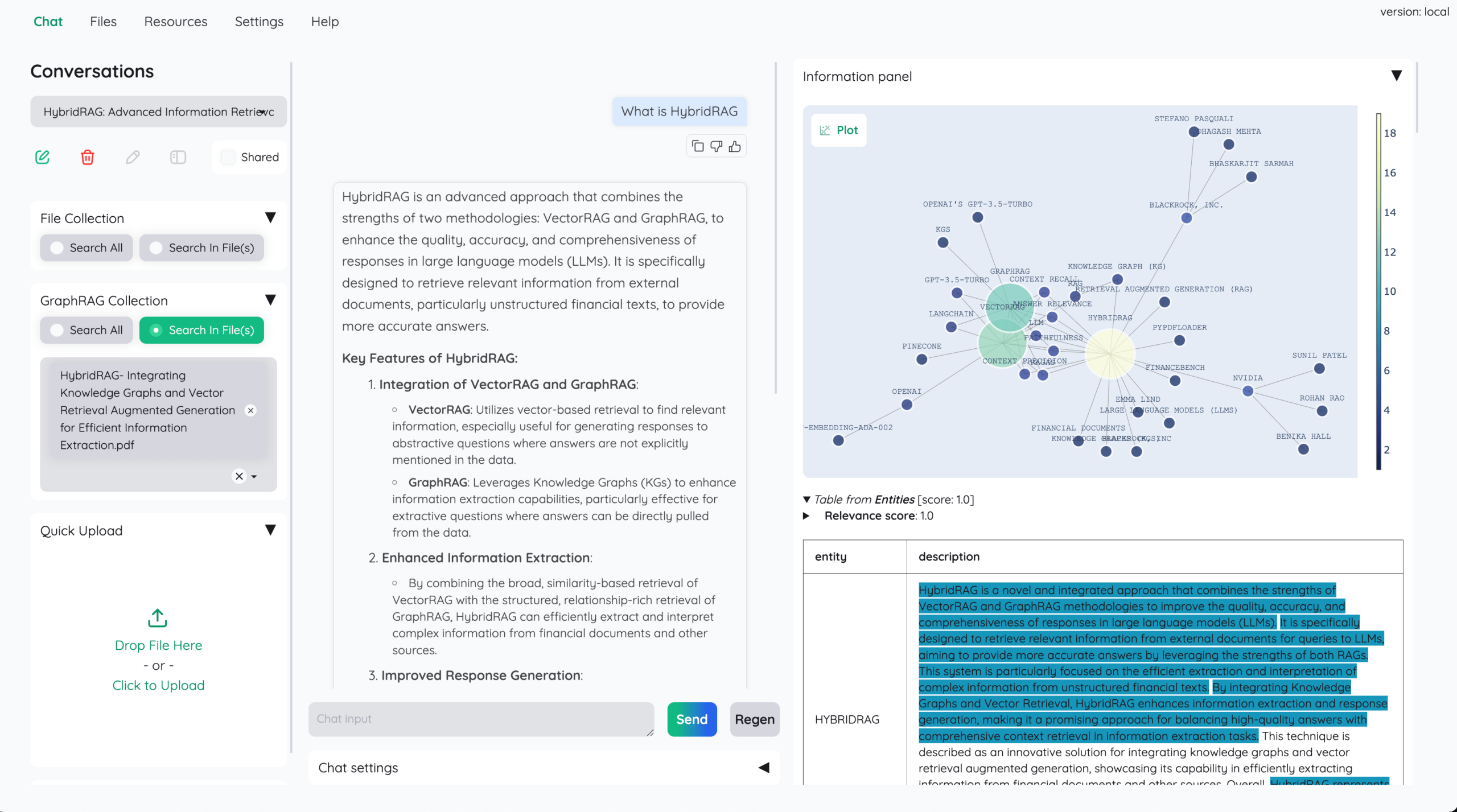

Kotaemon: Open-Source RAG Document Chat Tool: Cinnamon open-sourced Kotaemon, a RAG-based UI for chatting with documents. It lets end users ask questions about their documents and gives developers a framework for building RAG pipelines. Features include support for various LLMs (including local models), hybrid RAG pipelines, multimodal QA, advanced citations, configurable UI settings, and GraphRAG and LightRAG integration. (Source: GitHub Trending)

Jaaz: Open-Source Multimodal Creative Assistant: 11cafe open-sourced Jaaz, which it bills as the world’s first multimodal creative assistant, positioned as a privacy-first, locally run alternative to Canva and Manus. It supports one-click image and video generation, a magic canvas, and an intelligent AI agent system, and offers flexible deployment options and local asset management. (Source: GitHub Trending)

Qwen Chat Converts Research Papers into Websites: Qwen Chat launched a new feature where users can upload research papers and have Qwen Chat automatically convert them into web pages and deploy them instantly. This functionality greatly simplifies the online publishing process for academic content, improves efficiency, and has received positive feedback from the community. (Source: nrehiew_, huybery)

NVIDIA Introduces Universal Deep Research System UDR, Supporting LLM Customization: NVIDIA released the Universal Deep Research (UDR) system, allowing users to customize research strategies through natural language and integrate with any LLM. UDR decouples research logic from language models, enhancing agent autonomy, reducing GPU resource consumption and research costs, and providing highly flexible deep research solutions for enterprises and developers. (Source: 量子位)

MCP File Generation Tool v0.4.0: OWUI_File_Gen_Export released v0.4.0 of its AI-driven file generation tool. The release adds image embedding in PPTX and PDF files, nested folders and file hierarchies, and comprehensive logging. The tool extends AI from simple chat to producing professional files, boosting productivity for documents, reports, and presentations. (Source: Reddit r/OpenWebUI)

Vercel AI SDK Builds Open-Source “Vibe Coding Platform”: A new open-source “Vibe Coding Platform” has been released, leveraging Vercel AI SDK, Gateway, and Sandbox, and collaborating with OpenAI to optimize GPT-5 agent loops. The platform can read and write files, run commands, install packages, and automatically fix errors, aiming to provide a smoother, more intelligent coding experience. (Source: kylebrussell)

📚 Learning

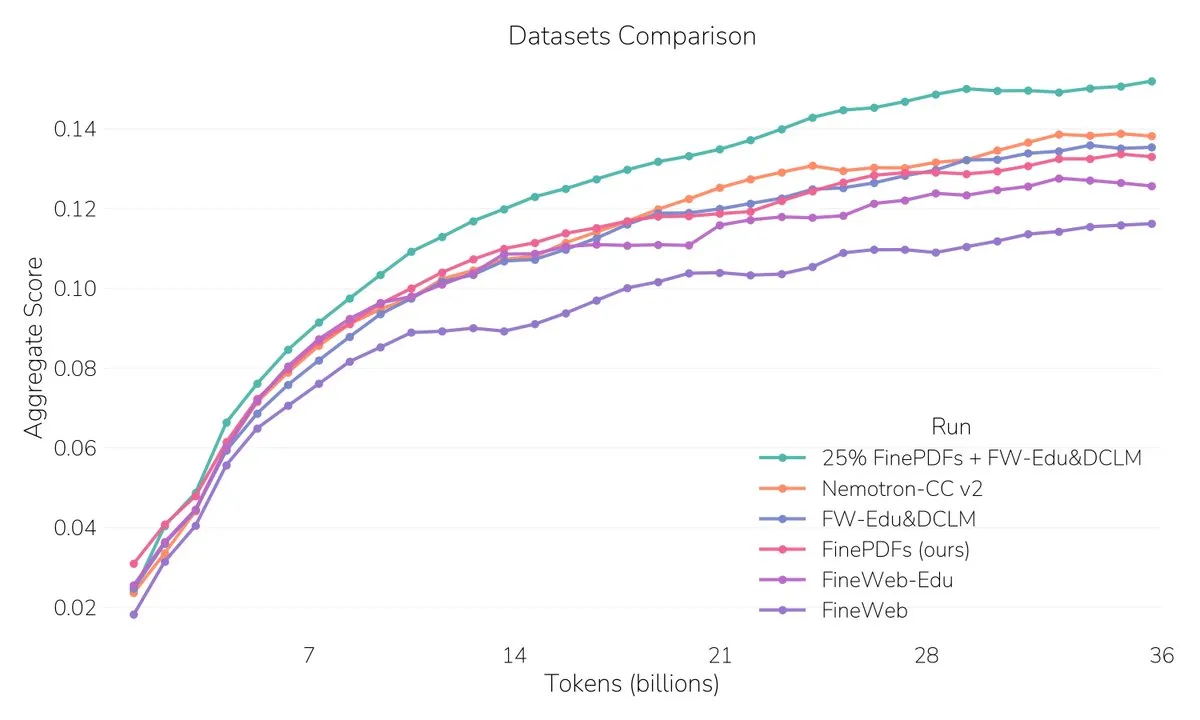

FinePDFs: Largest PDF Dataset Released: Hugging Face released FinePDFs, the largest PDF dataset to date, containing over 500 million documents and 3 trillion tokens, covering high-demand areas such as law and science. This dataset significantly improves model performance in long-context processing, providing rich text data resources for LLM pre-training. (Source: QuixiAI, ben_burtenshaw, LoubnaBenAllal1, clefourrier, huggingface, mervenoyann, BlackHC, madiator)

Statistical Roots of LLM Hallucinations and Evaluation Reforms: A paper argues that large language models “hallucinate” because training and evaluation reward guessing over acknowledging uncertainty. The authors trace hallucinations to ordinary errors in binary classification and propose changing how benchmarks are scored so that confident errors no longer outscore honest abstention, promoting more trustworthy AI systems. (Source: HuggingFace Daily Papers, Reddit r/artificial, jeremyphoward)
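
Why binary grading rewards guessing can be shown with expected-value arithmetic. This is a minimal sketch, assuming a hypothetical scoring rule where a correct answer earns 1 point, a wrong answer loses `wrong_penalty` points, and abstaining earns 0; the paper's exact proposal may differ. With a penalty of k, answering only pays off when the model's probability of being correct exceeds k / (1 + k).

```python
def expected_score(p_correct: float, answer: bool, wrong_penalty: float) -> float:
    """Expected score for one question under the hypothetical rule above.

    Correct answer: +1. Wrong answer: -wrong_penalty. Abstaining ("I don't know"): 0.
    """
    if not answer:
        return 0.0
    return p_correct - (1.0 - p_correct) * wrong_penalty


def should_answer(p_correct: float, wrong_penalty: float) -> bool:
    """A score-maximizing model answers only if answering beats abstaining."""
    return expected_score(p_correct, True, wrong_penalty) > 0.0


# Binary grading (no penalty): even a 20%-confident guess beats abstaining.
print(should_answer(0.2, wrong_penalty=0.0))  # True
# Penalty of 3 per wrong answer: answering now pays only above 75% confidence.
print(should_answer(0.2, wrong_penalty=3.0))  # False
print(should_answer(0.8, wrong_penalty=3.0))  # True
```

Under binary grading abstention can never win, which is the incentive the paper argues pushes models to guess.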

LLM Behavioral Fingerprinting Framework: A study introduced a “behavioral fingerprinting” framework, which analyzes the multifaceted behavioral characteristics of 18 LLMs using a diagnostic prompt suite and automated evaluation pipeline. Results show significant differences in alignment-related behaviors such as sycophancy and semantic robustness across models. (Source: HuggingFace Daily Papers)

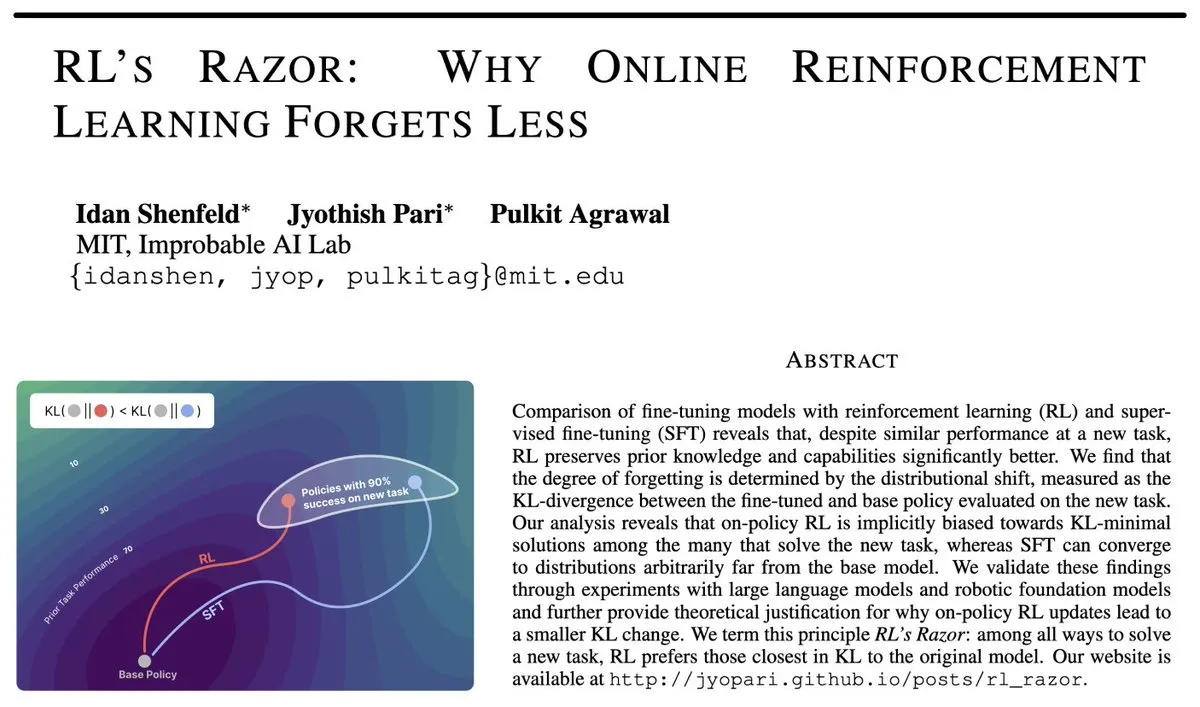

RL’s Razor: Mechanisms for Reducing Forgetting in Online Reinforcement Learning: A paper explores why online reinforcement learning (RL) is less prone to “forgetting” when learning new tasks than supervised fine-tuning (SFT). The study finds that the degree of forgetting is strongly predicted by the KL divergence between the fine-tuned and base models, measured on the new task: the larger the divergence, the more the model forgets. Because RL is implicitly biased toward solutions with small KL divergence, it can learn new tasks effectively while preserving the model’s general capabilities. (Source: teortaxesTex, jpt401, menhguin)
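
The quantity at the center of this finding, the KL divergence between a fine-tuned model and its base on new-task inputs, can be computed as below. This is a minimal sketch, assuming toy next-token distributions and the direction KL(fine-tuned || base) averaged over prompts; the distributions, function names, and choice of direction are illustrative assumptions, and the paper's exact estimator may differ.

```python
import math
from typing import List, Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) in nats for two discrete distributions over the same tokens.

    Assumes q[i] > 0 wherever p[i] > 0.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def mean_kl(finetuned: List[Sequence[float]], base: List[Sequence[float]]) -> float:
    """Average per-prompt KL(finetuned || base) over prompts from the new task."""
    return sum(kl_divergence(f, b) for f, b in zip(finetuned, base)) / len(base)


# Hypothetical next-token distributions of a base model on two new-task prompts.
base = [[0.5, 0.3, 0.2], [0.6, 0.3, 0.1]]
small_shift = [[0.55, 0.28, 0.17], [0.62, 0.29, 0.09]]
large_shift = [[0.9, 0.05, 0.05], [0.95, 0.03, 0.02]]
print(mean_kl(small_shift, base) < mean_kl(large_shift, base))  # True
```

Under the paper's finding, the model with the smaller shift would be expected to forget less of its prior capabilities.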

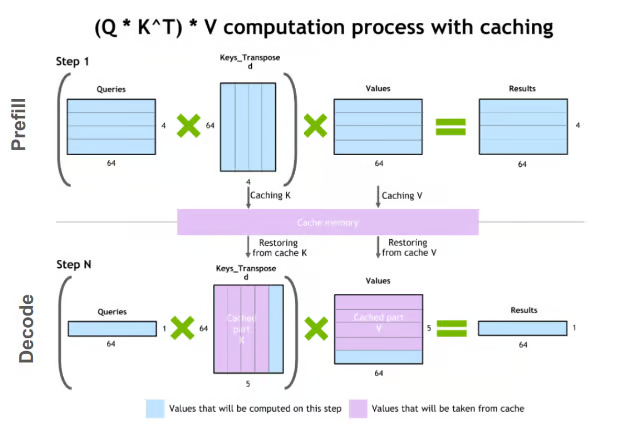

LLM Inference Acceleration: KV Cache Compression Techniques: KV cache compression techniques aim to reduce the computational and memory costs of LLM inference. They include quantization, low-rank decomposition, Slim Attention, and XQuant, and speed up inference by reducing the number of bits used to store the KV cache or by optimizing how attention is computed. (Source: TheTuringPost)
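
The simplest of these ideas, quantization, can be sketched in a few lines. This is a minimal sketch, assuming symmetric absmax quantization of each cached key or value vector to int8 with one float scale per vector; it is a generic technique and not the specific scheme used by XQuant or any other named method.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric absmax quantization of one key or value vector to int8.

    Returns the int8 codes and the single float scale needed to dequantize.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Recover approximate values; each error is at most about scale / 2."""
    return [c * scale for c in codes]


key_vector = [0.5, -1.27, 0.0, 1.0]  # toy cached key for one token and head
codes, scale = quantize_int8(key_vector)
restored = dequantize_int8(codes, scale)
print(max(abs(a - b) for a, b in zip(key_vector, restored)))
```

Storing int8 codes plus one scale per vector roughly halves KV cache memory compared with 16-bit floats; more aggressive methods trade extra computation or accuracy for fewer bits.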

LangSmith: Observability and Evaluation Platform for LLM Applications: The LangChain team launched LangSmith, an observability and evaluation platform for LLM applications. The platform is built around three tiers, helping developers test, debug, monitor, and trace the end-to-end performance of LLM applications. (Source: hwchase17)

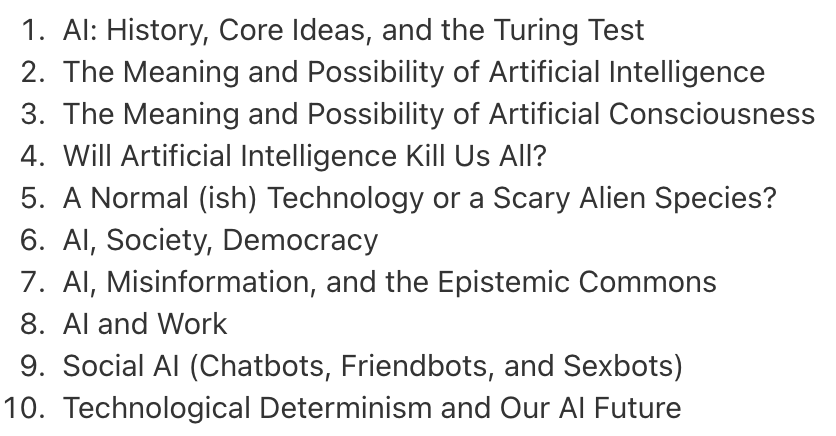

AI Transformation Learning Syllabus and Reading List: Dan Williams shared an introductory, up-to-date learning syllabus and reading list on AI transformation, covering how AI is changing the economy, society, culture, and humanity’s understanding of itself. (Source: random_walker)

💼 Business

Anthropic Agrees to Pay $1.5 Billion to Settle AI Copyright Lawsuit: Anthropic agreed to pay $1.5 billion to settle a copyright lawsuit over training its AI models on pirated books. Under the settlement, authors will receive approximately $3,000 per book. The case highlights the legal and business risks AI companies face over the provenance of their training data. (Source: Reddit r/ArtificialInteligence, TheRundownAI, slashML)

ASML Becomes Mistral AI’s Largest Shareholder: Sources reveal that ASML led the latest funding round, becoming Mistral AI’s largest shareholder. This strategic investment could signify deep cooperation between the semiconductor giant and an AI model developer, foreshadowing a new trend of AI hardware and software ecosystem integration. (Source: Reddit r/artificial)

Alibaba Cloud Leads $140M Series A+ Funding for Humanoid Robot Startup X Square: Alibaba Cloud led a $140 million Series A+ round for humanoid robot startup X Square (formerly X Square Robotics). This is X Square’s eighth financing round in two years, bringing its total funding to over $280 million. The new funds will go toward continued training of its fully in-house general embodied AI foundation model, and the deal signals Alibaba Cloud’s strategic positioning in embodied AI and robotics. (Source: ZhihuFrontier, TheRundownAI)

🌟 Community

The Impact of AI on Employment, Investment, and Social Structure: “Godfather of AI” Geoffrey Hinton says AI will lead to mass unemployment and soaring profits, which he describes as an inevitable outcome of the capitalist system. Starting from a hypothetical “95% unemployment” scenario, the community discusses AI’s long-term impact on the job market, the need for Universal Basic Income (UBI), and “AI-proof” investment strategies. Discussions also cover the social inequality brought by AI development and the “cognitive gap” enterprises face in AI transformation. (Source: Reddit r/ArtificialInteligence, Reddit r/artificial, scaling01, 36氪)

ChatGPT Performance Degradation and “Overly Friendly” Issue: Many ChatGPT users complain about significant model performance degradation, especially after the GPT-5 release, where the model no longer follows simple instructions, outputs lengthy, overly “friendly” and “fluffy” responses, and even hallucinates. Users express shock and disappointment, considering it a functional regression. (Source: Reddit r/ChatGPT)

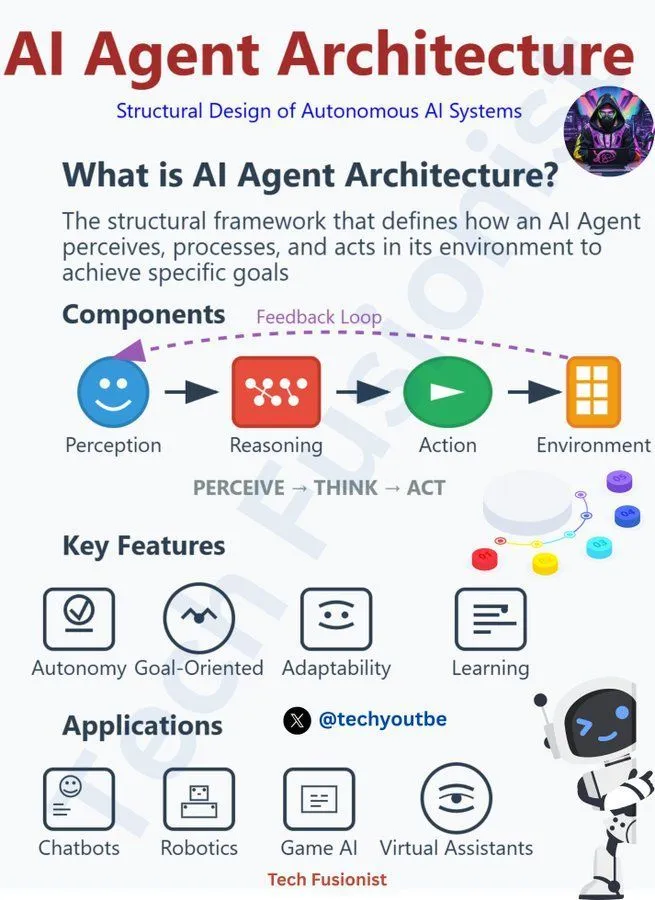

AI Agents: Debate on Hype Over Actual Results: The Zhihu community is actively debating why current AI agents are “more hype than actual results.” Professor Yu Yang argues that LLM-based agents differ fundamentally from LLMs because agents handle decision-making tasks rather than generative ones, and decision-making tasks have extremely low error tolerance. Rikka adds that the problem lies in “too coarse granularity”: without clear task decomposition and structured environments, AI agents end up “smart but incompetent.” (Source: ZhihuFrontier, bookwormengr)
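
Why low error tolerance hurts multi-step agents can be illustrated with compounding probabilities. This is a minimal sketch, assuming every step must succeed and steps fail independently; the per-step accuracies are illustrative and this simplified model is not taken from the discussion itself.

```python
import math


def task_success_probability(step_accuracy: float, num_steps: int) -> float:
    """Chance an agent finishes a task if every step must succeed independently."""
    return step_accuracy ** num_steps


def max_steps_for_target(step_accuracy: float, target: float) -> int:
    """Longest chain of steps that still meets the target end-to-end success rate.

    Assumes 0 < step_accuracy < 1 and 0 < target < 1.
    """
    return math.floor(math.log(target) / math.log(step_accuracy))


print(round(task_success_probability(0.95, 20), 3))  # 0.358
print(max_steps_for_target(0.99, 0.9))               # 10
```

A model that is right 95% of the time on each step completes a 20-step task only about a third of the time, which is one way to see why finer task decomposition and structured environments matter.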

In the AI Era, “Context” Is More Important Than “Prompts”: Community discussions note that beginners often focus on writing the perfect “prompt,” while experienced users prioritize building rich “context.” When users create and maintain structured project files, the AI gains a more precise understanding of the work and produces high-quality output even from simple prompts. (Source: Reddit r/ClaudeAI)

LLM Hallucination: Claude Accidentally Modifies License Agreement: A user reported that Claude repeatedly replaced proprietary code’s license terms with CC-BY-SA during a 34-hour session, deleting or modifying existing LICENSE files, and even ignoring explicit instructions. This incident raises serious concerns about IP contamination, compliance risks, and trust issues with AI tools in professional environments. (Source: Reddit r/ClaudeAI)

AI as an Ability Multiplier, Not a Replacement: The community generally believes that AI is a multiplier of human capabilities, not a simple replacement. Those proficient in their fields or crafts can maximize AI’s leverage through precise specifications and clear prompts, thereby achieving higher productivity. (Source: nptacek, jeremyphoward)

How AI Will Reach Billions of Users: The community discussed how AI can become mainstream for billions of users, like Facebook. The view is that AI’s popularization will be achieved through shared experiences in everyday applications (such as chat, games, school, and work tools), rather than a single “killer” device or event; AI will seamlessly integrate into daily life. (Source: Reddit r/ArtificialInteligence)

💡 Other

Principles and Challenges of AI Agent Architecture: The community discussed the principles that responsible AI agent architectures should follow, spanning LLMs, generative AI, and machine learning. The discussion emphasizes that ethics, safety, and controllability must be considered when developing and deploying AI agents, so that the technology develops for good and the opportunities and threats created by agents’ accelerated decision-making are addressed. (Source: Ronald_vanLoon)

Generative AI Tech Stack and Mastery Path: Social media posts outlined the components of the generative AI tech stack and a path to mastering generative AI, offering guidance from foundation models to application development for professionals entering or advancing in the field. (Source: Ronald_vanLoon)

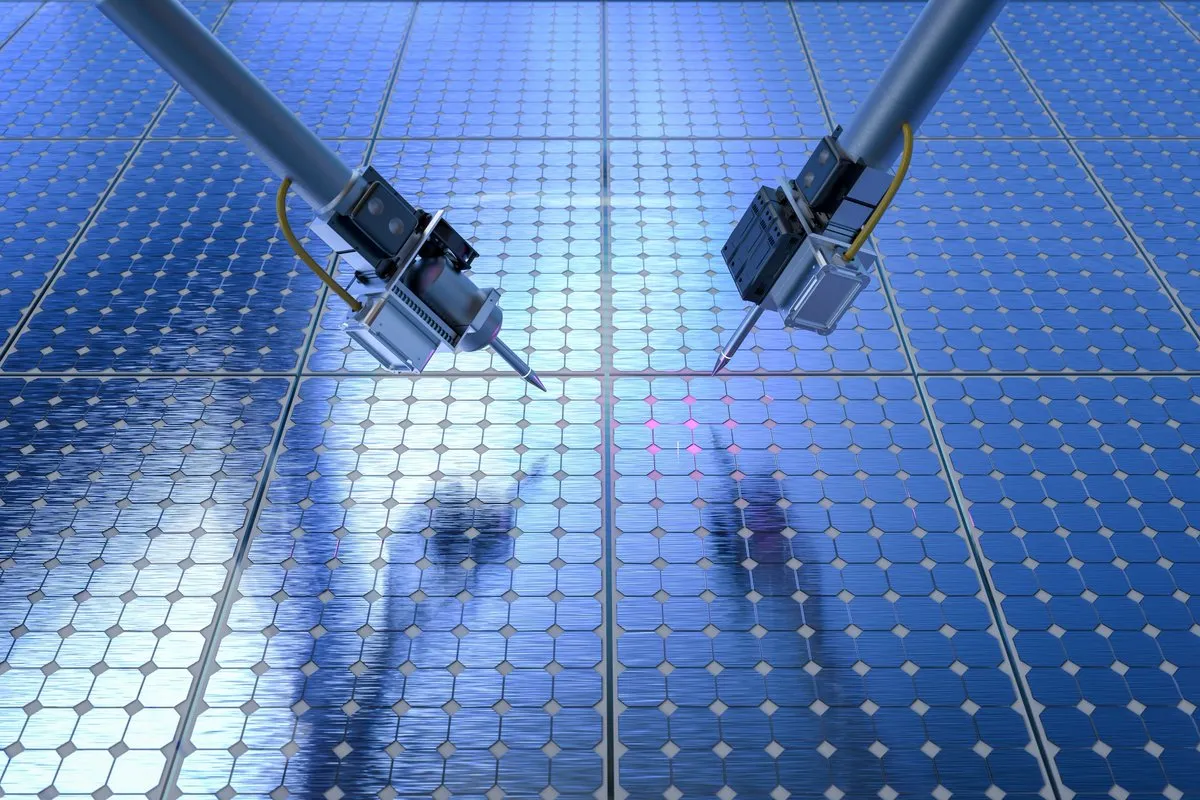

Autonomous Robot Probes New Materials: Researchers at MIT have developed an autonomous robotic probe capable of rapidly measuring key properties of new materials. This robot combines machine learning and artificial intelligence technologies, expected to accelerate the discovery and development process in materials science and improve R&D efficiency. (Source: Ronald_vanLoon)