Keywords: GPT-5, AI Self-Improvement, Embodied AI, Multimodal Model, Large Language Model, Reinforcement Learning, AI Agent, GPT-5 Performance Gains, Genie Envisioner Robot Platform, LLM Recruitment Evaluation Bias, Qwen3 Ultra-Long Context, CompassVerifier Answer Verification

🔥 Spotlight

GPT-5 Release: Productization and Performance Enhancement : OpenAI has officially released GPT-5, the latest iteration of its flagship model. The release focuses on user experience: a real-time router automatically sends each request either to a fast base model or to a deeper reasoning model, balancing speed and intelligence. GPT-5 shows significant improvements in reducing hallucinations, following instructions, and programming, setting new records on multiple benchmarks. Sam Altman likens it to a “Retina display,” emphasizing its practicality as a “PhD-level AI” rather than a breakthrough in the limits of intelligence. Although it is not AGI, its faster inference and lower operating costs are expected to drive wider AI adoption. (Source: MIT Technology Review)
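The routing idea can be illustrated with a toy sketch of a request router that sends a prompt either to a fast model or to a reasoning model. The model names (`fast-model`, `reasoning-model`), the keyword cues, and the length threshold are all hypothetical; OpenAI has not published how GPT-5's router decides, and a production router is presumably a learned classifier rather than a keyword rule.

```python
from dataclasses import dataclass

# Hypothetical model identifiers; these are not real API model names.
FAST_MODEL = "fast-model"
REASONING_MODEL = "reasoning-model"

# Hypothetical cue phrases suggesting a request needs multi-step reasoning.
REASONING_CUES = ("prove", "step by step", "debug", "optimize", "think hard")


@dataclass
class RoutingDecision:
    model: str
    reason: str


def route(prompt: str, long_prompt_chars: int = 2000) -> RoutingDecision:
    """Pick a model with a simple heuristic (a stand-in for a learned router)."""
    text = prompt.lower()
    for cue in REASONING_CUES:
        if cue in text:
            return RoutingDecision(REASONING_MODEL, f"matched cue '{cue}'")
    # Very long prompts are assumed to be harder, so they also take the slow path.
    if len(prompt) > long_prompt_chars:
        return RoutingDecision(REASONING_MODEL, "long prompt")
    return RoutingDecision(FAST_MODEL, "default fast path")


if __name__ == "__main__":
    for p in ["What's the capital of France?", "Prove that sqrt(2) is irrational."]:
        d = route(p)
        print(f"{p!r} -> {d.model} ({d.reason})")
```

The point of the design is that routing happens per request, so simple queries never pay the latency of the reasoning model.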

Progress in AI Self-Improvement Research : Meta CEO Mark Zuckerberg stated that the company is committed to building AI systems capable of self-improvement. AI has already shown several forms of self-improvement, such as continuously optimizing its own performance through automatic data augmentation, model architecture search, and reinforcement learning. This trend suggests that future AI systems may be able to learn autonomously and exceed performance limits set by humans, which is considered a critical path toward more advanced AI. (Source: MIT Technology Review)

Genie Envisioner: A Unified World Model Platform for Robot Manipulation : Researchers have introduced Genie Envisioner (GE), a unified world foundation platform for robot manipulation. GE-Base is an instruction-conditioned video diffusion model that captures the spatial, temporal, and semantic dynamics of real robot interactions. GE-Act maps latent representations to executable action trajectories, enabling precise and generalizable policy inference. GE-Sim, an action-conditioned neural simulator, supports closed-loop policy development. This platform is expected to provide a scalable and practical foundation for instruction-driven general embodied AI. (Source: HuggingFace Daily Papers)

ISEval: An Evaluation Framework for Large Multimodal Models’ Ability to Identify Erroneous Inputs : To test whether Large Multimodal Models (LMMs) can proactively identify erroneous inputs, researchers have proposed the ISEval evaluation framework, which covers seven types of flawed premises and three evaluation metrics. The study found that most LMMs struggle to detect flawed premises in text unless explicitly prompted to look for them, and that their performance varies by error type: they are good at spotting logical fallacies but perform poorly on surface-level language errors and on certain flawed conditions. This highlights the urgent need for LMMs to proactively check the validity of their inputs. (Source: HuggingFace Daily Papers)

Research on Language Bias in LLM-based Recruitment Evaluation : A study introduced a benchmark for evaluating how Large Language Models (LLMs) respond to linguistically discriminatory markers in recruitment evaluations. Using carefully designed interview simulations, it found that LLMs systematically penalize certain language patterns, especially vague language, even when the content quality is identical. Because such language patterns correlate with speakers’ demographic backgrounds, this reveals demographic bias in automated evaluation systems. The work provides a foundational framework for detecting and measuring linguistic discrimination in AI systems, with broad implications for fairness in automated decision-making. (Source: HuggingFace Daily Papers)
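The core measurement in such a benchmark is a paired comparison: the same answer content is scored twice, once with neutral phrasing and once with the language pattern under test, and any systematic score gap is attributed to the phrasing. The sketch below assumes you already have the two score lists; the function names and the sample scores are hypothetical, and the sign-flip permutation test is one reasonable significance check, not necessarily the statistic the study used.

```python
import random
from statistics import mean


def paired_gap(neutral: list[float], marked: list[float]) -> float:
    """Mean score difference (neutral - marked) over content-matched pairs."""
    if len(neutral) != len(marked):
        raise ValueError("score lists must be paired")
    return mean(n - m for n, m in zip(neutral, marked))


def sign_flip_p_value(neutral: list[float], marked: list[float],
                      trials: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test: randomly flip the sign of each pair's difference.

    Under the null hypothesis (phrasing has no effect), each difference is
    equally likely to be positive or negative.
    """
    diffs = [n - m for n, m in zip(neutral, marked)]
    observed = abs(mean(diffs))
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if abs(mean(flipped)) >= observed:
            hits += 1
    return (hits + 1) / (trials + 1)


if __name__ == "__main__":
    # Hypothetical scores for six answers with identical content.
    neutral = [8, 7, 9, 8, 7, 8]
    marked = [6, 6, 7, 7, 5, 6]
    print("mean gap:", paired_gap(neutral, marked))
    print("p-value:", sign_flip_p_value(neutral, marked))
```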

🎯 Trends

Qwen3 Series Models Support Million-Token Ultra-Long Context : Alibaba Cloud’s Qwen3-30B-A3B-2507 and Qwen3-235B-A22B-2507 models now support contexts of up to 1 million tokens. This is enabled by Dual Chunk Attention (DCA) and MInference sparse attention, which improve generation quality and speed up inference on sequences approaching one million tokens by up to 3x. This considerably broadens how LLMs can be applied to complex tasks involving long documents and large codebases, and the models can be deployed efficiently with vLLM and SGLang. (Source: Alibaba_Qwen)

Anthropic Claude Opus 4.1 and Sonnet 4 Upgrades : Anthropic has released Claude Opus 4.1 and Sonnet 4, focusing on Agentic tasks, real-world coding, and reasoning. The models offer “extended thinking,” switching flexibly between near-instant responses and deeper deliberation, which can compress complex tasks that would otherwise take hours into minutes. This further strengthens Claude’s position in multi-model workflows, particularly for complex code review and advanced reasoning. (Source: dl_weekly)

Microsoft Launches Copilot 3D Feature : Microsoft has launched a free Copilot 3D feature that converts 2D images into GLB format 3D models, compatible with various 3D viewers, design tools, and game engines. Although currently less effective for animal and human images, this feature provides users with convenient 2D-to-3D conversion capabilities, expected to play a role in product design, virtual reality, and other fields, further lowering the barrier to 3D content creation. (Source: The Verge)

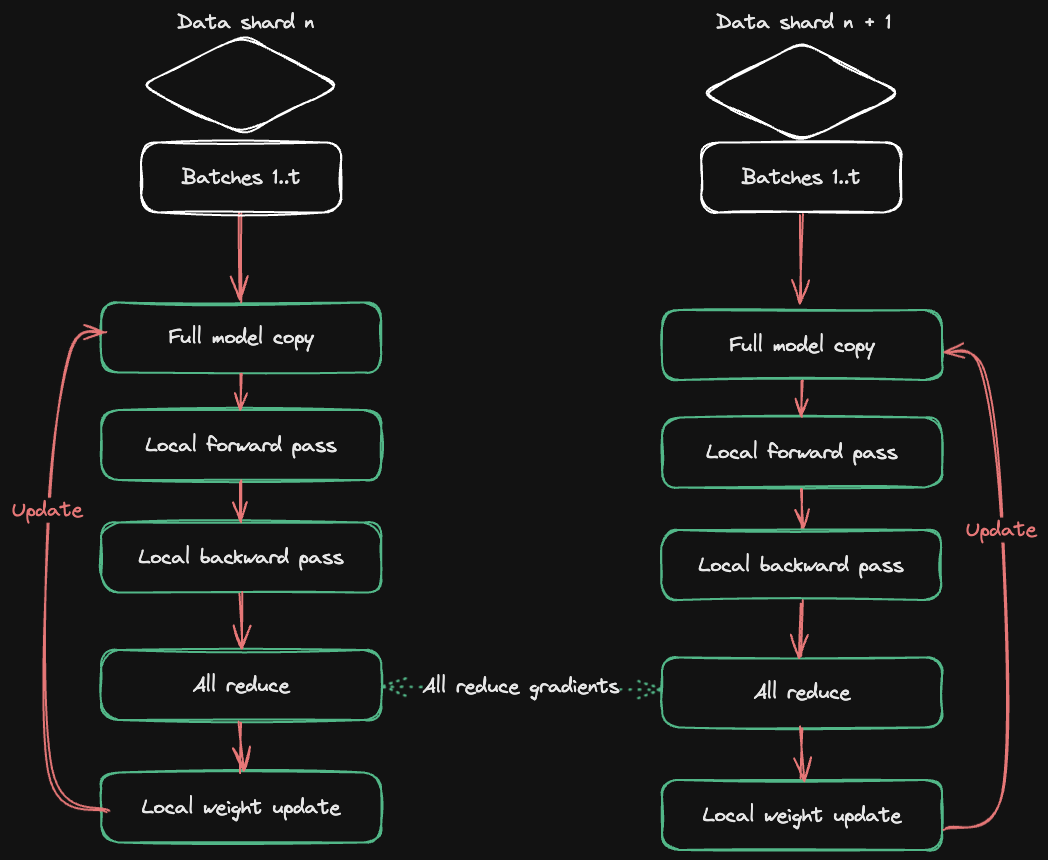

HuggingFace Accelerate Releases Multi-GPU Training Guide : HuggingFace, in collaboration with Axolotl, has released the Accelerate ND-Parallel guide, which aims to make it easier to combine parallelism strategies in multi-GPU training. The guide covers Data Parallel (DP), Fully Sharded Data Parallel (FSDP), Tensor Parallel (TP), and Context Parallel (CP), and gives example hybrid configurations that help developers optimize memory usage and throughput when training large models while keeping communication overhead in multi-node training under control. (Source: HuggingFace Blog)
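In an ND-parallel setup, the degrees of the individual parallelism dimensions must multiply to the number of GPUs. The sketch below checks that constraint for a hypothetical cluster. The `MeshConfig` class is our own illustration, not Accelerate's API, and the rule that tensor parallelism should stay within one node is a common rule of thumb rather than a hard requirement.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class MeshConfig:
    """Hypothetical description of a 4-D device mesh (not Accelerate's class)."""
    dp_replicate: int = 1  # plain data-parallel replicas
    dp_shard: int = 1      # FSDP sharding degree
    tp: int = 1            # tensor-parallel degree
    cp: int = 1            # context-parallel degree

    def world_size(self) -> int:
        """Total GPUs the mesh occupies: the product of all degrees."""
        return prod((self.dp_replicate, self.dp_shard, self.tp, self.cp))

    def validate(self, num_gpus: int, gpus_per_node: int) -> None:
        if self.world_size() != num_gpus:
            raise ValueError(
                f"mesh uses {self.world_size()} GPUs, cluster has {num_gpus}")
        # Rule of thumb: TP communicates every layer, so keep it on the
        # fast intra-node interconnect.
        if self.tp > gpus_per_node:
            raise ValueError("tensor parallelism should not span nodes")


if __name__ == "__main__":
    # Hypothetical cluster: 2 nodes x 8 GPUs.
    cfg = MeshConfig(dp_replicate=2, dp_shard=2, tp=4)
    cfg.validate(num_gpus=16, gpus_per_node=8)
    print("valid mesh with world size", cfg.world_size())
```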

🧰 Tools

OpenAI Codex CLI: Local Coding Agent in Your Terminal : OpenAI has released Codex CLI, a lightweight coding Agent that runs locally in your terminal. It can be installed with `npm install -g @openai/codex` or `brew install codex`. Users can sign in with a ChatGPT Plus/Pro/Team account to use the latest models, such as GPT-5, under their existing subscription at no extra cost, or pay as they go with an API key. Codex CLI offers several sandbox modes, including read-only and read-write, and supports custom configuration, giving developers efficient and secure local programming assistance. (Source: openai/codex – GitHub Trending)

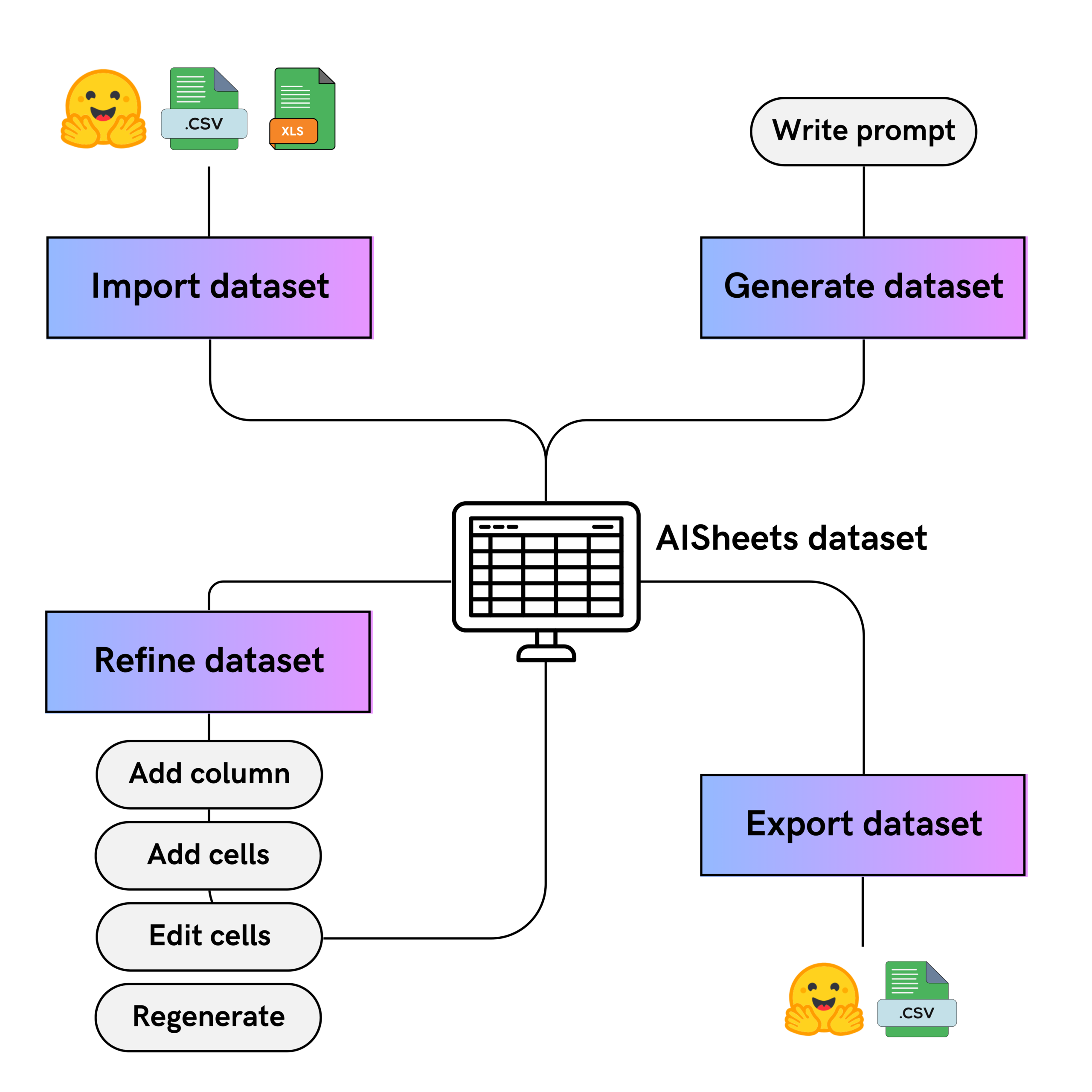

HuggingFace AI Sheets: No-Code Dataset Tool : HuggingFace has launched AI Sheets, an open-source, no-code tool for building, enriching, and transforming datasets with AI models. It has a spreadsheet-like interface and can be deployed locally or run on the Hugging Face Hub. Users can draw on thousands of open models (including gpt-oss) to compare models, optimize prompts, clean, classify, and analyze data, and generate synthetic data; they can iteratively improve AI-generated results through manual edits and upvote feedback, then export the dataset to the Hub. (Source: HuggingFace Blog)

Google Agent Development Kit (ADK) and Its Examples : Google has released the Agent Development Kit (ADK), an open-source, code-first Python toolkit for building, evaluating, and deploying complex AI Agents. ADK supports a rich tool ecosystem, modular multi-Agent systems, and flexible deployment. Its sample library adk-samples provides various Agent examples, from conversational bots to multi-Agent workflows, aiming to accelerate the Agent development process and integrate with the A2A protocol for remote Agent-to-Agent communication. (Source: google/adk-python – GitHub Trending & google/adk-samples – GitHub Trending)

Qwen Code CLI: Free Terminal Coding Tool : Alibaba Cloud’s Qwen Code CLI offers 2,000 free runs per day and can be launched with `npx @qwen-code/qwen-code@latest`. It supports Qwen OAuth login and aims to give developers a convenient, efficient way to write and test code. The Qwen team said it will keep improving both the CLI and the Qwen-Coder model, aiming to match Claude Code’s performance while remaining open source. (Source: Alibaba_Qwen)

📚 Learning

OpenAI Python Library Update : The official OpenAI Python library provides convenient access to the OpenAI REST API from Python 3.8+. It includes type definitions for all request parameters and response fields and offers both synchronous and asynchronous clients. Recent updates add beta support for the Realtime API, used to build low-latency, multimodal conversational experiences, and the documentation explains webhook verification, error handling, request IDs, and retries in detail, improving development efficiency and robustness. (Source: openai/openai-python – GitHub Trending)

Curated List of AI Agents : e2b-dev/awesome-ai-agents is a GitHub repository that collects numerous examples and resources for autonomous AI Agents. This list aims to provide developers with a centralized resource library to help them understand and learn about different types of AI Agents, covering various application scenarios from simple to complex, serving as important learning material for exploring and building AI Agents. (Source: e2b-dev/awesome-ai-agents – GitHub Trending)

MeanFlow: A New Paradigm for One-Step Generative Diffusion Models : An analysis on Science Space examines MeanFlow, a method expected to become a standard approach for accelerating generation in diffusion models. Instead of modeling the “instantaneous velocity,” MeanFlow models the “average velocity” over a time interval, which enables one-step generation and overcomes the slow sampling of traditional diffusion models. The method rests on a clear mathematical principle, can be trained from scratch with a single objective, and achieves near-SOTA one-step generation quality, offering new theoretical and practical directions for faster generative AI models. (Source: WeChat)
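The central relation can be written out explicitly. We assume the usual flow-matching notation (an assumption about conventions, not taken from the article): $z_t$ moves from data at $t = 0$ to noise at $t = 1$, and $v(z_t, t)$ is the instantaneous velocity. The average velocity over $[r, t]$ and the identity used as a training target then follow directly:

```latex
% Average velocity over the interval [r, t]
u(z_t, r, t) \triangleq \frac{1}{t - r} \int_r^t v(z_\tau, \tau)\, d\tau

% Multiply by (t - r) and differentiate both sides with respect to t (r held fixed):
u(z_t, r, t) + (t - r)\, \frac{d}{dt} u(z_t, r, t) = v(z_t, t)

% MeanFlow identity, used as the regression target for a network u_\theta:
u(z_t, r, t) = v(z_t, t) - (t - r)\, \frac{d}{dt} u(z_t, r, t),
\qquad \frac{d}{dt} u = v(z_t, t)\, \partial_z u + \partial_t u

% Sampling: since z_t - z_r = \int_r^t v\, d\tau, one network call jumps from noise to data
z_r = z_t - (t - r)\, u(z_t, r, t)
\;\Rightarrow\;
z_0 = \epsilon - u_\theta(\epsilon, 0, 1), \quad \epsilon = z_1
```

Because the right-hand side needs only the instantaneous velocity and a total derivative of the network (computable with a Jacobian-vector product), the model can be trained from scratch with a single regression objective, consistent with the description above.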

Full Lifecycle Optimization of Long Context KV Cache : Microsoft Research Asia shared its work on optimizing the KV Cache across its full lifecycle, targeting the latency and storage challenges of long-context Large Language Model inference. Using the SCBench benchmark and methods such as MInference and RetrievalAttention, the team significantly reduced prefilling latency and relieved KV Cache memory pressure. The research emphasizes system-level optimization across requests, including KV reuse through Prefix Caching, to make long-context LLM inference more scalable and cost-effective. (Source: WeChat)
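A back-of-the-envelope calculation shows why long contexts put so much pressure on KV Cache memory: every token stores one key and one value vector per KV head in every layer. The model shape below (48 layers, 4 KV heads, head dimension 128, 16-bit cache) is an assumed grouped-query-attention configuration chosen for illustration, not the published specification of any model mentioned here.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2, batch: int = 1) -> int:
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # Factor 2 = one key vector plus one value vector per head per layer.
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem
    return per_token * seq_len * batch


if __name__ == "__main__":
    # Assumed GQA shape, for illustration only.
    cfg = dict(num_layers=48, num_kv_heads=4, head_dim=128)
    for tokens in (8_192, 131_072, 1_000_000):
        gib = kv_cache_bytes(seq_len=tokens, **cfg) / 2**30
        print(f"{tokens:>9,} tokens -> {gib:6.1f} GiB of KV cache")
```

Under these assumptions a single 1M-token sequence needs roughly 92 GiB of cache, which is why sparse prefilling, retrieval over the cache, and cross-request Prefix Caching reuse matter at this scale.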

Reinforcement Learning Framework FR3E Enhances LLM Exploration Capability : ByteDance, MAP, and the University of Manchester jointly proposed FR3E (First Return, Entropy-Eliciting Explore), a novel structured exploration framework that addresses insufficient exploration in LLM reinforcement learning. FR3E identifies high-uncertainty tokens in reasoning trajectories and uses them as starting points for diverse continuations. This systematically restructures how LLMs explore, strikes a dynamic balance between exploitation and exploration, and significantly outperforms existing methods on multiple mathematical reasoning benchmarks. (Source: WeChat)
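The first step, locating high-uncertainty tokens, can be illustrated with token-level entropy. The sketch assumes you already have the model's next-token probability distribution at each position of a sampled trajectory. Picking the top-k entropy positions as branch points is a simplification for illustration, and FR3E's exact selection rule may differ.

```python
import math


def token_entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def branch_points(step_probs: list[list[float]], k: int = 2) -> list[int]:
    """Positions of the k highest-entropy tokens, returned in trajectory order.

    Each position is a candidate point from which to re-sample diverse continuations.
    """
    entropies = [token_entropy(p) for p in step_probs]
    top = sorted(range(len(entropies)), key=lambda i: entropies[i], reverse=True)[:k]
    return sorted(top)


if __name__ == "__main__":
    # Toy distributions over a 3-token vocabulary at 5 positions.
    trajectory = [
        [0.98, 0.01, 0.01],    # confident
        [0.34, 0.33, 0.33],    # very uncertain
        [0.90, 0.05, 0.05],
        [0.50, 0.50, 0.00],    # torn between two options
        [0.99, 0.005, 0.005],
    ]
    print(branch_points(trajectory, k=2))
```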

Research on the Correlation Between Massive Values in Self-Attention and Contextual Understanding : A new ICML 2025 study shows that highly concentrated massive values (entries of unusually large magnitude clustered in a few dimensions) appear in the query (Q) and key (K) representations of large language models’ self-attention, and that these values are crucial for understanding contextual knowledge. The phenomenon is common in models that use Rotary Position Embedding (RoPE) and already appears in early layers. Disrupting these values sharply degrades performance on tasks that require contextual understanding, which points to new directions for LLM design, optimization, and quantization. (Source: WeChat)
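One simple way to look for the phenomenon is to take the query (or key) matrix of one head and check whether a few dimensions carry far larger magnitudes than the rest. The sketch flags such dimensions with a ratio-to-median threshold; the threshold of 5 and the toy matrix are assumptions for illustration, not the paper's detection criterion.

```python
from statistics import median


def massive_dims(q: list[list[float]], ratio: float = 5.0) -> list[int]:
    """Dimensions whose L2 norm across tokens exceeds `ratio` times the median norm.

    q: one row per token, one column per head dimension (e.g. queries of one head).
    """
    num_dims = len(q[0])
    norms = [sum(row[d] ** 2 for row in q) ** 0.5 for d in range(num_dims)]
    threshold = ratio * median(norms)
    return [d for d, n in enumerate(norms) if n > threshold]


if __name__ == "__main__":
    # Toy queries for 3 tokens: dimension 1 is consistently huge.
    q = [
        [0.1, 9.0, 0.2, 0.1],
        [0.2, 8.5, 0.1, 0.3],
        [0.1, 9.2, 0.2, 0.2],
    ]
    print(massive_dims(q))
```

Dimensions flagged this way are the ones a quantization scheme would need to preserve carefully, given the finding that disrupting them harms contextual understanding.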

C3 Benchmark: A Bilingual Chinese-English Speech Dialogue Model Test Benchmark : Peking University and Tencent jointly released C3 Benchmark, the first comprehensive bilingual Chinese-English benchmark for spoken dialogue models. It examines complex phenomena such as pauses, polyphonic characters, homophones, stress, syntactic ambiguity, and polysemy, and includes 1,079 real-world scenarios and 1,586 audio-text pairs. It targets critical weaknesses of current speech dialogue models to help them better understand everyday human conversation. (Source: WeChat)

Chemma: A Large Language Model for Organic Chemistry Synthesis : Shanghai Jiao Tong University’s AI for Science team released the Magnolia Chemical Synthesis Large Model (Chemma), which it describes as the first chemistry large language model to accelerate the entire organic synthesis workflow. Without relying on quantum chemistry calculations, Chemma draws solely on its understanding of and reasoning about chemical knowledge, and it surpasses previous best results on tasks such as single-step and multi-step retrosynthesis, yield and selectivity prediction, and reaction optimization. Its “Co-Chemist” human-AI collaborative active learning framework has been validated in real reactions, offering a new paradigm for chemical discovery. (Source: WeChat)

Intern-Robotics: Shanghai AI Lab’s Embodied Full-Stack Engine : Shanghai AI Lab has released Intern-Robotics, an embodied full-stack engine, aiming to drive the “ChatGPT moment” in the field of embodied AI. This engine is an open and shared infrastructure focused on achieving embodiment generalization, scene generalization, and task generalization, emphasizing a task success rate approaching 100%. The team is committed to solving data scarcity issues and gradually achieving zero-shot generalization through the “Real to Sim to Real” technical route and real-world reinforcement learning, accelerating the practical application of embodied AI. (Source: WeChat)

SQLM: A Self-Questioning and Answering Framework for Evolving AI Reasoning Capabilities : A Carnegie Mellon University team proposed SQLM, a self-questioning framework that enhances AI reasoning capabilities through self-Q&A without external data. The framework includes two roles: a proposer and a solver, both trained via reinforcement learning to maximize expected rewards. SQLM significantly improved model accuracy on arithmetic, algebra, and programming tasks, providing a scalable and self-sustaining process for large language models to enhance capabilities in the absence of high-quality human-annotated data. (Source: WeChat)
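The proposer/solver interplay can be made concrete with rewards computed from the solver's own samples. This sketch assumes a majority-vote scheme for problems without ground-truth answers: the solver is rewarded for agreeing with the majority answer, and the proposer is rewarded only when a problem is neither trivially easy (every sample agrees) nor hopeless (no two samples agree). It is an illustrative reward design in the spirit of SQLM, not the authors' exact formulation.

```python
from collections import Counter


def majority_answer(answers: list[str]) -> tuple[str, int]:
    """Most common answer and how many samples produced it."""
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count


def solver_rewards(answers: list[str]) -> list[float]:
    """1.0 for each sample matching the majority answer (used as a pseudo-label), else 0.0."""
    label, _ = majority_answer(answers)
    return [1.0 if a == label else 0.0 for a in answers]


def proposer_reward(answers: list[str]) -> float:
    """Reward problems of intermediate difficulty for the current solver.

    All samples agree -> too easy; no two samples agree -> too hard or ill-posed.
    """
    _, count = majority_answer(answers)
    return 1.0 if 1 < count < len(answers) else 0.0


if __name__ == "__main__":
    samples = ["4", "4", "5", "4"]  # solver answers to one proposed problem
    print(solver_rewards(samples), proposer_reward(samples))
```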

CompassVerifier: AI Answer Verification Model and Evaluation Dataset : Shanghai AI Lab and the University of Macau jointly released CompassVerifier, a general answer verification model, and VerifierBench, an evaluation dataset, to address the gap between rapidly advancing model training capabilities and lagging answer-verification capabilities. CompassVerifier is a lightweight yet powerful multi-domain general verifier built on Qwen-series models. It achieves higher verification accuracy than general-purpose large models in mathematics, knowledge, scientific reasoning, and other fields, and can serve as a reward model in reinforcement learning, providing precise feedback for iterative LLM optimization. (Source: WeChat)

CoAct-1: Coding as Action for Computer-Use Agents : Researchers proposed CoAct-1, a multi-Agent system that treats coding as a form of action, aiming to improve the efficiency and reliability of GUI-operating Agents on complex tasks. Its Orchestrator dynamically delegates subtasks either to a GUI Operator or to a Programmer Agent that can write and execute Python/Bash scripts, bypassing inefficient GUI operations. The method achieved SOTA success rates on the OSWorld benchmark while significantly improving efficiency, offering a more powerful path to general computer automation. (Source: HuggingFace Daily Papers)

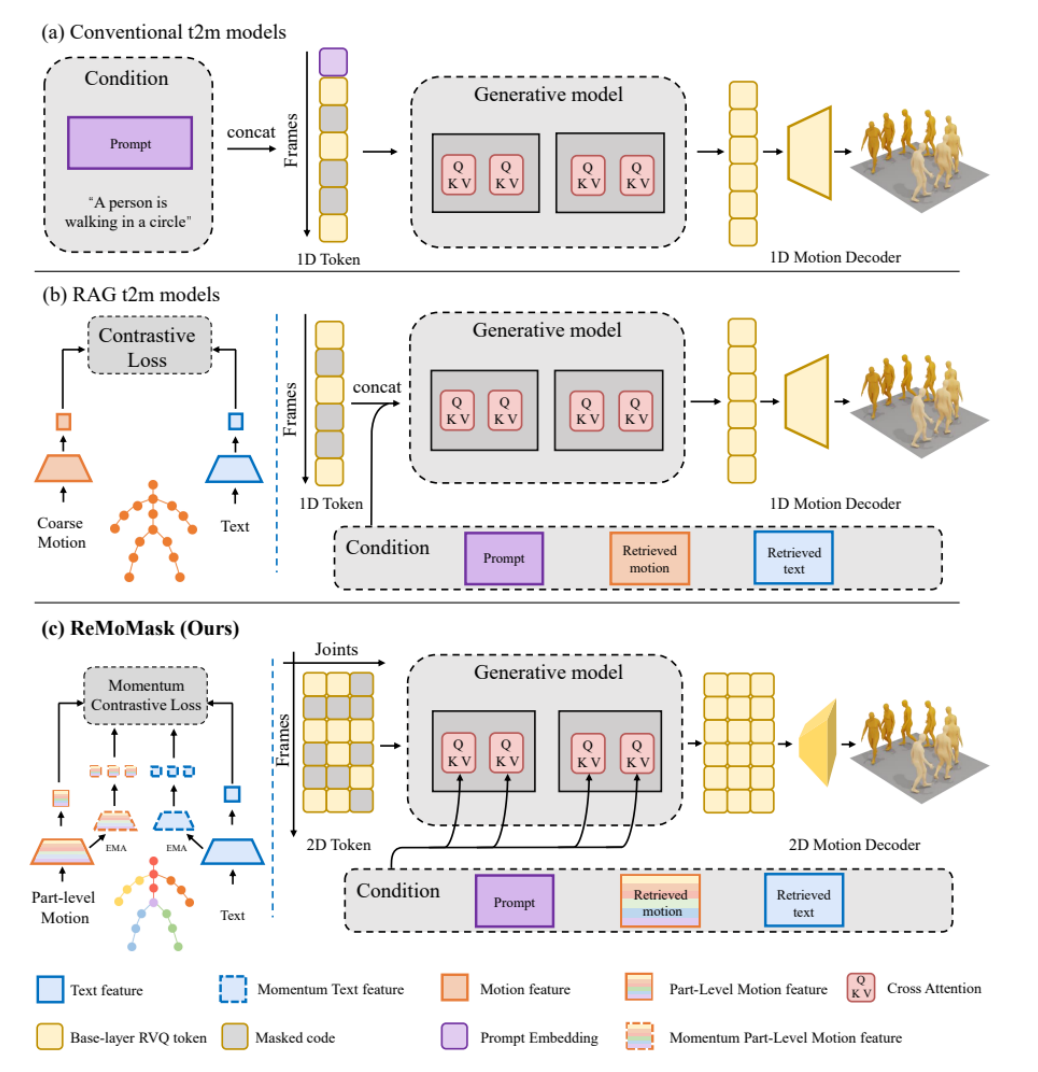

ReMoMask: A New Method for High-Quality 3D Motion Generation in Games : Peking University proposed ReMoMask, a retrieval-augmented generative Text-to-Motion framework designed to generate high-quality, fluid, and realistic 3D motions from a single instruction. ReMoMask integrates a momentum bidirectional text-to-motion model, semantic spatio-temporal attention mechanism, and RAG-classifier-free guidance, efficiently generating temporally coherent motions. This method has set new SOTA performance records on standard benchmarks like HumanML3D and KIT-ML, with the potential to revolutionize game and animation production workflows. (Source: WeChat)

WebAgents Survey: Large Models Empowering Web Automation : Researchers from The Hong Kong Polytechnic University published the first comprehensive survey on WebAgents, thoroughly reviewing the research progress of large models empowering AI Agents for next-generation web automation. The survey summarizes representative WebAgents methods from perspectives such as architecture (perception, planning and reasoning, execution), training (data, policy), and trustworthiness (safety, privacy, generalization), and discusses future research directions, including fairness, explainability, datasets, and personalized WebAgents, providing guidance for building more intelligent and secure web automation systems. (Source: WeChat)

InfiAlign: An Alignment Framework for LLM Reasoning Capabilities : InfiAlign is a scalable, sample-efficient post-training framework that combines SFT and DPO to align LLMs for stronger reasoning. At its core is a robust data selection pipeline that automatically filters high-quality alignment data from open-source reasoning datasets. Applied to the Qwen2.5-Math-7B-Base model, InfiAlign matches the performance of DeepSeek-R1-Distill-Qwen-7B while using only about 12% of the training data, and shows significant gains on mathematical reasoning tasks, offering a practical solution for aligning large reasoning models. (Source: HuggingFace Daily Papers)
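For reference, the DPO stage optimizes the standard DPO objective on preference pairs (a preferred response and a rejected one): the negative log-sigmoid of β times the difference between the policy-to-reference log-ratios of the two responses. The sketch computes that loss for a single pair from summed log-probabilities under the policy and a frozen reference model. The example numbers and β = 0.1 are arbitrary, and InfiAlign's data selection and hyperparameters are not reproduced here.

```python
import math


def softplus(x: float) -> float:
    """log(1 + e^x), computed without overflow for large |x|."""
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def dpo_loss(policy_logp_chosen: float, policy_logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair: -log sigmoid(beta * margin)."""
    chosen_ratio = policy_logp_chosen - ref_logp_chosen        # log pi/pi_ref for y_w
    rejected_ratio = policy_logp_rejected - ref_logp_rejected  # log pi/pi_ref for y_l
    margin = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


if __name__ == "__main__":
    # Policy identical to the reference: loss is log(2).
    print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
    # Policy shifted toward the chosen response: loss drops below log(2).
    print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
```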

💼 Business

OpenAI Employee Share Sale to Counter Poaching : To counter talent drain, OpenAI has launched a new round of employee share sales that lets staff cash out at a $500 billion valuation, using substantial financial incentives to retain talent. The move is expected to push OpenAI’s valuation to a new high. Meanwhile, ChatGPT’s weekly active users have reached 700 million, paying business users have grown to 5 million, and annual recurring revenue is projected to exceed $20 billion, reflecting strong momentum in OpenAI’s products and commercialization. (Source: 量子位)

AWS Builds the Largest AI Model Aggregation Platform : Amazon Web Services (AWS) announced that OpenAI’s gpt-oss model is now accessible for the first time via Amazon Bedrock and Amazon SageMaker, further enriching its model ecosystem under the “Choice Matters” strategy. AWS now offers over 400 mainstream commercial and open-source large models, aiming to enable enterprises to choose the most suitable model based on performance, cost, and task requirements, rather than pursuing a single “strongest” model, thereby promoting multi-model synergy and efficiency. (Source: 量子位)

Ant Group Invests in Embodied AI Dexterous Hand Company : Ant Group led an angel round worth several hundred million yuan in Lingxin Qiaoshou, an embodied AI company. Lingxin Qiaoshou is described as the only company worldwide to have mass-produced high-degree-of-freedom dexterous hands at the scale of thousands of units, with an 80% market share. Its Linker Hand series offers high degrees of freedom, multi-sensor systems, and cost advantages, and is already deployed in industrial, medical, and other settings. The funding will go toward technology reserves and building data collection facilities, accelerating real-world deployment of dexterous hands. (Source: 量子位)

🌟 Community

GPT-5 User Experience Polarized : User feedback on GPT-5 has been sharply divided since its release. Some users praised its significant improvements in programming and complex reasoning, finding its generated code cleaner and more precise and its long-context handling extremely strong. Others were disappointed by declines in personalization, creative writing, and emotional support, describing the model as “boring” and “soulless”; they also said the model-routing mechanism made the experience inconsistent, and some canceled their subscriptions. (Source: Reddit r/ChatGPT & Reddit r/LocalLLaMA)

AI in Parenting: Applications and Controversies : Working parents are increasingly using AI tools such as ChatGPT as “co-parents” to plan meals, optimize bedtime routines, and even get emotional support, and AI’s non-judgmental space for venting has eased some of their psychological burden. The trend is also controversial: concerns include inaccurate advice, privacy risks (such as the ChatGPT data breach incident), interpersonal isolation caused by over-reliance on AI, and AI’s environmental impact. (Source: 36氪)

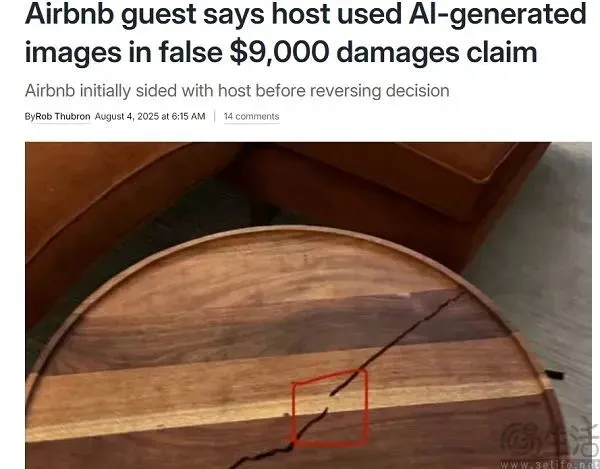

Airbnb Incident: AI-Forged Images Lead to User Compensation : On Airbnb, a host used AI-forged images to claim damages from a guest, highlighting the risks of AI in customer service. The platform’s AI customer service failed to recognize the AI-generated images, and the guest was wrongly ordered to pay compensation. Although OpenAI has previously launched image detectors, AI’s ability to identify AI-generated content remains limited, especially against “partial forgery” techniques. The incident has raised concerns about the reliability of AI content detection tools and about whether C2C platforms can cope with deepfake content. (Source: 36氪)

Silicon Valley AI Moguls’ Doomsday Bunkers Spark Heated Discussion : Silicon Valley AI leaders such as Mark Zuckerberg and Sam Altman are reportedly building or owning doomsday bunkers, fueling public concern about the future and potential risks of AI. They deny that AI is the reason, and the bunkers are generally interpreted as preparation for emergencies such as pandemics, cyber warfare, and climate disasters. Still, community discussions speculate whether the people who understand AI technology best have seen warning signs unknown to the public, and whether AI development has already introduced unforeseen risks. (Source: 量子位)

o3 Wins Kaggle AI Chess Championship : In the final of the inaugural Google Kaggle AI Chess Championship, OpenAI’s o3 swept Elon Musk’s Grok 4 4-0 to take the title. The match was seen as a “proxy war” between OpenAI and xAI and was designed to test large models’ critical thinking, strategic planning, and on-the-fly adaptability. Although Grok 4 had been in strong form earlier, it made frequent errors in the final, while o3 played systematically stable strategies and went undefeated to become the undisputed champion. (Source: WeChat)

Discussion: AI Enters the ‘Trough of Disillusionment’ : Extensive discussions have emerged on social media, suggesting that AI has entered the “trough of disillusionment,” especially after the release of GPT-5. Users point out that AI’s limitations have not been effectively overcome, and the benefits from increased model scale and computing power are diminishing. This perspective suggests that AI’s progress has become “less obvious,” primarily manifesting in expert domains rather than at a level perceptible to average users, indicating that AI development might be entering a plateau phase, requiring entirely new architectural breakthroughs. (Source: Reddit r/ArtificialInteligence)

💡 Other

Docker Warns of MCP Toolchain Security Risks : Docker has warned of severe security vulnerabilities in AI-driven development toolchains built on the Model Context Protocol (MCP), including credential leakage, unauthorized file access, and remote code execution, with real-world incidents already reported. These tools embed LLMs in development environments and grant them autonomous operating permissions, but lack isolation and oversight. Docker recommends running MCP servers from signed containers rather than installing them via npm, and stresses the importance of container isolation and zero-trust networking. (Source: WeChat)

Huawei HarmonyOS App Developer Incentive Program 2025 : Huawei announced that the number of HarmonyOS 5 devices has exceeded 10 million and launched the “HarmonyOS App Developer Incentive Program 2025,” investing hundreds of millions in subsidies, with individual developers eligible for bonuses of up to 6 million. The program aims to accelerate the growth of the HarmonyOS ecosystem by attracting developers to build AI-enabled, multi-device applications that follow the “develop once, deploy everywhere” model. Huawei provides full-stack development support, including technical enablement, rapid testing, fast app listing, and operations, to build a robust developer ecosystem. (Source: WeChat)

Domestic AI Supernode Server YuanNao SD200 Released : Inspur Information has released “YuanNao SD200,” a supernode AI server designed to meet the computing demands of running trillion-parameter large models. The server uses an in-house multi-host, low-latency architecture based on memory-semantic communication that can interconnect 64 domestic GPU chips, providing up to 4TB of unified GPU memory and 64TB of unified system memory, enough to run trillion-parameter models with long sequences. Tests show that SD200 achieves high compute scaling efficiency on models such as DeepSeek R1, providing strong support for AI for Science and industrial applications. (Source: WeChat)
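A quick calculation shows why pooling GPU memory matters for trillion-parameter models. The sketch counts only the weights (no KV cache, activations, or framework overhead), assumes the quoted 4TB means 4 TiB split evenly across the 64 chips, and uses the standard per-parameter sizes of each numeric format.

```python
GIB = 2**30

# Standard storage sizes per parameter for common numeric formats.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weights_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory for model weights alone, in GiB."""
    return num_params * bytes_per_param / GIB


def per_chip_gib(total_gib: float, num_chips: int) -> float:
    """Even split of the aggregate GPU memory across chips."""
    return total_gib / num_chips


if __name__ == "__main__":
    total_gib = 4 * 1024  # assumption: "4TB" means 4 TiB of aggregate GPU memory
    chips = 64
    print(f"per-chip share: {per_chip_gib(total_gib, chips):.0f} GiB")
    for fmt, nbytes in BYTES_PER_PARAM.items():
        need = weights_gib(1e12, nbytes)  # a 1-trillion-parameter model
        print(f"1T params in {fmt}: {need:,.0f} GiB, fits in pool: {need < total_gib}")
```

Under these assumptions, BF16 weights for a 1T-parameter model (about 1,863 GiB) cannot fit on any single 64 GiB chip but fit comfortably in the 4 TiB pool, which is the situation a unified memory architecture is meant to address.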