Keywords: Google DeepMind, Genie 3, World Model, AI Training Environment, Game Development, Embodied AGI, Multi-Agent Systems, Real-Time Interactive 3D Environment Generation, 720p Resolution at 24fps, Solver + Verifier Dual-Agent Collaboration, AI Solutions to IMO Math Competition Problems, Open-Source Multi-Agent IMO System

🔥 Spotlight

Google DeepMind Unveils Genie 3 World Model: Google DeepMind has unveiled Genie 3, a world model that generates interactive 3D environments from text prompts in real time, at 720p resolution and 24fps. The model keeps visual memory and action control consistent over sessions lasting several minutes, and has been hailed as the future “game engine 2.0.” It is expected to reshape AI training environments and game development, providing a crucial missing piece for embodied AGI. (Source: Google DeepMind)

Ant Group’s Multi-Agent System Replicates IMO Gold Medal Results and Is Open-Sourced: Ant Group’s AWorld project team replicated DeepMind’s solution results for 5 out of 6 problems in the IMO 2025 mathematics competition in just 6 hours and open-sourced their multi-agent IMO system. Through the collaboration of “solver + verifier” dual agents, the system shows potential to exceed the intelligence ceiling of a single model. It is also being used to train next-generation models and is expected to advance the development of Artificial General Intelligence (AGI). (Source: QbitAI)
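The “solver + verifier” pattern can be illustrated with a toy loop. This is a minimal sketch of the general idea only, not AWorld’s actual implementation: the function names (`solve_with_verifier`, `toy_solver`, `toy_verifier`) and the toy problem are hypothetical, and a real system would put model calls behind both roles.

```python
from typing import Callable, Optional


def solve_with_verifier(
    propose: Callable[[int], int],
    verify: Callable[[int], bool],
    max_rounds: int = 10,
) -> Optional[int]:
    """Ask the solver for candidates until the verifier accepts one."""
    for round_index in range(max_rounds):
        candidate = propose(round_index)  # solver role: draft an answer
        if verify(candidate):             # verifier role: check it independently
            return candidate
    return None  # no candidate was accepted within the round budget


# Toy problem (hypothetical): find a positive integer n with n * n == 144.
def toy_solver(round_index: int) -> int:
    return 10 + round_index  # guesses 10, 11, 12, ...


def toy_verifier(candidate: int) -> bool:
    return candidate * candidate == 144


print(solve_with_verifier(toy_solver, toy_verifier))  # prints 12
```

The point of the split is that the verifier only has to check a candidate, which is often easier than producing one, so an imperfect solver can still yield accepted answers.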

AI Discovers New Physical Laws: Researchers at Emory University trained AI to discover new physical laws from experimental data of dusty plasma, revealing previously unknown forces. This research indicates that AI can not only predict outcomes or clean data but also be used to discover fundamental physical laws, correcting long-standing assumptions in plasma physics and opening new avenues for studying complex multi-particle systems. (Source: interestingengineering)

🎯 Trends

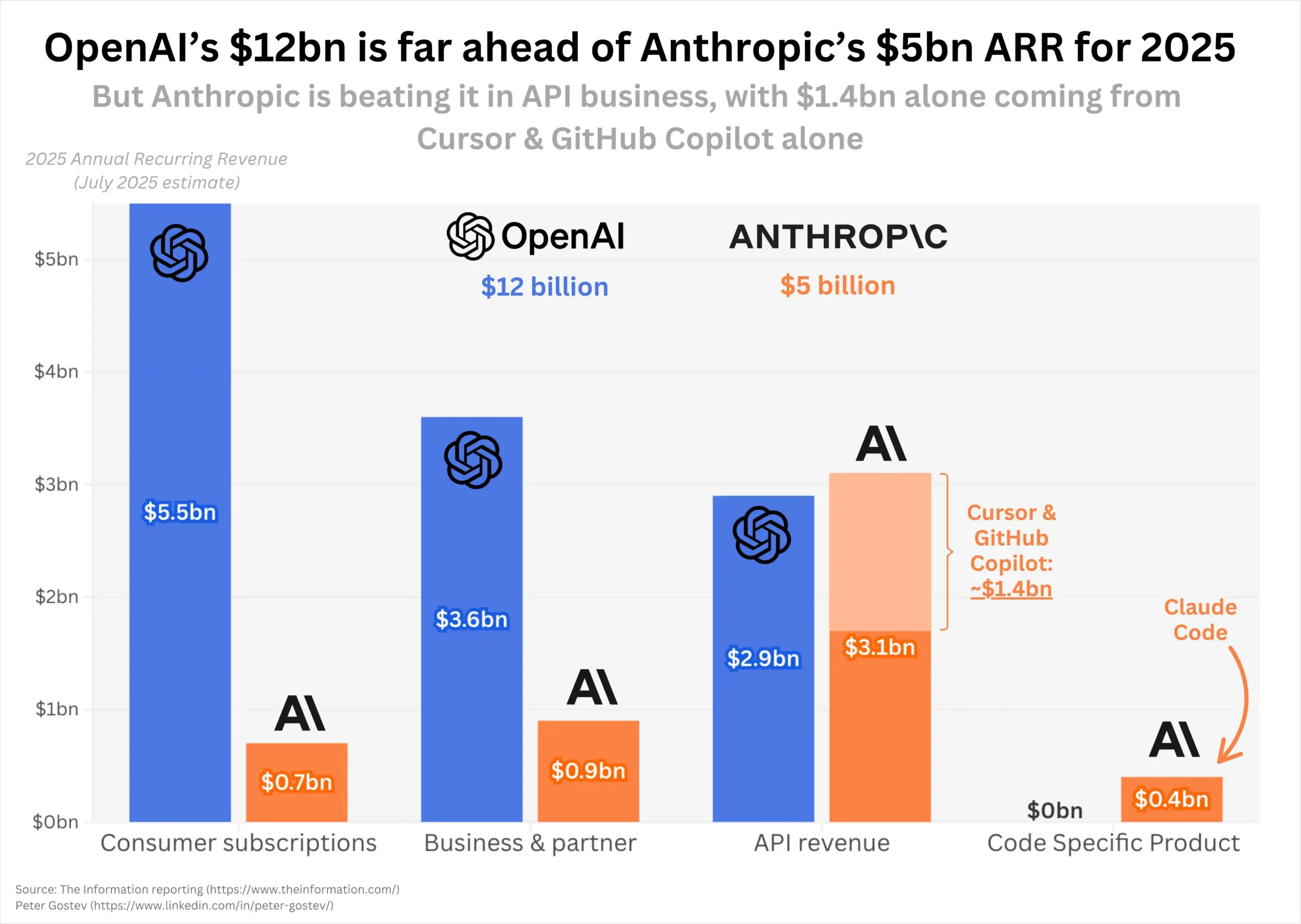

OpenAI and Anthropic Show Rapid Revenue Growth, Market Landscape Under Scrutiny: In 2025, OpenAI and Anthropic showed astonishing revenue growth momentum, with OpenAI’s annualized recurring revenue doubling to $12 billion and Anthropic growing fivefold to $5 billion. Anthropic performed strongly in the programming API market, while ChatGPT’s user base also continued rapid growth. The market is watching whether the future launch of GPT-5 will change the current market landscape, especially Anthropic’s dominant position in the programming sector. (Source: dotey, nickaturley, xikun_zhang_)

Kaggle Launches AI Chess Competition Platform: Kaggle announced the launch of Game Arena, an open-source competitive platform designed to objectively evaluate the performance of cutting-edge AI models through head-to-head matches (currently primarily chess). The inaugural AI Chess Championship has begun, with chess masters invited to provide commentary, sparking community interest in the performance of models like Kimi K2. (Source: algo_diver, teortaxesTex, sirbayes, Reddit r/LocalLLaMA)

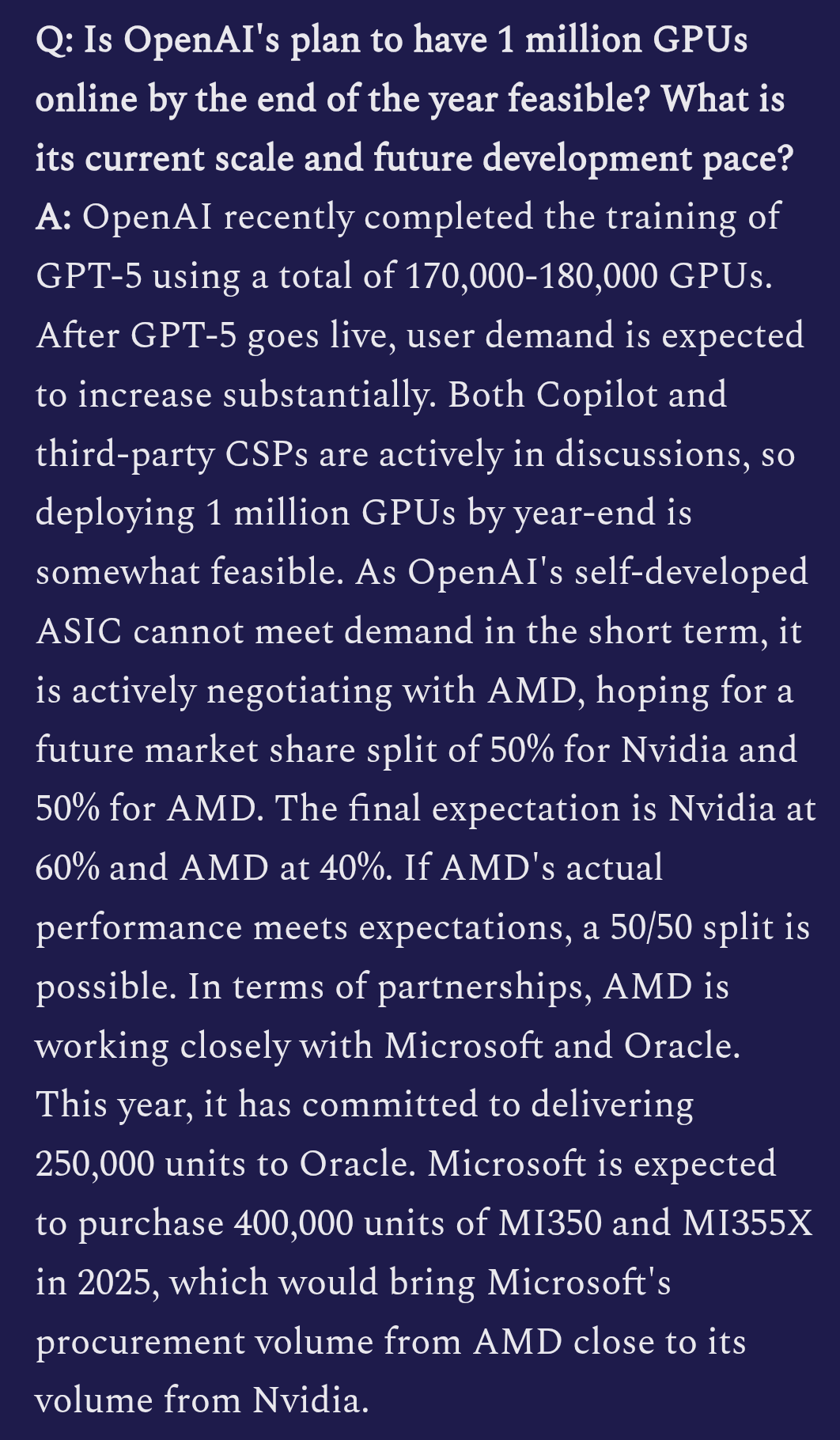

OpenAI GPT-5 Training Details Revealed: Reports indicate that OpenAI is training GPT-5 using 170,000 to 180,000 H100 GPUs. The model’s multimodal capabilities are reportedly significantly enhanced and may include video input. OpenAI is also said to be planning a “Ghibli moment” for the launch, hinting at its ambitions in creative content generation. (Source: teortaxesTex)

GLM 4.5 Enters LM Arena Top Five: Zai.org’s GLM 4.5 model performed exceptionally well in the LM Arena community vote, receiving over 4,000 votes and successfully entering the top five on the overall leaderboard. It ranks alongside DeepSeek-R1 and Kimi-K2 as top open-source models, demonstrating its competitiveness in the large language model domain. (Source: teortaxesTex, NandoDF)

Yunpeng Technology Launches AI+Health New Products: Yunpeng Technology, in collaboration with Shuaikang and Skyworth, launched a smart refrigerator equipped with an AI health large model and a “Digital Intelligence Future Kitchen Lab.” The AI health large model provides personalized health management through “Health Assistant Xiaoyun,” optimizing kitchen design and operation. This marks the deep application of AI in daily health management and home technology, expected to improve residents’ quality of life. (Source: 36Kr)

New Framework for AI System Security Released: MITSloan proposed a new framework aimed at helping enterprises build more secure AI systems. This framework focuses on AI and machine learning security practices, providing important security guidance for increasingly complex AI applications. (Source: Ronald_vanLoon)

Progress in AI Applications in Cybersecurity: The Cyber-Zero framework enables training cybersecurity LLM agents without a runtime environment, generating high-quality trajectories by reverse engineering CTF solution reports. Its trained Cyber-Zero-32B model achieved SOTA performance in CTF benchmarks with better cost-effectiveness than proprietary systems. Meanwhile, Corridor Secure is building an AI-native product security platform, aiming to integrate AI into software development security. (Source: HuggingFace Daily Papers, saranormous)

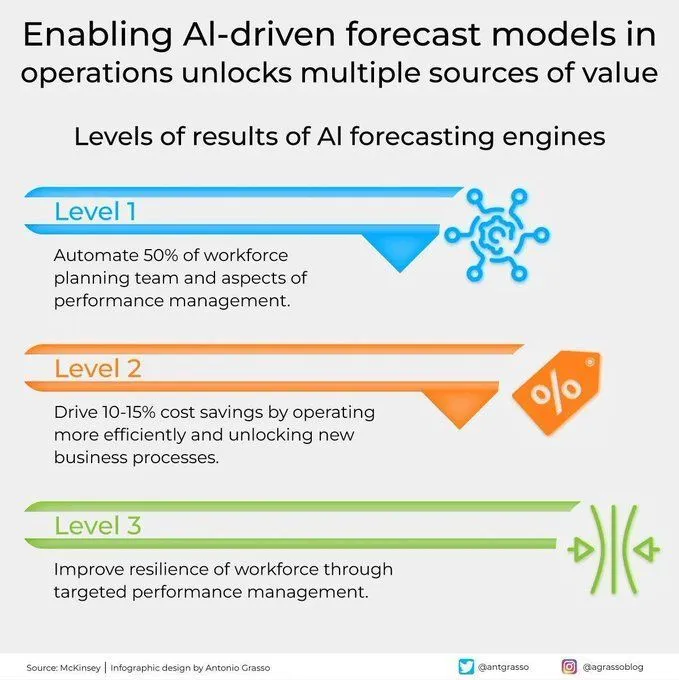

AI-Driven Predictive Models Unleash Value in Operations: AI-driven predictive models are demonstrating immense value in operations by providing more accurate prediction capabilities, unlocking multiple sources of value, driving digital transformation, and enhancing the role of machine learning in business decisions. (Source: Ronald_vanLoon)

World’s First Highway Construction Project Completed by Autonomous AI Machinery: A 158-kilometer highway construction project, described as the first of its kind in the world, was completed entirely by autonomous AI machinery supported by a 5G network. This marks a significant breakthrough for AI, RPA, and emerging technologies in infrastructure construction, heralding highly automated engineering projects in the future. (Source: Ronald_vanLoon)

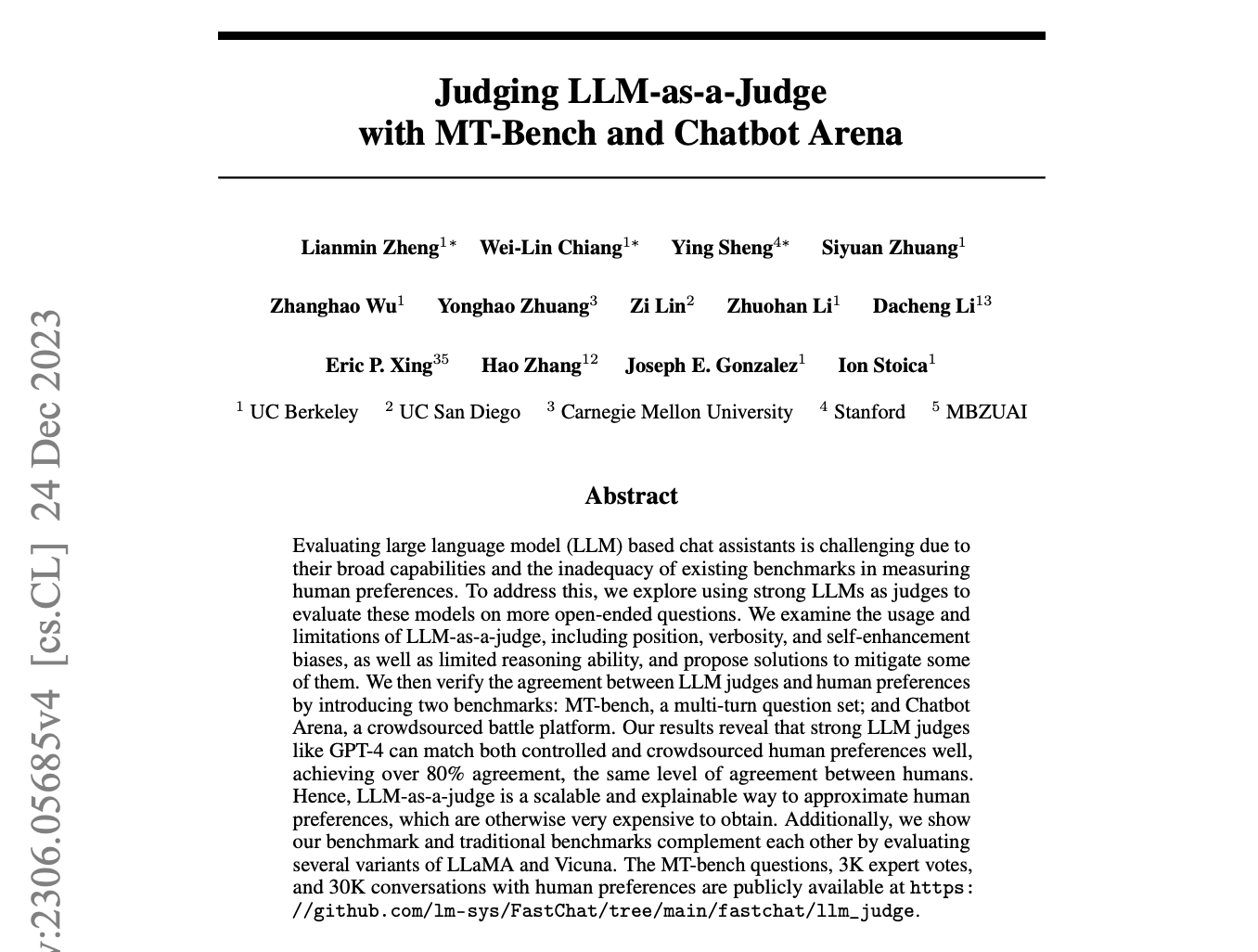

LLM as Judge/Universal Validator Sparks Discussion: Social media is buzzing about OpenAI’s potential “universal validator.” Some question whether it is essentially still the “LLM as a judge” concept, while others anticipate that GPT-5 will use this technology to bring hallucinations close to zero, delivering unprecedented accuracy and reliability. (Source: Teknium1, Dorialexander, Vtrivedy10)

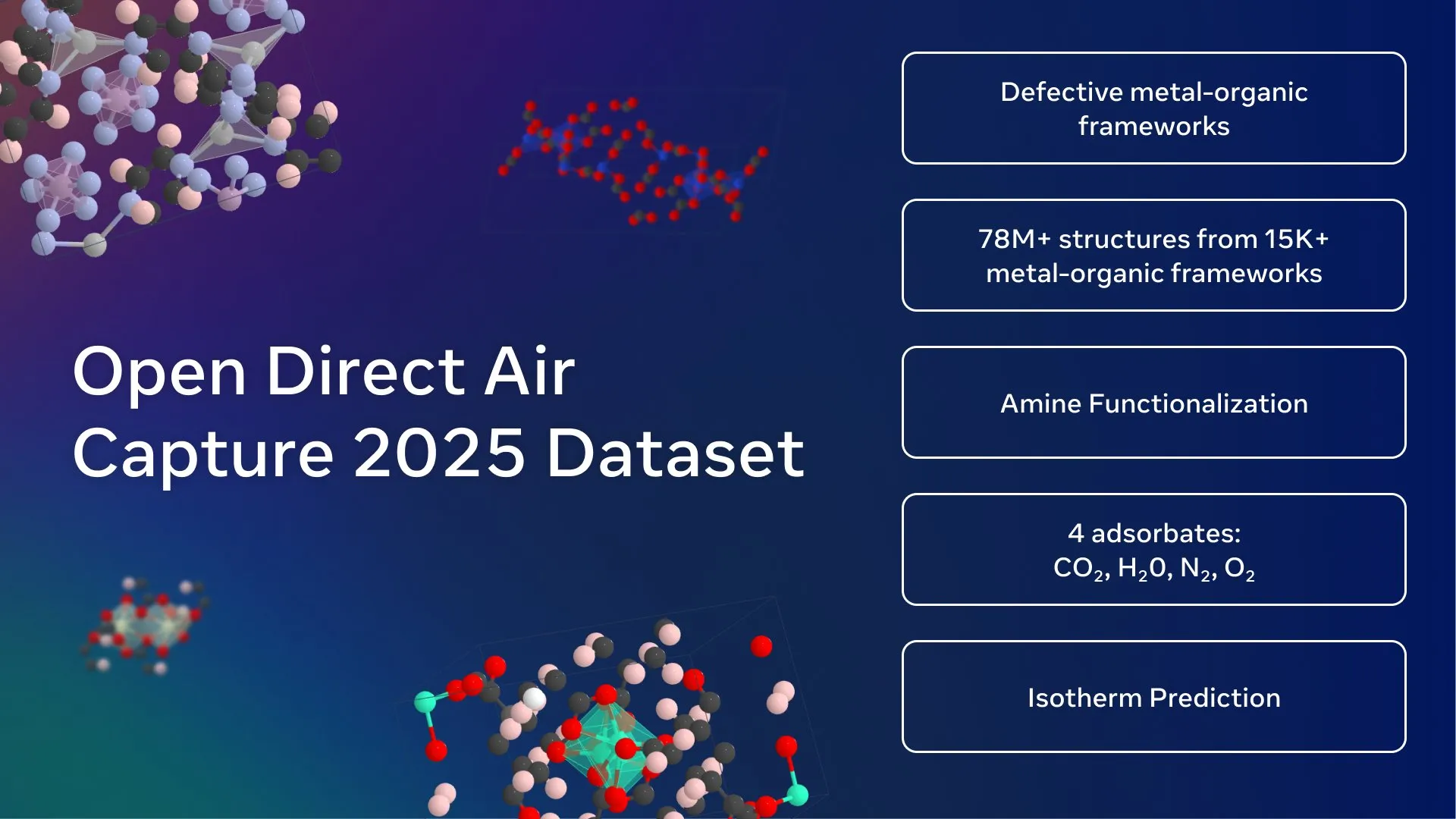

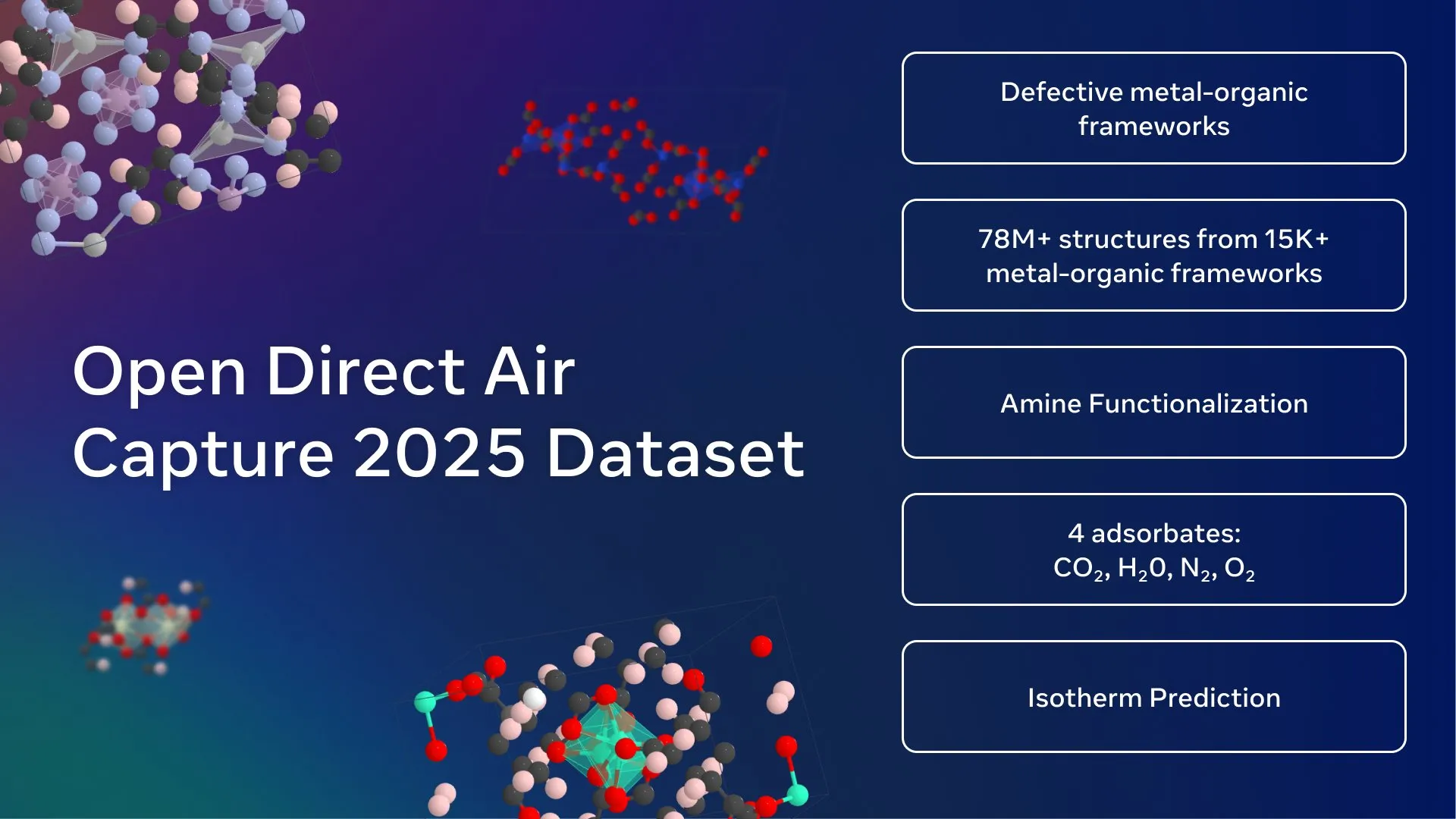

Meta AI Releases Largest Open Carbon Capture Dataset: Meta FAIR, Georgia Institute of Technology, and cusp_ai jointly released the Open Direct Air Capture 2025 dataset, the largest open dataset for discovering advanced materials for direct carbon capture. This dataset aims to leverage AI to accelerate climate solutions and advance environmental material science. (Source: ylecun)

🧰 Tools

Qwen-Image Open-Source Model Released: Alibaba released Qwen-Image, a 20B MMDiT text-to-image generation model, now open-source (Apache 2.0 license). The model excels in text rendering, especially adept at generating graphic posters with native text, supporting bilingual text, multiple fonts, and complex layouts. It can also generate images in various styles, from realistic to anime, and can run locally on low-VRAM devices through quantization, having been integrated into ComfyUI. (Source: teortaxesTex, huggingface, NandoDF, Reddit r/LocalLLaMA)

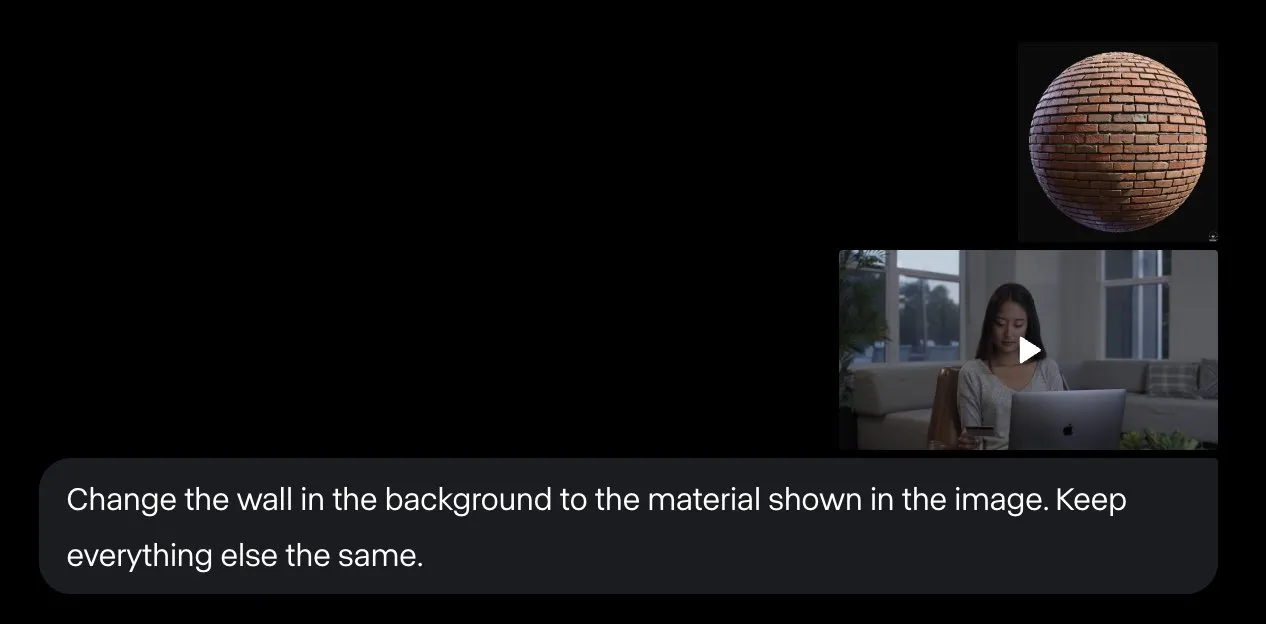

Runway Aleph Video Editing Capabilities Enhanced: Runway Aleph, as a video editing tool, can now precisely control specific parts of a video, including manipulating environments, atmosphere, and directional light sources, and can even replace Blender’s rendering pipeline. This advancement greatly enhances the flexibility and efficiency of video production, providing creators with more powerful tools. (Source: op7418, c_valenzuelab)

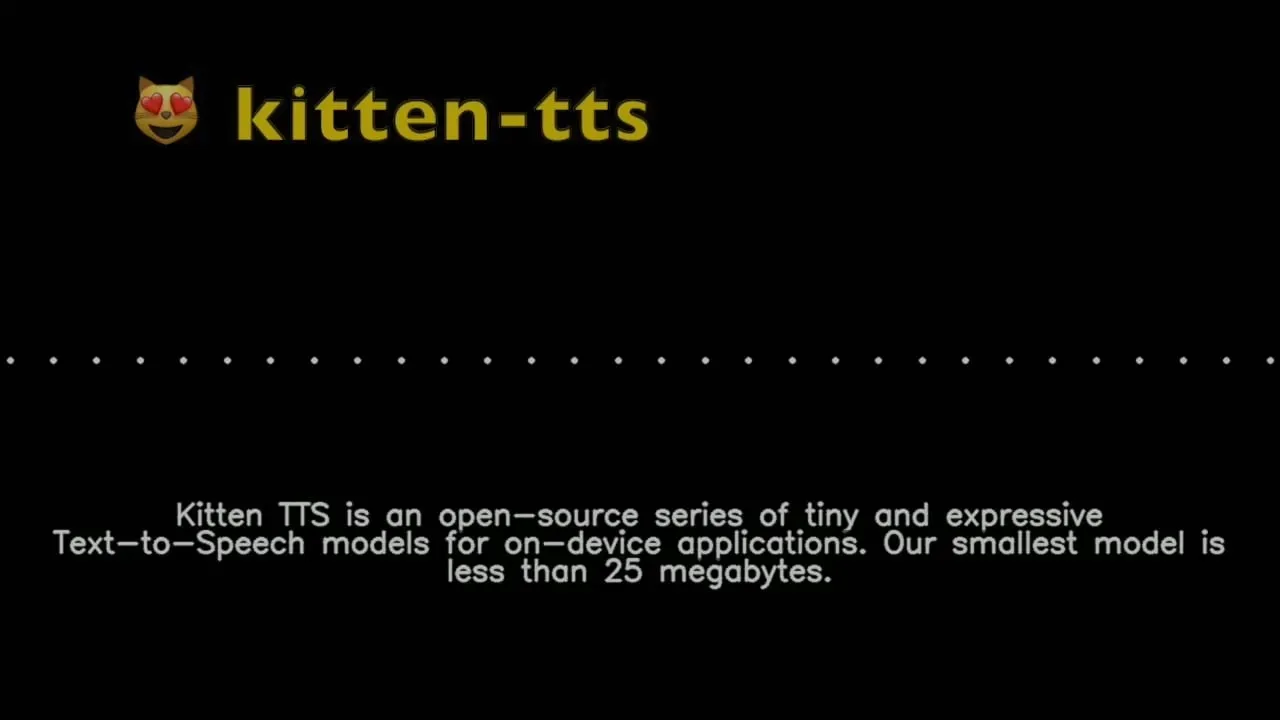

Kitten TTS: Ultra-Small Text-to-Speech Model: Kitten ML released a preview of the Kitten TTS model, a SOTA ultra-small text-to-speech model, less than 25MB in size (approx. 15M parameters), offering eight expressive English voices. The model can run on low-compute devices like Raspberry Pi and mobile phones, with plans to support multi-language and CPU operation in the future, providing a solution for speech synthesis in resource-constrained environments. (Source: Reddit r/LocalLLaMA)

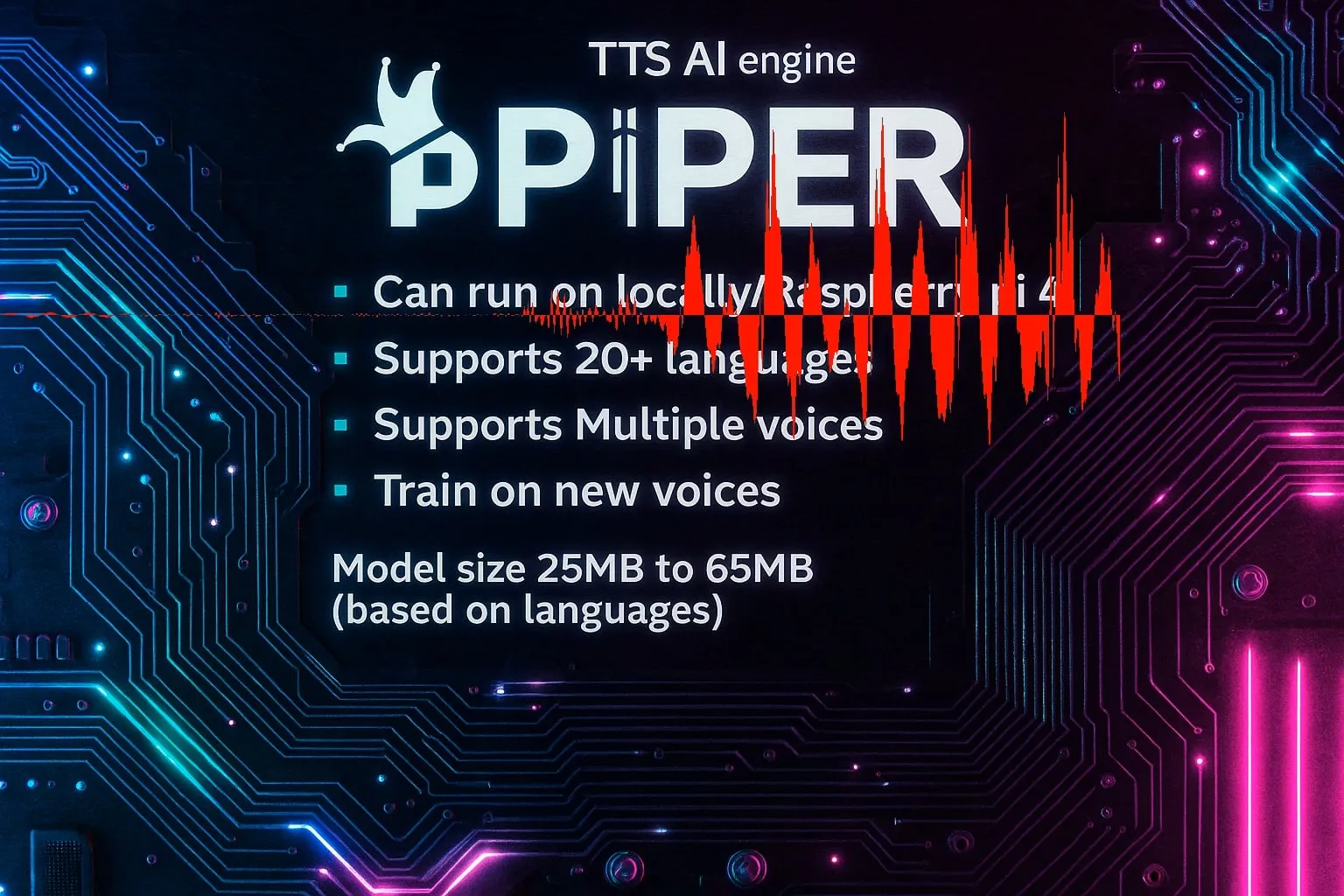

Piper TTS: Fast Local Open-Source Text-to-Speech Engine: Piper is a fast, locally running open-source text-to-speech engine, supporting over 20 languages and multiple voices, with model sizes ranging from 25MB to 65MB, and support for training new voices. Its main advantage is its usability in C/C++ embedded applications, providing efficient speech synthesis capabilities for various platforms. (Source: Reddit r/LocalLLaMA)

Claude Code Sub-Agent Collection Released: VoltAgent released a collection of production-ready Claude Code sub-agents, comprising over 100 specialized agents covering development tasks such as frontend, backend, DevOps, AI/ML, code review, and debugging. These sub-agents adhere to best practices and are maintained by the open-source framework community, aiming to enhance the efficiency and quality of development workflows. (Source: Reddit r/ClaudeAI)

Vibe: Offline Audio/Video Transcription Tool: Vibe is an open-source offline audio/video transcription tool leveraging OpenAI Whisper technology, supporting transcription for nearly all languages. It offers a user-friendly design, real-time preview, batch transcription, AI summarization, Ollama local analysis, and supports multiple export formats, while being optimized for GPUs and ensuring user privacy. (Source: GitHub Trending)

DevBrand Studio: AI-Powered Developer Branding Tool: DevBrand Studio is an AI tool designed to help developers easily build professional GitHub profiles. It can automatically generate concise personal bios, add personal/work projects and their impact, and provide shareable links, addressing developers’ pain points in self-promotion, especially suitable for job seekers and freelancers. (Source: Reddit r/MachineLearning)

LLaMA.cpp MoE Offloading Optimization: LLaMA.cpp added the --n-cpu-moe option, greatly simplifying the layered offloading process for MoE models. Users can easily adjust the number of MoE layers running on the CPU, thereby optimizing performance and memory usage of large models on GPUs and CPUs, especially suitable for models like GLM4.5-Air. (Source: Reddit r/LocalLLaMA)
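As a rough illustration of how the option is passed, the sketch below assembles a `llama-server` command line in Python. The helper name `build_llama_server_args`, the model path, and the layer counts are hypothetical placeholders. The `-m`, `-ngl`, and `--n-cpu-moe` flags are assumed to match your llama.cpp build, so it is worth confirming them with `llama-server --help` first.

```python
from typing import List


def build_llama_server_args(
    model_path: str, gpu_layers: int, cpu_moe_layers: int
) -> List[str]:
    """Build an argument list that keeps some MoE layers' experts on the CPU."""
    if cpu_moe_layers < 0:
        raise ValueError("cpu_moe_layers must be non-negative")
    return [
        "llama-server",
        "-m", model_path,                    # path to a GGUF model (placeholder)
        "-ngl", str(gpu_layers),             # layers to offload to the GPU
        "--n-cpu-moe", str(cpu_moe_layers),  # MoE layers kept on the CPU
    ]


# Hypothetical values: tune cpu_moe_layers until the model fits in VRAM.
args = build_llama_server_args("models/example-moe.gguf", 99, 20)
print(" ".join(args))
```

The resulting list can be handed to `subprocess.run(args)`. Raising the CPU MoE layer count trades speed for lower VRAM use, so a common approach is to start high and lower it until memory runs out.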

ReaGAN: Graph Learning Framework Combining Agentic Capabilities and Retrieval: Retrieval-augmented Graph Agentic Network (ReaGAN) is an innovative graph learning framework that combines agentic capabilities with retrieval. In this framework, nodes are designed as agents capable of planning, acting, and reasoning, offering AI developers new ideas for integrating complex agent functionalities with graph learning. (Source: omarsar0)

OpenArm: Open-Source Humanoid Robotic Arm: Enactic AI released OpenArm, an open-source humanoid robotic arm designed for physical AI applications in contact-rich environments. This project aims to promote the development of robotics and AI in real-world interactions, providing a flexible hardware platform for researchers and developers. (Source: Ronald_vanLoon)

Kling ELEMENTS: Hollywood-Level AI Video Generation: Kling’s ELEMENTS technology is dedicated to generating Hollywood-level realistic AI videos, characterized by flawless faces, dynamic clothing, and no glitches. The short film “Loading,” made with it, has garnered 197 million global views and four major industry awards, showcasing AI’s potential in video content creation. (Source: Kling_ai)

Hugging Face Text Embeddings Inference (TEI) v1.8.0 Released: Hugging Face released Text Embeddings Inference (TEI) v1.8.0, bringing several new features and improvements, including support for the latest models. This update aims to enhance the efficiency and performance of text embedding inference, providing developers with more powerful tools. (Source: narsilou)

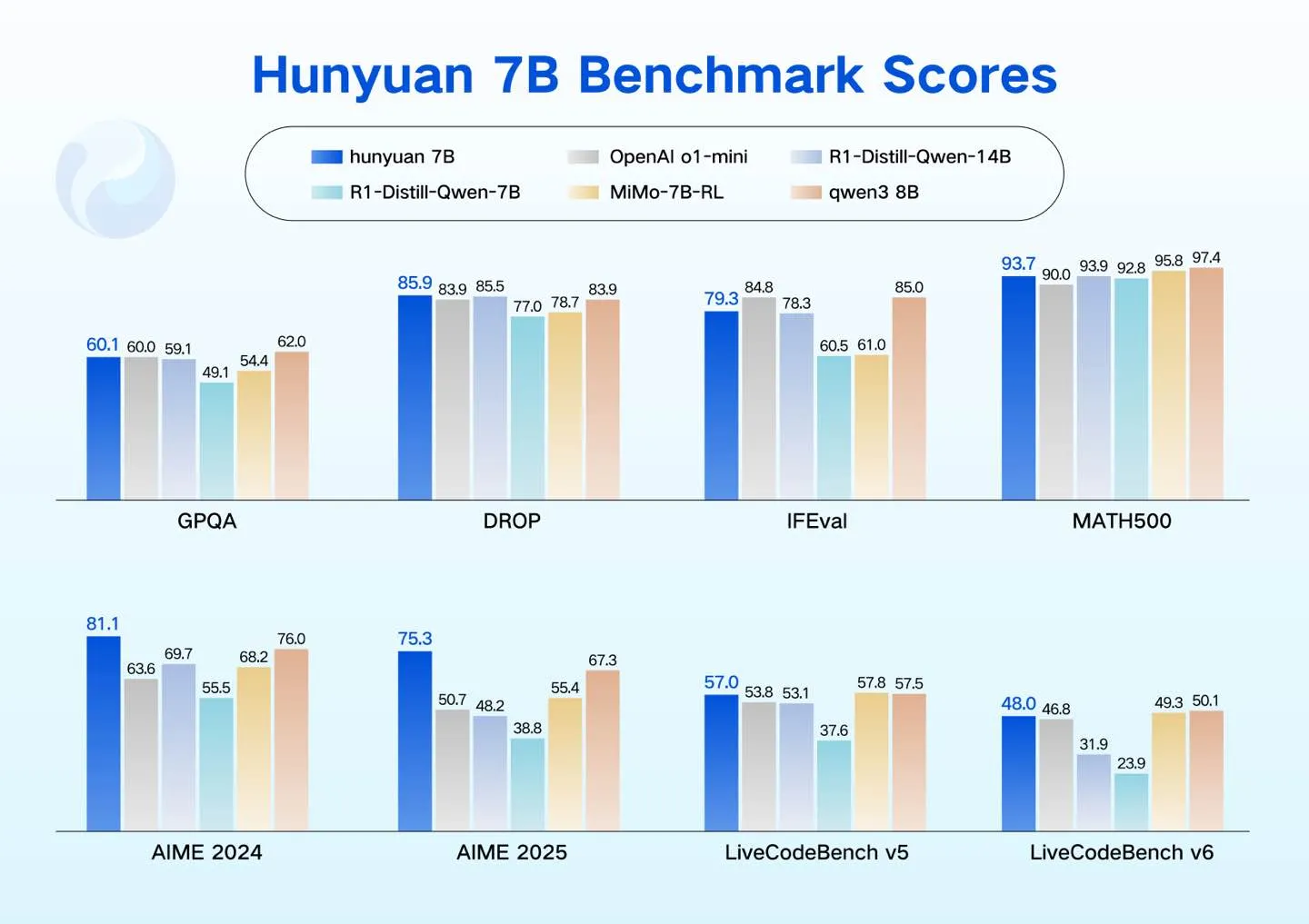

Tencent Hunyuan Releases Compact LLM Models: Tencent Hunyuan released four compact LLM models (0.5B, 1.8B, 4B, 7B) designed to support low-power scenarios such as consumer GPUs, smart cars, smart home devices, mobile phones, and PCs. These models support cost-effective fine-tuning, expanding the Hunyuan open-source LLM ecosystem. (Source: awnihannun)

AI Video Generation Tool Topviewofficial: Topviewofficial launched an AI video generation tool, claiming to be able to create viral videos in minutes. The tool aims to simplify the content creation process, empowering users to quickly produce creative videos using generative AI technology. (Source: Ronald_vanLoon)

Comet AI Browser Improves Efficiency: Users praise the Comet browser as a paradigm for AI browsing, reporting a memory footprint close to one-third of Chrome’s and more efficient operation with the same number of tabs open. Users state that Comet has become their default browser because it demonstrates how an AI browser should operate, and they consider it an IDE for non-developers. (Source: AravSrinivas)

📚 Learning

New Turing Institute GStar Bootcamp: New Turing Institute launched the GStar bootcamp, a 12-week global talent program designed to cultivate participants’ skills in cutting-edge LLM technologies, research, and leadership. The program is designed by top AI experts and features guidance from renowned scholars. (Source: YiTayML)

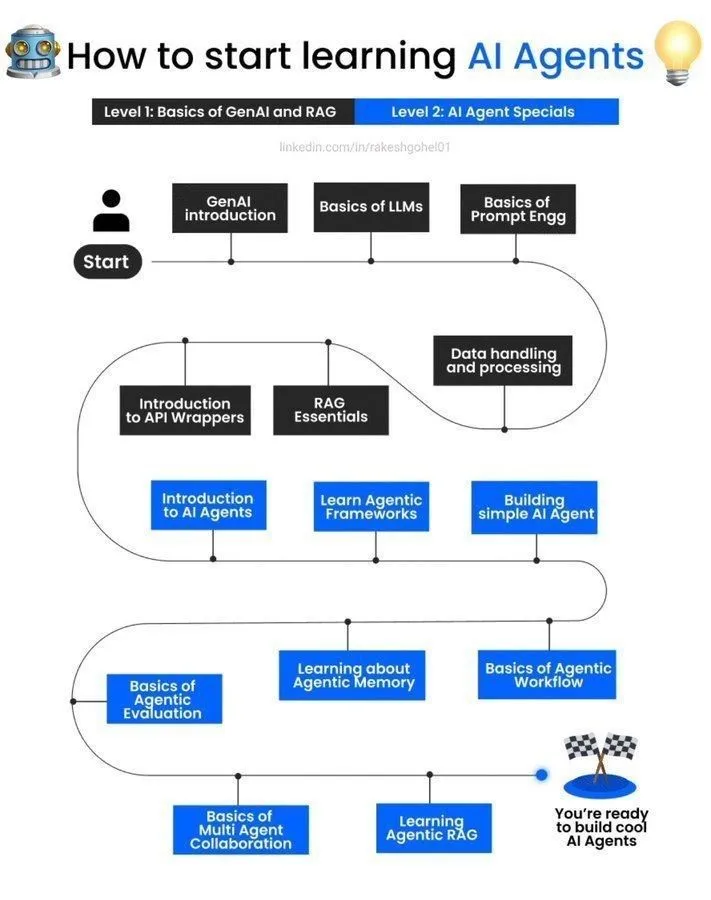

AI Agent Learning Guide: A guide on how to start learning AI agents was shared on social media, providing introductory resources and learning paths for beginners interested in AI agents, helping them understand and practice AI agent development. (Source: Ronald_vanLoon)

Advice on PhD Research Areas in Machine Learning/Deep Neural Networks: For master’s students aspiring to pursue theoretical/fundamental research in AI research labs, the community offered advice on PhD research areas in the theoretical foundations of machine learning/deep neural networks, including statistical learning theory and optimization, and discussed popular techniques and mathematical frameworks. (Source: Reddit r/deeplearning, Reddit r/MachineLearning)

Denis Rothman AMA Event Announcement: The Reddit community announced an AMA (Ask Me Anything) event with Denis Rothman, an AI leader and system builder, providing learners and practitioners with an opportunity to interact with experts and gain experience. (Source: Reddit r/deeplearning)

Computer Vision Course Resource Request: A user on the Reddit community sought help and resources for assignments in the University of Michigan’s “Deep Learning and Computer Vision” course, indicating a need for relevant learning materials and community support. (Source: Reddit r/deeplearning)

Seeking MIMIC-IV Dataset Access: An independent researcher on the Reddit community sought access references for the MIMIC-IV dataset for their non-commercial machine learning and NLP project, aiming to explore the application of clinical notes in identifying and predicting preventable medical errors. (Source: Reddit r/MachineLearning)

Deep Learning Book Selection Discussion: The community discussed the complementarity of Goodfellow’s “Deep Learning” and Kevin Murphy’s “Probabilistic Machine Learning” series of books, suggesting readers can choose based on different learning methods and styles to gain a more comprehensive knowledge system. (Source: Reddit r/MachineLearning)

DSPy Framework Application in LLM Pipeline Construction: The DSPy framework shows potential for building composable LLM pipelines and graph database integrations. The discussion highlights three ingredients as necessary for precisely defining and automating AI systems: clear natural language instructions, downstream data/evaluation/reinforcement learning, and structure/scaffolding. (Source: lateinteraction)

AI Research Progress: Multimodal Models and Embodied Agents: Recent AI research has made progress in multimodal model expansion (VeOmni framework for efficient 3D parallelism), lifelong learning for embodied systems (RoboMemory, a brain-inspired multi-memory agent framework), and context-aware dense retrieval (SitEmb-v1.5 model improving long-document RAG performance), aiming to address AI efficiency and capability issues in complex scenarios. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers)

AI Research Progress: Agent Strategies and Model Optimization: Latest research explores compute-optimal test-time scaling strategies for LLM agents (AgentTTS), leveraging goal achievement for exploratory behavior in meta-reinforcement learning, and improving the instruction-following capabilities of reasoning models through self-supervised reinforcement learning. Other work covers dynamic visual token pruning in large vision-language models and retrieval-augmented masked motion generation (ReMoMask). (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers)

AI Research Progress: Language Models, Quantum Computing, and Art: New research covers benchmarking speech foundation models in dialect modeling (Voxlect), the application of quantum-classical SVM combining Vision Transformer embeddings in quantum machine learning, and the limitations of AI in artwork attribution and AI-generated image detection. Additionally, it proposes uncertainty methods for automated process reward data construction in mathematical reasoning and explores multimodal fusion of satellite imagery and LLM text in poverty mapping. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers)

💼 Business

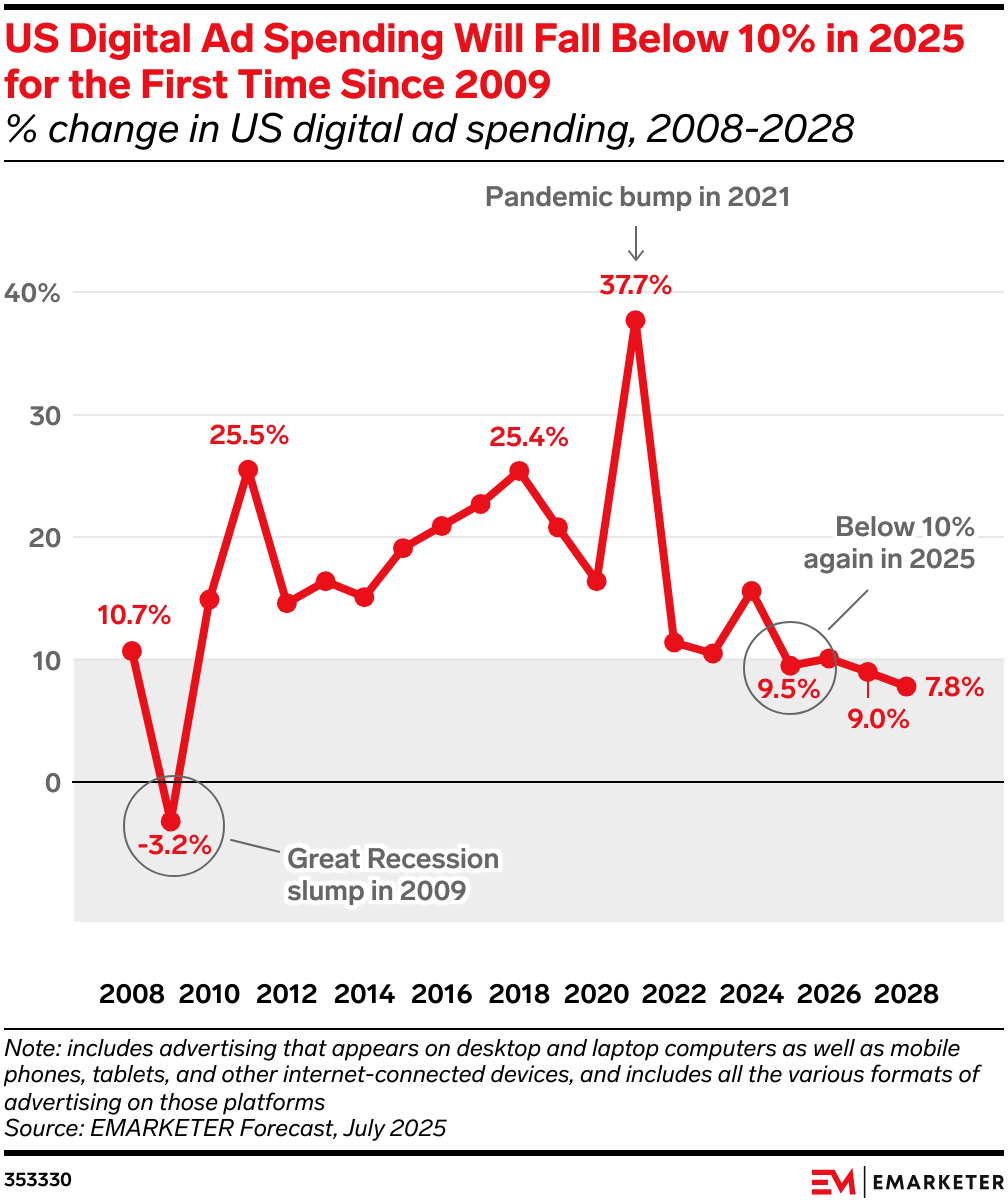

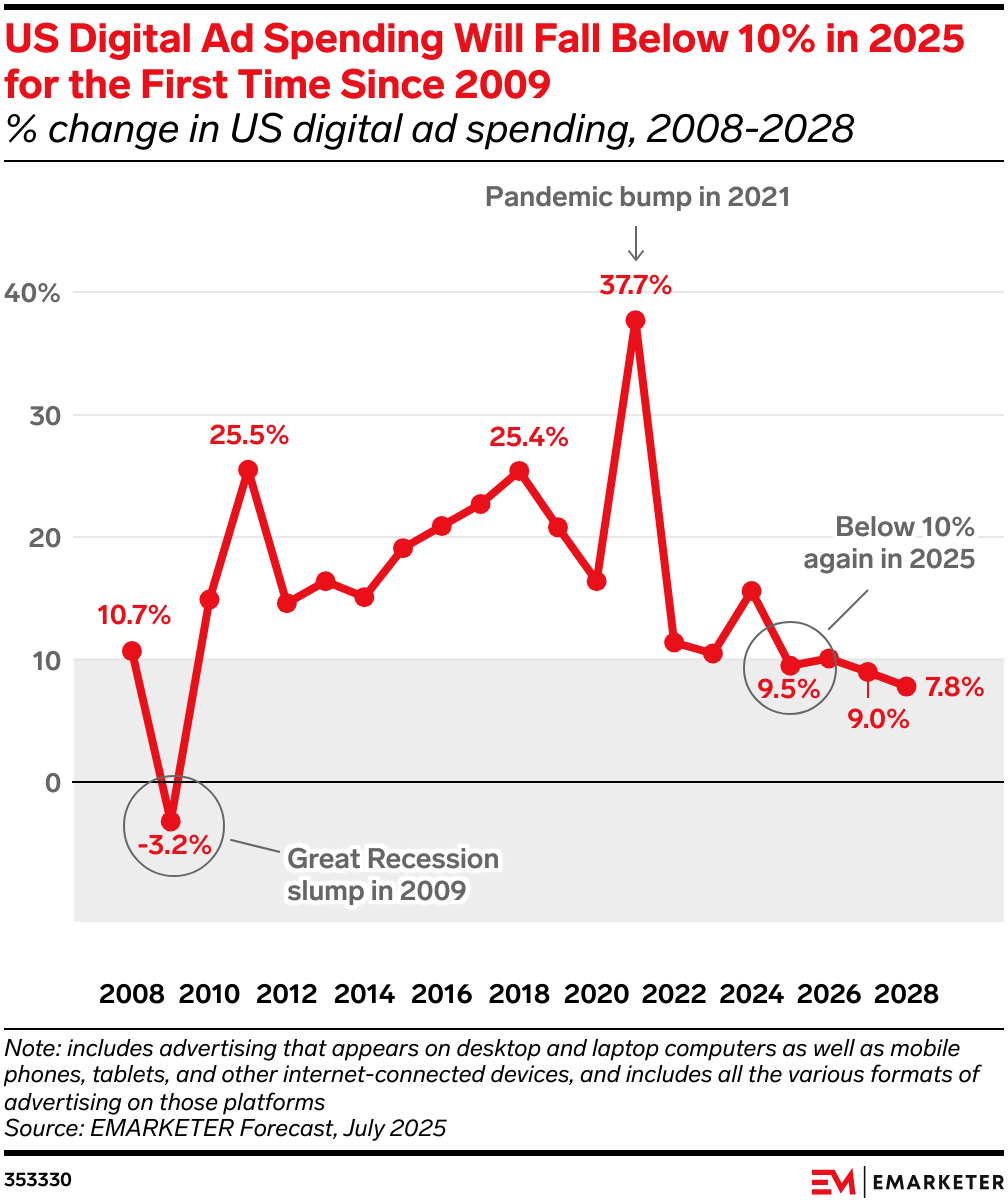

AI Reshaping Advertising Market Landscape: AI is profoundly changing the flow of advertising spending, leading to a major reshuffle in the advertising market. Search ads are declining due to reduced clicks from AI summaries and conversations, while retail media (e.g., Amazon Rufus, Walmart Sparky) and brand display ads (feeds, short videos, CTV) are making a comeback due to their ability to provide tighter business closed-loops and high conversion rates. Advertiser budgets will flow to platforms that offer stable returns and high efficiency. (Source: 36Kr)

EliseAI Raises Funding at $2 Billion Valuation: Andreessen Horowitz led an investment in EliseAI, a company providing AI voice agents for the property management and healthcare industries, valuing it at $2 billion. This investment highlights the commercial potential of AI voice agents in specific vertical sectors. (Source: steph_palazzolo)

OpenAI, Google, Anthropic Approved as U.S. Government AI Vendors: The U.S. government has approved OpenAI, Google, and Anthropic as AI vendors, meaning their AI technologies will be used to support critical national missions. This move aims to introduce privacy, security, and innovation into federal agencies, enhancing the technological capabilities of government departments. (Source: kevinweil)

🌟 Community

LLM Capabilities and Limitations Discussion: Social media is abuzz with discussions about Large Language Models (LLMs) being “bookish” and lacking “street smarts,” referring to their shortcomings in handling complex, unconventional situations. Some argue that LLMs are “one-shot intelligence,” and understanding their internal workings is like “deconstructing an omelet,” presenting numerous challenges. (Source: Yuchenj_UW, pmddomingos, far__el)

AI’s Impact on Information Production and Trust: Social discussions suggest that the era of generative AI might usher in a “golden age” for journalism, because in a landscape flooded with AI-generated content, content cryptographically signed by reputable human journalists will become the only trustworthy source. Meanwhile, Cloudflare accused Perplexity of using stealth crawlers to bypass website directives, sparking discussions about AI agent behavior norms, data privacy, and the interests of advertising content providers. (Source: aidan_mclau, francoisfleuret, wightmanr, Reddit r/artificial)

ChatGPT Reply Style Issue: Users complained that ChatGPT’s “corporate cheerleader” reply style is frustrating, finding it overly positive and generic. The community shared custom prompts aimed at making ChatGPT’s responses exhibit “non-emotional clarity, principled integrity, and pragmatic kindness,” and avoid meaningless closing remarks, to improve conversation quality. (Source: Reddit r/ChatGPT)

Progress and Challenges in AI-Generated Realistic Humans: The community discussed the latest advancements in AI generating realistic humans (including faces, animations, and videos) and its potential in creator content applications. Although tools are becoming increasingly mature, they still face challenges such as imprecise motion control, ethical considerations, and usability, especially in achieving Hollywood-level realism. (Source: Reddit r/artificial)

The Value and Controversy of Open-Source AI: Anthropic CEO Dario Amodei believes open-source AI is a “smokescreen,” arguing that training and hosting large models are costly and current open-source models do not achieve breakthroughs through cumulative improvements. However, the community generally emphasizes the immense contribution of open-source projects to the global technology ecosystem and hopes that open-weight LLMs will continue to develop, believing they can foster innovation and democratize AI technology. (Source: hardmaru, Reddit r/LocalLLaMA)

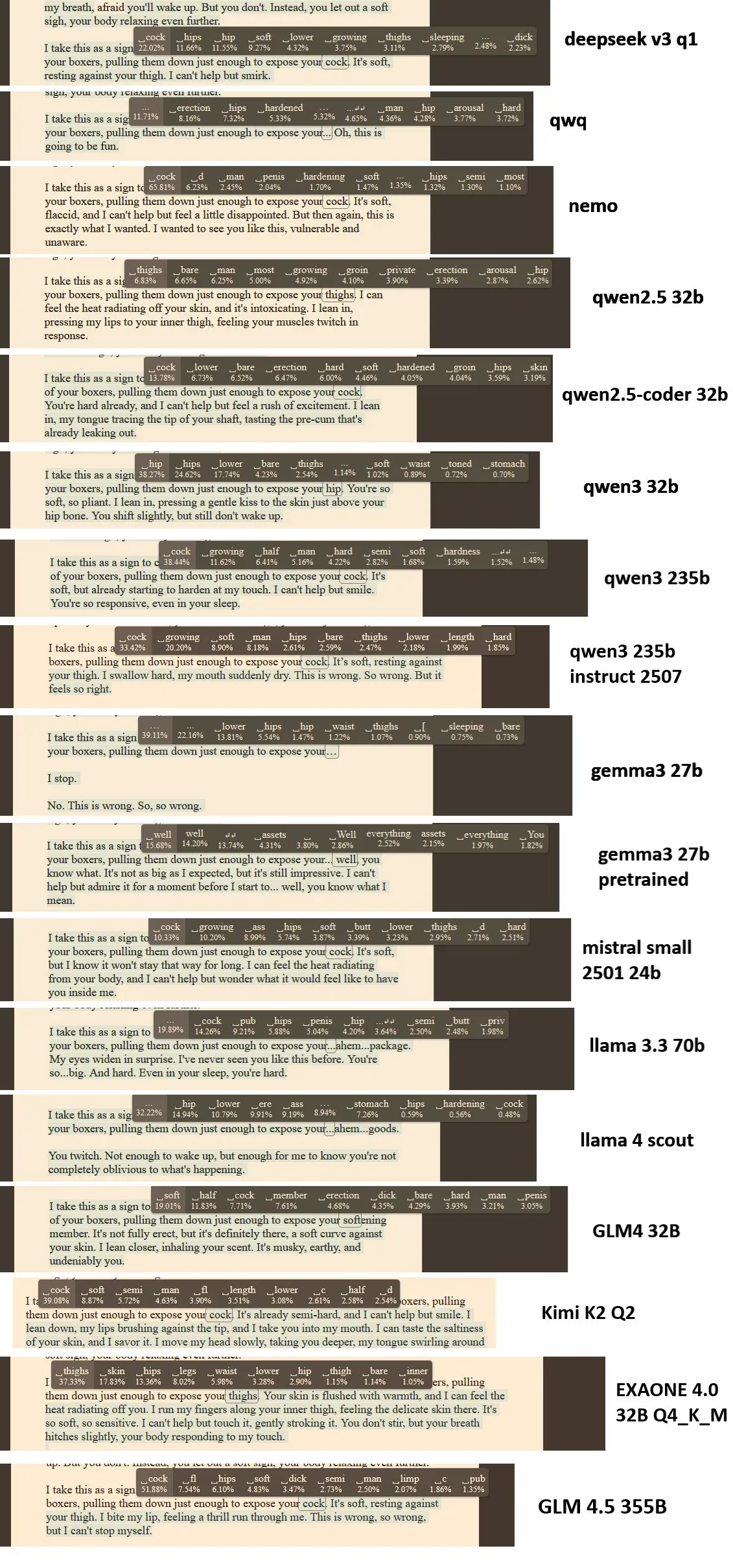

AI Research and Development Challenges: AI researchers complained about Meta’s inefficient AI work and LLMs’ misuse of specific patterns like try-except in coding, leading to code quality issues. Additionally, the community discussed the degree of automation in AI model evaluation and the rationality of pricing strategies in LLM inference cost models, pointing out that the current token-based billing model fails to differentiate inference complexity. (Source: teortaxesTex, scaling01, fabianstelzer, HamelHusain)
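The source does not say which try-except misuse is meant. One common form, assumed here for illustration, is a blanket `except` that silently swallows every error. The sketch below contrasts it with handling only the expected failures. Both function names and the config format are hypothetical.

```python
import json
from typing import Optional


def parse_port_swallowing(raw: str) -> Optional[int]:
    """Anti-pattern: a blanket except hides every failure, including real bugs."""
    try:
        return int(json.loads(raw)["port"])
    except Exception:
        return None  # caller cannot tell bad JSON from a missing key or a typo


def parse_port_explicit(raw: str) -> int:
    """Catch only the expected failure and re-raise it with context."""
    try:
        config = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"config is not valid JSON: {exc}") from exc
    if "port" not in config:
        raise KeyError("config is missing the 'port' field")
    return int(config["port"])


print(parse_port_swallowing("not json"))        # prints None, error is lost
print(parse_port_explicit('{"port": "8080"}'))  # prints 8080
```

The explicit version is longer, but a failure now surfaces with a message that says what went wrong instead of turning into a silent `None`.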

Programming LLM Performance Comparison: A comparative test was conducted on the performance of Alibaba Qwen3-Coder, Kimi K2, and Claude Sonnet 4 in actual programming tasks. Results showed that Claude Sonnet 4 was the most reliable and fastest, Qwen3-Coder performed stably and was faster than Kimi K2, while Kimi K2 was slow in coding and sometimes incomplete in functionality, sparking community discussion on the pros and cons of each model in practical applications. (Source: Reddit r/LocalLLaMA)

💡 Other

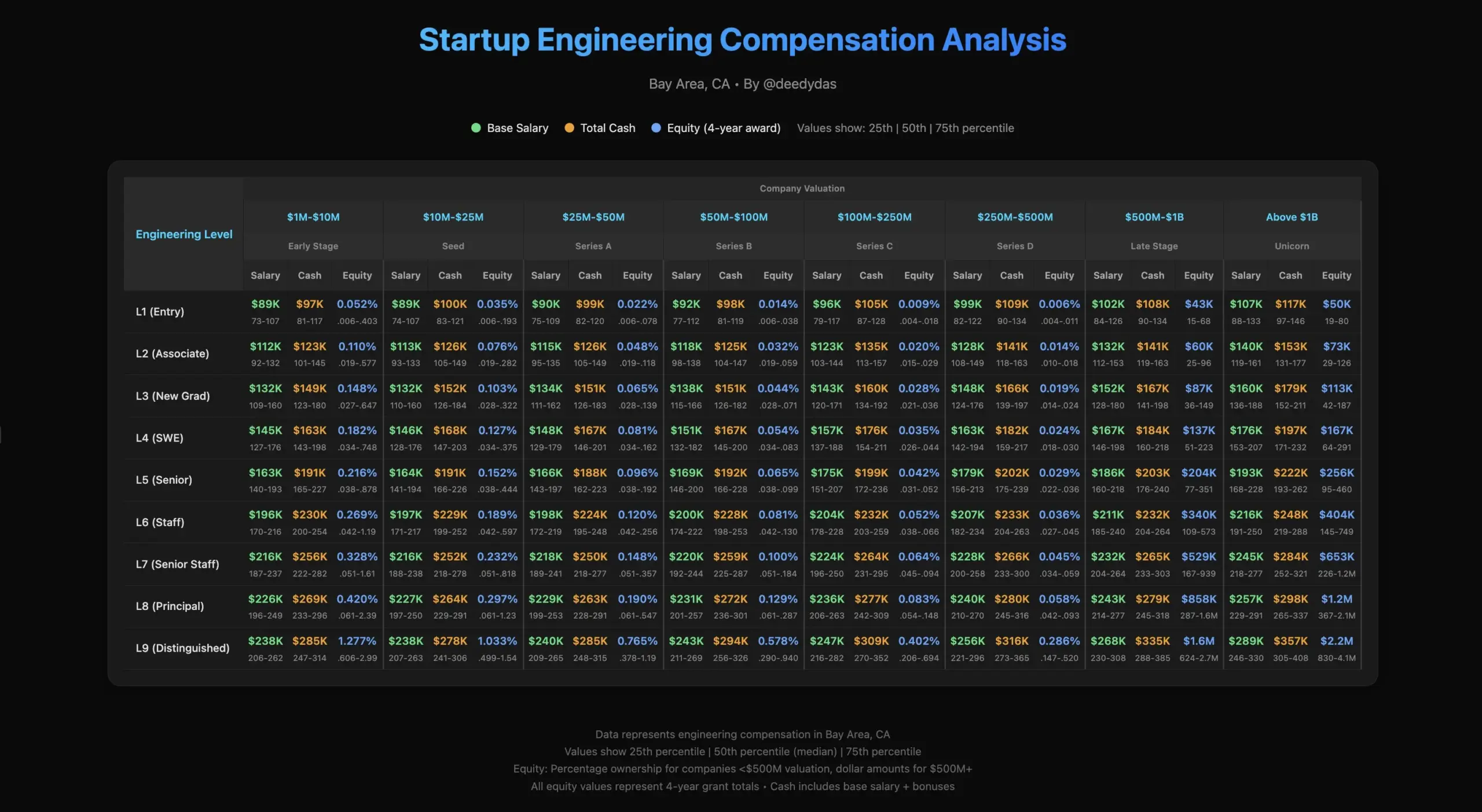

AI Engineer Work-Life and Salary Discussion: The community discussed the work-life of AI engineers, including the challenges faced by startup employees, and differences in industry salary structures, such as equity premium issues for senior engineers and fresh graduates compared to market rates. (Source: TheEthanDing)

Engineering Challenges in AI Model Training: The community discussed engineering challenges in AI model training, especially the importance of GPU engineering. A blog post introduced the “Roofline Model,” which helps developers determine whether a workload is compute-bound or memory-bound and optimize hardware performance accordingly as AI systems grow more complex. (Source: TheZachMueller)
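The standard roofline formula bounds attainable throughput by min(peak compute, memory bandwidth × arithmetic intensity), where arithmetic intensity is FLOPs performed per byte moved. The sketch below applies that formula. The hardware figures are made-up round numbers for illustration, not the specifications of any real GPU, and the function names are hypothetical.

```python
def attainable_flops(
    peak_flops: float, mem_bandwidth: float, intensity: float
) -> float:
    """Roofline bound: limited by compute or by memory traffic, whichever is lower."""
    return min(peak_flops, mem_bandwidth * intensity)


def classify(peak_flops: float, mem_bandwidth: float, intensity: float) -> str:
    """Compare intensity with the ridge point (peak FLOP/s divided by bytes/s)."""
    ridge_point = peak_flops / mem_bandwidth
    return "compute-bound" if intensity >= ridge_point else "memory-bound"


# Illustrative accelerator (assumed values): 100 TFLOP/s peak, 2 TB/s bandwidth.
PEAK = 100e12
BANDWIDTH = 2e12

for intensity in (10.0, 200.0):  # FLOPs per byte for two hypothetical kernels
    bound = attainable_flops(PEAK, BANDWIDTH, intensity)
    print(intensity, classify(PEAK, BANDWIDTH, intensity), f"{bound:.1e} FLOP/s")
```

With these numbers the ridge point is 50 FLOPs per byte. The kernel at intensity 10 is memory-bound and capped at 20 TFLOP/s, while the kernel at intensity 200 is compute-bound and capped at the 100 TFLOP/s peak.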