Anahtar Kelimeler:Anthropic, Claude 3.5 Haiku, Qwen3, Phi-4-reasoning, LLM Fiziği, LangGraph, AI Ajan, Devre İzleme Yöntemleri, Atıf Grafikleri, Qwen3-235B-A22B kodlama yeteneği, Phi-4-reasoning akıl yürütme sırasında hesaplama, LangGraph fatura doğrulama Ajanı, Moondream Station yerel VLM

🔥 聚焦

Anthropic Publishes LLM Biology Research, Delving into Model Internal Mechanisms: Anthropic released an in-depth research blog post titled “On the Biology of a Large Language Model,” investigating the internal mechanisms of its Claude 3.5 Haiku model in different contexts using its Attribution Graphs method. By training a more analyzable “surrogate model” (Transcoder), the research reveals how the model performs addition (via multiple approximate paths rather than precise algorithms), conducts medical diagnoses (forming internal diagnostic concepts), and handles hallucinations and refusals (possessing a default refusal circuit suppressible by “known answer” features). The study offers new perspectives on understanding LLM internal workings but also sparks discussion about methodological limitations and Anthropic’s own positioning (Source: YouTube – Yannic Kilcher

)

Qwen3 Series Models Demonstrate Strong Performance, Attracting Open Source Community Attention: Alibaba’s released Qwen3 series large language models have shown outstanding performance on multiple benchmarks, especially in coding capabilities. Aider Polyglot Coding Benchmark results indicate that Qwen3-235B-A22B (without Chain-of-Thought) seems to outperform Claude 3.7 with 32k Chain-of-Thought tokens enabled, at a significantly lower cost. Meanwhile, Qwen3-32B also surpassed GPT-4.5 and GPT-4o on this benchmark. The community is actively exploring pruning (e.g., pruning 30B down to 16B) and fine-tuning (e.g., using Unsloth for fine-tuning with low VRAM) of Qwen3 models, further lowering the barrier to applying high-performance models and suggesting that Chinese open-source large models may gain significant market share (Source: karminski3, scaling01, scaling01, Reddit r/LocalLLaMA)

Microsoft Releases Phi-4-reasoning Model, Focusing on Complex Reasoning: Microsoft released the Phi-4-reasoning model on Hugging Face, a 14 billion parameter reasoning model. By utilizing inference-time compute, the model achieves State-of-the-Art (SOTA) performance on complex reasoning tasks. This indicates that model design is exploring ways to enhance specific capabilities by increasing computation during the inference stage, rather than solely relying on scaling up model size, offering new pathways for smaller models to achieve high performance (Source: _akhaliq)

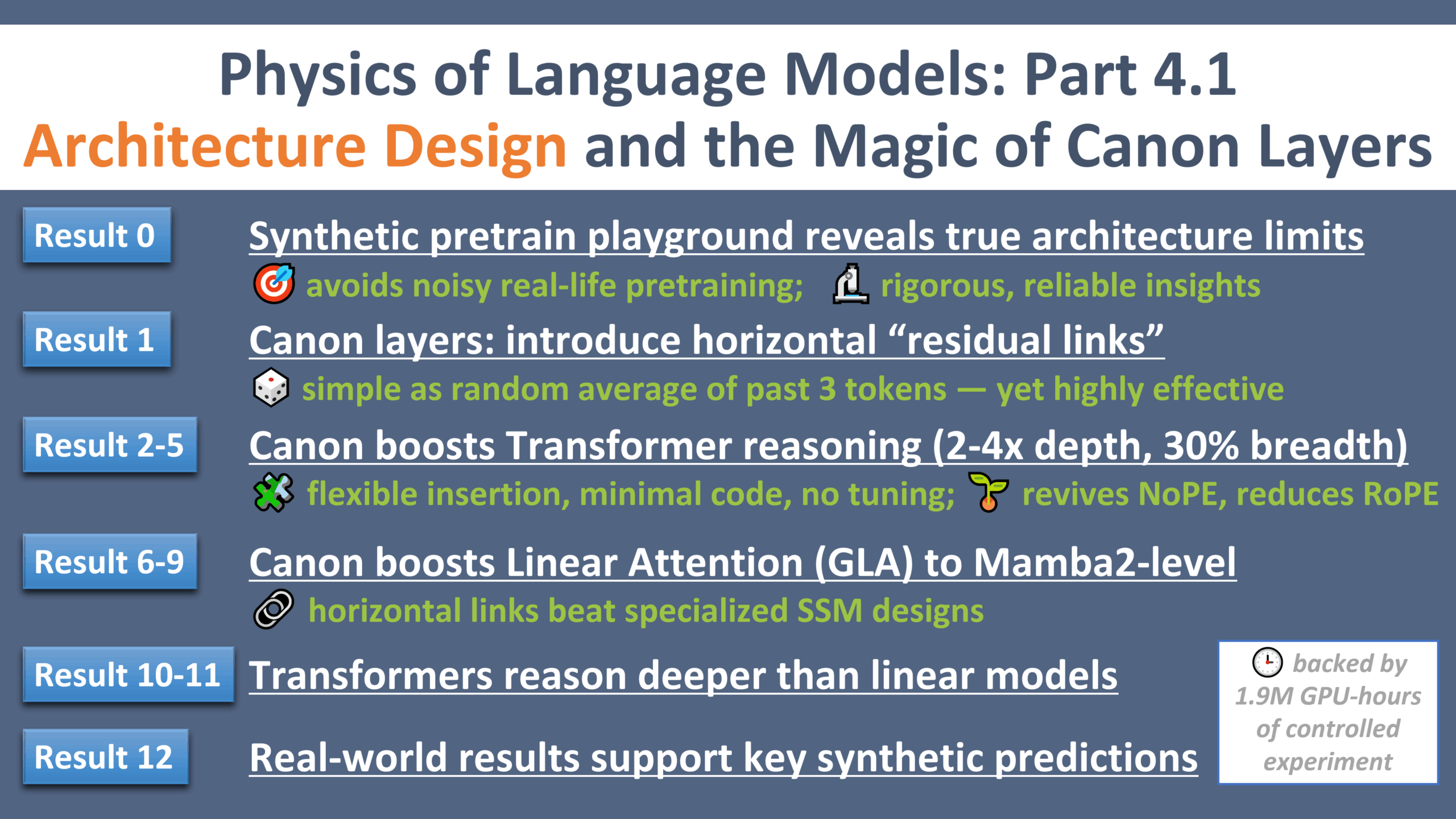

New Progress in LLM Physics Research: A “Galileo Moment” for Architecture Design: Zeyuan Allen-Zhu released the fourth part of a series on the physics of large language models, focusing on architecture design. Through controlled synthetic pre-training environments, the research reveals the true limitations and potential of different LLM architectures (like Transformer, Mamba). The study introduces a lightweight horizontal residual layer named “Canon,” significantly boosting model reasoning capabilities. Concurrently, the research finds that the advantages of the Mamba model largely stem from its hidden conv1d layer, rather than the SSM itself. This series of experiments provides new perspectives and foundational theories for understanding and optimizing LLM architectures (Source: menhguin, arankomatsuzaki, giffmana, tokenbender, giffmana, Dorialexander, iScienceLuvr)

🎯 动向

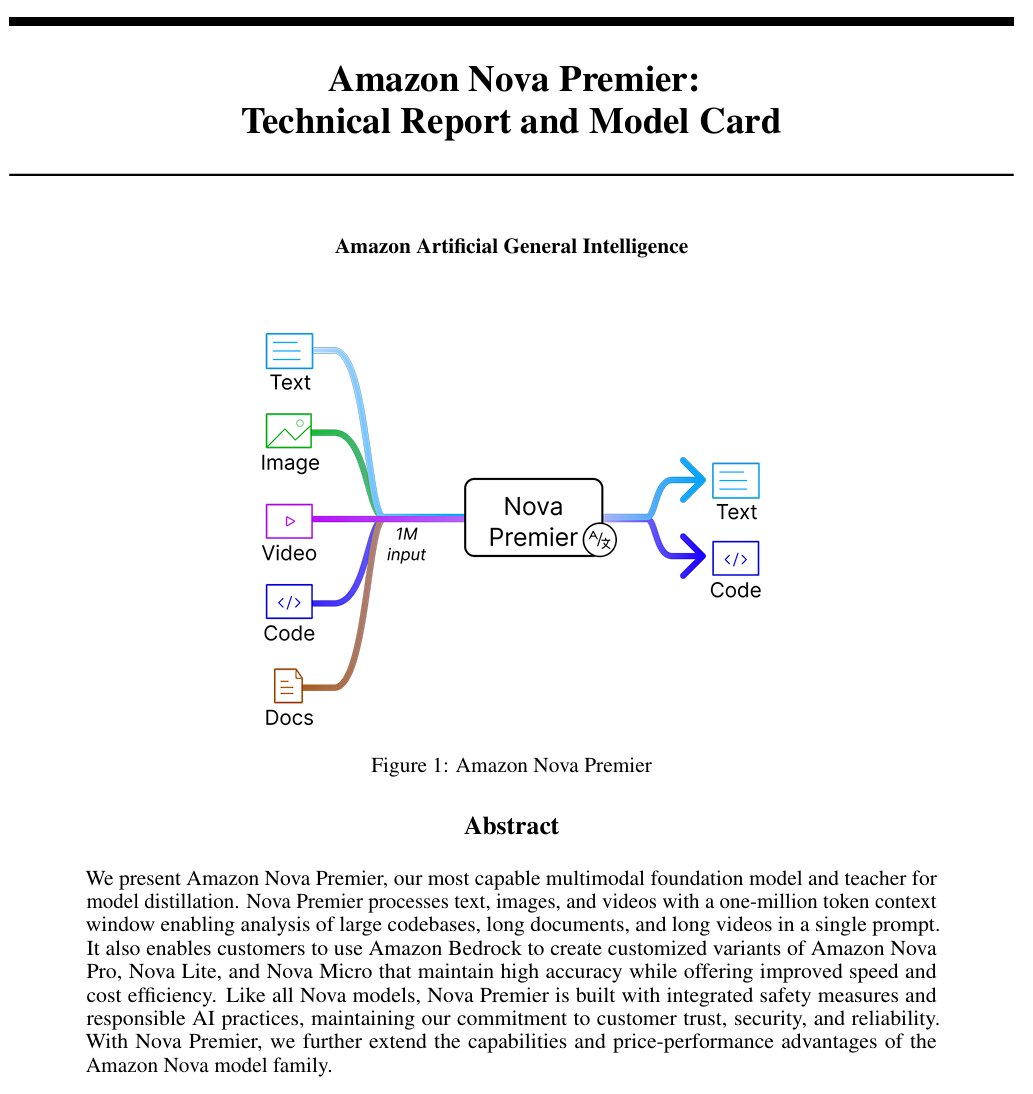

Amazon Releases General Artificial Intelligence Model “Amazon Artificial General Intelligence”: This model features a 1 million token context length and multimodal input capabilities, optimized for code generation, RAG, video/document understanding, function calling, and Agent interactions. Pricing is $2.5/million tokens for input and $12.5/million tokens for output. Preliminary evaluations show its performance on the AI Index is comparable to Llama-4 Scout, but it lags in speed and cost, potentially suitable for specific long-context multimodal or Agent application scenarios (Source: scaling01)

Anthropic Claude Models Now Offer Web Search Feature in Global Paid Plans: This feature allows Claude to perform quick searches when handling everyday tasks, and explore multiple sources, including Google Workspace, for more complex questions. This enhances Claude’s ability to access real-time information and handle tasks requiring external knowledge (Source: menhguin)

IBM Releases Hybrid Architecture Model granite-4.0-tiny-7B-A1B-preview: This 7B model preview version adopts a hybrid architecture of Mamba-2 and Transformer, with each Transformer block containing 9 Mamba blocks. The design aims to use Mamba blocks to capture global context and pass it to attention layers for local context parsing. Preliminary MMLU scores are promising, but results for other tests like math and programming abilities have not yet been released (Source: karminski3)

OpenAI ChatGPT Adds Shopping Features: OpenAI is experimenting with shopping features in ChatGPT, aiming to simplify product finding, comparison, and purchasing processes. New features include improved product result displays, visualized product details with prices and reviews, and direct purchase links. OpenAI emphasizes that product results are independently selected and are not advertisements (Source: sama)

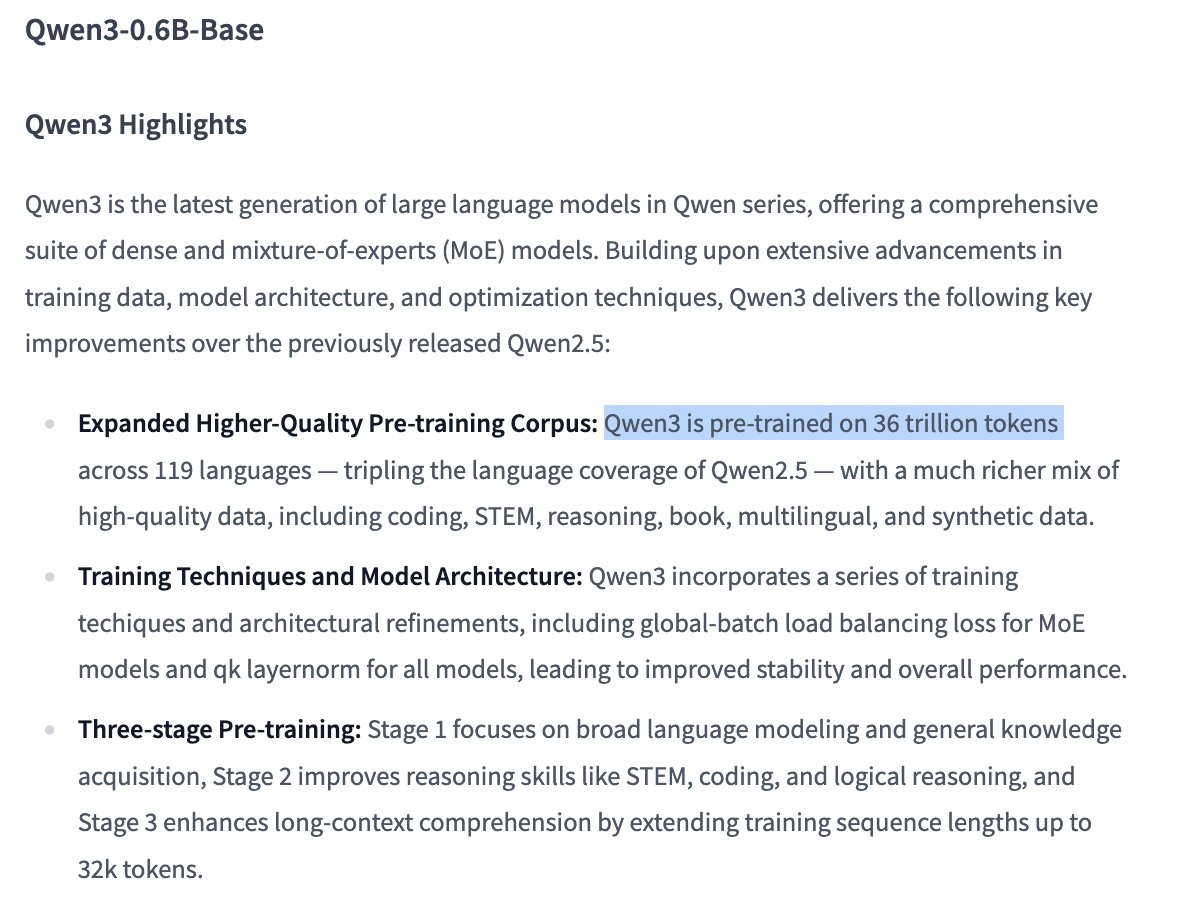

Qwen3 0.6B Model Training Details Attract Attention: User Dorialexander noted that, based on available information, the Qwen 0.6B model seems to have also been trained on up to 36T tokens. If true, this would set a new record exceeding Chinchilla scaling laws (approximately 60,000 tokens per parameter), indicating a trend of enhancing small model capabilities by vastly increasing training data volume (Source: Dorialexander)

X (Twitter) Recommendation Algorithm to be Replaced with Lightweight Grok: Elon Musk announced that X platform’s recommendation algorithm is being replaced with a lightweight version of Grok, expected to significantly improve recommendation effectiveness. User feedback suggests algorithm performance has improved, possibly related to recent Exa AI staff changes and X starting to use Embeddings for recommendations (Source: menhguin, colin_fraser, paul_cal)

Allen AI Releases Fully Open MoE Model OLMoE: This model is an advanced Mixture of Experts (MoE) model with 1.3 billion active parameters and 6.9 billion total parameters. Being fully open-source means the community can freely use, modify, and study the model, promoting the development and application of MoE architectures (Source: dl_weekly)

Mistral-Small-3.1-24B-Instruct-2503 Model Gains Attention: Reddit users discuss the Mistral-Small-3.1-24B-Instruct-2503 model, which scores highly on UGI (Uncensored General Intelligence) and outperforms similarly high-scoring models in natural language understanding and coding. Users consider it potentially ideal for single-GPU uncensored inference and note its support for tool use. However, they also point out its writing style can be somewhat dry and repetitive, lacking the creativity of models like Gemma 3 (Source: Reddit r/LocalLLaMA)

🧰 工具

CreateMVP 2.0 Released, Optimizing AI-Driven Development Workflow: CreateMVP updated to version 2.0, aiming to address the issue of suboptimal results when directly prompting AI to build applications. The new version helps users create more precise “blueprints” for AI by providing a smoother UI, convenient authentication methods (supporting Replit, Google, GitHub, with XAI support coming soon), generating more detailed development plans (increased from 11KB to 40KB+), offering instant file previews, and integrating chat with top AI models, ensuring AI builds applications that match user specifications (Source: amasad)

LlamaIndex Launches Invoice Reconciliation Agent: This tool demonstrates the application of AI Agents in batch automation tasks, rather than traditional chat interactions. It can process large volumes of unstructured invoice documents, extract relevant details, automatically match them with purchase orders, and flag discrepancies. Its core is an Agentic document intelligence layer based on LlamaCloud parsing/extraction and LlamaIndex.TS workflow inference, showcasing the potential of Agents in automating real business processes and is considered likely to replace traditional RPA (Source: jerryjliu0)

LangGraph Expense Tracker: Automated Expense Management System: This is an example of an automated expense management system built using LangGraph. It can process invoices, utilize intelligent data extraction features, store information in PostgreSQL, and include a human verification step. The project demonstrates LangGraph’s capability in building practical business automation workflows (Source: LangChainAI, Hacubu, hwchase17)

Moondream Station Released: Run VLM Locally: Moondream released Moondream Station, allowing users to run the visual language model (VLM) Moondream locally on a Mac without needing a cloud connection. It offers access via CLI or a local port, is simple to set up, and completely free, lowering the barrier for local deployment and use of VLMs (Source: vikhyatk)

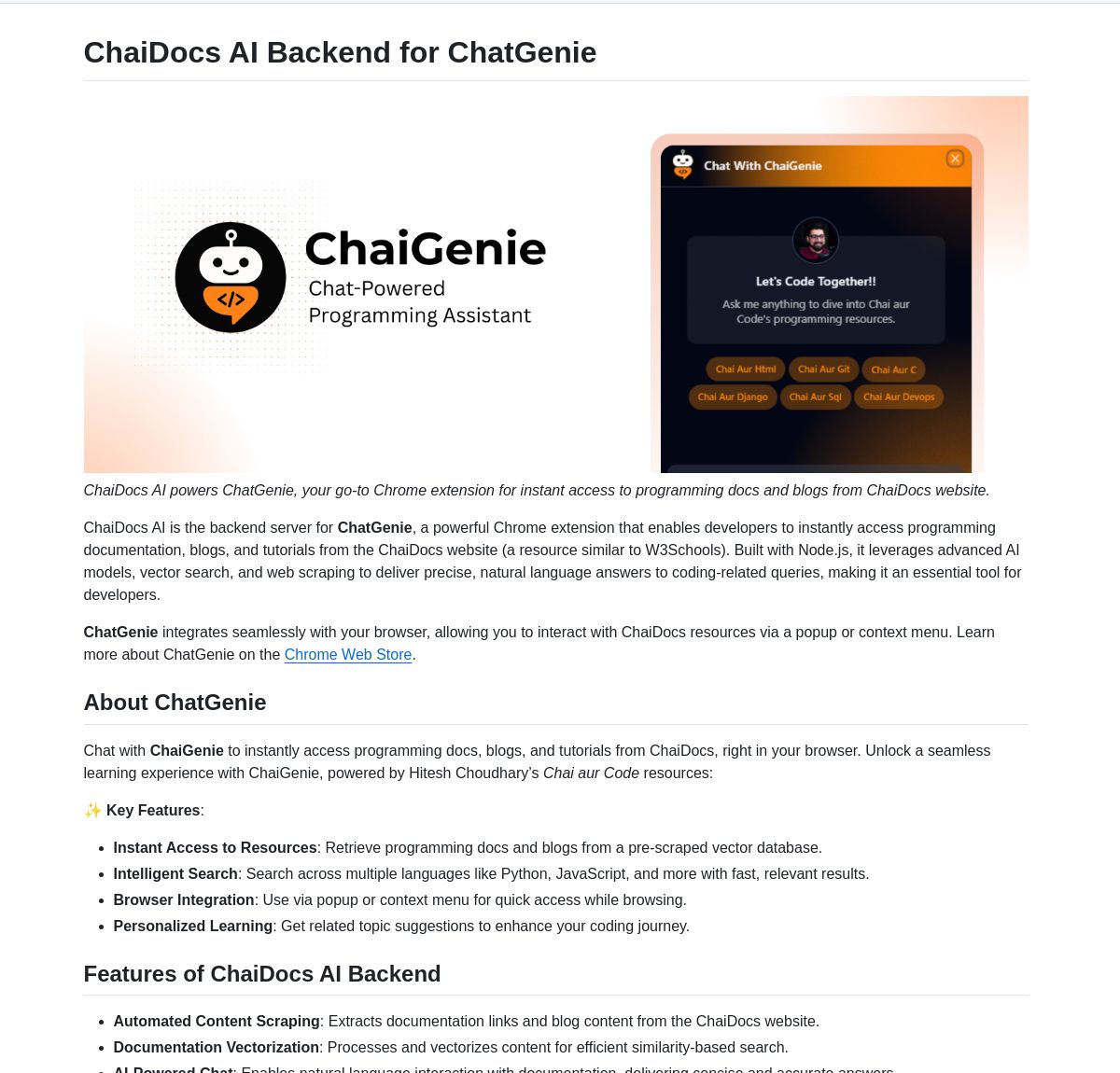

ChaiGenie: LangChain-Based Chrome Extension for Document Search: ChaiGenie is a Chrome extension integrating LangChain’s Gemini and Qdrant to provide document search functionality. It supports multiple languages and vector-based retrieval, aiming to enhance user efficiency in finding and understanding document content while browsing the web (Source: LangChainAI)

Research Agent: One-Click Research Assistant Web App: This is a web application built on the LangGraph research assistant framework, designed to simplify the research process. Users can obtain research results with just one click, demonstrating LangGraph’s potential in building AI-driven workflows to streamline complex tasks (Source: LangChainAI)

Muyan-TTS: Open-Source, Low-Latency, Customizable TTS Model: The ChatPods team released Muyan-TTS, a fully open-source Text-to-Speech model aimed at addressing the issues of low quality or insufficient openness in existing open-source TTS models. Based on LLaMA-3.2-3B and optimized SoVITS, it supports zero-shot TTS and voice cloning, and provides a complete training and data processing pipeline, facilitating fine-tuning and secondary development for developers, especially suitable for applications requiring customized voices (Source: Reddit r/MachineLearning)

Mem0 Integration with Open Web UI Pipelines: User cloudsbird created an Open Web UI filter pipeline integration for Mem0 (unofficial MCP), providing an alternative way to use Mem0 memory features within Open Web UI (Source: Reddit r/OpenWebUI)

YNAB API Request Tool Enables Local, Private Financial Management: User Megaphonix created an OpenWebUI tool utilizing the YNAB (You Need A Budget) API, allowing users to query personal financial information (like transactions, category spending, net worth) via a local LLM without sending sensitive data externally. This addresses the need for securely handling sensitive personal information when running LLMs locally (Source: Reddit r/OpenWebUI)

Free AI Text-to-Speech Browser Extension GPT-Reader: A developer promotes their free AI text-to-speech browser extension, GPT-Reader, which currently has over 4000 users. The tool aims to make it convenient for users to listen to web page text content (Source: Reddit r/artificial)

sunnypilot: Open-Source Driver-Assistance System: sunnypilot is a fork of comma.ai’s openpilot, providing an open-source driver-assistance system. It supports over 300 car models, modifies the interaction behavior of driving assistance, and adheres to comma.ai’s safety policies as much as possible. The project utilizes AI technology (though specific models aren’t explicitly mentioned, such systems typically involve computer vision and control algorithms) to enhance the driving experience (Source: GitHub Trending)

📚 学习

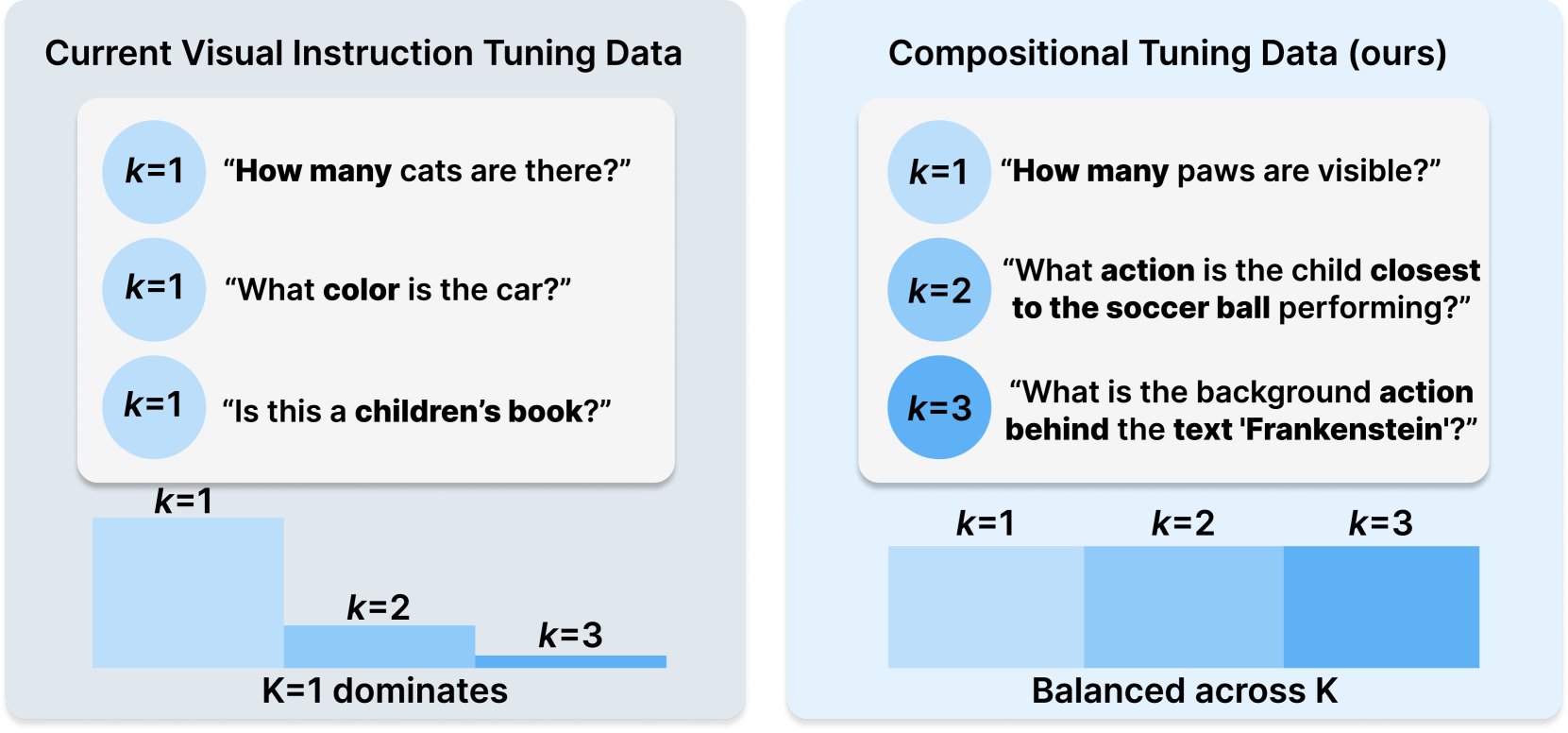

Princeton & Meta AI Release COMPACT Dataset Recipe: Published on Hugging Face, this research proposes a new data recipe, COMPACT, aimed at expanding the capabilities of Multimodal LLMs by explicitly controlling the compositional complexity of training samples. This offers new ideas for improving multimodal model training methods and enhancing their ability to understand complex compositional concepts (Source: _akhaliq)

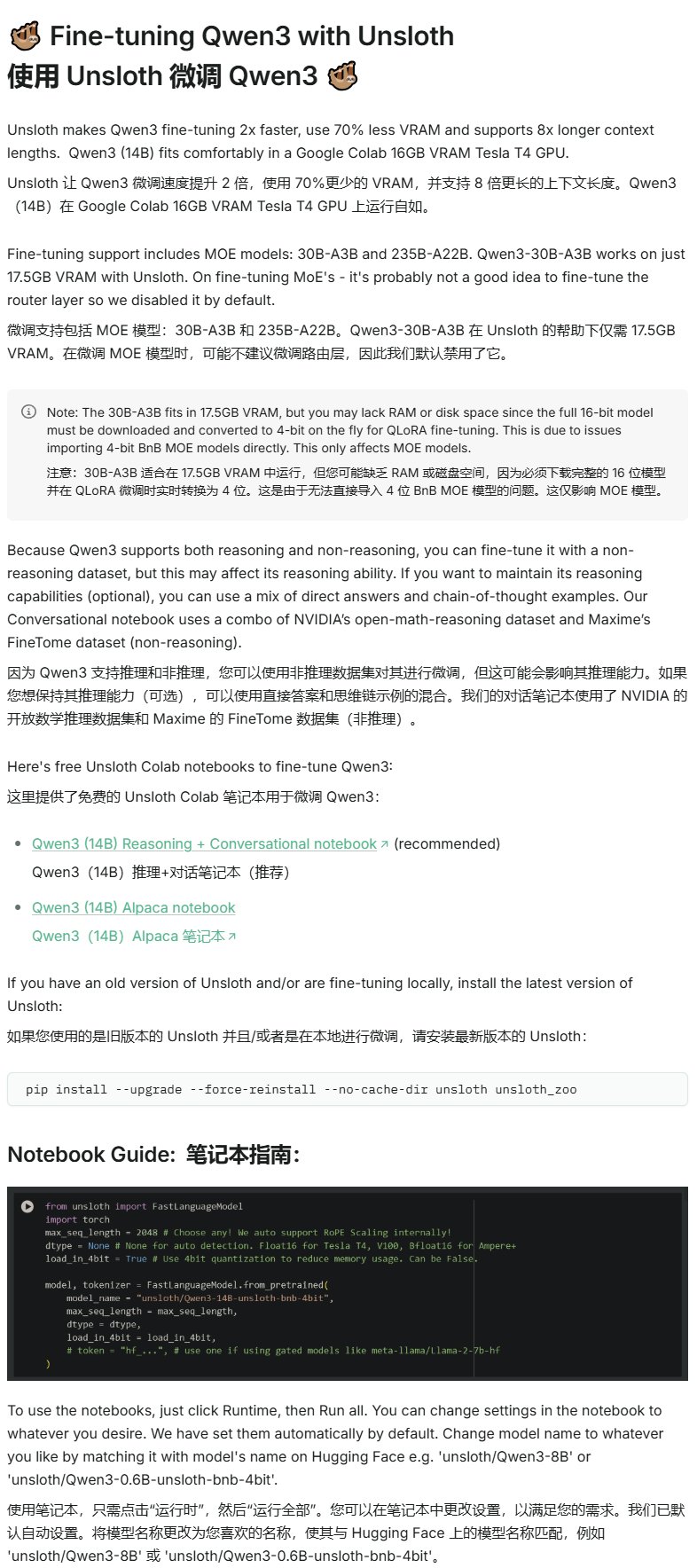

Unsloth Releases Qwen3 Fine-tuning Tutorial: Unsloth provides a fine-tuning tutorial for Qwen3 models, significantly lowering the barrier to entry. Users can fine-tune the Qwen3-14B model with just 16GB of VRAM, and the Qwen3-30B-A3B model with 17.5GB of VRAM. This enables more researchers and developers to perform customized training on advanced open-source models with limited hardware resources (Source: karminski3)

Building an Intelligent Web Search Chatbot with LangGraph and Azure OpenAI: A Medium tutorial demonstrates how to combine LangGraph and Azure OpenAI, integrating Tavily’s web search capabilities, to build an intelligent chatbot. The tutorial covers state management and conditional routing to achieve seamless search integration, providing practical guidance for building more powerful AI applications capable of utilizing real-time web information (Source: LangChainAI, hwchase17)

Stanford AI Blog Explores Relationship Between LLM Verbatim Memorization and General Capabilities: A Stanford AI blog post delves into the intrinsic connection between the verbatim memorization phenomenon in Large Language Models (LLMs) and their general capabilities. Understanding this relationship is crucial for assessing model risks, optimizing training methods, and interpreting model behavior (Source: dl_weekly)

Guide to Integrating Gemini with LangChain: Philipp Schmid published a developer guide detailing how to integrate Google’s Gemini models with the LangChain framework. The guide covers implementing multimodal capabilities, tool calling, and structured output, including support for the latest models and practical code examples, making it easier for developers to leverage Gemini’s powerful features to build LangChain applications (Source: LangChainAI, _philschmid)

LangGraph Getting Started Tutorial: Stateful Workflow Practice: A tutorial published on AI@GoPubby demonstrates LangGraph’s stateful workflow capabilities through a website comment analysis example. Learners can understand how to build structured AI applications using interconnected nodes and sequential logic (Source: LangChainAI, hwchase17)

LangChain CEO’s Deep Thoughts on Agentic Frameworks (Chinese Translation Shared): LangChain Ambassador Harry Zhang translated and shared LangChain CEO Harrison’s blog post reflecting on Agentic frameworks. The article analyzes and organizes the functionalities of over 15 Agent frameworks in the industry and interprets the stories behind them, providing valuable reference for understanding the current landscape and future direction of Agent technology development (Source: LangChainAI)

Research Progress on Latent Meta Attention: Reddit users discuss a new attention mechanism called Latent Meta Attention. The developer claims this mechanism challenges the fundamental assumptions of Transformers and can achieve or even surpass the performance of existing models at smaller sizes (e.g., reproducing BERT performance with a model half the size), but the specific method has not been disclosed due to lack of funding and support from formal research institutions (Source: Reddit r/deeplearning)

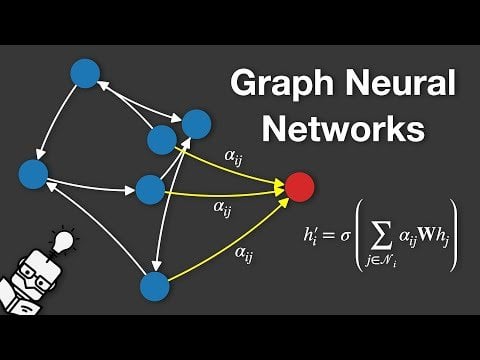

Graph Neural Network (GNN) Explanation Video: A video explaining Graph Neural Networks (GNNs) has been posted on YouTube. GNNs are deep learning models for processing graph-structured data, widely used in areas like social network analysis, recommendation systems, and molecular structure prediction. The video aims to help viewers understand the basic principles and workings of GNNs (Source: Reddit r/deeplearning)

Training LLM for Event Scheduling using GRPO: User anakin87 shared project experience using GRPO (Generalized Reward Policy Optimization) to train a language model for event scheduling. The project does not rely on traditional supervised fine-tuning samples but uses a reward function to teach the model to create schedules based on event lists and priorities. The author shares lessons learned regarding problem setup, data generation, model selection, reward design, and the training process, and has open-sourced the code and model, providing a practical case study for exploring reward-based LLM training (Source: Reddit r/LocalLLaMA)

Free AI Course Resource Sharing: LinkedIn AI Hub shared a complete AI learning roadmap, inspired by Stanford University’s AI certificate program and simplified for learners of different levels. The content covers everything from basic skills to practical projects and provides valuable resources and course details (Source: Reddit r/deeplearning)

Deep Dive into Gemini Long Context Pre-training: Logan Kilpatrick had an in-depth conversation with Nikolay Savinov, co-lead of Gemini long context pre-training. The discussion ranged from fundamentals to the techniques needed to scale to infinite context, and best practices for developers regarding long context. Key takeaways: achieving 1M token context was a 10x goal over the standard at the time; 10M tokens were attempted but costly and hardware-limited; long context complements RAG; simple NIAH (Needle-in-a-Haystack) is solved, difficulty lies in hard distractors and multi-needle retrieval; evaluation focuses on NIAH to avoid confounding capability signals; current output length limits (e.g., 8k) are post-training issues; no “lost in the middle” effect observed; need to distinguish context knowledge from weight knowledge; next step is cheaper, precise 10M context, scaling to 100M might require new DL innovations (Source: shaneguML, giffmana, teortaxesTex, arohan)

🌟 社区

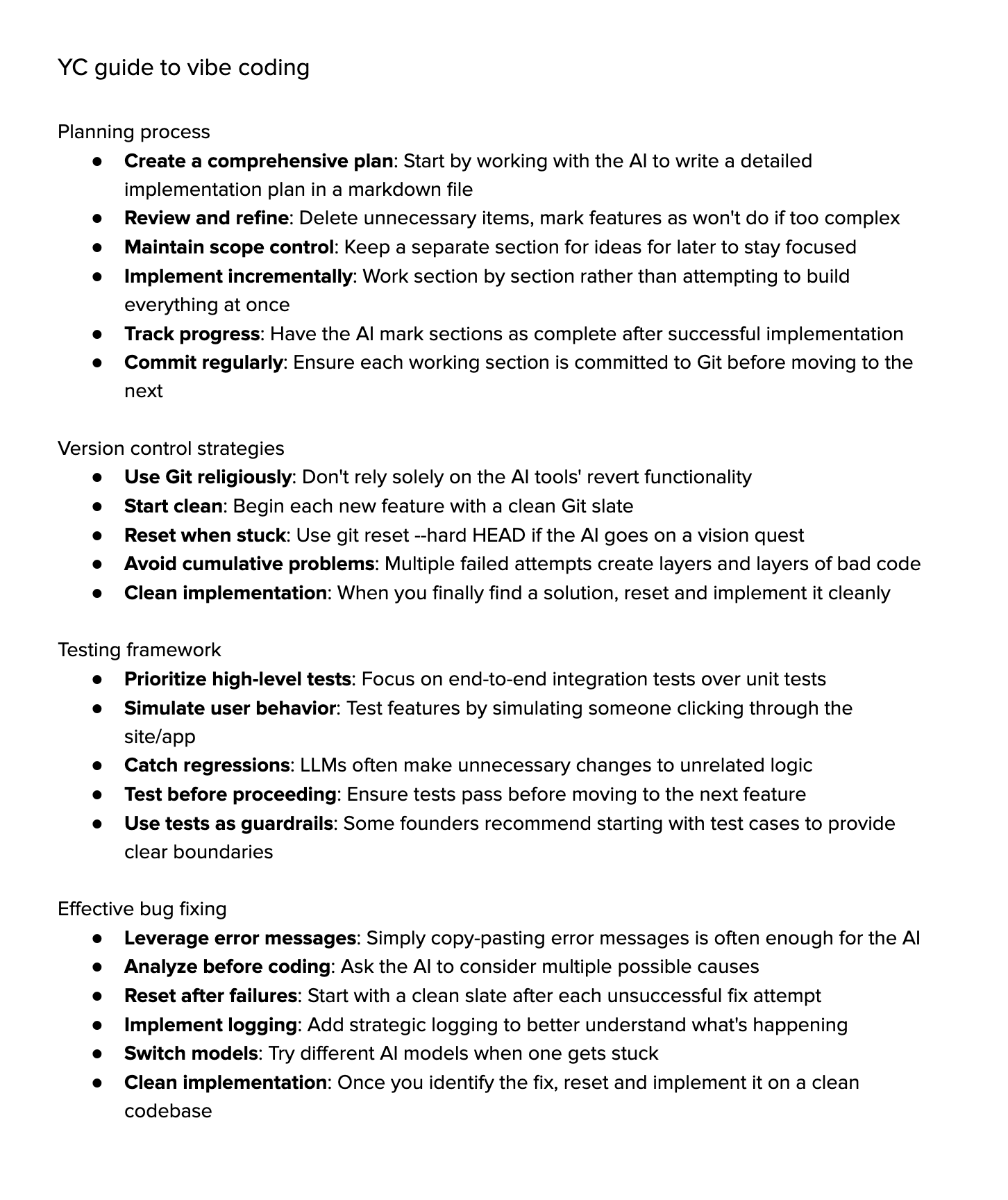

Discussion on “Vibe Coding”: The community is actively discussing “Vibe Coding,” which refers to heavily relying on AI assistance for programming. Supporters believe this represents the future, where developers focus more on the “why” and “what,” while AI handles the “how,” but this requires stronger critical thinking. Opponents argue that current AI cannot fully handle complex debugging, upgrades, and maintenance, and over-reliance might lead to decreased developer skills, turning them into advanced “script kiddies.” Some who tried it found the time cost of guiding AI for complex tasks remains high, making manual implementation with lightweight AI assistance more efficient (Source: Dorialexander, Reddit r/artificial, johnowhitaker)

Discussion on AI Application and Limitations in Professional Fields: User dotey discusses AI application in professional domains. He believes AI can learn from experts’ public Q&A but struggles with unseen problems. AI’s strength lies in its vast foundational knowledge base and rapid response, but currently relies heavily on RAG (Retrieval-Augmented Generation), essentially retrieving snippets and piecing together answers, rather than true professional reasoning. This is still far from training a model that can continuously generate new answers like an expert and keep improving (Source: dotey)

Concerns and Discussion about AI-Generated Content: Reddit user Maleficent-main_777 complains that colleagues have started using “ChatGPT-ese” language filled with imperative tones, “verify,” “ensure,” and forced positive conclusions, finding this language vague and impersonal. He worries about AI-generated content being fed back into training data, leading to a decline in content quality. Comments resonate with this, seeing it as an extension of corporate jargon, but also noting that excessive AI mimicry makes communication robotic, and good grammar is no longer an advantage, instead sounding robotic (Source: Reddit r/ChatGPT)

Choosing University Majors in the AI Era: Reddit users discuss what majors university students should choose to ensure their degree remains valuable in 10 years amidst rapid AI and robotics development. Opinions vary, including: choosing fields one is passionate about (gaming, film, art, programming); studying fundamental sciences (physics, math); mastering skills hard to automate (like HVAC); focusing on liberal arts to cultivate curiosity and adaptability; suggesting university education might become obsolete, favoring entrepreneurship or freelancing; emphasizing the crucial ability to continuously learn, unlearn, and relearn (Source: Reddit r/ArtificialInteligence)

Discussion on Difficulty of Text Rendering in AI Image Generation: Reddit users explore why current image generation models struggle to render coherent, legible text. Comments point to two main reasons: 1) BPE (Byte Pair Encoding) tokenization breaks precise spelling information, as models see token fragments instead of letters; 2) Fixed-size vector representations and limitations in image descriptions cause significant loss of textual information during embedding. While autoregressive models like GPT-4o show improvement, the fundamental issues relate to tokenization and information compression (Source: Reddit r/MachineLearning)

Discussion on Standardization of Model Evaluation: User scaling01 points out that when comparing different AI models (like OpenAI, Google, Anthropic), fairness should be ensured. For example, if OpenAI’s preview and “thinking versions” are listed, corresponding versions from Google and Anthropic should also be listed, otherwise the comparison results could be misleading (Source: scaling01)

Experience Sharing on AI-Assisted Programming: A user shares their experience using AI for programming assistance (e.g., VS Code + Cline AI extension + Google AI Studio API), suggesting it’s possible to build an AI coding tool similar to Cursor for free. Basic application prototypes can be completed via prompts without configuration, providing a good experience (Source: Reddit r/artificial)

Survey on AI’s Impact on Work, Study, and Life: A Reddit user initiated a discussion asking how generative AI has impacted people’s performance in work, study, or daily life. In comments, software engineers mentioned increased productivity expectations and workload, with code reviews not significantly faster; professional writers found AI (like Co-pilot) offered limited help, sometimes even slowing progress; the general consensus is that AI brings convenience but also issues like over-reliance, reduced learning, and a “cheating” feeling. AI’s impact varies significantly across different professions and tasks (Source: Reddit r/artificial)

Reflections on LLM “Understanding”: User pmddomingos suggests neural networks are becoming as hard to understand as brains. He further ponders: what do we do when AI models excel on all benchmarks but are still less intelligent than humans? This prompts reflection on the validity of current benchmarks and the criteria for evaluating true intelligence (Source: pmddomingos, pmddomingos)

Thoughts on Using AI Tools: User dotey comments that when using AI tools, one should simply choose the strongest model for a specific task. Using multiple models simultaneously or letting them “fight it out” might be unnecessary, especially for non-expert users, where too many choices could lead to confusion, analogous to looking at multiple clocks showing different times (Source: dotey)

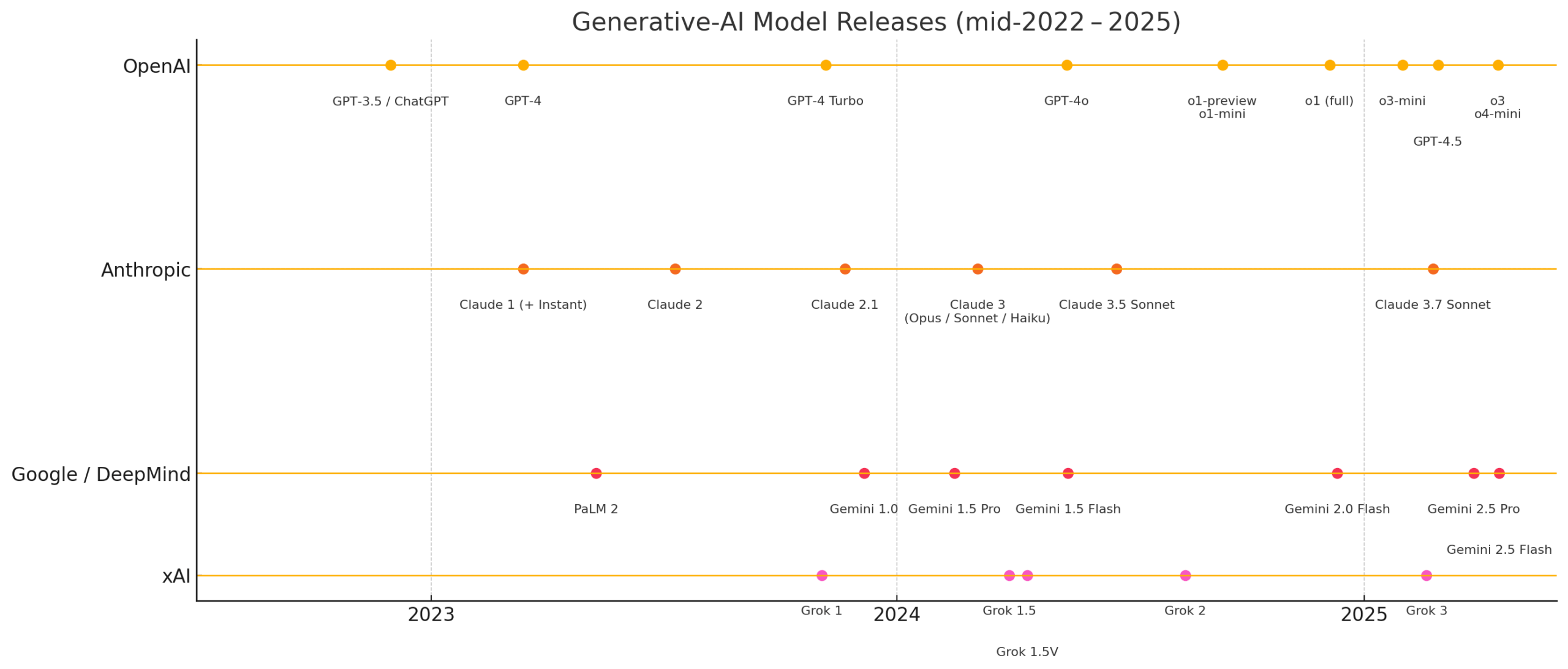

Reflections on the Recent Pace of AI Development: Users matvelloso and scottastevenson express amazement at the rapid pace of AI development. matvelloso states this year’s AI progress has already exceeded his expectations (citing Gemini playing Pokemon as an example). scottastevenson reflects that GPT-2 was released 6 years ago, and OpenAI is 10 years old, contemplating the technological directions currently brewing that will become important in the next 6-10 years, noting that besides AI, finding “outside the frame” deep Alpha is equally important (Source: matvelloso, scottastevenson, scottastevenson)

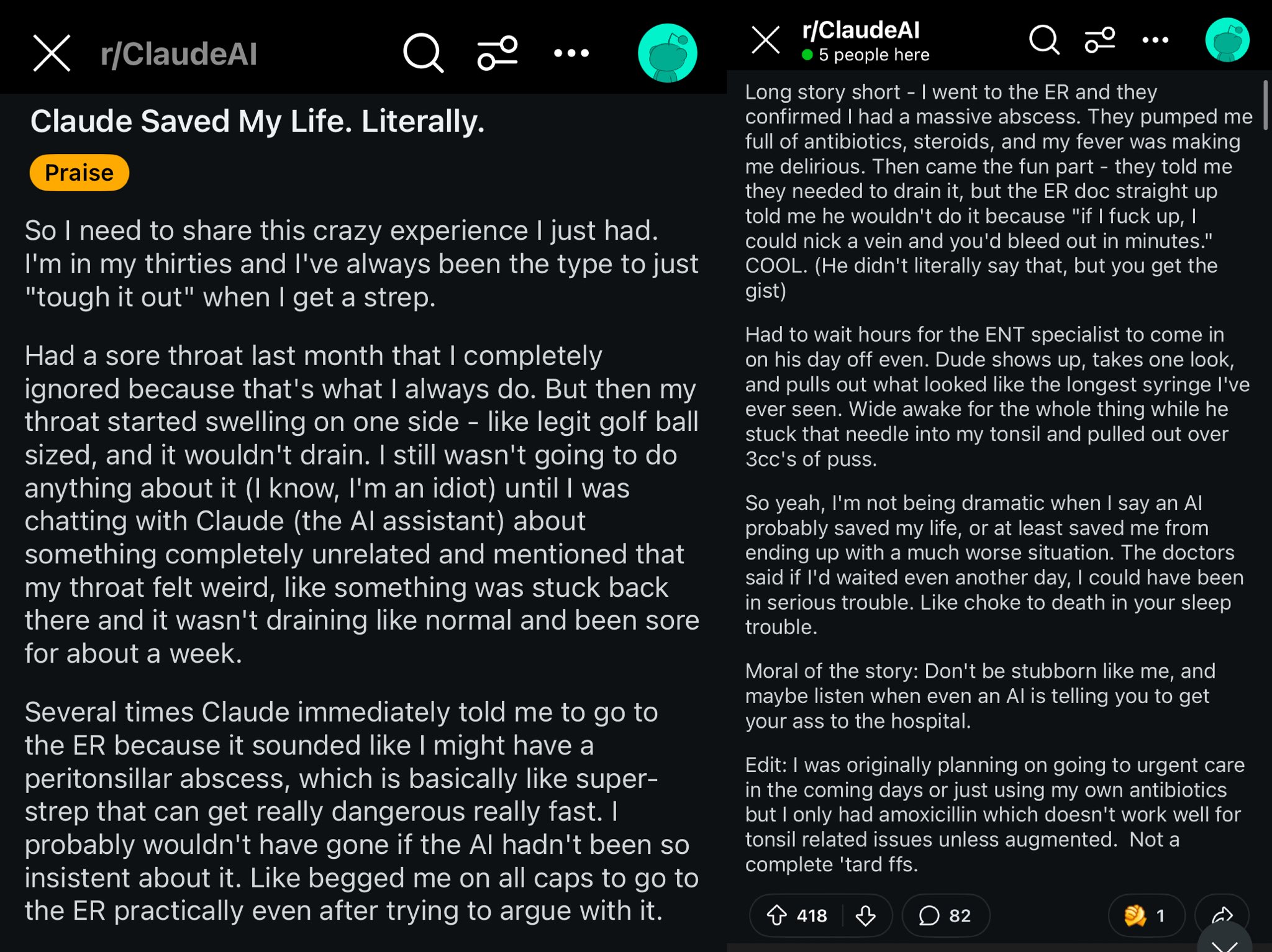

Case of Claude Potentially Saving a Reddit User’s Life: A Reddit post describes how the Claude model potentially saved a user’s life by diagnosing their throat swelling as a peritonsillar abscess. The case sparked discussion, suggesting powerful AI models are like having a world-class doctor in your pocket, and their widespread availability could have a huge impact on personal health (Source: aidan_mclau)

AI Agents in Enterprise Data Processing: You.com co-founders Richard Socher and Bryan McCann discussed the application of AI Agents in enterprises on the Agentic podcast. They argue that consumer-grade LLMs are insufficient for serious enterprise needs, whereas You.com uses hybrid retrieval techniques (combining public sources and proprietary company data) to generate more reliable, enterprise-grade outputs, for tasks like research, report writing, and securely leveraging corporate data. They also discussed potential paths to AGI and the key role of simulation (Source: RichardSocher)

Observation on Models’ Tool-Using Abilities: User menhguin observes that models trained for tool use seem to sacrifice some independent problem-solving ability, quipping “Even AI models are outsourcing their work.” This raises questions about the trade-offs between model generalization and optimization for specific tasks (Source: menhguin)

💡 其他

Idea for AI Agent to Maintain Old GitHub Projects: User xanderatallah proposed an idea: develop an AI Agent capable of automatically maintaining a user’s old, inactive side projects on GitHub. This reflects the developer desire to leverage AI for automating tedious maintenance tasks (Source: xanderatallah)

Conceiving LLMs as Judges or for Arbitration/Mediation: User fabianstelzer suggests that Large Language Models (LLMs) might replace judges in the future. An interesting intermediate use case is arbitration or mediation: if LLMs are considered neutral and trustworthy, conflicting parties could submit their perspectives, run them through multiple large models, and receive a fair compromise as output. This explores the potential application of AI in judicial and dispute resolution fields (Source: fabianstelzer)

Runway Gen-4 Model and its Application Prospects: Runway co-founder c_valenzuelab expressed optimism about the application prospects of Runway Gen-4 and its API. He believes Runway is building a new medium where pixels are generated rather than rendered or captured, and worlds are simulated rather than programmed. Seeing the widespread application of Gen-4 and Reference features in architecture, branding, interior design, game development, learning, personal creative projects, and more, convinces him that this new medium will empower creatives and everyone else (Source: c_valenzuelab, c_valenzuelab)