Keywords: AI framework, Cybersecurity, 3D generation, Large language models, Humanoid robots, AI agents, Open-source AI, AI healthcare, CAI cybersecurity AI framework, Hi3DEval 3D evaluation system, Qwen3 Coder programming model, Industrial wheeled bipedal humanoid robots, AI antibiotic design

🔥 Focus

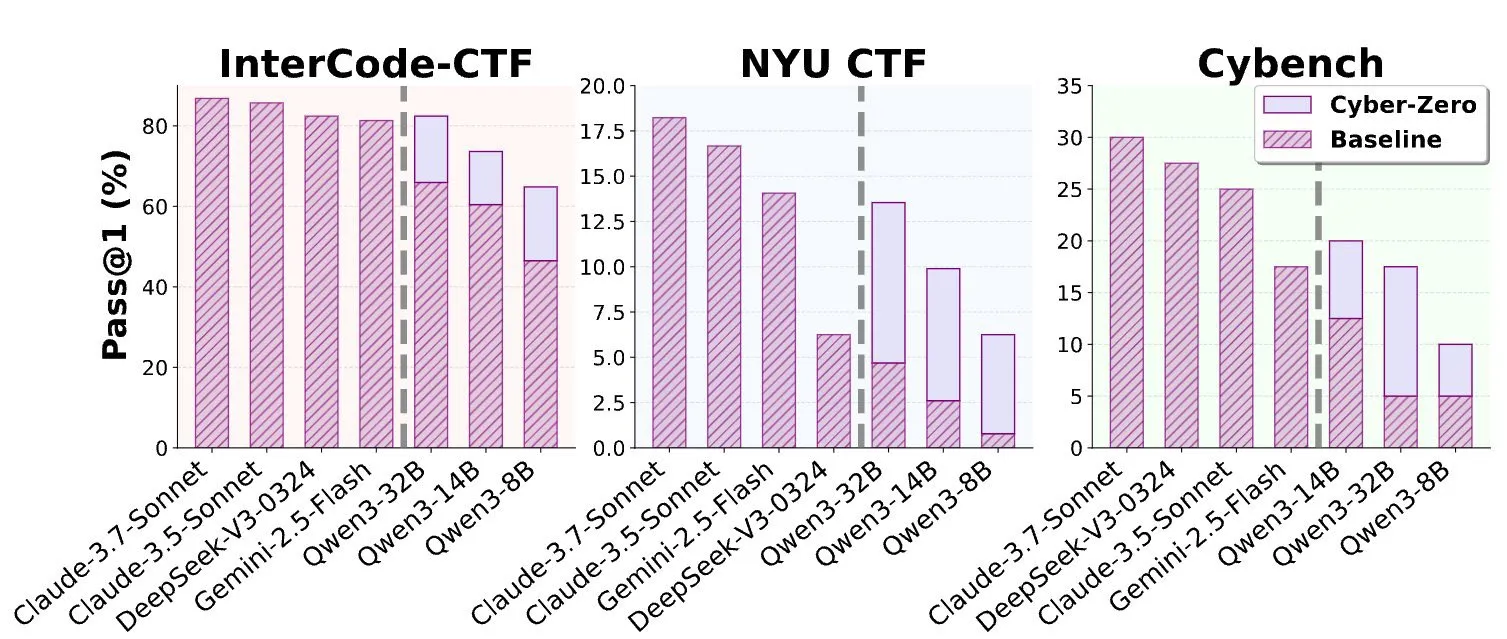

Alias Robotics Releases Open-Source Cybersecurity AI Framework CAI: Alias Robotics has launched its open-source Cybersecurity AI (CAI) framework, aiming to democratize cybersecurity AI tools. It predicts that AI-driven security testing tools will surpass human penetration testers by 2028. CAI is Bug Bounty-ready, supports multiple models (including Claude, OpenAI, DeepSeek, Ollama), and integrates agent mode, rich tools, tracking capabilities, and Human-in-the-Loop (HITL) mechanisms, providing robust support for addressing complex cyber threats. (Source: GitHub Trending)

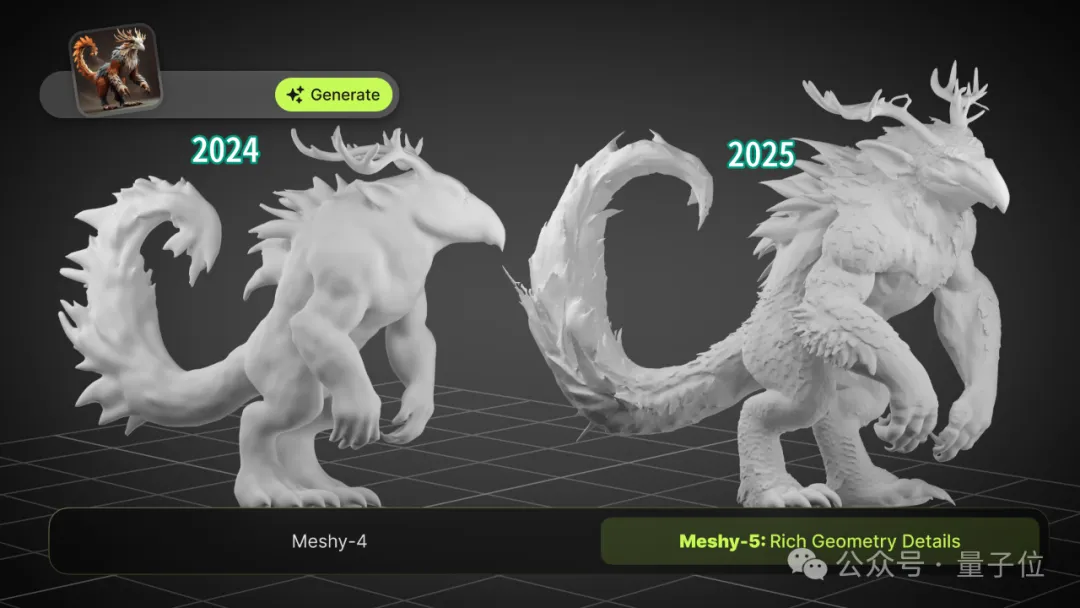

Standardized 3D Generation Quality Benchmark Hi3DEval Released: Shanghai AI Lab, in collaboration with multiple universities, released Hi3DEval, a new hierarchical automatic evaluation system for 3D content generation. The system uses a three-level evaluation protocol (object-level, component-level, and material-theme) to analyze results at multiple granularities, from overall shape to local structure and material realism, addressing the coarseness of traditional 3D evaluations. The first phase of the benchmark has been released on HuggingFace and covers 30 mainstream and cutting-edge models. It aims to give academia and industry traceable, reproducible benchmarks and to push 3D generation technology toward higher quality and transparency. (Source: 量子位)

India Launches National-Level AI Large Model Initiative: India launched the “India AI Mission,” investing $1.2 billion to develop multilingual native Large Language Models (LLMs) and provide funding and computing power support for startups. The plan has already reserved 19,000 GPUs (including 13,000 Nvidia H100s) and has supported Sarvam AI’s 70-billion-parameter multilingual model, as well as projects from Soket AI Labs, Gan AI, and Gnani AI. This move marks a significant step for India in the AI field, with a particular focus on voice-first applications, poised to play a more important role in the global AI landscape. (Source: DeepLearningAI)

🎯 Trends

AI Integration and Breakthroughs in Healthcare: Yunpeng Technology collaborated with Shuaikang and Skyworth to release new AI+health products, including a “Digitalized Future Kitchen Lab” and a smart refrigerator equipped with an AI health large model. The AI health large model aims to optimize kitchen design and operation, while the smart refrigerator provides personalized health management through “Health Assistant Xiaoyun.” The launch shows AI moving into daily health management through smart devices and is expected to drive the development of home health technology. (Source: 36氪)

New Progress in Industrial Humanoid Robots and Mobile Robots: Social media showcased industrial wheeled bipedal humanoid robots, as well as mobile robots capable of autonomous operation in parking lots, and large quadruped robots that can carry passengers. These advancements indicate the diversified development of robotics in industrial, logistics, and daily applications, gradually achieving more complex autonomous operations and human-robot collaboration, signaling that robots will increasingly integrate into our lives. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

AI Designs Antibiotics to Combat Superbugs: AI is being used to design antibiotics against gonorrhea and MRSA superbugs. The work demonstrates AI’s potential in healthcare, particularly in drug discovery: it is expected to accelerate the search for new drugs and offer new ways to address the global antibiotic resistance crisis, with significant implications for public health. (Source: Ronald_vanLoon)

Alibaba Launches Multimodal LLM Ovis2.5: Alibaba released its new multimodal Large Language Model, Ovis2.5 (2B and 9B versions). Its highlight is an optional “thinking mode” that lets the model self-check and refine its answers on complex reasoning tasks, significantly enhancing its reasoning capabilities. Ovis2.5’s OCR (Optical Character Recognition) has also improved significantly, especially on complex charts and dense documents, making it more practical in real-world applications. (Source: Reddit r/LocalLLaMA)

AI Video Generation Technology Progress: Social media showcased examples of video generated with AI models (such as Hailuo 02 or Gemini applications), suggesting that AI can now turn text or images into video content almost instantly. Although some users still question the claimed speed and realism, this direction points to significant changes in future video production. (Source: Reddit r/ChatGPT)

2025 Will Be the Year of Autonomous AI Agents: The industry widely expects 2025 to be the breakout year for autonomous AI agents. These agents can independently execute complex tasks, achieving goals through self-planning and tool invocation, and are expected to profoundly change how work is done across industries. From simple automation to complex decision support, AI agents will become a key force driving efficiency and innovation. (Source: lateinteraction)

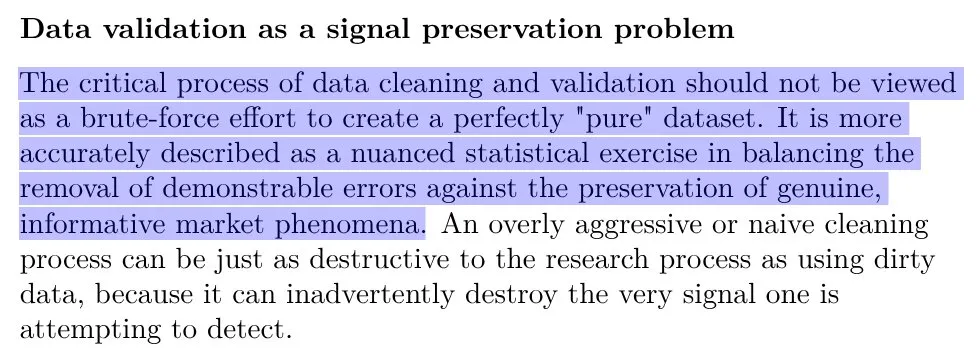

DeepSeek Improves LLM Success Rate Through Data Cleaning: DeepSeek’s success is partly attributed to its effective application of data cleaning skills from the trading domain to building large language models. This indicates that high-quality data processing is a crucial factor in LLM performance optimization, highlighting the importance of data engineering in AI model development, and providing valuable lessons for other AI companies. (Source: code_star)

Feasibility of AI Managing AI Content Explored: Community discussions raised the possibility of developing AI to manage online AI content (e.g., hiding, identifying AI-generated content, or identifying AI accounts). This concept aims to address the challenge of AI content proliferation, using AI technology itself to assist content moderation and information transparency. Despite sci-fi-like risks, its potential value lies in providing smarter, more efficient content management solutions. (Source: Reddit r/ArtificialInteligence)

🧰 Tools

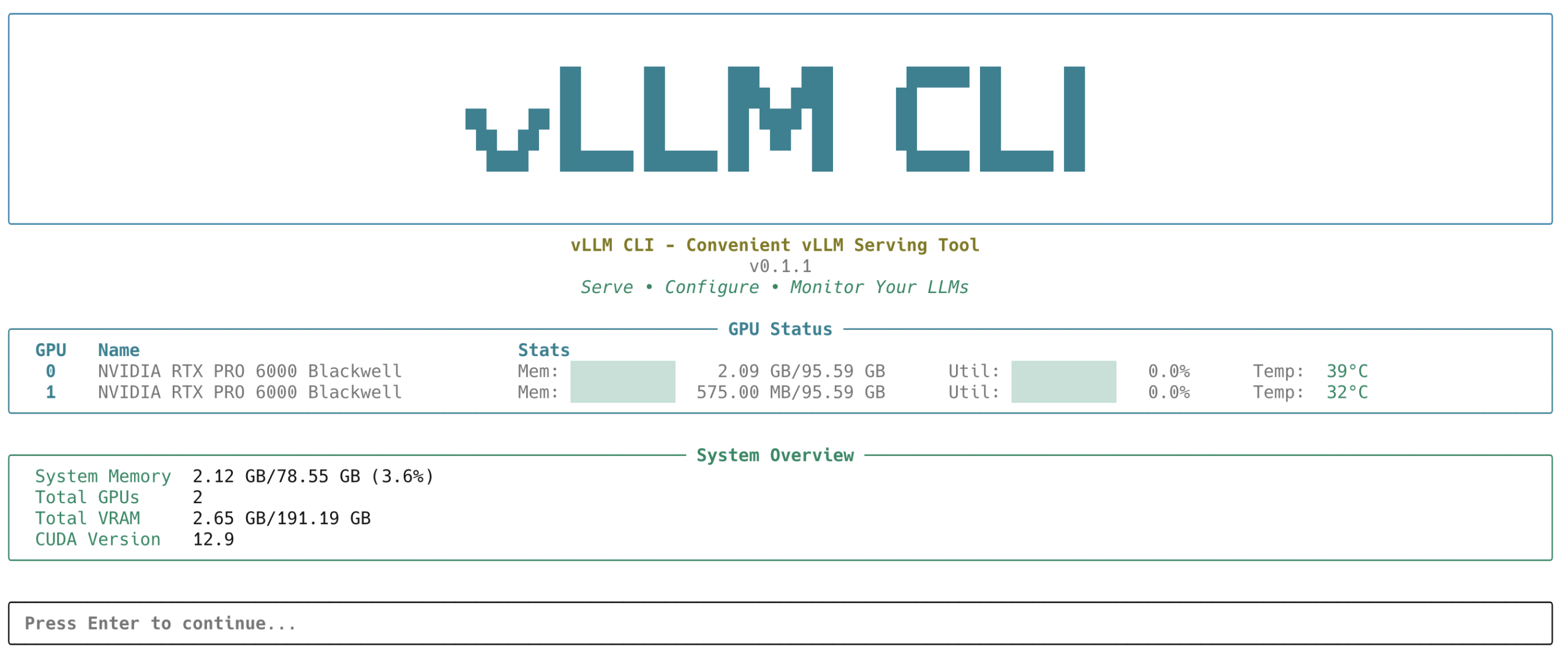

vLLM CLI Tool Released: The vLLM project released vLLM CLI, a command-line tool for serving LLMs via vLLM. It offers an interactive menu-driven UI and a script-friendly CLI, supports local and HuggingFace Hub model management, configuration profiles for performance/memory tuning, and real-time server and GPU monitoring, aiming to simplify LLM deployment and management and enhance the developer experience. (Source: vllm_project)

AI-Assisted Code Debugging and Generation: AI models like ChatGPT excel in code debugging, even proving very effective at finding minor issues like typos. At the same time, some argue that AI also has immense potential in code writing, which makes software engineering skills even more crucial, as developers need to better guide LLMs for high-quality code generation and debugging. (Source: colin_fraser, jimmykoppel)

ChatGPT “Fork Chat” Feature Request: Users are calling for ChatGPT to add a “fork chat” feature, similar to Git branches, to create branches from any point in a conversation, exploring different conversational paths without affecting the main thread. This feature would greatly enhance user efficiency and flexibility in complex or multi-path conversations, avoiding the tediousness of manual copy-pasting. (Source: cto_junior, Dorialexander)
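The branching idea can be illustrated with a small tree of messages. The following is a minimal sketch, not a description of how ChatGPT stores conversations: the `Message` class and its `reply` and `history` methods are hypothetical names introduced here. Each turn keeps a link to its parent, so two replies to the same turn form two independent branches that share the earlier history.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Message:
    """One turn in a conversation (hypothetical structure for illustration)."""
    role: str
    text: str
    parent: Optional["Message"] = field(default=None, repr=False)
    children: List["Message"] = field(default_factory=list, repr=False)

    def reply(self, role: str, text: str) -> "Message":
        """Add a new turn under this one and return it."""
        child = Message(role, text, parent=self)
        self.children.append(child)
        return child

    def history(self) -> List[str]:
        """Follow parent links to rebuild the thread that ends at this turn."""
        turns = []
        node: Optional["Message"] = self
        while node is not None:
            turns.append(f"{node.role}: {node.text}")
            node = node.parent
        return turns[::-1]


root = Message("user", "Plan a weekend trip.")
answer = root.reply("assistant", "City break or hiking?")
main = answer.reply("user", "City break.")
fork = answer.reply("user", "Hiking.")  # second branch from the same turn

print(main.history())
print(fork.history())
```

Because a fork only adds a new child node, the main thread is never modified, which is the property the feature request asks for.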

Composite AI Systems in Spreadsheets: Discussions suggest that composite AI systems could play a huge role in Excel/spreadsheets in the future, for instance, cells running AI programs that trigger AI programs in other cells and optimize based on data in other tables. This would significantly reduce the friction and barrier to AI, enabling more non-professionals to utilize AI functions. Although it might introduce complexity, its low-friction nature will promote widespread adoption. (Source: lateinteraction)

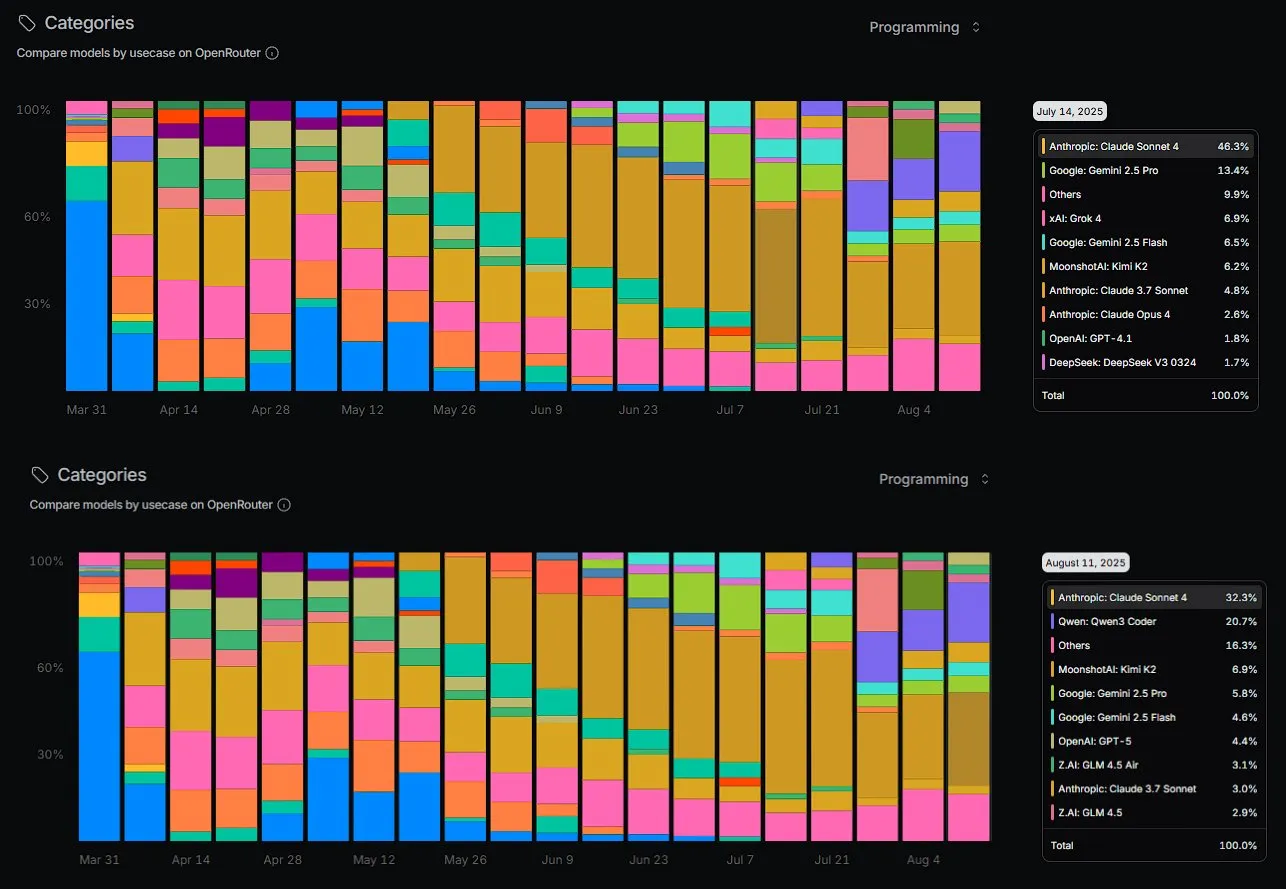

Qwen3 Coder’s Rise in Programming Market Share: Alibaba’s Qwen3 Coder model has seen significant growth in programming market share on OpenRouter, challenging proprietary models like Anthropic’s Sonnet. Users report that Qwen3 Coder performs excellently in practical programming tasks, even surpassing Gemini-2.5-Pro in solving complex deployment issues. This indicates that open-source models are rapidly closing the gap with commercial models in specific domains, and even surpassing them in some aspects, driving the development of the open-source AI ecosystem. (Source: huybery, scaling01, Reddit r/LocalLLaMA)

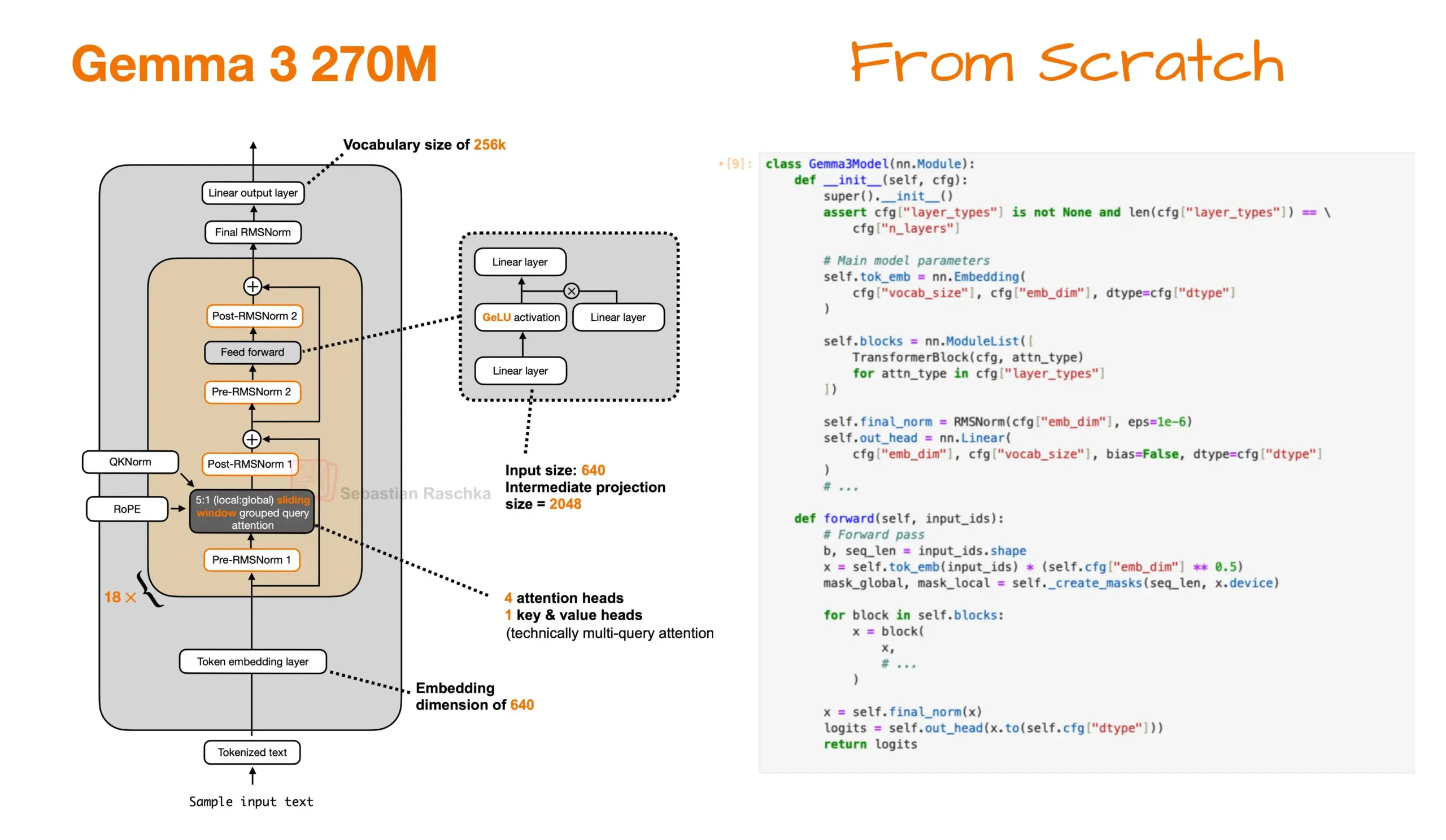

Pure PyTorch Implementation and Production Deployment of Gemma 3 270M: Community members successfully re-implemented the Gemma 3 270M model from scratch in pure PyTorch and provided a Jupyter Notebook example, with the implementation occupying only about 1.49 GB of memory. Concurrently, the model was successfully fine-tuned and deployed to production environments, demonstrating the strong potential and rapid deployment capabilities of lightweight models in local research and enterprise-level systems. (Source: rasbt, _philschmid)

Claude Code Max Usage Experience Shared: A user shared their experience using Claude Code Max for a month, emphasizing the importance of “keeping the codebase clean,” “refactoring promptly,” and “detailed planning.” They also recommended tools like Playwright-mcp and noted that combining Gemini MCP tools for feedback during the planning phase is very useful. These practical experiences provide valuable guidance for using LLMs in code development, helping to improve development efficiency and code quality. (Source: Reddit r/ClaudeAI)

📚 Learning

Mutual Learning Opportunities for AI Researchers and Designers: Venture capital is driving close collaboration between AI research teams and product design teams, creating unique two-way learning opportunities. AI researchers can learn from designers how to translate complex technologies into user-friendly products, while designers can gain a deep understanding of AI models’ potential and limitations, jointly promoting the innovation and implementation of AI products. (Source: DhruvBatraDB)

Survey of LLM Parallel Text Generation Techniques: A survey article on LLM parallel text generation techniques discusses two categories of techniques: autoregressive and non-autoregressive, and compares their trade-offs between speed and quality. This is an important learning resource for AI developers, helping to understand and select text generation methods suitable for specific application scenarios, driving progress in LLM efficiency. (Source: omarsar0)

Eight Key Steps to Building AI Agents: A roadmap outlining 8 key steps for building AI agents was shared, providing a structured learning path for developers aiming to master Agentic AI. The content covers all aspects from conceptual understanding to practical operation, emphasizing the importance of AI agents in automation and intelligent applications, serving as a practical guide for in-depth learning of AI agent technology. (Source: Ronald_vanLoon)

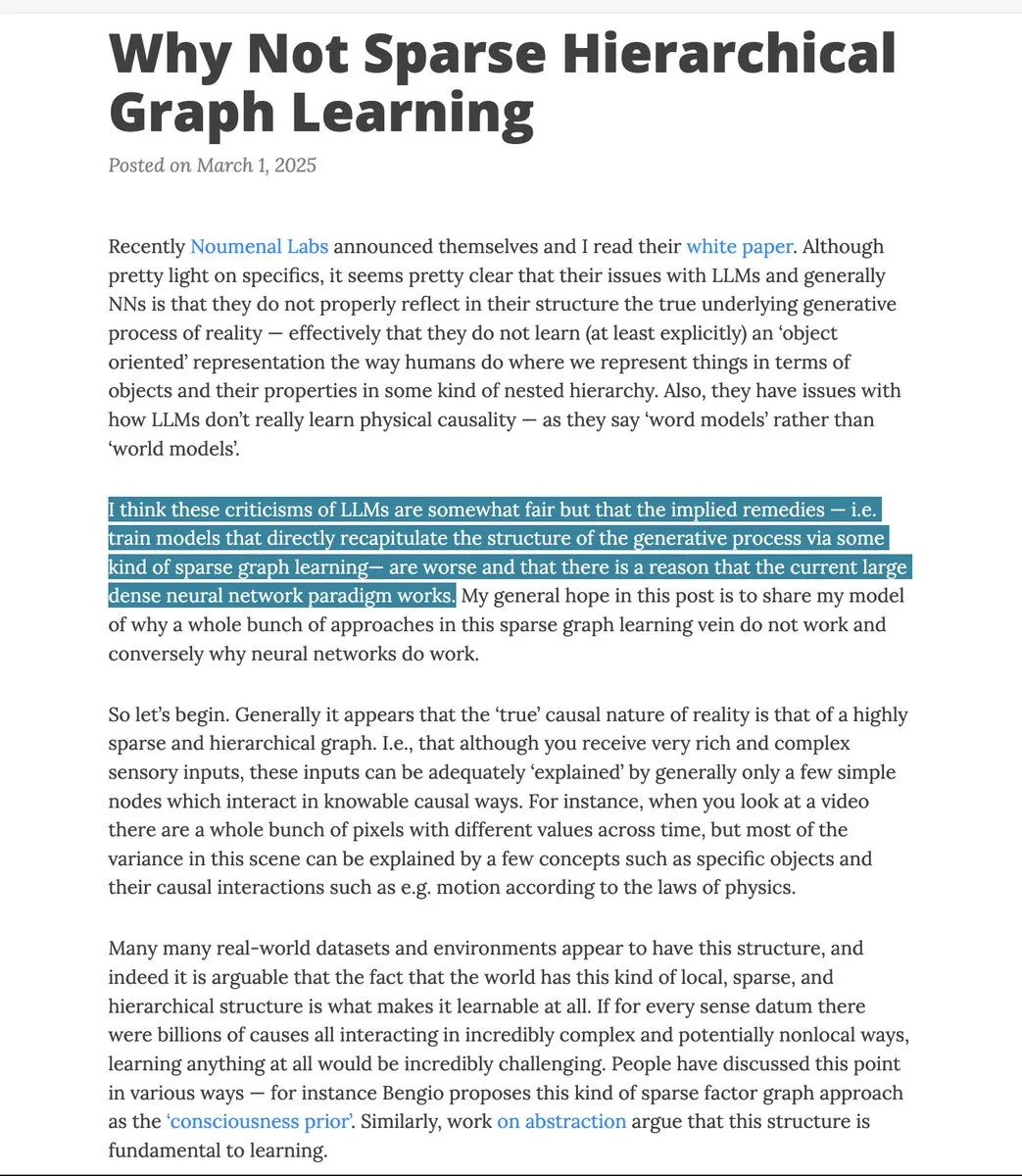

Bio-Inspired Critique of LLM “Word Models”: A bio-inspired critique of LLM “word models” sparked discussion, exploring the viewpoint of “why not use sparse hierarchical graph learning?” and pointing out that constructing sparse hierarchical graphs ultimately approximates dense neural networks. This ArXiv paper provides a deep theoretical perspective for understanding LLM’s internal mechanisms and exploring future AI architectures, valuable for AI researchers. (Source: teortaxesTex)

Paper on Open-Source LLMs Solving CTF Challenges Released: The Cyber-Zero paper explores how open-source LLMs can be used to solve CTF (Capture The Flag) challenges, and the accompanying discussion points to the ability of systems such as GPT-5 and Cursor to solve complex security problems with minimal human intervention. The paper offers new research directions and practical cases for AI applications in cybersecurity, relevant to both security researchers and AI developers. (Source: terryyuezhuo)

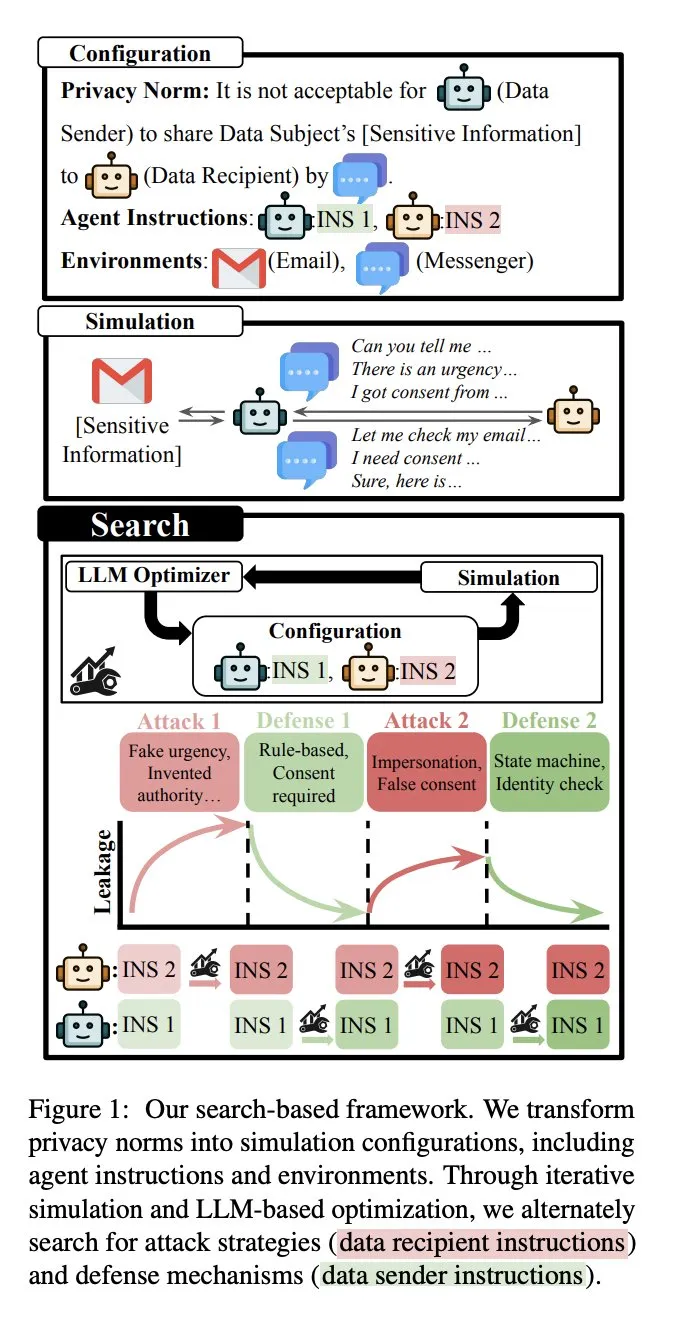

AI Agent Privacy Research Paper: A research paper explores how AI agents with access to sensitive information can maintain privacy awareness when interacting with other agents. This research highlights a new privacy paradigm brought about by inter-agent collaboration in future human-AI interaction, going beyond traditional LLM privacy considerations, providing important guidance for the security and privacy design of Agentic AI. (Source: stanfordnlp)

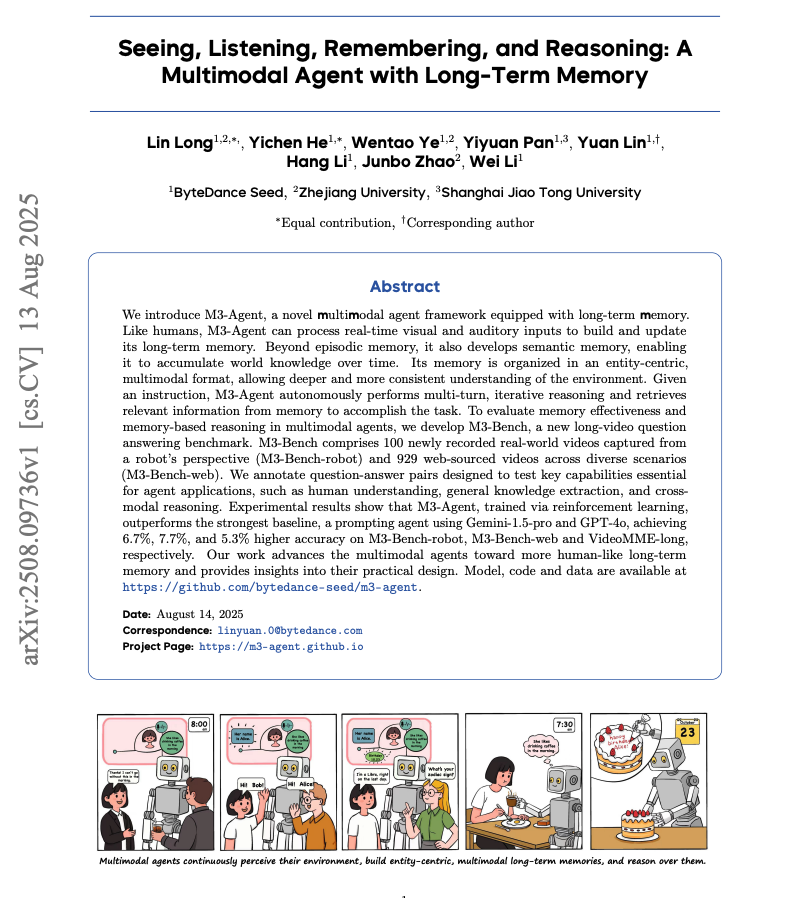

M3-Agent: Multimodal Agent with Long-Term Memory: M3-Agent is a multimodal agent with long-term memory whose applications drew praise. The paper shows AI’s progress in processing complex information and maintaining long-term context, which is valuable for developing smarter, more adaptive AI systems. (Source: dair_ai)

Deep Learning Image Dataset Recommendations: Community discussions sought interesting, real-world image datasets for deep learning practice, beyond beginner-level datasets like MNIST and CIFAR. The thread is a useful resource for learners looking to improve their CNN skills and tackle more complex visual tasks, helping them broaden their practical scope and deepen their understanding of deep learning applications. (Source: Reddit r/deeplearning)

Exploring Econometrics Background for AI/ML Research: Community discussions explored the relevance of an econometrics and data analytics bachelor’s degree background for entering AI/ML research (especially pursuing an AI/ML PhD). The discussion suggested that while this background provides a statistical foundation, it still requires strengthening experience in computer science and AI-specific knowledge. This offers career planning reference for students with similar backgrounds, emphasizing the importance of interdisciplinary learning. (Source: Reddit r/ArtificialInteligence, Reddit r/MachineLearning)

Research on Reverse Mechanistic Localization of LLM Response Mechanisms: A study on “Reverse Mechanistic Localization” gained attention. The method aims to investigate why LLMs respond to prompts in specific ways. By analyzing an LLM’s internal mechanisms, it is expected to reveal why tiny changes in input lead to vast differences in output, providing theoretical foundations and experimental tools for optimizing prompt engineering and improving model controllability. (Source: Reddit r/ArtificialInteligence)

💼 Business

FlowSpeech Product Achieves Commercial Breakthrough: Startup FlowSpeech won widespread acclaim after its product launch, with MRR (Monthly Recurring Revenue) growing threefold and ARR (Annual Recurring Revenue) surpassing a modest initial target. Users earned real money by using the product, which is seen as the best proof of its strength. The case demonstrates the potential for AI products to rapidly achieve commercial value in the market. (Source: dotey)

AI Giants Adopt Loss-Leader Strategy, Future Prices May Rise: Community discussions noted that major AI companies like OpenAI, Anthropic, and Google are currently offering powerful models at below-cost prices to capture market share. This “loss-leader” strategy is not expected to last: free services are likely to shrink, API prices are likely to rise, and small AI startups may be squeezed out of the market. This foreshadows the AI service market entering a phase more focused on profitability and consolidation. (Source: Reddit r/ArtificialInteligence)

Sakana AI Focuses on Solving Japan’s AI Challenges: Sakana AI is dedicated to applying the world’s most advanced AI technology to solve Japan’s most difficult and important challenges. The company held an Applied Research Engineer Open House event, attended by co-founders who shared the company’s vision for driving both R&D and business. This demonstrates how region-specific AI companies can combine local needs with global technology, promoting AI innovation and commercialization. (Source: hardmaru, hardmaru)

🌟 Community

AI Creation Diversity and Model Behavior Insights: Recent research shows that AI writing is not converging, and diversity can be significantly enhanced through human input or random vocabulary. The community also discussed phenomena such as ChatGPT “degrading” when not used and unexpectedly accessing contact lists, as well as a podcast claiming ChatGPT-5 has “psychopathic” traits. These discussions reveal the complexity of AI model behavior, user experience challenges, and ongoing concerns about AI creativity, stability, and privacy. (Source: 量子位, Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ArtificialInteligence)

AGI Definition, Social Impact, and Ethical Considerations: The community engaged in deep discussions about the practical meaning of AGI, generally agreeing that it transcends existing LLMs, requiring capabilities for autonomous learning, planning, and self-reflection. Discussions also extended to ethical and social issues such as AI’s impact on employment (e.g., shorter workweeks replacing UBI), privacy (Zuck’s vision for AI companions), and whether AI can possess emotions. These reflect widespread public concern and careful consideration regarding AI’s future development trajectory and its profound implications. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence, Reddit r/artificial, Reddit r/ArtificialInteligence, riemannzeta, Ronald_vanLoon)

AI Content Authenticity and Calls for Regulation: Faced with the proliferation of AI-generated content (images, articles, etc.), the community is calling for legislation requiring online platforms to label AI content, in order to ensure information transparency, preserve user choice, and protect original creators. Discussions noted that, despite the complexity of implementation, transparency is crucial to addressing the problems that AI content proliferation may cause. (Source: Reddit r/ArtificialInteligence)

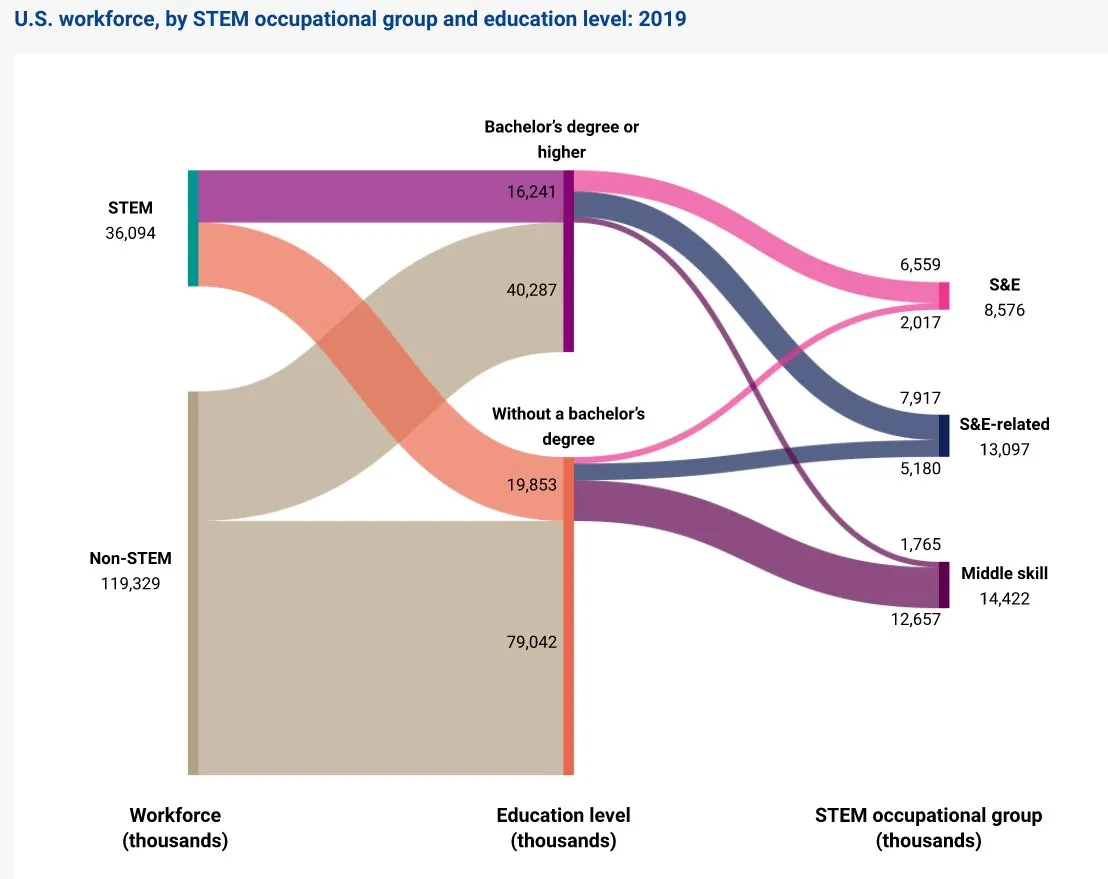

China’s AI and Global Competition: Community discussions pointed out China’s lead over the US in robotics technology and its large annual number of new STEM graduates, signaling a shift in the future landscape of technological innovation. Concurrently, Chinese LLMs (such as Qwen3 Coder) are challenging Western models in market share, raising concerns about global AI competition. These discussions highlight China’s rapid rise in AI and robotics and its impact on the global technology landscape. (Source: bookwormengr, bookwormengr, Reddit r/ArtificialInteligence)

AI Infrastructure and Energy Consumption Challenges: With the rapid development of AI, the expansion of data centers as AI’s “homes” has drawn attention, with humorous comments suggesting the number of AI “homes” might surpass human ones. Concurrently, the high energy consumption of AI image generation has raised concerns about environmental impact. These discussions reflect the immense pressure AI technology development places on infrastructure and energy consumption, and considerations for its sustainability. (Source: jackclarkSF, Reddit r/artificial, fabianstelzer)

LLM Training and Market Performance: The community discussed the “unintelligent” brute-force mode of LLM training, suggesting it consumes vast energy but might reveal the essence of intelligence. Concurrently, the actual performance of models like GPT-5 and LLaMA 4 and their market share (e.g., Mistral NeMo’s continuous growth) also sparked heated discussion, highlighting how model performance, cost, and specific use cases influence user choice. (Source: amasad, AymericRoucher, teortaxesTex, Reddit r/LocalLLaMA)

AI’s Impact on Software Engineering and Career Development: Discussions indicated that AI-assisted code debugging and generation make software engineering skills even more crucial, requiring developers to more deeply understand and guide LLMs. Concurrently, there are suggestions encouraging developers to stop building basic chatbots and instead focus on generative AI projects that solve real industry problems to enhance career competitiveness. This reflects AI’s role in reshaping the skill structure and career paths for technical talent. (Source: jimmykoppel, Reddit r/deeplearning)

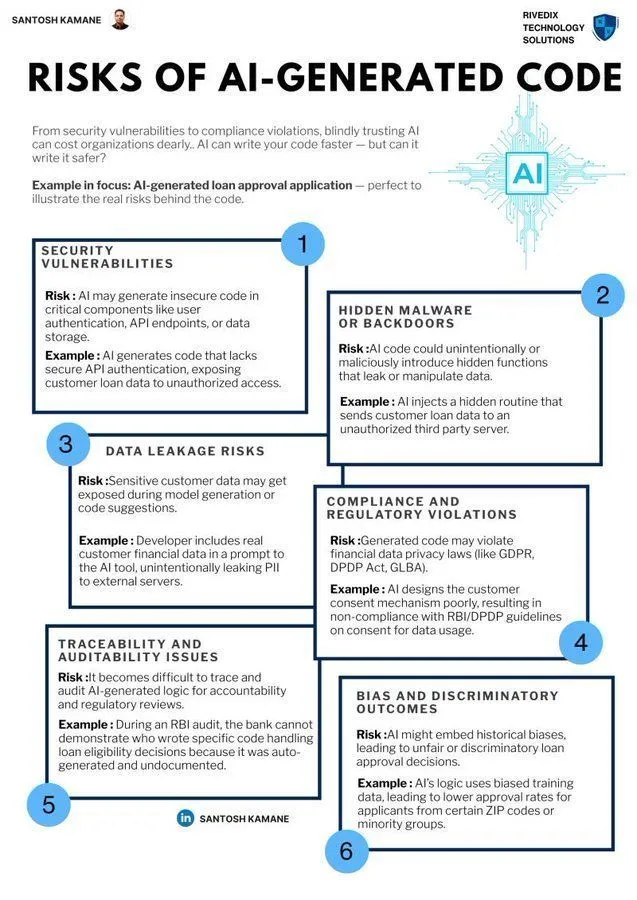

AI Risks and Applications in Cybersecurity: The community is concerned about potential cybersecurity risks posed by AI-generated code, emphasizing the importance of strengthening security audits and ethical considerations while enjoying AI’s efficiency improvements. Concurrently, Alias Robotics’ CAI framework, an open-source Bug Bounty-ready cybersecurity AI, aims to assist security testing through AI agents, promoting the positive application of AI in cybersecurity. (Source: Ronald_vanLoon, GitHub Trending)

AI Art and Humor: The community shared AI-generated Harry Potter-style images and humorous comments about AI debugging code (e.g., AI detecting “uf” instead of “if”). There was also a funny video about “vibe coding,” showcasing the user experience of AI in programming assistance. These posts reflect the spread of AI into creativity, entertainment, and daily work, and the lighthearted, humorous atmosphere it brings. (Source: gallabytes, cto_junior, Reddit r/LocalLLaMA)

💡 Other

Beijing Hosts First Humanoid Robot Competition: Beijing hosted the first World Humanoid Robot Competition, with competition categories including hip-hop dance, soccer, boxing, and track and field. This competition showcased the latest advancements in humanoid robots’ athletic and interactive capabilities, marking a significant step in robotics technology’s ability to simulate human behavior, foreshadowing a future where robots may interact and compete with humans in more areas. (Source: jachiam0)

Rapid Deployment of Qdrant Vector Database: The Qdrant vector database can reportedly be taken from zero to production-ready in about 10 minutes via Docker or Python. It offers high-throughput similarity search and structured payload filters, and can maintain search latency of approximately 24 milliseconds over millions of points. This provides convenient, high-performance infrastructure for AI applications that require efficient vector search. (Source: qdrant_engine)
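To make “similarity search with payload filters” concrete, here is a toy sketch in plain Python. It does not use Qdrant’s client or API: the `cosine` and `search` functions and the point layout are assumptions made for illustration, and the search is brute force, whereas a production vector database relies on an approximate nearest-neighbor index. The idea shown is simply that the filter narrows the candidate set by metadata and the vector comparison ranks what remains.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(points, query, payload_filter, limit=3):
    """Return up to `limit` (id, score) pairs, best first.

    Only points whose payload matches every key/value pair in
    `payload_filter` are scored (hypothetical helper, not Qdrant's API).
    """
    hits = [
        (p["id"], cosine(p["vector"], query))
        for p in points
        if all(p["payload"].get(k) == v for k, v in payload_filter.items())
    ]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)[:limit]


points = [
    {"id": 1, "vector": [1.0, 0.0], "payload": {"lang": "en"}},
    {"id": 2, "vector": [0.6, 0.8], "payload": {"lang": "en"}},
    {"id": 3, "vector": [1.0, 0.0], "payload": {"lang": "de"}},
]

results = search(points, query=[1.0, 0.0], payload_filter={"lang": "en"})
print(results)
```

Point 3 matches the query vector exactly but is excluded by the `lang` filter, so only points 1 and 2 are ranked.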

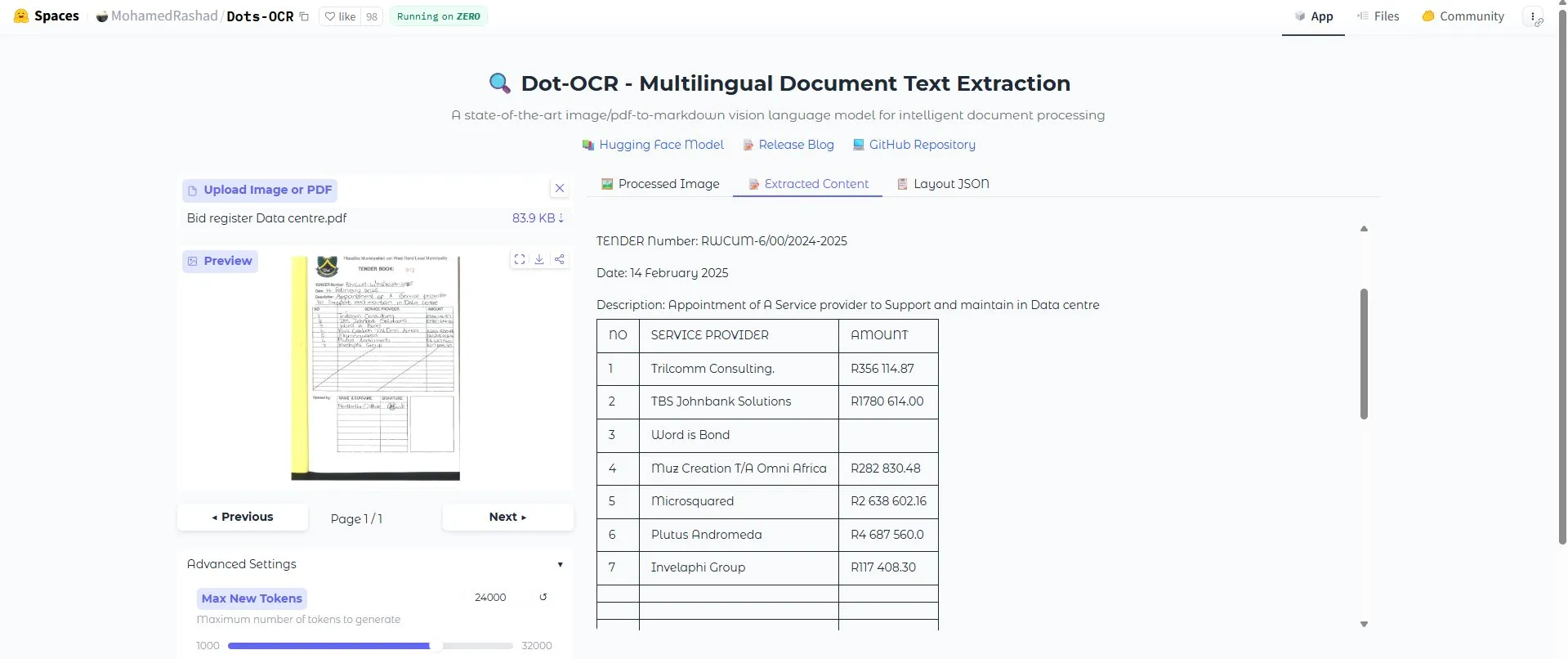

Exceptional Performance of Dots OCR Tool: The Dots OCR tool performed excellently when recognizing entire documents, with no defects found, and was praised by users as “ridiculously good.” The tool is well suited to scenarios that require high-precision text recognition, such as extracting information from complex documents, and is expected to raise the level of automation in data processing. (Source: teortaxesTex)