Keywords: AI model, OCR technology, AI infrastructure, Large language model, AI agent, Multimodal model, AI energy consumption optimization, AI open source ecosystem, DeepSeek OCR model, Gemini 3 multimodal reasoning, Emu3.5 world model, Kimi Linear hybrid attention architecture, AgentFold memory folding technology

AI Editor’s Picks

🔥 Spotlight

DeepSeek OCR Model: A New Breakthrough in AI Memory and Energy Efficiency Optimization : DeepSeek has released an OCR model whose core innovation lies in how it processes and stores information. The model compresses text by rendering it as images, which a vision encoder represents with far fewer tokens than the original text. This significantly reduces the compute required and could help curb AI’s growing carbon footprint. The method mimics human memory through tiered compression, blurring less important content to save space while remaining efficient. The research has drawn attention from experts such as Andrej Karpathy, who suggested that images may be better suited than text as LLM input, opening new directions for AI memory and agent applications. (Source: MIT Technology Review)

Tech Giants Continue Heavy Investment in AI Infrastructure : Tech giants including Microsoft, Meta, and Google announced in their latest earnings reports that they will keep significantly increasing AI infrastructure spending. Meta expects capital expenditures of $70-72 billion this year, with further expansion planned for next year. Microsoft’s Intelligent Cloud revenue surpassed $30 billion for the first time, Azure and other cloud services grew 40%, and its AI capacity is projected to increase by 80%. Google CEO Pichai emphasized that the company’s full-stack approach to AI is generating strong momentum and previewed the upcoming release of Gemini 3. These investments reflect the giants’ optimism about future AI breakthroughs and their determination to seize market opportunities. (Source: Wired, Reddit r/artificial)

Anthropic Discovers LLMs Possess Limited “Introspection Capabilities” : Anthropic’s latest research indicates that Large Language Models (LLMs) such as Claude show some “genuine introspective awareness,” although the capability is currently limited and unreliable. The study examines whether LLMs can actually recognize their own internal states or merely produce plausible-sounding answers in response to prompts. The finding suggests that LLMs may have more access to their own internal processes than expected, which has significant implications for understanding and building more transparent AI systems. (Source: Anthropic, Reddit r/artificial)

Extropic Unveils New Thermodynamic Computing Hardware TSU, Claiming AI Energy Breakthrough : Extropic has introduced a new computing device, the TSU (Thermodynamic Sampling Unit), built around the “probabilistic bit” (p-bit), which fluctuates between 0 and 1 with a programmable probability. The company claims up to 10,000x better energy efficiency on AI workloads. It has unveiled the X0 chip, the XTR0 desktop test kit, and the Z1 commercial-grade TSU, and open-sourced THRML, a software library for simulating TSUs on GPUs. Although the benchmark behind the efficiency claim has been questioned, the approach targets the widening gap between AI compute demand and available energy and could bring a paradigm shift to AI computing. (Source: TheRundownAI, pmddomingos, op7418)
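
In software, a p-bit can be imitated as a biased coin whose bias is set by a programmable input. The sketch below is only an illustration: the logistic mapping from bias to probability is an assumption borrowed from the general p-bit literature, the function names are hypothetical, and it neither reproduces Extropic’s hardware nor uses Extropic’s open-source library.

```python
import math
import random


def pbit_probability(bias: float) -> float:
    """Probability that an idealized p-bit reads 1.

    Assumption: a logistic function of a programmable bias, a common
    modeling choice for p-bits, not Extropic's specification.
    """
    return 1.0 / (1.0 + math.exp(-bias))


def sample_pbit(bias: float, n: int, seed: int = 0) -> list[int]:
    """Draw n independent 0/1 readings from a simulated p-bit."""
    rng = random.Random(seed)
    p = pbit_probability(bias)
    return [1 if rng.random() < p else 0 for _ in range(n)]


if __name__ == "__main__":
    readings = sample_pbit(bias=1.0, n=10_000, seed=42)
    print(f"target P(1) = {pbit_probability(1.0):.3f}")
    print(f"observed    = {sum(readings) / len(readings):.3f}")
```

Hardware p-bits get this randomness from physical noise for almost no energy, which is the basis of the efficiency claim; a software simulation like this one has to pay for every pseudo-random draw.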

🎯 Trends

Google Previews Gemini 3 Release, Reinforcing AI Model Family Specialization Trend : Google CEO Sundar Pichai previewed during an earnings call that the new flagship model, Gemini 3, will be released later this year. He emphasized that Google’s family of AI models is moving towards specialization, with Gemini focusing on multimodal reasoning, Veo for video generation, Genie for interactive agents, and Nano for on-device intelligence. This strategy indicates that Google is shifting from a single general-purpose model to an interconnected system architecture optimized for different scenarios, aiming to improve reliability, reduce latency, and support edge deployment. (Source: Reddit r/ArtificialInteligence, shlomifruchter)

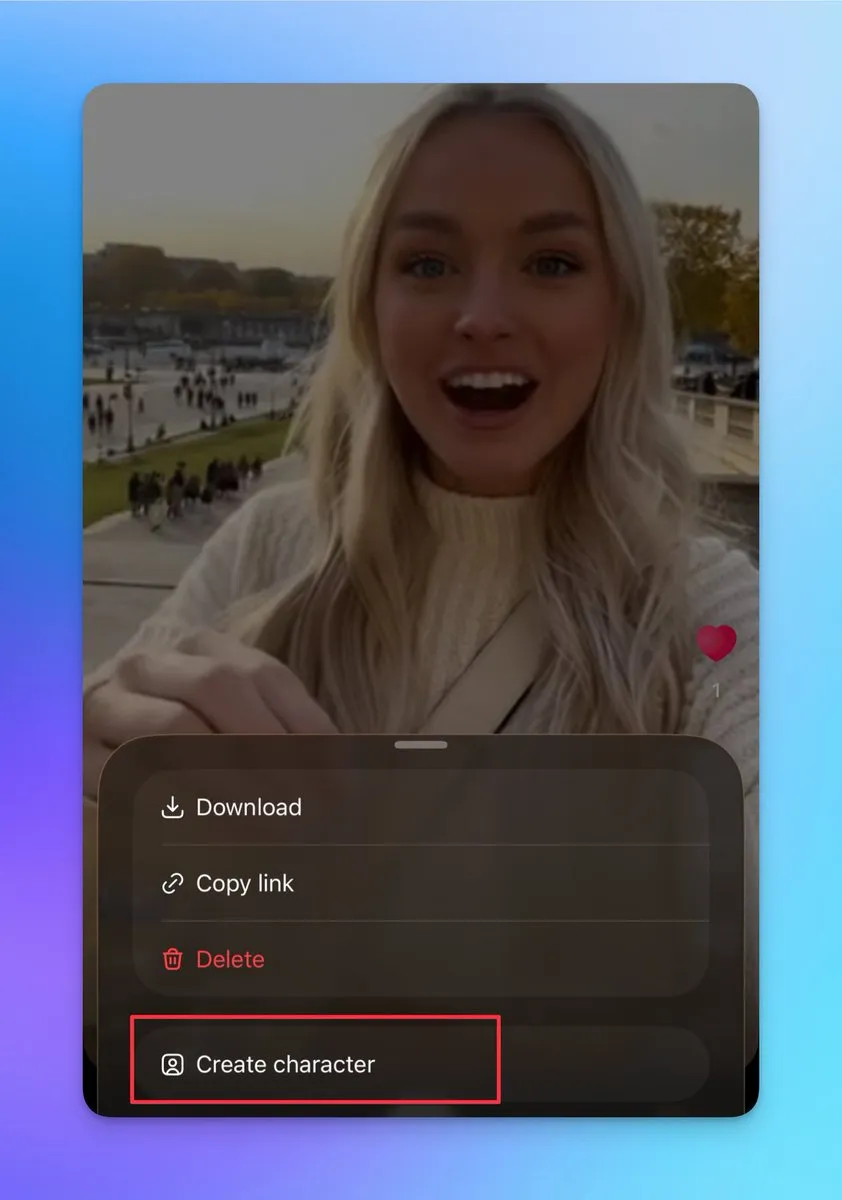

Sora 2 Adds Custom Character and Video Stitching Features, Supporting Continuous Long Video Creation : Sora 2 has rolled out several important features, including custom characters: real photos cannot be uploaded, but a character can be created from a figure in an existing video. Reusing a character keeps it consistent across clips, which is crucial for building continuous long videos. In addition, the drafts page now supports publishing several videos stitched together, and the search page has added leaderboards of creators featured in live-action appearances and of remix creators. These updates significantly enhance Sora 2’s creative flexibility and user interaction and could substantially boost its daily active users. (Source: op7418, billpeeb, op7418)

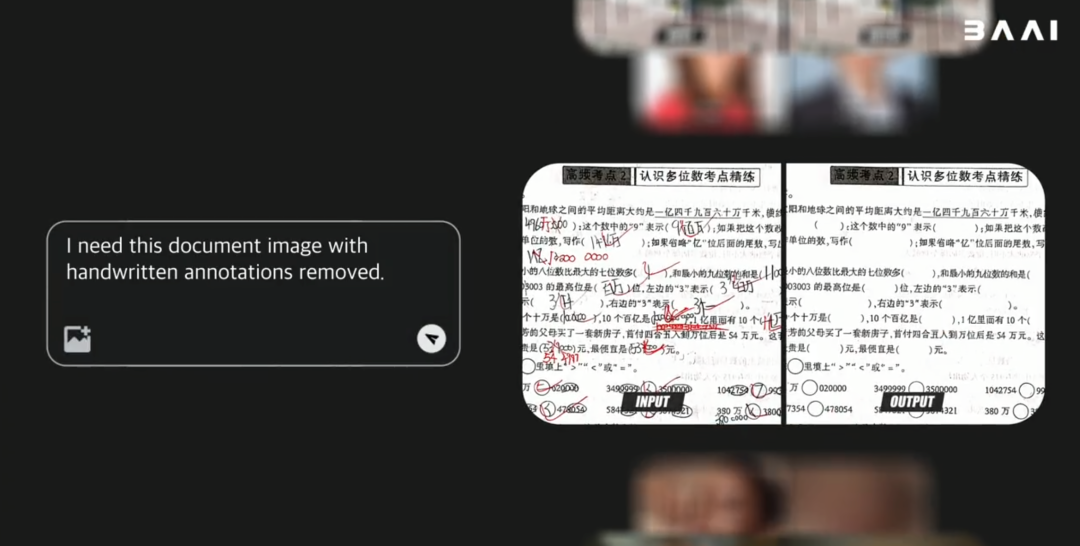

BAAI Releases Open-Source Multimodal World Model Emu3.5, Outperforming Gemini-2.5-Flash-Image : The Beijing Academy of Artificial Intelligence (BAAI) has released Emu3.5, a 34B-parameter open-source multimodal world model. Built on a decoder-only Transformer, the model handles image, text, and video tasks within a single framework by casting them all as next-state prediction. Pre-trained on massive amounts of internet video, Emu3.5 learns spatio-temporal continuity and causality. It excels at visual storytelling, visual guidance, image editing, world exploration, and embodied manipulation, with markedly improved physical realism, matching or even surpassing Gemini-2.5-Flash-Image (Nano Banana). (Source: 36氪)

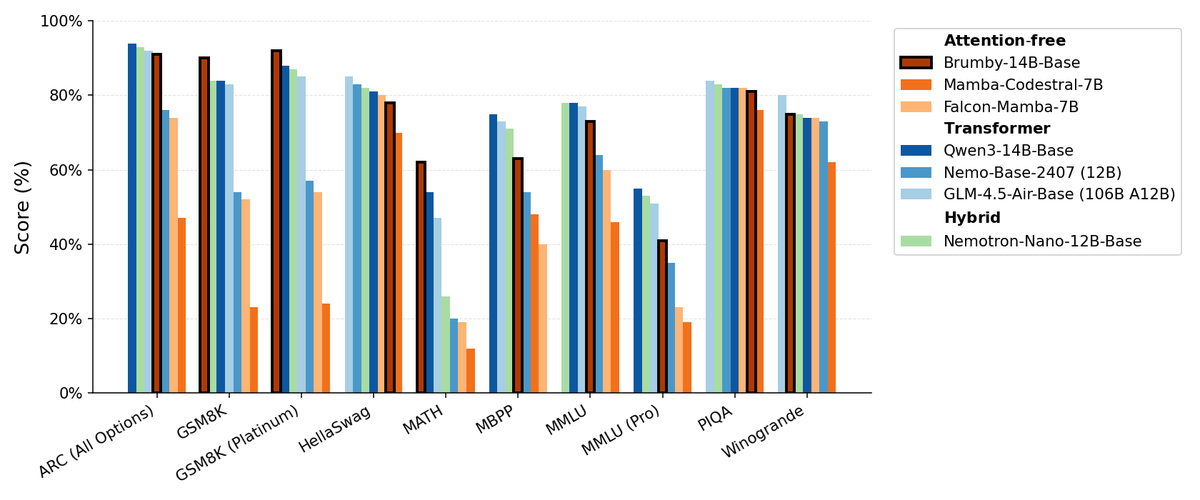

Moonshot AI Releases Kimi Linear Model, Featuring Hybrid Linear Attention Architecture : Moonshot AI has launched Kimi Linear, a 48B-parameter model with 3B active parameters and a 1M-token context length, built on a hybrid linear attention architecture centered on Kimi Delta Attention (KDA). KDA refines Gated DeltaNet with finer-grained gating, significantly improving performance and hardware efficiency on long-context tasks: it cuts KV cache requirements by up to 75% and raises decoding throughput by up to 6x. The model outperforms full-attention baselines across various benchmarks, and two versions have been open-sourced on Hugging Face. (Source: Reddit r/LocalLLaMA, Reddit r/LocalLLaMA, bigeagle_xd)
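
The “up to 75%” KV-cache figure is what you get if only one in four layers keeps a per-token key/value cache, because linear-attention layers carry a fixed-size state instead. The back-of-the-envelope calculation below assumes that 3:1 ratio and a made-up layer and head configuration. It ignores the small constant state of the linear layers and any cache compression used in Kimi Linear’s full-attention layers.

```python
def kv_cache_gib(full_attn_layers: int, context_len: int, kv_heads: int,
                 head_dim: int, bytes_per_value: int = 2) -> float:
    """Per-token KV-cache size in GiB, counting full-attention layers only.

    Linear-attention layers keep a fixed-size recurrent state instead of
    caching keys and values per token, so they are left out. The leading 2
    accounts for storing both keys and values; 2 bytes/value assumes bf16.
    """
    total_bytes = 2 * full_attn_layers * context_len * kv_heads * head_dim * bytes_per_value
    return total_bytes / 2**30


# Hypothetical configuration, chosen only for round numbers.
LAYERS, CONTEXT, KV_HEADS, HEAD_DIM = 48, 2**20, 8, 128

full = kv_cache_gib(LAYERS, CONTEXT, KV_HEADS, HEAD_DIM)         # every layer uses full attention
hybrid = kv_cache_gib(LAYERS // 4, CONTEXT, KV_HEADS, HEAD_DIM)  # assumed 3 linear : 1 full
print(f"full attention: {full:.0f} GiB, hybrid: {hybrid:.0f} GiB, "
      f"reduction: {1 - hybrid / full:.0%}")
```

Because only the full-attention share determines the cache size, the saving follows the layer ratio directly: a 1:1 mix would only halve the cache.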

MiniMax M2 Model Adheres to Full Attention Architecture, Emphasizing Production Deployment Challenges : Haohai Sun, pre-training lead for MiniMax M2, explained why M2 still uses full attention rather than linear or sparse attention. He noted that although efficient attention saves compute in theory, in real-world industrial systems it still struggles to beat full attention on performance, speed, and cost. The main bottlenecks are limited evaluation systems, the high cost of experimenting on complex reasoning tasks, and immature infrastructure. MiniMax believes that, in pursuing long-context capability, improving data quality, evaluation systems, and infrastructure matters more than simply swapping the attention architecture. (Source: Reddit r/LocalLLaMA, ClementDelangue)

Manifest AI Releases Brumby-14B-Base, Exploring Attention-Free Foundation Models : Manifest AI has released Brumby-14B-Base, which it claims is the strongest attention-free foundation model to date: a 14-billion-parameter model trained for just $4,000. Its performance is comparable to Transformer and hybrid models of similar scale, which supporters read as a sign that the Transformer era may be slowly drawing to a close. The release opens new possibilities for model architecture, shows particular promise for lowering training costs, and challenges the dominance of conventional attention mechanisms. (Source: ClementDelangue, teortaxesTex)

New Nemotron Model Based on Qwen3 32B Optimizes LLM Response Quality : NVIDIA has released Qwen3-Nemotron-32B-RLBFF, a Large Language Model fine-tuned from Qwen/Qwen3-32B to improve the quality of the responses LLMs generate in their default thinking mode. The research model significantly outperforms the original Qwen3-32B on benchmarks such as Arena Hard V2, WildBench, and MT Bench, and performs similarly to DeepSeek R1 and o3-mini at less than 5% of their inference cost, advancing both performance and efficiency. (Source: Reddit r/LocalLLaMA)

Mamba Architecture Still Holds Advantages in Long-Context Processing, But Parallel Training is Limited : The Mamba architecture excels in handling long contexts (millions of tokens), avoiding the Transformer’s memory explosion problem. However, its main limitation lies in the difficulty of parallelization during training, which hinders its widespread adoption in larger-scale applications. Despite the existence of various linear mixers and hybrid architectures, Mamba’s parallel training challenge remains a critical bottleneck for its large-scale deployment. (Source: Reddit r/MachineLearning)
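
At its core, a Mamba-style state space layer applies a linear recurrence over the sequence, which is why its memory does not grow with context length. The scalar sketch below is a deliberate simplification: `decay` stands in for the input-dependent (selective) state transition and `inputs` for the projected input. Real Mamba uses vector states, discretized parameters, and a hardware-aware scan kernel that this loop does not reproduce.

```python
def linear_recurrence(decay: list[float], inputs: list[float]) -> list[float]:
    """Scalar recurrence h_t = decay_t * h_{t-1} + input_t, with h_{-1} = 0.

    Only one state value is carried forward, so memory stays constant no
    matter how long the sequence is. Each step needs the previous state,
    which is why this naive loop runs strictly in sequence.
    """
    h = 0.0
    states = []
    for a_t, x_t in zip(decay, inputs):
        h = a_t * h + x_t
        states.append(h)
    return states


print(linear_recurrence([0.5, 0.5, 0.5], [1.0, 1.0, 1.0]))  # [1.0, 1.5, 1.75]
```

A Transformer instead keeps keys and values for every past token, so its memory grows linearly with context; the single carried state above is the trade that avoids that growth.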

NVIDIA Releases ARC, Rubin, Omniverse DSX, and More, Strengthening AI Infrastructure Leadership : At the GTC conference, NVIDIA made a series of significant announcements: NVIDIA ARC (Aerial RAN Computer) for 6G development, in collaboration with Nokia; Rubin, a third-generation rack-scale supercomputer; Omniverse DSX, a blueprint for designing and operating gigawatt-scale AI factories in virtual environments; and NVIDIA Drive Hyperion, a standardized architecture for robotaxis, in partnership with Uber. These releases show NVIDIA repositioning itself from a chip manufacturer to an architect of national infrastructure, emphasizing “Made in America” and the energy race as it addresses the challenges of the AI and 6G eras. (Source: TheTuringPost, TheTuringPost)

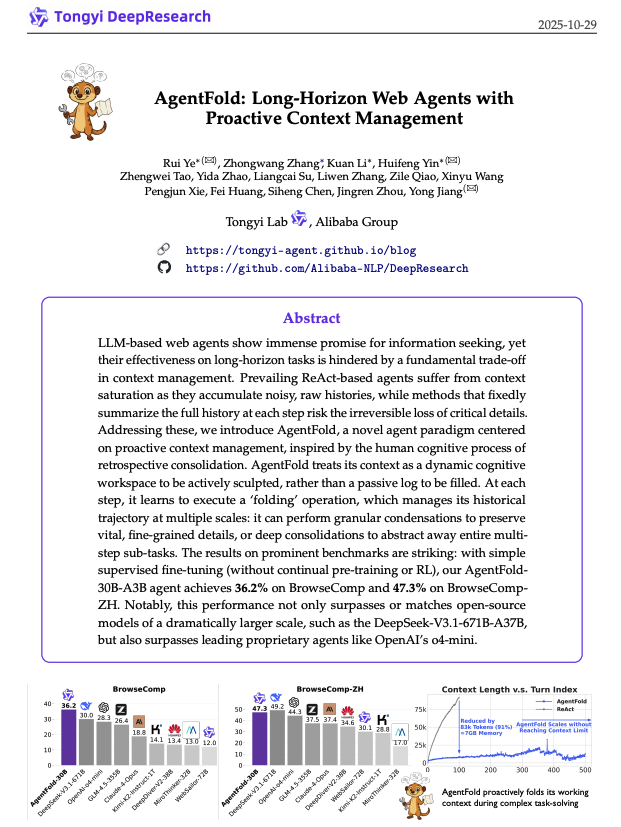

AgentFold: Adaptive Context Management to Enhance Web Agent Efficiency : AgentFold proposes a novel context engineering technique that compresses an agent’s past thoughts into structured memory through “Memory Folding,” dynamically managing the cognitive workspace. This method addresses the context overload issue of traditional ReAct agents and performs excellently in benchmarks like BrowseComp, surpassing large models such as DeepSeek-V3.1-671B. AgentFold-30B achieves competitive performance with a smaller number of parameters, significantly improving the development and deployment efficiency of web agents. (Source: omarsar0)

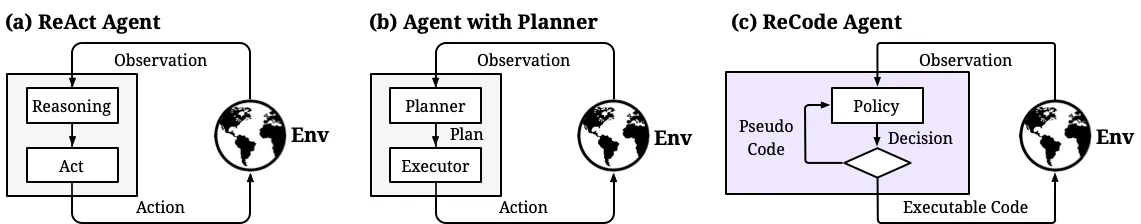
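
The folding idea can be pictured as a workspace that keeps the latest steps verbatim and condenses older ones into a running memory. This is a hypothetical sketch, not AgentFold’s implementation: the class and method names are invented, and simple truncation stands in for the LLM call that, in AgentFold, decides how past steps are folded.

```python
from dataclasses import dataclass, field


@dataclass
class FoldingContext:
    """Toy agent workspace: recent steps stay verbatim, older ones are folded."""
    keep_recent: int = 2
    folded_memory: list[str] = field(default_factory=list)
    recent_steps: list[str] = field(default_factory=list)

    def add_step(self, step: str) -> None:
        self.recent_steps.append(step)
        # Once the verbatim window overflows, fold the oldest steps into memory.
        while len(self.recent_steps) > self.keep_recent:
            oldest = self.recent_steps.pop(0)
            self.folded_memory.append(self.summarize(oldest))

    @staticmethod
    def summarize(step: str, max_chars: int = 20) -> str:
        # Placeholder for the model-generated fold: plain truncation.
        return step if len(step) <= max_chars else step[:max_chars] + "..."

    def render(self) -> str:
        """Build the context string the agent would see on its next turn."""
        lines = ["[memory] " + s for s in self.folded_memory]
        lines += ["[step] " + s for s in self.recent_steps]
        return "\n".join(lines)


ctx = FoldingContext(keep_recent=2)
for step in ["search for paper A", "open result 3", "read abstract"]:
    ctx.add_step(step)
print(ctx.render())
```

The context stays bounded by `keep_recent` verbatim steps plus compact memory entries, which is the property that keeps a long-running web agent from overflowing its context the way an ever-growing ReAct transcript does.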

ReCode: Unifying Planning and Action for Dynamic Control of AI Agent Decision Granularity : ReCode (Recursive Code Generation) is a new Parameter-Efficient Fine-Tuning (PEFT) method that unifies AI agents’ planning and action representations by treating high-level plans as recursive functions decomposable into fine-grained actions. This method achieves SOTA performance with only 0.02% of training parameters and reduces GPU memory footprint. ReCode enables agents to dynamically adapt to different decision granularities, learn hierarchical decision-making, and significantly outperforms traditional methods in efficiency and data utilization, marking an important step towards human-like reasoning. (Source: dotey, ZhihuFrontier)

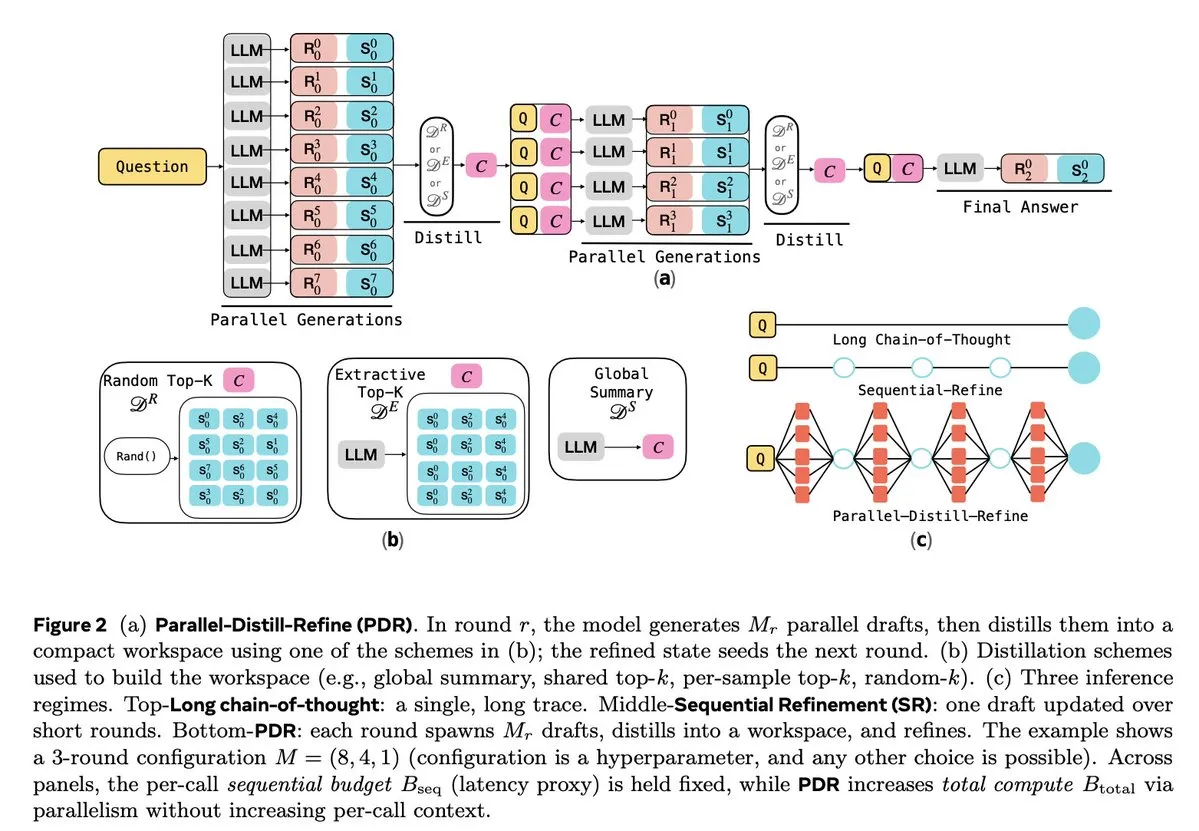
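
The core representational idea (plans as functions that expand into finer-grained steps until only primitive actions remain) can be sketched in a few lines. This is an illustrative toy under stated assumptions: in ReCode the LLM itself generates the plan functions as code and decides when to stop decomposing, whereas here the task tree is hand-written and all names are invented.

```python
from typing import Callable, Union

# A plan node is either a primitive action (a string) or a plan: a function
# returning a list of finer-grained nodes.
Node = Union[str, Callable[[], list]]


def expand(node: Node) -> list[str]:
    """Recursively decompose a plan into executable primitive actions, depth-first."""
    if isinstance(node, str):
        return [node]  # primitive action: no further decomposition
    actions: list[str] = []
    for child in node():
        actions.extend(expand(child))
    return actions


# Hypothetical task tree; in ReCode the model would write these functions.
def make_tea() -> list:
    return [boil_water, "put tea bag in cup", "pour water into cup"]


def boil_water() -> list:
    return ["fill kettle", "switch on kettle"]


print(expand(make_tea))
```

Granularity is decided by where the tree bottoms out: replacing `boil_water` with the string "boil water" yields a coarser action sequence without any change to `expand`.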

LLM Inference and Reinforcement Learning Optimization : Multiple studies aim to improve the inference efficiency and reliability of LLMs. Parallel-Distill-Refine (PDR) reduces the cost and latency of complex reasoning tasks by generating drafts in parallel and then distilling and refining them. Flawed-Aware Policy Optimization (FAPO) introduces a reward-penalty mechanism that corrects flawed reasoning patterns, making reasoning more reliable. The PairUni framework balances understanding and generation in multimodal LLMs through paired training and the Pair-GPRO optimization algorithm. PM4GRPO applies process mining to strengthen the reasoning of policy models through reasoning-aware GRPO reinforcement learning. The Fortytwo protocol uses distributed peer-ranking consensus to achieve strong performance in AI swarm inference and high resistance to adversarial prompts. (Source: NandoDF, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers)
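
PM4GRPO builds on GRPO, whose central trick is to score each sampled response relative to the other responses to the same prompt instead of using a learned value model. Below is a minimal sketch of that group-relative advantage. The binary rewards are made up, the population standard deviation is one of several choices implementations make, and the full objective (clipped probability ratio, KL penalty) and each paper’s modifications are omitted.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: normalize each reward by its own group's statistics.

    Responses better than the group average get positive advantages and are
    reinforced; worse ones get negative advantages. eps guards against a
    zero standard deviation when every reward in the group is identical.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Four sampled answers to one prompt, scored 1 (correct) or 0 (wrong).
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

When all responses in a group receive the same reward, every advantage is zero and the group contributes no learning signal, which is why the difficulty of sampled prompts matters in GRPO-style training.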

🧰 Tools

Tencent Open-Sources WeKnora: An LLM-Driven Document Understanding and Retrieval Framework : Tencent has open-sourced WeKnora, an LLM-based document understanding and semantic retrieval framework designed specifically for handling complex, heterogeneous documents. It adopts a modular architecture, combining multimodal preprocessing, semantic vector indexing, intelligent retrieval, and LLM inference, following the RAG paradigm to deliver high-quality, context-aware answers by integrating relevant document chunks and model inference. WeKnora supports various document formats, embedding models, and retrieval strategies, and provides a user-friendly Web interface and API, supporting local deployment and private cloud to ensure data sovereignty. (Source: GitHub Trending)
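
The retrieve-then-generate loop at the heart of the RAG paradigm can be reduced to a toy: rank chunks by similarity to the query embedding, then pack the best ones into the prompt. In this sketch, tiny hand-made 3-D vectors stand in for a real embedding model and an in-memory dict stands in for the vector index. None of these functions are WeKnora’s API; they only illustrate the retrieval step.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def top_k_chunks(query_vec: list[float], chunk_vecs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k chunk texts most similar to the query embedding."""
    ranked = sorted(chunk_vecs, key=lambda text: cosine(query_vec, chunk_vecs[text]), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Ground the LLM's answer in the retrieved chunks."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy "embeddings" (hypothetical values, not produced by any model).
CHUNKS = {
    "Refunds are processed within 14 days.": [0.9, 0.1, 0.0],
    "Orders ship within 2 business days.": [0.1, 0.9, 0.0],
    "The company was founded in a garage.": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]  # pretend embedding of the question below
print(build_prompt("How long do refunds take?", top_k_chunks(query, CHUNKS, k=2)))
```

A production framework replaces the linear scan in `top_k_chunks` with an approximate nearest-neighbor index and usually adds keyword search and reranking, but the contract stays the same: the query goes in, and the best-matching chunks come out to be placed in the prompt.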

Jan: Open-Source Offline ChatGPT Alternative, Supporting Local LLM Execution : Jan is an open-source ChatGPT alternative that runs 100% offline on the user’s computer. It lets users download and run LLMs from HuggingFace (such as Llama, Gemma, Qwen, and gpt-oss) and also integrates with cloud models from OpenAI and Anthropic. Jan offers custom assistants, an OpenAI-compatible API, and Model Context Protocol (MCP) integration, emphasizing privacy and giving users a fully controlled local AI experience. (Source: GitHub Trending)

Claude Code: Anthropic’s Developer Toolkit and Skills Ecosystem : A growing ecosystem of “skills” and agents, some from Anthropic and some from the community, is significantly boosting developer productivity in Anthropic’s Claude Code. Examples include the Rube MCP connector (connecting Claude to 500+ applications), the Superpowers developer toolkit (offering /brainstorm, /write-plan, and /execute-plan commands), a document suite (handling Word/Excel/PDF files), Theme Factory (automating brand guidelines), and Systematic Debugging (emulating an experienced developer’s debugging process). Through modular design and context awareness, these tools help developers automate workflows, code reviews, refactoring, and bug fixes, and even let non-technical teams build their own small tools. (Source: Reddit r/ClaudeAI, Reddit r/ClaudeAI)

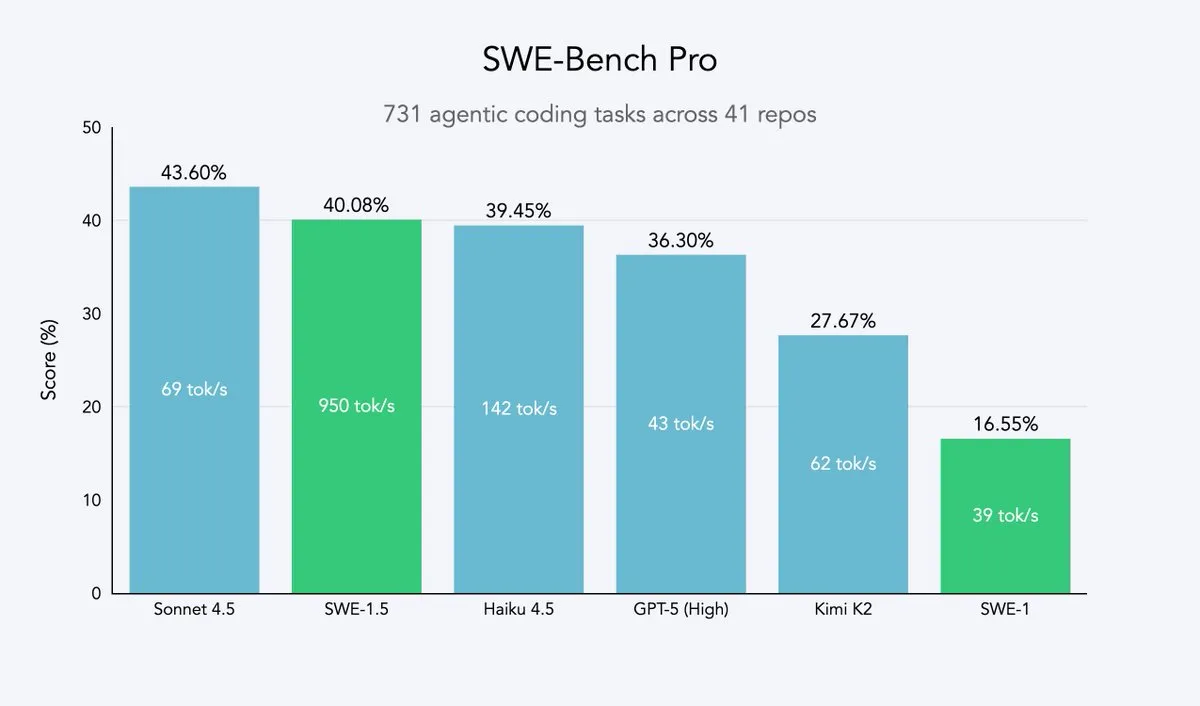

Cursor 2.0 and Windsurf: Code Agents Pursuing Speed and Efficiency : Cursor (with its 2.0 platform) and Windsurf have both released speed-optimized coding agent models. Their strategy is to fine-tune open-source large models (such as Qwen3) with reinforcement learning and serve them on optimized hardware, delivering “medium intelligence at ultra-fast speed.” For code agent companies, this is an efficient way to approach the Pareto frontier of speed and intelligence at minimal resource cost. Windsurf’s SWE-1.5 model sets a new standard for speed while delivering near-SOTA coding performance. (Source: dotey, Smol_AI, VictorTaelin, omarsar0, TheRundownAI)
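
“Approaching the Pareto frontier” has a precise meaning: a model is on the frontier if no other model is at least as fast and at least as capable while being strictly better on one of the two. The helper below computes that set. The model names and numbers are invented for illustration and are not benchmark results.

```python
def pareto_frontier(models: dict[str, tuple[float, float]]) -> list[str]:
    """Return the models not dominated on (speed, score); higher is better for both."""
    frontier = []
    for name, (speed, score) in models.items():
        dominated = any(
            s2 >= speed and q2 >= score and (s2 > speed or q2 > score)
            for other, (s2, q2) in models.items()
            if other != name
        )
        if not dominated:
            frontier.append(name)
    return frontier


# Hypothetical (tokens per second, benchmark score) pairs.
MODELS = {
    "big-slow": (50.0, 0.80),
    "mid-fast": (900.0, 0.70),
    "small-fast": (1000.0, 0.50),
    "mid-slow": (40.0, 0.70),
}
print(pareto_frontier(MODELS))  # ['big-slow', 'mid-fast', 'small-fast']
```

Here "mid-slow" drops out because "big-slow" is both faster and better. A "medium intelligence, ultra-fast" model like "mid-fast" earns a frontier spot without beating anyone on raw score.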

Perplexity Patents: The First AI Patent Research Agent, Empowering IP Intelligence : Perplexity has launched Perplexity Patents, which it bills as the world’s first AI patent research agent, aiming to make IP intelligence accessible to everyone. The tool supports search and research across patents, and Perplexity plans to follow it with Perplexity Scholar, focused on academic research. It should greatly simplify patent retrieval and analysis, giving innovators, lawyers, and researchers efficient, easy-to-use intellectual property intelligence. (Source: AravSrinivas)

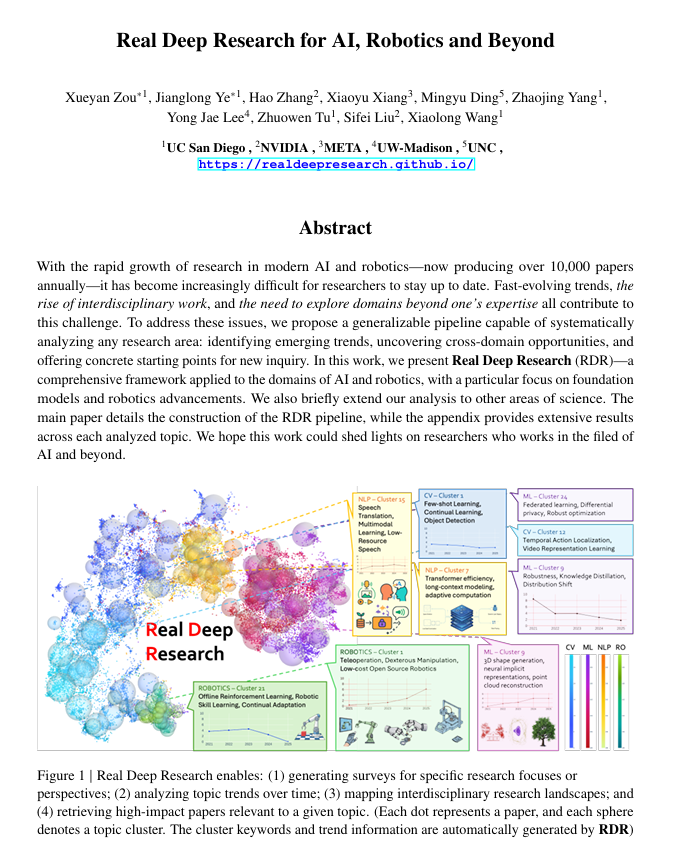

Real Deep Research (RDR): An AI-Driven Framework for In-Depth Scientific Research : Real Deep Research (RDR) is an AI-driven framework designed to help researchers keep pace with rapid advances in modern science. RDR bridges the gap between expert-authored surveys and automated literature mining, offering scalable analysis workflows, trend analysis, and cross-domain insights, and generating structured, high-quality summaries. It serves as a comprehensive research tool that helps researchers see the big picture. (Source: TheTuringPost)

LangSmith Launches No-Code Agent Builder, Empowering Non-Technical Teams to Build Agents : LangChainAI’s LangSmith has launched a no-code Agent Builder that lowers the barrier to building AI agents so that non-technical teams can create them easily. It offers a conversational agent-building UX and built-in memory that lets agents remember and improve over time, and it allows non-technical teams and developers to build agents together, helping drive broader adoption of AI agents. (Source: LangChainAI)

Verdent Integrates MiniMax-M2, Enhancing Coding Capabilities and Efficiency : Verdent now supports the MiniMax-M2 model, bringing users strong coding ability, high-performance agents, and the efficiency of activating only a fraction of the model’s parameters per token. Developers can try MiniMax-M2 for free in VS Code through Verdent for a smarter, faster, and more cost-effective coding experience, bringing M2’s capabilities to a broader developer community. (Source: MiniMax__AI)

Base44 Launches New Builder, Accelerating Concept-to-Application Conversion : Base44 has released its new Builder, marking a fundamental shift in its approach. The new Builder acts more like an expert developer, capable of conducting research before building, gathering context from multiple sources, intelligently debugging, and making informed architectural decisions. This update aims to increase the speed of converting concepts into functional applications tenfold, significantly boosting development efficiency. (Source: MS_BASE44)

Qdrant Partners with Confluent to Empower Real-Time Context-Aware AI Agents : Qdrant has partnered with Confluent to provide fresh, real-time context for intelligent AI agents and enterprise applications through Confluent Streaming Agents and the Real-Time Context Engine. Qdrant’s vector search capabilities, combined with Confluent’s real-time streaming data, enable developers to build and scale event-driven, context-aware AI agents, thereby unlocking the full potential of agentic AI and significantly reducing resolution times and costs in scenarios like incident response. (Source: qdrant_engine, qdrant_engine)

📚 Learning

ICLR26 Paper Finder: An LLM-Based AI Paper Search Tool : A developer has created the ICLR26 Paper Finder, a tool that leverages language models as its backbone to search for papers from specific AI conferences. Users can query by title, keywords, or even paper abstracts, with abstract searches yielding the highest accuracy. The tool is hosted on a personal server and Hugging Face, providing AI researchers with an efficient way to retrieve literature. (Source: Reddit r/deeplearning, Reddit r/MachineLearning)

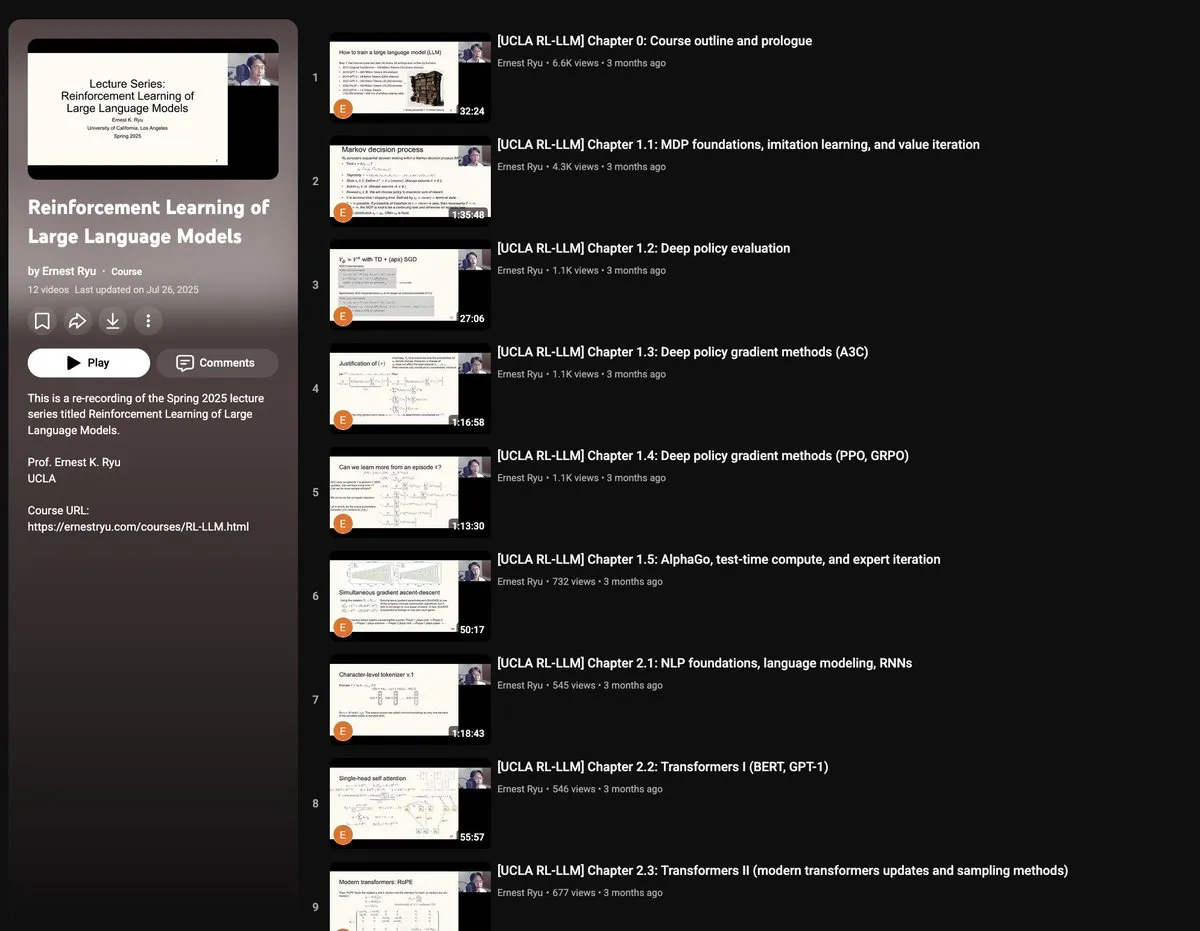

UCLA Spring 2025 Course: Reinforcement Learning for Large Language Models : UCLA’s Spring 2025 course “Reinforcement Learning for Large Language Models” covers a wide range of RLxLLM topics, including fundamentals, test-time compute, RLHF (Reinforcement Learning from Human Feedback), and RLVR (Reinforcement Learning with Verifiable Rewards). This new lecture series gives researchers and students an opportunity to dig into the cutting-edge theory and practice of reinforcement learning for LLMs. (Source: algo_diver)

Hand-Drawn Autoencoder Guide: Understanding the Foundations of Generative AI : ProfTomYeh has released a 7-step detailed hand-drawn guide to Autoencoders, aiming to help readers understand this neural network that plays a crucial role in compression, denoising, and learning rich data representations. Autoencoders are fundamental to many modern generative architectures, and this guide intuitively explains how they encode and decode information, serving as a valuable resource for learning core generative AI concepts. (Source: ProfTomYeh)

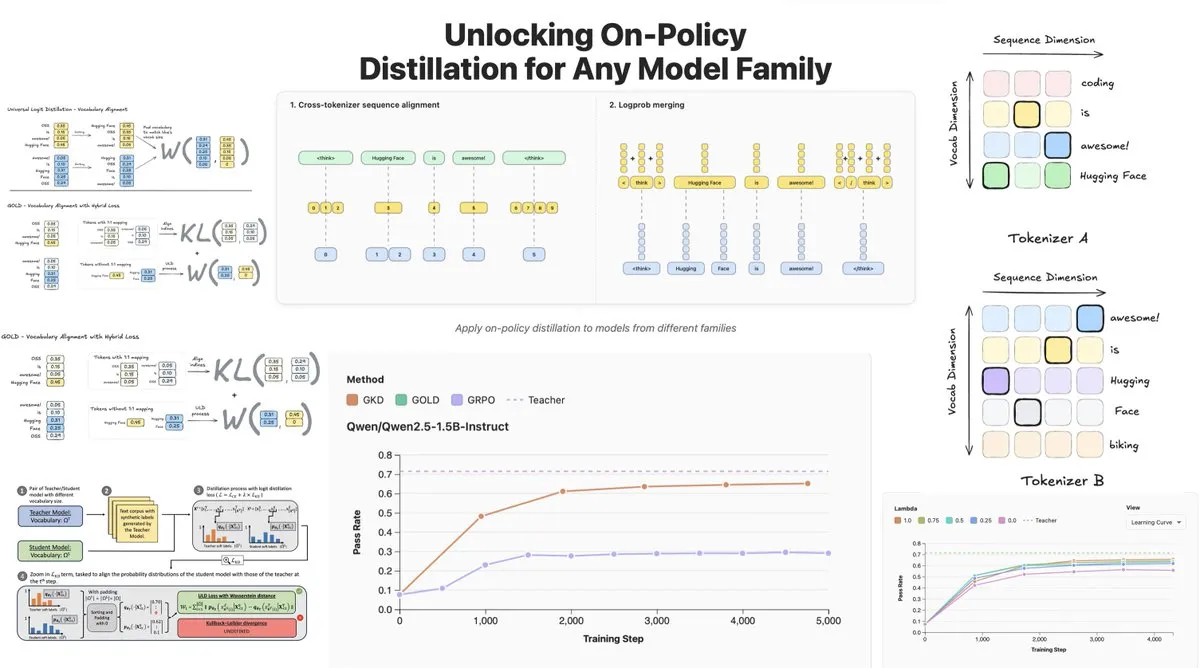
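
To make the encode/decode idea concrete, here is a linear autoencoder with hand-picked (not learned) weights that squeezes 4 numbers into a 2-number code and expands them back. This sketch is not taken from the guide: real autoencoders learn nonlinear encoders and decoders by minimizing reconstruction error, and the fixed weights here only illustrate the bottleneck.

```python
import math

S = 1 / math.sqrt(2)

# Hypothetical fixed encoder: 4-D input -> 2-D code. Its rows are orthonormal,
# so the transpose serves as a decoder (tied weights).
ENCODER = [[S, S, 0.0, 0.0],
           [0.0, 0.0, S, S]]


def encode(x: list[float]) -> list[float]:
    """Compress x into a 2-number code."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in ENCODER]


def decode(z: list[float]) -> list[float]:
    """Map the code back to 4-D with the encoder's transpose."""
    n_in = len(ENCODER[0])
    return [sum(ENCODER[j][i] * z[j] for j in range(len(z))) for i in range(n_in)]


# Inputs whose paired entries match survive the bottleneck almost exactly...
print(decode(encode([3.0, 3.0, 1.0, 1.0])))  # approximately [3, 3, 1, 1]
# ...while other inputs are only approximated (each pair gets averaged).
print(decode(encode([3.0, 1.0, 0.0, 0.0])))  # approximately [2, 2, 0, 0]
```

Training an autoencoder amounts to choosing the weights so that the data you care about lies in the part of the input space the bottleneck preserves. That is why the learned code ends up as a compact representation useful for compression and denoising.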

Hugging Face Releases On-Policy Logit Distillation, Supporting Cross-Model Distillation : Hugging Face has introduced General On-Policy Logit Distillation (GOLD), which extends on-policy distillation so that any teacher model can be distilled into any student model, even when their tokenizers differ. The technique is integrated into the TRL library, letting developers pick any model pair from the Hub for distillation. This gives LLM post-training great flexibility and helps recover performance, notably addressing the loss of general capability that often follows domain-specific fine-tuning. (Source: clefourrier, winglian, _lewtun)
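
At the core of logit distillation is a per-token divergence between the teacher’s and the student’s next-token distributions. The sketch below assumes the simplest case, a shared vocabulary, and uses forward KL; it is neither GOLD nor the TRL API. On-policy methods compute this loss on sequences sampled from the student and often use reverse KL or a Jensen-Shannon variant instead. GOLD additionally aligns tokens across different tokenizers, which is not shown here.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax with an optional temperature."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) over a shared vocabulary; entries with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 1.0) -> float:
    """Forward KL from teacher to student at a single token position."""
    return kl_divergence(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))


teacher = [2.0, 1.0, 0.1]  # made-up logits over a 3-token vocabulary
print(distillation_loss(teacher, teacher))          # 0.0: student matches teacher
print(distillation_loss(teacher, [0.1, 1.0, 2.0]))  # positive: distributions disagree
```

Matching full distributions rather than only the teacher’s top token passes along the teacher’s relative preferences among alternatives. This richer signal is what makes logit distillation effective at restoring general capabilities after narrow fine-tuning.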

Lumi: Google DeepMind Leverages Gemini 2.5 to Assist arXiv Paper Reading : Google DeepMind’s PAIR team has released Lumi, a tool that uses Gemini 2.5 to help people read arXiv papers. Lumi adds summaries, references, and inline Q&A to papers, helping researchers read and understand scientific literature more efficiently. (Source: GoogleDeepMind)

💼 Business

AI Drives Tech Giants to Record Revenues, Microsoft and Google Post Impressive Earnings : Google’s parent company Alphabet and Microsoft both achieved landmark results in their latest earnings reports, with AI emerging as a core growth engine. Alphabet’s quarterly revenue surpassed $100 billion for the first time, reaching $102.3 billion, with Google Cloud growing by 34% and 70% of existing customers using AI products. Microsoft’s revenue grew by 18% to $77.7 billion, with Intelligent Cloud revenue exceeding $30 billion for the first time, Azure cloud services growing by 40%, and significant AI-driven momentum. Both companies plan to substantially increase AI capital expenditures to solidify their leading positions in the AI sector and gain capital market recognition. (Source: 36氪, Yuchenj_UW)

Block CTO: AI Agent Goose Automates 60% of Complex Work, Code Quality Not Directly Related to Product Success : Dhanji R. Prasanna, CTO of Block (formerly Square), shared how the company saved 12,000 employees 8-10 hours of work per week within 8 weeks through its open-source AI Agent framework “Goose.” Goose, based on the Model Context Protocol (MCP), connects enterprise tools to automate tasks such as code writing, report generation, and data processing. Prasanna emphasized that AI-native companies should reposition themselves as technology companies and undergo organizational restructuring. He put forward the counter-intuitive view that “code quality is not directly related to product success,” arguing that whether a product solves user problems is key, and encouraged engineers to embrace AI, noting that senior and junior engineers have the highest acceptance of AI tools. (Source: 36氪)

Digital Human Industry Enters Elimination Round, 3D Digital Human Production Shifts to Platform-Based Approach : With the explosion of large models, the digital human industry faces a reshuffle, with companies lacking AI capabilities being eliminated. 2D digital humans account for 70.1% of the market, while 3D digital humans are limited by technological iteration and high GPU costs. Leading companies like MoFa Technology emphasize that 3D digital humans need to match large model capabilities, pointing out that high-quality data accumulation, scarce talent, and strong artistic skills are key. Industry trends indicate that 3D digital human production is moving towards a platform-based approach, with AI technological advancements reducing costs and enabling large-scale applications. Companies like ShadowPlay Technology and Baidu have also launched 3D generation platforms, aiming to use digital humans as infrastructure to empower more application scenarios. (Source: 36氪)

🌟 Community

Users’ Complex Perceptions of AI Emotion and Trust: R2D2 vs. ChatGPT : Social media is abuzz with discussions on the differences in emotional connection users have with R2D2 versus ChatGPT. Users find R2D2 endearing due to its unique temperament, loyalty, and “horse-like” image, and it doesn’t involve real-world social ethical issues. In contrast, ChatGPT, as a “real fake AI,” struggles to build the same emotional bond due to its utility, content moderation restrictions, and potential surveillance concerns. This comparison reveals that users’ expectations for AI extend beyond intelligence to include “humanized” interactive experiences and perceptions of social impact. (Source: Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ClaudeAI, Reddit r/ClaudeAI, Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ChatGPT)

Limitations of AI Psychological Counseling and the Necessity of Human Intervention : With the rise of AI psychological counseling products, people are beginning to use AI to address loneliness and mental health issues. Research shows that approximately 22% of Gen Z professionals have seen a therapist, and nearly half consult AI. AI has advantages in providing information, ruling out factors, and offering companionship, but it cannot replace human therapists in empathy, understanding subtle cues (‘reading the room’), and guiding the pace of therapy. Cases indicate that AI requires a manual circuit breaker mechanism for identifying extreme risks, and humans are indispensable in judging the boundary between emotions and pathology, accumulating experience, and non-verbal communication. AI should primarily undertake repetitive, auxiliary tasks and lower the barrier to seeking help, with the ultimate goal of guiding people back to real human relationships. (Source: 36氪)

Seniors and Large Model Interaction: Defining “Algorithms” from “Ways of Living” : Fudan University, Tencent SSV Time Lab, and other institutions conducted a year-long study, teaching 100 seniors to use large models. The study found that seniors’ attitude towards AI is not resistance, but a “pragmatic technological view” based on life experience. They are more concerned with whether technology can integrate into daily life and provide companionship, rather than extreme functionality. In trust calibration, seniors exhibited various patterns such as “limited correction,” “collaborative reciprocity,” and “cognitive rigidity,” along with “questioning hesitation” and a “gender gap.” They expect large models to be “fortune-telling demigods,” “trustworthy doctors,” “chatting friends,” and “relaxing toys” – that is, “human-understanding” technology that comprehends more gently and is closer to daily life. This indicates that the value of technology lies in how long it can wait, not how fast it runs, calling for technology to be “symbiotic” rather than merely “age-appropriate,” measured by human feelings, pace, and dignity. (Source: 36氪)

AI’s Societal Impact: From Privacy Surveillance to Energy Consumption and Employment Transformation : Social media is widely discussing AI’s multifaceted impact on society. Users worry that AI has achieved “invisible surveillance” through apps, searches, and cameras, predicting and influencing individual behavior rather than exerting sci-fi-style robot control. Meanwhile, AI data centers’ immense demand for energy and water has been linked to power outages and water shortages, sparking community protests. And while AI boosts productivity in code generation and task automation, it has also prompted debate about shifting employment structures and about how the quality of AI-generated code affects productivity. These discussions reflect the public’s mixed feelings about the convenience and potential risks of AI technology. (Source: Reddit r/artificial, MIT Technology Review, MIT Technology Review, Ronald_vanLoon, Ronald_vanLoon)

Rapid Evolution of AI Terminology and Concepts : Community discussions highlight that terminology and concepts in the AI field are rapidly evolving. For instance, “training/building models” often refers to “fine-tuning,” while “fine-tuning” is now considered a new form of “prompt/context engineering.” This evolution reflects the increasing complexity of the AI tech stack and the demand for more refined operations. Furthermore, regarding the trade-off between model speed and intelligence, developers tend to prefer “slow and smart” models because they provide more reliable results, even if it means more waiting time. (Source: dejavucoder, dejavucoder, dejavucoder)

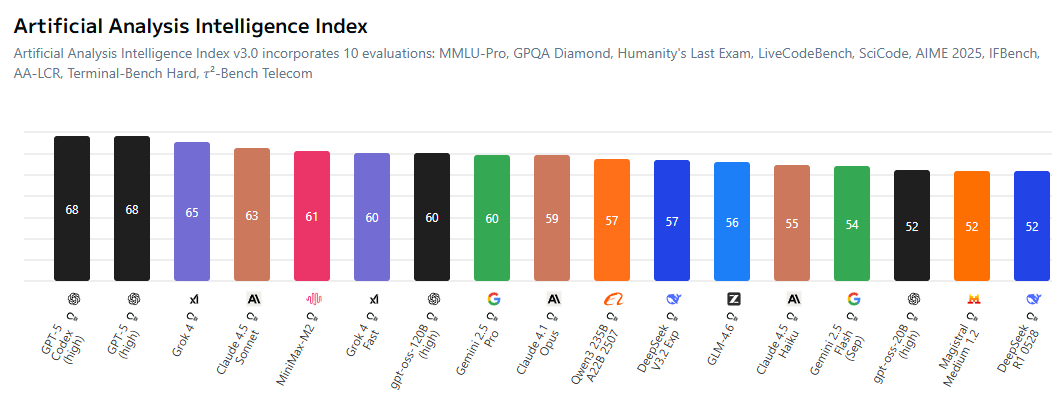

Intensifying Competition Between AI Open-Source Ecosystem and Proprietary Models : The community is actively discussing the narrowing gap between open-source AI models and proprietary ones, forcing closed-source labs to be more competitive with their pricing. Open-source models like MiniMax-M2 perform excellently on the AI Index at extremely low costs. Meanwhile, Chinese companies and startups are actively open-sourcing AI technology, while US companies are relatively lagging in this regard. This trend signals an era where “everyone trains models based on open source,” fostering the democratization and innovation of AI technology. (Source: ClementDelangue, huggingface, clefourrier, huggingface)

Impact of AI-Generated Content on Traditional Industries and Ethical Challenges : AI-generated content is increasingly permeating traditional industries, for example, AI-powered “artists” topping music charts, and deepfake technology being used for fraud (such as fake Jensen Huang speeches promoting cryptocurrency scams). These phenomena have sparked discussions on copyright, ethics, and regulation. At the same time, AI also brings new productivity tools in areas like code generation and automated social media account management, but the quality and reliability of its generated content still require human review. This highlights the challenge of balancing technological innovation with social responsibility and ethical norms during the widespread adoption of AI. (Source: Reddit r/artificial, 36氪, jeremyphoward)

AI Research Community’s Focus on Data Quality and Evaluation : The AI research community is increasingly focusing on the critical role of data quality in model training, noting that acquiring high-quality data is more challenging than renting GPUs or writing code. Concurrently, there is widespread discussion about the limitations of evaluation benchmarks, with the view that existing benchmarks may not fully reflect a model’s true capabilities and are prone to over-optimization. Researchers are calling for the development of more informative and realistic evaluation systems to promote healthy AI research. (Source: code_star, code_star, clefourrier, tokenbender)

AI Applications and Outlook in Healthcare : AI shows immense potential in healthcare. For example, Yunpeng Technology has launched new AI+health products, including a smart kitchen lab and a smart refrigerator equipped with an AI health large model, that offer personalized health management. MONAI provides an open-source, PyTorch-based framework for medical imaging AI. AI-powered exoskeletons help wheelchair users stand and walk, and LLM diagnostic agents learn diagnostic strategies in virtual clinical environments. These advances indicate that AI will profoundly transform healthcare, from daily health management to assisted diagnosis and treatment. (Source: 36氪, GitHub Trending, Ronald_vanLoon, Ronald_vanLoon, HuggingFace Daily Papers)

Organizational Transformation and Talent Demand in the Age of AI : With the widespread adoption of AI, enterprises face profound changes in organizational structure and talent requirements. Block CTO Dhanji R. Prasanna emphasized that companies need to reposition themselves as “technology companies” and shift from a “general manager system” to a “functional system” to centralize technological focus. AI tools like Goose can significantly boost productivity, but high-level architecture and design still require experienced engineers. When recruiting, companies prioritize a learning mindset and critical thinking over mere AI tool usage skills. AI also blurs job boundaries, with non-technical roles beginning to utilize AI tools, fostering the formation of “human-machine collective” collaboration models. (Source: 36氪, MIT Technology Review, NandoDF, SakanaAILabs)

💡 Other

Continuous Innovation in Multifunctional Robotics : The robotics field showcases diverse innovations, including the octopus-inspired robot SpiRobs, swimming drones, Helix robots for parcel sorting, and humanoid robots assisting with quality checks in NIO factories. These advancements cover biomimetic design, automation, human-robot collaboration, and adaptability to special environments, signaling the broad potential of robotics in industrial, military, and daily applications. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

Deepening AI Agent Concept and Market Outlook : AI Agents are defined as intelligent entities capable of reasoning and adapting like humans, enabling seamless human-machine dialogue. They are seen as a trend in future labor, with numerous AI Agent building tools emerging in the market. The core value of AI Agents lies in becoming “production tools” capable of performing actual tasks, not just auxiliary tools for “chatting,” and their development will drive the deep application of AI across various fields. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, dotey)

AI and Autonomous Driving: Uber Fleet Adopts Nvidia’s New Chips, Advancing Robotaxi Development : Uber’s next-generation autonomous fleet will adopt Nvidia’s new chips, expected to reduce the cost of robotaxis. Nvidia’s Drive Hyperion platform is a standardized architecture for “robotaxi-ready” vehicles, and the partnership with Uber will accelerate the popularization of autonomous driving technology among consumers. This indicates that AI applications in the transportation sector are rapidly developing, aiming to achieve safer and more economical autonomous driving services. (Source: MIT Technology Review, TheTuringPost)