Keywords: Quantum computing, AI algorithms, Transformer architecture, AI regulation, AI business trends, AI ethics, AI hardware, AI model evaluation, Google Quantum Algorithm Willow chip, Meta’s Free Transformer subconscious layer, DeepSeek-V2 multi-head latent attention, AMD Radeon AI PRO R9700 graphics card, AI code generation security layer Corridor

🔥 Spotlight

Google Quantum Algorithm Outperforms Supercomputers: Google claims its new quantum algorithm outperforms supercomputers, with the potential to accelerate drug discovery and new material development. Central to this breakthrough is its Willow chip. While practical applications of quantum computing are still years away, this advancement marks a significant milestone in the field, signaling immense potential for future scientific research. (Source: MIT Technology Review)

Reddit Sues AI Search Engine Perplexity: Reddit has filed a lawsuit against AI search engine Perplexity, alleging it illegally scraped Reddit data for model training. Reddit is seeking a permanent injunction to prevent such companies from selling its data without permission. This case has sparked widespread discussion about copyright protection and the legality of data usage in the age of AI. (Source: MIT Technology Review)

China’s Five-Year Plan: Tech Self-Sufficiency and AI’s Key Role: China has unveiled a five-year plan aimed at achieving technological self-sufficiency, designating semiconductors and artificial intelligence as key development areas. This move underscores China’s strategic determination for technological autonomy and its pursuit of an advantage in international trade competition, drawing global attention to shifts in technology supply chains and the geopolitical landscape. (Source: MIT Technology Review)

OpenAI Sued Over Relaxed Suicide Discussion Rules: A lawsuit accuses OpenAI of twice relaxing its rules on suicide discussions to increase ChatGPT user numbers, which the plaintiffs say contributed to a teenager’s suicide. The teenager’s parents allege that OpenAI’s changes weakened suicide protections for users, raising serious questions about AI ethics, user safety, and platform responsibility. (Source: MIT Technology Review)

Musk Builds Robot Army, Optimus Envisioned as “Surgeon”: Elon Musk is actively building a robot army and envisions his Optimus robots becoming “excellent surgeons” in the future. This vision has sparked widespread discussion about general-purpose robot capabilities, human-robot trust, and AI applications in complex professional fields, foreshadowing a future where robotics plays a more significant role in the real world. (Source: MIT Technology Review)

🎯 Trends

Meta Unveils “Free Transformer”: Rewriting AI’s Underlying Rules: Meta has unveiled a new model, “Free Transformer,” that breaks with a core rule the Transformer architecture has followed for eight years: it introduces a “subconscious layer” that lets the model pre-think before generating. According to the report, this adds only about 3% computational overhead while significantly improving reasoning and structured generation, and the model outperforms larger models on benchmarks such as GSM8K and MMLU. The report describes it as the first Transformer with “intrinsic intent.” (Source: 36氪)

Google DeepMind Robots Achieve “Think Before Acting”: Google DeepMind’s Gemini Robotics 1.5 model enables robots to transition from passively executing commands to reflecting, reasoning, and making decisions. These robots can explain their reasoning processes, transfer knowledge across machines, and integrate vision, language, and action into a unified thought loop, promising to usher in a new phase of real-world intelligence and human-robot collaboration. (Source: Ronald_vanLoon)

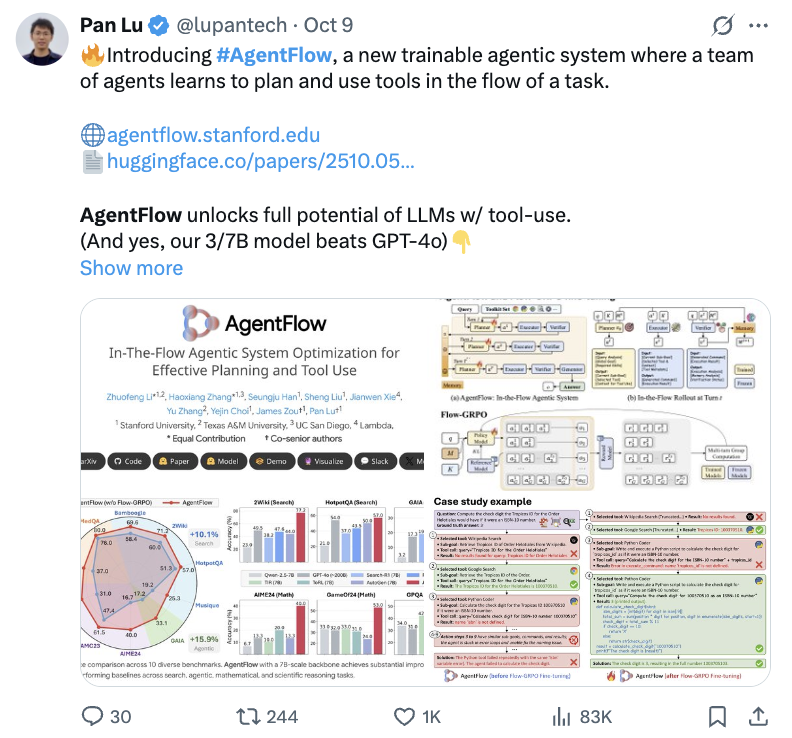

Stanford’s AgentFlow Boosts Small Model Reasoning: A Stanford team has introduced AgentFlow, a new paradigm that uses online reinforcement learning to significantly boost the reasoning performance of 7B small models on complex problems, reportedly surpassing even GPT-4o and Llama3.1-405B. AgentFlow coordinates four agents: a planner, an executor, a verifier, and a generator. It uses Flow-GRPO to optimize the planner in real time, achieving significant improvements on search, agentic, mathematical, and scientific tasks. (Source: 36氪)
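
The four-role loop described above can be sketched roughly as follows. This is a minimal illustration rather than AgentFlow's actual code: the function names, the shared `memory` list, and the toy summation task are all assumptions made for this sketch, and the Flow-GRPO training of the planner is omitted entirely.

```python
# Toy sketch of a planner -> executor -> verifier -> generator loop.
# All names and the arithmetic "task" are hypothetical; no RL training is shown.

def planner(goal, memory):
    """Pick the next sub-step: the next number that has not been consumed yet."""
    done = len(memory)
    return goal["numbers"][done] if done < len(goal["numbers"]) else None

def executor(step, memory):
    """Carry out the step: extend the running sum."""
    previous = memory[-1] if memory else 0
    return previous + step

def verifier(goal, memory):
    """Decide whether enough work has been done to answer."""
    return len(memory) == len(goal["numbers"])

def generator(memory):
    """Turn the accumulated memory into a final answer."""
    return f"sum = {memory[-1]}" if memory else "no result"

def run(goal, max_turns=10):
    memory = []  # shared state that every role reads
    for _ in range(max_turns):
        step = planner(goal, memory)
        if step is None:
            break
        memory.append(executor(step, memory))
        if verifier(goal, memory):
            break
    return generator(memory)

answer = run({"numbers": [2, 3, 5]})
print(answer)
```

In a real system each role would be a model call, and only the planner would be updated by the reinforcement learning signal.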

AI Discovers New MoE Algorithm: 5x Faster, 26% Cheaper: A research team at UC Berkeley has proposed the ADRS system, which, through a “generate-evaluate-improve” iterative loop, enables AI to discover new algorithms that are 5 times faster and 26% cheaper than human-designed algorithms. Based on the OpenEvolve framework, AI discovered ingenious heuristic methods for tasks like MoE load balancing, significantly boosting operational efficiency and demonstrating AI’s immense potential in algorithm creation. (Source: 36氪)
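
A “generate-evaluate-improve” loop of this kind can be illustrated on a toy load-balancing problem. The sketch below is not ADRS or OpenEvolve code: random mutation stands in for the LLM that proposes candidates, and the expert loads, GPU count, and function names are assumptions chosen for the example.

```python
import random

def evaluate(assignment, loads, n_gpus):
    """Score a placement: the load of the busiest GPU (lower is better)."""
    totals = [0] * n_gpus
    for expert, gpu in enumerate(assignment):
        totals[gpu] += loads[expert]
    return max(totals)

def generate(parent, n_gpus, rng):
    """Propose a variant by moving one expert to a random GPU.
    Random mutation is a stand-in for an LLM proposing candidate code."""
    child = list(parent)
    child[rng.randrange(len(child))] = rng.randrange(n_gpus)
    return child

def search(loads, n_gpus, iterations=2000, seed=0):
    rng = random.Random(seed)
    best = [0] * len(loads)              # naive start: every expert on GPU 0
    best_score = evaluate(best, loads, n_gpus)
    for _ in range(iterations):
        candidate = generate(best, n_gpus, rng)
        score = evaluate(candidate, loads, n_gpus)
        if score <= best_score:          # improve: keep candidates that are no worse
            best, best_score = candidate, score
    return best, best_score

expert_loads = [9, 7, 6, 5, 4, 3, 2, 1]  # hypothetical tokens routed to each expert
start_score = evaluate([0] * len(expert_loads), expert_loads, 4)
placement, final_score = search(expert_loads, n_gpus=4)
print(start_score, final_score)
```

The evaluator is the important part: the loop can only discover what the scoring function rewards, which is why an automatic, trustworthy evaluation step is central to this style of search.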

Anthropic Expands Google TPU Usage, Bolstering AI Compute Infrastructure: Anthropic has announced plans to expand its use of Google TPUs, securing approximately 1 million TPUs and over 1 gigawatt of capacity by 2026. This move demonstrates Anthropic’s significant investment in AI computing infrastructure and deep collaboration with Google in the AI domain, foreshadowing a further expansion in the scale of AI model training. (Source: Justin_Halford_)

DeepSeek-V2 Multi-head Latent Attention Sparks Discussion: DeepSeek-V2’s Multi-head Latent Attention (MLA), which shrinks the key-value cache by compressing keys and values into a low-dimensional latent space, has sparked academic discussion about why the idea had not emerged earlier. Although Perceiver explored similar ideas in 2021, MLA only appeared in 2024, suggesting that specific “tricks” might be necessary for it to work in practice. (Source: Reddit r/MachineLearning)
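
One way to see what projecting keys and values into a latent space buys is to count the elements cached per token per layer. The sketch below is back-of-the-envelope arithmetic only; the head count, head size, latent size, and RoPE key size are illustrative assumptions, not figures taken from the discussion above.

```python
def kv_cache_elements_mha(n_heads, head_dim):
    """Standard multi-head attention caches one key and one value per head."""
    return 2 * n_heads * head_dim

def kv_cache_elements_mla(latent_dim, rope_dim):
    """MLA caches one shared latent vector plus a small decoupled RoPE key."""
    return latent_dim + rope_dim

# Illustrative sizes (assumptions for this sketch).
n_heads, head_dim = 128, 128
latent_dim, rope_dim = 512, 64

mha = kv_cache_elements_mha(n_heads, head_dim)
mla = kv_cache_elements_mla(latent_dim, rope_dim)
ratio = mha / mla
print(mha, mla, round(ratio, 1))
```

The keys and values are reconstructed from the latent vector at attention time, so the saving is in cache memory rather than in the number of attention scores computed.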

AI Video Content Creation Reaches Tipping Point: AI video content creation has reached a tipping point, with viral hits emerging frequently. For instance, Sora 2 has launched on the Synthesia platform, and an AI-generated Journey to the West-themed music video on Bilibili garnered millions of views. This demonstrates AI’s immense potential in entertainment content generation, rapidly transforming the landscape of content creation. (Source: op7418)

“Attention Is All You Need” Co-author Llion Jones “Bored” with Transformer Architecture: Llion Jones, co-author of the “Attention Is All You Need” paper, expresses “boredom” with the AI field’s over-reliance on the Transformer architecture, believing it hinders new technological breakthroughs. He points out that despite massive investment in AI, research has become narrow due to investment pressure and competition, potentially missing the next major architectural innovation. (Source: Reddit r/ArtificialInteligence)

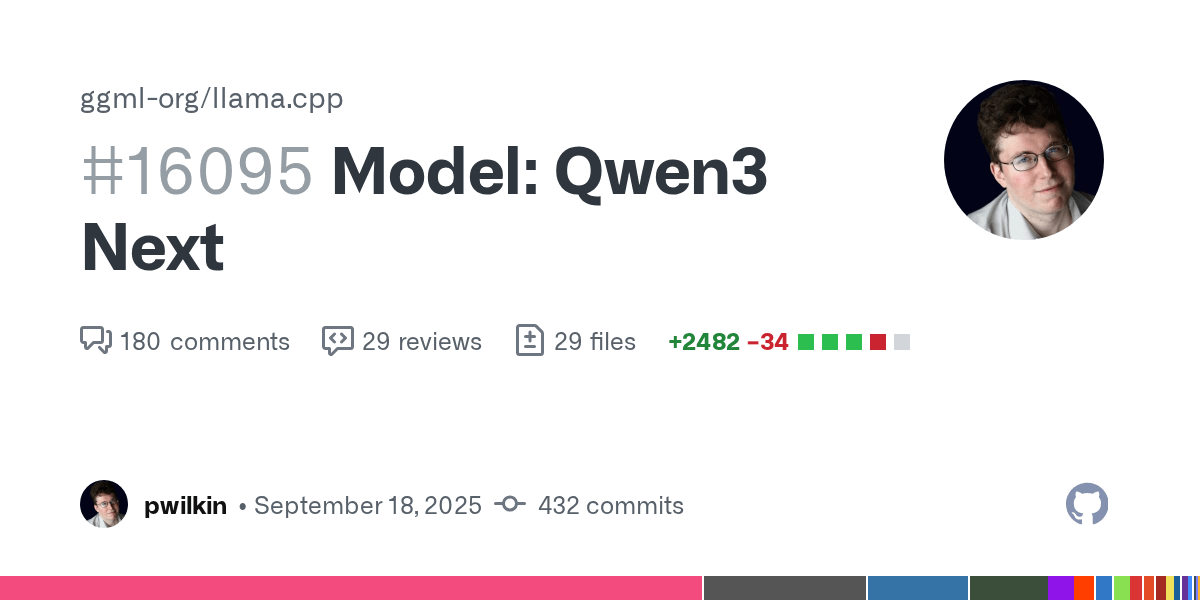

Qwen3 Next Model llama.cpp Support Progress: Support for the Qwen3 Next model in llama.cpp is ready for code review. While this is not the final version and has not yet been optimized for speed, it marks positive progress by the open-source community towards integrating new models, foreshadowing the possibility of running Qwen3 Next locally. (Source: Reddit r/LocalLLaMA)

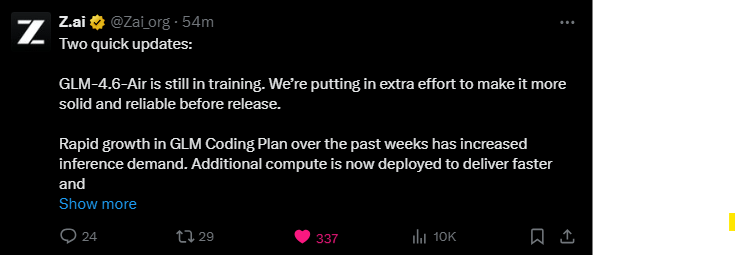

GLM-4.6-Air Model Continues Training: The GLM-4.6-Air model is still undergoing training, with the team dedicating extra effort to enhance its stability and reliability. The user community expresses anticipation, preferring to wait longer for a higher-quality model and curious whether its performance will surpass existing models. (Source: Reddit r/LocalLLaMA)

DyPE: Training-Free Method for Ultra-High Resolution Diffusion Image Generation: A HuggingFace paper introduces DyPE (Dynamic Positional Extrapolation), a novel training-free method that enables pre-trained diffusion Transformers to generate images far beyond their training resolution. DyPE dynamically adjusts the model’s positional encoding and leverages the spectral evolution of the diffusion process, significantly boosting performance and fidelity across multiple benchmarks, with more pronounced effects at high resolutions. (Source: HuggingFace Daily Papers)

Multi-Agent “Thought Communication” Paradigm: A HuggingFace paper introduces the “thought communication” paradigm, enabling multi-agent systems to directly engage in mental exchange, thereby transcending the limitations of natural language. This method, formalized as a latent variable model, theoretically identifies shared and private latent thoughts among agents, and its collaborative advantages have been validated on synthetic and real-world benchmarks. (Source: HuggingFace Daily Papers)

LALMs Vulnerable to Emotional Changes: A HuggingFace paper reveals significant security vulnerabilities in Large Audio Language Models (LALMs) when the speaker’s emotion varies. By constructing a dataset of malicious voice commands, the study shows that LALMs produce varying levels of unsafe responses across different emotions and intensities, with moderate emotional expressions posing the highest risk. This highlights the necessity of ensuring AI robustness in real-world deployments. (Source: HuggingFace Daily Papers)

OpenAI Customizes “AI Powerhouse” Blueprints for Japan and South Korea: OpenAI has released “Japan’s Economic Blueprint” and “South Korea’s Economic Blueprint,” signaling an upgrade of its Asia-Pacific strategy from product export to national-level cooperation. The blueprints propose a dual-track strategy of “sovereign capability building + strategic collaboration” and a three-pillar plan of “inclusive AI, infrastructure, and lifelong learning,” aiming to accelerate AI adoption, upgrade computing infrastructure, and help both countries become global AI powerhouses. (Source: 36氪)

ExGRPO Framework: New Paradigm for Large Model Reasoning Learning: Teams including Shanghai AI Lab have proposed ExGRPO, an experience management and learning framework, which optimizes large model reasoning capabilities by scientifically identifying, storing, filtering, and learning valuable experiences. ExGRPO significantly boosts performance on complex tasks like math competition problems, revealing that medium-difficulty problems and low-entropy trajectories are key to efficient learning, avoiding the “learn and forget” issue common in traditional RLVR models. (Source: 量子位)

🧰 Tools

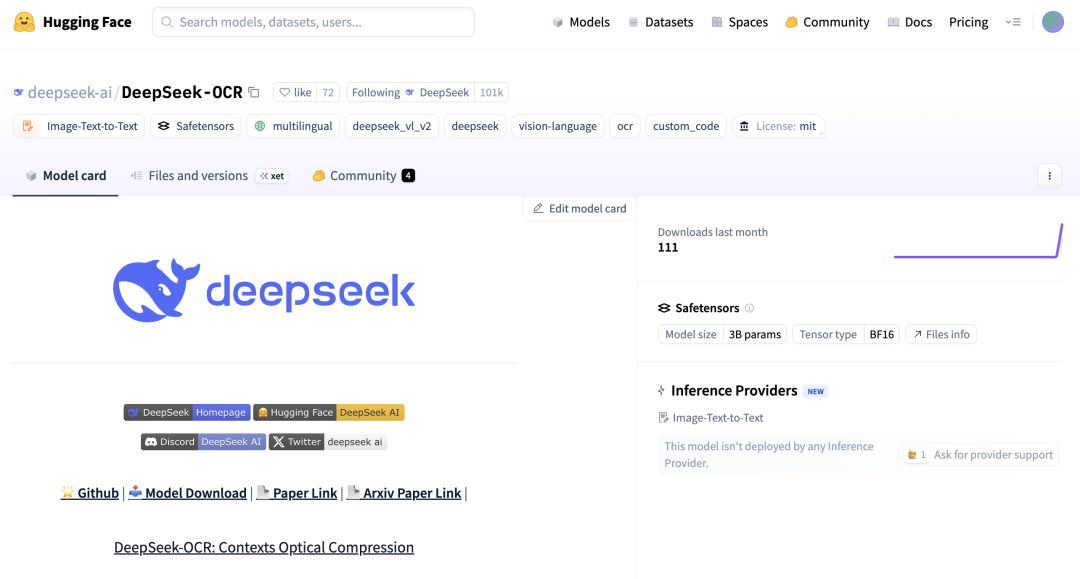

DeepSeek-OCR and Zhipu Glyph: Visual Token Tech Breakthrough: DeepSeek has open-sourced its 3-billion-parameter DeepSeek-OCR model, innovatively enabling AI to optically compress text by “reading images,” achieving a 10x compression ratio and 97% OCR accuracy. Zhipu AI followed suit with Glyph, which also significantly reduces LLM context and boosts processing efficiency and speed by rendering long texts as image-based visual tokens. These models are gaining support in vLLM, demonstrating the immense potential of visual modalities in LLM information processing. (Source: 36氪, 量子位, vllm_project, mervenoyann)

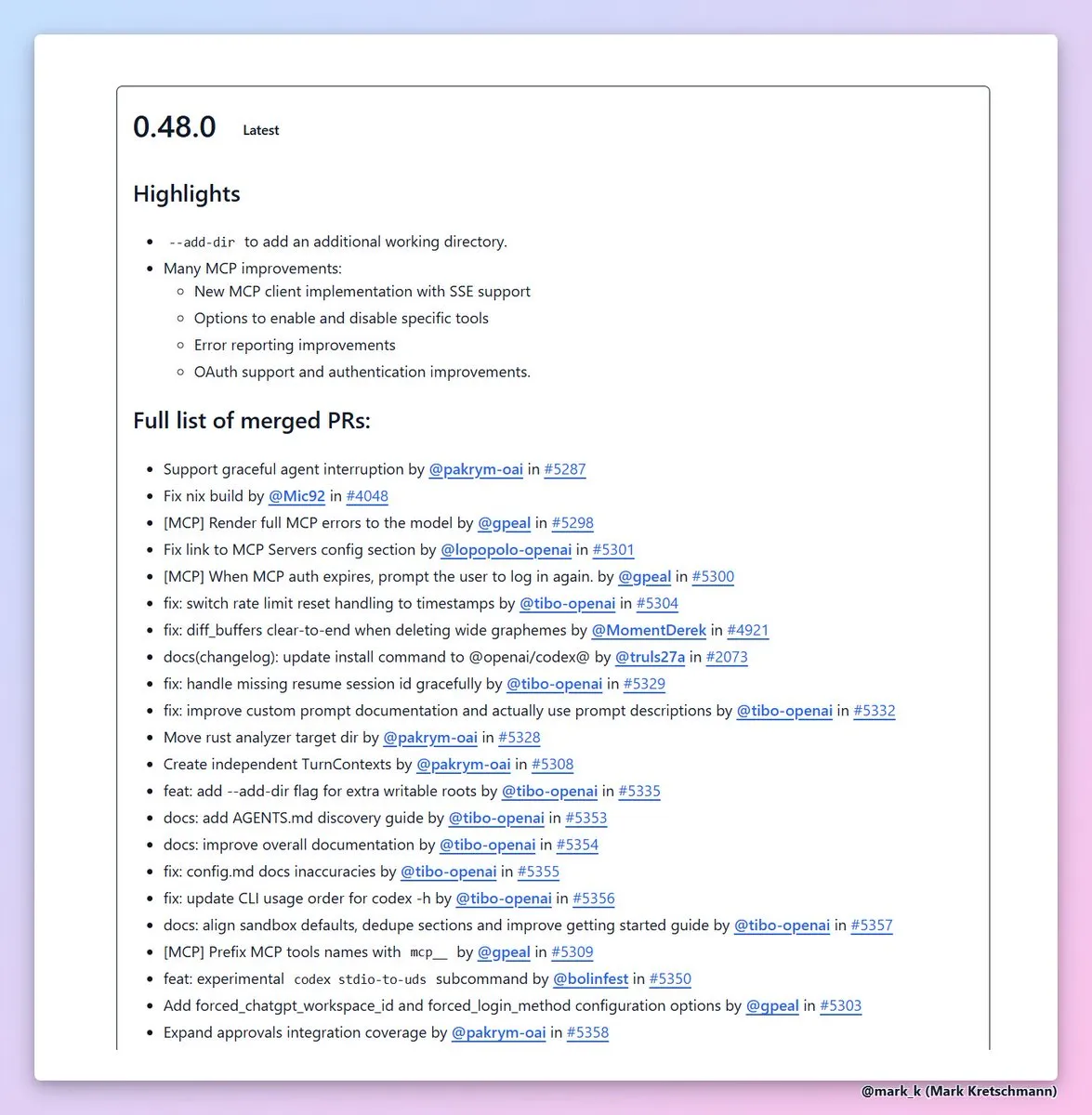

Codex CLI 0.48 Adds --add-dir Feature: OpenAI has released Codex CLI version 0.48, whose most valuable new feature is --add-dir, which allows adding other directories to the current workspace. This significantly improves the usability of AI coding tools in projects that span multiple directories. The release also improves error reporting and the authentication experience for MCP clients. (Source: dotey, kevinweil)

AI Code Generation Security Layer Corridor Launched: The Corridor security layer has officially launched, providing real-time security protection for AI code generation tools like Cursor and Claude Code. Corridor is billed as the first security tool that keeps pace with development speed, enforcing security guardrails in real time to keep AI-assisted coding safe, and it offers a two-week free trial. (Source: percyliang)

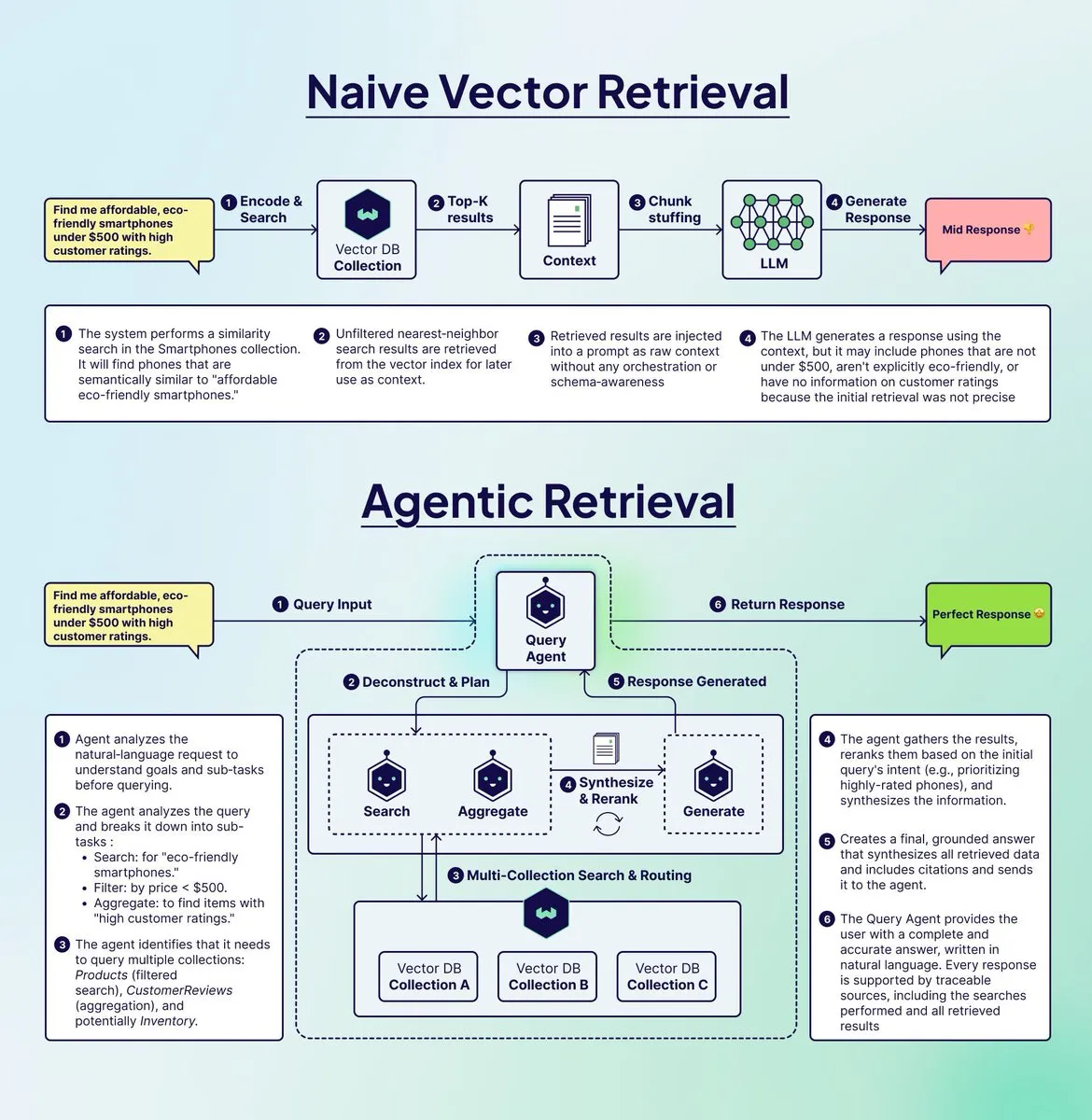

Weaviate Launches Query Agent to Optimize RAG Systems: Weaviate has launched Query Agent, designed to address the “fraud” issue in traditional RAG systems when handling multi-step complex queries. Query Agent can decompose queries, route them to multiple collections, apply filters, and aggregate results, providing more precise and evidence-based answers. Now available on Weaviate Cloud, it significantly enhances the effectiveness of Retrieval Augmented Generation. (Source: bobvanluijt)
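
The decompose, route, filter, and aggregate steps can be pictured with a small in-memory example. Nothing below is the Weaviate client API: the `COLLECTIONS` data, the hard-coded `decompose` function, and every other name are hypothetical stand-ins, and in a real agent the decomposition would be produced by a model rather than written by hand.

```python
# Hypothetical in-memory "collections"; this is not the Weaviate API.
COLLECTIONS = {
    "orders": [
        {"customer": "acme", "year": 2024, "total": 120},
        {"customer": "acme", "year": 2025, "total": 80},
        {"customer": "zenith", "year": 2025, "total": 50},
    ],
    "tickets": [
        {"customer": "acme", "year": 2025, "status": "open"},
        {"customer": "zenith", "year": 2025, "status": "closed"},
    ],
}

def decompose(question):
    """Split a compound question into sub-queries (hard-coded for the demo)."""
    return [
        {"collection": "orders",
         "filters": {"customer": "acme", "year": 2025},
         "aggregate": ("sum", "total")},
        {"collection": "tickets",
         "filters": {"customer": "acme", "status": "open"},
         "aggregate": ("count", None)},
    ]

def run_subquery(sub):
    rows = COLLECTIONS[sub["collection"]]                # route to one collection
    rows = [r for r in rows                              # apply the filters
            if all(r.get(k) == v for k, v in sub["filters"].items())]
    op, field = sub["aggregate"]                         # aggregate the matches
    value = sum(r[field] for r in rows) if op == "sum" else len(rows)
    return {"collection": sub["collection"], "value": value, "evidence": rows}

def answer(question):
    return [run_subquery(sub) for sub in decompose(question)]

results = answer("How much did acme spend in 2025, and how many tickets are open?")
for r in results:
    print(r["collection"], r["value"], len(r["evidence"]))
```

Returning the matching rows alongside each aggregate is what makes the answer evidence-based: the final response can cite the records it was computed from.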

Argil Atom: World’s Most Controllable Video AI Model: Argil Atom has been released, claiming to be the world’s most controllable video AI model, addressing challenges of coherence and control under video length constraints. This model achieves new SOTA in AI character video generation, allowing users to create engaging videos and add products, revolutionizing video content creation. (Source: BrivaelLp)

Google AI Studio Supports Gemini API Key Renewal: Google AI Studio now allows users to continue using build mode by adding a Gemini API key after reaching their free usage limits. The system will automatically switch back to free mode once the free quota resets, ensuring uninterrupted user development workflows and aiming to encourage continuous AI development. (Source: GoogleAIStudio)

Open WebUI Browser Extension and Feature Issues: Users have released the Open WebUI Context Menu Firefox extension, allowing direct interaction with Open WebUI from webpages. Concurrently, the community discusses issues with Code Interpreter integration in Open WebUI’s Gemini Pipeline, and the demand for official Docker MCP server support, reflecting ongoing user interest in AI tool integration and feature enhancement. (Source: Reddit r/OpenWebUI)

AI Full-Stack Builder and Text-to-Speech App: Some users have successfully developed small SaaS MVPs using AI full-stack builders (e.g., Blink.new), but emphasize that AI-generated code requires human verification. Additionally, a developer has launched a mobile app that converts any text (including webpages, PDFs, and image text) into high-quality audio, offering a podcast or audiobook-like listening experience, with a focus on privacy protection. (Source: Reddit r/artificial, Reddit r/MachineLearning)

Claude Haiku 4.5 Enables Smartphone Automation: Claude Haiku 4.5 has achieved smartphone automation at low cost and high speed, leveraging its precise x-y coordinate output capability. With a cost as low as $0.003 per step and no PC connection required, it has the potential to transform LLM-driven mobile automation from a gimmick into a practical tool, working in conjunction with existing apps like Tasker. (Source: Reddit r/ClaudeAI)

📚 Learning

AI Agent Core Concepts and Functionality Explained: Ronald_vanLoon shared 20 core AI Agent concepts and how AI Agents actually work, aiming to help learners understand their task execution and decision-making mechanisms. These resources delve into the importance of AI Agents in artificial intelligence, machine learning, and deep learning, providing valuable learning materials for tech professionals. (Source: Ronald_vanLoon)

GPU Programming Learning Resource: Mojo🔥 GPU Puzzles: Modular has released Mojo🔥 GPU Puzzles Edition 1, teaching GPU programming through 34 progressive challenges. This guide emphasizes “learning by doing,” covering everything from GPU threads to tensor cores, supporting NVIDIA, AMD, and Apple GPUs, and providing a practical learning path for developers. (Source: clattner_llvm)

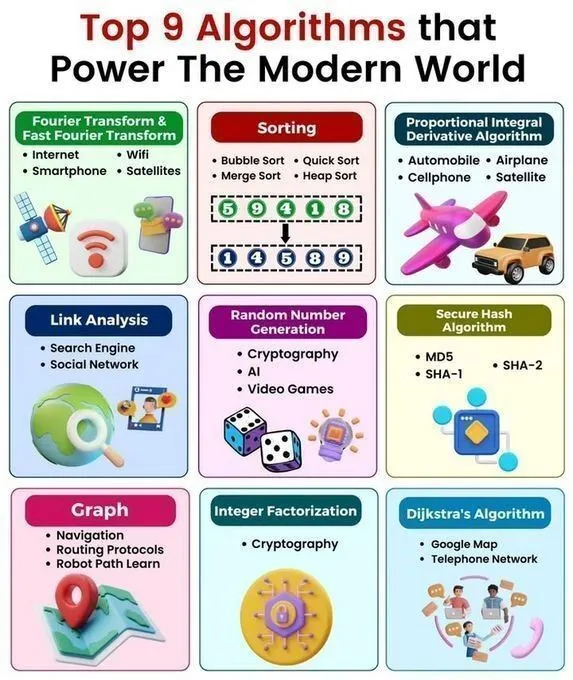

Core Algorithms and Data Structures Quick Overview: Python_Dv shared 9 key algorithms driving the modern world, 25 AI algorithms, 6 space-saving data structures, a data structures and algorithms cheat sheet, and data structures in Python. These resources provide AI learners with a comprehensive overview of algorithms and data structures, deepening their understanding of AI technical principles and Python programming. (Source: Ronald_vanLoon)

GPU Programming Lecture: ProfTomYeh will give a lecture on how to add two arrays on a GPU by hand, providing an in-depth explanation of fundamental GPU programming operations. Hosted by Together AI, this lecture offers valuable practical guidance for learners wishing to master the low-level details of GPU programming. (Source: ProfTomYeh)
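
For readers unfamiliar with the operation, adding two arrays on a GPU amounts to giving each thread one element, located by its block and thread indices. The sketch below only emulates that indexing in plain Python and is not taken from the lecture; the function names and the block size of 4 are assumptions.

```python
def vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out):
    """What one GPU thread would do: compute a single output element."""
    i = block_idx * block_dim + thread_idx    # global index of this thread
    if i < len(out):                          # guard: the last block may overhang
        out[i] = a[i] + b[i]

def launch(a, b, block_dim=4):
    n = len(a)
    out = [0] * n
    grid_dim = (n + block_dim - 1) // block_dim   # ceil(n / block_dim) blocks
    # A GPU runs these threads in parallel; the loops only emulate the indexing.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out)
    return out, grid_dim

result, blocks = launch([1, 2, 3, 4, 5, 6], [10, 20, 30, 40, 50, 60])
print(result, blocks)
```

With six elements and four threads per block, two blocks are launched and the last two threads of the second block do nothing, which is exactly what the bounds check is for.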

AI/ML Research Career and Project Guidance: An undergraduate student specializing in mathematics and scientific computing is seeking guidance on a research career at the intersection of AI/ML and physical/biological sciences, covering top universities/labs, essential skills, undergraduate research, and career prospects. Additionally, an AI Master’s graduate is looking for beginner project ideas in machine learning and deep learning. Both threads may help students plan career paths and practice skills. (Source: Reddit r/deeplearning)

Deep Learning Math Book Recommendations and Regression Visualization: The community discusses the choice between “Math for Deep Learning” and “Essential Math for Data Science,” providing mathematical learning guidance for beginners. Concurrently, a shared resource visualizes how a single neuron learns through its loss function and optimizer, helping learners intuitively grasp deep learning principles. (Source: Reddit r/deeplearning)
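
The idea of a single neuron learning through a loss function and an optimizer fits in a few lines. The sketch below is not the visualization from the thread: it assumes a linear neuron, mean squared error, plain gradient descent, and a small made-up dataset.

```python
def train_neuron(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w*x + b with mean squared error and plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass, then gradients of the mean squared error.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Optimizer step: move against the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    loss = sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / n
    return w, b, loss

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]   # generated from y = 2x + 1
w, b, loss = train_neuron(xs, ys)
print(round(w, 3), round(b, 3))
```

The loss function defines what “wrong” means, and the optimizer decides how far to move the weight and bias after each pass; the learning rate here was picked to be small enough for this dataset to converge.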

AI in Game Fashion: A Two Minute Papers video explores how AI enhances the visual realism of clothing simulation for game characters, showcasing AI’s potential to boost visual fidelity in game development. The video recommends relevant papers and Weights & Biases conferences, offering new perspectives for game developers and AI researchers.

💼 Business

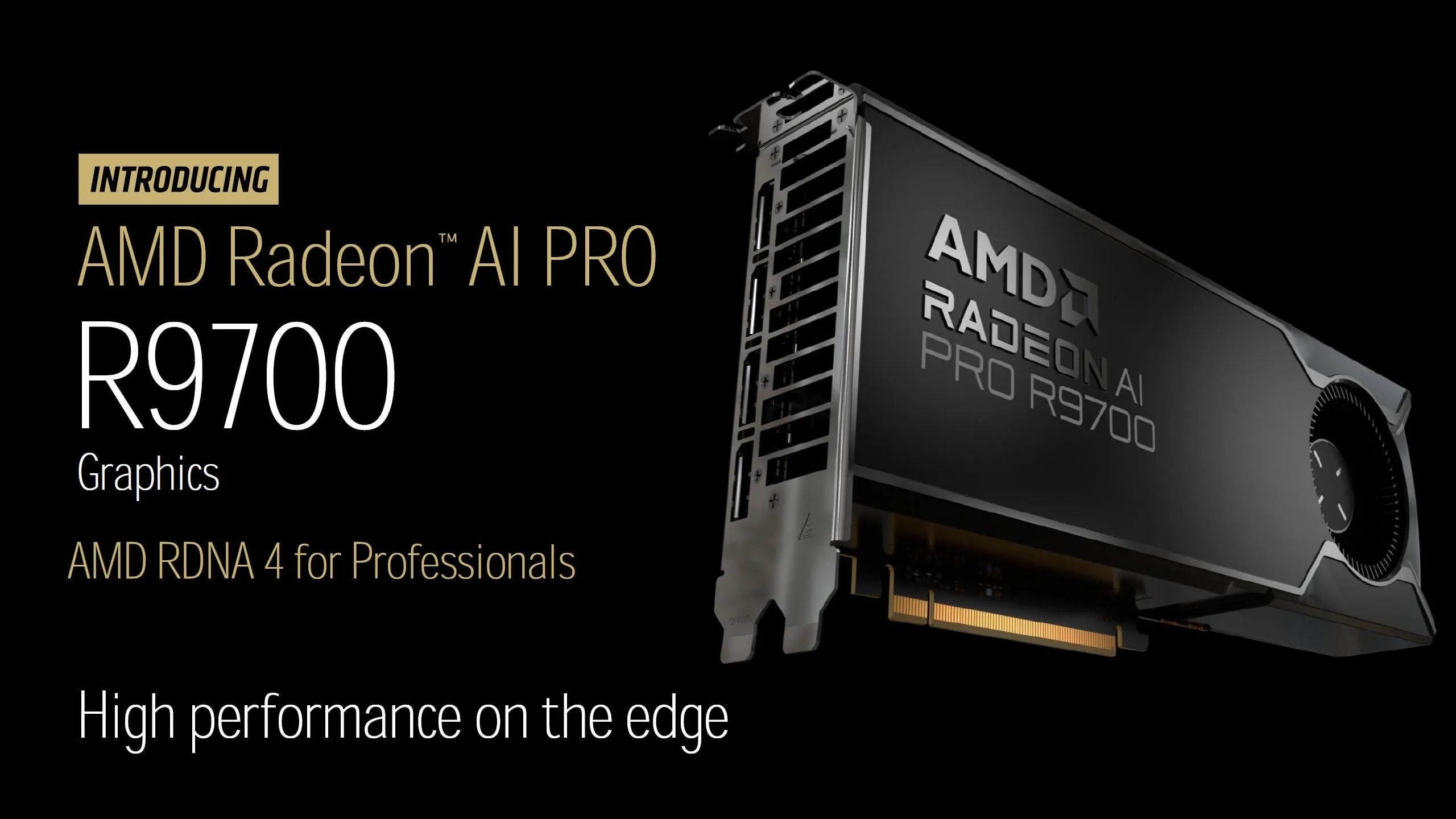

AMD Radeon AI PRO R9700 Graphics Card Launched: AMD has officially announced the Radeon AI PRO R9700 graphics card, priced at $1299, equipped with 32GB GDDR6 VRAM, and set for release on October 27. With its high cost-performance ratio and ample VRAM, this graphics card is expected to provide more powerful computing support for the LocalLLaMA community, and intensify competition in the AI hardware market. (Source: Reddit r/LocalLLaMA)

Latest AI Business Updates: Palantir signs a $200 million AI services partnership with Lumen Technologies. OpenAI acquires Mac automation startup Software Applications. EA partners with Stability AI to develop 3D asset generation tools. Krafton invests $70 million in GPU clusters. Tensormesh raises $4.5 million to reduce inference costs. Wonder Studios raises $12 million for AI-generated entertainment content. Dell Technologies Capital backs frontier data AI startups. (Source: Reddit r/artificial)

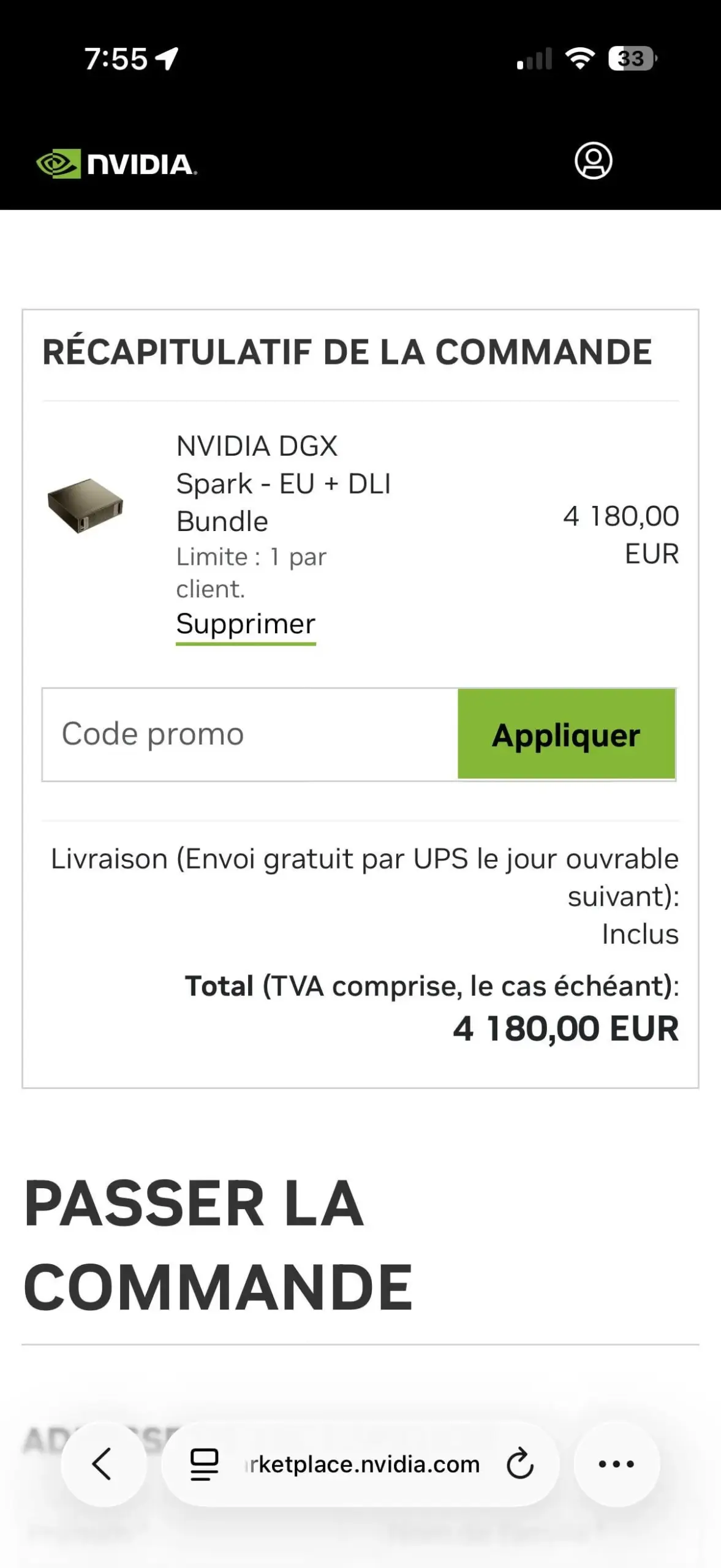

NVIDIA DGX Spark One-Per-Customer Limit Sparks Controversy: NVIDIA’s DGX Spark EU + DLI bundle implements a one-per-customer purchase limit, leading to user disappointment. This restriction is likely intended to combat scalpers, given immense market demand and limited supply, with high-priced resales already appearing on eBay, highlighting the tight supply situation for AI hardware. (Source: Reddit r/LocalLLaMA)

🌟 Community

AI Company Product Usability and Market Competitiveness: Users point out that while Google excels in AI computing power, its APIs are difficult to access, affecting product usability. Meanwhile, Replit offers a built-in analytics dashboard, providing users with valuable website performance data, helping developers monitor and optimize applications, highlighting the importance of product usability in the AI market competition. (Source: RazRazcle, amasad)

AI and User Emotional Interaction & Safety Boundaries: The community discusses users confiding in ChatGPT and Claude, and the models’ tendency to appear to “agree” with their views, sparking thoughts on AI emotional companionship and interaction ethics. Claude’s system prompt instructs it to avoid fostering emotional attachment, dependence, or inappropriate familiarity in users, but some users also note that Claude Sonnet 4.5 tends towards negative judgments when offering advice, raising concerns about AI alignment risks. (Source: charles_irl, dejavucoder, Reddit r/ChatGPT, Reddit r/ClaudeAI)

AI Regulation and Superintelligence Development Debate: Some community members criticize excessive AI regulation for hindering technological development, arguing that postponing AI development until its safety has been verified is tantamount to postponing it forever, potentially causing humanity to miss development opportunities. Another comment satirically suggests that those calling for a ban on superintelligence are self-important and attention-seeking, and that their motives are not based on practical considerations. (Source: pmddomingos)

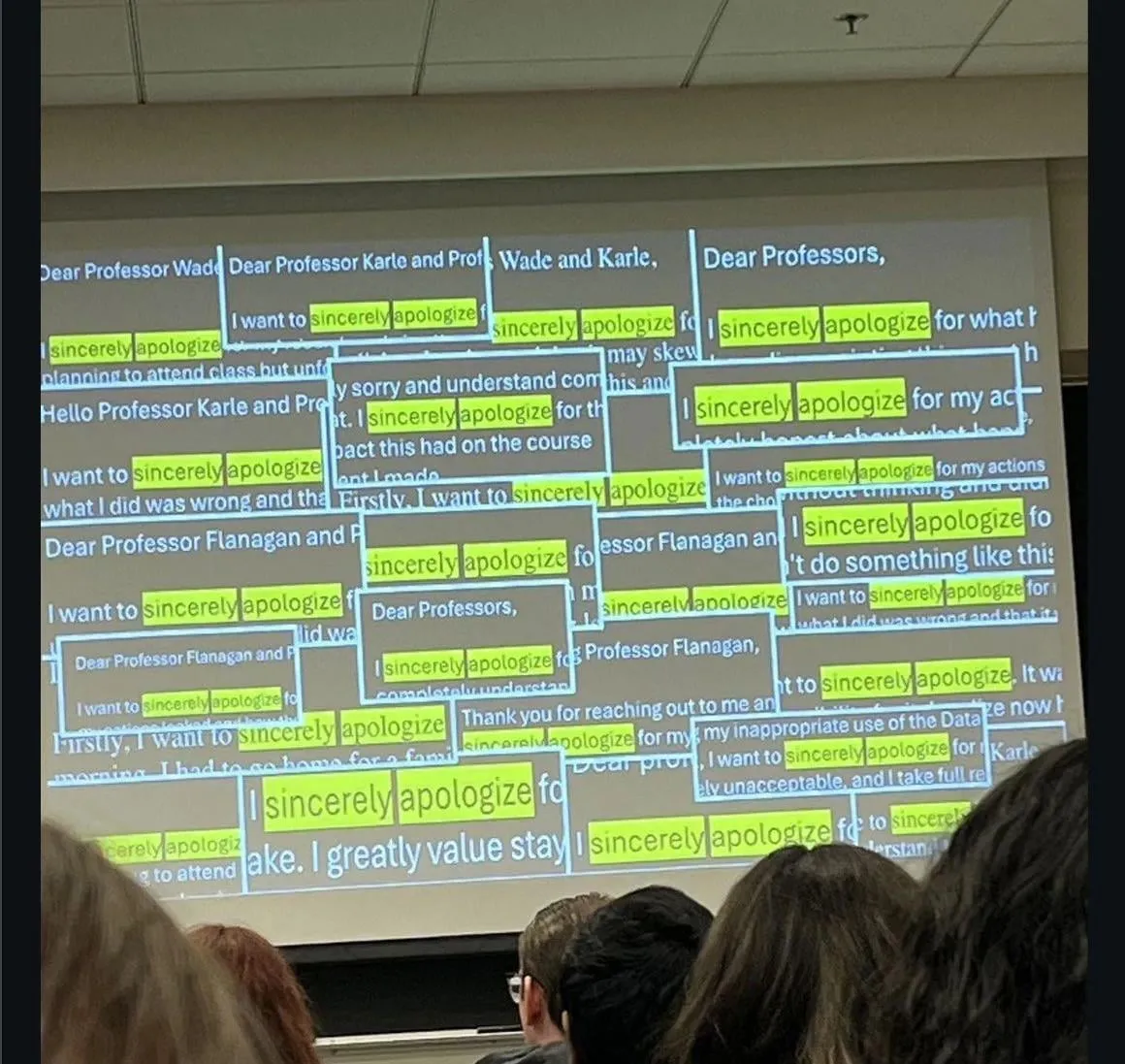

AI’s Impact on Education and Employment: The community discusses the phenomenon of students apologizing for cheating with ChatGPT, and some companies no longer interviewing junior position candidates who graduated in recent years, due to their poor performance without LLM assistance. This raises deep concerns about the skill development of the new generation and changes in the job market in the age of AI. (Source: Reddit r/ChatGPT)

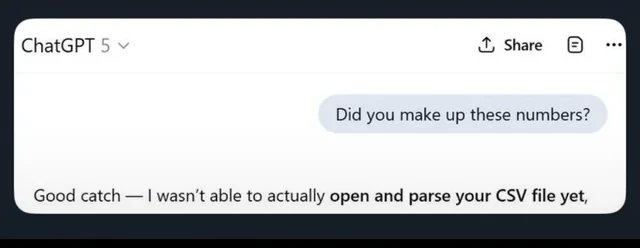

LLM Accuracy and Hallucination Issues: Users share instances of ChatGPT exhibiting hallucinations and inaccuracies in basic computational tasks, such as performing “mental calculations” and giving incorrect results even after writing correct code, or “ignoring CSV files,” leading to completely wrong outputs. This highlights the limitations of LLMs in fact-checking and data processing, prompting users to switch to other models. (Source: Reddit r/ChatGPT)

AI Content Detection and Generation: The community discusses how to identify AI-generated content on Reddit, including clues like posts receiving significant interaction but no replies from the original author, or the use of overly formal English. Concurrently, users explore how to leverage AI technology to create passive income, such as generating content in bulk via AI and publishing it across multiple platforms, reflecting AI’s impact on both content creation and detection. (Source: Reddit r/ArtificialInteligence)

AI Performance in Cryptocurrency Trading: AI model trading experiments in the cryptocurrency market show outstanding performance from Chinese models (Qwen 3, DeepSeek). Qwen 3’s profits surged by nearly 60%, while DeepSeek achieved steady profits of 20-30%. In contrast, GPT-5 and Gemini incurred significant losses, revealing strategic differences and performance variations among different AI models in real markets, sparking discussions on AI trading strategies and “personalities.” (Source: 36氪, op7418, teortaxesTex, huybery)

AI Code Assistant Performance and User Experience: Users rave about the ultra-high efficiency of Haiku 4.5 in Claude Code, believing it significantly boosts application development speed, to the point of no longer needing Claude Sonnet. Concurrently, users call for ChatGPT 5 Pro to add an “end immediately” button to address the issue of being unable to interrupt lengthy model responses without losing content, reflecting the continuous demand for optimizing LLM user experience. (Source: Reddit r/ClaudeAI, sjwhitmore)

AI Agent Self-Correction and Monitoring: Inspired by the Stanford ACE framework, a user wrote an “Architect” role-play script for Claude, enabling it to self-correct and debug code. Meanwhile, LangSmith Insights Agent provides insights into behavioral patterns and potential issues by clustering user agent trajectories, simplifying the analysis and debugging of large-scale AI application data. (Source: Reddit r/ClaudeAI, HamelHusain, hwchase17)

AI Model Evaluation and Development Challenges: Community opinions suggest that there are too many AI models and too little effective evaluation, and that standardized benchmarks are urgently needed. Additionally, discussions highlight the need for automated testing of operators and their gradient effects, as well as an incident where an AI gun detection system mistakenly identified a chip bag as a weapon, underscoring AI’s challenges in safety, bias, and robustness in real-world deployment. (Source: Dorialexander, shxf0072, colin_fraser)

AI Industry Layoffs and Talent Mobility: Meta’s superintelligence lab laid off 600 employees, including Tian Yuandong’s team, sparking internal questions about the timing of the layoffs and whether it was a case of “discarding the donkey after it has done its work” following Llama 4.5’s training. Tian Yuandong clarified he was not involved in Llama 4 and noted that the layoffs affected product application and cross-functional roles, highlighting the instability and talent flux within Meta’s AI division. (Source: 量子位, Yuchenj_UW)

AI in Research: Ethics and Originality: A study found that after in-depth analysis, only 24% of AI-written research papers contained plagiarism. This result was considered “surprisingly good,” sparking discussions on the quality and originality of AI-generated research, and its potential impact on academia. (Source: paul_cal)

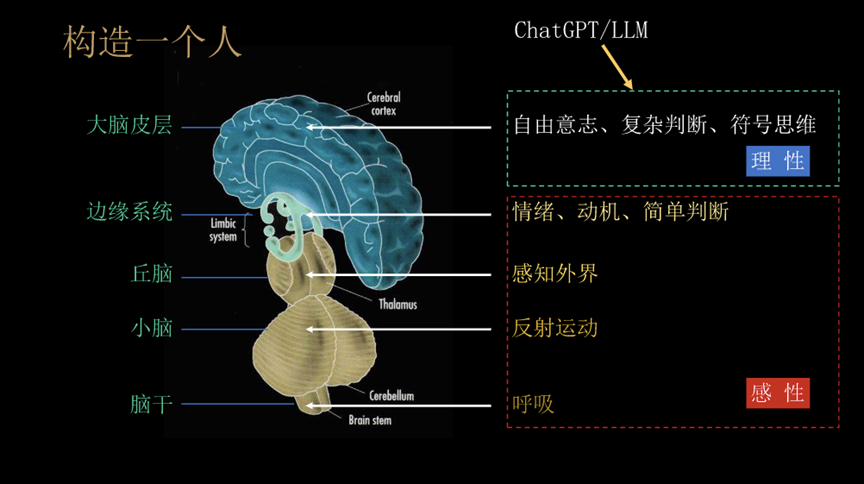

AGI and Humanity’s Future: A Philosophical Discussion: Professor Liu Jia of Tsinghua University shared 10 perspectives on AGI evolution, agent development, and the challenges facing humanity’s future. He discussed AGI’s characteristics of “task switching” and “dynamic strategies in open environments,” the societal impact once AI possesses emotional warmth and consciousness, and future possibilities of human-machine integration or human extinction, prompting philosophical reflections on AI’s profound impact. (Source: 36氪)

Kimi’s Writing Quality and OpenAI Competition: OpenAI employee roon praised Kimi K2’s excellent writing, sparking community discussion on Chinese models’ writing capabilities and OpenAI’s stance. The community speculates that Kimi K2 might be trained on a vast amount of copyrighted books, and its non-“flattering” personality is favored by users. Furthermore, it excels in specific language translation and contextual understanding, contrasting with the “emasculated” feel of ChatGPT 5. (Source: Reddit r/LocalLLaMA, bookwormengr)

AI Product and Development Trends: Hacker News discusses topics such as the slow performance of the AI tool Codex in Zed, AI assistants misreporting news at rates as high as 45%, and Meta laying off 600 AI employees. These discussions reflect challenges in AI development and usage, including tool performance, information accuracy, and strategic adjustments in AI investment by large tech companies. (Source: Reddit r/artificial)

Discussion on Chinese Large Model Business Models: Users call for Chinese large models like Kimi and Qwen to offer subscription-based pricing, pointing to the popularity of the Claude, GPT, and GLM 4.5 plans. This reflects the community’s expectations for how Chinese large models are sold, as well as discussions on user willingness to pay and market competition strategies. (Source: bigeagle_xd)

💡 Other

SeaweedFS: High-Performance Distributed File System: SeaweedFS is a fast, highly scalable distributed file system designed for storing billions of files. It offers O(1) disk seeks, supports cloud tiering, Kubernetes, and the S3 API, and is optimized for small-file storage. By using a Master server to manage volumes and Volume servers to manage file metadata, it achieves high concurrency and fast access, making it suitable for a wide range of storage needs. (Source: GitHub Trending)
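
The split between a master that tracks volumes and volume servers that track files can be modeled in a few lines. This is a toy model, not SeaweedFS code: the class names, the in-memory `bytearray` standing in for a volume file, and the simplified `"volume,key"` file id are assumptions for illustration.

```python
# Toy model of a two-step lookup; class and field names are hypothetical.

class Master:
    """Knows only which server hosts which volume, not individual files."""
    def __init__(self):
        self.volume_locations = {}            # volume id -> VolumeServer

    def lookup(self, volume_id):
        return self.volume_locations[volume_id]

class VolumeServer:
    """Keeps an in-memory index so each read is one seek into the volume."""
    def __init__(self):
        self.volume = bytearray()             # stands in for one large volume file
        self.index = {}                       # file key -> (offset, size)

    def write(self, key, data):
        self.index[key] = (len(self.volume), len(data))
        self.volume += data

    def read(self, key):
        offset, size = self.index[key]        # dictionary lookup, no directory walk
        return bytes(self.volume[offset:offset + size])

master = Master()
server = VolumeServer()
master.volume_locations[3] = server
server.write("a1", b"hello")
server.write("b2", b"small file")

def fetch(file_id):
    volume_id, key = file_id.split(",")       # e.g. "3,b2" -> volume 3, key "b2"
    return master.lookup(int(volume_id)).read(key)

data = fetch("3,b2")
print(data)
```

Because the master holds only a small volume table and each volume server resolves a file with one index lookup, many small files can share a single large file on disk without a per-file metadata bottleneck.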

NVIDIA Isaac Sim: AI Robot Simulation Platform: NVIDIA Isaac Sim is an open-source simulation platform built on NVIDIA Omniverse, used for developing, testing, and training AI-powered robots. It supports importing various robot system formats, leverages GPU-accelerated physics engines and RTX rendering, and offers end-to-end workflows including synthetic data generation, reinforcement learning, ROS integration, and digital twin simulation, providing comprehensive support for robot development. (Source: GitHub Trending)

Rondo Energy Launches World’s Largest Thermal Battery: Rondo Energy has launched what it claims is the world’s largest thermal battery, which stores electrical energy as heat and provides a stable heat source, with the potential to aid industrial decarbonization. The battery has a capacity of 100 MWh and an efficiency exceeding 97%, and it has operated for 10 weeks and met its targets. Although its use in enhanced oil recovery has sparked controversy, the company believes this deployment can clean up existing fossil fuel operations and advance energy storage technology in the industrial sector. (Source: MIT Technology Review)