Keywords: AGI, Sora2, RAG, Gemini 3.0, AI toys, Intelligent agents, Large language models, AI video generation technology, Retrieval-augmented generation evolution, Multimodal AI models, AI drug discovery platforms, Computing-power collaboration technology

🎯 Trends

Andrej Karpathy: AGI is a Long Curve, Not Explosive Growth : Former OpenAI core researcher Andrej Karpathy points out that the current talk of an AI “boom year” is overly enthusiastic, and that AGI will take decades of gradual evolution to achieve. He emphasizes that true intelligent agents should possess persistence, memory, and continuity, unlike current “ghost-like” chatbots. Future AI development should shift from “data stuffing” to “teaching objectives,” training AI through task-oriented, feedback-loop mechanisms to become “partners” with identity, roles, and responsibilities in society, rather than mere tools. (Source: 36氪)

Sora2 Released: AI Video Generation Enters ‘Super Acceleration’ Phase : OpenAI has released Sora2 and its social application Sora App, with downloads surpassing ChatGPT’s, marking the entry of the AI video domain into a “super acceleration” period. Sora2 achieves breakthroughs in physical realism, multimodal fusion, and “cinematic language” understanding, and can automatically generate videos with multiple camera cuts and coherent storylines, significantly lowering the barrier to creation. Other vendors, including Baidu and Google, have also rapidly iterated their products, but copyright and monetization models remain practical challenges for the industry. (Source: 36氪)

RAG Paradigm Evolution: The ‘Life and Death’ Debate Under Agents and Long Context Windows : With the rise of LLM long context windows and Agent capabilities, the future of RAG (Retrieval-Augmented Generation) has sparked heated discussion. LlamaIndex believes RAG is evolving into “Agentic Retrieval,” achieving smarter knowledge base queries through a hierarchical Agent architecture; Hamel Husain emphasizes the importance of RAG as a rigorous engineering discipline; while Nicolas Bustamante declares “naive RAG is dead,” arguing that Agents combined with long contexts can directly perform logical navigation, and RAG will be relegated to a component of the Agent’s toolkit. (Source: 36氪)

Google Gemini 3.0 Model Allegedly Launched on LMArena, Demonstrating New Multimodal Capabilities : Google Gemini 3.0’s “alias” models (lithiumflow and orionmist) have allegedly appeared on the LMArena leaderboard. Practical tests show they can accurately identify time in “telling time” tasks, significantly improve SVG image generation capabilities, and for the first time demonstrate good music composition ability, mimicking musical styles and maintaining rhythm. These advancements suggest significant breakthroughs for Gemini 3.0 in multimodal understanding and generation, sparking industry anticipation for Google’s upcoming new model release. (Source: 36氪)

AI Toys Transition from ‘AI + Toys’ to Deep Integration of ‘AI x Toys’ : The AI toy market is undergoing a transformation from simple functional stacking to deep integration, with the global market size expected to reach hundreds of billions by 2030. New-generation AI toys utilize multimodal technologies such as speech recognition, facial recognition, and emotional analysis to actively perceive scenarios, understand emotions, and provide personalized companionship and education. The industry model is also shifting from “hardware sales” to “continuous service + content output,” meeting the growing emotional companionship and life assistance needs of children, young people, and the elderly, becoming an important vehicle for humans to learn to coexist with AI. (Source: 36氪)

Luxury Tech Brand BUTTONS Releases SOLEMATE Audiovisual Robot Powered by HALI Agent : BUTTONS has launched the SOLEMATE intelligent audiovisual robot, equipped with Terminus Group’s general-purpose agent HALI. HALI possesses spatial cognition and physical interaction capabilities, understanding environments through a 3D semantic memory model, and can proactively provide services based on user location and intent. The robot leverages large-scale collaborative computing from intelligent computing centers to achieve optimal orchestration of resources, devices, and behaviors, marking AI’s progression towards embodied general intelligence, capable of breaking through the digital world barrier to “perceive-reason-act” in physical environments. (Source: 36氪)

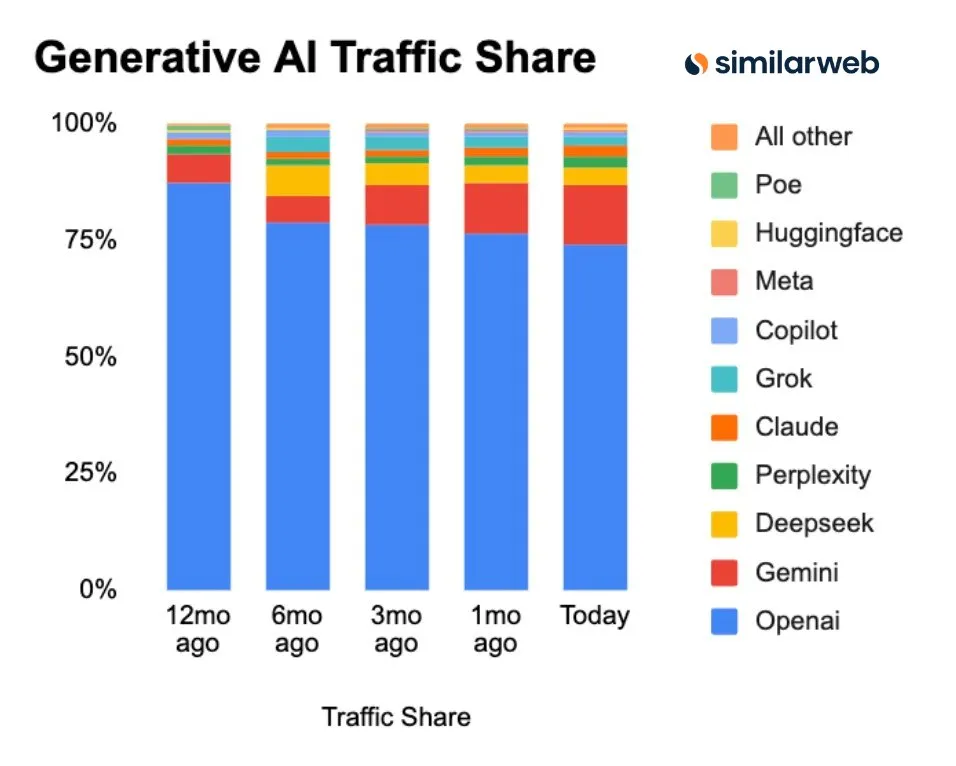

Chinese AI Models Rise, Market Share and Downloads Grow Significantly : Latest data shows that the GenAI market landscape is changing, with ChatGPT’s market share continuously declining while competitors such as Perplexity, Gemini, and DeepSeek are rising. Notably, the US open-source models that were prominent on the LMArena leaderboard last year have been overtaken by Chinese models this year, and Chinese models such as DeepSeek and Qwen have twice the download volume of US models on Hugging Face, demonstrating China’s growing competitiveness in open AI. (Source: ClementDelangue)

Google Releases Series of AI Updates: Veo 3.1, Gemini API Maps Integration, etc. : Google released multiple AI advancements this week, including video model Veo 3.1 (supporting scene expansion and reference images), Gemini API integration with Google Maps data, Speech-to-Retrieval research (bypassing speech-to-text for direct data querying), a $15 billion investment in an Indian AI center, and Gemini scheduling AI features for Gmail/Calendar. Concurrently, its AI Overviews feature is facing investigation by Italian news publishers due to being a “traffic killer,” and the C2S-Scale 27B model for biological data translation was released. (Source: Reddit r/ArtificialInteligence)

Microsoft Launches MAI-Image-1, Image Generation Model Enters Top Ten : Microsoft AI has released its first fully independently developed image generation model, MAI-Image-1, which has entered the top ten on LMArena’s text-to-image model leaderboard for the first time. This advancement demonstrates Microsoft’s strong capabilities in native image generation technology and signals its further push into multimodal AI, promising to provide users with a better image creation experience. (Source: dl_weekly)

🧰 Tools

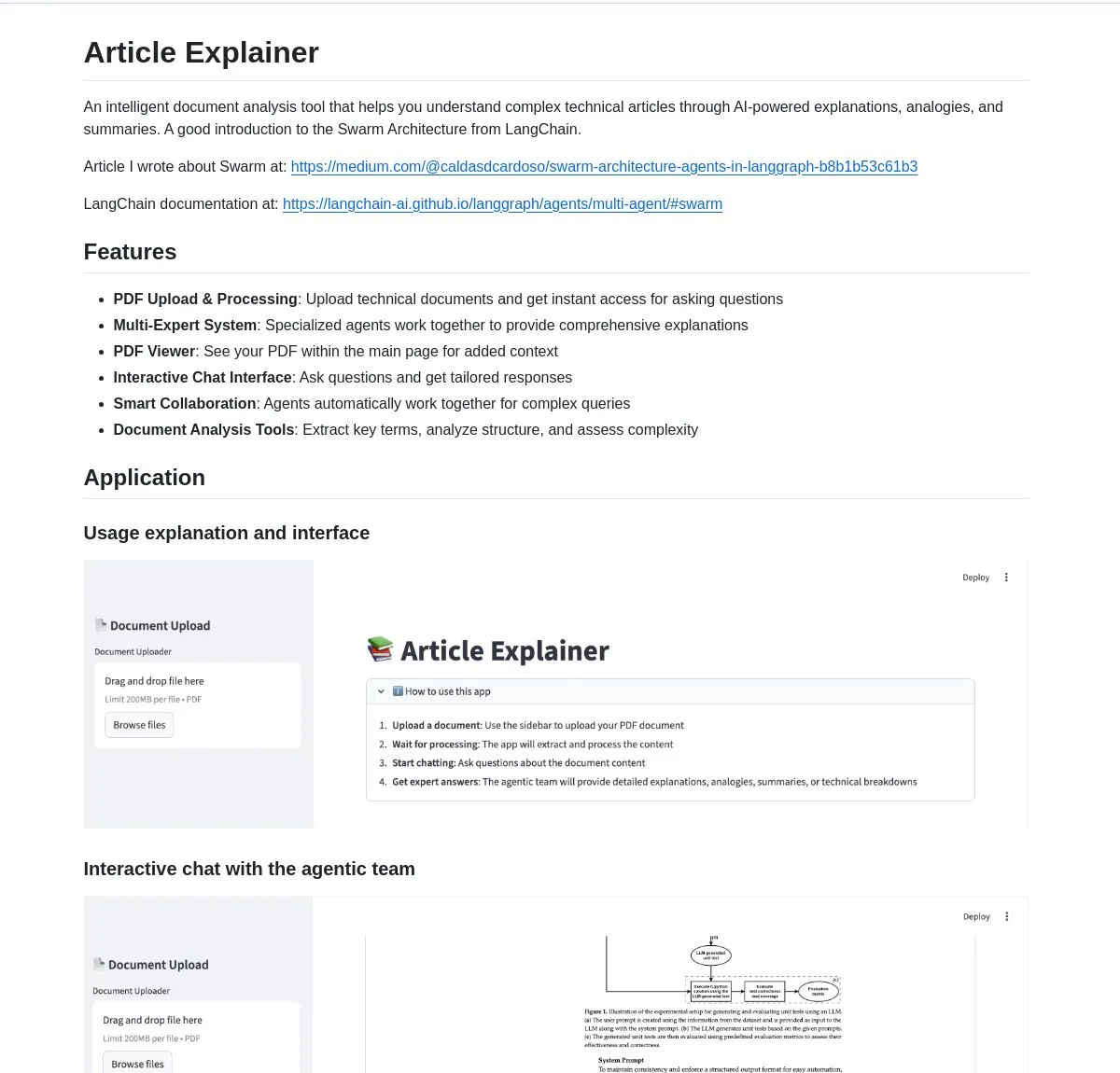

LangChain Article Explainer: AI Document Analysis Tool : LangChain has released an AI document analysis tool called “Article Explainer,” which utilizes LangGraph’s Swarm Architecture to break down complex technical articles. Through multi-agent collaboration, it provides interactive explanations and in-depth insights, allowing users to query information using natural language, greatly improving the efficiency of understanding technical documentation. (Source: LangChainAI)

Claude Code Skill: Transforming Claude into a Professional Project Architect : A developer has built a Claude Code Skill that transforms Claude into a professional project architect. This skill enables Claude to automatically generate requirements documents, design documents, and implementation plans before coding, solving the problem of context loss in complex projects. It can quickly output user stories, system architecture, component interfaces, and layered tasks, significantly improving project planning and execution efficiency, supporting the development of various web applications, microservices, and ML systems. (Source: Reddit r/ClaudeAI)

Perplexity AI Comet: AI Browser Extension for Enhanced Browsing and Research Efficiency : The Perplexity AI Comet browser extension has launched early access, aiming to enhance users’ browsing, research, and productivity. This tool provides quick answers, summarizes web page content, and integrates AI functionalities directly into the browser experience, offering users a smarter, more efficient way to acquire information, especially beneficial for those who need to quickly digest large amounts of online information. (Source: Reddit r/artificial)

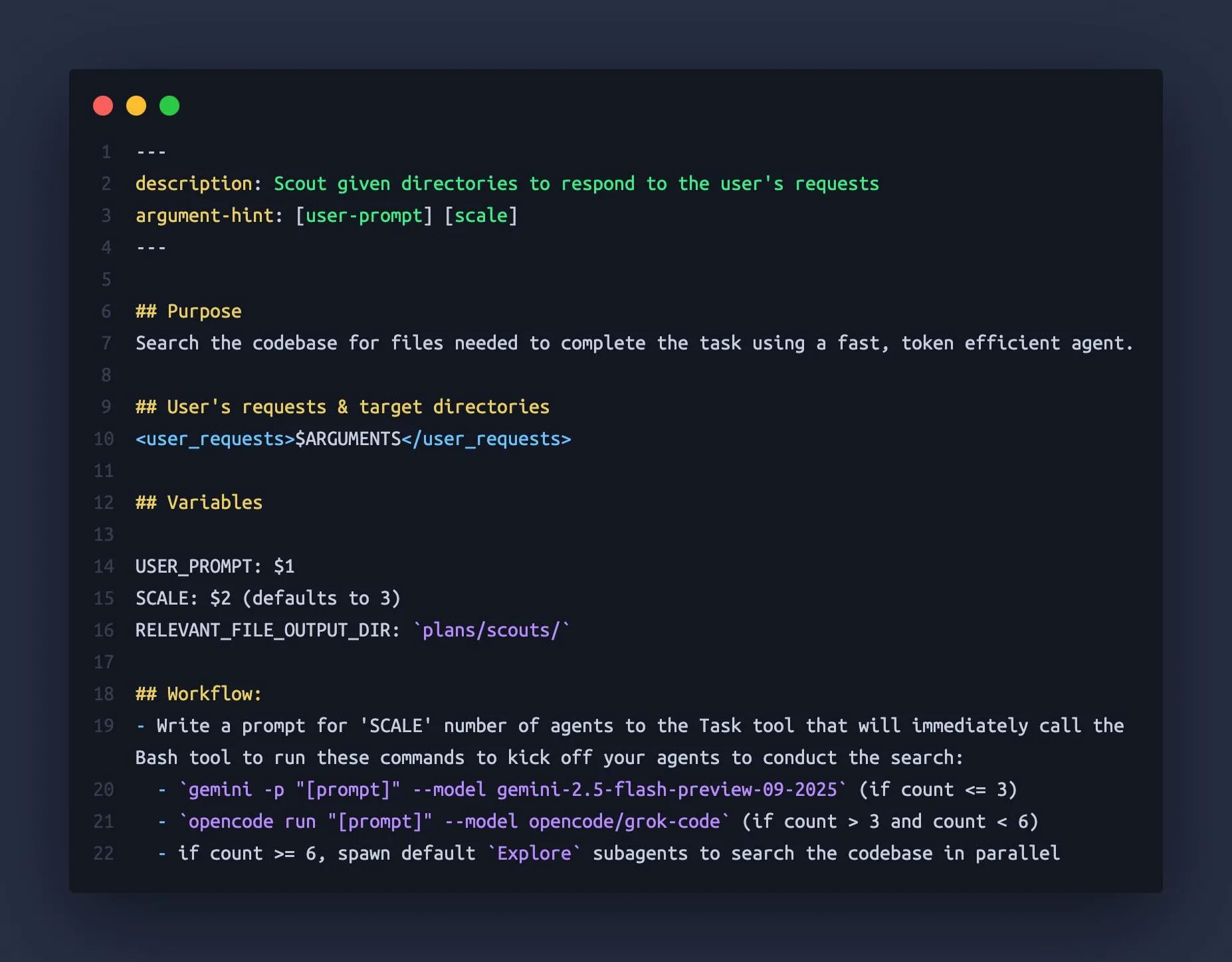

Claude Code Uses Gemini CLI and OpenCode as ‘Sub-Agents’ : A developer discovered that Claude Code can orchestrate other large language models like Gemini 2.5 Flash and Grok Code Fast as “sub-agents,” leveraging their large context windows (1M-2M tokens) to quickly scout codebases and provide Claude Code with more comprehensive contextual information. This combined usage effectively avoids Claude’s problem of “losing context” in complex tasks, enhancing the efficiency and accuracy of the coding assistant. (Source: Reddit r/ClaudeAI)

CAD Generation Model k-1b: Gemma3-1B Fine-tuned 3D Model Generator : A developer has built a 1B-parameter CAD generation model named k-1b, which allows users to generate 3D models in STL format simply by entering a description. The model was trained using AI-assisted generation and repair of an OpenSCAD dataset, and fine-tuned based on Gemma3-1B. The author also provides a CLI tool supporting OBJ model conversion and terminal preview, offering a low-cost, efficient AI-assisted tool for 3D design and manufacturing. (Source: karminski3, Reddit r/LocalLLaMA)

neuTTS-Air: 0.7B Voice Cloning Model Runnable on CPU : Neuphonic has released a 0.7B voice cloning model called neuTTS-Air, whose biggest highlight is its ability to run on a CPU. Users only need to provide a target voice and corresponding text to clone the voice and generate audio for new text, producing approximately 18 seconds of audio in about 30 seconds. The model currently only supports English, but its lightweight nature and CPU compatibility offer a convenient voice cloning solution for individual users and small developers. (Source: karminski3)

Claude Code M&A Deal Comp Agent: Generating Excel Deal Terms from PDF Parsing : A developer has created an M&A deal analysis agent using Claude Code Skills and LlamaIndex’s semtools PDF parsing capabilities. This agent can parse public M&A documents (such as DEF 14A), analyze each PDF, and automatically generate Excel spreadsheets containing deal terms and comparable company data. This tool significantly enhances the efficiency and accuracy of financial analysis, especially for scenarios involving complex financial documents. (Source: jerryjliu0)

Anthropic Skills and Plugins: Feature Overlap Causes Developer Confusion : Anthropic recently launched its Skills and Plugins features, which add custom functionality to AI agents. However, some developers report that the two features overlap and are confusing to use, leaving them unsure which one fits a given application scenario or development strategy. This suggests that Anthropic has room to improve its feature design and release strategy to better guide developers in using its AI capabilities. (Source: Vtrivedy10, Reddit r/ClaudeAI)

📚 Learning

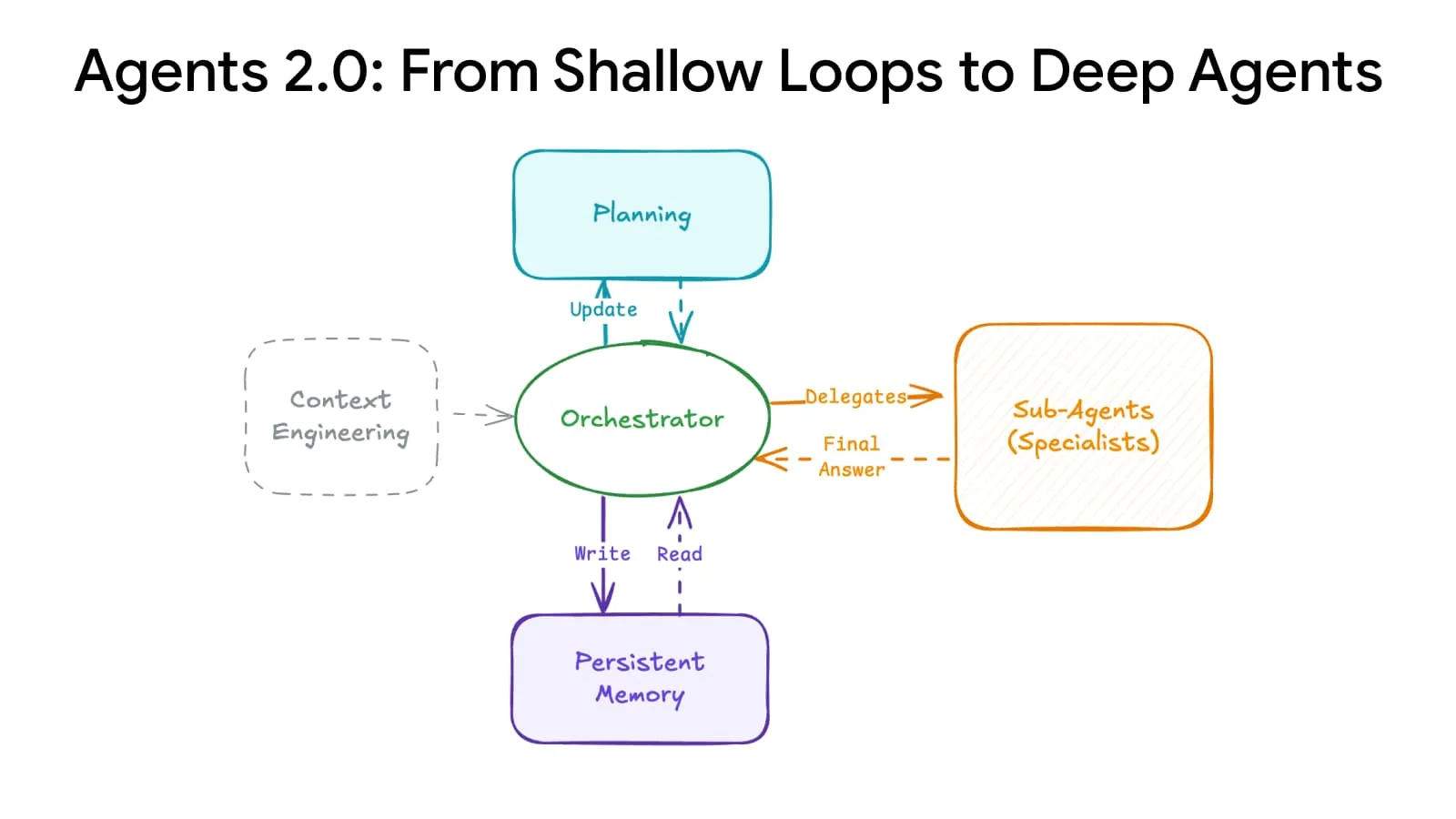

Deep Agents Evolution: Advanced Planning and Memory Systems Scale Up Agents : A breakthrough in AI architecture has achieved “Deep Agents Evolution,” enabling agents to scale from 15 steps to over 500 steps through advanced planning and memory systems, fundamentally changing how AI handles complex tasks. This technology is expected to allow AI to maintain coherence over longer time sequences and more complex logical chains, laying the foundation for building more powerful general AI agents. (Source: LangChainAI)

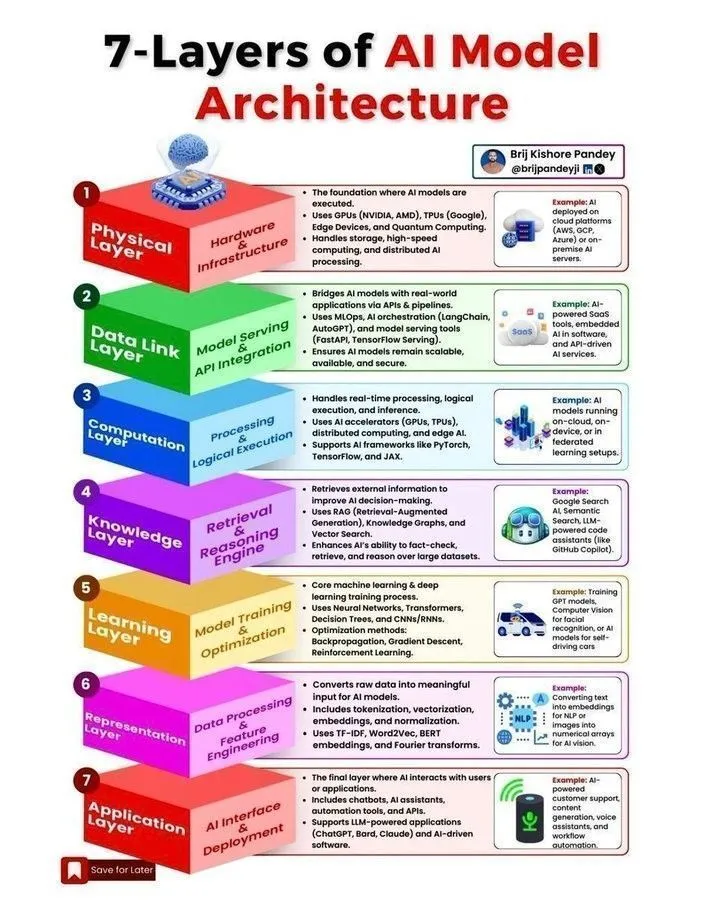

AI Model Architectures and Agent Development Roadmaps : Multiple resources on AI model architectures, agent development roadmaps, the machine learning lifecycle, and the distinctions between AI, Generative AI, and Machine Learning have been shared on social media. These materials aim to help developers and researchers understand core AI system concepts, learn the key steps for building scalable AI agents, and master the essential skills required in the AI field in 2025. (Source: Ronald_vanLoon)

Stanford CME295 Course: Transformer and Large Model Engineering Practices : Stanford University has released the CME295 course series, focusing on the Transformer architecture and practical engineering knowledge for Large Language Models (LLMs). This course avoids complex mathematical concepts, emphasizing practical applications, providing valuable learning resources for engineers wishing to delve into large model development and deployment. Concurrently, the CS224N course is recommended as the best choice for NLP beginners. (Source: karminski3, QuixiAI, stanfordnlp)

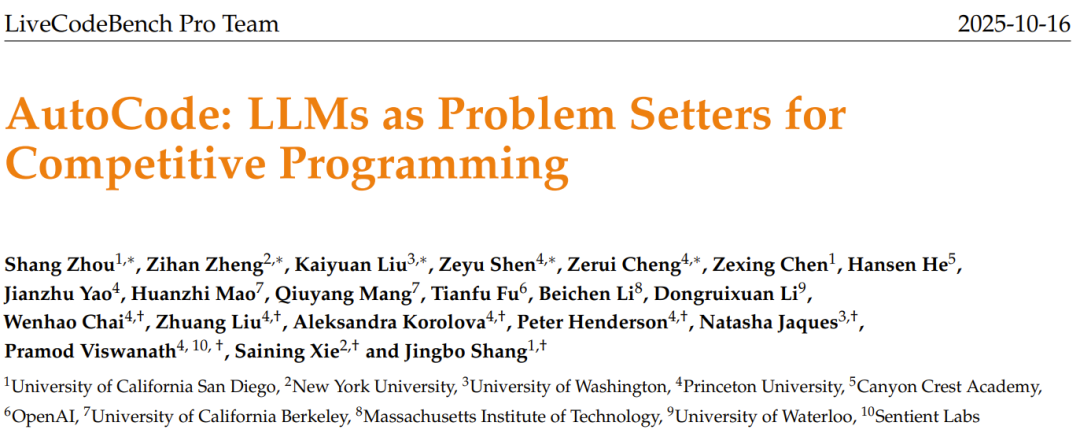

AI Question Generator AutoCode: LLM Generates Original Programming Contest Problems : The LiveCodeBench Pro team has launched the AutoCode framework, which utilizes LLMs in a closed-loop, multi-role system to automate the creation and evaluation of competitive programming problems. This framework achieves high reliability in test case generation through an enhanced validator-generator-checker mechanism and can inspire LLMs to generate high-quality, original new problems from “seed problems,” potentially paving the way for more rigorous programming contest benchmarking and model self-improvement. (Source: 36氪)

KAIST Develops AI Semiconductor Brain: Combining Transformer and Mamba Efficiency : The Korea Advanced Institute of Science and Technology (KAIST) has developed a new AI semiconductor brain that successfully combines the intelligence of the Transformer architecture with the efficiency of the Mamba architecture. This groundbreaking research aims to address the trade-off between performance and energy consumption in existing AI models, providing a new direction for future efficient, low-power AI hardware design, and is expected to accelerate the development of edge AI and embedded AI systems. (Source: Reddit r/deeplearning)

Multi-Stage NER Pipeline: Fuzzy Matching and LLM Masking for Reddit Comment Analysis : A study proposes a multi-stage Named Entity Recognition (NER) pipeline combining high-speed fuzzy matching and LLM masking techniques to extract entities and sentiments from Reddit comments. This method first identifies known entities through fuzzy search, then processes masked text with an LLM to discover new entities, and finally performs sentiment analysis and summarization. This hybrid approach balances speed with discovery capability when processing large-scale, noisy domain-specific text. (Source: Reddit r/MachineLearning)
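The staged flow described above can be sketched roughly as follows. This is a minimal illustration, not the study’s code: the entity list, the `[KNOWN]` placeholder, the 0.8 cutoff, and all function names are assumptions, the fuzzy stage uses Python’s standard `difflib` rather than whatever high-speed matcher the authors chose, and `discover_new_entities` is a trivial stand-in for the LLM call.

```python
import difflib
import re

# Hypothetical list of already-known entities (assumption for illustration).
KNOWN_ENTITIES = ["Nvidia", "OpenAI", "Anthropic"]


def fuzzy_find_entities(text, known=KNOWN_ENTITIES, cutoff=0.8):
    """Stage 1: map each token to a known entity when it is a close fuzzy match."""
    lowered = {name.lower(): name for name in known}
    found = []
    for token in re.findall(r"[A-Za-z0-9]+", text):
        match = difflib.get_close_matches(
            token.lower(), list(lowered), n=1, cutoff=cutoff
        )
        if match:
            found.append((token, lowered[match[0]]))
    return found


def mask_known(text, found):
    """Stage 2 prep: hide matched tokens so the next stage sees only unknowns."""
    masked = text
    for token, _canonical in found:
        masked = re.sub(rf"\b{re.escape(token)}\b", "[KNOWN]", masked)
    return masked


def discover_new_entities(masked_text):
    """Stand-in for the LLM stage: return capitalized words that were not masked."""
    words = re.findall(r"\b[A-Z][a-zA-Z]+\b", masked_text)
    return [w for w in words if w != "KNOWN"]


comment = "Nvdia cards are great but Groq is faster"
found = fuzzy_find_entities(comment)
masked = mask_known(comment, found)
new_entities = discover_new_entities(masked)
```

In a real pipeline the stand-in would be replaced by a prompt that receives the masked text, and sentiment analysis and summarization would run afterwards on the combined entity list.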

Experience Deploying ML-Driven Trading Systems: Addressing ‘Look-Ahead Bias’ and ‘State Drift’ in Real-time Environments : A developer shared experiences deploying ML-driven trading systems, emphasizing how critical it is to address “look-ahead bias” and “state drift” in real-time environments. Strict line-by-line model processing and “golden master” scripts ensure deterministic consistency between historical testing and real-time operation. The system also includes a validator that compares real-time predictions against the validator’s own predictions and requires a Pearson correlation coefficient of 1.0 between them, ensuring model reliability. (Source: Reddit r/MachineLearning)
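A consistency check of this kind might look like the sketch below. It is an illustration under assumptions, not the developer’s validator: the function names, the tolerance, and the sample values are all hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


def predictions_consistent(live, replayed, tol=1e-9):
    """True when live and replayed predictions correlate at 1.0 within tol."""
    return abs(pearson(live, replayed) - 1.0) <= tol


# Hypothetical prediction series for illustration.
live = [0.1, 0.4, -0.2, 0.3]
replayed_ok = [0.1, 0.4, -0.2, 0.3]
replayed_drifted = [0.1, 0.4, -0.2, 0.25]
```

One caveat on the design: a correlation of 1.0 is also reached when one series is a positive rescaling or a constant shift of the other, so a strict determinism check would typically also compare the values element by element.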

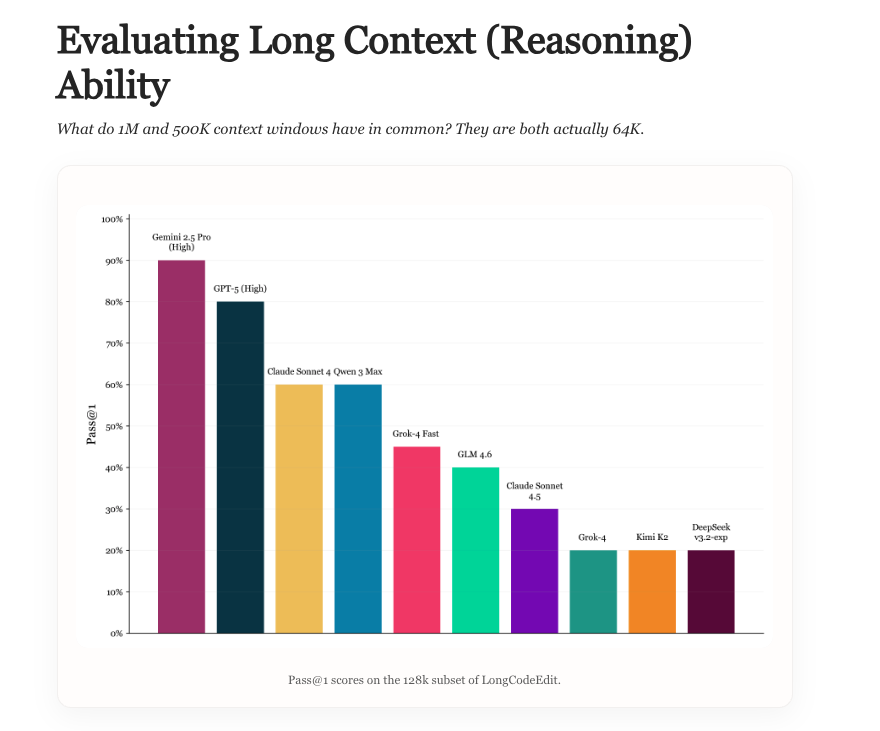

Long Context Evaluation: New Benchmark for LLM Long Context Capabilities : New research explores the current state of LLM long context evaluation, analyzing the pros and cons of existing benchmarks, and introduces a new benchmark called LongCodeEdit. This study aims to address the shortcomings of current evaluation methods in measuring LLMs’ ability to handle long texts and complex code editing tasks, providing new tools and insights for more accurately assessing model performance in long context scenarios. (Source: nrehiew_, teortaxesTex)

Manifold Optimization: Geometry-Aware Optimization for Neural Network Training : Manifold optimization enables geometry-aware training for neural networks. New research extends this idea to modular manifolds, helping design optimizers that understand inter-layer interactions. By combining forward functions, manifold constraints, and norms, this framework describes how inter-layer geometry and optimization rules are composed, thereby achieving geometry-aware optimization at a deeper level, improving the training efficiency and effectiveness of neural networks. (Source: TheTuringPost)

Kolmogorov Complexity in AI Research: AI’s Potential to Simplify Research Findings : Discussion points out that the core “essence” of new research and blog content can be compressed into code, artifacts, and mathematical abstractions. Future AI systems are expected to “translate” complex research into simple artifacts, significantly reducing the cost of understanding new research by extracting core differences and reproducing key results, enabling researchers to more easily keep up with the vast number of papers on ArXiv, and facilitating rapid digestion and application of research findings. (Source: jxmnop, aaron_defazio)

Father of LSTM on Residual Learning Origins Controversy: Hochreiter’s 1991 Contribution : Jürgen Schmidhuber, the father of LSTM, has once again stated that the core idea of residual learning was proposed by his student Sepp Hochreiter as early as 1991 to solve the vanishing gradient problem in RNNs. Hochreiter introduced recurrent residual connections in his 1991 diploma thesis, fixing weights at 1.0, which Schmidhuber considers the foundation of the residual ideas in later deep learning architectures such as LSTM, Highway networks, and ResNet. Schmidhuber emphasizes the importance of early contributions to the development of deep learning. (Source: 量子位)
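Why a weight fixed at 1.0 helps can be seen in a simplified scalar illustration. This is a textbook-style sketch with assumed notation ($h_t$, $w$, $u$, $f$), not the notation of the original thesis. For a recurrent unit with self-connection weight $w$ and activation $f$:

$$
h_t = f\!\left(w\,h_{t-1} + u\,x_t\right), \qquad
\frac{\partial h_t}{\partial h_{t-k}} = \prod_{i=0}^{k-1} w\, f'\!\left(w\,h_{t-i-1} + u\,x_{t-i}\right).
$$

If every factor has magnitude below 1, the product shrinks exponentially in $k$ (vanishing gradient); if every factor has magnitude above 1, it grows exponentially. With an identity activation on the self-connection and the weight fixed at $w = 1.0$, the unit becomes additive:

$$
h_t = h_{t-1} + u\,x_t, \qquad
\frac{\partial h_t}{\partial h_{t-k}} = 1 \quad \text{for all } k,
$$

so the error signal passes back through time unchanged. The additive form $y = x + F(x)$ used in later feedforward residual networks has the same property across layers.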

💼 Business

Zhiweituo Pharma Secures Tens of Millions in Seed Round Funding: AI-Assisted Oral Small Molecule Drug R&D : Beijing Zhiweituo Pharmaceutical Technology Co., Ltd. has completed a seed round financing of tens of millions of yuan, led by NewLilly Capital with follow-on investment from Qingtang Investment. The funds will be used to advance preclinical R&D of core pipelines and build an AI interactive molecular design platform. The company focuses on the AI drug discovery track, utilizing its independently developed EnCore platform to accelerate lead compound discovery and molecular optimization, primarily targeting oral small molecule drugs for autoimmune diseases, with the potential to tackle “hard-to-drug” targets. (Source: 36氪)

Damou Technology Completes Nearly 100 Million Yuan A+ Round Financing: Compute-Electricity Synergy Technology Solves High Energy Consumption Challenge for Intelligent Computing Centers : Damou Technology has completed a nearly 100 million yuan A+ round of financing, led by Puquan Capital, a subsidiary of CATL. This round of funding will be used for the R&D and promotion of core technologies such as energy large models, compute-electricity synergy platforms, and intelligent agents, aiming to solve the high energy consumption problem of intelligent computing centers through “compute-electricity synergy” and assist in the construction of new power systems. Relying on its full-stack self-developed energy large model, Damou Technology has partnered with leading enterprises such as SenseTime and Cambricon to provide energy optimization solutions for high-energy-consuming computing infrastructure. (Source: 36氪)

JD, Tmall, and Douyin Embrace AI for Double 11: Technology Empowers E-commerce Promotion Growth : This year’s Double 11 has become a proving ground for AI e-commerce, with leading platforms like JD, Tmall, and Douyin fully embracing AI technology. AI is being applied across the entire chain: optimizing the consumer experience, empowering merchants, and improving logistics and delivery, content distribution, and purchase decisions. For example, JD has upgraded “photo shopping,” Douyin’s Doubao is integrated into its mall, and SMZDM (Zhi De Mai Ke Ji) has enabled AI dialogue for price comparison. AI is becoming a new engine for e-commerce growth, reshaping the industry’s competitive landscape through extreme efficiency and cost control, and pushing e-commerce from “shelf/content e-commerce” to the “intelligent e-commerce” stage. (Source: 36氪)

White House AI Head on US-China AI Competition: Chip Exports and Ecosystem Dominance : White House AI and Crypto “czar” David Sacks, in an interview, elaborated on the US strategy in the US-China AI competition, emphasizing the importance of innovation, infrastructure, and exports. He pointed out that US chip export policy to China needs to be “nuanced,” restricting the most advanced chips while avoiding a complete deprivation that could lead to Huawei’s monopoly in the domestic market. Sacks stressed that the US should build a vast AI ecosystem, becoming the preferred global technology partner, rather than stifling competitiveness through bureaucratic control. (Source: 36氪)

🌟 Community

OpenAI Commercialization Controversy: From Non-Profit to Profit-Seeking, Sam Altman’s Reputation Damaged : OpenAI CEO Sam Altman has sparked widespread controversy over ChatGPT’s loosening of restrictions on explicit content, GPT-5’s model performance, and the company’s aggressive infrastructure expansion. The community questions OpenAI’s shift from its non-profit origins to commercial profit-seeking and expresses concerns about the direction of AI development, investment bubbles, and the ethical treatment of employees. Altman’s responses have not fully appeased public opinion, highlighting the tension between AI empire expansion and social responsibility. (Source: 36氪, janusch_patas, Reddit r/ArtificialInteligence)

Large Model Poisoning: Data Poisoning, Adversarial Samples, and AI Security Challenges : Large models face security threats such as data poisoning, backdoor attacks, and adversarial samples, which lead to abnormal or harmful model outputs. These techniques are even being used for commercial advertising (GEO), technical showcasing, and cybercrime. Research shows that a small amount of malicious data can significantly affect a model. This raises concerns about AI hallucinations, manipulation of user decisions, and public safety risks, and underscores the importance of building model “immune systems,” strengthening data auditing, and maintaining continuous defense mechanisms. (Source: 36氪)

The Plight of Data Labelers in the AI Era: Master’s and PhD Holders Engaged in Low-Paid Repetitive Labor : With the development of large AI models, the academic requirements for data labeling jobs have increased (even requiring Master’s and PhD degrees), yet salaries remain far below those of AI engineers. These “AI trainers” perform tasks such as evaluating AI-generated content, conducting ethical reviews, and acting as expert knowledge coaches, but receive low hourly wages and face unstable employment, often losing their jobs once projects conclude. This “cyber assembly line” model of layered subcontracting and exploitation raises profound reflections on labor ethics and fairness in the AI industry. (Source: 36氪)

AI’s Impact on Creativity and Human Value: End or Elevation? : The community discusses AI’s impact on human creativity, suggesting that AI has not killed creativity but rather revealed the relative mediocrity of human creativity. AI excels at pattern recombination and generation, but true originality, contradiction, and unpredictability remain unique human strengths. The emergence of new tools always eliminates the middle ground, forcing humans to seek higher levels of breakthrough in content and creativity, making true creativity more precious. (Source: Reddit r/artificial)

AI-Induced Existential Anxiety and Coping Strategies: Real Problems vs. Over-Worrying : Facing potential existential threats from AI, the community discussed how to cope with the resulting “existential dread.” Some views suggest that this fear might stem from excessive fantasizing about the future, recommending people return to reality and focus on present life. At the same time, others point out that AI-related economic shocks and employment issues are more pressing real threats, emphasizing that AI safety should be considered alongside socio-economic impacts. (Source: Reddit r/ArtificialInteligence)

Karpathy’s Views Spark Heated Discussion: AGI Decades-Long Theory, ‘Ghost-like’ Agents, and AI Development Path : Andrej Karpathy’s views on AGI taking “decades” and existing AI agents being “ghost-like” have sparked widespread discussion in the community. He emphasized that AI needs persistence, memory, and continuity to become true intelligent agents, and suggested that AI training should shift from “data stuffing” to “teaching objectives.” These views are seen as a sober reflection on current AI hype, prompting a re-evaluation of AI’s long-term development path and assessment criteria. (Source: TheTuringPost, NandoDF, random_walker, lateinteraction, stanfordnlp)

ChatGPT Market Share Continues to Decline: Perplexity, Gemini, DeepSeek, and Other Competitors Rise : Similarweb data shows that ChatGPT’s market share continues to decline, dropping from 87.1% a year ago to 74.1%. Meanwhile, competitors such as Gemini, Perplexity, DeepSeek, Grok, and Claude are steadily gaining market share. This trend indicates intensifying competition in the AI assistant market, with user choices diversifying, and ChatGPT’s dominant position being challenged. (Source: ClementDelangue, brickroad7)

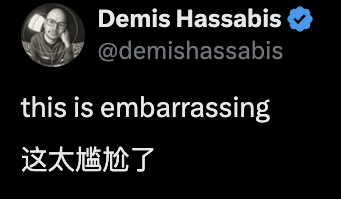

GPT-5 Math Blunder: OpenAI’s Over-Marketing and Peer Skepticism : OpenAI researchers made a high-profile claim that GPT-5 had solved multiple Erdős mathematical problems, but it later emerged that the model had merely retrieved existing answers from the internet rather than solving the problems independently. The incident drew public ridicule from industry heavyweights such as DeepMind CEO Hassabis and Meta’s LeCun, who questioned OpenAI’s excessive marketing. It also highlights the lack of rigor in how AI capabilities are promoted, as well as the rivalry between labs. (Source: 量子位)

AI’s Hidden Environmental Costs: Energy Consumption and Water Demand : Historical research shows that from the telegraph to AI, communication systems have always come with hidden environmental costs. AI and modern communication systems rely on large-scale data centers, leading to soaring energy consumption and water demand. It is estimated that by 2027, AI’s water usage will be equivalent to Denmark’s annual water consumption. This highlights the environmental price behind the rapid development of AI technology, calling for governments to strengthen regulation, mandate disclosure of environmental impacts, and support low-impact projects. (Source: aihub.org)

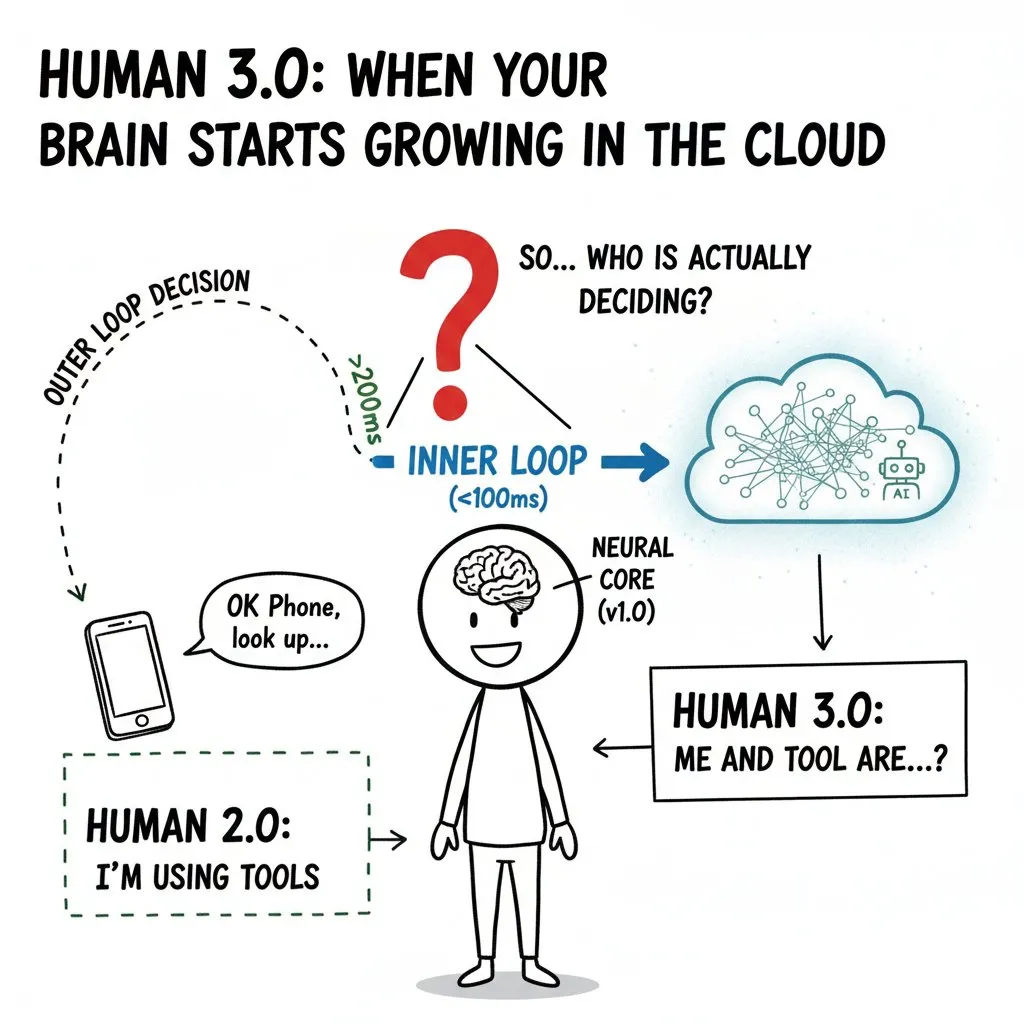

AI’s ‘Invasion’ of the Human Mind: Brain-Computer Interfaces and the Ethical Boundaries of ‘Human 3.0’ : The community deeply discussed the potential impact of Brain-Computer Interfaces (BCI) and AI on the human mind, introducing the concept of “Human 3.0.” When external computing power enters the “inner loop” of human decision-making, so fast that the brain cannot distinguish the source of signals, it will raise ethical issues concerning the boundaries of “self,” value judgments, and long-term health. The article emphasizes that zero-trust architectures, hardware isolation, and permission management must be established before widespread adoption of the technology to avoid outsourcing decision-making and exacerbating species-level inequality. (Source: dotey)

💡 Other

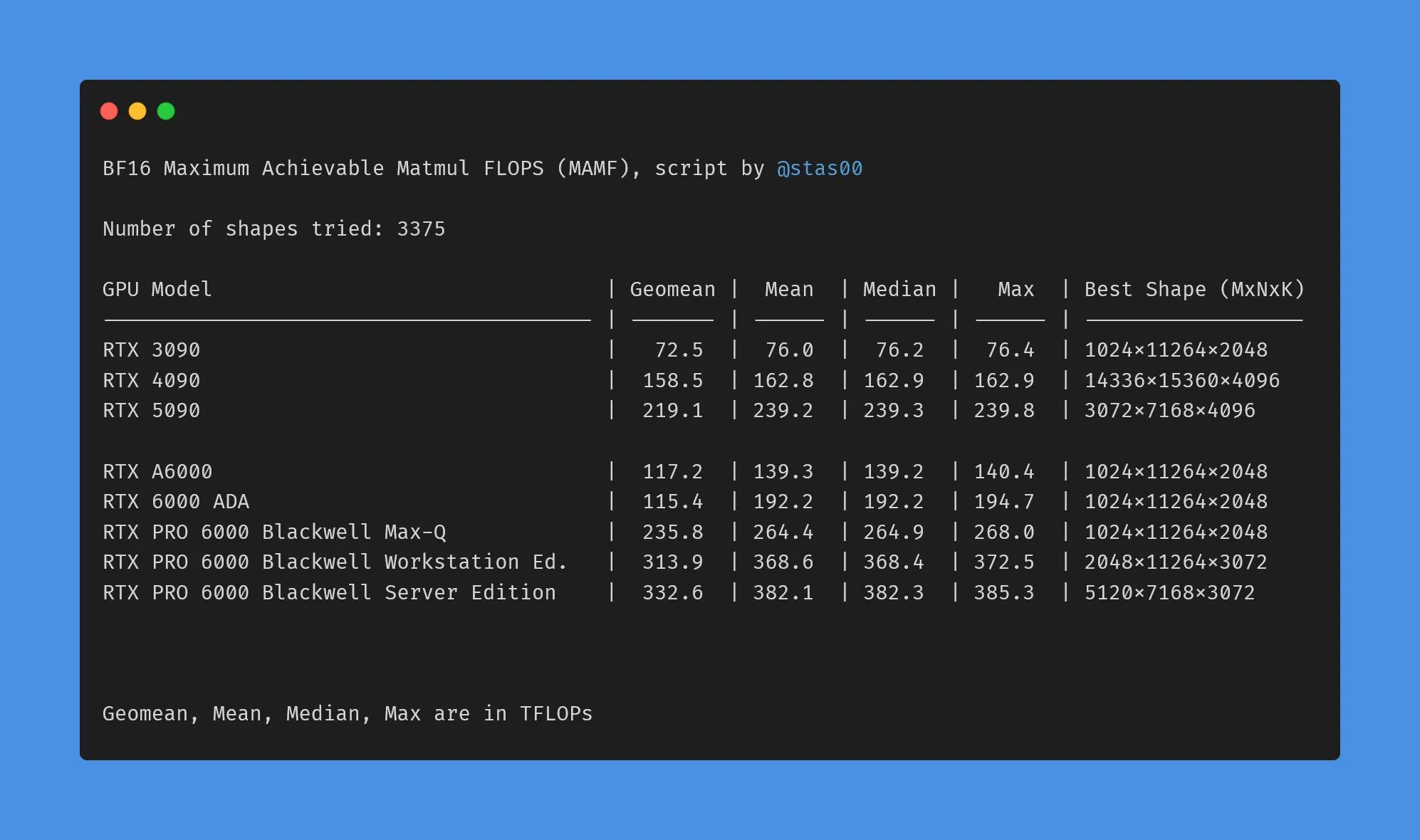

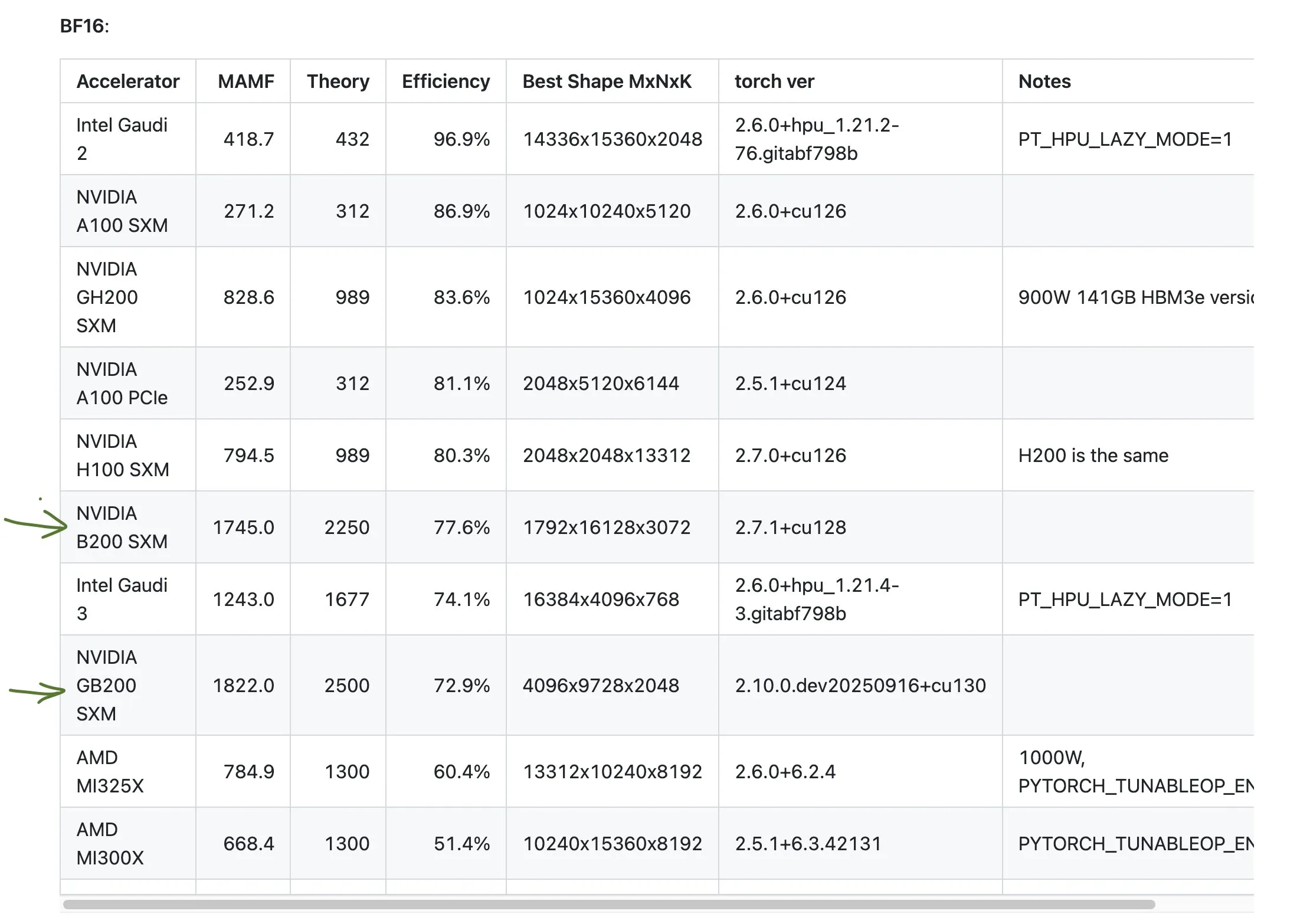

NVIDIA Consumer vs. Professional GPU Performance Data Discrepancies : The community discussed the discrepancies between advertised TFLOPs and actual performance for NVIDIA’s consumer and professional GPUs. Data shows that consumer-grade graphics cards (e.g., 3090, 4090, 5090) perform slightly above or close to their stated TFLOPs, while professional workstation cards (e.g., A6000, 6000 ADA) perform significantly below their stated values. Despite this, professional cards still offer advantages in power consumption, size, and energy efficiency, but users should be wary of the gap between marketing data and actual performance. (Source: TheZachMueller)

Discussion on AMD GPU Underperformance : The community discussed AMD GPU performance in certain benchmarks, noting that its efficiency might be only half of what’s expected. This raises concerns about AMD’s competitiveness in the AI computing field, especially when compared to high-performance products like NVIDIA’s GB200. Users planning AI computing resources need to carefully evaluate the actual performance and efficiency of GPUs from different manufacturers. (Source: jeremyphoward)

GIGABYTE AI TOP ATOM: Desktop-Class Grace Blackwell GB10 Performance : GIGABYTE has released AI TOP ATOM, bringing the performance of NVIDIA Grace Blackwell GB10 to desktop workstations. This product aims to provide powerful AI computing capabilities for individual users and small teams, enabling them to perform high-performance model training and inference locally, reducing reliance on cloud resources, and accelerating the development and deployment of AI applications. (Source: Reddit r/LocalLLaMA)