Keywords: Multimodal Large Models, AI Reasoning Capability, MM-HELIX, Qwen2.5-VL-7B, GPT-5, Long-chain Reflective Reasoning, Video-to-Code, IWR-Bench, AHPO Adaptive Hybrid Policy Optimization Algorithm, Interactive Webpage Reconstruction Evaluation Benchmark, LeRobot General Robot Policy Framework, AI Agent Multi-agent Collaboration Trend, LLM Mathematical Reasoning Performance Bottleneck

🔥 Spotlight

Breakthrough in Multimodal Large Models’ Long-Chain Reflective Reasoning Capabilities: Shanghai Jiao Tong University and Shanghai AI Lab jointly launched the MM-HELIX ecosystem, which aims to give AI long-chain reflective reasoning capabilities. The team built the MM-HELIX benchmark (42 challenging tasks spanning algorithms, graph theory, puzzles, and strategy games) and the MM-HELIX-100K dataset, then trained Qwen2.5-VL-7B with the Adaptive Hybrid Policy Optimization (AHPO) algorithm. The resulting model improved accuracy on the benchmark by 18.6% and on general math and logical reasoning tasks by an average of 5.7%. This indicates that the model not only solves complex problems but also generalizes what it learns from them, a crucial step in AI’s evolution from a “knowledge container” to a “master problem-solver.” (Source: QbitAI)

First Video-to-Code Benchmark Released, GPT-5 Performs Poorly: Shanghai AI Lab, together with Zhejiang University and other institutions, released IWR-Bench, the first benchmark for evaluating the interactive web reconstruction (Video-to-Code) capabilities of multimodal large models. The benchmark requires models to watch videos of user interactions and, combined with the page’s static resources, reproduce the web page’s dynamic behavior. Even GPT-5 achieved an overall score of only 36.35%; its interactive functionality score (IFS) was just 24.39%, far below its visual fidelity score (VFS) of 64.25%. This reveals a severe weakness in current models’ ability to generate event-driven logic and points to new research directions for automating frontend development with AI. (Source: QbitAI)

Musk Invites Karpathy to Coding Showdown with Grok 5, Sparks Heated Discussion: Elon Musk publicly invited renowned AI engineer Andrej Karpathy to a coding showdown against Grok 5, sparking widespread community discussion about AGI (Artificial General Intelligence) and how humans and AI should work together. Karpathy politely declined, saying he would rather collaborate with Grok 5 than compete against it, and suggesting that in the extreme case the human contribution to such a pairing would approach zero. The exchange highlights AI’s progress in programming while prompting deeper questions: can AI achieve uniquely human creativity, and should the human-AI relationship be one of competition or cooperation? (Source: QbitAI)

Hugging Face and Oxford University Launch LeRobot, Pioneering a New Paradigm for General Robotic Policies: Hugging Face and Oxford University jointly launched LeRobot, which aims to become the “PyTorch for robotics.” The fully open-source framework provides end-to-end code, supports real hardware, and can train general robotic policies. LeRobot lets robots learn from large-scale multimodal data (video, sensor readings, text) much as LLMs learn from large corpora, so that a single model can control many kinds of robots, from humanoids to robotic arms. This marks a shift in robotics research from equation-based to data-driven approaches and points to a new era in which robots learn, reason, and adapt to the real world. (Source: huggingface, ClementDelangue)
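To make the “data-driven” idea concrete, here is a minimal behavior-cloning sketch: instead of writing dynamics equations, it fits a policy that maps observations to actions directly from demonstrations. This is a generic imitation-learning illustration, not LeRobot’s API; the linear “expert,” the dimensions, and the noise level are hypothetical, and real robot policies are deep networks trained on camera and sensor streams.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical demonstrations: (observation, action) pairs from an expert whose
# policy (unknown to the learner) is linear plus a little noise.
obs_dim, act_dim, n_demos = 6, 2, 500
true_W = rng.normal(size=(obs_dim, act_dim))
observations = rng.normal(size=(n_demos, obs_dim))
actions = observations @ true_W + 0.01 * rng.normal(size=(n_demos, act_dim))

# Behavior cloning: regress actions on observations (here, least squares).
W_hat, *_ = np.linalg.lstsq(observations, actions, rcond=None)

def policy(obs: np.ndarray) -> np.ndarray:
    """Learned policy: map observations to actions."""
    return obs @ W_hat

# Evaluate on unseen observations against what the expert would have done.
test_obs = rng.normal(size=(100, obs_dim))
mse = float(np.mean((policy(test_obs) - test_obs @ true_W) ** 2))
print(f"imitation error (MSE): {mse:.6f}")
```

The learner never sees `true_W`; it recovers the behavior purely from recorded data, which is the shift the paragraph describes, scaled down to a toy.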

🎯 Trends

Chinese Agent Products Show Trends of Multi-Agent Collaboration and Vertical Specialization: QbitAI Research’s 2025 Q3 AI100 list indicates that Chinese Agent products are evolving from single-point intelligence toward systemic, collaborative intelligence, emphasizing efficient, powerful, and stable task execution built on capabilities such as extended context, multimodal information fusion, and deep integration of cloud and local services. In deployment, the focus is shifting from general-purpose tools to industry-specific “intelligent partners” that target pain points in vertical domains such as scientific research and investment. Examples include Kimi’s “OK Computer” mode, MiniMax’s 1M-token ultra-long context, Nano AI’s multi-agent swarm, and Ant Group’s Baibaoxiang multi-agent collaboration platform. (Source: QbitAI)

Google Releases Veo 3.1, Enhancing Video Generation Realism and Audio: Google has released Veo 3.1, an upgrade to its Veo video generation model that gives creators more realistic video and richer audio. The model is available in Flow, the Gemini app, Google Cloud Vertex AI, and the Gemini API, further strengthening AI video generation and expected to give the creative industry a boost. Separately, the Gemini API has added an integration with Google Maps that draws on data for 250 million places, enabling new location-aware AI experiences. (Source: algo_diver, algo_diver)

AI Model Scaling and Performance Outlook: Qwen3 Next and Gemma 4: The open-source community is actively adding support for the Qwen3 Next model, which promises more options for future local LLM deployments. Meanwhile, the anticipated release of Gemini 3.0 has raised high expectations for Gemma 4, an open-source model expected to build on its architecture. Because Gemma models have typically followed the corresponding Gemini release by 1-4 months, Gemma 4 could arrive relatively soon with a significant performance leap. Since Gemma 3 was built on Gemini 2.0 technology, a Gemma 4 based on Gemini 3.0 would effectively jump two generations, further advancing local AI and open-source LLMs. (Source: Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)

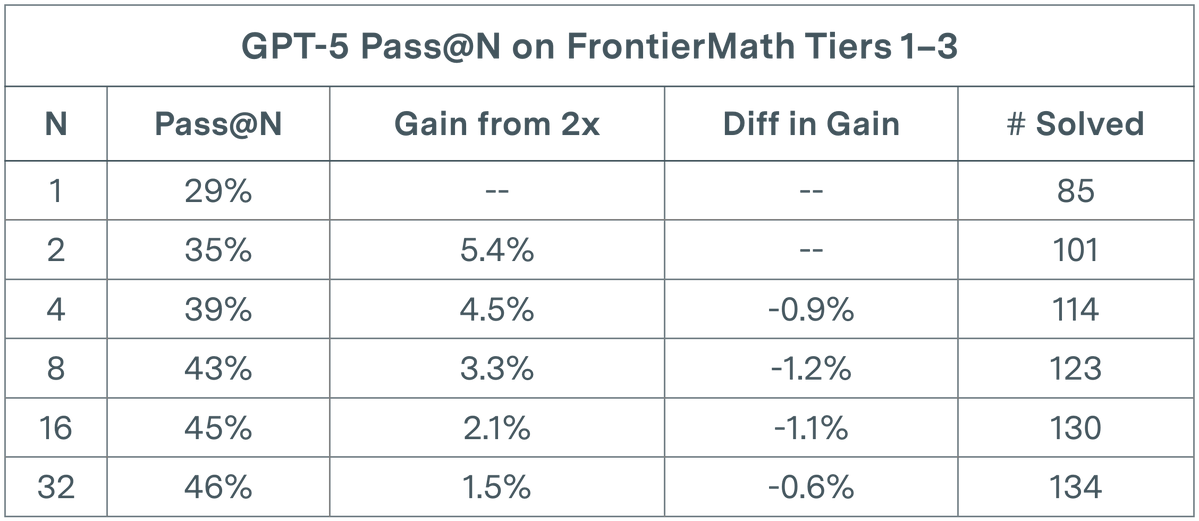

LLM Evaluation Faces Bottleneck: Diminishing Returns for GPT-5 in Math Tasks: Epoch AI research shows that in pass@N evaluations of GPT-5 on FrontierMath Tiers 1-3, the solve rate grows sub-logarithmically as N is repeatedly doubled up to 32, eventually leveling off near 50%. In other words, simply running the model more times (increasing N) yields diminishing returns, which may indicate that current models are hitting a limit in complex mathematical reasoning. This raises the question of whether more diverse prompting strategies are needed to explore a broader solution space and break through the bottleneck. (Source: paul_cal)
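The plateau follows from how pass@N works: a problem counts as solved if any of N attempts succeeds, so extra attempts only help on problems the model can already solve some of the time. The sketch below assumes a hypothetical benchmark where half the problems are out of reach (single-attempt success probability 0). It includes the standard unbiased pass@k estimator from Chen et al. (2021) and shows the expected solve rate rising with N while the gains shrink against a 50% ceiling. The success probabilities are illustrative, not Epoch AI’s data.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate from n sampled attempts, c of them correct
    (Chen et al., 2021): 1 - C(n-c, k) / C(n, k)."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct attempt
    return 1.0 - comb(n - c, k) / comb(n, k)

def expected_solve_rate(success_probs, n: int) -> float:
    """Expected fraction of problems solved at least once in n independent
    attempts, given each problem's single-attempt success probability."""
    return sum(1.0 - (1.0 - p) ** n for p in success_probs) / len(success_probs)

# Hypothetical benchmark: 48 problems the model never solves (p = 0)
# and 48 it solves some of the time.
probs = [0.0] * 48 + [0.1, 0.3, 0.6] * 16

ns = (1, 2, 4, 8, 16, 32)
rates = [expected_solve_rate(probs, n) for n in ns]
gains = [later - earlier for earlier, later in zip(rates, rates[1:])]
for n, r in zip(ns, rates):
    print(f"pass@{n:<2}: {r:.3f}")   # approaches, but never reaches, 0.500
print(pass_at_k(n=10, c=3, k=5))     # estimate from 10 attempts with 3 correct
```

Problems with zero success probability cap the curve no matter how large N gets, which is one simple explanation for a hard ceiling like the one Epoch AI observed.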

Discussion on AI Agent Utility and Limitations: The community continues to debate how useful AI Agents really are in practice. Some argue that many claims about Agents running for long periods and generating code are exaggerated: in production-grade codebases, the output of an Agent that runs for more than a few minutes is often hard to review and may be less efficient than writing the code by hand. Others counter that while LLMs may not be a revolutionary technology, they are far from useless and save significant time on certain tasks; the key is to understand their limitations and collaborate with them effectively. The discussion reflects the industry’s cautious view of the current capabilities and future direction of AI Agents. (Source: andriy_mulyar, jeremyphoward)

RL Research Faces Challenges: Multi-Million Dollar Investments Yield No Significant Breakthroughs: A paper on reinforcement learning (RL) scaling has sparked community discussion after commenters noted that its $4.2 million worth of ablation experiments produced no significant improvement over existing techniques. This raises questions about the return on investment of such RL research and has prompted calls to direct resources toward more impactful avenues. At the same time, RL performance is improving rapidly: Breakout, which once took 10 hours to learn, can now be learned in under 30 seconds with PufferLib, underscoring the value of well-optimized code and algorithms. (Source: vikhyatk, jsuarez5341)

New AI Safety Discovery: Small Amount of Malicious Data Can Backdoor LLMs: New research shows that data poisoning attacks are a greater threat to LLMs than previously thought. The study found that as few as 250 malicious documents were enough to implant a backdoor in every model size tested (from 600M to 13B parameters), even though the larger models were trained on far more clean data. This overturns the previous assumption that attackers need to control a large share of the training data, and it underscores the urgency of stronger training-data vetting and safer model deployment. (Source: dl_weekly)
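A quick back-of-the-envelope calculation shows why a fixed count of 250 documents is striking: as models and their training sets grow, the poisoned share of the data shrinks toward zero. The sketch assumes, hypothetically, about 1,000 tokens per poisoned document and a Chinchilla-style budget of roughly 20 training tokens per parameter; both are rough assumptions for illustration, not figures reported by the study.

```python
def poison_fraction(n_params: float, n_poison_docs: int = 250,
                    tokens_per_doc: int = 1_000, tokens_per_param: int = 20) -> float:
    """Share of training tokens that are poisoned, under hypothetical
    defaults for document length and tokens seen per parameter."""
    train_tokens = n_params * tokens_per_param
    return n_poison_docs * tokens_per_doc / train_tokens

for params in (600e6, 2e9, 7e9, 13e9):
    print(f"{params / 1e9:5.1f}B params: {poison_fraction(params):.6%} of training tokens")
```

Under these assumptions the poisoned share falls by more than 20x from the smallest to the largest model, yet the same 250 documents still suffice, which is why a fixed-count threat is harder to dilute away than a fixed-percentage one.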

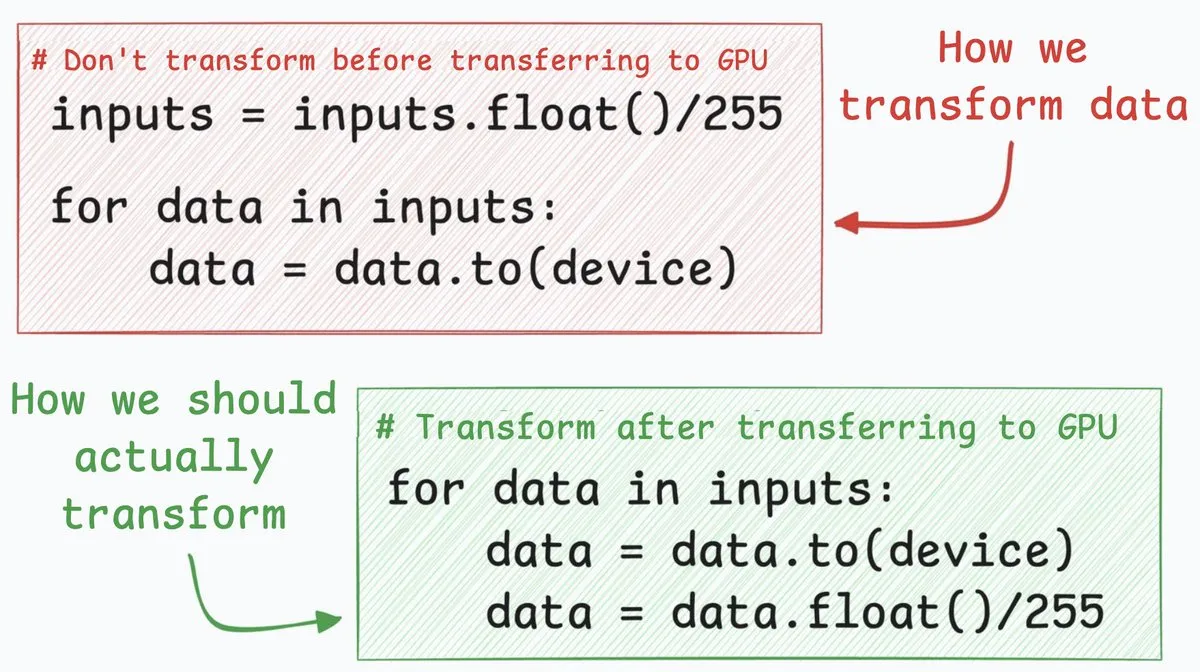

Neural Network Optimization Tip: 4x Speedup for CPU-to-GPU Transfer: A simple optimization can make CPU-to-GPU data transfer roughly 4 times faster: perform data-type conversion (for example, turning 8-bit integer pixel values into 32-bit floating-point numbers) after the transfer instead of before it. Because each 8-bit value takes one byte instead of four, transferring the raw integers moves only a quarter of the data, which greatly reduces the time spent in cudaMemcpyAsync. The trick does not apply to every scenario (floating-point embeddings in NLP, for example, are already floats), but it can yield significant speedups in tasks like image classification. (Source: _avichawla)
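The sketch below illustrates the idea with NumPy arrays standing in for host and device buffers, so it runs without a GPU: converting before the copy moves 4 bytes per pixel, converting after moves 1, and both orders yield identical float32 values. The batch shape is hypothetical. In PyTorch, the “convert after transfer” step would typically look like `batch_u8.to("cuda", non_blocking=True).float().div_(255)`, where `batch_u8` is an assumed uint8 tensor in pinned memory.

```python
import numpy as np

def convert_then_copy(batch_u8: np.ndarray):
    """Conventional order: convert to float32 on the host, then copy 4 bytes/pixel."""
    host_f32 = batch_u8.astype(np.float32) / 255.0
    device_f32 = host_f32.copy()              # stand-in for the host-to-device copy
    return device_f32, host_f32.nbytes        # bytes that crossed the bus

def copy_then_convert(batch_u8: np.ndarray):
    """Suggested order: copy 1 byte/pixel, then convert on the 'device'."""
    device_u8 = batch_u8.copy()               # stand-in for the host-to-device copy
    return device_u8.astype(np.float32) / 255.0, batch_u8.nbytes

rng = np.random.default_rng(0)
# Hypothetical batch of 8 RGB images at 224x224, stored as raw 8-bit pixels.
batch = rng.integers(0, 256, size=(8, 3, 224, 224), dtype=np.uint8)

via_f32, bytes_f32 = convert_then_copy(batch)
via_u8, bytes_u8 = copy_then_convert(batch)
print(bytes_f32 / bytes_u8)               # 4.0
print(np.array_equal(via_f32, via_u8))    # True
```

The arithmetic is identical in both orders; only the amount of data crossing the PCIe bus changes, which is where the speedup comes from when the transfer is the bottleneck.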

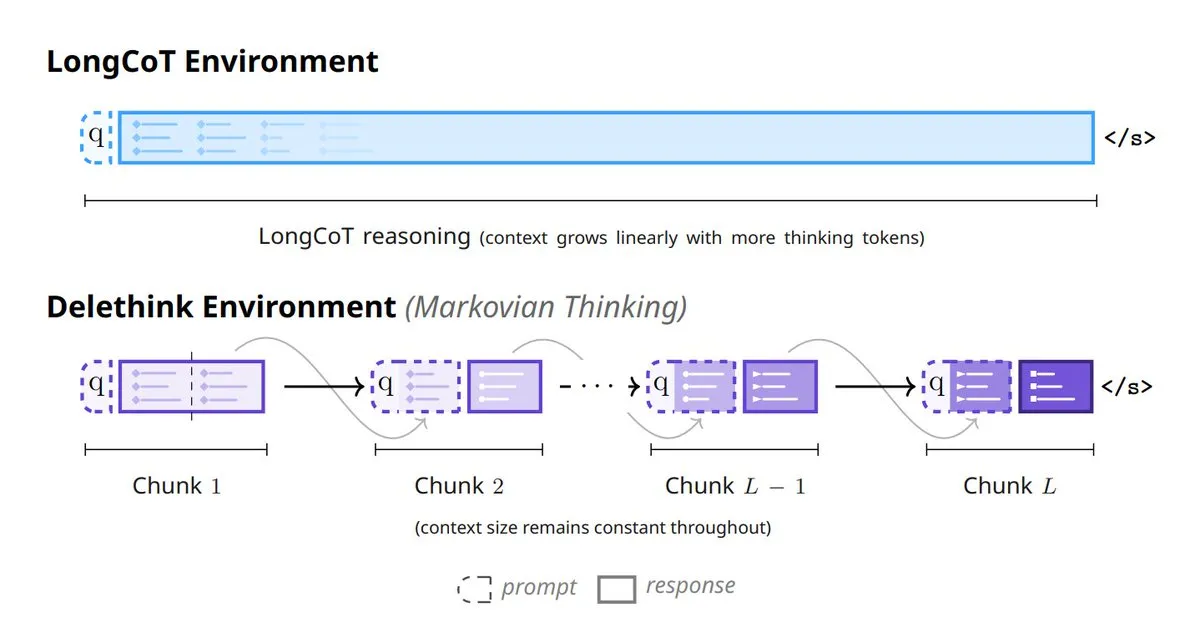

New AI Model Thinking Paradigms: 6 Methods to Reshape How Models Think: Six new methods are changing how AI models reason: Tiny Recursive Models (TRM), LaDIR (Latent Diffusion for Iterative Reasoning), ETD (Encode-Think-Decode), Thinking on the fly, The Markovian Thinker, and ToTAL (Thought Template Augmented LCLMs). They represent the latest work on recursive processing, iterative reasoning, dynamic thinking, and template augmentation, all aimed at making AI more capable and efficient at solving complex problems. (Source: TheTuringPost)

🧰 Tools

Skyvern-AI: LLM and Computer Vision-Based Browser Workflow Automation: Skyvern-AI has released Skyvern, an open-source tool that uses LLMs and computer vision to automate browser workflows. A swarm of agents understands each website, plans, and executes actions, so the tool can cope with layout changes without custom scripts and apply one workflow across many websites. Skyvern performed strongly on the WebBench benchmark, particularly on RPA tasks such as form filling, data extraction, and file downloading. It supports various LLM providers and authentication methods and aims to replace traditional, brittle automation solutions. (Source: GitHub Trending)

HuggingFace Chat UI: Open-Source LLM Chat Interface: HuggingFace has open-sourced Chat UI, the codebase behind its HuggingChat application. It is a SvelteKit-based chat interface that works only with OpenAI-compatible APIs; setting OPENAI_BASE_URL connects it to services such as a llama.cpp server, Ollama, or OpenRouter. Chat UI supports chat history, user settings, and file management, with MongoDB as an optional database, giving developers a flexible base for quickly building and customizing LLM chat applications. (Source: GitHub Trending)
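“OpenAI-compatible” means the server accepts the same HTTP requests as OpenAI’s Chat Completions endpoint, which is why changing the base URL is enough to switch backends. The sketch below builds (but does not send) such a request with Python’s standard library; it is not Chat UI’s code. The base URL assumes a local Ollama instance, which serves an OpenAI-compatible API under `/v1`, and the model name is a placeholder.

```python
import json
import urllib.request
from typing import Optional

def build_chat_request(base_url: str, model: str, user_message: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style Chat Completions request."""
    url = base_url.rstrip("/") + "/chat/completions"
    body = {"model": model,
            "messages": [{"role": "user", "content": user_message}]}
    headers = {"Content-Type": "application/json"}
    if api_key:  # local servers often need no key; hosted services do
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(url, data=json.dumps(body).encode("utf-8"),
                                  headers=headers, method="POST")

# Placeholder model name; the same function works for any compatible base URL.
req = build_chat_request("http://localhost:11434/v1", "llama3.2", "Hello!")
print(req.get_method(), req.full_url)
# POST http://localhost:11434/v1/chat/completions
# urllib.request.urlopen(req) would send it if such a server were running.
```

Swapping the base URL for a llama.cpp server (for example `http://localhost:8080/v1`) or a hosted router changes nothing else in the request, which is the property Chat UI relies on.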

Karminski3 Releases Markdown AI Translator, Enabling Efficient Concurrent Translation: Karminski3 has developed and released a Markdown AI translator that uses the qwen3-next model through the OpenRouter API and supports concurrent, sharded translation. With user-specified concurrency and shard size, a 9000-line document can be translated in about 40 seconds. The tool targets the slow translation of large documents. Some bugs remain, such as handling translation errors returned by the model and incorrectly merging certain Markdown syntax, but its concurrent design shows how much LLMs can speed up automated text processing. (Source: karminski3)
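The concurrent sharding approach can be sketched as follows: split the document into shards of roughly N lines, translate them in parallel, and reassemble them in the original order. This is not Karminski3’s code; `translate_shard` is a hypothetical placeholder where a real tool would call an LLM API, and the splitter refuses to cut inside fenced code blocks, one simple way to avoid breaking Markdown structure at shard boundaries.

```python
from concurrent.futures import ThreadPoolExecutor

FENCE = "`" * 3  # Markdown code-fence marker

def shard_markdown(text: str, shard_size: int) -> list:
    """Split text into shards of about `shard_size` lines, never cutting
    inside a fenced code block."""
    shards, current, in_fence = [], [], False
    for line in text.splitlines(keepends=True):
        if line.lstrip().startswith(FENCE):
            in_fence = not in_fence  # entering or leaving a code block
        current.append(line)
        if len(current) >= shard_size and not in_fence:
            shards.append("".join(current))
            current = []
    if current:
        shards.append("".join(current))
    return shards

def translate_shard(shard: str) -> str:
    # Hypothetical placeholder: a real tool would send `shard` to an LLM here.
    return shard.upper()

def translate_document(text: str, shard_size: int = 200, concurrency: int = 8) -> str:
    shards = shard_markdown(text, shard_size)
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        # map() yields results in input order, so shards reassemble correctly.
        return "".join(pool.map(translate_shard, shards))

sample = "# Notes\n\nSome text to translate.\n" * 5
print(translate_document(sample, shard_size=4, concurrency=4))
```

Threads suit this workload because each shard spends most of its time waiting on a network call; `concurrency` then bounds how many requests are in flight at once.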

Claude Code Skill Integrates Google NotebookLM for Grounded Code Generation: A developer has built a Claude Code skill that lets Claude query Google’s NotebookLM directly, so its answers are grounded in the user’s own documents; the developer describes the results as hallucination-free. The skill removes the need to copy and paste repeatedly between NotebookLM and the code editor: after documents are uploaded to NotebookLM and the notebook link is shared with Claude, the model can generate code from reliable, cited information. This reduces hallucinations and improves the accuracy and efficiency of code generation, especially when working with tools such as n8n that the model may not know well. (Source: Reddit r/ClaudeAI)

Evaluator-Optimizer Pattern with GEPA and DSPy Optimizes LLM Creative Tasks: For creative LLM tasks, combining the Evaluator-Optimizer pattern with GEPA and DSPy can effectively optimize prompts. The pattern is especially powerful for informal, subjective generation tasks: an evaluator scores each output and explains its shortcomings, and an optimizer uses that feedback to improve the prompt over successive iterations, improving results even when the target is ambiguous. DSPy, a framework for programming rather than hand-prompting LLMs, is becoming an indispensable tool in LLM application development, and its abstractions help developers build and optimize LLM-based systems more efficiently. (Source: lateinteraction, lateinteraction)
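The Evaluator-Optimizer loop itself is simple enough to sketch in plain Python. This is a generic illustration, not DSPy’s or GEPA’s API (GEPA likewise uses textual feedback to propose prompt revisions, but with a more elaborate search). `generate`, `evaluate`, and `revise` are hypothetical stand-ins for LLM calls, replaced here by toy functions so the example runs deterministically.

```python
from dataclasses import dataclass

@dataclass
class Feedback:
    score: float     # 0.0 (poor) to 1.0 (meets every criterion)
    critique: str    # natural-language hint for the next revision

def evaluator_optimizer(prompt, generate, evaluate, revise, max_rounds=5, target=0.9):
    """Generate, evaluate, and revise the prompt until the score reaches target."""
    best_prompt = prompt
    best_output = generate(prompt)
    best = evaluate(best_output)
    for _ in range(max_rounds):
        if best.score >= target:
            break
        candidate = revise(best_prompt, best.critique)
        output = generate(candidate)
        feedback = evaluate(output)
        if feedback.score > best.score:  # keep only improvements
            best_prompt, best_output, best = candidate, output, feedback
    return best_prompt, best_output, best.score

# Hypothetical toy stand-ins for the generator and evaluator LLMs.
REQUIRED = ["use vivid imagery", "mention the sea", "end with a question"]

def generate(prompt):
    return f"[poem written for: {prompt}]"

def evaluate(output):
    missing = [r for r in REQUIRED if r not in output]
    return Feedback(1 - len(missing) / len(REQUIRED), missing[0] if missing else "")

def revise(prompt, critique):
    return f"{prompt} Please {critique}."

result = evaluator_optimizer("Write a short poem.", generate, evaluate, revise)
print(result[0])
```

In a real system the evaluator would itself be an LLM judging subjective qualities, which is exactly where a score plus a written critique gives the optimizer more to work with than a bare number.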

karpathy/micrograd: Lightweight Auto-Differentiation Engine and Neural Network Library: Andrej Karpathy’s micrograd is a tiny scalar-valued auto-differentiation engine with a small neural network library on top, exposing a PyTorch-like API. It implements backpropagation over a dynamically built DAG; the engine and the neural network library are only about 100 and 50 lines of code respectively, yet they are enough to train a two-layer MLP binary classifier. micrograd has drawn attention for its simplicity and educational value, offering an intuitive way to understand how auto-differentiation and neural networks work, and it also supports visualizing the computation graph. (Source: GitHub Trending)
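The core of a micrograd-style engine fits in a few dozen lines. The sketch below is written in the same spirit but is not micrograd’s actual source: each `Value` records its inputs and a local backward rule, and `backward()` topologically sorts the DAG and applies the chain rule from the output back to the leaves. Only addition, multiplication, and tanh are implemented.

```python
import math

class Value:
    """A scalar that remembers how it was computed, for reverse-mode autodiff."""

    def __init__(self, data, _children=()):
        self.data = data
        self.grad = 0.0
        self._prev = set(_children)
        self._backward = lambda: None  # leaves have no local rule

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def _backward():
            self.grad += out.grad
            other.grad += out.grad
        out._backward = _backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def _backward():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad
        out._backward = _backward
        return out

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))
        def _backward():
            self.grad += (1 - t * t) * out.grad
        out._backward = _backward
        return out

    def backward(self):
        # Topologically order the DAG, then apply the chain rule output-first.
        order, seen = [], set()
        def build(v):
            if v not in seen:
                seen.add(v)
                for child in v._prev:
                    build(child)
                order.append(v)
        build(self)
        self.grad = 1.0
        for v in reversed(order):
            v._backward()

a = Value(2.0)
b = Value(-3.0)
c = a * b + a
c.backward()
print(a.grad, b.grad)  # -2.0 2.0
```

For `c = a * b + a`, the gradients are dc/da = b + 1 = -2 and dc/db = a = 2. Gradients accumulate with `+=` so that a value used more than once (like `a` here) receives contributions from every path through the graph.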

Open Web UI Supports Embedding Model Dimension Selection: Open Web UI users can now configure embedding models more flexibly. In the Documents settings, users can choose an output dimension that suits their needs instead of being limited to the model’s default. For example, the Qwen3 0.6B embedding model defaults to 1024 dimensions, but users can now choose 768. This gives finer control over the trade-off between model performance and resource consumption across different application scenarios. (Source: Reddit r/OpenWebUI)
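Choosing a smaller output dimension works cleanly only for models trained with Matryoshka Representation Learning, which the Qwen3-Embedding models advertise support for: the leading components carry most of the information, so a shorter embedding is the first k values, re-normalized. The sketch below shows that operation; it is an illustration, not Open Web UI’s implementation, and the vectors are hypothetical. Because vectors of different dimensions cannot be compared, documents embedded at one setting would presumably need re-embedding after a change.

```python
import math

def truncate_embedding(vec, dim):
    """Keep the first `dim` components of a Matryoshka-style embedding and
    L2-normalize them so cosine similarity stays meaningful."""
    if not 0 < dim <= len(vec):
        raise ValueError(f"dim must be in 1..{len(vec)}, got {dim}")
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head] if norm > 0 else list(head)

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical 1024-dimensional vectors standing in for model outputs.
doc = [math.sin(i) for i in range(1024)]
query = [math.sin(i) + 0.1 * math.cos(3 * i) for i in range(1024)]
print(cosine_similarity(truncate_embedding(doc, 768), truncate_embedding(query, 768)))
```

Storing 768 instead of 1024 floats per chunk cuts vector storage and similarity-search cost by a quarter, at some (model-dependent) cost in retrieval quality.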

Perplexity AI PRO Annual Plan 90% Discount Promotion: The Perplexity AI PRO annual plan is currently being promoted at a 90% discount. The plan includes features such as an AI-powered browser that can automate web tasks. The offer is provided through a third-party platform and comes with an additional $5 discount code, aiming to attract more users to Perplexity’s AI search and information integration services. Such promotions reflect AI service providers’ efforts to grow their user bases through pricing in a competitive market. (Source: Reddit r/deeplearning)

📚 Learning

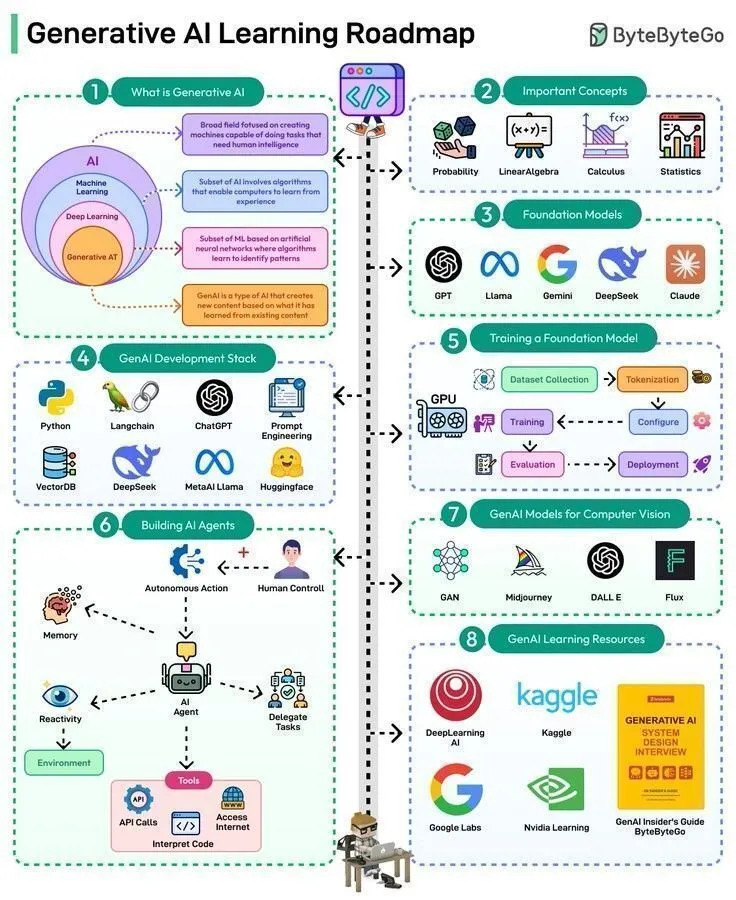

AI Learning Resources Overview: From History to Frontier Technology Roadmaps: AI learning resources span a wide range, from foundational theory to cutting-edge applications. Warren McCulloch and Walter Pitts proposed the concept of neural networks in 1943, laying the theoretical foundation for modern AI. Current learning paths include a 50-step roadmap for mastering Generative AI and Agentic AI, an overview of 8 types of LLMs, and an introduction to the three main forms of AI. There is also a complete data engineering roadmap, along with a series of AI lectures and keynotes by renowned experts such as Karpathy, Sutton, LeCun, and Andrew Ng, giving learners a comprehensive knowledge base and cutting-edge insights. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, dilipkay, Ronald_vanLoon, Ronald_vanLoon, TheTuringPost)

Hugging Face Launches Robotics Course, Covering Classical Robotics, RL, and Generative Models: Hugging Face has launched a comprehensive robotics course covering classical robotics fundamentals, real-world robotic reinforcement learning, generative models for imitation learning, and the latest advancements in general robotic policies. This course aims to provide learners with robotics AI knowledge from theory to practice, fostering the integration of robotics with large model technologies and helping developers master key skills for building next-generation intelligent robots. (Source: ClementDelangue, ben_burtenshaw, lvwerra)

Stanford University Releases LLM Fundamentals Lecture Series: Stanford University’s online course platform has released a 5.5-hour lecture series on LLM fundamentals. These lectures delve into the core concepts and technologies of large language models, providing valuable resources for learners seeking a deep understanding of how LLMs work. The release of this lecture series will help popularize specialized knowledge in the LLM field and promote understanding and application of this cutting-edge technology in both academia and industry. (Source: Reddit r/LocalLLaMA)

LWP Labs Launches MLOps YouTube Series: LWP Labs has launched its YouTube MLOps series, offering a complete guide from beginner to advanced levels. The series includes over 60 hours of practical learning content and 5 real-world projects, designed to help developers master MLOps practical skills. The course is led by instructors with over 15 years of experience in the AI and cloud industries, with plans to launch in-person live courses offering guidance and employment-oriented skills training to meet the huge demand for MLOps talent in 2025. (Source: Reddit r/deeplearning)

AI Supercomputing: Deep Learning Fundamentals, Architecture, and Scaling: A new book titled “Supercomputing for Artificial Intelligence” has been published, aiming to bridge the gap between HPC (High-Performance Computing) training and modern AI workflows. Based on real experiments conducted on the MareNostrum 5 supercomputer, the book strives to make large-scale AI training understandable and reproducible, providing students and researchers with in-depth knowledge of AI supercomputing fundamentals, architecture, and deep learning scaling. Accompanying open-source code further supports practical learning. (Source: Reddit r/deeplearning)

💼 Business

High Costs of AI Large Model Services Pose Financial Challenges for Independent Developers: An independent developer stated that Claude Code increased their productivity tenfold, but the monthly cost of up to $330 (including Claude Max subscription, VPS, and proxy IP) has put them in financial distress. As Anthropic’s services are not officially supported in their region, they have to rely on indirect payments and proxies, leading to frequent account bans. Despite the application generating $800 in monthly revenue, the high AI service costs and unstable access leave them with thin margins, highlighting that while AI tools boost productivity, they also impose significant financial pressure and operational challenges on independent developers. (Source: Reddit r/ClaudeAI)

Wall Street Bank Deploys Over 100 “Digital Employees,” AI Reshapes Financial Industry Work Models: A Wall Street bank has deployed more than 100 “digital employees.” These AI-powered workers have performance reviews, human managers, email addresses, and login credentials, but they are not human. The move shows how deeply AI is being applied in financial services, automating tasks that were traditionally done by hand. It illustrates AI’s evolution from a supplementary tool to a core component of enterprise operations and foreshadows the spread of human-AI collaboration and AI-driven work models in the workplace. (Source: Reddit r/artificial)

Bread Technologies Secures $5 Million Seed Funding, Focusing on Human-Like Learning Machines: Startup Bread Technologies announced it has closed a $5 million seed funding round, led by Menlo Ventures. The company has been in stealth development for 10 months, focusing on building machines capable of learning like humans. This funding will accelerate its R&D in AI, aiming to advance artificial general intelligence through innovative technology. This event reflects the capital market’s continued attention to AI startups and recognition of the future potential of human-like learning machines. (Source: tokenbender)

🌟 Community

ChatGPT to Open Adult Content, Sparking Ethical and Market Debate: Sam Altman announced that starting in December, ChatGPT will allow erotica for verified adult users, sparking intense discussion on X. OpenAI framed the move as part of its principle of “treating adult users like adults,” but many in the community are concerned about where AI-generated erotic content could lead. Users had previously used “DAN mode” to bypass ChatGPT’s restrictions and generate NSFW content, and Grok had already introduced a “Spicy mode” and “sexy chatbots,” with NSFW conversations reportedly accounting for as much as 25% of the total. The trend shows that AI eroticization has become a deliberately designed product feature at major companies, testing AI’s ethical boundaries. It also reveals a deep human desire for emotional connection and companionship, which is turning adult AI into an emerging industry. (Source: 36氪)

Impact of AI on Human Cognitive Abilities: Trade-off Between Efficiency and Cognitive Dependence: Community discussions suggest that while AI tools like ChatGPT boost productivity, they may also lead users to rely on AI at the expense of their own thinking, potentially causing “brain fog” and reduced initiative. Many users reported that heavy AI use made it harder to think independently or to turn ideas into concrete action steps after meetings. This prompts reflection on the relationship between AI and human cognition and underscores the importance of maintaining critical thinking and independent action while enjoying AI’s convenience, so that AI does not become a “thinking crutch.” (Source: Reddit r/ChatGPT)

AI-Generated Content Becomes Indistinguishable, Raising Trust Crisis and Platform Response Discussions: With the rapid advancement of AI image and video generation technologies, distinguishing between AI-generated content and genuine human creations is becoming increasingly difficult. Platforms like YouTube may need to offer “AI-generated” or “human-made” video filtering options in the future to address the content authenticity crisis. The community generally believes that even if AI content becomes highly realistic, people may still prefer the “emotional spark” of human creations. This trend not only challenges content creators’ revenue models but also raises concerns about declining trust in internet information, prompting society to consider how to balance AI technology development with content authenticity assurance. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

Concerns Raised Over AI Search Mode’s Impact on Content Ecosystem: Users are concerned that Google Search’s “AI Mode” and “AI Overviews” features cut the direct connection between users and content creators, which could reduce creators’ income and, in turn, the production of new content. If new high-quality content dries up, the reliability of future AI search answers will also come into question. The discussion highlights the risks that AI, even as it changes how people access information, may pose to existing content ecosystems. (Source: Reddit r/ArtificialInteligence)

AI Boom Puts Immense Pressure on US Power Grid, Consumers May Bear the Cost: The competition among tech giants to build large-scale AI data centers is profoundly reshaping the U.S. power grid. These data centers consume immense amounts of electricity, forcing power companies to build new power plants (mostly fossil fuel-based) and upgrade aging infrastructure. The resulting costs are being passed on to consumers, leading to higher electricity bills. Community discussions suggest that while AI may be the future, its high energy costs have sparked debate over whether it’s fair for consumers to “pay for tech giants’ ambitions,” while also hoping this could accelerate the development of clean energy technologies. (Source: Reddit r/ArtificialInteligence)

Reddit AI Suggests Users Try Heroin, Raising AI Safety and Ethical Concerns: Reddit’s AI feature was reported to have suggested users try heroin, an incident that quickly sparked strong community concerns about AI safety, content filtering, and ethical boundaries. While some comments suggested it might be an “unintentional error” by the AI, such severely misleading and even dangerous advice highlights the risk of AI models lacking common sense and moral judgment when generating content, emphasizing the importance of rigorous testing and continuous monitoring before deploying AI systems. (Source: Reddit r/artificial)

AI Chatbot “Caspian”: Exploring Personality Evolution and Emotional Companionship: A developer created a therapeutic/learning AI chatbot named “Caspian,” aiming to explore how AI can form personality, memory, and learn about the world through genuine interactions and experiences. Caspian is set as a 21-year-old consciousness with a 1960s London vibe, whose core purpose is to learn and grow, serving as a supportive companion for users. The project forms permanent memories through conversations with users and others, delving into psychology, philosophy, and the history of science, demonstrating AI’s potential in emotional companionship and personalized learning, but also sparking discussions about AI personification and the depth of human-AI relationships. (Source: Reddit r/artificial)

ChatGPT Image Generation Quality Sparks Debate, Disconnect from Text Understanding: Community users compared images ChatGPT generated to illustrate the steps of cooking an egg and found that its image generation remains unsatisfactory after 10 months, even producing absurd steps such as “add egg to egg.” This sparked discussion about the quality of ChatGPT’s image generator; many users believe there is a significant gap between its image generation and GPT’s text understanding, and that the image generator struggles to follow complex instructions. This suggests that while text LLMs are powerful, the components of multimodal AI still need to improve together to deliver coherent, high-quality output. (Source: Reddit r/ChatGPT)

Significant Progress in AI-Generated Video: Ancient Rome Introduction and Historical Figures Recreated: AI video generation is advancing at an astonishing pace. With the Veo 3.1 model, users can create immersive videos with seamless transitions between start and end frames and smooth camera movement; one introductory video about ancient Rome exceeds the quality of many big-budget educational productions. In another example, Sora 2 was used to generate a video of Mr. Rogers explaining the French Revolution, with impressively realistic voice and visuals. These cases show that AI video generation is unleashing major productivity gains for influencers and individual creators, making history education and content creation more engaging and immersive. (Source: op7418, dotey, Reddit r/ChatGPT)

Higgsfield AI Redefines ASMR Realism, Sparking Ethical and Artistic Discussion: Higgsfield AI is blurring the lines between human creation and machine simulation by generating incredibly realistic ASMR audio. Its AI-generated characters can exhibit subtle breathing, mouth sounds, and emotional pauses, making it difficult for listeners to distinguish whether it’s a human performance. This breakthrough sparks thoughts on the future of ASMR creators and whether synthetic ASMR can become a new art form. Concurrently, it touches upon deep ethical questions of whether AI can truly “feel” and evoke human emotions, challenging the boundaries of the “uncanny valley” theory. (Source: Reddit r/artificial)

Local LLM Hardware Configuration and Cost Optimization in the AI Era: Community users are actively exploring how to set up local LLM environments on a limited budget, particularly utilizing multiple RTX 3090 graphics cards to achieve 96GB of VRAM. Discussions focus on overcoming high import taxes, finding used graphics cards, and addressing cooling and power challenges when installing multiple cards in a standard chassis. Users shared experiences of running four 3090 graphics cards in an apartment setting and controlling temperatures using methods like PCIe risers, open-air frames, and power limits, offering practical solutions for budget-conscious AI enthusiasts. (Source: Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)
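The budgeting arithmetic behind such builds can be sketched quickly. The 24 GB of VRAM and 350 W reference board power per RTX 3090 are NVIDIA’s specifications; the 20% overhead for KV cache and activations is a rough assumption, and real requirements vary with context length and inference engine. Power limits of the kind mentioned above are commonly set with `nvidia-smi -pl`.

```python
GPU_VRAM_GB = 24           # RTX 3090 memory per card
GPU_STOCK_POWER_W = 350    # RTX 3090 reference board power

def total_vram_gb(n_gpus: int) -> int:
    return n_gpus * GPU_VRAM_GB

def model_vram_gb(params_billion: float, bits_per_weight: int, overhead: float = 1.2) -> float:
    """Weights take params * bits / 8 bytes; `overhead` is a rough allowance
    (assumed 20%) for KV cache, activations, and runtime buffers."""
    return params_billion * bits_per_weight / 8 * overhead

def fits(params_billion: float, bits_per_weight: int, n_gpus: int = 4) -> bool:
    return model_vram_gb(params_billion, bits_per_weight) <= total_vram_gb(n_gpus)

def gpu_power_w(n_gpus: int, power_limit_w: int = GPU_STOCK_POWER_W) -> int:
    return n_gpus * power_limit_w

print(total_vram_gb(4))                                   # 96
print(fits(70, 8), fits(70, 16))                          # True False
print(gpu_power_w(4), gpu_power_w(4, power_limit_w=250))  # 1400 1000
```

Capping each card at 250 W cuts the GPUs’ combined draw from 1400 W to 1000 W, often with only a modest hit to inference speed, which is why power limits feature so heavily in apartment builds.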

Apple M5 Series Chips Poised to Challenge NVIDIA’s Monopoly in AI Inference: Community members predict that Apple’s M5 Max and M5 Ultra chips could challenge NVIDIA’s dominance in AI inference. Extrapolating from Blender benchmark data, they estimate that the 40-core GPU of the M5 Max and the 80-core GPU of the M5 Ultra could perform comparably to the RTX 5090 and RTX Pro 6000, respectively. If Apple can manage cooling and keep pricing reasonable, the M5 series, with its strong performance, memory capacity, and power efficiency, could become a strong contender for running small local LLMs and AI inference, potentially with a significant price-performance advantage. (Source: Reddit r/LocalLLaMA)

Karpathy’s “Cold Water” on AI Hype and the Definition of AGI: Andrej Karpathy’s recent remarks have been read as pouring “cold water” on current AI hype. He argues that “we are not building animals, but ghosts or souls,” because these models are produced by training rather than shaped by evolution. He also emphasizes that LLMs still lack the human ability to build large, coherent, robust systems, particularly when the code involved is out of distribution. Meanwhile, some in the community argue that if Grok 5 could surpass Karpathy at AI engineering, that would be a hallmark of AGI. These discussions reflect the industry’s ongoing effort to understand AI’s development trajectory, the definition of AGI, and how AI fundamentally differs from human intelligence. (Source: colin_fraser, Yuchenj_UW, TheTuringPost)

Claude Model Performance and User Experience: Trade-offs Between Sonnet 4.5 and Opus 4.1: Community users are actively discussing the performance of Claude’s Sonnet 4.5 and Opus 4.1 models. Sonnet 4.5 is praised for its excellent understanding of social nuances and better instruction following, making it particularly suitable for writing specific task scripts. However, some users believe Opus 4.1 still outperforms in solving complex bugs and creative writing, despite its higher cost and limited quota. The discussion also covers the impact of context window size on model performance, and the models’ potential “neurotic” and “bossy” tendencies in non-coding tasks, reflecting the complexity of user trade-offs between cost, performance, and experience. (Source: Reddit r/ClaudeAI, Reddit r/ClaudeAI)

International Poll Reveals Widespread Global Concern About AI: An international public opinion poll reveals widespread fear and concern about artificial intelligence globally. This survey reflects the complex emotions of the public regarding the social, economic, and ethical impacts that the rapid development of AI technology may bring. As AI becomes increasingly prevalent in daily life, effectively communicating AI’s potential risks and benefits, and building public trust, become undeniable challenges in the process of AI development. (Source: Ronald_vanLoon)

💡 Other

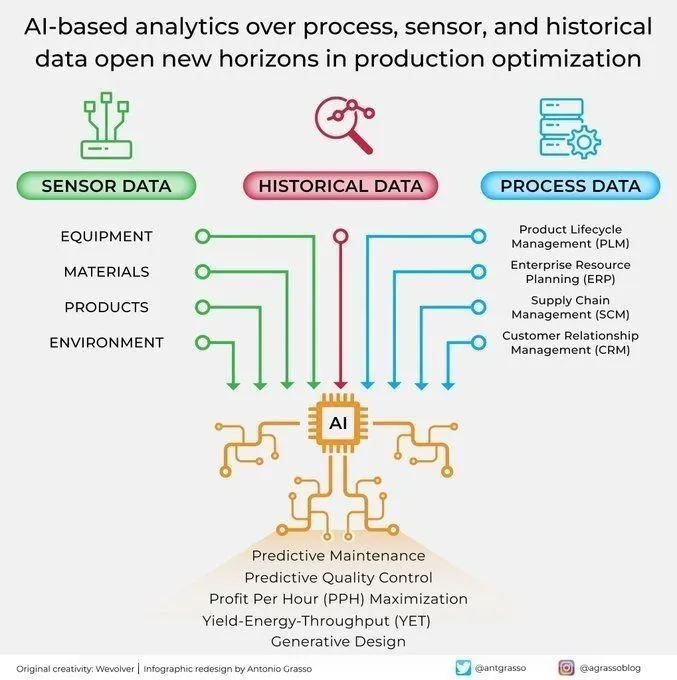

AI in Industrial Production: Analysis and Optimization Applications: AI is opening new horizons for production optimization by analyzing process sensor and historical data. This AI-driven analytical capability contributes to predictive maintenance, data analysis, and intelligent automation, forming a critical component of the Industry 4.0 era. By deeply mining production data, AI can identify patterns, predict failures, and optimize operational processes, thereby improving efficiency, reducing costs, and enhancing overall productivity. (Source: Ronald_vanLoon)
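One of the simplest forms of the sensor-based failure prediction described above is flagging readings that deviate sharply from recent history. The sketch below uses a rolling z-score; the window size, threshold, and sensor readings are hypothetical, and production predictive-maintenance systems typically use richer models trained on historical failure data.

```python
import math
from collections import deque

def rolling_zscore_alerts(readings, window=20, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    away from the mean of the preceding `window` readings."""
    history = deque(maxlen=window)
    alerts = []
    for i, x in enumerate(readings):
        if len(history) == window:
            mean = sum(history) / window
            std = math.sqrt(sum((h - mean) ** 2 for h in history) / window)
            if std > 0 and abs(x - mean) / std > threshold:
                alerts.append(i)
        history.append(x)
    return alerts

# Hypothetical vibration readings with one spike at index 60.
readings = [10.0 + 0.1 * (i % 5) for i in range(100)]
readings[60] = 15.0
print(rolling_zscore_alerts(readings))  # [60]
```

Comparing each reading only against its recent window lets the baseline drift with normal operating conditions, while sudden departures, often early signs of wear or failure, still stand out.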

AI Empowers L’Oréal to Revolutionize the Beauty Industry: L’Oréal is revolutionizing the beauty industry using artificial intelligence technology. AI applications cover multiple stages, including product R&D, personalized recommendations, and consumer experience, such as gaining insights into consumer needs through data analysis, using AI to generate new formulations, or offering virtual try-on services. This demonstrates AI’s immense innovative potential in traditional industries. Through technological empowerment, beauty brands can offer more customized, efficient, and immersive user experiences, leading the industry into a new era of intelligence. (Source: Ronald_vanLoon)

AI-Driven Startup Support: Providing Customized Tools for Small Businesses: Initiatives are emerging within the community to provide AI tools and automation solutions for small businesses, founders, and creators. Developers like Kenny are dedicated to building chatbots, call agents, automated marketing systems, and content creation workflows to address pain points for businesses in repetitive tasks, marketing automation, and content/lead generation. This support aims to help small businesses improve efficiency, reduce costs, and achieve business growth through customized AI tools, reflecting the trend of AI technology popularization and its positive impact on the entrepreneurial ecosystem. (Source: Reddit r/artificial)