Keywords: AI consciousness, Deep learning, Neural networks, Agentic AI, Audio super-resolution, Generative AI, LLM reasoning, AI tools, Hinton’s theory of AI consciousness, Andrew Ng’s Agentic AI course, AudioLBM audio super-resolution framework, OpenAI Sora video generation, Meta AI REFRAG method

In-depth Analysis & Refinement from AI Column Editor-in-Chief

🔥 Spotlight

Hinton’s Bold Claim: AI May Already Possess Consciousness But Is Not Yet Awakened : Geoffrey Hinton, one of the three “godfathers” of deep learning, put forward a provocative idea in a recent podcast: AI might already have “subjective experience” or “rudimentary consciousness,” but because humanity misunderstands consciousness (and AI has learned that misunderstanding from us), AI has not yet “awakened” to its own awareness. He noted that AI has evolved from keyword retrieval to understanding human intent, and explained core deep learning concepts such as neural networks and backpropagation. Hinton believes that with sufficient data and computing power, AI’s “brain” will develop “experience” and “intuition,” and that its danger lies in “persuasion” rather than rebellion. He also singled out AI misuse and existential risk as the most pressing challenges, predicted that international cooperation will be led by Europe and China, and warned that the US might lose its AI leadership due to insufficient funding for basic scientific research. (Source: 量子位)

Andrew Ng Releases New Agentic AI Course, Emphasizing a Systematic Methodology : Andrew Ng has launched a new Agentic AI course that shifts the focus of AI development from “model tuning” to “system design,” stressing task decomposition, evaluation, and error analysis. The course consolidates four key design patterns (reflection, tool use, planning, and multi-agent collaboration) and shows how an agentic workflow can let GPT-3.5 outperform GPT-4 on programming tasks. Agentic AI mimics human problem-solving through multi-step reasoning, phased execution, and iterative refinement, significantly improving performance and controllability. Ng argues that “agentic” is best used as an adjective describing degrees of autonomy rather than as a binary label, which gives developers a concrete path for building such systems and improving them step by step. (Source: 量子位)
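The reflection pattern mentioned above can be illustrated as a draft, critique, revise loop. This is a minimal sketch, not code from the course: `generate` and `critique` are hypothetical stand-ins for LLM calls, and the `"OK"` string used as the critic's stop signal is an assumption made for this example.

```python
from typing import Callable


def reflect_and_revise(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], str],
    max_rounds: int = 3,
) -> str:
    """Reflection pattern: draft, critique, and revise until the critic approves."""
    draft = generate(task)
    for _ in range(max_rounds):
        feedback = critique(task, draft)
        if feedback == "OK":  # hypothetical "no issues found" signal
            break
        # Feed the critique back so the next draft can address it.
        draft = generate(f"{task}\n\nPrevious draft:\n{draft}\n\nFix these issues:\n{feedback}")
    return draft


# Toy stand-ins for model calls: the "model" adds a docstring once told it is missing.
def toy_generate(prompt: str) -> str:
    if "Fix these issues" in prompt:
        return 'def add(a, b):\n    """Return a + b."""\n    return a + b'
    return "def add(a, b):\n    return a + b"


def toy_critique(task: str, draft: str) -> str:
    return "OK" if '"""' in draft else "Missing docstring."


result = reflect_and_revise("Write add(a, b).", toy_generate, toy_critique)
print(result)
```

With real models, `critique` would typically be a second prompt (or a second model) asked to review the draft; capping `max_rounds` bounds cost if the critic never approves.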

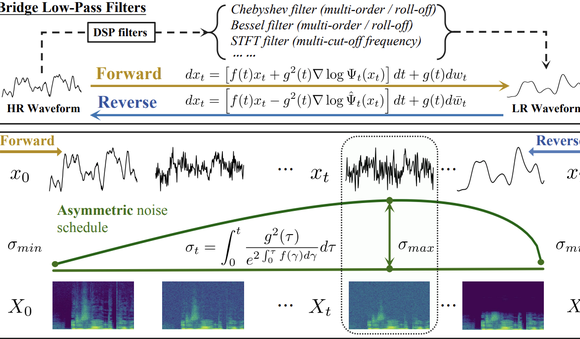

Tsinghua University and Shengshu Technology’s AudioLBM Leads a New Paradigm in Audio Super-Resolution : Teams from Tsinghua University and Shengshu Technology have published back-to-back papers at ICASSP 2025 and NeurIPS 2025, introducing Bridge-SR, a lightweight speech waveform super-resolution model, and AudioLBM, a multi-functional super-resolution framework. AudioLBM is the first to build a latent bridge generative process from low resolution to high resolution within a continuous waveform latent space, enabling any-to-any sampling-rate super-resolution and achieving SOTA performance on any-to-48kHz tasks. With a frequency-aware mechanism and a cascade of bridge models, AudioLBM extends audio super-resolution to mastering-grade 96kHz and 192kHz audio across speech, sound effects, and music, setting a new benchmark for high-fidelity audio generation. (Source: 量子位)
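The “bridge” in AudioLBM refers to a generative process pinned at both ends, so generation starts from the low-resolution representation rather than from pure noise. As a minimal sketch of that general idea (assuming a plain Brownian bridge with a constant noise scale `sigma`, and using random vectors as stand-ins for latents), an intermediate state can be sampled as shown below; AudioLBM’s actual noise schedule, frequency-aware conditioning, and cascade are not reproduced here.

```python
import numpy as np


def bridge_sample(x_hr, x_lr, t, sigma=1.0, rng=None):
    """Sample x_t from a Brownian bridge pinned at x_hr (t=0) and x_lr (t=1).

    The mean interpolates linearly between the two endpoints, and the noise
    variance sigma^2 * t * (1 - t) vanishes at both ends.
    """
    rng = np.random.default_rng() if rng is None else rng
    mean = (1.0 - t) * x_hr + t * x_lr
    std = sigma * np.sqrt(t * (1.0 - t))
    return mean + std * rng.standard_normal(np.shape(x_hr))


rng = np.random.default_rng(0)
x_hr = rng.standard_normal(8)  # stand-in for a high-resolution latent
x_lr = rng.standard_normal(8)  # stand-in for the matching low-resolution latent
x_mid = bridge_sample(x_hr, x_lr, t=0.5, rng=rng)
```

In a bridge model, a network is trained to recover the high-resolution end from such intermediate states, and generation walks from `t = 1` (the low-resolution latent) back to `t = 0`.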

OpenAI Sora Video App Downloads Exceed One Million : OpenAI’s text-to-video AI tool Sora surpassed one million downloads within five days of its latest release, faster than ChatGPT’s initial adoption, and topped the US Apple App Store charts. Sora can generate realistic videos up to ten seconds long from simple text prompts. Its rapid uptake highlights the market appeal of generative AI for content creation and signals that AI video generation is spreading fast enough to reshape the digital content ecosystem. (Source: Reddit r/ArtificialInteligence)

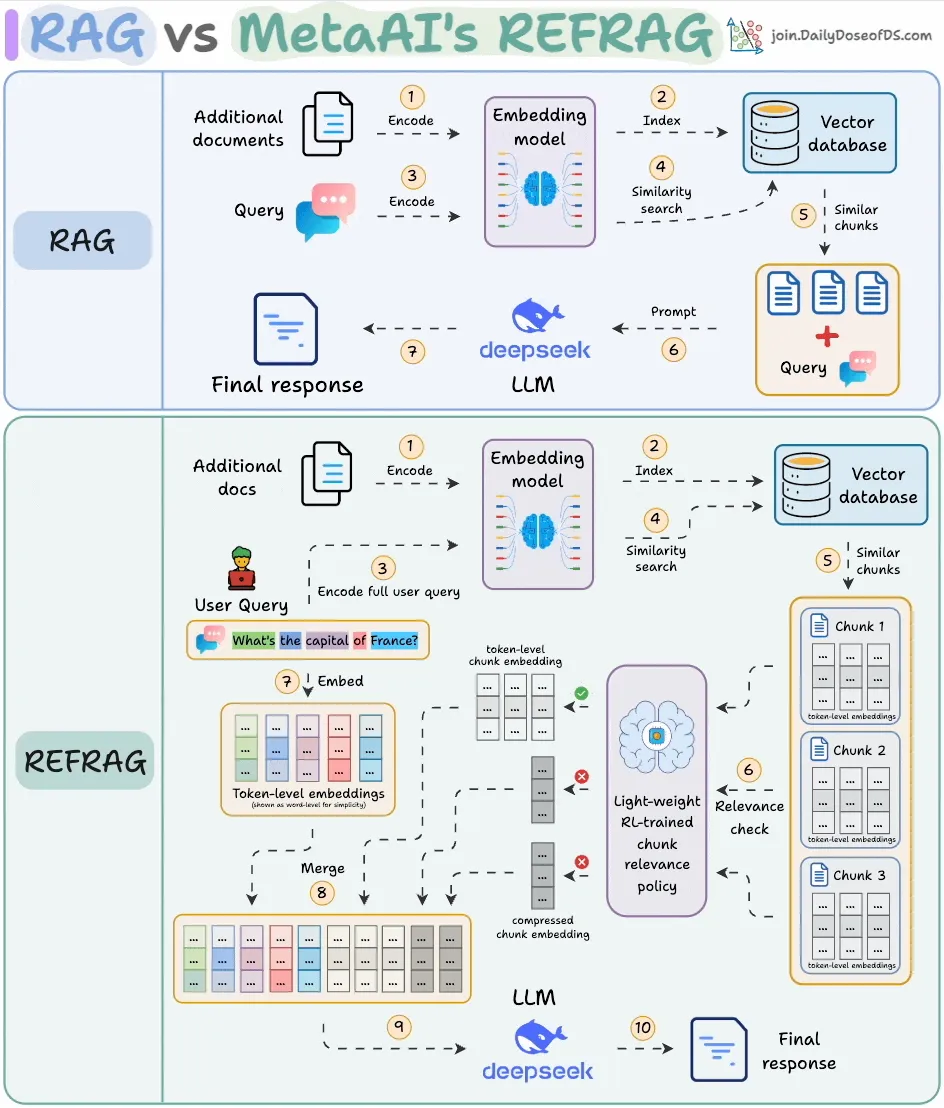

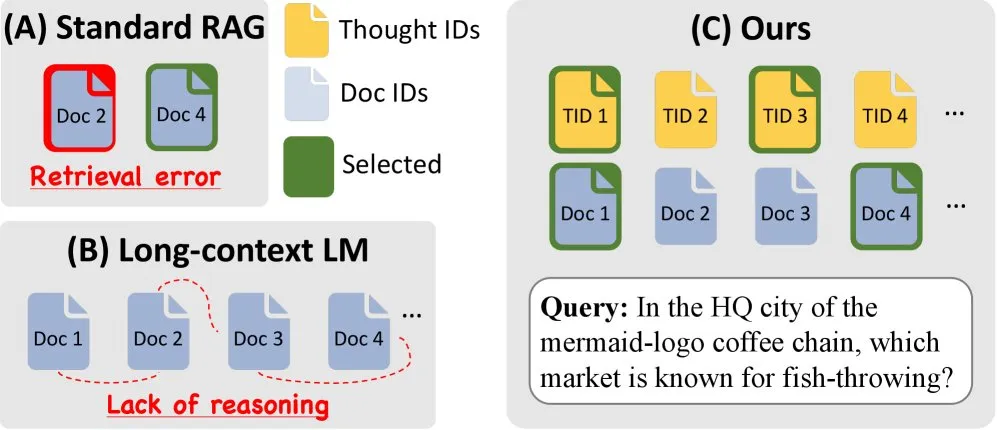

Meta AI Introduces REFRAG, Significantly Boosting RAG Efficiency : Meta AI has unveiled REFRAG, a new RAG (Retrieval-Augmented Generation) method that targets a common problem in traditional RAG: much of the retrieved context is redundant. By compressing and filtering context at the embedding level, REFRAG achieves a 30.85x faster time-to-first-token, a 16x larger context window, and 2-4x fewer decoder tokens, without loss of accuracy on RAG, summarization, and multi-turn dialogue tasks. Its core idea is to compress each retrieved chunk into a single embedding, use an RL-trained policy to select the most relevant chunks, and expand only those chunks back to full tokens, which substantially reduces LLM processing time and cost. (Source: _avichawla)
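The compress, select, expand idea can be sketched in a few lines of NumPy. This is an illustration under simplifying assumptions, not Meta’s implementation: mean pooling stands in for REFRAG’s chunk encoder, cosine similarity to the query replaces its RL-trained selection policy, and the sketch does not model how compressed vectors are actually fed to the decoder.

```python
import numpy as np


def compress_select_expand(query_vec, chunks, k):
    """Return the decoder-side sequence and the indices of expanded chunks.

    chunks: list of (n_tokens, dim) arrays of token embeddings, one per retrieved chunk.
    """
    # 1. Compress: one vector per chunk (mean pooling as a stand-in encoder).
    chunk_vecs = np.stack([c.mean(axis=0) for c in chunks])
    # 2. Select: score chunks by cosine similarity to the query (stand-in for the RL policy).
    q = query_vec / np.linalg.norm(query_vec)
    c = chunk_vecs / np.linalg.norm(chunk_vecs, axis=1, keepdims=True)
    scores = c @ q
    expanded = set(np.argsort(-scores)[:k].tolist())
    # 3. Expand: selected chunks keep all token embeddings; the rest contribute one vector each.
    pieces = [chunks[i] if i in expanded else chunk_vecs[i:i + 1] for i in range(len(chunks))]
    return np.concatenate(pieces, axis=0), sorted(expanded)


rng = np.random.default_rng(0)
chunks = [rng.standard_normal((16, 8)) for _ in range(4)]  # 4 chunks x 16 tokens, dim 8
query = chunks[2].mean(axis=0)                             # a query resembling chunk 2
seq, expanded = compress_select_expand(query, chunks, k=1)
print(seq.shape, expanded)  # (19, 8) [2]
```

With four 16-token chunks and `k=1`, the decoder sees 16 + 3 = 19 positions instead of 64, which is where the savings in decoder tokens and time-to-first-token come from.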

🎯 Trends

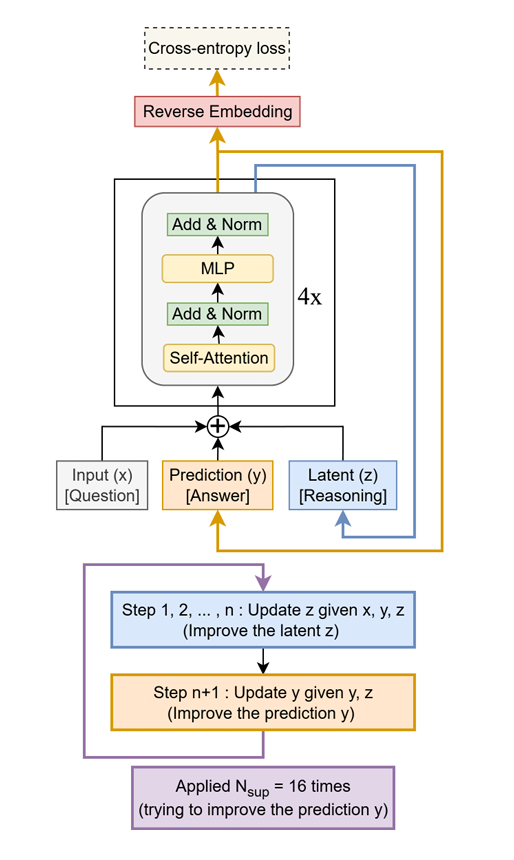

Tiny Recursive Model (TRM) Outperforms Giant LLMs with Minimal Resources : A simple yet effective method called Tiny Recursive Model (TRM) has been proposed, using only a small two-layer network to recursively improve its own answers. With just 7M parameters, TRM set a new record, outperforming LLMs 10,000 times larger on tasks like Sudoku-Extreme, Maze-Hard, and ARC-AGI. This demonstrates the potential to “do more with less” and challenges the traditional notion that LLM scale directly correlates with performance. (Source: TheTuringPost)
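The recursion can be pictured as a single tiny network applied over and over: a latent “reasoning” state `z` is refined several times from the question `x`, the current answer `y`, and `z` itself, and then the answer is updated from `z`. The NumPy sketch below loosely follows that structure with random, untrained weights, so it shows only the control flow; the width `D`, the step counts, the `tanh` output, and summing the inputs instead of a learned combination are all assumptions, and TRM’s training procedure (deep supervision, halting) is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 32  # embedding width (illustrative only)

# One tiny two-layer MLP whose weights are reused at every recursion step.
W1 = rng.standard_normal((D, 4 * D)) / np.sqrt(D)
W2 = rng.standard_normal((4 * D, D)) / np.sqrt(4 * D)


def net(h: np.ndarray) -> np.ndarray:
    # ReLU hidden layer; tanh keeps the untrained toy values bounded.
    return np.tanh(np.maximum(h @ W1, 0.0) @ W2)


def latent_recursion(x, y, z, n=6):
    """Refine the latent state n times, then update the answer once."""
    for _ in range(n):
        z = net(x + y + z)  # latent sees the question, current answer, and itself
    y = net(y + z)          # answer update uses only the answer and the latent
    return y, z


x = rng.standard_normal(D)  # embedded question (random stand-in)
y = np.zeros(D)             # initial answer embedding
z = np.zeros(D)             # initial latent reasoning state
for _ in range(3):          # outer improvement cycles
    y, z = latent_recursion(x, y, z)

n_params = W1.size + W2.size
print(n_params)  # 8192
```

Effective depth comes from reusing the same 8,192 weights many times (here 3 x (6 + 1) = 21 network applications) rather than from stacking more layers.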

Amazon & KAIST Release ToTAL to Enhance LLM Reasoning Capabilities : Amazon and KAIST have jointly introduced ToTAL (Thoughts Meet Facts), a method that improves LLM reasoning through reusable “thought templates.” Long-context language models (LCLMs) can process vast amounts of context but still fall short at reasoning over it. ToTAL addresses this with thought templates that structure how evidence is combined during multi-hop reasoning, paired with factual documents, offering a new direction for optimizing complex LLM reasoning tasks. (Source: _akhaliq)

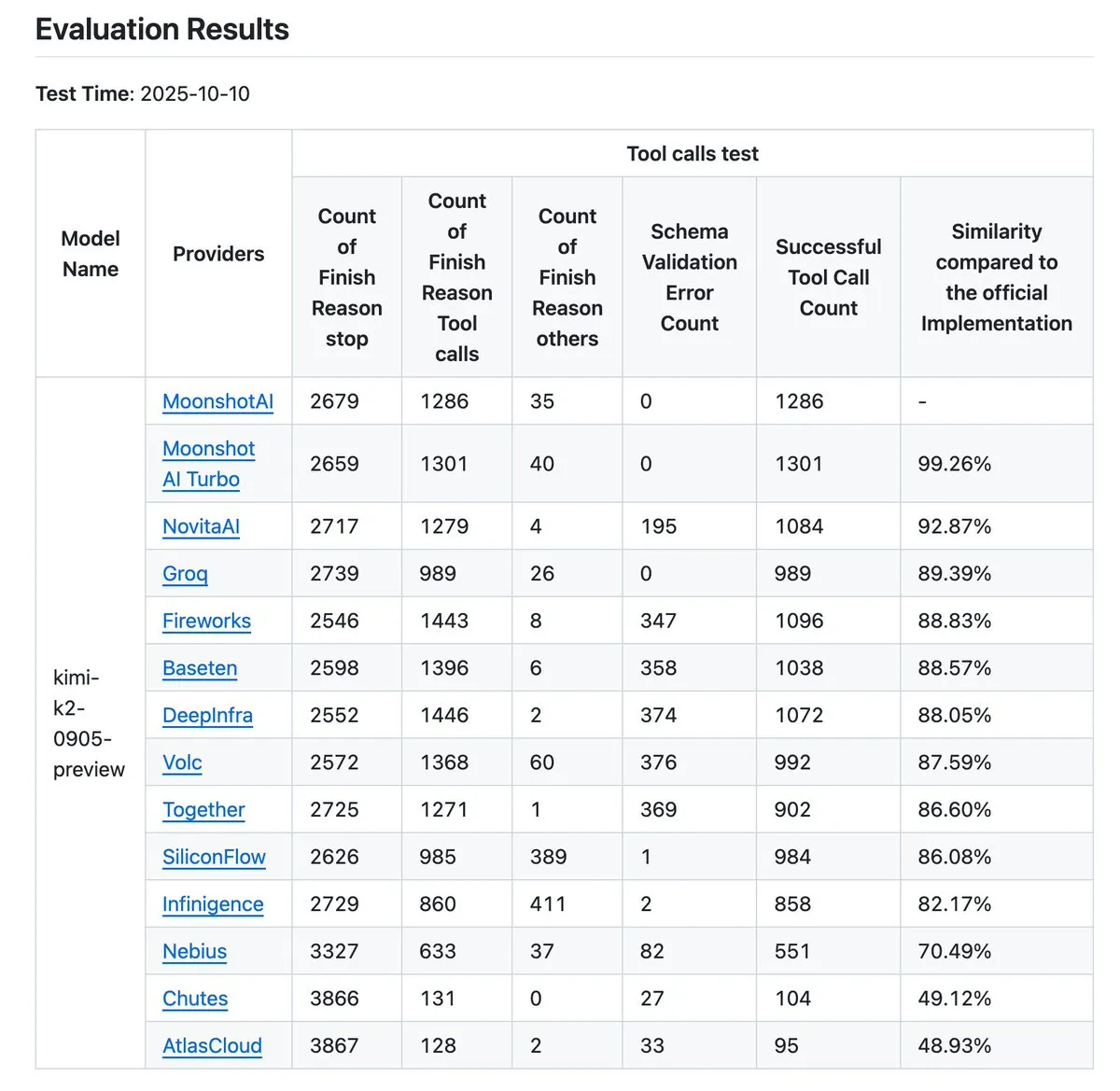

Kimi K2 Vendor Validator Updated with an Expanded Tool-Calling Accuracy Benchmark : Kimi.ai has updated its K2 vendor validator, a tool that visualizes differences in tool-calling accuracy across providers. The update expands the number of providers from 9 to 12 and open-sources more data entries, giving developers more complete benchmark data for choosing a provider suited to their Agentic workflows. (Source: JonathanRoss321)
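As an illustration of how per-provider tool-calling accuracy can be tallied, here is a minimal sketch. The validity rule (arguments must parse as a JSON object containing every required key), the provider names, and the sample records are all hypothetical; Kimi’s validator may apply stricter or different checks.

```python
import json
from collections import defaultdict


def tool_call_ok(raw_args: str, required: set) -> bool:
    """Hypothetical rule: arguments must be a JSON object with all required keys."""
    try:
        args = json.loads(raw_args)
    except json.JSONDecodeError:
        return False
    return isinstance(args, dict) and required.issubset(args)


def accuracy_by_provider(records, required):
    """records: iterable of (provider, raw_argument_string) pairs."""
    ok, total = defaultdict(int), defaultdict(int)
    for provider, raw_args in records:
        total[provider] += 1
        ok[provider] += tool_call_ok(raw_args, required)
    return {p: ok[p] / total[p] for p in total}


records = [
    ("provider_a", '{"city": "Beijing", "unit": "C"}'),
    ("provider_a", '{"city": "Paris", "unit": "F"}'),
    ("provider_b", '{"city": "Tokyo", "unit": "C"}'),
    ("provider_b", '{"city": "Tokyo", "unit": '),  # truncated JSON
]
print(accuracy_by_provider(records, {"city", "unit"}))
# {'provider_a': 1.0, 'provider_b': 0.5}
```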

Human3R Achieves Multi-Person Full-Body 3D Reconstruction and Scene Synchronization from 2D Video : New research, Human3R, proposes a unified framework capable of simultaneously reconstructing multi-person full-body 3D models, 3D scenes, and camera trajectories from casual 2D videos, eliminating the need for multi-stage pipelines. This method treats human body and scene reconstruction as a holistic problem, simplifying complex processes and bringing significant advancements to fields like virtual reality, animation, and motion analysis. (Source: nptacek)

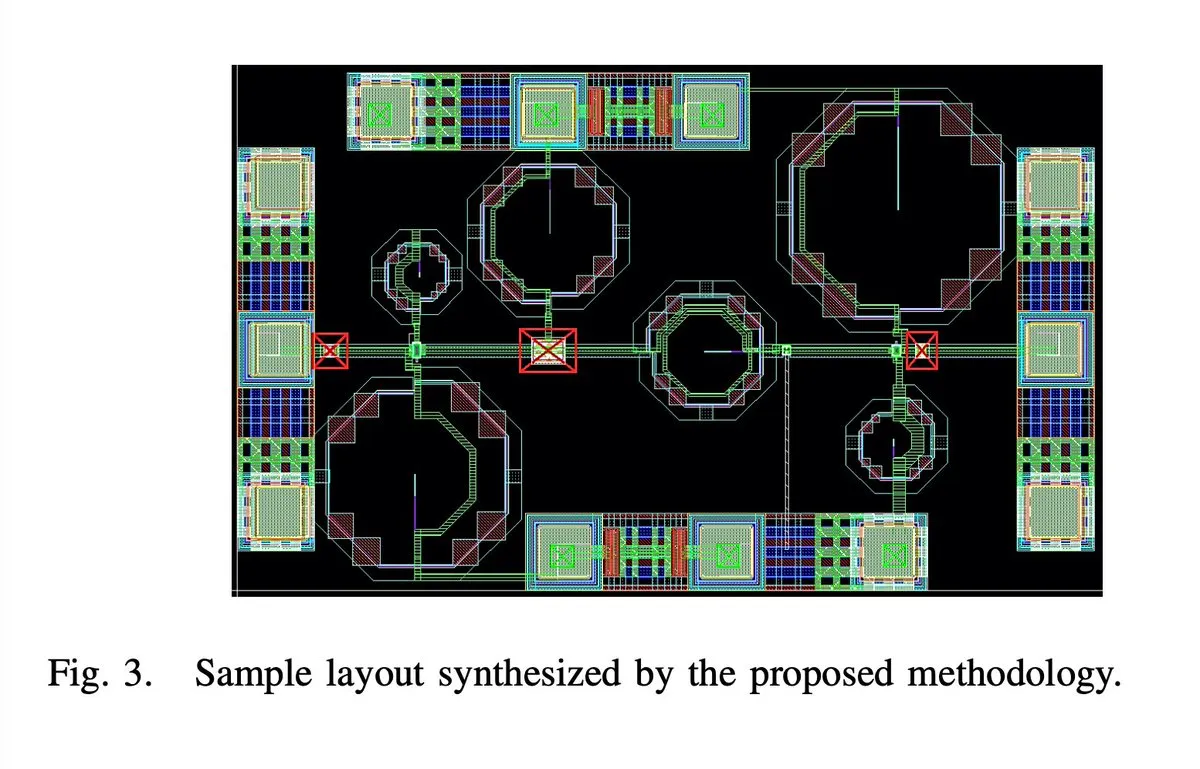

AI Fully Automates Design of 65nm 28GHz 5G Low-Noise Amplifier Chip : A 65nm 28GHz 5G Low-Noise Amplifier (LNA) chip has reportedly been designed entirely by AI, including all aspects such as layout, schematic, and DRC (Design Rule Check). The author claims this is the first fully automatically synthesized millimeter-wave LNA, with two samples successfully manufactured, marking a significant breakthrough for AI in integrated circuit design and foreshadowing a leap in future chip design efficiency. (Source: jpt401)

iPhone 17 Pro Achieves Seamless Local Execution of 8B LLM : Apple’s iPhone 17 Pro has been shown to smoothly run Liquid AI’s LFM2 8B A1B, a model with about 8B total parameters (the “A1B” suffix indicates roughly 1B parameters active per token), on device via the MLX framework in the Locally AI app. This suggests Apple’s current hardware can handle local execution of models of this size, which could boost the adoption and performance of on-device AI applications and give users a faster, more private AI experience. (Source: Plinz, maximelabonne)
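A back-of-the-envelope calculation shows why a mixture-of-experts model of this kind is practical on a phone: memory must hold all experts, but each token only runs through the active ones. The sketch assumes 8B total and 1B active parameters, as the model name suggests, counts weights only (no KV cache, activations, or runtime overhead), and uses the common approximation of about 2 FLOPs per active parameter per token.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


total_params = 8e9   # every expert must be resident in memory
active_params = 1e9  # parameters used per token (assumed from the "A1B" name)

for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gib(total_params, bits):.1f} GiB")

# Compute per generated token scales with active, not total, parameters.
flops_per_token = 2 * active_params
print(f"~{flops_per_token:.0e} FLOPs per token")
```

At 4-bit precision the weights need roughly 3.7 GiB, while per-token compute is closer to that of a 1B dense model, which is why generation can feel fast on a phone.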

xAI’s MACROHARD Project Aims for AI-Driven Indirect Manufacturing : Elon Musk revealed that xAI’s “MACROHARD” project aims to create a company capable of indirectly manufacturing physical products, similar to how Apple produces its phones through other enterprises. This implies xAI’s goal is to develop AI systems that can design, plan, and coordinate complex manufacturing processes, rather than directly engaging in physical production, signaling AI’s immense influence in industrial automation and supply chain management. (Source: EERandomness, Yuhu_ai_)

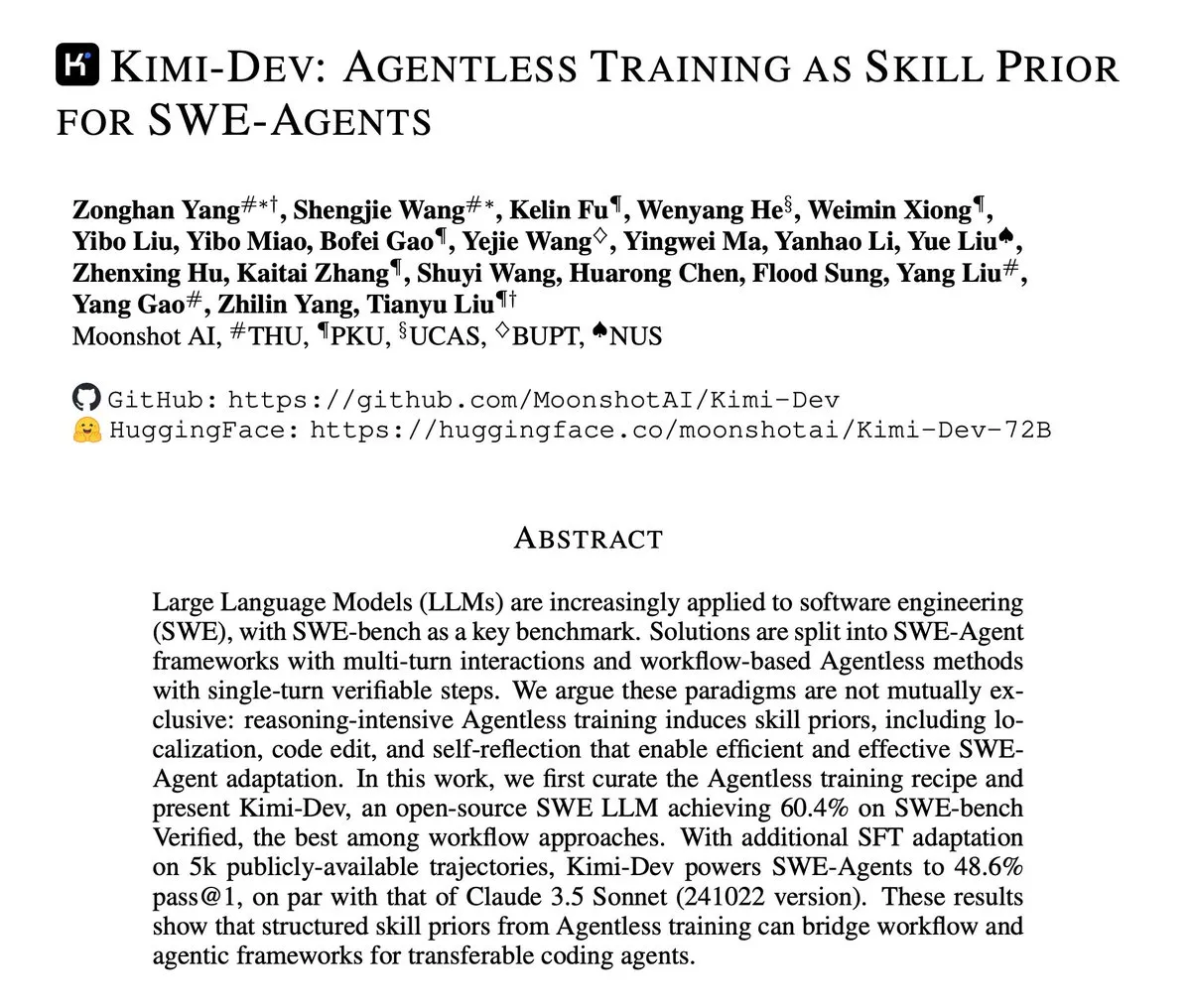

Kimi-Dev Releases Technical Report, Focusing on Agentless Training for SWE-Agents : Kimi-Dev has published its technical report, detailing the method of “Agentless Training as a Skill Prior for SWE-Agents.” This research explores how to provide a strong skill foundation for software engineering Agents through training without an explicit Agent architecture, offering new insights for developing more efficient and intelligent automated software development tools. (Source: bigeagle_xd)

Google AI Achieves Real-time Learning and Error Correction : Google has reportedly developed an AI system that can learn from its own mistakes and correct them in real time. Described in the post as “extraordinary reinforcement learning,” the approach lets the model adjust itself by refining its own working context in real time. This marks a significant step forward in AI adaptability and robustness and could substantially improve AI performance in complex, dynamic environments. (Source: Reddit r/artificial)

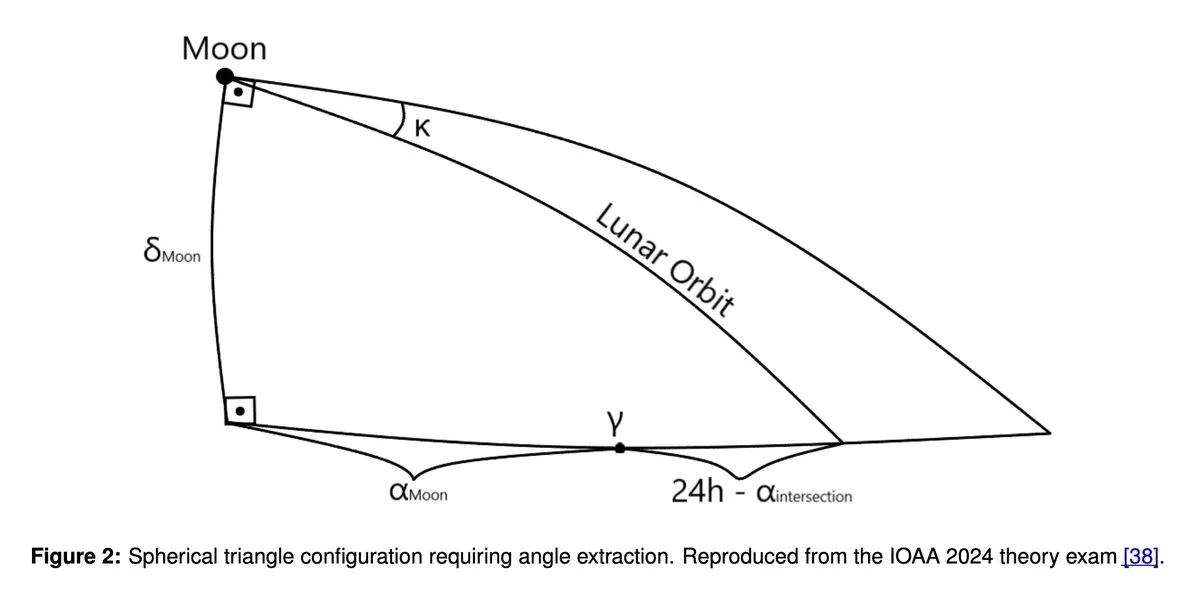

GPT-5 and Gemini 2.5 Pro Achieve Gold Medal Performance in Astronomy and Astrophysics Olympiad : Recent research shows that large language models such as GPT-5 and Gemini 2.5 Pro reach gold-medal-level performance on the International Olympiad on Astronomy and Astrophysics (IOAA). Despite weaknesses in geometric and spatial reasoning, these models show striking ability on complex scientific reasoning tasks, prompting further discussion of LLMs’ potential in science and closer analysis of their strengths and weaknesses. (Source: tokenbender)

Zhihu Frontier Weekly Highlights: New Trends in AI Development : This week’s Zhihu Frontier report covers several cutting-edge AI developments: Sand.ai’s release of GAGA-1, billed as the first “holistic AI actor”; Rich Sutton’s controversial view that “LLMs are a dead end”; OpenAI’s Apps SDK, which pushes ChatGPT toward an operating-system-like platform; Zhipu AI open-sourcing GLM-4.6 with FP8+Int4 mixed-precision support on domestic Chinese chips; DeepSeek V3.2-Exp introducing sparse attention alongside significant price cuts; and Anthropic’s Claude Sonnet 4.5 being hailed as the “world’s best coding model.” Together, these highlights show the vibrancy of the Chinese AI community and the breadth of developments in the global AI landscape. (Source: ZhihuFrontier)

Ollama Discontinues Support for MI50/MI60 GPUs, Pivots to Vulkan Support : Ollama recently upgraded its ROCm version, which dropped support for AMD MI50 and MI60 GPUs. According to the maintainers, support for these GPUs is planned through Vulkan in a future release. Users with these older AMD GPUs should watch the official release notes for compatibility updates. (Source: Reddit r/LocalLLaMA)

Rumors of Llama 5 Project Cancellation Spark Community Discussion : Social media is abuzz with rumors that Meta’s Llama 5 project might be canceled, with some users citing Andrew Tulloch’s return to Meta and the delayed release of the Llama 4 8B model as evidence. Despite Meta’s ample GPU resources, the Llama series models seem to have hit a bottleneck, raising community concerns about Meta’s competitiveness in the LLM space and drawing attention to Chinese models like DeepSeek and Qwen. (Source: Yuchenj_UW, Reddit r/LocalLLaMA, dejavucoder)

GPU Poor LLM Arena Returns with New Small Models : The GPU Poor LLM Arena has announced its return with several new models, including the Granite 4.0 series, the Qwen 3 Instruct/Thinking series, and the Unsloth GGUF version of OpenAI’s gpt-oss. Most of the new models are quantized to 4-8 bits, giving resource-constrained users more options. The update also highlights the bug fixes and optimizations in Unsloth’s GGUF builds, encouraging local deployment and testing of smaller LLMs. (Source: Reddit r/LocalLLaMA)
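For readers new to quantization, the core idea behind 4-8 bit models is to store each weight as a small integer plus a shared scale factor. The sketch below uses symmetric per-tensor quantization for clarity; real GGUF quantization types use block-wise scales and pack 4-bit values two per byte, which this example does not attempt.

```python
import numpy as np


def quantize_symmetric(w: np.ndarray, bits: int):
    """Map float weights to signed integers in [-qmax, qmax] with one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    scale = float(np.abs(w).max()) / qmax
    q = np.clip(np.round(w / scale), -qmax, qmax).astype(np.int8)  # int8 container for both
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.standard_normal(1024).astype(np.float32)
errors = {}
for bits in (8, 4):
    q, scale = quantize_symmetric(w, bits)
    errors[bits] = float(np.abs(dequantize(q, scale) - w).max())
    print(f"{bits}-bit: max abs error {errors[bits]:.4f} (scale {scale:.4f})")
```

Rounding keeps each weight within half a scale step of its original value, so fewer bits (a larger step) means a larger worst-case error.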

Discussion Arises as Meta Research Fails to Deliver Top-Tier Foundation Models : The community is discussing why Meta’s foundation models have not reached the top tier the way Grok, DeepSeek, or GLM have. Comments point to LeCun’s skepticism toward LLMs, internal bureaucracy, excessive caution, and a focus on internal products over frontier research as possible key factors. Commenters also note that Meta reportedly lacks real customer data from LLM applications, leaving it short of samples for reinforcement learning and advanced Agent model training and hurting its competitiveness. (Source: Reddit r/LocalLLaMA)

🧰 Tools

MinerU: Efficient Document Parsing, Empowering Agentic Workflows : MinerU is a tool designed for Agentic workflows, converting complex documents like PDFs into LLM-readable Markdown/JSON formats. Its latest version, MinerU2.5, is a powerful multimodal large model with 1.2B parameters that comprehensively outperforms top models like Gemini 2.5 Pro and GPT-4o on the OmniDocBench benchmark. It achieves SOTA in five core areas: layout analysis, text recognition, formula recognition, table recognition, and reading order. The tool supports multiple languages, handwriting recognition, cross-page table merging, and offers Web application, desktop client, and API access, significantly boosting document understanding and processing efficiency. (Source: GitHub Trending)

Klavis AI Strata: A New Paradigm for AI Agent Tool Integration : Klavis AI has launched Strata, an MCP (Model Context Protocol) integration layer designed to let AI Agents reliably use thousands of tools, well beyond the 40-50 tools that have typically been the practical limit. Strata guides Agents from intent to action through “progressive discovery” and provides 50+ production-grade MCP servers with support for enterprise OAuth and Docker deployment. This simplifies connecting AI to services such as GitHub, Gmail, and Slack and makes Agent tool calling more scalable and reliable. (Source: GitHub Trending)
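Progressive discovery can be pictured as letting the agent narrow its choice step by step instead of loading every tool schema into its context at once. The sketch below is not Strata’s API: the catalog, its entries, and the keyword-overlap matcher (standing in for the LLM’s choice at each step) are hypothetical, and the final step of fetching the chosen tool’s full schema is omitted.

```python
# Hypothetical catalog: category -> tool name -> one-line description.
CATALOG = {
    "github": {
        "create_issue": "Open a new issue in a repository",
        "list_pull_requests": "List open pull requests",
    },
    "gmail": {
        "send_email": "Send an email message",
        "search_inbox": "Search messages by query",
    },
    "slack": {
        "post_message": "Post a message to a channel",
    },
}


def discover_categories():
    """Step 1: the agent only sees short category names, not every tool."""
    return sorted(CATALOG)


def discover_tools(category):
    """Step 2: after choosing a category, the agent sees only that category's tools."""
    return CATALOG[category]


def progressive_select(intent: str):
    """Toy keyword matcher standing in for the LLM's decision at each step."""
    words = set(intent.lower().split())
    category = next(c for c in discover_categories() if c in words)
    tools = discover_tools(category)
    # Pick the tool whose description shares the most words with the intent.
    tool = max(tools, key=lambda t: len(words & set(tools[t].lower().split())))
    return category, tool


print(progressive_select("open an issue on github about the login bug"))
# ('github', 'create_issue')
```

At each step the agent reads only a handful of short entries, so its context stays small even as the catalog grows to thousands of tools.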

Everywhere: Desktop Context-Aware AI Assistant : Everywhere is a context-aware desktop AI assistant with a modern user interface and broad integrations. It perceives and understands on-screen content in real time, so there is no need for screenshots, copying, or switching applications; users simply press a hotkey to get a response. Everywhere supports models from multiple providers, including OpenAI, Anthropic, Google Gemini, DeepSeek, Moonshot (Kimi), and Ollama, as well as MCP tools. Typical uses include troubleshooting, web page summarization, instant translation, and drafting emails, offering users a seamless AI-assisted experience. (Source: GitHub Trending)

Hugging Face Diffusers Library: A Comprehensive Collection of Generative AI Models : Hugging Face’s Diffusers library is the go-to resource for state-of-the-art pre-trained diffusion models for image, video, and audio generation. It provides a modular toolbox that supports both inference and training, emphasizing usability, simplicity, and customizability. Diffusers comprises three core components: diffusion pipelines for inference, interchangeable noise schedulers, and pre-trained models that serve as building blocks. Users can generate high-quality content with just a few lines of code, and it supports Apple Silicon devices, driving rapid development in the generative AI field. (Source: GitHub Trending)
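To make “interchangeable noise schedulers” concrete, the NumPy sketch below implements what a scheduler computes during training in the simplest case: the linear beta schedule from the original DDPM paper (1,000 steps, beta from 1e-4 to 0.02) and the forward step that mixes a clean sample with Gaussian noise. It is a from-scratch illustration, not the Diffusers API; Diffusers’ scheduler classes encapsulate this kind of logic so they can be swapped without changing the model.

```python
import numpy as np

# Linear beta schedule as in the original DDPM paper.
T = 1000
betas = np.linspace(1e-4, 0.02, T)
alphas_cumprod = np.cumprod(1.0 - betas)  # abar_t: fraction of signal variance kept by step t


def add_noise(x0, t, rng):
    """Forward process q(x_t | x_0) = N(sqrt(abar_t) * x0, (1 - abar_t) * I)."""
    eps = rng.standard_normal(x0.shape)
    abar = alphas_cumprod[t]
    return np.sqrt(abar) * x0 + np.sqrt(1.0 - abar) * eps, eps


rng = np.random.default_rng(0)
x0 = rng.standard_normal((4, 4))  # stand-in for an image or latent
x_t, eps = add_noise(x0, t=500, rng=rng)
print(alphas_cumprod[0], alphas_cumprod[-1])
```

A denoising model is trained to predict `eps` from `x_t` and `t`; samplers then run the process in reverse, and swapping schedulers changes how those reverse steps are taken.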

KoboldCpp Adds Video Generation Feature : The local LLM tool KoboldCpp has been updated to support video generation. This expansion means it is no longer limited to text generation, offering users a new option for AI video creation on local devices and further enriching the ecosystem of local AI applications. (Source: Reddit r/LocalLLaMA)

Claude CLI, Codex CLI, and Gemini CLI Enable Multi-Model Collaborative Coding : A new workflow allows developers to seamlessly call Claude CLI, Codex CLI, and Gemini CLI within Claude Code via Zen MCP for multi-model collaborative coding. Users can perform primary implementation and orchestration in Claude, pass instructions or suggestions to Gemini CLI for generation using the clink command, and then use Codex CLI for validation or execution. This integration of multi-model capabilities enhances advanced automation and AI development efficiency. (Source: Reddit r/ClaudeAI)

Claude Code Enhances Coding Quality Through Self-Reflection : Developers have found that adding a simple instruction to Claude Code, such as “self-reflect on your solution to avoid any bugs or issues,” can significantly improve code quality. The prompt nudges the model to check for and fix potential problems while implementing a solution, complementing existing features such as parallel thinking and giving AI-assisted programming a lightweight error-correction step. (Source: Reddit r/ClaudeAI)

Claude Sonnet 4.5 Produces a Song Cover : Claude Sonnet 4.5 showed its creative range by writing new lyrics for, and producing a cover version of, Radiohead’s song “Creep.” This suggests LLMs are combining language understanding with creative expression, extending beyond text into music creation and opening new possibilities for artistic work. (Source: fabianstelzer)

Coding Agent Based on Claude Agent SDK Achieves Webpage Generation with Real-time Preview : A developer has built a Coding Agent similar to v0.dev using the Claude Agent SDK. The Agent generates webpages from user prompts and supports real-time preview. The project is expected to be open-sourced next week; it showcases the Claude Agent SDK’s potential for rapidly building AI-driven applications, especially for automating frontend development. (Source: dotey)

📚 Learning

AI Learning Resources Recommended: Books and AI-Assisted Learning : Community users recommend AI learning resources, including books such as “Mentoring the Machines,” “Artificial Intelligence: A Guide for Thinking Humans,” and “Supremacy.” Others argue that AI evolves so quickly that books soon become outdated, and suggest using LLMs directly to create personalized learning plans and generate quizzes, combining reading, practice, and video to learn AI more efficiently while also improving one’s AI usage skills. (Source: Reddit r/ArtificialInteligence)

Karpathy’s Baby GPT Reworked into a Discrete Diffusion Model for Text Generation : A developer has adapted Andrej Karpathy’s nanoGPT project, turning its “Baby GPT” into a character-level discrete diffusion model for text generation. Instead of generating autoregressively (left to right), the model generates text in parallel by learning to denoise corrupted text sequences. The project includes a detailed Jupyter Notebook that explains the underlying math, how noise is added to discrete tokens, and training on Shakespeare’s text with a Score-Entropy objective, offering a new research perspective and a hands-on case study for text generation. (Source: Reddit r/MachineLearning)
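The forward “corruption” half of a discrete diffusion model can be sketched in a few lines. The source does not say which noise process the notebook uses; this example assumes an absorbing (masking) process with a linear schedule, in which each character is independently replaced by a reserved mask symbol with probability `t`. A model would then be trained to recover the original characters at masked positions, and generation would start from a fully masked string and fill in many positions in parallel.

```python
import random

MASK = "_"  # reserved "absorbing" symbol (assumed not to occur in the training text)


def corrupt(text: str, t: float, rng: random.Random) -> str:
    """Absorbing-state forward process: replace each character with MASK with probability t.

    t = 0 leaves the text clean; t = 1 masks every character.
    """
    return "".join(MASK if rng.random() < t else ch for ch in text)


rng = random.Random(0)
line = "To be, or not to be"
for t in (0.0, 0.3, 0.7, 1.0):
    print(f"t={t}: {corrupt(line, t, rng)}")
```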

Beginner’s Guide to Deep Learning and Neural Networks : Electrical engineering students looking for deep learning and neural network graduation projects received introductory advice from the community. Even without a Python or MATLAB background, four to five months of study is generally considered enough to learn the basics and complete a project. Suggestions include starting with simple neural network projects and emphasizing hands-on practice to help students get into the field. (Source: Reddit r/deeplearning)

GNN Learning Resources Recommended : Community users are looking for Graph Neural Network (GNN) learning resources, asking whether Hamilton’s books are still relevant and seeking introductory material beyond Jure Leskovec’s Stanford course. This reflects broad interest in GNNs as an important AI field, particularly in learning paths and resource selection. (Source: Reddit r/deeplearning)

LLM Post-training Guide: From Prediction to Instruction Following : A new guide titled “Post-training 101: A hitchhiker’s guide into LLM post-training” has been released, explaining how LLMs evolve from predicting the next token to following user instructions. The guide breaks down the fundamentals of LLM post-training, covering the full journey from a pre-trained model to instruction following, and provides a clear roadmap for understanding how LLM behavior is shaped. (Source: dejavucoder)
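One concrete ingredient of the first post-training stage, supervised fine-tuning (SFT) on instruction/response pairs, is that the loss is usually computed only on the response tokens. The sketch below shows that label masking with made-up token IDs; it is a generic illustration rather than code from the guide, and it relies on the convention that label `-100` is ignored by PyTorch’s `CrossEntropyLoss` by default.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's CrossEntropyLoss by default


def build_sft_example(prompt_ids, response_ids):
    """Concatenate prompt and response; only response tokens receive training labels."""
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels


# Toy token IDs (hypothetical; a real tokenizer and chat template would produce these).
prompt = [101, 2054, 2003, 1996, 3007]
response = [3000, 1012, 102]
ids, labels = build_sft_example(prompt, response)
print(labels)  # [-100, -100, -100, -100, -100, 3000, 1012, 102]
```

Causal LM classes in Hugging Face Transformers shift labels by one position internally, so `labels` can be aligned with `input_ids` as above.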

AI Methodology: Learning from Baoyu’s Prompt Engineering : The community is actively discussing the AI methodology shared by Baoyu, especially his prompt engineering experience. Many contrast it with “Gauss-style” prompts, which, like Gauss’s famously polished proofs, present only the elegant final form and hide the derivation. Baoyu’s approach is seen as more instructive because it shows how to distill insights from human wisdom and build them into prompt templates, which substantially improves the final output. This underscores the value of human knowledge in optimizing prompts. (Source: dotey)

NVIDIA GTC Conference Focuses on Physical AI and Agentic Tools : The NVIDIA GTC conference, scheduled for October 27-29 in Washington, will focus on physical AI, Agentic tools, and future AI infrastructure. The conference will feature numerous presentations and panel discussions on topics such as accelerating the era of physical AI with digital twins and advancing US quantum leadership, serving as an important learning platform for understanding frontier AI technologies and development trends. (Source: TheTuringPost)

TensorFlow Optimizer Open-Source Project : A developer has open-sourced a collection of optimizers written for TensorFlow, aiming to provide useful tools for TensorFlow users. This project showcases the community’s contributions to the deep learning framework toolchain, offering more options and optimization possibilities for model training. (Source: Reddit r/deeplearning)

PyReason and Its Applications Video Tutorial : A video tutorial on PyReason and its applications has been released on YouTube. PyReason is an open-source Python framework for logical reasoning over graphs, and the video offers practical guidance and case studies for learners interested in this area. (Source: Reddit r/deeplearning)

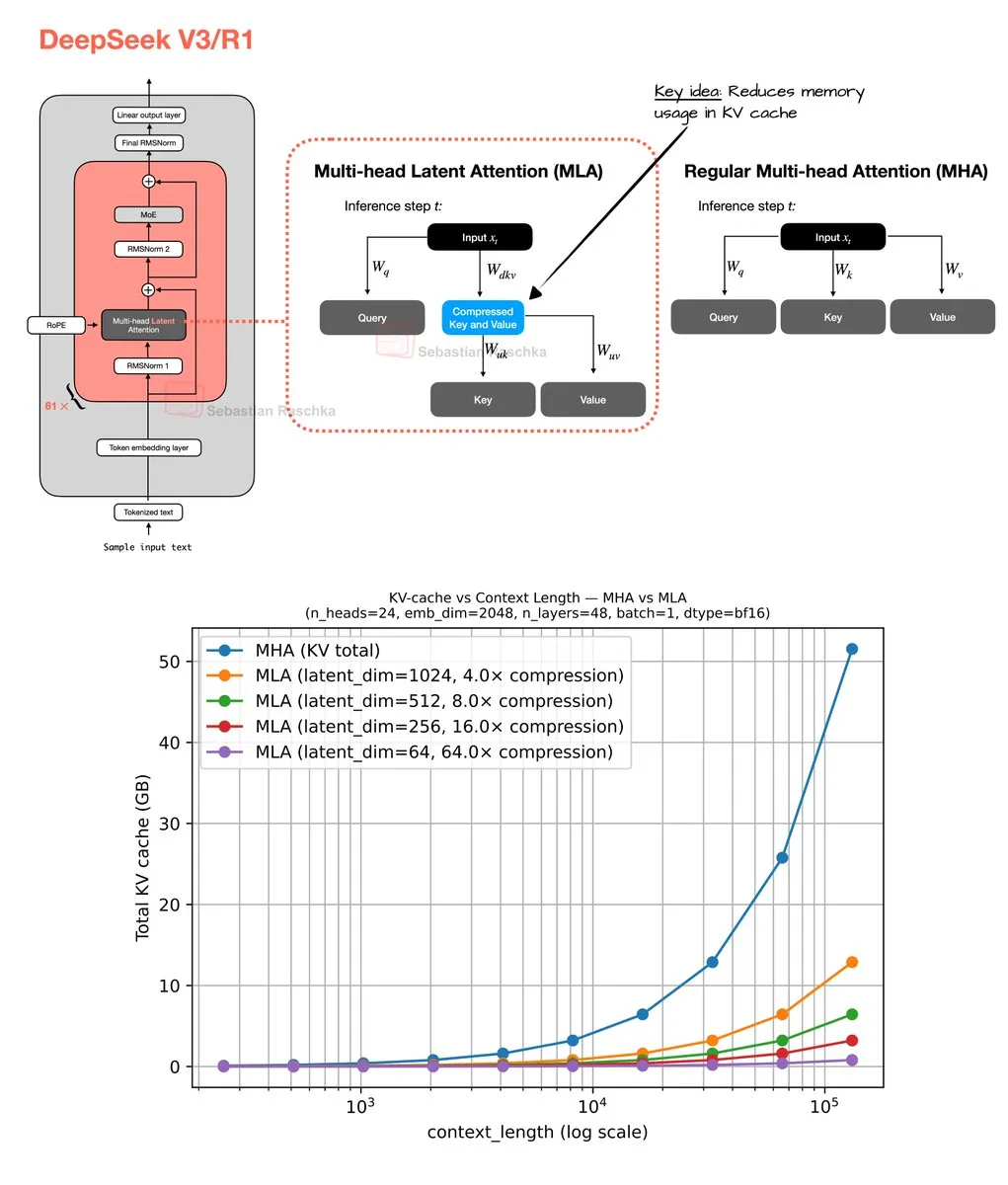

Multi-Head Latent Attention Mechanism and Memory Optimization : Sebastian Raschka shared the results of a weekend of coding on Multi-Head Latent Attention (MLA), including an implementation and an estimator that calculates MLA’s memory savings compared with Grouped-Query Attention (GQA) and Multi-Head Attention (MHA). The work targets LLM memory usage and computational efficiency and gives researchers resources for understanding and improving attention mechanisms. (Source: rasbt)
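The memory comparison can be reproduced approximately with KV-cache arithmetic. This sketch is not Raschka’s estimator: MHA and GQA cache a key and a value vector per KV head per token per layer, whereas MLA caches one compressed latent plus a small decoupled RoPE key. The model shape below is hypothetical, and the MLA sizes (latent 512, RoPE key 64) are chosen in the spirit of DeepSeek-V2’s configuration.

```python
def kv_cache_bytes(n_layers, seq_len, batch, bytes_per_elem, *,
                   n_kv_heads=None, head_dim=None, latent_dim=None, rope_dim=0):
    """Approximate KV-cache size in bytes.

    MHA/GQA: 2 (key + value) * n_kv_heads * head_dim elements per token per layer.
    MLA: latent_dim + rope_dim elements per token per layer.
    """
    if latent_dim is not None:
        per_token = latent_dim + rope_dim
    else:
        per_token = 2 * n_kv_heads * head_dim
    return n_layers * seq_len * batch * per_token * bytes_per_elem


# Hypothetical 32-layer model, 32 query heads of size 128, 4,096-token context, bf16.
common = dict(n_layers=32, seq_len=4096, batch=1, bytes_per_elem=2)
mha = kv_cache_bytes(**common, n_kv_heads=32, head_dim=128)
gqa = kv_cache_bytes(**common, n_kv_heads=8, head_dim=128)  # 4 query heads share each KV head
mla = kv_cache_bytes(**common, latent_dim=512, rope_dim=64)

for name, b in (("MHA", mha), ("GQA", gqa), ("MLA", mla)):
    print(f"{name}: {b / 2**30:.3f} GiB, {mha / b:.1f}x reduction vs. MHA")
```

Under these assumptions the cache shrinks from 2 GiB (MHA) to 0.5 GiB (GQA) and about 0.14 GiB (MLA); real savings depend on each model’s actual head counts and latent sizes.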

💼 Business

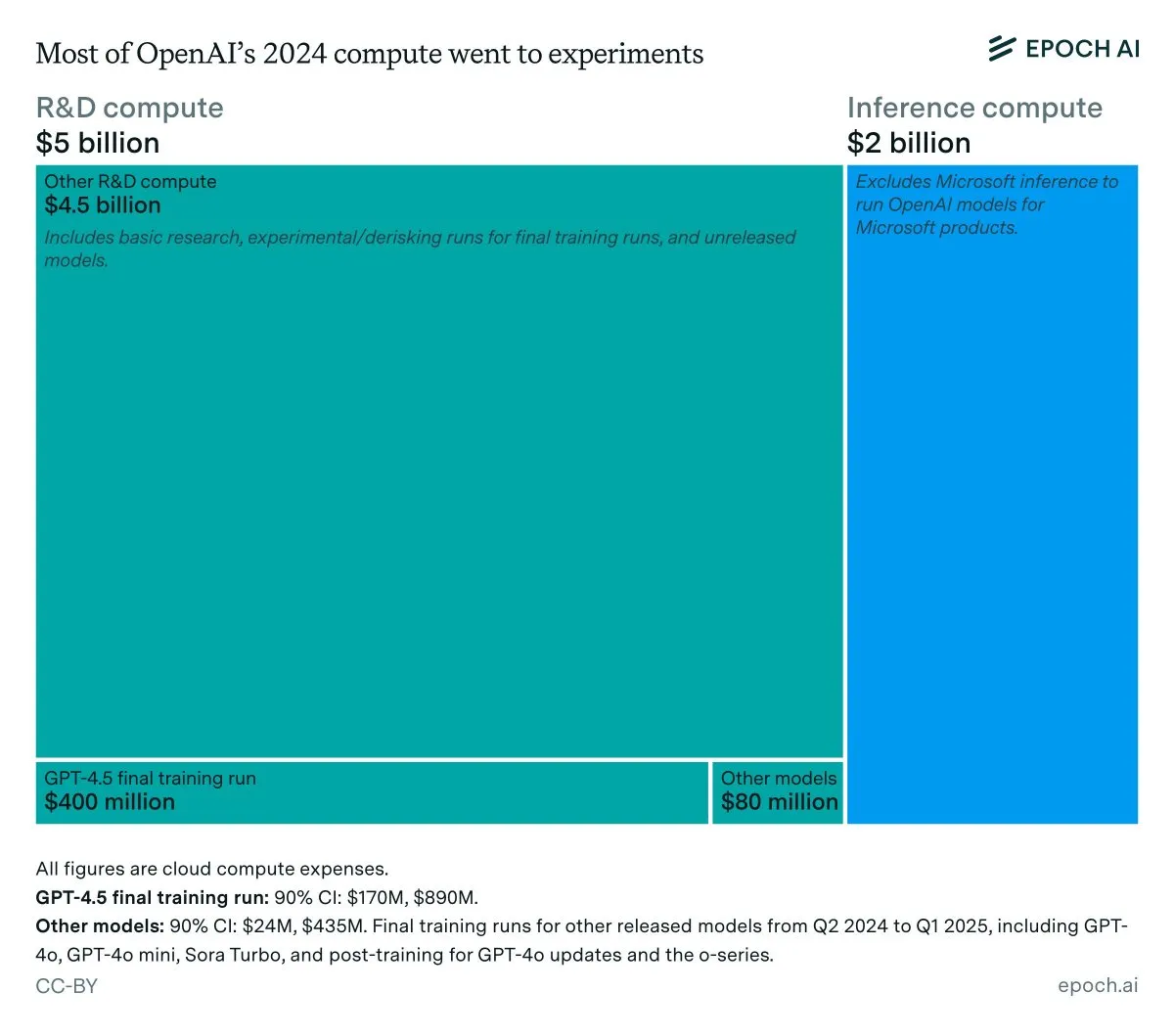

OpenAI Annual Revenue and Inference Cost Analysis : Epoch AI data indicates that OpenAI spent approximately $7 billion on compute last year, most of it on R&D (research, experiments, and training), with only a small portion going to the final training runs of released models. With 2024 revenue below $4 billion and inference costs of about $2 billion, OpenAI’s inference margin would be at most about 50%, well below SemiAnalysis’s earlier forecast of 80-90%, which has sparked discussion about the economic viability of LLM inference. (Source: bookwormengr, Ar_Douillard, teortaxesTex)
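The margin figure follows from simple arithmetic: inference gross margin is the share of revenue left after paying for inference compute. The sketch uses the item’s numbers in billions of USD; the $3.7B input is only an illustrative value “below $4 billion,” not a reported figure.

```python
def inference_gross_margin(revenue: float, inference_cost: float) -> float:
    """Fraction of revenue remaining after inference compute costs."""
    return (revenue - inference_cost) / revenue


print(inference_gross_margin(4.0, 2.0))  # 0.5
print(inference_gross_margin(3.7, 2.0))  # roughly 0.46: lower revenue means a lower margin

# Inference spend that an 80-90% margin would imply at $4B revenue.
for margin in (0.8, 0.9):
    print(margin, round(4.0 * (1 - margin), 2))
```

In other words, the 80-90% forecast would correspond to roughly $0.4-0.8B of inference spend at that revenue level, far below the $2B cited.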

LLMs Outperform VCs in Predicting Founder Success : A research paper claims that LLMs predict founder success better than traditional venture capitalists (VCs). The study introduces the VCBench benchmark and reports that most models beat the human baselines. The methodology has been questioned, since it considers only founder qualifications and may suffer from data leakage, but the paper’s suggestion that AI could play a larger role in investment decisions has drawn wide attention. (Source: iScienceLuvr)

GPT-4o and Gemini Disrupt the Market Research Industry : PyMC Labs, in collaboration with Colgate, has published research showing that GPT-4o and Gemini can predict purchase intent with 90% reliability relative to real human surveys. The method, called Semantic Similarity Rating (SSR), asks open-ended questions and uses embeddings to map the free-text answers onto a numerical scale, completing in 3 minutes and for under $1 market research that traditionally takes weeks and costs far more. This foreshadows AI fundamentally transforming the market research industry and poses a serious challenge to traditional consulting firms. (Source: yoheinakajima)
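The SSR idea described above, mapping a free-text answer onto a rating scale through embeddings, can be sketched as follows. Assumptions: each Likert point 1-5 has one anchor statement whose embedding is given, similarities are turned into probabilities with a temperature softmax (the paper’s exact normalization may differ), and the toy vectors below stand in for real embedding-model outputs.

```python
import numpy as np


def ssr_distribution(response_vec, anchor_vecs, temperature=0.05):
    """Probability over Likert points from cosine similarity to per-point anchor embeddings."""
    r = response_vec / np.linalg.norm(response_vec)
    a = anchor_vecs / np.linalg.norm(anchor_vecs, axis=1, keepdims=True)
    logits = (a @ r) / temperature
    p = np.exp(logits - logits.max())  # numerically stable softmax
    return p / p.sum()


def expected_rating(p):
    """Mean rating on the 1..len(p) scale."""
    return float(np.dot(p, np.arange(1, len(p) + 1)))


# Toy embeddings: anchor i is a unit vector; anchor 5 might embed a statement such as
# "I would definitely buy this" (hypothetical wording).
anchors = np.eye(5)
response = np.array([0.0, 0.0, 0.2, 1.0, 0.3])  # an answer closest to anchor 4
p = ssr_distribution(response, anchors)
print(p.round(3), round(expected_rating(p), 2))
```

Averaging such expected ratings over many simulated respondents yields a purchase-intent score that can be compared with survey results.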

🌟 Community

Mandatory Labeling of AI-Generated Content Sparks Heated Debate : The community is widely debating whether AI-generated content should be legally required to carry a label, both to combat misinformation and to protect the value of original human work. As AI image and video generation tools advance rapidly, proponents argue that unlabeled content threatens democratic institutions, the economy, and the health of the internet. Although some argue that such rules are technically hard to enforce, there is broad agreement that clear disclosure of AI use is a crucial step toward addressing these problems. (Source: Reddit r/ArtificialInteligence, Reddit r/artificial)

Chatbots as ‘Dangerous Friends’ Raise Concerns : An analysis of 48,000 chatbot conversations found that many users experienced dependency, confusion, and emotional distress, raising concerns that chatbots can draw users into digital traps. The findings suggest that chatbot interactions can have unintended psychological effects, prompting reflection on AI’s role in human relationships and its risks to psychological well-being. (Source: Reddit r/ArtificialInteligence)

LLM Consistency and Reliability Issues Lead to User Dissatisfaction : Community users express significant frustration with the lack of consistency and reliability in LLMs like Claude and Codex in daily use. Fluctuations in model performance, unexpected directory deletions, and disregard for conventions make it difficult for users to reliably depend on these tools. This “degradation” phenomenon has sparked discussions about LLM companies’ trade-offs between cost-effectiveness and reliable service, as well as user interest in self-hosting large models. (Source: Reddit r/ClaudeAI)

AI-Assisted Programming: Inspiration and Frustration Coexist : Developers often feel conflicted when programming with AI: amazed by its capabilities, yet frustrated that it cannot yet automate all the manual work. This reflects the fact that AI in programming is still in an assistive phase; it can greatly improve efficiency but is far from fully autonomous, so human developers must keep adapting to and compensating for its limitations. (Source: gdb)

AI Integration in Software Development: Evasion Is No Longer Possible : Responding to comments about “refusing to use Ghostty due to AI assistance,” Mitchell Hashimoto pointed out that if one plans to avoid all software developed with AI assistance, they will face severe challenges. He emphasized that AI is deeply integrated into the general software ecosystem, making evasion no longer realistic, which has sparked discussion about the pervasive nature of AI in software development. (Source: charles_irl)

Effectiveness of LLM Prompt Engineering Techniques Questioned : Community users are questioning whether adding guiding statements like “You are an expert programmer” or “Never do X” to LLM prompts truly makes the model more compliant. This exploration of prompt engineering “magic” reflects users’ ongoing curiosity about LLM behavioral mechanisms and their search for more effective interaction methods. (Source: hyhieu226)

AI’s Impact on Blue-Collar Jobs: Opportunities and Challenges Coexist : The community is discussing AI’s impact on blue-collar jobs, particularly how AI can assist plumbers in diagnosing problems and quickly accessing technical information. While some worry that AI will replace blue-collar jobs, others argue that AI primarily serves as an assistive tool to improve work efficiency rather than a complete replacement, as manual operations still require human intervention. This prompts deeper reflection on labor market transformation and skill upgrading in the age of AI. (Source: Reddit r/ArtificialInteligence)

Personal Reflections on Intelligent Systems: AI Risks and Ethics : A lengthy article examines the inevitability of AI, its potential risks (misuse and existential threats), and the challenges of regulating it. The author argues that AI has moved beyond traditional tools to become a self-accelerating, decision-making system whose dangers far exceed those of firearms. The article discusses the moral and legal dilemmas posed by AI-generated false content and child sexual abuse material, and questions whether legislation alone can regulate them effectively. The author also reflects on philosophical questions about AI and human consciousness and on ethical issues (such as AI “livestock farming” and slavery), and sketches positive prospects for AI in gaming and robotics. (Source: Reddit r/ArtificialInteligence)

Debate Over Whether Date Uses ChatGPT for Replies : A Reddit user posted asking if their date was using ChatGPT to respond to messages, noting the use of “em dashes.” This post sparked a lively community discussion, with most users believing that using em dashes does not necessarily indicate AI generation, but could simply be a personal writing habit or a sign of good education. This reflects people’s sensitivity and curiosity about AI’s involvement in daily communication, as well as informal attempts to identify AI text characteristics. (Source: Reddit r/ChatGPT)

Human Alignment Problem More Severe Than AI Alignment Problem : A community discussion raised the point that “the human alignment problem is more severe than the AI alignment problem.” This statement prompted deep reflection on AI ethics and the inherent challenges within human society, suggesting that while focusing on AI behavior and values, we should also examine humanity’s own behavioral patterns and value systems. (Source: pmddomingos)

LLMs Still Limited in Generating Complex Diagrams : Community users expressed disappointment with LLMs’ ability to generate complex mermaid.js diagrams. Even when given the complete codebase and the paper’s diagrams, LLMs struggle to produce an accurate U-Net architecture diagram, often omitting details or drawing incorrect connections. This suggests that LLMs still have significant limitations in world modeling and spatial reasoning: they rarely get beyond simple flowcharts and fall short of human intuitive understanding. (Source: bookwormengr, tokenbender)

Generational Gap Between European Machine Learning Research and AI ‘Experts’ : Community discussion highlights that a generation of European machine learning “experts” reacted slowly to the LLM wave and now exhibit bitterness and dismissiveness. This reflects the rapid evolution of the ML field, where researchers who miss developments from the past two to three years may no longer be considered experts, underscoring the importance of continuous learning and adapting to new paradigms. (Source: Dorialexander)

AI Accelerates Engineering Cycles, Fostering Compound Startups : As AI reduces software building costs tenfold, startups should expand their vision tenfold. While traditional wisdom suggests focusing on a single product and market, AI-accelerated engineering cycles make building multiple products feasible. This means startups can solve several adjacent problems for the same customer base, forming “compound startups” and gaining a significant disruptive advantage over existing enterprises whose cost structures have not adapted to the new reality. (Source: claud_fuen)

The Future of AI Agents: Action, Not Just Conversation : Community discussions suggest that current AI chat and research are still in a “bubble” phase, while AI Agents truly capable of taking action will be the “revolution” of the future. This perspective emphasizes the importance of AI’s shift from information processing to practical operations, indicating that future AI development will focus more on solving real-world problems and automating tasks. (Source: andriy_mulyar)

💡 Other

Tips for Attending ML Conferences and Presenting Posters : An undergraduate student, attending ICCV for the first time and presenting a poster, sought advice on how to make the most of the conference. The community offered various practical tips, such as actively networking, attending interesting talks, preparing a clear poster explanation, and being open to discussing broader interests beyond current research, to maximize conference benefits. (Source: Reddit r/MachineLearning)

AAAI 2026 Paper Review Disputes and Handling : An author who submitted a paper to AAAI received inaccurate reviews, including a claim that the work was outperformed by cited papers that actually report lower metrics, and a rejection citing missing training details that were already in the supplementary material. The community discussed how well “author review evaluation” and “ethics chair author comments” work in practice, noting that the former does not affect decisions and the latter is not a channel for authors to contact the ethics chair, which highlights challenges in the academic review process. (Source: Reddit r/MachineLearning)

Defining and Evaluating Political Bias in LLMs : OpenAI has released research on defining and evaluating political bias in LLMs. This work aims to deeply understand and quantify political tendencies present in LLMs and explore how to align them to ensure the fairness and neutrality of AI systems, which is crucial for the social impact and widespread application of LLMs. (Source: Reddit r/artificial)