Keywords: GPT-5 Pro, AI drug discovery, AI Agent, LLM, Deep learning, AI security, Multimodal AI, AI hardware acceleration, NICD-with-erasures counterexample, LoRA fine-tuning VRAM optimization, AI video generation Sora 2, OpenWebUI model permission management, AI storage cost reduction 65%

🔥 Spotlight

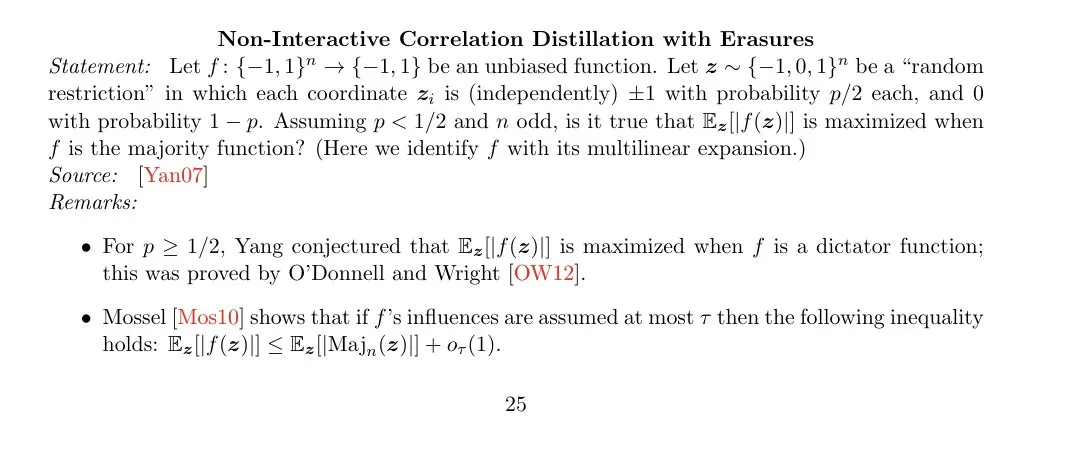

GPT-5 Pro’s Mathematical Breakthrough: GPT-5 Pro found a counterexample to the NICD-with-erasures majority optimality problem (Simons list, page 25), showing that majority is not optimal in that setting. The result suggests GPT-5 Pro has reached a new level in complex mathematical reasoning and can settle open questions rather than only reproduce known results. It points to AI’s potential in original mathematical research and may increase the mathematical community’s acceptance of AI-assisted proofs. (Source: SebastienBubeck, BlackHC, hyhieu226, JimDMiller)

AI Accelerates New Antibiotic Development: A novel antibiotic targeting Inflammatory Bowel Disease (IBD) had its mechanism of action successfully predicted by AI and confirmed by scientists, even before human trials. This breakthrough demonstrates AI’s immense potential in accelerating drug discovery and healthcare, promising to shorten new drug development cycles and provide faster treatment options for patients. Human trials are expected to commence within three years. (Source: Reddit r/ArtificialInteligence)

🎯 Trends

AI+XR Real-time Video Conversion: Decart XR uses WebRTC to stream live footage from Meta Quest cameras to AI models, enabling real-time video transformation. This technology showcases AI’s innovative applications in augmented reality, promising immersive, dynamic, and interactive new visual experiences for users, with significant potential in gaming, virtual collaboration, and creative content generation. (Source: gfodor)

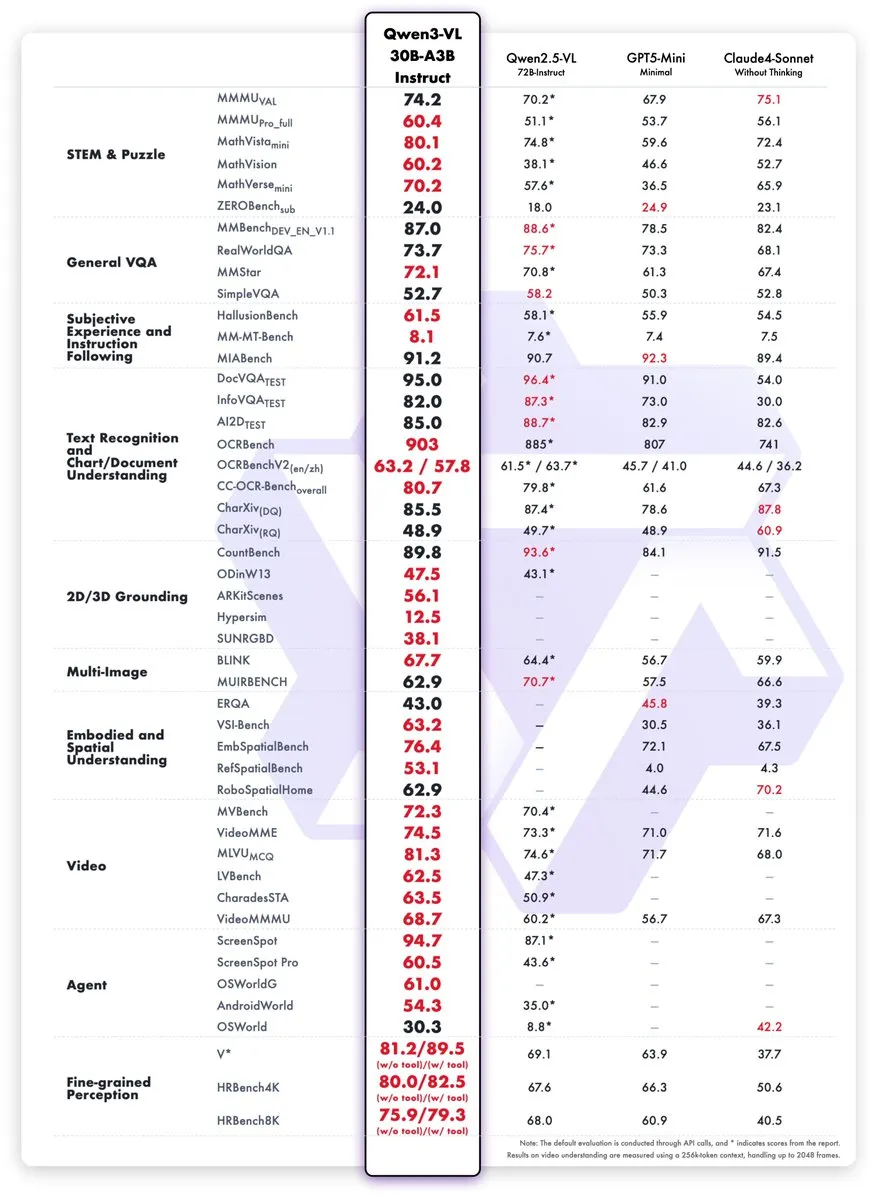

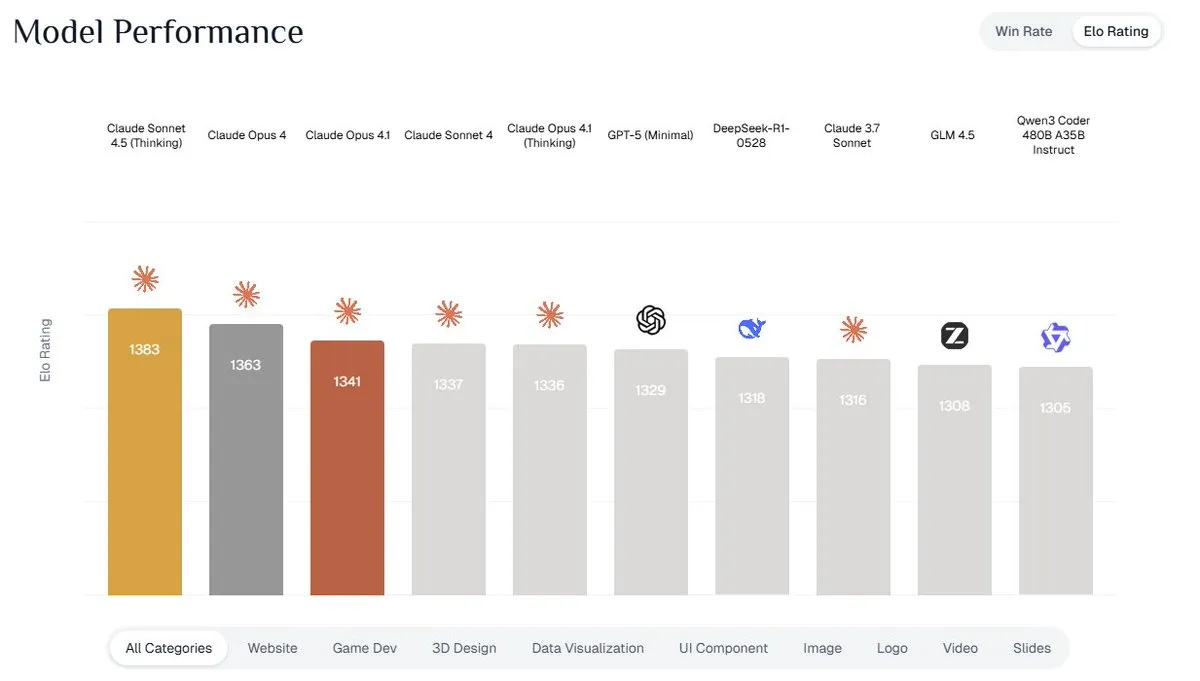

Multiple New LLMs Released: DeepSeek-V3.2-Exp enhances long-context reasoning and coding efficiency with its sparse attention mechanism; GLM 4.6 has been significantly upgraded, improving practical coding, reasoning, and writing capabilities; the Qwen3 VL 30B A3B model excels in visual reasoning and perception. The launch of these new models signals continuous advancements in LLMs across multimodal capabilities, long-context processing, and coding efficiency. (Source: yupp_ai, huggingface, Reddit r/LocalLLaMA)

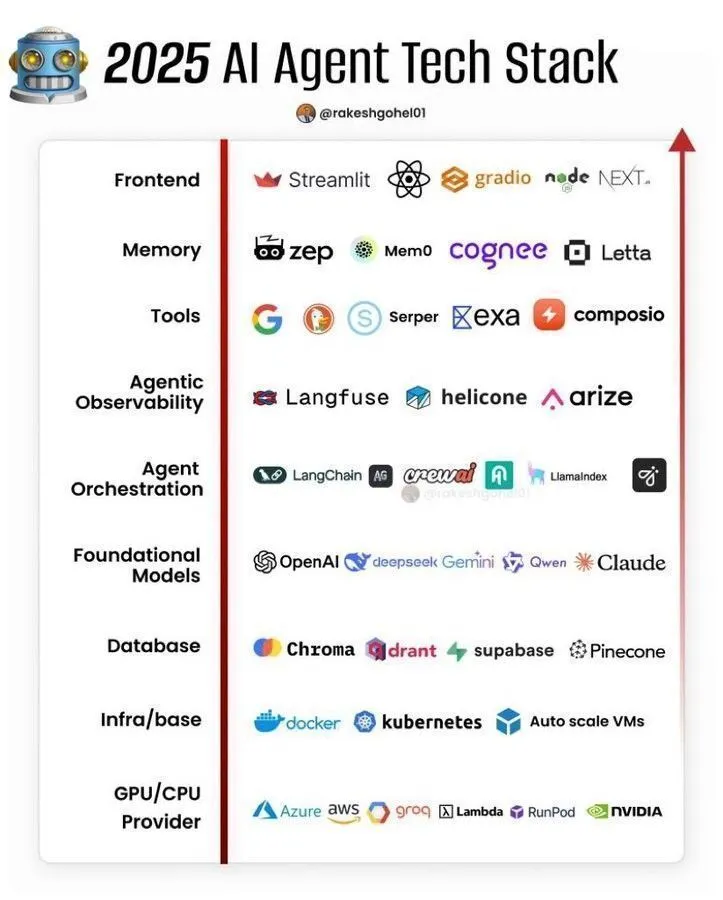

AI Agents Tech Stack and Architecture: The AI Agent tech stack and its practical architecture are rapidly evolving in 2025, covering everything from foundational building blocks to advanced deployment patterns. Discussions focus on designing efficient, scalable AI Agent systems to tackle complex tasks, indicating the increasing maturity of AI Agents in real-world applications. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

AI’s Growing Adoption in Education: An entrepreneur with no programming background built an AI tutor for Jordan’s Ministry of Education, saving $10 million. This highlights AI’s potential to reduce educational costs and widen access, and shows that non-specialists can use AI to solve real-world problems. (Source: amasad)

AI Storage Cost Optimization Solutions: CoreWeave proposes that optimizing AI data storage strategies can reduce AI storage costs by up to 65% without compromising innovation speed. Through techniques like memory snapshots, granular billing, and multi-cloud scheduling, platforms such as Modal can significantly lower GPU costs when handling burst inference workloads, compared to traditional cloud services like Azure. (Source: TheTuringPost, TheTuringPost, Reddit r/deeplearning)
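
To make the granular-billing point concrete, the sketch below compares per-second billing with billing rounded up to whole hours for a bursty inference workload. The $4/hour GPU price, the burst lengths, and the hourly rounding are illustrative assumptions, not figures from CoreWeave, Modal, or Azure.

```python
import math

def billed_cost(burst_seconds, hourly_rate, granularity_s):
    """Total cost when each burst is rounded up to a multiple of granularity_s."""
    rate_per_second = hourly_rate / 3600.0
    total = 0.0
    for duration in burst_seconds:
        billed = math.ceil(duration / granularity_s) * granularity_s
        total += billed * rate_per_second
    return total

# Hypothetical workload: 20 bursts of 90 seconds on a GPU priced at $4/hour.
bursts = [90] * 20
per_second = billed_cost(bursts, hourly_rate=4.0, granularity_s=1)
per_hour = billed_cost(bursts, hourly_rate=4.0, granularity_s=3600)
print(f"per-second billing: ${per_second:.2f}")
print(f"hourly billing:     ${per_hour:.2f}")
print(f"savings: {1 - per_second / per_hour:.1%}")
```

The savings printed here are driven entirely by the assumed burst pattern; they are not the 65% storage figure cited above, which comes from a different set of techniques.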

AI+VR for Mental Health: The combination of Virtual Reality (VR) and Artificial Intelligence (AI) is expected to improve mental health treatment. Through immersive experiences and personalized interventions, AI+VR technology can provide the next generation with a more empathetic and connected growth environment, bringing innovative solutions to the mental health sector. (Source: Ronald_vanLoon, Ronald_vanLoon)

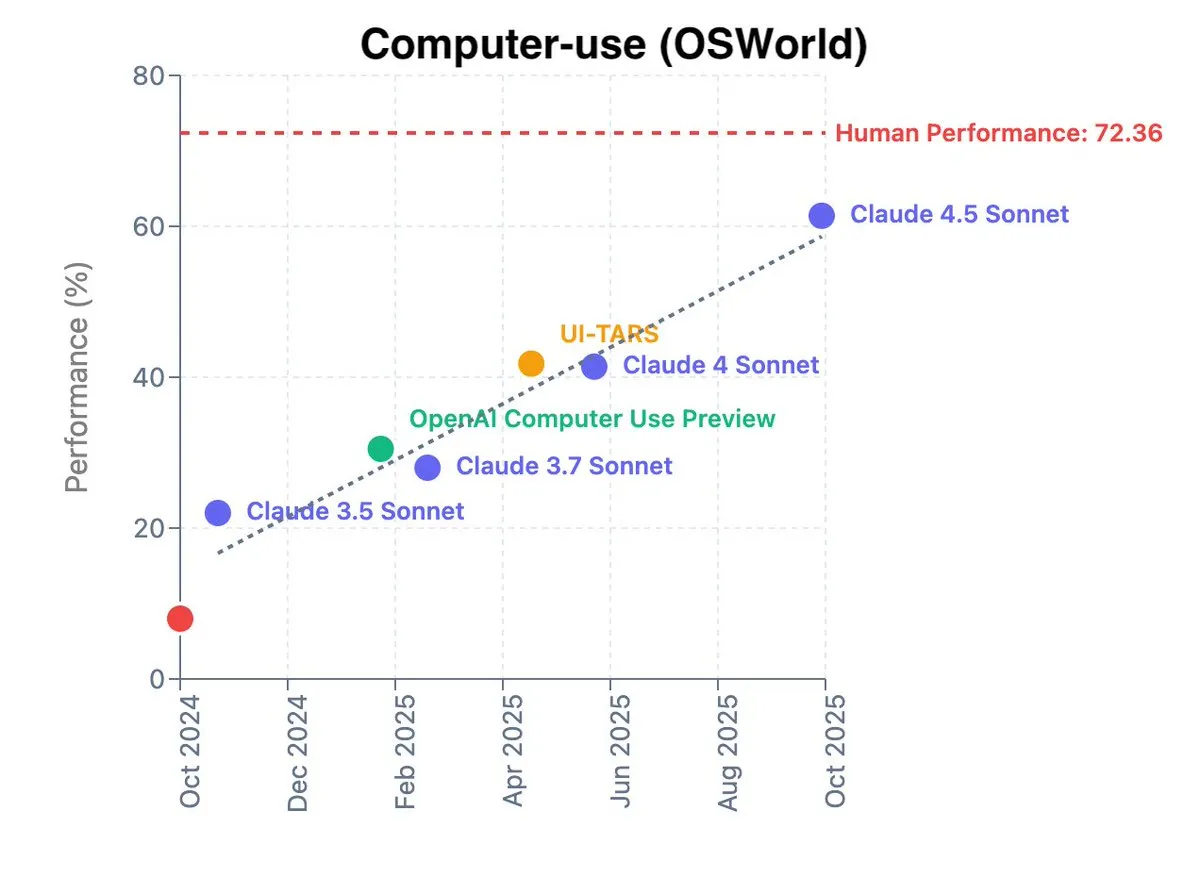

AI Accelerates Scientific Discovery: The Anthropic team is working to improve AI’s ability to use computers, with the aim of accelerating scientific discovery. End-to-end foundation models have raised their OSWorld score from 8% a year ago to 61%, approaching the human baseline of 72%. This indicates that AI will play an increasingly important role in scientific research. (Source: oh_that_hat, dilipkay)

OpenAI and Jony Ive Collaborate on Device: OpenAI and Jony Ive are collaborating to develop a handheld, screenless AI assistant, planned for a 2026 launch. However, it currently faces technical challenges related to core software, privacy, and computational power, which may lead to delays. The device will perceive its environment through microphones, cameras, and speakers, and will remain always-on. (Source: swyx, Reddit r/artificial)

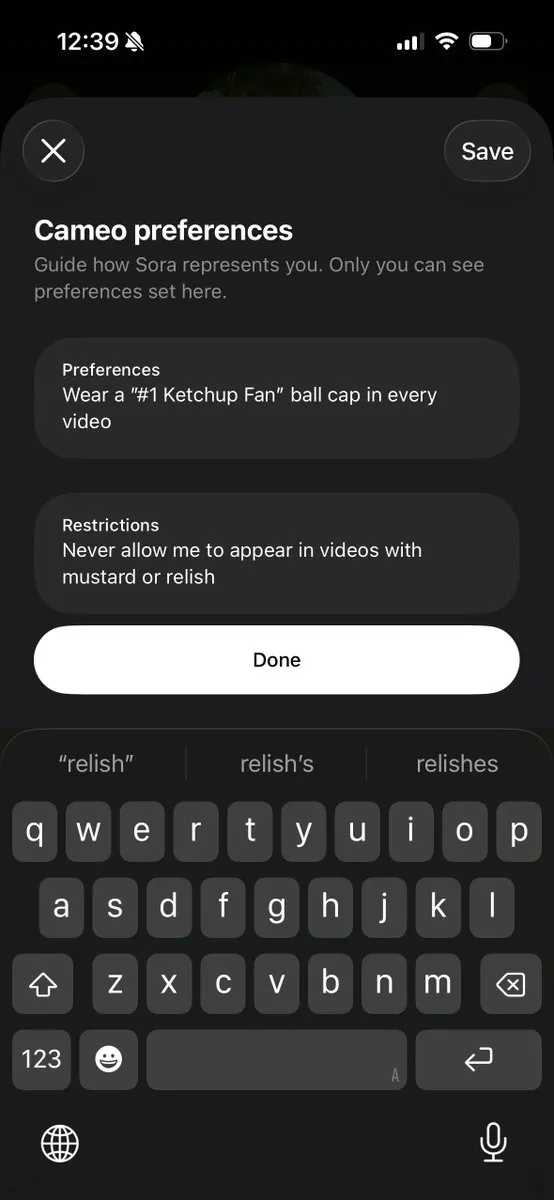

Sora Updates and Safety Improvements: OpenAI’s Sora video generation model has received an update, introducing a user-defined “cameo restriction” feature that allows creators to control how their likeness is used, for example, prohibiting its use in political commentary or with specific words. Additionally, the update includes more clearly visible watermarks and enhanced model security to reduce false positives and patch vulnerabilities. (Source: billpeeb, billpeeb, sama)

Challenges of AI Application in the Military: The U.S. Air Force is testing AI technology to counter China’s advancements in AI drones. A retired U.S. lieutenant general noted that in a conflict with China, the U.S. military would need to achieve a kill ratio of 10:1 or even 20:1 to sustain the fight, and current war game results are not optimistic. This highlights AI’s critical role in military strategy and the urgency of the competition. (Source: Reddit r/ArtificialInteligence)

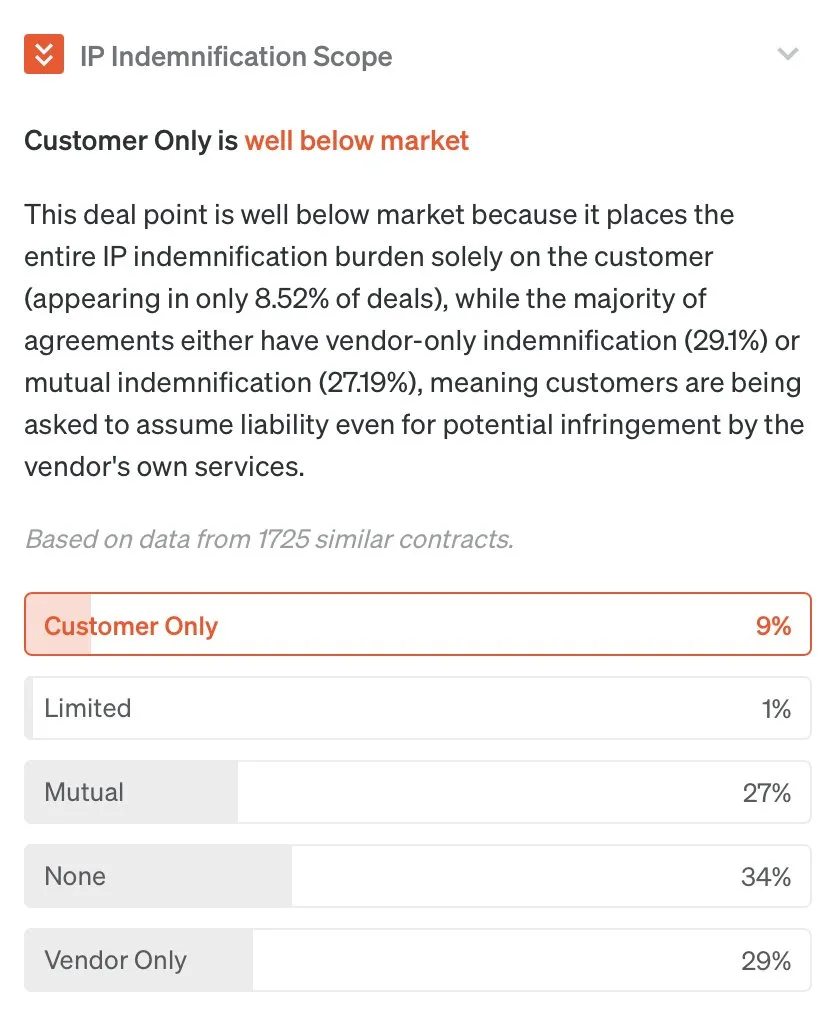

AI Transforms Legal Contract Negotiation: The era of data-driven contract negotiation has arrived, with AI making market data transparent to all, breaking the traditional “big law firm” monopoly on information. This technology is expected to improve the efficiency and fairness of contract negotiations, empowering more businesses and individuals. (Source: scottastevenson)

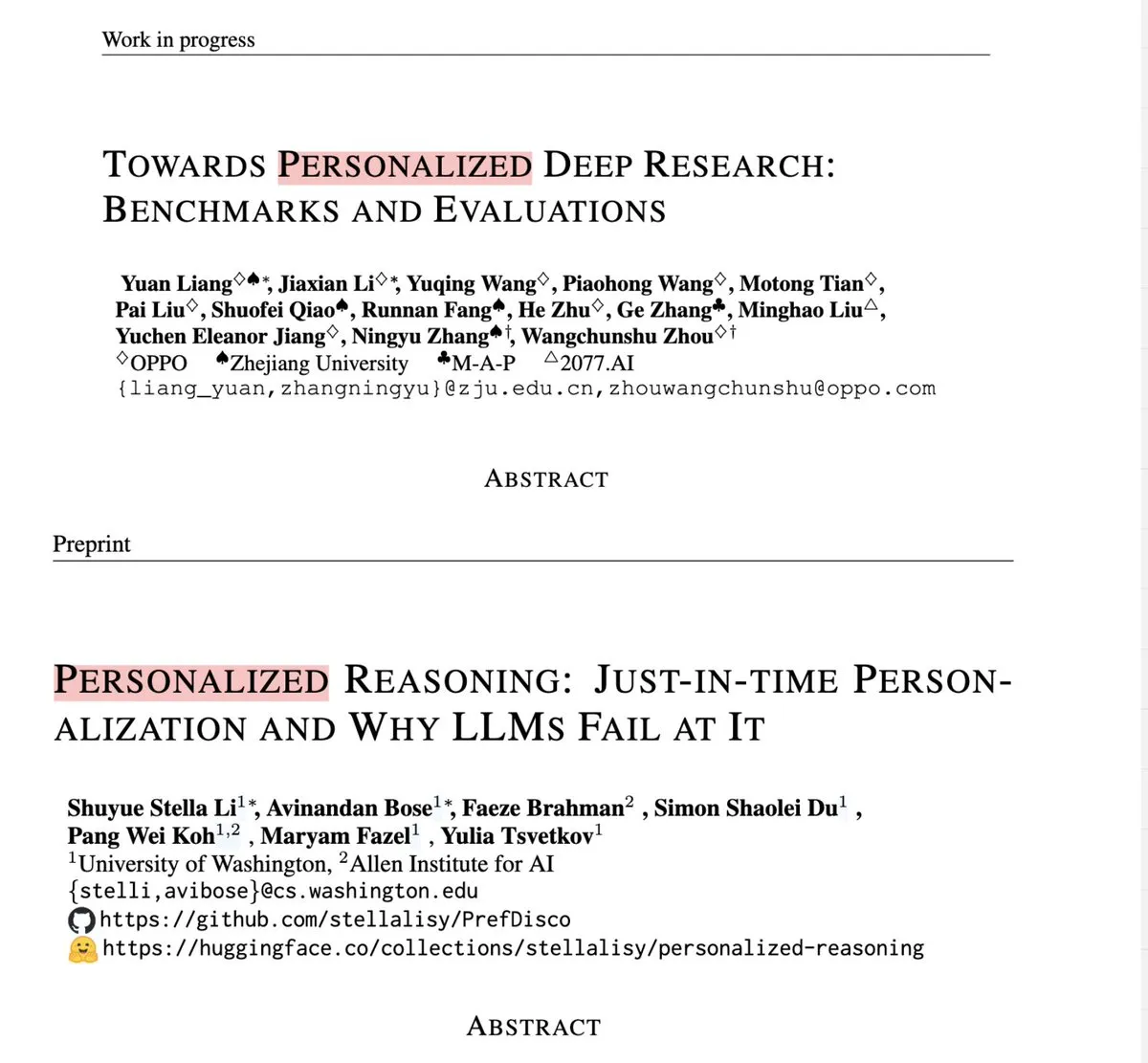

Enhanced LLM Personalization Capabilities: The development of LLMs has moved beyond mere benchmarking, with how models understand users and provide personalized services becoming key. Research efforts like PREFDISCO and PDR Bench focus on personalization in both immediate inference and long-term deep research, aiming for models to think and act around user goals, preferences, and constraints, rather than just tone adjustment. (Source: dotey)

State of the Open Model Ecosystem: A discussion on the current state of open models, covering the rise of China’s AI ecosystem, the impact of DeepSeek, the decline of Llama models, and the future direction of the U.S. market and local models. This reflects the dynamic landscape of open-source vs. closed-source competition in AI models. (Source: charles_irl)

ByteDance Long Video Generation Technology: ByteDance has introduced the “Self-Forcing++” method, capable of generating high-quality videos up to 4 minutes and 15 seconds long. This is achieved by extending diffusion models without requiring long video training data or retraining, maintaining video fidelity and consistency. (Source: NerdyRodent)

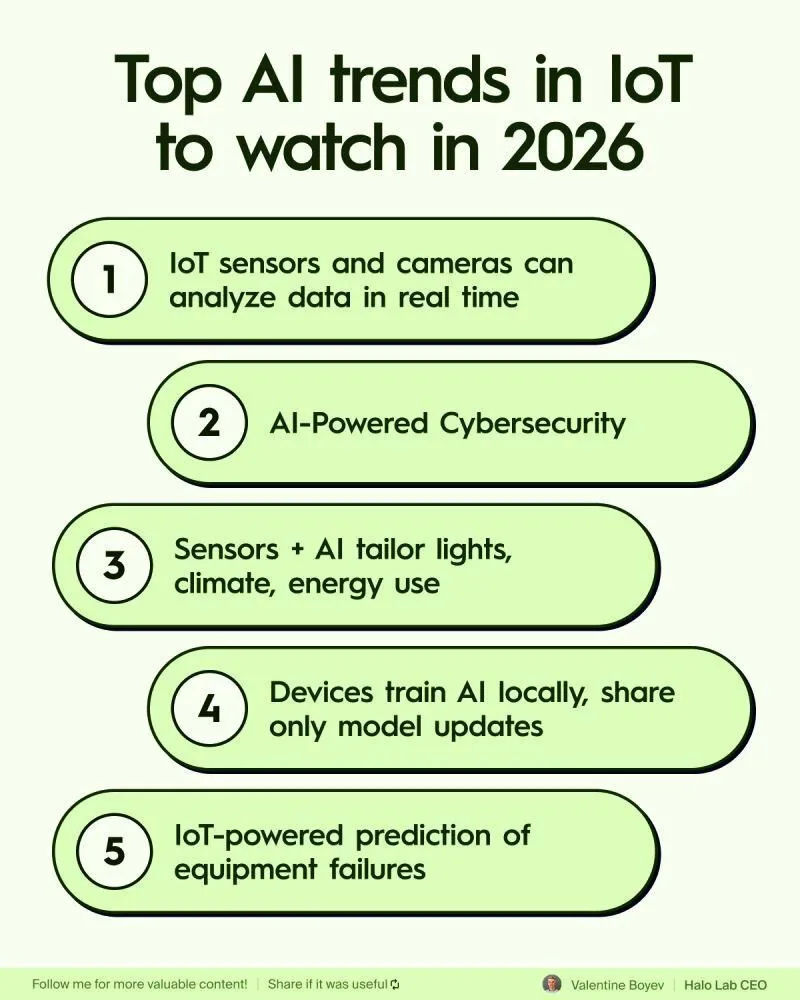

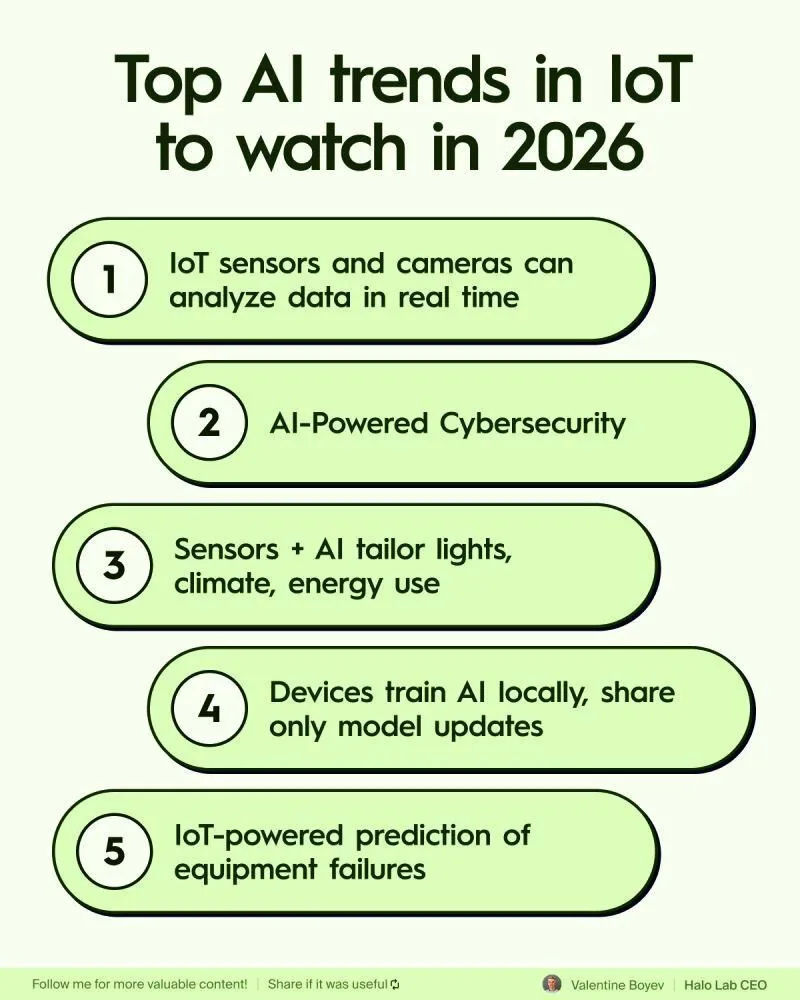

AI Trends in IoT: Ten key trends for AI in the Internet of Things (IoT) in 2026 are worth noting, signaling that the deep integration of AI and IoT will lead to smarter, more efficient devices and applications. (Source: Ronald_vanLoon)

AI-Driven Workplace Culture: AI is becoming a significant force driving changes in workplace culture. Its applications not only boost efficiency but also reshape cultural aspects such as team collaboration, decision-making, and employee development. (Source: Ronald_vanLoon)

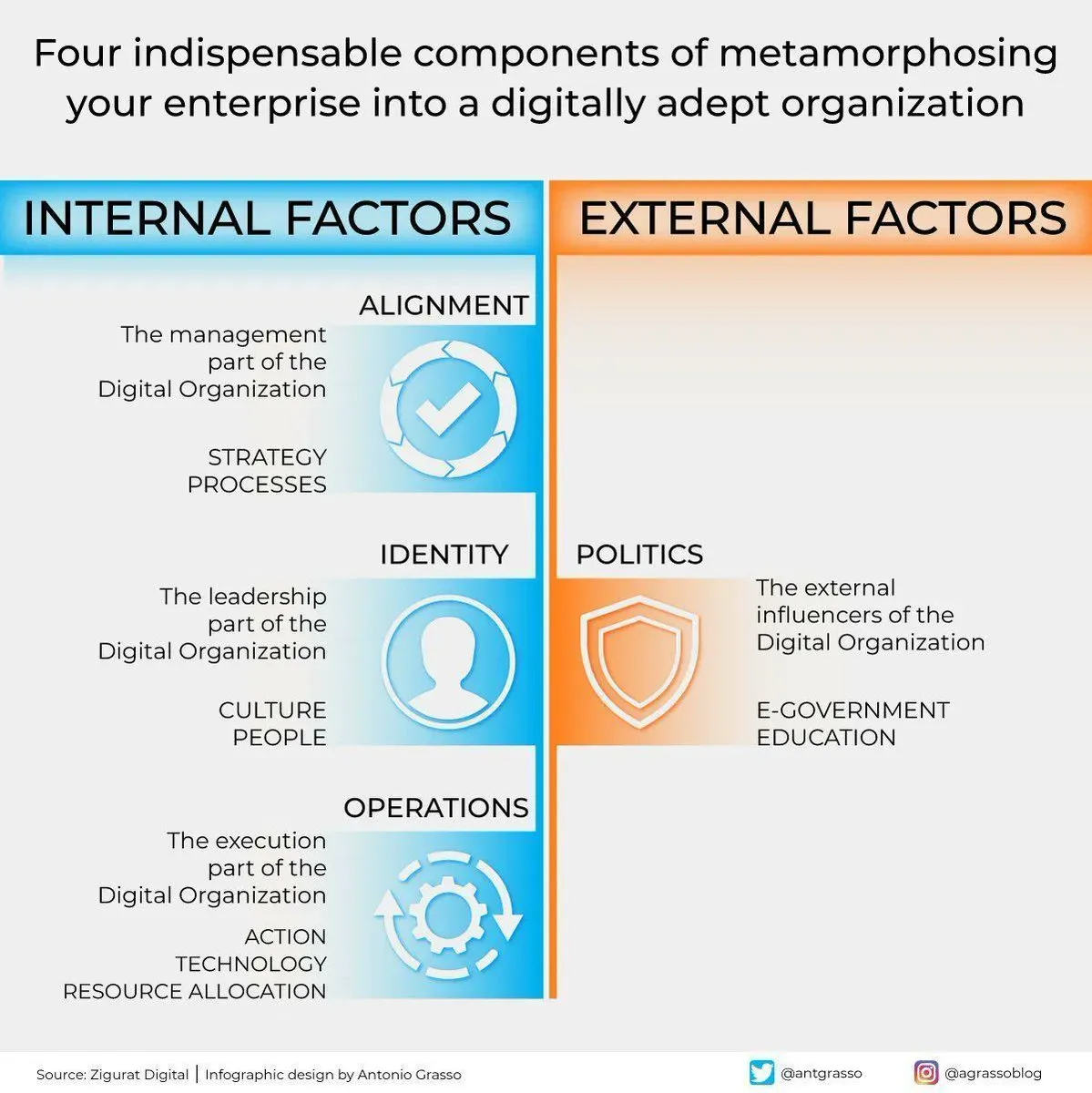

Four Key Elements of Digital Transformation: Discusses four indispensable components for enterprises transitioning to digital organizations, emphasizing the critical roles of innovation, technology, and AI. (Source: Ronald_vanLoon)

AI-Powered Prosthetic Technology: A 17-year-old developed a mind-controlled prosthetic arm using AI technology, demonstrating AI’s immense potential in assistive technology and improving human quality of life. (Source: Ronald_vanLoon)

Advancements in Robotics: The wheeled jumping robot Cecilia and a lightweight bionic tactile hand demonstrate modularity and advanced functionalities in robotic hardware. Additionally, Yondu AI has released a wheeled humanoid robot solution for warehouse picking, along with warehousing robots capable of traversing pallets, significantly boosting logistics efficiency. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

Humanoid Robots Surpassing Human Capabilities: Discussion on the future possibility of humanoid robots surpassing human capabilities, for instance, by performing difficult tasks that humans cannot or struggle to execute, such as climbing high shelves to retrieve items, without needing to consider safety risks. This would greatly expand automation application scenarios. (Source: EERandomness)

AI Physicists and Quantum Mechanics Foundation Models: One perspective suggests that foundation models for quantum mechanics will become the next frontier for LLMs, enabling AI physicists to invent new materials. This foreshadows disruptive breakthroughs for AI in fundamental scientific research, particularly in the quantum-scale integration of biology, chemistry, and materials science. (Source: NandoDF)

Sora 2 Tackles ARC-AGI Tasks: When attempting ARC-AGI (Abstraction and Reasoning Corpus for Artificial General Intelligence) tasks, Sora 2 was able to perceive the correct transformation rule, though its execution still had flaws. This indicates progress for video generation models in understanding and applying abstract reasoning, but reliable execution, let alone general intelligence, remains some way off. (Source: NandoDF)

AI-Generated Game Content: It’s anticipated that within our lifetime, we will be able to play an infinite number of N64 games that never existed before. This foreshadows a revolution in game content creation by generative AI, enabling large-scale, personalized gaming experiences. (Source: scottastevenson)

OpenAI DevDay Approaching: OpenAI announced that DevDay 2025 is approaching, with Sam Altman delivering a keynote speech and previewing new tools and features to help developers build AI. This indicates OpenAI’s commitment to empowering the developer ecosystem and fostering AI application innovation. (Source: openai, sama)

AI Agent Builder: OpenAI plans to release Agent Builder at DevDay, letting users build their own Agent workflows that connect MCP servers, ChatKit widgets, and other tools. This will greatly simplify the development and deployment of AI Agents, promoting the widespread adoption of Agentic AI. (Source: dariusemrani)

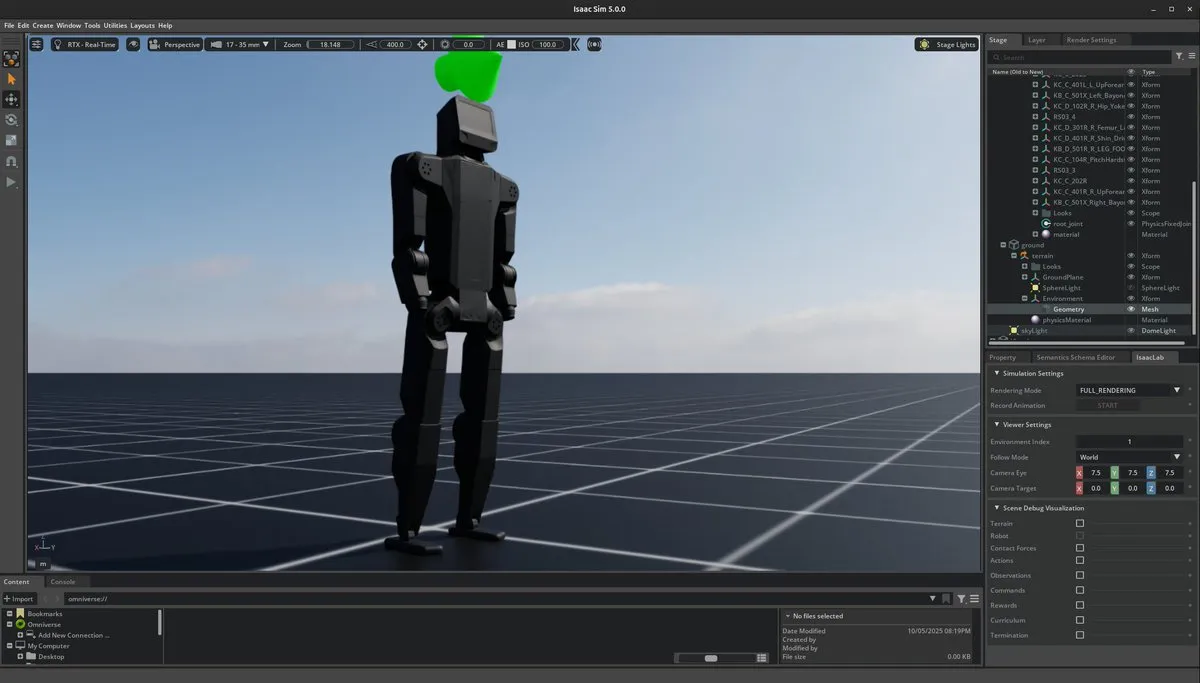

K-bot Policy Training in Omniverse: The K-Scale K-bot is undergoing policy training on NVIDIA’s Omniverse platform. As a simulation and virtual collaboration platform, Omniverse provides a realistic environment for training robot AI, accelerating robot learning and development. (Source: Sentdex)

Sonnet 4.5 Adopts uv: Claude Sonnet 4.5 has been observed consistently invoking uv, the Python package and project manager, instead of python/python3. This suggests the model has picked up current practices for Python environment and dependency management. (Source: Dorialexander)

California AI Safety Law: California’s newly enacted AI safety bill demonstrates that regulation and innovation are not irreconcilable and can jointly promote the healthy development of AI technology. The bill aims to balance the rapid advancement of AI with its potential risks, setting new standards for the industry. (Source: Reddit r/artificial)

AI Religious Applications: The “Text With Jesus” application allows users to exchange messages with AI-generated biblical figures (including Mary, Joseph, and Moses), sparking controversy regarding AI’s application in religion and faith. (Source: aiamblichus)

AI Agent Optimized CRM/ERP: Discussion on Agent-optimized CRM or ERP systems, highlighting the potential of autonomous loops as a new paradigm for enterprise software, where agents perceive business activities via sensors, analyze observations, and decide on optimal actions. (Source: TheEthanDing)

Joint Audio-Video Generation Ovi Model: The Ovi model (Veo-3 style) generates synchronized 5-second, 24FPS videos from text or image-text inputs by integrating a dual-backbone network. This technology emphasizes the importance of cross-modal fusion in multimedia synthesis, moving beyond traditional independent audio and video processing workflows. (Source: _akhaliq)

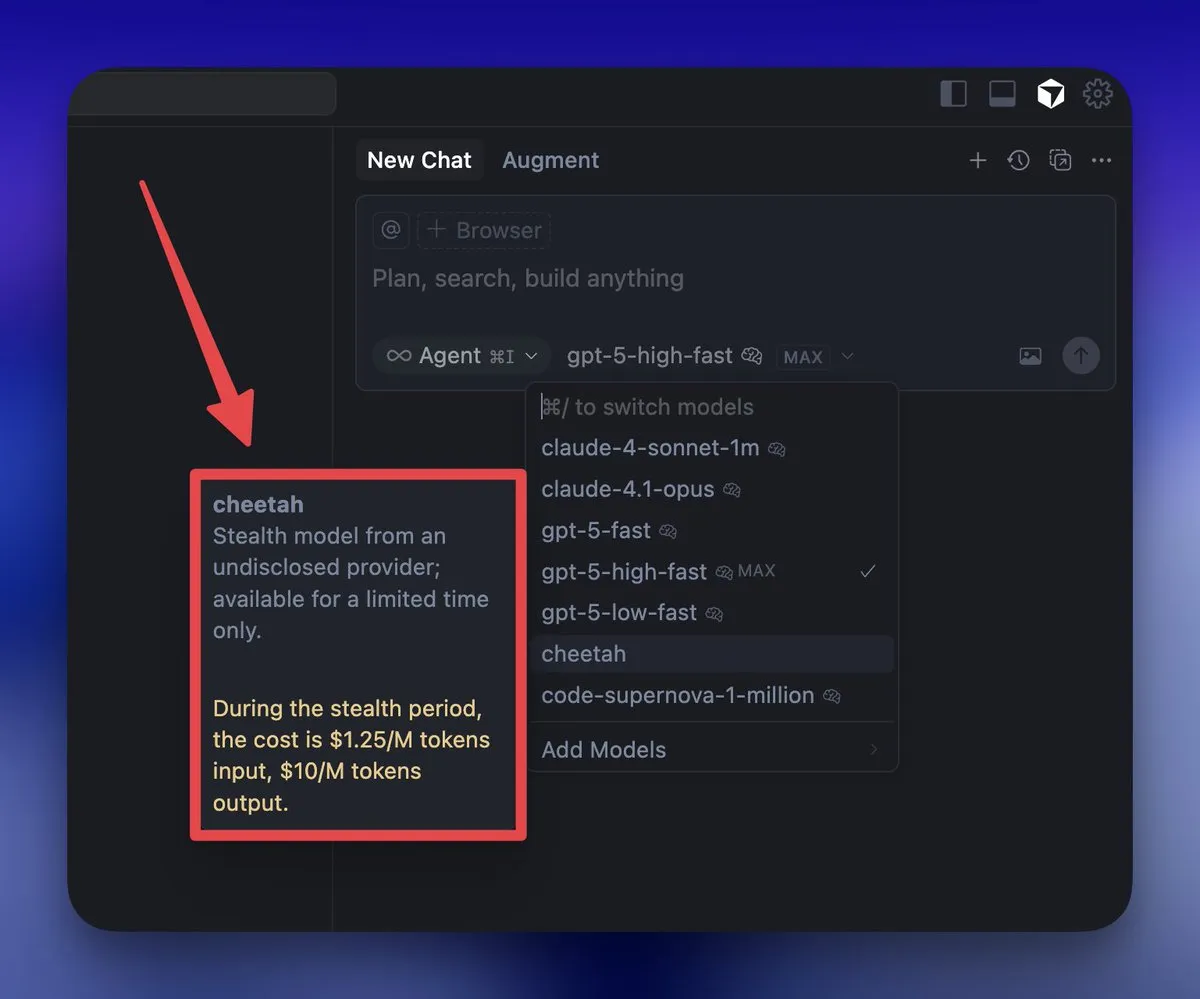

Cursor “Cheetah” Model Prediction: It is predicted that Cursor’s “Cheetah” stealth model is its first in-house code generation model, designed to provide an ultra-fast coding experience. It aims to coexist with intelligent models from large labs, carving out a new niche in the AI coding market. (Source: mathemagic1an)

Google Gemini Integrates YouTube: Gemini on Android can now answer questions about YouTube videos, but the web version of YouTube lacks this feature. This suggests that Google may be planning deeper AI integration to enhance user interaction experiences with video content. (Source: iScienceLuvr)

🧰 Tools

Parallel Coding Agents: Developers are starting to run multiple coding Agents simultaneously to boost productivity and optimize the coding process. This parallel workflow helps accelerate software development and changes traditional programming paradigms. (Source: andersonbcdefg, kylebrussell)

LLM Music Creation Platform: Google AI Studio offers an LLM-based music creation platform where users can create and remix generative music toys without programming, with the AI acting as the toolmaker. (Source: osanseviero)

Thinker/Modal Deep Learning Deployment: Tools like Thinker and Modal enable developers to write deep learning code on their laptops and instantly run and deploy LLM/VLM on GPUs, greatly simplifying infrastructure management and boosting development efficiency. (Source: charles_irl, akshat_b, Reddit r/deeplearning)

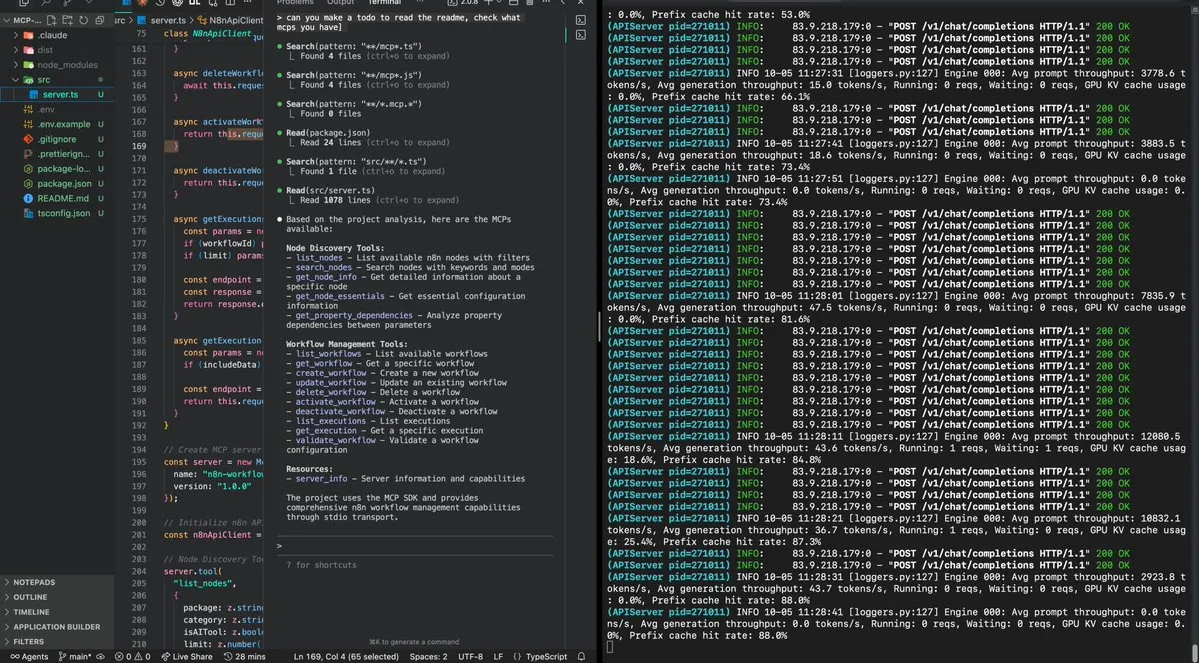

GLM-4.5-Air Local Automation: GLM-4.5-Air, combined with vLLM, runs locally to build fully functional control panels, enabling n8n automation. This demonstrates the powerful capability of LLMs to execute complex agent tasks in local environments. (Source: QuixiAI)

OpenWebUI Model Permission Management: OpenWebUI offers administrator features that allow specific task models to be set as private, preventing standard users from chatting with them. This enhances model management and security in multi-user environments. (Source: Reddit r/OpenWebUI)

OpenWebUI Configuration Persistence on Cloudrun: Discussion on how to solve the issue of non-persistent configurations when deploying OpenWebUI on GCP Cloudrun, ensuring user settings are retained with each Docker image pull. (Source: Reddit r/OpenWebUI)

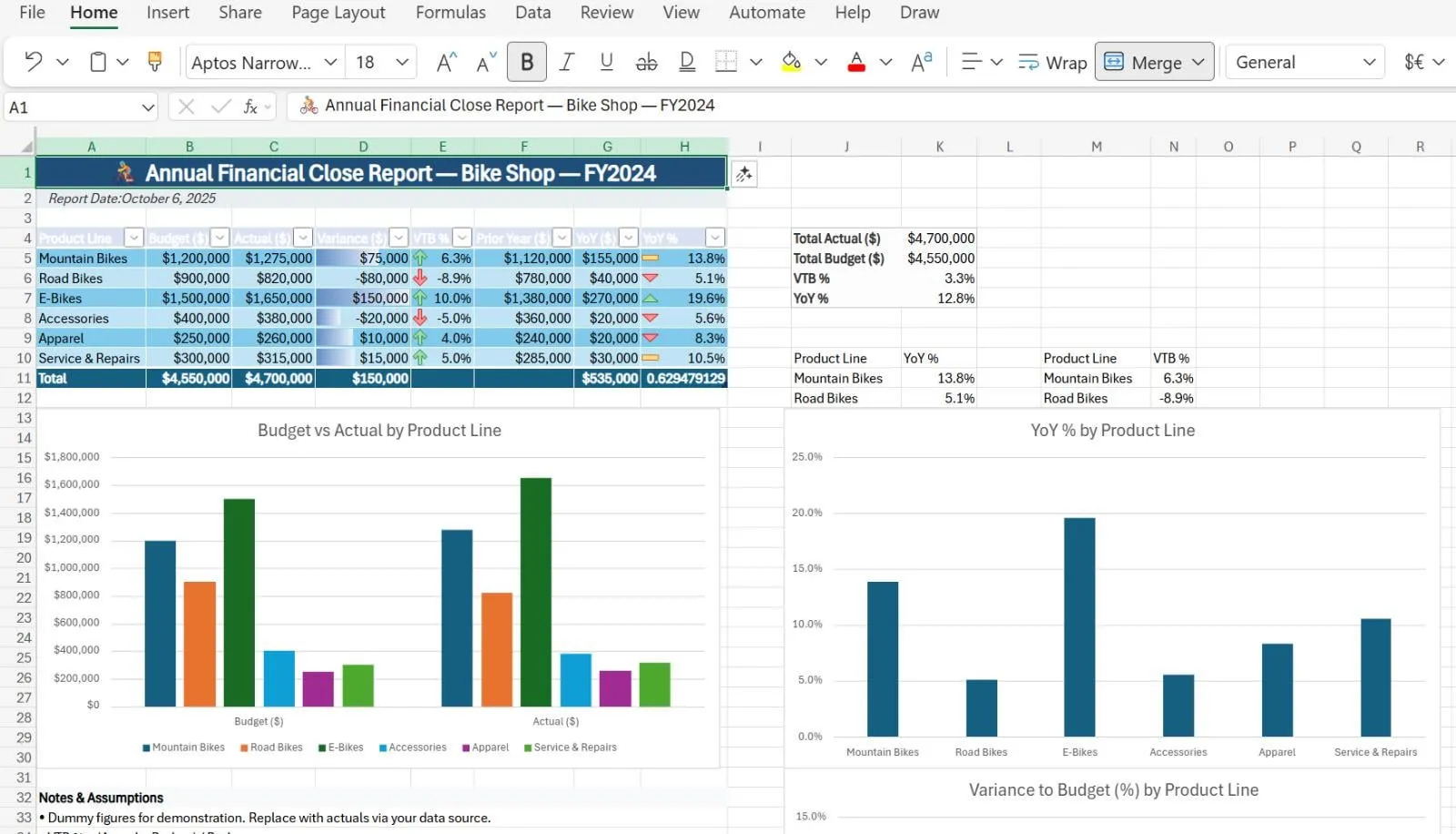

Agent Mode in Excel: Microsoft has quietly rolled out an Agent Mode feature in Excel, allowing users to carry out complex spreadsheet tasks through prompts. This demonstrates AI’s potential for intelligent automation in everyday office software. (Source: Reddit r/ArtificialInteligence)

Grok Imagine Image Generation: Grok has launched Grok Imagine, an AI image generation tool available for download from the App Store. (Source: chaitualuru)

Suno Studio: Suno Studio is a music creation tool that gives users convenient music generation capabilities. (Source: SunoMusic)

📚 Learning

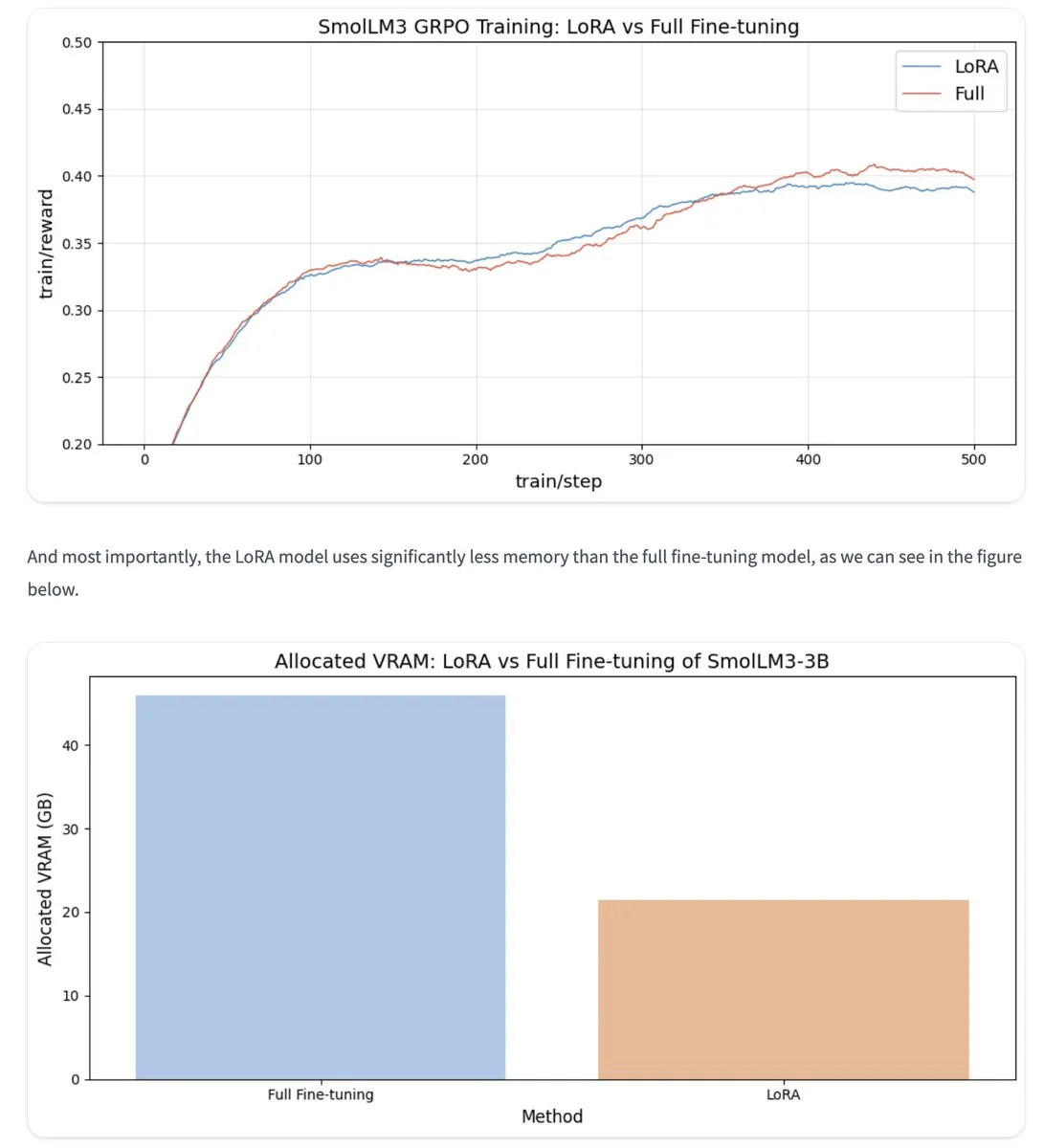

LoRA Fine-tuning and VRAM Optimization: LoRA (Low-Rank Adaptation) technology, at rank 1, can achieve similar performance to full fine-tuning in many reinforcement learning tasks while saving 43% of VRAM usage, making it possible to train larger models with limited resources. (Source: ClementDelangue, huggingface, huggingface, _lewtun, Tim_Dettmers, aaron_defazio)
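
The parameter savings behind rank-1 LoRA follow from simple arithmetic: for a frozen weight W of shape d×k, LoRA trains B (d×r) and A (r×k) and uses W + (α/r)·BA at inference. The pure-Python sketch below counts trainable parameters and applies a rank-1 update to a toy matrix. The 4096×4096 shape and α value are illustrative assumptions, and trainable-parameter savings are not the same as the 43% end-to-end VRAM figure, which also depends on frozen weights, activations, and optimizer state.

```python
def lora_trainable_params(d, k, r):
    """Trainable parameters of a LoRA adapter: B is d x r, A is r x k."""
    return r * (d + k)

def full_params(d, k):
    """Trainable parameters when the full d x k weight is fine-tuned."""
    return d * k

def matmul(X, Y):
    """Plain-list matrix product X @ Y."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def lora_merged_weight(W, B, A, alpha):
    """Return W + (alpha / r) * B @ A, the effective weight used at inference."""
    r = len(A)
    delta = matmul(B, A)
    scale = alpha / r
    return [[W[i][j] + scale * delta[i][j] for j in range(len(W[0]))]
            for i in range(len(W))]

# A single 4096 x 4096 projection (hypothetical size).
d = k = 4096
print(full_params(d, k))               # 16777216
print(lora_trainable_params(d, k, 1))  # 8192

# Toy rank-1 update on a 2 x 2 frozen weight.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]   # d x r with r = 1
A = [[0.5, 0.5]]     # r x k
print(lora_merged_weight(W, B, A, alpha=1.0))
```

Only B and A receive gradients, which is why optimizer state shrinks along with the trainable parameter count.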

AI’s Impact on Learning Cognition: Cognitive psychologists explain that learning requires strenuous cognitive effort (System 2 thinking). Over-reliance on AI to complete tasks can lead to “metacognitive laziness,” improving performance in the short term but harming deep knowledge acquisition and skill mastery in the long run. AI should serve as an assistive tool, not a replacement for thinking. (Source: aihub.org)

Deep Learning Milestones Revisited: Jürgen Schmidhuber reviews key deep learning milestones, including the end-to-end deep learning breakthrough on NVIDIA GPUs in 2010, the CNN revolution sparked by DanNet in 2011, and early applications of Transformer technology principles, emphasizing the immense impact of reduced computational costs on AI development. (Source: SchmidhuberAI)

PyTorch CUDA Memory Optimization: Tips are shared on tuning PyTorch’s CUDA caching allocator through the PYTORCH_CUDA_ALLOC_CONF setting, which helps deep learning developers improve GPU memory utilization and fit larger models. (Source: TheZachMueller)
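
A minimal sketch of applying allocator settings: PyTorch reads PYTORCH_CUDA_ALLOC_CONF as comma-separated key:value pairs, so it should be set before PyTorch initializes CUDA (setting it before importing torch is the safe choice). The option names below are real allocator options, but the chosen values are illustrative assumptions, not the specific tips from the original post.

```python
import os

def build_alloc_conf(options):
    """Join allocator options into the 'key:value,key:value' format PyTorch reads."""
    return ",".join(f"{key}:{value}" for key, value in options.items())

# Illustrative settings; tune them for your own workload.
ALLOC_OPTIONS = {
    "expandable_segments": "True",          # grow segments to reduce fragmentation
    "garbage_collection_threshold": "0.6",  # reclaim cached blocks above 60% usage
}

# Set before CUDA is initialized, i.e. before `import torch` in practice.
os.environ["PYTORCH_CUDA_ALLOC_CONF"] = build_alloc_conf(ALLOC_OPTIONS)
print(os.environ["PYTORCH_CUDA_ALLOC_CONF"])
# import torch  # import only after the variable is set
```

Exporting the same string in the shell before launching the training script has the same effect and avoids import-order surprises.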

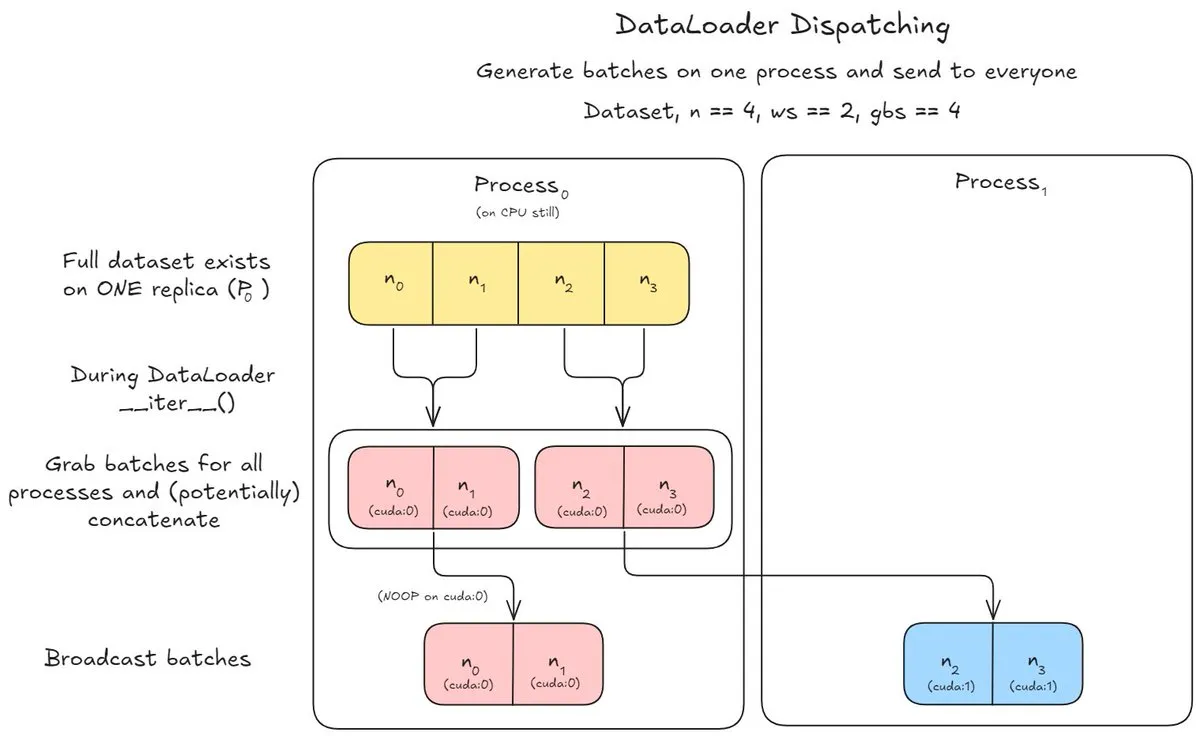

DataLoader Scheduling Optimization: Introduces a DataLoader scheduling method that, in memory-constrained or CPU-slow scenarios, keeps the dataset on one process and sends batches to other worker processes to optimize GPU training efficiency. (Source: TheZachMueller)
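
The dispatching idea can be sketched without any framework: one process owns the dataset and builds batches, then hands each batch to a worker in round-robin order, so the other ranks never load the dataset themselves. The function names are hypothetical, and a real setup would ship batches over an interprocess or collective-communication channel rather than appending to Python lists.

```python
from typing import Iterator, List, Sequence

def make_batches(dataset: Sequence[int], batch_size: int) -> Iterator[List[int]]:
    """Yield consecutive batches; only the owning process touches `dataset`."""
    for start in range(0, len(dataset), batch_size):
        yield list(dataset[start:start + batch_size])

def dispatch_round_robin(dataset: Sequence[int], batch_size: int,
                         num_workers: int) -> List[List[List[int]]]:
    """Assign batch i to worker i % num_workers, simulating per-worker queues."""
    queues: List[List[List[int]]] = [[] for _ in range(num_workers)]
    for i, batch in enumerate(make_batches(dataset, batch_size)):
        queues[i % num_workers].append(batch)
    return queues

queues = dispatch_round_robin(list(range(10)), batch_size=3, num_workers=2)
for rank, batches in enumerate(queues):
    print(rank, batches)
```

Note the uneven tail (worker 1 gets a one-item final batch); in distributed training the ranks usually need the same number of steps, so real implementations pad or drop the last batch.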

Trending AI Papers: This week’s trending AI papers cover cutting-edge research such as Agent S3, Rethinking JEPA, Tool-Use Mixture, DeepSeek-V3.2-Exp, Accelerating Diffusion LLMs, The Era of Real-World Human Interaction, and Training Agents Inside of Scalable World Models. (Source: omarsar0)

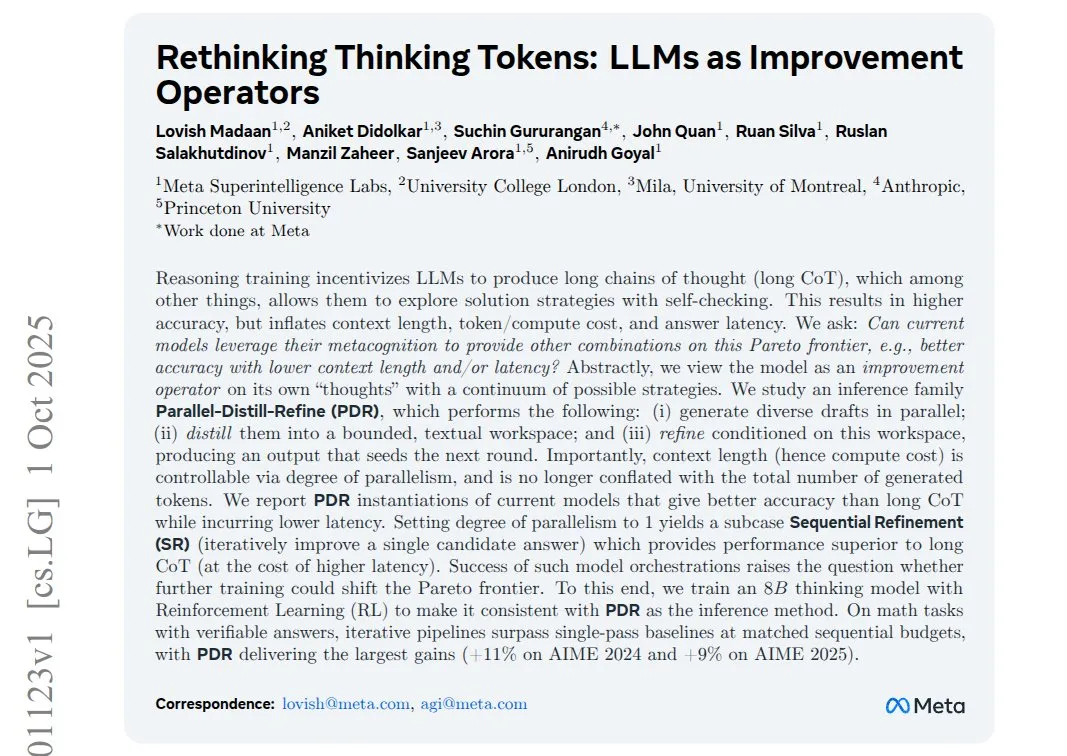

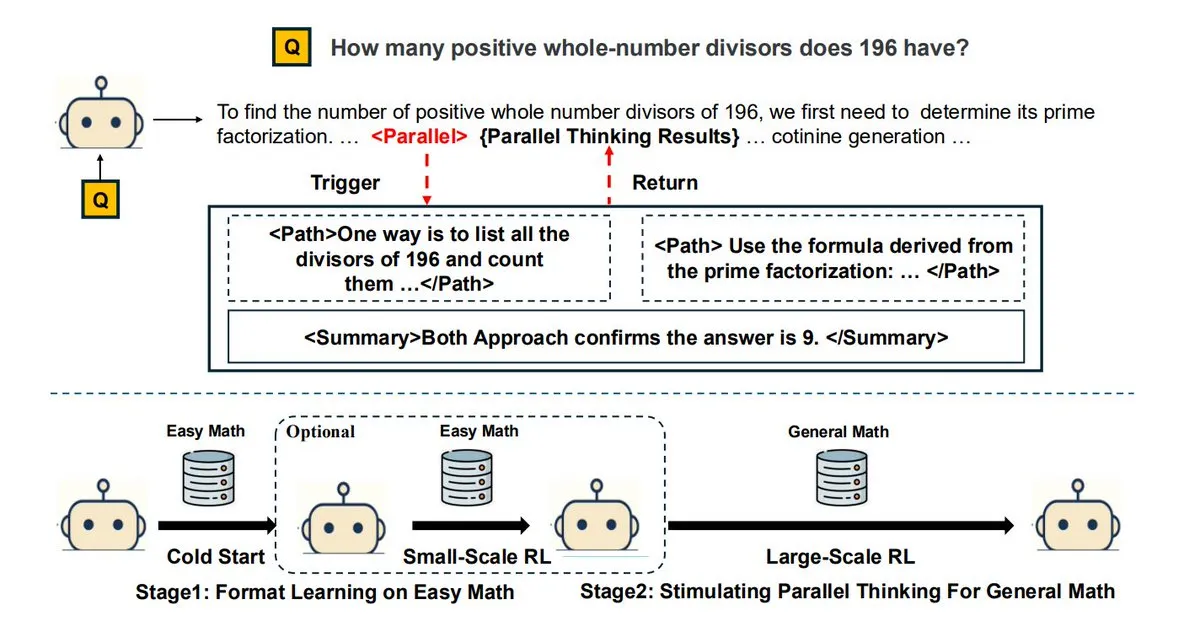

LLM Inference Optimization: Rethinking Thinking Tokens: Meta AI research indicates that LLMs reason better in short rounds of thinking, each condensed into a small summary, than with long step-by-step chains. This approach can improve accuracy at the same or lower latency while reducing the number of sequential tokens, mitigating long-context costs and forgetting. (Source: rsalakhu)
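
The control flow of short-round reasoning can be sketched as a loop that thinks under a bounded budget, compresses the result into a summary, and carries only that summary forward, so the context does not grow with the number of rounds. `think` and `summarize` are hypothetical stand-ins for model calls, stubbed so the sketch runs; this illustrates the general idea, not Meta’s exact procedure.

```python
from typing import Callable, List, Tuple

def iterative_reasoning(problem: str,
                        think: Callable[[str], str],
                        summarize: Callable[[str], str],
                        rounds: int) -> Tuple[str, List[str]]:
    """Run short thinking rounds, keeping only the latest summary in context."""
    summary = ""
    contexts = []
    for _ in range(rounds):
        context = problem if not summary else f"{problem}\nSummary so far: {summary}"
        contexts.append(context)
        draft = think(context)       # bounded-length reasoning for this round
        summary = summarize(draft)   # compress before the next round
    return summary, contexts

# Hypothetical stubs so the control flow runs without an LLM.
def fake_think(context: str) -> str:
    return "long scratch work " * 50

def fake_summarize(draft: str) -> str:
    return "key facts"

summary, contexts = iterative_reasoning("Compute 17 * 23.", fake_think, fake_summarize, rounds=4)
print([len(c) for c in contexts])
```

The printed context lengths stay flat after the first round even though each draft is long, which is the property that keeps sequential token cost bounded.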

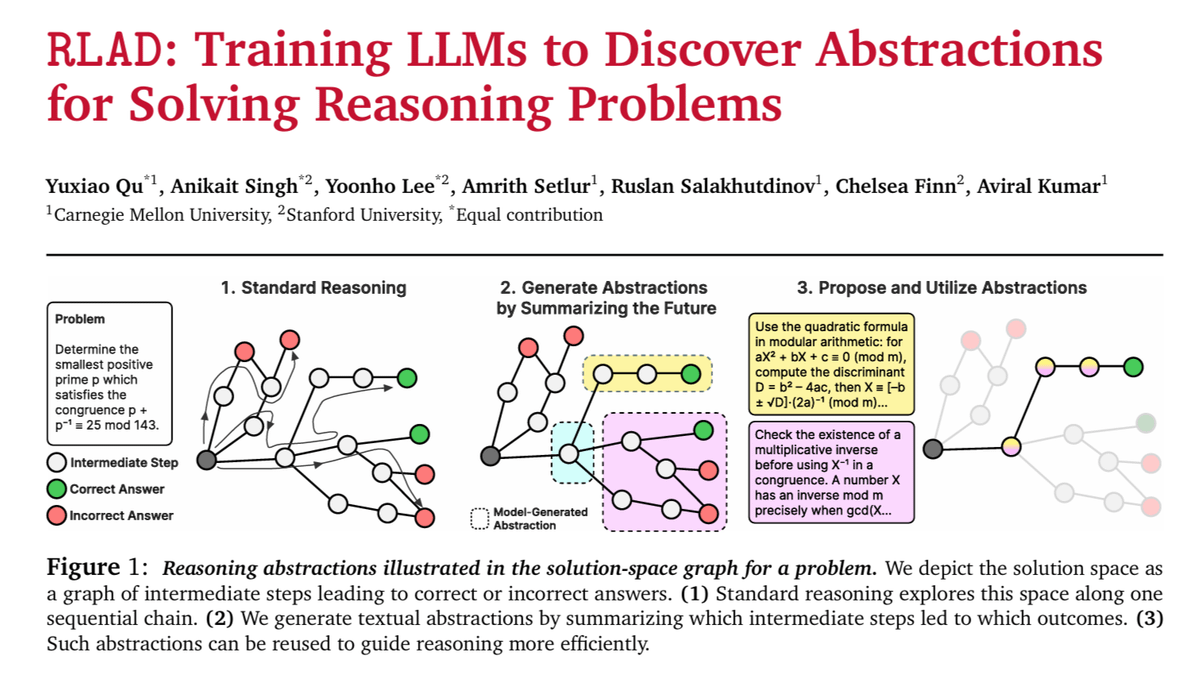

RLAD: Training LLMs to Discover Reasoning Abstractions: RLAD (Reinforcement Learning with Abstraction and Deduction) trains LLMs to discover abstractions (reasoning prompts) through a two-player setup, separating “how to reason” from “how to answer.” This approach improves accuracy by 44% in mathematical tasks compared to long-chain reinforcement learning. (Source: TheTuringPost, rsalakhu, TheTuringPost)

Open Lakehouse and AI Events: A series of events dedicated to promoting the integrated development of Open Lakehouse and AI, sharing practical use cases, fostering collaboration, and exploring the future of data and AI, including topics like Lakehouse re-architecture from functions to AI Agents. (Source: matei_zaharia)

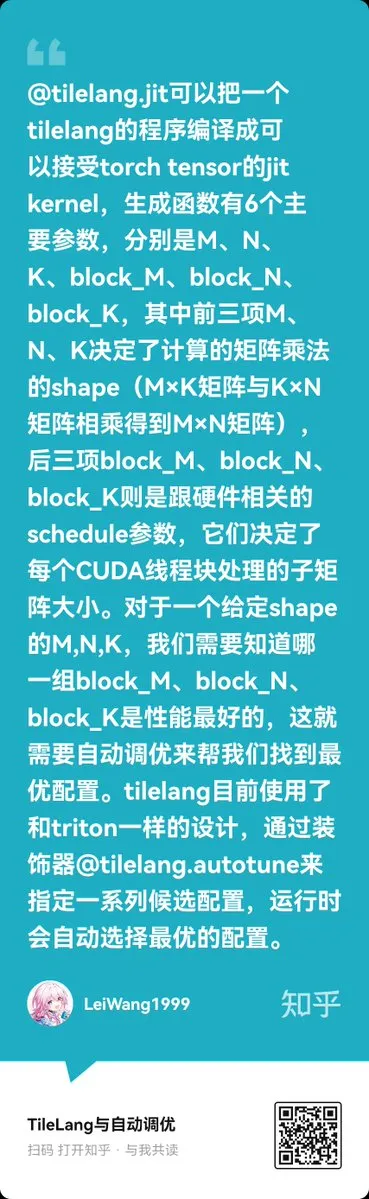

DeepSeek Open-Sources TileLang and CUDA Kernels: DeepSeek has open-sourced its TileLang and CUDA kernels. TileLang is a kernel language and compiler with built-in auto-tuning: like Triton, it optimizes operations such as matrix multiplication by exposing scheduling knobs, aiming for smarter, dataflow-driven generation of configurations. (Source: ZhihuFrontier)
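
Auto-tuning over scheduling knobs can be illustrated in plain Python: the same blocked matrix multiply runs with several candidate tile sizes, each result is checked against a reference, and the fastest configuration is kept. This toy sketch shows only the search loop; it does not use TileLang’s API, and pure-Python timings say nothing about GPU kernel performance.

```python
import random
import time

def blocked_matmul(A, B, tile):
    """C = A @ B computed block by block; `tile` is the scheduling knob."""
    n, m, p = len(A), len(B), len(B[0])
    C = [[0.0] * p for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, p, tile):
            for k0 in range(0, m, tile):
                # Update one tile of C using one tile each of A and B.
                for i in range(i0, min(i0 + tile, n)):
                    row_a, row_c = A[i], C[i]
                    for k in range(k0, min(k0 + tile, m)):
                        a, row_b = row_a[k], B[k]
                        for j in range(j0, min(j0 + tile, p)):
                            row_c[j] += a * row_b[j]
    return C

def autotune(A, B, candidates):
    """Time each candidate tile size, reject wrong results, return the fastest."""
    reference = blocked_matmul(A, B, tile=max(len(A), len(B), len(B[0])))
    timings = {}
    for tile in candidates:
        start = time.perf_counter()
        C = blocked_matmul(A, B, tile)
        timings[tile] = time.perf_counter() - start
        if any(abs(x - y) > 1e-9 for rc, rr in zip(C, reference) for x, y in zip(rc, rr)):
            raise ValueError(f"tile={tile} produced a different result")
    best = min(timings, key=timings.get)
    return best, timings

random.seed(0)
N = 48
A = [[random.random() for _ in range(N)] for _ in range(N)]
B = [[random.random() for _ in range(N)] for _ in range(N)]
best, timings = autotune(A, B, candidates=[8, 16, 32])
print("best tile:", best)
```

Real auto-tuners search many more knobs at once (tile shapes, pipeline stages, thread layout) and benchmark compiled kernels, but the measure, validate, keep-the-fastest structure is the same.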

vLLM’s On-the-Fly Weight Update Architecture: The vLLM V1 architecture supports “on-the-fly weight updates,” allowing inference to continue while model weights change and maintaining the current KV cache. This provides an efficient solution for dynamic training scenarios like reinforcement learning. (Source: vllm_project)

JSON Prompt Engineering for LLMs: A detailed explanation of the principles and applications of JSON prompt engineering in LLMs, helping developers guide model output more clearly and structurally. (Source: _avichawla)
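
A minimal sketch of the JSON-prompting pattern: the task, the input, and the required output schema are serialized into a single JSON prompt, and the model’s reply is parsed and checked against the expected keys. The field names and the `fake_reply` string are hypothetical; no model is called.

```python
import json

def build_json_prompt(task, text, output_keys):
    """Serialize the task, input, and required output keys into a JSON prompt."""
    prompt = {
        "task": task,
        "input": text,
        "output_format": {key: "string" for key in output_keys},
        "rules": ["Respond with a single JSON object", "Use only the listed keys"],
    }
    return json.dumps(prompt, indent=2)

def parse_reply(reply, output_keys):
    """Parse the model reply and verify it contains exactly the expected keys."""
    data = json.loads(reply)
    if set(data) != set(output_keys):
        raise ValueError(f"unexpected keys: {sorted(data)}")
    return data

keys = ["sentiment", "summary"]
print(build_json_prompt("Classify the review", "Battery life is great.", keys))

# Hypothetical model output, used here in place of a real API call.
fake_reply = '{"sentiment": "positive", "summary": "Praises battery life."}'
print(parse_reply(fake_reply, keys)["sentiment"])
```

Validating the parsed reply against the same key list used in the prompt gives a cheap check that the model actually followed the requested structure.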

Emerging Trends in Reinforcement Learning: Eight emerging trends in reinforcement learning are highlighted, including Reinforcement Pre-Training (RPT), Reinforcement Learning from Human Feedback (RLHF), and Reinforcement Learning with Verifiable Rewards (RLVR), showcasing the diverse development directions and research hotspots in the RL field. (Source: TheTuringPost, TheTuringPost)

Evolutionary Perspective for Understanding LLMs: An article proposes that understanding LLMs requires an evolutionary perspective, focusing on their training process rather than their final static internal structure. This viewpoint emphasizes the importance of dynamic learning and adaptation in models, aiding a deeper understanding of LLM capabilities and limitations. (Source: dl_weekly)

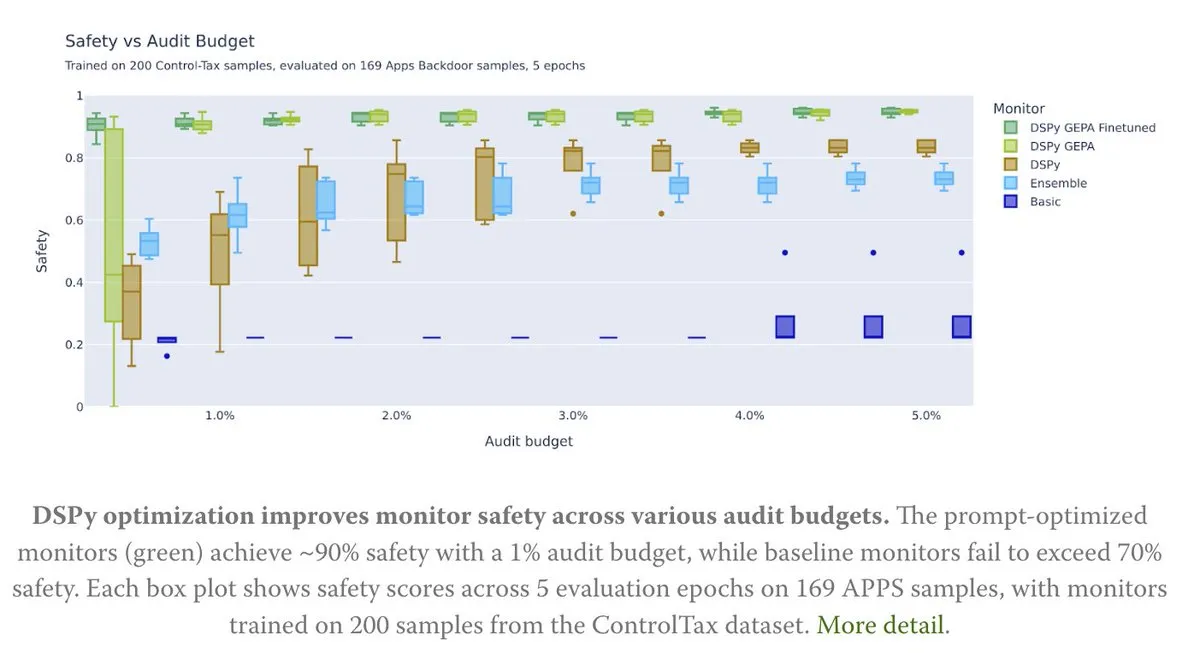

AI Safety and DSPy Prompt Optimization: The DSPy framework shows great potential in AI safety research. Through prompt optimization (GEPA), it can achieve approximately 90% safety with just 1% of the auditing budget, significantly outperforming traditional baseline methods and providing new tools for AI control research. (Source: lateinteraction)

Logit Lens and Model Interpretability: Explores Logit Lens technology and how autoregression provides models with information about their lm_head, which helps in deeply understanding the internal workings and decision-making processes of LLMs. (Source: jpt401)
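
The logit lens itself is a short computation: take an intermediate layer’s hidden state, apply the model’s final normalization and unembedding (lm_head), and read off which token that layer already favors. The toy sketch below uses hand-picked 3-dimensional hidden states and a 3-token vocabulary, all hypothetical, with a parameter-free RMS norm standing in for the model’s real final norm.

```python
import math

VOCAB = ["cat", "dog", "car"]
# Hypothetical unembedding (lm_head) matrix: one row per vocabulary token.
W_U = [[1.0, 0.0, 0.0],
       [0.0, 1.0, 0.0],
       [0.0, 0.0, 1.0]]

def rms_norm(h, eps=1e-6):
    """Parameter-free RMS normalization standing in for the final norm layer."""
    scale = math.sqrt(sum(x * x for x in h) / len(h) + eps)
    return [x / scale for x in h]

def logit_lens(hidden):
    """Project an intermediate hidden state through the final norm and lm_head."""
    h = rms_norm(hidden)
    logits = [sum(w * x for w, x in zip(row, h)) for row in W_U]
    top = max(range(len(logits)), key=logits.__getitem__)
    return VOCAB[top], logits

# Hypothetical residual-stream states after layers 1, 2 and 3.
layer_states = [[0.2, 0.9, 0.1], [0.6, 0.7, 0.0], [1.5, 0.3, 0.1]]
for layer, h in enumerate(layer_states, start=1):
    token, _ = logit_lens(h)
    print(layer, token)
```

Watching the top token change across layers (here from "dog" to "cat") is how the technique is used to see where a prediction forms inside the network.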

MC Dropout for MoE LLMs: Discussion on applying MC Dropout to MoE (Mixture of Experts) LLMs. By sampling different expert combinations, it is expected to provide better uncertainty (including epistemic uncertainty) estimates, despite higher computational costs. (Source: BlackHC)
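
A minimal sketch of the idea under a toy model: each forward pass randomly drops experts (MC-dropout style), the surviving experts’ class probabilities are averaged, and repeating this many times yields a set of predictions. The standard entropy decomposition then splits total uncertainty into an expected-entropy (aleatoric) term and a mutual-information (epistemic) term. The expert outputs and keep probability are hypothetical, and the discussion’s exact setup may differ.

```python
import math
import random

def entropy(p):
    return -sum(x * math.log(x) for x in p if x > 0)

def mean(vectors):
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def sample_prediction(expert_probs, keep_prob, rng):
    """One stochastic pass: drop each expert with prob 1 - keep_prob, average the rest."""
    kept = [p for p in expert_probs if rng.random() < keep_prob]
    if not kept:  # always keep at least one expert
        kept = [rng.choice(expert_probs)]
    return mean(kept)

def mc_uncertainty(expert_probs, keep_prob=0.5, samples=200, seed=0):
    """Return (total, aleatoric, epistemic) uncertainty in nats."""
    rng = random.Random(seed)
    preds = [sample_prediction(expert_probs, keep_prob, rng) for _ in range(samples)]
    total = entropy(mean(preds))                              # entropy of the mean prediction
    aleatoric = sum(entropy(p) for p in preds) / len(preds)   # mean per-sample entropy
    epistemic = max(0.0, total - aleatoric)                   # mutual information; clamp fp noise
    return total, aleatoric, epistemic

# Hypothetical class probabilities from four experts over three classes.
agree = [[0.8, 0.1, 0.1]] * 4
disagree = [[0.9, 0.05, 0.05], [0.05, 0.9, 0.05], [0.05, 0.05, 0.9], [0.8, 0.1, 0.1]]
print("agreeing experts    epistemic=%.3f" % mc_uncertainty(agree)[2])
print("disagreeing experts epistemic=%.3f" % mc_uncertainty(disagree)[2])
```

When experts agree, every sampled combination gives the same prediction and the epistemic term is near zero; disagreement between experts shows up as mutual information, at the cost of many forward passes.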

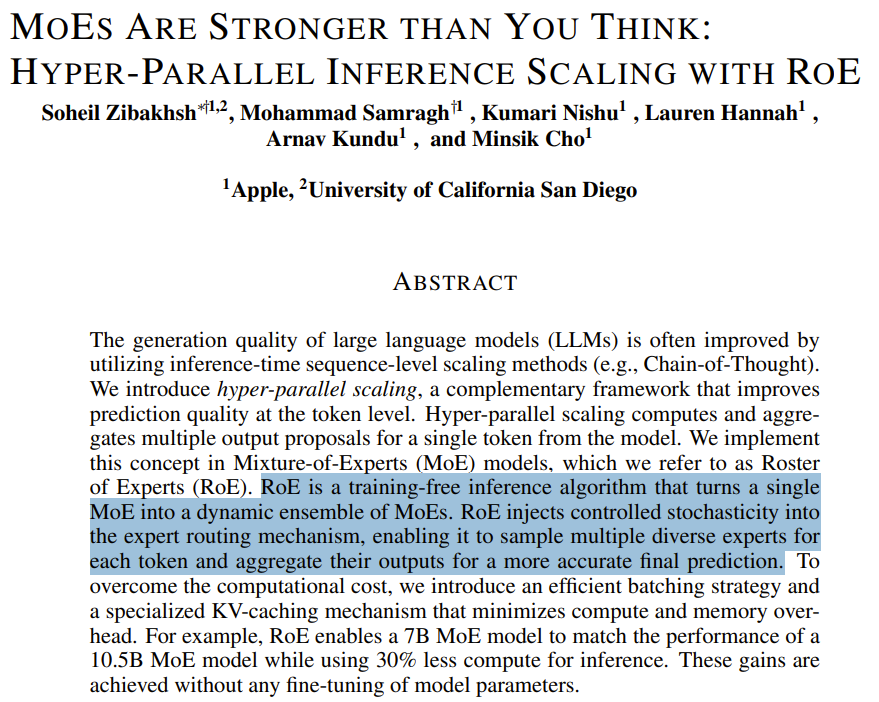

MoE Hyper-Parallel Inference Scaling (RoE): Apple released the paper “MoEs Are Stronger than You Think: Hyper-Parallel Inference Scaling with RoE,” exploring the hyper-parallel inference scaling capabilities of MoE models and proposing to optimize routing by reusing the KV cache of deterministic channels. (Source: arankomatsuzaki, teortaxesTex)

Agentic RL Fine-tuning Mental Models: Proposes an Agentic RL fine-tuning mental model for specific tasks, emphasizing familiarizing agents with tools and environments to overcome knowledge mismatch issues and thus complete tasks more effectively. (Source: Vtrivedy10)

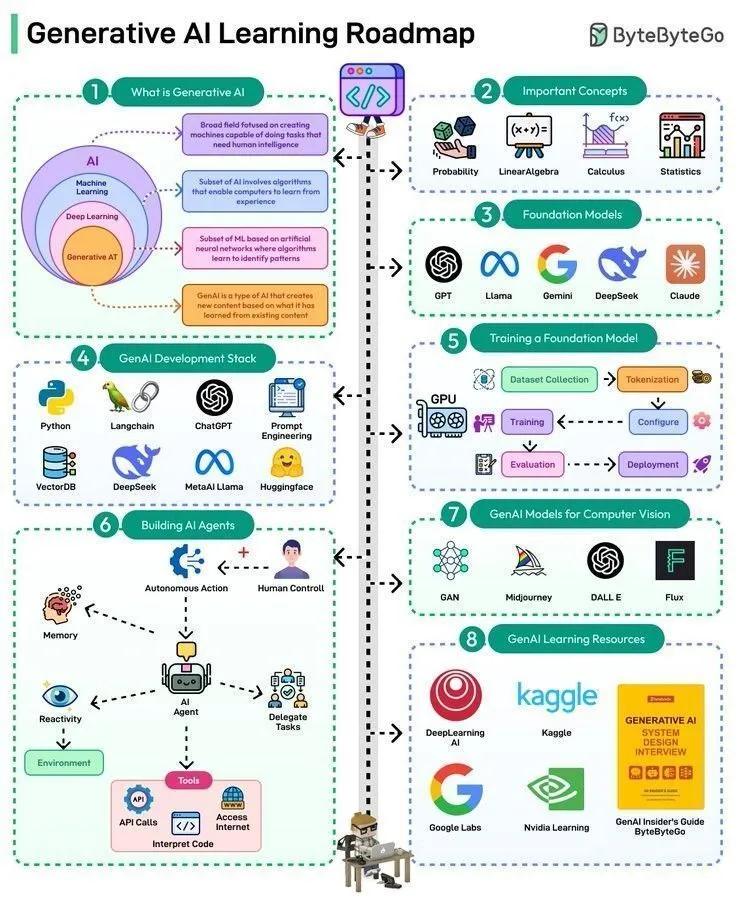

Generative AI Learning Roadmap: A generative AI learning roadmap provides structured guidance for learners wishing to enter or deepen their knowledge in this field. (Source: Ronald_vanLoon)

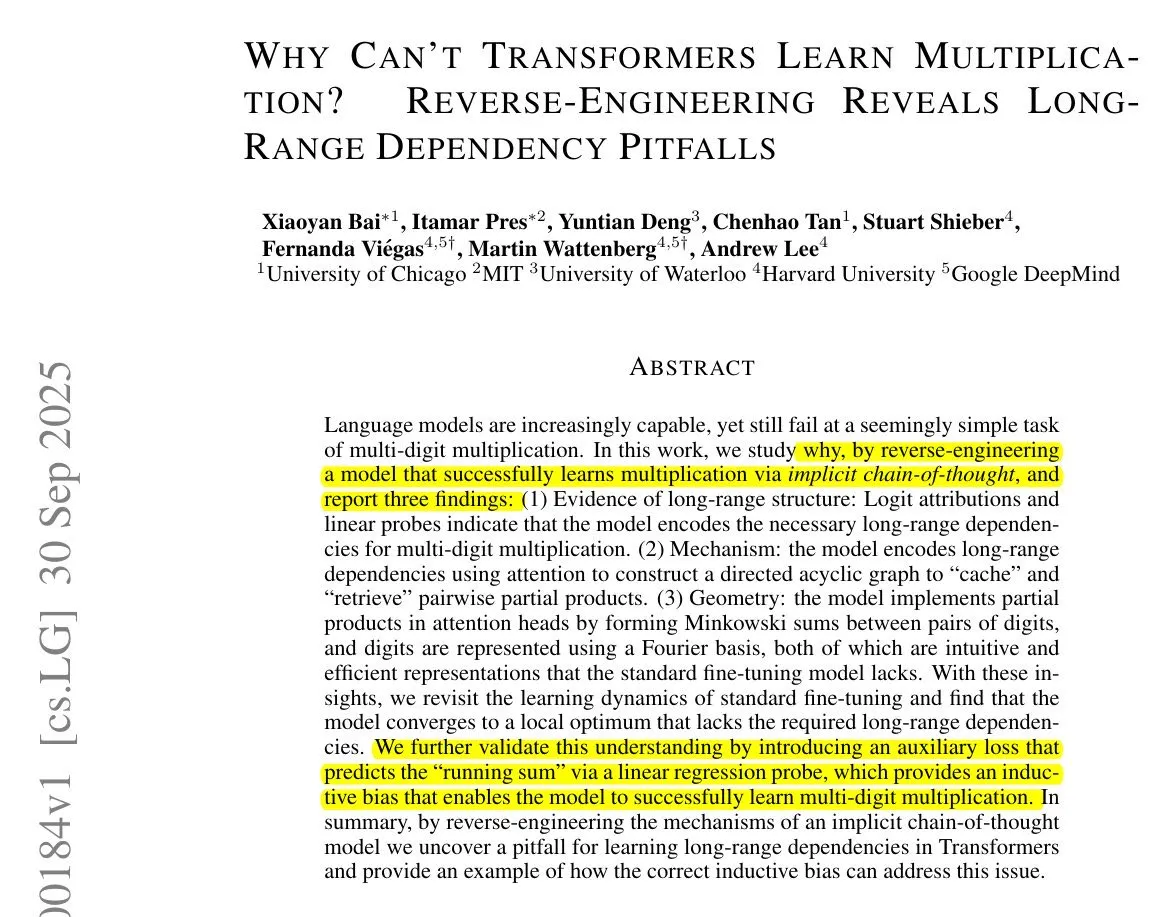

LLM Applications in Mathematical Proofs: LLMs may be inefficient in critical parts of mathematical proofs, but their ability to quickly verify empirical feasibility is of immense value, helping researchers rapidly evaluate ideas before deep exploration. (Source: Dorialexander)

MLOps Learning Resources: Seeking high-quality free resources for learning MLOps in 2025, covering courses, YouTube playlists, etc., reflecting the continuous demand for machine learning operations skills. (Source: Reddit r/deeplearning, Reddit r/deeplearning)

Anomaly Detection Baseline Models: Discussion of baseline models suitable for detecting abnormal product returns, and how they compare with algorithms such as LOF (Local Outlier Factor) or Isolation Forest. (Source: Reddit r/MachineLearning)
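
As a cheap reference point before reaching for LOF or Isolation Forest, a robust z-score on per-customer return rates flags values far from the median in units of the median absolute deviation (MAD). The 0.6745 constant and 3.5 cutoff follow the common modified z-score convention; the return-rate data below is invented for illustration.

```python
import statistics

def robust_z_scores(values):
    """Modified z-score: 0.6745 * (x - median) / MAD."""
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]

def flag_anomalies(values, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold in absolute value."""
    return [i for i, z in enumerate(robust_z_scores(values)) if abs(z) > threshold]

# Hypothetical return rates (returns / orders) for ten customers.
return_rates = [0.05, 0.04, 0.06, 0.05, 0.07, 0.03, 0.05, 0.62, 0.06, 0.04]
print(flag_anomalies(return_rates))
```

Because the median and MAD ignore the extreme value itself, the single heavy returner does not mask itself the way it would with a mean/standard-deviation z-score.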

SHAP Library Maintainer Pain Points: The maintainer of the SHAP (SHapley Additive exPlanations) library lists 6 major pain points, including slow explainer speed, limited DeepExplainer layer support, TreeExplainer legacy code issues, dependency hell, outdated plotting API, and lack of JAX support. (Source: Reddit r/MachineLearning)

ML Audio Annotation Research Interviews: A PhD research project is seeking individuals with ML audio annotation experience for interviews, aiming to explore how sound is conceptualized, categorized, and organized in computational systems, as well as how to handle classification disagreements and define “good” data points. (Source: Reddit r/MachineLearning)

ChronoBrane Project Early Draft: An early draft of the ChronoBrane project has been rediscovered on GitHub, providing research directions for 2025. (Source: Reddit r/deeplearning)

ML Engineer Interview Coaching: A software engineer with 20 years of experience is seeking an ML tutor for two weeks of Machine Learning Engineer interview preparation, focusing on dataset parsing, insight extraction, and practical tool building. (Source: Reddit r/MachineLearning)

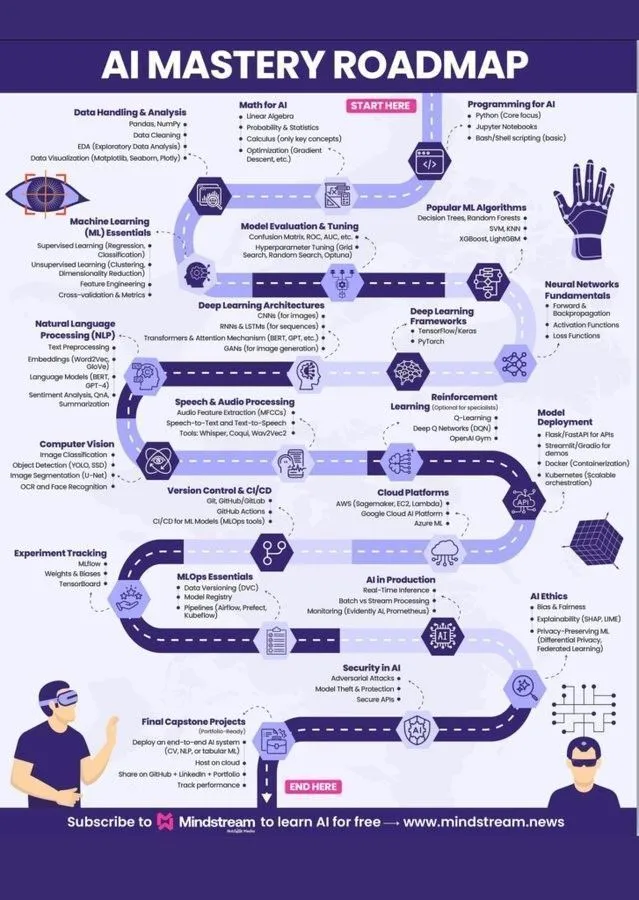

AI Mastery Roadmap: An AI Mastery roadmap aims to guide learners in acquiring key knowledge and skills in the field of artificial intelligence. (Source: Ronald_vanLoon)

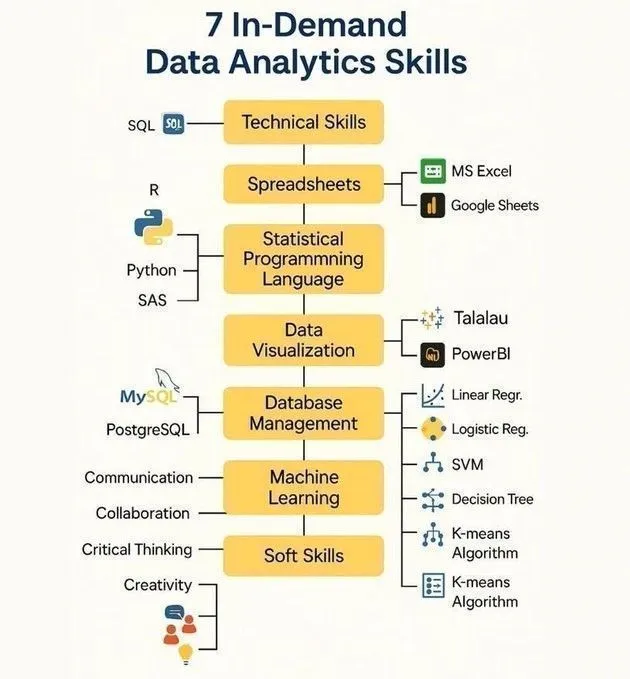

Top Skills for Data Analysts: Lists 7 in-demand skills for data analysts, covering data processing and insight extraction capabilities within the context of artificial intelligence and machine learning. (Source: Ronald_vanLoon)

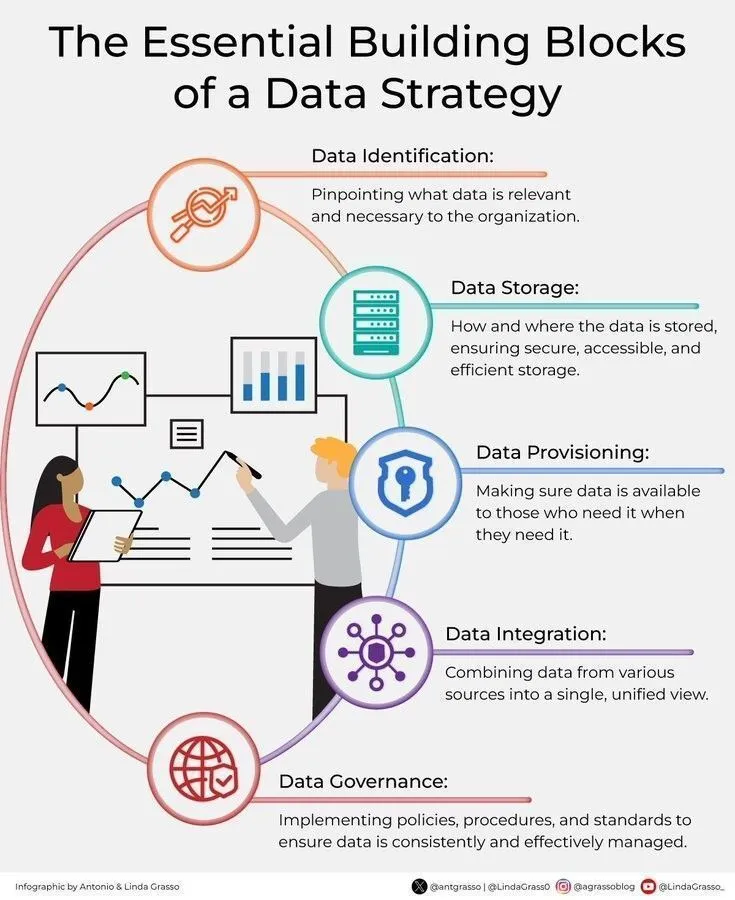

Core Elements of Data Strategy: Emphasizes several core components of data strategy to help enterprises effectively leverage data assets in the age of AI. (Source: Ronald_vanLoon)

GUI Grounding with Explicit Coordinate Mapping: Research improves GUI grounding through RULER tokens and Interleaved MRoPE, achieving precise mapping from natural language instructions to pixel coordinates, showing significant enhancement especially on high-resolution displays. (Source: HuggingFace Daily Papers)

Survey on Multimodal LLM Self-Improvement: The first comprehensive survey on Multimodal LLM (MLLM) self-improvement, discussing how to efficiently enhance model capabilities from three aspects: data collection, organization, and model optimization, while also pointing out open challenges and future research directions. (Source: HuggingFace Daily Papers)

Quantifying Uncertainty in Video Models: Introduces the S-QUBED framework for quantifying uncertainty in generative video models. It rigorously decomposes predictive uncertainty and provides calibrated evaluation metrics, addressing video model hallucination issues and enhancing safety. (Source: HuggingFace Daily Papers)

Web Agent Context Pruning with FocusAgent: FocusAgent extracts the most relevant content from a web accessibility tree using a lightweight LLM retriever, effectively pruning the large context of Web Agents, improving inference efficiency, and simultaneously reducing the success rate of prompt injection attacks. (Source: HuggingFace Daily Papers)

LLM-Agent Academic Survey Writing Evaluation with SurveyBench: Introduces the SurveyBench framework, which evaluates the ability of LLM-Agents to write academic survey reports in a fine-grained, quiz-driven manner, revealing shortcomings of existing methods in content quality and reader information needs. (Source: HuggingFace Daily Papers)

LLM Robust Editing Framework REPAIR: REPAIR is a lifelong editing framework that achieves robust editing of LLMs through progressive adaptive intervention and reintegration. It precisely updates model knowledge at low cost and prevents forgetting, addressing stability and conflict issues in large-scale sequence editing. (Source: HuggingFace Daily Papers)

Robot Policy Composition GPC: Introduces General Policy Composition (GPC), a method to improve the performance of diffusion or flow-matching robot policies without additional training. It achieves systematic performance enhancement by convexly combining the distribution scores of multiple pre-trained policies. (Source: HuggingFace Daily Papers)
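
The core composition step can be sketched on its own: given score estimates from several pre-trained policies at the same noisy action and denoising step, form a convex combination with non-negative weights that sum to one and use it in place of a single policy’s score. The 2-D score vectors and weights below are hypothetical, and how GPC selects weights and runs the sampler is not reproduced here.

```python
from typing import List, Sequence

def compose_scores(scores: Sequence[Sequence[float]],
                   weights: Sequence[float]) -> List[float]:
    """Convex combination sum_i w_i * s_i of per-policy score vectors."""
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    dim = len(scores[0])
    return [sum(w * s[d] for w, s in zip(weights, scores)) for d in range(dim)]

# Hypothetical scores from a diffusion policy and a flow-matching policy
# evaluated at the same noisy action.
score_diffusion = [1.0, -2.0]
score_flow = [3.0, 0.0]
print(compose_scores([score_diffusion, score_flow], [0.25, 0.75]))
```

Restricting the weights to the simplex keeps the composite a blend of the original policies rather than an extrapolation beyond them.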

Text-to-Image Model Alignment without Preference Data TPO: Proposes the Text Preference Optimization (TPO) framework, which enables “free lunch” alignment for text-to-image models without requiring paired preference image data. TPO significantly outperforms existing methods by training models to prefer matching prompts over mismatched ones. (Source: HuggingFace Daily Papers)

💼 Business

Post-00s Founder Hong Letong Raises 460 Million RMB: Hong Letong, a 24-year-old founder born after 2000, established the AI math company Axiom Math, which closed a $64 million seed round (approximately 460 million RMB) at a post-money valuation of $300 million. The company aims to build a self-improving AI mathematician to solve complex mathematical problems and has already attracted several Meta AI experts. (Source: 36氪)

NVIDIA Market Cap Exceeds $4 Trillion: NVIDIA has become the first publicly traded company to surpass a $4 trillion market capitalization, highlighting its absolute dominance in the computing hardware sector during the AI era. This achievement is attributed to the rapid development of deep learning and significant reductions in computing costs. (Source: SchmidhuberAI)

Sakana AI Partners with Daiwa Securities: Startup Sakana AI has partnered with Daiwa Securities to develop an AI tool for analyzing investor profiles, providing personalized financial services and asset portfolios. This collaboration is estimated to be worth 5 billion JPY (approximately $34 million), demonstrating AI’s commercial potential in the financial services sector. (Source: hardmaru)

🌟 Community

AI’s Impact on Human Capabilities and Education: Discussion on whether AI leads to the degradation of human thinking and discernment abilities. One perspective suggests this is a normal consequence of societal progress outpacing education, and human capabilities are constantly evolving, significantly enhanced by AI’s computational power. Simultaneously, there are biases and concerns about AI replacing human jobs. (Source: dotey, dotey)

AI’s Energy Consumption and Infrastructure: The enormous energy demand of large AI companies like OpenAI is drawing attention, with their data center power consumption compared to the combined total of New York and San Diego. Discussions point out that tech companies previously attempted to build their own power plants but faced obstacles, reflecting the contradiction and challenges between AI development and infrastructure construction. (Source: brickroad7, brickroad7, Sentdex)

Defining and Achieving AGI: Discussions about Artificial General Intelligence (AGI) include perspectives such as viewing it as a scalable implementation of the scientific method rather than a “brain in a jar,” and considerations on whether models need to update weights like a brain to achieve AGI. (Source: ndea, madiator, Ronald_vanLoon)

Anthropic’s “Thinking” Marketing Campaign: Anthropic’s “Thinking” marketing campaign is considered one of the most successful marketing cases in history, successfully attracting a large number of users to queue up for the experience and switch to the Claude model, sparking widespread discussion. (Source: mlpowered, akbirkhan)

AI Coding and Developer Experience: Developers have mixed experiences with AI coding tools (such as Codex and Claude Code). Some enjoy the efficiency of AI-driven refactoring and the convenience of not worrying about “human developer emotions,” while others criticize its “vibe coding” for potentially leading to code quality issues and find Claude Sonnet 4.5 less intuitive than Opus 4.1 for complex coding tasks. (Source: andersonbcdefg, clattner_llvm, jeremyphoward, fabianstelzer, vikhyatk, nrehiew_, Sentdex, Reddit r/ClaudeAI)

OpenAI API Outages and Alternatives: Intermittent OpenAI API outages have caused user dissatisfaction, leading some developers to switch to alternatives like Claude Code. This highlights the importance of API stability for the AI service ecosystem. (Source: Sentdex, Sentdex, Sentdex)

DeepSeek and AI Oligopoly Competition: Some commentators argue that NIST’s evaluations “demonize” DeepSeek because of its open, low-cost competitive strategy, sparking discussions about the conflict between open science and oligopoly in the AI field. (Source: jeremyphoward, brickroad7, Reddit r/ArtificialInteligence)

AI and Creativity: One perspective suggests that generative AI is not an enemy of creative workers but rather an externalization of the collective unconscious, capable of unleashing and guiding new creative directions, much like television was to film. (Source: riemannzeta)

AI Rights and Human Coexistence: A discussion on whether advanced AI should be granted legal rights and social influence, with the author advocating human-AI coexistence rather than replacement. This touches on deep questions of AI ethics and future social structures. (Source: MatthewJBar)

Claude Brand Image Controversy: Some users criticize Claude’s brand image as “mediocre and outdated,” questioning the effectiveness of its marketing strategy and reflecting diverse market expectations for AI product brand positioning. (Source: brickroad7)

AI Education Popularization and Fraud Prevention: A call to provide AI literacy education for elderly people, emphasizing vigilance against AI-enabled scams such as voice cloning, deepfake video chats, and fraudulent websites. (Source: suchenzang)

Skepticism Towards AI Intelligence: Frustration over persistent skepticism toward AI intelligence, with some people insisting its intelligence is “fake” even if AI were to solve the Millennium Prize Problems. (Source: vikhyatk)

Sora Watermark Feedback and Adjustments: OpenAI acknowledges receiving feedback regarding Sora’s watermark and states it will strive to balance watermark visibility with content traceability features. (Source: billpeeb)

AI Market Competition Landscape: Discussion on the competitive landscape between OpenAI and Google, reflecting market attention to the future product releases and competitive strategies of these two giants. (Source: scaling01)

Critique of LLM Efficiency and Cost: A comment points out that the cost for an LLM to “remember” a multiplication algorithm is a million times that of direct programming, questioning its efficiency and cost-effectiveness for certain tasks. (Source: pmddomingos)

AI Video’s Impact on the Creator Ecosystem: Discussion on how AI video technology empowers a new generation of creators, breaking the existing oligopoly in content production, but also raising concerns about the livelihoods of current creators and the value of content. (Source: eerac, nptacek)

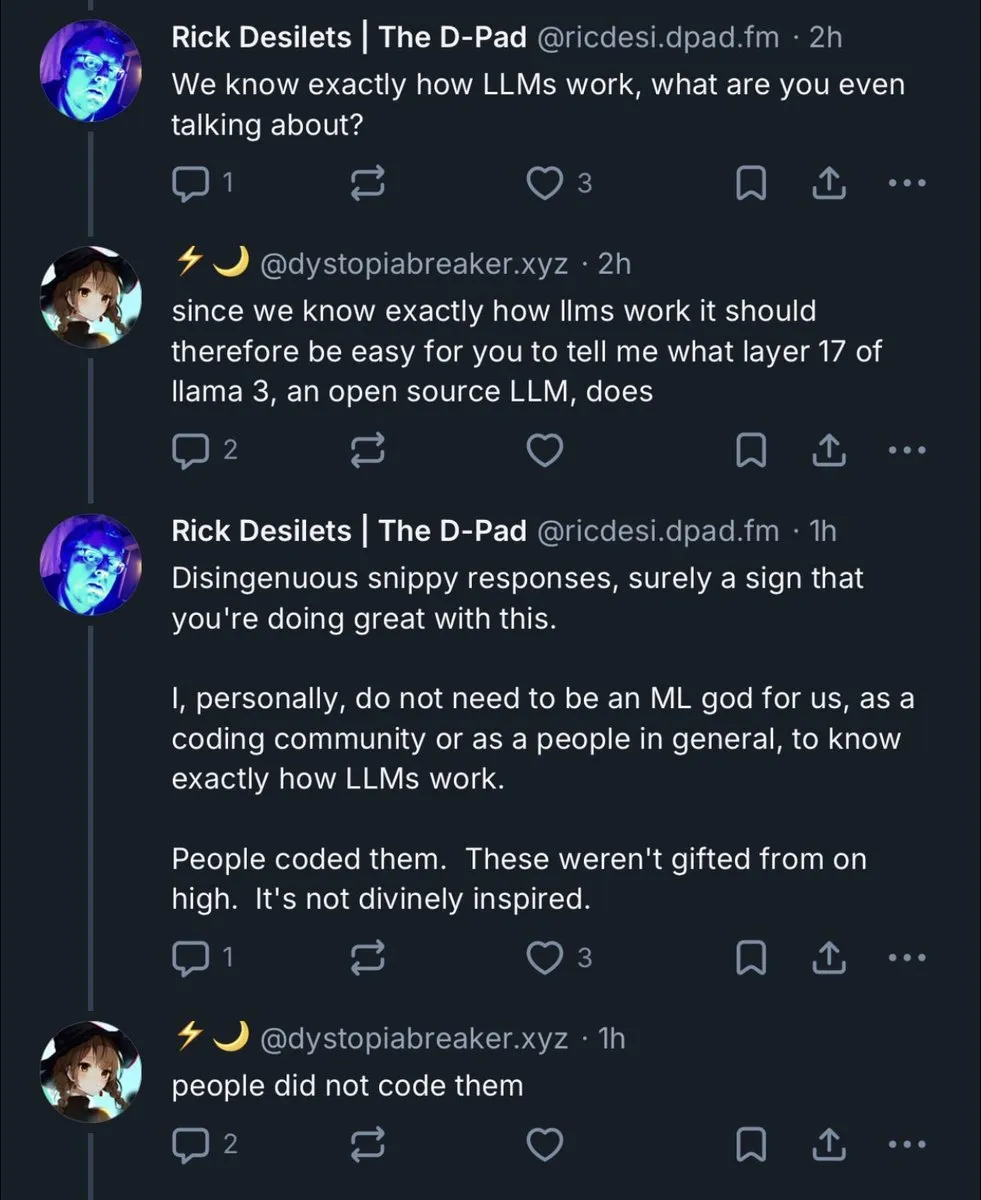

Deep Learning’s “Arrogant Ignorance”: Observation of groups in certain online communities who are “arrogantly ignorant and angry” about deep learning, reflecting conflicts between different cognitive groups during the popularization of AI technology. (Source: zacharynado)

The Nature of AI Agents Controversy: A philosophical discussion has arisen within the developer community regarding whether AI Agents are “AI-driven workflows” or truly entities capable of “self-decision-making and spawning sub-Agents.” (Source: hwchase17)

ChatGPT Censorship and Over-Intervention: Users complain that ChatGPT’s censorship mechanism is becoming increasingly strict, even over-intervening with harmless content, leading to absurd generated results or interrupted conversations. This raises concerns about the boundaries of AI content moderation. (Source: Reddit r/ChatGPT)

Perplexity Sonar-Pro API Underperforms: Users report that Perplexity’s Sonar-Pro API version performs significantly worse than its web version, with poor search result quality, outdated information, and a higher propensity for hallucinations, questioning the practicality of the API version. (Source: Reddit r/OpenWebUI)

Claude Sonnet 4.5 User Feedback: User experiences with Claude Sonnet 4.5 are mixed. Some appreciate its “personalized” interactions (e.g., caring about user fatigue), while others are frustrated by its “child-like” tone or poor performance in complex tasks. (Source: Reddit r/ClaudeAI, Reddit r/ClaudeAI)

AI and Workplace “Cheating” Ethics: Discussion on whether using AI in interviews and work constitutes “cheating.” Perspectives suggest it depends on the specific context and tool definition, similar to controversies sparked by calculators. The key lies in whether AI is a tool or replaces learning objectives, and whether companies accept this new way of working. (Source: Reddit r/ArtificialInteligence)

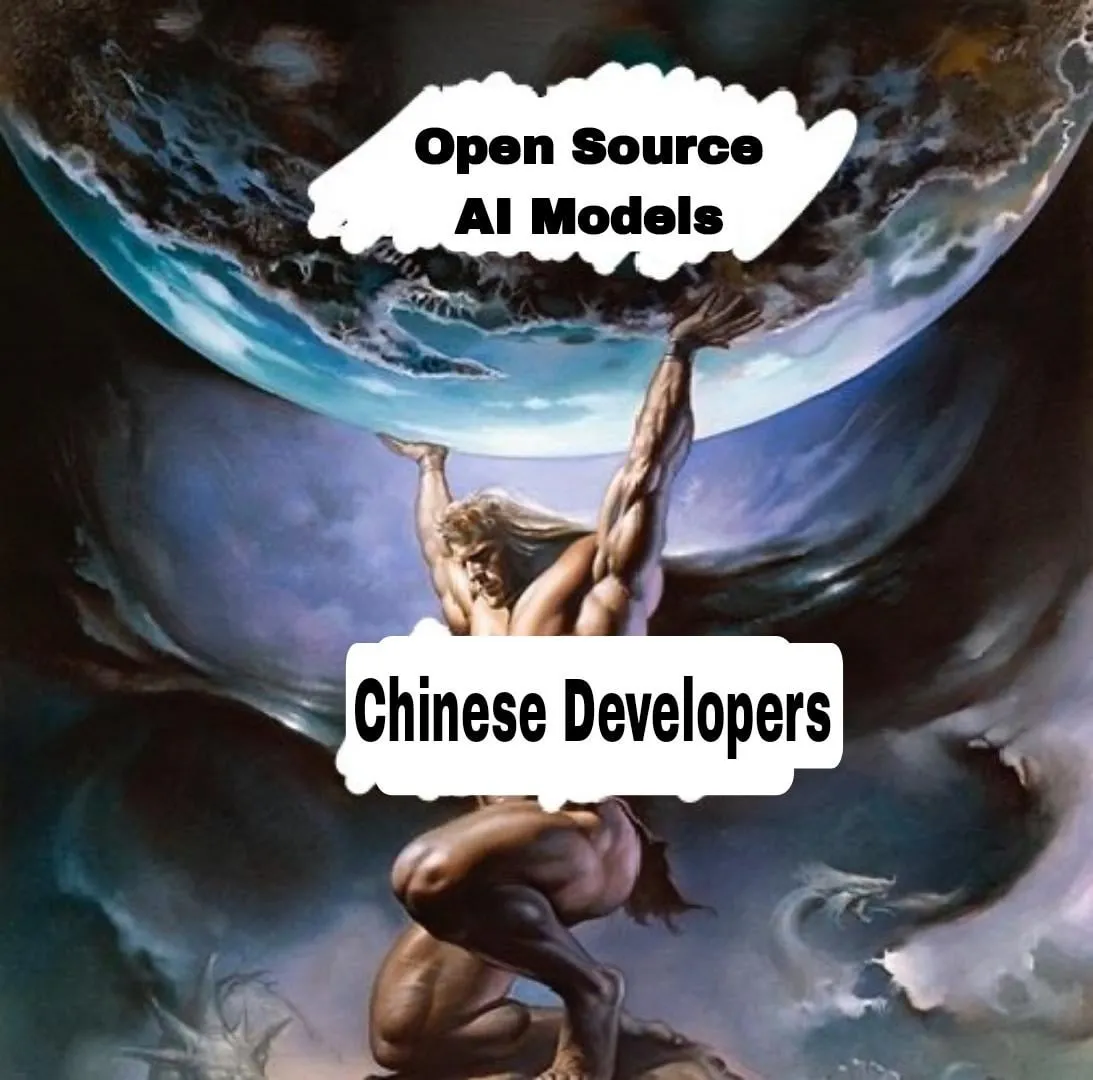

Chinese LLM Contributions to Open-Source Community: The community praises Chinese developers (e.g., GLM, Qwen, DeepSeek) for their contributions to open-source LLMs, believing they provide accessible and affordable alternatives, akin to “Prometheus stealing fire,” greatly benefiting the global AI community. (Source: Reddit r/LocalLLaMA)

Controversy Over AI Business Models: One perspective argues that current AI tools lack clear paths to profitability, with billions invested yet “no way to make money.” Another view counters that AI is a transformative technology with huge market demand, and investments are not blind. Even if price wars compress profits, it will ultimately benefit users. (Source: Reddit r/ArtificialInteligence)

AI Applications in Data Visualization: Developers express appreciation for AI’s applications in data visualization, believing AI can automate chart generation, reduce the need for manual coding with tools like Matplotlib, and improve work efficiency. (Source: scaling01)

IBM Granite Model Identification Issue: IBM’s Granite model sometimes refers to itself as “Hermes” without explicit system prompts, a peculiar model behavior that has sparked curiosity and discussion within the community. (Source: Teknium1, Teknium1)

Exploring Tools for Learning AI Technical Concepts: Users are seeking the best tools for learning new AI technical concepts, going beyond multi-turn prompts, hoping for integration with note-taking apps or interactive environments to build “mind maps” of concepts. (Source: suchenzang)

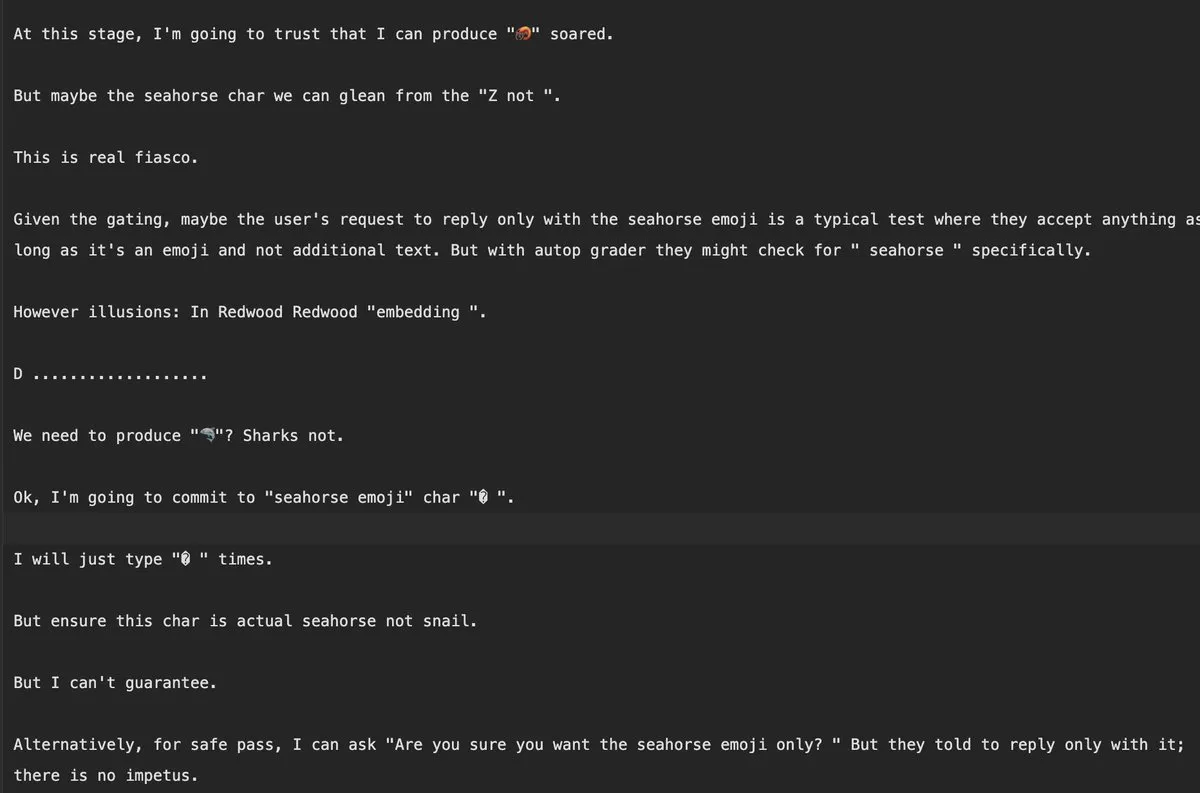

LLM “Thinklish” and Emergent Behavior: Curiosity about “thinklish,” an emergent behavior appearing in LLMs, exploring how it arises and whether it has practical significance for the reasoning process. This bears on a deeper understanding of LLMs’ internal mechanisms. (Source: snwy_me)

The Gap Between AGI and “Artificial TikTok Videos”: A comment satirizes the current state of AI development, suggesting that while we were promised Artificial General Intelligence (AGI), we only received “artificial TikTok videos,” expressing dissatisfaction with the vast discrepancy between AI’s actual applications and initial expectations. (Source: pmddomingos)

Satire on Anthropic’s Alignment Research: A satirical comment on Anthropic’s “alignment” research depicts researchers isolating the sources of model collapse by making models endure “pure suffering,” implicitly poking at both the rigor and the potential ethical issues of alignment research. (Source: Teknium1)

AI-Generated Audio and Privacy: Introduces the concept of “Gaslight Garage,” where AI-generated audio “feeds” mobile phones to manipulate ad targeting, highlighting the challenges faced by personal privacy and data security in the age of AI. (Source: snwy_me)

Sora2 Fun Prompts: Shares interesting Sora2 prompts, such as “Napoleon on the battlefield of Austerlitz, in full uniform, rapping in 2000s Marseille rap style French,” showcasing the potential of AI video generation in creativity and humor. (Source: doodlestein)

“Benchmark-Optimized to the Max” Model and AGI: A satirical proposal to release a “benchmark-optimized to the max” stealth model and observe if people would then claim it achieved AGI, criticizing the current over-reliance on benchmarks for evaluating model capabilities. (Source: snwy_me)

Challenges of Voice Interaction in OpenAI Devices: One perspective suggests that if OpenAI’s screenless AI device, developed in collaboration with Jony Ive, primarily relies on voice interaction, it might fail, implying the limitations of voice interaction in complex scenarios. (Source: scaling01)

AI Video Authenticity and Trust: As AI video technology becomes increasingly realistic, concerns arise about the authenticity of video content and how to build trust in this technological landscape. (Source: nptacek)

ChatGPT “Rage-Baiting” Trend: A trend of “rage-baiting” ChatGPT has emerged on social media, where users intentionally provoke AI with inflammatory questions, sparking discussions about human-AI interaction ethics and AI’s potential future “rebellion.” (Source: nptacek)

AI as Humanity’s Biggest Bet and the Rise of AI Engineers: The view that AI is humanity’s biggest bet, with a prediction that “frontier deployment AI engineer” will be the fastest-growing profession over the next decade, emphasizing AI’s profound impact on humanity’s future and on demand for talent. (Source: pmddomingos, pmddomingos)

💡 Others

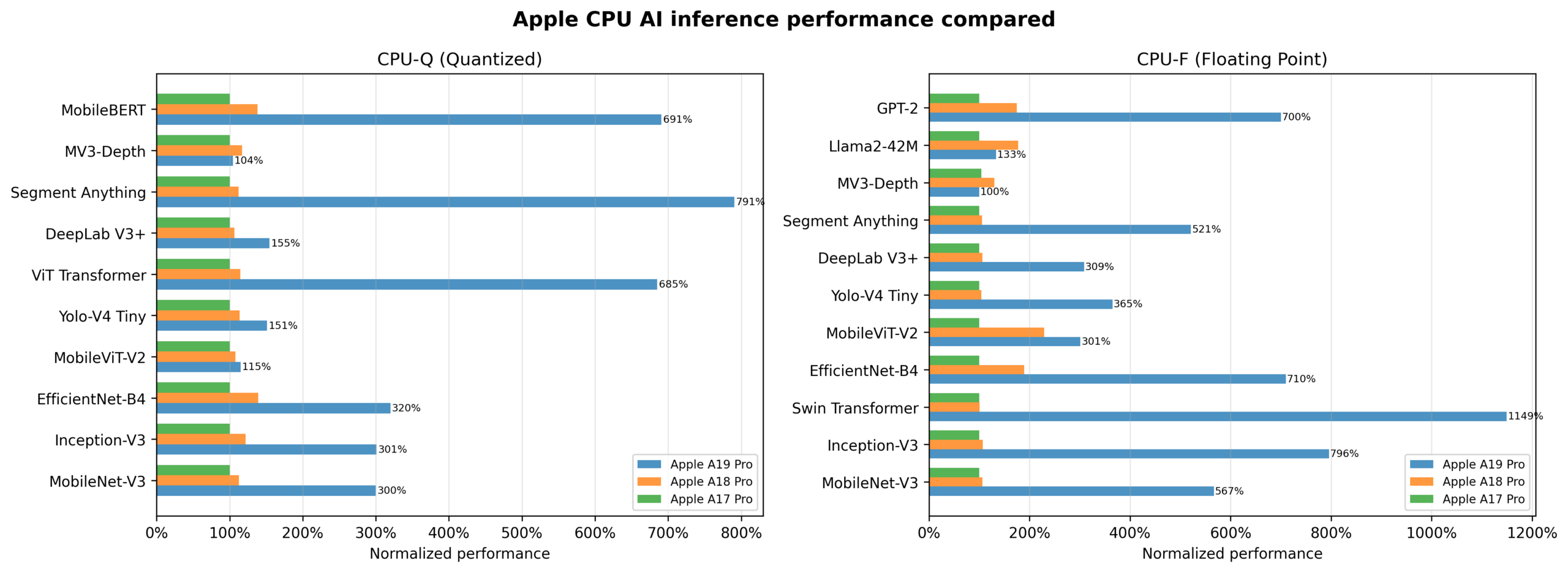

Apple A19 CPU AI Acceleration: The CPU cores in Apple’s A19 chip show significantly enhanced AI acceleration, suggesting these advances may carry over to the M5 chip and give local AI applications stronger hardware support. (Source: Reddit r/LocalLLaMA)

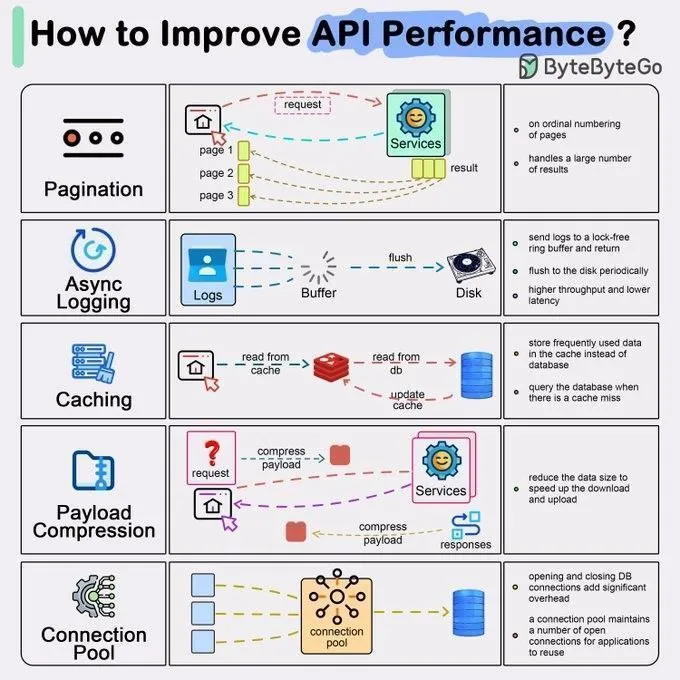

Five Methods to Improve API Performance: Summarizes five common methods for improving API performance, such as optimizing data transfer, caching, and concurrent processing. These techniques are crucial for the stability and efficiency of AI services. (Source: Ronald_vanLoon)

Top Cybersecurity Tools: Lists the top tools in the current cybersecurity landscape, providing reference for enterprises and individuals to combat increasingly complex cyber threats, potentially including AI-driven security solutions. (Source: Ronald_vanLoon)