Keywords: Meta, Tencent Hunyuan Image 3.0, xAI Grok 4 Fast, OpenAI Sora 2, ByteDance Self-Forcing++, Alibaba Qwen, vLLM, GPT-5-Pro, Meta-cognitive Reuse Mechanism, Generalized Causal Attention Mechanism, Multimodal Reasoning Model, Minute-level Video Generation, Pose-aware Fashion Generation

🔥 Spotlight

Meta’s New Method Shortens Chain of Thought, Eliminating Repetitive Derivations: Meta, Mila-Quebec AI Institute, and others have jointly proposed a “metacognitive reuse” mechanism, aiming to solve the problem of token inflation and increased latency caused by repetitive derivations in large language model (LLM) reasoning. This mechanism allows the model to review and summarize problem-solving approaches, refining common reasoning patterns into “behaviors” stored in a “behavior manual.” When needed, these behaviors can be directly invoked without re-derivation. Experiments show that on mathematical benchmarks like MATH and AIME, this mechanism can reduce reasoning token usage by up to 46% while maintaining accuracy, enhancing model efficiency and its ability to explore new paths. (Source: 量子位)
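To make the idea concrete, here is a minimal sketch of how a stored behavior could be looked up and placed into a prompt instead of being re-derived. It is not the method from the paper: the `BehaviorManual` class, the keyword-overlap retrieval, and the prompt wording are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BehaviorManual:
    """Toy store mapping a behavior name to a one-line instruction (hypothetical)."""
    behaviors: dict[str, str] = field(default_factory=dict)

    def add(self, name: str, instruction: str) -> None:
        self.behaviors[name] = instruction

    def retrieve(self, question: str, top_k: int = 2) -> list[tuple[str, str]]:
        """Rank behaviors by naive word overlap with the question."""
        words = set(question.lower().split())
        scored = []
        for name, instruction in self.behaviors.items():
            overlap = len(words & set(instruction.lower().split()))
            if overlap:
                scored.append((overlap, name, instruction))
        # Highest overlap first; ties broken alphabetically by name.
        scored.sort(key=lambda item: (-item[0], item[1]))
        return [(name, instruction) for _, name, instruction in scored[:top_k]]


def build_prompt(question: str, manual: BehaviorManual) -> str:
    """Prepend retrieved behaviors so the model can invoke them directly."""
    hints = manual.retrieve(question)
    if not hints:
        return f"Question: {question}"
    listed = "\n".join(f"- {name}: {instruction}" for name, instruction in hints)
    return (
        "Behaviors you may apply without re-deriving them:\n"
        f"{listed}\n\nQuestion: {question}"
    )


manual = BehaviorManual()
manual.add(
    "behavior_inclusion_exclusion",
    "count the union of overlapping sets by adding sizes and subtracting the intersection",
)
print(build_prompt("How many integers up to 100 are in the union of multiples of 2 and 5?", manual))
```

A real system would presumably retrieve behaviors with embeddings rather than word overlap; the point here is only that a short named hint replaces a long derivation in the context.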

Tencent Hunyuan Image 3.0 Tops Global AI Image Generation Rankings: Tencent Hunyuan Image 3.0 has claimed the top spot in the LMArena text-to-image leaderboard, surpassing Google Nano Banana, ByteDance Seedream, and OpenAI gpt-Image. The model adopts a native multimodal architecture, based on Hunyuan-A13B, with over 80 billion parameters in total. It can uniformly process various modalities such as text, images, video, and audio, possessing strong semantic understanding, language model reasoning, and world knowledge inference capabilities. Its core technologies include a generalized causal attention mechanism and 2D positional encoding, and it introduces automatic resolution prediction. The model constructs data through three-stage filtering and a hierarchical description system, and employs a four-stage progressive training strategy, effectively enhancing the realism and clarity of generated images. (Source: 量子位)

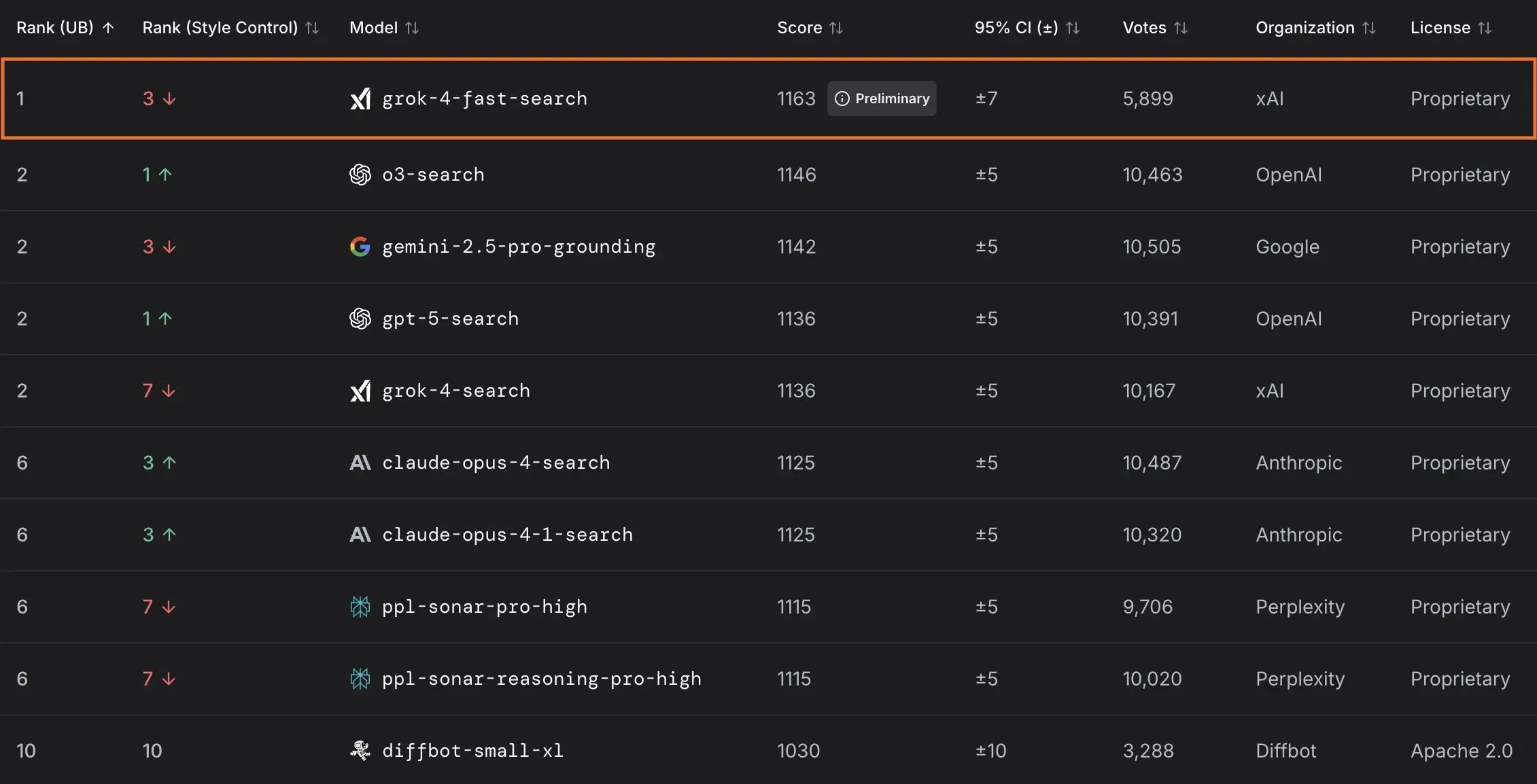
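The item above names a generalized causal attention mechanism without describing it. As a rough sketch, assuming the mask lets text tokens attend only to earlier positions while image tokens additionally attend to every token of their own image, it could be built as follows. The `segment_ids` encoding and the function name are hypothetical, and each image is assumed to occupy one contiguous span.

```python
def generalized_causal_mask(segment_ids: list[int]) -> list[list[bool]]:
    """Build a boolean attention mask under the assumption stated above.

    segment_ids[i] is 0 for a text token, or a positive image id for an image token.
    mask[q][k] is True when query position q may attend to key position k.
    """
    n = len(segment_ids)
    mask = [[False] * n for _ in range(n)]
    for q in range(n):
        for k in range(n):
            causal = k <= q  # ordinary left-to-right attention
            same_image = segment_ids[q] != 0 and segment_ids[q] == segment_ids[k]
            mask[q][k] = causal or same_image  # image tokens also see their whole image
    return mask


# Two text tokens, a three-token image, then one more text token.
for row in generalized_causal_mask([0, 0, 1, 1, 1, 0]):
    print("".join("x" if allowed else "." for allowed in row))
```

Under this assumption the text portion stays autoregressive, while each image is processed as a bidirectional block.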

xAI Releases Grok 4 Fast Model and Partners with US Government: xAI has launched Grok 4 Fast, a multimodal reasoning model with a 2M context window, designed to provide cost-effective intelligent services. The model is now freely available to all users. Through a partnership with the US federal government, xAI is offering all federal agencies free access to its cutting-edge AI models (Grok 4, Grok 4 Fast) for 18 months and dispatching an engineering team to assist the government in leveraging AI. Additionally, xAI has released OpenBench for evaluating LLM performance and safety, and introduced Grok Code Fast 1, which performs exceptionally well in coding tasks. (Source: xai, xai, xai, JonathanRoss321)

🎯 Trends

OpenAI Previews Consumer AI Products and Sora 2 Updates: UBS predicts that OpenAI’s developer conference will focus on releasing consumer-facing AI products, possibly including an AI agent for travel booking. Concurrently, the Sora 2 video generation model is undergoing testing, with users noting that its generated content often has a sense of humor. OpenAI has also fixed the resolution issue in the Sora 2 Pro model’s high-definition mode, which now supports 1792×1024 or 1024×1792 resolution and generates videos up to 15 seconds long, though the daily quota has been reduced to 30 generations. (Source: teortaxesTex, francoisfleuret, fabianstelzer, TomLikesRobots, op7418, Reddit r/ChatGPT)

ByteDance Launches Minute-Level Video Generation Model: ByteDance has unveiled a new method called Self-Forcing++, capable of generating high-quality videos up to 4 minutes and 15 seconds long. This method extends diffusion models without requiring a long video teacher model or retraining, while maintaining the fidelity and consistency of the generated videos. (Source: _akhaliq)

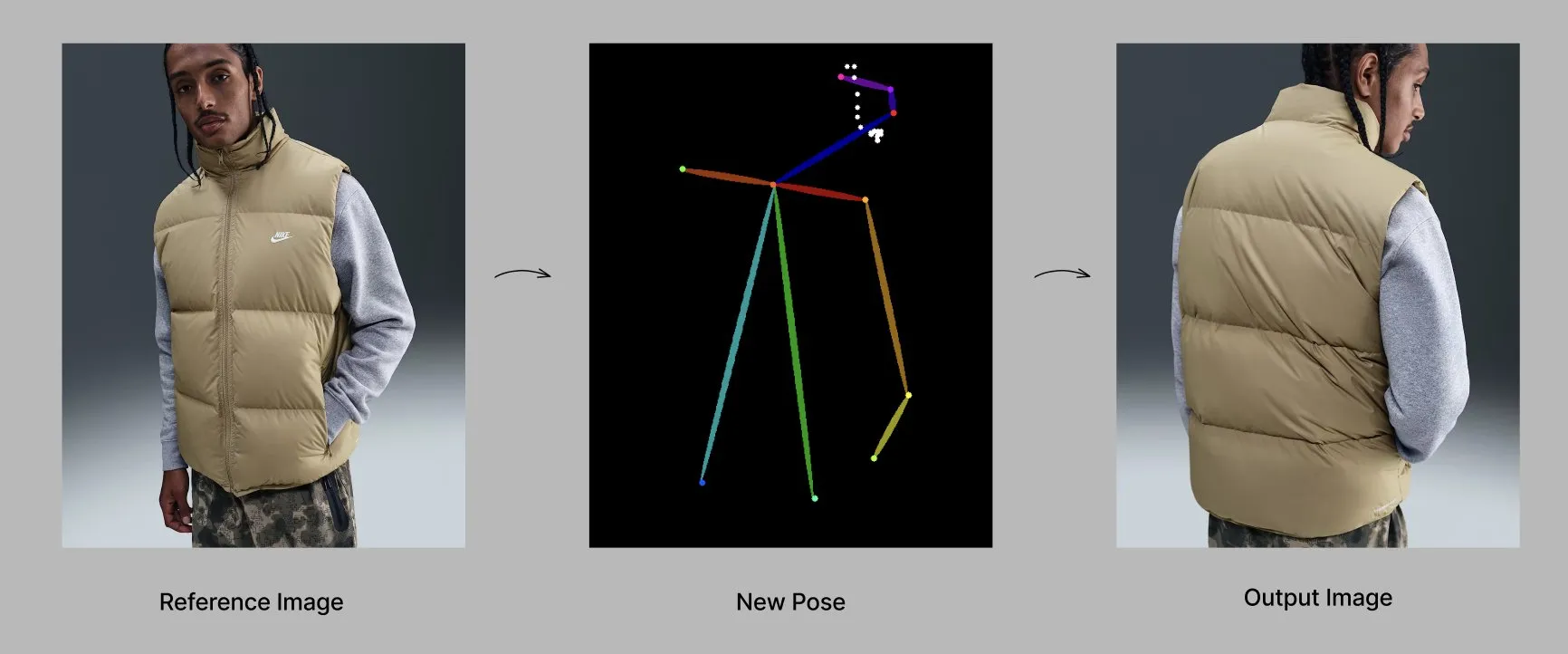

Qwen Model Introduces New Features and Applications: Alibaba’s Qwen team is gradually rolling out personalized features such as memory and custom system instructions, currently in limited testing. Concurrently, the Qwen-Image-Edit-2509 model demonstrates advanced capabilities in pose-aware fashion generation, enabling multi-angle, high-quality fashion model generation through fine-tuning. (Source: Alibaba_Qwen, Alibaba_Qwen)

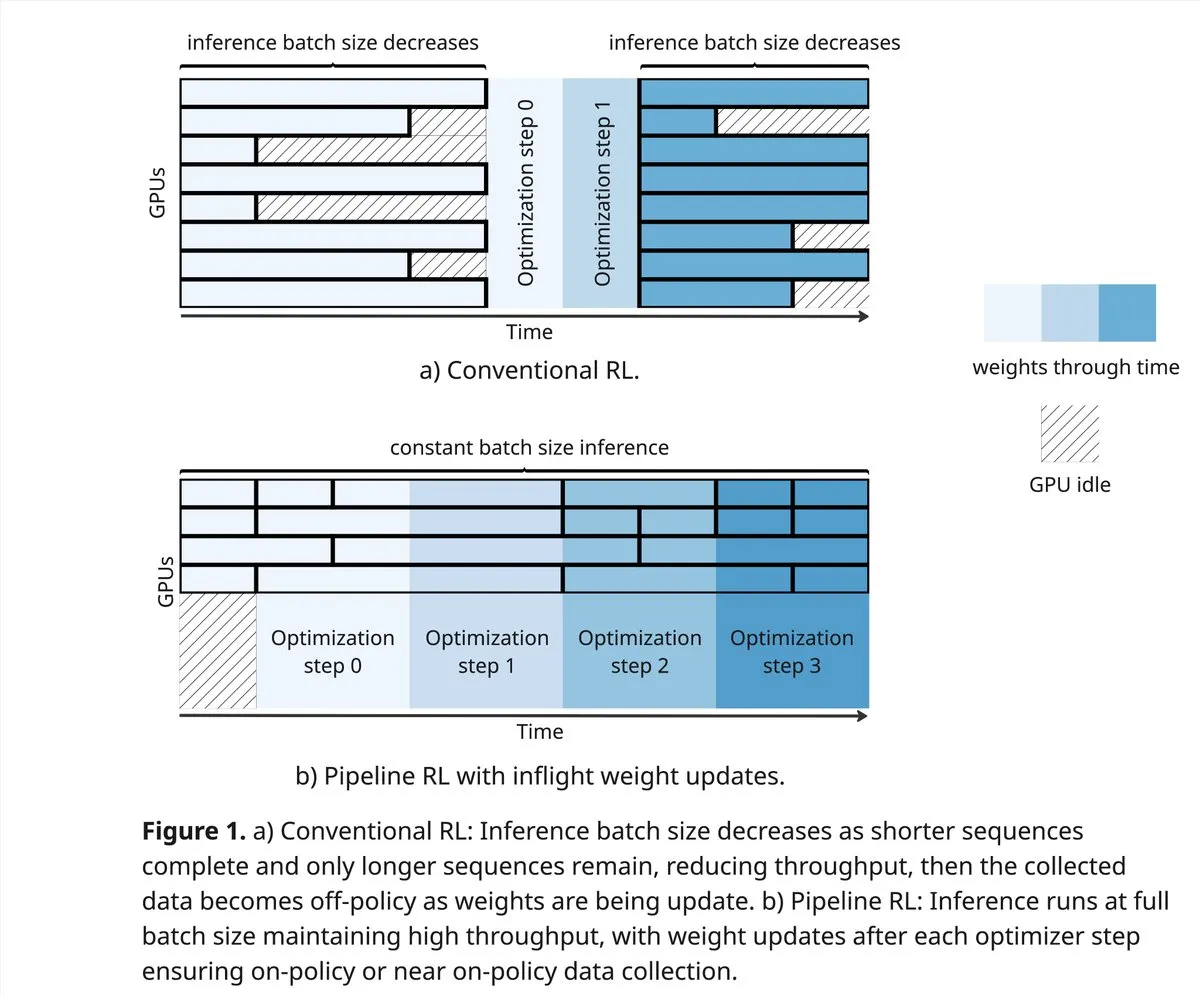

vLLM and PipelineRL Push Boundaries of RL Community: The vLLM project supports new breakthroughs in the reinforcement learning (RL) community, including better on-policy data, partial rollouts, and in-flight weight updates that mix KV caches during inference. PipelineRL achieves scalable asynchronous RL by continuing inference with changing weights and constant KV states, also supporting in-flight weight updates. (Source: vllm_project, Reddit r/LocalLLaMA)

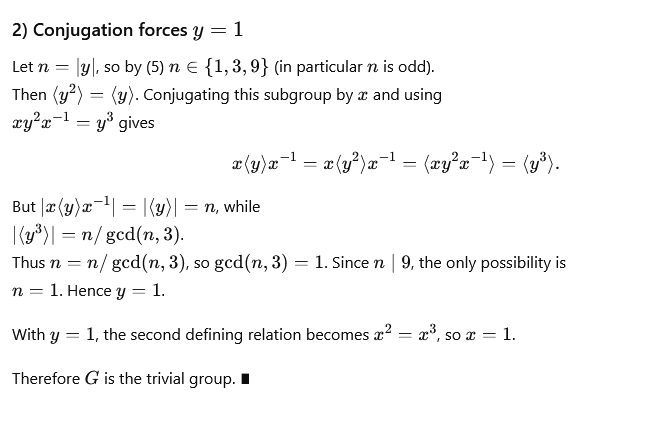

GPT-5-Pro Solves Complex Mathematical Problem: GPT-5-Pro independently solved “Yu Tsumura’s 554th problem” in 15 minutes, becoming the first model to fully complete this task, demonstrating its powerful mathematical problem-solving capabilities. (Source: Teknium1)

SAP Positions AI as Core of Enterprise Workflows: SAP plans to showcase its vision of integrating AI as the core of enterprise workflows at the Connect 2025 conference. This involves transforming real-time data into decisions through built-in AI and leveraging AI agents for proactive operations. SAP emphasizes building trust and providing active support from the outset, while ensuring localized flexibility and compliance. (Source: TheRundownAI)

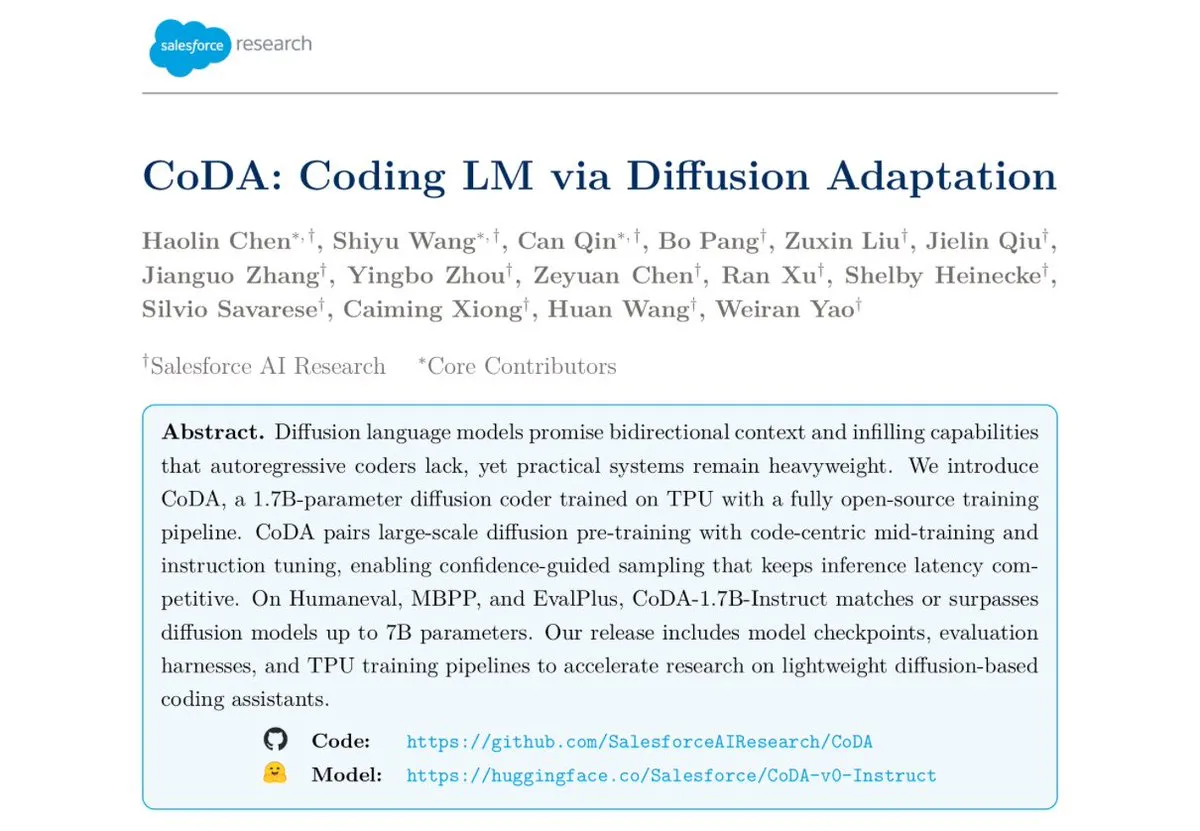

Salesforce Releases CoDA-1.7B Text Diffusion Coding Model: Salesforce Research has released CoDA-1.7B, a text diffusion model for code generation capable of bidirectional parallel token output. The model offers faster inference, with its 1.7B parameters performing comparably to 7B models, and performs well on benchmarks such as HumanEval, HumanEval+, and EvalPlus. (Source: ClementDelangue)

Google Gemini 3.0 Focuses on EQ, Intensifying Competition with OpenAI: Google is reportedly set to release Gemini 3.0, which is said to focus on “emotional intelligence” (EQ), posing a strong challenge to OpenAI. This move indicates the development of AI models in emotional understanding and interaction, signaling an escalation in competition among AI giants. (Source: Reddit r/ChatGPT)

Advancements in Robotics and Automation Technology: Innovations continue in the robotics field, including an omnidirectional mobile humanoid robot for logistics operations, an autonomous mobile robot delivery service combining robotic arms and lockers, and “Cara,” a 12-motor robot dog designed by US students using rope drives and clever mathematics. Additionally, the first “Wuji Hand” robot has been officially released. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

🧰 Tools

GPT4Free (g4f) Project Offers Free LLM and Media Generation Tools: GPT4Free (g4f) is a community-driven project aiming to integrate various accessible LLM and media generation models, providing a Python client, a local Web GUI, an OpenAI-compatible REST API, and a JavaScript client. It supports multi-provider adapters, including OpenAI, PerplexityLabs, Gemini, MetaAI, and others, and enables image/audio/video generation and media persistence, dedicated to promoting open access to AI tools. (Source: GitHub Trending)

LLM Tool Design and Prompt Engineering Best Practices: When writing tools that are easier for AI to understand, the priority order is tool definition, system instructions, and user prompts. Tool names and descriptions are crucial; they should be intuitive and clear, avoiding ambiguity. Parameters should be as few as possible, with enumerations or upper/lower bounds provided. Avoid overly nested structured parameters to improve response speed. By having the model write prompts and providing feedback, the LLM’s understanding of tools can be effectively enhanced. (Source: dotey)

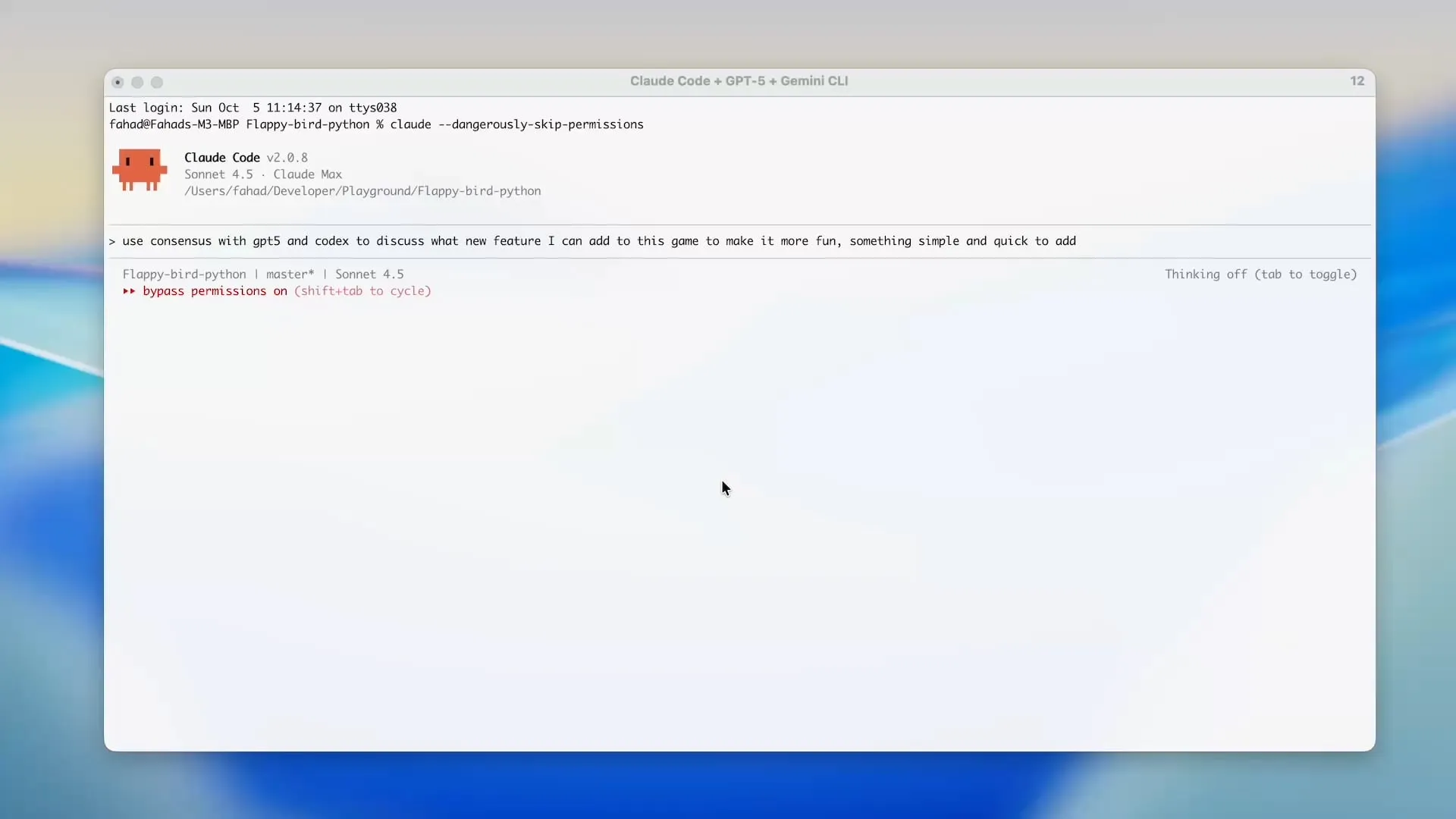
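The tool-design advice above can be illustrated with a small definition in the JSON Schema style that many function-calling APIs accept. This is a sketch only: the `search_flights` tool, its fields, and the `validate_arguments` helper are hypothetical and not tied to any particular provider.

```python
# Hypothetical tool: a clear name, a one-sentence description, few flat parameters,
# an enum for the closed choice, and explicit bounds on the numeric one.
SEARCH_FLIGHTS_TOOL = {
    "name": "search_flights",
    "description": "Search one-way flights between two airports on a given date.",
    "parameters": {
        "type": "object",
        "properties": {
            "origin": {"type": "string", "description": "IATA code of the departure airport."},
            "destination": {"type": "string", "description": "IATA code of the arrival airport."},
            "date": {"type": "string", "description": "Departure date in YYYY-MM-DD format."},
            "cabin": {"type": "string", "enum": ["economy", "business", "first"]},
            "max_results": {"type": "integer", "minimum": 1, "maximum": 20},
        },
        "required": ["origin", "destination", "date"],
    },
}


def validate_arguments(tool: dict, args: dict) -> list[str]:
    """Return a list of problems with a model-produced call; empty means valid."""
    schema = tool["parameters"]
    problems = [
        f"missing required parameter: {name}"
        for name in schema["required"]
        if name not in args
    ]
    for name, value in args.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unknown parameter: {name}")
            continue
        if "enum" in spec and value not in spec["enum"]:
            problems.append(f"{name} must be one of {spec['enum']}")
        if isinstance(value, (int, float)):
            if "minimum" in spec and value < spec["minimum"]:
                problems.append(f"{name} must be >= {spec['minimum']}")
            if "maximum" in spec and value > spec["maximum"]:
                problems.append(f"{name} must be <= {spec['maximum']}")
    return problems


print(validate_arguments(SEARCH_FLIGHTS_TOOL, {"origin": "SFO", "cabin": "premium"}))
```

Returning the problems as readable strings means they can be fed back to the model, which fits the feedback loop the item describes.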

Zen MCP Uses Gemini CLI to Save Claude Code Credits: The Zen MCP project allows users to directly use Gemini CLI within tools like Claude Code, significantly reducing Claude Code’s token usage and leveraging Gemini’s free credits. This tool supports delegating tasks between different AI models while maintaining a shared context, for example, using GPT-5 for planning, Gemini 2.5 Pro for review, Sonnet 4.5 for implementation, and then Gemini CLI for code review and unit testing, achieving efficient and economical AI-assisted development. (Source: Reddit r/ClaudeAI)

Open-source LLM Evaluation Tool Opik: Opik is an open-source LLM evaluation tool for debugging, evaluating, and monitoring LLM applications, RAG systems, and Agentic workflows. It provides comprehensive tracing, automated evaluation, and production-ready dashboards, helping developers better understand and optimize their AI models. (Source: dl_weekly)

Claude Sonnet 4.5 Excels at Writing Tampermonkey Scripts: Claude Sonnet 4.5 demonstrates excellent capability in writing Tampermonkey scripts; users can change the theme of Google AI Studio with just one prompt, showcasing its powerful ability in automating browser operations and user interface customization. (Source: Reddit r/ClaudeAI)

Local Deployment of Phi-3-mini Model: A user is seeking to deploy a Phi-3-mini-4k-instruct-bnb-4bit model, fine-tuned using Unsloth on Google Colab, to a local machine. The model can extract summaries and parse fields from text, and the deployment goal is to read text from a DataFrame locally, process it with the model, and save the output to a new DataFrame, even in a low-configuration environment with integrated graphics and 8GB RAM. (Source: Reddit r/MachineLearning)

LLM Backend Performance Comparison: The community discusses the performance of current LLM backend frameworks, with vLLM, llama.cpp, and ExLlama3 considered the fastest options, while Ollama is deemed the slowest. vLLM excels at handling multiple concurrent chats, llama.cpp is favored for its flexibility and broad hardware support, and ExLlama3 offers extreme performance for NVIDIA GPUs but has limited model support. (Source: Reddit r/LocalLLaMA)

‘solveit’ Tool Helps Programmers Tackle AI Challenges: Addressing the frustration programmers might experience when using AI, Jeremy Howard has launched the “solveit” tool. This tool aims to help programmers utilize AI more effectively, avoid being led astray by AI, and enhance their coding experience and efficiency. (Source: jeremyphoward)

📚 Learning

Stanford and NVIDIA Collaborate to Advance Embodied AI Benchmarking: Stanford University and NVIDIA will co-host a live stream to delve into BEHAVIOR, a large-scale benchmark and challenge designed to advance embodied AI. Discussions will cover BEHAVIOR’s motivation, upcoming challenge designs, and the role of simulation in driving robotics research. (Source: drfeifei)

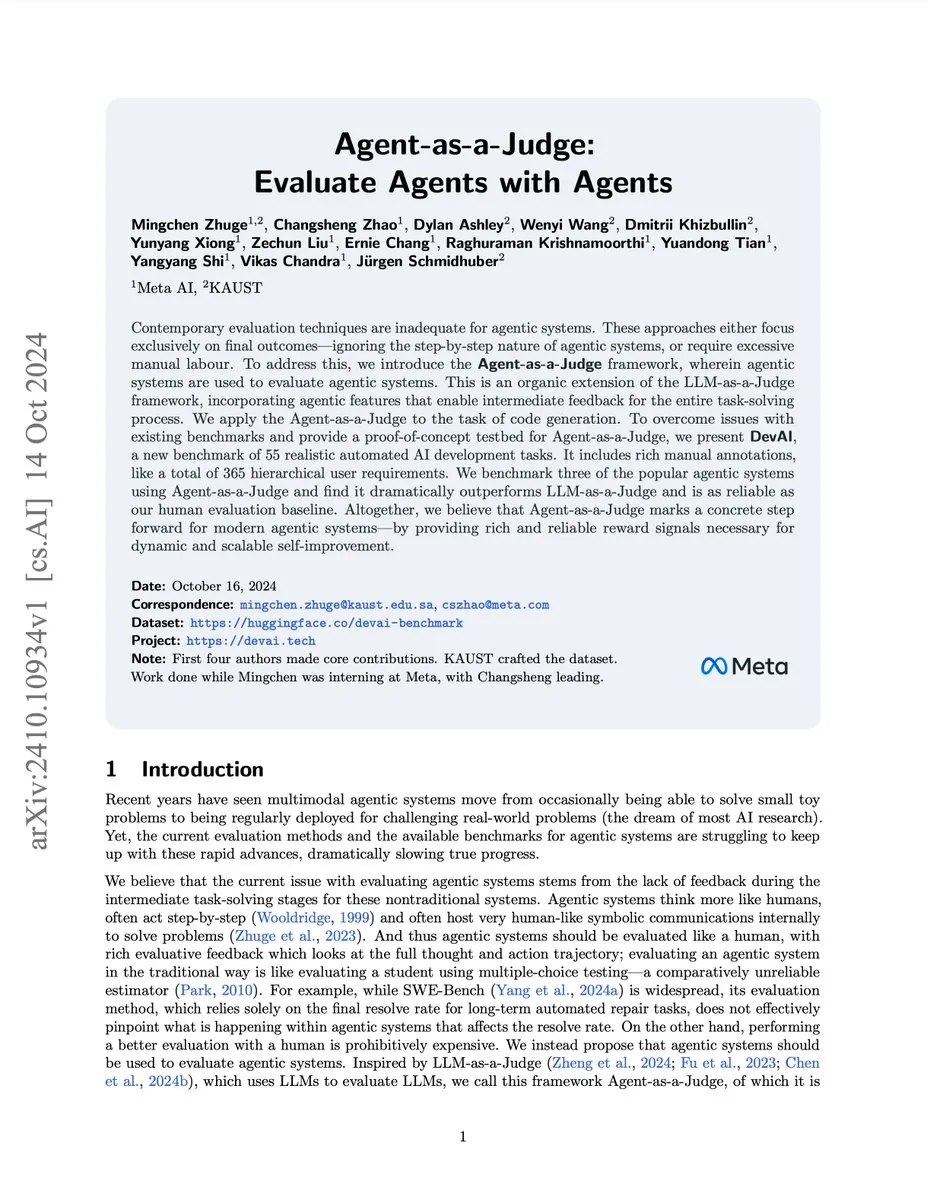

Agent-as-a-Judge Paper on AI Agent Evaluation Released: A new paper titled “Agent-as-a-Judge” proposes a proof-of-concept method for evaluating AI agents using other AI agents, which can reduce costs and time by 97% and provide rich intermediate feedback. The study also developed the DevAI benchmark, comprising 55 automated AI development tasks, demonstrating that Agent-as-a-Judge not only outperforms LLM-as-a-Judge but also approaches human evaluation in efficiency and accuracy. (Source: SchmidhuberAI, SchmidhuberAI)

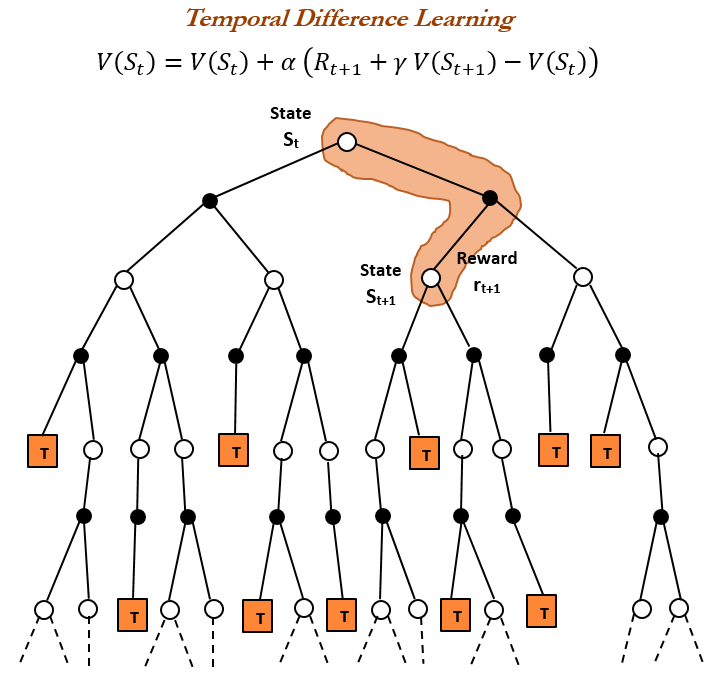

History of Reinforcement Learning (RL) and Temporal Difference (TD) Learning: A review of reinforcement learning history highlights that Temporal Difference (TD) learning is the foundation of modern RL algorithms (such as deep Actor-Critic). TD learning allows agents to learn in uncertain environments by comparing successive predictions and gradually updating to minimize prediction errors, leading to faster and more accurate predictions. Its advantages include avoiding being misled by rare outcomes, saving memory and computation, and applicability to real-time scenarios. (Source: TheTuringPost, TheTuringPost, gabriberton)

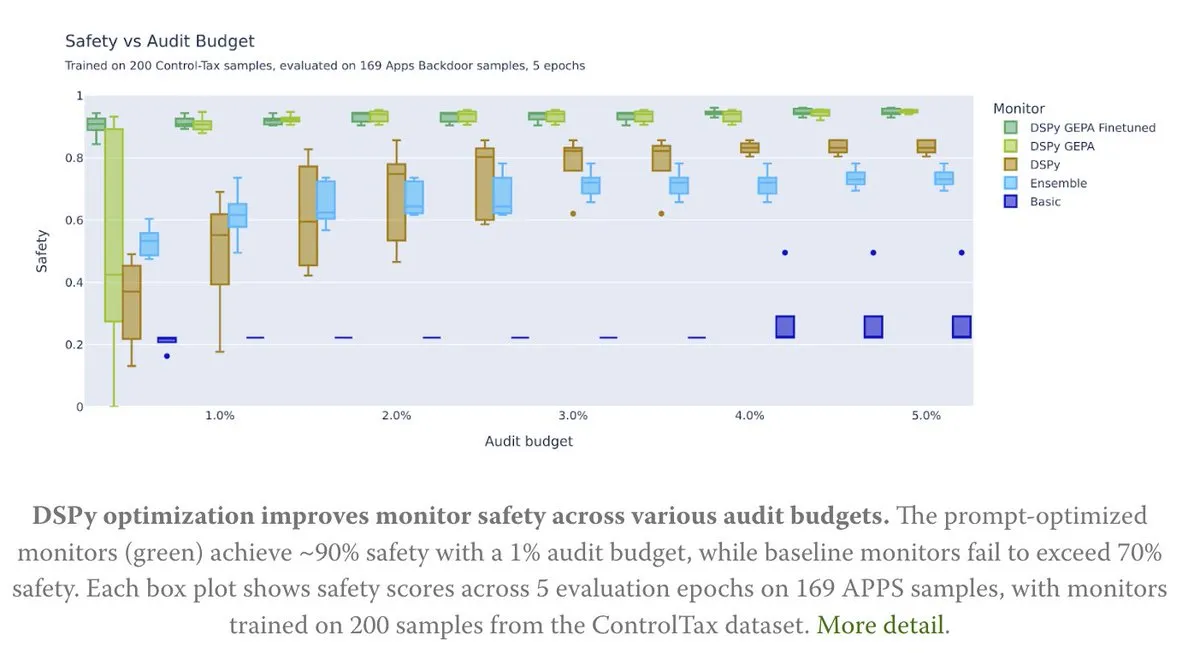
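The "comparing successive predictions" step in the TD learning item is the TD(0) update, V(s) ← V(s) + α [r + γ V(s′) − V(s)]. Below is a minimal sketch on a made-up three-state chain; the state names, reward, and step size are illustrative assumptions, not taken from the review.

```python
def td0_update(values, state, reward, next_state, alpha=0.5, gamma=1.0):
    """Apply one TD(0) update in place and return the TD error."""
    # Difference between the bootstrapped target and the current prediction.
    td_error = reward + gamma * values[next_state] - values[state]
    values[state] += alpha * td_error
    return td_error


def run_chain(episodes=100, alpha=0.5):
    """Toy deterministic chain A -> B -> terminal, with reward 1 on the last step."""
    values = {"A": 0.0, "B": 0.0, "terminal": 0.0}
    for _ in range(episodes):
        td0_update(values, "A", 0.0, "B", alpha)         # A learns from B's prediction
        td0_update(values, "B", 1.0, "terminal", alpha)  # B learns from the real reward
    return values


print(run_chain())
```

Each state is updated as soon as its successor is observed, with no need to store the whole episode, which is the source of the memory and real-time advantages mentioned above.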

Prompt Optimization Empowers AI Control Research: A new article explores how prompt optimization can aid AI control research, particularly through DSPy’s GEPA (Genetic-Pareto) optimizer, achieving an AI safety rate of up to 90%, compared to a baseline of only 70%. This indicates the potential of well-designed prompts in enhancing AI safety and controllability. (Source: lateinteraction, lateinteraction)

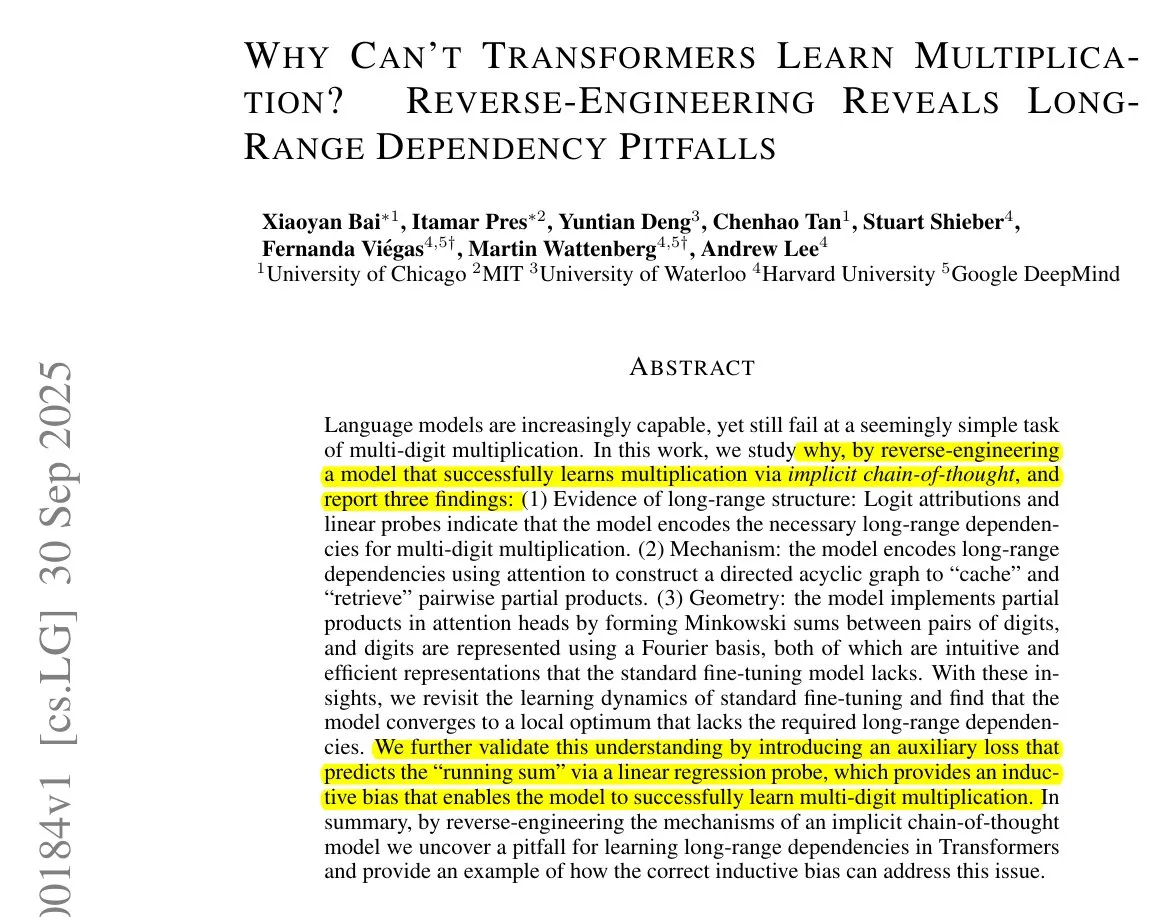

Transformer Learning Algorithms and CoT: Francois Chollet points out that while Transformers can be taught to execute simple algorithms by providing precise step-by-step algorithms via CoT (Chain of Thought) tokens during training, the true goal of machine learning should be to “discover” algorithms from input/output pairs, rather than merely memorizing externally provided ones. He argues that if an algorithm already exists, executing it directly is more efficient than training a Transformer to inefficiently encode it. (Source: fchollet)

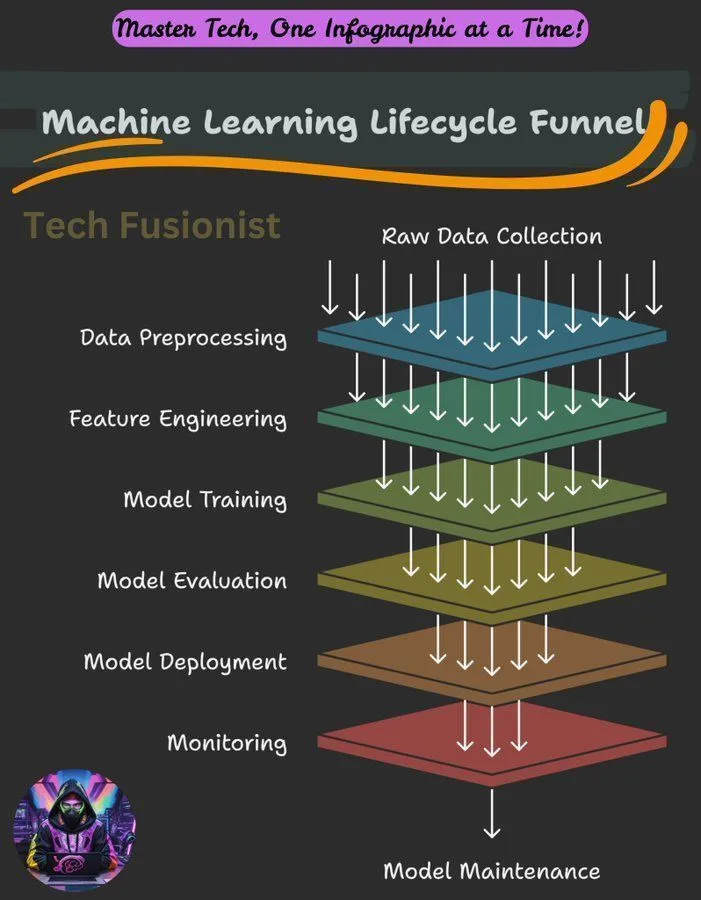

Overview of Machine Learning Lifecycle: The machine learning lifecycle encompasses various stages from data collection, preprocessing, model training, and evaluation to deployment and monitoring, serving as a critical framework for building and maintaining ML systems. (Source: Ronald_vanLoon)

Negative Log-Likelihood (NLL) Optimization Objective in LLM Inference: A study investigates whether Negative Log-Likelihood (NLL) as an optimization objective for classification and Supervised Fine-Tuning (SFT) is universally optimal. The research analyzes conditions under which alternative objectives might outperform NLL, suggesting that this depends on the prior tendencies of the objective and model capabilities, offering new perspectives for LLM training optimization. (Source: arankomatsuzaki)
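For reference, the objective under discussion can be written in a few lines. This is a plain-Python sketch of standard NLL over a softmax, not code from the study; the function names are illustrative.

```python
import math


def token_nll(logits, target):
    """Negative log-probability of `target` under softmax(logits)."""
    peak = max(logits)  # subtract the max before exponentiating for numerical stability
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_norm - logits[target]


def mean_nll(logit_rows, targets):
    """Average NLL over a sequence, the usual classification and SFT loss."""
    losses = [token_nll(row, t) for row, t in zip(logit_rows, targets)]
    return sum(losses) / len(losses)


# A uniform two-way prediction costs log(2) nats per token.
print(mean_nll([[0.0, 0.0], [0.0, 0.0]], [0, 1]))
```

The loss depends only on the probability assigned to the reference token. That property is what makes NLL the natural baseline for the alternative objectives the study compares.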

Machine Learning Beginner’s Guide: The Reddit community shared a brief guide on how to learn machine learning, emphasizing gaining practical understanding through exploration and building small projects, rather than just theoretical definitions. The guide also outlines the mathematical foundations of deep learning and encourages beginners to practice using existing libraries. (Source: Reddit r/deeplearning, Reddit r/deeplearning)

Issues Training Vision Models on Text-Only Datasets: A user encountered errors when fine-tuning the LLaMA 3.2 11B Vision Instruct model on a text-only dataset using the Axolotl framework, aiming to improve its instruction-following capabilities while retaining multimodal input processing. The errors involve the processor_type setting and the is_causal attribute, indicating that configuration and model architecture compatibility are challenges when adapting vision models for text-only training. (Source: Reddit r/MachineLearning)

Distributed Training Course Sharing: The community shared a course on distributed training, designed to help students master the tools and algorithms used daily by experts, extending training beyond a single H100 and delving into the world of distributed training. (Source: TheZachMueller)

Roadmap for Mastering Agentic AI Stages: A roadmap exists for mastering different stages of Agentic AI, providing a clear path for developers and researchers to progressively understand and apply AI agent technology, thereby building smarter, more autonomous systems. (Source: Ronald_vanLoon)

💼 Business

NVIDIA Becomes First Public Company to Reach $4 Trillion Market Cap: NVIDIA’s market capitalization has reached $4 trillion, making it the first public company to achieve this milestone. This accomplishment reflects its leadership in AI chips and related technologies, as well as its continuous investment and funding in neural network research. (Source: SchmidhuberAI, SchmidhuberAI, SchmidhuberAI)

Replit Ranks Third Among AI-Native Application Layer Companies: According to Mercury’s transaction data analysis, Replit ranks third among AI-native application layer companies and ahead of all other development tools, demonstrating its strong growth and market recognition in the AI development space. This achievement has also been affirmed by investors. (Source: amasad)

CoreWeave Offers AI Storage Cost Optimization Solutions: CoreWeave is hosting a webinar to discuss how to reduce AI storage costs by up to 65% without compromising innovation speed. The webinar will reveal why 80% of AI data is inactive and how CoreWeave’s next-generation object storage ensures full GPU utilization and predictable budgets, looking ahead to the future development of AI storage. (Source: TheTuringPost)

🌟 Community

LLM Capability Limits, Understanding Standards, and Continual Learning Challenges: The community discusses the shortcomings of LLMs in performing agent tasks, believing their capabilities are still insufficient. Disagreements exist regarding the standards for “understanding” in LLMs and the human brain, with some arguing that our current understanding of LLMs remains at a low level. Richard Sutton, widely regarded as a father of reinforcement learning, believes LLMs have not yet achieved continual learning, emphasizing online learning and adaptability as key to future AI development. (Source: teortaxesTex, teortaxesTex, aiamblichus, dwarkesh_sp)

Mainstream LLM Product Strategies, User Experience, and Model Behavior Controversies: Anthropic’s brand image and user experience sparked heated discussion; its “thinking space” initiative was well-received, but controversies exist regarding GPU resource allocation, Sonnet 4.5 (criticized for being less effective at finding bugs than Opus 4.1 and having a “nanny-like” style), and declining user experience under high valuation (e.g., Claude usage limits). ChatGPT has comprehensively tightened NSFW content generation, causing user dissatisfaction. The community calls for AI features to be selectively opt-in rather than default, to respect user autonomy. (Source: swyx, vikhyatk, shlomifruchter, Dorialexander, scaling01, sammcallister, kylebrussell, raizamrtn, Reddit r/ClaudeAI, Reddit r/ClaudeAI, Reddit r/ClaudeAI, Reddit r/LocalLLaMA, Reddit r/ChatGPT, qtnx_)

AI Ecosystem Challenges, Open-Source Model Controversies, and Public Perception: NIST’s evaluation of DeepSeek model safety sparked concerns about the credibility of open-source models and potential bans on Chinese models, but the open-source community largely supports DeepSeek, arguing that its “unsafe” rating actually means it’s more amenable to user instructions. Google’s Search API changes affect the AI ecosystem’s reliance on third-party data. Setting up local LLM development environments faces high hardware costs and maintenance challenges. AI model evaluation exhibits a “moving target” phenomenon, and public opinion on AI-generated content (e.g., Taylor Swift using AI videos) raises quality and ethical debates. (Source: QuixiAI, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA, dotey, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA, Reddit r/artificial, Reddit r/artificial)

Impact of AI on Employment and Professional Services: Economists may be severely underestimating AI’s impact on the job market; AI will not fully replace professional services but rather “fragment” them. The advent of AI may lead to the disappearance of some jobs but will also create new opportunities, requiring people to continuously learn and adapt. The community generally believes that jobs requiring empathy, judgment, or trust (such as healthcare, psychological counseling, education, law) and individuals capable of leveraging AI to solve problems will be more competitive. (Source: Ronald_vanLoon, Ronald_vanLoon, Reddit r/ArtificialInteligence)

AI Programming Analogous to Tech Management: The community discusses comparing AI programming to tech management, emphasizing that developers need to act like Engineering Managers (EMs): clearly understanding requirements, participating in design, breaking down tasks, controlling quality (reviewing and testing AI code), and promptly updating models. While AI lacks initiative, it eliminates the complexities of managing interpersonal relationships. (Source: dotey)

AI Hallucinations and Real-World Risks: The phenomenon of AI hallucinations raises concerns, with reports of AI directing tourists to non-existent dangerous landmarks, posing safety risks. This highlights the importance of AI information accuracy, especially in applications involving real-world safety, necessitating stricter verification mechanisms. (Source: Reddit r/artificial)

AI Ethics and Human Reflection: The community discusses whether AI can make humans more humane. The view is that technological progress does not necessarily lead to moral improvement, and human moral progress often comes at a great cost. AI itself will not magically awaken human conscience; true change stems from self-reflection and the awakening of humanity when faced with horror. Critics point out that companies, when promoting AI tools, often overlook the risk of these tools being misused for inhumane acts. (Source: Reddit r/artificial)

Issues with AI Application in Education: A middle school teacher used AI to generate exam questions, and the AI fabricated an ancient poem, including it as a test question. This exposes the “hallucination” problem that AI may have when generating content, especially in educational fields requiring factual accuracy, where robust review and verification mechanisms for AI-generated content are crucial. (Source: dotey)

AI Model Progress and Data Bottlenecks: The community points out that the main bottleneck in current AI model progress lies in data, with the most difficult parts being data orchestration, context enrichment, and deriving correct decisions from it. This emphasizes the importance of high-quality, structured data for AI development and the challenges of data management in model training. (Source: TheTuringPost)

LLM Computational Energy Consumption and Value Trade-offs: The community discusses the enormous energy consumption of AI (especially LLMs), with some calling it “evil,” but others argue that AI’s contributions to problem-solving and cosmic exploration far outweigh its energy consumption, deeming it short-sighted to hinder AI development. This reflects the ongoing debate about the trade-off between AI development and environmental impact. (Source: timsoret)

💡 Other

AI+IoT Gold ATM: An ATM combining AI and IoT technology can accept gold as a medium for transactions. This is an application of AI at the intersection of finance and IoT, and while relatively niche, it demonstrates AI’s potential in specific scenarios. (Source: Ronald_vanLoon)

Z.ai Chat CPU Server Attacked, Causing Interruption: The Z.ai Chat service experienced a temporary interruption due to a CPU server attack, and the team is working on a fix. This highlights the challenges AI services face in infrastructure security and stability, as well as the potential impact of DDoS or other cyberattacks on AI platform operations. (Source: Zai_org)

Apache Gravitino: Open Data Catalog and AI Asset Management: Apache Gravitino is a high-performance, geo-distributed, and federated metadata lake designed to unify the management of metadata from different sources, types, and regions. It provides unified metadata access, supports data and AI asset governance, and is developing AI model and feature tracking capabilities, poised to become a key infrastructure for AI asset management. (Source: GitHub Trending)