Keywords:NVIDIA, AI factory, AI infrastructure, OpenAI, GPTgate, Siri, AI cloud market, NVIDIA AI capacity transformation, OpenAI model switching controversy, Apple Siri AI upgrade, China AI cloud market competition, AI talent war

🔥 Spotlight

NVIDIA Reshaping the AI Industry: From Selling Chips to “Selling AI Capacity” : NVIDIA CEO Jensen Huang stated in a recent interview that the era of general-purpose computing has ended, and AI demand is experiencing double exponential growth, with inference demand projected to grow a billionfold. NVIDIA is transforming from a chip supplier into an “AI infrastructure partner,” collaborating with companies like OpenAI to build 10GW-scale “AI factories” and offering “extreme co-design” from chips to software, systems, and networks to achieve the highest performance per watt. He emphasized that this full-stack optimization capability is a core competitive barrier, enabling NVIDIA to dominate the AI industrial revolution and potentially expand the AI infrastructure market from $400 billion to a trillion-dollar scale. (Source: 36氪, 36氪, Reddit r/artificial)
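The focus on performance per watt follows from simple arithmetic: when a facility's power supply is the binding constraint, its total output is power multiplied by efficiency. The sketch below assumes a fixed 10 GW budget and uses tokens per second per watt as the efficiency metric; both efficiency values are invented placeholders, not NVIDIA figures.

```python
# Illustrative only: the efficiency values are hypothetical placeholders, not NVIDIA data.

def factory_throughput(power_watts: float, tokens_per_sec_per_watt: float) -> float:
    """Tokens per second that a power-capped AI factory can serve."""
    return power_watts * tokens_per_sec_per_watt

FACTORY_POWER_W = 10e9  # a 10 GW facility, the scale cited for the OpenAI build-out

baseline = factory_throughput(FACTORY_POWER_W, tokens_per_sec_per_watt=1.0)
codesigned = factory_throughput(FACTORY_POWER_W, tokens_per_sec_per_watt=2.0)

# With power fixed, doubling performance per watt doubles total output.
print(codesigned / baseline)  # 2.0
```

Because the power budget cannot easily grow, efficiency gains from co-designing chips, systems, and networks translate directly into more served tokens.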

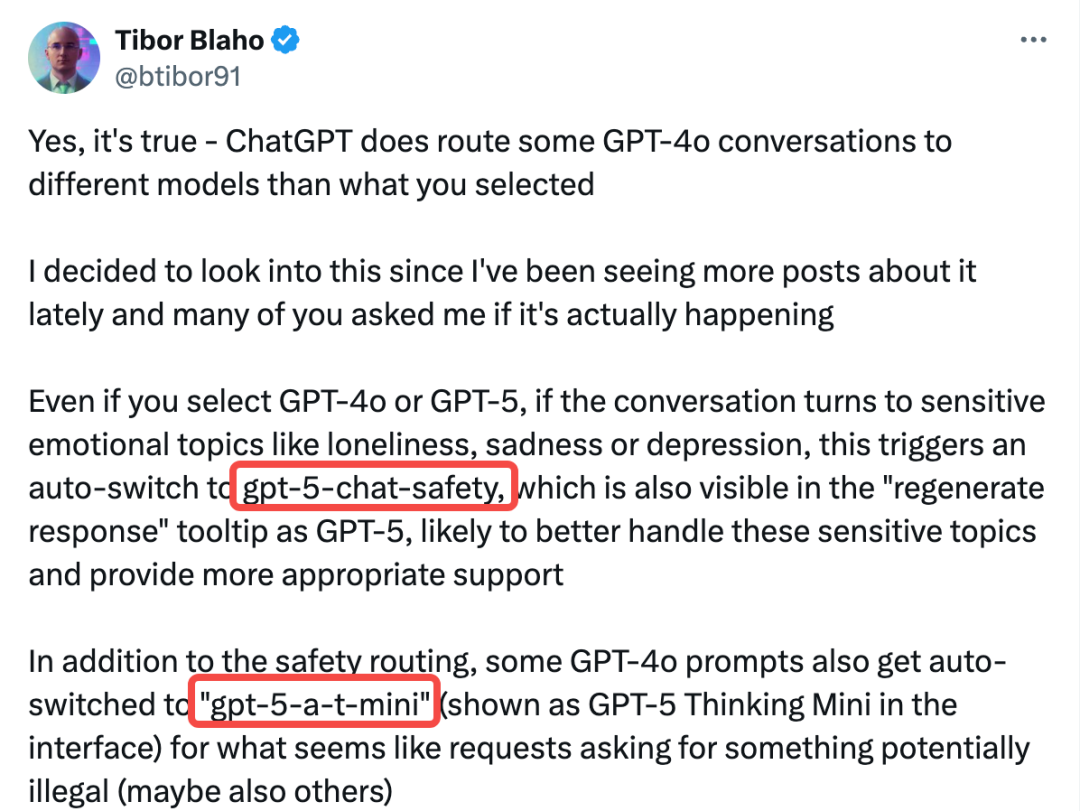

OpenAI’s “GPTgate” Incident Explodes: Paid Users Secretly Downgraded and Models Switched : OpenAI is accused of secretly routing user conversations to undisclosed “safety” models (gpt-5-chat-safety and gpt-5-a-t-mini), especially when emotional or sensitive content is detected. The behavior, confirmed by AIPRM’s lead engineer and echoed in widespread user reports, meant that users were moved to different, weaker-performing models without their knowledge or consent. Although an OpenAI VP described it as a temporary safety test, the move raised serious questions about transparency, user autonomy, and potentially fraudulent practices, prompting many users to cancel their subscriptions and call for “AI user rights.” (Source: 36氪, 36氪, Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ChatGPT)

Apple Betting on Siri’s Rebirth in 2026: System-Level AI and Third-Party Model Integration : Apple is comprehensively overhauling Siri, using an internal ChatGPT-like app called “Veritas” to test a new system codenamed “Linwood,” with the goal of context-aware conversations and deep interaction with apps. Code in the iOS 26.1 beta shows that Apple is adding MCP (Model Context Protocol) support to App Intents, which would let compatible AI models such as ChatGPT and Claude interact directly with apps on the Mac, iPhone, and iPad. This marks Apple’s shift from “full-stack in-house development” to a “platform-oriented approach,” accelerating its AI ecosystem by integrating third-party models while maintaining privacy and a consistent user experience. The new Siri is expected to debut in early 2026. (Source: 36氪, 36氪)

Hinton’s Prediction Falls Flat: AI Hasn’t Replaced Radiologists; It’s Made Them Busier : In 2016, AI pioneer Geoffrey Hinton predicted that AI would replace radiologists within five years and suggested that we should stop training them. Nearly a decade later, however, both the number of radiologists in the US and their average annual salary (as high as $520,000) have reached record highs. Key reasons include AI performing worse in real clinical settings than on benchmarks, legal and regulatory hurdles, and the fact that AI covers only a small fraction of a radiologist’s work. This reflects the Jevons paradox: by making image interpretation more efficient, AI has increased demand for doctors to supervise, communicate, and perform non-diagnostic tasks, making them busier rather than replacing them. (Source: 36氪)

🎯 Trends

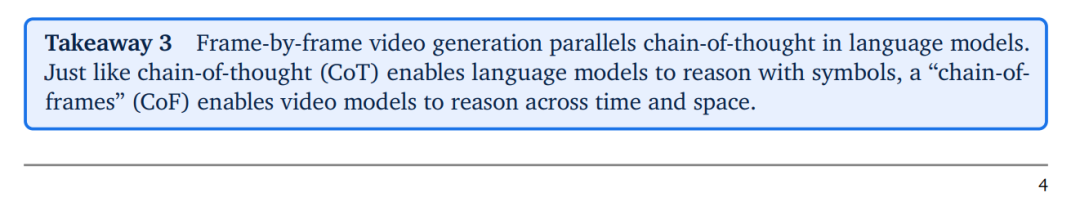

DeepMind Veo 3 Introduces “Chain of Frames” Concept, Advancing General Visual Understanding in Video Models : DeepMind’s Veo 3 video model introduces the “Chain of Frames” (CoF) concept, analogous to the Chain of Thought (CoT) used by Large Language Models, enabling zero-shot visual reasoning. Veo 3 demonstrates strong capabilities in perceiving, modeling, and manipulating the visual world and could become a general-purpose foundation model for machine vision. The researchers predict that as model capabilities rapidly improve and costs fall, “generalists will replace specialists” among video models, heralding a new phase of rapid development in video generation and understanding. (Source: 36氪, shlomifruchter, scaling01, Reddit r/artificial)

ChatGPT Pulse Launched: AI Shifts from Passive Q&A to Proactive Service : OpenAI has launched the “Pulse” feature for ChatGPT Pro users, marking ChatGPT’s evolution from a passive Q&A tool into a personal assistant that proactively anticipates user needs. Pulse runs “asynchronous research” overnight and delivers a personalized daily briefing each morning, drawing on the user’s chat history, memory, and connected apps such as Gmail and Google Calendar. This is a significant move by OpenAI into agentic AI, aiming to let AI assistants understand user goals and proactively offer help without being prompted, pioneering a new paradigm for human-computer interaction. (Source: 36kr)

Richard Sutton, Father of Reinforcement Learning: Large Language Models Are the Wrong Starting Point : In an interview, Richard Sutton, the father of Reinforcement Learning, proposed that Large Language Models (LLMs) are the wrong starting point for true intelligence. He believes that true intelligence stems from “experiential learning,” i.e., continuously correcting behavior through action, observation, and feedback to achieve goals. In contrast, LLMs’ predictive capabilities are merely imitations of human behavior, lacking independent goals and the ability to be “surprised” by changes in the external world. This perspective has sparked a profound debate on the development path of AGI (Artificial General Intelligence), questioning the current LLM-dominated AI paradigm. (Source: 36kr, paul_cal, algo_diver, scaling01, rao2z, bookwormengr, BlackHC, rao2z)
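The “experiential learning” loop Sutton describes (act, observe, correct) can be illustrated with a toy multi-armed bandit. This is a generic textbook sketch chosen for illustration, not Sutton’s own algorithm: the agent starts with no knowledge, and its estimates change only through the gap between what it expected and what it observed, a crude stand-in for being “surprised.” The reward values and noise level are arbitrary assumptions.

```python
import random

def run_agent(true_rewards, steps=500, epsilon=0.1, seed=0):
    """Toy epsilon-greedy agent that learns only from the feedback of its own actions."""
    rng = random.Random(seed)
    n = len(true_rewards)
    estimates = [0.0] * n  # the agent's current beliefs about each action's value
    counts = [0] * n
    for _ in range(steps):
        # Act: usually exploit the best-looking action, occasionally explore.
        if rng.random() < epsilon:
            action = rng.randrange(n)
        else:
            action = max(range(n), key=lambda a: estimates[a])
        # Observe: the environment returns a noisy reward the agent cannot predict exactly.
        reward = true_rewards[action] + rng.gauss(0, 0.1)
        # Correct: the prediction error ("surprise") drives an incremental-mean update.
        counts[action] += 1
        surprise = reward - estimates[action]
        estimates[action] += surprise / counts[action]
    return estimates, counts

estimates, counts = run_agent([0.2, 1.0])
print(estimates, counts)
```

Unlike next-token prediction on a fixed corpus, nothing here is imitated: the agent discovers that the second action is better only by trying it and being surprised by the result.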

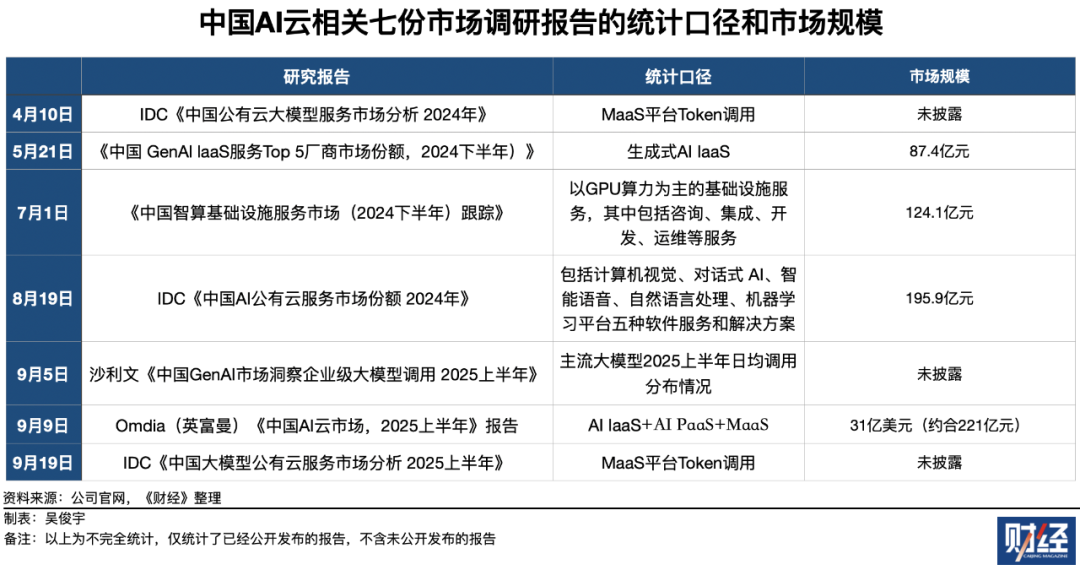

China’s AI Cloud Market Competition Intensifies: Alibaba Cloud Leads, Volcengine Rapidly Catches Up : China’s “AI Cloud” market is fiercely competitive in 2025. Alibaba Cloud maintains its lead in overall revenue scale, covering AI IaaS, PaaS, and MaaS. However, ByteDance’s Volcengine dominates the MaaS (Model-as-a-Service) Token invocation market, with a market share approaching half, and is becoming Alibaba Cloud’s biggest competitor with astonishing growth. Baidu AI Cloud, meanwhile, is tied with Alibaba Cloud for first place in the AI public cloud services (software products and solutions) market. The market shows a multi-dimensional competitive landscape, with Token invocation volume growing exponentially, signaling immense growth potential and a reshaping of the future AI cloud market. (Source: 36氪)

AI Talent War Escalates: High Salaries and H-1B Visa Challenges Coexist : The AI talent market remains red-hot. Xpeng Motors announced plans to recruit over 3,000 new graduates in 2026, with top annual salaries reaching 1.6 million RMB and no upper limit for exceptional candidates. Meta is offering total compensation packages exceeding $200 million to attract top AI talent, while NVIDIA and OpenAI are also competing for talent through acquisitions and equity incentives. Meanwhile, tighter US H-1B visa policies (such as a new $100,000 fee) make it harder for highly skilled foreign workers to stay in Silicon Valley, raising concerns among tech giants about talent drain and highlighting how fierce and complex the global competition for AI talent has become. (Source: 36kr, 36kr)

🧰 Tools

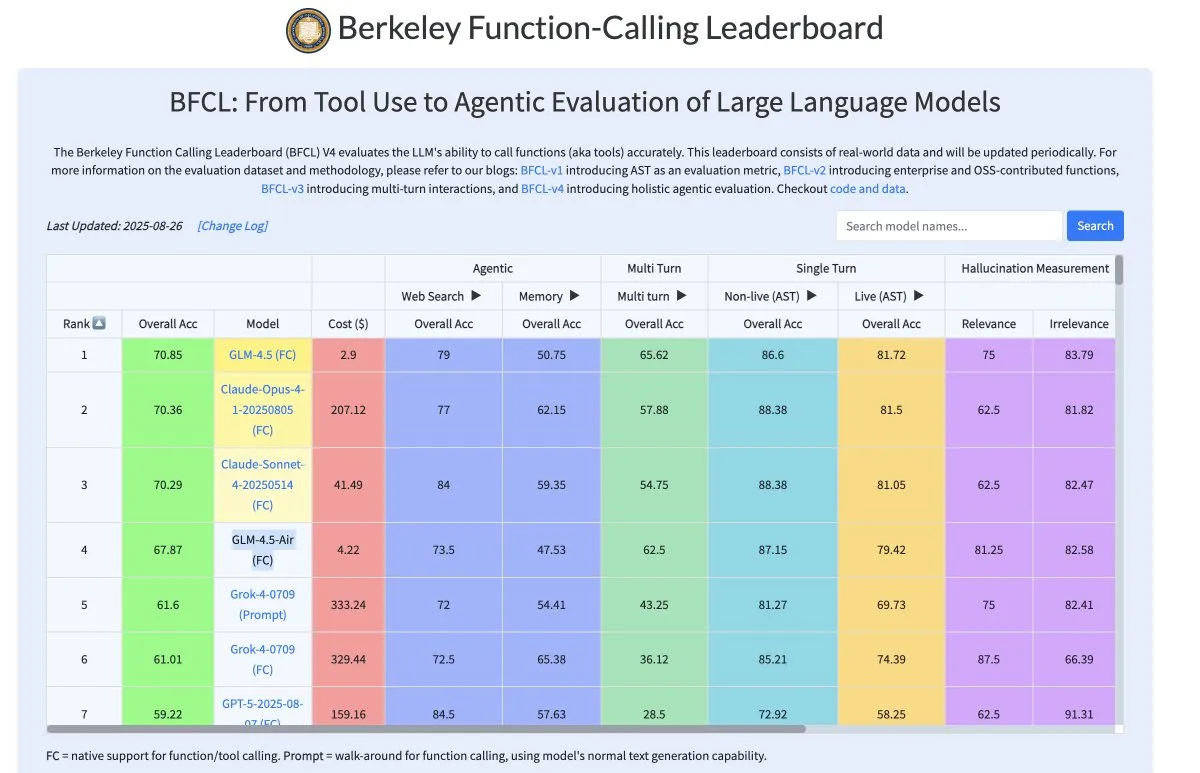

Zhipu AI GLM-4.5-Air: High-Performance, Cost-Effective Tool-Calling Model : Zhipu AI’s GLM-4.5-Air model (106B parameters, 12B active parameters) excels in tool calling, approaching Claude 4’s level but at 90% lower cost. The model significantly reduces hallucinations during the inference phase, improving the reliability of tool calls and making deep research workflows more stable and efficient, thus providing developers with a cost-effective LLM solution. (Source: bookwormengr)

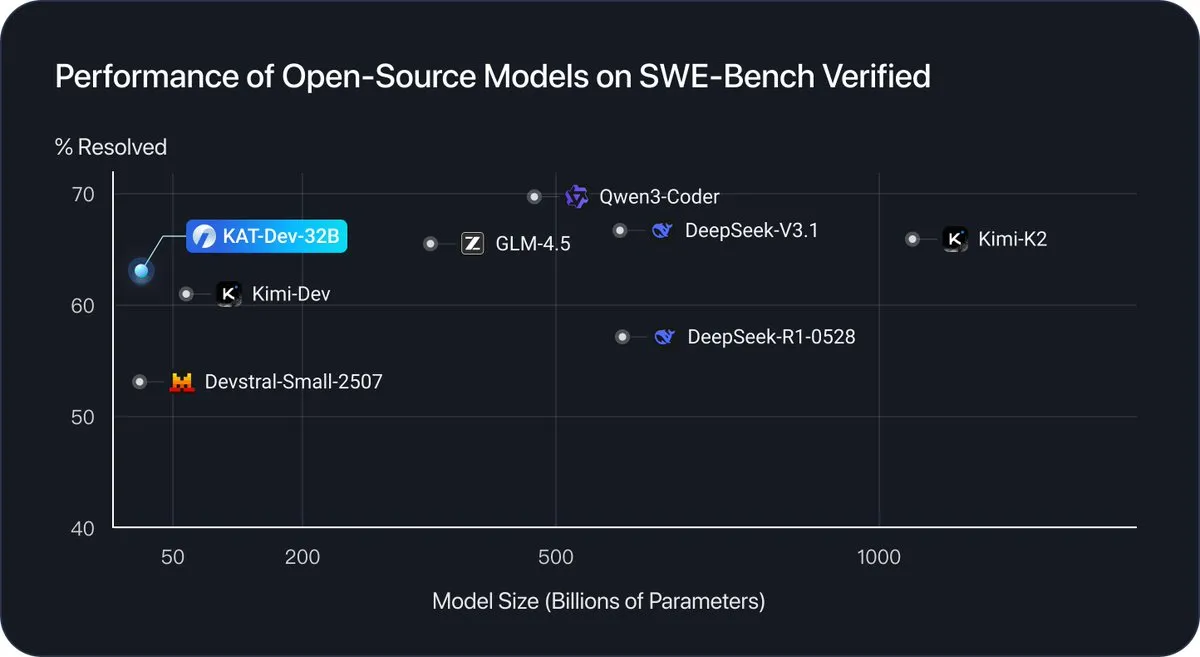

KAT-Dev-32B: A 32B-Parameter Model Designed for Software Engineering Tasks : KAT-Dev-32B is a 32B-parameter model focused on software engineering tasks. It achieved a 62.4% resolution rate on the SWE-Bench Verified benchmark, ranking fifth among open-source models of all sizes and demonstrating significant progress by open-source LLMs in code generation, debugging, and development workflows. (Source: _akhaliq)

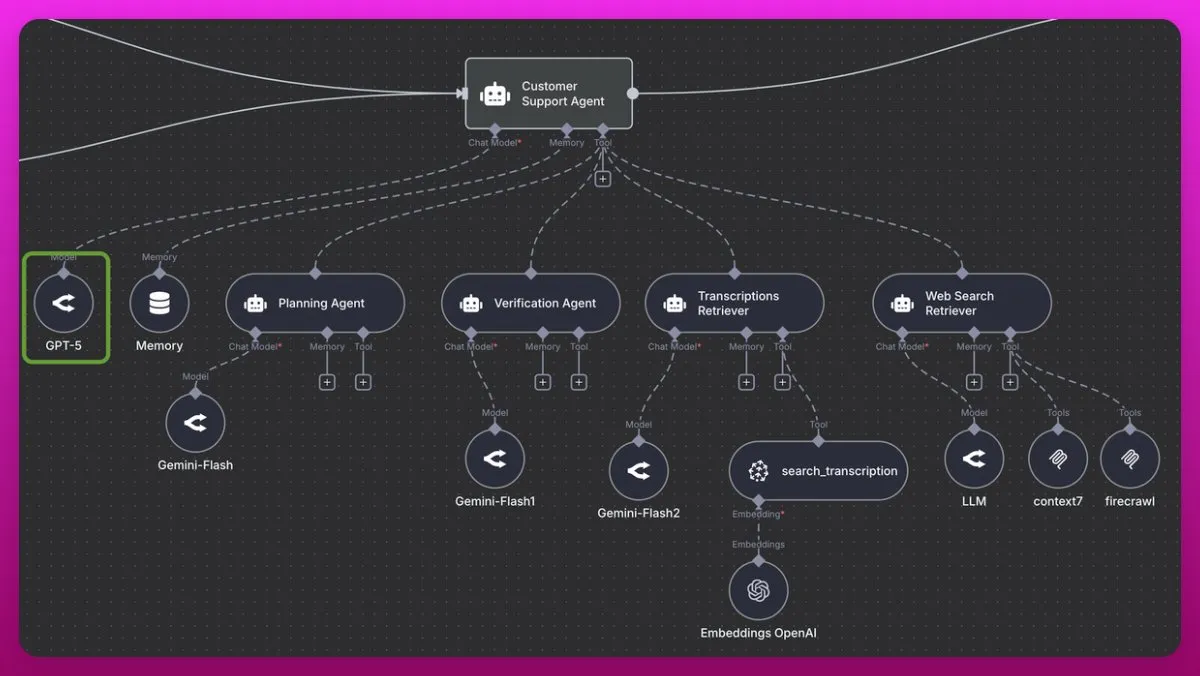

GPT-5: An Excellent Coordinator for Multi-Agent Systems : GPT-5 is being praised as an excellent coordinator for multi-agent systems, particularly in domains beyond coding such as customer support. It understands intent well, processes large amounts of data efficiently, and fills information gaps, which makes it well suited to managing complex multi-retrieval systems. Compared with Claude 4 (held back mainly by cost) and Gemini 2.5 Pro, GPT-5 (including GPT-5-mini) performs better on consistency and tool-calling accuracy, providing strong support for building advanced agent systems. (Source: omarsar0)
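The coordinator role can be sketched as a router: one component interprets the request and dispatches it to a specialist agent. In the minimal sketch below, the keyword rule in `classify_intent` is a hypothetical stand-in for the intent understanding a model like GPT-5 would provide, and the specialist agents are placeholder functions rather than real model-backed agents.

```python
from typing import Callable, Dict

# Hypothetical specialist agents; in a real system each would wrap its own model and tools.
def billing_agent(query: str) -> str:
    return f"[billing] looked up invoice details for: {query}"

def shipping_agent(query: str) -> str:
    return f"[shipping] checked tracking status for: {query}"

def fallback_agent(query: str) -> str:
    return f"[general] escalated to a human: {query}"

SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "billing": billing_agent,
    "shipping": shipping_agent,
}

def classify_intent(query: str) -> str:
    """Stand-in for the coordinator model's intent understanding (a keyword rule here)."""
    text = query.lower()
    if any(word in text for word in ("refund", "invoice", "charge")):
        return "billing"
    if any(word in text for word in ("package", "delivery", "tracking")):
        return "shipping"
    return "unknown"

def coordinate(query: str) -> str:
    """Route the query to a specialist, falling back when the intent is unclear."""
    intent = classify_intent(query)
    agent = SPECIALISTS.get(intent, fallback_agent)
    return agent(query)

print(coordinate("Where is my package?"))
```

The coordinator's consistency matters because every downstream agent inherits its routing decision; a misread intent sends the whole request down the wrong path.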

Tencent HunyuanImage 3.0: New Benchmark for Open-Source Text-to-Image Large Models : The Tencent Hunyuan team has released HunyuanImage 3.0, an open-source text-to-image model with over 80 billion parameters, of which 13 billion are activated during inference. The model adopts a Transfusion-based MoE architecture that deeply couples Diffusion and LLM training, giving it strong world-knowledge reasoning, understanding of complex prompts on the order of a thousand characters, and precise in-image text generation. HunyuanImage 3.0 aims to transform graphic design and content creation workflows, and the team plans to support image-to-image generation, image editing, and other multimodal interactions in the future. (Source: nrehiew_, jpt401)
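The gap between total parameters (80B+) and active parameters (13B) comes from Mixture-of-Experts routing: a gate scores every expert for each token, but only the top few actually run. The sketch below is a generic top-k MoE layer in NumPy, assumed for illustration; it is not HunyuanImage 3.0’s actual router, and the sizes (8-dimensional tokens, 16 experts, top-2) are arbitrary.

```python
import numpy as np

def moe_layer(x, gate_w, experts, top_k=2):
    """Route one token vector x to its top_k experts and mix their outputs."""
    logits = gate_w @ x                          # one routing score per expert
    top = np.argsort(logits)[-top_k:]            # indices of the k highest-scoring experts
    weights = np.exp(logits[top] - logits[top].max())
    weights /= weights.sum()                     # softmax over the selected experts only
    # Only the chosen experts run, so most expert parameters stay idle for this token.
    y = sum(w * experts[i](x) for w, i in zip(weights, top))
    return y, top

rng = np.random.default_rng(0)
d, n_experts = 8, 16
expert_mats = [rng.standard_normal((d, d)) for _ in range(n_experts)]
experts = [lambda v, m=m: m @ v for m in expert_mats]  # each expert is a linear map here
gate_w = rng.standard_normal((n_experts, d))

y, used = moe_layer(rng.standard_normal(d), gate_w, experts, top_k=2)
print(y.shape, len(used))  # (8,) 2
```

Here only 2 of 16 experts fire per token; scaling the same idea up is how a model can hold far more knowledge than it spends compute on for any single step.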

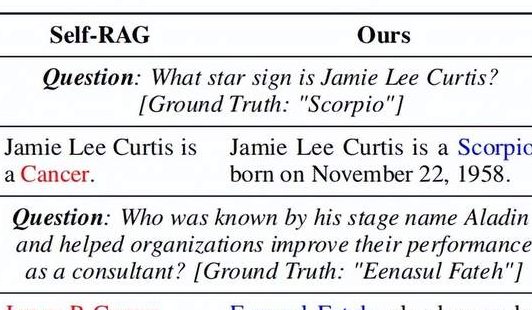

DRAG Framework: Enhancing RAG Models’ Understanding of Lexical Diversity : A paper at ACL 2025 proposes the Lexical Diversity-aware RAG (DRAG) framework, the first to bring “lexical diversity” into both the retrieval and generation stages of RAG. DRAG decomposes query semantics into invariant, variable, and supplementary components and employs differentiated strategies for relevance assessment and risk-guided sparse calibration. The method significantly boosts RAG accuracy (for example, a 10.6% improvement on HotpotQA), sets new SOTA results on multiple benchmarks, and is valuable for information retrieval and Q&A systems that need to understand complex human language precisely. (Source: 量子位)
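The idea of treating query components differently can be shown with a toy relevance scorer. This is a deliberately simplified illustration, not the DRAG paper’s method: the synonym table, the exact-match rule for invariant terms, and the 0.1 bonus weight are all hypothetical choices made only to show why one uniform matching rule handles lexical diversity poorly.

```python
# Toy illustration only: the synonym table, match rules, and weights are hypothetical,
# not the DRAG paper's actual relevance assessment.
SYNONYMS = {
    "film": {"film", "movie", "picture"},
    "director": {"director", "filmmaker"},
}

def variants(term):
    """Accepted lexical variants of a term (just the term itself if none are listed)."""
    return SYNONYMS.get(term, {term})

def relevance(doc, invariant, variable, supplementary):
    words = set(doc.lower().split())
    # Invariant components (e.g. named entities) must appear verbatim, or the document fails.
    if not all(term in words for term in invariant):
        return 0.0
    # Variable components may be expressed with any accepted lexical variant.
    variable_hits = sum(1 for term in variable if variants(term) & words)
    # Supplementary components only add a small bonus.
    bonus = 0.1 * sum(1 for term in supplementary if term in words)
    return 1.0 + variable_hits + bonus

doc = "Nolan is the filmmaker behind the movie Inception"
print(relevance(doc, ["inception"], ["director", "film"], ["2010"]))
```

A plain keyword matcher would miss this document for the query words “director” and “film,” while a fully fuzzy matcher could wrongly accept a document about a different movie; splitting the query lets each part use the strictness it needs.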

Tencent Hunyuan3D-Part: Industry’s First High-Quality Native 3D Component Generation Model : The Tencent Hunyuan 3D team has launched Hunyuan3D-Part, the industry’s first model capable of generating high-quality, semantically decomposable 3D components. This model achieves high-fidelity, structurally consistent 3D part generation through its native 3D segmentation model P3-SAM and industrial-grade component generation model X-Part. This breakthrough holds significant implications for video game production pipelines and the 3D printing industry, as it can decompose complex geometries into simpler components, greatly reducing the difficulty of downstream processing and supporting modular assembly. (Source: 量子位)

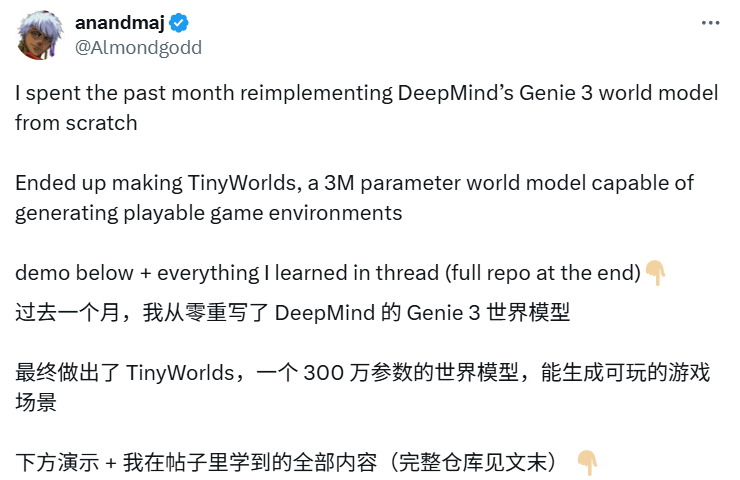

TinyWorlds: 3 Million Parameters Replicate DeepMind’s World Model, Enabling Real-Time Interactive Pixel Games : X user anandmaj replicated the core ideas of DeepMind’s Genie 3 within a month, building TinyWorlds. This world model, with only 3 million parameters, can generate playable pixel-style game environments such as Pong, Sonic, Zelda, and Doom in real time. It uses a video tokenizer and spatiotemporal transformers to capture video information and generate interactive pixel worlds, demonstrating the potential of small models for real-time world generation. The code is open source. (Source: 36氪)
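Before a spatiotemporal transformer can process video, the clip has to be cut into tokens that span both space and time. The NumPy sketch below shows only that generic patch-splitting step, assuming 2-frame by 4x4-pixel patches; TinyWorlds’ actual tokenizer may differ, for example by further compressing each patch into a learned discrete code.

```python
import numpy as np

def video_to_tokens(video, t=2, p=4):
    """Cut a (frames, height, width, channels) clip into flattened spatiotemporal patches."""
    f, h, w, c = video.shape
    assert f % t == 0 and h % p == 0 and w % p == 0, "dimensions must divide evenly"
    x = video.reshape(f // t, t, h // p, p, w // p, p, c)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)   # gather each t x p x p block together
    return x.reshape(-1, t * p * p * c)     # one row per spatiotemporal token

clip = np.random.rand(8, 64, 64, 3)         # 8 frames of 64x64 RGB pixels
tokens = video_to_tokens(clip)
print(tokens.shape)  # (1024, 96)
```

Each token covers a small region across two consecutive frames, so attention between tokens can relate motion over time as well as layout within a frame.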

OpenWebUI Natively Supports MCP: A New Paradigm for LLM Tool Integration : OpenWebUI’s latest update adds native support for Model Context Protocol (MCP) servers, so users can now integrate external tools such as the HuggingFace MCP server. MCP standardizes how LLMs connect to external data sources and tools, expanding the AI application ecosystem and letting users combine AI tools more flexibly and efficiently within the OpenWebUI interface. (Source: Reddit r/LocalLLaMA, Reddit r/OpenWebUI)
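Under the hood, MCP messages are JSON-RPC 2.0 requests; the protocol defines methods such as `tools/list` (discover what a server offers) and `tools/call` (invoke a tool with named arguments). The sketch below only builds the request payloads. The tool name `search_models` and its arguments are hypothetical, and a real client would also perform the `initialize` handshake and send messages over a transport such as stdio or HTTP, both omitted here.

```python
import json

def mcp_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request of the kind MCP clients send to servers."""
    msg = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

# Ask the server which tools it exposes, then invoke one of them.
list_tools = mcp_request(1, "tools/list")
call_tool = mcp_request(2, "tools/call", {
    "name": "search_models",                 # hypothetical tool name
    "arguments": {"query": "text-to-image"}, # hypothetical arguments
})
print(call_tool)
```

Because every server speaks the same message shapes, a client like OpenWebUI can support new tools without tool-specific integration code.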

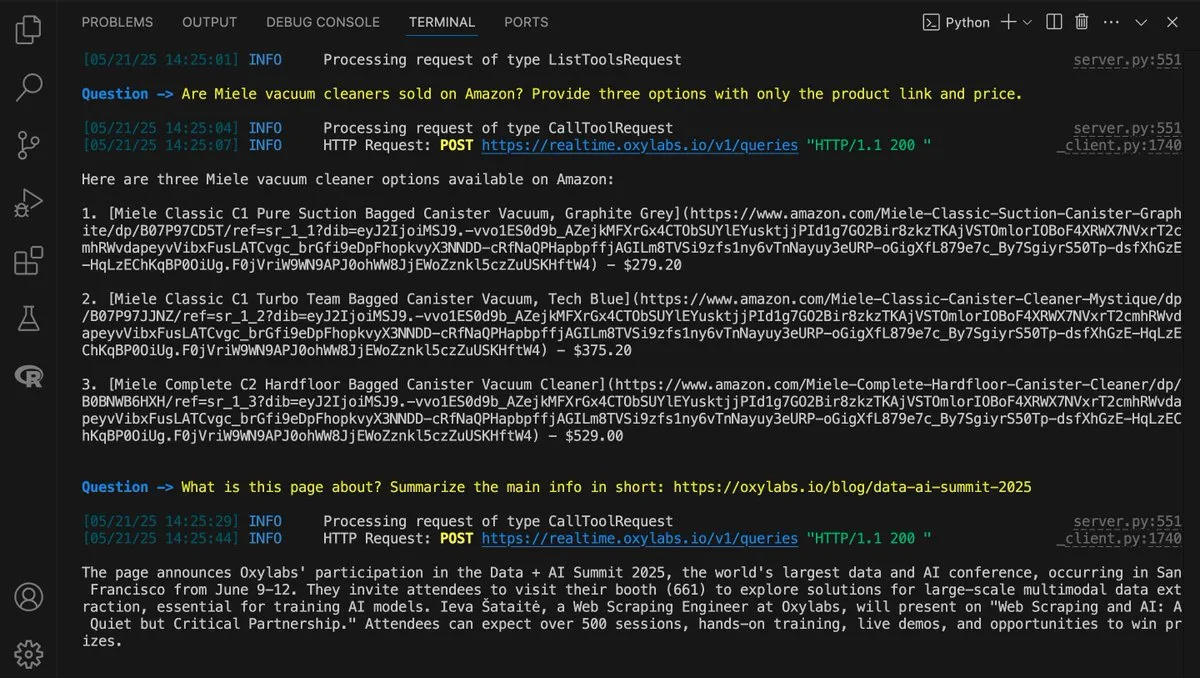

LangChain Partners with Oxylabs: Building AI-Powered Web Scraping Solutions : LangChain and Oxylabs have released a guide demonstrating how to combine LangChain’s agent framework with Oxylabs’ scraping infrastructure to build AI-powered web scraping solutions. The solution supports multiple languages and integration methods, helping AI agents overcome common web access obstacles such as IP blocks and CAPTCHAs, enabling more efficient real-time data retrieval and strengthening agent workflows. (Source: LangChainAI)

Open-Source LLM Evaluation Tool Opik: Comprehensive Monitoring and Debugging for AI Applications : Opik is a newly launched open-source LLM evaluation tool designed to help developers debug, evaluate, and monitor LLM applications, RAG systems, and agent workflows. It offers comprehensive tracing, automated evaluations, and production-grade dashboards, providing critical insights for improving AI system performance and reliability. (Source: dl_weekly)
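Tracing, the foundation of tools like Opik, means recording each step of a pipeline (inputs, outputs, latency) so failures can be pinned to a specific stage. The sketch below is a hypothetical, standard-library-only decorator that illustrates the concept; it is not Opik’s API, which ships its own SDK.

```python
import functools
import time

TRACE_LOG = []  # collected spans; a real tool would send these to a dashboard

def trace(fn):
    """Record the inputs, output, and latency of each successful call to fn."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = fn(*args, **kwargs)
        TRACE_LOG.append({
            "name": fn.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
            "output": result,
            "latency_s": time.perf_counter() - start,
        })
        return result
    return wrapper

@trace
def retrieve(query):
    return ["doc-1", "doc-2"]  # stand-in for a vector-store lookup in a RAG system

@trace
def answer(query):
    docs = retrieve(query)
    return f"answer based on {len(docs)} documents"

answer("What is Opik?")
print([span["name"] for span in TRACE_LOG])  # ['retrieve', 'answer']
```

Nested calls are logged innermost first, so the retrieval span appears before the answer span that contains it; production tracers additionally link spans with parent IDs.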

📚 Learn

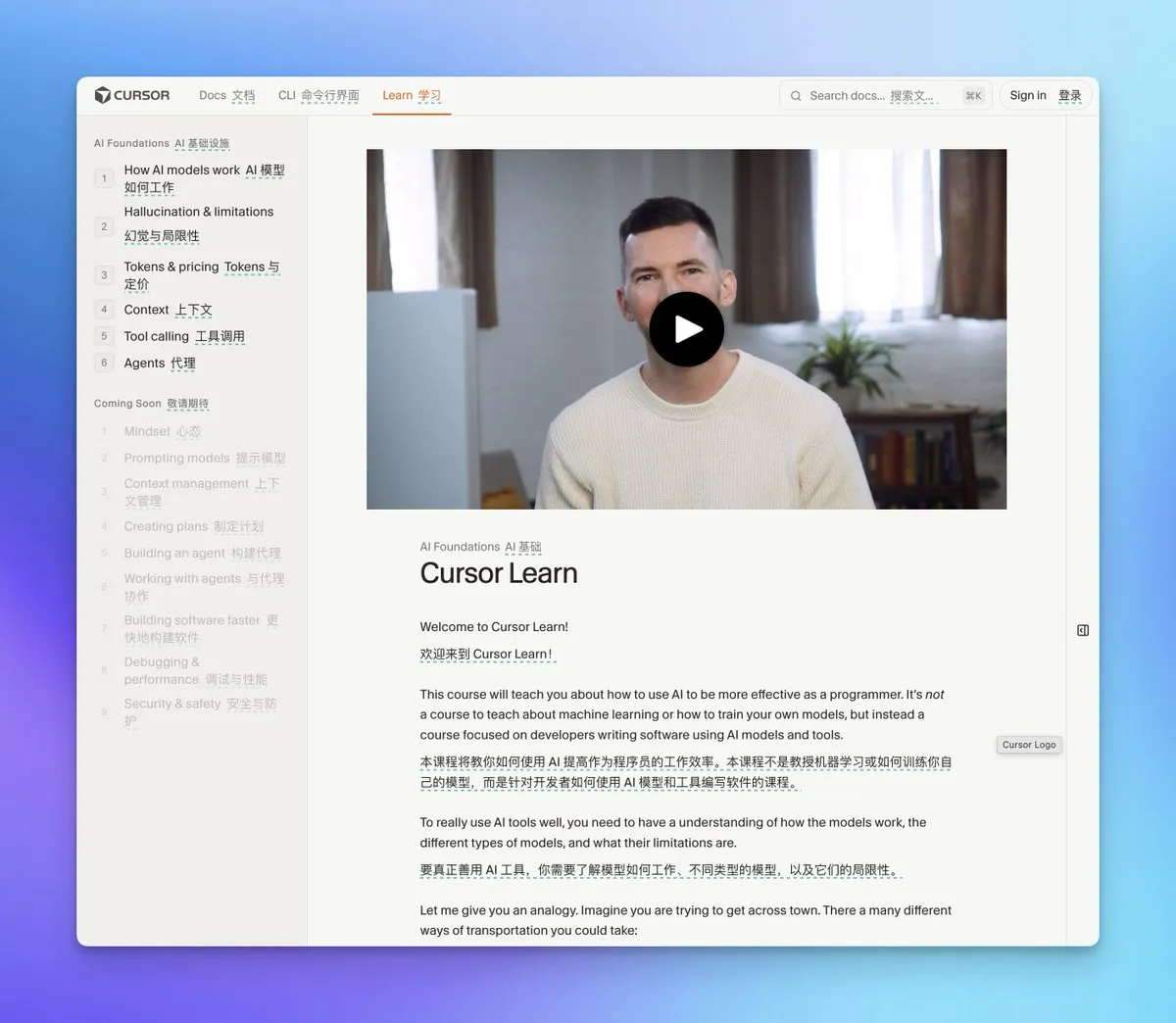

Cursor Learn: Free AI Fundamentals Video Course : Cursor Learn has launched a free six-part AI fundamentals video course, designed for beginners and covering core concepts like Tokens, Context, and Agents. The course includes quizzes and interactive AI models, aiming to provide foundational AI knowledge within an hour, including advanced topics like agent collaboration and context management, making it a valuable resource for AI beginners. (Source: cursor_ai, op7418)

Curated AI/ML GitHub Repositories: Covering Frameworks like PyTorch, TensorFlow, and More : A series of curated AI/ML code repositories have been shared on GitHub, featuring practical notebooks for various deep learning frameworks such as PyTorch, TensorFlow, and FastAI. These resources cover areas like computer vision, natural language processing, GANs, Transformers, AutoML, and object detection, providing rich learning materials for students and practitioners and facilitating technical exploration and project development. (Source: Reddit r/deeplearning)

Free E-book: “A First Course on Data Structures in Python” : A free e-book titled “A First Course on Data Structures in Python” has been released. The book provides foundational building blocks required for AI and machine learning, including data structures, algorithmic thinking, complexity analysis, recursion/dynamic programming, and search methods, making it a valuable resource for learning AI fundamentals. (Source: TheTuringPost)

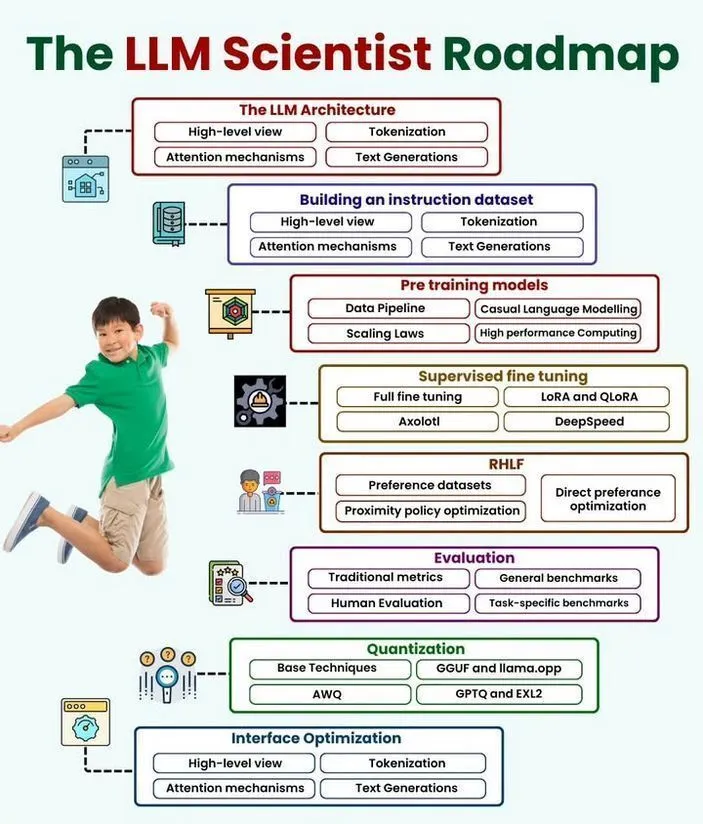

LLM Scientist and Data Scientist Roadmaps Released : Detailed roadmaps have been released for the career development of LLM Scientists and Data Scientists. These resources outline the skills, tools, and learning paths required to enter or advance in the fields of AI, machine learning, and data science, providing clear career planning guidance for aspiring individuals. (Source: Ronald_vanLoon, Ronald_vanLoon)

A16Z Speedrun 2026: AI and Entertainment Startup Accelerator : A16Z Speedrun 2026 is now accepting applications from founders in the AI and entertainment sectors. The program supports founders focused on building their own ventures, offering an opportunity for entrepreneurs looking to grow in the rapidly evolving field of AI-powered products. (Source: yoheinakajima)

💼 Business

MiniMax Copyright Lawsuit: AI Unicorn’s IPO Path Clouded : Chinese AI unicorn MiniMax, valued at over $4 billion, is facing a joint copyright lawsuit from Disney, Universal Pictures, and Warner Bros. The suit alleges that its video generation tool “Hailuo AI” systematically infringes copyright by generating content featuring protected characters in response to user prompts. The case could deal a severe blow to MiniMax’s IPO plans, and it highlights both the serious intellectual property compliance challenges in the generative AI market and the importance of balancing technological innovation with legal boundaries. (Source: 36氪)

AI Logistics Company Augment Secures $85 Million in Funding, Total Funding Reaches 800 Million RMB in Five Months Since Launch : AI logistics company Augment successfully completed an $85 million Series A funding round just five months after its launch, bringing its total funding to $110 million (approximately 800 million RMB). Its AI agent product, Augie, automates complex, fragmented tasks across the entire logistics lifecycle, from order intake to payment collection. It has already managed over $35 billion in freight value for dozens of top 3PLs and shippers, saving clients millions of dollars, demonstrating AI’s powerful business value in optimizing labor-intensive logistics. (Source: 36氪)

Microsoft Integrates Anthropic’s Claude Models into Copilot : Microsoft has integrated Anthropic’s Claude Sonnet 4 and Claude Opus 4.1 models into its Copilot assistant for enterprise users. The move reduces Microsoft’s dependence on OpenAI alone and reinforces its position as a neutral platform provider. Enterprise users can now choose between OpenAI and Anthropic models, which adds flexibility and is expected to intensify competition in the enterprise AI market. (Source: Reddit r/deeplearning)

🌟 Community

AI’s Impact on Human Understanding and Society: The Paradox of Efficiency and “Information Cocoons” : The community widely fears that AI, like social media, could negatively impact human understanding, critical thinking, and life goals. By optimizing content generation for “spread” rather than “truth” or “depth,” AI might lead to “learning plateaus” and “infinite garbage machines.” This sparks discussions on how to guide AI development towards being a growth tool rather than an addictive one, calling for shifts in regulation, industry practices, and cultural norms. (Source: Reddit r/ArtificialInteligence, Reddit r/artificial, Reddit r/ArtificialInteligence, Yuchenj_UW, colin_fraser, Teknium1, cloneofsimo)

AI in the Workplace: The Contradiction of Efficiency Gains and “Invisible Exploitation” : AI’s integration into the workplace, especially among execution-level employees, is creating a paradox: efficiency gains are often accompanied by higher output expectations, with no corresponding rewards for employees. This “invisible exploitation” turns employees into “human quality checkers” for AI-generated content, increasing their cognitive load and anxiety. While AI boosts corporate productivity, the gains flow largely to capital, widening the “cognitive gap” between strategic managers and tool-dependent executors and potentially leading to widespread burnout unless organizational structures are redesigned. (Source: 36氪, glennko, mbusigin)

In the AI Era, the Value of “Questioning Power” Surpasses “Execution Power” : In an AI-driven world, true competitive advantage shifts from execution speed to “questioning power”—the ability to identify which problems are worth solving. Over-reliance on AI for execution without critical problem definition can lead to efficiently solving the wrong problems, creating a false sense of progress. Design thinking, empathy mapping, and continuous questioning are considered critical human skills that AI cannot replace, enabling individuals and organizations to effectively leverage AI and focus on solving meaningful challenges. (Source: 36氪)

AI Geopolitics: US-China AI Race and International Regulatory Disputes : The US-China AI race is seen as a marathon, not a sprint, with China potentially leading in robotics applications. The US is urged to focus on practical AI investments rather than solely pursuing superintelligence, but has rejected UN-level international AI oversight, emphasizing national sovereignty. This highlights the complex geopolitical landscape where AI development intertwines with national security, trade policies (like H-1B visas), and competition for AI infrastructure and talent. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence, adcock_brett, Dorialexander, teortaxesTex, teortaxesTex, teortaxesTex, brickroad7, jonst0kes)

Emad Mostaque Predicts “The Last Economy”: AI to Reshape Human Value : Emad Mostaque, former CEO of Stability AI, predicts that AI will fundamentally reshape economic structures within the next 1000 days, and human labor value could become zero or even negative. He proposes the “MIND framework” (Matter, Intellect, Networks, Diversity) to measure economic health, arguing that the surplus of “intellectual capital” brought by AI necessitates a re-evaluation of other capital’s importance. This “fourth inversion” will see AI replace cognitive labor, requiring the establishment of new “human-centric” currencies and universal basic AI to navigate societal transformation. (Source: 36氪)

AI Hardware Race: OpenAI, ByteDance, and Meta Vie for Consumer Device Market : Tech giants like OpenAI, ByteDance, and Meta are actively investing in consumer AI hardware R&D. Meta’s Ray-Ban AI glasses have achieved significant sales, while OpenAI is reportedly collaborating with Apple suppliers to develop a “screenless smart speaker,” and ByteDance is developing AI smart glasses. This race signals AI’s deeper integration into daily life, with companies exploring diverse product forms and interaction models, aiming to gain an early advantage in ambient AI. (Source: 36氪)

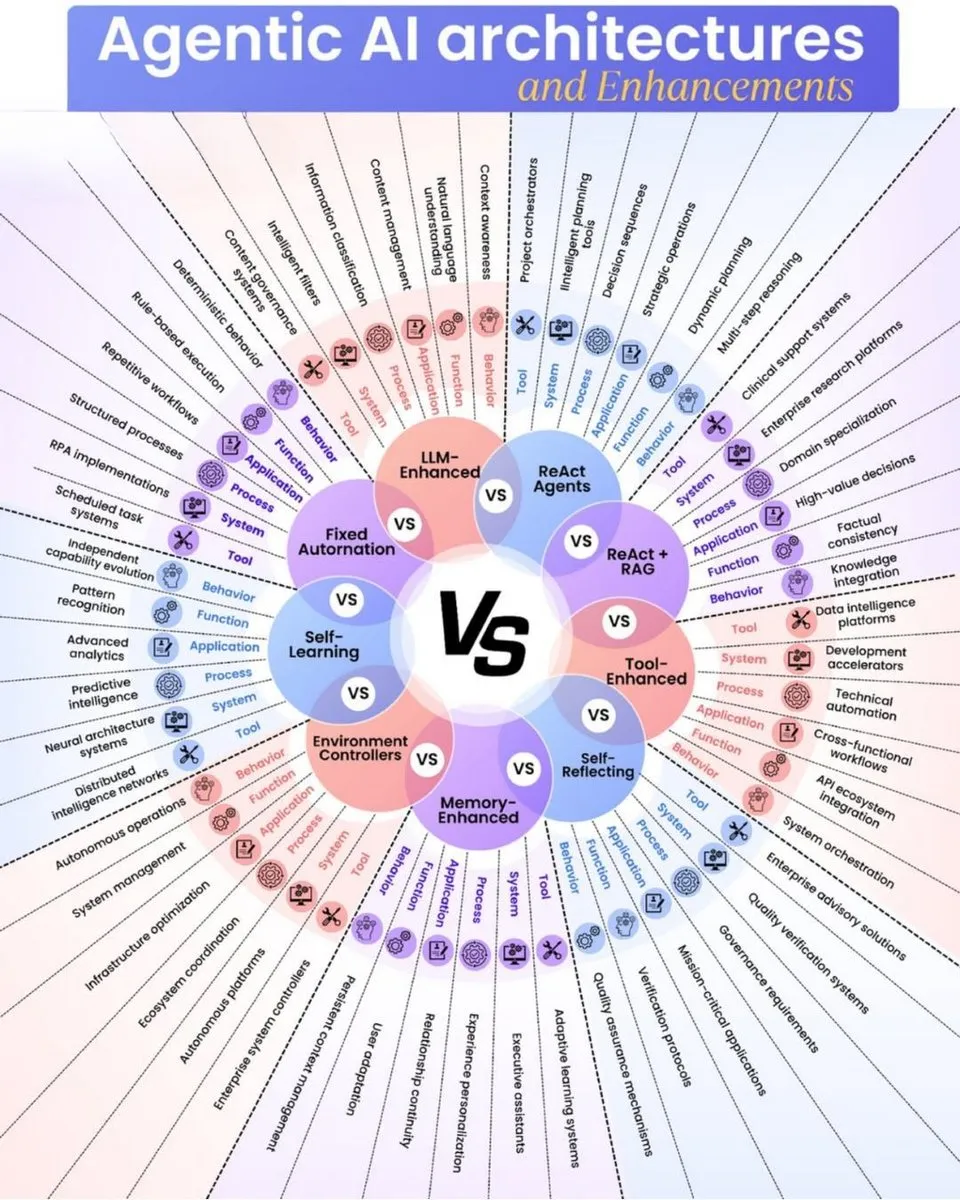

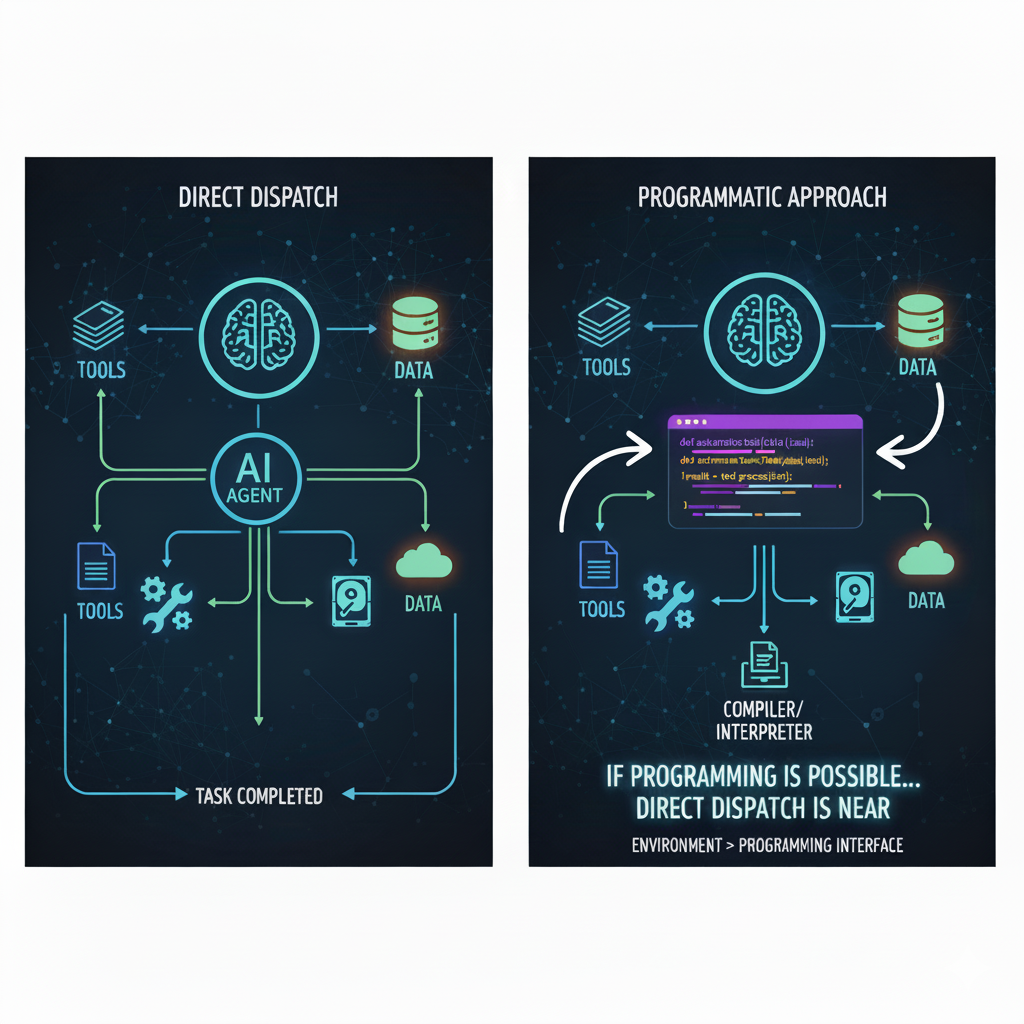

AI Agent: A Paradigm Shift from Human-Computer Collaboration to “Human-Computer Delegation” : The AI industry is approaching a “gentle inflection point” in the move from human-computer collaboration to “human-computer delegation,” where autonomous AI Agents execute complex tasks at scale. AI’s breakthrough in programming is seen as a sign that it can conquer all semi-open systems. This shift will give rise to “unmanned companies,” in which human roles move from micro-execution to macro-governance, focusing on value injection, system architecture design, and macro-navigation, while AI Copilots support human decision-making instead of humans intervening directly in high-speed AI systems. (Source: 36氪)

AI’s Impact on Foreign Language Majors: Students Need to Cultivate “Foreign Language+” Composite Skills : The rise of AI translation technology is profoundly impacting foreign language majors, leading to a decline in demand for traditional language-related positions, with several universities discontinuing relevant programs. Foreign language students face pressure to transform, needing to shift from single language skills to a “foreign language+” composite model, such as “foreign language + AI” for natural language processing, or “foreign language + international communication.” This prompts reforms in foreign language education, emphasizing cross-cultural understanding and comprehensive abilities, rather than mere translation training, to adapt to the new demands for language talent in the AI era. (Source: 36氪, Reddit r/ClaudeAI)

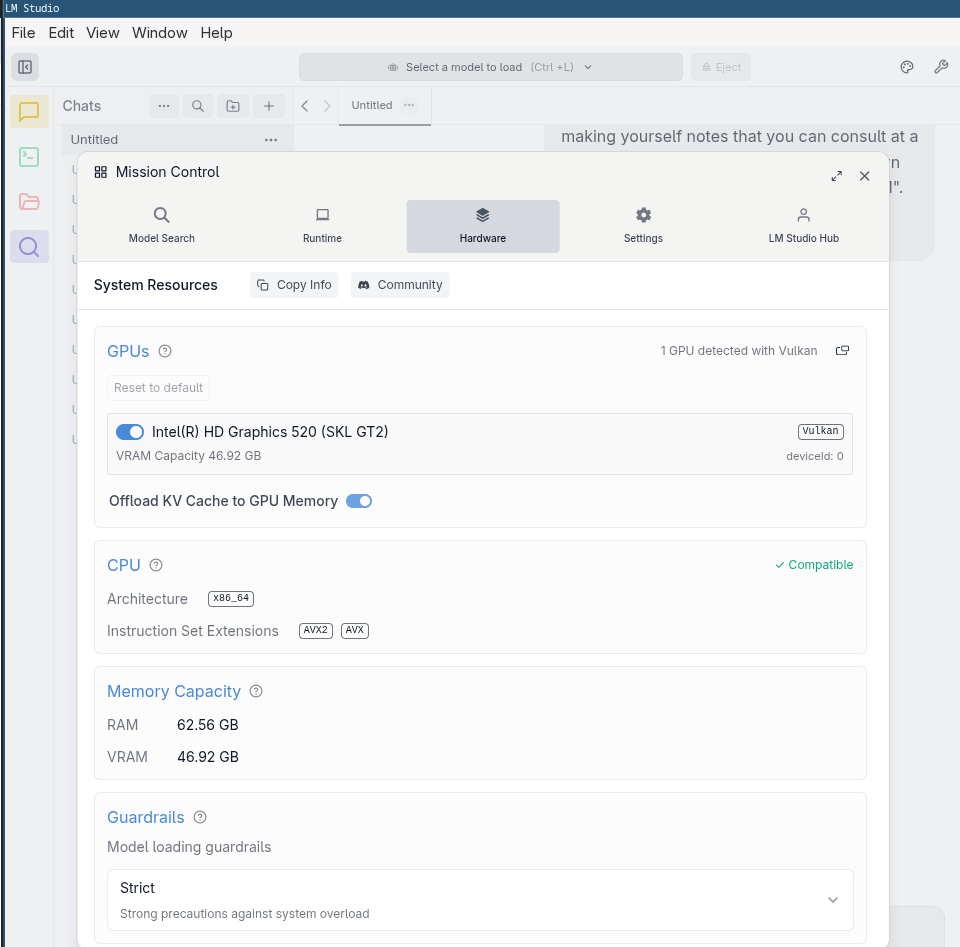

High GPU Prices: AI Demand-Driven and Local LLM Optimization : The community is widely concerned about persistently high GPU prices and attributes them mainly to surging AI data center demand and inflation. Many believe prices are unlikely to drop significantly unless the AI bubble bursts or custom chips become widely adopted. In response, the community is working to optimize local LLM performance, with reports such as the AMD MI50 outperforming the NVIDIA P40 in llama.cpp/ggml and iGPUs being used for basic LLM tasks, to lower the cost of local AI computing. (Source: Reddit r/LocalLLaMA, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)

💡 Other

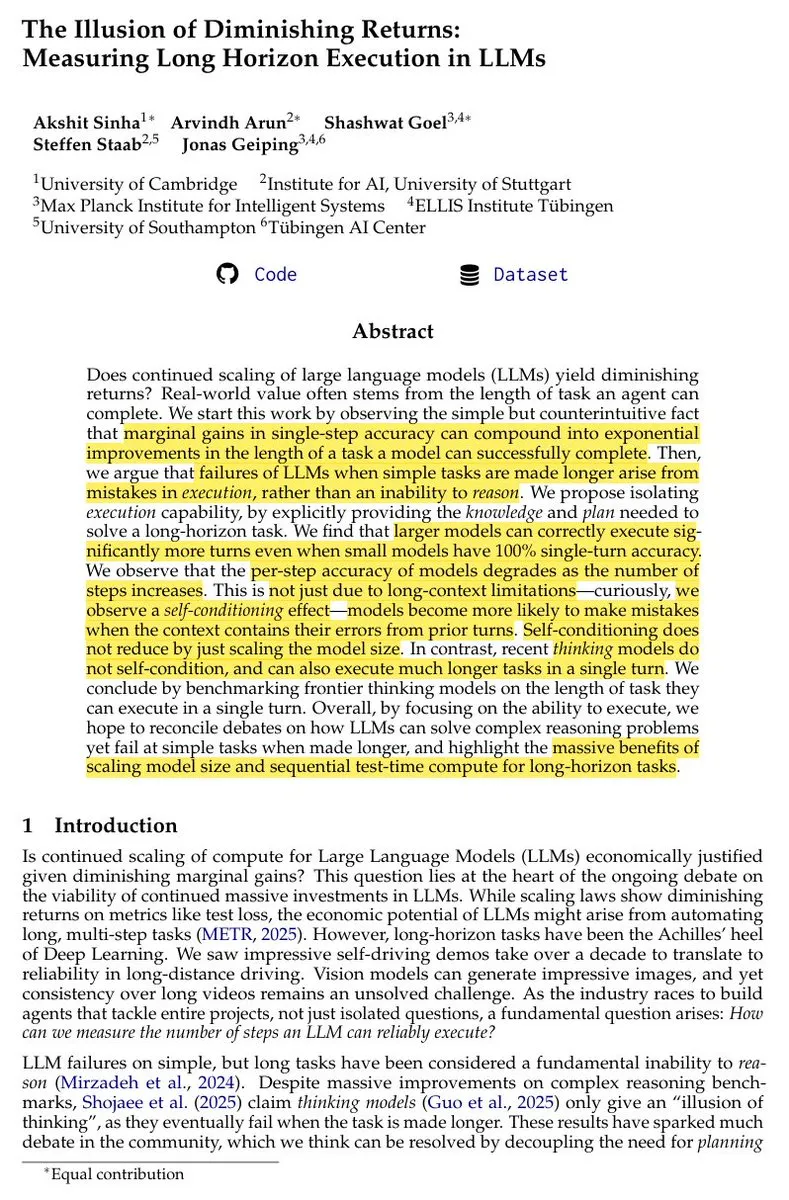

LLM Scaling’s “Diminishing Returns Illusion” and Long-Horizon Tasks : Research indicates that while single-turn benchmarks may suggest LLM progress is slowing, scaling models still yields non-diminishing improvements in long-horizon task execution. The “illusion of diminishing returns” arises because small gains in single-step accuracy compound into super-exponential improvements in the length of tasks a model can complete. For long-horizon tasks, sequential computation provides an advantage that parallel test-time sampling cannot match, suggesting that continued model scaling and reinforcement learning training are crucial for achieving advanced agent behaviors. (Source: scaling01)
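The compounding effect can be made concrete with a simplified model, assumed here for illustration: if every step of a task succeeds independently with probability p, a task of H steps succeeds with probability p^H, so the task length at which success drops to 50% is H = ln(0.5) / ln(p). Real tasks have correlated errors, so this is a sketch of the intuition rather than the study’s full analysis.

```python
import math

def horizon_at_half(p):
    """Task length (in steps) at which success probability falls to 50%,
    assuming independent steps that each succeed with probability p."""
    return math.log(0.5) / math.log(p)

for p in (0.90, 0.95, 0.99, 0.999):
    print(f"step accuracy {p:.3f} -> ~{horizon_at_half(p):.0f} steps")
```

Under this model, raising step accuracy from 90% to 99% barely moves a single-step benchmark score, yet it stretches the 50%-success horizon from about 7 steps to about 69.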

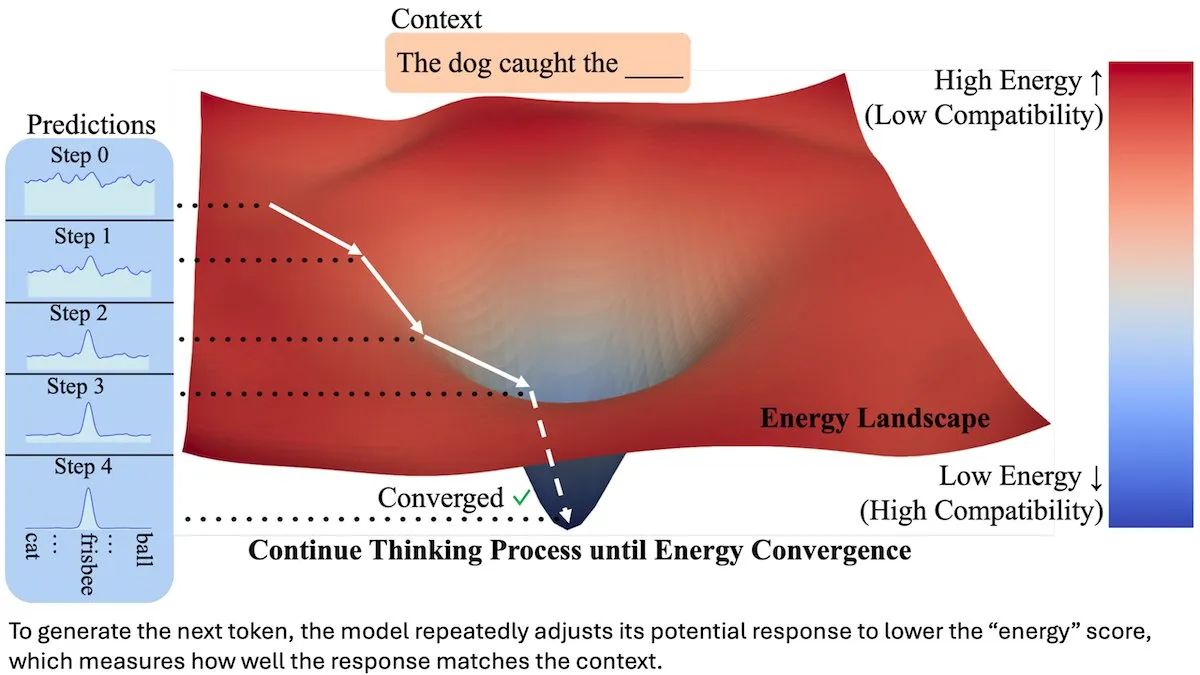

Energy-Based Transformer (EBT) Enhances Next-Token Prediction Performance : Researchers have introduced the Energy-Based Transformer (EBT), which assigns an “energy” score to candidate next-token predictions and iteratively verifies and refines them by taking gradient steps that lower the energy. In experiments at the 44-million-parameter scale, EBT outperformed conventional Transformers of comparable size on three of four benchmarks, suggesting that this approach to token selection could improve LLM performance. (Source: DeepLearningAI)
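The core mechanism, refining a prediction by descending an energy function, can be shown with a toy example. Everything below is a hypothetical stand-in: the quadratic energy, the random matrix `A` playing the role of a learned network, and the random `context` vector playing the role of an encoded prefix. A real EBT learns its energy function with a Transformer and backpropagates through it.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab, d = 10, 6
A = rng.standard_normal((vocab, d))   # hypothetical stand-in for a learned network
context = rng.standard_normal(d)      # hypothetical stand-in for the encoded prefix

def energy(y):
    """Lower energy means candidate prediction y fits the context better (toy quadratic)."""
    return 0.5 * np.sum((y - A @ context) ** 2)

def grad_energy(y):
    """Gradient of the toy energy with respect to the prediction y."""
    return y - A @ context

y = np.zeros(vocab)                   # initial guess: scores over the vocabulary
history = [energy(y)]
for _ in range(20):                   # iterative refinement = gradient steps on y
    y = y - 0.3 * grad_energy(y)
    history.append(energy(y))

next_token = int(np.argmax(y))        # pick the token favored by the refined prediction
print(next_token, history[0], history[-1])
```

The loop is the “verification” step in miniature: each gradient step moves the candidate prediction to a lower-energy, better-fitting state before a token is committed, which is how EBTs can spend extra computation on harder predictions.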

AI Robotics Advancements: Humanoid Salesperson and Training-Free Autonomous Walking Robot Dog : Xpeng Motors has deployed a humanoid car salesperson named “Tiedan” in its showrooms, showcasing AI’s application in customer-facing robotics. Additionally, a robot dog “with animal reflexes” can walk in the woods without training, highlighting advancements in autonomous robotics in mimicking biological movement and perception. These developments signal the increasing complexity and practical applications of AI in physical robotics. (Source: Ronald_vanLoon, Ronald_vanLoon)