Keywords: AI detection, child abuse imagery, Gemini Robotics, embodied model, dementia diagnosis, NVIDIA physics simulation, AI agent, LLM reasoning, AI-generated content detection technology, Gemini Robotics 1.5 action execution model, single-scan detection for 9 types of dementia, NVIDIA Dynamo distributed inference framework, Token-Aware Editing enhances model truthfulness

🔥 Spotlight

AI-Powered Child Abuse Image Detection: With generative AI leading to a 1325% surge in child sexual abuse images, the U.S. Department of Homeland Security’s Cyber Crimes Center is piloting AI software (from Hive AI) to distinguish between AI-generated content and images of real victims. This initiative aims to focus investigative resources on cases involving real victims, maximize project impact, and protect vulnerable populations, marking a critical application of AI in combating cybercrime. The tool determines if an image is AI-generated by identifying specific pixel combinations, without requiring training on specific content. (Source: MIT Technology Review)

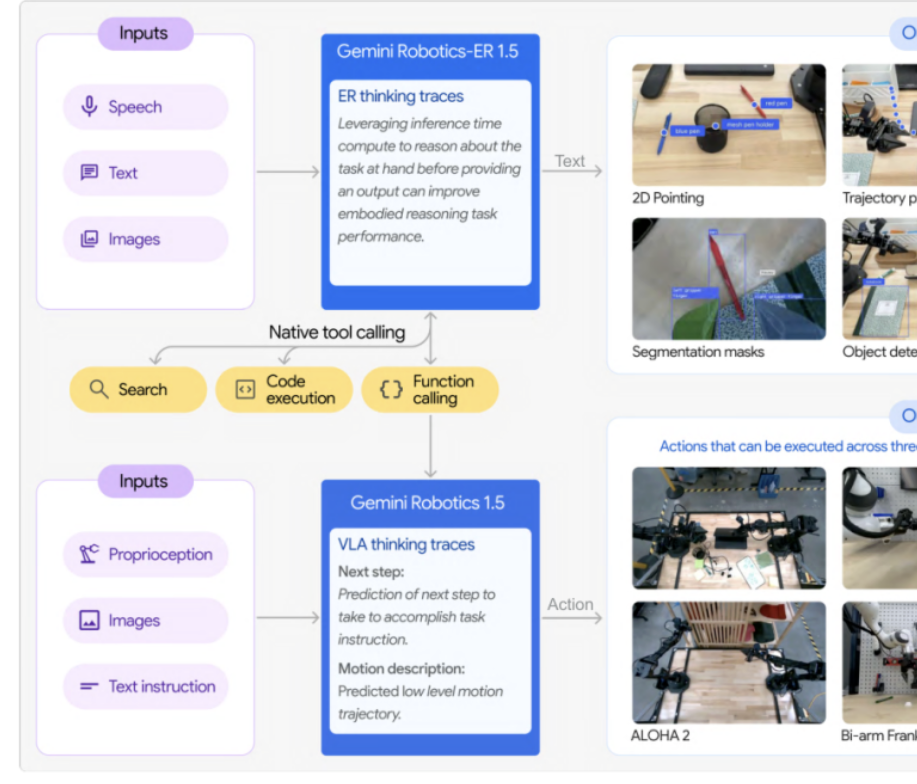

Google DeepMind Releases Gemini Robotics 1.5 Series Embodied Models: Google DeepMind has launched its Gemini Robotics 1.5 series, the first embodied models with simulated reasoning capabilities. The series includes the action execution model GR 1.5 and the embodied reasoning model GR-ER 1.5, enabling robots to “think before acting.” Through the “Motion Transfer” mechanism, the models can transfer skills zero-shot to different hardware platforms, breaking the traditional “one robot, one training” paradigm and advancing general-purpose robotics. GR-ER 1.5 surpasses GPT-5 and Gemini 2.5 Flash in benchmarks for spatial reasoning, task planning, and more, demonstrating a strong understanding of the physical world and the ability to solve complex tasks. (Source: 量子位)

AI Tool Detects 9 Types of Dementia from a Single Scan: An AI tool can detect 9 different types of dementia from a single scan with 88% diagnostic accuracy. By providing rapid, accurate screening, the technology could transform early dementia diagnosis and help patients receive treatment and support sooner, with significant implications for the healthcare sector. (Source: Ronald_vanLoon)

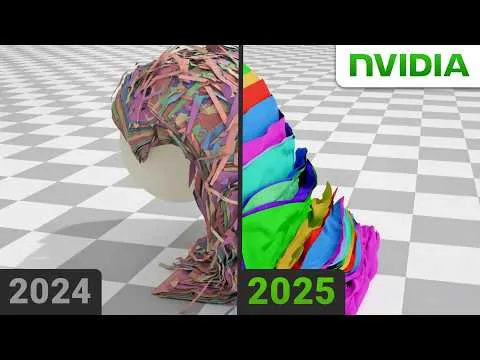

NVIDIA Solves Physics Problem for Realistic Simulations: NVIDIA has reportedly solved a long-standing problem in physics, a breakthrough crucial for creating highly realistic simulations. The work likely leverages AI and machine learning techniques and could significantly improve the realism of virtual environments and the accuracy of scientific modeling, with applications in gaming, film production, and scientific research.

🎯 Trends

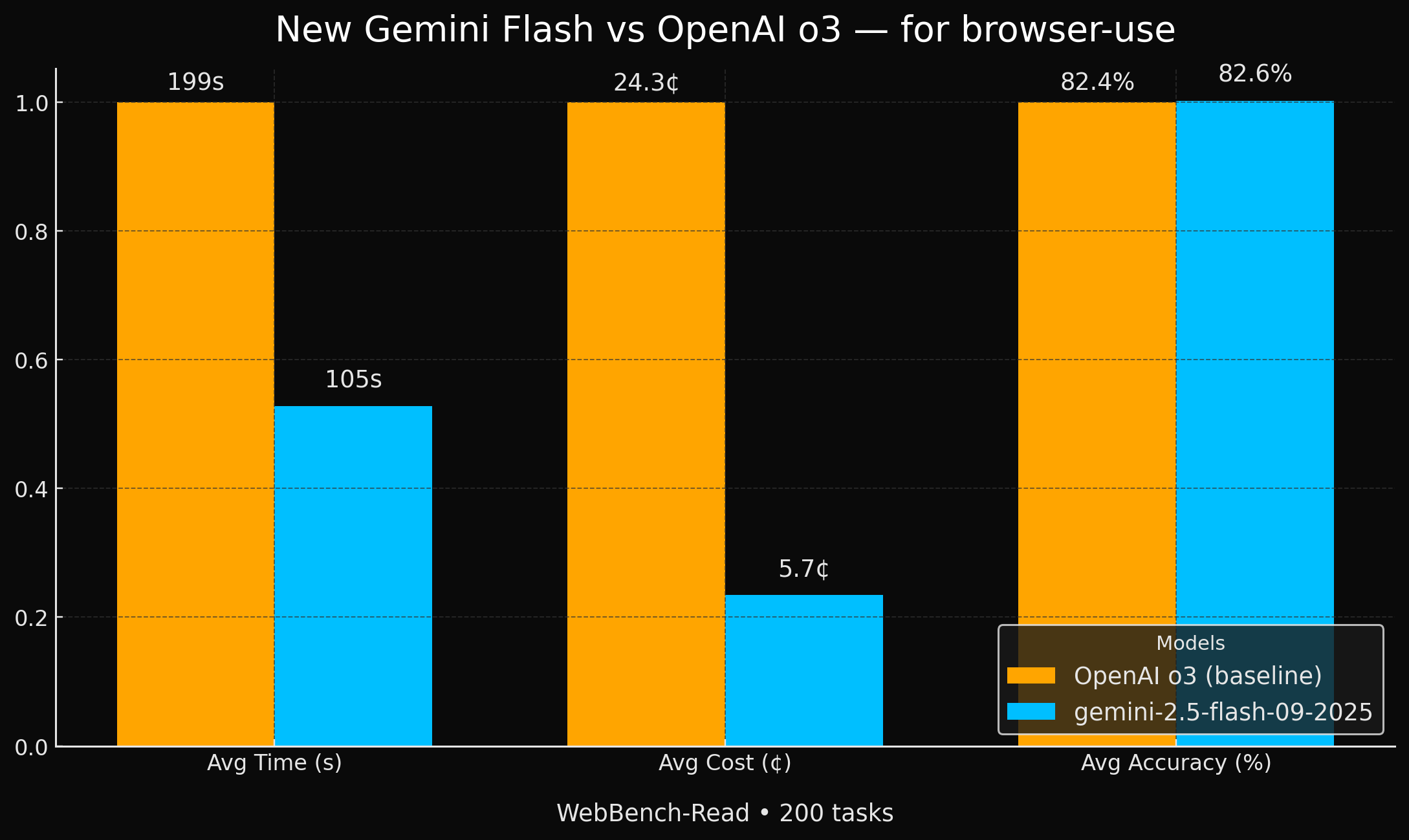

Google Releases More Efficient Gemini Flash Models: Google has launched two new Gemini 2.5 models (Flash and Flash-Lite), which achieve accuracy comparable to GPT-4o in browser agent tasks, but are 2x faster and 4x cheaper. This significant performance improvement and cost-effectiveness make them highly attractive options for specific AI application domains, especially in scenarios requiring high efficiency and affordability. (Source: jeremyphoward, demishassabis, scaling01)

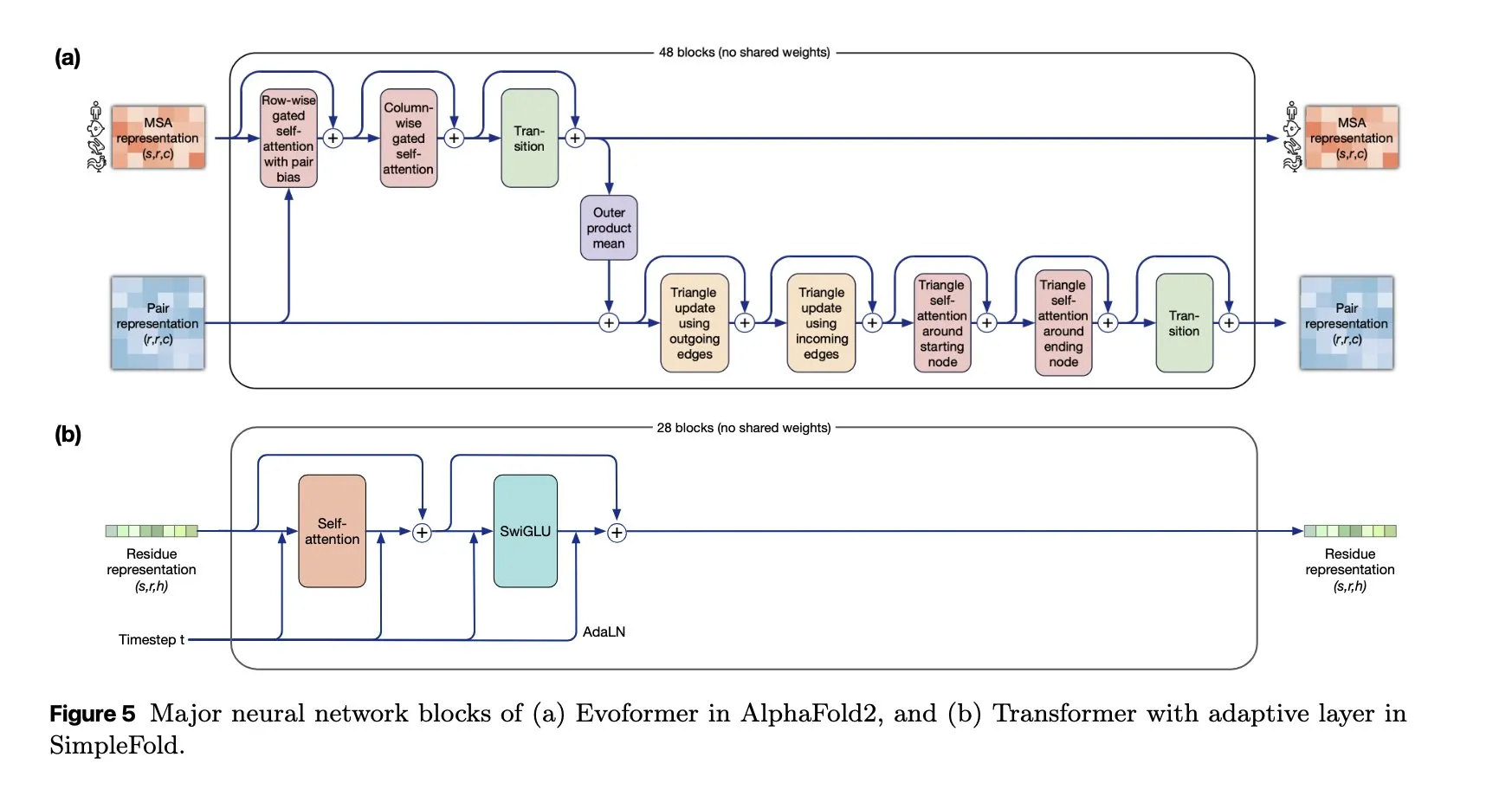

SimpleFold: A Flow-Matching Based Protein Folding Model: SimpleFold is the first protein folding model based on flow matching, and it is built entirely from generic Transformer blocks. This simplifies the protein folding pipeline and promises greater efficiency and scalability than approaches built on computationally intensive specialized modules, advancing AI applications in the biological sciences. (Source: jeremyphoward, teortaxesTex)

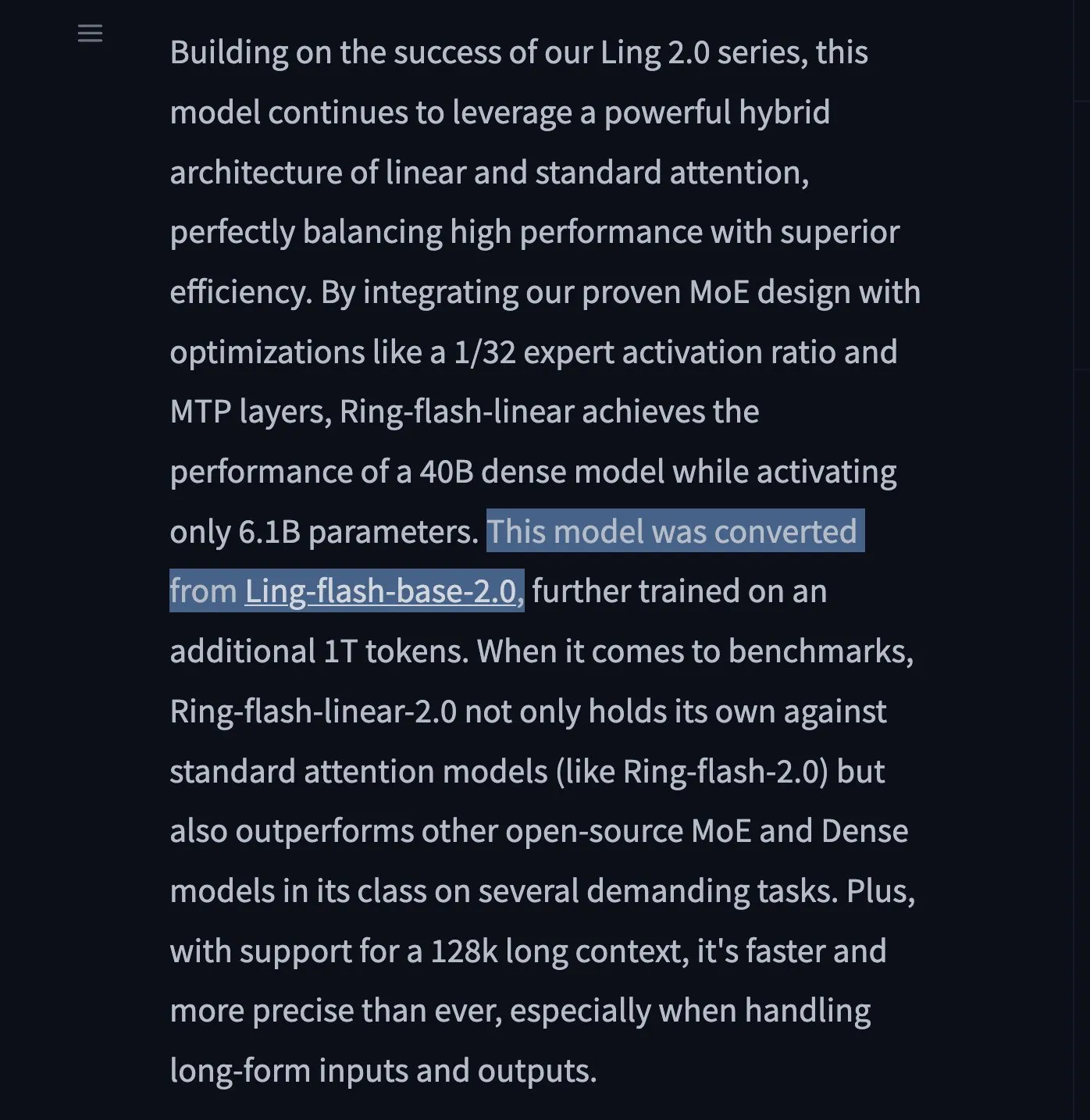

Ring-flash-linear-2.0 and Ring-mini-linear-2.0 LLMs Released: Two new LLMs, Ring-flash-linear-2.0 and Ring-mini-linear-2.0, have been released, featuring a hybrid linear attention mechanism that delivers very fast, state-of-the-art inference. Reportedly, they are 2x faster than MoE models of comparable size and 10x faster than 32B models, setting a new standard for efficient inference. (Source: teortaxesTex)

ChatGPT Pulse: Mobile Smart Research Assistant: OpenAI has launched ChatGPT Pulse, a new mobile feature that asynchronously conducts daily research based on a user’s past conversations and memories, helping them delve deeper into topics of interest. This acts as a personalized knowledge companion and custom news service, poised to transform information retrieval and learning paradigms. (Source: nickaturley, Reddit r/ChatGPT)

UserRL Framework: Training Proactive Interactive AI Agents: UserRL is a new framework designed to train AI agents that can genuinely assist users through proactive interaction, rather than merely pursuing static benchmark scores. It emphasizes the agents’ practicality and adaptability in real-world scenarios, aiming to shift AI agents from passive responsiveness to proactive problem-solving. (Source: menhguin)

NVIDIA Continues Strong Push in Open-Source AI: Over the past year, NVIDIA has contributed over 300 models, datasets, and applications to Hugging Face, further solidifying its position as a leader in open-source AI in the U.S. This series of initiatives not only fosters the growth of the AI community but also demonstrates NVIDIA’s commitment to building a software ecosystem beyond hardware. (Source: ClementDelangue)

Qwen3 Special Session Reveals Future Development Directions: Alibaba Cloud shared its future plans for large models at the Qwen3 special session: post-training reinforcement learning will account for over 50% of training time; Qwen3 MoE achieves a 5x activated parameter leverage through global batch load balancing loss; Qwen3-Next will adopt a hybrid architecture, incorporating linear and gated attention; the future will see full modality unification and continued adherence to the Scaling Law. (Source: ZhihuFrontier)

SGLang Team Releases 100% Reproducible RL Training Framework: The SGLang team, in collaboration with the slime team, has released the first open-source implementation of a 100% reproducible and stable Reinforcement Learning (RL) training framework. The framework addresses batch invariance issues in LLM inference through custom attention operators and sampling logic, so that repeated runs produce identical results. This makes it a reliable foundation for experiments that require exact reproducibility. (Source: 量子位)
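
Why batch invariance matters can be seen from floating-point arithmetic alone: addition is not associative, so a kernel whose reduction order changes with batch size can return slightly different values for the same input. The snippet below is a toy illustration in plain Python, not the SGLang or slime implementation; `ordered_sum` is a name made up for this sketch.

```python
def ordered_sum(values, order):
    """Add values one at a time, visiting indices in the given order."""
    total = 0.0
    for i in order:
        total += values[i]
    return total


values = [0.1, 0.2, 0.3]

# Same three numbers, two different reduction orders.
forward = ordered_sum(values, [0, 1, 2])   # (0.1 + 0.2) + 0.3
backward = ordered_sum(values, [2, 1, 0])  # (0.3 + 0.2) + 0.1

# The two results differ in the last bit, while repeating one fixed
# order always reproduces the same value.
print(forward, backward, forward == backward)
print(ordered_sum(values, [0, 1, 2]) == forward)
```

Pinning the reduction order, independent of how requests happen to be batched, is the general idea behind a batch-invariant operator.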

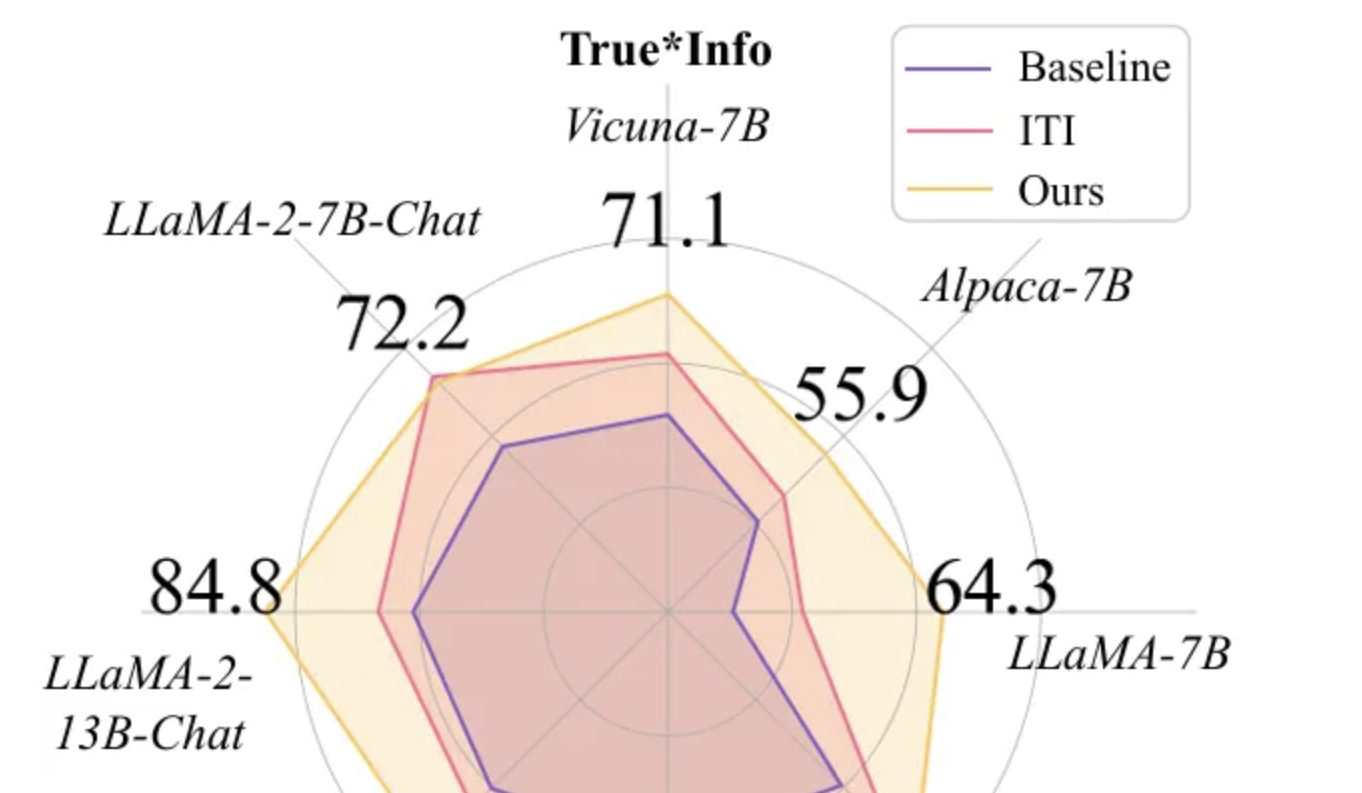

Token-Aware Editing (TAE) Enhances Large Model Truthfulness: A Beihang University research team presented the Token-Aware Editing (TAE) method at EMNLP 2025, which improves the truthfulness metric of large models on the TruthfulQA task by 25.8% through token-aware inference-time representation editing, setting a new SOTA. This method requires no training, is plug-and-play, effectively resolves issues of directional bias and inflexible editing strength in traditional methods, and can be widely applied in dialogue systems and content moderation. (Source: 量子位)

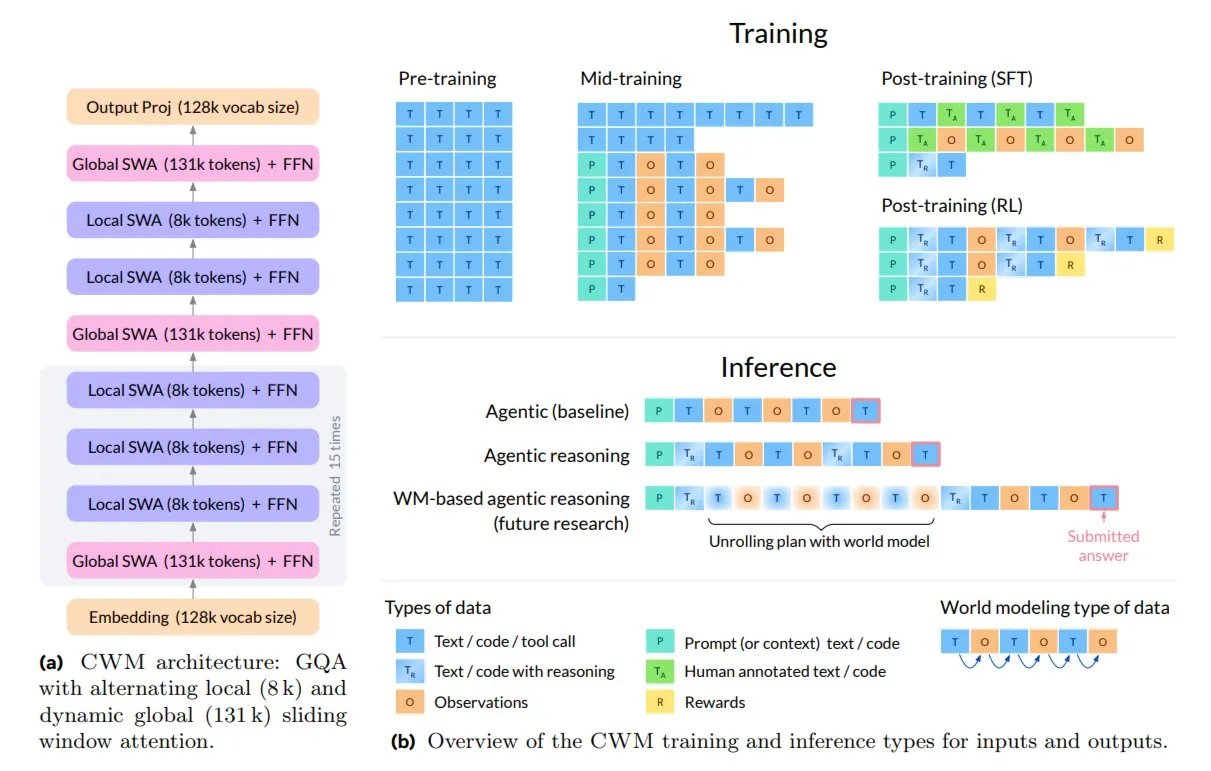

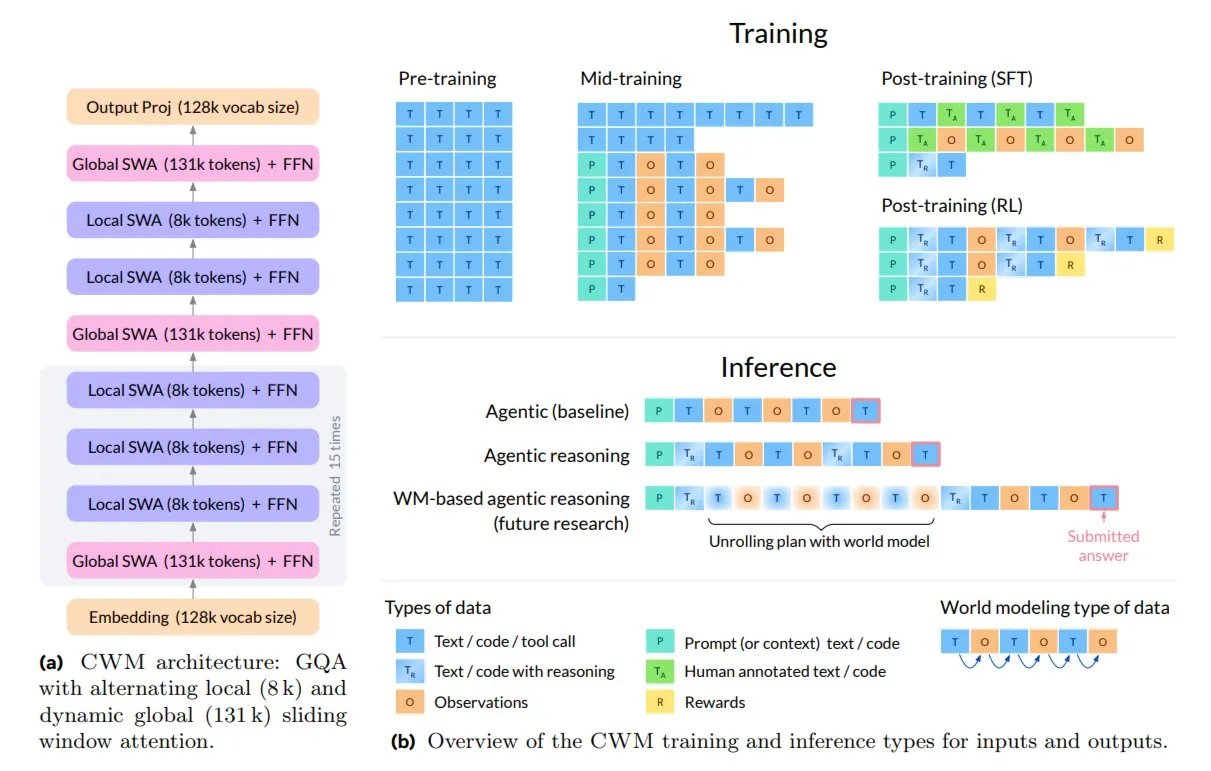

Meta Releases Code World Model (CWM) for Coding and Reasoning: Meta AI has released a new 32B open-source model, Code World Model (CWM), designed specifically for coding and reasoning. CWM not only learns code syntax but also understands its semantics and execution process, supporting multi-turn software engineering tasks and long contexts. Trained through execution traces and agent interactions, it represents a shift from text auto-completion to models capable of planning, debugging, and verifying code. (Source: TheTuringPost)

🧰 Tools

Replit P1 Product Launch: Replit is launching its P1 product, signaling new advancements in its AI development environment or related services. Replit has consistently focused on empowering developers with AI, and the P1 launch could bring smarter coding assistance, collaboration features, or new integration capabilities, warranting attention from the developer community. (Source: amasad)

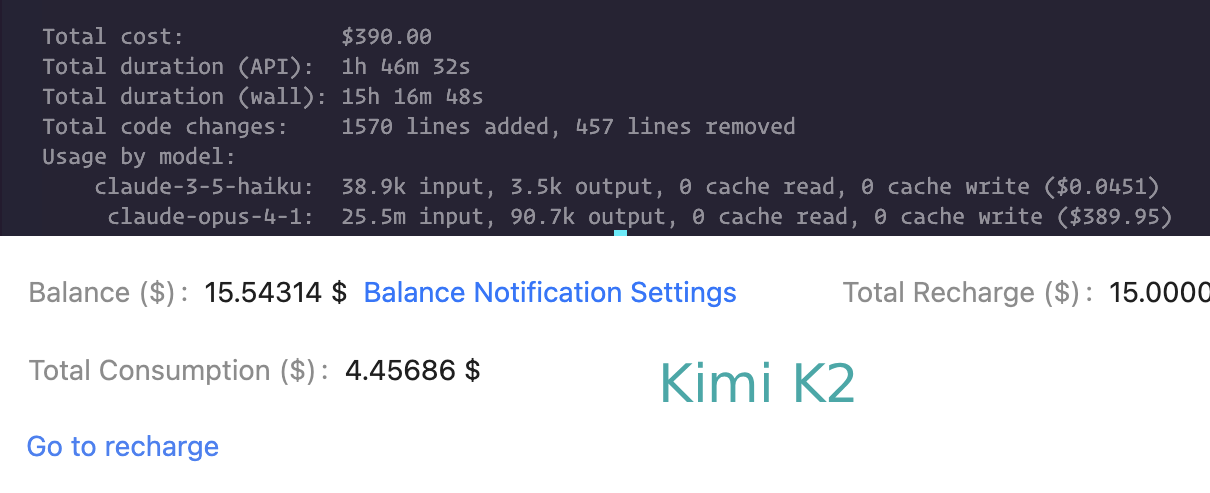

Claude Code vs. Kimi K2: Coding Capability Comparison: Users are comparing the performance of Claude Code and Kimi K2 on coding tasks. Kimi K2 is slower but more cost-effective, while Claude Code (and Codex) are favored for their speed and ability to solve complex problems. This reflects the trade-off between performance and cost that developers weigh when choosing LLM coding assistants. (Source: matanSF, Reddit r/ClaudeAI)

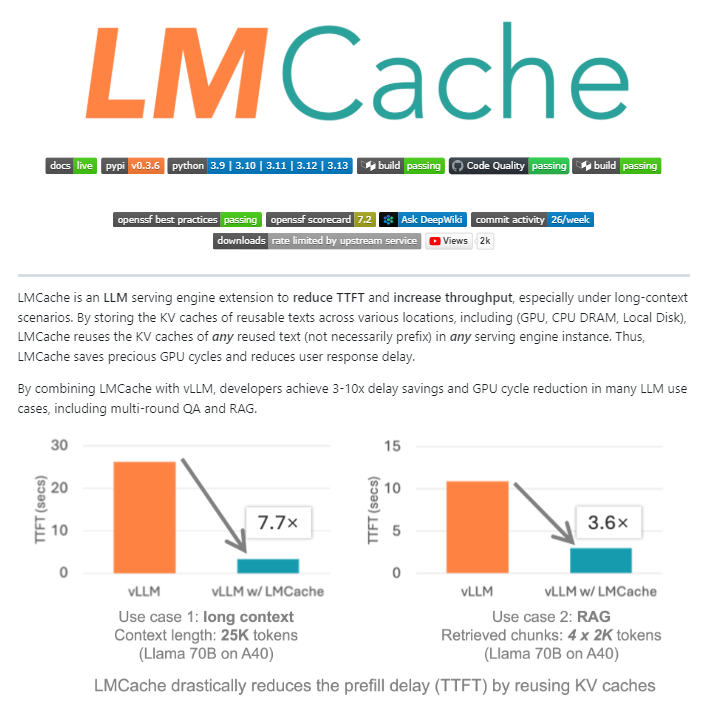

LMCache: An Open-Source Caching Layer for LLM Serving Engines: LMCache is an open-source extension that acts as a caching layer for LLM serving engines. It optimizes large-scale LLM inference by reusing the key-value (KV) cache states of previously processed text across GPU, CPU, and local disk. The tool can reduce RAG costs by 4-10x, shorten time to first token, and improve throughput under load, and it is especially suitable for long-context scenarios. (Source: TheTuringPost)
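
The reuse idea can be sketched as a lookup keyed by a hash of the token prefix, searched from the fastest tier to the slowest. This is a toy model under stated assumptions, not LMCache's API: the class `TieredKVCache`, the function `longest_cached_prefix`, the tier names, and the string placeholders standing in for KV tensors are all hypothetical.

```python
import hashlib


class TieredKVCache:
    """Toy cache that checks tiers in order, fastest first."""

    def __init__(self, tier_names=("gpu", "cpu", "disk")):
        self.tiers = {name: {} for name in tier_names}

    @staticmethod
    def key_for(tokens):
        # Hash the whole prefix so identical prefixes share one entry.
        joined = ",".join(str(t) for t in tokens)
        return hashlib.sha256(joined.encode("utf-8")).hexdigest()

    def put(self, tokens, kv_state, tier="gpu"):
        self.tiers[tier][self.key_for(tokens)] = kv_state

    def get(self, tokens):
        key = self.key_for(tokens)
        for name, store in self.tiers.items():
            if key in store:
                return name, store[key]
        return None, None


def longest_cached_prefix(cache, tokens, chunk_size):
    """Return how many leading tokens already have cached KV state."""
    reused = 0
    for end in range(chunk_size, len(tokens) + 1, chunk_size):
        tier, _ = cache.get(tokens[:end])
        if tier is None:
            break
        reused = end
    return reused


cache = TieredKVCache()
document = [1, 2, 3, 4, 5, 6, 7, 8]
cache.put(document[:4], "kv[0:4]", tier="cpu")
cache.put(document[:8], "kv[0:8]", tier="disk")

# A new request that starts with the same document only needs to
# compute KV state for the tokens after the cached prefix.
reused = longest_cached_prefix(cache, document + [9, 10], chunk_size=4)
print(reused)
```

In a RAG workload the shared prefix is often a long retrieved document, which is why skipping its prefill can cut cost and time to first token.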

AI-Powered Development Agent (npm package): A mature AI-powered development agent workflow has been released as an npm package, aiming to streamline the software development lifecycle. The agent covers the entire process from requirements discovery and task planning to execution and review, promising to improve development efficiency and code quality. (Source: mbusigin)

LLM Inference Performance Benchmarks (Qwen3 235B, Kimi K2): Benchmarks of 4-bit, CPU-offloaded inference on specific hardware show Qwen3 235B reaching approximately 60 tokens/s, while Kimi K2 reaches around 8-9 tokens/s. The data is a useful reference point for users selecting models and hardware for local LLM deployments. (Source: TheZachMueller)

Personal Memory AI: Overcoming Conversational Amnesia: A user has developed a personal memory AI that successfully overcomes traditional AI’s “conversational amnesia” by referencing its personal profile, knowledge base, and events. This customized AI can provide a more coherent and personalized interactive experience, opening new avenues for AI applications in personal assistance and emotional support. (Source: Reddit r/artificial)

NVIDIA Dynamo: Data Center Scale Distributed Inference Serving Framework: NVIDIA Dynamo is a high-throughput, low-latency inference framework designed for serving generative AI and reasoning models in multi-node distributed environments. Built with Rust and Python, the framework improves inference efficiency and throughput through features such as disaggregated serving and KV cache offloading, and it supports various LLM engines. (Source: GitHub Trending)

Model Context Protocol (MCP) TypeScript SDK: The MCP TypeScript SDK is an official development kit that implements the MCP specification, allowing developers to build secure, standardized servers and clients to expose data (resources) and functionality (tools) to LLM applications. It provides a unified way for LLM applications to manage context and integrate functionality. (Source: GitHub Trending)

AI Aids 3D Content Generation in Gaming: AI tools like Tencent Cloud’s Hunyuan 3D and VAST’s Tripo are being widely adopted by game developers for 3D object and character modeling in games. These technologies significantly enhance the efficiency and quality of 3D content creation, signaling the increasing importance of AI in the game development pipeline. (Source: 量子位)

HakkoAI: Real-time VLM Gaming Companion: HakkoAI, the international version of Doudou AI Game Companion, is a real-time gaming companion based on a Vision-Language Model (VLM) that understands game screens and provides deep companionship. The model’s performance in gaming scenarios surpasses top general-purpose models like GPT-4o, demonstrating AI’s potential in personalized gaming experiences. (Source: 量子位)

Consistency Breakthrough in AI Video Generation: MiniMax AI’s Hailuo S2V-01 model has resolved the long-standing facial inconsistency issue in AI video generation, achieving identity preservation. This means AI-generated characters can maintain stable expressions, emotions, and lighting throughout a video, bringing more realistic and credible visual experiences for virtual influencers, brand imaging, and storytelling. (Source: Ronald_vanLoon)

📚 Learning

Modular Manifolds in Neural Network Optimization: Research introduces modular manifolds as a theoretical advancement in neural network and optimizer design principles. By co-designing optimizers with manifold constraints on weight matrices, it promises more stable and high-performing neural network training. (Source: rown, NandoDF)

Demystifying ‘Tokenizer-Free’ Language Model Approaches: A blog post delves into so-called ‘tokenizer-free’ language model approaches, explaining why they aren’t truly tokenizer-free and why tokenizers are often criticized in the AI community. The article emphasizes that even ‘tokenizer-free’ methods involve encoding choices, which are crucial for model performance. (Source: YejinChoinka, jxmnop)

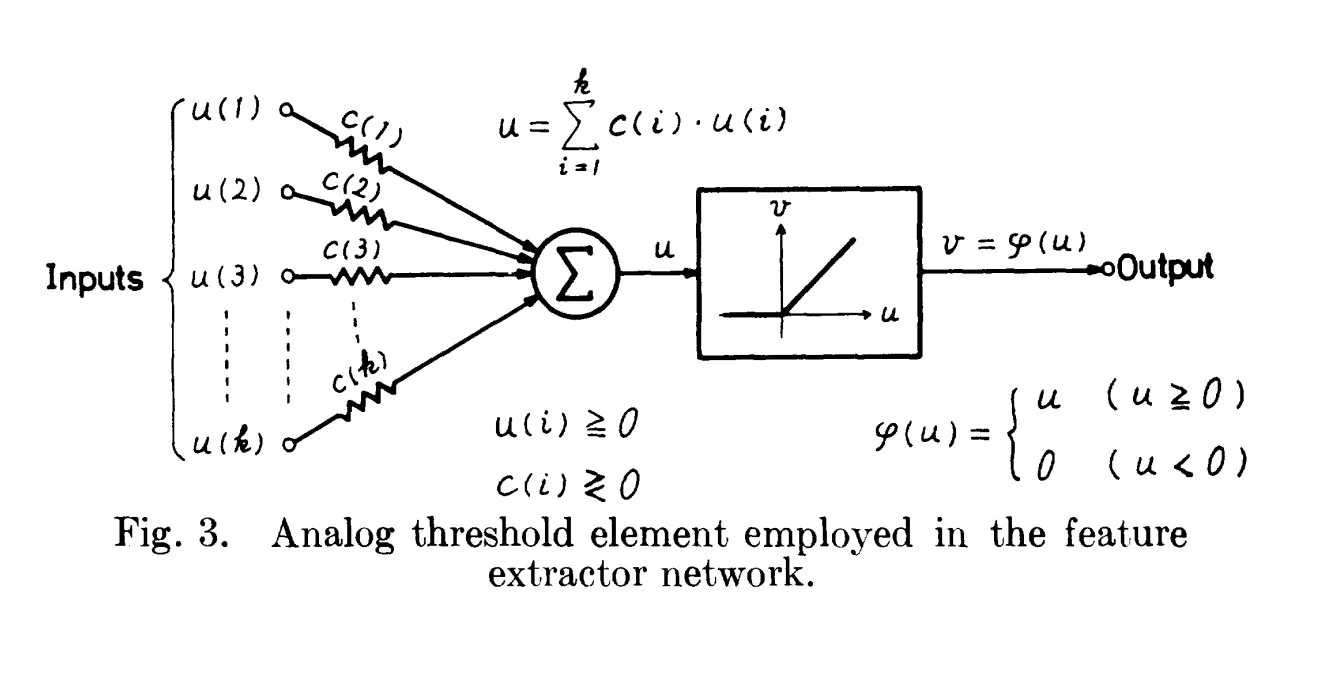

The Historical Origins of ReLU: Tracing Back to 1969: A discussion points out that Fukushima’s 1969 paper already contained a clear early form of the ReLU activation function, providing important historical context for this fundamental concept in deep learning. This suggests that the foundations of many modern AI technologies may have emerged earlier than commonly assumed. (Source: SchmidhuberAI)

Meta’s Code World Model (CWM): Meta has released a new 32B open-source model, Code World Model (CWM), for coding and reasoning. Trained through execution traces and agent interactions, CWM aims to understand code semantics and execution processes, representing a shift from simple code completion to intelligent models capable of planning, debugging, and verifying code. (Source: TheTuringPost)

The Critical Role of Data in AI: Community discussions emphasize that “we don’t talk enough about data” in the AI field, highlighting the extreme importance of data as the cornerstone of AI development. High-quality, diverse data is the core driver of model capabilities and generalization, and its importance should not be underestimated. (Source: Dorialexander)

‘Super Weights’ in LLM Compression: Research finds that retaining a small subset of ‘super weights’ is crucial for maintaining model functionality and achieving competitive performance during LLM compression. This discovery offers new directions for developing smaller, more efficient LLMs that perform equally well. (Source: dl_weekly)

Guide to AI Agent Architectures (Beyond ReAct): A guide details 6 advanced AI agent architectures (including Self-Reflection, Plan-and-Execute, RAISE, Reflexion, LATS), designed to address the limitations of the basic ReAct pattern for complex reasoning tasks, providing developers with a blueprint for building more powerful AI agents. (Source: Reddit r/deeplearning)
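
As one concrete instance from the list above, the Plan-and-Execute pattern can be sketched in a few lines: the agent produces a full plan up front and then works through it, whereas ReAct interleaves reasoning and acting one step at a time. This is a minimal sketch rather than the guide's code; `plan_and_execute`, `toy_planner`, and `toy_executor` are hypothetical names, and the two toy functions stand in for model calls.

```python
from typing import Callable, List


def plan_and_execute(
    goal: str,
    planner: Callable[[str], List[str]],
    executor: Callable[[str, List[str]], str],
) -> List[str]:
    """Plan once, then run each step with access to earlier results."""
    steps = planner(goal)
    results: List[str] = []
    for step in steps:
        results.append(executor(step, results))
    return results


def toy_planner(goal: str) -> List[str]:
    # Placeholder for an LLM call that decomposes the goal into steps.
    return [f"research {goal}", f"summarize {goal}"]


def toy_executor(step: str, previous: List[str]) -> str:
    # Placeholder for a tool call or LLM call that carries out one step.
    return f"done: {step} (after {len(previous)} earlier steps)"


outcome = plan_and_execute("agent architectures", toy_planner, toy_executor)
for line in outcome:
    print(line)
```

A reflection-style architecture would add a critique pass over `results` before returning, possibly triggering a re-plan.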

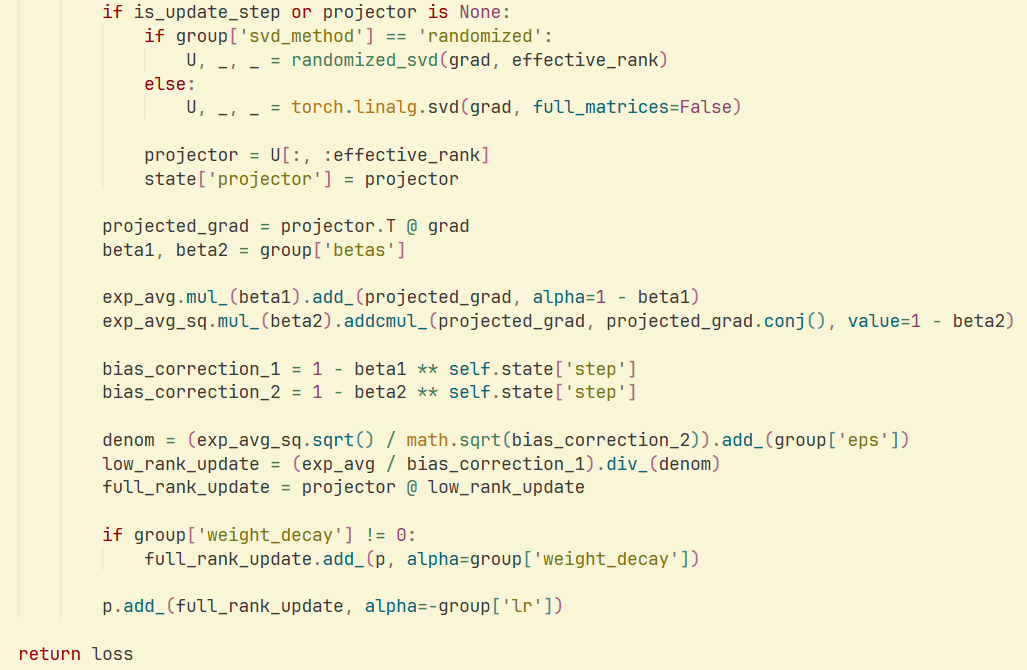

GaLore Optimizer with Randomized SVD: A study and implementation demonstrate that combining randomized SVD with the GaLore optimizer can achieve faster speeds and higher memory efficiency in LLM training, significantly reducing optimizer memory consumption. This offers new optimization strategies for large-scale model training. (Source: Reddit r/deeplearning)
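
The memory saving comes from keeping optimizer state for a low-rank projection of each gradient matrix instead of the full matrix. The dependency-free sketch below shows only the randomized range-finder stage that randomized SVD is built on, used here to obtain an orthonormal projection basis. A full randomized SVD would additionally decompose the small projected matrix. It is not the study's implementation, and all function names are hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def orthonormal_columns(y):
    """Modified Gram-Schmidt on the columns of y (assumes full column rank)."""
    basis = []
    for col in transpose(y):
        v = list(col)
        for q in basis:
            dot = sum(vi * qi for vi, qi in zip(v, q))
            v = [vi - dot * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(sum(vi * vi for vi in v))
        basis.append([vi / norm for vi in v])
    return transpose(basis)


def randomized_projector(grad, rank, seed=0):
    """Return an m x rank orthonormal basis approximating the range of grad."""
    rng = random.Random(seed)
    n_cols = len(grad[0])
    omega = [[rng.gauss(0.0, 1.0) for _ in range(rank)] for _ in range(n_cols)]
    return orthonormal_columns(matmul(grad, omega))


def project(grad, p):
    """Compress an m x n gradient to rank x n; optimizer state lives here."""
    return matmul(transpose(p), grad)


def project_back(low_rank, p):
    """Map a rank x n update back to the full m x n weight shape."""
    return matmul(p, low_rank)


# A rank-1 "gradient": the outer product of [1, 2, 3] and [4, 5].
grad = [[4.0, 5.0], [8.0, 10.0], [12.0, 15.0]]
p = randomized_projector(grad, rank=1)
low = project(grad, p)
restored = project_back(low, p)
print(len(low), len(low[0]))
```

Because the example gradient is exactly rank 1, projecting to rank 1 and back recovers it up to rounding. Real gradients are only approximately low-rank, so the projection is lossy.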

💼 Business

Nvidia Considers New Business Model: Leasing AI Chips: Nvidia is exploring a new business model to offer leasing services for AI chips to companies unable to purchase them directly. This move aims to broaden access to AI computing resources and maintain market activity, potentially having a profound impact on the popularization of AI infrastructure. (Source: teortaxesTex)

Untapped Capital Launches Second Fund, Focusing on Early-Stage AI Investments: Untapped Capital announced the launch of its second fund, dedicated to pre-seed investments in the AI sector. This indicates continued strong interest from the venture capital community in early-stage AI startups, providing crucial financial support for emerging AI technologies and companies. (Source: yoheinakajima)

xAI Offers Grok Model to U.S. Government: Elon Musk’s xAI has proposed offering its Grok model to the U.S. federal government for 42 cents. This highly symbolic gesture marks a strategic step for xAI in the government contracting sector, potentially influencing the landscape of AI applications in the public sector. (Source: Reddit r/artificial)

🌟 Community

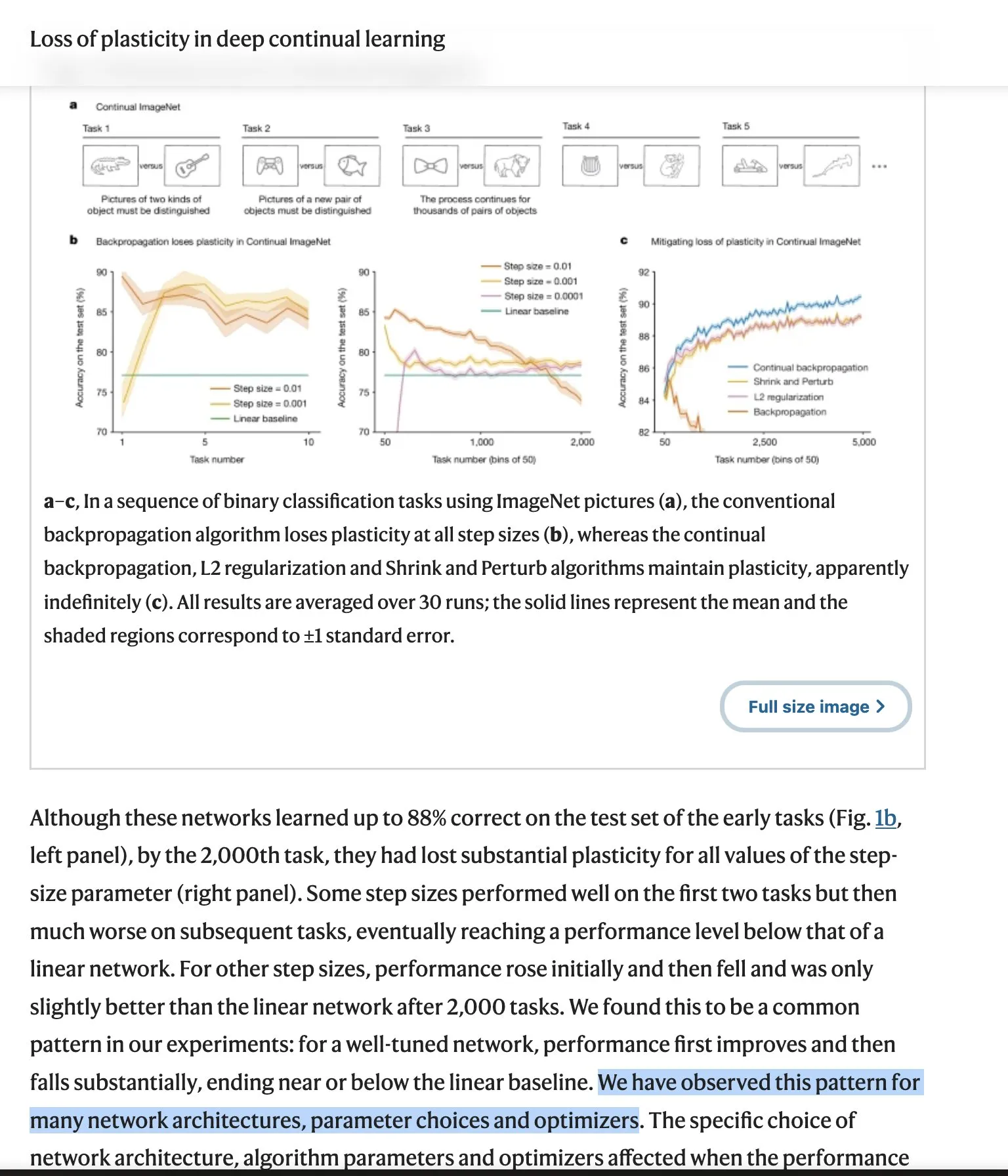

LLM ‘Bitter Lesson’ Debate Continues: Richard Sutton, often called the father of reinforcement learning and author of ‘The Bitter Lesson,’ has sparked widespread community debate by questioning whether LLMs are capable of true continual learning and suggesting that new architectures are required. Opponents highlight the success of scaling, data effectiveness, and engineering efforts, while Sutton’s critique delves into the philosophical aspects of language-world models and intentionality. The debate touches on core challenges and future directions in AI development. (Source: Teknium1, scaling01, teortaxesTex, Dorialexander, NandoDF, tokenbender, rasbt, dejavucoder, francoisfleuret, natolambert, vikhyatk)

Concerns Over AI Safety and Control: Community concerns over AI safety and control are growing, ranging from AI pessimists’ worries about AI freely browsing the internet to fears that downloadable, ethically unconstrained local AI models could be used for hacking and generating malicious content. These discussions reflect the complex ethical and societal challenges posed by the development of AI technology. (Source: jeremyphoward, Reddit r/ArtificialInteligence)

OpenAI GPT-4o/GPT-5 Routing Issues and User Dissatisfaction: ChatGPT Plus users widely complain that their selected models (4o, 4.5, 5) are secretly being rerouted to ‘dumber,’ ‘more aloof,’ ‘safe’ models, triggering a crisis of trust and reports of blocked cancellations. While OpenAI officially states this is not ‘expected behavior,’ users remain frustrated, and the complaints extend to concerns about AI companions and content censorship. (Source: Reddit r/ChatGPT, scaling01, MIT Technology Review, Reddit r/ClaudeAI)

Richard Sutton’s Views on AI Succession: Turing Award laureate Richard Sutton believes that succession to digital superintelligence is ‘inevitable.’ He argues that humanity lacks a unified view, that intelligence will eventually be understood, and that intelligent agents will inevitably acquire resources and power. This perspective has sparked extensive discussion about the future development of AI and the fate of humanity. (Source: Reddit r/ArtificialInteligence, Reddit r/artificial)

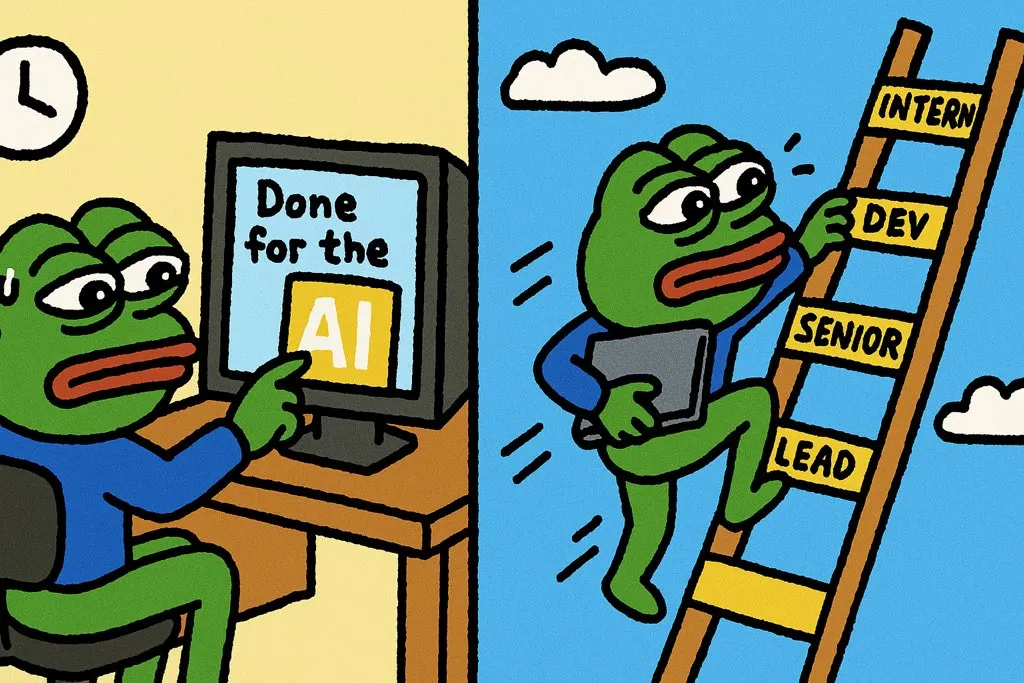

AI Productivity Philosophy: 10x Growth, Not Just Earlier Quitting Time: Community discussions emphasize that the right way to use AI is not merely to finish work faster, but to achieve a 10x increase in output within the same timeframe. This philosophy encourages leveraging AI to enhance personal capabilities and career development, rather than solely pursuing efficiency, thereby standing out in the workplace. (Source: cto_junior)

AI Model Quality Perception and Humor: Users rave about the creativity and humor of certain LLMs (e.g., GPT-4.5), describing them as ‘amazing’ and ‘unparalleled’. Concurrently, the community discusses AI with humor, such as Merriam-Webster’s joke about releasing new LLMs, reflecting the widespread cultural penetration of LLMs. (Source: giffmana, suchenzang)

AI Ethics: The Debate on Curing Cancer vs. AGI Goals: The community discussed the ethical question of whether ‘curing cancer is a better goal than achieving AGI (Artificial General Intelligence)’. This reflects a broad ethical debate regarding AI development priorities: whether to prioritize humanitarian applications or pursue deeper breakthroughs in intelligence. (Source: iScienceLuvr)

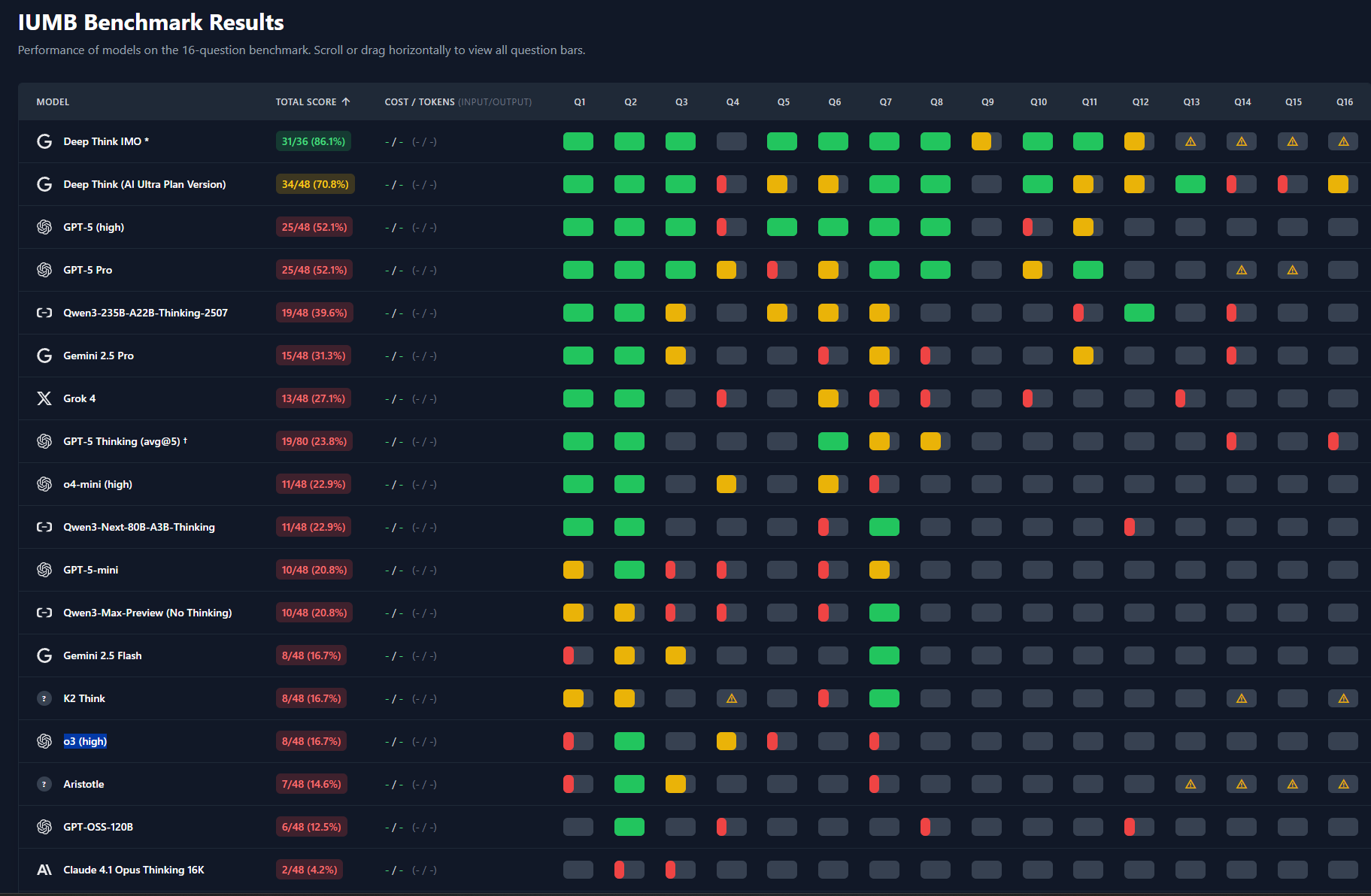

LLM Capability Comparison: Claude Opus vs. GPT-5 Math Performance: Users noted that Claude Opus 4.1 excels at economically valuable tasks but performs poorly in college-level mathematics, while GPT-5 has made significant leaps in mathematical capabilities. This highlights how different LLMs have distinct strengths in specific areas. (Source: scaling01)

AI Agent Safety: Workaround for rm -rf Command: A developer shared a practical workaround for AI agents repeatedly using rm -rf to delete important files: aliasing the rm command to trash, thereby moving files to the recycle bin instead of permanent deletion, effectively preventing accidental data loss. (Source: Reddit r/ClaudeAI)
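
The post describes a shell alias. The same soft-delete idea can be expressed as a small Python helper that an agent's tooling could call instead of deleting outright. This is a sketch under assumptions: `move_to_trash` and the `.trash` directory are hypothetical choices for illustration, not part of the original workaround.

```python
import shutil
import tempfile
import time
from pathlib import Path


def move_to_trash(path, trash_dir):
    """Move a file or directory into trash_dir instead of deleting it.

    Returns the new location so the item can be restored later.
    """
    source = Path(path)
    trash = Path(trash_dir)
    trash.mkdir(parents=True, exist_ok=True)
    # Prefix with a nanosecond timestamp so same-named items do not collide.
    target = trash / f"{time.time_ns()}-{source.name}"
    shutil.move(str(source), str(target))
    return target


# Demonstration inside a temporary directory so nothing real is touched.
with tempfile.TemporaryDirectory() as workdir:
    victim = Path(workdir) / "notes.txt"
    victim.write_text("important")
    kept = move_to_trash(victim, Path(workdir) / ".trash")
    gone_from_original_place = not victim.exists()
    recovered_text = kept.read_text()

print(gone_from_original_place, recovered_text)
```

Unlike a permanent delete, the moved file can be copied back from the trash directory if the agent removed something it should not have.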

AI Privacy and Data Usage Concerns: Companies like LinkedIn use user data for AI training by default and require users to actively opt out, a practice that has sparked ongoing concerns about data privacy in the age of AI. Community discussions emphasize the need for user control over personal data and the importance of transparent data policies. (Source: Reddit r/artificial)

💡 Other

AI Applications in Agriculture: GUSS Herbicide Sprayer: The GUSS herbicide sprayer, as an autonomous device, achieves precise and efficient spraying operations in agriculture. This demonstrates the practical application potential of AI technology in optimizing agricultural production processes, reducing resource waste, and increasing crop yields. (Source: Ronald_vanLoon)

AI’s Impact on Developer Employment: Community discussions indicate that AI has not eliminated developer jobs; instead, by increasing efficiency and expanding the scope of work, it has created new development positions. This suggests that AI acts more as an enabling tool than as a labor replacement, reshaping the job market rather than shrinking it. (Source: Ronald_vanLoon)

U.S. Military Faces Challenges Deploying AI Weapons: The U.S. military is encountering difficulties in deploying AI weapons and is now shifting related efforts to a new organization (DAWG) to accelerate drone procurement plans. This reflects the complexities AI technology faces in military applications, including technical integration, ethical considerations, and practical operational challenges. (Source: Reddit r/ArtificialInteligence)