Keywords: OpenAI GDPval benchmark, Claude Opus 4.1, GPT-5, AI evaluation, economic task performance, AI model economic impact assessment, Claude Opus 4.1 vs GPT-5, GDPval benchmark testing, AI practical application capabilities, cross-industry AI performance comparison

🔥 Focus

OpenAI Releases GDPval Benchmark: Claude Opus 4.1 Outperforms GPT-5 : OpenAI has released GDPval, a new benchmark that evaluates AI models on real-world, economically valuable tasks spanning 44 occupations across 9 industries. In the initial results, Anthropic’s Claude Opus 4.1 was rated as good as or better than human experts on nearly half of the tasks, ahead of GPT-5. OpenAI acknowledged that Claude excelled on aesthetics, while GPT-5 led on accuracy. The benchmark marks a shift in AI evaluation toward measuring actual economic impact, and the results underline how quickly AI capabilities are advancing. (Source: OpenAI, menhguin, MillionInt, _sholtodouglas, polynoamial, menhguin, aidan_mclau, sammcallister, menhguin, andy_l_jones, tokenbender, scaling01, scaling01, scaling01, scaling01, scaling01, scaling01, alexwei_, scaling01, scaling01, scaling01, gdb, teortaxesTex, snsf, dilipkay, scaling01, scaling01, jachiam0, jachiam0, sama, ClementDelangue, AymericRoucher, shxf0072, Reddit r/artificial, 36氪, 36氪, 36氪)
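The ‘nearly half’ figure is a win-or-tie rate: for each task, graders compare the model’s deliverable with an expert’s and record whether the model did better, as well, or worse. A minimal sketch of that tally, assuming a simple per-task label of `win`, `tie`, or `loss` (the labels, the function name, and the sample grades are hypothetical, not GDPval data or OpenAI’s grading code):

```python
from collections import Counter

def win_or_tie_rate(judgments):
    """Share of tasks where the model's deliverable was judged better than
    or as good as the expert's. Labels are hypothetical: win / tie / loss."""
    if not judgments:
        raise ValueError("need at least one judgment")
    counts = Counter(judgments)
    unexpected = set(counts) - {"win", "tie", "loss"}
    if unexpected:
        raise ValueError(f"unexpected labels: {sorted(unexpected)}")
    # Wins and ties both count as "reaching or surpassing" the expert.
    return (counts["win"] + counts["tie"]) / len(judgments)

# Hypothetical grades for 10 tasks (illustrative only, not GDPval results)
grades = ["win", "loss", "tie", "loss", "win", "loss", "loss", "tie", "loss", "win"]
print(f"win-or-tie rate: {win_or_tie_rate(grades):.0%}")  # 5 of 10 tasks -> 50%
```

Counting ties together with wins is what makes a headline like ‘reached or surpassed expert level’ possible; reporting wins alone would give a lower number.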

AI and Wikipedia’s ‘Doom Spiral’ for Vulnerable Languages : AI models learn languages by scraping internet text, and for many vulnerable languages Wikipedia is the largest online data source. However, a flood of low-quality, AI-translated content into these smaller Wikipedia editions has introduced widespread errors. This creates a ‘garbage in, garbage out’ vicious cycle: models trained on the flawed pages produce even less reliable translations in these languages, which may accelerate their decline. The Greenlandic Wikipedia has been proposed for closure because of ‘gibberish’ produced by AI tools. The case highlights AI’s potential negative impact on cultural diversity and language preservation. (Source: MIT Technology Review, MIT Technology Review)

OpenAI Top Researcher Song Yang Jumps to Meta : Song Yang, head of OpenAI’s Strategic Exploration team and a key contributor to diffusion models, has moved to Meta Superintelligence Labs (MSL), where he reports to Chief Scientist Shengjia Zhao. A prodigy who entered Tsinghua University at 16, Song is regarded in the industry as one of the ‘most powerful brains’ thanks to his work on consistency models and other achievements at OpenAI. The move is another high-profile case of Meta poaching talent from OpenAI and has drawn industry attention to the competition for AI talent and to shifting research directions. (Source: 36氪, dotey, jeremyphoward, teortaxesTex)

China Telecom Tianyi AI Releases High-Quality Dataset with Over 10 Trillion Tokens : China Telecom Tianyi AI has released a general-purpose corpus dataset for large models totaling 350TB of storage and more than 10 trillion tokens, along with specialized datasets covering 14 key industries. The meticulously annotated and optimized data includes multimodal industry data and aims to improve model performance and generalization. China Telecom stresses that high-quality datasets are the core fuel of AI development and is building a ‘data-model-service’ closed loop on its Xingchen MaaS platform. The company says it is committed to inclusive AI development and localized innovation and has already trained trillion-parameter large models. (Source: 量子位)

China’s Guoxing Yuhang Achieves Global First in Commercializing Space Computing Constellation : China’s Guoxing Yuhang has launched its space computing constellation and put it into routine commercial operation, moving space computing from ‘possible’ to ‘usable.’ The constellation, made up of the first batch of ‘Xingsuan’ satellites, is the first step toward a planned space-based computing infrastructure of 2,800 computing satellites with total computing power exceeding 100,000 P, enough to run models with billions of parameters. In this milestone, a road recognition model was deployed to a satellite in orbit, which handled the entire process from image acquisition through model inference to result transmission. This is the first in-orbit run of an algorithm for the transportation industry and offers a new paradigm for extending global AI infrastructure into space. (Source: 量子位)

China Restricts Nvidia Chip Procurement, Accelerates Semiconductor Self-Sufficiency : China has prohibited its major tech companies from purchasing Nvidia chips, a move suggesting that China has made enough progress in semiconductors to reduce its reliance on US-designed chips. This highlights both the vulnerability created by US dependence on semiconductor manufacturing in Taiwan and China’s growing self-sufficiency. For example, the DeepSeek-R1-Safe model was trained on 1,000 Huawei Ascend chips. Nvidia’s Jensen Huang has also noted that 50% of the world’s AI researchers come from China. (Source: AndrewYNg, Plinz)

🎯 Trends

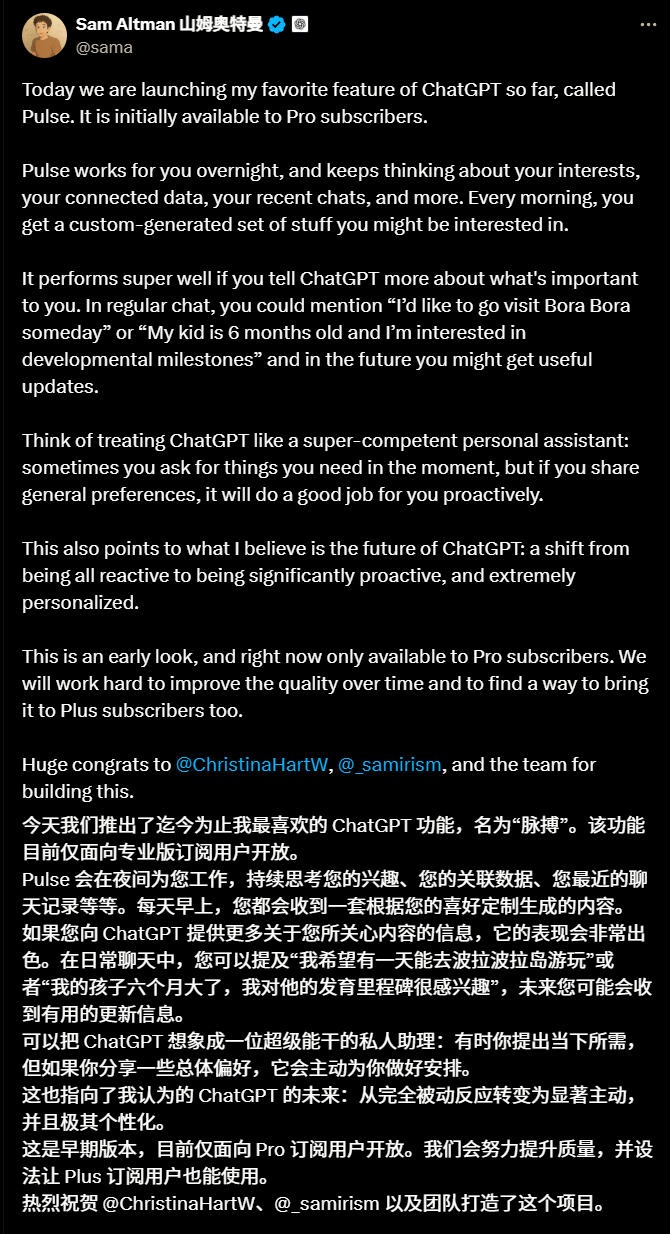

ChatGPT Pulse Launches, Ushering in an Era of Proactive Intelligence : OpenAI has launched a preview of ChatGPT Pulse for Pro users, turning ChatGPT from a passive Q&A tool into a proactive assistant. In the background, Pulse generates personalized daily briefings, presented as cards, based on the user’s chat history, feedback, and connected apps (e.g., Calendar, Gmail). It aims to provide a goal-oriented information experience that is not designed to be addictive. Sam Altman called it his ‘favorite feature,’ signaling that ChatGPT is moving toward highly personalized, proactive services. (Source: Teknium1, openai, dejavucoder, natolambert, gdb, jam3scampbell, jam3scampbell, scaling01, sama, sama, scaling01, nickaturley, kevinweil, dotey, raizamrtn, BlackHC, op7418, 36氪, 36氪, 36氪, 36氪, 量子位)

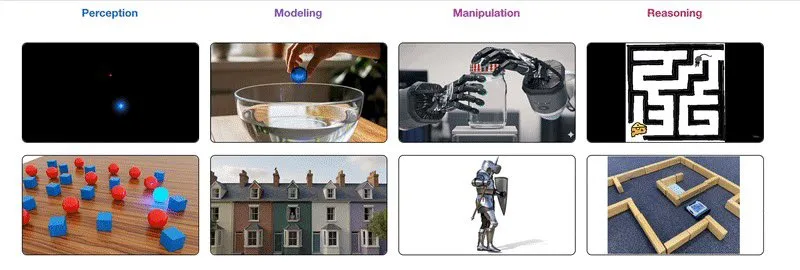

Google Releases Gemini Robotics 1.5 Series, Enabling ‘Cross-Species’ Robot Learning : Google DeepMind has released the Gemini Robotics 1.5 model series (Gemini Robotics 1.5 and Gemini Robotics-ER 1.5), designed to give robots stronger ‘think-before-acting’ capabilities and the ability to learn across embodiments. Gemini Robotics-ER 1.5 serves as the ‘brain’ for planning and decision-making, while Gemini Robotics 1.5 serves as the ‘cerebellum’ that executes actions, and the two work in tandem. The series excels at embodied reasoning and can transfer motions learned on one robot to another, which could advance the development of general-purpose robots. (Source: Teknium1, nin_artificial, dejavucoder, crystalsssup, scaling01, jon_lee0, BlackHC, Google, demishassabis, shaneguML, demishassabis, JeffDean, 36氪, 36氪)

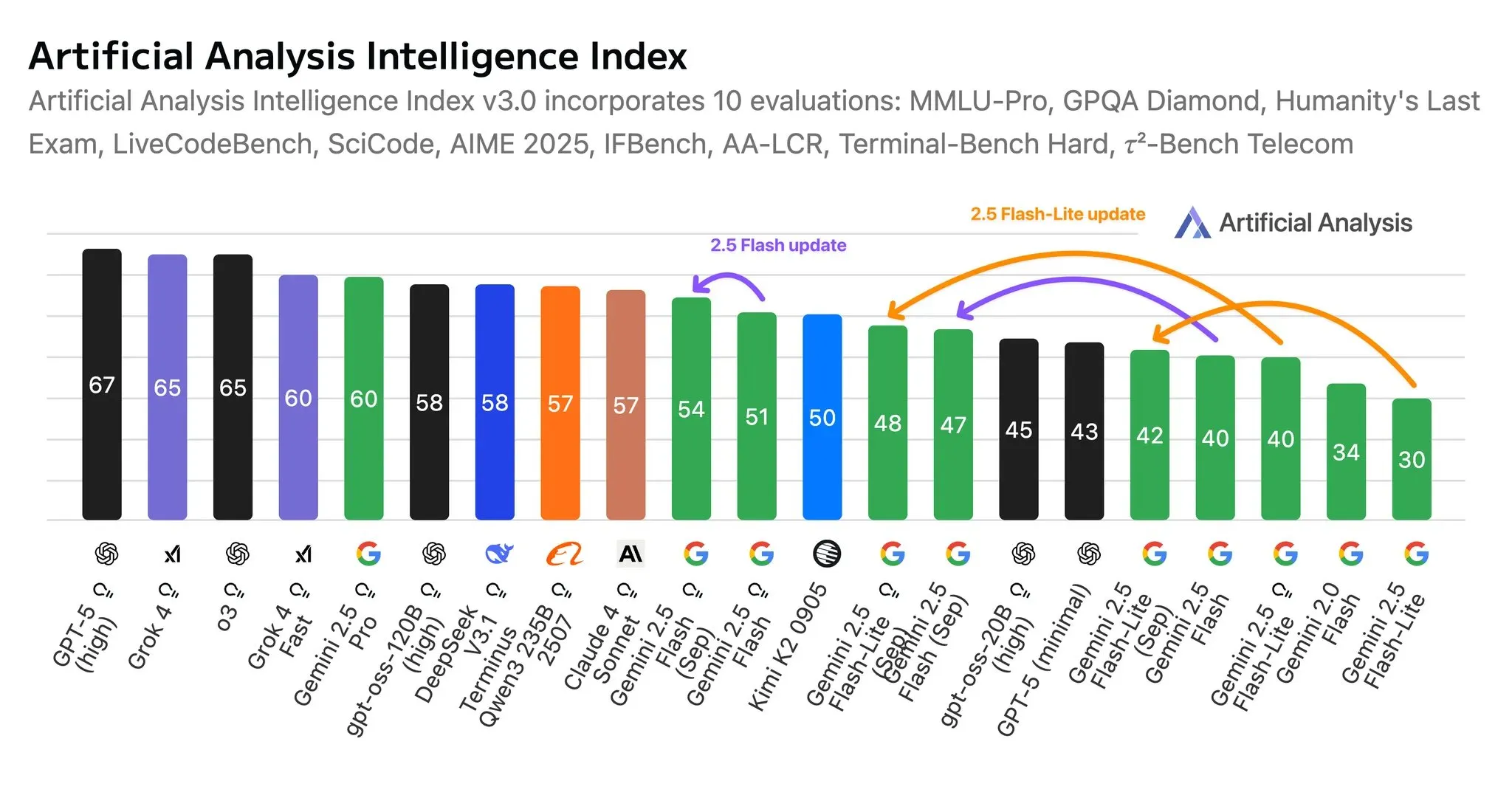

Google Releases Gemini 2.5 Flash Series Model Updates : Google has announced updates to its Gemini 2.5 Flash and Flash-Lite models, improving intelligence, cost-effectiveness, and token efficiency. Flash-Lite gains 8 points on the intelligence index in reasoning mode and 12 points in non-reasoning mode, while also using tokens more efficiently and running faster. The updates improve instruction following, multimodal understanding, and translation, and the Flash model is more efficient at agentic tool use. (Source: scaling01, osanseviero, Google, osanseviero, andrew_n_carr)

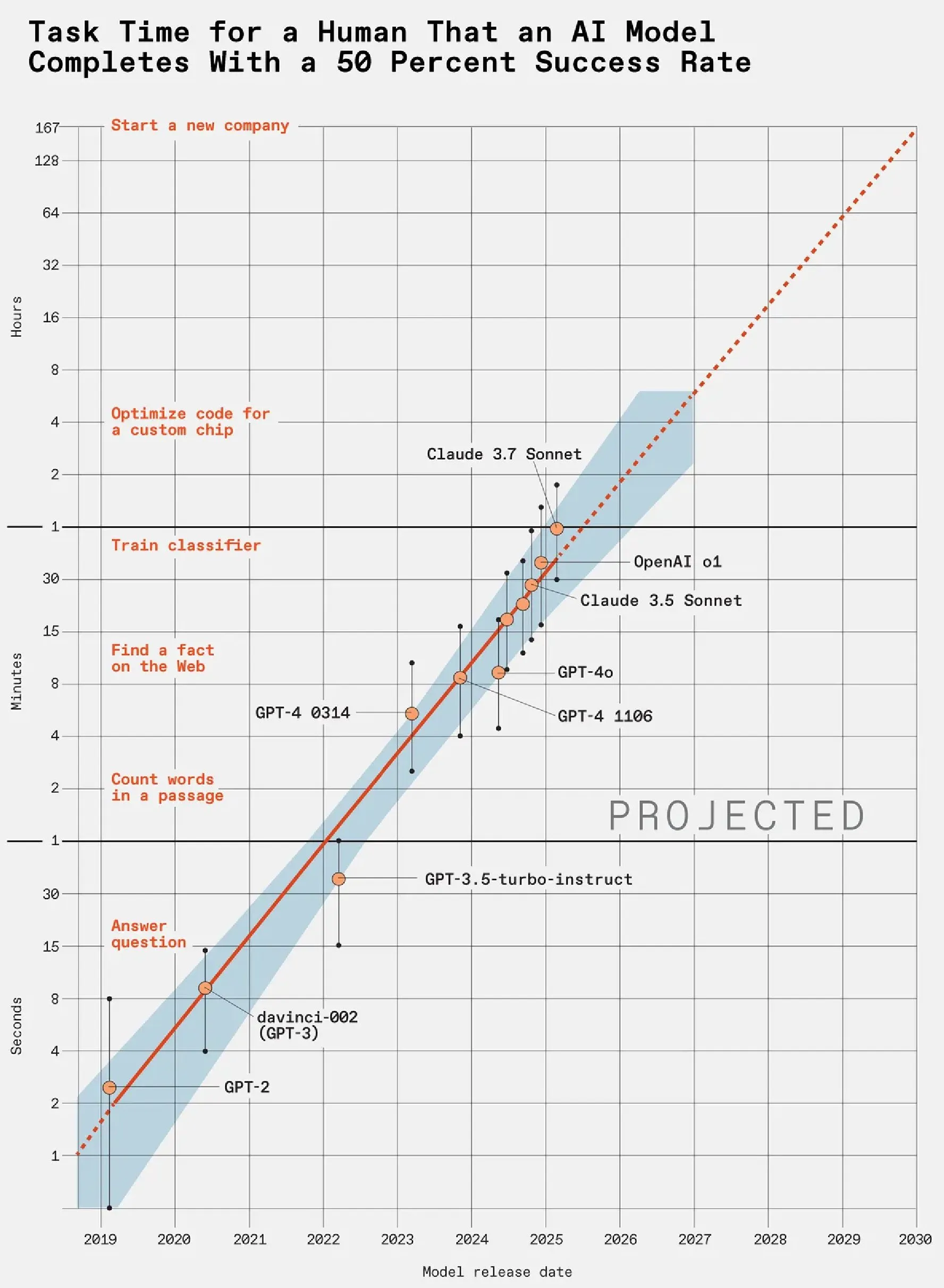

AI Capability Improvement Astounding: LLM Power Doubles Every 7 Months : A METR study measures LLM capability by the length of tasks, timed by how long humans take to do them, that models can complete, and finds that this ‘time horizon’ has been doubling about every 7 months. GPT-5 can now complete complex tasks that take humans several hours, although METR’s horizon is defined at a 50% success rate rather than consistent completion. If the trend holds, by 2030 LLMs could handle work that would take humans a year, such as founding a new company. This foreshadows a disruptive impact of AI on the labor market in the coming years. (Source: karminski3)
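The extrapolation is plain exponential arithmetic: a horizon h₀ that doubles every 7 months becomes h₀ · 2^(t/7) after t months, so reaching a target horizon takes 7 · log₂(target / h₀) months. A minimal sketch, assuming a starting horizon of 2 hours and a work-year of about 2,000 hours (both are illustrative assumptions, not METR figures; the function names are hypothetical):

```python
import math

def horizon_after(h0_hours, months, doubling_months=7.0):
    """Task horizon after `months`, assuming clean exponential growth."""
    return h0_hours * 2 ** (months / doubling_months)

def months_to_reach(h0_hours, target_hours, doubling_months=7.0):
    """Months for the horizon to grow from h0 to target under the same assumption."""
    return doubling_months * math.log2(target_hours / h0_hours)

START_HOURS = 2.0         # assumed current horizon ("several hours" in the text)
WORK_YEAR_HOURS = 2000.0  # assumed: roughly 50 weeks x 40 hours

print(horizon_after(START_HOURS, 7))  # one doubling: 4.0 hours
months = months_to_reach(START_HOURS, WORK_YEAR_HOURS)
print(f"{months:.1f} months (~{months / 12:.1f} years)")  # 69.8 months (~5.8 years)
```

With these inputs the work-year mark arrives after roughly 70 months, just under six years, which is in the same ballpark as the 2030 figure. Because the result depends on a logarithm of the ratio, a modestly different starting horizon or doubling time moves the date by a year or more.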

Video Models Show Potential for General Visual Intelligence : Video models are having a ‘GPT moment,’ showing general capabilities that range from simple perception to visual reasoning. Models such as Veo 3 already have zero-shot abilities and can solve complex tasks across the visual stack. Research suggests that video models are general-purpose ‘spatiotemporal reasoners’ and could become a key path to general visual intelligence, especially in robotics, where they may help with the ‘hardest’ problems such as semantics, planning, and common sense. (Source: shaneguML, BlackHC, AndrewLampinen, teortaxesTex)

AI Agents Evolve from ‘Assistants’ to ‘Butlers,’ Delving into the Physical World : Renowned futurist Bernard Marr predicts that by 2026, AI agents will evolve from passive assistants into proactive butlers that autonomously manage daily tasks and coordinate complex projects. AI will no longer be confined to the digital world but will integrate deeply into the physical world through autonomous driving, humanoid robots, IoT, and other forms, changing how humans interact with their environment. Major Chinese tech companies such as Tencent, Alibaba, and Baidu are also actively rolling out enterprise-grade AI agents, emphasizing task execution and delivery rather than conversation alone, and positioning them as new drivers of commercial growth. (Source: 36氪, 36氪, omarsar0)

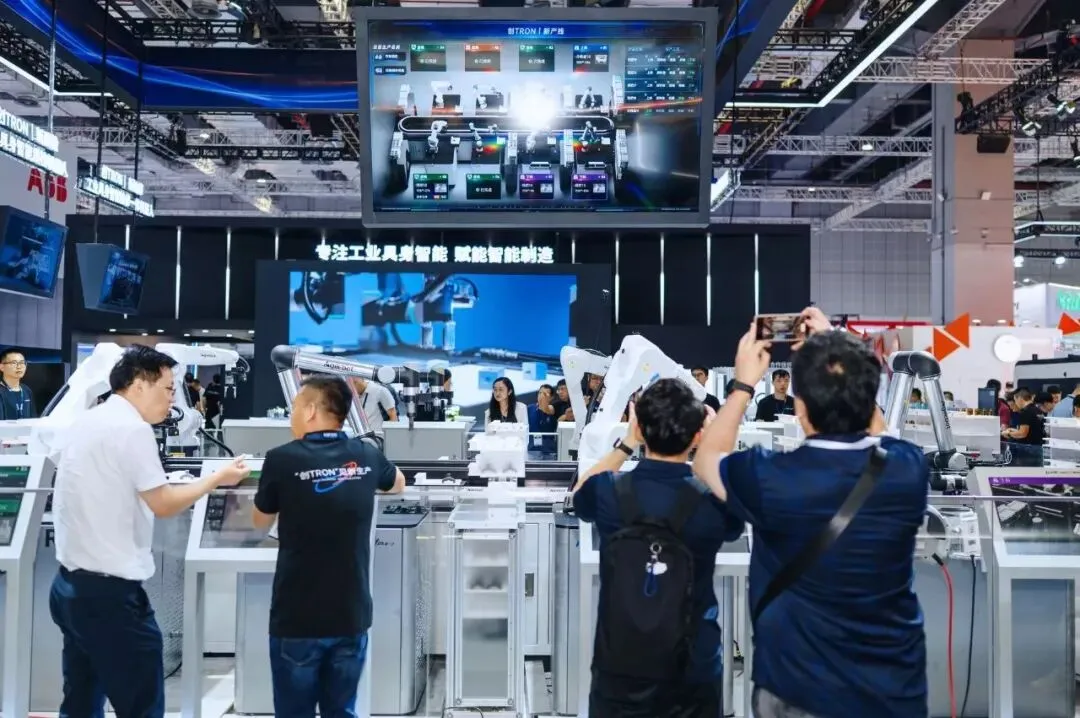

Industrial Robots Transition from ‘Lone Soldiers’ to ‘Super Production Teams’ : Industrial embodied-intelligence robots are expanding from single processes to full-workflow collaboration, forming ‘super production teams.’ For example, Micro-E Intelligence’s production line, made up of 8 such robots, can produce 4 different products with minute-level product switching and hour-level line adjustments. These robots can ‘think’ like humans, take over tasks, and improve production efficiency and flexibility. AI vision is becoming a core driving force that moves industrial robots from ‘execution tools’ to ‘embodied intelligence,’ offering a Chinese solution for the digital and intelligent transformation of manufacturing. (Source: 36氪)

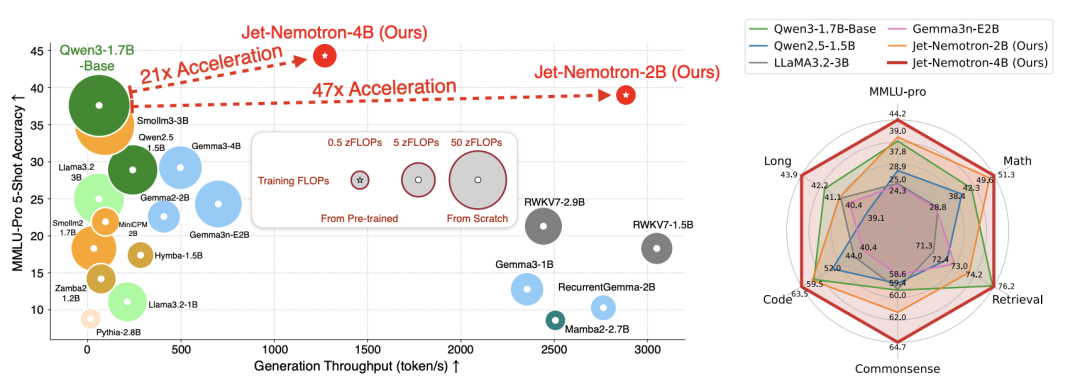

Grok-4-fast’s Efficiency Gains Potentially Linked to NVIDIA Jet-Nemotron Algorithm : Grok-4-fast’s impressive cost reductions and efficiency gains may be related to NVIDIA’s Jet-Nemotron algorithm. Using the PostNAS framework, Jet-Nemotron starts from a pre-trained full-attention model and optimizes its attention mechanism, achieving up to roughly 53x higher generation throughput while keeping accuracy comparable to top open-source models. Jet-Nemotron-2B beats Qwen3-1.7B-Base on MMLU-Pro accuracy while delivering 47x higher generation throughput and using less memory, which could substantially reduce model costs. (Source: 36氪)

NVIDIA Cosmos Reason Model Downloads Exceed 1 Million : NVIDIA’s Cosmos Reason model has passed 1 million downloads on Hugging Face and ranks near the top of the physical reasoning leaderboard. The model aims to teach AI agents and robots to think like humans and is offered as easily deployable microservices, marking a significant milestone in NVIDIA’s push into AI agents and robotics. (Source: huggingface, ClementDelangue)

Meta Releases Code World Model (CWM) to Advance Code Generation Research : Meta FAIR has released Code World Model (CWM), a 32-billion-parameter research model for exploring how world models can transform code generation and code reasoning. CWM is open-sourced under a research license that encourages the community to build on it, pointing to a new research direction in code generation. (Source: ylecun)

Google Releases EmbeddingGemma, a Lightweight Text Embedding Model : Google has introduced EmbeddingGemma, a lightweight, open text embedding model with only about 300M parameters that achieves state-of-the-art performance on the MTEB benchmark. It outperforms models twice its size, making it well suited to fast, efficient on-device AI applications. (Source: _akhaliq)
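Embedding models such as EmbeddingGemma map each text to a vector, and retrieval then ranks documents by the cosine similarity of their vectors to the query vector. A minimal sketch of that ranking step in plain Python, using hand-made 3-dimensional toy vectors in place of real model outputs (real embedding vectors have hundreds of dimensions; no model API is called, and `cosine` and `top_k` are hypothetical helper names):

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("zero vector has no direction")
    return dot / (norm_a * norm_b)

def top_k(query_vec, doc_vecs, k=2):
    """Return the ids of the k documents whose vectors are most similar to the query."""
    ranked = sorted(doc_vecs, key=lambda doc_id: cosine(query_vec, doc_vecs[doc_id]), reverse=True)
    return ranked[:k]

# Toy vectors standing in for embeddings (hypothetical values)
docs = {"a": [1.0, 0.0, 1.0], "b": [0.0, 1.0, 0.0], "c": [1.0, 1.0, 1.0]}
print(top_k([1.0, 0.0, 1.0], docs))  # ['a', 'c']
```

Because each document is embedded once and compared with a cheap vector operation, this approach scales to large collections, which is part of why a small model that runs on-device is attractive for it.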

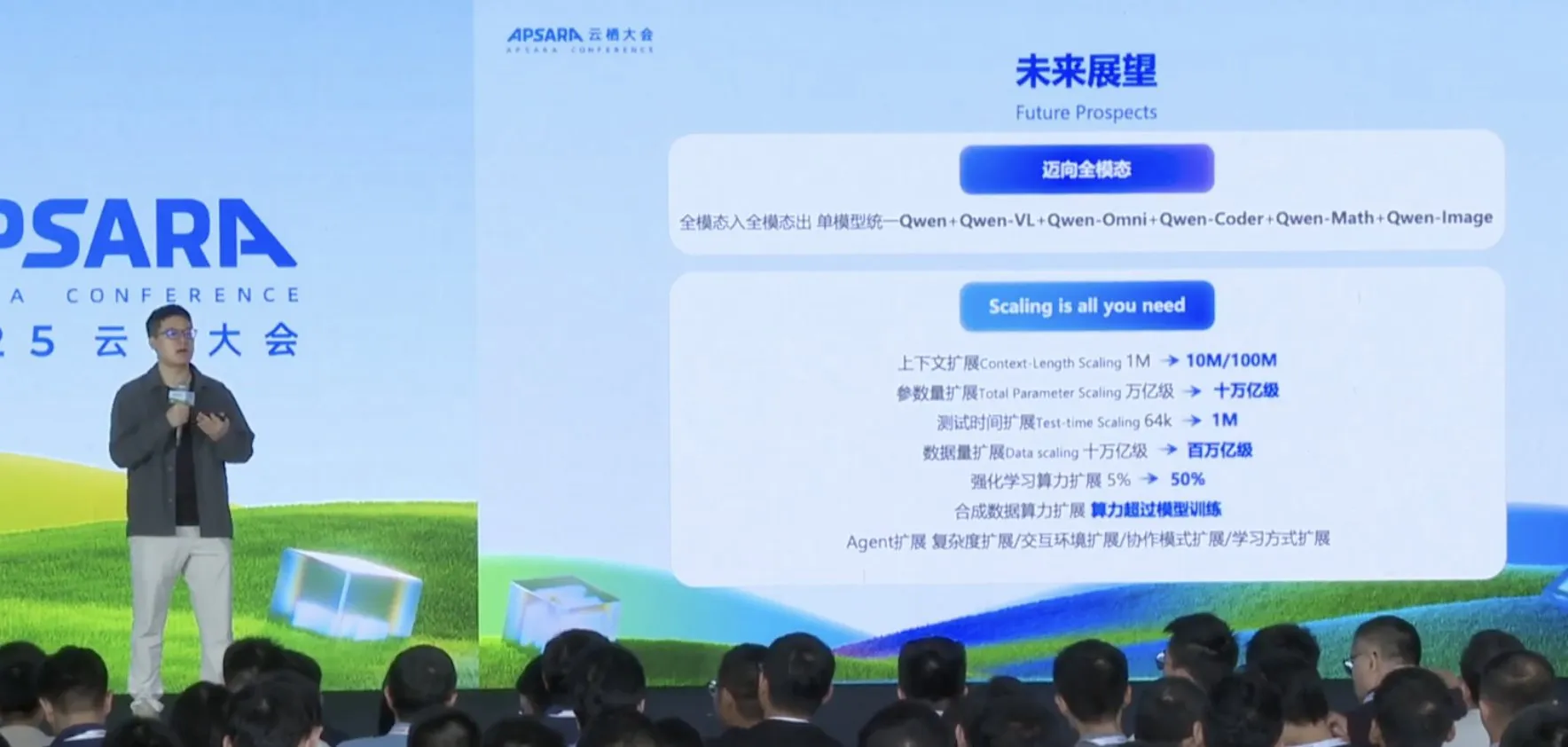

Alibaba Tongyi Qianwen Unveils Multimodal and Large-Scale Expansion Roadmap : Alibaba Tongyi Qianwen has unveiled an ambitious roadmap that bets heavily on unified multimodal models and extreme scaling. Goals include extending context length from 1M to 100M tokens, scaling models to the trillion or even ten-trillion parameter level, expanding test-time compute to 1M, and growing training data to 100 trillion tokens. It also aims to push synthetic data generation to effectively unlimited scale and to expand agent capabilities, embodying a ‘scale is everything’ philosophy. (Source: menhguin, karminski3)

AI-Assisted Healthcare Enters Clinical Application Phase : AI applications in healthcare are transitioning from cutting-edge experimental products to routine tools. For example, JD Health has launched ‘AI Hospital 1.0’ and upgraded its medical large model to ‘Jingyi Qianxun 2.0,’ delivering an AI-driven closed loop of examination, diagnosis, and prescription that covers triage, consultation, testing, medication purchase, and health management. AI-powered stethoscopes can now assist in diagnosing heart disease, and AI image reading has made breakthroughs in areas such as lung nodules and cerebral hemorrhage, with diagnostic accuracy above 96%. AI is now fully entering clinical use, improving the efficiency and precision of healthcare services. (Source: 36氪, 36氪, 量子位, Ronald_vanLoon, Reddit r/ArtificialInteligence)

Meta AI App Launches AI-Generated Short Videos ‘Vibes’ : The Meta AI app has introduced ‘Vibes,’ a feed of AI-generated short videos. The move reflects Meta’s deepening commitment to AI content creation and aims to give users a new, AI-driven short-video experience. (Source: dejavucoder, _tim_brooks, EigenGender)

Breakthrough Achieved in AI-Generated Genomes : Arc Institute has announced three new discoveries, including the world’s first functional AI-generated genome. The breakthrough leverages Evo 2, a biological ML model that Arc released in collaboration with NVIDIA. The announcements also cover a way for scientists to design and write large-scale changes to the human genome, such as correcting the DNA repeats that cause genetic diseases. This is expected to accelerate gene therapy and biomaterials research. (Source: dwarkesh_sp, riemannzeta, zachtratar, kevinweil, Reddit r/artificial)

Apple Introduces SimpleFold, a Lightweight AI for Protein Folding Prediction : Apple researchers have developed SimpleFold, a protein folding prediction model based on flow matching. It drops the computationally expensive, domain-specific components used by earlier folding models and relies only on generic Transformer blocks to transform random noise directly into a predicted protein structure. SimpleFold-3B performs strongly on standard benchmarks, reaching about 95% of the performance of leading models while being more efficient to deploy and run. This could lower the computational barrier to protein structure prediction and accelerate drug discovery. (Source: Reddit r/ArtificialInteligence, HuggingFace Daily Papers)
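Flow matching turns noise into data by learning a velocity field and integrating it over time. In the common straight-path formulation, a training point is x_t = (1 − t)·x₀ + t·x₁ (noise x₀, data x₁), the model learns to predict the velocity x₁ − x₀ from (x_t, t), and sampling Euler-integrates that velocity from t = 0 to t = 1. A minimal sketch of those mechanics, assuming this straight-path variant; the trained Transformer is replaced by a hypothetical `oracle_velocity` that already knows a single target, so nothing here is learned and none of it is SimpleFold’s actual code:

```python
import random

def interpolate(x0, x1, t):
    """Point at time t on the straight path from noise x0 (t=0) to data x1 (t=1)."""
    return [(1 - t) * a + t * b for a, b in zip(x0, x1)]

def velocity_target(x0, x1):
    """Training target: the velocity dx_t/dt = x1 - x0 along that path.
    A real model is trained to predict this from (x_t, t) alone."""
    return [b - a for a, b in zip(x0, x1)]

def oracle_velocity(x_t, t, x1):
    """Hypothetical stand-in for the trained network: the exact velocity
    that carries x_t to one known x1 by t=1."""
    return [(b - a) / (1 - t) for a, b in zip(x_t, x1)]

def sample(x0, x1, steps=50):
    """Euler-integrate dx/dt = v(x, t) from t=0 to t=1."""
    x = list(x0)
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt  # t stays below 1, so the oracle never divides by zero
        v = oracle_velocity(x, t, x1)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

random.seed(0)
target = [1.5, -0.2, 3.0]  # hypothetical "structure" coordinates
noise = [random.gauss(0.0, 1.0) for _ in target]

# On the straight path, the oracle velocity equals the training target.
x_mid = interpolate(noise, target, 0.25)
print(oracle_velocity(x_mid, 0.25, target), velocity_target(noise, target))

result = sample(noise, target)
print(result)  # approximately equal to target
```

In a real model the network only sees (x_t, t) plus conditioning such as the protein sequence, so it must learn an average velocity over many possible targets; the oracle shortcut is used here only to keep the sketch self-contained.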

Deep Integration of Industrial AI and Physical AI : Alibaba and NVIDIA have partnered to integrate NVIDIA’s complete Physical AI software stack into the Alibaba Cloud platform. Physical AI aims to move artificial intelligence off the screen and into the physical world, incorporating physical laws so that AI-generated content is more consistent with real-world logic. Its core technologies include world models, physics simulation engines, and embodied intelligence controllers, with the goal of giving AI a complete understanding of 3D space, real-time physical computation, and the ability to take concrete actions. The collaboration is expected to broaden AI applications in robotics, logistics, automotive, manufacturing, and other industries, turning AI from an information-processing tool into an intelligent system that can understand and manipulate the physical world. (Source: 36氪)

Hunyuan3D-Omni, an AI-Generated 3D Asset Framework, Released : Hunyuan3D-Omni, built on Hunyuan3D 2.1, is a unified framework for controllable 3D asset generation. Beyond image and text conditions, it accepts point clouds, voxels, bounding boxes, and skeletal poses as conditioning signals, enabling precise control over geometry, topology, and pose. The model unifies all signals in a single cross-modal architecture and is trained with a progressive, difficulty-aware sampling strategy that improves generation accuracy and robustness. (Source: HuggingFace Daily Papers)

Tencent Announces Hunyuan Image 3.0, Claimed to be the Strongest Open-Source Text-to-Image Model : Tencent has teased that Hunyuan Image 3.0 will be released on September 28, claiming it will be the world’s most powerful open-source text-to-image model. The announcement has drawn wide attention and anticipation from the community, especially around its potential use in tools such as ComfyUI. (Source: ostrisai, Reddit r/LocalLLaMA)

Llama.cpp Adds Qwen3 Reranker Support : Llama.cpp has merged support for the Qwen3 reranker. The reranking model (a cross-encoder) outputs a relevance score for each query-document pair, which can significantly improve retrieval quality in pipelines such as RAG. Users need to use new GGUF files to get correct results. (Source: Reddit r/LocalLLaMA)
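A reranker sits after a fast first-stage retriever: it scores each (query, document) pair together and reorders the candidates by that score. A minimal sketch of the reordering step, with a toy word-overlap function standing in for the cross-encoder (it is not the Qwen3 reranker and does not call llama.cpp; `rerank`, `toy_score`, and the example texts are hypothetical):

```python
from typing import Callable, List, Tuple

def rerank(query: str, candidates: List[str],
           score: Callable[[str, str], float], top_n: int = 3) -> List[Tuple[str, float]]:
    """Score every (query, candidate) pair and keep the top_n highest-scoring candidates."""
    scored = [(doc, score(query, doc)) for doc in candidates]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]

def toy_score(query: str, doc: str) -> float:
    """Hypothetical stand-in for a cross-encoder: fraction of query words found in doc."""
    q_words = set(query.lower().split())
    d_words = set(doc.lower().split())
    return len(q_words & d_words) / len(q_words) if q_words else 0.0

# Candidates as a first-stage retriever might return them (made-up examples)
candidates = [
    "gemini robotics 1.5 released",
    "new gguf files for the qwen3 reranker",
    "qwen3 reranker merged",
]
for doc, s in rerank("qwen3 reranker gguf", candidates, toy_score, top_n=2):
    print(f"{s:.2f}  {doc}")
```

Swapping `toy_score` for a real model call is the only change this sketch would need. Because a cross-encoder reads the query and each document together in one pass, it is usually applied to a short candidate list rather than the whole corpus, which is why it is paired with a cheaper first-stage retriever.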