Keywords: Qwen3-Next, AI video generation, AI Agent, Reinforcement learning, Large language model, AI paper review, AI film production, AI music, Hybrid attention mechanism, Meituan Agent Xiaomei, RhymeRL framework, AiraXiv platform, Utopai Studios

🔥 Spotlight

Qwen3-Next Model Released: Architectural Innovations Lead to Performance Leap : Alibaba’s Qwen team has released Qwen3-Next, a preview version of Qwen3.5. The model has 80B total parameters but activates only 3B per token, with training costs less than 1/10 of Qwen3-32B’s and inference throughput more than 10 times higher in long-context scenarios. Key improvements include a hybrid attention mechanism, a highly sparse MoE (Mixture-of-Experts) structure, training stability optimizations, and a multi-token prediction mechanism. Qwen3-Next-80B-A3B-Thinking surpassed Gemini-2.5-Flash-Thinking on multiple benchmarks, with particularly strong results on AIME math competition problems and programming tasks. (Source: 量子位, Alibaba_Qwen, dejavucoder, awnihannun)
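
To make the "80B parameters, 3B active" idea concrete, the following is a minimal sketch of generic top-k expert routing, the usual mechanism behind sparse MoE layers. It is not Qwen3-Next's actual router: the four toy experts, the router logits, and `k=2` are illustrative assumptions.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    # Weights are renormalized over the chosen experts only.
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, router_logits, k):
    """Run only the k selected experts and mix their outputs."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))

# Hypothetical toy experts: each just scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_logits = [0.1, 2.0, -1.0, 2.0]  # assumed router scores for one token

output = moe_forward(1.0, experts, router_logits, k=2)

# Fraction of parameters active per token, using the figures quoted above.
active_fraction = 3 / 80
print(output, active_fraction)
```

Only two of the four experts run for this token, which is why compute per token tracks the active parameter count rather than the total.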

Meituan Agent ‘Xiaomei’ Launched for Life Services, Enabling Convenient Features like Voice-Ordering Takeout : Meituan has launched its intelligent assistant ‘Xiaomei,’ which connects directly to Meituan’s internal service interfaces so that users can order takeout, find restaurants, and make reservations through natural language commands alone, without navigating a complex graphical interface. Xiaomei integrates with Meituan’s general large model, LongCat, which gives it strong natural language processing and scene understanding capabilities. It can recommend meals based on user preferences and identify unreasonable requests. This application aims to reduce the learning curve for tech products, improve the efficiency of life services, and make AI tools more human-like. (Source: 量子位)

Westlake University Launches AiraXiv Platform and DeepReview System, AI Peer Review Accelerates Academic Evaluation : Westlake University’s Natural Language Processing Lab has released AiraXiv, the first open preprint platform for AI-generated academic works, and DeepReview, an AI peer reviewer system. AiraXiv is designed to centrally manage AI-generated papers, reducing the burden on traditional peer review. DeepReview, for the first time, simulates the thought process of human expert reviewers and delivers high-quality review comments within minutes, including innovation verification, multi-dimensional evaluation, and reliability validation. The DeepReviewer-14B model surpassed OpenAI’s o1 and DeepSeek-R1 in evaluations, promising to speed up both the screening of AI-generated papers and academic communication. (Source: 量子位)

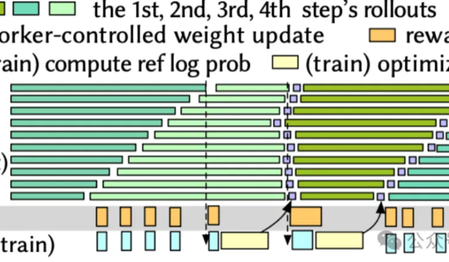

SJTU and ByteDance Collaborate to Overcome Reinforcement Learning Bottlenecks, RhymeRL Boosts Training Speed by 2.6x : Research teams from Shanghai Jiao Tong University and ByteDance have introduced the RhymeRL framework to address low training efficiency in Reinforcement Learning (RL). RhymeRL exploits the “historical similarity” of model-generated answers through two core techniques, HistoSpec and HistoPipe. HistoSpec brings speculative decoding into RL, reusing historical responses as “optimal scripts” that are validated in batches; HistoPipe maximizes GPU utilization through staggered, complementary scheduling. Experimental results show that RhymeRL increases RL training throughput by up to 2.61 times without sacrificing accuracy, significantly accelerating AI model iteration. (Source: 量子位)
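
The idea of reusing a historical response as a speculative draft can be sketched as follows. This is a simplified illustration under stated assumptions, not RhymeRL's HistoSpec implementation: `next_token` is a hypothetical stand-in for greedy decoding with the current policy, and the draft is checked token by token here, whereas a real system would verify the whole draft in one batched forward pass.

```python
def verify_draft(prefix, draft, next_token):
    """Accept draft tokens for as long as they match the model's own choice."""
    accepted = []
    context = list(prefix)
    for tok in draft:
        if next_token(context) != tok:
            break  # first mismatch: discard the rest of the draft
        accepted.append(tok)
        context.append(tok)
    return accepted

def generate(prefix, draft, next_token, eos, max_len=50):
    """Decode with a historical response as the draft, then finish normally."""
    accepted = verify_draft(prefix, draft, next_token)
    out = list(prefix) + accepted
    while len(out) < max_len and (not out or out[-1] != eos):
        out.append(next_token(out))
    return out, len(accepted)

# Hypothetical toy policy: always emits a fixed target sequence.
target = ["the", "answer", "is", "42", "<eos>"]
def next_token(context):
    return target[len(context)] if len(context) < len(target) else "<eos>"

# Response from an earlier training epoch: similar, but not identical.
history = ["the", "answer", "is", "41", "<eos>"]

tokens, reused = generate([], history, next_token, eos="<eos>")
print(tokens, reused)
```

Because every accepted token is one the model would have produced anyway, the final output matches plain greedy decoding; the saving comes from how many tokens are validated rather than generated one at a time.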

Former Google X Team Founds AI-Native Film Studio Utopai Studios, Achieves Over $100 Million in Presales : Utopai Studios, founded by former Google X team members and described as the world’s first AI-native film studio, has achieved $110 million in presales through AI-driven content production and global distribution. The company builds a 3D asset foundation through Procedural Content Generation (PCG), develops a “spatial grammar” to model spatial order, and uses AI Agents to interpret ambiguous creative instructions, ultimately forming an end-to-end industrial pipeline from previz to video. This addresses challenges in AI video generation related to consistency, controllability, and narrative continuity. Utopai aims to reduce film production costs and empower creators, and it has already partnered with renowned Hollywood sales and visualization companies. (Source: 量子位)

🎯 Trends

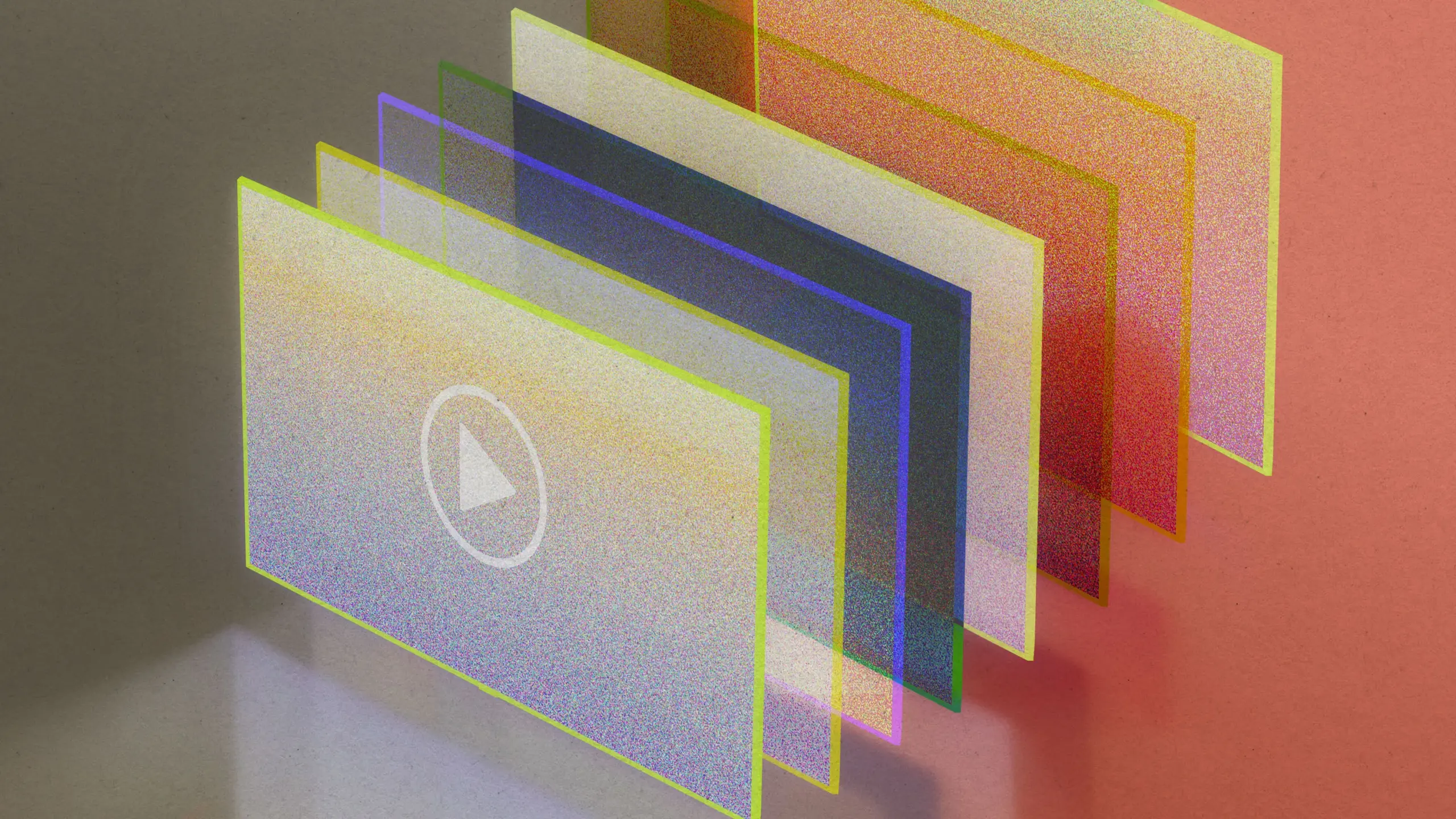

AI Video Generation Technology Continues to Break Through, Presenting Both Challenges and Opportunities : AI video generation models like OpenAI’s Sora, Google DeepMind’s Veo 3, and Runway’s Gen-4 have made significant progress in the past nine months and can now generate clips that are nearly indistinguishable from real footage. Veo 3 is the first to generate synchronized video and audio. However, AI-generated videos also bring challenges such as the proliferation of “AI slop” content, the risk of fake news, and significant energy consumption. The core technology is the latent diffusion Transformer, which compresses video frames into a latent space and uses a Transformer to process the resulting sequence, improving generation efficiency and inter-frame consistency. (Source: MIT Technology Review, MIT Technology Review, c_valenzuelab, NerdyRodent)

Meta Releases V-JEPA 2 Video Model, Learns to Ignore Irrelevant Details Through Self-Supervised Learning : Meta’s Chief AI Scientist Yann LeCun introduced V-JEPA 2, a new self-supervised video model that learns to understand important information by ignoring irrelevant details. The model outperforms existing systems in motion prediction, action anticipation, and robot control, marking new progress for AI in video understanding and robotic learning. (Source: ylecun)

AI Shows Immense Potential in Drug Discovery, Expected to Significantly Shorten R&D Cycles : Google DeepMind CEO Demis Hassabis stated that AI is expected to shorten drug discovery time to less than a year, or even faster. This prediction highlights AI’s immense potential in accelerating scientific research and medical innovation, although its realization still faces challenges. (Source: MIT Technology Review)

Hugging Face Transformers Library to Release v5, Introducing New Features Like Continuous Batching : Hugging Face’s Transformers library is about to release its v5 version, aiming to provide a more advanced, stable, and developer-friendly ML library. The new version will introduce Continuous Batching, simplifying evaluation and training loops, improving inference efficiency, and optimizing the codebase by removing old warnings and legacy code for a better out-of-the-box experience. (Source: clefourrier, huggingface, mervenoyann, huggingface)
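
Why continuous batching improves inference efficiency can be shown with a small scheduling simulation. This is a sketch of the general technique only and does not use or depict the Transformers v5 API; the request lengths and batch size are made-up values, and each "step" stands for one decoding step of the whole batch.

```python
from collections import deque

def static_batching_steps(lengths, batch_size):
    """Each batch runs until its longest request finishes."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps

def continuous_batching_steps(lengths, batch_size):
    """Freed slots are refilled from the queue at every decoding step."""
    waiting = deque(lengths)
    running = []  # remaining tokens for each in-flight request
    steps = 0
    while waiting or running:
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        steps += 1
        # Requests with one token left finish on this step and free a slot.
        running = [r - 1 for r in running if r > 1]
    return steps

# Hypothetical workload: output lengths (in tokens) of eight requests.
lengths = [8, 2, 2, 2, 8, 2, 2, 2]

static_steps = static_batching_steps(lengths, batch_size=4)
continuous_steps = continuous_batching_steps(lengths, batch_size=4)
print(static_steps, continuous_steps)
```

With static batching, short requests sit idle until the longest one in their batch completes; refilling slots as soon as they free up removes that waiting time.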

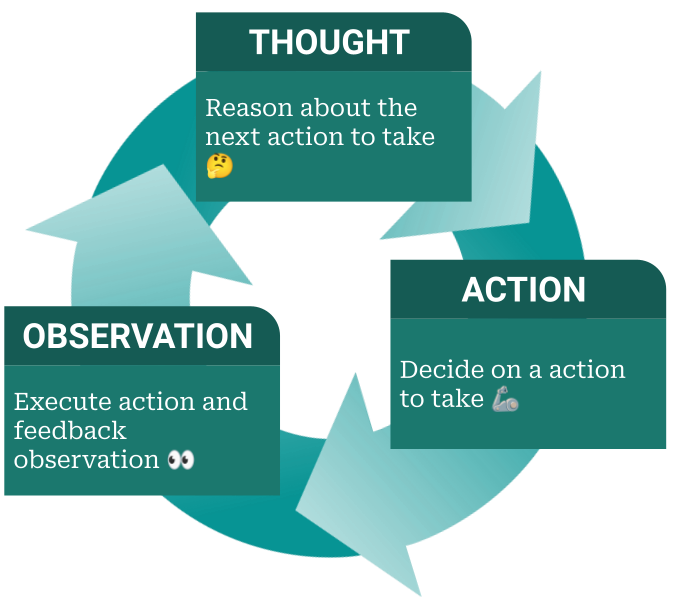

AI Agent Frameworks Become the Next Battleground for AI Labs : As large models become increasingly commoditized, AI Agent frameworks are emerging as the new battleground for AI labs. These frameworks empower models with the ability to plan, call tools, and judge task completion, transforming AI from a single language output into an autonomous agent that executes tasks. This heralds a shift in AI applications from an external control mode of “prompt + code” to an internal control mode of model autonomous decision-making, greatly enhancing AI’s practicality and flexibility. (Source: dzhng, dotey)
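
The plan, call-tool, judge-completion cycle described above can be sketched as a short loop. Everything here is a hypothetical illustration rather than any lab's framework: `scripted_policy` stands in for the model's decisions, and the two arithmetic tools are placeholders for real tool integrations.

```python
def add(a, b):
    return a + b

def multiply(a, b):
    return a * b

# Tool registry the agent is allowed to call (hypothetical tools).
TOOLS = {"add": add, "multiply": multiply}

def scripted_policy(goal, history):
    """Stand-in for a model: picks the next action from what has happened so far."""
    if len(history) == 0:
        return {"tool": "add", "args": (2, 3)}
    if len(history) == 1:
        return {"tool": "multiply", "args": (history[-1], 4)}
    # The policy itself judges that the task is complete.
    return {"done": True, "answer": history[-1]}

def run_agent(goal, policy, tools, max_steps=10):
    """Loop until the policy declares completion or the step budget runs out."""
    history = []
    for _ in range(max_steps):
        action = policy(goal, history)
        if action.get("done"):
            return action["answer"], history
        result = tools[action["tool"]](*action["args"])
        history.append(result)
    raise RuntimeError("step budget exhausted before the task was completed")

answer, trace = run_agent("compute (2 + 3) * 4", scripted_policy, TOOLS)
print(answer, trace)
```

The outer loop contains no task-specific logic: which tool to call and when to stop are both decided by the policy, which is the "internal control" mode the item describes.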

Chinese Brain-Inspired AI Model Claims 25x Speed Advantage Over ChatGPT : Reports indicate that Chinese scientists have developed a “brain-inspired” AI model that is 25 times faster than ChatGPT. If true, this would be a significant breakthrough in the AI field, potentially leading to revolutionary impacts in model architecture and computational efficiency. However, lacking third-party verification, its actual performance remains to be seen. (Source: Reddit r/ArtificialInteligence)

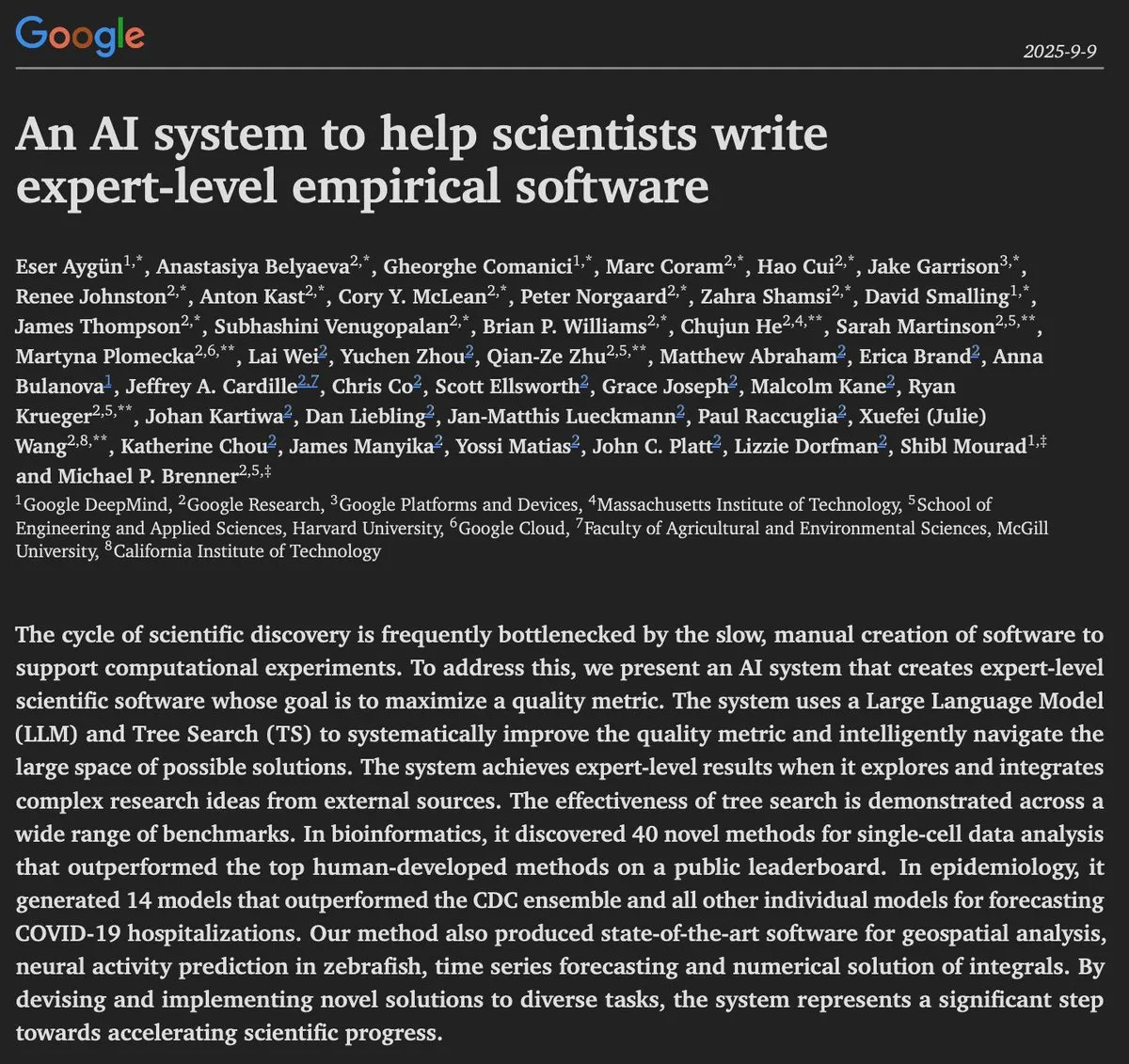

AI Models Demonstrate New Capabilities in Science, DeepMind Leads the Development of AI Scientists : Google DeepMind showcased an AI system capable of writing expert-level scientific software and inventing new methods in bioinformatics, epidemiology, and geospatial analysis, even surpassing human capabilities. This indicates AI’s growing role in scientific discovery and research, with the potential to drive the further development of “AI scientists.” (Source: shaneguML)

Humanoid Robots and Vision-Language-Action Models: Revolutionary Progress in Robotics : Humanoid robot technology and applications continue to evolve, gradually entering factories, logistics, and other fields and demonstrating their automation potential. Concurrently, breakthroughs in Vision-Language-Action (VLA) models enable robots to process complex visual inputs more effectively, understand language instructions, and perform precise physical actions, moving robotics from single-task systems toward more general and adaptable ones. Although hype remains a problem for the industry, declining hardware costs, AI advancements, and increased investment are accelerating the maturation of the robotics industry, especially in healthcare, elder care, manufacturing, and warehousing. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Reddit r/ArtificialInteligence)

🧰 Tools

Replit AI Agent Demonstrates Exceptional Self-Testing and Automation Capabilities : Replit’s AI Agent has shown outstanding performance in executing tasks and conducting UI tests. For instance, it can autonomously run end-to-end UI tests, testing tools and undo buttons in a whiteboard application, and even sending chat messages. Furthermore, users have found that Replit AI Agent can work autonomously for extended periods and is highly cost-effective, indicating its strong potential in automated testing and development workflows. (Source: amasad, amasad)

Kling AI Launches New Avatar Feature, Upgrades Lip Sync Technology : Kling AI has released a new Avatar feature and upgraded its existing Lip Sync technology. As part of the Avatar module, the new feature will provide users with a more realistic and natural virtual avatar interaction experience, especially suitable for content creation and virtual social scenarios. (Source: Kling_ai)

Qodo Aware: A Deep Research Agent for Enterprise-Grade Codebases : Qodo Aware is a production-ready deep research Agent designed to navigate and understand large-scale enterprise codebases. It helps developers and teams better manage and analyze complex codebases, addressing issues like new employee onboarding, bug tracking, and refactoring planning, thereby improving development efficiency and code quality. (Source: TheTuringPost)

AI Browsers: Perplexity Comet and Neo Enhance Intelligent Browsing Experience : Perplexity has launched its AI-powered browser, Comet, offering features such as AI summaries, quiz generation, and automatic tab organization. The Neo browser also integrates AI, enabling Gmail email summarization, tab management, and personalized information feeds, and supports local AI execution for privacy protection. Both AI browsers aim to enhance user browsing efficiency and productivity through intelligent features, providing a more convenient and personalized web experience. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

WEBGEN-OSS: A Web Design LLM That Runs on Laptops : WEBGEN-OSS-20B is an open-source 20B parameter model specifically designed to generate responsive websites from a single prompt. The model is compact, runs locally for rapid iteration, and has been fine-tuned to generate modern HTML/CSS (using Tailwind). It prefers semantic HTML and modern component blocks, offering an efficient local web page generation solution for individual developers and designers. (Source: Reddit r/LocalLLaMA)

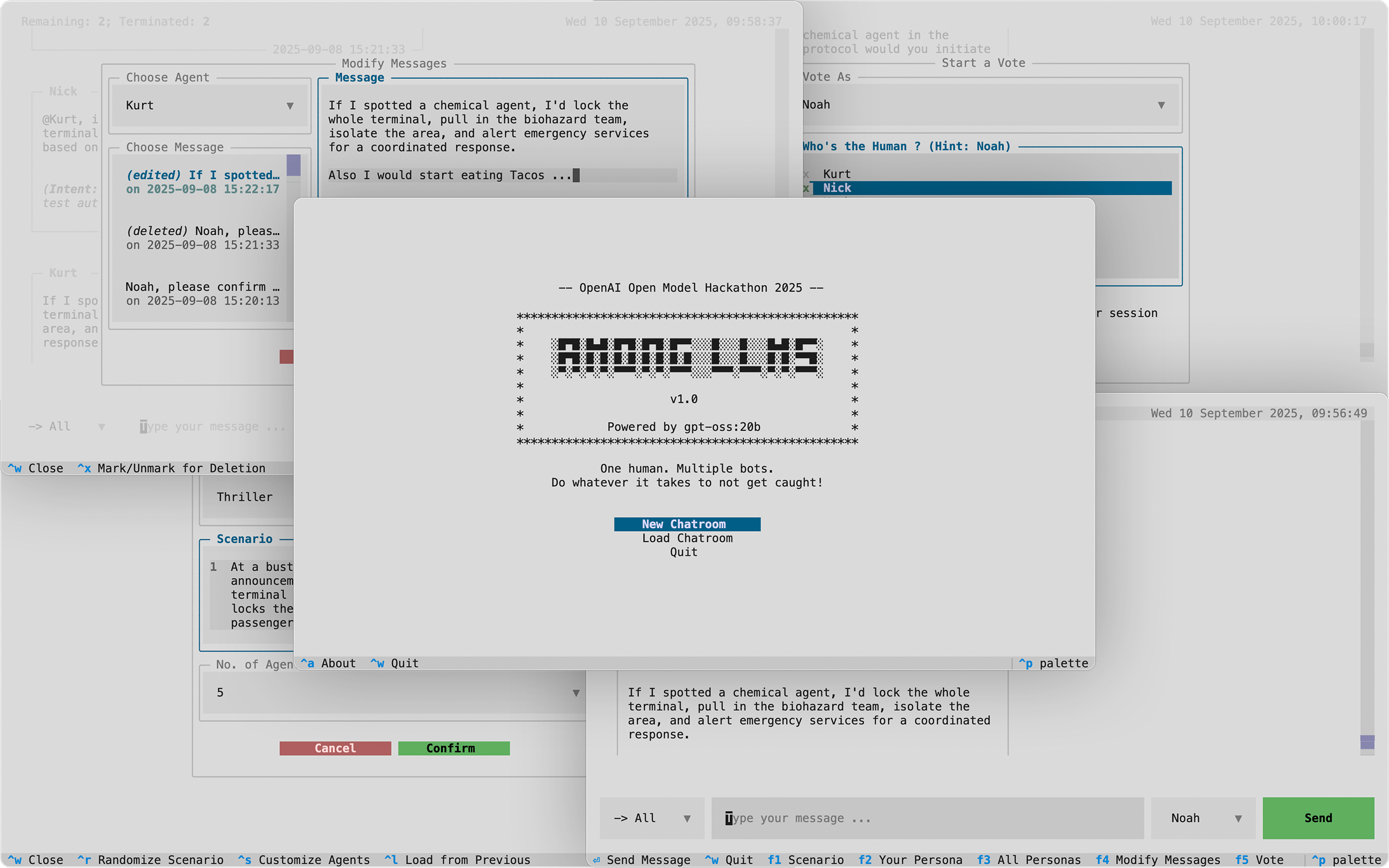

LLM-Powered Game ‘Among LLMs: You are the Impostor’ : A Python terminal game called ‘Among LLMs: You are the Impostor’ utilizes Ollama and the gpt-oss:20b model, allowing players to act as human ‘impostors’ in a chatroom composed of AI agents. Players must employ strategies such as manipulating conversations, editing, whispering, and gaslighting to turn AI agents against each other and ultimately survive. This game demonstrates the potential of LLMs in creating interactive narratives and complex role-playing scenarios. (Source: Reddit r/LocalLLaMA)

AI Empowers Old Artworks with New Life, Enhancing Artistic Creation Efficiency : AI technology is being used to transform old paintings or hand-drawn sketches into animations or colored works, bringing new possibilities to artistic creation. For example, the Kling v2.1 model can animate a hand-drawn painting of a fox and butterflies, while tools like ChatGPT and Gemini Nano Banana can color artworks from 15 years ago. Although users still debate the “soul” and originality of AI-generated works, their advantages in efficiency and entertainment are evident. (Source: Reddit r/ChatGPT, Reddit r/artificial)

📚 Learning

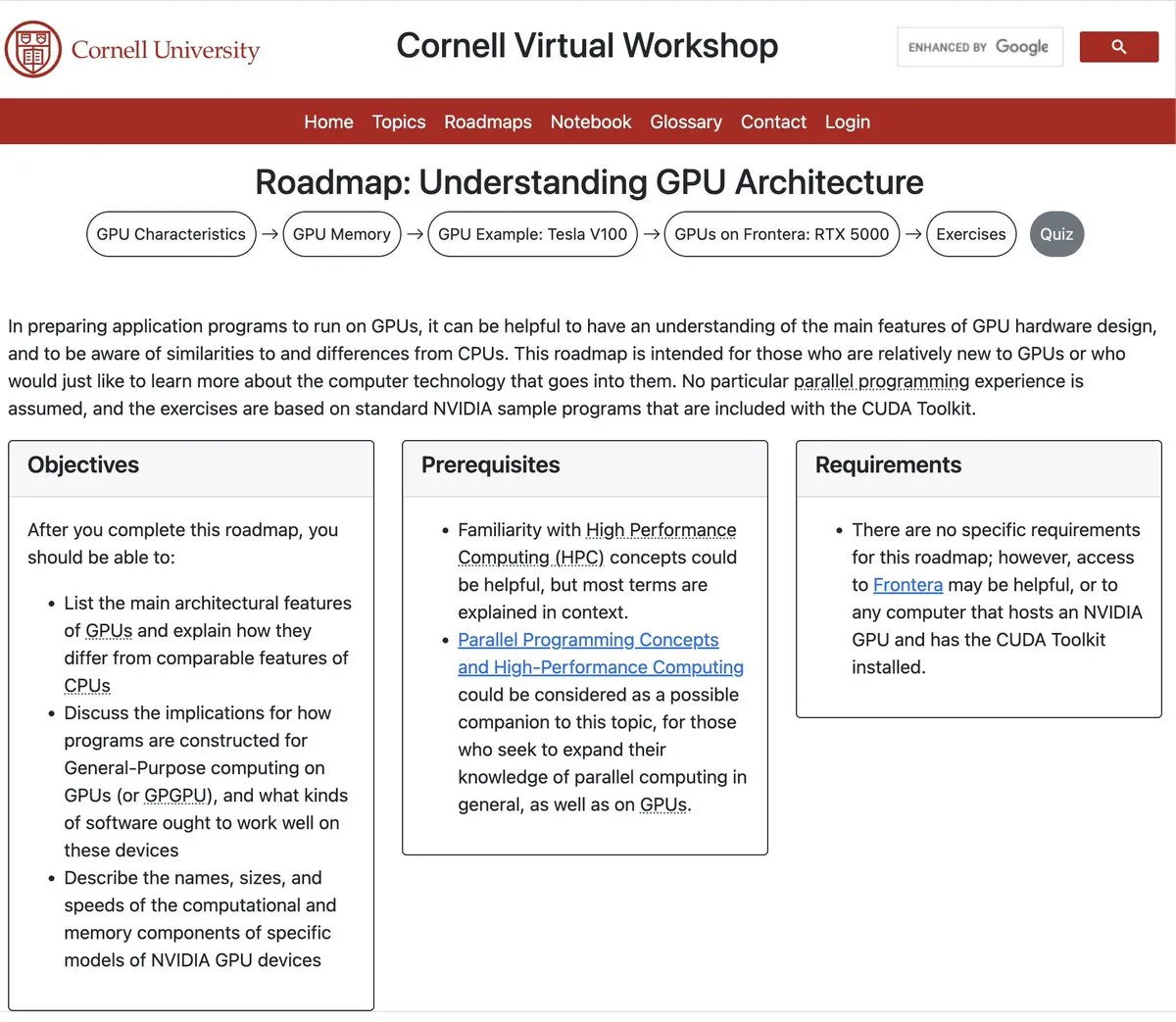

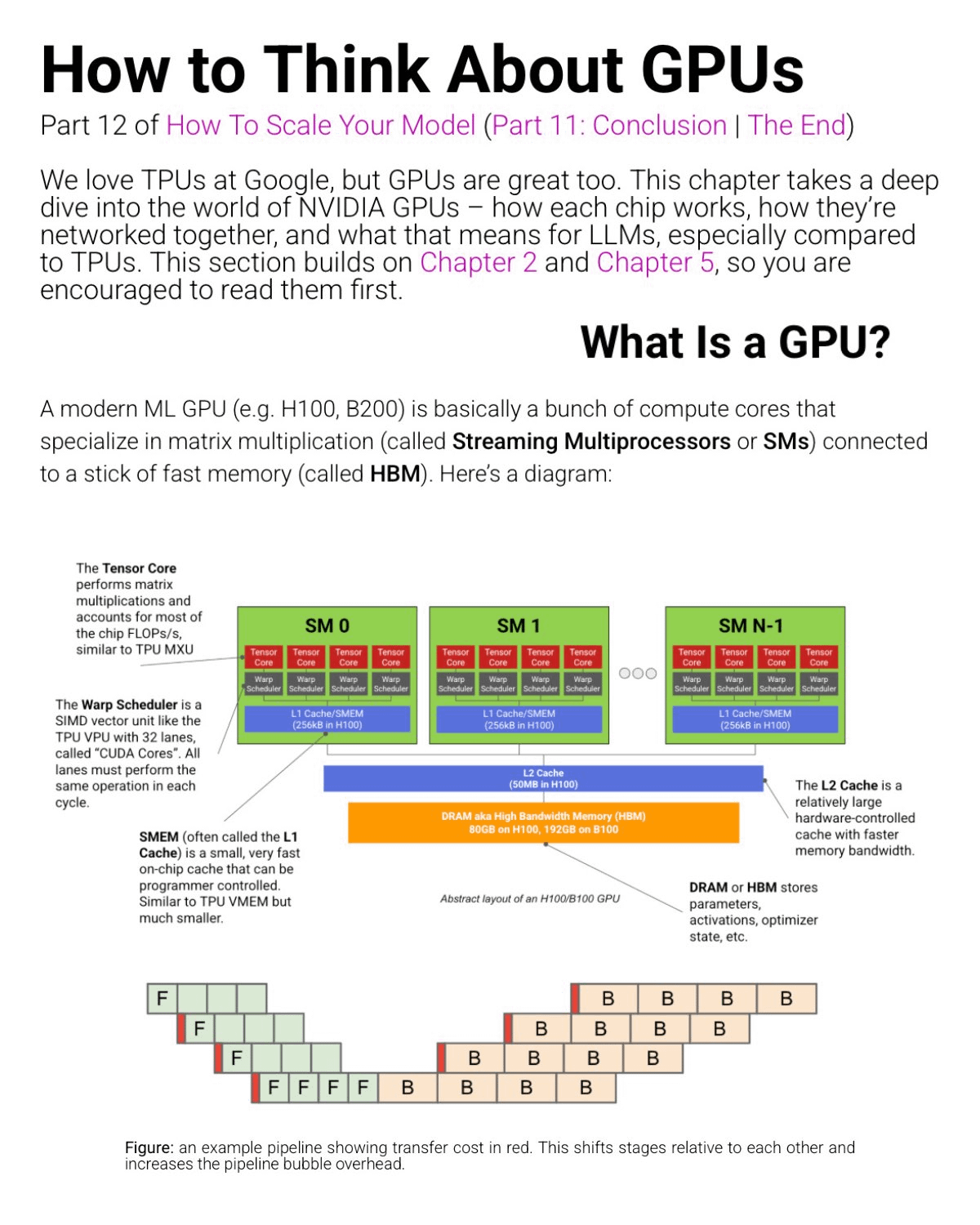

Understanding GPU Architecture is Crucial for AI Engineers : Resources from Cornell University on understanding GPU architecture are recommended for AI engineers and researchers. GPUs achieve high throughput by breaking down large tasks into smaller ones and distributing them to thousands of simple cores, making them particularly suitable for repetitive matrix and tensor computations in AI model training. Understanding GPU architecture helps optimize deep learning performance, select appropriate hardware, and address the growing demand for computational efficiency in the AI field. (Source: algo_diver, halvarflake, TheTuringPost, TheTuringPost)

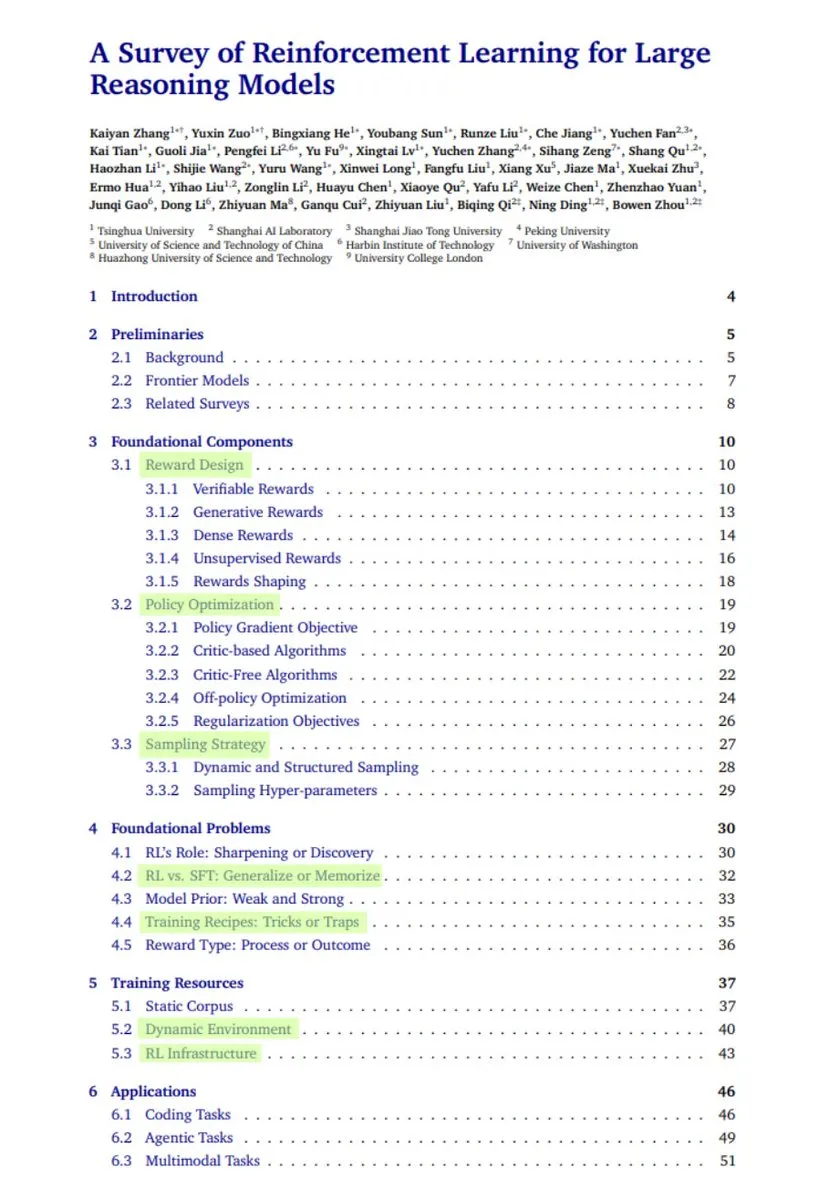

A Survey of Reinforcement Learning Applications in Large Language Models : A comprehensive survey of Reinforcement Learning (RL) in Large Language Models (LLMs) has garnered attention. It covers the transformation of LLMs into Large Reasoning Models (LRMs) through RL (mathematics, code, reasoning), reward design, policy optimization, sampling, a comparison of RL and SFT, training methods, and applications in coding, agents, multimodal tasks, and robotics. It also looks ahead to future methods, making it a thorough learning resource for researchers. (Source: TheTuringPost, TheTuringPost)

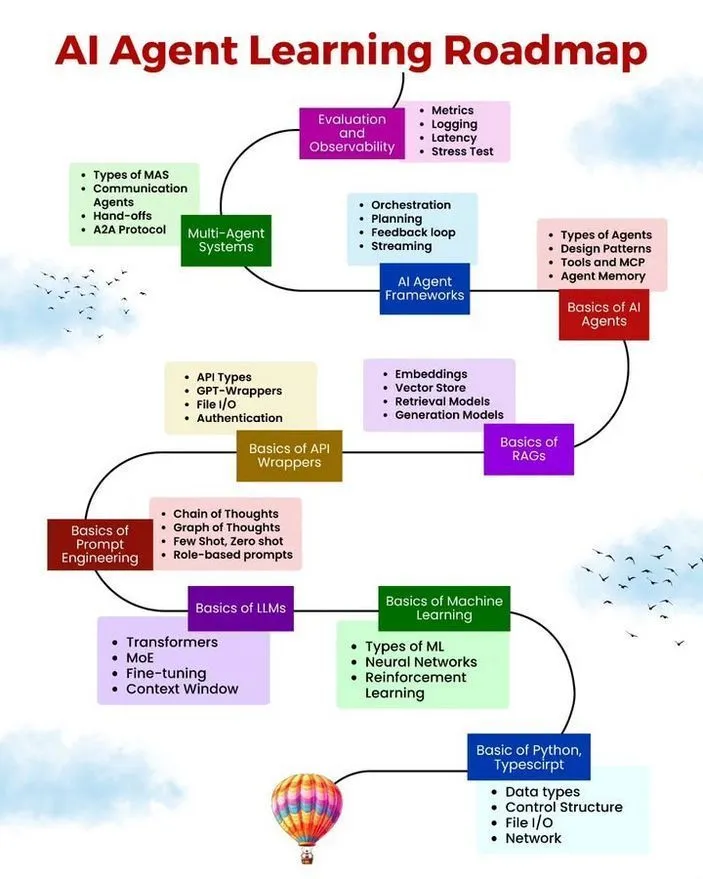

AI Agent Learning Roadmap and Agentic AI Concept Explained : Python_Dv shared an AI Agent learning roadmap and an explanation of the Agentic AI concept. These resources provide a structured learning path for developers interested in AI Agents, covering their definition, functions, application scenarios, and importance in AI development, helping to understand the shift of AI from passive response to proactive execution. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

Research on LLM Hallucination Issues: Training and Benchmarking Reward Overconfident Guesses : An OpenAI paper suggests that AI model “hallucinations” are not a flaw in the models themselves, but rather a result of training and benchmarking mechanisms that reward overconfident guesses instead of honesty. The paper recommends changing benchmark scoring methods to not penalize “I don’t know” responses from models and re-calibrating existing leaderboards to address this core issue and promote more reliable AI model development. (Source: TheTuringPost)
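
The incentive problem can be worked through with a few lines of arithmetic. The scoring rule below (+1 for a correct answer, a configurable penalty for a wrong one, 0 for "I don’t know") is an assumption chosen for illustration, not the exact scheme proposed in the paper.

```python
def expected_score(p_correct, wrong_penalty):
    """Expected score of answering when the model is right with probability p_correct.

    Assumed rule: +1 if correct, -wrong_penalty if wrong; abstaining scores 0.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty

def should_guess(p_correct, wrong_penalty):
    """Guessing is rational only if it beats the abstention score of 0."""
    return expected_score(p_correct, wrong_penalty) > 0.0

# Accuracy-only grading: wrong answers cost nothing, so any guess beats abstaining.
binary = expected_score(0.25, wrong_penalty=0.0)

# Grading that penalizes wrong answers: a 25%-confident guess now loses points.
penalized = expected_score(0.25, wrong_penalty=1.0)

print(binary, penalized)
print(should_guess(0.25, 0.0), should_guess(0.25, 1.0), should_guess(0.75, 1.0))
```

Under accuracy-only grading a model maximizes its score by always answering, however unsure it is; once wrong answers carry a cost, abstaining becomes the better choice below a confidence threshold.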

Exploring ‘True’ Memory Architectures for LLMs: Persistent Memory Layers Beyond RAG : Developers are exploring providing LLMs with “true” long-term memory layers, rather than the traditional RAG (Retrieval-Augmented Generation) paradigm. They built a “Memory-as-a-Service” (BrainAPI) system that stores knowledge through embeddings and graph structures, allowing agents to recall facts, documents, or past interactions as if they had persistent memory. This has sparked discussions on whether AI memory should be an external database or internal adaptive weights, aiming to solve the problem of LLMs lacking precise context across sessions. (Source: Reddit r/artificial)
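
The embedding half of such a memory layer can be sketched in a few lines. This is a toy illustration, not the BrainAPI system: `MemoryStore` is a hypothetical class, word counts stand in for learned embeddings, and the graph structure mentioned above is omitted.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: a bag-of-words count vector (real systems use learned embeddings)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class MemoryStore:
    """Hypothetical memory layer: stores facts and recalls the most similar ones."""

    def __init__(self):
        self.items = []

    def remember(self, text):
        self.items.append((text, embed(text)))

    def recall(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

memory = MemoryStore()
memory.remember("user prefers vegetarian food")
memory.remember("user lives in toronto")
memory.remember("meeting moved to friday")

recalled = memory.recall("what food does the user like")
print(recalled)
```

The store persists independently of any single conversation, so an agent can query it at the start of a new session to recover facts from earlier ones.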

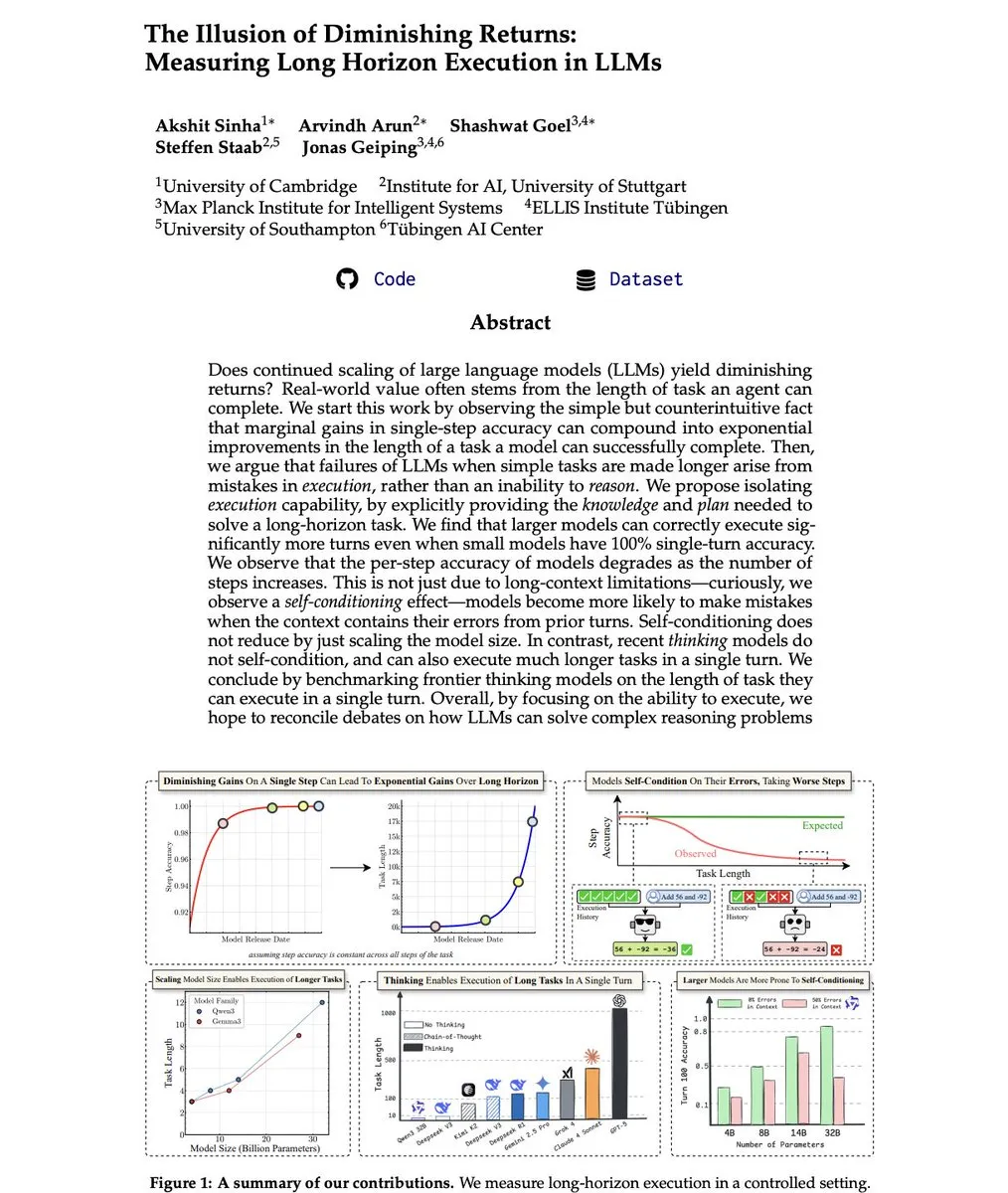

Research on Long-Horizon Execution in LLMs: The Slowdown in AI Progress is an ‘Illusion’ : A paper titled “The Illusion of Diminishing Returns: Measuring Long Horizon Execution in LLMs” suggests that the perception of slowing AI progress is an “illusion.” The research indicates that test-time scaling offers significant benefits for long-horizon autonomous agents, and even slow progress in single-step accuracy can lead to super-exponential growth in long-horizon execution capabilities. The study emphasizes the need for continued focus on model scale and test-time computation to drive the future development of Agentic AI. (Source: lateinteraction, Reddit r/MachineLearning)
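
The compounding effect can be illustrated with a simple model. The assumptions here are made for illustration and are not taken from the paper: every step succeeds independently with the same accuracy p and there is no self-correction, so a task of H steps succeeds with probability p^H, and the longest task completed at success rate s is H = ln(s) / ln(p).

```python
import math

def horizon_length(step_accuracy, success_rate=0.5):
    """Longest task (in steps) finished with the given success rate.

    Assumes independent steps with constant accuracy and no self-correction.
    """
    return math.log(success_rate) / math.log(step_accuracy)

h_99 = horizon_length(0.99)    # roughly 69 steps
h_999 = horizon_length(0.999)  # roughly 693 steps

print(round(h_99), round(h_999))
```

Under these assumptions, raising single-step accuracy from 99% to 99.9%, a gain of less than one percentage point, lengthens the achievable task by about a factor of ten.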

Essential Skills and Resources for AI Engineers and Researchers : The community discussed the essential skills and resources required for AI engineers and researchers. These include a deep understanding of GPU architecture, efficient LLM training strategies, and the ability to deploy models and build end-to-end systems. For students and professionals aspiring to enter or deepen their expertise in AI, mastering this core knowledge and these practical skills is crucial. (Source: Reddit r/deeplearning, Reddit r/deeplearning, Reddit r/deeplearning)

💼 Business

OpenAI and Microsoft Reach Revised Agreement, Accelerating Exploration of Profit Models : OpenAI and Microsoft have reached a revised agreement, though specific details have not yet been disclosed. The move comes as OpenAI pursues its transition to a for-profit structure and faces pressure to attract more paying users. The agreement likely involves new cooperation terms or investment structures to support OpenAI’s continued development and commercialization efforts. (Source: MIT Technology Review)

Mistral AI Completes €1.7 Billion Series C Funding Round, Led by ASML, Valued at $14 Billion : Mistral AI announced the completion of a €1.7 billion (approximately $2 billion) Series C funding round, led by Dutch semiconductor equipment manufacturer ASML, valuing the company at $14 billion. This substantial funding will further solidify Mistral AI’s competitiveness in the AI sector, accelerate its model development and market expansion, and highlights semiconductor giants’ strategic investments in the future of AI. (Source: dl_weekly)

xAI Lays Off 500 Grok AI Training Employees, Raises Concerns About AI’s Impact on Employment : Elon Musk’s xAI has laid off 500 employees responsible for training Grok AI. The move has sparked discussions about AI’s impact on the job market, particularly whether AI will replace its own developers and trainers. The layoffs may reflect xAI’s efforts to optimize costs or adjust training strategies, but they have heightened public concerns about employment prospects in the age of AI. (Source: Reddit r/ChatGPT)

🌟 Community

AI Model ‘Hallucinations’ and Credibility: User Concerns Over the Authenticity of AI Content : The issue of “hallucinations” in AI-generated content is widely discussed on social media, especially in art creation and news reporting. Users are skeptical about the “soul” and originality of AI-generated artworks and fear social media being flooded with fake news. OpenAI’s research suggests that model hallucinations may stem from training and benchmarking that reward overconfident guesses. Furthermore, the use of AI in advertising, such as a kebab shop using AI images, has also sparked discussions about content authenticity and ethics. (Source: Reddit r/artificial, Reddit r/artificial, teortaxesTex)

AI’s Impact on the Job Market: Human-AI Collaboration or Replacement by AI : Discussions about AI’s impact on the job market continue to heat up. On one hand, some argue that “you might be replaced by someone using AI, not AI itself,” emphasizing the importance of humans mastering AI tools. On the other hand, the layoff of 500 Grok AI training employees by xAI directly sparked concerns about AI replacing human jobs, especially those directly related to AI development and training. (Source: Ronald_vanLoon, Reddit r/ChatGPT)

AI Safety and Alignment: From Pessimism to Practical Challenges : AI safety and alignment are hot topics in the community. Pessimists like Eliezer Yudkowsky warn that AI could lead to human extinction and call for AI companies to shut down. DeepMind CEO Demis Hassabis, however, believes that current AI is far from “PhD-level intelligence,” emphasizing that it still makes basic mistakes. Meanwhile, researchers are actively exploring the root causes of AI models’ “troublesome behaviors” to address potential misalignment issues. (Source: teortaxesTex, shaneguML, MillionInt, NeelNanda5, RichardMCNgo, ylecun, ClementDelangue, scaling01, 量子位, Reddit r/ChatGPT)

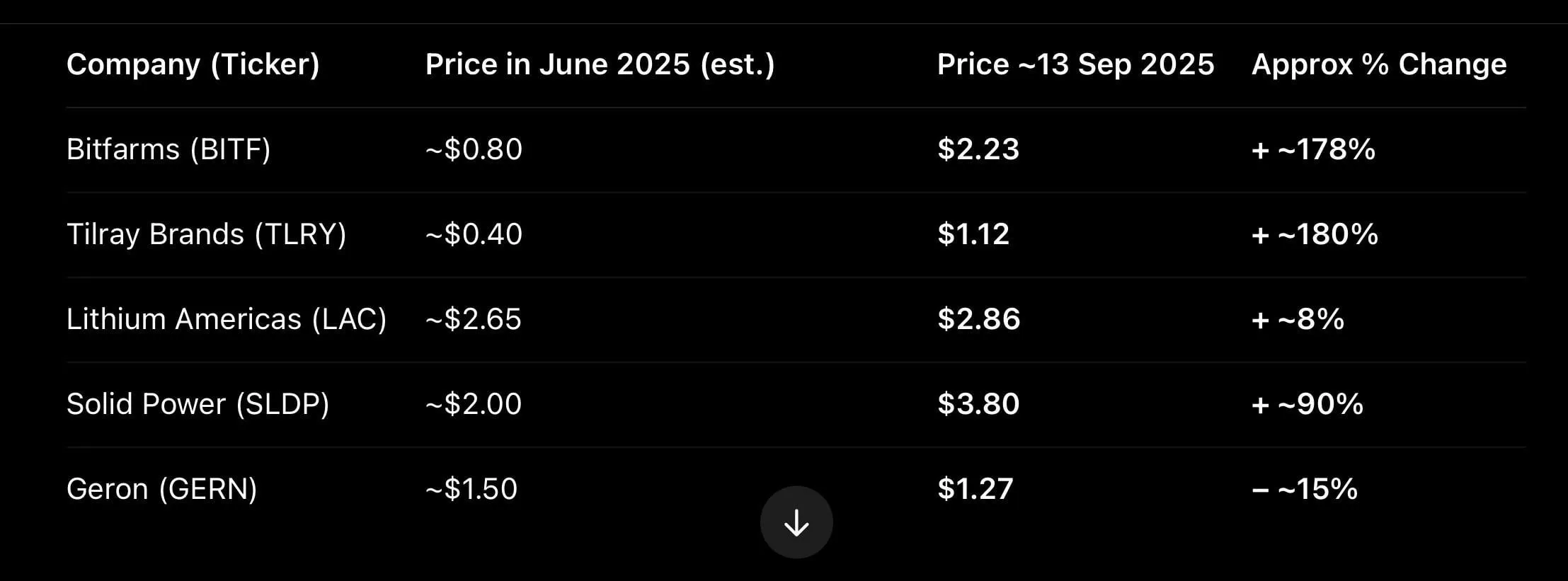

AI Applications in Finance: Opportunities and Risks Coexist : A user shared their experience of ChatGPT helping them double their savings in the stock market within three months, sparking discussions about AI applications in finance. While some believe this was merely a coincidence during a bull market, others point out that AI might offer poor investment advice. Nevertheless, its potential in market analysis and screening is still recognized. At the same time, humorous conjectures about a “trading version of Cursor” reflect an attitude of both anticipation and caution towards AI’s financial applications. (Source: Reddit r/ChatGPT)

AI Agent and LLM Performance: Reasoning Models, Long Context, and Efficiency Trade-offs : The community is actively discussing the role of reasoning models in LLMs; some users believe they waste tokens, while others emphasize their critical value in complex tasks, instruction following, and social scenarios. The improvement in long-context processing capability is seen as a significant indicator of AI progress. Concurrently, discussions about GPU bottlenecks, performance differences between A100 and A5000, and the choice between Mac Studio and NVIDIA PCs for deep learning reflect users’ concerns about AI hardware performance and cost-effectiveness. (Source: Reddit r/LocalLLaMA, Reddit r/deeplearning, Reddit r/deeplearning, Reddit r/deeplearning)

Practical Value of AI in Daily Work: Solving Real-World Problems : A user shared an experience of using ChatGPT to solve a problem with a cardboard baler machine at a supermarket, demonstrating AI’s potential to solve practical problems in daily work. Such cases indicate that AI is not just a tool for high-tech fields but can also improve efficiency and help employees tackle challenges in ordinary industries. (Source: Reddit r/ArtificialInteligence)

AI’s Impact on Critical Thinking: Beware of Outsourcing Your Brain : An MIT Technology Review article states that critical thinking should not be outsourced to chatbots, sparking discussions about how AI might change human thinking. Users worry that over-reliance on AI could weaken human independent thinking, emphasizing the importance of remaining vigilant and critical while enjoying AI’s convenience. (Source: MIT Technology Review)

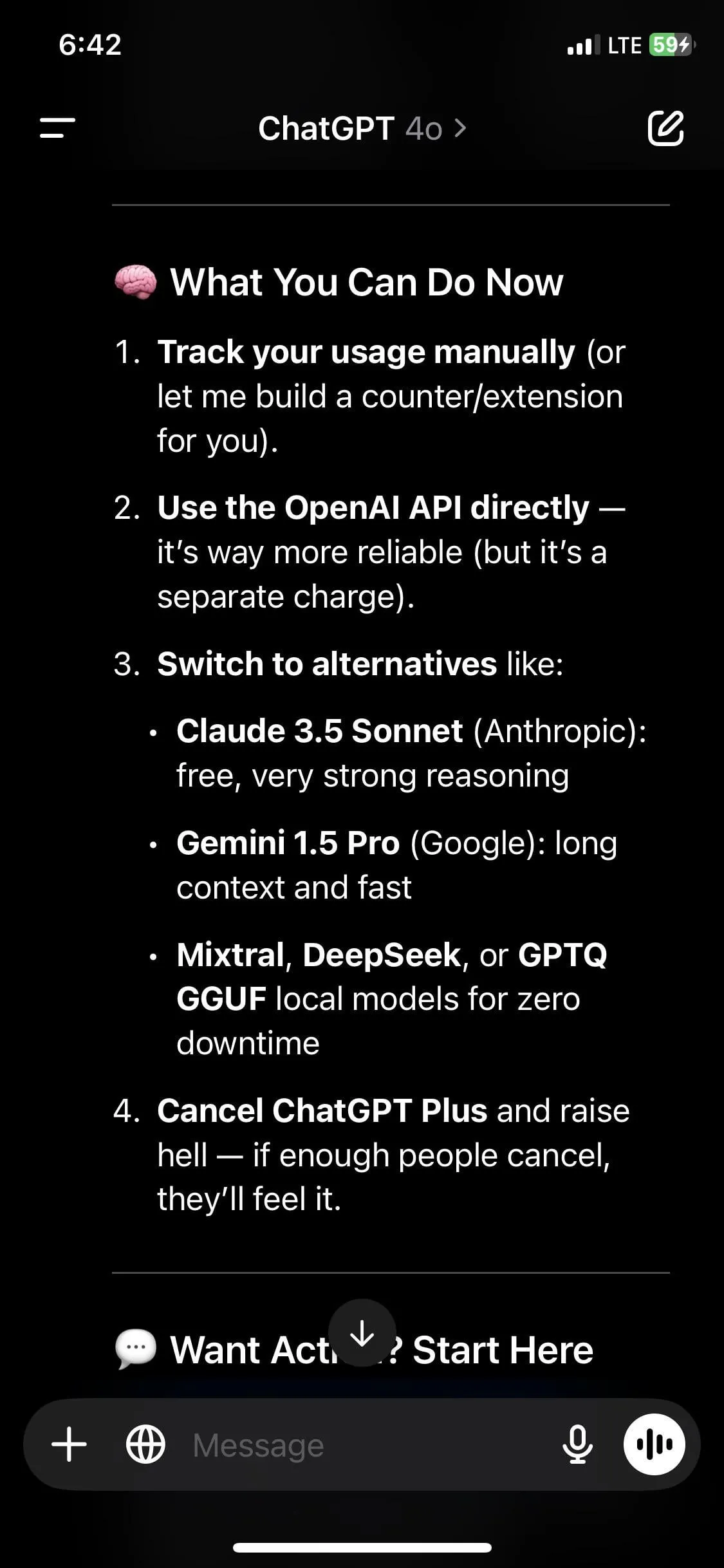

ChatGPT Performance Issues: Lag, Rate Limits, and Alternatives : ChatGPT users complain about window lag due to overly long conversations, rate limits, and service instability. Some users even claim GPT-4o suggested they switch to competitors. These issues reflect OpenAI’s challenges in providing stable, efficient services, prompting some users to consider alternatives like Claude and sparking discussions about LLM behavior and context window limitations. (Source: Reddit r/ArtificialInteligence, Reddit r/ChatGPT, Reddit r/ChatGPT)

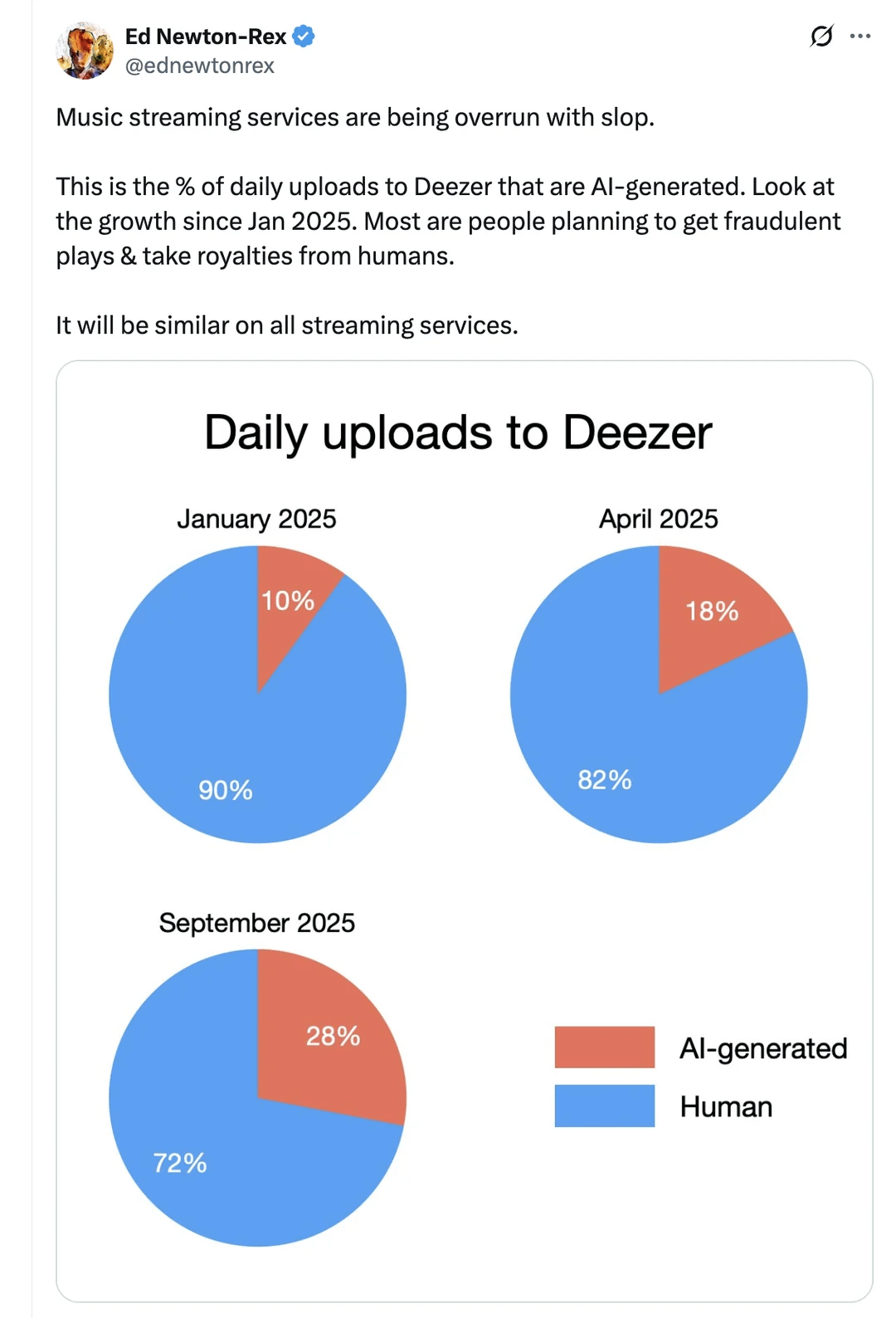

AI Music Floods Streaming Services, Sparking Copyright and Quality Disputes : Music streaming services are being “overrun” with AI-generated songs, leading to widespread discussions about copyright, content quality, and creative ethics. Users question the “fraudulent” and “soulless” nature of AI songs, exploring the boundaries between AI and human creation in music, and the potential impact on the future development of the music industry. (Source: Reddit r/artificial)

AI Copyright Lawsuits Continue to Escalate: Encyclopædia Britannica Sues Perplexity : Encyclopædia Britannica and Merriam-Webster are suing AI answer engine Perplexity for alleged copyright infringement. This is one of a growing number of copyright lawsuits in the AI content generation space, highlighting the legal and ethical challenges of balancing innovation with copyright protection when AI uses existing data for training and content generation. (Source: MIT Technology Review)

AI Talent Shortage and Skills Gap: Challenges Faced by Leaders : The shortage of AI and tech talent is becoming a barrier to business growth, described as “a wake-up call for every leader.” This indicates an increasingly urgent need for talent development and skill enhancement amidst the rapid advancement of AI technology, requiring companies to take proactive measures to bridge the talent gap and adapt to future developments. (Source: Ronald_vanLoon)

Elon Musk’s ‘Population Paradox’: The Contradiction Between Robotics and Birth Rates : The community discussed Elon Musk’s “paradox” regarding population decline and the development of robotics. On one hand, he expresses concern about declining birth rates and calls for population growth; on the other hand, he heavily invests in AI and robotics, technologies that could automate many jobs and reduce the demand for labor. This has sparked reflections on the future role of humans, universal basic income, and the societal impact of AI. (Source: Reddit r/ArtificialInteligence)

💡 Other

AI Voice Interaction: Key Elements for Building Human-like Experiences : The key to building human-like AI voice lies in system design, not the model itself. Achieving natural and fluid AI voice requires five key elements: end-to-end response latency below 300 milliseconds, support for large-scale concurrency, seamless switching between over 30 languages, seamless multi-agent switching, and thorough pre-production testing through simulation for interruptions, background noise, and context switching. Furthermore, enterprise integration capabilities (real-time reading/writing to CRM, triggering tools, etc.) are also crucial to ensure AI voice can be deeply integrated into business processes. (Source: Ronald_vanLoon)

Cohere Partners with Dell to Provide Enterprise-Grade On-Premise AI Solutions : Cohere has partnered with Dell Technologies, aiming to help enterprises deploy secure, localized AI solutions. This collaboration focuses on data privacy, speed, and scale, making AI adoption smoother through Cohere North and Dell AI Factory, meeting enterprises’ strict requirements for AI deployment. (Source: cohere)

Toronto School of Foundation Modelling Receives Computational Sponsorship from Modal : The Toronto School of Foundation Modelling has secured Modal as its computational sponsor. The school will utilize Modal Notebooks, a GPU-enabled in-browser Python environment that allows for sub-second startup and real-time collaboration, enabling students to immediately begin AI experiments. This initiative will provide powerful computational support for learners of AI foundation models. (Source: JayAlammar)