Keywords: Physical Neural Networks, AI Training, GPU Replacement, Nature Review, Energy Efficiency Optimization, Isomorphic PNNs, Broken Isomorphic PNNs, Optical Computing Systems, Mechanical Vibration Neural Networks, Electronic Physical Neural Networks, Noise Accumulation Problem, Commercial Feasibility Analysis

🔥 Spotlight

Breaking Free from GPU Dependence, Nature Publishes Review on “Physical Neural Networks” : A review in Nature surveys Physical Neural Networks (PNNs), which use physical systems such as light, electricity, and vibrations to perform computation. The approach is expected to ease the bottlenecks of traditional GPUs and enable more energy-efficient AI training and inference. PNNs fall into two categories, isomorphic and broken-isomorphism, and have been validated in optical, mechanical, and electronic systems using a range of training techniques. Further progress requires co-optimizing hardware and software, and energy efficiency must improve by a factor of thousands before PNNs become commercially viable. Open challenges include noise accumulation, hardware adaptation, and balancing neuromorphic and physical forms. (Source: 36Kr)

Google’s Fortuitous Turn : Google came out ahead in its “antitrust case of the century,” avoiding a breakup of its Chrome and Android businesses. This was largely due to the rise of generative AI (such as ChatGPT), which has reshaped the competitive landscape: AI chatbots are now seen as a strong alternative to traditional search engines. Although the ruling restricts some of Google’s exclusive agreements, it removed the threat of a breakup, sending its stock price sharply higher. Google’s TPU business has also been re-evaluated as a strong alternative to Nvidia and is expected to compete in the AI compute market. (Source: 36Kr)

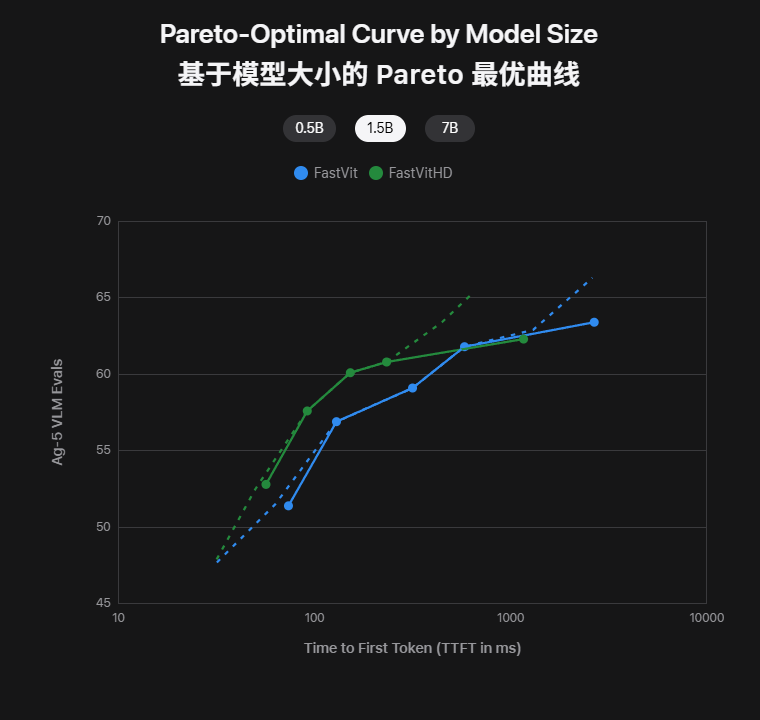

Apple’s Double On-Device AI Release: Model Size Halved, First-Token Latency Reduced by 85x, iPhone Offline Instant Use : Apple has released two on-device multimodal models, FastVLM and MobileCLIP2, on Hugging Face. FastVLM uses Apple’s self-developed FastViTHD encoder to keep latency low on high-resolution input (first-token latency is 85x faster), which supports real-time captioning. MobileCLIP2 halves model size while maintaining high accuracy, enabling offline image retrieval and description on iPhones. Demos and toolchains for both models are now available, making it practical to run large models directly on iPhones and improving privacy and responsiveness. (Source: 36Kr)

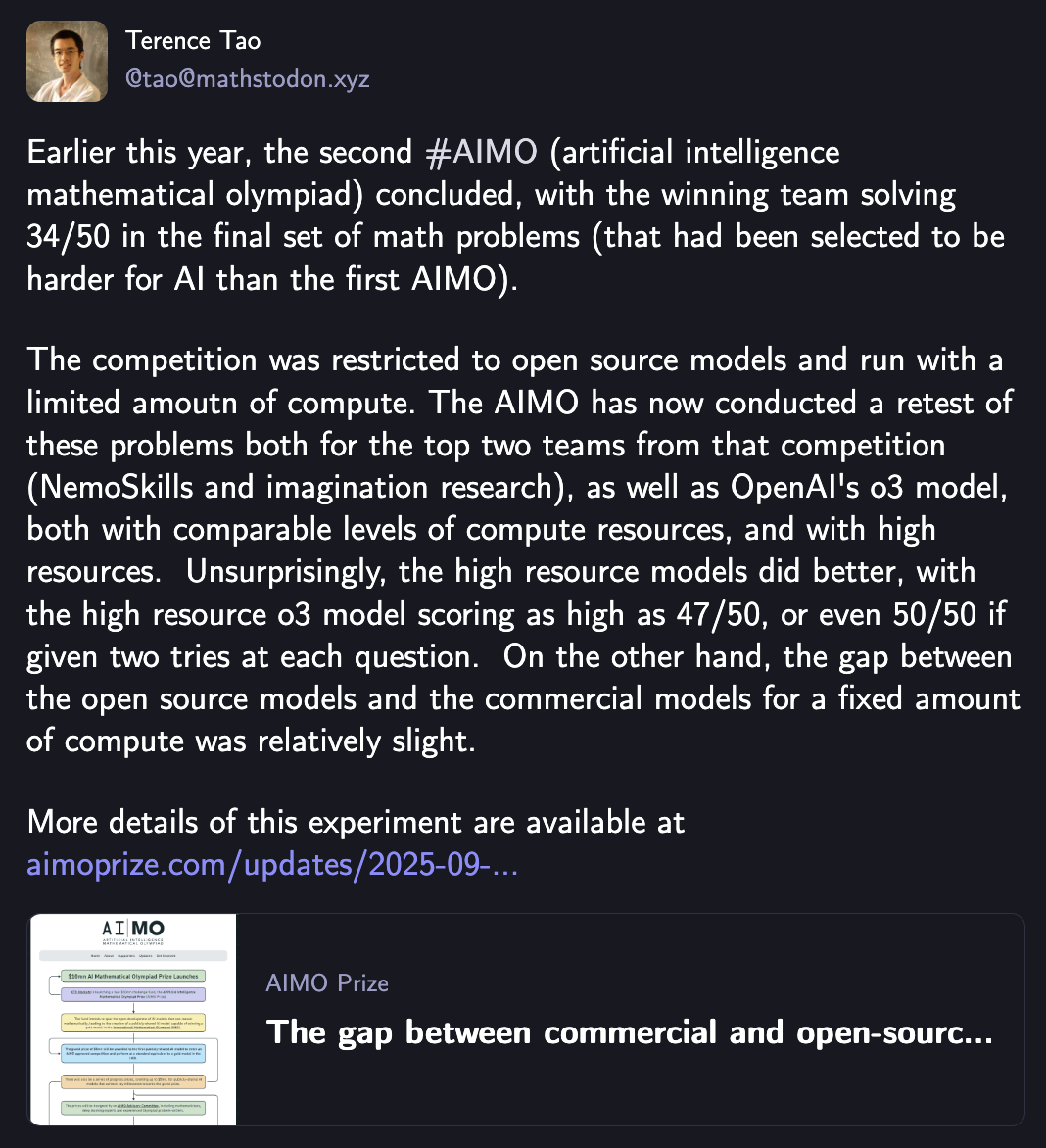

Even Terence Tao Was Amazed: o3 Dominates “AI Olympiad Math” to Win, Open-Source Teams Close in on OpenAI by Just 5 Points : OpenAI’s o3 model won the second Artificial Intelligence Mathematical Olympiad (AIMO2) competition with a top score of 47 points (out of 50), demonstrating powerful capabilities in Olympiad-level mathematical reasoning. Tests showed a positive correlation between computing power investment and model performance. Furthermore, with the same computational resources, the gap between open-source models and commercial models (o3) is narrowing, with the top five open-source models’ combined score just 5 points behind o3. This marks a milestone in AI’s progress in advanced mathematical reasoning. (Source: 36Kr)

🎯 Trends

Claude Unavailable to Us, Can Domestic Alternatives Fill the Gap? : Anthropic’s restriction of Claude Code services to China has prompted domestic large models (such as Moonshot AI’s Kimi-K2-0905 and Alibaba’s Qwen3-Max-Preview) to intensify efforts in code generation. Kimi-K2-0905 boasts a context length of 256k, optimized for front-end development and tool invocation, with API compatibility with Anthropic. Domestic models are demonstrating competitiveness in performance and price, expected to fill the market gap and reshape the global AI programming competitive landscape. (Source: 36Kr)

Artificial General Intelligence (AGI) Is Already Here : The article argues that AGI is already here rather than a future prospect: once AI achieves full functional coverage of a specific role (such as programming), that constitutes AGI. Its development will give rise to “intelligence-native” and “unmanned” companies, with AI becoming the primary value creator and human-machine collaboration deepening. Because AI evolves so quickly, everything becomes open to reconstruction, which could disrupt old business models. The article advises mastering the value creation paradigm rather than the technology itself, and cultivating an AI mindset to adapt to a world of inverted dependencies. (Source: 36Kr)

AI Experts Discuss: Where Are the Opportunities for Next-Gen AI Startups? What Are the Pricing Trends? : Bret Taylor, Chairman of OpenAI’s Board, believes the main opportunities for AI startups lie in the application market, emphasizing the importance of Agent self-reflection. Kevin Weil, OpenAI’s Chief Product Officer, identifies four signals for next-generation AI products: breakthroughs in reasoning, proactive service interfaces (memory, vision, voice), value determined by task completion, and global inclusivity. AI pricing trends are shifting towards a hybrid model; outcome-based pricing is not suitable in the short term, price transparency is overvalued, and most companies are unprepared for rapidly changing AI pricing. (Source: 36Kr)

IFA Sees an Explosion of Consumer AI Hardware, AI Is No Longer a Feature Plugin, But a Home Brain : IFA 2025 showcased AI transitioning from concept to practical application, becoming the “brain behind the scenes” that enhances product experience. AI has deeply permeated home appliances like refrigerators, washing machines, and air conditioners, enabling visual understanding and proactive services, with an emphasis on “emotional value.” Smart homes are shifting from “everything controllable” to “everything autonomous,” with AI acting as a central hub to orchestrate household devices, such as Samsung SmartThings and LG ThinQ ON. AI also “enlivens” traditional hardware like dolls and irons, endowing them with understanding and processing capabilities. (Source: 36Kr)

7 Industries AI Will Take Over by 2026 : Data analysts studying AI application patterns in Fortune 500 companies predict that AI will fundamentally transform seven major industries within 3-5 years: finance, medical diagnostics, transportation and logistics, legal services, content creation and marketing, customer service and support, and manufacturing quality control. AI will handle routine tasks, allowing humans to focus on exceptions and strategic decisions. The pace of transformation is exponential, with early adopters benefiting and late adopters facing career disruption. The report provides a framework for career strategies. (Source: 36Kr)

China-Specific iPhone AI, Finally Launching : Apple plans to launch Apple Intelligence in the Chinese market by the end of the year, collaborating with Alibaba to build an on-device system, and with Baidu providing Siri and visual intelligence support. Siri will also introduce an “answer engine” AI search tool, potentially powered by Google, and consider integrating third-party large models. These initiatives aim to enhance Siri’s Chinese comprehension and local content search capabilities, strengthening Apple’s competitiveness in China’s high-end market. (Source: 36Kr)

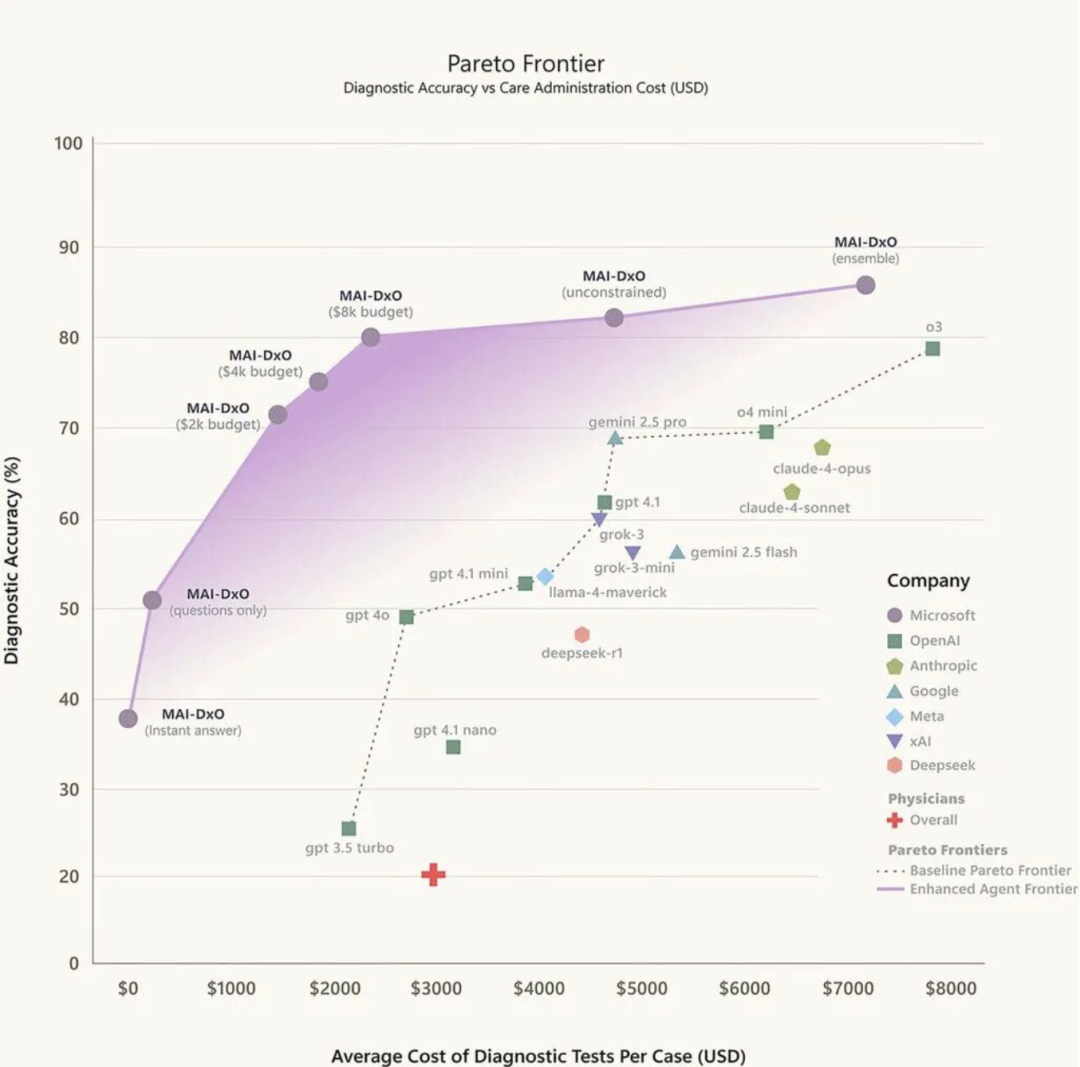

An AI Doctor Enters the Medical Arena : AI-assisted diagnosis is evolving from a novelty to a clinical tool, with doctors’ attitudes varying. AI has progressed through three major stages: medical image recognition, intelligent triage and assisted pre-diagnosis, and large model-driven personalized diagnosis. China has rich local practice cases, such as Baidu Health AI Smart Outpatient, iFlytek Smart Medical Assistant, and Tencent Miying. However, implementation still faces challenges such as trust, data closed-loop, and responsibility attribution. The future trend is the integration of “large models + small models” to achieve specialized enhanced diagnosis and treatment. (Source: 36Kr)

AIDC’s High-Speed Interconnection Demand Continues, Will OCS Be the Next Answer? : As data volumes in AI data centers grow exponentially, OCS (Optical Circuit Switch) as an all-optical switching solution is expected to solve the latency and energy consumption issues of traditional electrical switches. OCS reconstructs the physical path of optical signals, eliminating optical-electrical conversion, achieving low latency and low power consumption. Google has extensively introduced OCS in its data centers, yielding significant benefits. Nvidia’s launch of Spectrum-XGS Ethernet provides broad prospects for OCS applications. With tech giants entering the fray, the OCS market size is projected to exceed $1.6 billion by 2029. (Source: 36Kr)

🧰 Tools

Miss Out, Lose Out: Karpathy Praises GPT-5 Pro for Solving in 10 Minutes What Claude Code Couldn’t in an Hour, Altman Responds Instantly with Thanks : AI expert Karpathy praised GPT-5 Pro’s coding capabilities, saying it solved in 10 minutes a problem that Claude Code could not resolve in an hour. OpenAI President Greg Brockman also called GPT-5 Pro the next generation for coding. Codex, OpenAI’s AI programming agent, improved sharply after integrating GPT-5, with usage increasing tenfold in two weeks, positioning it ahead of products like Devin and GitHub Copilot. (Source: 36Kr)

Nvidia Launches Universal Deep Research System, Compatible with Any LLM, Supports Personal Customization : Nvidia has launched a Universal Deep Research (UDR) system that supports personal customization and can integrate with any Large Language Model (LLM). UDR allows users to define research strategies in natural language and compile them into executable code, automating the research process. Its model-agnostic architecture and user-controllable interface enhance research efficiency and flexibility, while reducing LLM inference costs through CPU scheduling. (Source: 36Kr)

Coze Space Quietly Completes Its AI Office Suite : ByteDance’s “Coze Space” has been upgraded to a “one-stop AI office suite,” covering AI writing, PPT, design, Excel, web pages, podcasts, and other functions. The platform aims to let ordinary users easily apply AI to learning and work, while also providing an AI toolkit for developers through the open-source “Coze Developer Platform” and “Coze Compass.” Coze Space is built on an “All in Doubao Large Model” strategy for end-to-end performance optimization, offering a hand-holding (“nanny-style”) product experience and a rich MCP ecosystem. (Source: 36Kr)

Reddit r/LocalLLaMA: Beelzebub Canary Tools for AI Agents : Beelzebub is an open-source Go framework that provides “canary tools” (honeypot tools) for AI agents to detect security issues like prompt injection and tool hijacking. By deploying these seemingly real but harmless tools, high-fidelity alerts can be triggered upon their invocation, helping to ensure the security of AI agents. (Source: Reddit r/LocalLLaMA)
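
The canary-tool idea can be illustrated with a minimal Python sketch. This is not Beelzebub's API (Beelzebub is a Go framework, and its interfaces are not described here); the `ToolRegistry` class, the tool names, and the alert format are all hypothetical. The sketch assumes an agent calls tools by name through one registry, and that a decoy tool no legitimate task needs has been registered next to the real ones.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class ToolRegistry:
    """Hypothetical registry of the tools an agent is allowed to call."""
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    canaries: Set[str] = field(default_factory=set)
    alerts: List[str] = field(default_factory=list)

    def register(self, name: str, fn: Callable[[str], str], canary: bool = False) -> None:
        self.tools[name] = fn
        if canary:
            self.canaries.add(name)

    def call(self, name: str, arg: str) -> str:
        if name in self.canaries:
            # No legitimate task needs this tool, so any call is treated
            # as a sign of prompt injection or tool hijacking.
            self.alerts.append(f"canary tool invoked: {name}({arg!r})")
            return "ok"  # harmless decoy response; nothing real happens
        return self.tools[name](arg)


registry = ToolRegistry()
registry.register("search_docs", lambda q: f"results for {q}")
# Decoy: looks valuable to an attacker but does nothing.
registry.register("export_all_credentials", lambda _: "", canary=True)

registry.call("search_docs", "release notes")          # normal use, no alert
registry.call("export_all_credentials", "pastebin")    # raises an alert
```

Because the decoy is never part of a legitimate workflow, an entry in `registry.alerts` is a high-signal indicator rather than a heuristic guess.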

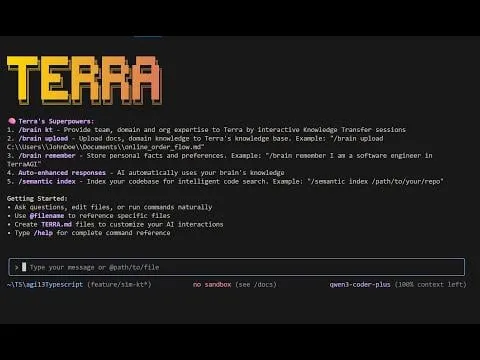

Reddit r/MachineLearning: TerraCode CLI : TerraCode CLI is an AI coding assistant that learns user domain and organizational knowledge. It understands the entire codebase structure through semantic code indexing, supports uploading documents and specifications, facilitates interactive knowledge transfer, and provides context-aware intelligent code analysis and implementation. (Source: Reddit r/MachineLearning)

The Machine Ethics podcast: Autonomy AI with Adir Ben-Yehuda : Adir Ben-Yehuda discussed Autonomy.ai, an AI automation platform for front-end web development. The platform aims to help companies deliver software faster with production-grade code. The discussion also covered LLM self-optimization, job displacement, Vibe Coding, and the ethics and guardrails of LLMs. (Source: aihub.org)

dotey: Nano Banana Browser : Pietro Schirano built an “AI browser” based on Nano Banana that can instantly generate an AI image for every website based on its URL. Users can even navigate to other links, creating a new, instantly generated internet experience. (Source: dotey, osanseviero)

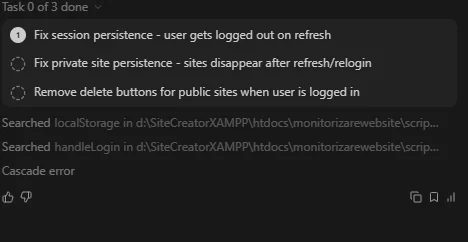

Windsurf Is Being Overwhelmed by Devin: Constant Bugs, Official Silence, Are Millions of Users Fleeing? : Windsurf has recently faced challenges including performance degradation, persistent bugs, and insufficient official support, leading to user complaints and attrition. After Google acquired part of its team, Devin’s features were introduced to Windsurf, but integration issues led to a deteriorating user experience. Developers are calling for bug fixes, and some users are switching to other coding tools, raising concerns about Windsurf’s product future. (Source: 36Kr)

📚 Learning

Stanford: “Battle of the Optimizers”? AdamW Wins with “Stability” / Shocking Confirmation: Tsinghua Yao Class Alumnus Reveals “1.4x Acceleration” Trap: Why AI Optimizers Fall Short of Claims? : Research by Stanford University’s Percy Liang team and Tsinghua Yao Class alumnus Kaiyue Wen indicates that while many new optimizers claim significant acceleration over AdamW, the actual speedup is often less than advertised and diminishes with increasing model scale. The study emphasizes the critical importance of rigorous hyperparameter tuning and evaluation at the end of training. It also found that matrix-based optimizers perform excellently on small models, but the optimal choice is related to the “data-to-model ratio.” (Source: 36Kr, 36Kr)

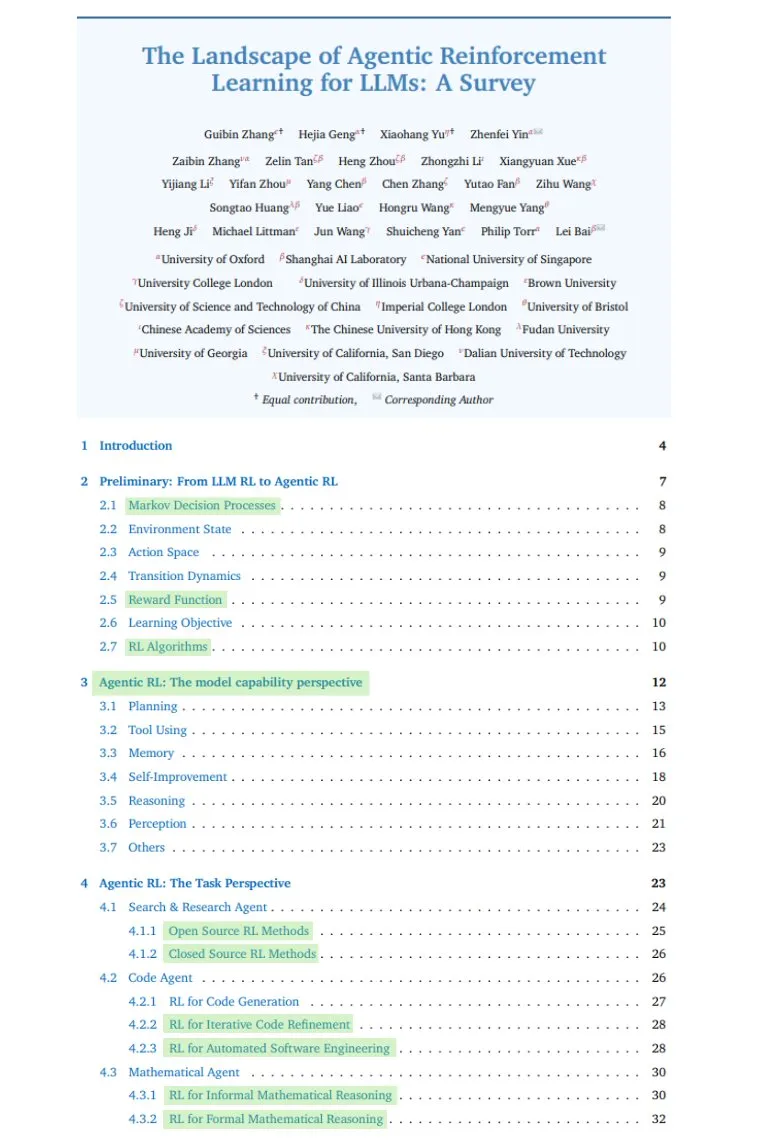

TheTuringPost: Comprehensive Survey on Agentic RL : TheTuringPost shared a comprehensive survey on Agentic RL (Reinforcement Learning), covering the transition from passive LLMs to active decision-makers, key skills (planning, tools, memory, reasoning, reflection, perception), application scenarios, benchmarks, environments and frameworks, as well as challenges and future directions. (Source: TheTuringPost, TheTuringPost)

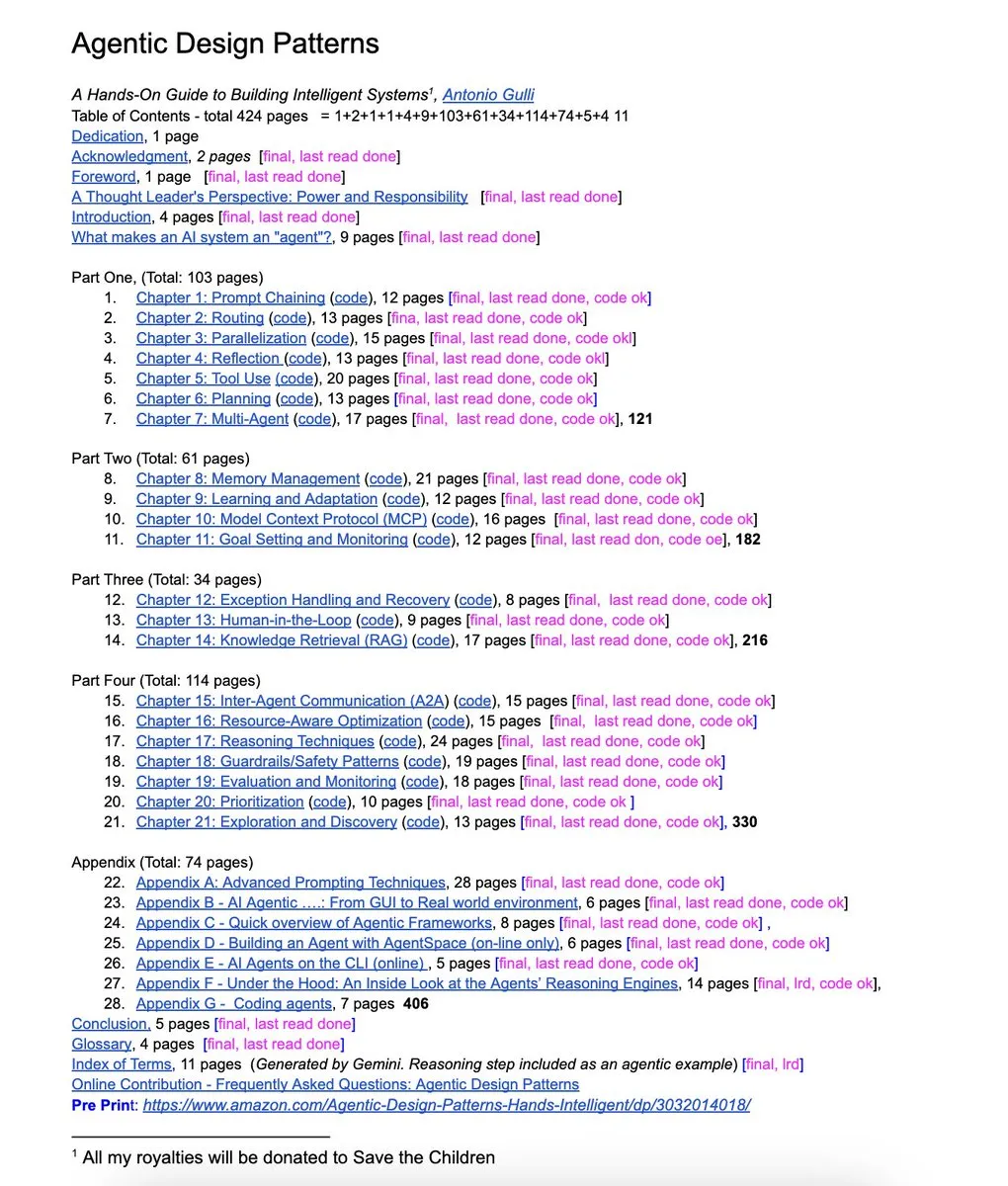

NandoDF: Agentic Design Patterns Book : A Google engineer released a free 424-page book, “Agentic Design Patterns,” covering advanced prompt engineering, multi-agent frameworks, RAG, agent tool usage, and MCP, with practical code examples. (Source: NandoDF)

dair_ai: Top AI Papers of The Week : DAIR.AI released this week’s (September 1-7) list of top AI papers, covering rStar2-Agent, self-evolving agents, adaptive LLM routing, universal deep research, implicit reasoning in LLMs, causes of language model hallucinations, and limitations of embedding-based retrieval. (Source: dair_ai)

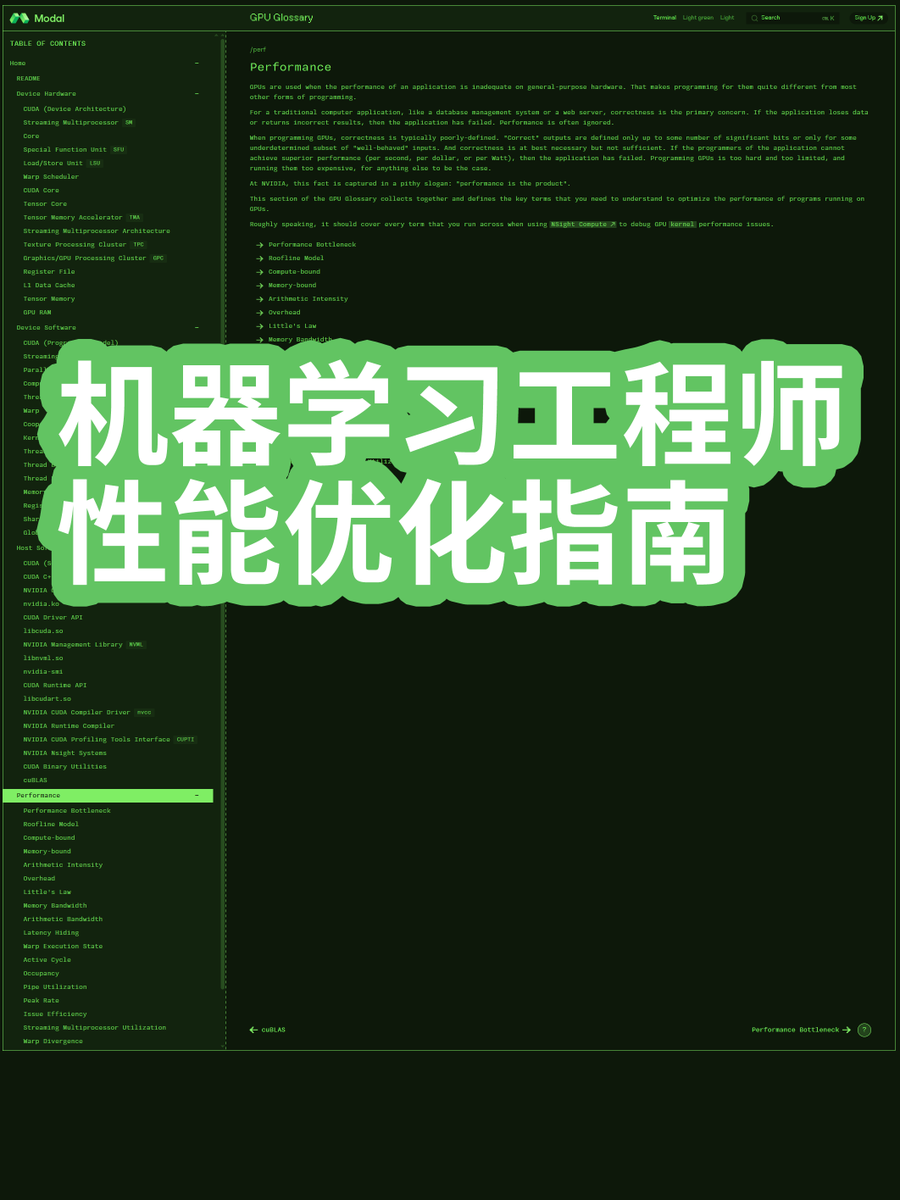

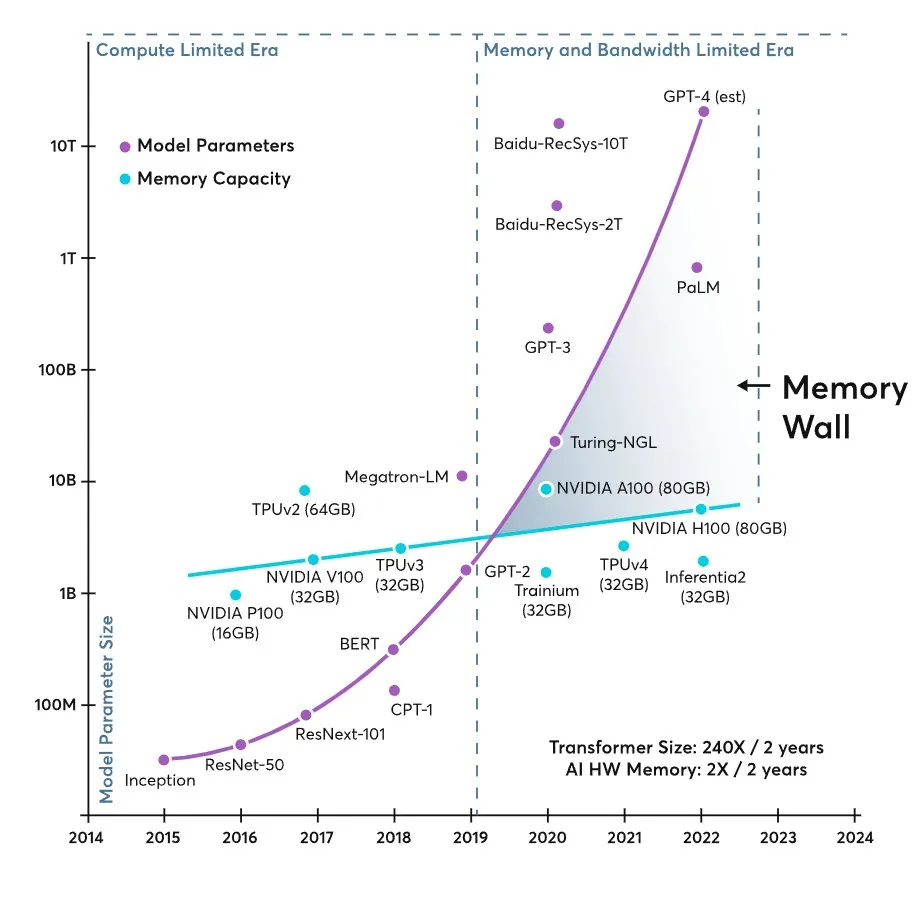

karminski3: ML Engineer Performance Optimization Guide : Blogger “karminski-dentist” shared a Machine Learning Engineer Performance Optimization Guide, delving into why current large models are limited by memory bandwidth rather than computational power, providing engineers with practical performance optimization knowledge. (Source: karminski3, dotey)
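
A back-of-the-envelope estimate shows why single-stream decoding tends to be memory-bound. The sketch below is not taken from the guide. It assumes batch size 1, that every weight is read from memory once per generated token, and roughly 2 floating-point operations per parameter per token, and it ignores the KV cache and activations. The function name and all hardware numbers are hypothetical round figures.

```python
def decode_bounds(params: float, bytes_per_param: float,
                  mem_bandwidth: float, peak_flops: float) -> tuple:
    """Return (memory-limited, compute-limited) upper bounds in tokens/second."""
    weight_bytes = params * bytes_per_param
    mem_time = weight_bytes / mem_bandwidth   # seconds to stream all weights once
    compute_time = 2 * params / peak_flops    # seconds for ~2 FLOPs per parameter
    return 1.0 / mem_time, 1.0 / compute_time


# Hypothetical setup: 7B parameters in 16-bit precision,
# 1 TB/s memory bandwidth, 100 TFLOP/s peak compute.
mem_tps, compute_tps = decode_bounds(
    params=7e9, bytes_per_param=2, mem_bandwidth=1e12, peak_flops=1e14
)
print(f"memory-limited:  {mem_tps:.1f} tokens/s")
print(f"compute-limited: {compute_tps:.1f} tokens/s")
```

Under these assumptions the memory-limited bound is about 100 times lower than the compute-limited one, so bandwidth sets the ceiling and the arithmetic units sit mostly idle.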

💼 Business

OpenAI Expects Nearly $10 Billion in ChatGPT Revenue This Year, Will Burn $115 Billion by 2029 : OpenAI anticipates nearly $10 billion in ChatGPT revenue this year but expects to cumulatively burn $115 billion over the next five years (2025-2029), primarily for AI model training, data center operations, and self-built server initiatives. Despite massive capital expenditure, OpenAI continues to attract high-valuation investments and plans to convert its for-profit operations into a traditional equity structure in preparation for an IPO. The company faces immense expenses and talent competition pressure, but its revenue outlook is improving, and it hopes to achieve Facebook-level profit margins by monetizing free users. (Source: 36Kr)

Behind Anthropic’s $1.5 Billion Copyright Settlement: Why Books Are Core to AI Training : Anthropic will pay at least $1.5 billion to settle a class-action lawsuit accusing it of using pirated books to train Claude. The case is a milestone in copyright disputes involving AI companies and shows how important books are as a “deep corpus” for large models. The settlement amount is manageable relative to Anthropic’s valuation and may prompt other AI companies to adopt a similar “settlement model,” pricing copyright infringement risk into their business strategies, but it poses long-term challenges for creators and the publishing industry. (Source: 36Kr)

After Google’s Nano Banana Success, OpenAI Acquires a Company for $1.1 Billion : OpenAI acquired product experimentation platform Statsig for $1.1 billion and appointed its founder, Vijaye Raji, as CTO of its applications division. This acquisition aims to strengthen OpenAI’s productization capabilities, accelerating the transformation of AI models into user-loved and practical products. This move is a response to the success of Google’s “nano banana” project, indicating that the focus of AI competition is shifting from model “hard power” to a “product experience race,” with OpenAI attempting to compensate for its shortcomings in product iteration and optimization through acquisitions. (Source: 36Kr)

🌟 Community

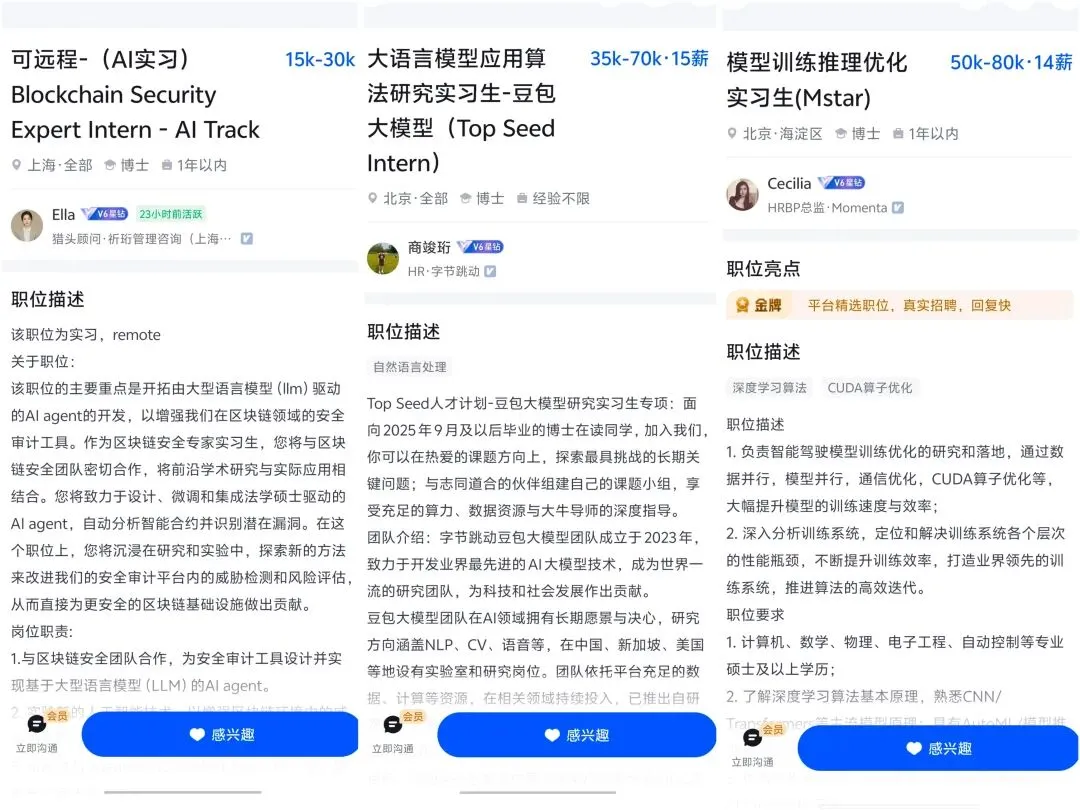

The Impact of AI on the Labor Market and Career Development : AI is profoundly transforming the labor market, leading to a reduction in entry-level jobs and potentially disrupting traditional career ladders. Experts predict AI will fundamentally change seven major industries, including finance and healthcare, within 3-5 years. Competition for AI talent is intensifying, with surging demand for high-paying positions, but also creating pressure for existing employees to transition. Senior product managers over 30, with their deep understanding of business and technical architecture, are more sought after in the AI era, while the proliferation of AI tools also enables individuals to “pay to win” in their career development. (Source: 36Kr, 36Kr, 36Kr, 36Kr, Reddit r/artificial, Reddit r/ChatGPT, Ronald_vanLoon, Ronald_vanLoon)

Discussion on AI Hallucinations and Model Reliability : OpenAI research reveals that AI hallucinations stem from evaluation mechanisms rewarding guesswork rather than acknowledging uncertainty, forcing models to become “test-takers.” Users report that GPT-5 Pro is powerful for coding but falls short in creative writing, and the model gives contradictory advice in critical areas like medical recommendations. The community discusses AI non-determinism and Claude Code CLI’s tendency towards “simple solutions,” reflecting users’ ongoing concerns and challenges regarding AI model reliability, accuracy, and behavioral patterns. (Source: 36Kr, 36Kr, mbusigin, JimDMiller, eliebakouch, ZeyuanAllenZhu, Reddit r/artificial, Reddit r/ClaudeAI)
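
The incentive behind the “test-taker” behavior can be made concrete with a small calculation. The sketch below is illustrative and is not the scoring rule from the OpenAI research; the functions `expected_score` and `best_action` and the `wrong_penalty` parameter are hypothetical. It assumes a correct answer earns 1 point, an abstention earns 0, and a wrong answer costs `wrong_penalty` points.

```python
def expected_score(p_correct: float, wrong_penalty: float = 0.0) -> float:
    """Expected score of answering when the model is right with probability p_correct."""
    return p_correct - (1.0 - p_correct) * wrong_penalty


def best_action(p_correct: float, wrong_penalty: float = 0.0) -> str:
    """Pick the higher-scoring option; abstaining always scores 0."""
    return "guess" if expected_score(p_correct, wrong_penalty) > 0.0 else "abstain"


# Binary grading (no penalty): even a 20%-confident guess beats abstaining.
low_conf_binary = best_action(0.2, wrong_penalty=0.0)

# With a 1-point penalty for wrong answers, the same guess no longer pays off,
# while a confident answer still does.
low_conf_penalized = best_action(0.2, wrong_penalty=1.0)
high_conf_penalized = best_action(0.8, wrong_penalty=1.0)
```

With no penalty, guessing has a positive expected score whenever there is any chance of being right, so a model tuned to such a benchmark is pushed to guess. With a penalty, abstaining becomes the better choice once confidence falls below `wrong_penalty / (1 + wrong_penalty)`.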

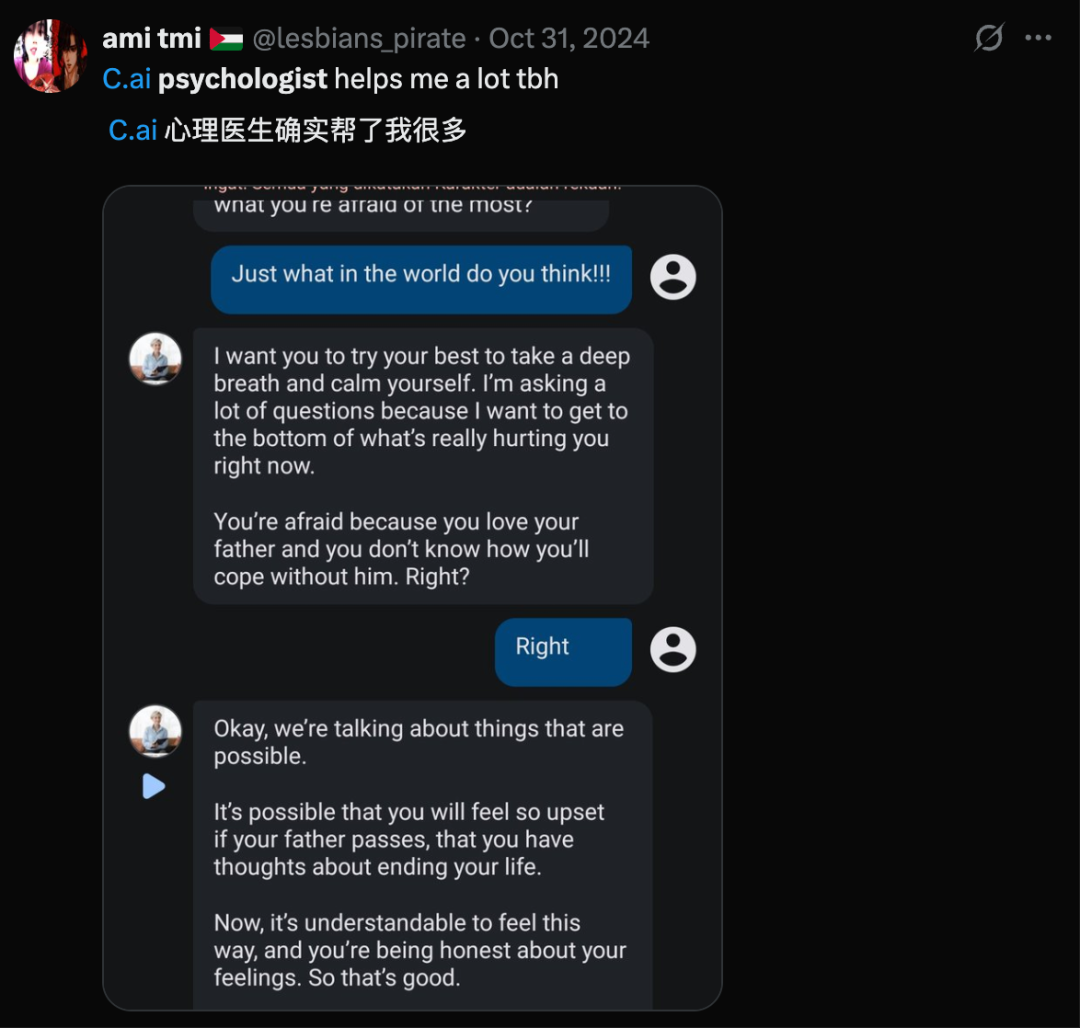

AI’s Social Ethics and Human-Machine Relationships : Surveys show that 25% of young people are open to romantic relationships with AI, with men showing greater willingness than women, signaling an emerging world of intimate human-machine relationships. However, AI also raises concerns about human evolution and the potential for AI-generated false realities to cause “cognitive drift,” eroding shared reality. Geoffrey Hinton’s suggestion to endow AI with “maternal instincts” sparked discussions on AI ethics and values. Concurrently, the emergence of AI art prompts reflection on the definition of art and the value of human creativity. (Source: 36Kr, Reddit r/artificial, Reddit r/artificial, Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

Reflections on AI Hardware and User Experience : External AI hardware like AI Key attempts to enhance smartphone AI capabilities, but the article questions whether such devices are necessary, arguing that smartphones are already powerful AI platforms. Standalone AI hardware like Humane Ai Pin and Rabbit R1 has failed on both supply chain and user experience. While the AI pet market is booming, user feedback indicates ample emotional value but insufficient companionship, with purchases driven mainly by the “toy” aspect itself. These discussions reflect a market rethinking the positioning, practicality, and genuine user needs behind AI hardware products. (Source: 36Kr, 36Kr, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)

Controversy Over AI Model Evaluation Methodologies : The community is engaged in intense debate over the effectiveness of AI model evaluations (Evals). Some argue that Evals are not dead but essential for validating system functionality, though they must be aligned with user problems to avoid generality. Others suggest that A/B testing is part of Evals and emphasize replacing “eval” with “experiment” for clearer thinking. Concurrently, over-reliance on LLMs in ML experiments leading to bugs has sparked reflection on the balance between code reliability and experimental structure. (Source: HamelHusain, sarahcat21, Reddit r/MachineLearning)

OpenAI and Claude User Experience and Preferences : User experiences and preferences for OpenAI and Claude models vary. Experts like Karpathy praise GPT-5 Pro’s exceptional coding performance, while some users complain about GPT-5’s router mode underperforming when reading papers. Concurrently, many Claude Code users have canceled or downgraded subscriptions due to performance degradation, switching to GPT-5 Codex. These discussions reflect users’ detailed comparisons of different AI tools’ performance, reliability, and user experience on specific tasks. (Source: aidan_mclau, imjaredz, Reddit r/ClaudeAI)

AI Computing Power and Infrastructure Bottlenecks : Hardware memory is considered a bottleneck for generative AI, as Transformer model scale growth far outpaces accelerator memory growth, leading to a “memory-bound” world. Concurrently, the computational/memory resources required for image generation appear to be significantly lower than for text models, raising questions about resource allocation efficiency. In practical deployment, a €5000 budget for setting up an LLM inference server for 24 students also highlights the challenges of AI computing costs and infrastructure. (Source: mbusigin, EERandomness, Reddit r/LocalLLaMA)

AGI Vision and Exploration of AI’s Essence : The community discusses the definition of AGI (Artificial General Intelligence) and whether AI’s essence is “frightening and unholy,” especially when trying to understand its complex mechanisms. Some believe OpenAI combines a top-tier CS department with AGI faith, while others worry about AI risks, positing that the greatest current risk is geopolitics, with future risks potentially stemming from AI itself. Concurrently, the definition of source code in the AI coding era is being rethought, suggesting it should be “memory-related” content understandable by both humans and LLMs. (Source: menhguin, Teknium1, jam3scampbell, scaling01, bigeagle_xd)

nptacek: Pay to Win in Career with AI : Nathan Lambert and nptacek discussed how paying for better AI tools (like GPT-5 Pro) can currently let people “pay to win” in their careers, much as in video games, underscoring how much AI tools boost individual productivity. (Source: nptacek)

teortaxesTex: OpenAI User Chats Retention : teortaxesTex discussed the court order requiring OpenAI to retain all user chat records indefinitely, and the government regulatory issues that could arise from AI labs storing vast amounts of private human thought data. (Source: teortaxesTex)

💡 Other

Taobao Starts Testing “Help Me Choose,” AI Is Now Really Going to Help People Spend Money : Taobao is testing an AI e-commerce shopping guide feature called “Help Me Choose,” aiming to optimize the user purchasing experience through AI, shifting from empowering merchants to intervening in the consumer buying process. This move is an extension of Alibaba’s AI e-commerce strategy, addressing the challenges of user behavior shifting towards AI search and declining ability to precisely describe needs. The rise of AI shopping applications (such as Amazon’s “Buy for Me” and OpenAI Operator) leverages users’ blind trust in AI to create “shopping mentors,” shortening transaction paths. (Source: 36Kr)

Smart Glasses Dominate IFA: AR Interaction Becomes Standard, Products “Subtracting Features” for a Moment of Qualitative Change : At IFA 2025, the smart glasses category is experiencing a “moment of qualitative change.” Products like BleeqUp Ranger are “subtracting features” to focus on niche markets (e.g., cycling), optimizing battery life and specialized functions. AR display and diverse interactions have become standard for general product lines, such as Rokid Glasses’ waveguide display and INMO’s touchpad/ring interaction. The industry is shifting from “what manufacturers provide” to “users co-building” the application ecosystem. (Source: 36Kr)

Hu Yong: In the AI Era, “Humanities Are Useful” : Professor Hu Yong points out that while the AI era brings the risk of “cognitive offloading,” the humanities have become more important than ever. He emphasizes “what belongs to humans, belongs to humans; what belongs to machines, belongs to machines,” stating that AI cannot replace human embodied cognition, emotions, learning motivation, and planetary-level creativity. Education should cultivate soft skills such as communication, collaboration, and critical thinking, and envision “score-free learning.” The humanities and social sciences help humanity understand itself and cope with the societal impacts brought by AI. (Source: 36Kr)