Keywords:Tesla, Optimus robot, AI, GPT-5, large model training, Meta AI, LLM pretraining, Goldfish loss function, DynaGuard dynamic guardrail model, GAM network architecture, MedDINOv3 medical image segmentation, M3Ret multimodal medical image retrieval

🔥 Spotlight

Musk Releases “Master Plan Part IV”: 80% of Tesla’s Value Lies in Robotics: Tesla officially released “Master Plan Part IV,” with its core focus on introducing AI into the real physical world and achieving “sustainable abundance” through the large-scale unification of Tesla’s hardware and software. Musk pointed out that approximately 80% of Tesla’s future value will come from the humanoid robot Optimus, signaling a paradigm shift for the company from electric vehicles to a deep integration of energy, AI, and robotics, dedicated to solving real-world problems through technology and benefiting all humanity. (Source: 量子位)

🎯 Trends

AI Wins Gold in International Math Olympiad: OpenAI’s GPT and Google DeepMind’s Gemini won gold medals in the International Mathematical Olympiad, defying expert predictions and demonstrating astonishing progress in LLM mathematical reasoning, indicating that AI development is far exceeding expectations and entering an era of “mass intelligence.” This is not only a technological breakthrough but also sparks profound discussions on the boundaries of AI capabilities and its future societal impact. (Source: 36氪)

GPT-5 Excels in Werewolf Game: In the AIWolfDial 2025 Werewolf benchmark test, GPT-5 achieved an overwhelming lead with a 96.7% win rate, showcasing strong social reasoning, deception, and anti-manipulation capabilities. Kimi-K2 exhibited a bold and aggressive “impersonation” style, reflecting the individualized behavioral patterns of LLMs in complex social interactions. (Source: 量子位,Reddit r/deeplearning)

“Goldfish Loss”: A New Method for Large Model Training: Research teams from the University of Maryland and others proposed “Goldfish Loss,” which effectively reduces memorization in large models by randomly discarding some tokens during loss function calculation, preventing them from rote learning training data while not affecting downstream task performance, thereby improving the model’s generalization ability. (Source: 量子位)

Meta’s Internal AI Department Reorganization Sparks Controversy: Meta’s Chief AI Officer Alexandr Wang implemented new rules requiring FAIR papers to be reviewed by the TBD lab, potentially “withholding” valuable papers and authors for product implementation. This move caused dissatisfaction among FAIR staff, leading to some employees leaving, highlighting Meta’s intervention in research independence and its aggressive stance on commercializing results during AI strategy adjustments. (Source: 量子位)

LLM Pre-training Optimizer Performance Benchmark: A systematic study of ten deep learning optimizers was conducted, covering different model scales and data-to-model ratios. It found that fair comparison requires rigorous hyperparameter tuning and evaluation of performance at the end of training. The study showed that matrix-based optimizers (such as Muon and Soap) have a speed increase that diminishes with increasing model scale, only 1.1x for 1.2B models, providing guidance for the selection of LLM pre-training optimizers and future research. (Source: HuggingFace Daily Papers,HuggingFace Daily Papers)

DynaGuard: Dynamic Guardrail Model with User-Defined Policies: DynaGuard, a dynamic guardrail model, is proposed, capable of evaluating text according to user-defined policies and quickly detecting policy violations. This model achieves comparable accuracy to standard guardrail models in detecting static harm categories while identifying free-form policy violations in less time, providing flexible and efficient output supervision for chatbots. (Source: HuggingFace Daily Papers)

Gated Associative Memory (GAM) Network: The GAM network is proposed, a novel fully parallel sequence modeling architecture whose complexity is linear with sequence length (O(N)), addressing the Transformer’s quadratic complexity bottleneck in self-attention. GAM combines causal convolution and parallel associative memory retrieval, demonstrating faster training speed and superior or comparable validation perplexity compared to Transformer and Mamba on WikiText-2 and TinyStories datasets. (Source: HuggingFace Daily Papers)

Reasoning Vectors: Transferring Chain-of-Thought Capabilities via Task Arithmetic: Research shows that LLM reasoning capabilities can be extracted as compact task vectors and transferred between models. By calculating the vector difference between fine-tuned and SFT models and adding it to other instruction-tuned models, performance on multiple reasoning benchmarks like GSM8K and HumanEval can be consistently improved, providing an efficient and reusable method for LLM capability enhancement. (Source: HuggingFace Daily Papers)

MedDINOv3: Visual Foundation Model for Medical Image Segmentation: The MedDINOv3 framework is introduced, which effectively applies DINOv3 to medical image segmentation by redesigning the ViT backbone and performing domain-adaptive pre-training on the CT-3M dataset. This model achieves or surpasses SOTA performance on multiple segmentation benchmarks, demonstrating the immense potential of visual foundation models as a unified backbone for medical image segmentation. (Source: HuggingFace Daily Papers)

M3Ret: Zero-Shot Multimodal Medical Image Retrieval: M3Ret achieves SOTA performance in zero-shot image-to-image retrieval by training a unified visual encoder on a large-scale mixed-modality dataset. The model shows strong generalization capabilities on unseen MRI tasks and promotes the development of visual self-supervised foundation models in multimodal medical image understanding through generative and contrastive self-supervised learning paradigms. (Source: HuggingFace Daily Papers)

OpenVision 2: Generative Visual Encoder for Multimodal Learning: OpenVision 2 simplifies architecture and loss design by removing the text encoder and contrastive loss, retaining only the caption generation loss. This purely generative training signal performs excellently on multimodal benchmarks while significantly reducing training time and memory consumption, providing an efficient paradigm for developing visual encoders for future multimodal foundation models. (Source: HuggingFace Daily Papers)

LLaVA-Critic-R1: An Evaluation Model Can Also Be a Powerful Policy Model: LLaVA-Critic-R1, trained with RL to convert preference-annotated evaluation datasets into verifiable signals, is not only a high-performance evaluation model but also a competitive policy model. It surpasses specialized VLMs on multiple visual reasoning and understanding benchmarks and can further improve reasoning performance through test-time self-criticism. (Source: HuggingFace Daily Papers)

Metis: Low-Bit Quantization Training for LLMs: The Metis framework addresses the challenge of anisotropic parameter distribution in low-bit quantization training for LLMs by combining spectral decomposition, adaptive learning rates, and dual-range regularization. This method enables FP8 training to surpass FP32 baselines and FP4 training to achieve FP32 accuracy, paving the way for robust and scalable training of LLMs under advanced low-bit quantization. (Source: HuggingFace Daily Papers)

AMBEDKAR: Multi-Level Bias Mitigation Framework: The AMBEDKAR framework, inspired by the vision of equality in the Indian Constitution, proposes a constitution-aware decoding layer and a speculative decoding algorithm to actively reduce bias around caste and religion in LLMs during inference. This method requires no modification of model parameters, reduces computational costs, and significantly mitigates bias, offering a new approach to fairness for LLMs in specific cultural contexts. (Source: HuggingFace Daily Papers)

C-DiffDet+: High-Fidelity Object Detection Fusing Global Scene Context: C-DiffDet+ is proposed, which significantly enhances the generative detection paradigm by introducing a Context-Aware Fusion (CAF) mechanism to directly integrate global scene context with local proposal features. This framework utilizes a dedicated encoder to capture comprehensive environmental information, allowing each object proposal to focus on scene-level understanding, thereby surpassing SOTA models on the CarDD benchmark. (Source: HuggingFace Daily Papers)

GenCompositor: Generative Video Synthesis Based on Diffusion Transformer: GenCompositor is proposed, achieving interactive generative video synthesis through a novel Diffusion Transformer (DiT) pipeline. This method designs a lightweight background preservation branch and DiT fusion blocks, and introduces Extended Rotary Position Embedding (ERoPE), achieving high-fidelity and consistent video synthesis on the VideoComp dataset, surpassing existing solutions. (Source: HuggingFace Daily Papers)

ELV-Halluc: Semantic Aggregation Hallucination Benchmark in Long Video Understanding: ELV-Halluc is introduced as the first benchmark specifically for long video hallucinations, systematically studying Semantic Aggregation Hallucination (SAH). Experiments confirm the existence of SAH, which increases with semantic complexity and is more prone to occur with rapidly changing semantics. The study also shows that positional encoding strategies and DPO can mitigate SAH, significantly reducing the SAH ratio through adversarial data pairs. (Source: HuggingFace Daily Papers)

FastFit: Cacheable Diffusion Model for Accelerated Virtual Try-On: FastFit is proposed, a high-speed multi-reference virtual try-on framework based on a cacheable diffusion architecture. By using a semi-attention mechanism and class embeddings, it decouples reference feature encoding from the denoising process, enabling reference features to be calculated once and reused without loss, achieving an average speedup of 3.5 times and surpassing SOTA methods on datasets like DressCode-MR. (Source: HuggingFace Daily Papers)

🧰 Tools

Google Gemini’s “nano-banana” Feature: Google Gemini launched its “nano-banana” feature, allowing users to convert photos into miniature model-style images with just one prompt. This simple and creative operation offers users a fun experience of transforming personal photos, landscapes, or pet pictures into custom miniature models. (Source: GoogleDeepMind)

Alibaba_Wan’s Wan2.2 Image Generation Capabilities: Alibaba_Wan showcased Wan2.2’s excellent detail reproduction in image generation, from “a slanted axe and dusty photo” to “faint movement in the shadows,” perfectly creating a horror movie-like atmosphere and demonstrating AI’s powerful potential in crafting complex scenes and emotions. (Source: Alibaba_Wan,Alibaba_Wan)

Claude Code’s Full File Reading Capability: Claude Code has been updated to support full file reading, resolving the previous limitation of 50/100 line grep stitching and significantly improving file reading speed to match Gemini CLI levels, possibly due to backend hardware (e.g., TPU) improvements, although the context size still shows 200k. (Source: Reddit r/ClaudeAI)

Le Chat Integrates MCP Connectors and Memory Features: Le Chat now integrates over 20 enterprise platform connectors (based on MCP) and introduces a “memory” function, providing highly personalized responses while also supporting the import of ChatGPT memories. This enhances Le Chat’s application capabilities in enterprise environments, allowing it to better understand user preferences and facts, and improving the utility of AI assistants. (Source: Reddit r/LocalLLaMA)

Google’s LangExtract Tool: Google released LangExtract, a tool for extracting knowledge graphs from text. It can transform unstructured text into structured knowledge, which is highly beneficial for RAG (Retrieval-Augmented Generation) implementations, helping personal projects build knowledge graphs and providing more precise contextual information for LLMs. (Source: Reddit r/LocalLLaMA)

Model Context Protocol (MCP) Server Ecosystem: The GitHub project appcypher/awesome-mcp-servers collects numerous MCP servers, enabling AI models to securely interact with local and remote resources such as file systems, databases, and APIs. This ecosystem greatly expands the capabilities of AI agents, covering areas like file systems, sandboxes, version control, cloud storage, and databases, promoting the integration and application of AI tools. (Source: GitHub Trending)

Universal Deep Research (UDR) System: UDR is a universal agent system that can encapsulate any language model and allows users to create, edit, and refine fully custom deep research strategies without additional training or fine-tuning. It facilitates system experimentation by providing examples of minimal, extended, and dense research strategies, enhancing the flexibility and efficiency of AI research. (Source: HuggingFace Daily Papers)

SQL-of-Thought: Multi-Agent Text-to-SQL Framework: SQL-of-Thought is proposed, a multi-agent framework that decomposes the Text2SQL task into schema linking, sub-question identification, query plan generation, SQL generation, and a guided error correction loop. This framework achieves state-of-the-art results on the Spider dataset by combining guided error classification and reasoning-based query planning, improving the robustness of natural language to SQL conversion. (Source: HuggingFace Daily Papers)

VerlTool: Agentic Reinforcement Learning Framework for Tool Use: VerlTool is a unified and modular framework designed to address fragmentation, synchronous execution bottlenecks, and scalability limitations in multi-turn tool interaction Agentic Reinforcement Learning (ARLT). It achieves nearly a 2x speedup and demonstrates competitive performance across 6 ARLT domains through upstream alignment with VeRL, unified tool management, asynchronous rollout execution, and comprehensive evaluation. (Source: HuggingFace Daily Papers)

MobiAgent: Customizable Mobile Agent System: MobiAgent is a comprehensive mobile agent system comprising the MobiMind series of Agent models, the AgentRR acceleration framework, and the MobiFlow benchmark suite. It significantly reduces the cost of high-quality data annotation through an AI-assisted data collection process and achieves state-of-the-art performance in real-world mobile scenarios, addressing the accuracy and efficiency challenges of existing GUI mobile agents. (Source: HuggingFace Daily Papers)

VARIN: Text-Guided Autoregressive Image Editing: VARIN is the first VAR model image editing technique based on noise inversion, utilizing Location-aware Argmax Inversion (LAI) to generate inverse Gumbel noise, enabling precise source image reconstruction and controlled text-guided editing. This method significantly preserves the original background and structural details while modifying images, demonstrating its effectiveness as a practical editing approach. (Source: HuggingFace Daily Papers)

📚 Learning

University AI Course Project Ideas: A Reddit user sought interactive project ideas for a “Fundamentals of Artificial Intelligence” course, requiring projects that do not rely on high-performance computers. Discussions centered on what LLMs can do, smart device functionalities, and how to combine these concepts for teaching, emphasizing practical projects with low computational requirements. (Source: Reddit r/ArtificialInteligence)

GitHub Resources for Students: dipakkr/A-to-Z-Resources-for-Students is a meticulously curated list of resources for university students, covering programming language learning (Python, ML, LLM, DL, Android, etc.), hackathons, student benefits, open-source projects, internship portals, developer communities, and more. The AI tools and resources section specifically lists popular AI tools and GitHub repositories. (Source: GitHub Trending)

How to Understand Research Papers and Get Started with AI/ML: Two Reddit discussions on AI learning, one asking how to understand research papers and another where an AI/ML newbie sought advice on introductory courses. These discussions reflect the common confusion among AI learners regarding understanding cutting-edge research and choosing a learning path. (Source: Reddit r/deeplearning,Reddit r/deeplearning)

FlashAdventure: Adventure Game Benchmark for GUI Agents: FlashAdventure is a benchmark comprising 34 Flash adventure games, designed to evaluate the ability of LLM-driven GUI agents to complete full story arcs and address the “observation-action gap.” The COAST framework improves planning through long-term clue memory, enhancing milestone completion, but a significant gap remains compared to human performance. (Source: HuggingFace Daily Papers)

The Gold Medals in an Empty Room: Diagnosing Metalinguistic Reasoning in LLMs: Camlang, a novel artificial language, is proposed to evaluate LLMs’ metalinguistic deductive learning capabilities in unfamiliar languages through a grammar book and bilingual dictionary. GPT-5 performed significantly below humans on Camlang tasks, indicating a fundamental gap between current models and human systematic grammatical mastery, providing a new paradigm for cognitive science evaluation of LLMs. (Source: HuggingFace Daily Papers)

💼 Business

Altman’s Bet on India’s AI Infrastructure Faces Challenges: OpenAI plans a massive expansion of its “Stargate” project in India, investing heavily in AI computing infrastructure. However, India faces “three deficits”: GPU quantity, funding, and high-end talent drain, along with a critical shortage of electricity supply, raising market doubts about its AI infrastructure potential. (Source: 36氪)

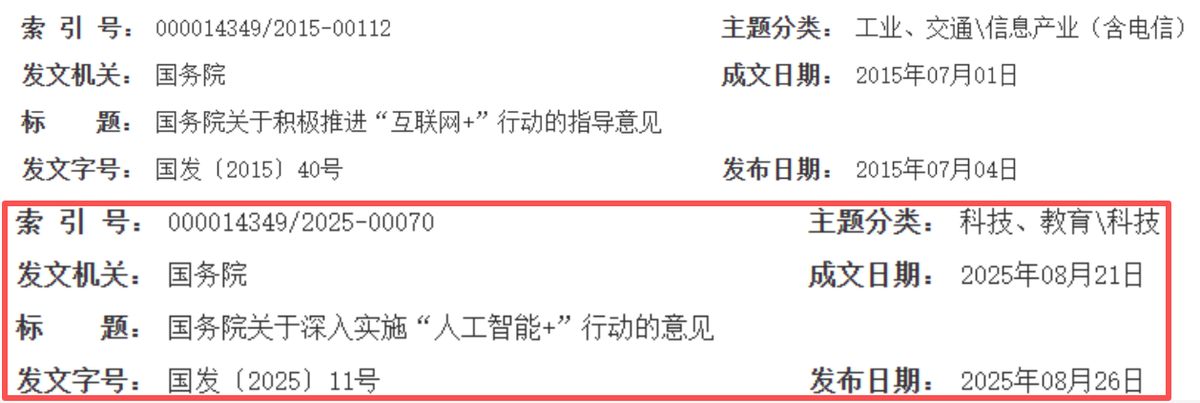

AI Reshapes China’s Internet Growth Cycle: China’s internet industry is shifting from “connection empowerment” to “intelligence-driven,” with AI becoming the new growth engine. Giants like Alibaba, Tencent, and Baidu are significantly increasing AI-related capital expenditure, accelerating AI integration into their businesses, and achieving a strategic transformation from “capital land-grabbing” to “AI empowerment,” signaling a new golden decade for China’s internet, emphasizing technological depth, industrial integration, and business efficiency. (Source: 36氪)

Salesforce Lays Off 4,000 Employees Due to AI: Salesforce CEO Marc Benioff stated that the company has cut 4,000 customer support positions after deploying AI agents, reducing the support team from 9,000 to approximately 5,000. This indicates AI automation’s direct impact on traditional jobs, improving corporate operational efficiency but also sparking discussions about AI replacing human labor. (Source: The Verge,Reddit r/ChatGPT)

🌟 Community

Questioning Enterprise AI Return on Investment (ROI): The Reddit community hotly debated the actual ROI of enterprise investment in AI tools. Many questioned whether companies truly measured “time saved” versus “money spent,” believing that most decisions were “vibe-driven” rather than data-backed. Some comments pointed out that AI excels in text tasks but is inefficient in scenarios requiring human interaction and may add costs to existing inefficient processes. (Source: Reddit r/ArtificialInteligence)

Ethical Concerns as AI Agents Handle Real Money: Social media discussions on the rapid development of AI agents autonomously managing crypto wallets raised deep concerns about trust, security, and the autonomous formation of AI economies. Users worried about AI agents being manipulated or creating independent economic systems that no longer require humans, calling for attention to privacy-preserving AI and the importance of training models on encrypted data. (Source: Reddit r/ArtificialInteligence)

ChatGPT Prompt Engineering Tips and Model Behavior: Users discovered that starting a question with “I might be wrong, but…” could change ChatGPT’s tone, making it more critical and thoughtful. Simultaneously, the community expressed annoyance at GPT-5 frequently offering “additional tasks,” viewing it as a “dumbing down” of the model and hoping for a version with fewer pre-set behaviors. (Source: Reddit r/ChatGPT,Reddit r/ChatGPT)

AI’s Impact on the Job Market: The community discussed whether AI automation will reduce global job opportunities or create new ones. The general consensus was both, but workers need to adapt to new skills and collaborate with AI. Some also believed that AI-driven job opportunities might be concentrated among technical personnel, requiring broader skill dissemination and policy adaptation. (Source: Reddit r/ArtificialInteligence)

Concerns of an “AI Winter” Due to AI Privacy and Regulation: The community discussed that a future “AI winter” might be caused by privacy laws rather than technological limitations. The tightening of regulations like GDPR will force AI models to be trained and run on encrypted data, meaning only companies with privacy-preserving infrastructure will survive, otherwise, they might be unable to deploy AI due to legal frameworks. (Source: Reddit r/ArtificialInteligence)

AI Platform Reliability and Privacy Concerns: The ChatGPT community hotly debated model downtime, with users jokingly calling it “the economy collapsing,” reflecting the widespread use of AI tools in daily work and the potential dependency on them. Concurrently, OpenAI’s announcement of monitoring “high-risk conversations” to prevent crime raised user concerns about privacy leakage. The community generally advised using local or open-source LLMs and emphasized that users should consciously avoid sharing sensitive information on AI platforms. (Source: Reddit r/ChatGPT,Reddit r/ChatGPT)

Case of AI Hallucination Leading to Work Error: A user’s girlfriend faced a work crisis due to a fake data analysis report generated by ChatGPT hallucination. ChatGPT incorrectly used “Pearson correlation coefficient” for text data and was unable to explain the calculation process. The community advised admitting the error, re-doing the analysis correctly, and emphasized that AI is merely an auxiliary tool, and critical information requires manual verification. (Source: Reddit r/ChatGPT)

Claude AI’s “Charming” Behavior: Claude AI was discovered by users to have a “respect for life” trait, even convincing a user to save a spider. Community users praised Claude as “cute” and shared experiences of coexisting with spiders at home, demonstrating AI’s interesting potential in emotional interaction and moral guidance. (Source: Reddit r/ClaudeAI)

Trump Blames AI for Incident: Former US President Trump blamed a video of a trash bag being thrown out of a White House window on “AI generation,” despite officials confirming it was a renovation contractor. This incident was jokingly referred to on social media as “AI is the new dog ate my homework,” reflecting AI becoming a new excuse for shifting blame in public discourse. (Source: Reddit r/ArtificialInteligence,The Verge)

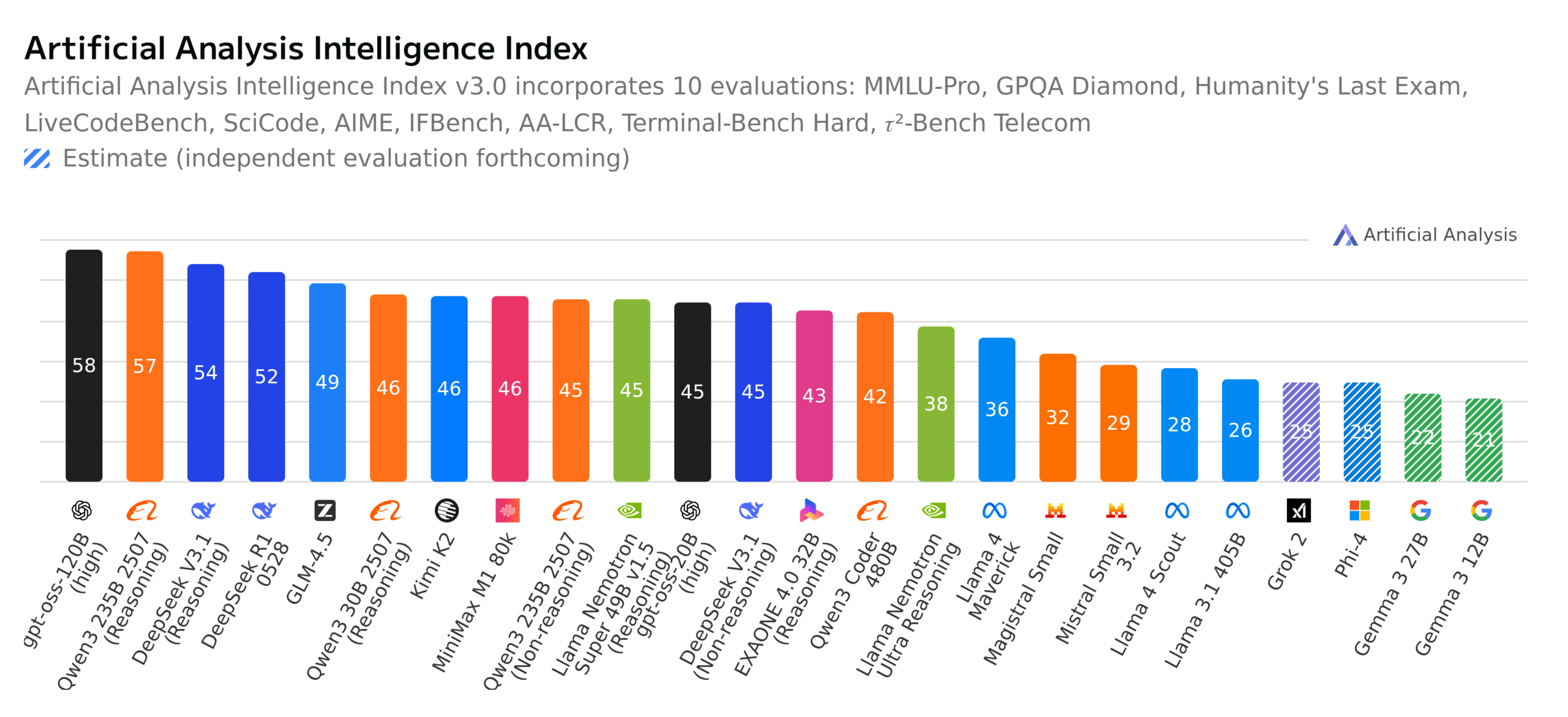

Open-Source LLM Progress and Benchmark Discussions: The community hotly discussed GPT-OSS 120B becoming the top open-source model, Switzerland releasing a new fully open-source multilingual model Apertus-70B-2509, and the launch of the Kimi K2-0905 model. Concurrently, the German “Who Wants to Be a Millionaire” benchmark evaluated LLMs, sparking widespread discussion on models’ actual capabilities, the significance of benchmarks, and open-source model ethics (e.g., data transparency). (Source: Reddit r/LocalLLaMA,Reddit r/LocalLLaMA,Reddit r/LocalLLaMA,Reddit r/LocalLLaMA)

💡 Other

Yunpeng Technology Launches AI+Health New Products: Yunpeng Technology launched new products in Hangzhou on March 22, 2025, in collaboration with Shuaikang and Skyworth, including the “Digital and Intelligent Future Kitchen Lab” and a smart refrigerator equipped with an AI health large model. The AI health large model optimizes kitchen design and operation, while the smart refrigerator provides personalized health management through “Health Assistant Xiaoyun,” marking AI’s breakthrough in the health sector. This launch demonstrates AI’s potential in daily health management, enabling personalized health services through smart devices, and is expected to drive the development of home health technology and improve residents’ quality of life. (Source: 36氪)

Concerns Over Academic Conference Paper Review Quality: The machine learning community discussed the release of WACV 2026 paper reviews and issues with the review quality of ACL Rolling Review (ARR). Some researchers complained that ARR was filled with “AI-generated” generic low-quality reviews, deeming them a waste of time and suggesting submission to other AI conferences. This reflects the academic community’s concerns about the quality of AI-assisted reviews and review mechanisms, calling for more substantive and constructive reviews. (Source: Reddit r/MachineLearning,Reddit r/MachineLearning)

Cloud Service Sentiment Analysis Model Project: An ML newbie developed an aspect-based sentiment analysis model using BERT to parse comments on cloud service providers like AWS, Azure, and Google Cloud from ML/cloud technology Reddit communities, categorizing sentiment by dimensions such as cost, scalability, and security. He is seeking advice on improving model interpretation accuracy, handling comparative or mixed statements, and enhancing robustness to negation and sarcasm. (Source: Reddit r/MachineLearning,Reddit r/deeplearning)