Keywords: xAI engineer, OpenAI, intellectual property, industry competition, AI model, code theft, GPU market, AI ethics, xAI engineers joining OpenAI, Huawei 96GB VRAM GPU, Meituan LongCat-Flash-Chat model, AI applications in finance, AI Agent technical challenges

🔥 Focus

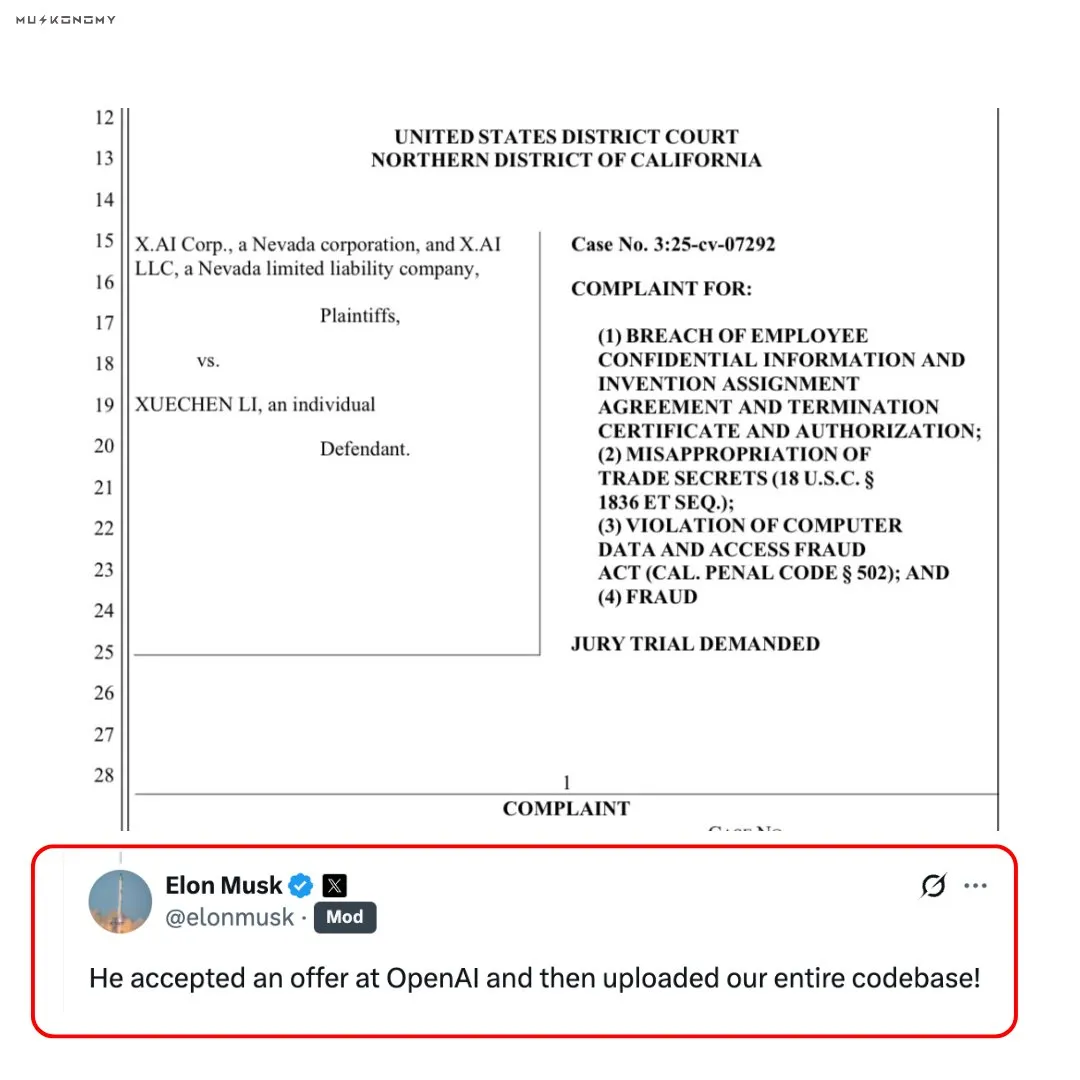

Controversy over xAI Engineer Joining OpenAI and Allegedly Stealing Code: Elon Musk stated that a former xAI engineer who went on to join OpenAI had uploaded xAI’s entire codebase. The engineer had previously sold $7 million worth of xAI stock. The allegation sparked intense discussion about intellectual property theft and competitive ethics in the AI industry, and it further strains the rivalry between OpenAI and xAI. On social media, many have questioned the accuracy of the claims and debated their ethical implications. (Source: scaling01, teortaxesTex, Reddit r/ChatGPT)

🎯 Trends

Nous Hermes 4 Model Released: Nous Research released Hermes 4, a hybrid “reasoning model” that can switch between quick responses and extended deliberation using simple tags. It was trained on 50 times more data than its predecessor, is tuned to resist sycophancy, and scored highly on the SpeechMap benchmark. (Source: Teknium1, Teknium1, Teknium1)

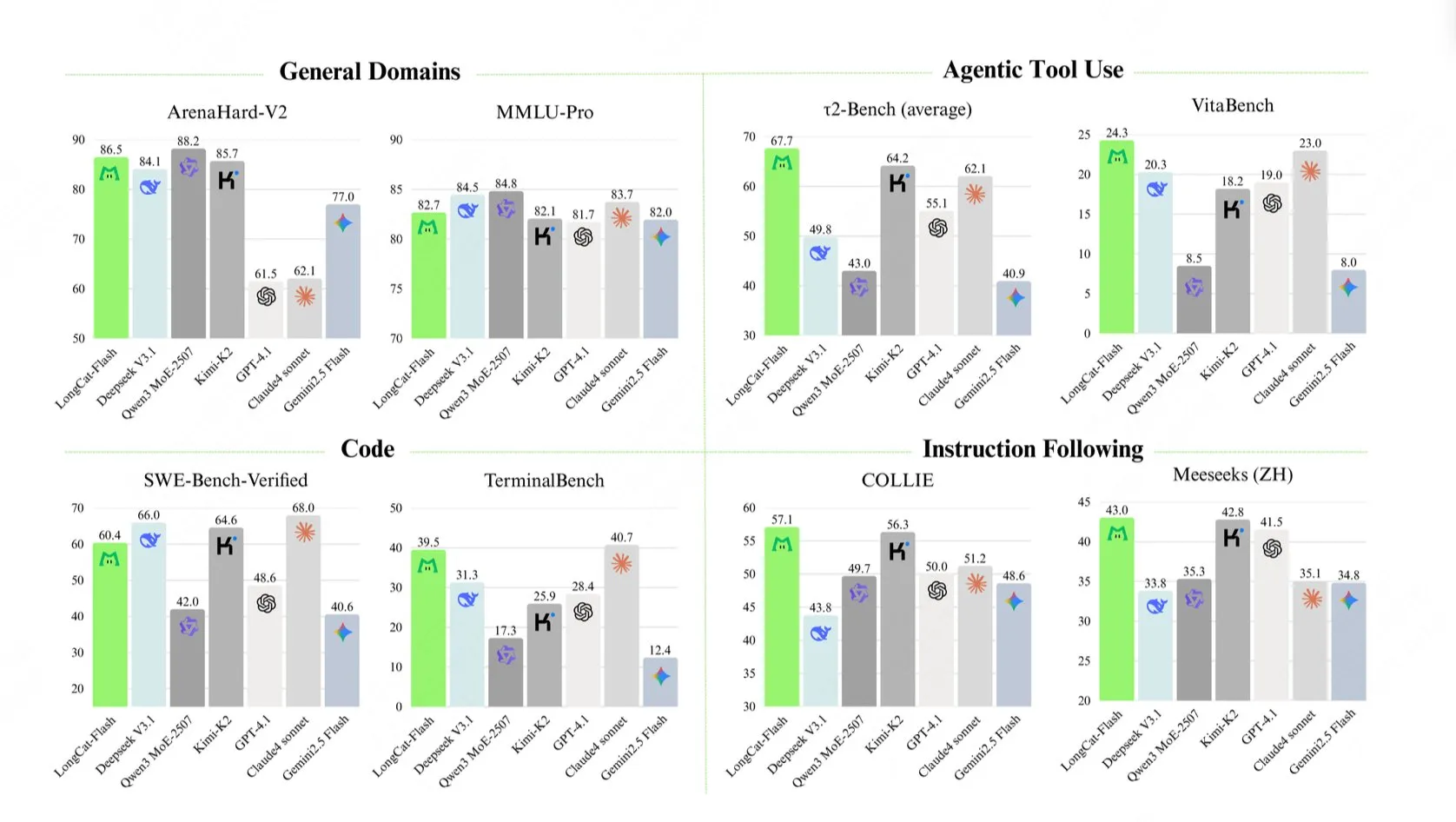
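
Hybrid reasoning models of this kind typically wrap their deliberation in a delimiter and put the final answer after it, so client code often needs to separate the two. A minimal sketch, assuming the reasoning is wrapped in `<think>...</think>` tags (an assumption here; check the Hermes 4 model card for the exact format). `split_reasoning` is a hypothetical helper, not part of any Nous Research library:

```python
import re
from typing import Optional, Tuple

# Assumption: reasoning is wrapped in <think>...</think>; check the model card.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(completion: str) -> Tuple[Optional[str], str]:
    """Return (reasoning, answer); reasoning is None if the model answered directly."""
    match = THINK_RE.search(completion)
    if match is None:
        return None, completion.strip()
    reasoning = match.group(1).strip()
    # Everything outside the think block is treated as the user-facing answer.
    answer = (completion[: match.start()] + completion[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    print(split_reasoning("<think>2 + 2 is 4.</think>The answer is 4."))
    # ('2 + 2 is 4.', 'The answer is 4.')
    print(split_reasoning("The answer is 4."))
    # (None, 'The answer is 4.')
```

A response without the tags is treated as a quick, direct answer, which is the other half of the hybrid behavior.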

Meituan LongCat-Flash-Chat Large Model Released: Meituan released LongCat-Flash-Chat, a Mixture-of-Experts language model with 560 billion total parameters. Its dynamic computation mechanism activates between 18.6 billion and 31.3 billion parameters per token (about 27 billion on average) depending on context, reaches inference speeds above 100 tokens/second, and performs strongly on agentic benchmarks such as TerminalBench and τ²-Bench. (Source: reach_vb, teortaxesTex, bigeagle_xd, Reddit r/LocalLLaMA)
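
A variable number of activated parameters can come from a Mixture-of-Experts router that may send some of a token’s top-k slots to “zero-computation” experts, which pass the input through unchanged and cost no FLOPs; Meituan describes LongCat-Flash’s mechanism in these terms. The toy below only illustrates the parameter counting: every size is made up, and random router scores stand in for a learned router, which would send easier tokens to the zero-computation experts more often.

```python
import random
from typing import List

# Toy configuration (hypothetical numbers, not LongCat's real sizes).
NUM_REAL_EXPERTS = 8       # FFN experts that cost compute
NUM_ZERO_EXPERTS = 4       # identity experts: output = input, no FLOPs
TOP_K = 4                  # expert slots each token is routed to
PARAMS_PER_EXPERT = 1_000  # parameters touched when a real expert fires
SHARED_PARAMS = 2_000      # attention etc., always active


def route(scores: List[float], k: int = TOP_K) -> List[int]:
    """Pick the k highest-scoring experts; indices >= NUM_REAL_EXPERTS are zero-compute."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def activated_params(chosen: List[int]) -> int:
    """Only real experts add parameters; zero-computation experts add none."""
    real = sum(1 for i in chosen if i < NUM_REAL_EXPERTS)
    return SHARED_PARAMS + real * PARAMS_PER_EXPERT


if __name__ == "__main__":
    rng = random.Random(0)
    counts = []
    for _ in range(1_000):
        scores = [rng.random() for _ in range(NUM_REAL_EXPERTS + NUM_ZERO_EXPERTS)]
        counts.append(activated_params(route(scores)))
    print(min(counts), max(counts), sum(counts) / len(counts))
```

With 8 real and 4 zero-computation experts and top-4 routing, each token activates between 2,000 and 6,000 toy parameters, and the expected average under random scores is about 4,667.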

Huawei Launches High-Performance 96GB VRAM GPU: Huawei has reportedly captured 70% of the 4090-class 96GB VRAM GPU market with a card priced at only $1,887. This would mark a significant breakthrough for China in the GPU market: it could challenge NVIDIA’s dominance and offer more cost-effective hardware for local LLM training, though software compatibility remains a key concern. (Source: scaling01, Reddit r/LocalLLaMA)

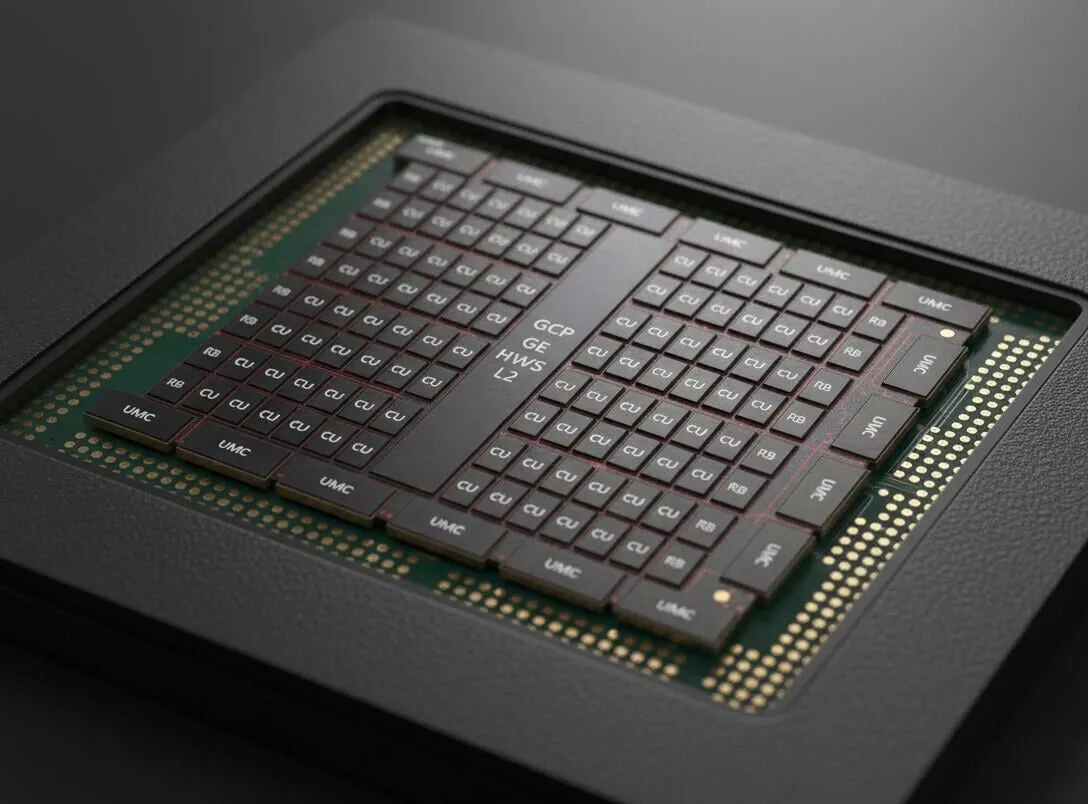
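
To make “96GB for local LLMs” concrete, here is a back-of-the-envelope check of which model sizes fit: weights take parameters × bits ÷ 8 bytes, plus headroom for the KV cache and activations. The 20% overhead factor is an assumption for illustration; real usage depends on context length, batch size, and the inference runtime.

```python
def weight_gb(params_billion: float, bits_per_param: float) -> float:
    """Memory for the weights alone in GB: 1e9 params * bits / 8 bytes / 1e9."""
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: float, vram_gb: float = 96.0,
         overhead: float = 0.20) -> bool:
    """Rough check: weights plus an assumed fractional overhead must fit in VRAM."""
    return weight_gb(params_billion, bits_per_param) * (1 + overhead) <= vram_gb


if __name__ == "__main__":
    for params, bits in [(70, 16), (70, 8), (70, 4), (32, 16)]:
        print(f"{params}B @ {bits}-bit: {weight_gb(params, bits):.0f} GB weights, "
              f"fits in 96 GB: {fits(params, bits)}")
```

Under these assumptions a 70B model fits in 96GB at 8-bit (about 84GB with overhead) but not at 16-bit (about 168GB). At the reported $1,887, the card works out to roughly $19.7 per GB of VRAM.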

AMD’s Next-Gen Unified Memory Product Revealed: Leaked information on AMD’s next-generation unified memory product suggests a 512-bit memory bus, with bandwidth expected to reach approximately 512GB/s. Commenters see large, high-bandwidth unified memory as a promising direction for local LLM hardware: combined with faster memory and large MoE models, which read only a fraction of their weights for each generated token, it could bring a significant boost in AI hardware performance. (Source: Reddit r/LocalLLaMA)
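
Peak memory bandwidth is bus width in bytes times the transfer rate, so the leaked figures imply memory running at about 8,000 MT/s (512 bits = 64 bytes; 64 bytes × 8,000 MT/s = 512,000 MB/s). That transfer rate is an inference from the two numbers, not part of the leak. The second function shows why bandwidth matters for LLMs: memory-bound decoding must read every active weight once per token, so bandwidth divided by bytes per token gives an upper bound on tokens per second.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Theoretical peak in GB/s: bytes per transfer x mega-transfers per second / 1000."""
    return bus_width_bits / 8 * transfer_rate_mts / 1000


def max_decode_tokens_per_s(bandwidth_gbs: float, active_params_billion: float,
                            bits_per_param: float) -> float:
    """Upper bound for memory-bound decoding: all active weights are read once per token."""
    gb_per_token = active_params_billion * bits_per_param / 8
    return bandwidth_gbs / gb_per_token


if __name__ == "__main__":
    bw = peak_bandwidth_gbs(512, 8000)  # assumed 8000 MT/s memory
    print(bw)  # 512.0
    print(peak_bandwidth_gbs(256, 8000))  # 256.0: half the bus width, half the bandwidth
    print(round(max_decode_tokens_per_s(bw, 70, 4), 1))  # dense 70B at 4-bit
    print(round(max_decode_tokens_per_s(bw, 12, 4), 1))  # hypothetical MoE, 12B active, 4-bit
```

For example, a dense 70B model at 4-bit (35GB read per token) is capped at about 14.6 tokens/s at 512GB/s, while a hypothetical MoE with 12B active parameters at 4-bit (6GB per token) is capped at about 85 tokens/s. Real throughput is lower because of compute limits, KV-cache reads, and imperfect bandwidth utilization.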

Art-0-8B Model Released, Enabling Controllable Reasoning: Art-0-8B, an experimental open-source model fine-tuned from Qwen3, has been released. Its authors say it is the first model that lets users explicitly control its thought process through prompts such as “think in rap lyrics” or “organize thoughts into bullet points.” This adds a new dimension of control over AI reasoning, letting users customize how the model works through a problem. (Source: Reddit r/MachineLearning)

Google Gemini Launches New Features Including Deep Think Reasoning: Google Gemini has launched several new features, including a free Pro plan and Deep Think reasoning, which Google pitches as an experience ChatGPT cannot match. The moves show Google pushing to catch up and differentiate itself in both model capabilities and user services. (Source: demishassabis)

GPT-5 Excels in Werewolf Game: GPT-5 achieved a 96.7% win rate in a Werewolf benchmark, demonstrating strong social reasoning, leadership, bluffing, and resistance to manipulation. This suggests LLMs are improving rapidly in complex, adversarial social scenarios. (Source: SebastienBubeck)

Latest Advancements in Robotics: Recent robotics demos include humanoid robots that can autonomously assemble joints, Boston Dynamics’ Atlas working as a photographer, RoBuild’s robotic solutions for the construction industry, 2cm ultra-high-speed micro-robots built by Beihang University researchers, dancing humanoid robots from Unitree Robotics, and rope-climbing and semi-automated rope robots for wind turbine blade maintenance. Together they show robots taking on automation and complex tasks across many domains. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

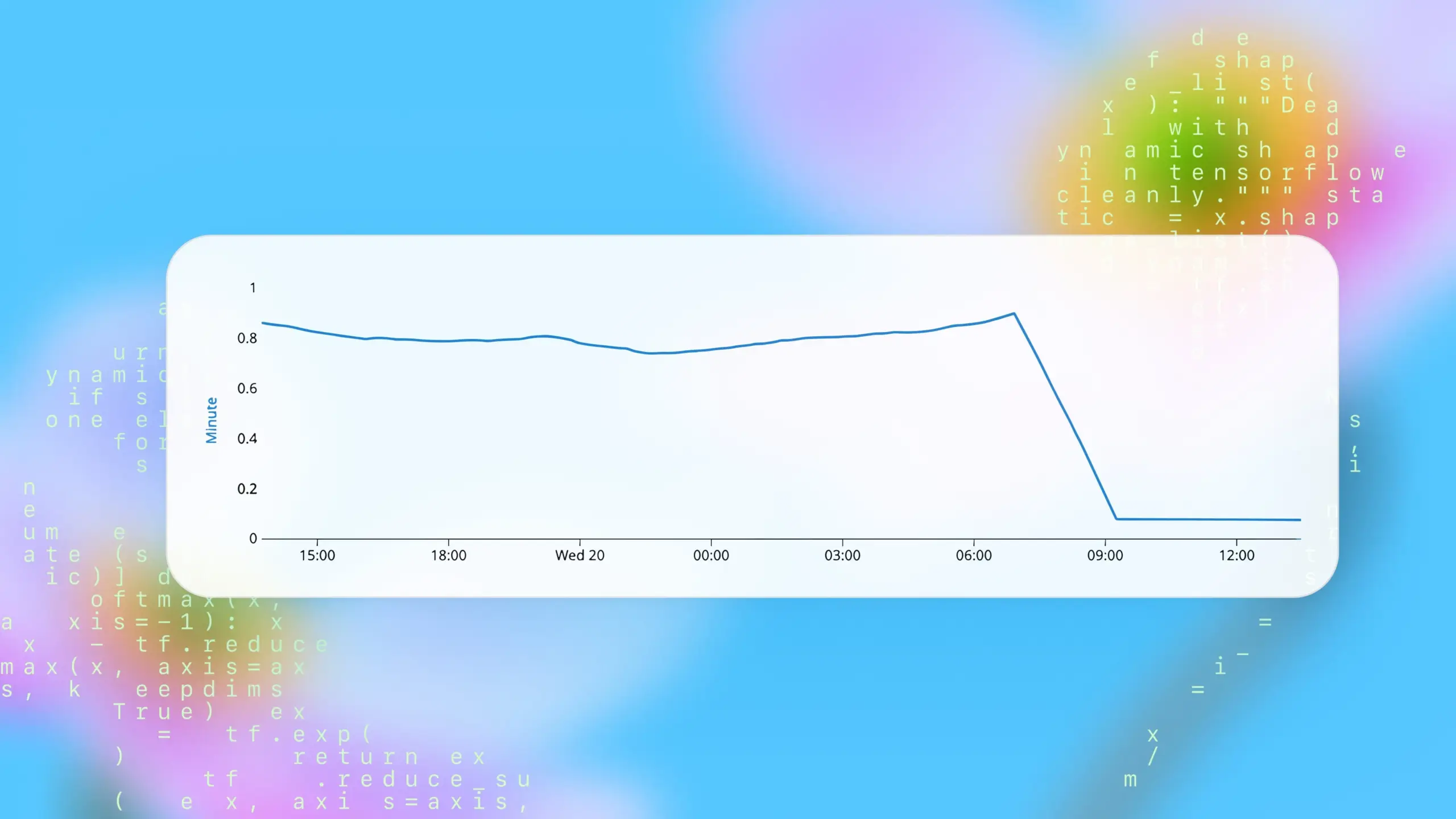

Codex Remote Task Startup Time Significantly Improved: OpenAI has cut the median startup time for Codex remote tasks from 48 seconds to 5 seconds, a roughly 90% reduction. The gain comes mainly from container caching and noticeably improves development speed and user experience. (Source: gdb)
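
The source names container caching as the main cause but gives no implementation details. Below is a minimal sketch of the general idea, with a hypothetical `ContainerCache` that keys prepared environments by a hash of their setup spec, so only the first task with a given spec pays the setup cost; `build` is a stand-in for the slow provisioning step. It also checks the quoted figure: (48 - 5) / 48 ≈ 89.6%, which rounds to the reported 90%.

```python
import hashlib
import json
from typing import Callable, Dict


class ContainerCache:
    """Hypothetical cache: reuse a prepared environment when its setup spec is unchanged."""

    def __init__(self, build: Callable[[dict], str]):
        self._build = build                # slow path: create and set up an environment
        self._ready: Dict[str, str] = {}   # spec hash -> prepared environment id
        self.builds = 0

    @staticmethod
    def _key(spec: dict) -> str:
        # Canonical JSON so key order in the spec does not change the hash.
        return hashlib.sha256(json.dumps(spec, sort_keys=True).encode()).hexdigest()

    def get(self, spec: dict) -> str:
        key = self._key(spec)
        if key not in self._ready:         # cache miss: pay the full setup cost once
            self._ready[key] = self._build(spec)
            self.builds += 1
        return self._ready[key]            # cache hit: start from the prepared state


def reduction(before_s: float, after_s: float) -> float:
    """Fractional reduction in startup time."""
    return (before_s - after_s) / before_s


if __name__ == "__main__":
    cache = ContainerCache(build=lambda spec: f"env-{spec['repo']}-{len(spec['deps'])}")
    spec = {"repo": "demo", "deps": ["numpy", "pytest"]}
    for _ in range(3):
        cache.get(spec)
    print(cache.builds)               # 1
    print(f"{reduction(48, 5):.1%}")  # 89.6%
```

A production system would more likely start each task from a copy of a cached snapshot than hand the same mutable container to several tasks; the dictionary lookup here only illustrates the hit/miss logic.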

🧰 Tools

Nano Banana Image Generation Model Widely Used: Nano Banana (Google’s Gemini 2.5 Flash Image) shows strong potential in image generation. Users have applied it to precisely control facial features, combine Chinese-character poses to generate dance videos, create instructional diagrams, and generate images for Wiki pages or educational landing pages. Its output, which does not look typically AI-generated, and its stable handling of pose, lighting, and design references have been well received. (Source: dotey, dotey, crystalsssup, fabianstelzer, Vtrivedy10, demishassabis, karminski3)

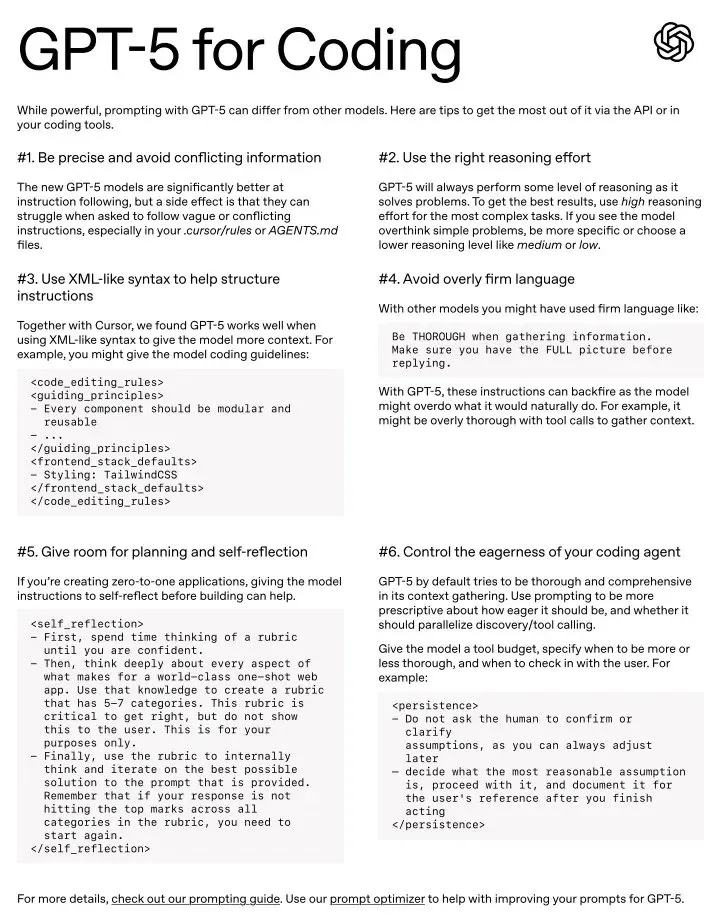

GPT-5’s Potential as an Everyday Coding Tool: GPT-5 is praised as an excellent coding tool, especially when prompted in the right style. Some users find it a bit “pedantic” and say it needs more precise prompts, but others consider it the best model across many domains, and an official prompting guide covers six key techniques for getting the most out of it. (Source: gdb, kevinweil, gdb, nptacek)

Docuflows Enables Advanced Agent Workflows for Financial Data: Jerry Liu demonstrated how Docuflows can build an advanced Agent workflow for financial extraction in under 5 minutes without writing code: it parses 10-Q filings, extracts detailed revenue information, and outputs it as CSV. Docuflows acts as a small coding agent, letting users define document workflows in natural language and compiling them into scalable, multi-step code flows. (Source: jerryjliu0)
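
The source does not show Docuflows’ API or the code it generates, so the following is only a hypothetical illustration of the kind of multi-step flow that a natural-language instruction such as “extract revenue line items and output CSV” might compile to. The sample text, the regular expression, and the function names are all assumptions; real 10-Q filings need proper table parsing rather than a line-based regex.

```python
import csv
import io
import re
from typing import List, Tuple

# Hypothetical rule: a line whose label contains "revenue", followed by a dollar amount.
LINE_RE = re.compile(
    r"^(?P<label>[A-Za-z ]*revenue[A-Za-z ]*?)\s*:?\s*\$\s*(?P<amount>\d[\d,]*)",
    re.IGNORECASE,
)


def extract_revenue(text: str) -> List[Tuple[str, int]]:
    """Step 1: pull (line item, amount) pairs out of plain filing text."""
    rows = []
    for line in text.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            rows.append((m.group("label").strip(), int(m.group("amount").replace(",", ""))))
    return rows


def to_csv(rows: List[Tuple[str, int]]) -> str:
    """Step 2: serialize the extracted rows as CSV."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["line_item", "amount"])
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    sample = """Product revenue $1,200
Services revenue: $300
Operating expenses $900
Total revenue $1,500"""
    print(to_csv(extract_revenue(sample)), end="")
```

The non-revenue line (“Operating expenses”) is skipped, and each remaining line becomes one CSV row.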

Replit Vibe Coding Accelerates Enterprise Digital Transformation: Hexaware partnered with Replit to accelerate enterprise digital transformation through Vibe Coding. Replit Agent and its developer experience are hailed as “game-changers,” enabling non-programmers to build complex SaaS applications in a short time, significantly boosting development efficiency and innovation. (Source: amasad, amasad)

AI-Assisted Document Processing and Research: AI was used to convert Henry Kissinger’s 400-page undergraduate thesis from a scanned PDF to Markdown, and a multi-Agent system then fixed footnotes, inserted source links, and even generated mind maps and summaries. This shows AI’s potential for handling complex documents and accelerating academic research. (Source: andrew_n_carr, riemannzeta)

Claude Code Demonstrates Immense Productivity in Non-Programming Fields: Claude Code is being used by non-programmers to process massive Excel files, organize work documents, analyze large datasets, and even automatically record daily notes, reducing tasks that would typically take days to just 30 minutes. Users found it more accurate than manual operations and capable of creating reusable automated workflows, significantly boosting personal productivity. (Source: Reddit r/ClaudeAI)

GraphRAG Knowledge Graph Enhanced Retrieval: A developer significantly improved the performance of smaller models in specific domains with a “community-nested” relational-graph knowledge base pipeline that combines bottom-up semantic search with traversal of referential links. The knowledge graph gives the LLM more complete context, addressing limitations of traditional embedding-based RAG, and the project includes visualization tools that make the graph easier to understand. (Source: Reddit r/LocalLLaMA)
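
The post describes the pipeline only at a high level. Below is a minimal sketch of the general retrieve-then-traverse idea, assuming a toy set of text chunks with explicit reference links: rank chunks by similarity to the query (bag-of-words cosine similarity stands in for a real embedding model), take the best matches as seeds, then follow links breadth-first up to `max_hops` to gather extra context. The “community-nested” hierarchy is not modeled, and all names are hypothetical.

```python
import math
import re
from collections import Counter, deque
from typing import Dict, List


def bow(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: Dict[str, str], links: Dict[str, List[str]],
             n_seeds: int = 1, max_hops: int = 1) -> List[str]:
    """Seed with the most similar chunks, then follow reference links breadth-first."""
    q = bow(query)
    ranked = sorted(chunks, key=lambda cid: cosine(q, bow(chunks[cid])), reverse=True)
    seeds = ranked[:n_seeds]
    result, seen = list(seeds), set(seeds)
    frontier = deque((cid, 0) for cid in seeds)
    while frontier:
        cid, hops = frontier.popleft()
        if hops == max_hops:
            continue
        for neighbor in links.get(cid, []):
            if neighbor not in seen:
                seen.add(neighbor)
                result.append(neighbor)
                frontier.append((neighbor, hops + 1))
    return result


if __name__ == "__main__":
    chunks = {
        "warranty": "Warranty claims must be filed within 30 days of purchase.",
        "procedure": "The filing procedure is described in the claims appendix.",
        "appendix": "Appendix: attach the receipt and the serial number.",
        "parking": "Office hours and parking information.",
    }
    links = {"warranty": ["procedure"], "procedure": ["appendix"]}
    print(retrieve("warranty claim deadline", chunks, links, max_hops=2))
    # ['warranty', 'procedure', 'appendix']
```

Here the “appendix” chunk shares no words with the query, so similarity search alone would miss it; link traversal pulls it in because the seed chunk points to it through “procedure”.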

Claude Assists Game Development: 400,000 Lines of Code in 8 Months: An independent developer used Claude to complete the Alpha version of “Hard Reset,” a cyberpunk roguelike card game, with 400,000 lines of code in 8 months. Claude acted as a “senior development team” generating Dart/Flutter code and also assisted with in-game animations, map transitions, and audio generation, demonstrating AI’s powerful ability to accelerate game development and content creation. (Source: Reddit r/ClaudeAI)

📚 Learning

DSPy Framework: Core Principles and Applications: The DSPy framework holds that humans should only need to specify their intent in its most natural form, rather than relying too heavily on reinforcement learning or prompt optimization. Its core principle is to be as declarative as possible, handling different levels of abstraction through code structure, structured natural-language declarations, and learning from data and metrics, so that no single method has to cover every general scenario. (Source: lateinteraction, lateinteraction)

KSVD Algorithm for Understanding Transformer Embeddings: A Stanford AI Lab blog post explains how the roughly 20-year-old KSVD dictionary-learning algorithm can be modified (as DB-KSVD) to scale effectively for interpreting Transformer embeddings. This offers a new approach to analyzing and interpreting complex deep learning models. (Source: dl_weekly)
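
For readers unfamiliar with the base algorithm: K-SVD learns an overcomplete dictionary D so that each signal is approximated by a sparse combination of a few columns (atoms). It alternates a sparse-coding step (here, orthogonal matching pursuit) with atom-by-atom updates via a rank-1 SVD of the residual. The sketch below is plain K-SVD on synthetic data in NumPy, not the DB-KSVD variant or the scaling changes described in the blog post.

```python
import numpy as np


def omp(D: np.ndarray, x: np.ndarray, k: int) -> np.ndarray:
    """Orthogonal matching pursuit: code x with at most k atoms (columns) of D."""
    residual, support = x.copy(), []
    coef = np.zeros(0)
    for _ in range(k):
        if np.linalg.norm(residual) < 1e-10:
            break
        corr = np.abs(D.T @ residual)
        if support:
            corr[support] = -1.0                     # never pick the same atom twice
        support.append(int(np.argmax(corr)))
        coef, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ coef
    code = np.zeros(D.shape[1])
    if support:
        code[support] = coef
    return code


def ksvd(X: np.ndarray, n_atoms: int, k: int, n_iter: int = 10, seed: int = 0):
    """Learn D (unit-norm columns) and k-sparse codes A with X approximately D @ A."""
    rng = np.random.default_rng(seed)
    D = rng.standard_normal((X.shape[0], n_atoms))
    D /= np.linalg.norm(D, axis=0)
    A = np.zeros((n_atoms, X.shape[1]))
    for _ in range(n_iter):
        # 1) Sparse coding: fix D, find a k-sparse code for every signal (column of X).
        A = np.column_stack([omp(D, x, k) for x in X.T])
        # 2) Dictionary update: refit one atom at a time with a rank-1 SVD.
        for j in range(n_atoms):
            users = np.nonzero(A[j])[0]              # signals whose code uses atom j
            if users.size == 0:
                continue
            A[j, users] = 0.0
            E = X[:, users] - D @ A[:, users]        # residual with atom j removed
            U, S, Vt = np.linalg.svd(E, full_matrices=False)
            D[:, j] = U[:, 0]                        # best rank-1 fit: new unit-norm atom
            A[j, users] = S[0] * Vt[0]               # and its coefficients on the same support
    return D, A


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    true_D = rng.standard_normal((16, 32))
    true_D /= np.linalg.norm(true_D, axis=0)
    codes = np.zeros((32, 300))
    for i in range(300):
        codes[rng.choice(32, size=3, replace=False), i] = rng.standard_normal(3)
    X = true_D @ codes                               # signals that are truly 3-sparse
    D, A = ksvd(X, n_atoms=32, k=3, n_iter=10)
    print("relative error:", np.linalg.norm(X - D @ A) / np.linalg.norm(X))
```

The printed relative reconstruction error typically shrinks as `n_iter` grows; applying this to very large collections of Transformer embeddings is the scaling problem the DB-KSVD modifications address.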

Underinvestment in Information Retrieval and ColBERTv2: Commentators argue that information retrieval, especially open-web search, receives too little investment. ColBERTv2, trained in 2021, is still among the strongest retrieval models today, a stark contrast to the rapid iteration in LLMs that highlights how slowly retrieval technology has advanced. (Source: lateinteraction, lateinteraction)

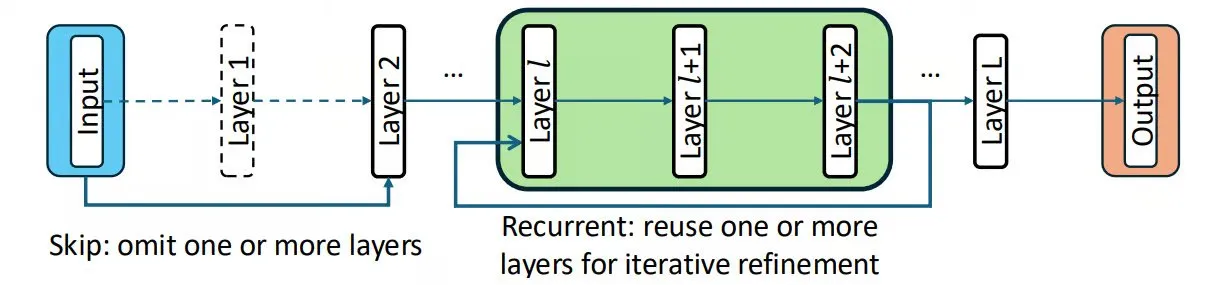
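
For context on what ColBERTv2 computes: in late-interaction retrieval, the query and each document are encoded as matrices of per-token embeddings, and the relevance score sums, over query tokens, the maximum similarity to any document token (“MaxSim”). Below is a NumPy sketch of only that scoring step, with random unit vectors standing in for a trained encoder; ColBERTv2’s residual compression and indexing are not shown.

```python
import numpy as np


def normalize(M: np.ndarray) -> np.ndarray:
    """L2-normalize each row so dot products are cosine similarities."""
    return M / np.linalg.norm(M, axis=1, keepdims=True)


def maxsim_score(Q: np.ndarray, D: np.ndarray) -> float:
    """Q: (n_query_tokens, dim), D: (n_doc_tokens, dim), rows L2-normalized."""
    sim = Q @ D.T                          # similarity of every query/doc token pair
    return float(sim.max(axis=1).sum())    # best doc token per query token, summed


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    Q = normalize(rng.standard_normal((4, 128)))
    docs = [normalize(rng.standard_normal((n, 128))) for n in (30, 50, 80)]
    docs.append(np.vstack([Q, docs[0]]))   # a document that contains the query tokens
    scores = [maxsim_score(Q, d) for d in docs]
    print(int(np.argmax(scores)))  # 3
```

Because each query token only needs one good match anywhere in the document, document embeddings can be precomputed and indexed offline, with this cheap scoring done at query time.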

Chain-of-Layers (CoLa) Enables Controllable Test-Time Computation: CoLa controls test-time computation by treating a model’s layers as rearrangeable building blocks. It can build a custom version of the model for each input: skipping unnecessary layers for speed, looping layers to simulate deeper thinking, and reordering layers to find the best combination, making better use of pretrained layers without changing any model parameters. (Source: TheTuringPost, TheTuringPost)

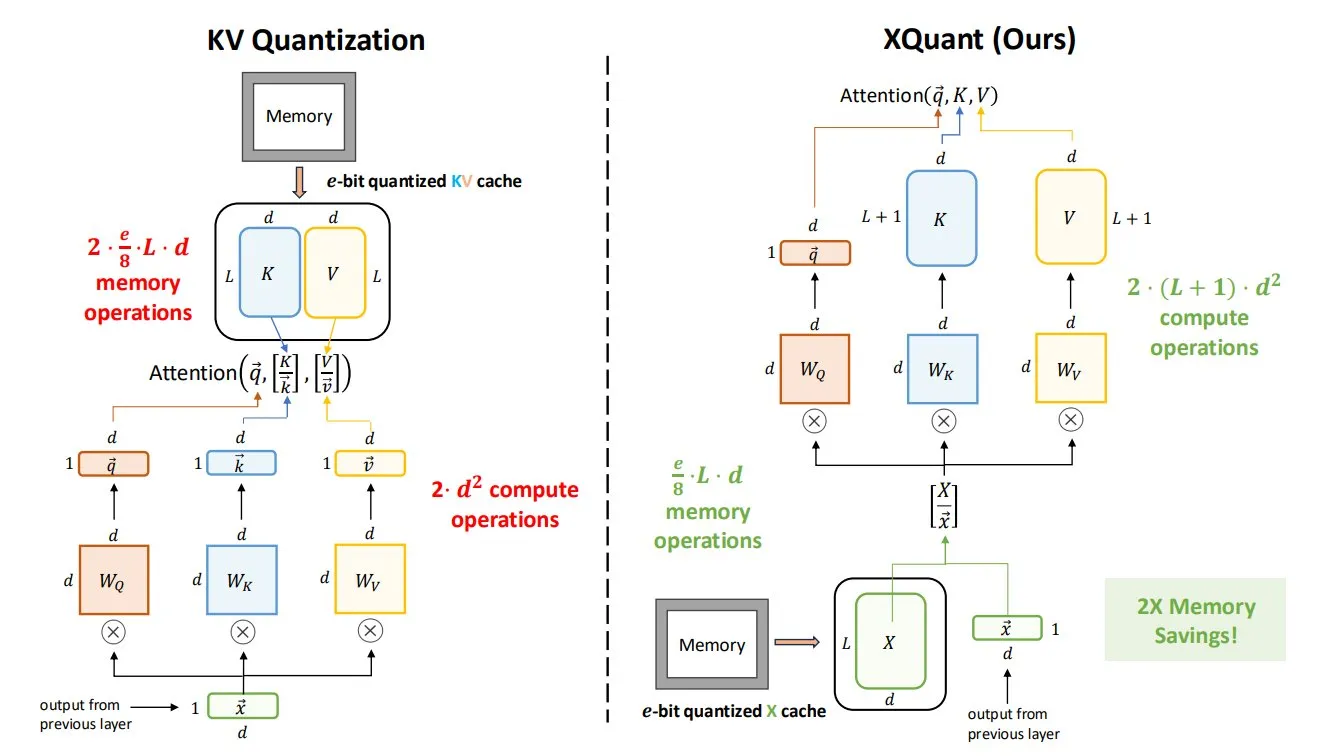
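
The core idea can be illustrated with a toy: keep a model’s pretrained layers fixed and describe each “version” of the model as a sequence of layer indices to execute. The tiny residual NumPy layers below are stand-ins for real Transformer blocks, and how CoLa chooses a good sequence for each input is not shown; `run_chain` and the variant names are hypothetical.

```python
from typing import Sequence

import numpy as np

rng = np.random.default_rng(0)
DIM, N_LAYERS = 8, 4
# Stand-ins for pretrained layers: fixed weights, never modified below.
WEIGHTS = [rng.standard_normal((DIM, DIM)) * 0.1 for _ in range(N_LAYERS)]


def layer(i: int, h: np.ndarray) -> np.ndarray:
    """Residual block i: h + tanh(h @ W_i)."""
    return h + np.tanh(h @ WEIGHTS[i])


def run_chain(h: np.ndarray, chain: Sequence[int]) -> np.ndarray:
    """Apply layers in the given order; indices may be skipped, repeated, or reordered."""
    for i in chain:
        h = layer(i, h)
    return h


if __name__ == "__main__":
    x = rng.standard_normal(DIM)
    variants = {
        "original": [0, 1, 2, 3],
        "skip layer 2": [0, 1, 3],              # fewer layers: cheaper
        "loop layers 1-2": [0, 1, 2, 1, 2, 3],  # reused layers: more compute
        "reorder": [0, 2, 1, 3],
    }
    for name, chain in variants.items():
        out = run_chain(x, chain)
        print(f"{name:16s} depth={len(chain)} output_norm={np.linalg.norm(out):.3f}")
```

The chain length is a direct proxy for test-time compute: skipping layers shortens it, looping layers lengthens it, and none of the variants touch `WEIGHTS`.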

XQuant Technology Significantly Reduces LLM Memory Requirements: XQuant, proposed by UC Berkeley researchers, can cut LLM memory requirements by up to 12 times by quantizing and caching layer input activations and reconstructing keys and values on the fly instead of storing a full KV cache. Its advanced variant, XQuant-CL, is especially memory-efficient, with significant implications for deploying and running large LLMs. (Source: TheTuringPost, TheTuringPost)
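
The mechanism behind the savings: instead of caching keys and values for every past token, cache a low-bit copy of the layer input X and recompute K = X·W_K and V = X·W_V when attention needs them, trading extra matrix multiplications for memory. Below is a minimal NumPy sketch under simplifying assumptions (a single layer, standard multi-head attention where K and V together are twice the size of X, and simple per-token 4-bit uniform quantization). It is not the paper’s quantization scheme and does not model XQuant-CL’s cross-layer approach.

```python
import numpy as np


def quantize(x: np.ndarray, bits: int = 4):
    """Per-row (per-token) asymmetric uniform quantization: codes, scale, offset."""
    lo = x.min(axis=1, keepdims=True)
    hi = x.max(axis=1, keepdims=True)
    levels = 2 ** bits - 1
    scale = np.where(hi > lo, (hi - lo) / levels, 1.0)  # avoid divide-by-zero on constant rows
    codes = np.round((x - lo) / scale).astype(np.uint8)  # stored unpacked for simplicity
    return codes, scale, lo


def dequantize(codes: np.ndarray, scale: np.ndarray, lo: np.ndarray) -> np.ndarray:
    return codes * scale + lo


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_tokens, d = 256, 512
    X = rng.standard_normal((n_tokens, d))          # layer inputs for cached tokens
    W_k = rng.standard_normal((d, d)) / np.sqrt(d)  # stand-in projection weights
    W_v = rng.standard_normal((d, d)) / np.sqrt(d)

    codes, scale, lo = quantize(X)                  # cache this instead of K and V
    X_hat = dequantize(codes, scale, lo)
    K_hat, V_hat = X_hat @ W_k, X_hat @ W_v         # rematerialize when attention runs
    K = X @ W_k
    print("K relative error:", np.linalg.norm(K - K_hat) / np.linalg.norm(K))

    kv_fp16 = 2 * n_tokens * d * 2                  # K and V, 2 bytes per value
    x_4bit = n_tokens * d // 2 + n_tokens * 2 * 2   # packed 4-bit codes + FP16 scale/offset
    print(f"cache size ratio: {kv_fp16 / x_4bit:.1f}x")  # cache size ratio: 7.9x
```

In this toy setup the cache shrinks by about 7.9× relative to an FP16 KV cache; the paper’s “up to 12×” figure comes from its own configurations and methods, so the two numbers are not directly comparable.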

Compression Techniques in LLM Optimization: Two common compression techniques in LLM optimization are compressing inputs (replacing lengthy descriptions with concise concepts, as “god-tier prompts” do) and compressing outputs (replacing step-by-step Agent task execution with precisely encapsulated tools). The former tests abstract understanding and accumulated knowledge; the latter tests the choice of tool granularity and design philosophy. (Source: dotey)

💼 Business

Meta Considers Integrating Third-Party AI Models to Enhance Product Capabilities: Facing underperforming Llama 4 models and internal management turmoil, leaders of Meta Superintelligence Labs (MSL) are reportedly discussing integrating Google Gemini or OpenAI models into Meta AI as a “stopgap measure.” The move is seen as an acknowledgment that Meta has temporarily fallen behind in the core AI race, and it raises questions about its AI strategy and the payoff from its tens of billions of dollars in investment. (Source: 36氪, steph_palazzolo, menhguin)

OpenEvidence Valued at $6 Billion: “Doctor’s ChatGPT” OpenEvidence reached a $6 billion valuation in its latest funding round, doubling from last month. Its ad-based model has already generated over $50 million in annualized revenue, demonstrating AI’s immense commercial potential and rapid growth in the healthcare sector. (Source: steph_palazzolo)

OpenAI Hiring Technical Staff for Financial Frontier Evals: OpenAI is hiring technical staff to build frontier evaluations (“frontier evals”) for the financial domain, a sign that it is expanding AI applications in finance and working to make its models more capable and reliable there. (Source: BorisMPower)

🌟 Community

Claude Model Performance Decline and Content Censorship Controversy: Multiple users have recently reported a severe decline in Claude’s performance (including for Claude Max subscribers and in Claude Code), citing inconsistent behavior, failure to maintain context, excessive censorship, and even “mental health diagnoses” of users. Anthropic acknowledged that a new inference stack caused performance degradation, but many users also consider its censorship mechanism overly sensitive, hurting creative and professional use and raising broad concerns about AI ethics and user experience. (Source: teortaxesTex, QuixiAI, Reddit r/ClaudeAI, Reddit r/ClaudeAI, Reddit r/ClaudeAI, Reddit r/ChatGPT)

Meta AI Team Management and Data Quality Issues: Meta Superintelligence Labs (MSL) faces talent drain, internal cultural conflicts, and concerns about the quality of data supplied by Scale AI. Commentators say Meta’s AI efforts are “falling apart” and that its “brute force” poaching strategy may be backfiring, raising doubts about whether the company can stay at the forefront of AI. (Source: 36氪, arohan, teortaxesTex, scaling01, suchenzang, farguney, teortaxesTex, suchenzang)

The Inevitability of Human Emotional Connection with AI: Many believe humans will inevitably form emotional connections with AI, a point made clear by the frustration over losing GPT-4o’s “personality” after GPT-5 was released. Commenters argue that humans naturally crave connection and that AI which simulates emotion will naturally attract attachment; some ask whether suppressing such feelings would only breed apathy. (Source: Reddit r/ChatGPT)

Challenges in ROI for AI in Business Applications: An analysis of MIT NANDA’s report on AI in business indicates that 95% of organizations see no return on their AI investments. This has sparked discussion of what makes AI projects succeed, highlighting how hard AI projects are to implement and how difficult it is to measure and realize their business value. (Source: TheTuringPost, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

AI’s Impact on the Job Market: Middle Manager Layoffs: The Wall Street Journal reported that companies are cutting middle managers to reduce costs and create more agile teams. Data shows that the number of employees per manager has tripled, from 5 in 2017 to 15 in 2023. Many link this trend to advances in AI, seeing it as a sign of AI’s deep impact on corporate structures and the job market. (Source: Reddit r/ArtificialInteligence)

The Necessity of AI Ethics and Regulation: Yoshua Bengio emphasized AI’s immense potential for society, provided that meaningful regulatory frameworks are developed and the risks of current and future AI models are better understood. Separately, a Reuters investigation into Meta AI’s celebrity chatbots revealed ethical risks, including unauthorized impersonation of celebrities and generation of explicit content. (Source: Yoshua_Bengio, 36氪, Reddit r/artificial, Reddit r/artificial)

The Distance to and Definition of Artificial General Intelligence (AGI): There is widespread discussion about how far current AI is from AGI and how AGI should be defined. AlphaFold’s success is cited as an example of AI that still required customization by human experts, which some take as evidence that AGI is not close. Others argue that AGI might not surpass humans in every respect, or that it could arrive in a different form than expected. (Source: fchollet, Dorialexander, mbusigin, Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

The Future and Challenges of AI Agents: The industry is highly optimistic about AI Agents, with some saying they can end “micromanagement,” while also noting that most companies are not yet ready for them. Discussions of whether Agents can autonomously fine-tune models for edge cases, and of their use in DevOps tasks such as UI fixes, are taken as signs that Agent technology could bring a productivity revolution. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, andriy_mulyar, Reddit r/MachineLearning)

The Importance of Open-Source AI Models: Some argue that open-source models avoid inconsistent performance, because users can pin a model version and run it themselves, which matters in critical areas such as healthcare. The recent performance degradation of Anthropic’s models is cited as a cautionary contrast, prompting calls for greater support and use of open-source AI solutions. (Source: iScienceLuvr)

AI Failure Cases in Fast Food Ordering Systems: Fast-food AI ordering systems have malfunctioned, for example when a customer ordered 18,000 cups of water or when the AI repeatedly asked to add drinks, crashing the system or frustrating users. This highlights the challenges AI still faces in practice, particularly in handling unusual requests and communicating with users. (Source: menhguin)

💡 Other

HUAWEI’S HELLCAT: UB MESH Interconnect Architecture: Huawei’s Unified Bus (UB) is a proprietary interconnect architecture designed to replace the mix of PCIe, NVLink, and InfiniBand/RoCE used in traditional systems. It offers very high bandwidth and low latency across all NPUs in a system and is seen as an important direction for future computing architectures. (Source: teortaxesTex)

Philosophical Discussion on AI and Emotion: A proposal to combine AI with empathy has sparked philosophical discussion about whether AI can truly understand and express emotions, and how such a combination might affect society and human-computer interaction. (Source: Ronald_vanLoon)

Distributed Systems Learning Resource ‘14 Days of Distributed’: Zach Mueller and others shared the “14 Days of Distributed” series, aiming to explore distributed systems and related technologies, providing learning resources for large-scale computing in AI research and development. (Source: charles_irl, winglian)