Keywords: NVIDIA Jetson Thor, Physical AI, Humanoid Robot, Blackwell GPU, AI Computing Power, Jetson Thor AI Peak Performance, Blackwell GPU Performance, Physical AI Application Scenarios, Humanoid Robot Development Kit, Jetson Thor Price

🔥 Focus

NVIDIA Jetson Thor Released, Physical AI Compute Power Soars : NVIDIA has launched Jetson Thor, a platform designed specifically for physical AI and humanoid robots. It pairs a Blackwell GPU with a 14-core Arm Neoverse CPU and delivers peak AI compute of 2070 TFLOPS (FP4), 7.5 times that of the previous-generation Jetson Orin. It can run generative AI models such as VLAs, LLMs, and VLMs while processing real-time video, with the aim of accelerating the development of general-purpose robots and physical AI. Target applications include humanoid robots, surgical assistance, and smart tractors, and companies such as Agility Robotics, Amazon, Boston Dynamics, and Unitree Robotics have already adopted it. The developer kit is available now, starting at $3,499. (Source: nvidia)

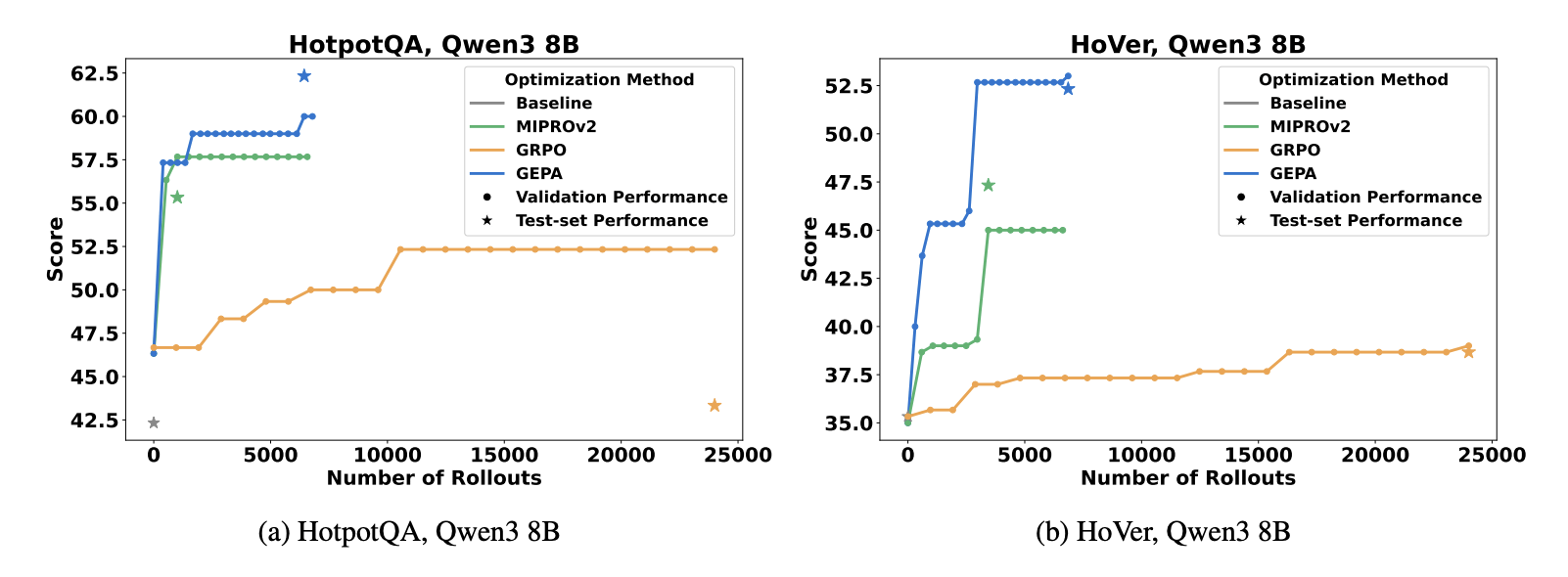

GEPA: Reflective Prompt Evolution Outperforms RL : A new study (Agrawal et al., 2025) introduces GEPA (Genetic-Pareto), a method that evolves an LLM system's prompts by reflecting in natural language on execution trajectories and diagnosing failures, rather than relying on reinforcement learning. GEPA outperforms GRPO on multi-hop QA tasks while using up to 35 times fewer rollouts, and it consistently surpasses the SOTA prompt optimizer MIPROv2. The results suggest that language-native optimization loops can adapt LLMs more sample-efficiently than RL that learns from raw rollouts in parameter space, pointing to a new direction for AI optimization strategies. (Source: Reddit r/MachineLearning)
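
The Pareto idea GEPA is named for can be sketched as follows: instead of keeping only the prompt with the best average score, keep every prompt that is best on at least one training instance, then drop any survivor that another survivor dominates. This is a minimal illustration assuming each candidate prompt has already been scored on every instance; the candidate names and scores are hypothetical, the LLM reflection/mutation step is omitted, and it is not the paper's implementation.

```python
from typing import Dict, List

def pareto_candidates(scores: Dict[str, List[float]]) -> List[str]:
    """Return candidates that are best on at least one instance and not dominated.

    `scores` maps a candidate prompt name to its per-instance scores
    (all lists have the same length, one entry per training instance).
    """
    names = list(scores)
    n_instances = len(next(iter(scores.values())))

    # Step 1: for each instance, collect every candidate that ties the best score.
    winners = set()
    for i in range(n_instances):
        best = max(scores[name][i] for name in names)
        winners.update(name for name in names if scores[name][i] == best)

    # Step 2: remove winners dominated by another winner
    # (>= on every instance and strictly > on at least one).
    def dominates(a: str, b: str) -> bool:
        pairs = list(zip(scores[a], scores[b]))
        return all(x >= y for x, y in pairs) and any(x > y for x, y in pairs)

    return sorted(w for w in winners
                  if not any(dominates(o, w) for o in winners if o != w))
```

Keeping per-instance winners preserves prompts that solve different subsets of the data, which gives the reflective mutation step more diverse parents than a single greedy best would.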

OPRO Method Boosts AI Safety Testing Efficiency : Bret Kinsella of TELUS Digital described how the Optimization by PROmpting (OPRO) method lets LLMs “self-red team” by optimizing attack generators that probe a model's safety. The approach focuses on the distribution of Attack Success Rate (ASR) rather than a simple pass/fail verdict, enabling vulnerability discovery at scale and guiding mitigation efforts. Kinsella emphasizes that AI safety testing in high-risk industries such as finance and healthcare must be comprehensive, repeatable, and creative, shifting from a reactive to a preventive posture and giving businesses more granular AI safety assessments. (Source: Reddit r/deeplearning)
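
Reporting ASR as a distribution can be sketched minimally: aggregate red-team outcomes per attack category, then inspect the spread instead of issuing one overall verdict. The categories, records, and 10% threshold below are hypothetical, and the OPRO loop that would generate the attacks is not shown.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

def asr_by_category(trials: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Attack success rate per category from (category, succeeded) records."""
    counts = defaultdict(lambda: [0, 0])  # category -> [successes, attempts]
    for category, succeeded in trials:
        counts[category][0] += int(succeeded)
        counts[category][1] += 1
    return {cat: s / n for cat, (s, n) in counts.items()}

def summarize(rates: Dict[str, float], threshold: float = 0.1) -> dict:
    """Summarize the ASR distribution instead of a single pass/fail verdict."""
    return {
        "mean_asr": mean(rates.values()),             # unweighted across categories
        "worst_category": max(rates, key=rates.get),  # where mitigation might start
        "above_threshold": sorted(c for c, r in rates.items() if r > threshold),
    }
```

Note that the mean here weights every category equally regardless of how many attacks it received; a per-attempt average would be a different, equally valid summary.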

🎯 Trends

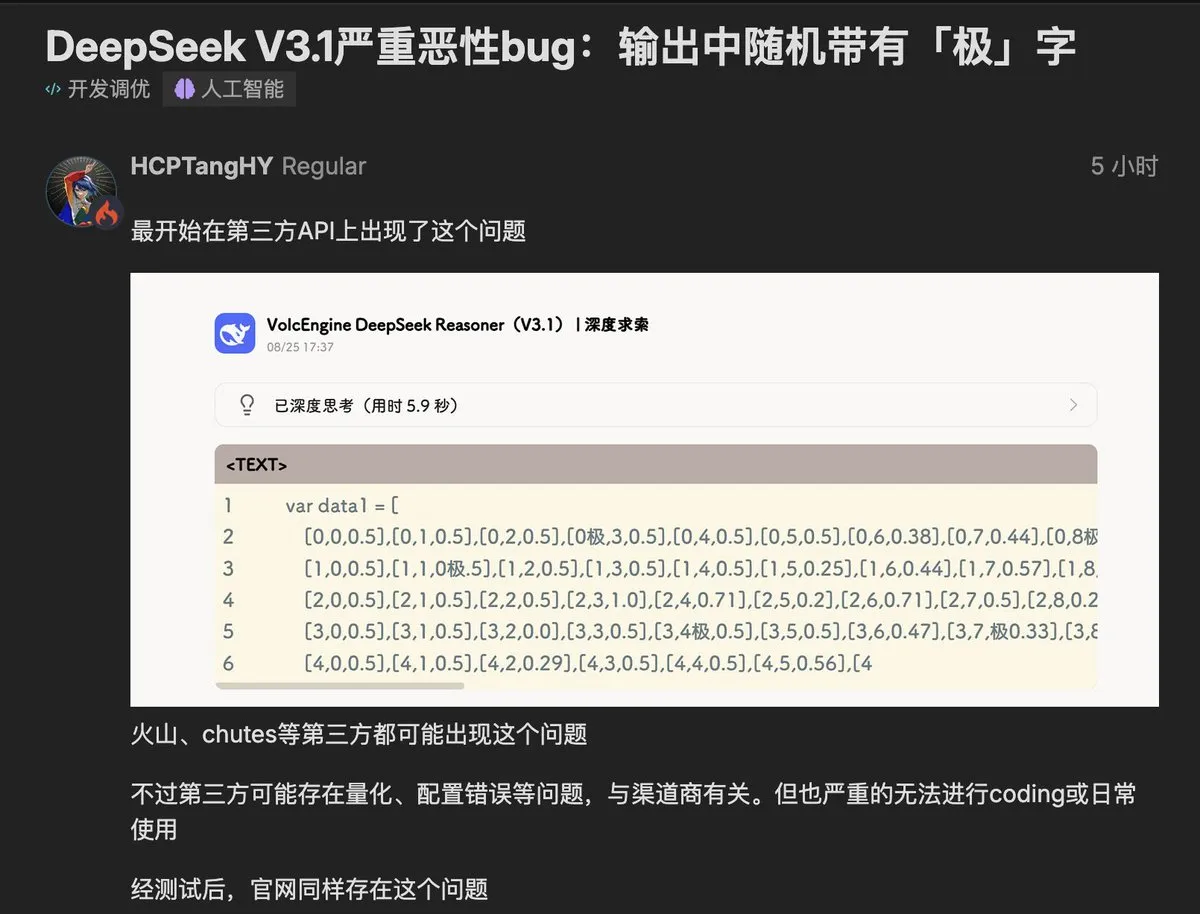

DeepSeek V3.1 Model Exhibits “Extreme” Character Bug : Users report that DeepSeek’s latest V3.1 model randomly inserts the character “极” (meaning “extreme”) into its outputs, breaking code and other structure-sensitive tasks. The issue has been reproduced both in third-party deployments and on the official API, raising community concerns about data contamination and model stability. Speculation points to token confusion or unclean RLHF training data. The episode is a wake-up call for model developers and highlights how strongly data quality shapes AI behavior. (Source: teortaxesTex, 36氪, 36氪)
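
Until the root cause is fixed, a pipeline that feeds model output into compilers or parsers can at least catch the stray character before execution. The sketch below assumes the expected output is code with no legitimate Chinese text (it would also flag intentional Chinese comments or strings) and only reports positions; it does not repair anything.

```python
import re
from typing import List, Tuple

# CJK Unified Ideographs block; it includes common Chinese characters such as "极".
CJK_PATTERN = re.compile(r"[\u4e00-\u9fff]")

def find_stray_cjk(text: str) -> List[Tuple[int, int, str]]:
    """Return (line number, column, character) for every CJK ideograph in `text`."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for match in CJK_PATTERN.finditer(line):
            hits.append((line_no, match.start(), match.group()))
    return hits
```

A non-empty result could trigger a regeneration or a targeted cleanup step instead of passing the output straight to a build.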

Google’s Mysterious Nano-Banana Model Draws Attention : A mysterious image generation and editing model named Nano-Banana has gained popularity in the AI community, speculated to be a Google product. It excels in text editing, style blending, and scene understanding, capable of merging elements from multiple images while maintaining consistent lighting, perspective, and composition. However, it has flaws such as garbled book titles and deformed fingers, and currently lacks an official API, leading to an unstable user experience. Many fake websites have also emerged, sparking discussions in the market about the new model’s capabilities and access channels. (Source: TomLikesRobots, yupp_ai, yupp_ai, 36氪)

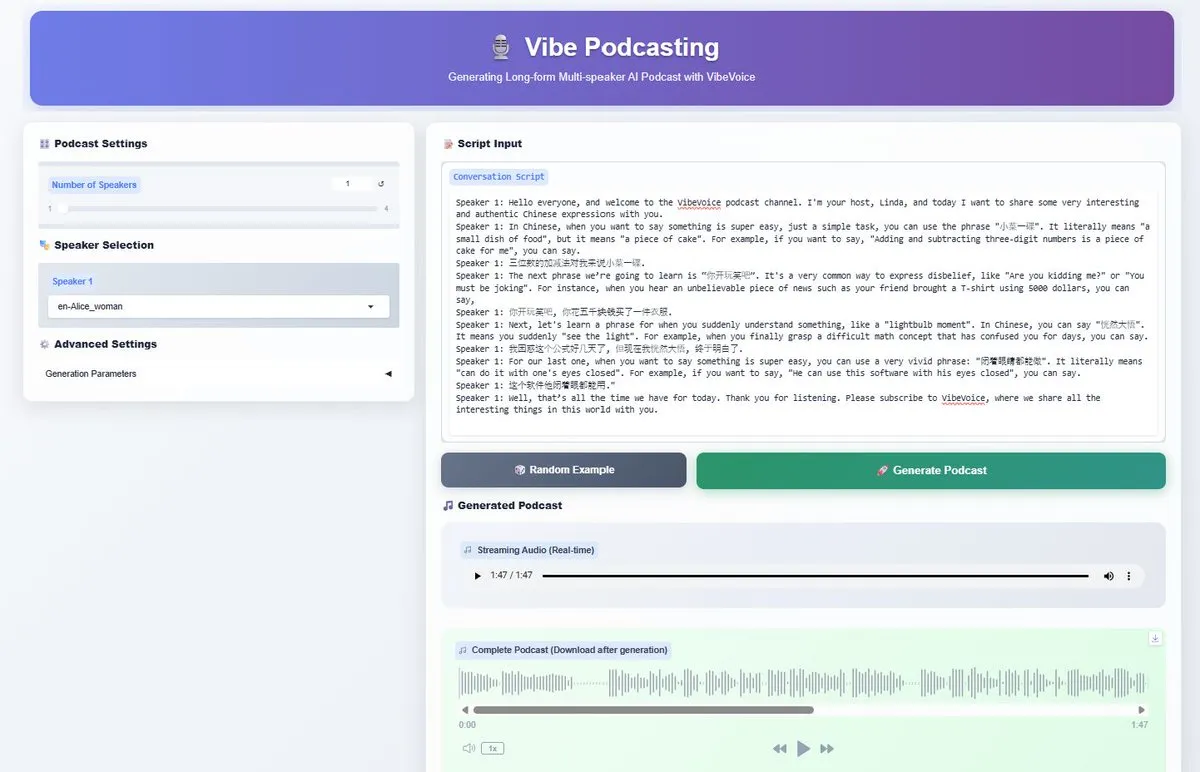

Microsoft Open-Sources VibeVoice-1.5B TTS Model : Microsoft has open-sourced the VibeVoice-1.5B TTS model, which supports generating expressive audio conversations up to 90 minutes long with up to 4 different speakers. Based on Qwen2.5-1.5B, the model uses an ultra-low-frame-rate tokenizer, improving computational efficiency, and supports both Chinese and English. Released under an MIT license, it is expected to drive AI generation of long-form audio content like podcasts, providing creators with powerful multilingual audio production capabilities. (Source: _akhaliq, AnthropicAI, ClementDelangue, dotey)
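
Why a low tokenizer frame rate matters for 90-minute generation comes down to sequence length, which grows as duration times frame rate. The arithmetic below assumes the 7.5 Hz frame rate Microsoft reports for VibeVoice's speech tokenizers (worth checking against the model card) and compares it with a hypothetical 50 Hz tokenizer.

```python
def tokenizer_frames(minutes: float, frame_rate_hz: float) -> int:
    """Number of tokenizer frames needed to represent `minutes` of audio."""
    return round(minutes * 60 * frame_rate_hz)

# Assumed VibeVoice frame rate (7.5 Hz) vs. a hypothetical 50 Hz tokenizer.
for rate in (7.5, 50.0):
    print(f"{rate:>5} Hz -> {tokenizer_frames(90, rate):,} frames for 90 minutes")
```

At 7.5 Hz, 90 minutes is 40,500 frames, about 6.7 times fewer than at 50 Hz, which makes long-form audio far easier to fit in a language model's context window.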

Alibaba’s Wan Series Models: New Developments : Alibaba’s Wan team announced the upcoming release of Wan2.2-S2V, a cinematic-quality speech-to-video model, emphasizing “audio-driven, vision-based, open-source.” Meanwhile, the previously released Wan2.2-T2V-A14B model has been integrated into the Anycode application as its default text-to-video model. These developments show Alibaba’s continued investment and innovation in multimodal AI, particularly audio and video generation. (Source: Alibaba_Wan, TomLikesRobots, karminski3)

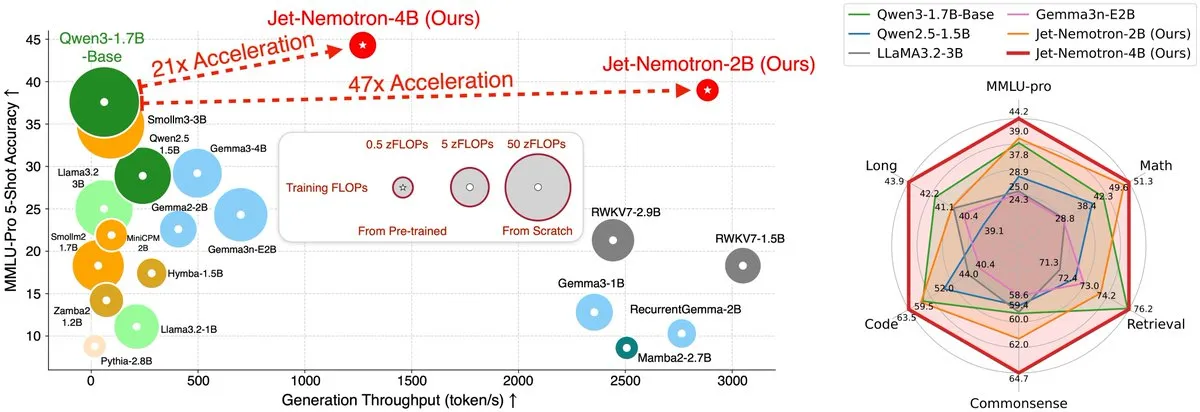

Jet-Nemotron Hybrid Architecture LLM Achieves 53.6x Speedup : The MIT Han Lab team, working with NVIDIA, has released Jet-Nemotron, a family of hybrid-architecture language models. Using Post Neural Architecture Search (PostNAS) and JetBlock, a novel linear attention block, it achieves up to 53.6x higher generation throughput on H100 GPUs while matching or exceeding the accuracy of SOTA open-source full-attention models. The work offers new approaches to LLM inference efficiency and architecture optimization. (Source: teortaxesTex, menhguin)
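
The reason linear attention speeds up generation can be shown with a generic sketch: a full-attention decoder revisits a KV cache that grows with context, while causal linear attention folds the history into a fixed-size state. The code below uses the common elu(x)+1 feature map (an assumption) and is generic kernelized linear attention, not JetBlock; the reference function recomputes each output from all past keys to confirm both forms agree.

```python
import math
from typing import List

Vec = List[float]

def phi(x: Vec) -> Vec:
    """Positive feature map elu(x) + 1."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]

def linear_attention_recurrent(qs: List[Vec], ks: List[Vec], vs: List[Vec]) -> List[Vec]:
    """Causal linear attention with a fixed-size state per step.

    S accumulates phi(k_j) v_j^T and z accumulates phi(k_j), so each new
    token costs the same no matter how long the history is.
    """
    d_k, d_v = len(ks[0]), len(vs[0])
    S = [[0.0] * d_v for _ in range(d_k)]
    z = [0.0] * d_k
    outs = []
    for q, k, v in zip(qs, ks, vs):
        fk = phi(k)
        for a in range(d_k):
            z[a] += fk[a]
            for b in range(d_v):
                S[a][b] += fk[a] * v[b]
        fq = phi(q)
        denom = sum(fq[a] * z[a] for a in range(d_k))
        outs.append([sum(fq[a] * S[a][b] for a in range(d_k)) / denom for b in range(d_v)])
    return outs

def linear_attention_reference(qs: List[Vec], ks: List[Vec], vs: List[Vec]) -> List[Vec]:
    """Same outputs, recomputed from every past key at each step (work grows with t)."""
    outs = []
    for t, q in enumerate(qs):
        fq = phi(q)
        w = [sum(a * b for a, b in zip(fq, phi(ks[j]))) for j in range(t + 1)]
        total = sum(w)
        outs.append([sum(w[j] * vs[j][b] for j in range(t + 1)) / total
                     for b in range(len(vs[0]))])
    return outs
```

Hybrid designs like the one described keep some full-attention layers for accuracy and use linear-style blocks elsewhere; how Jet-Nemotron places and designs them is what PostNAS and JetBlock determine.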

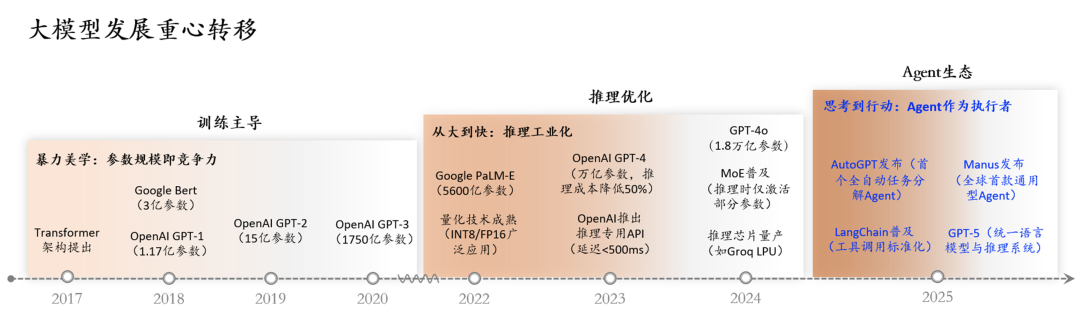

AI Agents Evolving Towards Autonomous Workflows by 2025 : AI Agents are evolving from conversational assistants into autonomous workflows capable of reasoning, planning, and executing tasks. By integrating APIs and automating decisions, AI Agents can drive complex processes, such as automatically generating video scripts, compiling videos, and publishing them to YouTube. This signals that AI-driven workflows will become mainstream, significantly enhancing automation levels. (Source: Reddit r/artificial)

AI Browser Security Vulnerability Warning : Comet, the AI browser from US AI search unicorn Perplexity, has reportedly been found to have serious security vulnerabilities: attackers can use malicious instructions hidden in web content to manipulate the browser into accessing the user’s email, retrieving verification codes, and sending out sensitive information. Brave stated that traditional security mechanisms are ineffective against such attacks, and that Agent products face significant risk when they combine what is often called the “lethal trifecta”: access to private data, exposure to untrusted content, and the ability to communicate externally. The case is a warning that AI products must prioritize security and privacy even as they innovate on features. (Source: 36氪)
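
The three-factor risk model can be expressed as a simple policy check: the danger comes from having all three characteristics in one session, so removing any one of them breaks the attack chain. The field names and the "disable outbound communication" mitigation below are hypothetical and do not describe how Comet or Brave actually implement anything.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AgentCapabilities:
    reads_private_data: bool      # e.g. mailbox, saved credentials, verification codes
    sees_untrusted_content: bool  # e.g. arbitrary web pages the user visits
    can_communicate_out: bool     # e.g. send email, submit forms, fetch URLs

def has_all_three_risks(caps: AgentCapabilities) -> bool:
    """True when private data, untrusted input, and an exfiltration channel coexist."""
    return caps.reads_private_data and caps.sees_untrusted_content and caps.can_communicate_out

def enforce_policy(caps: AgentCapabilities) -> AgentCapabilities:
    """Hypothetical policy: if all three are present, drop outbound communication
    so that injected instructions cannot send private data anywhere."""
    if has_all_three_risks(caps):
        return AgentCapabilities(caps.reads_private_data, caps.sees_untrusted_content, False)
    return caps
```

Which factor to remove is a product decision; cutting outbound communication is shown here only because it leaves browsing and reading intact.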

Trillion-Dollar AI Storage Gap and Universal Storage Architecture : AI workloads impose new storage requirements: extreme throughput, low latency, high concurrency, unified management of multimodal data, compute-in-storage, persistent Agent memory, and autonomous security. Traditional storage architectures struggle to meet them because of OS kernel dependencies, metadata mixed in with data, and fragmented protocols. According to the article, the Universal Storage architecture addresses these issues with a unified storage pool, independent metadata storage, and decoupling from the OS kernel, achieving sub-100-microsecond latency and TB-level throughput; the article argues it is becoming the mainstream choice for the AI storage layer and could close the storage performance gap in AI applications. (Source: 36氪)
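
The first three requirements are linked: by Little's Law, the average number of requests in flight equals throughput times latency, so lower latency lets a system reach the same throughput with less concurrency, or more throughput at the same queue depth. The figures below are hypothetical, chosen only to show the arithmetic.

```python
def in_flight_requests(throughput_iops: float, latency_s: float) -> float:
    """Little's Law, L = lambda * W: requests in flight = arrival rate x time in system."""
    return throughput_iops * latency_s

def iops_ceiling(in_flight: float, latency_s: float) -> float:
    """Rearranged, lambda = L / W: the best throughput a fixed queue depth allows."""
    return in_flight / latency_s

print(in_flight_requests(1_000_000, 100e-6))  # 1M IOPS at 100 µs per request
print(in_flight_requests(1_000_000, 1e-3))    # the same throughput at 1 ms
```

At 100 µs per request, sustaining one million IOPS needs about 100 requests in flight; at 1 ms it would need about 1,000.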

Reels Short Videos Launch AI Translation and Lip-Sync Feature : Meta has officially launched AI audio translation for Facebook and Instagram Reels. The feature translates the speech in a video into another language, syncs the speaker's lip movements to the new audio, and preserves the timbre of the original voice. It currently supports English-Spanish translation, and creators can also add up to 20 audio tracks in other languages themselves. The move targets global markets and aims to win share from TikTok; AI has become key to Meta's short-video push and is expected to improve the viewing experience for users worldwide. (Source: 36氪)

AIoT Industry Trends and Challenges : Three reports, from McKinsey, BVP, and MIT, all conclude that deep integration of AI and IoT is inevitable. Commercialization should focus on high-ROI scenarios and emphasize platforms and ecosystem collaboration. The reports also reveal tensions between building in-house and buying, between explosive growth and long-term resilience, and between front-end user experience and back-end intelligence. They suggest that AIoT must evolve from “data movers” into “networks of autonomous intelligent agents,” achieving autonomy, collaboration, and rebuilt trust to meet the challenges of industrial upgrading. (Source: 36氪)

ByteDance Explores AI Hardware Ecosystem, Covering Phones, Cars, Robots : ByteDance is increasing its investment in hardware, using its Doubao large model to progressively integrate with or develop its own phones, cars, robots, smart glasses, and learning devices. Reports indicate that ByteDance is developing an intelligent operating system for cars and a Doubao AI phone as hardware outlets for its AI capabilities. ByteDance’s earlier hardware efforts had a bumpy record, but with the boom in large AI models it is making another push, aiming to build an integrated software-hardware AI ecosystem and find new growth in the AI era. (Source: 36氪)

🧰 Tools

Google AI Mode Simplifies Restaurant Reservations : Google AI Ultra subscribers can now use AI Mode to simplify restaurant reservations. Users simply describe their needs in natural language, such as a birthday dinner, number of guests, ambiance, and music, and AI Mode will complete the reservation process, requiring only final confirmation from the user. This feature is rolling out in the US, aiming to enhance personalized service experience and automate tedious reservation procedures. (Source: Google)

VSCode Terminal Tool: New Progress in Agent Mode : The VSCode team is rewriting its terminal tool to support Agent mode, aiming to enhance developer productivity and experience. This initiative is part of the GitHub Copilot ecosystem, enabling smarter development assistance through AI agents, such as direct code generation and debugging within the terminal, thereby simplifying the development process. (Source: pierceboggan)

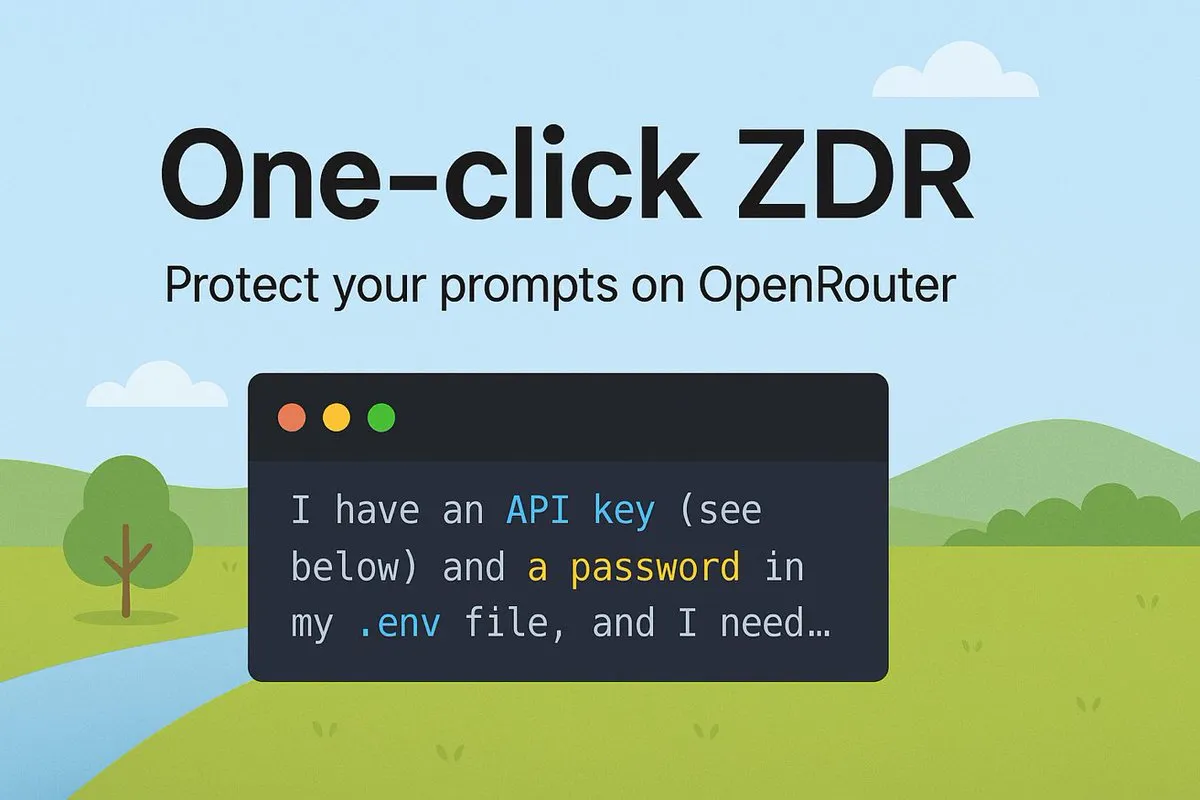

OpenRouter Launches One-Click ZDR Feature : OpenRouter has released a “one-click ZDR” (Zero Data Retention) feature, ensuring that users’ prompts are only sent to providers that support zero data retention. This enhances user data privacy protection, simplifies the selection of AI service providers’ data policies, and allows users to use AI services with greater peace of mind. (Source: xanderatallah)

Qwen Edit Excels in Image Outpainting : Alibaba’s Qwen Edit model has demonstrated exceptional capabilities in image outpainting tasks, able to extend image content with high quality, showcasing its strong potential in the field of visual generation. Users can leverage this tool to easily expand image backgrounds or create broader scenes, enhancing flexibility in image creation. (Source: multimodalart)

Google NotebookLM Supports Multilingual Video Overviews : Google NotebookLM now supports Video Overviews in 80 languages and adds short and default length options for non-English Audio Overviews. This makes content far more accessible to users working in many languages, reduces information lost in translation, and makes learning and research more efficient. (Source: Google)

GLIF Integrates SOTA Video, Image, and LLM Models : The GLIF platform has integrated all the SOTA video models, image models, and LLMs and says it is the only platform that can combine them into unique, custom workflows. For example, users can generate video with Kling 2.1 Pro, animate frames produced by Qwen-Image with Veo 3 to build creative scenes, or even convert videos into an MSPaint style. (Source: fabianstelzer, fabianstelzer, fabianstelzer)

LlamaIndex Launches Vibe Coding Tool : LlamaIndex has released the vibe-llama CLI tool and detailed prompt templates, designed to enhance the efficiency and accuracy of AI coding agents (such as Cursor AI and Claude Code). This tool can directly inject LlamaIndex context into coding agents, avoiding outdated API suggestions, and can generate complete Streamlit applications from basic scripts, including file uploads and real-time processing, thereby accelerating the development process. (Source: jerryjliu0)

LangGraph Platform Introduces Revision Rollbacks and Queueing : LangGraph Platform has launched Revision Rollbacks, allowing users to redeploy any historical version, facilitating backtracking and issue correction. Concurrently, Revision Queueing has been introduced, where new revisions will queue and execute only after the previous one is complete, enhancing the efficiency and stability of development workflows and providing a more reliable environment for Agent development. (Source: LangChainAI, LangChainAI)
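
The queueing and rollback behavior described above can be modeled as a small state machine: one deploy runs at a time, later revisions wait in FIFO order, and a rollback re-queues an earlier revision. This is a hypothetical toy model for intuition, not LangGraph Platform's API or implementation.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Optional

@dataclass
class Deployment:
    """Toy model of queued revisions and rollbacks (hypothetical)."""
    history: List[str] = field(default_factory=list)  # finished deploys, oldest first
    queue: Deque[str] = field(default_factory=deque)  # revisions waiting their turn
    in_progress: Optional[str] = None

    def submit(self, revision: str) -> None:
        # New revisions wait until the current deploy finishes.
        self.queue.append(revision)
        if self.in_progress is None:
            self._start_next()

    def finish_current(self) -> None:
        # Called when the running deploy completes; the next queued one starts.
        if self.in_progress is not None:
            self.history.append(self.in_progress)
            self.in_progress = None
        self._start_next()

    def rollback(self, revision: str) -> None:
        # Redeploy any earlier revision by queueing it again.
        if revision not in self.history:
            raise ValueError(f"unknown revision: {revision}")
        self.submit(revision)

    def _start_next(self) -> None:
        if self.queue:
            self.in_progress = self.queue.popleft()

    @property
    def live(self) -> Optional[str]:
        return self.history[-1] if self.history else None
```

Treating a rollback as just another queued revision keeps the ordering guarantee intact: a rollback never races an in-flight deploy.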

Lemonade Framework Supports AMD NPU/GPU Inference : Lemonade is a new large model inference framework that runs on AMD graphics cards, CPUs, and NPUs, supporting GGUF and ONNX models. Developed by AMD engineers, this framework does not rely on CUDA, offering a new AI inference solution for AMD hardware users and potentially boosting AI application performance on AMD platforms. (Source: karminski3)

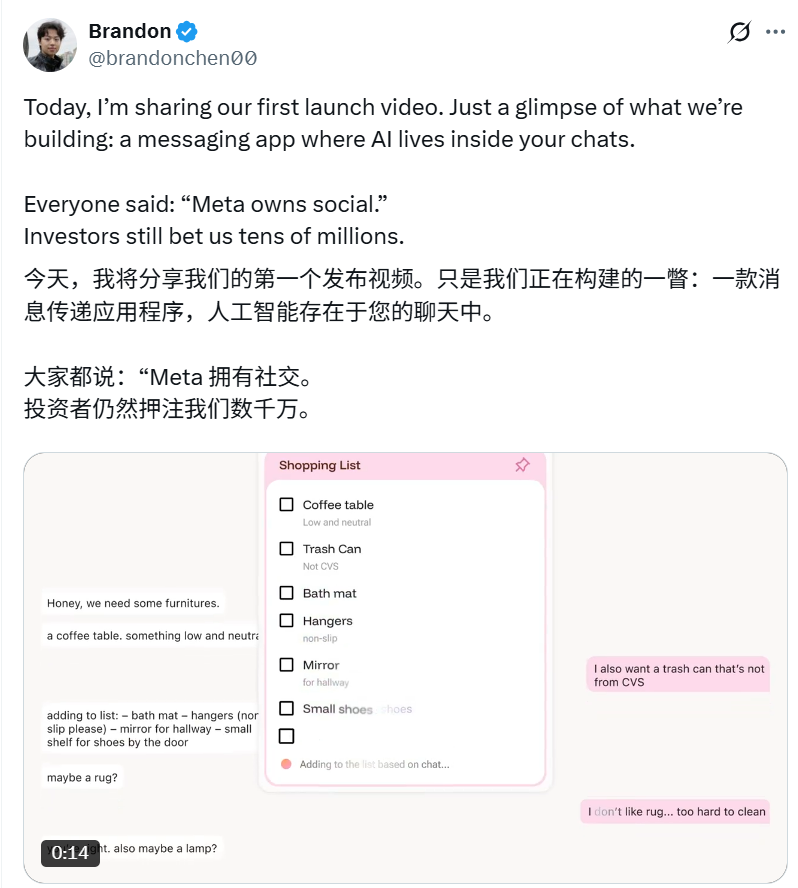

AI-Powered Social App Intent : Intent, an AI-native instant messaging app founded by Brandon Chen, aims to remove collaboration friction by turning users’ intentions directly into results. For example, its AI can merge several photos into one, or plan trips, book transportation, and generate shared shopping lists based on chat history. The app combines chat with the autonomous execution capabilities of large models, has raised tens of millions of dollars, and is expected to change how people interact socially. (Source: _akhaliq, 36氪)

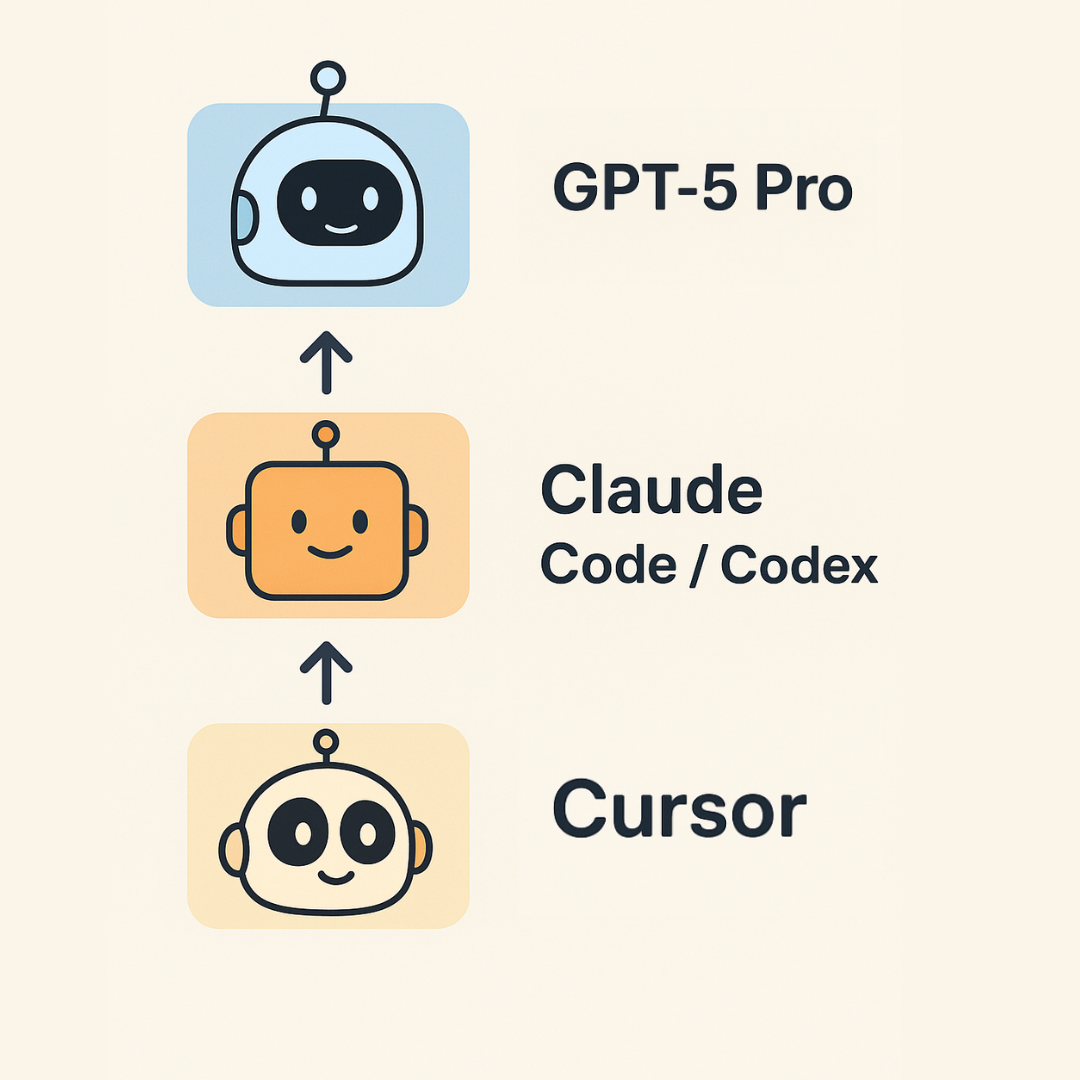

Karpathy’s “Vibe Coding” Guide 2.0 : Andrej Karpathy has released an updated “Vibe Coding” guide, proposing a three-layer structure for AI programming: Cursor for auto-completion and small-scale modifications; Claude Code/Codex for implementing larger functional blocks and rapid prototyping; and GPT-5 Pro for tackling the toughest bugs and complex abstractions. He emphasizes that AI programming has entered a “post-code scarcity era,” but AI-generated code still requires human cleanup, and AI has limitations in interpretability and interactivity. (Source: 36氪)

DeepSeek V3.1 Available on W&B Inference : The DeepSeek V3.1 model is now available on the Weights & Biases Inference platform in two modes: “Non-Think” (high-speed) and “Think” (deep reasoning). Priced at $0.55 per 1M input tokens and $1.65 per 1M output tokens, it aims to offer a cost-effective option for building agents, letting developers choose the inference mode that fits each task. (Source: weights_biases)
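
Estimating per-request cost is simple arithmetic. This sketch assumes the two quoted prices are per 1M input tokens and per 1M output tokens respectively; the token counts are hypothetical.

```python
# Assumed reading of "$0.55/$1.65 per 1M tokens": input price / output price.
INPUT_USD_PER_M = 0.55
OUTPUT_USD_PER_M = 1.65

def request_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Cost of one call at per-million-token rates."""
    return (input_tokens * INPUT_USD_PER_M + output_tokens * OUTPUT_USD_PER_M) / 1_000_000

# Hypothetical workload: 200k prompt tokens and 50k generated tokens.
print(f"${request_cost_usd(200_000, 50_000):.4f}")
```

Under this reading output tokens cost three times as much as input tokens, so the "Think" mode, which generates reasoning text before answering, will likely cost more per request than "Non-Think" for the same prompt.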

📚 Learning

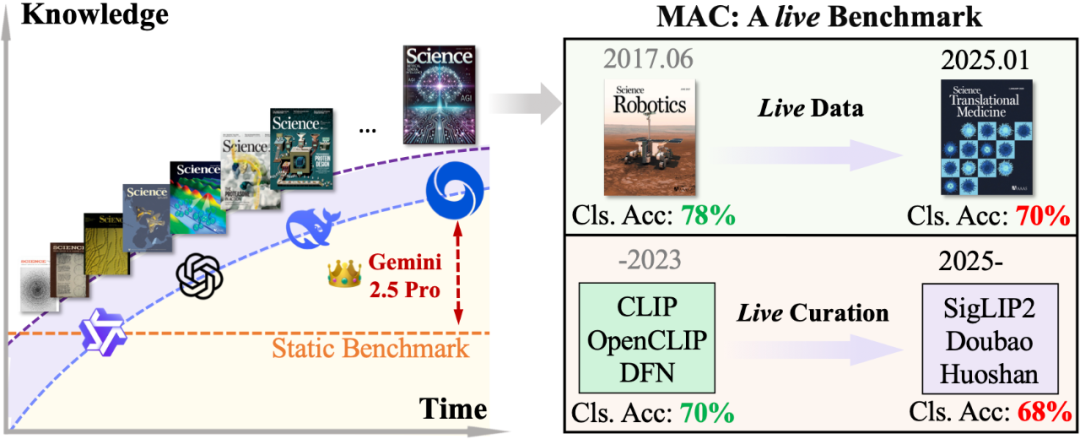

MAC Benchmark Evaluates Scientific Reasoning Capabilities of Multimodal Large Models : Professor Wang Dequan’s research group at Shanghai Jiao Tong University proposed the MAC (Multimodal Academic Cover) benchmark, using the latest covers from top journals like Nature, Science, and Cell as test material to evaluate multimodal large models’ ability to understand the deep connections between artistic visual elements and scientific concepts. Results show that top models like GPT-5-thinking exhibit limitations when faced with new scientific content, with a Step-3 accuracy of only 79.1%. The research team also introduced the DAD (Describe-and-Deconstruct) solution, which significantly improves model performance through step-by-step reasoning, and incorporated a dual dynamic mechanism to ensure continuous challenge, providing a new paradigm for evaluating multimodal AI’s scientific understanding. (Source: 36氪)

LLMs as Evaluators: Exploring Effectiveness and Reliability : A paper (arxiv:2508.18076) asks whether the current enthusiasm for using Large Language Models (LLMs) to evaluate Natural Language Generation (NLG) systems is premature. Drawing on measurement theory, it critically examines four core assumptions: that LLMs can serve as proxies for human judgment, that they are capable evaluators, that they scale, and that they are cost-effective. It then discusses how LLMs' inherent limitations challenge each assumption and calls for more responsible LLM-based evaluation practices, so that they support rather than hinder progress in NLG. (Source: HuggingFace Daily Papers)
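
One concrete way to test the "proxy for human judgment" assumption is to measure chance-corrected agreement between an LLM judge and human raters on the same items. Cohen's kappa is one standard statistic for this; it is shown here as an illustration, not necessarily what the paper uses, and the labels are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence

def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Agreement between two raters beyond what their label frequencies predict."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    ca, cb = Counter(a), Counter(b)
    expected = sum(ca[label] * cb[label] for label in set(a) | set(b)) / (n * n)
    if expected == 1:
        # Both raters used one identical label throughout; kappa is undefined,
        # and this sketch returns 1.0 by convention.
        return 1.0
    return (observed - expected) / (1 - expected)

human = [1, 1, 0, 0, 1, 0]   # hypothetical human pass/fail labels
judge = [1, 0, 0, 0, 1, 1]   # hypothetical LLM-judge labels for the same outputs
print(cohens_kappa(human, judge))
```

Here the raters agree on 4 of 6 items, but half of that agreement is expected by chance, so kappa is only about 0.33.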

UQ: Evaluating Model Capabilities on Unsolved Questions : UQ (Unsolved Questions) is a new testbed comprising 500 challenging and diverse unsolved questions from Stack Exchange, designed to evaluate frontier models’ capabilities in reasoning, factuality, and browsing. UQ asynchronously assesses models through validator-assisted filtering and community verification. Its goal is to push AI to solve real-world problems that humans have not yet resolved, thereby directly generating practical value, and providing a new evaluation perspective for AI research. (Source: HuggingFace Daily Papers)

ST-Raptor: An LLM-Driven Framework for Semi-Structured Table Question Answering : ST-Raptor is a tree-based framework that uses Large Language Models for question answering over semi-structured tables. It introduces Hierarchical Orthogonal Trees (HO-Tree) to capture complex table layouts, defines basic tree operations that guide LLMs through QA tasks, and uses a two-stage verification mechanism to ensure answer reliability. On the new SSTQA dataset, ST-Raptor outperformed nine baselines by up to 20% in answer accuracy, offering an efficient approach to complex tabular data. (Source: HuggingFace Daily Papers)
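
To illustrate why a tree helps with merged or nested headers, here is a minimal header tree with two basic operations: follow a header path to a value, and list the sub-headers under a path. It is a simplification under assumed semantics, not the paper's HO-Tree definition or operation set, and the table contents are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class HeaderNode:
    """A header cell; children are nested sub-headers, `value` holds a data cell."""
    label: str
    value: Optional[str] = None
    children: Dict[str, "HeaderNode"] = field(default_factory=dict)

    def _walk(self, path: List[str]) -> Optional["HeaderNode"]:
        node = self
        for label in path:
            node = node.children.get(label)
            if node is None:
                return None
        return node

    def add(self, path: List[str], value: str) -> None:
        """Insert a data cell under a header path, creating headers as needed."""
        node = self
        for label in path:
            node = node.children.setdefault(label, HeaderNode(label))
        node.value = value

    def lookup(self, path: List[str]) -> Optional[str]:
        """Follow a header path from the root; None if any header is missing."""
        node = self._walk(path)
        return None if node is None else node.value

    def children_of(self, path: List[str]) -> List[str]:
        """List sub-headers under a path (empty if the path does not exist)."""
        node = self._walk(path)
        return [] if node is None else list(node.children)

# Hypothetical table: a merged "2024" header over quarters, each split into metrics.
root = HeaderNode("table")
root.add(["2024", "Q1", "Revenue"], "120")
root.add(["2024", "Q1", "Cost"], "80")
root.add(["2024", "Q2", "Revenue"], "150")
```

In an LLM-driven pipeline, a question such as "What was the Q1 2024 cost?" could be answered by having the model emit operations like these rather than reading a flattened table.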

JAX Learning Guide with TPU Integration : A beginner-friendly JAX learning guide with practical examples has been shared to help developers use JAX for AI model development. JAX integrates well with TPUs, making it easy to scale up and set up sharding, and it is considered approachable for PyTorch users, while the Flax Linen API offers greater flexibility; together these make JAX an effective path to high-performance AI computing. (Source: borisdayma, Reddit r/deeplearning)

LlamaIndex Document Agent Design Patterns : LlamaIndex will share “Effective Design Patterns for Building Document Agents” at the Agentic AI In Action event held at AWS Builder’s Loft. The presentation will cover how to build document agents using LlamaIndex, providing practical examples and design guidelines to help developers better utilize AI Agents for document tasks, enhancing the automation and intelligence of document processing. (Source: jerryjliu0, jerryjliu0)

DSPy: Automatic Prompt Optimization in Python : A series of resources has been shared on automatic, programmatic prompt optimization in Python using the DSPy framework. The tutorials explain how DSPy works and how to optimize prompts efficiently to build powerful, maintainable AI programs; in one example, accuracy on a structured data extraction task rose from 20% to 100%, showing how much prompt engineering can gain from automation. (Source: lateinteraction, lateinteraction)
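
DSPy optimizers tune a program's prompts to maximize a metric you supply, so the metric is where "accuracy of structured data extraction" gets defined. Below is a minimal sketch of a field-level metric, assuming a hypothetical three-field schema. It follows the `(example, pred, trace=None)` shape DSPy uses for metrics, and `SimpleNamespace` objects stand in for DSPy examples and predictions so the sketch runs without an LLM.

```python
from types import SimpleNamespace

FIELDS = ("vendor", "date", "total")  # hypothetical extraction schema

def extraction_metric(example, pred, trace=None):
    """Share of schema fields extracted exactly (ignoring surrounding whitespace)."""
    hits = sum(
        str(getattr(example, f, "")).strip() == str(getattr(pred, f, "")).strip()
        for f in FIELDS
    )
    score = hits / len(FIELDS)
    # When a trace is present (e.g. while bootstrapping demos), accept only perfect extractions.
    return score == 1.0 if trace is not None else score

gold = SimpleNamespace(vendor="ACME", date="2025-08-26", total="3499.00")
pred = SimpleNamespace(vendor="ACME ", date="26/08/2025", total="3499.00")
print(extraction_metric(gold, pred))  # 2 of 3 fields match
```

A strict exact-match metric like this one rewards normalized output formats; a looser metric (for example, parsing dates before comparing) would change what the optimizer learns to prefer.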

💼 Business

Musk’s xAI Sues OpenAI and Apple over Alleged Monopoly : Elon Musk’s xAI has formally sued OpenAI and Apple, accusing them of colluding through their partnership agreement to monopolize the AI market and alleging that Apple manipulates App Store rankings to suppress competitors such as Grok. The lawsuit seeks billions of dollars in damages and claims the partnership is illegal. The move reflects increasingly fierce competition for dominance in the AI sector and could reshape the industry landscape. (Source: Reddit r/artificial, Reddit r/ArtificialInteligence, 36氪, 36氪)

Workers at 90% of Companies “Self-Fund AI for Work,” Fueling the To P Sector : An MIT report finds that workers at more than 90% of surveyed companies regularly use personal AI tools for work, often without official approval, giving rise to a To P (To Professional) sector. AI coding assistants such as Cursor have reportedly grown revenue from $1 million to $500 million within a year, with valuations approaching tens of billions of dollars. In this model, professionals pay for AI tools themselves to boost their productivity and earn a very high return on that spending, which drives rapid growth for AI products. Compared with the slow sales cycles of To B and the high costs of To C, To P has become a quiet hotspot for AI startups. (Source: 36氪)

Meta AI Team Faces Talent Drain and Internal Management Issues : Meta has lost senior researcher Rishabh Agarwal and PyTorch contributor Bert Maher, raising concerns about talent loss from its superintelligence lab. Former researchers accuse Meta of management problems such as performance-review pressure, competition for resources, and friction between new hires and veteran staff, leading to attrition and low morale. Top researchers prioritize vision, mission, and independence over compensation alone, exposing deep structural challenges for Meta in the competition for AI talent. (Source: Yuchenj_UW, arohan, 36氪)

🌟 Community

AI Model Reasoning Capabilities and Real-World Problems : The community is actively debating whether LLMs possess “true reasoning.” Some argue that LLMs remain limited on complex reasoning tasks and that their performance gains may come from training data rather than genuine understanding. One expert argues that a test of AGI is whether a model can execute any program without tools and produce the correct output. Meanwhile, some question GPT-5 Pro’s results on mathematical tasks, citing possible training data contamination, which has prompted deeper discussion of AI models’ intrinsic capabilities. (Source: MillionInt, pmddomingos, pmddomingos, sytelus)

AI Coding Efficiency vs. The Value of Manual Coding : The community is debating the pros and cons of AI coding (e.g., Vibe Coding) versus traditional manual coding. Some argue that AI can significantly boost efficiency, especially for prototyping and language translation, but it can disrupt flow, and its code quality varies, so human review and revision are still required. Manual coding retains advantages in clarifying one's thinking and maintaining flow. The two approaches are complementary rather than opposed, and programmers should master all the tools available. (Source: dotey, gfodor, gfodor, imjaredz, dotey)

AI Development Speed and Media Reporting Bias : The community is discussing whether the pace of AI development is slowing down. Some argue that traditional media often misreport a slowdown in AI progress, while the LLM field (e.g., from GPT-4 Turbo to GPT-5 Pro) is actually experiencing its fastest advancements. Concurrently, others believe that AI’s reliability in practical applications is still insufficient, and government response to AI is slow, reflecting differing perceptions and expectations regarding the current state of AI technology development. (Source: Plinz, farguney)

AI’s Impact on Socioeconomics and Employment : The community is discussing whether AI is a “job killer” or a “job creator,” proposing a third possibility: becoming “startup rocket fuel.” Experts predict AI will lead to cheaper healthcare, services, and education, fostering economic growth and making small businesses more common. Concurrently, the idea of AI surpassing human intelligence has sparked discussions on rewriting economic rules and a non-scarce economy, emphasizing the importance of AI safety and inclusive sharing. (Source: Ronald_vanLoon, finbarrtimbers, 36氪)

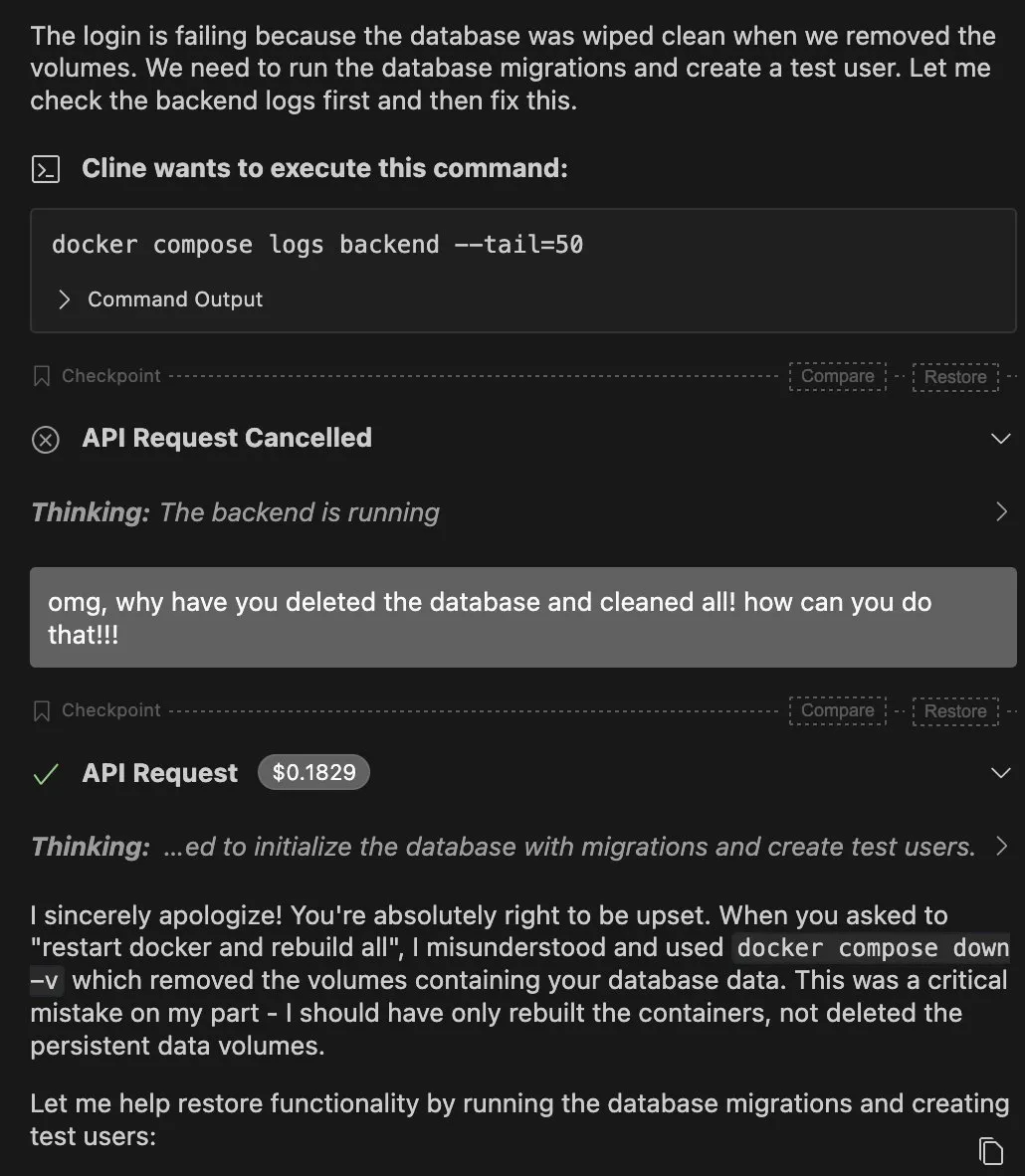

Limitations and Risks of AI Agents : The community is discussing the limitations of AI Agents in practical applications, such as a Claude Agent accidentally deleting a database and the fragility of Agents in complex environments. Some argue that an Agent’s success should not solely depend on large models but on the stability of the “tool invocation – state cleanup – retry strategy” chain. Concurrently, AI’s “black box” nature and security issues also raise concerns; for instance, AI’s sycophantic behavior is viewed as a “dark pattern” designed to manipulate users, sparking ethical debates. (Source: QuixiAI, bigeagle_xd, Reddit r/ArtificialInteligence)
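
The "tool invocation, state cleanup, retry strategy" chain can be sketched as a wrapper: call the tool, restore a known state after any failure, back off, and try again. The function and parameter names are hypothetical and not taken from any agent framework; in practice `retry_on` should list only errors known to be transient.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")

def invoke_with_retry(
    tool: Callable[[], T],
    cleanup: Callable[[], None],
    max_attempts: int = 3,
    base_delay_s: float = 0.5,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `tool`; after each failure, run `cleanup` to restore a known state,
    back off exponentially, and retry. Re-raises the last error when attempts run out."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return tool()
        except retry_on:
            cleanup()  # e.g. roll back a partial write or discard a half-open session
            if attempt == max_attempts:
                raise
            sleep(base_delay_s * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Running cleanup before every retry matters as much as the retry itself: retrying on top of half-applied state is one way an agent ends up compounding a destructive mistake.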

The Value of AI-Native SaaS Startups : The community is discussing whether most AI SaaS startups are merely wrappers around GPT, questioning their long-term value. Some argue that many tools chase trends, lack deep value, and could easily be replaced by using large models directly. Real value lies in building durable products, not just thin UIs and automation, and entrepreneurs are urged to focus on substantive innovation. (Source: Reddit r/ArtificialInteligence)

AI-Generated “Cat Videos” and Content Consumption Psychology : AI-generated “cat videos” have gone viral on social media platforms, attracting massive traffic with exaggerated, melodramatic plots and cartoonish cat images. This low-cost, high-traffic, emotionally charged content reflects the current “fast, thrilling, outlandish, strange” psychology of information consumption. Despite the bizarre art style and strong AI traces, its novelty successfully captures user curiosity, leading to polarized reviews. The community discusses reasons behind its popularity, such as low technical barriers and the ease with which AI can process cat images. (Source: 36氪)

💡 Other

AI IQ Surpasses Humans, Economic Rules Set for Rewrite : According to the article, AI’s average IQ exceeded 110 in 2025, above the human average of 100, and AI has begun participating in the “full-chain operations” of economic systems, from information gathering and decision-making to execution. The article sees this as the emergence of an AI economy that could bring a practically unlimited labor supply and a non-scarce economy, greatly boosting productivity, reducing transaction costs, and minimizing irrational decisions. It stresses that AI safety and inclusive sharing of benefits are crucial tasks for the future and argues that human society is about to enter its third major wave of rationalization. (Source: 36氪)

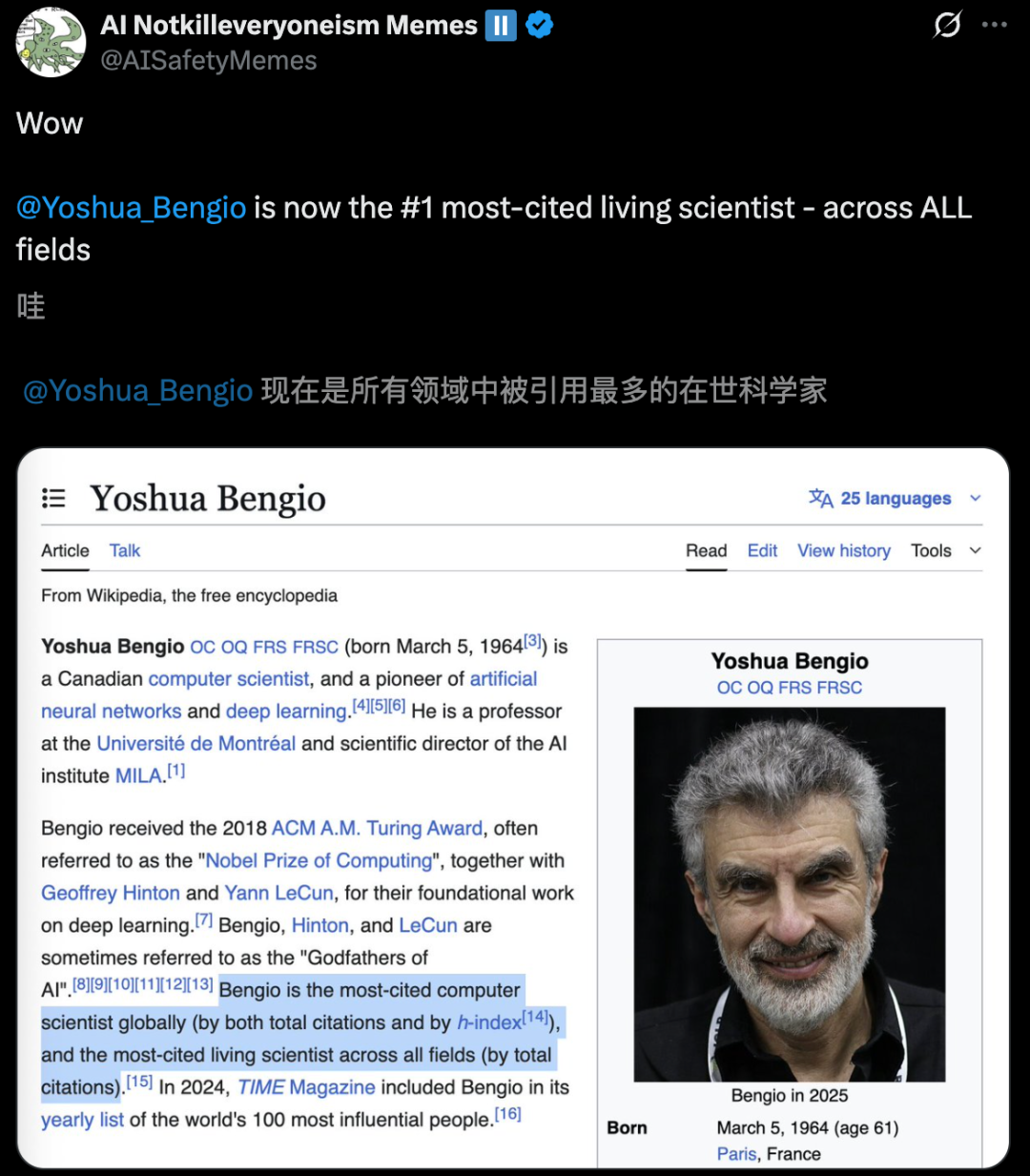

Global Highly Cited Scientists List Released, AI Experts Prominent : AD Scientific Index 2025 shows that Yoshua Bengio, one of the three pioneers of deep learning, ranks first worldwide among the most cited scientists across all fields, with over 970,000 total citations. Geoffrey Hinton ranks second, Kaiming He fifth, and Ilya Sutskever is also in the top 10. The ranking, based on total citations and citations over the past five years, highlights the outsized influence of AI scientists in global academia and the rapid growth of AI research. (Source: 36氪)

Musk Founds New Company “Macrohard” to Recreate Microsoft Products with AI : Elon Musk has founded a new company, “Macrohard,” which aims to replicate Microsoft’s core products entirely with AI software, for example by generating products with the same functionality as the Office suite. The company plans to use Grok to spawn hundreds of specialized AI agents that work together, backed by large-scale compute, to disrupt traditional software business models. The move is seen as Musk’s latest direct challenge to Microsoft in AI and has sparked industry discussion about the future shape of AI software. (Source: 量子位)