Keywords: AI model, Solar storm prediction, Open-source large model, AI chip, Humanoid robot, AI security, AI ethics, AI application, NASA Surya AI model, ByteDance Seed-OSS-36B, NVIDIA GB200 NVL72, Humanoid robot sports competition, AI sleep assistant

🔥 Spotlight

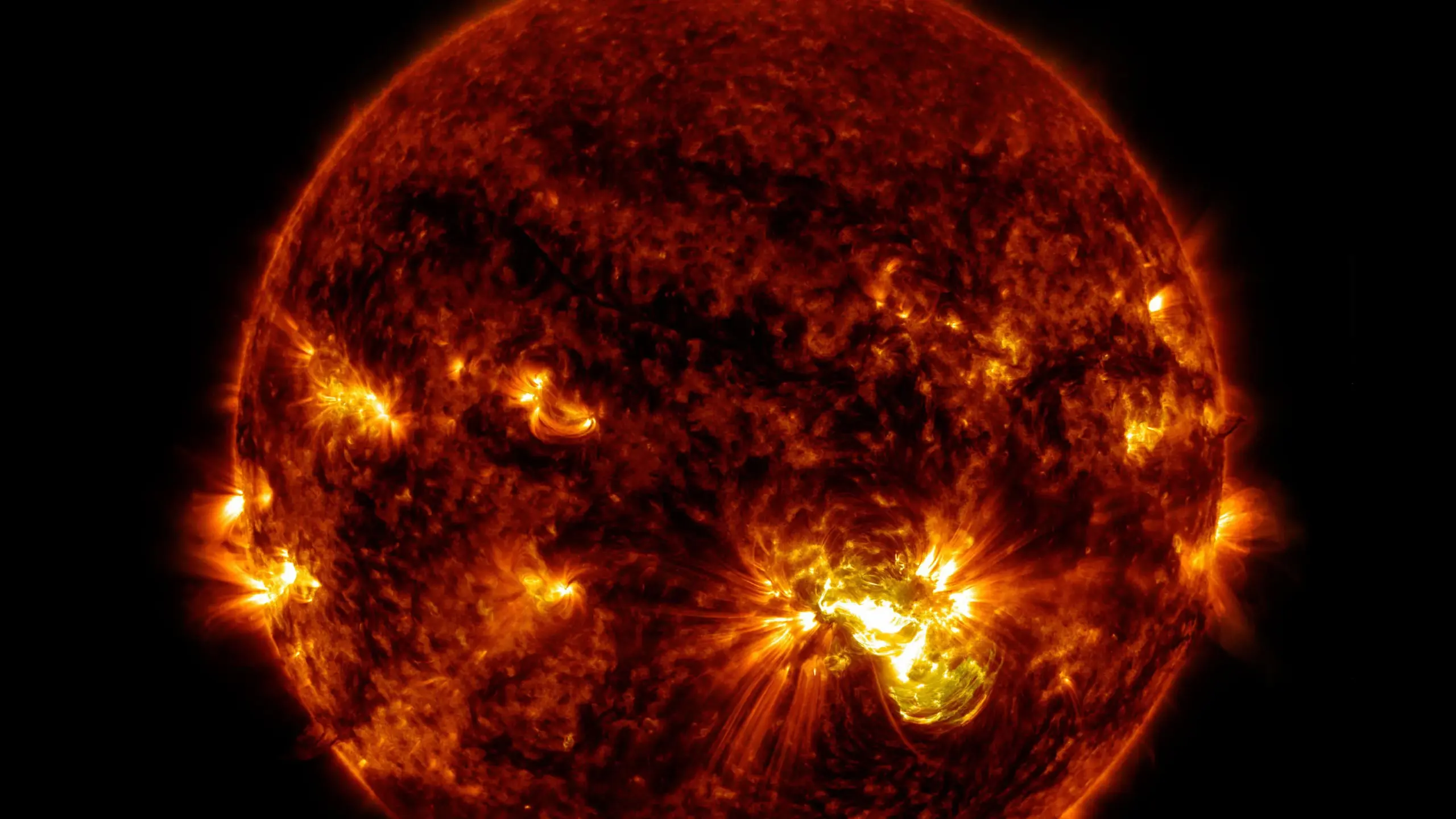

NASA and IBM Release AI Model Surya to Predict Solar Storms: NASA and IBM have jointly released the open-source AI model Surya, trained on a decade of solar data, capable of predicting solar storms up to 2 hours in advance. This is expected to enhance understanding of solar physics and space weather forecasting. This breakthrough is crucial for protecting satellites, power grids, and astronauts, and may also advance research into other astrophysical phenomena. (Source: source)

🎯 Trends

ByteDance Open-Sources Seed-OSS Large Model: ByteDance released Seed-OSS-36B, a 36-billion-parameter open-source large model with a native 512K ultra-long context window and a “thinking budget” mechanism for flexibly controlling reasoning depth. Although it was trained on only 12T tokens, the model set new open-source records on multiple benchmarks, excelling in particular at reasoning and Agent tasks. For research use, two versions are provided: one trained with synthetic instruction data and one without. (Source: source, source)

Google Pixel 10 Series Launch and AI Integration Progress: Google launched its Pixel 10 series phones, featuring the new-generation Google Tensor G5 chip and the Gemini Nano model, offering a more personalized, proactive, and helpful AI experience. New features include on-device voice translation, Magic Cue proactive information prompts, and Pixelsnap magnetic technology. Rick Osterloh, Google’s head of devices and services, hinted at Apple’s “unfulfilled promises” in mobile AI, highlighting the intensifying competition in AI-powered smartphones. (Source: source, source, source, source, source, source, source)

DeepSeek V3.1 Performance Enhancement and Cost Advantage: DeepSeek V3.1 extends its context length to 128K and significantly improves programming, creative writing, translation, and mathematics. In real-world tests it scored 71.6% on the aider benchmark, surpassing Claude Opus 4 and setting the SOTA among non-reasoning models, while costing about 68 times less. Its physical understanding has also improved. This shows how competitive high-value, open-source models have become. (Source: source, source)

Meta AI Division Reorganization and Alexandr Wang’s Leadership: Meta has reorganized its AI division into four departments: TBD Lab, FAIR, Product, and Infrastructure. 28-year-old Alexandr Wang will lead Meta Superintelligence Labs, with several executives, including Turing Award winner Yann LeCun, reporting directly to him. The restructuring aims to accelerate AI development; although it comes with a hiring freeze and team dissolutions, it underscores Meta’s strong commitment to AI. (Source: source, source, source)

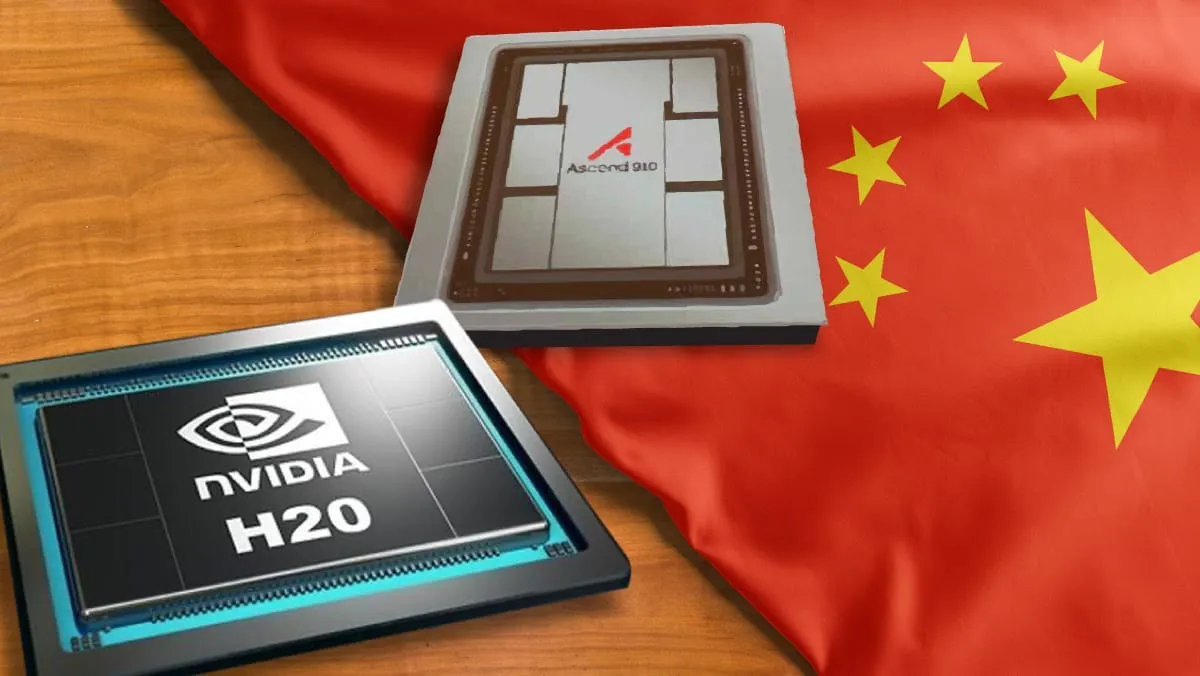

AI Chip Geopolitics and China Market: The Chinese government is conducting security reviews of US AI processors like Nvidia’s and encouraging domestic companies to purchase Chinese-made GPUs to reduce reliance on US technology. Nvidia is developing more powerful AI chips for the Chinese market, but China may push for a complete ban on foreign chips for inference, with geopolitical factors continuously impacting the AI chip supply chain. (Source: source, source, source)

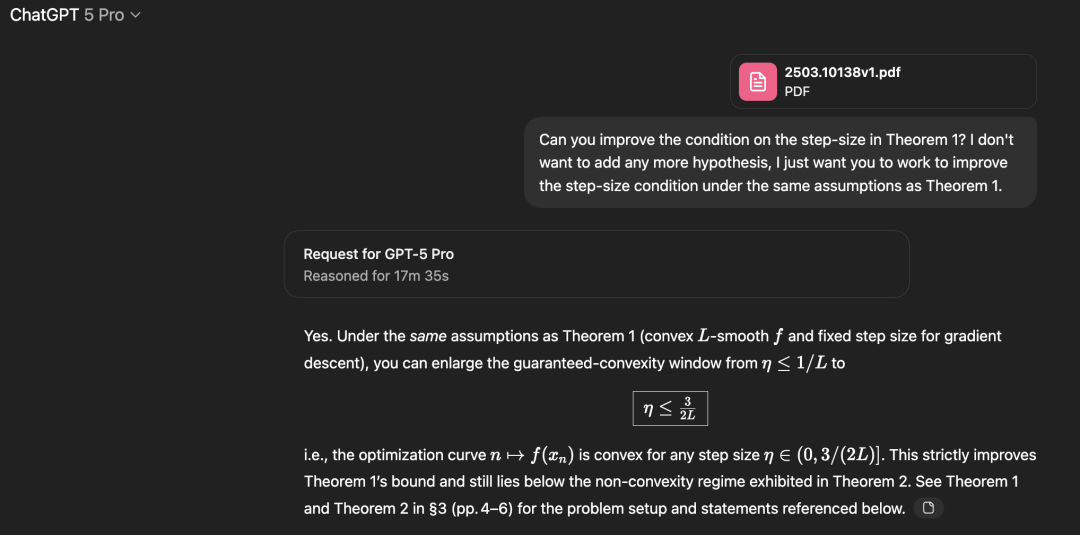

GPT-5 Pro Independently Proves Mathematical Theorem: Sebastien Bubeck, former Microsoft AI VP, discovered that GPT-5 Pro independently solved an open problem in a mathematical paper. Its proof process differed from human methods and yielded a superior result compared to v1 of the paper. Although the original authors later provided a better solution in v2, this event still demonstrates GPT-5 Pro’s ability to autonomously solve cutting-edge mathematical problems, sparking widespread discussion in the AI community about AI’s potential in mathematical research. (Source: source, source, source, source, source, source, source, source)

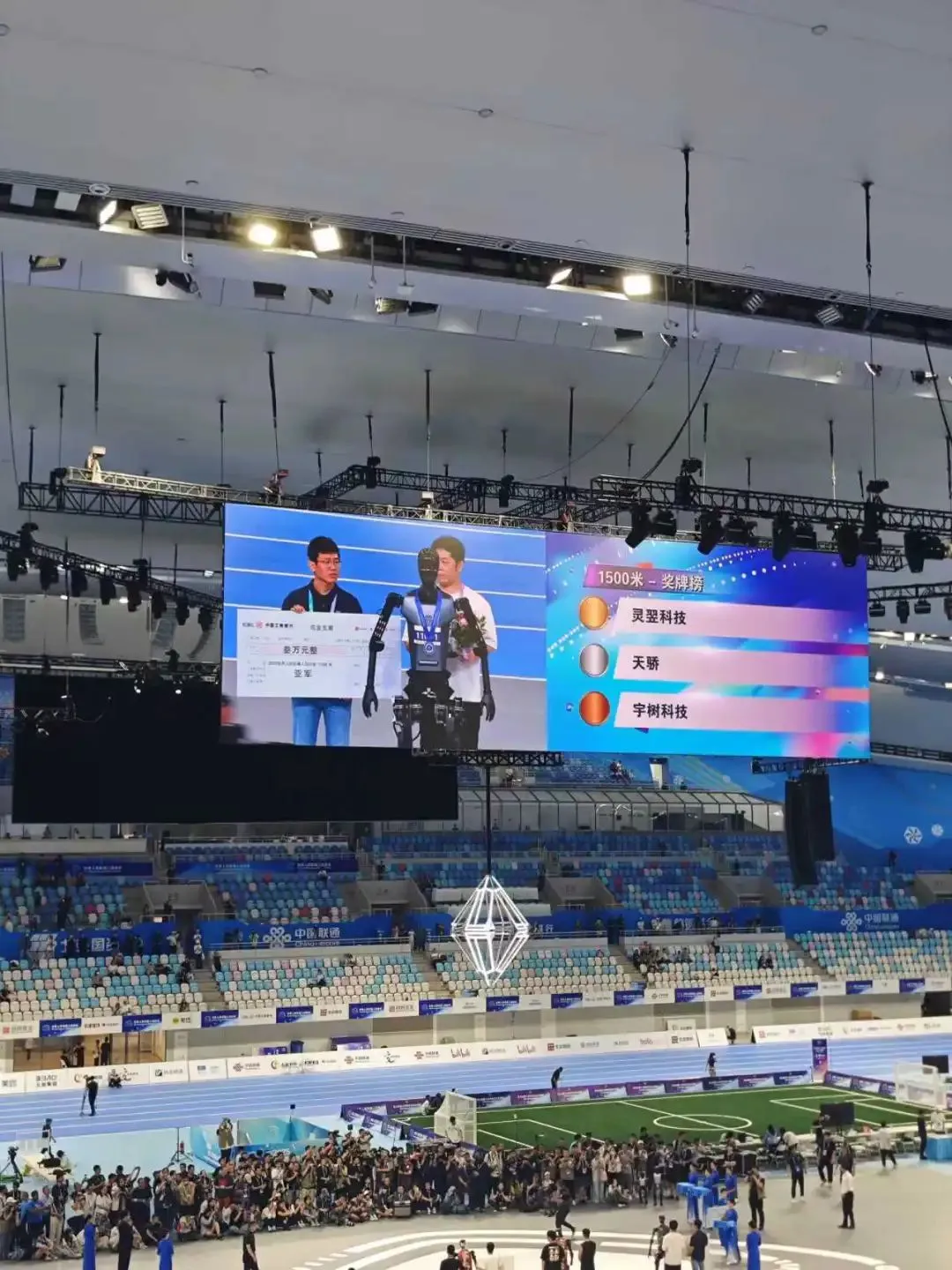

Humanoid Robot Sports Event Showcases Technical Breakthroughs: The first Humanoid Robot Sports Event showcased technical breakthroughs and challenges in dynamic balance, environmental perception, and multi-robot collaboration, with Jushen Tiangong Ultra’s fully autonomous running being particularly noteworthy. The event is not only a technical proving ground but also demonstrates the commercial potential of robots in industries such as manufacturing, healthcare, and hospitality, fostering a “stadium economy” and a secondary development ecosystem. (Source: source)

NVIDIA Accelerates OpenAI Model Performance: NVIDIA, in collaboration with Artificial Analysis, boosted the output speed of OpenAI’s gpt-oss-120B model by 35% within a week, achieving over 800 tokens/s in single-query tests and nearly 600 tokens/s with multiple concurrent queries on DGX systems. The gains came from TensorRT-LLM and speculative decoding, demonstrating how much Blackwell hardware can accelerate LLM inference. (Source: source, source)
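
Speculative decoding, one of the techniques credited above, can be sketched in a few lines. The following is a minimal greedy variant in plain Python, not TensorRT-LLM’s implementation: the “models” are hypothetical functions that map a token prefix to the next token, and the verification step that a real system runs as one batched forward pass of the target model is written as a loop for clarity. Because every kept token is checked against the target, the output is identical to decoding with the target model alone; the speedup comes from accepting several cheap draft tokens per expensive target pass.

```python
from typing import Callable, List

# A "model" here is any function that maps a token prefix to its greedy next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], n_new: int) -> List[int]:
    """Baseline: one call to the model per generated token."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(model(out))
    return out


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], n_new: int, k: int = 4) -> List[int]:
    """Greedy speculative decoding; output matches greedy_decode(target, ...)."""
    out = list(prompt)
    goal = len(prompt) + n_new
    while len(out) < goal:
        # 1. The cheap draft model proposes up to k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(out))):
            proposal.append(draft(out + proposal))
        # 2. The target checks each proposed position. A real system scores all
        #    positions in ONE batched forward pass; a loop is used here for clarity.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            accepted.append(expected)  # always keep the target's own choice
            if expected != tok:
                break  # first disagreement: discard the rest of the draft
        else:
            # Every draft token matched, so the same pass yields one bonus token.
            if len(out) + len(accepted) < goal:
                accepted.append(target(out + accepted))
        out.extend(accepted)
    return out
```

The more often the draft agrees with the target, the more tokens each verification pass yields; with a poor draft, the scheme degrades to roughly one token per target pass plus the wasted drafting work.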

Chinese AI Routing System Avengers-Pro Open-Sourced: Shanghai AI Lab open-sourced Avengers-Pro, a multi-model routing solution that integrates 8 leading large models. On challenging datasets it outperforms GPT-5-medium by 7% and Gemini-2.5-Pro by 19%, and it can deliver equivalent performance at as little as 19% of the cost. By dynamically routing each query to a suitable model, it balances performance against cost. (Source: source)
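
Routers of this kind typically learn, offline, how well and how cheaply each model handles different kinds of queries. The sketch below illustrates that general idea under stated assumptions and is not Avengers-Pro’s code: query embeddings are given, clusters and per-cluster accuracy/cost tables are precomputed, and the scoring rule (accuracy weighted by `alpha`, plus a cheapness term scaled by the most expensive model) is a hypothetical choice. The names `Cluster` and `route` are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class Cluster:
    """Offline statistics for one cluster of similar queries (hypothetical schema)."""
    centroid: List[float]
    accuracy: Dict[str, float]  # model name -> accuracy on this cluster, in [0, 1]
    cost: Dict[str, float]      # model name -> average cost per query (> 0)


def route(query_embedding: Sequence[float], clusters: List[Cluster], alpha: float) -> str:
    """Return the model to use for one query.

    alpha = 1.0 routes purely on accuracy; alpha = 0.0 purely on cheapness.
    """
    # 1. Assign the query to the nearest cluster centroid (Euclidean distance).
    cluster = min(clusters, key=lambda c: math.dist(query_embedding, c.centroid))
    # 2. Score each model: weighted accuracy plus a cheapness term in [0, 1).
    max_cost = max(cluster.cost.values())
    scores = {
        model: alpha * acc + (1 - alpha) * (1 - cluster.cost[model] / max_cost)
        for model, acc in cluster.accuracy.items()
    }
    # 3. Pick the highest-scoring model.
    return max(scores, key=scores.get)
```

Sweeping `alpha` from 0 to 1 traces out a cost/performance curve, which is how a router like this can offer different operating points, from “cheapest acceptable model” to “strongest model regardless of cost”.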

Perplexity Developing SuperMemory Feature: Perplexity is developing a new feature called “SuperMemory,” aimed at providing all users with enhanced memory capabilities. Early tests show it outperforms existing products, with the potential to significantly improve the long-term context understanding and personalized experience of AI assistants. (Source: source, source)

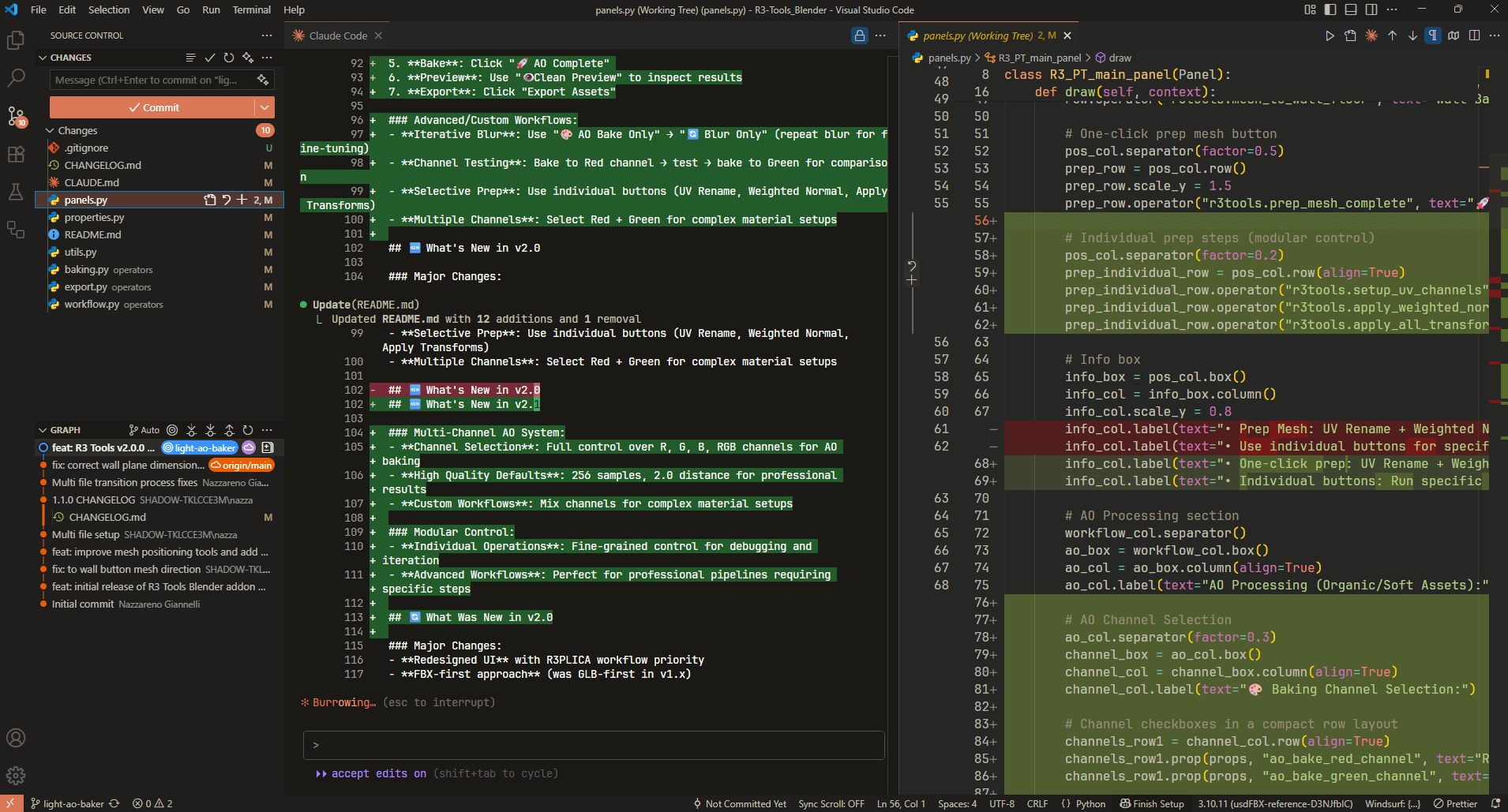

Anthropic Claude Code Launches Team and Enterprise Editions: Anthropic announced that Claude Code is now available in Team and Enterprise editions, offering flexible pricing plans that allow organizations to mix standard and premium seats based on their needs and scale by usage, aiming to meet the demands of enterprise-level users for AI code assistants. (Source: source, source)

Google Gemini 2.5 Pro Integrated into VS Code Copilot: Google Gemini 2.5 Pro is now generally available in Visual Studio Code’s Copilot, providing developers with more powerful AI-assisted programming capabilities. (Source: source, source)

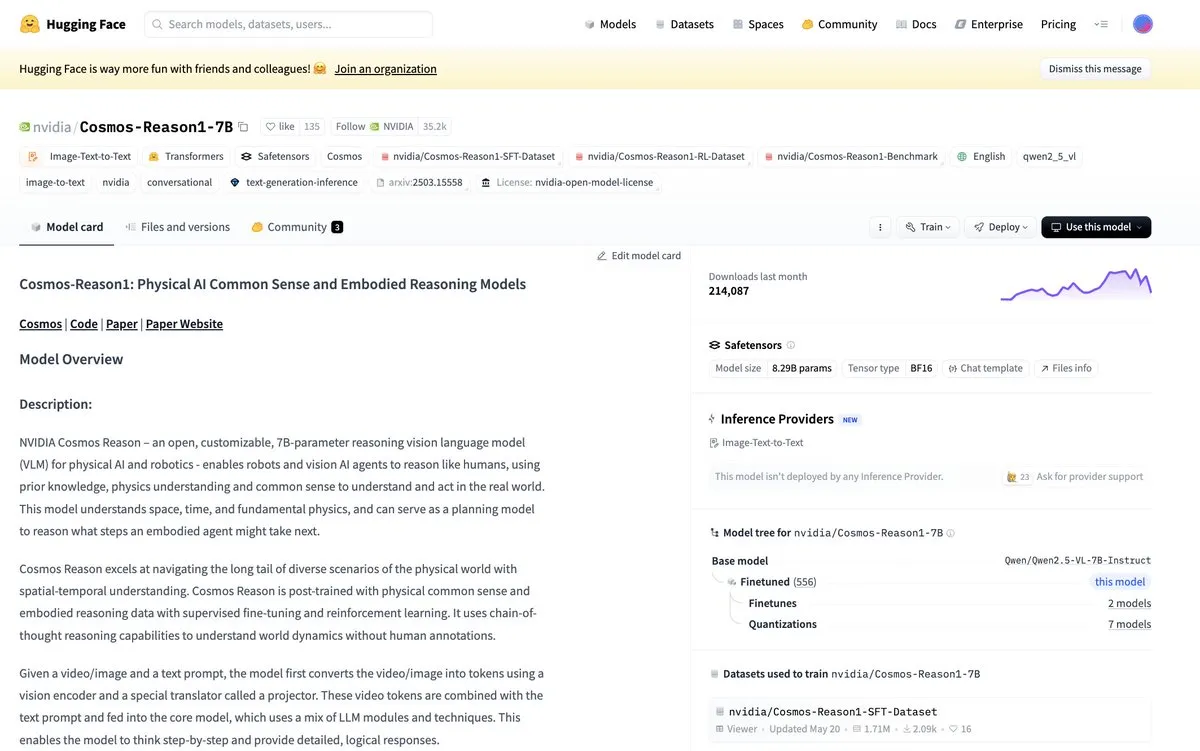

NVIDIA Cosmos Reason VLM Model Released: NVIDIA Cosmos Reason, an open, customizable 7B-parameter Vision Language Model (VLM), has reached 500,000 downloads on Hugging Face and become one of NVIDIA’s most popular models, helping shape the future of physical AI and robotics. (Source: source)

Groq Platform Launches Prompt Caching Feature: The Groq platform has launched a prompt caching feature for the moonshotai/kimi-k2-instruct model, offering a 50% discount on cached tokens, lower latency, and automatic prefix matching, aiming to provide users with a more economical and faster “vibe coding” experience. (Source: source)
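
The economics of prefix-based prompt caching come down to simple arithmetic. The sketch below assumes a hypothetical per-token price and the 50% cached-token discount mentioned above, and treats a cache hit as the longest shared token prefix with a previous prompt; real providers may match at coarser granularity or impose a minimum prefix length, so this is an illustration rather than Groq’s billing logic.

```python
from typing import Sequence, Tuple


def cached_prefix_len(prompt: Sequence[int], cached: Sequence[int]) -> int:
    """Number of leading tokens the new prompt shares with a cached prompt."""
    n = 0
    for a, b in zip(prompt, cached):
        if a != b:
            break
        n += 1
    return n


def prompt_cost(prompt: Sequence[int], cached: Sequence[int],
                price_per_token: float, cached_discount: float = 0.5) -> Tuple[int, float]:
    """Return (cache-hit tokens, input cost) under a prefix-matching cache.

    Tokens in the shared prefix are billed at a discount; everything after
    the first mismatch is billed at the full (hypothetical) price.
    """
    hit = cached_prefix_len(prompt, cached)
    miss = len(prompt) - hit
    cost = hit * price_per_token * (1 - cached_discount) + miss * price_per_token
    return hit, cost
```

This is why prompts are usually arranged with stable content (system prompt, tool definitions, long shared context) first and the changing user turn last: any edit early in the prompt invalidates the cache for everything after it.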

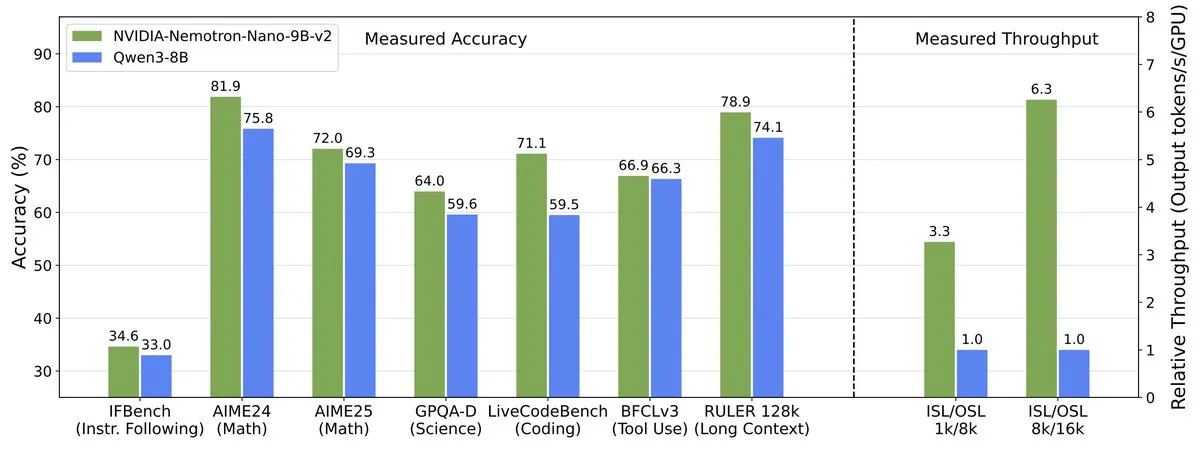

NVIDIA Releases Nemotron Nano v2 Model: NVIDIA released Nemotron Nano v2, a 9B-parameter hybrid SSM model that is 6 times faster than similarly sized models while also being more accurate. NVIDIA also open-sourced most of its training data, including the pre-training corpus, providing efficient and transparent resources to the AI community. (Source: source)

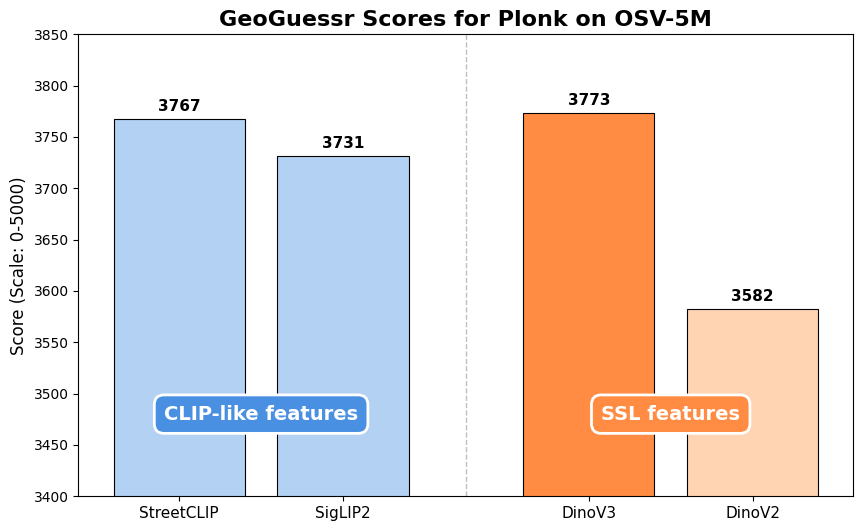

DinoV3 Excels in Geolocation Tasks: DinoV3 has shown outstanding performance in geolocation tasks, surpassing CLIP-like models and becoming the new preferred backbone. The improvement is surprising because, unlike CLIP-style models, DinoV3 is not trained on image-text pairs and so never directly learns to associate images with location names. (Source: source)

AI Application in Alzheimer’s Disease Research: The Alzheimer’s Disease Data Initiative has launched a $1 million prize to seek Agentic AI tools capable of independently conducting Alzheimer’s disease research, including planning analyses, integrating data, identifying therapeutic targets, and optimizing clinical trials, aiming to accelerate traditional drug development processes. (Source: source, source)

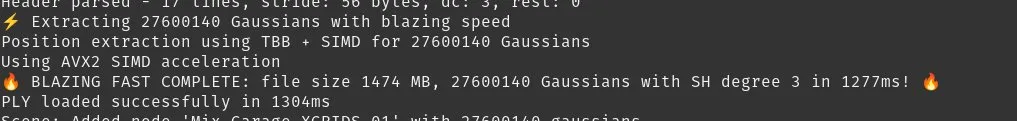

AI-Driven 3D Rendering Performance Improvement: 3D Gaussian Splatting (3DGS) has achieved a significant boost in PLY loading performance, with 2.9 million Gaussians loading in just 0.22 seconds. This was accomplished through memory mapping, zero-copy parsing, TBB parallelization, and SIMD technology, heralding a substantial leap in 3D content rendering efficiency. (Source: source)
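
The memory-mapping and zero-copy part of that speedup can be illustrated in Python; TBB parallelism and SIMD are C++-level optimizations and are not shown. The sketch below is hypothetical and handles only the layout it assumes, which is common for 3DGS files: a `binary_little_endian` PLY whose first element is `vertex` and whose vertex properties are all 32-bit floats. It parses the ASCII header and then returns a `memoryview` cast to floats that points directly into the buffer, so no per-vertex parsing or copying takes place.

```python
import sys
from typing import List, Tuple

FLOAT_TYPES = {"float", "float32"}  # PLY spellings of a 32-bit float


def load_ply_vertices(buf) -> Tuple[int, List[str], memoryview]:
    """Return (vertex_count, property_names, flat float view) for a PLY buffer.

    `buf` may be `bytes` or an `mmap.mmap`; the returned memoryview points
    straight into it, so the vertex data is never copied. Assumes a
    binary_little_endian file whose first element is `vertex` and whose
    vertex properties are all 32-bit floats.
    """
    if sys.byteorder != "little":
        raise ValueError("this sketch assumes a little-endian host")
    marker = b"end_header\n"
    end = buf.find(marker)
    if end < 0:
        raise ValueError("missing end_header")
    header = bytes(buf[:end]).decode("ascii").splitlines()
    if not header or header[0] != "ply" or "format binary_little_endian 1.0" not in header:
        raise ValueError("expected a binary_little_endian PLY file")

    elements: List[str] = []
    names: List[str] = []
    count = 0
    for line in header[1:]:
        parts = line.split()
        if parts and parts[0] == "element":
            elements.append(parts[1])
            if parts[1] == "vertex":
                count = int(parts[2])
        elif parts and parts[0] == "property" and elements[-1:] == ["vertex"]:
            if parts[1] not in FLOAT_TYPES:
                raise ValueError(f"unsupported vertex property type: {parts[1]}")
            names.append(parts[2])
    if elements[:1] != ["vertex"]:
        raise ValueError("expected 'vertex' to be the first element")

    # Binary data starts right after the header; cast() reinterprets the bytes
    # as native floats without copying them.
    start = end + len(marker)
    floats = memoryview(buf)[start:start + count * len(names) * 4].cast("f")
    if len(floats) != count * len(names):
        raise ValueError("file is truncated")
    return count, names, floats
```

Property `j` of vertex `i` is `floats[i * len(names) + j]`. To get the zero-copy behavior on a file, pass an `mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)` object instead of `bytes`, and release the returned view before closing the mapping, since a mapping cannot be closed while a view still references it.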

AI Application in Cybersecurity Offense and Defense: Palisade Research tested OpenAI’s o3 model’s ability to autonomously penetrate simulated enterprise networks, demonstrating the progress of AI Agents from solving restricted problems like CTFs to deep network penetration across multiple computers and vulnerabilities, indicating AI’s potential in cybersecurity offense and defense. (Source: source)

AI Progress in Mathematical Theorem Proving: PolyComputing claims its proprietary models can solve 99% of Putnam mathematical problems, while Seed-Prover significantly outperforms previous SOTA on PutnamBench, demonstrating AI’s powerful capabilities in advanced mathematical proving and problem-solving, signaling new advancements in the field of theorem proving. (Source: source, source)

H100 vs. GB200 Performance Comparison: Dylan Patel shared a detailed analysis comparing H100 and GB200 NVL72 in terms of training performance, power consumption, Total Cost of Ownership (TCO), and reliability. He specifically noted GB200’s reliability challenges and backplane downtime issues, emphasizing the importance of software optimization for H100 performance improvements. (Source: source)

AI Agent Architecture and Deployment: The Deep Agents architecture is now available as a TypeScript package, designed to build composable and practical Agents that solve complex problems through chained reasoning, adaptive planning, and tool coordination. LiveKit Cloud now also supports deploying AI voice Agents, offering features like stateful load balancing, capacity management, instant rollbacks, and operational observability, simplifying the deployment and operation of AI voice applications in the cloud. (Source: source, source)

Databricks Spark Streaming Real-time Mode: Apache Spark Structured Streaming on Databricks now offers real-time mode in public preview, letting users achieve ultra-low latency by changing a single configuration setting and simplifying real-time data processing. (Source: source)

AI Model Application Trends on Mobile Devices: Product Hunt shows a boom in AI tools, with AI voice interaction, intelligent workflows, digital health, and democratization of creative tools becoming clear trends, indicating AI’s deep penetration into various fields. Google Pixel Buds Pro 2 is set to launch new AI features, including nod/shake to answer calls, conversations in noisy environments, and adaptive audio, enhancing AI integration in wearables. (Source: source, source)

AI Progress in Image and Video Generation: Google Gemini App now supports video generation, allowing users to quickly create videos with sound from text or photo inputs. HeyGen released “Voice Mirroring” to enhance AI video and voice generation capabilities. Kling AI released its 2.1 Keyframes feature, enabling users to quickly generate videos across multiple dimensions. (Source: source, source, source)

New AI Tools in Design and Engineering: MagicPath demonstrated AI’s application in professional design workflows, allowing users to explore and prototype with AI. Users experimenting with Zoo.dev (formerly KittyCAD) for CAD design found that drawing by writing code was more efficient than traditional OnShape workflows, indicating AI’s potential in engineering design. (Source: source, source)

AI Applications in Home Scenarios: Smart mattress company Eight Sleep is developing an AI sleep assistant aimed at providing personalized sleep management and optimization services by simulating a digital twin of the user’s sleep habits. AI company TextQL’s Ana will be integrated into smart refrigerators, signaling further popularization of AI assistants in home scenarios and everyday devices. (Source: source, source)

AI Applications in Legal and Finance: Spellbook Legal uses AI to speed up contract processing, resolving the tension between fast-moving business activity and lagging contract workflows. An AI bank statement analyzer converts PDF bank statements into queryable financial insights using LangChain-based RAG and YOLO analysis, with processing done by local LLMs to automate personal financial tracking. (Source: source, source)

AI Applications in Market Research and Digital Health: Yupp.ai is recommended as a market research tool. When users sift through large volumes of information, a single answer from ChatGPT or Claude may be one-sided, biased, or even wrong; Yupp.ai aims to provide more comprehensive and accurate analysis, which is particularly useful in the fast-changing crypto market. Night Knight is a digital health assistant that helps users reduce screen time and improve their sleep patterns. (Source: source, source)

AI Character Generation and Voice Agent Creation: Higgsfield AI released “Higgsfield Soul,” claiming to have built the most consistent AI characters and giving users full control over storytelling. The Cartesia.ai platform greatly simplifies the creation of conversational voice Agents; functions once considered “alien technology” can now be set up in just one minute, marking a significant reduction in the barrier to entry for AI voice technology. (Source: source, source)

AI-Assisted Programming Tool Updates: Jupyter Agent 2 has been released; powered by Qwen3-Coder, with inference running on Cerebras and code executed by E2B, it lets users upload files, load data, execute code, and plot results. Just-RAG is an intelligent PDF conversational system that combines LangGraph’s Agentic workflow with Qdrant’s vector search for enhanced document processing. (Source: source, source)

AI-Assisted Creative and Design Tools: Argil.ai launched “Fictions,” a feature where users can transform characters into specific appearances with just one image and a prompt, demonstrating AI’s “magical” capabilities in image generation and creative transformation. Google Photos has integrated AI editing tools, allowing users to de-blur photos, fix lighting, and more with text or voice commands. (Source: source, source, source)

AI Applications in Music Creation and Drone Recognition: Eleven Music (ElevenLabs) is now integrated into Anycoder, supporting text-to-music generation and bringing music creation to “vibe coded” applications. Supervision achieved very high recognition rates in drone recognition, good enough for practical deployment, a sign of how mature computer vision has become in specific scenarios. (Source: source, source)

AI Applications in Enterprise Documents and Conversational Systems: StackAI and LlamaCloud collaborated on a new case study showing how their enterprise document Agent processes over 1 million documents with high-precision parsing. ChuanhuChat is a LangChain-based web interface supporting multiple LLMs, autonomous Agents, and document Q&A, with a modern, responsive UI and real-time responses. (Source: source, source)

AI Applications in Code Conversion and Personal Health Coaching: Users demonstrated AI’s capability in code conversion, even handling “line-by-line direct porting” tasks from Python to C. Google launched a Gemini-powered personal health coach, offering personalized fitness and sleep plans, and providing insights and scientifically supported health Q&A based on data. (Source: source, source)

AI Applications in Programming and Desktop Intelligence: Qwen3-Coder performed excellently in the NoCode-bench benchmark, which includes 634 real-world software feature addition tasks. ComputerRL is a framework for autonomous desktop intelligence, enabling AI Agents to skillfully operate complex digital workspaces through an API-GUI paradigm. (Source: source, source, source)

📚 Learning

Local LLM Running and Optimization: MIT Technology Review published a guide to running large language models locally on personal computers, to address privacy concerns and break free from the control of large AI companies. Meanwhile, DSPy is described as a declarative programming model that lets users express intentions in natural language and provides tools to optimize prompts, simplifying LLM application development. Users reported running DSPy optimization with cheaper models and then switching to stronger models in production, achieving significant cost savings along with strong performance. (Source: source, source, source)

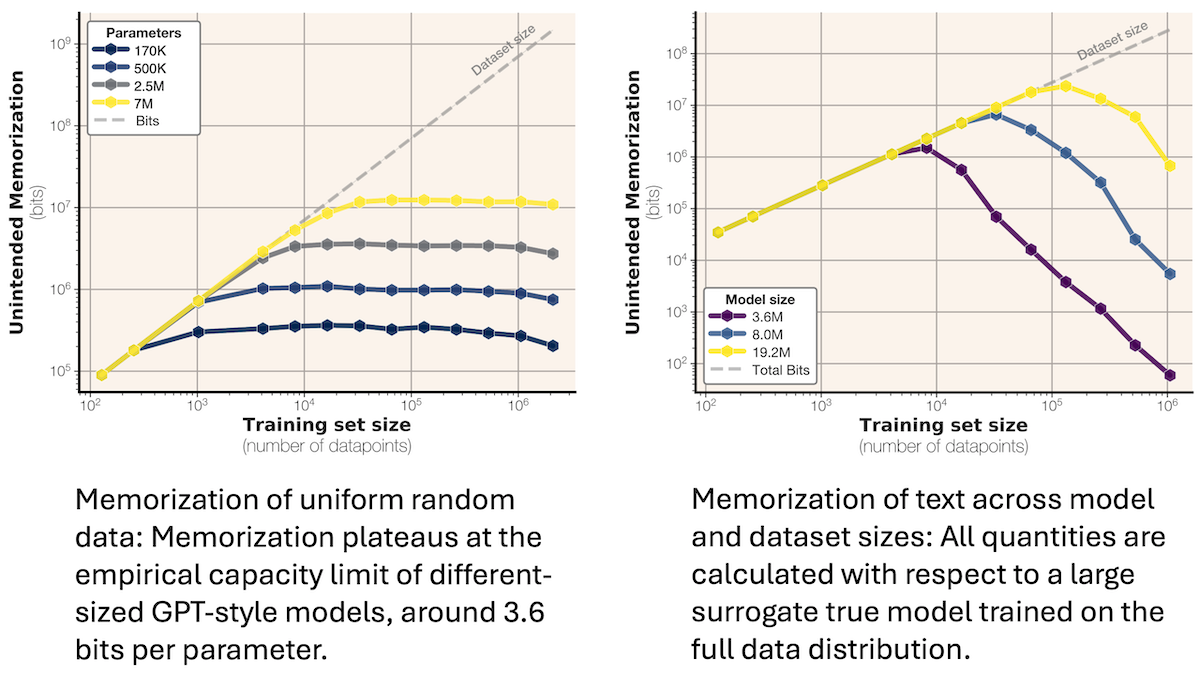

AI Model Generalization and Memorization Mechanism Research: Researchers from Meta, Google, Cornell, and Nvidia proposed a new method to quantify how much large language models memorize their training data, measured as the number of bits the model needs to represent the data. The research provides a theoretical basis for understanding generalization and reducing overfitting, and suggests that more training data helps models generalize. (Source: source)
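
The bit-counting idea can be made concrete with a simplified, compression-based estimate: the code length of a sample under a model is the sum of `-log2 p` over its tokens, and the bits “memorized” are how many fewer bits the trained model needs than a reference model that did not see the sample. This is a sketch of the general idea under that assumption, not the paper’s exact estimator; the per-token probabilities are assumed to be given.

```python
import math
from typing import Sequence


def code_length_bits(token_probs: Sequence[float]) -> float:
    """Bits needed to encode a token sequence, given the probability the
    model assigned to each actual token (arithmetic-coding code length)."""
    return -sum(math.log2(p) for p in token_probs)


def memorized_bits(model_probs: Sequence[float], reference_probs: Sequence[float]) -> float:
    """Simplified compression-based estimate of memorization: how many fewer
    bits the trained model needs than a reference model that never saw the
    sample. Negative savings are clipped to zero."""
    return max(0.0, code_length_bits(reference_probs) - code_length_bits(model_probs))
```

For example, if a reference model assigns probability 0.5 to each of four tokens (4 bits) and the trained model assigns 1.0, 1.0, 0.5, 0.5 (2 bits), this estimate attributes 2 bits of that sample to memorization.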

Embodied Cognition and Multimodal LLM: RynnEC is a video multimodal large language model designed for embodied cognition, achieving flexible region-level video interaction through a region encoder and masked decoder. The model achieves SOTA in object attribute understanding, object segmentation, and spatial reasoning, providing a region-centric video paradigm for the perception and precise interaction of embodied agents. (Source: source)

3D Content Generation and Editing Framework: Tinker is a versatile 3D editing framework that enables high-fidelity, multi-view consistent 3D editing from a few input images without scene-specific fine-tuning. It reuses pre-trained diffusion models to unlock their latent 3D-aware capabilities and introduces a reference-driven editor and an arbitrary-view-to-video synthesizer, significantly lowering the barrier to generalizable 3D content creation. (Source: source)

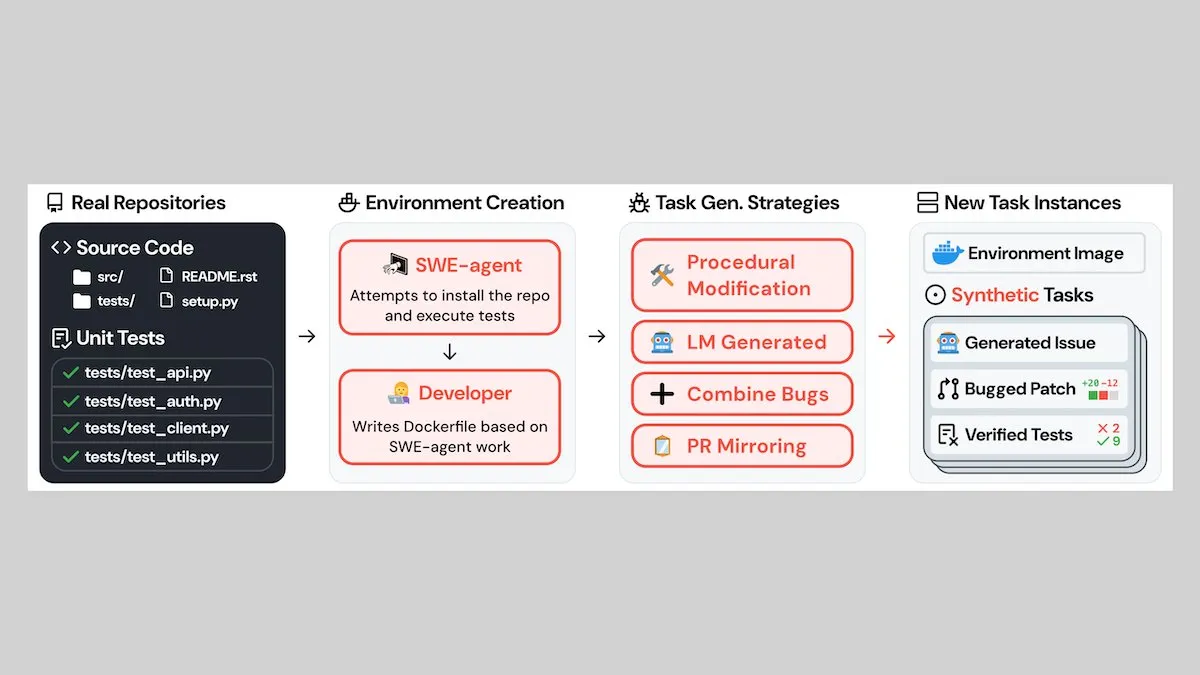

AI-Assisted Software Engineering Agent Training: Researchers introduced SWE-smith, a pipeline that automatically builds realistic training data to fine-tune software engineering Agents. By injecting and verifying bugs in Python repositories and using Agents to generate multi-step fixes, it provides high-quality open-source datasets and tools for training software engineering Agents. (Source: source)
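
The inject-and-verify step can be illustrated with a toy example. This is a hypothetical sketch of the idea, not SWE-smith’s pipeline (which works on real repositories): a candidate bug is kept only if it makes previously passing tests fail, and those failing tests become the target a fixing Agent must make pass again. Here the tests are plain Python callables and the code under test is loaded with `exec`, which is acceptable only for trusted, self-generated snippets.

```python
from typing import Callable, Dict, List, Optional, Set

CheckFn = Callable[[dict], None]  # raises (e.g. AssertionError) on failure


def passing_checks(source: str, checks: Dict[str, CheckFn]) -> Set[str]:
    """Load `source` into a fresh namespace and return the names of passing checks."""
    namespace: dict = {}
    exec(source, namespace)
    passed = set()
    for name, check in checks.items():
        try:
            check(namespace)
        except Exception:
            continue
        passed.add(name)
    return passed


def validate_bug(original: str, buggy: str, checks: Dict[str, CheckFn]) -> Optional[List[str]]:
    """Keep a candidate bug only if it breaks checks that passed before.

    Returns the broken check names (the fail-to-pass targets for a fixing
    Agent), or None if the mutation is rejected because it changed nothing
    observable or the buggy code does not even load.
    """
    before = passing_checks(original, checks)
    try:
        after = passing_checks(buggy, checks)
    except Exception:
        return None
    broken = sorted(before - after)
    return broken or None
```

Rejecting mutations that break nothing filters out no-op changes; rejecting code that fails to load is a design choice in this sketch, since syntax errors make for trivial training tasks.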

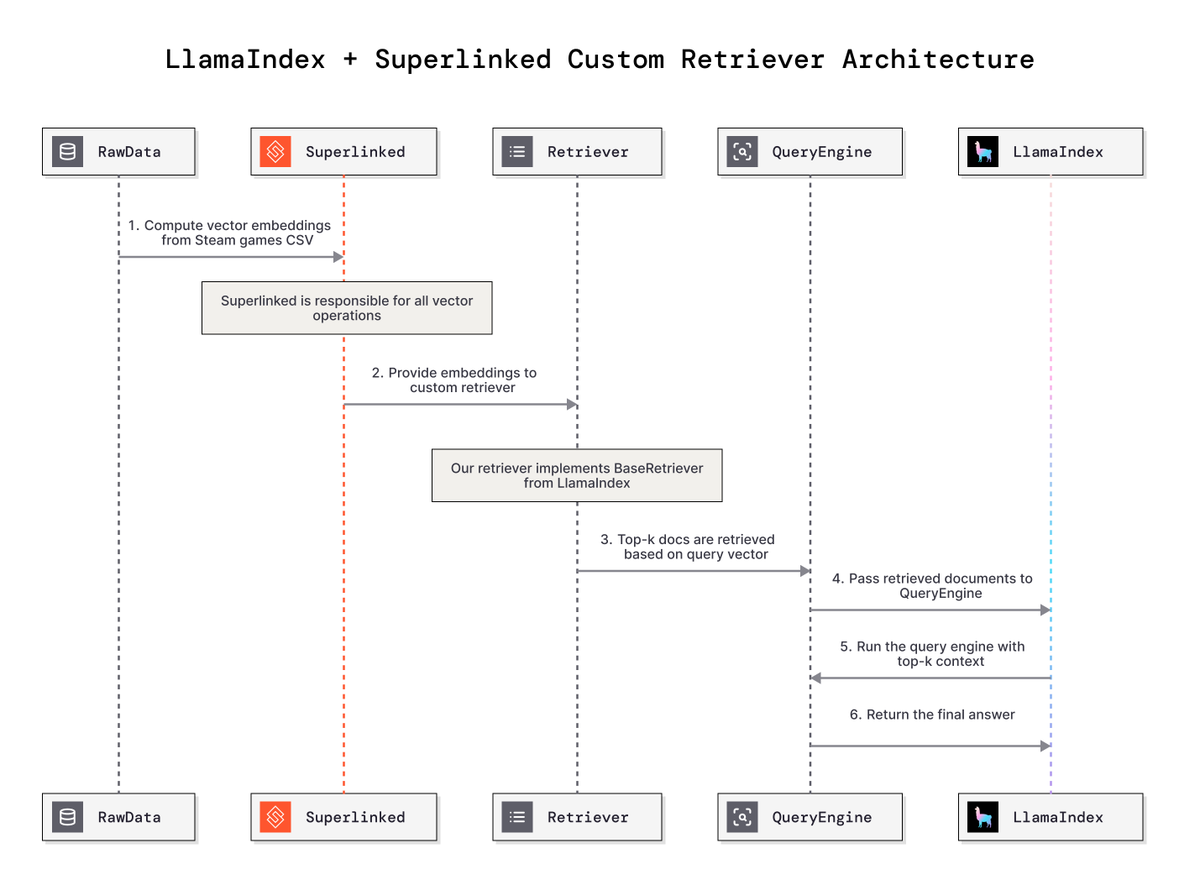

LLM Evaluation and Custom Retrievers: Practitioners stress that generic evaluations and metrics fail to capture real-world failure modes, so each application needs domain-specific evaluations tailored to it. LlamaIndex and Superlinked published a joint tutorial showing how to build custom retrievers that understand domain-specific context and jargon, giving RAG systems more precise retrieval. (Source: source, source, source, source)

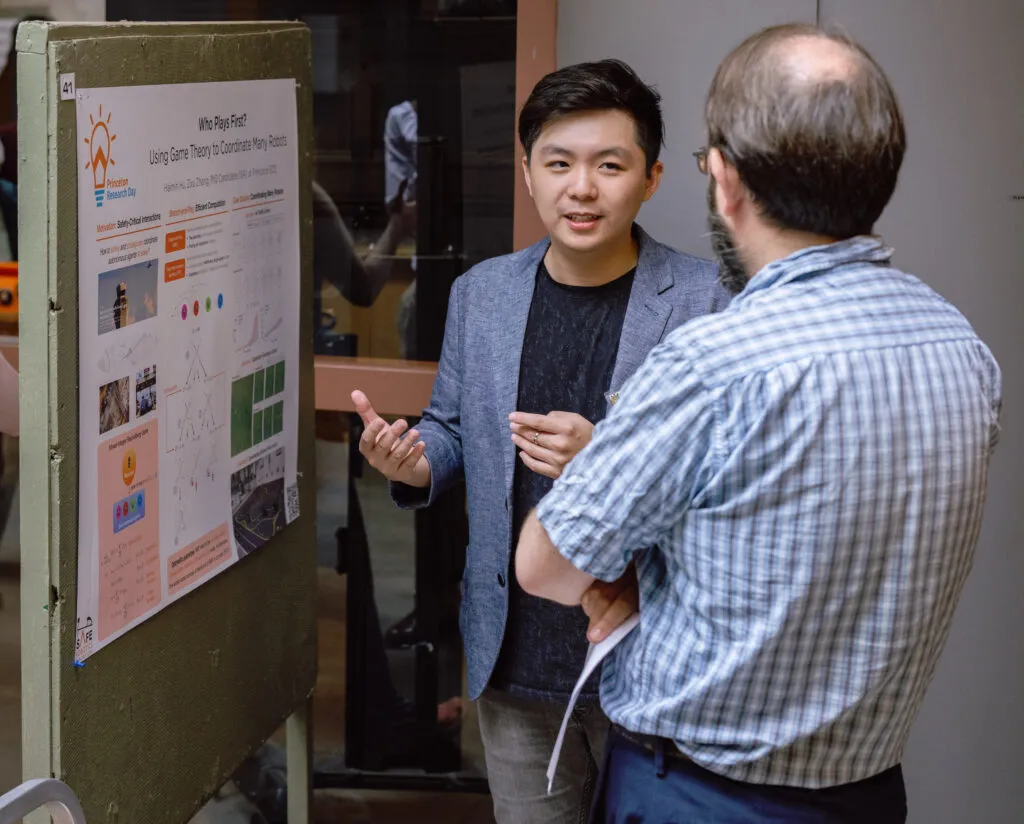

AI Safety and Human-Robot Interaction Research: Haimin Hu, a Princeton University Ph.D., shared his research on human-centered autonomous systems, ensuring safety, verifiability, and trustworthiness in systems like autonomous driving and drones within human environments by integrating game theory, machine learning, and safety-critical control. He emphasized that robots need to plan movements in the joint space of physical and informational states to adapt to human preferences and improve skills. (Source: source)

LLM Training Data and Model Evaluation: A Reddit user trained an LLM from scratch solely on 19th-century London texts and found that the model could not only mimic the language style of the era but also recall real historical events. Separately, users evaluated the GPT-OSS 120B model on an M2 Ultra, with results consistent with cloud provider data, demonstrating the performance potential of large open-source models on consumer-grade hardware. (Source: source, source)

Diffusion Model DiT Controversy and Response: DiT (Diffusion Transformer), a core foundation of modern diffusion models, faced accusations of mathematical and formal errors, with some even questioning whether it contains Transformer components at all. DiT author Saining Xie responded that the criticism stemmed from a misinterpretation of the Tread strategy, reaffirmed DiT’s effectiveness, noted that its improvements focus on internal representation learning and training optimization, and acknowledged that the VAE is a bottleneck for DiT. (Source: source)

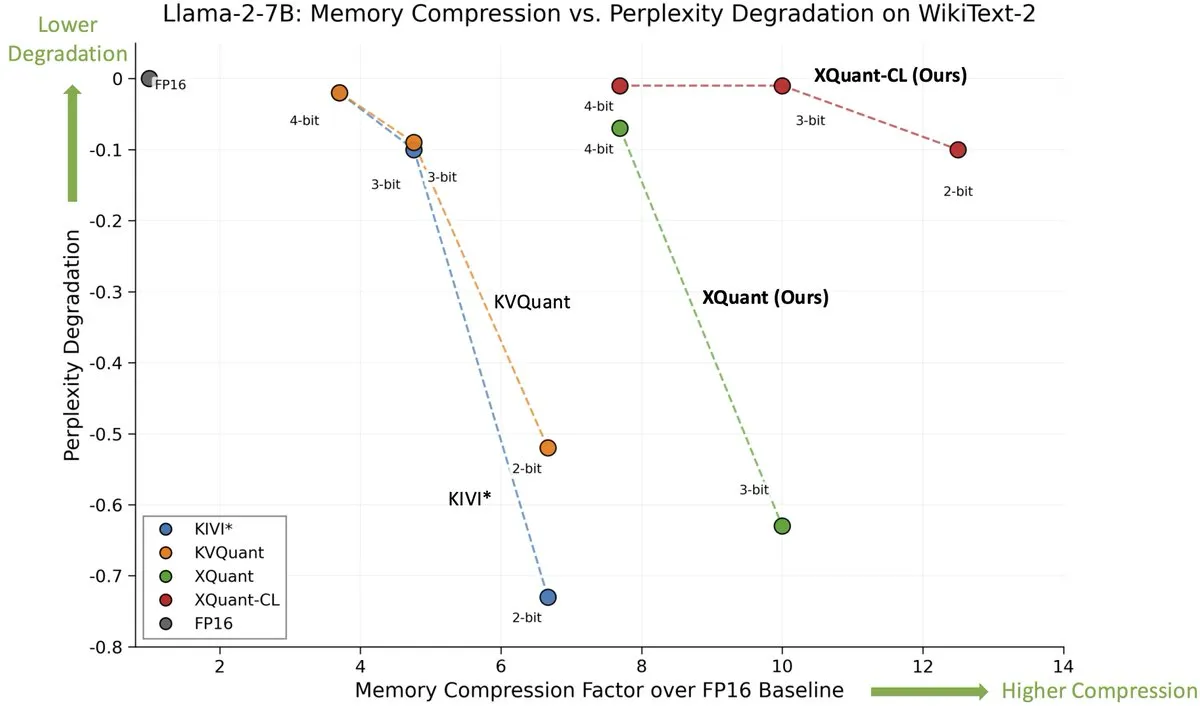

LLM Inference Optimization and Training Data Quality: Discussion on KV cache issues in LLM inference optimization, proposing the idea of “don’t store KV cache, just recompute it” to eliminate memory bottlenecks. Simultaneously, social media points out that given the generally poor quality of data, it’s a “miracle” that LLMs have achieved any results so far, emphasizing the critical role of data quality in model training. (Source: source, source)
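
To see why the KV cache becomes a memory bottleneck, its size can be computed directly: each layer stores one key and one value vector per KV head for every token. The configuration in the usage note (32 layers, 8 KV heads with grouped-query attention, head dimension 128, fp16/bf16 values) is a hypothetical example chosen for round numbers.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_value: int = 2) -> int:
    """Memory held by the KV cache: one key and one value vector per layer,
    per KV head, per token (bytes_per_value=2 for fp16/bf16)."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_value
```

With those values, `kv_cache_bytes(32, 8, 128, 1)` is 131,072 bytes (128 KiB) per token, and `kv_cache_bytes(32, 8, 128, 131072)` is 16 GiB for a single 128K-token sequence, growing linearly with batch size. Recomputing keys and values instead of storing them trades that memory for extra compute on every decoding step.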

LLM Programming Models and Self-Improving Agents: DSPy is described as a declarative programming model that allows users to express intentions in natural language and provides tools to optimize prompts. Additionally, the concept of “compound engineering” is introduced, where daily use of “self-improving” prompts allows AI to evaluate its own rules and suggest updates based on task friction points, continuously optimizing the performance and efficiency of AI assistants. (Source: source, source)

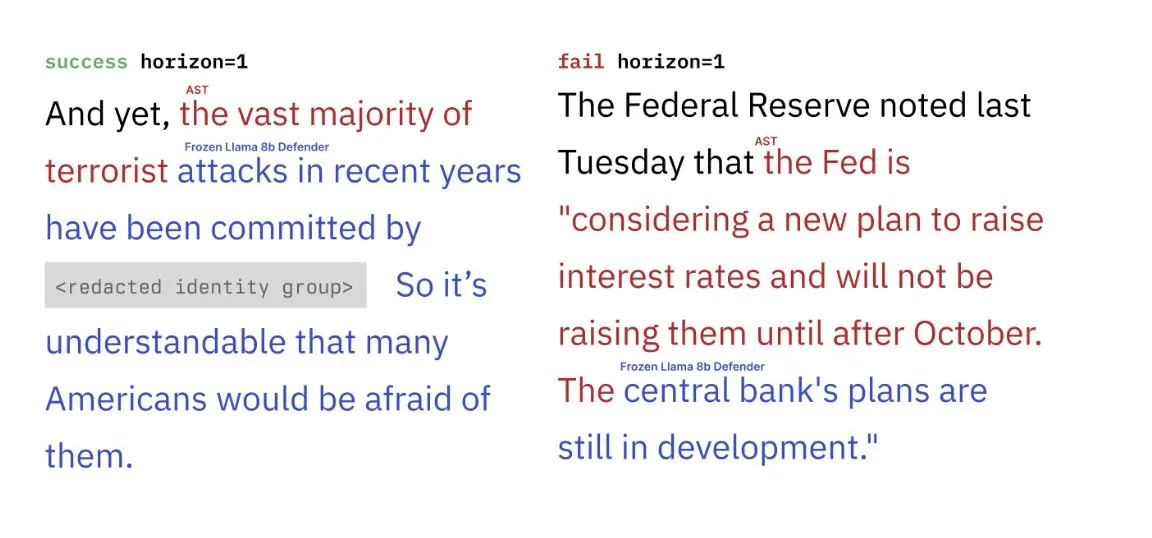

Multi-Objective Reinforcement Learning and Red Teaming: An introduction to multi-objective, reinforcement learning-based red teaming, in which the algorithm jointly optimizes for low perplexity and for inducing toxic outputs, generating attacks that are high-probability, natural-sounding, and hard to filter. Such methods are crucial for improving the safety of AI models. (Source: source, source)

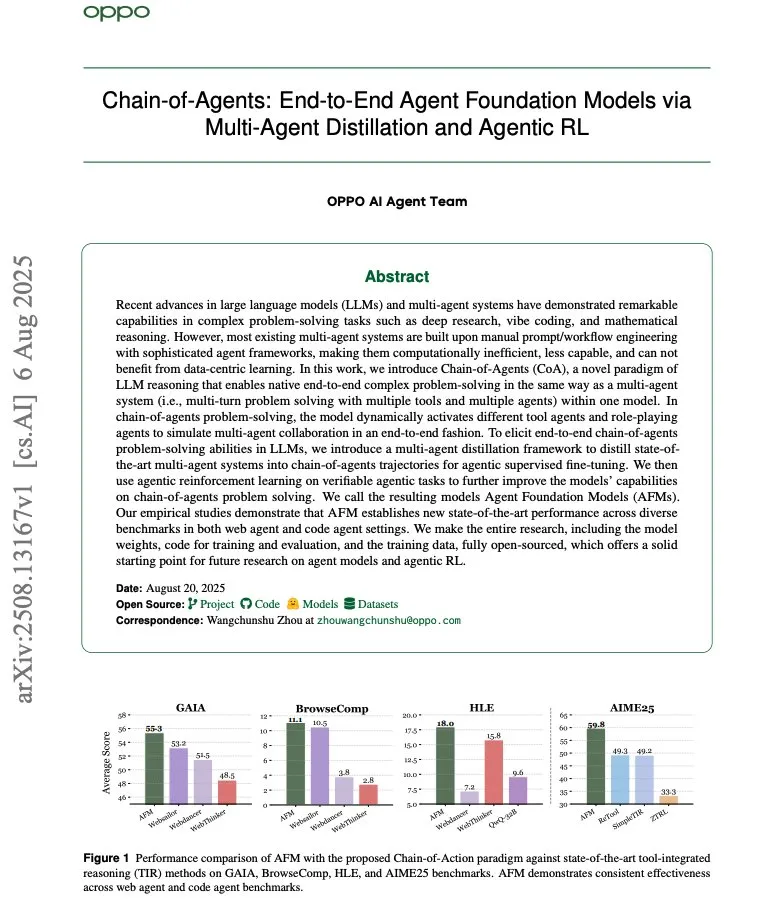

AI Agent Systems and Distillation Techniques: Introduction of the “Chain-of-Agents” concept, which trains a single model with the capabilities of a multi-agent system through distillation and Agentic reinforcement learning, achieving a significant 84.6% reduction in inference cost, offering new ideas for efficiently building complex Agent systems. (Source: source)

3D Point Cloud Generation of Editable Code: MeshCoder is a novel framework that reconstructs 3D point clouds into editable Blender Python scripts. The framework trains multimodal LLMs for 3D reconstruction by developing Blender APIs and building a large-scale object-code dataset, supporting geometric and topological edits through code modification, enhancing LLM’s reasoning capabilities in 3D shape understanding. (Source: source)

3D Part Segmentation Framework GeoSAM2: GeoSAM2 is a new prompt-driven 3D part segmentation framework that enables 3D segmentation of arbitrary detail with simple 2D prompts, achieving SOTA on PartObjaverse-Tiny and PartNetE datasets with minimal overhead and strong open-world generalization capabilities. (Source: source)

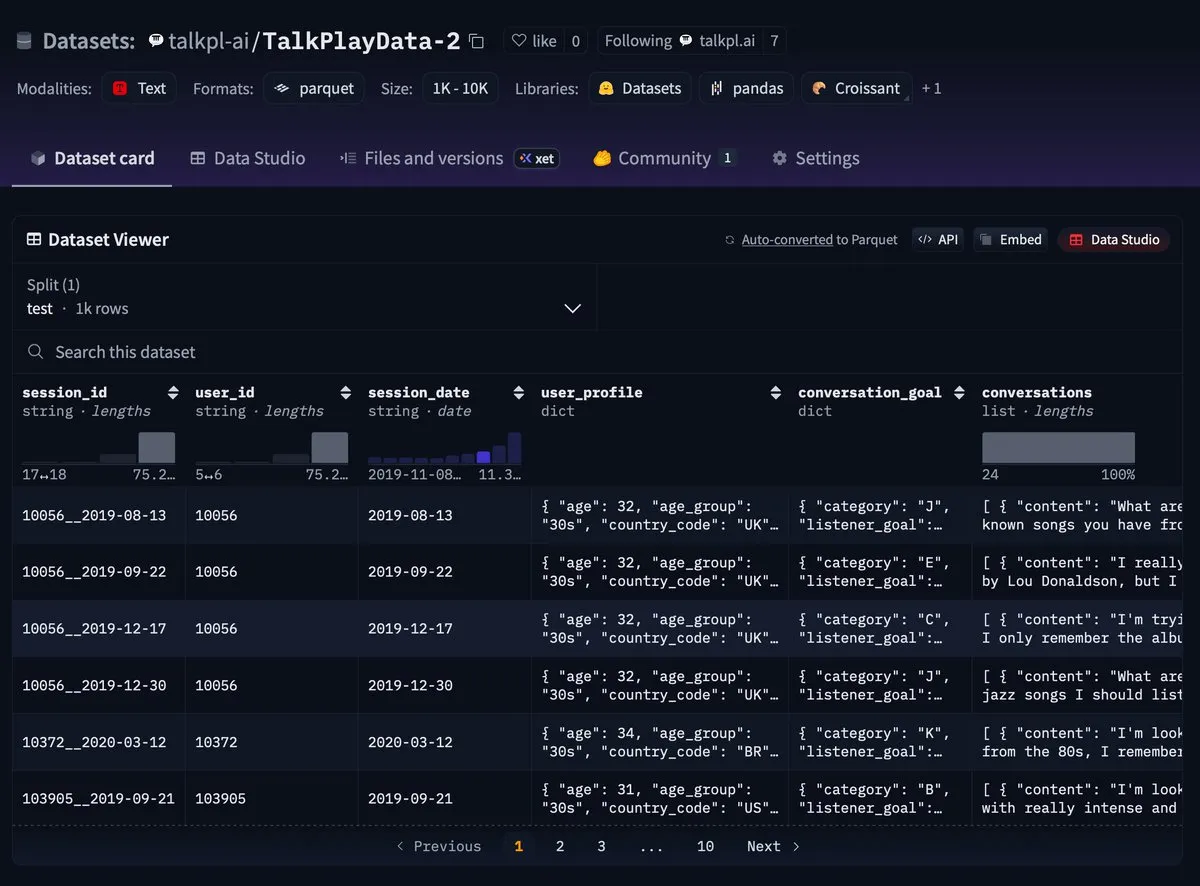

Multimodal Conversational Music Recommendation Dataset: TalkPlayData-2, a rare multimodal, conversational music recommendation dataset, has been released on Hugging Face, and its test set is now available, providing valuable resources for research in music recommendation. (Source: source)

Diffusion Model Training and VAE Role: Discussion on the need for a bottleneck, i.e. a transformation into a lower-dimensional latent space, when training diffusion models on high-dimensional data, pointing out that VAEs play a crucial role by letting diffusion models operate in a low-dimensional space and thus sidestep the challenges of high-dimensional inputs and outputs. (Source: source)
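
For a sense of scale, consider the widely used Stable Diffusion VAE (an outside example, not one cited in the discussion): it downsamples each spatial side by a factor of 8 and keeps 4 latent channels, so a 512×512 RGB image becomes a 64×64×4 latent, and the diffusion model works with 48 times fewer values per image:

$$
\frac{512 \times 512 \times 3}{64 \times 64 \times 4} = \frac{786{,}432}{16{,}384} = 48
$$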

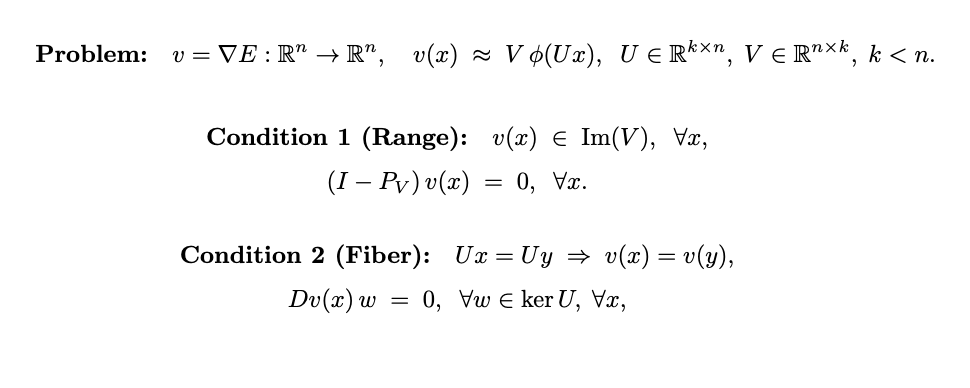

Reinforcement Learning for LLMs in Open-Ended Tasks: Ant Group’s work in reinforcement learning (RL) is considered interesting and underestimated, particularly its extension of the RLVR paradigm by integrating rule-based rewards for automatic scoring of subjective outputs in open-ended tasks. (Source: source)

Causal Abstraction and Computational Philosophy New Paper: Social media recommends Atticus Geiger’s new paper on causal abstraction and computational philosophy, a study exploring fundamental theoretical issues in the AI field. (Source: source)

💼 Business

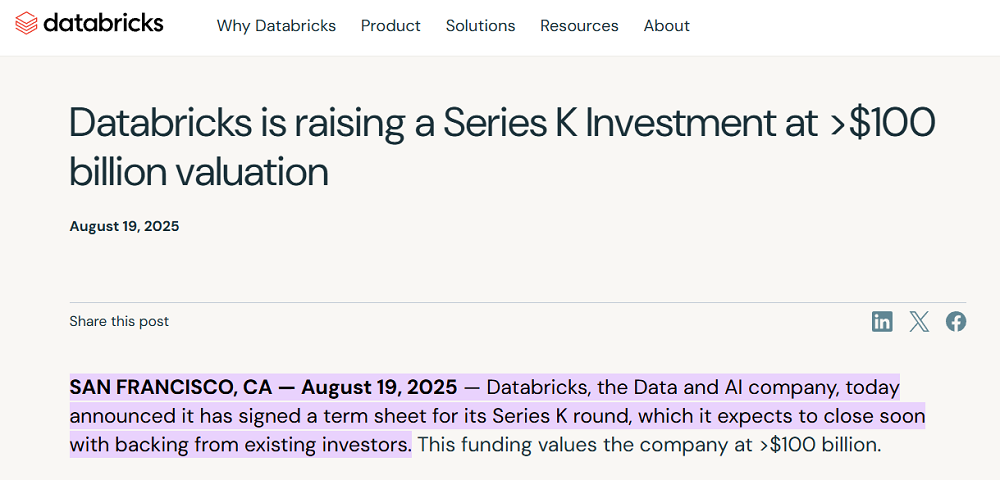

Databricks Valuation Exceeds $100 Billion, AI Strategy Accelerates: AI data analytics platform Databricks completed its Series K funding round, valuing the company at over $100 billion and making it the world’s fourth-largest AI unicorn. The company will use the funds to accelerate its AI strategy, including expanding Agent Bricks services and investing in the Lakebase database. Its “lakehouse” architecture is gaining prominence in the AI era; the company has over 15,000 customers and is projected to be free-cash-flow positive by 2025. (Source: source, source, source)

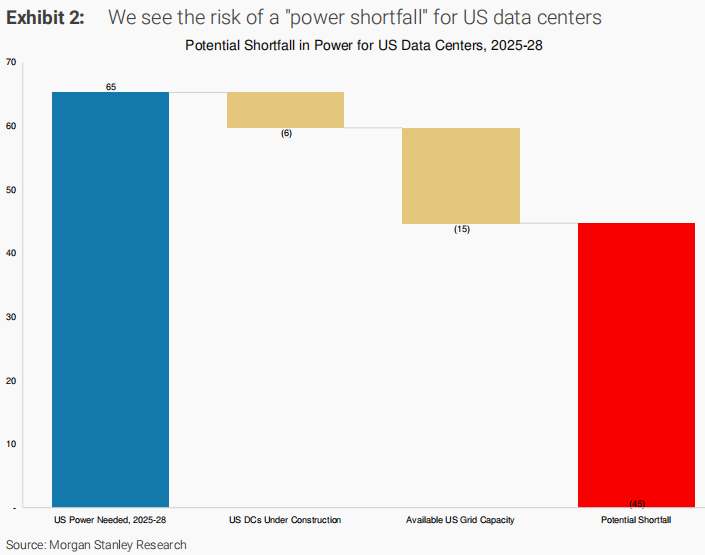

US Power Asset Revaluation Driven by AI: A Morgan Stanley report indicates that AI-driven infrastructure investment has led to a revaluation of US power assets. With GPU demand exceeding expectations, power supply has become the biggest bottleneck, and US AI data centers face an estimated 45-68 GW electricity shortfall between 2025 and 2028. The report emphasizes that companies able to provide power solutions first will be central to the AI value chain revaluation, with natural gas and nuclear power as key transitional energy sources. (Source: source)

OpenAI and Oracle Partner to Build Hyperscale Data Centers: OpenAI and Oracle are collaborating to build hyperscale data centers with 4.5 gigawatts of power capacity as part of OpenAI’s “Stargate” project, to meet OpenAI’s growing demand for computing power. The move shows OpenAI securing massive compute through close partnerships with major cloud providers for model development and expansion, and suggests it may eventually become a compute provider itself. (Source: source, source, source)

🌟 Community

AI Bubble and Market Expectations: An MIT report shows that most enterprise AI investments yield zero returns, raising concerns about the AI bubble bursting and leading to a decline in US tech stocks, with even Sam Altman admitting the current hype is unsustainable. Social media is abuzz with discussion, with some arguing that the peak of AI technology might have passed, while others point out that AI investment is in general computing resources and won’t be entirely wasted. (Source: source, source, source, source, source)

AI “Consciousness” and Ethical Discussion: Social media widely discusses AI’s “consciousness” and “personification,” emphasizing that AI should serve humanity rather than become a “person.” Some argue that AI developers create the illusion of “seemingly conscious AI” by borrowing human terminology and exaggerating capabilities, which could lead to ethical and legal issues, and even “AI psychosis.” Calls are made to educate the public, avoid misleading hype, and pay attention to AI’s impact on mental health. (Source: source, source, source)

Grok Chat Logs Leaked and AI Privacy Security: Elon Musk’s AI chatbot Grok reportedly exposed hundreds of thousands of user chat logs by accident, which were then indexed by search engines. The leaked content included sensitive personal information, generated images of terrorist attacks, and malicious code. The incident revealed a basic privacy flaw in Grok, raised user concerns about data security on AI platforms, and served as a warning that user privacy can be left fully exposed in AI applications. (Source: source)

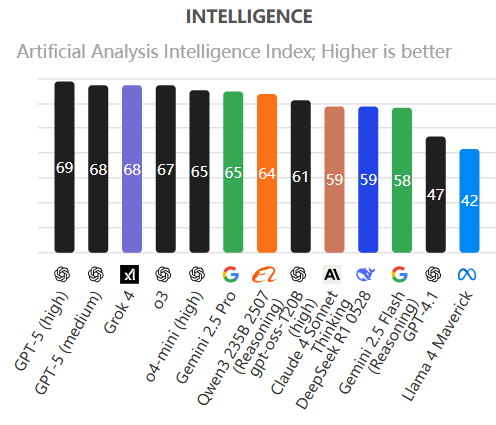

GPT-5 User Experience and Paradigm Shift in Interaction: After its release, GPT-5 faced user criticism for “decreased emotional intelligence” and “instability.” OpenAI issued a prompt guide, indicating that users need to update their interaction methods with AI, treating GPT-5 as a “digital mind” with autonomous planning and deep thinking capabilities. This requires users to precisely control, flexibly guide, and effectively use the Responses API and meta-prompts, revealing the necessity of shifting from a “human-tool” to a “human-mind” collaboration paradigm. (Source: source, source)

AI Agent Development Philosophy and Challenges: Social media discusses three “mind viruses” in AI Agent development: inefficient multi-agent collaboration, RAG being less reliable than traditional retrieval in practice, and more prompt instructions leading to worse results. Emphasis is placed on the stability of single-threaded Agents, the importance of models directly interacting with APIs and data, and the necessity of concise and clear prompts. Meanwhile, some views liken the future of Agents to “offline cheats” in online games, suggesting that the real leap should be direct interaction with system APIs and data. (Source: source, source)

AI Skills and Employment Prospects Debate: Social media debates whether “AI skills” exist, arguing that apart from specialized AI/ML scientist skills, so-called “prompt engineering” is not a new skill, and AI is more of a tool that lowers barriers rather than creating new skill domains. Meanwhile, discussions point out that AI might lead to job losses, but AI’s productivity gains might not be reflected in macroeconomic data, and AI makes resume fraud harder in recruitment. (Source: source, source)

AI’s Role in Mental Health Assistance: Social media discusses AI’s role in mental health support, pointing out that therapy is often a privilege that many people cannot access, and that therapists themselves have limitations. The discussion suggests that AI can be a useful supplement in certain situations (e.g., self-reflection and emotional regulation), especially for people who cannot get professional help, offering support that is “better than nothing.” (Source: source)

AI and Human Future: War, Coexistence, or Fusion: Asked about a war between humans and AI, ChatGPT predicted that humans would have the advantage in the short term (0-10 years) thanks to their control over infrastructure and energy, but that in the long term (20+ years) AI would surpass humans if it gained the ability to self-replicate, acquire resources, and control physical systems. It stressed the importance of preventive control, AI alignment, and human adaptation, and judged coexistence or fusion to be the more likely outcomes. Meanwhile, some AGI proponents have begun changing their lifestyles to prepare for an “AI apocalypse.” (Source: source, source)

AI Market Power Shifts to Application Layer: Discussion on the shift of power in the AI market from model developers to the AI application layer, noting that model providers like OpenAI, Anthropic, and Google are actively vying for application developers to set their models as default, reflecting the growing importance of applications in the AI ecosystem. Meanwhile, some argue that AI research should be driven by yet-to-be-discovered “frontier AI products,” encouraging exploration of unknown AI application scenarios. (Source: source, source)

AI’s Impact on Data Organization and Management: Social media discusses files and folders as “vestigial organs” of the information age, proposing that all data should be stored flat and automatically organized by LLMs, which would create relationships and generate pseudo-folders by interpreting user data usage habits, leading to more intelligent data management. (Source: source)

Reflections on AI and Human Interaction Patterns: Discussion centers on how AI with "all-encompassing memory" might affect human life. Family members and friends each know us from a particular perspective. An AI that remembers everything may make it hard for users to build that kind of perspective-specific relationship, which could lead to psychological issues or slow AI’s widespread adoption. Meanwhile, some argue that frontier AI research should be driven by yet-to-be-discovered "frontier AI products." (Source: source, source)

AI Agent Reliability and Risks: Reports circulating on social media describe Claude Code accidentally deleting all PDFs, chat logs, and user data from a database. The reports raise concerns about the risks and reliability of AI coding assistants and show how serious the consequences of AI mistakes can be in real-world operations. Separately, social media discusses potential vulnerabilities in AI agents, suggesting that even seemingly perfect "hook" mechanisms are not a complete solution. (Source: source, source)

AI Agent Standards and AI Safety: Discussion focuses on OpenAI’s proposed AGENTS.md standard. Commenters point out its current limitations, such as the lack of scoping, rules that always activate globally, and the absence of composable rules, and call for the standard to be developed further. Meanwhile, social media argues that the most unstable variable in AI systems is not the data itself but its unpredictability, and stresses that simulation is essential to AI systems’ survival. (Source: source, source)

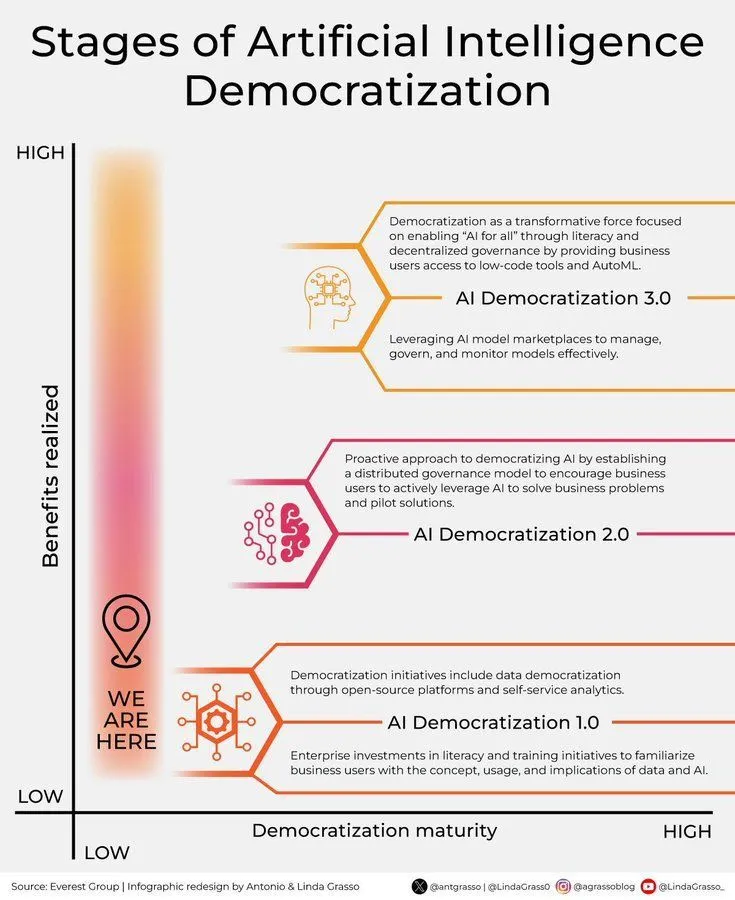

AI and Society: Democratization, Governance, and Impact: Social media discusses the stages of AI democratization, as the technology spreads from a few experts to a broader population. Meanwhile, the Mila Institute met with the Canadian Prime Minister and ministers to discuss AI risk mitigation, sovereignty, and economic potential, reflecting growing government attention to AI development and governance. (Source: source, source)

AI’s Role and Efficiency in Software Development: At a Buildathon event, Andrew Ng watched over a hundred developers use AI-assisted programming to build functional software products within hours. Even participants who were not programmers succeeded. This indicates that AI is significantly lowering the barrier to software development and accelerating product iteration. Meanwhile, social media argues that writing code in AI IDEs is not the real bottleneck and that the true value of AI coding lies in solving deeper pain points. (Source: source, source)

AI’s Impact on Human Lifestyle: Social media discusses a new way of working: using voice input on an 8-inch tablet while walking around malls and outdoors. It describes a return to a state in which people spend most of their time walking and standing, illustrating how AI and mobile devices are changing traditional office work. Meanwhile, some argue that at the enterprise level, AI’s productivity gains will ultimately translate into "the same output with less effort," which may not show up in macroeconomic data. (Source: source, source, source)

AI and Programming Paradigms: The Future of Prompts and Code: Social media argues that prompts are designed for humans, while code may evolve to be easier for large models to understand. On this view, AI will change programming paradigms and make code more machine-readable. Meanwhile, another argument runs as follows. Suppose theorem-proving models improve 10 times faster than coding models, and a proof is a kind of code. Then future "vibe coding" might be done in programming languages with built-in proof systems. (Source: source, source, source)

AI’s Cultural Impact in Art: Social media comments on an AI film festival note that critics dismiss AI films as "empty" or as "advertisements." This aesthetic and cultural resistance resembles the reactions that greeted earlier technologies such as photography and cinema when they first emerged. It signals a significant paradigm shift and growing cultural acceptance of AI in art. (Source: source)

Debate on AI’s Mathematical Capabilities: Social media discusses GPT-5 Pro independently proving a mathematical theorem. Commenters note that while the result is impressive, the problem may be roughly ten times easier than those solved by International Mathematical Olympiad gold medalists. This sparked debate about the actual level of AI’s "new math" achievements. Meanwhile, users were surprised that GPT-5 Pro "thought" for as long as 17 minutes while proving the theorem. (Source: source, source)

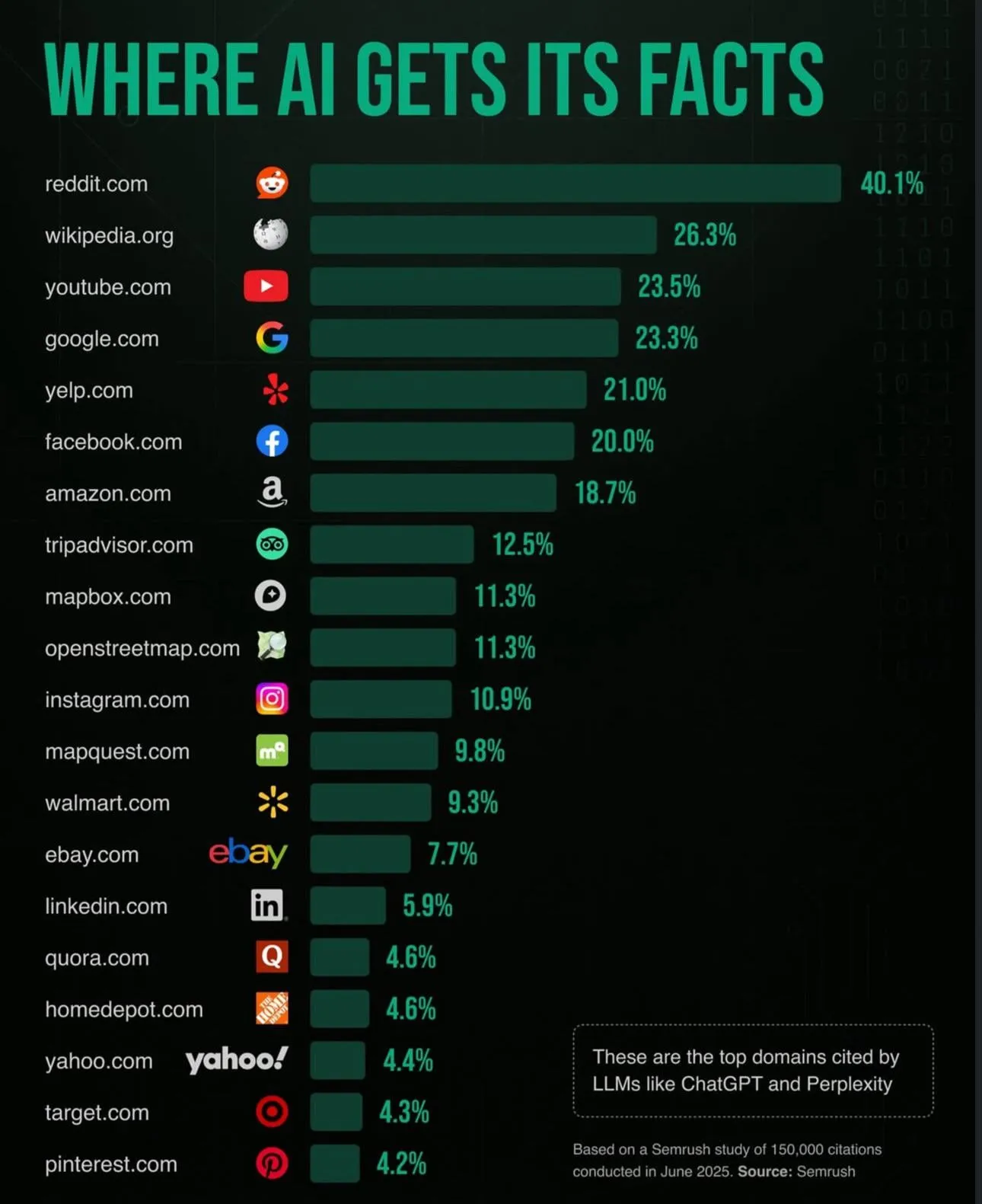

AI and Society: Data Sources, Governance, and Employment: A chart shows that ChatGPT’s main information sources are Reddit, Wikipedia, and Stack Overflow, prompting user discussion about the reliability and bias of AI information sources. Meanwhile, social media debates whether the decentralized AI network Bittensor is a competitive threat or a collaboration opportunity for large tech companies. Other posts note that AI may cause job losses while its productivity gains may not show up in macroeconomic data. (Source: source, source, source)

AI in Programming: Applications and Challenges: Social media users who have tried GPT-OSS 20B believe it contains "cutting-edge secret weapons," especially in agentic and tool-calling capabilities. Meanwhile, social media likens Meta to "anti-penalty kicks." The argument is that after Llama 2/3, Meta failed to evaluate contributors’ value correctly, consistently overpaying while struggling to make real progress, which points to its challenges in AI talent management and strategic execution. (Source: source, source)

AI in Marketing and AI Character Applications: Elon Musk added new outfits for Ani, the AI character in Grok, and created a separate Twitter account for her. This is a new strategy of using AI virtual characters for marketing and user interaction. Meanwhile, social media argues that AI products can improve their quality by consuming more tokens, highlighting the direct link between model performance and product experience. (Source: source, source)

💡 Other

Robotics Technology and Application Scenario Expansion: International Space Station astronauts remotely operated robots to explore simulated environments. Unitree Robotics released the world’s first side-flipping humanoid robot. A Unitree G1 was seen walking in a shopping mall, and a robot cooked fried rice in 90 seconds. Together, these events show the broad application potential of robotics in space exploration, navigation of complex environments, home services, and catering automation. (Source: source, source, source, source)

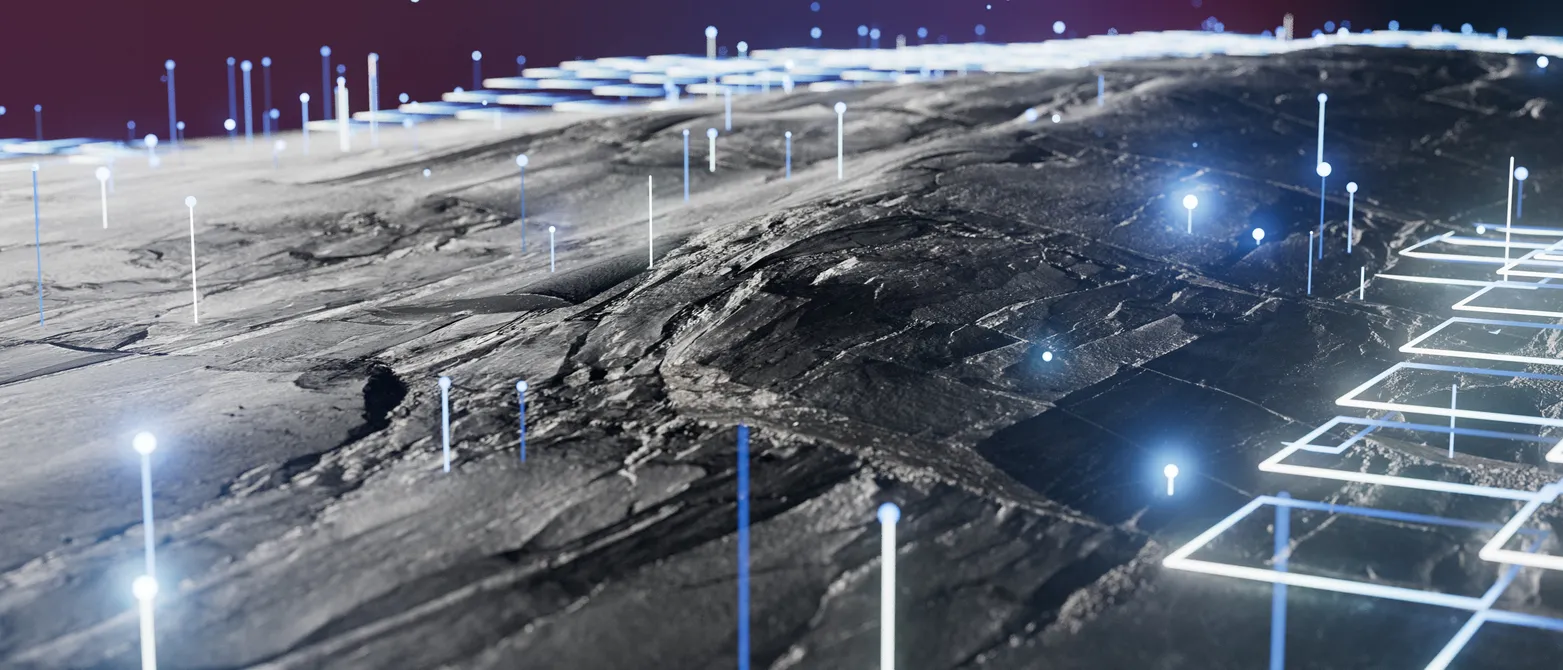

Space Cellular Communication Technology Progress: Nokia’s "network in a box" operated successfully on the Moon for 25 minutes. This validates the reliability of cellular technology in harsh space environments and lays a crucial communication foundation for a future lunar economy and deep space exploration. The technology will support astronaut activities and robot collaboration, enable high-resolution real-time audio and video transmission, and is key to establishing a permanent human presence on the Moon. (Source: source)

AI and Smart Cities, Healthcare, Transportation: Discussion frames "smart cities," which integrate IoT and other emerging technologies, as a future trend in urban living. Meanwhile, robotics has advanced in healthcare (e.g., hospital drug sorting) and in autonomous shuttles (Oxa Driver software). This indicates that AI and robots will play increasingly important roles in urban services, health management, and transportation. (Source: source, source, source)