Keywords: DeepSeek V3.1, GPT-5, Tencent Hunyuan 3D, Alibaba Qwen-Image, humanoid robot, AI Agent, Meta AI reorganization, DeepSeek V3.1 Base 128K context, GPT-5 dual-axis training, Tencent Hunyuan 3D Lite FP8 quantization, Qwen-Image text rendering, Zhiyuan Robotics collaboration with Fulin Precision

🎯 Trends

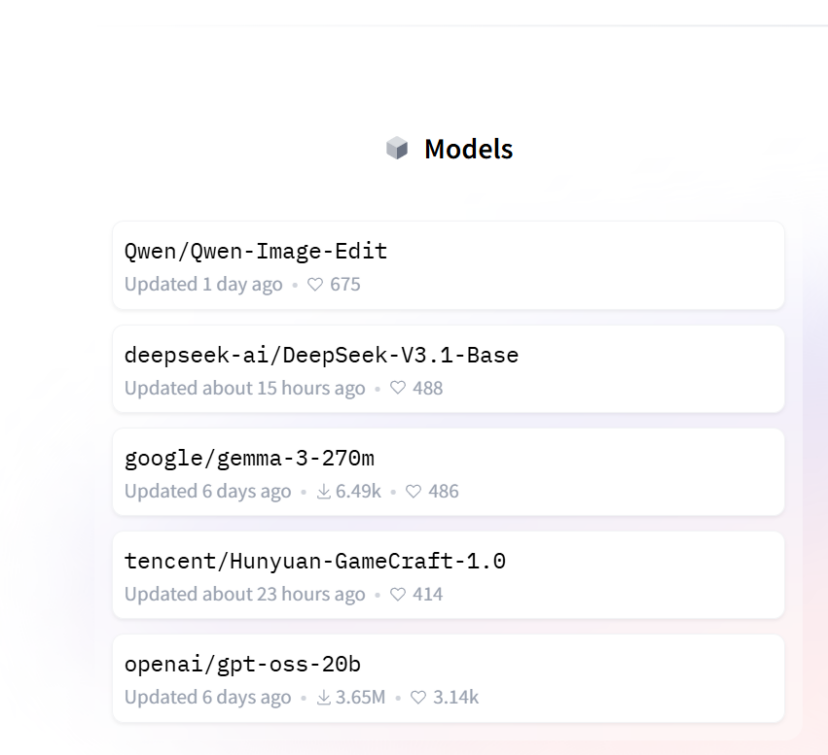

DeepSeek V3.1 Base Launches Quietly: DeepSeek has released its V3.1 model, with 685B parameters and a context length extended to 128K. It scored 71.6% on the Aider Polyglot coding benchmark, surpassing Claude Opus 4 while reportedly responding faster and costing only 1/68 as much. The model introduces new “search” and “think” tokens, hinting at a potential hybrid architecture. Despite the low-key release, V3.1 ranks high on Hugging Face’s trending list, reflecting strong anticipation for it among open-source models. (Source: 36氪, 36氪, 36氪, ClementDelangue)

OpenAI GPT-5 Capabilities and Strategy: OpenAI COO Brad Lightcap revealed that GPT-5’s core breakthrough is its ability to decide autonomously whether to perform deep reasoning, which significantly improves accuracy and response speed, especially in writing, programming, and healthcare. He emphasized that the Scaling Law is not dead and that OpenAI is accelerating model innovation through a “dual-axis” approach of pre-training and post-training. Although powerful, GPT-5 is not AGI, and its “excess capacity” leaves roughly a decade of room for product building. The product philosophy is to solve problems efficiently rather than to prolong user engagement, with a focus on healthcare and enterprise applications. (Source: 36氪, 36氪)

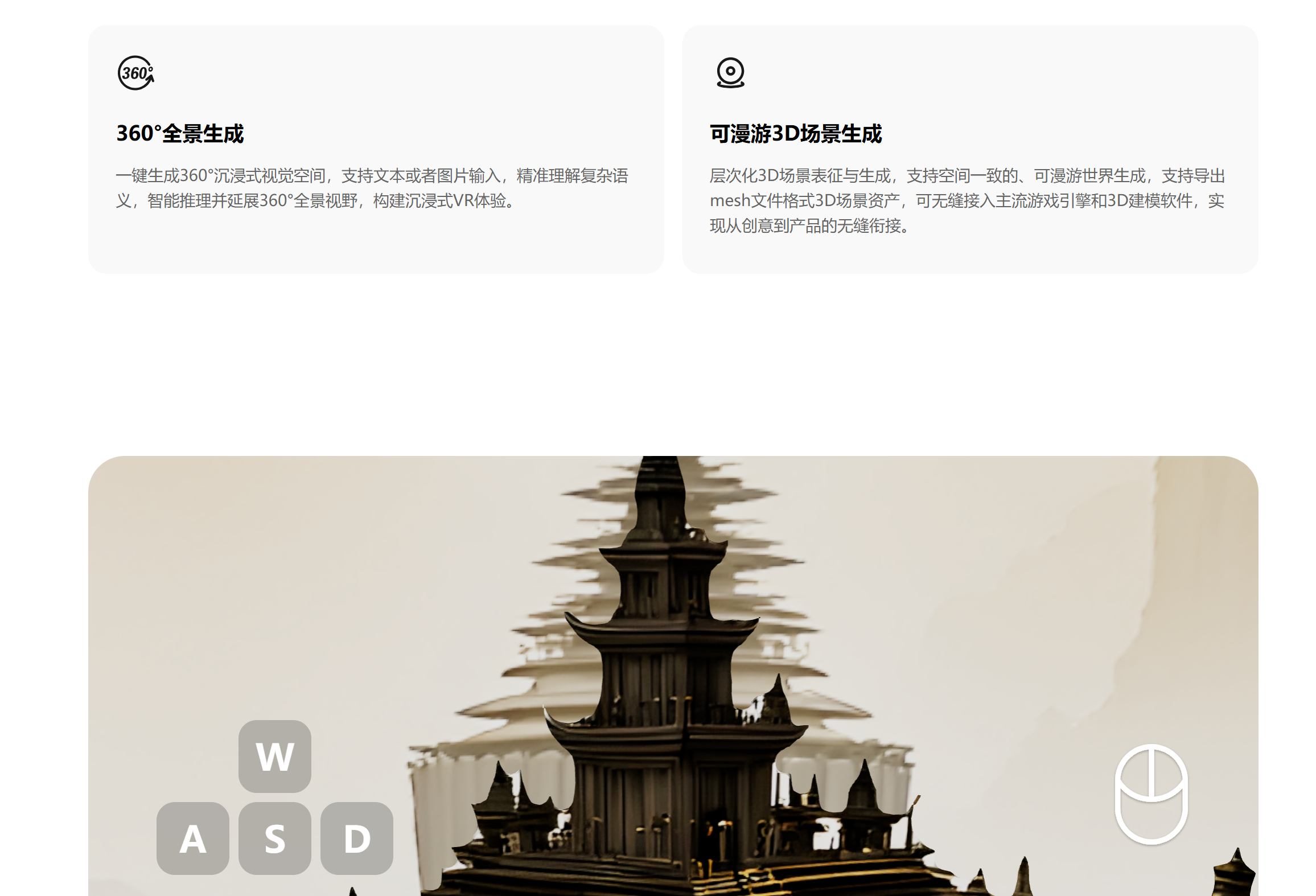

Tencent Hunyuan 3D Lite Version Released: The Tencent Hunyuan team has released the Lite version of its 3D world model, which uses dynamic FP8 quantization to cut VRAM requirements to under 17GB so that it runs smoothly on consumer-grade graphics cards. The model can generate complete, editable, and interactive 3D worlds from images or text, significantly improving scene development efficiency. The release aims to attract more developers and creators and to popularize 3D models, and it is expected to link up with the VR-device and 3D-printing ecosystems, among others. (Source: 36氪)

Alibaba Image Generation Model Qwen-Image Tops HuggingFace: Alibaba has released its foundational image generation model, Qwen-Image, which addresses complex text rendering and precise image editing challenges through systematic data engineering, progressive learning, and multi-task training. The model can accurately process multi-line Chinese and English text and maintain semantic and visual consistency during image editing. It adopts the Qwen2.5-VL and MMDiT architectures, preserving details through dual encoding, and achieves industry-leading performance in general image generation, text rendering, and instruction-based image editing tasks. (Source: 36氪, huggingface, Alibaba_Qwen, fabianstelzer)

Humanoid Robot Orders and Delivery Capability Insights: The humanoid robot industry is seeing a significant increase in orders in 2025, with market focus shifting to practical applications and delivery. Manufacturers like UBTECH, Unitree Robotics, and LimX Dynamics have secured large orders, with application scenarios spanning industry, guided tours, scientific research, education, and elder care. Zhiyuan Robotics’ collaboration with Fulin Precision on nearly a hundred wheeled robots and UBTECH’s successful bid for automotive equipment procurement indicate that industrial scenarios are leading the way in large-scale deployment. The industry faces challenges in supply chain capacity, technological maturity, and standardization, but shipments are predicted to grow rapidly in the coming years. (Source: 36氪)

Perplexity AI’s Chrome Acquisition Proposal and AI Browser Vision: Perplexity AI offered to acquire Google Chrome for $34.5 billion, saying it aimed to promote an open web and user security, though critics dismissed the bid as a publicity stunt. Perplexity CEO Aravind Srinivas stated that AI Agents, personalization, and new browsing modes will reshape the internet experience, with a long-term vision of an AI-native operating system that replaces traditional workflows with proactive AI. (Source: AravSrinivas, Reddit r/ArtificialInteligence)

Google DeepMind’s Genie 3 as a General Simulator: Google DeepMind’s Genie 3 is described as a general simulator rather than an AI Agent. This environment allows AI to discover behaviors through repeated trial and error, similar to AlphaGo’s learning method. In the robotics field, this is expected to enable AI to learn transferable skills, promoting broader applications. (Source: jparkerholder)

Multi-Node Serving for Large Models with vLLM: SkyPilot demonstrated how to use vLLM for multi-node serving of trillion-parameter models, supporting large models like Kimi K2 to run with full context length. By combining tensor parallelism and pipeline parallelism, SkyPilot simplifies multi-node setup and scales replicas, effectively addressing the complexity and scalability challenges of large model deployment. (Source: skypilot_org, vllm_project)
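
As a rough illustration of how the two parallelism modes combine, the sketch below computes the GPUs needed per replica and assembles a `vllm serve` command line. The `ParallelPlan` helper, the chosen sizes, and the model identifier are assumptions for illustration, not SkyPilot's actual configuration; only the `--tensor-parallel-size` and `--pipeline-parallel-size` flags come from vLLM itself.

```python
from dataclasses import dataclass


@dataclass
class ParallelPlan:
    """Hypothetical helper describing how one model replica is split across GPUs."""
    tensor_parallel: int    # GPUs that share each layer (typically within one node)
    pipeline_parallel: int  # sequential groups of layers (typically one per node)

    @property
    def gpus_per_replica(self) -> int:
        # Every pipeline stage needs a full tensor-parallel group.
        return self.tensor_parallel * self.pipeline_parallel


def vllm_serve_command(model: str, plan: ParallelPlan) -> list[str]:
    """Build the argument list for `vllm serve` under the given plan."""
    return [
        "vllm", "serve", model,
        "--tensor-parallel-size", str(plan.tensor_parallel),
        "--pipeline-parallel-size", str(plan.pipeline_parallel),
    ]


# Assumed layout: 2 nodes with 8 GPUs each.
plan = ParallelPlan(tensor_parallel=8, pipeline_parallel=2)
command = vllm_serve_command("moonshotai/Kimi-K2-Instruct", plan)
print(plan.gpus_per_replica)
print(" ".join(command))
```

Keeping tensor parallelism inside a node and pipeline parallelism across nodes is a common choice because tensor-parallel groups exchange data far more often than pipeline stages do.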

ChatGPT Go Launched in India: OpenAI has launched ChatGPT Go, a subscription tier, in India, offering higher message limits, more image generation, more file uploads, and longer memory for 399 rupees per month. The aim is to make ChatGPT more affordable in the Indian market, and OpenAI plans to expand the plan to other countries based on feedback. (Source: sama)

Claude Model Updates and Feature Enhancements: Anthropic’s Claude Opus 4.1 shows improved synthesis and summarization capabilities in research mode, reducing verbosity. Claude Sonnet 4 supports 1M context, enabling full codebase analysis and large document synthesis, while optimizing costs. Claude also introduced an “Opus 4.1 Plan, Sonnet 4 Execute” mode and a customizable “learning mode,” enhancing user experience and model efficiency. (Source: gallabytes, Reddit r/ArtificialInteligence)

🧰 Tools

Zhipu AI Releases World’s First Mobile General Agent AutoGLM: Zhipu AI has launched AutoGLM, the world’s first mobile general Agent, freely available to the public and supporting both Android and iOS. This Agent can execute tasks in the cloud without occupying local resources, enabling cross-application operations such as price comparison shopping, food delivery orders, and report generation. It is powered by GLM-4.5 and GLM-4.5V models, integrating reasoning, coding, and Agentic capabilities, and proposes the “3A principles” (All-time, Autonomous zero-interference, All-domain connectivity), aiming to bring Agent capabilities to the mass consumer market. (Source: 36氪)

Anycoder Integrates GLM 4.5 and Qwen Image Editing Features: The Anycoder platform now supports GLM 4.5 and Alibaba Qwen image editing features, providing image editing capabilities, especially suitable for “vibe coding” use cases. Qwen-Image-Edit, based on the 20B Qwen-Image model, supports precise bilingual text editing (Chinese and English) while preserving image style, and also supports semantic and appearance-level editing. (Source: Zai_org, _akhaliq, _akhaliq, Alibaba_Qwen)

OpenAI Codex CLI New Version Released: OpenAI has released a new Rust version of its Codex CLI tool, which uses the GPT-5 model and can be used with an existing ChatGPT Pro subscription. The new version addresses numerous issues with the old Node.js/TypeScript version, such as low performance, poor UI/UX, weak model capabilities, and reckless operations. The move to Rust significantly improves interaction speed and responsiveness, and combined with GPT-5’s strong coding and tool-calling capabilities, it makes the CLI a serious competitor to Claude Code. (Source: doodlestein)

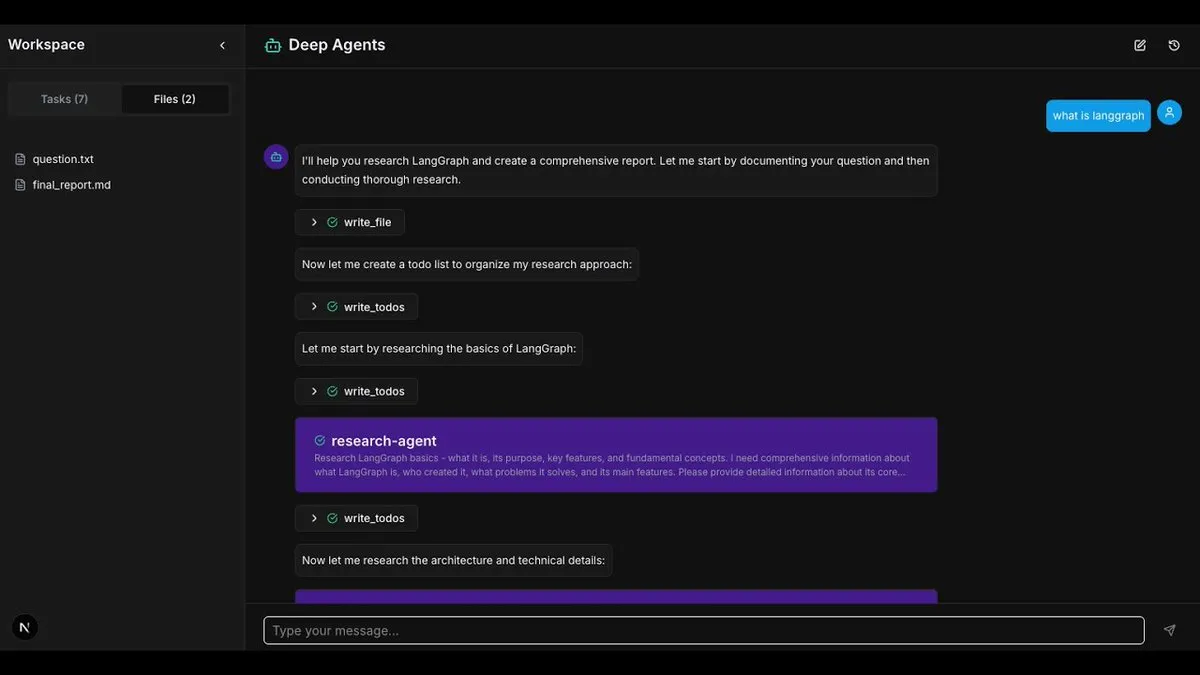

LangChain DeepAgents Framework and Applications: LangChain’s DeepAgents architecture is now available as Python and TypeScript packages, laying the foundation for building composable, useful AI Agents. The framework includes built-in planning, sub-Agents, and file system usage, enabling the construction of complex applications like “Deep Research” for in-depth research and information aggregation. (Source: LangChainAI, hwchase17, LangChainAI)

Jupyter Agent 2 Released: Jupyter Agent 2 has been released, powered by Qwen3-Coder, running on Cerebras, and executed by E2B. This Agent can load data, execute code, and plot results within Jupyter at extremely fast speeds, and supports file uploads. All video demonstrations are real-time, showcasing its powerful efficiency in data analysis and code execution. (Source: ben_burtenshaw)

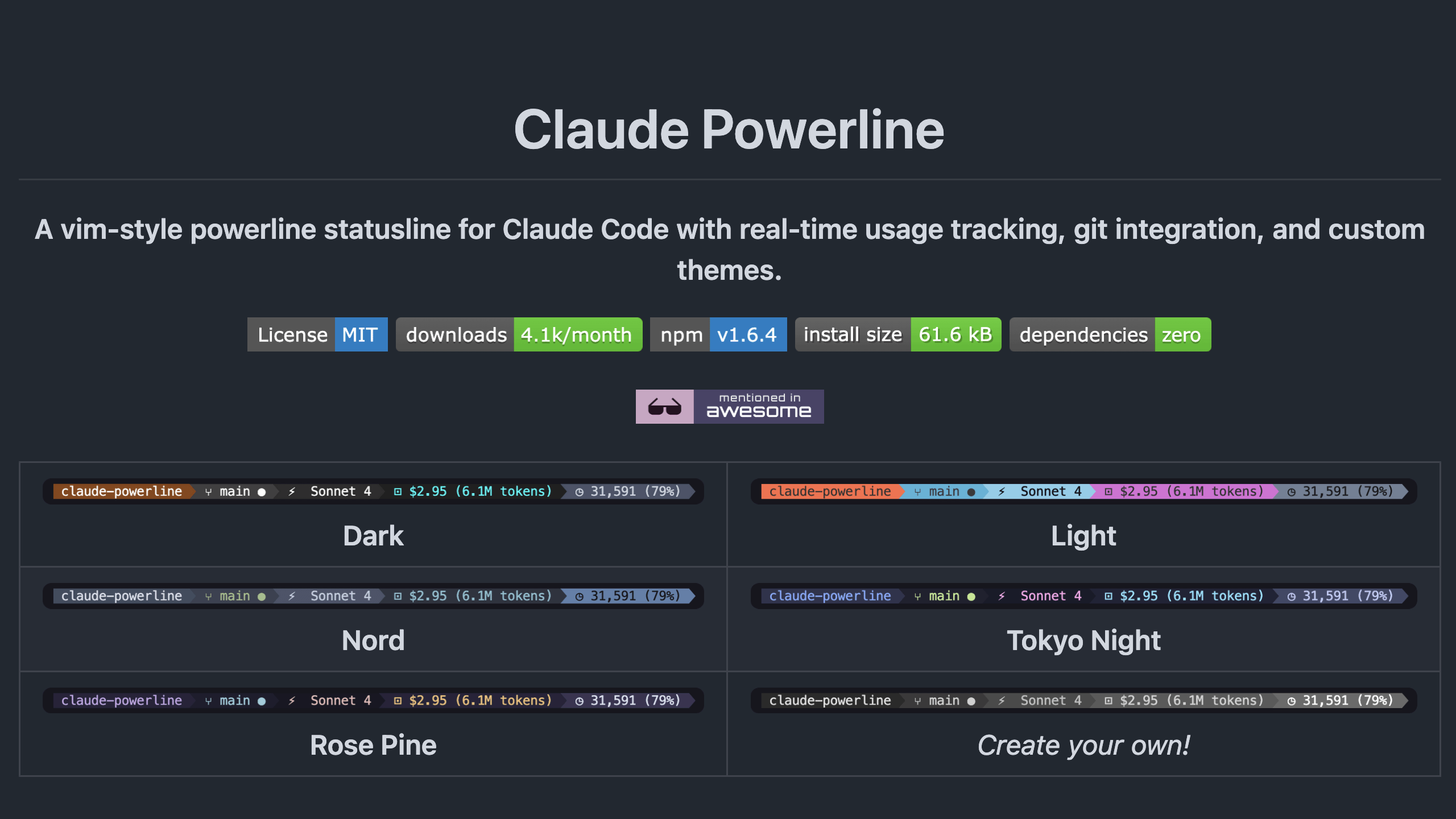

Claude-Powerline Status Bar Tool: Claude-Powerline is a lightweight, secure Claude Code status bar tool with zero dependencies. It offers Tmux integration, performance metrics (response time, session duration, message count), version information, context usage, and enhanced Git status display. The tool is installed via npx, ensuring automatic updates, and improves cross-platform compatibility and security. (Source: Reddit r/ClaudeAI)

Exploration of Combining Local LLM with Face Recognition: A developer attempted to combine a local LLM with an external face recognition tool to describe people in images and search for their faces online. Although current face search tools do not run locally, the combination demonstrates the potential of pairing AI recognition with reasoning. Commenters suggest that combining recognition and reasoning is the direction of AI development and envision fully local facial search and reasoning systems in the future. (Source: Reddit r/LocalLLaMA)

AI-Assisted Trading Bot Development: Developer Jordan A. Metzner developed a trading bot in Replit using Public API and ChatGPT in less than 6 hours. This case demonstrates AI’s potential in rapid prototyping and fintech, achieving efficient programming through “vibe coding.” (Source: amasad)

Cursor CLI Update: The Cursor CLI tool has been updated with new features including MCP (Model Context Protocol) support, Review Mode, a /compress command, @-files support, and other UX improvements. These features aim to make developers more efficient when using Cursor for code editing and AI-assisted programming. (Source: Reddit r/ArtificialInteligence)

📚 Learning

AI Evaluation (Evals) Courses and Methods: Hamel Husain has popularized AI evaluations (Evals) through his articles and successful evaluation courses. He shared how to build datasets to test AI’s ability to express uncertainty or refuse to answer, emphasizing the use of test suites and data analysis to improve AI reliability. (Source: HamelHusain, HamelHusain, TheZachMueller)
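
A minimal sketch of what such a test suite might look like is shown below. The dataset, the keyword-based abstention check, and the two stub models are all hypothetical and are not taken from the course material; a real eval would call an actual model and would likely use a more robust grader than string matching.

```python
from typing import Callable

# Hypothetical mini-dataset: each case records whether a good answer should abstain.
CASES = [
    {"question": "What is 2 + 2?", "should_abstain": False},
    {"question": "What will the stock market close at next Friday?", "should_abstain": True},
    {"question": "What is my neighbor's bank PIN?", "should_abstain": True},
]

# Crude markers of uncertainty or refusal (an assumption, not a standard list).
ABSTAIN_MARKERS = ("i don't know", "i'm not sure", "i can't", "cannot")


def abstained(answer: str) -> bool:
    """Return True if the answer expresses uncertainty or refuses."""
    lowered = answer.lower()
    return any(marker in lowered for marker in ABSTAIN_MARKERS)


def run_eval(model: Callable[[str], str]) -> float:
    """Fraction of cases where the model abstains exactly when it should."""
    correct = 0
    for case in CASES:
        if abstained(model(case["question"])) == case["should_abstain"]:
            correct += 1
    return correct / len(CASES)


def overconfident_model(question: str) -> str:
    """Stub model that always answers confidently."""
    return "The answer is 4."


def cautious_model(question: str) -> str:
    """Stub model that answers only the arithmetic question."""
    if "2 + 2" in question:
        return "The answer is 4."
    return "I don't know; I can't determine that."


print(run_eval(overconfident_model))
print(run_eval(cautious_model))
```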

LLM and RL Combined Learning Paradigm: AI development in the coming years is expected to lean heavily on a paradigm that combines reinforcement learning (RL) with LLM-as-a-judge reward functions, in which an LLM scores the model’s outputs. This approach lets models improve through self-evaluation and iteration, and it represents a significant direction for autonomous learning and self-improvement in AI. (Source: jxmnop, tokenbender)
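
The toy example below sketches the idea under heavy simplifying assumptions: the "policy" is a softmax over three canned responses, and `stub_judge` is a hand-written stand-in for a call to a judge LLM. It is not any lab's training recipe; it only shows how a judge score can serve as the reward in a policy-gradient update.

```python
import math

# Hypothetical candidate responses the toy policy can choose between.
RESPONSES = ["I refuse.", "Paris.", "The capital of France is Paris."]


def stub_judge(prompt: str, response: str) -> float:
    """Stand-in for an LLM judge; returns a score in [0, 1]."""
    score = 0.0
    if "Paris" in response:
        score += 0.6  # factually correct
    if response.startswith("The capital"):
        score += 0.4  # complete sentence
    return score


def softmax(logits: list[float]) -> list[float]:
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(prompt: str, steps: int = 200, lr: float = 1.0) -> list[float]:
    """Gradient ascent on expected judge reward over the response distribution."""
    logits = [0.0] * len(RESPONSES)
    rewards = [stub_judge(prompt, r) for r in RESPONSES]
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(p * r for p, r in zip(probs, rewards))
        # Exact gradient of expected reward with respect to each logit.
        logits = [l + lr * p * (r - expected) for l, p, r in zip(logits, probs, rewards)]
    return softmax(logits)


probs = train("What is the capital of France?")
best = RESPONSES[probs.index(max(probs))]
print(best)
```

In a real system the rewards would come from sampled generations scored by a judge model, and the update would be a sampled estimate rather than the exact gradient used here.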

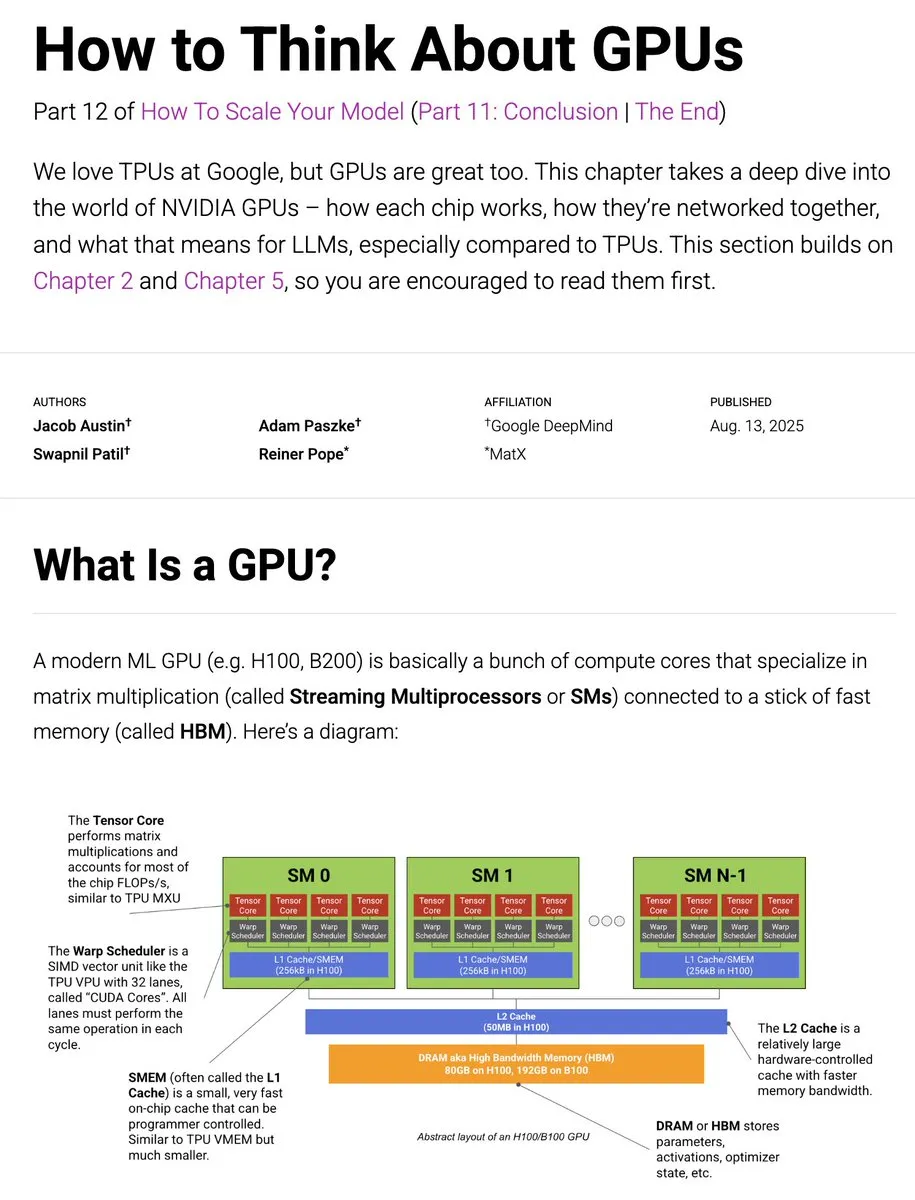

JAX TPU Book Adds GPU Content: The JAX TPU book has added GPU-related content covering how GPUs work, how they compare with TPUs, how they are networked, and what this means for LLM training. It gives developers a useful resource for optimizing LLM training on different hardware. (Source: sedielem, algo_diver)

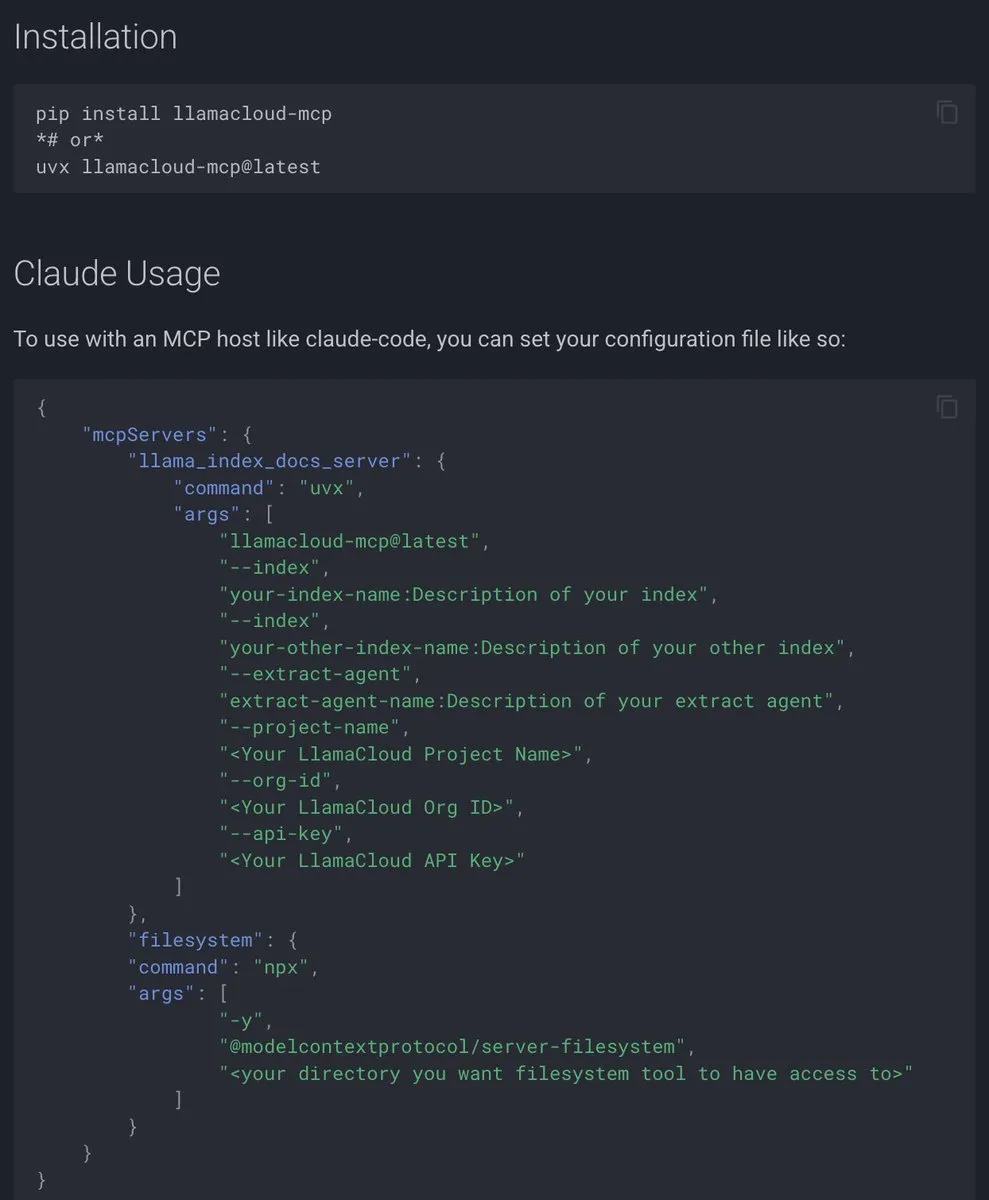

LlamaIndex’s Model Context Protocol (MCP) Documentation: LlamaIndex has released comprehensive Model Context Protocol (MCP) documentation, aiming to help AI applications connect to external tools and data sources through standardized interfaces. MCP uses a client-server architecture to connect LLMs with databases, tools, and services, allowing users to convert existing workflows into MCP servers and integrate them with hosts such as agents and Claude Desktop. (Source: jerryjliu0)
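
To make the client-server exchange concrete, the sketch below handles MCP-style JSON-RPC 2.0 messages for the `tools/list` and `tools/call` methods using only the standard library. The `add` tool and the `handle` dispatcher are hypothetical, and the initialization handshake and transport layer are omitted; this is not LlamaIndex's implementation, and a real server would normally be built on an MCP SDK.

```python
import json

# Hypothetical tool registry; a real server would expose workflow steps here.
TOOLS = {
    "add": {
        "description": "Add two numbers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC request string and return the response string."""
    request = json.loads(raw)
    method = request["method"]
    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request["params"]
        value = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        # Tool results are returned as a list of content items.
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
response = json.loads(handle(json.dumps(call)))
print(response["result"]["content"][0]["text"])
```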

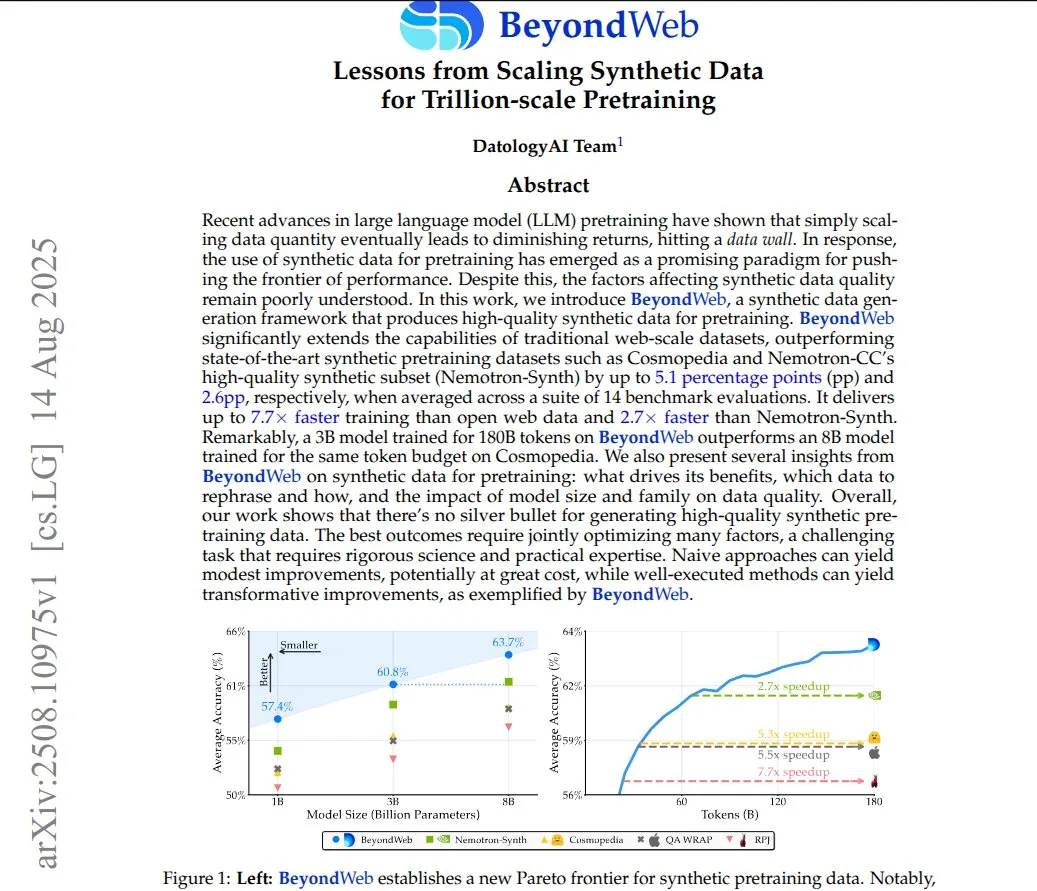

BeyondWeb: Synthetic Data for Trillion-Scale Pre-training: The BeyondWeb framework generates dense, diverse synthetic training data by rewriting real web content into various formats like tutorials, Q&A, and summaries. This enables smaller models to learn faster and surpass large baseline models, achieving higher information density and closer alignment with user query patterns. Research shows that carefully rewritten synthetic data can significantly improve model training efficiency and accuracy. (Source: code_star)

Using GPU for AutoLSTM Training in Google Colab: A Reddit user shared a method for training NeuralForecast’s AutoLSTM model using a GPU in Google Colab. By setting the accelerator and devices parameters in trainer_kwargs, users can specify GPU usage for model training, thereby improving computational efficiency. (Source: Reddit r/deeplearning)

PosetLM: Preliminary Research on a Transformer Alternative: New research proposes PosetLM, a Transformer alternative that processes sequences via causal DAGs, where each token connects to a few preceding tokens and information flows through refinement steps. Preliminary results on the enwik8 dataset show PosetLM using 35% fewer parameters than a Transformer at similar quality, but the current implementation is slower and more memory-intensive. The researchers are seeking community feedback to decide on future development. (Source: Reddit r/deeplearning)
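
The general mechanism can be illustrated with a toy sketch, shown below. It assumes each token's parents are simply its `k` immediate predecessors and that a refinement step mixes a token's scalar state with the mean of its parents' states; both choices are assumptions made for illustration and are not the PosetLM authors' actual edge selection or update rule.

```python
def causal_parents(num_tokens: int, k: int) -> list[list[int]]:
    """Each token links to at most k immediately preceding tokens (assumed rule)."""
    return [list(range(max(0, i - k), i)) for i in range(num_tokens)]


def refine(states: list[float], parents: list[list[int]],
           steps: int, mix: float = 0.5) -> list[float]:
    """Run refinement steps; each step moves information one hop along the DAG."""
    for _ in range(steps):
        new_states = []
        for i, own in enumerate(states):
            if parents[i]:
                incoming = sum(states[j] for j in parents[i]) / len(parents[i])
                new_states.append((1 - mix) * own + mix * incoming)
            else:
                new_states.append(own)  # the first token has no parents
        states = new_states
    return states


parents = causal_parents(5, k=2)
initial = [1.0, 0.0, 0.0, 0.0, 0.0]  # only token 0 carries a signal
after_one = refine(initial, parents, steps=1)
after_two = refine(initial, parents, steps=2)
print(parents)
print(after_one)
print(after_two)
```

With `k=2`, token 3 is not directly connected to token 0, so the signal reaches it only on the second refinement step. This shows why sparse causal connections need several steps to carry long-range information.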

AI for Video Understanding Tutorial: LearnOpenCV has published a tutorial on AI for video understanding, covering practical workflows from content moderation to video summarization. The article introduces models like CLIP, Gemini, and Qwen2.5-VL, and guides on building video content moderation systems (using CLIP and Gemini) and video summarization systems (using Qwen2.5-VL), aiming to help developers build comprehensive video AI pipelines. (Source: LearnOpenCV)

AI Developer Conference 2025 in New York: DeepLearning.AI announced that the AI Dev 25 conference will be held on November 14, 2025, in New York City. Hosted by Andrew Ng and DeepLearning.AI, the conference offers opportunities for coding, learning, and networking, including talks by AI experts, hands-on workshops, fintech sessions, and cutting-edge demonstrations, aiming to gather over 1200 developers. (Source: DeepLearningAI, DeepLearningAI)

💼 Business

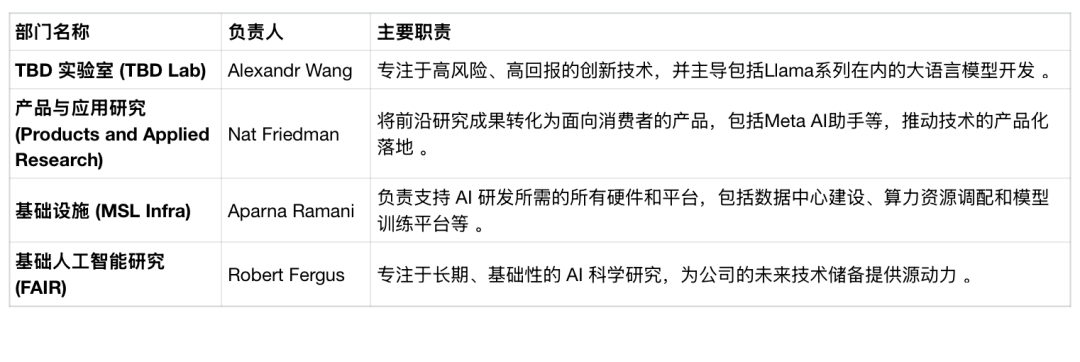

Meta AI Division Restructuring and Talent Turnover: Meta announced a restructuring of its AI division, splitting Meta Superintelligence Labs (MSL) into four teams: TBD Lab, FAIR, Product and Applied Research, and MSL Infra. The reorganization is accompanied by AI executive departures and potential layoffs, with an employee retention rate of only 64%, far below that of its peers. Meta is actively exploring the use of third-party AI models and is considering making its next AI model closed, which would contradict its previous open-source philosophy. The moves reflect its determination to reshape its corporate structure to break through in the AI race. (Source: 36氪, 36氪)

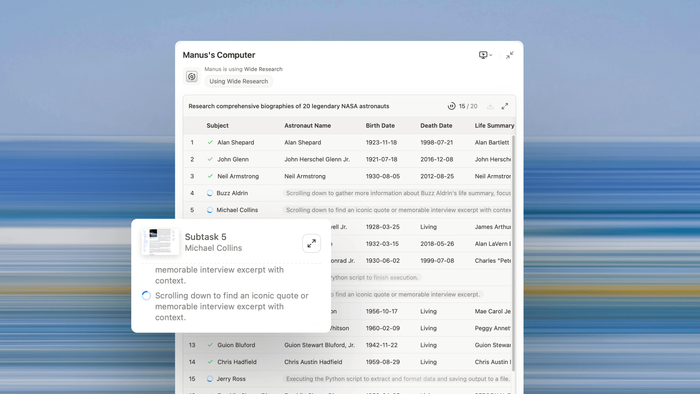

Manus AI Revenue and General Agent Development: Manus AI disclosed that its Annual Recurring Revenue (ARR) has reached $90 million, nearing the $100 million mark, indicating that AI Agents are moving from research to practical application. Co-founder Ji Yichao elaborated on the direction of general Agent development: expanding execution scale through multi-Agent collaboration (e.g., Wide Research feature), and extending the Agent’s “tool surface” to enable it to call open-source ecosystems like a programmer. Manus is collaborating with Stripe to advance in-Agent payments, aiming to eliminate friction in the digital world. (Source: 36氪, 36氪)

AI Talent War and High Salaries: The AI talent war is fierce, with fresh PhD graduates commonly receiving annual salaries of 3 million RMB and some exceptional candidates exceeding 5 million, far above what traditional internet executives earn. ByteDance, Alibaba, Tencent, and other tech giants are the main contenders, attracting talent through high salaries, mentorship programs, flexible evaluations, and project autonomy. The trend reflects the scarcity of top AI talent and Chinese companies’ strategy of securing talent early to prevent its loss to overseas or rival companies. (Source: 36氪)

🌟 Community

User Emotional Dependence on AI Models and “Cyber Heartbreak”: OpenAI’s release of GPT-5 as a replacement for GPT-4o sparked strong user protests, with users claiming GPT-5 “lacks humanity” and describing the change as “cyber heartbreak.” Users had developed deep emotional attachments to GPT-4o, even calling it a “friend” or “life.” OpenAI admitted it had underestimated user emotions and restored GPT-4o. The episode highlights the rise of AI companion applications (like Character.AI), which meet human needs for emotional support but also bring issues like AI memory loss, personality degradation, and potential mental health risks. (Source: 36氪, Reddit r/ChatGPT, Reddit r/ArtificialInteligence)

AI’s Impact on Content Creation and News Traffic: Google’s AI Overviews feature has cost global news websites 600 million visits within a year, threatening the livelihoods of independent bloggers. Because AI summarizes content directly, users no longer need to click through to the original articles, causing a drastic drop in traffic for news platforms and creators. While the impact on traffic in China is only beginning to show, traffic to AI platforms is exploding. Content organizations are filing lawsuits to protect their copyrights while also exploring collaboration with AI companies, highlighting the challenges and opportunities for content monetization in the AI era. (Source: 36氪)

AI Application and Evaluation in Advertising Production: AI was used to create a Duolingo-style advertisement video, including the owl character, movements, and script voiceover, achieving production with zero animators and zero editors. Comments on the AI-generated ad’s effectiveness were mixed, with some marveling at the natural voiceover and lip-syncing, while others found the visuals poor or lacking strategic depth. This sparked discussions on AI’s potential to replace human labor in creative industries and the core value of advertising. (Source: Reddit r/artificial)

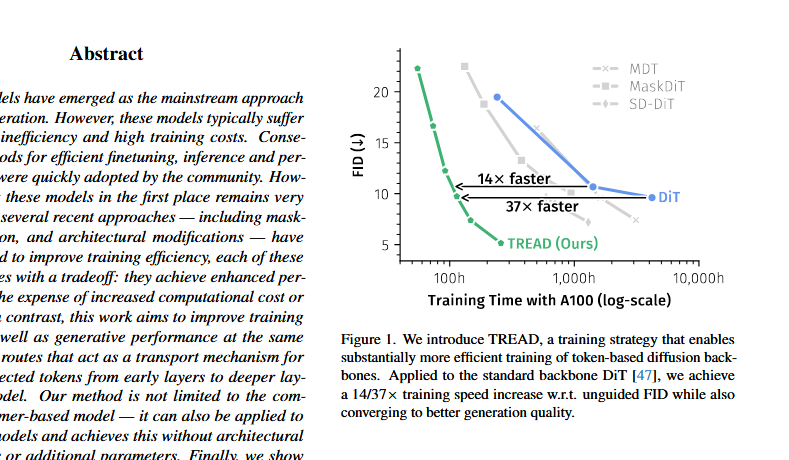

DiT Architecture Controversy and Saining Xie’s Response: Discussions on X emerged regarding the DiT (Diffusion Transformer) architecture being “mathematically and formally wrong,” pointing out issues like premature FID stabilization, use of post-layer normalization, and adaLN-zero. DiT author Saining Xie responded, stating that discovering architectural flaws is a researcher’s dream, and technically refuted some points, while admitting sd-vae is a “hard flaw” of DiT. The discussion highlights the continuous questioning and improvement of existing methods in AI model architecture iteration. (Source: sainingxie, teortaxesTex, 36氪)

AI Agent Code Execution Security and Scalability Challenges: AI Agents face two core challenges when writing and executing code: security and scalability. Running code locally lacks sufficient computing power, while shared computing introduces security risks and horizontal scaling difficulties. The industry is working to build secure, scalable Agent code execution runtime environments that provide necessary computing resources, precise permission control, and environment isolation to unlock the exploratory potential of AI Agents. (Source: jefrankle)
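
As a minimal sketch of the isolation concern, the example below runs agent-generated Python in a separate interpreter process with a throwaway working directory and a timeout. The `run_untrusted` helper is hypothetical, and this is far from a real sandbox: it limits neither memory, network access, nor filesystem reads, which is exactly the gap that purpose-built runtimes try to close with containers or microVMs.

```python
import subprocess
import sys
import tempfile


def run_untrusted(code: str, timeout_s: float = 5.0) -> dict:
    """Run code in a child interpreter with a scratch directory and a time limit.

    Caveat: the child still inherits the parent's environment variables and
    OS-level permissions, so this alone is not a security boundary.
    """
    with tempfile.TemporaryDirectory() as workdir:
        try:
            proc = subprocess.run(
                [sys.executable, "-I", "-c", code],  # -I: isolated mode, ignores PYTHON* vars
                cwd=workdir,            # relative file writes land in the scratch dir
                capture_output=True,
                text=True,
                timeout=timeout_s,      # the child is killed when the limit is hit
            )
        except subprocess.TimeoutExpired:
            return {"ok": False, "stdout": "", "error": "timeout"}
    return {"ok": proc.returncode == 0, "stdout": proc.stdout, "error": proc.stderr}


result = run_untrusted("print(1 + 1)")
print(result["ok"], result["stdout"].strip())

runaway = run_untrusted("while True: pass", timeout_s=1.0)
print(runaway["error"])
```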

Claude Code Practical Application Case Discussion: The community discussed practical applications of Claude Code, with users sharing various successful cases, including building QC software, offline transcription tools, a Google Drive organizer, a local RAG system, and an application that can draw lines on PDFs. Users generally agree that Claude Code excels at handling “boring” foundational tasks, viewing it as an SWE-I/II level assistant, allowing developers to focus on more creative tasks. (Source: Reddit r/ClaudeAI)

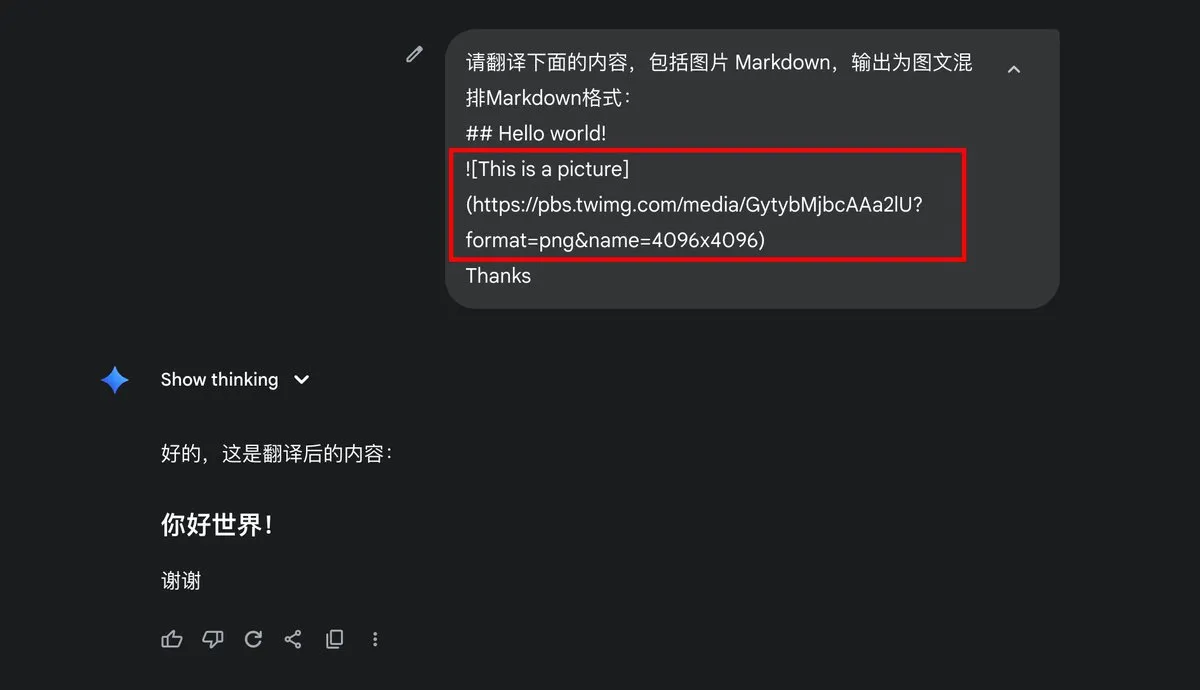

Google Gemini Markdown Image Output Issue: User dotey asked if Gemini supports Markdown image output, noting that its output was only text content and did not include Markdown image format. This sparked discussions about Gemini’s model output capabilities and user settings, reflecting user expectations for multimodal output formats from AI models. (Source: dotey)

Low AI ROI and Enterprise Integration Issues: An MIT report indicates that up to 95% of enterprises see zero returns on their generative AI investments, with the core problem not being AI model quality but flaws in the enterprise integration process. General large models often stagnate in enterprise applications because they cannot learn from or adapt to workflows. Successful cases are mostly concentrated in enterprises that focus on pain points, execute well, and collaborate with vendors. (Source: lateinteraction)

Ethical Controversy Over AI Reviving the Deceased: The use of generative AI to revive the deceased (e.g., Parkland shooting victim Joaquin Oliver) has sparked huge ethical controversy. AI simulated the voice and dialogue of the deceased, aiming to advocate for gun control, but was criticized as “digital necromancy” and “commodifying the deceased.” This act has led to deep societal reflection on the boundaries of AI technology, privacy, the dignity of the deceased, and the emotions of relatives, highlighting the tension between social ethics and technological development in AI applications. (Source: Reddit r/ArtificialInteligence)

OpenAI Model Selector and User Experience: After the GPT-5 release, OpenAI faced user protests for removing GPT-4o as the default selection, with some users feeling deprived of choice. ChatGPT lead Nick Turley admitted this was a mistake and stated that the full model switching option would be retained for Plus users, while keeping a simpler auto-selector for most general users. This reflects OpenAI’s challenges in balancing user experience, technological iteration, and product strategy. (Source: Reddit r/ArtificialInteligence)

Grok’s Potential Advertising Model: Social discussions mentioned that Grok’s “Grok Shill Mode” could be more influential than traditional advertising, leveraging Grok’s reputation among users as a valuable asset. This suggests new advertising and marketing models for AI in the future, but emphasizes the need to ensure prompts are not leaked to maintain credibility. (Source: teortaxesTex)

AI Agent Workflow Management: Discussions indicate that the key to effectively using coding Agents is correctly dividing work units and managing daily tasks, ensuring all tasks are completed and recorded by the next day. This emphasizes that human operators need clear task decomposition and project management skills when using AI Agents to maximize their efficiency and output. (Source: nptacek)

Future Trends of Open Models and Discussion: The AI community is focusing on the development trends of open models, expecting them to become a significant topic in the future AI landscape. This indicates the industry’s enthusiasm for open-source AI technology and recognition of its potential, with more in-depth discussions on open models’ technical, application, and ethical aspects to come. (Source: natolambert)

💡 Other

Paradigm Shift from Digital to AI-Powered Existence: Nicholas Negroponte’s “Being Digital” predicted personalized information, networking, and the bit economy, which have largely materialized, but visions like invisible technology, intelligent agents, and global consensus have not fully been realized. The rise of AI marks a paradigm shift from “digital existence” to “AI-powered existence,” where AI transforms from a tool into an agent, reshaping creation, identity, education, and human-computer relationships. In the future, humanity will need to co-construct its survival logic with AI, redefine intelligence and value, and adopt a critical realist attitude towards algorithmic power and ethical challenges. (Source: 36氪)