Keywords: NVIDIA Nemotron Nano 2, Claude Opus 4.1, AI talent salary competition, Google AI language digitization, AI health management, AI-assisted programming, AI impact on employment, AI parenting applications, Hybrid Mamba-Transformer architecture, LMArena model evaluation, Project Vaani speech data, Digitalized Future Kitchen Lab, Codex CLI Rust rewrite

🔥 Spotlight

NVIDIA Nemotron Nano 2 Released : NVIDIA has released the Nemotron Nano 2 series of AI models. The 9B model uses a hybrid Mamba-Transformer architecture and, according to NVIDIA, delivers up to 6x higher inference throughput than comparably sized models while maintaining high accuracy. It supports a 128K context length, and most of its pre-training data, including high-quality web, math, code, and multilingual Q&A data, has been open-sourced. The release aims to provide efficient, scalable AI solutions, lower the deployment barrier for enterprises, and foster the open-source AI ecosystem. (Source: Reddit r/LocalLLaMA)
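One reason hybrid designs help at long context is memory: every attention layer keeps a key/value cache that grows with sequence length, while a Mamba layer keeps a fixed-size state. The back-of-the-envelope sketch below compares KV-cache size for a pure Transformer and a hybrid at 128K tokens. All layer counts and dimensions are hypothetical, not Nemotron Nano 2's published configuration, and the sketch illustrates only the memory side, not NVIDIA's 6x throughput figure.

```python
# Hypothetical configuration: the layer counts and dimensions below are
# illustrative assumptions, not the published Nemotron Nano 2 configuration.
def kv_cache_bytes(num_attention_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Size of the key/value cache for one sequence.

    Each attention layer stores one key and one value vector per token per KV
    head, so the cache grows linearly with sequence length.
    """
    return 2 * num_attention_layers * num_kv_heads * head_dim * seq_len * bytes_per_value

SEQ_LEN = 128 * 1024  # 128K-token context

# Pure Transformer: assume all 56 layers use attention.
dense = kv_cache_bytes(num_attention_layers=56, num_kv_heads=8, head_dim=128, seq_len=SEQ_LEN)
# Hybrid: assume only 4 of the 56 layers are attention; the Mamba layers keep a
# recurrent state whose size does not depend on seq_len (not counted here).
hybrid = kv_cache_bytes(num_attention_layers=4, num_kv_heads=8, head_dim=128, seq_len=SEQ_LEN)

print(f"dense:  {dense / 2**30:.1f} GiB")   # 28.0 GiB
print(f"hybrid: {hybrid / 2**30:.1f} GiB")  # 2.0 GiB
```

Under these assumptions the cache shrinks in proportion to the number of attention layers removed, which frees memory for larger batches at long context.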

Claude Opus 4.1 Tops LMArena Rankings : Claude Opus 4.1 has overtaken other models to take the top spot in LMArena’s standard, reasoning, and web development categories. Users report improvements in both its fine-grained and big-picture problem-solving, especially its habit of pausing to reconsider (“think for a moment, maybe XYZ is better”). Although some users find it expensive or weaker in certain scenarios, its programming and complex-task capabilities have been widely recognized, reflecting Anthropic’s continued progress in model performance. (Source: Reddit r/ClaudeAI)

AMD CEO Lisa Su’s View on the AI Talent Salary War : AMD CEO Lisa Su publicly stated her opposition to companies like Meta offering annual salaries of hundreds of millions of dollars to poach AI talent. She believes that while competitive compensation is fundamental, the true key to attracting top talent lies in a company’s mission and making employees feel their actual impact on the company, rather than just being a cog in a machine. She emphasized that excessively high salaries can damage company culture and pointed out that AMD’s success is the result of team effort, not reliance on a few star employees. (Source: 量子位)

Google AI Advances Digitization of 2300 Asian Languages : Google is addressing the “silencing” of Asian languages in the digital world through multiple AI projects. Project Vaani, in collaboration with the Indian Institute of Science, has collected nearly 21,500 hours of speech data covering 86 Indian language variants and made it freely available. Project SEALD, in partnership with AI Singapore, is building the Aquarium database for 1200 Southeast Asian languages. Additionally, Google’s AI translation system CHAD 2 (powered by Gemini 2.0 Flash) has helped Japan’s Yoshimoto Kogyo achieve 90% accuracy in translating comedy content, reducing translation time from months to minutes. (Source: 量子位)

🎯 Trends

Innovative AI Applications in Healthcare : Yunpeng Technology, in collaboration with Shuaikang and Skyworth, launched the “Digitalized Future Kitchen Lab” and smart refrigerators equipped with an AI health large model. The model optimizes kitchen design and operation, while the refrigerator provides personalized health management. The launch shows how AI can deliver personalized health services through everyday smart devices, and is expected to drive the development of home health technology and improve residents’ quality of life. (Source: 36氪)

AI’s Disruption and Opportunities for Traditional Industries : Duolingo has grown revenue by embracing AI, but the ability of models like GPT-5 to generate language-learning tools directly has put pressure on its stock price, highlighting AI’s disruptive potential for existing business models. Goldman Sachs, by contrast, sees AI as a force multiplier for the software industry rather than a disruptor, arguing that traditional SaaS giants can stay competitive through hybrid AI strategies and deep moats. Together, these views suggest AI is both a challenge and an opportunity that drives industry transformation and creates new value. (Source: 36氪, 36氪)

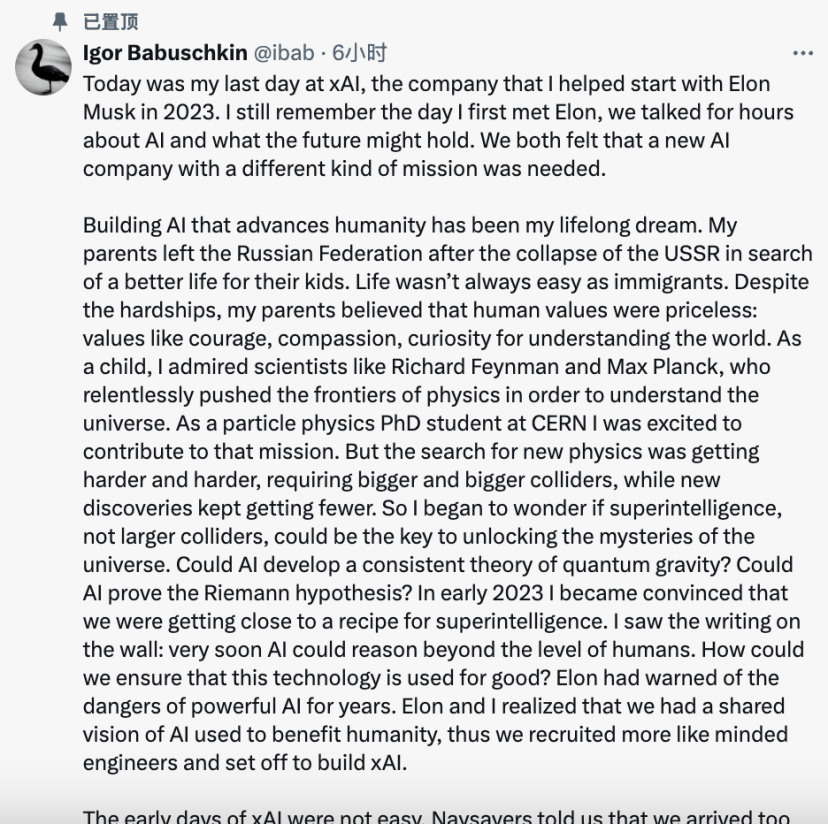

AI Talent Market Dynamics and Career Development : xAI co-founder Igor Babuschkin left to start a venture capital firm focused on AI safety research, aiming to find “the next Elon Musk.” Kevin Lu, a Chinese researcher who led OpenAI’s GPT-4o mini, joined Mira Murati’s Thinking Machines Lab and emphasized the importance of internet data for AI progress. Demand for AI roles is strong, yet small and medium-sized enterprises struggle to recruit: top talent is fiercely contested, ordinary graduates face intense competition, and the value of AI PhDs is being questioned. Together, these point to structural imbalances between AI talent supply and demand, as well as challenges in career transitions. (Source: 36氪, 36氪, 36氪, 36氪, 36氪)

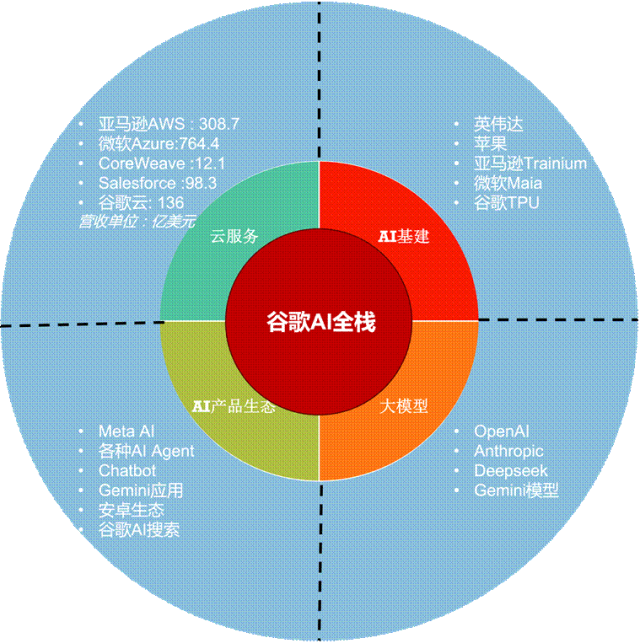

AI Investment and Infrastructure Development : Google’s and Meta’s earnings reports show that market skepticism about AI capital spending has turned into enthusiasm, as AI significantly drives growth in online advertising and cloud revenue. Google raised its capital expenditure forecast substantially, to $85 billion, mainly for servers and data centers. Tesla disbanded Elon Musk’s highly anticipated Dojo supercomputer project and is instead spending heavily on NVIDIA AI chips, suggesting that in the AI era vertical integration struggles against platform ecosystems and that partnering with industry giants is the more pragmatic path. (Source: 36氪, 36氪)

Embodied AI and Robotics Commercialization Accelerate : Qinglang Intelligence CEO Li Tong emphasized that commercializing robots requires a deep understanding of customer pain points so that robots can take over specific job positions, with over 100,000 of the company’s commercial robots already sold. Yufan Intelligence, a visual AI company founded 11 years ago, launched its spatial cognition large model Manas and a quadruped robot dog, fully embracing embodied AI and emphasizing full-stack in-house development of both “intelligence + hardware.” Major companies such as JD, Meituan, and Alibaba are increasing investment in robotics, covering sensors, dexterous hands, and humanoid robots, with the aim of reshaping fulfillment efficiency and user experience and bringing robots into more consumer scenarios. (Source: 36氪, 36氪, 36氪)

New Trends in AI for Content Creation and User Experience : Members of Douyin’s founding team launched the “Shumei Wanwu” platform, which uses AI tools to lower the barrier to creative design and product monetization, connecting AI-generated ideas to the physical production chain. Meitu Inc. is seeking growth through its AI Agent product RoboNeo, with revenue from its imaging and design products rising and overseas users growing significantly. The AI designer toy “AI Labubu” has gained popularity by combining a trendy look with AI conversation capabilities that provide emotional value. These cases show AI’s rapid development in consumer applications such as content generation, creative monetization, and emotional companionship. (Source: 36氪, 36氪, 36氪)

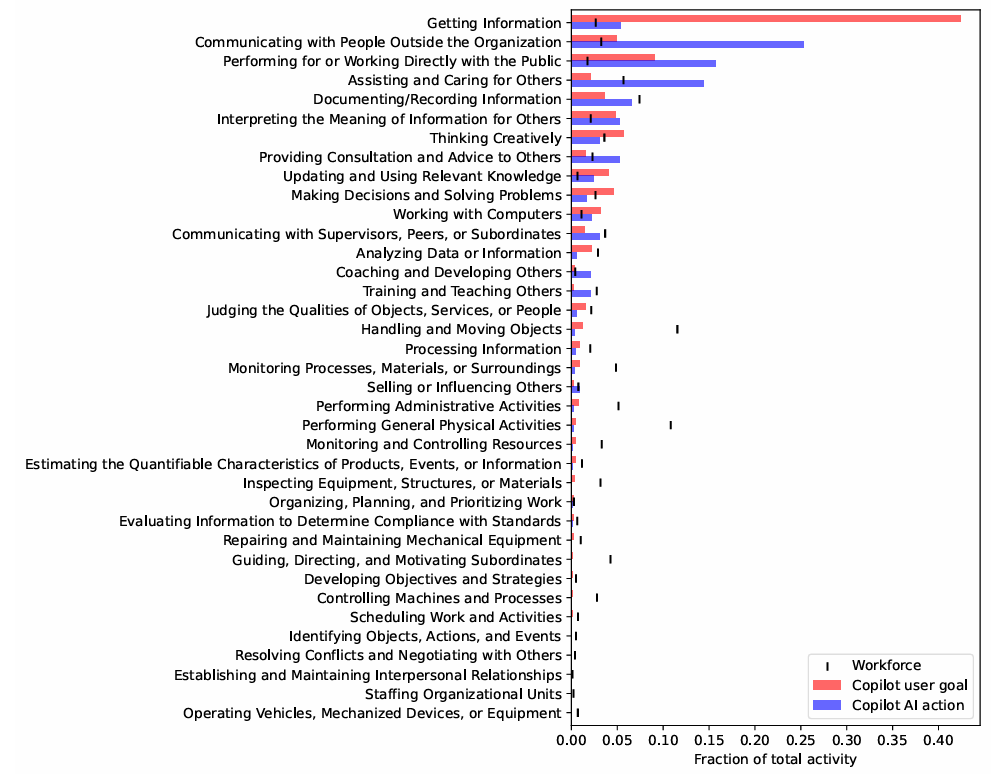

AI’s Profound Impact on the Job Market : Microsoft research based on Copilot data indicates that AI can support tasks like research, writing, and communication, but cannot fully replace all tasks within a single profession. Journalists, translators, and other language and content creation professions are most affected by AI, but AI may also improve efficiency rather than directly replace jobs, similar to the impact of ATMs on bank tellers. AI assistants are like “chatty interns,” strong in explanation but lacking in proactive problem-solving. (Source: 36氪)

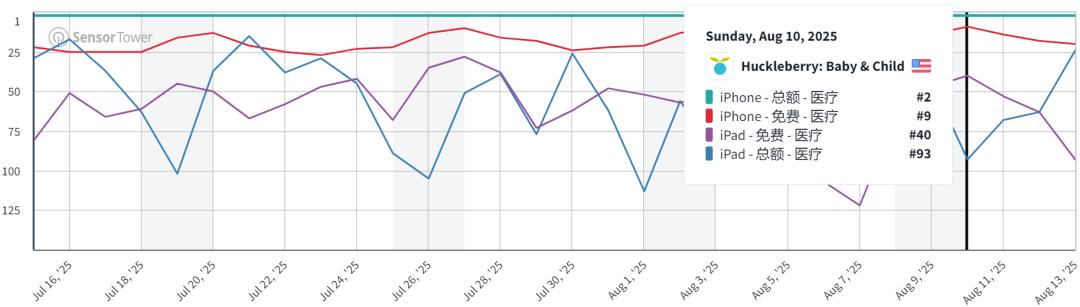

AI’s Commercial Potential in Parenting : AI is quietly entering the infant sleep monitoring field. Applications like Huckleberry analyze infant care logs to accurately predict sleep rhythms, providing a sense of “predictable” control, achieving monthly revenues of tens of millions of dollars. Such products, combined with AI nanny functions, meet parents’ needs for efficient record-keeping and emotional value, becoming a “gold mine” in both low-cost software services and high-cost AI hardware. (Source: 36氪)

🧰 Tools

AI-Assisted Programming and Development Tools : OpenAI’s new Codex CLI, rewritten in Rust and integrated with GPT-5, offers faster interaction speeds and powerful coding capabilities, becoming a strong competitor to Claude Code. LangChain released the JavaScript version of Deep Agents, supporting multi-agent system construction. Replit Agent is exploring support for Python Notebook and Godot game engine development. VS Code Insiders edition supports OpenAI-compatible endpoints and integrates Playwright for UI automation testing. (Source: doodlestein, hwchase17, amasad, pierceboggan)
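“OpenAI-compatible endpoint” generally means a server that accepts the OpenAI Chat Completions JSON shape at `<base_url>/chat/completions`, which is what lets editors such as VS Code point at many different backends. The sketch below only builds such a request with the standard library and does not send it; the base URL, model name, and API key are hypothetical placeholders.

```python
import json
import urllib.request

def build_chat_request(base_url: str, model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request in the OpenAI Chat Completions format.

    An OpenAI-compatible server is expected to accept this same JSON body at
    <base_url>/chat/completions.
    """
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a coding assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

# Hypothetical local server, model name, and key; substitute your own.
req = build_chat_request("http://localhost:8000/v1", "my-local-model",
                         "Write a Rust function that reverses a string.", "sk-local")
print(req.full_url)      # http://localhost:8000/v1/chat/completions
print(req.get_method())  # POST
```

To actually send it, pass `req` to `urllib.request.urlopen` and read the reply text from `choices[0].message.content` in the JSON response.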

AI Applications in Office and Content Creation : Paradigm launched an AI-native spreadsheet aimed at eliminating repetitive work. Huxe added AI features that parse unread news emails. The Gemini API now supports a URL context tool that can directly process web pages, PDFs, and images. AI tools such as Runway’s Aleph model are changing video workflows, making video content as editable as text. Meitu’s RoboNeo, the commercialization of AI-generated characters based on the Shan Hai Jing (Classic of Mountains and Seas), and AI-assisted novel-writing systems demonstrate AI’s potential in creative generation and content monetization. (Source: hwchase17, raizamrtn, jeremyphoward, c_valenzuelab, Reddit r/artificial)
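With the URL context tool, the prompt simply contains the URLs and the request enables the tool, so the model fetches the pages itself. The sketch below builds a `generateContent` request body this way; the field name `url_context` follows our reading of the Gemini API REST docs and should be checked against the current reference, and the model name and URLs are example placeholders. Authentication is omitted.

```python
import json

# Assumption: REST field names follow the Gemini API docs for the URL context
# tool; the model name used below is an example, not a requirement.
GEMINI_ENDPOINT = ("https://generativelanguage.googleapis.com/v1beta/models/"
                   "{model}:generateContent")

def url_context_request(model: str, question: str, urls: list[str]) -> tuple[str, dict]:
    """Return the endpoint URL and JSON body for a generateContent call that
    enables the URL context tool, so the model can fetch the listed pages."""
    prompt = question + "\n" + "\n".join(urls)
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "tools": [{"url_context": {}}],  # an empty config object switches the tool on
    }
    return GEMINI_ENDPOINT.format(model=model), body

url, body = url_context_request(
    "gemini-2.5-flash",
    "Compare the main claims of these two pages:",
    ["https://example.com/a.pdf", "https://example.com/b.html"],
)
print(url)
print(json.dumps(body, indent=2))
```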

LLM Performance and Evaluation Tools : Claude Opus 4.1 performs strongly in LMArena’s coding, web development, and other categories. Datology AI introduced BeyondWeb, a synthetic data method that stresses the importance of high-quality synthetic data in pre-training and can improve the performance of smaller models. NVIDIA’s Nemotron Nano 2 adopts a hybrid Mamba-Transformer architecture, excels in math, code, reasoning, and long-context tasks, and supports reasoning (“thinking”) budget control at inference time. (Source: scaling01, code_star, ctnzr)
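Reasoning budget control caps how many tokens a model may spend “thinking” before it must answer. A simple client-side way to do this is to stream reasoning tokens, stop once the budget is spent, and close the reasoning block yourself. The sketch below shows that idea; the `</think>` marker and the stand-in generator functions are assumptions for illustration, and NVIDIA’s own mechanism may differ.

```python
from typing import Callable, Iterator

END_THINK = "</think>"  # assumed end-of-reasoning marker

def think_with_budget(generate: Callable[[str], Iterator[str]], prompt: str,
                      budget: int) -> str:
    """Collect at most `budget` reasoning tokens, then close the reasoning block."""
    reasoning: list[str] = []
    for token in generate(prompt):
        if token == END_THINK:
            break  # the model closed its reasoning block within the budget
        if len(reasoning) >= budget:
            break  # budget spent: cut the reasoning off here
        reasoning.append(token)
    # Either way, return a closed reasoning block; a second call would then
    # generate the final answer conditioned on prompt + this text.
    return "".join(reasoning) + END_THINK

def endless_thinker(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in model that never stops reasoning on its own."""
    i = 0
    while True:
        yield f"step{i} "
        i += 1

def quick_thinker(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in model that finishes reasoning after two steps."""
    yield from ["a ", "b ", END_THINK, "ignored"]

print(think_with_budget(endless_thinker, "Is 97 prime?", budget=3))  # step0 step1 step2 </think>
print(think_with_budget(quick_thinker, "Is 97 prime?", budget=3))    # a b </think>
```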

AI Agents and Automation : The NEO AI4AI agent achieved SOTA results on MLE Bench, autonomously performing ML engineering tasks such as data preprocessing, feature engineering, model experimentation, and evaluation. LangChain’s Deep Agents, now also available in JavaScript, support complex problem-solving and tool calling. Reka Research provides AI-driven deep research services that synthesize answers from multiple information sources. (Source: Reddit r/MachineLearning, hwchase17, RekaAILabs)

AI Image and Video Editing Models : Qwen-Image-Edit was released. Built on the 20B-parameter Qwen-Image model, it supports precise text editing in both Chinese and English, high-level semantic editing, and low-level appearance editing, and can be used for cartoon production. Higgsfield AI offers Hailuo MiniMax 02 for Draw-to-Video, supporting high-quality 1080p generation. (Source: teortaxesTex, _akhaliq)

LLM API and Cost Management : Anthropic launched a Usage and Cost API for Claude, giving near real-time visibility into model usage and cost to help developers optimize token efficiency and avoid rate limits. OpenRouter now shows market prices and cached-token prices for LLMs on its model pages. (Source: Reddit r/ClaudeAI, xanderatallah)
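Cached prices matter because input tokens served from a prompt cache are usually billed at a much lower rate than fresh input. The sketch below computes the cost of one call from token counts; the per-million-token prices are hypothetical numbers, not any vendor’s actual rates, and it ignores any extra charge some providers apply for writing to the cache.

```python
from dataclasses import dataclass

@dataclass
class Pricing:
    """Prices in USD per million tokens (hypothetical numbers, not any vendor's rates)."""
    input: float
    cached_input: float
    output: float

def request_cost(p: Pricing, input_tokens: int, cached_tokens: int, output_tokens: int) -> float:
    """Cost of one call where `cached_tokens` of the input were served from the prompt cache."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    uncached = input_tokens - cached_tokens
    return (uncached * p.input + cached_tokens * p.cached_input
            + output_tokens * p.output) / 1_000_000

price = Pricing(input=3.00, cached_input=0.30, output=15.00)
# A 100K-token prompt, 80K of it cached, producing 2K output tokens:
print(round(request_cost(price, 100_000, 80_000, 2_000), 4))
# The same call with no cache hits:
print(round(request_cost(price, 100_000, 0, 2_000), 4))
```

With these made-up prices the cached call costs about $0.114 versus $0.33 uncached, which is why long, reused system prompts benefit most from caching.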

📚 Learning

AI Learning Resources and Methods : Andrew Ng emphasized that universities should fully embrace AI, not only teaching AI but also using it to advance every discipline. DeepLearning.AI released Andrew Ng’s new e-book, which provides an AI career roadmap. GPU_MODE and ScaleML will host a summer lecture series covering the algorithmic and systems advances behind gpt-oss. Reddit communities discussed introductory deep learning books, deploying models with FastAPI, implementing CoCoOp with CLIP, and how to optimize training runs (e.g., choosing the optimal number of epochs). (Source: AndrewYNg, DeepLearningAI, lateinteraction, Reddit r/deeplearning, Reddit r/deeplearning)
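A common answer to “how many epochs?” is early stopping: keep training while validation loss improves, stop after it has failed to improve for a set number of epochs (the patience), and keep the best checkpoint. The sketch below replays a list of recorded validation losses; the loss values are made up for illustration.

```python
def best_epoch_with_early_stopping(val_losses: list[float], patience: int = 3,
                                   min_delta: float = 0.0) -> int:
    """Return the 0-based epoch whose checkpoint to keep.

    Training 'stops' once validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs.
    """
    best_epoch, best_loss, bad_epochs = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, bad_epochs = epoch, loss, 0  # new best: reset patience
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # no improvement for `patience` epochs: stop training
    return best_epoch

losses = [0.90, 0.71, 0.62, 0.60, 0.61, 0.63, 0.65, 0.58]  # made-up validation losses
print(best_epoch_with_early_stopping(losses, patience=3))  # 3
print(best_epoch_with_early_stopping(losses, patience=5))  # 7
```

The two calls show the trade-off in choosing patience: a short patience stops early and saves compute, while a longer one survives a temporary plateau and finds the later, lower loss.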

AI Talent Development and Career Paths : Reddit communities discussed whether AI engineers must be mathematicians and how to enter the deep learning field through self-study or a master’s degree. At the same time, some argue that the AI era emphasizes “context engineering” more than “prompt engineering,” requiring a more comprehensive understanding of LLM application building. (Source: Reddit r/deeplearning, Reddit r/MachineLearning)

LLM Training Data and Model Optimization : Reddit discussed how to identify and correct factual errors in LLM training data, as well as current best practices for data validation and correction. The progress of DeepSeek R2 sparked discussion on whether pre-training has reached a bottleneck and the importance of multimodal unified representation for world models. (Source: Reddit r/deeplearning, 36氪)

AI Research Progress and New Architecture Exploration : Simons Foundation and Stanford HAI partnered to explore the physics of learning and neural computation, aiming to understand the learning, reasoning, and imagination of large neural networks. AIhub published a list of August ML/AI seminars. Reddit discussed the value of small language models (SLMs) and local AI, questioning whether excessive pursuit of model scale stifles AI innovation, and suggesting that the Transformer architecture is not the only path, encouraging exploration of other efficient architectures. (Source: ylecun, aihub.org, Reddit r/MachineLearning)

CUDA Kernel Development and Deployment : Hugging Face released the kernel-builder library, simplifying local development, multi-architecture building, and global sharing of CUDA kernels. It supports registering them as PyTorch native operators and is compatible with torch.compile, improving performance and maintainability. (Source: HuggingFace Blog)

Multimodal Models and World Model Research : Hugging Face Daily Papers published several cutting-edge research papers, including: 4DNeX (the first feed-forward framework for single-image 4D scene generation), Inverse-LLaVA (eliminating alignment pre-training through text-to-vision mapping), ComoRAG (cognitively inspired memory organization RAG for long narrative reasoning), as well as a survey on efficient LLM architectures and Matrix-Game 2.0 (a real-time streaming interactive world model). (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers)

Vision Foundation Model DINOv3 : Meta AI’s DINOv3, a next-generation vision foundation model trained purely with self-supervised learning, scales to 7B parameters and surpasses weakly supervised and supervised baselines on tasks such as segmentation, depth estimation, and 3D keypoint matching. Its Gram Anchoring technique counters the degradation of dense feature quality during long training runs, and the model can be applied to specialized domains such as satellite imagery. (Source: LearnOpenCV)
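As we understand the DINOv3 paper, Gram Anchoring penalizes the difference between the patch-to-patch similarity (Gram) matrix of the student’s L2-normalized patch features and that of an earlier “Gram teacher” checkpoint, using a squared Frobenius norm. The pure-Python sketch below shows only that loss form on tiny hand-made feature matrices; loss weighting, resolution handling, and how the Gram teacher is chosen are omitted.

```python
import math

Matrix = list[list[float]]  # one row per image patch, one column per feature dimension

def l2_normalize(rows: Matrix) -> Matrix:
    """Scale each patch feature vector to unit length."""
    out = []
    for r in rows:
        norm = math.sqrt(sum(x * x for x in r)) or 1.0
        out.append([x / norm for x in r])
    return out

def gram(rows: Matrix) -> Matrix:
    """Patch-to-patch similarity matrix G = X X^T (cosine similarities after normalization)."""
    return [[sum(a * b for a, b in zip(ri, rj)) for rj in rows] for ri in rows]

def gram_anchoring_loss(student: Matrix, gram_teacher: Matrix) -> float:
    """Squared Frobenius distance between student and anchor Gram matrices."""
    gs, gt = gram(l2_normalize(student)), gram(l2_normalize(gram_teacher))
    return sum((a - b) ** 2 for rs, rt in zip(gs, gt) for a, b in zip(rs, rt))

teacher = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
rotated = [[0.0, 1.0], [-1.0, 0.0], [-1.0, 1.0]]   # teacher features rotated by 90 degrees
collapsed = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]   # every patch looks the same

print(gram_anchoring_loss(rotated, teacher))    # ~0: pairwise similarities unchanged
print(gram_anchoring_loss(collapsed, teacher))  # ~2.34: patch structure lost
```

The design point the example illustrates: the loss constrains only how patches relate to one another, so the student’s features can still move (here, rotate) freely, while a collapse of local structure is penalized.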

💼 Business

OpenAI Launches ChatGPT Go Subscription Plan in India : OpenAI has launched a new low-cost subscription tier, “ChatGPT Go,” in India, priced at 399 rupees (approximately $4.7) per month. Compared with the free tier, the plan offers 10 times the limits on messages, image generation, and file uploads, plus twice the memory length, and it supports UPI payments. The move aims to expand OpenAI’s user base in India and meet local demand for more affordable AI services. (Source: openai, kevinweil, snsf)

AI Accelerates Enterprise Transformation and Job Market Impact : A CEO who laid off 80% of staff for refusing to adopt AI quickly sparked discussion about employee adaptability during AI transformation. Meanwhile, the emergence of high-paying AI roles (e.g., MLOps engineers and AI research scientists) shows AI reshaping the traditional data science field. While AI can boost productivity, companies need to build real value around it rather than rely on the technology alone. (Source: Reddit r/artificial, Reddit r/deeplearning, Reddit r/artificial)

AI Company Valuations and Competitive Landscape : OpenAI’s annualized revenue has exceeded $12 billion, with a valuation of $500 billion, while Anthropic’s annualized revenue is $4 billion, with a valuation of $170 billion, indicating a continued surge in valuations for AI foundation model companies. Google may sell TPUs externally by 2027, challenging NVIDIA’s leading position in the AI chip market. Meanwhile, AI startup Lovable achieved over $100 million ARR in 8 months, demonstrating the huge potential of AI-driven website and application builders. (Source: yoheinakajima, Justin_Halford_, 36氪)

🌟 Community

Synthetic Data and the Future of Pre-training : Datology AI’s BeyondWeb method has been widely discussed. It argues that pre-training is running into a “data wall” and that high-quality synthetic data can effectively improve the performance of smaller models, even letting them surpass larger ones. The community debated whether synthetic data causes model degradation or is merely hype, but generally agreed that well-designed synthetic data is key to breaking through data bottlenecks. (Source: code_star, sarahookr, BlackHC, Reddit r/MachineLearning)

AI Model Performance and User Experience : Claude Opus 4.1 topped multiple LMArena rankings, excelling especially in coding and web development. Meanwhile, GPT-5’s “cold” interaction style prompted users to demand “give me back GPT-4o,” highlighting demand for emotional and empathetic capabilities in AI. Some also argue that an excessive pursuit of model scale may stifle innovation and that small models and local AI have great potential. (Source: scaling01, Reddit r/ClaudeAI, Reddit r/ClaudeAI, Reddit r/MachineLearning)

Discussions on AI’s Impact on Employment and Careers : Social media buzzed with discussion of whether AI will “take jobs” and how an “AI engineer” differs from a “prompt engineer.” Some argue that AI will drive career transitions rather than outright replacement, and that future careers will demand greater adaptability and problem-solving skills. In addition, training that makes AI models overly positive or flattering drew criticism from users, who felt it lacked authenticity and critical thinking. (Source: jeremyphoward, Teknium1, Reddit r/ClaudeAI, Reddit r/ArtificialInteligence)

AI Community Events and Engagement : LangChain, in collaboration with Grammarly, Uber, and others, hosted offline meetups on multi-agent systems and LangGraph applications. The Hugging Face community discussed Japanese AI model releases, kernel sharing, and tools like AI Sheets. Weights & Biases held Code Cafe events, encouraging developers to build and share AI projects on-site. (Source: LangChainAI, ClementDelangue, weights_biases)

Philosophical Discussions on AI Safety and Ethics : The community discussed whether AI can adjust its own goals and if intelligence necessarily leads to a desire for dominance, delving into deep AI safety issues. Some argued that AI safety is an engineering problem that can be solved through design. Concerns were also raised about the risks of AI model “hallucinations” in enterprise scenarios and the possibility of AI flooding information channels with low-quality services. (Source: Reddit r/ArtificialInteligence, BlancheMinerva, Ronald_vanLoon)

Discussions on AI Hardware and Infrastructure : Social media discussed the importance of AI UX in AI infrastructure, as well as the performance and energy consumption of AI chips. Some argued that NVIDIA’s advantage lies in its ecosystem beyond just GPUs, and that Google’s TPUs might be sold externally in the future. (Source: ShreyaR, m__dehghani, espricewright)

💡 Other

AI Applications in Finance : A study showed how to train a small (270M-parameter) Gemma 3 model to reason like a financial analyst through supervised fine-tuning and GRPO (Group Relative Policy Optimization), producing verifiable structured outputs. This suggests that small models can also reason effectively in specific domains, at lower cost and latency. (Source: Reddit r/deeplearning)
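The core of GRPO is that it scores several sampled completions for the same prompt and uses each one’s reward relative to the group, normalized by the group’s standard deviation, as its advantage, so no separate value model is needed. The sketch below pairs that advantage computation with a hypothetical verifiable reward that checks for valid JSON with a `recommendation` field; the field name, reward values, and sample completions are assumptions, not the study’s actual setup, and the policy-gradient update itself is not shown. Implementations differ on population versus sample standard deviation; this uses the population form.

```python
import json
import statistics

def structured_reward(completion: str, expected_label: str) -> float:
    """Hypothetical verifiable reward: 0.0 for invalid output, 0.5 for valid JSON
    with a 'recommendation' field, 1.0 if that field also matches the label."""
    try:
        parsed = json.loads(completion)
    except json.JSONDecodeError:
        return 0.0
    if not isinstance(parsed, dict) or "recommendation" not in parsed:
        return 0.0
    return 1.0 if parsed["recommendation"] == expected_label else 0.5

def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: (reward - group mean) / (group std + eps)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# Four completions sampled for the same (hypothetical) prompt.
group = ['{"recommendation": "buy"}', '{"recommendation": "sell"}',
         "not json", '{"recommendation": "buy"}']
rewards = [structured_reward(c, expected_label="buy") for c in group]
advantages = group_relative_advantages(rewards)
print(rewards)
print([round(a, 3) for a in advantages])
```

Completions that beat the group average get positive advantages and are reinforced; those below it are pushed down.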

Voice Data Analysis and Separation : The Reddit community discussed how to cluster the vocals in songs to identify different artists. Suggestions included extracting voice features with Mel-frequency cepstral coefficients (MFCCs) and processing them with Python libraries such as Librosa or python_speech_features. Audio editing software for separating vocals from instruments was also mentioned, along with signal-separation challenges such as the “cocktail party problem.” (Source: Reddit r/MachineLearning)
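Once each vocal clip is summarized as a feature vector (for example, by averaging the output of `librosa.feature.mfcc` over time), grouping clips by singer becomes a clustering problem. The sketch below is plain k-means in pure Python on a few made-up 3-dimensional vectors standing in for MFCC means. It assumes the number of singers `k` is known and skips the feature standardization you would normally apply.

```python
import math
import random

def kmeans(points: list[list[float]], k: int, iters: int = 50, seed: int = 0) -> list[int]:
    """Plain k-means: returns a cluster index for each point."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]  # start from k random points
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return labels

# Made-up per-clip feature vectors: three clips from one singer, three from another.
clips = [[10.0, 2.0, 0.0], [10.5, 2.2, 0.1], [9.8, 1.9, -0.2],
         [-5.0, 8.0, 3.0], [-5.3, 8.1, 2.9], [-4.9, 7.7, 3.2]]
labels = kmeans(clips, k=2)
print(labels)
```

Real vocals are far less tidy than these points, which is why the thread also stressed separating vocals from instruments before extracting features.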

AI-Assisted Research Discovery : Hugging Face released the “MCP for Research” guide, demonstrating how to connect AI with research tools through the Model Context Protocol (MCP) to automate the discovery and cross-referencing of papers, code, models, and datasets. This enables AI to efficiently integrate research information from platforms like arXiv, GitHub, and Hugging Face via natural language requests, enhancing research efficiency. (Source: HuggingFace Blog)
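Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages: a client typically asks a server which tools it offers with `tools/list` and then invokes one with `tools/call`, passing the tool name and its arguments. The sketch below only serializes those two requests. The tool name `search_papers` and its arguments are hypothetical, and a real session also begins with an `initialize` handshake and runs over a transport such as stdio or HTTP, both omitted here.

```python
import itertools
import json
from typing import Any, Optional

_request_ids = itertools.count(1)  # JSON-RPC ids must be unique per request

def mcp_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize one JSON-RPC 2.0 request of the kind MCP uses."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

# 1) Ask the server which tools it exposes.
list_tools = mcp_request("tools/list")
# 2) Call one of them. "search_papers" and its arguments are hypothetical;
#    use whatever names the server's tools/list response actually reports.
call_tool = mcp_request("tools/call", {
    "name": "search_papers",
    "arguments": {"query": "hybrid Mamba-Transformer", "limit": 5},
})
print(list_tools)
print(call_tool)
```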