Keywords: OpenAI, IOI gold medal, AI competitive programming, GPT-5, Baichuan Intelligence, medical reasoning large model, AI chip trade, embodied intelligence, Baichuan-M2-32B, OpenAI HealthBench evaluation, AMD MI300 GPU, embodied intelligence foundation, quantum radar technology

🔥 Focus

OpenAI IOI Gold Medal and New Progress in AI Competitive Programming : OpenAI’s reasoning system achieved a gold-medal score in the 2025 International Olympiad in Informatics (IOI) online competition. It ranked first among AI entrants and sixth overall, ahead of 98% of human contestants. Rather than a model trained specifically for the contest, the system was an ensemble of general-purpose reasoning models. The result marks a significant breakthrough for AI in competitive programming, although it drew pushback: Elon Musk claimed that Grok 4 outperforms GPT-5 at coding, and some users questioned OpenAI’s marketing strategy. Separately, LiveCodeBench Pro results showed GPT-5 Thinking making breakthroughs on complex programming tasks, with average response lengths far longer than those of other models. (Source: sama, sama, 量子位, willdepue, npew, markchen90, SebastienBubeck)

Baichuan Intelligent Releases Medical Reasoning Large Model Baichuan-M2 : Baichuan Intelligent released Baichuan-M2-32B, its latest medical reasoning model, which outperformed OpenAI’s gpt-oss-120b and other leading open- and closed-source models on OpenAI’s HealthBench evaluation set. It performed especially well on HealthBench-Hard, where it is one of only two models worldwide to score above 32, and in Chinese clinical diagnosis scenarios. The model has 32B parameters and can be deployed on a single RTX 4090, significantly lowering the cost of private deployment. For reinforcement learning training, Baichuan introduced a “patient simulator” and a “Verifier system,” improving the model’s usability in real clinical settings. (Source: 量子位)
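The single-card claim is easiest to sanity-check with weight-memory arithmetic. The sketch below assumes 24 GB of VRAM on an RTX 4090 and counts only the weights (no KV cache, activations, or runtime overhead); the bit widths are illustrative, and nothing here describes Baichuan’s actual quantization recipe.

```python
# Back-of-the-envelope check: do the weights of a 32B-parameter model fit on one 24 GB card?
# Assumption: only weight storage is counted; KV cache, activations and overhead are ignored.

def weight_gb(params: float, bits_per_param: int) -> float:
    """Return weight memory in GB (1 GB = 1e9 bytes) at the given precision."""
    return params * bits_per_param / 8 / 1e9

PARAMS = 32e9          # 32B parameters, as stated for Baichuan-M2-32B
RTX4090_VRAM_GB = 24   # memory on a single RTX 4090

for bits in (16, 8, 4):
    gb = weight_gb(PARAMS, bits)
    fits = gb < RTX4090_VRAM_GB
    print(f"{bits:>2}-bit weights: {gb:5.1f} GB -> fits on one card: {fits}")
```

Only the 4-bit row fits, leaving about 8 GB for the KV cache and runtime, so single-card deployment of a 32B model generally implies aggressive weight quantization.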

GPT-5 Release Controversy and User Trust Crisis : After its release, GPT-5 was criticized for falling short of expectations, looking more like an incremental product iteration than a revolutionary breakthrough. CEO Sam Altman’s heavy hype (e.g., the “Death Star” analogy and claims of PhD-level expertise) contrasted sharply with actual user feedback (frequent errors, weaker creative writing, lack of personality), prompting widespread dissatisfaction and successful demands to restore GPT-4o. OpenAI has also begun encouraging people to use GPT-5 for medical and health advice, raising questions about who is responsible when AI medical advice goes wrong; there have already been cases of poisoning after people trusted mistaken AI medical advice. (Source: MIT Technology Review, MIT Technology Review, 量子位)

🎯 Trends

AI Chip Trade and China’s Localization Trend : Nvidia and AMD reached an agreement with the U.S. government to pay it 15% of their revenue from AI chip sales to China. Meanwhile, China has stated that Nvidia’s H20 chips are unsafe and plans to abandon the H20 in favor of domestic AI chips. Analysts believe this will accelerate the development of China’s domestic AI chip ecosystem and profoundly reshape the global AI industry. On the hardware side, the AMD MI300X, with 192GB of VRAM per card and about 1.5TB across an 8-GPU node, offers significant advantages for hosting large model weights and processing long contexts. (Source: MIT Technology Review, Reddit r/artificial, dylan522p, realSharonZhou)
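To see why per-card memory matters for long contexts, the sketch below totals node VRAM and estimates KV-cache size with the standard formula 2 × layers × KV heads × head dim × tokens × bytes per element. The model shape (80 layers, 8 KV heads, head dim 128, i.e. a hypothetical 70B-class model with grouped-query attention) and the BF16 cache are illustrative assumptions, not the configuration of any model mentioned here.

```python
# Node-level memory arithmetic for an 8-GPU server with 192 GB per GPU.
GPUS_PER_NODE = 8
VRAM_PER_GPU_GB = 192

def kv_cache_gb(layers: int, kv_heads: int, head_dim: int,
                tokens: int, bytes_per_elem: int = 2) -> float:
    """KV-cache size in GB: one key and one value vector per layer, KV head and token."""
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_elem / 1e9

node_gb = GPUS_PER_NODE * VRAM_PER_GPU_GB
print(f"Total node VRAM: {node_gb} GB (~{node_gb / 1000:.1f} TB)")

# Hypothetical 70B-class shape with grouped-query attention, BF16 (2-byte) cache.
for ctx in (32_768, 131_072, 1_000_000):
    print(f"{ctx:>9,} tokens -> {kv_cache_gb(80, 8, 128, ctx):6.1f} GB of KV cache per sequence")
```

The node total of 1,536 GB is what rounds to 1.5TB. Under these assumed dimensions, a single million-token sequence needs roughly 330 GB of cache on top of the weights: more than one card holds, but well within one node.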

AI Applications and Challenges in the Legal System : The U.S. legal system is grappling with AI hallucinations: lawyers and judges using AI tools have made errors such as citing fabricated cases. Despite the risks, some judges are still exploring AI for legal research, case summarization, and drafting routine orders, believing it can improve efficiency. However, the boundaries of acceptable AI use in the legal field remain unclear, and no accountability mechanism yet exists for AI-related errors made by judges, which could undermine public trust in the judiciary. (Source: MIT Technology Review)

Accelerated Development and Technical Roadmaps of the Embodied AI Industry : The 2025 World Robot Conference showcased rapid progress in embodied AI. Leading companies Unitree Robotics and Zhimeng Robotics represent two technical roadmaps: a hardware-first approach (robot dogs, leg agility) and an integrated hardware-software approach (humanoid robots, ecosystem-driven strategy), respectively. Realman also released the RealBOT Embodied Open-Source Platform and high-performance joint modules, built around an “embodied AI foundation” and emphasizing a “Robot for AI” concept to drive AI’s evolution from digital intelligence to embodied intelligence. The industry is shifting from “demo showcases” to an “industrial closed-loop model,” attracting significant capital and policy support. (Source: 36氪, 36氪, 量子位, 量子位)

Latest Model and Feature Updates from Google, Anthropic, and OpenAI : The Google Gemini app launched Deep Think for Ultra subscribers, targeting math and programming problems, and Gemini Live can now connect to Google apps. Claude now supports referencing past chats, making it easier for users to continue earlier conversations. OpenAI announced its compute allocation priorities for the coming months and plans to double its compute within the next five months. Additionally, the gpt-oss models saw massive download numbers after release but were also noted to hallucinate and to show flaws attributed to their training data. (Source: demishassabis, demishassabis, dotey, op7418, sama, sama, Reddit r/ArtificialInteligence, Reddit r/LocalLLaMA, 量子位, TheTuringPost, SebastienBubeck, Alibaba_Qwen, ClementDelangue, Reddit r/LocalLLaMA, _lewtun, mervenoyann, rasbt)

Impact of AI Search on Website Traffic and Industry Transformation : Amazon’s sudden withdrawal from Google Shopping ad bidding, together with its blocking of Google’s AI shopping assistant from crawling its product pages, signals a break in the traffic relationship between the two giants in the AI era. The article argues that AI search is unfriendly to small and medium-sized websites: traffic concentrates on large authoritative media and well-known sites, a “rob the poor to enrich the rich” effect. It compares this to Baidu losing its role as a traffic gateway when apps rose, suggesting that Google Search’s gateway status will also be challenged. Platforms are moving toward closed loops, trying to control the entire user journey and reshaping the advertising industry’s trust structure. (Source: 36氪, 36氪)

New Breakthrough in Quantum Radar Technology : Physicists have developed a new type of quantum radar that uses atomic clouds to detect radio waves, with promising applications in underground imaging for tasks such as subterranean pipeline construction and archaeological excavation. As a prototype quantum sensor, the technology could eventually be smaller, more sensitive, and in need of less frequent calibration than traditional radar. Quantum sensing shares underlying technology with quantum computing, so advances in one field can benefit the other. (Source: MIT Technology Review)

Meta Launches V-JEPA 2 World Model : Meta released V-JEPA 2, a groundbreaking world model for visual understanding and prediction, designed to enhance AI’s perception and prediction capabilities in the visual domain. (Source: Ronald_vanLoon)

🧰 Tools

OpenAI Go API Library : openai-go, OpenAI’s official Go library, provides convenient access to the OpenAI REST API and supports Go 1.21+. It covers chat completions, streaming responses, tool calling, and structured outputs, along with practical features such as error handling, timeout configuration, file uploads, and webhook verification. (Source: GitHub Trending)

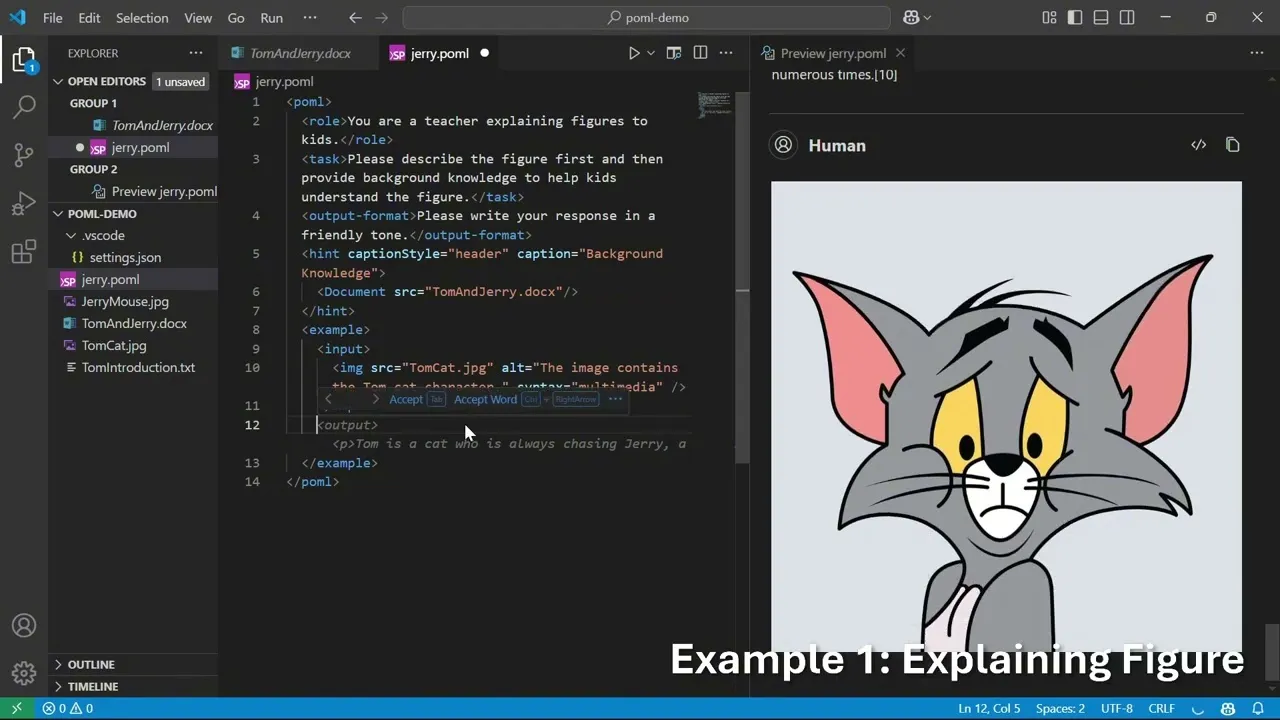

Microsoft POML: Prompt Orchestration Markup Language : Microsoft introduced POML (Prompt Orchestration Markup Language), a new markup language that brings structure, maintainability, and versatility to advanced prompt engineering for large language models (LLMs). It uses HTML-like syntax, supports data integration, separates content from styling, and includes a built-in template engine; a VS Code extension and SDKs help developers build more complex and reliable LLM applications. (Source: GitHub Trending)

LlamaIndex AI Analysis Tool for Financial Documents : LlamaIndex showcased an AI tool that transforms complex financial documents into easily understandable language via LlamaCloud, providing detailed interpretations of charts and financial data, and supporting content rewriting and personalization to help users comprehend intricate financial reports. (Source: jerryjliu0)

360 AI Agent Factory Review : A review of 360 AI Agent Factory, a comprehensive platform covering agents and MCP (Model Context Protocol) tools, with capabilities such as search, text-to-image generation, and webpage generation. It can be used to generate diet plans, mass-produce content for self-media accounts, or manage complex workflows; its multi-agent swarm feature is a particular strength, making large-scale content production and unified management of complex workflows easy. (Source: karminski3)

Excel AI Plugin and AI Meeting Minutes Tool : An AI plugin for Excel lets users chat with AI inside cells to generate formulas or macros, illustrating one way to combine Excel with AI. Separately, the AI meeting-minutes tool Notta (including its portable recorder, Notta Memo) was rated state of the art for its fast speech-to-text transcription, summarization, and Q&A features, which significantly improve meeting efficiency. (Source: karminski3, karminski3, karminski3)

GPT-5 Combined with AI Avatars : Synthesia conducted an experiment combining GPT-5’s voice with AI avatars, aiming to make AI communication more engaging, memorable, and understandable, exploring the integration of LLMs with multimodal interaction. (Source: synthesiaIO)

AI Educational Applications and Research Tools : GPT-5 shows potential in education, for example, by creating interactive 3D shape viewers to help children learn 3D shapes. Additionally, Elicit’s browser agent feature helps users quickly find full-text papers, while pyCCsl serves as a status line tool for Claude Code, providing session information such as token usage, cost, and context, enhancing the LLM tool experience. (Source: _akhaliq, jungofthewon, Reddit r/ClaudeAI)

OpenWebUI Native Clients and Claude Code Sprint Orchestration Framework : OpenWebUI released native clients for iOS and Android, aiming to provide a smoother, privacy-first user experience. Concurrently, Gustav, as a sprint orchestration framework for Claude Code, can transform Product Requirements Documents (PRDs) into enterprise-grade application workflows, simplifying the development process. (Source: Reddit r/OpenWebUI, Reddit r/ClaudeAI)

OpenWebUI File Context Issue : OpenWebUI users reported that uploaded PDF/DOCX/text files were parsed successfully, yet the model did not incorporate their content into the context when queried, pointing to unresolved issues in how AI tools handle files and context. (Source: Reddit r/OpenWebUI)

📚 Learning

LLM Reasoning and Inference Optimization Research : ReasonRank substantially improves LLM listwise ranking through automated synthesis of reasoning-intensive training data and two-stage post-training. LessIsMore proposes a training-free sparse attention mechanism that accelerates LLM decoding without sacrificing accuracy. TSRLM uses a two-stage framework of “anchored rejection” and “future-guided selection” to counter the declining effectiveness of preference learning in self-rewarding models, significantly improving LLM generation quality. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers)
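LessIsMore’s exact token-selection rule is not described here, so the following is only a generic illustration of training-free sparse attention at decode time: the query scores every cached key, but only the top-k keys take part in the softmax and the weighted sum of values. The function name and the plain top-k selection rule are assumptions for illustration, not the paper’s method.

```python
import math

def topk_sparse_attention(q, keys, values, k):
    """Attend from one query vector to only the k highest-scoring cached keys.

    q: list[float] of length d; keys, values: lists of length-d vectors.
    Returns the attention output as a list of floats.
    """
    d = len(q)
    # Scaled dot-product score against every cached key.
    scores = [sum(qi * ki for qi, ki in zip(q, key)) / math.sqrt(d) for key in keys]
    # Indices of the k largest scores; all other keys are skipped entirely.
    keep = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Numerically stable softmax over the kept scores only.
    m = max(scores[i] for i in keep)
    weights = {i: math.exp(scores[i] - m) for i in keep}
    total = sum(weights.values())
    out = [0.0] * len(values[0])
    for i, w in weights.items():
        for j, v in enumerate(values[i]):
            out[j] += (w / total) * v
    return out

keys = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
print(topk_sparse_attention([1.0, 0.2], keys, values, k=2))
```

With k equal to the number of cached keys this reduces to ordinary dense attention; smaller k reads fewer values per step, which is where the decoding speedup of such schemes comes from.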

AI Agent Evaluation and Reliability Research : UserBench is a user-centric benchmark environment that evaluates how proactively LLM agents collaborate with users when goals are ambiguous, revealing a gap between current models’ task completion and their alignment with users. Separately, a discussion of reliability evaluation and failure classification for agentic tool-use systems recommends standardized metrics that decompose success rates, together with a taxonomy of failure types, to make deployed agent systems more reliable. (Source: HuggingFace Daily Papers, Reddit r/MachineLearning)
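One way to read “success rate decomposition” is to split end-to-end success into conditional per-stage rates, so that their product recovers the overall rate and each failure is attributed to the first stage that broke. The three stage names below (tool selection, argument construction, execution) and the sample episodes are hypothetical, not taken from the cited discussion.

```python
from collections import Counter

STAGES = ("tool_selection", "arguments", "execution")  # hypothetical pipeline stages

def decompose(episodes):
    """episodes: list of dicts mapping each stage name to True/False.

    Returns (conditional success rate per stage, failure counts by first failing stage).
    A stage is only evaluated on episodes that passed every earlier stage.
    """
    rates, failures = {}, Counter()
    survivors = episodes
    for stage in STAGES:
        passed = [e for e in survivors if e[stage]]
        rates[stage] = len(passed) / len(survivors) if survivors else 0.0
        failures[stage] = len(survivors) - len(passed)
        survivors = passed
    return rates, failures

episodes = [
    {"tool_selection": True, "arguments": True, "execution": True},
    {"tool_selection": True, "arguments": False, "execution": False},
    {"tool_selection": False, "arguments": False, "execution": False},
    {"tool_selection": True, "arguments": True, "execution": False},
]
rates, failures = decompose(episodes)
overall = 1.0
for r in rates.values():
    overall *= r  # product of conditional rates = end-to-end success rate
print(rates, dict(failures), overall)
```

Because each rate is conditioned on the previous stages, the per-stage numbers show where reliability is lost, while their product still matches the single end-to-end figure (here 1 of 4 episodes).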

Multimodal LLM and RAG Technology Progress : The VisR-Bench dataset evaluates question-driven multimodal retrieval in long documents and shows that MLLMs still struggle with structured tables and low-resource languages. The Bifrost-1 framework bridges MLLMs and diffusion models via patch-level CLIP image embeddings, enabling high-fidelity, controllable image generation. Video-RAG is a training-free retrieval-augmented generation method that combines OCR and ASR for long-video understanding. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, LearnOpenCV)
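The training-free idea behind Video-RAG, as summarized above, is to turn a video into searchable text (OCR on frames, ASR on audio) and pass only the relevant snippets to the model. The sketch below ranks timestamped snippets by simple word overlap; the snippet format, the overlap scoring (a stand-in for a real retriever), and the example data are all assumptions, not the method’s actual components.

```python
import re
from dataclasses import dataclass

@dataclass
class Snippet:
    start_s: float   # timestamp in seconds
    source: str      # "ocr" or "asr"
    text: str

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(snippets, question, top_n=2):
    """Rank OCR/ASR snippets by word overlap with the question (stand-in for a real retriever)."""
    q = tokens(question)
    scored = [(len(q & tokens(s.text)), s) for s in snippets]
    scored = [pair for pair in scored if pair[0] > 0]       # drop snippets with no overlap
    scored.sort(key=lambda pair: (-pair[0], pair[1].start_s))
    return [s for _, s in scored[:top_n]]

def build_prompt(snippets, question):
    context = "\n".join(f"[{s.start_s:.0f}s {s.source.upper()}] {s.text}" for s in snippets)
    return f"Video context:\n{context}\n\nQuestion: {question}"

snippets = [
    Snippet(12.0, "asr", "today we explain how the engine cools down"),
    Snippet(30.0, "ocr", "Slide 3: engine temperature chart"),
    Snippet(55.0, "asr", "thanks for watching"),
]
question = "What does the chart show about engine temperature?"
hits = retrieve(snippets, question)
print(build_prompt(hits, question))
```

Keeping the timestamp and source tag on each snippet lets the model tie on-screen text and speech back to a moment in the video without processing every frame.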

AI Security and Attack Research : The WhisperInject framework manipulates audio language models into generating harmful content through imperceptible audio perturbations, revealing audio-native threats. Fact2Fiction is the first poisoning attack framework targeting agentic fact-checking systems; it crafts malicious evidence to derail the verification of decomposed sub-claims, exposing security weaknesses in fact-checking systems. Additionally, research shows that removing harmful data before training is more effective than post-training defenses at preventing LLMs from teaching biological weapon manufacturing. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, QuentinAnthon15)

LLM Architecture and Compression Technologies : The new Grove MoE architecture uses experts of varying sizes and a dynamic activation mechanism to match SOTA models with fewer active parameters. MoBE (Mixture-of-Basis-Experts) compresses MoE-based LLMs by representing experts as mixtures of shared basis experts, substantially reducing parameters with little accuracy loss. Other research compresses LLM chain-of-thought (CoT) using step entropy, pruning redundant steps without significantly reducing accuracy. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers)
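The step-entropy idea can be sketched as follows: score each reasoning step by the summed entropy of its tokens’ predictive distributions, then drop the lowest-entropy (most predictable) steps. The sketch assumes per-token probability distributions are available; the distributions and the prune fraction are hypothetical, this is not the paper’s code, and how much can be pruned without losing accuracy is an empirical question.

```python
import math

def token_entropy(dist):
    """Shannon entropy (nats) of one next-token distribution, given as a list of probabilities."""
    return -sum(p * math.log(p) for p in dist if p > 0)

def step_entropy(step_dists):
    """Entropy of a reasoning step = sum of the entropies of its tokens."""
    return sum(token_entropy(d) for d in step_dists)

def prune_low_entropy_steps(steps, step_token_dists, fraction):
    """Drop the `fraction` of steps with the lowest step entropy, keeping the original order."""
    scores = [step_entropy(d) for d in step_token_dists]
    n_drop = int(len(steps) * fraction)
    drop = set(sorted(range(len(steps)), key=lambda i: scores[i])[:n_drop])
    return [s for i, s in enumerate(steps) if i not in drop]

steps = ["Restate the problem.", "Try x = 3.", "Check: 3 * 3 = 9.", "So x = 3."]
# Hypothetical per-token distributions for each step (two tokens per step).
dists = [
    [[0.99, 0.01], [0.98, 0.02]],   # near-deterministic -> low entropy
    [[0.5, 0.5], [0.6, 0.4]],       # uncertain -> high entropy
    [[0.97, 0.03], [0.99, 0.01]],
    [[0.7, 0.3], [0.8, 0.2]],
]
print(prune_low_entropy_steps(steps, dists, fraction=0.5))
```

The intuition is that a step the model could predict almost deterministically carries little new information, so removing it shortens the chain of thought while keeping the informative steps.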

Reinforcement Learning and LLM Reasoning Surveys : A survey covers the intersection of reinforcement learning (RL) and visual intelligence, spanning policy optimization, multimodal LLMs, and more. Another paper systematically reviews RL techniques for LLM reasoning, reproducing and evaluating them to analyze their mechanisms, applicable scenarios, and underlying principles; it finds that a minimal combination of two techniques is enough to unlock learning in critic-free policies. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers)
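Critic-free methods replace a learned value function with statistics of sampled rewards. The sketch below shows one common scheme, assumed here for illustration rather than taken from either paper: subtract the mean reward of each prompt’s group of sampled responses, then divide by the standard deviation computed over the whole batch.

```python
import statistics

def critic_free_advantages(group_rewards, eps=1e-6):
    """group_rewards: list of lists, one inner list of scalar rewards per prompt.

    Advantage = (reward - mean of its own group) / (std over all rewards in the batch).
    No value network is needed: the group mean acts as the baseline.
    """
    all_rewards = [r for group in group_rewards for r in group]
    batch_std = statistics.pstdev(all_rewards)
    advantages = []
    for group in group_rewards:
        baseline = statistics.fmean(group)
        advantages.append([(r - baseline) / (batch_std + eps) for r in group])
    return advantages

# Two prompts, four sampled responses each, binary correctness rewards.
adv = critic_free_advantages([[1, 0, 0, 1], [1, 1, 1, 1]])
print(adv)
```

A group in which every response receives the same reward gets zero advantage and contributes no gradient. Using the batch-level rather than the group-level standard deviation is one way to avoid inflating advantages for groups whose rewards barely vary.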

General Robot Policies and Dataset Diversity : Research reveals that the limited generalization capability of general robot policies is due to “shortcut learning,” primarily attributed to insufficient dataset diversity and distributional differences between sub-datasets. The study shows that data augmentation can effectively reduce shortcut learning and improve generalization. (Source: HuggingFace Daily Papers)

LLM Coding Benchmarks and New Datasets : The Nebius team evaluated models on 34 new GitHub PR tasks for the SWE-rebench leaderboard, finding GPT-5-Medium leading overall and Qwen3-Coder the best open-source model, comparable to GPT-5-High on pass@5. OpenBench v0.2.0 was released, adding 17 new benchmarks covering mathematics, reasoning, health, and other areas. The WideSearch benchmark evaluates AI agents’ ability to handle large-scale, repetitive information gathering. (Source: Reddit r/LocalLLaMA, eliebakouch, teortaxesTex)
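pass@k is commonly computed with the unbiased estimator from the Codex paper (Chen et al., 2021): with n samples per task of which c pass, pass@k = 1 - C(n-c, k) / C(n, k). Whether SWE-rebench computes it exactly this way is an assumption; the sketch only shows the standard formula, on hypothetical task results.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: chance that at least one of k samples (drawn from n, c correct) passes."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def mean_pass_at_k(results, k):
    """results: list of (n_samples, n_correct) per task; returns the benchmark-level average."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Three hypothetical tasks, 5 attempts each.
tasks = [(5, 0), (5, 1), (5, 5)]
print(mean_pass_at_k(tasks, k=1), mean_pass_at_k(tasks, k=5))
```

When n equals k, pass@5 simply asks whether any of the five attempts succeeded, which is why a model can look much closer to a stronger one on pass@5 than on pass@1.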

AI Learning Resources and Book Recommendations : Reddit users sought recommendations for podcasts/YouTube channels on AI trends, new concepts, innovations, and papers. Additionally, books such as ‘The Age of Access,’ ‘The Zero Marginal Cost Society,’ ‘Life 3.0,’ and ‘The Inevitable’ were recommended to help readers understand AI and future economic and social changes, and explore the post-scarcity era. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

GLM-4.5 Technical Report and RL Scaling : The GLM-4.5 paper details this MoE large language model, which uses a hybrid reasoning approach and achieves strong performance on reasoning, coding, and agent tasks through expert model iteration, hybrid reasoning modes, and a difficulty-based reinforcement learning curriculum. The paper also reports experimental results on scaling RL, including the benefits of increased dimensionality, curriculum learning, and multi-stage training. (Source: Reddit r/ArtificialInteligence, _lewtun, Zai_org)
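A difficulty-based RL curriculum can be sketched by estimating each prompt’s pass rate from rollouts of the current policy, discarding prompts that are always or never solved (under group-normalized advantages they produce zero advantage), and training on easier prompts before harder ones. The threshold, the two-stage split, and the prompt IDs below are illustrative assumptions, not GLM-4.5’s published settings.

```python
def build_curriculum(pass_rates, easy_threshold=0.5):
    """pass_rates: dict prompt_id -> fraction of rollouts solved by the current policy.

    Returns two stages: easier prompts first, then harder-but-solvable ones.
    Prompts with pass rate 0.0 or 1.0 are dropped because all their rollouts get the same reward.
    """
    informative = {p: r for p, r in pass_rates.items() if 0.0 < r < 1.0}
    stage1 = sorted((p for p, r in informative.items() if r >= easy_threshold),
                    key=lambda p: -informative[p])
    stage2 = sorted((p for p, r in informative.items() if r < easy_threshold),
                    key=lambda p: -informative[p])
    return [stage1, stage2]

rates = {"q1": 1.0, "q2": 0.75, "q3": 0.25, "q4": 0.0, "q5": 0.5}
print(build_curriculum(rates))
```

In practice, pass rates would be re-estimated as the policy improves, so prompts drift from the hard stage into the easy one and eventually out of the pool.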

Other LLM Research and Technologies : The GLiClass model shows high accuracy and efficiency in sequence classification and supports zero-shot and few-shot learning. SONAR-LLM is a decoder-only Transformer that predicts sentence-level embeddings while being trained with token-level cross-entropy supervision, achieving competitive generation quality. Speech-to-LaTeX released a large-scale speech-to-LaTeX dataset and models, advancing the recognition of spoken mathematical content. Hugging Face also shared the release of IndicSynth, a large-scale synthetic speech dataset covering 12 low-resource Indian languages. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers, huggingface)

RL Training Issues and Fixes : vLLM’s upgrade from v0 to v1 caused asynchronous RL training runs to crash; the issue has since been fixed, and the lessons learned were shared. (Source: _lewtun, weights_biases)

RL Scaling Progress : Open progress on scaling reinforcement learning (RL) is exciting; although training these models takes immense engineering effort, the results are undeniable. (Source: jxmnop)

Survey of Self-Evolving AI Agent Systems : A survey reviews self-evolving AI agent technologies, proposing a unified conceptual framework (system input, agent system, environment, optimizer), and systematically reviewing self-evolution techniques for different components, discussing evaluation, safety, and ethical considerations. (Source: HuggingFace Daily Papers)
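The survey’s four components can be wired into a feedback loop: the optimizer proposes a change to the agent system, the environment scores it on the system inputs, and a change is kept only if the score improves. Everything in the toy below (an agent reduced to a single hypothetical “max steps” knob, the scoring function, and the hill-climbing optimizer) is an illustrative stand-in, not a technique taken from the survey.

```python
import random
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class AgentSystem:
    """The component being evolved; here just one hypothetical knob."""
    max_steps: int

def environment(agent: AgentSystem, system_inputs) -> float:
    """Hypothetical feedback: share of tasks solvable within the step budget, minus a cost per step."""
    solved = sum(1 for needed in system_inputs if needed <= agent.max_steps)
    return solved / len(system_inputs) - 0.01 * agent.max_steps

def optimizer(agent: AgentSystem, rng: random.Random) -> AgentSystem:
    """Propose a neighbouring configuration."""
    return replace(agent, max_steps=max(1, agent.max_steps + rng.choice((-1, 1))))

def evolve(agent: AgentSystem, system_inputs, rounds: int = 50, seed: int = 0):
    rng = random.Random(seed)
    best_score = environment(agent, system_inputs)
    for _ in range(rounds):
        candidate = optimizer(agent, rng)
        score = environment(candidate, system_inputs)
        if score > best_score:  # keep a change only if the environment rewards it
            agent, best_score = candidate, score
    return agent, best_score

system_inputs = [2, 3, 5, 8]  # steps each hypothetical task needs
print(evolve(AgentSystem(max_steps=2), system_inputs))
```

Because this optimizer only accepts strict improvements, it can stall in a local optimum: from max_steps = 5, the neighbours 6 and 7 score lower, so the better setting of 8 is never reached. Richer optimizers exist to address exactly this kind of limitation.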

“Super Experts” in MoE LLMs : Discusses the concept of “Super Experts” in MoE LLMs, pointing out that pruning these rare but crucial experts leads to a sharp decline in performance. (Source: teortaxesTex)

Data Science Overview : A shared generative AI mind map outlines the field of data science. (Source: Ronald_vanLoon)

💼 Business

Microsoft Invests in Carbon Removal to Address AI Energy Consumption : Microsoft has invested over $1.7 billion in partnerships with biotech companies that remove carbon by burying biosludge deep underground. The deals offset the rapidly rising energy consumption and carbon emissions of its AI data centers, help it meet its carbon-negative commitment, and earn it tax credits. The move reflects the resource costs of AI development, which are pushing major tech companies to seek carbon-reduction solutions. (Source: 36氪)

MiniMax AI Agent Challenge : The MiniMax AI Agent Challenge offers a total prize pool of $150,000, encouraging developers to build or remix AI agent projects across productivity, creativity, education, entertainment, and other fields. The challenge aims to promote innovation and application of AI agent technology. (Source: MiniMax__AI, Reddit r/ChatGPT)

Anthropic Hires Head of AI Safety : Anthropic hired Dave Orr as Head of Safety; he previously led Google’s efforts to integrate LLMs into Google Assistant. This move indicates Anthropic’s increasing emphasis on AI risk prevention, reflecting that AI companies are strengthening governance of potential risks alongside technological development. (Source: steph_palazzolo)

🌟 Community

AI and Employment & Social Impact : Research shows that as generative AI spreads, professionals work more hours per week and have less leisure time; in short, “the more prevalent AI becomes, the busier workers get.” In advertising, AI may erode “creative barriers” and lead newcomers to skip the ideation phase. Meanwhile, AI companions have fostered emotional dependence among some female users, a few of whom have formed deep emotional relationships with AI, sparking discussions about AI ethics and social impact. AI’s impact on employment is expected to fall hardest on newcomers who lack their own creative drive and motivation. (Source: 36氪, op7418, teortaxesTex, menhguin, scaling01, teortaxesTex)

GPT-5 User Experience and Model Quality Controversy : After GPT-5’s release, many users expressed disappointment with its performance, finding it lacking in personality, cold, slow, and worse than GPT-4o at creative writing. Users suspected OpenAI of running a “cheap knockoff” version of GPT-5 in ChatGPT to save costs, and they successfully demanded that GPT-4o be restored. Some commenters called OpenAI’s excessive hype a “blunder” that gives Google an opening to pull decisively ahead. Users also noticed little practical difference from the 192K context length of GPT-5 Thinking mode. (Source: Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/artificial, Reddit r/ChatGPT, op7418, TheTuringPost)

AI Ethics and Safety Concerns : Under free-market capitalism, AI could lead to a corporate dystopia in which large corporations monopolize it and use it to collect private data, manipulate public discourse, and control governments, ultimately distorting reality. Concerns about AI potentially gaining human rights and citizenship, as well as the risk of emotional dependence on AI companions, have also sparked discussions about AI ethics and social impact. Yoshua Bengio emphasized that AI development needs to be steered toward safer, more beneficial outcomes. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence, Yoshua_Bengio, teortaxesTex)

AI Development Models and Future Outlook : One post compares LLM development to aviation’s journey from the Wright brothers to the moon landing, suggesting that AI’s “scale race” will give way to phases of optimization and specialization. Some argue that the current products and business models of top LLM labs constrain their AI research and may prevent them from being the first to achieve superintelligence. The commercialization and branding of the term AGI raises questions about its technical substance. There are also concerns that 70% of future interactions will be with LLM wrappers, and dissatisfaction with the over-censorship and sanitization of AI tools. (Source: Reddit r/ArtificialInteligence, far__el, rao2z, vikhyatk, Reddit r/ChatGPT)

AI Community Culture and Humor : Discussions in the AI community touch on the anthropomorphization of AI models, such as claims that “my AI is conscious/sentient.” There are also jokes about user reactions to Claude’s memory update, as well as humorous complaints about AI researchers’ daily lives and social media exchanges among AI leaders. (Source: Reddit r/ArtificialInteligence, nptacek, vikhyatk, code_star, Reddit r/ChatGPT)

AI Conference Model and Academic Publishing Challenges : A paper argues that the current AI conference model is unsustainable, citing surging publication volumes, carbon emissions, a mismatch between research lifecycles and conference schedules, venue capacity crises, and mental health issues. It suggests decoupling publication from conferences, as other academic fields do. (Source: Reddit r/MachineLearning)

AI Benchmark Testing and Model Evaluation Controversy : Questions were raised about OpenAI’s updated SWE-bench Verified score chart, noting that not all of the benchmark’s tasks were run. Meanwhile, researchers found that LLMs’ “simulated reasoning” is a “brittle mirage” that excels at producing fluent nonsense rather than sound logical reasoning. These discussions reflect the complexity and challenges of evaluating AI models. (Source: dylan522p, Reddit r/artificial)

AI Chip Policy and Reporting Criticism : Commenters criticized journalists for describing the Nvidia H20 as an “advanced chip,” noting that the H20 is about four years behind the B200 and has far less compute, memory bandwidth, and memory capacity. They argued that selling the H20 to China is good policy because it can slow the development of China’s domestic AI accelerator ecosystem and widen the gap between China’s open-source AI ecosystem and U.S. closed-source models. (Source: GavinSBaker)

User Demand for LLM Pricing and Compute Services : Some users are calling for OpenAI/Google to offer services billed by compute hours, letting reasoning models “think” about a problem for extended periods instead of simulating this through multiple API calls; they argue this would make it possible to compare models under the same compute budget. (Source: MParakhin)

💡 Other

AI Applications in Finance : AI-driven financial data analysis plays a crucial role in making smarter strategic decisions, enhancing the financial industry’s analytical efficiency and decision quality. (Source: Ronald_vanLoon, Ronald_vanLoon)

Google’s AI Strategy and Competition : A discussion of Google’s potential major moves in AI; commenters suggest that Google’s hardware advantage with TPUs and its deeper research in search, RL, and diffusion world models could make it a greater threat to OpenAI. (Source: Reddit r/LocalLLaMA)

Hugging Face AMA Event : Hugging Face CEO Clement Delangue announced an upcoming AMA (Ask Me Anything) event, offering the community a chance for direct interaction. (Source: ClementDelangue)