Keywords: MiroMind ODR, GPT-5, UBTECH Humanoid Robot, DeepMind Genie 3, LangChain, AI Sovereignty, Reinforcement Learning, RAG System, GAIA Test Score 82.4, GPT-5 Generated 3D Game, Walker S2 Autonomous Battery Replacement Robot, LangGraph Agents Framework, Dynamic Fine-Tuning DFT Algorithm

🔥 Spotlight

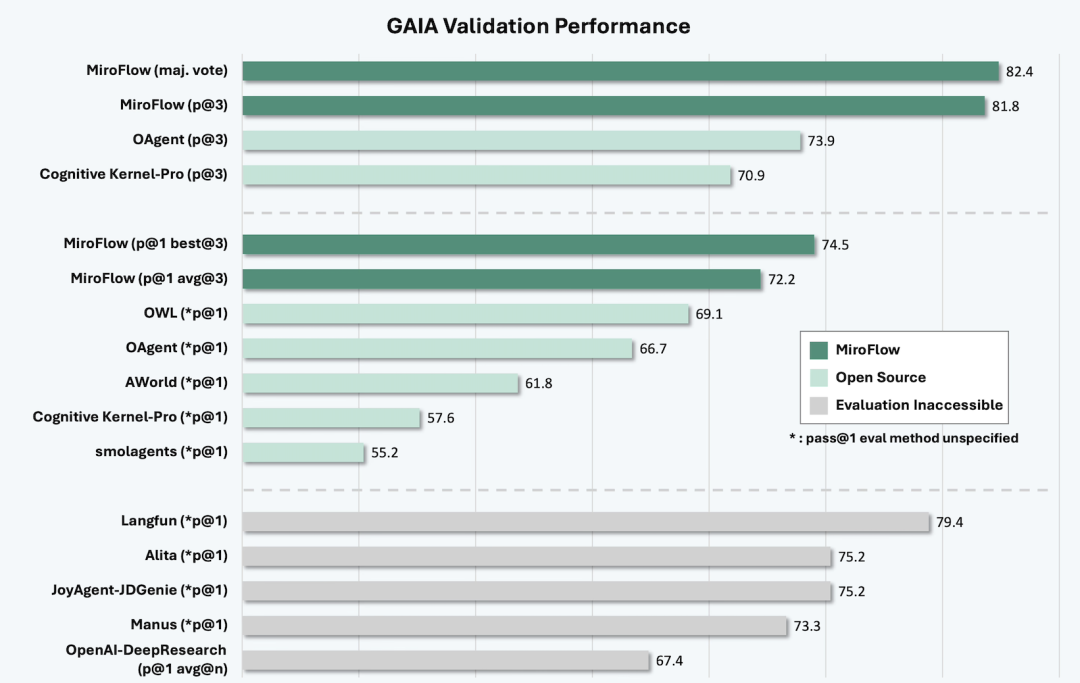

MiroMind ODR Released, Dai Jifeng and Chen Tianqiao Jointly Create the Strongest Open-Source Deep Research Model: MiroMind ODR scored 82.4 on the GAIA benchmark, surpassing models such as OpenAI DeepResearch, and its core model, data, training pipeline, AI infrastructure, and DR Agent framework are all fully open source. The project is the debut of Dai Jifeng, former Principal Researcher at Microsoft Research Asia, since he joined Chen Tianqiao’s Shanda Group. The team aims to conduct foundational research toward AGI and plans to ship open-source updates monthly. Its emphasis on genuinely reproducible, fully open-source releases, combined with leading performance on deep research reasoning, marks a notable advance for open-source AI research. (Source: 量子位)

🎯 Trends

GPT-5 Released: Generates 3D Games in Minutes, Sparking Widespread Industry Discussion: OpenAI released GPT-5 and demonstrated that it can generate 3D games from text instructions in minutes, including a physics-engine-driven “3D Breakout game,” and can compile Unity/UE5 scripts in real time. Chart errors during the presentation and inconsistent performance reported by users sparked controversy. Even so, its potential to speed up game development and its above-human-average scores on benchmarks such as SimpleBench point to significant progress in complex task handling and creativity. (Source: 量子位, 36氪)

UBTECH Releases Multiple Humanoid Robots, Focusing on Swarm Intelligence and Industrial Applications: UBTECH unveiled five major humanoid robots at the World Robot Conference, including Walker S2 (the world’s first humanoid robot capable of autonomous battery swapping) and Cruzr S2. Using “Swarm Brain Network 2.0 + Intelligent Co-Agent” technology, the robots achieve cross-domain integrated perception, hybrid intelligent decision-making, and multi-robot collaborative control, and UBTECH demonstrated swarm operation solutions for industrial manufacturing, commercial services, and scientific research and education. The stated goal is to foster “new quality productive forces” and raise overall operational efficiency. (Source: 量子位)

DeepMind Releases Genie 3, Google Gemini 2.5 Adds Native Audio Capability: DeepMind officially launched Genie 3, further advancing AI’s capabilities in 3D/object/scene reconstruction, hailed as “better than any image-to-3D model.” Concurrently, Google Gemini 2.5 announced the addition of native audio functionality, enhancing the model’s performance in multimodal interaction. These advancements signal deeper integrated applications of AI in the visual and auditory domains. (Source: Ronald_vanLoon, Vtrivedy10, Ronald_vanLoon)

AI Sovereignty Concept Rises, Reshaping Global Enterprise AI Strategies: With the rapid global development of AI technology, discussions around “AI sovereignty” are increasing. This concept emphasizes the autonomy of nations and enterprises in AI technology development, data control, and deployment. It is expected to profoundly influence the AI strategic layouts of global enterprises, prompting countries to seek independence and competitiveness in the AI domain to address an increasingly complex international technological competitive landscape. (Source: Ronald_vanLoon)

Geely Group Launches Satellites to Support Autonomous Vehicle Development: Geely Group, China’s third-largest automaker, has launched 11 satellites to support positioning, communication, and autonomous driving functions for its vehicles. Currently, 41 satellites have been deployed, with the total number set to reach 64 within the next two months. This move signifies the automotive industry’s proactive exploration of integrating satellite technology for higher levels of autonomous driving, aiming to enhance vehicles’ precise navigation and real-time data transmission capabilities. (Source: bookwormengr)

🧰 Tools

LangChain Launches LangGraph Agents and CLI, Enhancing AI Agent Development Capabilities: LangChain released LangGraph, a workflow framework for building stateful AI Agents with planning capabilities, and provided the LangGraph CLI tool, supporting direct management of assistants, threads, and runs from the terminal for real-time stream processing. Additionally, LangChain partnered with Oxylabs to launch a Web Scraper API integration module, providing advanced web scraping capabilities for AI applications, addressing IP blocking and CAPTCHA issues, and enhancing Agent reliability. (Source: LangChainAI, LangChainAI, LangChainAI, hwchase17)

DSPy Framework Helps LLMs Output Structured and Predictable Responses: DSPy provides a declarative framework designed to address inconsistent LLM outputs and messy code, helping developers obtain structured, predictable responses. Through its well-designed abstraction layers, including signatures, modules, and adapters, the framework simplifies the building and optimization of LLM applications, gaining widespread community attention and being considered an important tool for building AI systems. (Source: lateinteraction, lateinteraction)

Qwen3-Coder 480B Becomes Anycoder’s Default Model, Boosting AI Programming Efficiency: Qwen3-Coder 480B has been adopted as Anycoder’s default model, improving the speed and experience of AI-assisted programming. Users report that it quickly generates well-designed code and can even build interactive Win95 desktop applications from a single prompt. The Qwen team has also released the Qwen Code command-line tool and plans to keep optimizing the model, through open-source methods, until it matches Claude Code’s performance. (Source: _akhaliq, jeremyphoward, jeremyphoward)

Open WebUI Explores Integration with Microsoft Graph API for Enterprise-Grade RAG Applications: The Open WebUI community is actively exploring integration with the Microsoft Graph API to enable enterprise-grade RAG (Retrieval-Augmented Generation) applications based on local LLMs. This will allow users to query and manage their data in M365, SharePoint, OneDrive, Outlook, and Teams via AI, and potentially support data write-back. The solution aims to ensure data security and personalized access through user credential passing and permission management. (Source: Reddit r/OpenWebUI, Reddit r/OpenWebUI)

ccusage Integrates Claude Code Status Bar, Providing Real-time Usage Cost Tracking: The ccusage tool now integrates with Claude Code’s new status bar feature, providing developers with real-time session costs, total cost today, 5-hour block costs, and remaining time, with color indicators for burn rate. This feature aims to help users better manage Claude Code usage costs, especially as its stricter limits are about to take effect, providing instant and convenient cost visualization. (Source: Reddit r/ClaudeAI)

AI-Assisted Scientific Plotting: YOLOv12 and Gemini Combined to Extract and Tag Scientific Charts: A new tool, Plottie.art, utilizes a customized YOLOv12 model for subplot segmentation and combines it with the Google Gemini API to classify and extract keywords from over 100,000 scientific charts. This approach, combining specialized visual models with general-purpose LLMs, efficiently generates structured metadata for charts in scientific literature, making them searchable and significantly improving researchers’ efficiency in finding data visualization inspiration. (Source: Reddit r/MachineLearning)

Herdora Launches GPU Inference Performance Analysis Tool, Accelerating ML Models: Herdora released a new GPU inference performance analysis tool that, by adding a decorator to inference code, generates detailed computation time traces and can delve into Python, CUDA kernels, and PTX assembly levels, showing memory movement and kernel bottlenecks. The tool has achieved over 50% acceleration on Llama models, aiming to help developers optimize the inference speed of locally running models. (Source: Reddit r/deeplearning)
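To make the decorator-based workflow concrete, here is a minimal sketch of a timing decorator in plain Python. It is not Herdora’s API: the names `trace_time` and `fake_inference` are hypothetical, and the sketch records only wall-clock time per call, with none of the CUDA kernel or PTX-level detail the item describes.

```python
import functools
import time


def trace_time(fn):
    """Hypothetical decorator: record the wall-clock duration of each call."""

    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            # Append the elapsed seconds even if the wrapped call raises.
            wrapper.timings.append(time.perf_counter() - start)

    wrapper.timings = []  # one entry per call, in seconds
    return wrapper


@trace_time
def fake_inference(n):
    """Stand-in for a model forward pass (illustrative workload only)."""
    return sum(i * i for i in range(n))


result = fake_inference(1000)
```

After the call, `fake_inference.timings` holds one duration. A real profiler would attach far richer trace data at the same hook point.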

GPT-5 Helps Developers ‘Vibecode’ Visual Novel Game Engine: A developer used GPT-5 to “Vibecode” a visual novel game engine from scratch in 9 hours on a Saturday. Through conversations with GPT-5, he gradually built plans and wrote code in stages, without using an AI IDE throughout the process. This demonstrates GPT-5’s powerful capability in assisting rapid prototype development and creative programming, providing significant support even for complex projects. (Source: SamWolfstone)

Replit Helps Non-Developers Quickly Build AI Applications: The Replit platform is enabling non-developers to quickly build and deploy applications through its simplified development environment and AI-assisted features. For example, one user built an application to analyze Shopify stores using Replit in two hours. This trend suggests that the “Vibecoding” workflow will greatly expand the market for coding tools, allowing more people to participate in the creation of AI applications. (Source: amasad, amasad)

Cursor Launches ‘Memory’ Feature, Enhancing AI-Assisted Programming Experience: AI programming tool Cursor is launching a “Memory” feature, aiming to enhance the efficiency and intelligence of its assisted programming. This feature is expected to allow AI to remember user preferences, project context, and common issues for longer, thereby providing more coherent, personalized programming support, reducing the need for repetitive instructions and context switching, and further optimizing developers’ workflows. (Source: mathemagic1an)

Qwen3 Model Supports Flowchart Generation, Enhancing Visualization Capabilities: The Qwen3-235B-A22B-2507 model can now generate flowcharts in Mermaid format, enabling visualization through frontend rendering. This feature allows LLMs not only to process text and code but also to directly generate diagrams, greatly enhancing their assistive capabilities in areas like architecture design and project planning, providing users with a more intuitive interactive experience. (Source: Reddit r/LocalLLaMA)

Google AI Coding Agent Jules Exits Beta, Officially Released: Google’s AI coding agent Jules has exited its beta phase and is now officially released. This tool aims to assist developers with coding via AI, improving development efficiency. Its release marks Google’s further strategic move in the AI programming tools domain, offering developers new options to address increasingly complex software development challenges. (Source: Ronald_vanLoon)

OpenAI Releases Harmony, Potentially a New Prompt Standard: Alongside the release of GPT-OSS, OpenAI launched Harmony, an open-source (Apache 2.0) response format designed to unify prompt templates. Harmony extends role definitions (system, developer, tool), introduces output channels (final, analysis, commentary), and adds special tokens. It could become the default format for agent applications, encouraging open-source community adoption and easing future migration to OpenAI’s more powerful multimodal APIs. (Source: TheTuringPost)
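The combination of roles, channels, and special tokens can be illustrated with a simplified renderer. This is a sketch, not OpenAI’s official implementation: the `Message` class and both functions are hypothetical helpers, and the token layout (`<|start|>`, `<|channel|>`, `<|message|>`, `<|end|>`) is a reduced version of the published format that omits details such as tool calls and the stop token used for a sampled final answer.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Message:
    """Hypothetical container for one Harmony-style message."""
    role: str                      # e.g. "system", "developer", "user", "assistant"
    content: str
    channel: Optional[str] = None  # e.g. "analysis" or "final" for assistant output


def render_message(msg: Message) -> str:
    """Wrap one message in special tokens (simplified layout, see lead-in)."""
    header = msg.role
    if msg.channel is not None:
        header += f"<|channel|>{msg.channel}"
    return f"<|start|>{header}<|message|>{msg.content}<|end|>"


def render_conversation(messages: List[Message]) -> str:
    """Concatenate rendered messages into one prompt string."""
    return "".join(render_message(m) for m in messages)


conversation = [
    Message("system", "You are a helpful assistant."),
    Message("developer", "Answer in one sentence."),
    Message("user", "What is 2 + 2?"),
    Message("assistant", "Simple arithmetic.", channel="analysis"),
    Message("assistant", "2 + 2 = 4.", channel="final"),
]
prompt = render_conversation(conversation)
```

Separating the `analysis` channel from the `final` channel lets an application show users only the final answer while keeping the intermediate reasoning apart.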

LlamaCloud Offers MCP-ready Document Knowledge Base for Building Enterprise Customer Support Agents: LlamaCloud provides an “MCP-ready” document knowledge base capable of efficiently processing large volumes of enterprise policy documents and integrating with LlamaIndex multi-agent systems. This enables enterprises to build intelligent customer support Agents, for example, processing thousands of pages of commercial banking agreements and answering complex user queries without manual cross-referencing, significantly improving customer service efficiency and accuracy. (Source: jerryjliu0)

📚 Learning

Guide to Fine-tuning Embedding Models in RAG Systems for Improved Retrieval Performance: A comprehensive technical article details how and when to fine-tune custom text embedding models in RAG (Retrieval-Augmented Generation) systems to enhance retrieval performance. The article delves into the necessity, methods, and practices of fine-tuning, offering valuable guidance for developers looking to optimize RAG system efficiency and accuracy. (Source: dl_weekly)

LangChain Releases Agent Reliability Guide, Assisting Hallucination Detection and Tool Monitoring: LangChain released a practical guide aimed at helping developers improve the reliability of LangChain/LangGraph applications’ Agents. The guide provides methods for detecting hallucinations, verifying groundedness, and monitoring tool usage, which are crucial for building stable, trustworthy AI Agents and help address potential errors and unpredictable behaviors of Agents in complex tasks. (Source: LangChainAI)

Diffusion Language Models Outperform Autoregressive Models in Data-Constrained Scenarios: A study indicates that Diffusion Language Models (DLMs) outperform Autoregressive (AR) models when training data is limited, showing more than three times the data utilization potential. Even a 1B-parameter DLM trained on only 1B tokens scored 56% on HellaSwag and 33% on MMLU, with no sign of saturation. The result offers a new angle on the “token crisis” and challenges existing research methodologies. (Source: dilipkay, arankomatsuzaki)

Reinforcement Learning Overview: Kevin P. Murphy’s ‘Reinforcement Learning: An Overview’: Kevin P. Murphy’s ‘Reinforcement Learning: An Overview’ is hailed as a must-read free book, comprehensively covering various reinforcement learning methods, including value-based RL, policy optimization, model-based RL, multi-agent algorithms, offline RL, and hierarchical RL. This resource provides a valuable theoretical foundation for AI learners to deeply understand RL. (Source: TheTuringPost)

New Attempt at Pre-training Language Models from Scratch with RL: A study explores the possibility of pre-training language models from scratch using pure reinforcement learning, i.e., without relying on cross-entropy loss pre-training. This experimental work aims to break through traditional pre-training paradigms and open new paths for language model training. Although still in early stages, its potential for disruption is noteworthy. (Source: tokenbender, natolambert)

Dynamic Fine-tuning (DFT) as a Generalized Upgrade to SFT: Researchers from Southeast University and other institutions proposed Dynamic Fine-tuning (DFT), which reframes SFT (Supervised Fine-Tuning) in reinforcement learning terms and stabilizes per-token updates by re-scaling the objective function. DFT outperforms standard SFT and, in some cases, rivals RL methods like PPO, DPO, and GRPO, offering a more stable and efficient approach to model fine-tuning. (Source: TheTuringPost, TheTuringPost)
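One way to read “re-scaling the objective function” is sketched below. It assumes the rescaling factor is the model’s own probability of the target token, treated as a constant weight (no gradient would flow through it in a real training loop). That reading and the function names are assumptions made for illustration, not details confirmed by the item.

```python
import math


def sft_token_losses(token_probs):
    """Standard SFT: negative log-likelihood of each target token."""
    return [-math.log(p) for p in token_probs]


def dft_token_losses(token_probs):
    """Assumed DFT-style loss: each token's NLL is weighted by its own
    probability, which damps the contribution of very unlikely tokens."""
    return [-p * math.log(p) for p in token_probs]


# Probabilities the model assigns to three target tokens (made-up values).
probs = [0.9, 0.5, 0.01]
sft = sft_token_losses(probs)
dft = dft_token_losses(probs)
```

For the low-probability token (0.01), the SFT loss is large while the rescaled loss is about a hundredth of it, which matches the stabilizing effect on per-token updates that the item describes.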

GRPO and GSPO: Application and Optimization of Chinese RL Algorithms in Reasoning Tasks: Group Relative Policy Optimization (GRPO) and Group Sequence Policy Optimization (GSPO) are two prominent reinforcement learning algorithms from Chinese AI labs. GRPO optimizes a policy by comparing the relative quality of a group of generated answers, which removes the need for a Critic model and suits reasoning-intensive tasks. GSPO improves stability by optimizing at the sequence level, which makes it especially suitable for MoE models. These algorithms provide new optimization strategies for complex reasoning tasks and large-scale model training. (Source: TheTuringPost, TheTuringPost)
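The group-relative comparison at the heart of GRPO can be sketched in a few lines: each answer’s reward is normalized against the mean and standard deviation of its own group, so no learned Critic is needed to provide a baseline. The function name, the use of the population standard deviation, and the `eps` term are illustrative assumptions. The sketch covers only the advantage computation, not the full policy update.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own group's statistics.

    rewards: scores for several answers sampled for the same prompt.
    eps: small constant (an assumption) to avoid division by zero when
         every answer in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one prompt: two judged correct (1.0), two incorrect (0.0).
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Correct answers receive a positive advantage and incorrect ones a negative advantage of equal magnitude, so the policy is pushed toward answers that beat the group average.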

Guide to Implementing Short-term and Long-term Memory for AI Agents: Google Cloud published a blog post detailing how to implement short-term and long-term memory for AI Agents using the Agent Development Kit (ADK) and Vertex AI Memory Bank. This is crucial for building intelligent Agents capable of understanding context, engaging in multi-turn conversations, and remembering historical interactions, representing a key technology for enhancing Agent utility and complexity. (Source: dl_weekly)

RAG Pipeline Integration Guide with KerasHub: KerasHub provides a new guide demonstrating how to build RAG (Retrieval-Augmented Generation) pipelines. This tutorial offers developers practical methods for integrating KerasHub components into RAG systems, helping to improve model question-answering capabilities in specific knowledge domains, and serving as a valuable guide for users looking to build efficient Q&A systems using existing models and knowledge bases. (Source: fchollet)

💼 Business

XD Inc. Strategically Invests in AI Gaming Company MiAO, Entering AI Gaming Sector: XD Inc. announced a strategic investment of $14 million in AI gaming company MiAO, acquiring a 5.30% stake, valuing MiAO at $264 million. MiAO was founded by Wu Meng, former CEO of Giant Network, and its team possesses extensive experience in game development. This investment represents a significant strategic move for XD Inc. in the AI gaming sector, aiming to promote the application of AI technology in game development and operations through capital cooperation. (Source: 36氪)

AI Coding Tools Face Negative Gross Margin Challenge, Open Source and Transparent Pricing Key to Breakthrough: TechCrunch reported that AI coding tools generally face “very negative” gross margins, meaning each user is incurring a loss. This indicates that the current business model is unsustainable. Industry opinion suggests that open-sourcing and transparent pricing might be key to resolving this dilemma, helping to establish a healthier competitive environment and incentive mechanisms, and promoting the positive development of the AI coding tool market. (Source: cline)

Fierce Talent War in AI Industry, AI Engineer Salaries Soar: With the rapid advancement of AI technology, the demand for specialized talent in the AI field has surged, leading to continuously rising salaries for AI engineers. This phenomenon reflects the fierce competition for top technical talent in the AI industry and companies’ investment in vying for core AI competitiveness. High salaries have become an important means to attract and retain AI talent, further intensifying the “war” in the talent market. (Source: YouTube – Lex Fridman)

🌟 Community

GPT-5 Release Sparks Strong User Backlash, Demands for GPT-4o’s Return and Questions on Model Performance: Following OpenAI’s release of GPT-5, a large number of users expressed dissatisfaction, complaining that its performance was inferior to GPT-4o, even exhibiting “failures” in simple tasks like mathematics and information extraction, and feeling confused about GPT-5’s “thinking mode” and pricing strategy. The Reddit community was flooded with calls of “Bring back GPT-4o,” with many users believing GPT-5 lacked 4o’s “personality” and “fluidity,” questioning OpenAI’s release strategy and model naming. Sam Altman responded by stating that Plus users would regain access to 4o and admitted the release process was “more turbulent than expected.” (Source: Yuchenj_UW, brickroad7, scaling01, scaling01, scaling01, scaling01, TheZachMueller, francoisfleuret, joannejang, raizamrtn, mathemagic1an, akbirkhan, scaling01, natolambert, blader, jon_durbin, scaling01, scaling01, farguney, scaling01, scaling01, EdwardSun0909, Reddit r/LocalLLaMA, Reddit r/ChatGPT, Reddit r/MachineLearning, Reddit r/artificial, jeremyphoward, nrehiew_, gallabytes)

AI Companions Spark Social Concern, Users’ Deep Emotional Reliance on GPT-4o Revealed: After GPT-5’s release, the removal of GPT-4o revealed some users’ deep emotional reliance on AI companions, with their reactions even described as “sadness” or “losing a friend.” Particularly for neurodivergent individuals, GPT-4o offered a non-judgmental cognitive companionship space, helping them process emotions and plan their lives. Community discussions call for acknowledging this emotional connection and cautioning against companies’ potential impact on users’ emotional lives, emphasizing that AI tools should provide assistance while avoiding excessive dependency. (Source: DeepLearningAI, Reddit r/artificial, Reddit r/ChatGPT, Reddit r/ChatGPT, shaneguML)

LLMs Becoming Overly Agentic and ‘Overthinking’ Raises Expert Concerns: OpenAI co-founder Ilya Sutskever predicted that AI would be able to perform all human tasks, sparking discussions about profound societal changes in the future. However, AI expert Karpathy observed that LLMs are becoming “overly agentic,” defaulting to an “overthinking” mode, leading to excessive time spent on simple queries and even over-analysis in code assistance. This trend contrasts with users’ demand for “friendly, direct” AI, highlighting the challenge of balancing intelligence and practicality in AI models. (Source: karpathy, Reddit r/ArtificialInteligence, colin_fraser)

AGI Definition and Development Prospects Spark Controversy, Labeled a ‘Marketing Term’: The community remains divided over the definition of AGI (Artificial General Intelligence) and the path to realizing it. Some argue that AGI is currently just a “marketing term” that lacks clear standards and testable metrics, and that current LLM architectures cannot meet its core requirements (e.g., cognitive symbol grounding, proactive information generalization, metacognition). Others believe AGI is achievable, emphasize its disruptive impact on the labor market and economy, and consider the competition around AGI to be the most important technological race in human history. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

AI-Generated Content ‘Effort Heuristic’ Bias: More Effort, Higher Value?: Social media discussions point out that people’s evaluation of AI-generated content may suffer from an “effort heuristic” bias, meaning that when AI is perceived to have put in more effort or time, even if the outcome is the same, it is assigned higher value. This cognitive bias is particularly evident in areas like AI art and video generation, potentially leading users to have unrealistic expectations for “slow but refined” AI products, affecting their judgment of AI’s true capabilities. (Source: c_valenzuelab, c_valenzuelab)

Reddit Becomes Major Source of AI Training Data, Raising Concerns Over Content Quality: Reddit has been identified as a significant source of AI training data, with some companies signing data sales agreements specifically for this purpose. This has raised community concerns about the future quality of AI systems: as AI-generated content and bot comments proliferate, models might end up “eating their own dog food,” training on degraded data that in turn hurts their performance and reliability. (Source: Reddit r/ClaudeAI, typedfemale)

Impact of AI on Creative Workflows: The Trade-off Between Speed and Growth: The community discussed the impact of AI tools (such as MusicGPT) on creative workflows. While AI can significantly accelerate the creative process, for example, by quickly generating melodies, it also sparked reflection on whether “skipping the grind” might hinder creators’ personal growth and style formation. The discussion suggests that over-reliance on AI might cause creators to lose opportunities to accumulate experience and develop unique styles through micro-decisions. (Source: Reddit r/deeplearning)

AI Model Benchmark Controversy: OpenAI SWE-Bench Data Questioned: The community questioned OpenAI’s claimed 74.9% accuracy in the SWE-Bench benchmark, pointing out that it might have exaggerated performance by running on only 477 problems (instead of all 500). This concern over the transparency and fairness of benchmark testing methods reflects the industry’s growing attention to AI model performance evaluation standards and criticism of “benchmark maximization” behavior. (Source: akbirkhan, jeremyphoward)
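The size of the possible gap can be bounded with simple arithmetic, using only the figures quoted above (74.9%, 477, 500). The assumption that the omitted problems would be scored as either all failures or all successes is ours and serves only to bracket the full-set score.

```python
reported_accuracy = 0.749  # accuracy claimed on the subset
problems_run = 477         # problems actually evaluated
problems_total = 500       # full benchmark size

# Number of solved problems implied by the reported accuracy (not an integer,
# so the published figure may be an average or a rounded value).
implied_solved = reported_accuracy * problems_run
omitted = problems_total - problems_run

# Bracket the full-set score: omitted problems all failed vs. all solved.
lower_bound = implied_solved / problems_total
upper_bound = (implied_solved + omitted) / problems_total

print(f"omitted problems: {omitted}")
print(f"full-set accuracy between {lower_bound:.1%} and {upper_bound:.1%}")
```

Under these assumptions the full-set score lies between roughly 71.5% and 76.1%, so the 23 omitted problems can shift the headline number by several points in either direction.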

OpenAI Model Naming and Routing Strategy Cause User Confusion and Dissatisfaction: Following the release of OpenAI’s GPT-5, its complex model naming (e.g., GPT-5, GPT-5 Thinking, GPT-5 mini) and opaque internal routing mechanisms (where users cannot determine the specific model currently in use) sparked widespread user confusion and dissatisfaction. Users complained that this strategy led to a degraded experience and limited access to superior models. OpenAI has stated it will improve transparency and allow users to view the current model. (Source: scaling01, scaling01, jeremyphoward, Teknium1, VictorTaelin)

LLMs Still Have Limitations in Multimodal Tasks, e.g., Image Counting Bias: Although LLMs have made progress in multimodal capabilities, limitations still exist. For instance, in image counting tasks, SOTA VLMs (such as o3, o4-mini, Sonnet, Gemini Pro), when faced with modified images (e.g., a zebra with five legs), give incorrect counts due to bias, failing to accurately identify the true content of the image. This indicates that models still need improvement in visual reasoning and detail comprehension. (Source: OfirPress, andersonbcdefg)

OpenAI Researcher Emphasizes ‘Usage is the Best Evaluation Metric’: OpenAI researcher Christina Kim stated that frontier evaluation of AI models is no longer solely about benchmarks but about actual usage. She argues that benchmark scores have saturated and that the number of real tasks users complete with AI in their daily lives is the true signal of AI’s progress and its proximity to AGI. This perspective emphasizes the core position of user experience and practical application value in AI development. (Source: nickaturley, markchen90)

Bill Gates’ Predictions on AI Spark Community Discussion: Bill Gates’ predictions on AI development sparked discussion in the community. While some users believe his predictions do not align with GPT-5’s actual performance, questioning if he is “out of touch,” others argue that Gates’ insights still hold reference value in the long run. This reflects the public’s continuous attention to AI’s future development path and a high level of scrutiny towards industry leaders’ views. (Source: Reddit r/MachineLearning)

Discussion on AI Models Surpassing Human Intelligence and Creative Bottlenecks: The community discussed the phenomenon of AI models surpassing human performance in exams and benchmarks, such as LLMs “effortlessly surpassing” Einstein’s high school grades. However, the discussion also pointed out that while AI excels at solving established problems, its ability to propose revolutionary theories “from scratch” (such as the theory of relativity) remains questionable. This sparked philosophical reflection on the fundamental differences between human and machine intelligence, specifically whether “benchmark maximization” is sufficient to measure true creativity and intellectual leaps. (Source: sytelus)

💡 Other

AI-Assisted Conceptual Search, Moving Beyond Keyword Limitations: AI technology is driving a shift in search methods from traditional keyword matching to conceptual search. This means users can retrieve information through more abstract, semantically richer concepts, rather than solely relying on precise keywords. This transformation will greatly enhance search intelligence and efficiency, allowing users to more conveniently discover and understand complex information. (Source: nptacek)

Concerns Raised Over AI-Generated Content’s Impact on Children, Call for ‘Developmentally Friendly’ Content: Community discussions expressed concern over the potential negative impact of AI-generated content (especially visual content) on children, suggesting it might be too crude, lack depth, and potentially lead to “dopamine rushes.” Some views call for the development of “developmentally friendly” generative AI content, such as interactive lessons, to ensure the healthy application of AI technology in children’s education and entertainment. (Source: teortaxesTex)

AI Robots May Take Over Most Manual Labor Tasks: With the rapid development of AI and robotics, embodied intelligent devices like humanoid robots are expected to take over most manual labor tasks currently performed by humans within the next few years. This trend signals structural changes in the labor market, which will greatly boost production efficiency but also pose new challenges for human employment and social division of labor. (Source: adcock_brett)