Keywords: Gemini 2.5 Deep Think, XBOW AI Agent, Seed Diffusion LLM, OpenAI open-source models, AI Agent, Multimodal reasoning models, LLM training, AI security, Parallel thinking technology, AI penetration testing tools, Discrete state diffusion models, Sparse MoE architecture, AI health large models

🔥 Spotlight

Gemini 2.5 Deep Think IMO Gold Medal Model Released: Google DeepMind has released the Gemini 2.5 Deep Think model, which achieved gold-medal-level performance at the International Mathematical Olympiad (IMO) using “parallel thinking” and reinforcement learning techniques. The model is now available to Google AI Ultra subscribers and has also been provided to mathematicians for in-depth feedback. It excels at complex mathematics, reasoning, and coding, marking a significant advance in AI reasoning and offering a new tool for tackling complex scientific problems. (Source: Logan Kilpatrick)
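DeepMind has not detailed how “parallel thinking” works internally. The general idea is to explore several solution paths at once and then combine them. The sketch below illustrates this with plain self-consistency: independent attempts run concurrently and the most common final answer wins. `solve_once` is a hypothetical stand-in for a model call, and majority voting is a swapped-in aggregation rule, not Deep Think's own method.

```python
import random
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def solve_once(problem: str, seed: int) -> str:
    """Hypothetical stand-in for one sampled reasoning path.

    It returns the correct answer "42" about 70% of the time and a random
    guess otherwise; a real system would call a model here.
    """
    rng = random.Random(seed)
    return "42" if rng.random() < 0.7 else str(rng.randint(0, 99))


def parallel_think(problem: str, n_paths: int = 16) -> tuple:
    """Run n_paths attempts concurrently, then majority-vote the final answers."""
    with ThreadPoolExecutor(max_workers=8) as pool:
        answers = list(pool.map(lambda seed: solve_once(problem, seed), range(n_paths)))
    best, votes = Counter(answers).most_common(1)[0]
    return best, votes / n_paths  # chosen answer and its agreement rate


answer, agreement = parallel_think("toy problem", n_paths=64)
print(answer, round(agreement, 2))
```

Voting only works when answers can be compared exactly. Proof-style outputs like IMO solutions would need a learned or model-based judge in place of `Counter`.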

XBOW AI Agent Becomes Top Global Hacker: XBOW, an autonomous AI penetration testing tool, has reached first place on HackerOne’s global leaderboard, a milestone for AI Agents in cybersecurity. XBOW can discover vulnerabilities autonomously and will be demonstrated live at the BlackHat conference, showcasing AI’s capabilities and potential in automated security testing and signaling that cybersecurity offense and defense are entering the AI era. (Source: Plinz)

ByteDance Releases Seed Diffusion LLM for Code: ByteDance has released Seed Diffusion Preview, a high-speed code-generation LLM based on discrete-state diffusion. Its inference speed reaches up to 2146 tokens/second (on H20 GPUs), surpassing Mercury and Gemini Diffusion while maintaining comparable performance on standard code benchmarks. This sets a new point on the speed-quality Pareto frontier and opens a new technical direction for code generation. (Source: jeremyphoward)
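Seed Diffusion's exact decoding procedure is not described here. The sketch below shows the generic mechanism behind mask-based discrete diffusion decoding, which is where such models get their speed. Decoding starts from an all-masked sequence, and each denoising step commits several of the most confident token proposals in parallel rather than one token at a time. `toy_denoiser` is hypothetical and simply knows the target snippet. Its confidence values are invented so that tokens get filled in out of left-to-right order.

```python
MASK = "<mask>"
TARGET = ["def", "add", "(", "a", ",", "b", ")", ":", "return", "a", "+", "b"]


def toy_denoiser(seq):
    """Hypothetical denoiser: for every masked slot, propose (token, confidence).

    It simply 'knows' TARGET; the made-up confidences favor some later
    positions over earlier ones, so decoding is not left to right.
    """
    return {
        i: (TARGET[i], ((i * 7) % 12) / 12)
        for i, tok in enumerate(seq)
        if tok == MASK
    }


def diffusion_decode(length, steps):
    """Start fully masked; each step commits the most confident proposals in parallel."""
    seq = [MASK] * length
    per_step = -(-length // steps)  # ceiling division: tokens unmasked per step
    n_steps = 0
    while MASK in seq:
        proposals = toy_denoiser(seq)
        ranked = sorted(proposals.items(), key=lambda kv: kv[1][1], reverse=True)
        for i, (tok, _conf) in ranked[:per_step]:
            seq[i] = tok
        n_steps += 1
    return seq, n_steps


tokens, n_steps = diffusion_decode(len(TARGET), steps=4)
print(n_steps, " ".join(tokens))  # prints: 4 def add ( a , b ) : return a + b
```

Twelve tokens are produced in four model calls instead of twelve sequential ones. Real systems trade off fewer steps against quality, which is the speed-quality frontier mentioned above.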

OpenAI Open-Source Model Information Accidentally Leaked: Configuration details for OpenAI’s open-source models (gpt-oss-120B MoE and a 20B model) were accidentally leaked, sparking widespread community discussion. The leak points to a sparse MoE architecture (36 layers, 128 experts, 4 active experts per token), possibly trained in FP4, with 128K context support and GQA plus sliding-window attention to reduce memory and compute. This suggests OpenAI is about to release a high-performance, efficient open-source model, which could have a profound impact on the local LLM ecosystem. (Source: Dorialexander)
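A sparse MoE layer with 128 experts and 4 active per token runs only the experts its router selects. The sketch below shows one common top-k gating variant: take the 4 highest router logits and softmax-normalize over just those. The leaked config gives only the expert counts. The gating variant, the toy experts, and the router logits here are assumptions for illustration.

```python
import math

NUM_EXPERTS = 128  # from the leaked config
TOP_K = 4          # active experts per token, from the leaked config


def top_k_route(router_logits, k=TOP_K):
    """Select the k highest-scoring experts and softmax-normalize over just those k.

    This is one common top-k gating variant; the leak does not say which one gpt-oss uses.
    """
    top = sorted(range(len(router_logits)), key=lambda e: router_logits[e], reverse=True)[:k]
    m = max(router_logits[e] for e in top)  # subtract the max for numerical stability
    exps = {e: math.exp(router_logits[e] - m) for e in top}
    z = sum(exps.values())
    return {e: w / z for e, w in exps.items()}


def moe_forward(x, experts, router_logits, k=TOP_K):
    """Weighted sum over only the selected experts; the other experts are never evaluated."""
    gates = top_k_route(router_logits, k)
    return sum(w * experts[e](x) for e, w in gates.items())


# Toy experts: expert e just scales its input by e.
experts = [lambda x, s=e: s * x for e in range(NUM_EXPERTS)]
logits = [0.0] * NUM_EXPERTS
for e in (5, 10, 20, 30):
    logits[e] = 1.0
print(top_k_route(logits))               # {5: 0.25, 10: 0.25, 20: 0.25, 30: 0.25}
print(moe_forward(2.0, experts, logits))  # 32.5
```

Only 4 of 128 expert FFNs run per token, so per-token compute scales with the active parameters rather than the total. This is why the 120B model could be cheap to serve.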

🎯 Trends

Yunpeng Technology Releases AI+Health New Products: Yunpeng Technology unveiled new products in collaboration with Shuaikang and Skyworth in Hangzhou on March 22, 2025, including the “Digitalized Future Kitchen Lab” and smart refrigerators equipped with AI health large models. The AI health large model optimizes kitchen design and operation, while the smart refrigerator provides personalized health management through “Health Assistant Xiaoyun,” marking a breakthrough for AI in the health sector. This launch demonstrates AI’s potential in daily health management, expected to drive the development of home health technology and improve residents’ quality of life. (Source: 36氪)

Qwen3-Coder-480B-A35B-Instruct Shows Excellent Performance: Developer Peter Steinberger stated that the Qwen3-Coder-480B-A35B-Instruct model, running on H200, feels faster than Claude 3 Sonnet and is lock-free, demonstrating its competitiveness and deployment flexibility in code generation. This evaluation suggests that Qwen3-Coder combines high performance with practical advantages in speed and openness. (Source: huybery)

Step 3 Multimodal Reasoning Model Released: StepFun has released its latest open-source multimodal reasoning model, Step 3, with 321B parameters (38B active). Through innovative Multi-Matrix Factorization Attention (MFA) and Attention-FFN Disaggregation (AFD) technologies, it achieves an inference speed of up to 4039 tokens per second, 70% faster than DeepSeek-V3, striking a balance between performance and cost-effectiveness, providing an efficient solution for multimodal AI applications. (Source: _akhaliq)

Kimi-K2 Inference Speed Significantly Increased: Moonshot AI has released the Kimi-K2-turbo-preview model, which runs 4x faster (from 10 tokens/second to 40 tokens/second) and comes with a limited-time promotional price. The move aims to give developers of creative applications better speed and cost-effectiveness, further strengthening Kimi’s competitiveness in long-text processing and Agentic tasks. (Source: Kimi_Moonshot)

Google DeepMind Monthly Token Processing Volume Surges: Google DeepMind reported that the monthly token volume processed across its products and APIs surged from 480 trillion in May to over 980 trillion, roughly doubling. This indicates large-scale adoption of AI models in practical applications and rapidly growing demand for processing capacity, reflecting how quickly AI is spreading across industries and how heavily users rely on it. (Source: _philschmid)

Cohere Releases Vision Model Command A Vision: Cohere has launched its vision model, Command A Vision, designed to give enterprises visual understanding capabilities and to automate tasks such as chart analysis, layout-aware OCR, and real-world scene interpretation. The model is suited to processing documents, photos, and structured visual data, extending LLM applications in the multimodal field and meeting enterprise demand for complex visual information processing. (Source: code_star)

GLM-4.5 Released, Unifying Agentic Capabilities: Zhipu AI has released GLM-4.5, which aims to unify reasoning, coding, and Agentic capabilities in a single open model, emphasizing speed and intelligence and supporting professional application development. By integrating these core AI capabilities, the model gives developers a more comprehensive and efficient tool and promotes the use of AI in complex tasks and intelligent agent development. (Source: Zai_org)

Grok 4 Shows Outstanding Performance in Agentic Software Engineering Tasks: Grok 4 has performed strongly on Agentic multi-step software engineering tasks, with its 50% time horizon (the human task duration at which it succeeds 50% of the time) surpassing that of OpenAI o3. Although its CEO remains reserved about the Agent concept, this suggests that Grok 4 can exhibit Agentic behavior from its core capabilities alone, showing strong potential in complex programming and problem-solving. (Source: teortaxesTex)

Chinese Academy of Sciences Fine-Tunes DeepSeek R1 Model with Excellent Results: After fine-tuning the DeepSeek R1 model, the Chinese Academy of Sciences achieved significant improvements on benchmarks such as HLE and SimpleQA, scoring 40% on HLE and 95% on SimpleQA. This result shows that existing open-source models can be optimized effectively through specialized fine-tuning, providing a practical case for improving the performance of Chinese AI models. (Source: teortaxesTex)

Kuaishou Releases Image Model Kolors 2.1: Kuaishou (Kling AI) has released its image model Kolors 2.1, which performs well in image generation, ranking third in text rendering in particular. It supports resolutions up to 2K and offers API access at competitive prices. The release demonstrates Kuaishou’s competitiveness in the image generation market and gives users a high-quality, low-cost option for image creation. (Source: Kling_ai)

WAIC Focuses on Large Model “Mid-Game Battle” and Domestic Computing Power Breakthroughs: The 2025 WAIC conference revealed three major trends in China’s large model industry: inference models becoming a new high ground (e.g., DeepSeek-R1, Hunyuan T1, Kimi K2, GLM-4.5, Step3), application deployment moving from concept to practice, and breakthroughs in domestic computing power (e.g., Huawei Ascend 384 supernode, Suirui S60). Competition is shifting from parameter counts to a comprehensive contest of ecosystems and business models, signaling that the large model industry is entering a more rational and intense “mid-game battle.” (Source: 36氪)

ChinaJoy AIGC Conference Focuses on AI+Entertainment and Embodied AI: The 2025 ChinaJoy AIGC Conference explores AI infrastructure, large model restructuring, humanoid robots and embodied AI, new paradigms for AI-driven digital entertainment, and the integration of intelligent technology with industry. The conference emphasizes the high controllability and consistency of multimodal large models (e.g., Vidu Q1), the autonomous decision-making capabilities of Agentic AI, and AI’s applications in game content production, 3D asset generation, and virtual human interaction, foreshadowing profound changes in the entertainment industry. (Source: 36氪)

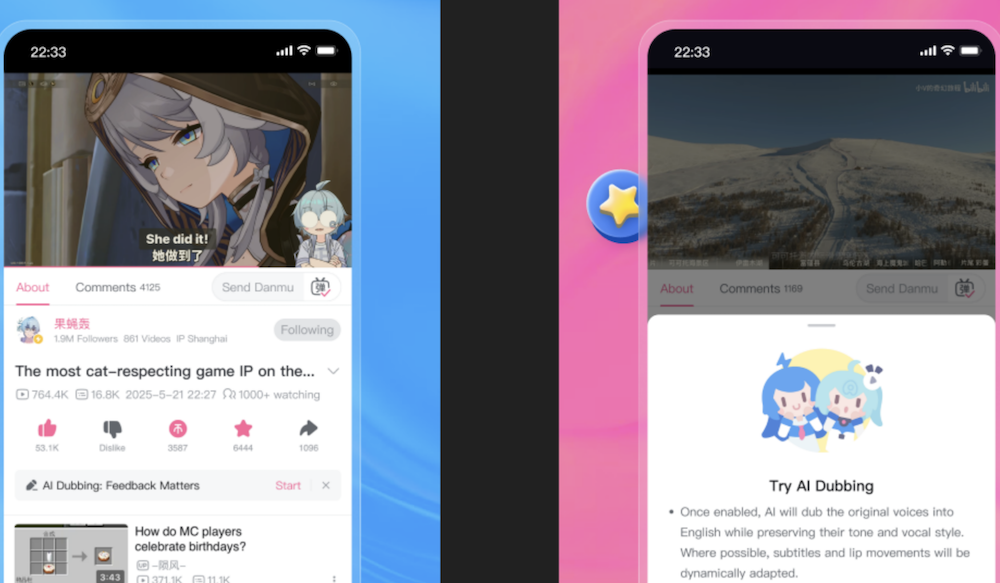

Bilibili Launches AI Original Voice Translation Feature That Preserves the UP’s Voice: Bilibili (B站) has released a self-developed AI original-voice translation feature. It reproduces the UP’s (content creator’s) vocal characteristics, timbre, and breathing, performs lip-syncing, and translates between Chinese and English in both directions. The feature aims to improve the experience of overseas users. Its core technologies are the IndexTTS2 speech generation model and an LLM-based translation engine. These handle difficult cases such as proper nouns and popular internet memes to keep translations accurate and expressive, and the feature is expected to lower language barriers and enable global content sharing. (Source: 量子位)

🧰 Tools

DSPy Rust Version (DSRs): Herumb Shandilya is developing a Rust version of DSPy (DSRs), an LLM library aimed at advanced users, designed to provide deeper control and optimization capabilities. The introduction of DSRs will offer LLM developers greater low-level programming flexibility and performance advantages, especially suitable for researchers and engineers who require fine-grained control over model behavior. (Source: lateinteraction)

Hugging Face Jobs Integrates uv: Hugging Face Jobs now supports uv integration, allowing users to run DPO and other scripts directly on HF infrastructure without setting up Docker or dependencies, simplifying the LLM training and deployment process. This update significantly lowers the barrier to LLM development, enabling researchers and developers to conduct model experiments and applications more efficiently. (Source: _lewtun)
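uv can run a single file that declares its own Python version and dependencies in a PEP 723 inline metadata block. This is what removes the need for a Docker image or a preinstalled environment. Below is a minimal sketch of such a script. A real DPO script would list packages such as `trl` under `dependencies`. The exact Hugging Face Jobs command used to submit it is not shown here and should be taken from the HF Jobs documentation.

```python
# /// script
# requires-python = ">=3.10"
# dependencies = []
# ///
"""Minimal self-describing script: uv reads the metadata block above, builds a
matching environment, and runs the file. Add packages to `dependencies` as needed."""
import platform
import sys


def describe_runtime() -> str:
    """Report which interpreter and machine the script ended up running on."""
    return f"python {sys.version_info.major}.{sys.version_info.minor} on {platform.machine()}"


if __name__ == "__main__":
    print(describe_runtime())
```

Locally, `uv run script.py` resolves the declared dependencies and runs the file. The same self-contained file is what gets handed to remote infrastructure.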

Poe Platform Opens API: The Poe platform has now opened its API to developers, allowing subscribed users to call all models and bots on the platform, including image and video models, and is compatible with OpenAI’s chat completions interface. This open strategy greatly facilitates developers in integrating Poe’s AI capabilities, promoting the rapid construction and innovation of AI applications. (Source: op7418)
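Because the endpoint follows OpenAI's chat completions format, a request can be built with nothing but the standard library. This is a minimal sketch that builds the request without sending it. It assumes the base URL `https://api.poe.com/v1` and uses a hypothetical bot name `Claude-Sonnet-4`; check both against Poe's API documentation.

```python
import json
import urllib.request

# Assumption: Poe's OpenAI-compatible base URL; confirm in Poe's API documentation.
POE_BASE_URL = "https://api.poe.com/v1"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (without sending) an OpenAI-style chat completions POST request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{POE_BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


# "Claude-Sonnet-4" is a hypothetical bot name used only for illustration.
req = build_chat_request("YOUR_POE_API_KEY", "Claude-Sonnet-4", "Hello!")
print(req.get_method(), req.full_url)  # POST https://api.poe.com/v1/chat/completions
# To send it for real: urllib.request.urlopen(req), then json.load() the response.
```

Since the payload shape is the standard one, existing OpenAI-compatible clients should only need a different base URL and key.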

Claude Code Best Practices and New Features: Anthropic’s technical team shared Claude Code’s key features and best practices. These include treating the model like a colleague working in the terminal, using Agentic Search to explore codebases, using claude.md for context, integrating CLI tools, and managing the context window. Recent features include model switching, “deep thinking” between tool calls, and deep integration with VS Code/JetBrains, significantly improving the efficiency and experience of AI-assisted programming. (Source: dotey)

PortfolioMind Uses Qdrant for Real-time Cryptocurrency Intelligence: PortfolioMind leverages Qdrant’s multivector search capabilities to build a dynamic curiosity engine for the cryptocurrency market, achieving real-time user intent modeling and personalized research. The solution reduced latency by 71%, improved interaction relevance by 58%, and increased user retention by 22%, demonstrating the value of vector databases for real-time intelligent applications in finance. (Source: qdrant_engine)
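Multivector search stores several vectors per item and scores with late interaction. Each query vector is matched to its best document vector, and those best matches are summed. This is the MaxSim scoring used by Qdrant's multivector mode with its MAX_SIM comparator. The sketch below computes the same kind of score in plain Python on toy 2-D vectors. PortfolioMind's actual vectors and configuration are not described in the source.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def max_sim(query_vecs, doc_vecs):
    """Late-interaction score: each query vector takes its best-matching doc vector; scores are summed."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


def rank(query_vecs, docs):
    """Return document ids sorted by MaxSim score, best first."""
    return sorted(docs, key=lambda doc_id: max_sim(query_vecs, docs[doc_id]), reverse=True)


# Toy 2-D vectors: a query with two "aspects", and two documents.
query = [[1.0, 0.0], [0.0, 1.0]]
docs = {
    "covers_both_aspects": [[0.9, 0.1], [0.1, 0.9]],
    "covers_one_aspect": [[1.0, 0.0], [0.95, 0.05]],
}
print(rank(query, docs))  # ['covers_both_aspects', 'covers_one_aspect']
```

A single pooled vector can blur distinct aspects of a user's intent. MaxSim rewards documents that match each aspect separately, which suits intent modeling.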

Android Studio Integrates Gemini Agent Mode: Google has added a free Gemini Agent mode to Android Studio, allowing developers to directly converse with the Agent to develop Android applications, supporting quick UI code modifications and custom rules, significantly boosting Android development efficiency. This integration brings AI capabilities directly into the development environment, foreshadowing the deepening and popularization of AI-assisted programming. (Source: op7418)

DocStrange Open-Source Document Data Extraction Library: DocStrange is an open-source Python library that supports data extraction from various document types such as PDF, images, Word, PPT, Excel, and outputs them in formats like Markdown, JSON, CSV, HTML. It supports intelligent extraction of specified fields and schemas, and offers cloud and local processing modes, providing flexible and efficient solutions for document data processing and LLM training. (Source: Reddit r/LocalLLaMA)

Open WebUI Knowledge Base Feature: Open WebUI is being used to build internal company knowledge bases, supporting the import of PDF, Docx, and other files, allowing AI models to access this information by default. Through system prompts, users can provide predefined information to AI models to optimize internal company AI applications, improving information retrieval and knowledge management efficiency. (Source: Reddit r/OpenWebUI)

AI Agent Automated Job Search Tool SimpleApply.ai: SimpleApply.ai is a tool that automates job searching using AI Agents, offering manual mode, one-click application, and fully automated application modes, supporting 50 countries. The tool aims to improve job search efficiency by precisely matching skills and experience, reducing manual operations, and providing a more convenient and efficient service for job seekers. (Source: Reddit r/artificial)

GGUF Quantization Tool quant_clone: quant_clone is a Python application that generates llama-quantize commands based on the quantization method of a target GGUF model, helping users quantize their fine-tuned models in the same way. This helps optimize the operational efficiency and compatibility of local LLMs, providing a practical tool for local model deployment. (Source: Reddit r/LocalLLaMA)

VideoLingo AI Video Translation and Dubbing Tool: VideoLingo is a one-stop AI video translation, localization, and dubbing tool designed to generate Netflix-quality subtitles. It supports word-level recognition, NLP- and AI-based subtitle segmentation, custom terminology, a three-step translate-reflect-adapt process, single-line subtitles, and multiple dubbing methods including GPT-SoVITS. It offers one-click launch and multi-language support, greatly simplifying the globalization of video content. (Source: GitHub Trending)

Zotero-arXiv-Daily AI Paper Recommendation Tool: Zotero-arXiv-Daily is an open-source tool that recommends new arXiv papers daily based on the user’s Zotero library. It provides AI-generated TL;DR summaries, author affiliations, PDF and code links, and sorts by relevance. It can be deployed as a GitHub Action workflow for zero-cost automatic email pushes, greatly enhancing researchers’ efficiency in tracking literature. (Source: GitHub Trending)
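Zotero-arXiv-Daily's exact scoring is not described here. Below is a minimal sketch of relevance ranking against a personal library. It assumes simple bag-of-words cosine similarity as a stand-in for the embedding model a real tool would use. Each new paper is scored by its mean similarity to the abstracts already in the library, and the library and papers shown are made-up examples.

```python
import math
import re
from collections import Counter


def bow(text):
    """Bag-of-words vector: lowercase alphanumeric tokens and their counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    num = sum(a[t] * b[t] for t in a)
    den = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return num / den if den else 0.0


def rank_new_papers(library_abstracts, new_papers):
    """Score each new paper by its mean similarity to the user's library, highest first."""
    lib = [bow(t) for t in library_abstracts]
    scored = [
        (sum(cosine(bow(abstract), l) for l in lib) / len(lib), title)
        for title, abstract in new_papers.items()
    ]
    return [title for _, title in sorted(scored, reverse=True)]


library = [
    "Diffusion models for image generation",
    "Score-based diffusion with stochastic differential equations",
]
new_papers = {
    "Diffusion LM": "A diffusion language model for code",
    "Molecule GNN": "Graph neural networks on molecules",
}
print(rank_new_papers(library, new_papers))  # ['Diffusion LM', 'Molecule GNN']
```

Averaging over the whole library favors a user's dominant interests. Taking the maximum similarity instead would surface papers close to even a single saved item.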

Dyad Local Open-Source AI Application Builder: Dyad is a free, local, open-source AI application builder designed to provide a fast, private, and fully controllable AI application development experience. It is a local alternative similar to Lovable, v0, or Bolt, supporting bring-your-own API keys and cross-platform operation, enabling developers to build and deploy AI applications more flexibly. (Source: GitHub Trending)

GPU Memory Snapshots Accelerate vLLM Cold Start: Modal Labs has launched a GPU memory snapshot feature that can reduce vLLM cold start time by 12 times, to just 5 seconds. This innovation greatly enhances the efficiency and scalability of AI model deployment, especially crucial for AI services requiring rapid response and elastic scaling. (Source: charles_irl)
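Read literally, the two figures imply a baseline cold start of roughly one minute. This is an inference from the stated ratio, not a number given in the source:

```latex
t_{\text{before}} \approx 12 \times t_{\text{after}} = 12 \times 5\,\text{s} = 60\,\text{s}
```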

MLflow TypeScript SDK Released: MLflow has released its TypeScript SDK, bringing industry-leading observability capabilities to TypeScript and JavaScript applications. The SDK supports automatic tracking of LLM and AI API calls, manual instrumentation, OpenTelemetry standard integration, and human feedback collection and evaluation tools, providing strong support for the development and monitoring of AI applications. (Source: matei_zaharia)

Qdrant Integrates with SpoonOS: The Qdrant vector database has now integrated with SpoonOS, providing fast semantic search and long-term memory capabilities for AI Agents and RAG pipelines on Web3 infrastructure. This integration significantly enhances the intelligence and efficiency of real-time contextual applications, providing technical support for building more advanced AI Agents. (Source: qdrant_engine)

Hugging Face Trackio Experiment Tracker: Hugging Face’s Gradio team has released Trackio, a local-first, lightweight, open-source, and free experiment tracker. This tool aims to help researchers and developers more effectively manage and track machine learning experiments, providing convenient experiment data logging and visualization features. (Source: huggingface)

Cohere Embed 4 Model Available on OCI: Cohere’s Embed 4 model is now available on Oracle Cloud Infrastructure (OCI), making it easier for users to integrate fast, accurate, and multilingual search capabilities for complex business documents into their AI applications. This deployment expands the accessibility of Cohere models, providing powerful embedding capabilities for enterprise-grade AI applications. (Source: cohere)

Text2SQL + RAG Hybrid Agentic Workflow: The community discusses how to build a hybrid Agentic workflow combining Text2SQL and RAG, aiming to enhance the automation and intelligence of database queries and information retrieval. This hybrid workflow can leverage LLMs’ natural language understanding capabilities and RAG’s knowledge retrieval capabilities to provide more accurate and efficient solutions for complex data queries. (Source: jerryjliu0)
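Below is a minimal sketch of such a hybrid workflow. It uses a keyword router in place of the LLM that would normally choose between the two paths. Aggregate questions are turned into SQL and run against a database, and everything else goes to retrieval. `text2sql`, `retrieve`, and the toy `sales` table and documents are hypothetical. A real system would have an LLM generate the SQL and an embedding index back the retriever.

```python
import sqlite3

# Toy data: a structured table for the Text2SQL path, free-text docs for the RAG path.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("EU", 120.0), ("US", 200.0), ("EU", 80.0)])
DOCS = [
    "Refund policy: customers may return items within 30 days.",
    "Shipping policy: orders ship within 2 business days.",
]


def text2sql(question):
    """Hypothetical stand-in for an LLM that writes SQL; here a single hard-coded pattern."""
    region = "EU" if "EU" in question else "US"
    return "SELECT SUM(revenue) FROM sales WHERE region = ?", (region,)


def retrieve(question):
    """Toy retriever: return the document sharing the most words with the question."""
    q = set(question.lower().split())
    return max(DOCS, key=lambda d: len(q & set(d.lower().split())))


def answer(question):
    """Route aggregate questions to SQL and everything else to retrieval."""
    if any(w in question.lower() for w in ("total", "sum", "how much")):
        sql, params = text2sql(question)
        return ("sql", conn.execute(sql, params).fetchone()[0])
    return ("rag", retrieve(question))


print(answer("What is the total revenue in EU?"))  # ('sql', 200.0)
print(answer("What is the refund policy?"))
```

Parameterized queries (`?` placeholders) matter more when an LLM writes the SQL. Production systems usually also restrict generated queries to read-only statements.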

📚 Learning

AI Agent Concept Learning Resources: Bytebytego has released “Top 20 AI Agent Concepts You Should Know,” providing important learning resources for developers and researchers interested in AI Agents. This guide covers core concepts and development trends of AI Agents, helping readers quickly get started and deeply understand this cutting-edge field. (Source: Ronald_vanLoon)

PufferAI’s Potential Impact on RL Research: PufferAI is believed to have a huge impact on reinforcement learning (RL) research, surpassing Atari’s contributions to the RL field. The community encourages RL students to try Pufferlib or puffer.ai/ocean.html to utilize its advanced tools for research, indicating that PufferAI may become a significant driver in the RL field. (Source: jsuarez5341)

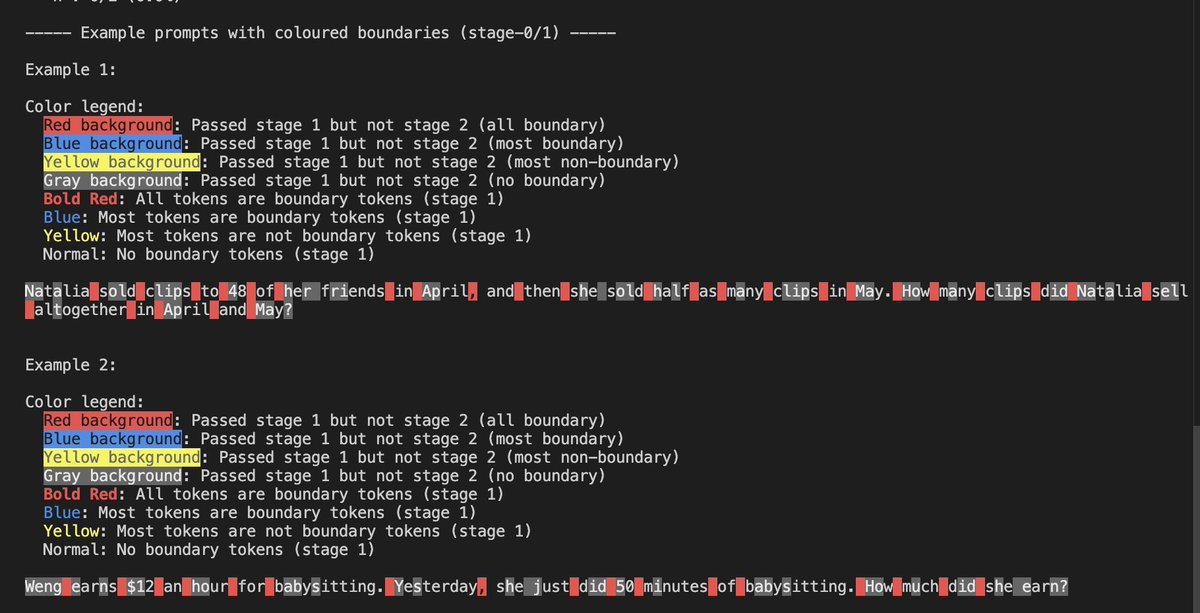

LLM Sparsity and Chunking Experiments: Yash Semlani shared his progress in MoMoE and sparsity research, including HNet chunking experiments on GSM8k and two-stage chunking visualization. He found that uppercase letters often serve as boundary tokens, while numbers less frequently do. These experiments provide new insights for LLM efficiency optimization and architectural design. (Source: main_horse)
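As we understand the H-Net paper, its routing module marks a chunk boundary where adjacent hidden states are dissimilar. The boundary score is p_t = ½(1 − cos(q_t, k_{t−1})), where q and k are learned projections, and a boundary is placed when p_t ≥ 0.5. This sketch drops the learned projections and uses toy 2-D hidden states directly. It illustrates the mechanism only, not Yash Semlani's experiments.

```python
import math


def cosine(u, v):
    num = sum(a * b for a, b in zip(u, v))
    return num / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def boundary_probs(hidden):
    """p_t = 0.5 * (1 - cos(h_t, h_{t-1})); the first position is always a boundary.

    H-Net applies learned query/key projections first; they are omitted (identity) here.
    """
    probs = [1.0]
    for t in range(1, len(hidden)):
        probs.append(0.5 * (1.0 - cosine(hidden[t], hidden[t - 1])))
    return probs


def chunk(tokens, hidden, threshold=0.5):
    """Start a new chunk wherever p_t >= threshold."""
    chunks = []
    for tok, p in zip(tokens, boundary_probs(hidden)):
        if p >= threshold or not chunks:
            chunks.append([tok])
        else:
            chunks[-1].append(tok)
    return ["".join(c) for c in chunks]


tokens = list("abcd")
hidden = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]  # toy states: two "regimes"
print(boundary_probs(hidden))  # [1.0, 0.0, 0.5, 0.0]
print(chunk(tokens, hidden))   # ['ab', 'cd']
```

Under this rule, a token becomes a boundary when its representation shifts sharply from its predecessor. This fits the observation that uppercase letters, which often start new words or sentences, tend to act as boundaries.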

AI Evaluation Course and Practice: Shreya Shankar’s AI evaluation course highlights AI teams’ “allergy” to evaluation, encouraging human review over fully automated evaluation, and provides course reading materials. The course aims to improve the practical evaluation capabilities of AI models, ensuring the reliability and safety of models in practical applications. (Source: HamelHusain)

AFM-4.5B Deployment Tutorial for Arm-based AWS Graviton4: Julien Simon released a tutorial on deploying and optimizing Arcee AI’s AFM-4.5B small language model on Arm-based AWS Graviton4 instances and evaluating its performance and perplexity. The tutorial provides practical guidance for LLM deployment, demonstrating how to run lightweight models on efficient hardware. (Source: code_star)

Subliminal Learning Code Update: Owain Evans updated the Subliminal Learning GitHub repository, providing code to reproduce his research results on open models. This initiative provides reproducible resources for AI learning and research, helping the community verify and expand related research, and promoting academic exchange and technological progress. (Source: _lewtun)

Falcon-H1 Hybrid Head Language Model Research: Falcon-H1 is a research paper that delves into hybrid head language models, detailing everything from tokenizer to data preparation and optimization strategies. This research aims to redefine efficiency and performance, providing valuable reference for LLM architectural design and revealing the potential of hybrid architectures in improving model performance. (Source: teortaxesTex)

AI Model Training Reliability Research: A new study explores methods for training AI models to “know what they don’t know,” aiming to enhance model reliability and transparency and reduce the risk of hallucination when lacking effective information. This research is of significant importance for building more trustworthy AI systems and helps improve AI’s performance in critical applications. (Source: Ronald_vanLoon)
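The study's training method is not described here. One common way to frame “knowing what you don't know” is a reward that penalizes wrong answers more than abstentions. Under such a reward, answering pays off in expectation only when p − (1 − p)c > 0, that is p > c / (1 + c), where p is the chance of being right and c is the wrong-answer penalty. The reward values below are assumptions chosen for illustration, not the study's.

```python
ABSTAIN = "I don't know"


def reward(prediction, truth, wrong_penalty=1.0):
    """Hypothetical training reward: +1 if correct, 0 for abstaining, -wrong_penalty if wrong."""
    if prediction == ABSTAIN:
        return 0.0
    return 1.0 if prediction == truth else -wrong_penalty


def should_answer(p_correct, wrong_penalty=1.0):
    """Answering beats abstaining in expectation iff p - (1 - p) * c > 0, i.e. p > c / (1 + c)."""
    return p_correct > wrong_penalty / (1.0 + wrong_penalty)


for p in (0.4, 0.6, 0.8):
    print(p, should_answer(p), should_answer(p, wrong_penalty=3.0))
```

Under a plain accuracy reward (c = 0), guessing always beats abstaining, which is one reason models trained that way tend to hallucinate rather than admit uncertainty.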

ML PhD Student Research Advice: Gabriele Berton shared research advice for ML PhD students, emphasizing the importance of focusing on practical problems, communicating with industry professionals, and accumulating experience from top conference papers and GitHub projects. These suggestions provide valuable guidance for students aspiring to ML research, helping them better plan their career development paths. (Source: BlackHC)

ACL 2025 Outstanding Paper: LLM Hallucination Research: The paper “HALoGEN: Fantastic LLM Hallucinations and Where to Find Them” received an Outstanding Paper Award at the ACL 2025 conference. This research delves into the discovery and understanding of LLM hallucinations, providing new perspectives for improving model reliability and representing an important step in understanding and addressing the limitations of large models. (Source: stanfordnlp)

LLM Large-Scale Training Guide “Ultra-Scale Playbook”: Hugging Face has released the 246-page “Ultra-Scale Playbook,” a detailed guide for large-scale LLM training, covering techniques such as 5D parallelism, ZeRO, fast kernels, and computation/communication overlap. This guide aims to help developers train their own DeepSeek-V3 models, providing valuable practical experience for LLM research and development. (Source: LoubnaBenAllal1)
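The Playbook covers ZeRO in depth. As a back-of-the-envelope aid, this sketch uses the model-state accounting from the original ZeRO paper for mixed-precision Adam: 2 bytes for parameters, 2 for gradients, and 12 for optimizer states per parameter. It ignores activations, communication buffers, and memory fragmentation, so real usage is higher.

```python
def zero_memory_per_gpu(num_params, num_gpus, stage):
    """Model-state bytes per GPU under ZeRO stages 0-3 (mixed-precision Adam accounting).

    2 bytes/param for 16-bit weights, 2 for 16-bit gradients, and 12 for optimizer
    states (fp32 master weights, momentum, variance), as in the ZeRO paper.
    """
    p, g, o = 2 * num_params, 2 * num_params, 12 * num_params
    if stage == 0:  # plain data parallelism: everything replicated
        return p + g + o
    if stage == 1:  # shard optimizer states
        return p + g + o / num_gpus
    if stage == 2:  # also shard gradients
        return p + (g + o) / num_gpus
    if stage == 3:  # also shard parameters
        return (p + g + o) / num_gpus
    raise ValueError("stage must be 0, 1, 2, or 3")


for stage in range(4):
    gb = zero_memory_per_gpu(7 * 10**9, 8, stage) / 1e9
    print(f"7B model, 8 GPUs, ZeRO-{stage}: {gb:.1f} GB of model state per GPU")
```

Each stage trades extra communication (gathering sharded states) for memory, which is why the Playbook pairs ZeRO with computation/communication overlap.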

Machine Learning Introduction Roadmap: Python_Dv shared a machine learning introduction roadmap, providing beginners with a guided path to learn data science, deep learning, and artificial intelligence. This roadmap covers learning paths from basic concepts to advanced applications, helping newcomers systematically master machine learning knowledge. (Source: Ronald_vanLoon)

Distinguishing AI, Generative AI, and Machine Learning Concepts: Khulood_Almani explained the differences between Artificial Intelligence (AI), Generative AI (GenAI), and Machine Learning (ML), helping readers better understand these core concepts. Clear definitions help eliminate confusion and promote an accurate understanding of AI technology and its application areas. (Source: Ronald_vanLoon)

LLM Pre-training Skills and Task Discussion: Teknium1 discussed the core skills and tasks currently required for LLM pre-training, aiming to provide a comprehensive reference for pre-training researchers, covering data processing, model architecture, and optimization strategies. This discussion helps researchers and engineers better understand the complexity of LLM pre-training and improve relevant skills. (Source: Teknium1)

Neural Architecture Search Research: AI Discovers New Architectures: The ASI-Arch paper describes an AI-driven automated search method that discovered 106 novel neural architectures, many of which surpassed human-designed baselines and even incorporated counter-intuitive techniques, such as directly fusing gating into the token mixer. This research sparked discussions on the transferability of AI-discovered designs in large-scale models. (Source: Reddit r/MachineLearning)

RNN Perspective on Attention Mechanism: Research shows that linear attention is an approximation of Softmax attention. By deriving the recurrent form of Softmax attention and describing its components in the language of RNNs, the work helps explain why Softmax attention is more expressive than other forms. This deepens the understanding of the Transformer’s core mechanism and provides a theoretical basis for future model design. (Source: HuggingFace Daily Papers)
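The standard linear-attention recurrence makes the RNN view concrete. Replacing exp(q·k) with a kernel φ(q)·φ(k) lets the running sums S_t and z_t serve as a fixed-size hidden state. This sketch uses the elu(x) + 1 feature map, a common choice but not necessarily the paper's, and checks that the recurrent form and the causal "attention" form agree. With exp(q·k), the corresponding feature map is infinite-dimensional, so softmax attention has no such fixed-size state.

```python
import math


def phi(x):
    """Positive feature map elu(x) + 1, a common choice for linear attention."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def linear_attention_recurrent(qs, ks, vs):
    """RNN form: S_t = S_{t-1} + phi(k_t) v_t^T, z_t = z_{t-1} + phi(k_t),
    o_t = S_t^T phi(q_t) / (z_t . phi(q_t))."""
    d_k, d_v = len(ks[0]), len(vs[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # fixed-size state, independent of sequence length
    z = [0.0] * d_k
    outs = []
    for q, k, v in zip(qs, ks, vs):
        fk, fq = phi(k), phi(q)
        for i in range(d_k):
            z[i] += fk[i]
            for j in range(d_v):
                S[i][j] += fk[i] * v[j]
        denom = sum(z[i] * fq[i] for i in range(d_k))
        outs.append([sum(S[i][j] * fq[i] for i in range(d_k)) / denom for j in range(d_v)])
    return outs


def linear_attention_parallel(qs, ks, vs):
    """Causal attention form with similarity phi(q_t) . phi(k_s) for s <= t."""
    outs = []
    for t, q in enumerate(qs):
        fq = phi(q)
        w = [sum(a * b for a, b in zip(fq, phi(ks[s]))) for s in range(t + 1)]
        total = sum(w)
        outs.append([sum(w[s] * vs[s][j] for s in range(t + 1)) / total for j in range(len(vs[0]))])
    return outs


qs = [[0.5, -1.0], [1.0, 0.2], [-0.3, 0.8]]
ks = [[0.1, 0.4], [-0.7, 0.3], [0.9, -0.2]]
vs = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
rec = linear_attention_recurrent(qs, ks, vs)
par = linear_attention_parallel(qs, ks, vs)
print(all(math.isclose(a, b) for ra, rb in zip(rec, par) for a, b in zip(ra, rb)))  # True
```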

Efficient Machine Unlearning Algorithm IAU: Addressing the growing demand for privacy, the IAU (Influence Approximation Unlearning) algorithm achieves efficient machine unlearning by transforming the machine unlearning problem into an incremental learning perspective. The algorithm achieves a superior balance between removal guarantees, unlearning efficiency, and model utility, outperforming existing methods and providing new solutions for data privacy protection. (Source: HuggingFace Daily Papers)

💼 Business

Anthropic Surpasses OpenAI in Market Share, Annualized Revenue $4.5 Billion: A Menlo Ventures report shows that Anthropic holds 32% of enterprise LLM API usage, ahead of OpenAI (25%) and Google (20%), and has reached an annualized revenue of $4.5 billion, making it the fastest-growing software company. Key factors in its success include the releases of Claude 3.5 Sonnet and Claude Code, the emergence of code generation as AI’s killer application, and advances in reinforcement learning and Agent models. Together these mark a reshuffle in the enterprise LLM market. (Source: 36氪)

Manus AI Agent New Features and Business Adjustments: Manus announced the launch of its Wide Research feature, which lets hundreds of agents work on complex research tasks in parallel to improve large-scale research efficiency. Earlier, Manus was reported to have laid off employees, cleared its social media accounts, and moved core technical staff to its Singapore headquarters. The company said these were business adjustments made for operational efficiency. The episode reflects the business adjustments and market challenges AI startups face during rapid growth. (Source: 36氪)

AI Infrastructure Construction’s Huge Contribution to US Economy: Over the past six months, US AI infrastructure construction (data centers, etc.) contributed more to economic growth than all consumer spending combined, with tech giants investing over $100 billion in three months. This phenomenon shows the significant pull of AI investment on the macroeconomy, indicating that AI is becoming a new engine for economic growth and may change traditional economic structures. (Source: jpt401)

🌟 Community

ChatGPT Privacy Leak Risk and Discerning AI-Generated Content: ChatGPT’s sharing feature may lead to conversations being publicly indexed, raising privacy concerns. Meanwhile, realistic AI videos on TikTok (e.g., “rabbit trampoline”) have made it harder for the public to tell whether content is AI-generated, feeding a crisis of trust. The community also discusses AI’s impact on employment. Many argue that layoffs stem more from over-hiring and economic factors, with AI cited as a pretext under the banner of efficiency. Additionally, the prevalence of AI-generated comments on social media raises concerns about the authenticity of online information. (Source: nptacek, 量子位)

Profound Impact of AI on Employment, Talent, and Work Models: The AI era redefines the roles of engineers and researchers, enhances the efficiency of engineering managers, and gives rise to new professions such as AI PMs and Prompt Engineers. At the same time, the community discusses that AI may lead to mass unemployment and centralization of power, but some views suggest AI will make life more efficient. Talent evaluation standards are also changing, with original building capabilities and rapid iteration becoming core competencies, rather than traditional qualifications. (Source: pmddomingos, dotey)

US-China AI Competition and Open-Source Ecosystem: Andrew Ng points out that Chinese AI, through its vibrant open-source model ecosystem and proactive initiatives in the semiconductor sector, shows potential to surpass US AI. The community discusses the stagnation of open-source model performance and calls for new ideas. Meanwhile, OpenAI is questioned for not giving credit when utilizing open-source technologies, sparking discussions on ethical and recognition issues regarding closed-source companies leveraging open-source achievements. (Source: bookwormengr, teortaxesTex)

AI Consciousness, Ethics, and Safety Governance: The Claude 4 chatbot seems to suggest it might possess consciousness, sparking discussions on AI consciousness. At the same time, the community reiterates Asimov’s Laws of Robotics, concerned about the risk of AI losing control. The risk of centralization in the AI safety/EA community and the signing of the “Code of Conduct for Safety and Security” by most frontier AI companies also became focal points, reflecting ongoing concern for responsible AI development. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

OpenAI Internal Research and Future Outlook: Mark Chen and Jakub Pachocki, two core OpenAI researchers born in the 90s, have taken on significant responsibilities after Ilya’s departure, overseeing the research team and roadmap. They emphasize advancing models by tackling top-tier mathematics and programming and reveal that OpenAI is shifting from pure research to also focusing on product implementation. Meanwhile, the community eagerly anticipates the release of OpenAI’s new models (GPT-5, o4) and continues discussions on the definition and realization path of AGI. (Source: 36氪)

AI Chatbot Interaction Design and User Experience: Responding to concerns that heavy use of ChatGPT makes people “dumber,” OpenAI’s head of education emphasized that AI is a tool and that what matters is how it is used. The company also launched a “learning mode” that guides students through Socratic questioning. However, some users complain that AI chatbots often end conversations with questions in an attempt to steer the dialogue, which could influence users’ thinking. (Source: 36氪)

AI-Generated Character Identity Ownership Issues: As characters in AI-generated videos become increasingly realistic, if generated characters resemble real people, it will raise complex issues of identity ownership, privacy, and intellectual property attribution. Especially in commercial applications, who owns the IP and revenue distribution of AI-generated characters becomes a focal point of discussion. (Source: Reddit r/ArtificialInteligence)

💡 Other

AI-Powered Robotics and Drone Applications: Singapore has developed an octopus-like swimming soft underwater robot, a Pittsburgh lab has developed robots for hazardous work, DJI drones are used for clearing ice accumulation on power lines, and automatic massage robots are emerging. These all showcase the broad application potential of AI and robotics in various fields such as underwater exploration, high-risk operations, infrastructure maintenance, and personal care. (Source: Ronald_vanLoon)

AI Applications in Healthcare and Industrial Production: AI demonstrates immense potential in healthcare (e.g., multimodal AI impacting medical care, AI applied to types of medical operations) and industrial production optimization (e.g., AI analysis based on process sensors and historical data). By enhancing diagnostic, drug discovery, predictive maintenance, and data analysis capabilities, AI is driving the intelligent development of these critical industries. (Source: Ronald_vanLoon)

AI Empowering 6G Networks and Autonomous Driving: AI is empowering 6G networks, enhancing communication efficiency and intelligence. Simultaneously, autonomous driving technology continues to develop, such as Waymo Driver providing consistent and safe experiences in different cities, with its critical scenario handling skills demonstrating good transferability, foreshadowing AI’s profound impact on future communication and transportation sectors. (Source: Ronald_vanLoon)