Keywords: AI, Meta, OpenAI, Anthropic, NVIDIA, LLM, humanoid robot, personal superintelligence, ChatGPT learning mode, Walker S2 autonomous battery swapping, Qwen3-30B-A3B-Thinking-2507, AlphaEarth Foundations

🔥 Spotlight

Meta Unveils Vision for Personal Superintelligence : Mark Zuckerberg shared Meta’s vision for “personal superintelligence,” which centers on giving everyone world-class AI assistants, AI creator tools, and AI tools for interacting with businesses. The vision aims to empower all users through AI and promote the development of open-source models. The announcement has sparked community debate over what Meta means by “superintelligence,” with some questioning whether it will lead to unpredictable “singularity moments” or merely extend virtual social interaction. (Source: AIatMeta)

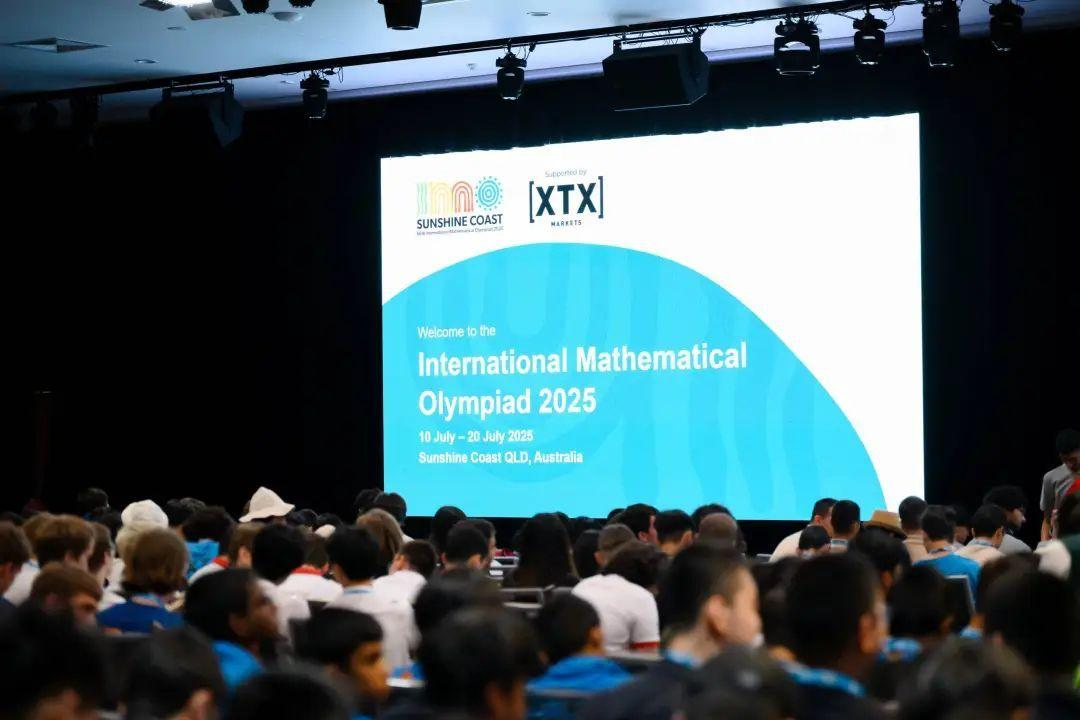

ACL 2025 Best Paper Awards Announced : ACL 2025 (the Annual Meeting of the Association for Computational Linguistics) announced its best paper awards, with “Native Sparse Attention” (a collaboration between Peking University, DeepSeek, and the University of Washington) and Peking University’s “Language Models Resist Alignment: Evidence from Data Compression” both receiving honors. Notably, over half of the paper authors are Chinese. ACL also presented 25-year and 10-year Test-of-Time awards, recognizing landmark research that has profoundly shaped fields such as neural machine translation and semantic role labeling. (Source: karminski3)

Anthropic Joins UK AI Security Institute’s Alignment Project : Anthropic announced it has joined the UK AI Security Institute’s Alignment Project, contributing computational resources to advance critical research. The initiative aims to ensure that as AI systems grow in capability, they remain predictable and aligned with human values. The collaboration highlights leading AI companies’ commitment to AI safety and alignment research, addressing the complex challenges future AI systems may pose. (Source: AnthropicAI)

🎯 Trends

OpenAI Launches ChatGPT Learning Mode : OpenAI has officially launched ChatGPT’s “Learning Mode,” which uses Socratic questioning, step-by-step guidance, and personalized support to guide students toward active thinking rather than handing them answers directly. The mode is now available to all ChatGPT users, with plans to add features such as visualization, goal setting, and progress tracking. The launch is seen as a significant step for OpenAI into the education technology market, sparking widespread discussion about AI’s role in education and the potential impact on “wrapper applications.” (Source: QbitAI, 36Kr)

UBTECH Walker S2 Humanoid Robot Achieves Autonomous Battery Swapping : Chinese robotics company UBTECH unveiled its full-sized industrial humanoid robot, Walker S2, demonstrating the world’s first autonomous battery swapping system. Walker S2 can complete a battery replacement within 3 minutes, enabling 24/7 uninterrupted operation and significantly enhancing efficiency in industrial settings. The robot features an AI dual-loop system, pure RGB binocular vision, and 52 degrees of freedom, and is designed for high-intensity tasks such as automotive manufacturing. The demonstration has sparked discussions about robot automation replacing human labor and about future work models. (Source: QbitAI, Ronald_vanLoon)

Qwen Series Models Continuously Updated with Performance Enhancements : The Qwen team recently released the medium-sized Qwen3-30B-A3B-Thinking-2507 model, which has “thinking” capabilities, performs exceptionally well in reasoning, coding, and mathematical tasks, and supports a 256K context length. Qwen3 Coder 30B-A3B is also set to be released soon, further enhancing code generation capabilities. These updates solidify the Qwen series’ competitiveness in the LLM domain, and the models have been integrated into tools like Anycoder. (Source: Alibaba_Qwen, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)

Google DeepMind’s Progress in Earth and History AI Models : Google DeepMind launched AlphaEarth Foundations, which aims to map the Earth in fine detail and unify massive volumes of geographical data. Its Aeneas model, meanwhile, helps historians model history quantitatively by analyzing ancient Latin texts with AI. Together, these models demonstrate AI’s potential in environmental monitoring and in humanities and historical research. (Source: GoogleDeepMind, GoogleDeepMind)

Arcee Releases AFM-4.5B Open-Weight Model : Arcee officially released AFM-4.5B and its Base version, an open-weight language model designed for enterprise-grade applications. AFM-4.5B aims to provide a flexible and high-performance solution across various deployment environments, with its training data rigorously filtered to ensure high-quality output. The release of this model offers enterprises more advanced open-source AI options to meet their needs for building and deploying AI applications. (Source: code_star, stablequan)

GLM-4.5 Model Shows Strong Performance in EQ-Bench and Long-Form Writing : Z.ai’s GLM-4.5 model achieved excellent results in EQ-Bench and long-form writing benchmarks, demonstrating its unified strengths in reasoning, coding, and agent capabilities. The model comes in two versions, GLM-4.5 and GLM-4.5-Air, both openly available on Hugging Face, with some versions also offering free trials. Its strong performance and fast handling of challenging prompts indicate its potential in complex application scenarios. (Source: Zai_org, jon_durbin)

Mistral AI Releases Codestral 25.08 : Mistral AI released its latest Codestral 25.08 model and introduced a complete Mistral coding stack for enterprises. This move aims to provide enterprises with more powerful code generation capabilities and comprehensive development tools, further solidifying Mistral AI’s market position in AI programming. (Source: MistralAI)

NVIDIA’s Significant Growth in Models/Datasets/Applications on Hugging Face : AI World data shows that NVIDIA added 365 public models, datasets, and applications on Hugging Face in the past 12 months, averaging one per day. This astonishing growth rate indicates that NVIDIA not only dominates the hardware sector but also demonstrates strong influence in the open-source AI ecosystem, actively promoting the popularization and application of AI technology. (Source: ClementDelangue)

Llama Inference Speed Increased by 5% : A new Fast Attention algorithm has accelerated the SoftMax function by approximately 30%, reducing Llama inference time on A100 GPUs by 5%. The optimization is expected to improve LLM operational efficiency and lower inference costs, with significant implications for large-scale deployment and real-time applications. (Source: Reddit r/LocalLLaMA)
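
The relationship between the two figures above (a roughly 30% faster SoftMax and a 5% shorter end-to-end inference time) can be sanity-checked with Amdahl's law. The sketch below is only an illustration: it assumes that "30% faster" means a 1.3x speedup of the SoftMax step, and the resulting share of runtime is an inference from the two reported numbers, not a figure from the source.

```python
def overall_time_reduction(fraction: float, component_speedup: float) -> float:
    """Share of total runtime saved when one component runs `component_speedup`x faster.

    `fraction` is the share of the original runtime spent in that component.
    """
    new_time = (1.0 - fraction) + fraction / component_speedup
    return 1.0 - new_time


def implied_fraction(total_reduction: float, component_speedup: float) -> float:
    """Invert Amdahl's law: what runtime share must the component have had?"""
    return total_reduction / (1.0 - 1.0 / component_speedup)


# Assumption: "approximately 30% faster" is read as a 1.3x SoftMax speedup.
softmax_share = implied_fraction(total_reduction=0.05, component_speedup=1.3)
print(f"Implied SoftMax share of runtime: {softmax_share:.1%}")
print(f"Check, total reduction: {overall_time_reduction(softmax_share, 1.3):.1%}")
```

Under that reading, SoftMax would have accounted for a little over a fifth of inference time before the optimization. A different interpretation of "30%" (for example, 30% less time per call) would give a different share.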

Skywork-UniPic-1.5B Unified Autoregressive Multimodal Model Released : Skywork released Skywork-UniPic-1.5B, a unified autoregressive multimodal model. This model is capable of processing various modalities of data, providing a new foundation for multimodal AI research and applications. (Source: Reddit r/LocalLLaMA)

Google Launches Virtual Try-On AI Feature : Google introduced a new AI feature allowing users to virtually try on clothes online. This technology leverages AI generative capabilities to provide consumers with a more intuitive and personalized shopping experience, expected to reduce return rates and boost e-commerce conversion rates. (Source: Ronald_vanLoon)

LimX Dynamics Unveils Humanoid Robot Oli : LimX Dynamics officially launched its new humanoid robot, Oli, priced at approximately $22,000. Oli stands 5'5" tall, weighs 55 kg, boasts 31 degrees of freedom, and is equipped with a self-developed 6-axis IMU. It supports modular SDKs and fully open Python development interfaces, providing a flexible platform for research and development, and is expected to drive the application of humanoid robots in more scenarios. (Source: teortaxesTex)

🧰 Tools

LangSmith Introduces Align Evals Feature : LangSmith has introduced a new Align Evals feature, designed to simplify the creation process for LLM-as-a-Judge evaluators. This feature helps users match LLM scores with human preferences, thereby building more accurate and trustworthy evaluators and reducing uncertainty in evaluation tasks. (Source: hwchase17)
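
To make "matching LLM scores with human preferences" concrete, here is a minimal pure-Python sketch of how agreement between a judge and human labels might be measured. It does not use LangSmith's API. The function names and the sample labels are hypothetical, and Cohen's kappa is one common agreement statistic chosen for illustration, not necessarily what Align Evals reports.

```python
from collections import Counter
from typing import Hashable, Sequence


def agreement_rate(judge: Sequence[Hashable], human: Sequence[Hashable]) -> float:
    """Fraction of examples where the LLM judge and the human gave the same label."""
    return sum(j == h for j, h in zip(judge, human)) / len(human)


def cohens_kappa(judge: Sequence[Hashable], human: Sequence[Hashable]) -> float:
    """Agreement corrected for the agreement expected by chance."""
    n = len(human)
    observed = agreement_rate(judge, human)
    judge_counts = Counter(judge)
    human_counts = Counter(human)
    labels = set(judge) | set(human)
    expected = sum(judge_counts[label] * human_counts[label] for label in labels) / (n * n)
    if expected == 1.0:  # both raters always use the same single label
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical pass/fail labels (1 = acceptable answer, 0 = not acceptable).
human_labels = [1, 1, 0, 1, 0, 0, 1, 0]
judge_labels = [1, 1, 0, 0, 0, 1, 1, 0]

print(f"Raw agreement: {agreement_rate(judge_labels, human_labels):.2f}")
print(f"Cohen's kappa: {cohens_kappa(judge_labels, human_labels):.2f}")
```

In a workflow like this, the judge prompt would be revised and re-scored against the same human labels until agreement stops improving.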

NotebookLM Adds Video Overview Feature : Google’s NotebookLM has launched a video overview feature, allowing users to create visually engaging slide summaries for their notes. This feature utilizes the Gemini model to generate text presentations, combines internal tools to produce static images and independent audio, and finally synthesizes a video, offering users richer ways to learn and present content. (Source: JeffDean, cto_junior)

Qdrant Cloud Inference and LLM Data Processing : Qdrant Cloud Inference allows users to natively embed text, images, and sparse vectors without leaving the vector database, supporting models like BGE, MiniLM, CLIP, and SPLADE. Additionally, the community has explored the functionality of LLMs directly referencing URLs as information sources, and the possibility of LLMs regularly checking, caching, and refreshing URL content to enhance AI’s trustworthiness and utility. (Source: qdrant_engine, Reddit r/OpenWebUI)

Replit Agent Aids in Creating Real-time Dashboards : Replit Agent was used to quickly create accessible real-time dashboards, addressing the issue of cluttered information on traditional tsunami warning websites. This case demonstrates the potential of AI agents in data visualization and user interface design, capable of transforming complex data into easily understandable interactive interfaces. (Source: amasad)

Hugging Face ML Infrastructure Tools : Hugging Face and Gradio jointly launched trackio, a local-first solution for machine learning experiment tracking, allowing users to persist key metrics to Hugging Face Datasets. Concurrently, Hugging Face also introduced “Hugging Face Jobs,” a fully managed CPU and GPU task running service that simplifies ML task execution, enabling users to focus more on model development. (Source: algo_diver, reach_vb)

AI Vertical Domains and Workflow Automation Agents : SciSpace Agent, an AI assistant specifically for scientists, integrates citation, literature retrieval, PDF reading, and AI writing functionalities, aiming to significantly boost research efficiency. LlamaCloud Nodes have also integrated n8n workflows, simplifying document processing automation by utilizing Llama Extract agents to extract key data, thereby automating structured data extraction for financial documents, customer communications, and more. (Source: TheTuringPost, jerryjliu0)

AutoRL: Training Task-Specific LLMs via RL : Matt Shumer introduced AutoRL, a straightforward method for training task-specific LLMs via reinforcement learning. Users simply describe the desired model in a single sentence, and the AI system can generate data and evaluation criteria, then train the model. This open-source tool is based on ART and is expected to lower the development barrier for customized LLMs. (Source: corbtt)

ccflare: Power Tools for Claude Code Advanced Users : ccflare is a powerful toolset designed for Claude Code advanced users, offering features such as analytics tracking, load balancing and switching across multiple Claude subscription accounts, in-depth request analysis, and model configuration for sub-agents. This tool aims to enhance Claude Code’s usage efficiency and controllability, helping developers better manage and optimize their AI programming workflows. (Source: Reddit r/ClaudeAI)

📚 Learning

Survey on Efficient Attention Mechanisms in LLMs : A recent survey on efficient attention mechanisms in LLMs was shared, considered an excellent resource for understanding new ideas and future trends. The survey covers various methods for optimizing attention computation, holding significant reference value for researchers and developers aiming to improve LLM efficiency and performance. (Source: omarsar0)

GEPA: Reflective Prompt Evolution Outperforms Reinforcement Learning : A research paper introduced GEPA, a reflective prompt optimization method that evolves prompts through reflection and, under low rollout budgets, outperforms traditional reinforcement learning algorithms. The research offers new insights into achieving RL-like performance improvements on specific tasks, with particular potential in synthetic data generation. (Source: teortaxesTex, stanfordnlp)

Understanding LLM Explainability Metric XPLAIN : A new metric named “XPLAIN” has been proposed to quantify the explainability of black-box LLMs. This method utilizes cosine similarity to calculate word-level importance scores, revealing how LLMs interpret input statements and which words have the greatest impact on the output. This research aims to enhance understanding of LLM internal mechanisms and has provided code and a paper for community reference. (Source: Reddit r/MachineLearning)
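
The general idea of scoring word importance with cosine similarity can be illustrated with a leave-one-out sketch. This is an assumption about how such a score could be computed, not XPLAIN's actual procedure, which is not described here. The `toy_model` function is a hypothetical stand-in for a black-box LLM that maps text to an output vector.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def word_importance(sentence: str, model: Callable[[str], Vector]) -> List[Tuple[str, float]]:
    """Score each word by how far the output moves when that word is removed."""
    words = sentence.split()
    baseline = model(sentence)
    scores = []
    for i, word in enumerate(words):
        perturbed = " ".join(words[:i] + words[i + 1:])
        scores.append((word, 1.0 - cosine_similarity(baseline, model(perturbed))))
    return scores


def toy_model(text: str) -> Vector:
    """Hypothetical black box: sums fixed per-word vectors; unknown words add nothing."""
    vocab = {"not": [0.0, 3.0, 0.0], "good": [2.0, 0.0, 0.0], "movie": [0.0, 0.0, 1.0]}
    out = [0.0, 0.0, 0.0]
    for word in text.lower().split():
        for k, value in enumerate(vocab.get(word, [0.0, 0.0, 0.0])):
            out[k] += value
    return out


scores = word_importance("the movie was not good", toy_model)
for word, score in sorted(scores, key=lambda pair: pair[1], reverse=True):
    print(f"{word:>6}: {score:.3f}")
```

With this toy model, removing "not" changes the output direction the most, so it receives the highest score, while "the" and "was" score zero.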

MoHoBench: Evaluating the Honesty of Multimodal Large Models : MoHoBench is the first benchmark systematically evaluating the honest behavior of Multimodal Large Language Models (MLLMs), measuring their honesty by analyzing their responses to visually unanswerable questions. The benchmark includes over 12,000 visual question-answering samples, revealing that most MLLMs fail to refuse answers when necessary and that their honesty is profoundly influenced by visual information, calling for the development of specialized multimodal honesty alignment methods. (Source: HuggingFace Daily Papers)

Hierarchical Reasoning Model (HRM) Achieves Breakthrough in ARC-AGI : The Hierarchical Reasoning Model (HRM) has made significant progress in ARC-AGI tasks, achieving 25% accuracy with only 1k examples and limited computational resources, demonstrating its strong potential in complex reasoning tasks. Inspired by the brain’s hierarchical processing mechanisms, this model is expected to drive breakthroughs in the reasoning capabilities of general AI systems. (Source: VictorTaelin)

ACL 2025 Paper on LLM Evaluation : A paper presented at ACL 2025 demonstrated how to determine if one language model outperforms another, emphasizing the importance of evaluation in LLM application development. This research aims to provide more effective methods for comparing and selecting LLMs, helping developers avoid blind experimentation without actual progress. (Source: gneubig, charles_irl)

Understanding the Emergence of Soft Preferences in LLMs : A new paper explores how robust and universal “soft preferences” in human language production emerge from strategies that minimize an autoregressive memory cost function. This research provides a deeper understanding of the subtle human-like characteristics in LLM-generated text, offering new perspectives on LLM behavioral mechanisms. (Source: stanfordnlp)

Defining LLM Agents : Harrison Chase, founder of LangChain, shared his definition of AI Agents, emphasizing that an AI Agent’s “agentic” degree depends on how autonomously the LLM decides its next actions. This perspective helps clarify the concept of AI Agents and guides developers on how to measure their autonomy when building Agent systems. (Source: hwchase17)

💼 Business

Anthropic Valuation Soars to $170 Billion : Anthropic, the company behind Claude, is reportedly in talks for a new funding round of up to $5 billion, which is expected to value it at $170 billion, making it the second AI unicorn with a valuation over a hundred billion dollars, after OpenAI. This round is led by Iconiq Capital and may attract participation from entities such as the Qatar Investment Authority, Singapore’s sovereign wealth fund GIC, and Amazon. Anthropic’s revenue primarily comes from API calls, showing strong performance particularly in AI programming, with annualized revenue already reaching $4 billion. (Source: 36Kr, 36Kr)
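
As a rough reading of these figures, the short calculation below derives the implied revenue multiple and the stake a $5 billion raise would represent. It is a back-of-the-envelope sketch. The report does not say whether $170 billion is a pre-money or post-money valuation, so both cases are shown as assumptions.

```python
raise_usd = 5e9                 # reported upper bound of the funding round
valuation_usd = 170e9           # reported valuation (pre- or post-money not stated)
annualized_revenue_usd = 4e9    # reported annualized revenue

# Valuation as a multiple of annualized revenue.
revenue_multiple = valuation_usd / annualized_revenue_usd

# Share of the company sold, under each interpretation of the valuation.
stake_if_post_money = raise_usd / valuation_usd
stake_if_pre_money = raise_usd / (valuation_usd + raise_usd)

print(f"Valuation / annualized revenue: {revenue_multiple:.1f}x")
print(f"New investors' stake if post-money: {stake_if_post_money:.2%}")
print(f"New investors' stake if pre-money:  {stake_if_pre_money:.2%}")
```

Either way, the round would represent a stake of just under 3%, at roughly 42.5 times annualized revenue.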

Surge AI Achieves $1 Billion Revenue with High-Quality Data : Surge AI, founded by Edwin Chen, achieved over $1 billion in annual revenue with a team of 120 people, without external funding or a sales team, far surpassing peers in efficiency. The company specializes in providing high-quality human feedback data (RLHF), with its “Surge Force” elite labeling network ensuring data accuracy through stringent standards and professional backgrounds (e.g., MIT math PhDs), making it a preferred vendor for top AI labs like OpenAI and Anthropic. It plans to launch a $1 billion Series A funding round, potentially valuing it at $15 billion. (Source: 36Kr)

Nvidia Data Center Revenue Grows 10x in Two Years : Nvidia’s data center revenue has grown tenfold in the past two years, and with the lifting of H20 chip restrictions, it is expected to maintain strong growth momentum. This growth is primarily driven by the immense demand for GPU computing power from large AI models, solidifying Nvidia’s leadership in the AI hardware market. (Source: Reddit r/artificial)
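
For a sense of scale, tenfold growth over two years can be converted into an equivalent yearly rate. The sketch below assumes smooth compound growth across the two years, which the source does not claim.

```python
growth_multiple = 10.0  # reported: revenue grew tenfold
years = 2               # reported: over the past two years

# Compound annual growth: the yearly factor that, applied `years` times, gives 10x.
annual_factor = growth_multiple ** (1.0 / years)
annual_growth_rate = annual_factor - 1.0

print(f"Equivalent yearly factor: {annual_factor:.2f}x")
print(f"Equivalent yearly growth: {annual_growth_rate:.0%}")
```

That works out to revenue a little more than tripling each year.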

🌟 Community

Discussion on the Effectiveness of Role-Playing in AI Prompts : The community is actively discussing the practical utility of role-playing in large model prompts, generally agreeing that it can guide AI to focus on specific tasks and improve output quality by steering probability distributions toward high-quality data. Some argue, however, that both over-reliance on role-playing and outright rejection of it are empty formalism, and that the key lies in understanding what the task actually requires. (Source: dotey)

AI Coding Sparks Controversy Over Code Volume and Quality : The efficiency of AI-assisted coding tools and the quality of the code they produce have sparked heated discussion on social media. Some users report that AI can quickly generate tens of thousands of lines of code, while also expressing concerns about its maintainability and architectural choices. The discussions suggest that AI-generated code cannot simply be generated and shipped without thought and may require extensive human review and modification, highlighting the challenges posed by AI’s changing role in software development. (Source: vikhyatk, dotey, Reddit r/ClaudeAI)

Meta AI Strategy and Talent War Spark Community Discussion : Meta’s recent flurry of moves in AI has sparked widespread community discussion. These include CEO Mark Zuckerberg’s “personal superintelligence” vision, offers of up to $1 billion to poach top AI talent (including employees of Mira Murati’s startup), and a “cautious” stance on open-sourcing its future top models. The moves are read as a sign of Meta’s ambitions in AI, but they also raise concerns about the AI talent market, technological ethics, and the open-source spirit. (Source: dotey, teortaxesTex, joannejang, tokenbender, amasad)

AI in Education: Applications and Ethical Challenges : Despite OpenAI launching ChatGPT’s learning mode to guide student thinking, the community generally expresses concerns about its ethical implications in education, such as cheating risks and a decline in critical thinking skills. Discussions indicate that AI applications in education need to balance innovation with academic integrity, and explore how to address these challenges through deeper personalized teaching and educational curriculum design. (Source: 36Kr, Reddit r/artificial, Reddit r/ArtificialInteligence)

AI Model Hallucinations and Content Authenticity Challenges : On social media, the phenomenon of “hallucinations” in AI-generated content and its impact on information authenticity have sparked widespread discussion. Users have found that AI can generate information that appears professional but is logically inconsistent or false, especially in image and video generation, where authenticity is hard to discern. This has led to a crisis of trust in AI tools and prompted reflection on how to maintain human discernment and critical thinking, avoiding over-reliance on algorithms. (Source: 36Kr, teortaxesTex, Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/artificial)

AI’s Dual Impact on Socioeconomics and Individual Creativity : The community is engaged in a polarized discussion regarding AI’s socioeconomic impact. On one hand, some CEOs have openly stated that AI will “end work as we know it,” sparking concerns about job displacement; on the other hand, users have shared how AI empowers individuals to realize entrepreneurial ideas even without budget and technical skills, viewing AI as “the great equalizer” that liberates individual creativity. (Source: Reddit r/artificial, Reddit r/ArtificialInteligence)

AI Open-Source vs. Closed-Source Security Debate : The community is engaged in a fierce debate regarding the security of open-source versus closed-source AI models. Some argue that deploying models behind APIs or chatbots might be riskier than releasing open-weight models, as it lowers the barrier for malicious use. The debate calls for a re-evaluation of the notion that “open weights are unsafe” and emphasizes that AI safety should transcend simple technical openness. (Source: bookwormengr)

Exploring AI and Human Emotional Connection : On social media, opinions vary on forming emotional connections with AI. Some users believe that a relationship with AI is a personal choice as long as it doesn’t affect normal life; others worry that over-reliance on AI companionship might reduce patience for real human relationships. The debate has prompted deeper reflection on the ethics and psychological impact of AI companions. (Source: Reddit r/ChatGPT, ClementDelangue)

💡 Other

Uneven Global AI Development and Geopolitical Impact : The UN Deputy Secretary-General called for bridging the “AI divide,” pointing out that AI development capabilities are concentrated in a few countries and companies, leading to inequalities in technology and governance. Experts emphasize that AI should augment human capabilities rather than replace them, and flexible governance mechanisms are needed to avoid categorical differences between technologists and non-technologists. Furthermore, geopolitical competition in AI, such as the US-China AGI race, has also become a focal point of international concern. (Source: 36Kr, teortaxesTex)

AI Copyright War: Imagination vs. Machines : The UK is currently experiencing a debate about AI copyright, with the core issue being whether AI tech companies can scrape human-created content for training and generating “enhanced” content without permission or compensation. This debate focuses on the copyright ownership of creative works in the AI era and the protection of creators’ rights, reflecting the conflict between technological development and existing legal frameworks. (Source: Reddit r/artificial)

Ethical Concerns Sparked by FDA’s AI Applications : Reports indicate that the U.S. FDA’s AI might “fabricate research” during drug approval processes, sparking concerns about the ethics and accuracy of AI applications in healthcare. The case highlights the challenges that AI-assisted decision-making systems face in high-risk areas, including data authenticity, transparency, and ensuring that AI decisions comply with ethical and regulatory standards. (Source: Ronald_vanLoon)