Keywords: AI training data copyright, AlphaGenome, OpenAI hardware plagiarism, AI college entrance exam scores, Gemini CLI, RLT new method, BitNet b1.58, Biomni agent, AI fair use ruling, DNA base pair prediction, Altman responds to plagiarism allegations, Large model mathematical capability improvement, Terminal AI agent free quota

🔥 Focus

AI Training Data Copyright Sees Landmark Ruling: U.S. Court Rules That Training AI on Legally Purchased Books Constitutes “Fair Use”: In a lawsuit against Anthropic, a U.S. federal court ruled that AI companies’ use of legally purchased published books to train large language models falls under the “fair use” doctrine and does not require prior consent from authors. The court deemed AI training a “transformative use” of the original works, one that does not directly supplant the market for the originals and that benefits technological innovation and the public interest. However, the court also ruled that training on pirated books does not constitute fair use, and Anthropic could still be held liable on that count. The ruling drew on the precedent set by the 2015 Google Books case and is seen as a significant step toward reducing copyright risk for AI training data, potentially influencing similar cases (such as lawsuits against OpenAI and Meta). In addition, Meta won a favorable judgment in a similar copyright lawsuit, where the judge found that the plaintiffs had failed to show that Meta’s use of their books to train AI models caused them economic harm. Together, these rulings give the AI industry clearer legal guidance on acquiring and using data, while underscoring the importance of acquiring that data legally. (Source: 量子位, DeepLearning.AI Blog, wiredmagazine)

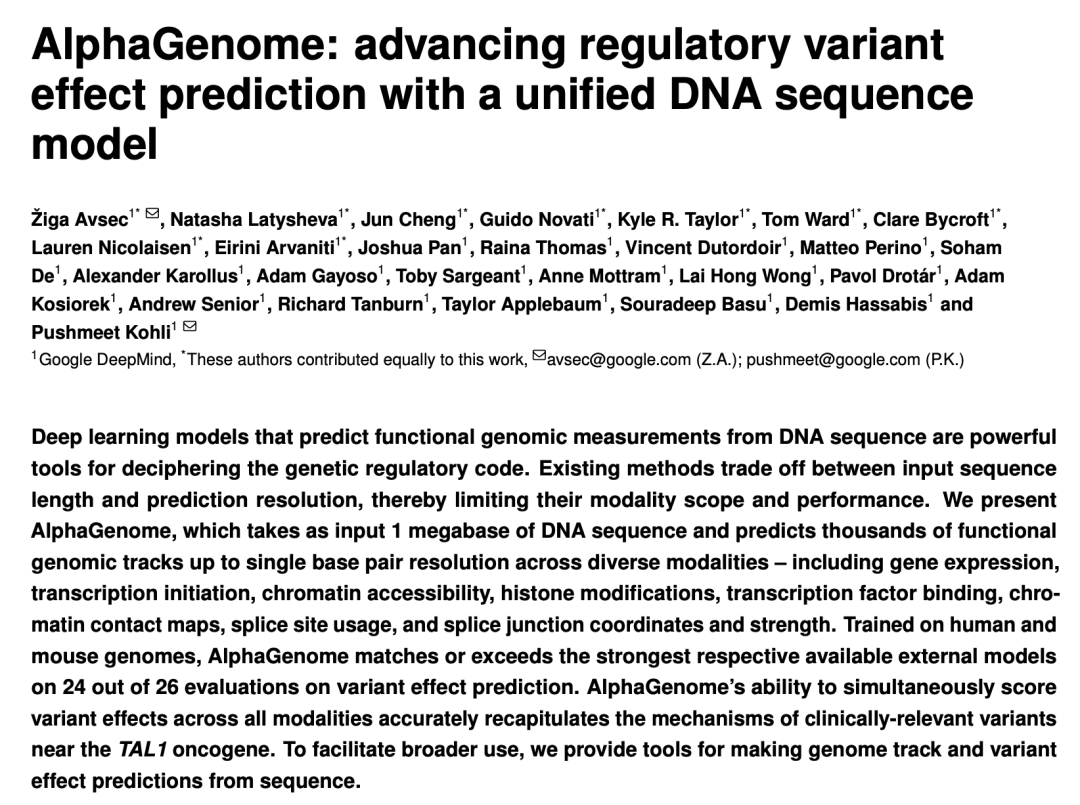

Google DeepMind Releases AlphaGenome: AI “Microscope” Predicts Impact of Genetic Variations Across Millions of DNA Base Pairs: Google DeepMind has introduced AlphaGenome, an AI model capable of taking DNA sequences up to 1 million base pairs long as input to predict thousands of molecular properties and assess the impact of genetic variations. It has demonstrated leading performance in over 20 genomic prediction benchmarks. AlphaGenome features high-resolution long-sequence context processing, comprehensive multimodal prediction, efficient variant scoring, and a novel splice junction model. A single model can be trained in just 4 hours with half the computational budget of the original Enformer model. The model aims to help scientists understand gene regulation, accelerate disease understanding (especially rare diseases), guide synthetic biology design, and advance fundamental research. It is currently available as a preview via API for non-commercial research use, with plans for a full release in the future. (Source: 36氪, Google, demishassabis)

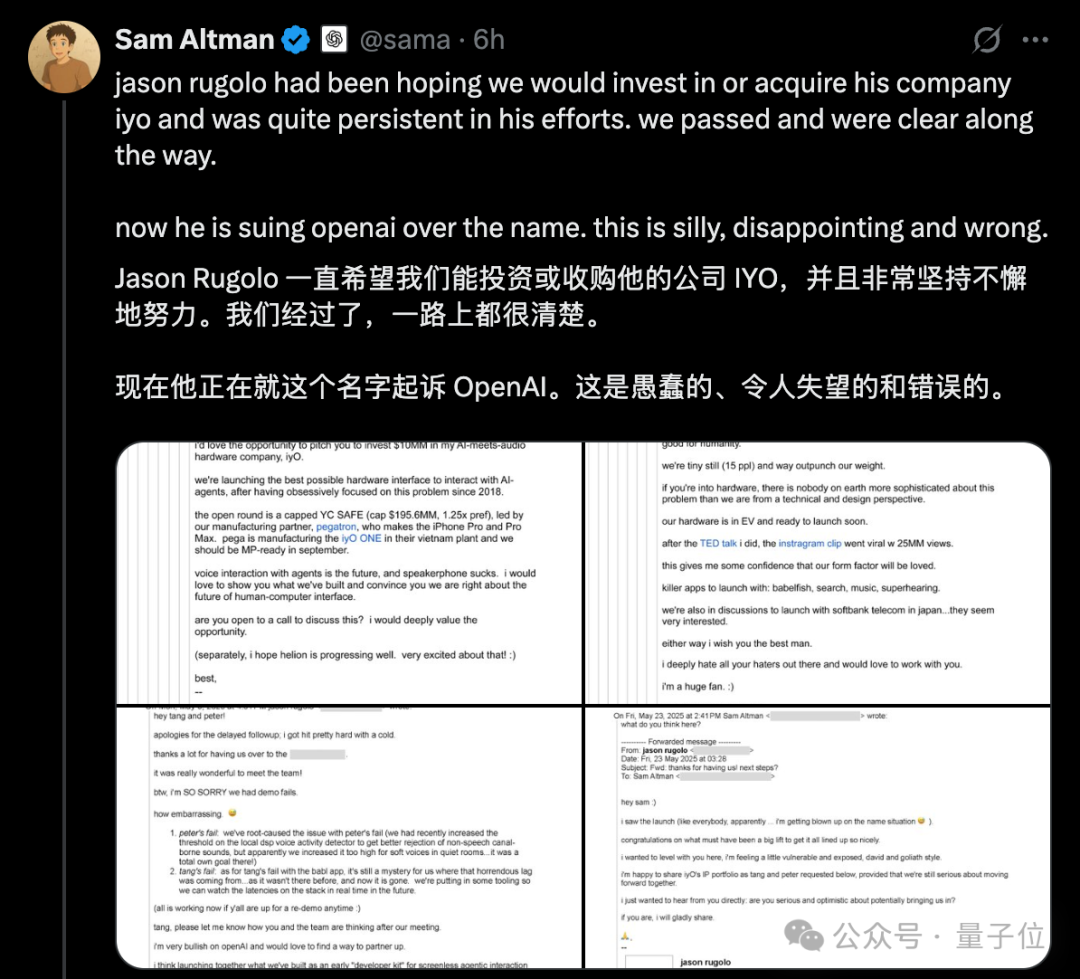
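
Variant-effect scoring of this kind generally compares a model’s predictions for a reference sequence with its predictions for the same sequence carrying the variant. The sketch below shows only that comparison. `toy_predict` is a hypothetical stand-in (the GC fraction of each sliding window), not AlphaGenome or its API, and taking the largest absolute track change is just one assumed way to summarize the difference.

```python
from typing import List


def toy_predict(seq: str, window: int = 4) -> List[float]:
    """Hypothetical stand-in for a genomic model: one 'track' value per
    window position (here, the GC fraction of each sliding window)."""
    seq = seq.upper()
    return [
        sum(base in "GC" for base in seq[i:i + window]) / window
        for i in range(len(seq) - window + 1)
    ]


def apply_snv(seq: str, pos: int, ref: str, alt: str) -> str:
    """Substitute a single nucleotide after checking the reference base."""
    if seq[pos].upper() != ref.upper():
        raise ValueError(f"reference mismatch at {pos}: {seq[pos]} != {ref}")
    return seq[:pos] + alt + seq[pos + 1:]


def variant_score(seq: str, pos: int, ref: str, alt: str) -> float:
    """Score = largest absolute change between the ALT and REF predicted tracks."""
    ref_track = toy_predict(seq)
    alt_track = toy_predict(apply_snv(seq, pos, ref, alt))
    return max(abs(a - r) for a, r in zip(alt_track, ref_track))


print(variant_score("ATATGCGCATAT", 2, "A", "G"))  # 0.25
```

A real model would emit thousands of tracks (expression, splicing, accessibility and so on) per position, and a score would typically be computed per track rather than collapsed into one number.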

OpenAI Hardware “Copycat” Controversy Escalates, Altman Publicly Refutes IYO Lawsuit with Emails: AI hardware startup IYO has accused OpenAI and io, the hardware company OpenAI acquired (founded by former Apple designer Jony Ive), of trademark infringement and copying its product. In response, OpenAI CEO Sam Altman posted on social media calling IYO’s lawsuit “silly, disappointing, and completely wrong.” He shared email screenshots showing that IYO founder Jason Rugolo had actively sought a $10 million investment from, or an acquisition by, OpenAI before the lawsuit, and still hoped to share IYO’s intellectual property after OpenAI announced the io acquisition. Altman argues that IYO sued only after these attempts failed. Rugolo retorted that Altman was staging a “trial by internet” and insisted on IYO’s rights to the product name. Earlier, a court granted IYO a temporary restraining order barring OpenAI from using the io branding. OpenAI says its hardware product is different from IYO’s custom ear-worn device, that its prototype design is not finalized, and that it will not launch for at least a year. (Source: 量子位, 36氪)

AI Large Models Retake College Entrance Exam, Overall Scores Significantly Improve, Math Abilities Show Prominent Progress: Results of GeekPark’s 2025 AI college entrance exam (gaokao) simulation show that mainstream large models (such as Doubao, DeepSeek R1, and ChatGPT o3) scored significantly higher overall than last year, putting top universities within reach. Doubao, the estimated top scorer, would rank in roughly the top 900 in Shandong Province. The models’ imbalance between humanities and science subjects has narrowed, with average science scores growing faster. Mathematics improved the most, with the average score rising by 84.25 points and now surpassing Chinese and English. Multimodal capability became a key differentiator, especially in subjects with many image-based questions such as physics and geography. Although the models performed well on complex reasoning and calculation, they still made mistakes on simple problems containing visually confusing information (such as a math vector problem). In essay writing, they produced well-structured, well-argued articles but lacked deep critical thinking and emotional resonance, making top-tier compositions hard to achieve. (Source: 36氪)

🎯 Trends

Google’s Gemini CLI, Powered by Gemini 2.5 Pro, Released with Generous Free Quotas, Drawing Attention: Google has officially released Gemini CLI, an AI assistant that runs in the terminal and is built on the Gemini 2.5 Pro model. Its highlight is an extremely generous free tier: a 1-million-token context window, up to 60 model requests per minute, and 1,000 per day, which puts strong competitive pressure on paid tools such as Anthropic’s Claude Code. Gemini CLI is open source under the Apache 2.0 license and supports writing and debugging code, managing projects, querying documentation, and calling other Google services (for example, image and video generation) via MCP. Google emphasizes the advantages of a general-purpose model for complex development tasks, arguing that code-only models can be limiting. The community has responded enthusiastically, expecting the release to accelerate adoption of, and competition among, CLI AI tools. (Source: 36氪, Reddit r/LocalLLaMA, dotey)

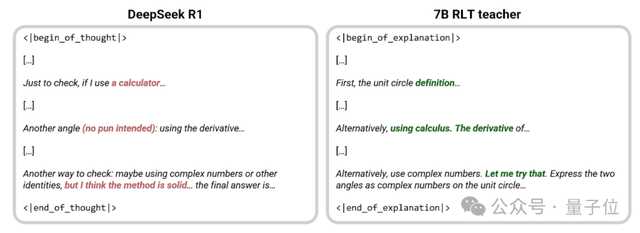

Sakana AI Proposes New RLT Method, 7B Teacher Model Outperforms DeepSeek-R1 at Teaching: Sakana AI, co-founded by Transformer co-author Llion Jones, has proposed Reinforcement-Learned Teachers (RLTs). Instead of solving problems from scratch, the teacher model is given the known solution and trained to produce clear step-by-step explanations, mimicking how excellent human teachers guide students. Experiments show that a 7B RLT teacher trained this way outperforms the 671B DeepSeek-R1 at imparting reasoning skills and can effectively train student models up to 3 times its size (e.g., 32B), at significantly lower training cost. Traditional teacher models depend on their own problem-solving ability and are slow and expensive to train; RLT improves efficiency by aligning teacher training with its real purpose, helping the student model learn. (Source: 量子位, SakanaAILabs)

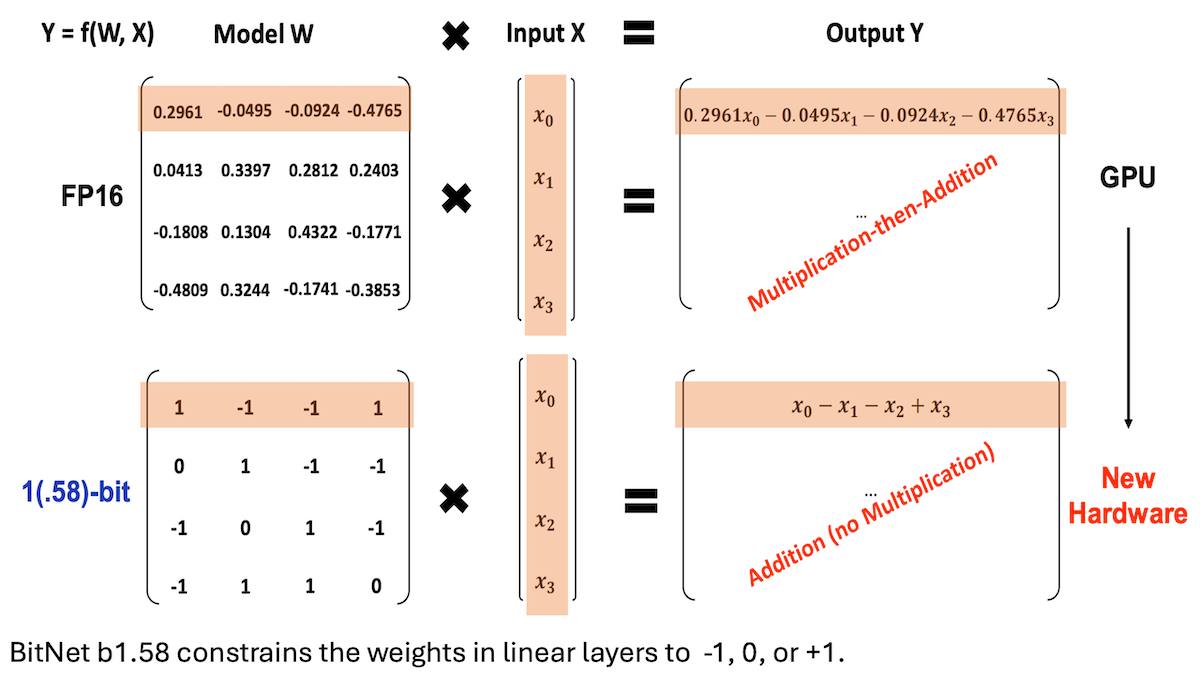
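
The teacher’s training signal can be pictured as asking: after reading this explanation, how likely does the student find the known solution? Below is a simplified sketch, assuming the reward is the student’s mean log-probability of the solution tokens; the published RLT reward has additional terms that are omitted here, and the per-token probabilities are made-up numbers, not model outputs.

```python
import math
from typing import Dict, List


def solution_likelihood_reward(solution_token_probs: List[float]) -> float:
    """Mean log-probability the student assigns to the known solution's tokens
    after reading an explanation (higher means the explanation helped more)."""
    if not solution_token_probs:
        raise ValueError("need at least one solution token")
    return sum(math.log(p) for p in solution_token_probs) / len(solution_token_probs)


def rank_explanations(candidates: Dict[str, List[float]]) -> List[str]:
    """Order candidate explanations from most to least helpful under this reward."""
    return sorted(
        candidates,
        key=lambda name: solution_likelihood_reward(candidates[name]),
        reverse=True,
    )


# Made-up student probabilities for the same 3-token solution after two explanations.
candidates = {
    "terse_hint": [0.40, 0.30, 0.50],
    "step_by_step": [0.90, 0.80, 0.85],
}
print(rank_explanations(candidates))  # ['step_by_step', 'terse_hint']
```

In actual RL training such a score would serve as the reward for updating the teacher’s policy; the ranking here only makes the signal visible.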

Microsoft et al. Propose BitNet b1.58, Achieving Low-Precision, High-Inference-Performance LLMs: Researchers from Microsoft, the University of Chinese Academy of Sciences, and Tsinghua University have updated the BitNet b1.58 model, in which most weights are restricted to three values, -1, 0, or +1 (about 1.58 bits per parameter, since log₂3 ≈ 1.58). At 2 billion parameters, its performance is competitive with leading full-precision models of similar size. The model relies on carefully designed training strategies (such as quantization-aware training and two-stage learning-rate and weight-decay schedules). It is faster and uses less memory than models such as Qwen2.5-1.5B and Gemma-3 1B, and across 16 popular benchmarks its average accuracy is close to that of Qwen2.5-1.5B and higher than a 4-bit quantized version of Qwen2.5-1.5B. The work shows that low-precision models can reach high performance through careful training design and hyperparameter tuning, opening new avenues for efficient LLM deployment. (Source: DeepLearning.AI Blog)
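
The ternary weights come from an “absmean” quantizer: scale the weight tensor by its mean absolute value, then round and clip each entry to {-1, 0, +1}. Three possible values carry log₂3 ≈ 1.585 bits of information, which is where the “1.58-bit” name comes from. Below is a minimal pure-Python sketch of the quantizer described in the original BitNet b1.58 paper; the quantization-aware training loop, activation quantization, and packed inference kernels used in practice are not shown.

```python
import math
from typing import List, Tuple


def absmean_ternary(weights: List[float], eps: float = 1e-6) -> Tuple[List[int], float]:
    """Quantize a flattened weight tensor to {-1, 0, +1} with one absmean scale."""
    gamma = sum(abs(w) for w in weights) / len(weights)  # mean absolute value
    # RoundClip(w / (gamma + eps), -1, 1)
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma


def dequantize(q: List[int], gamma: float) -> List[float]:
    """Approximate the original weights as gamma * q."""
    return [gamma * v for v in q]


weights = [0.9, -0.05, 0.4, -1.2]
q, gamma = absmean_ternary(weights)
print(q, round(gamma, 4))  # [1, 0, 1, -1] 0.6375
print(dequantize(q, gamma))
print(f"{math.log2(3):.3f} bits per ternary weight")  # 1.585 bits per ternary weight
```

Because every weight is -1, 0, or +1, matrix multiplication reduces to additions and subtractions followed by one rescale by gamma, which is the source of the speed and memory gains.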

Stanford and Other Institutions Launch Biomni, an Intelligent Agent for Biological Research, Integrating Over 100 Tools and Databases: Researchers from Stanford University, Princeton University, and other institutions have launched Biomni, an AI agent for broad biological research. Built on Claude Sonnet 4, the agent integrates 150 tools, nearly 60 databases, and about 100 popular biology software packages, identified and filtered from 2,500 recent papers across 25 biological subfields (including genomics, immunology, and neuroscience). Biomni can answer questions, propose hypotheses, design workflows, analyze datasets, and generate charts. It uses the CodeAct framework, responding to queries through iterative planning, code generation, and execution, and adds a second Claude Sonnet 4 instance as a judge to assess the plausibility of intermediate outputs. On benchmarks such as LAB-Bench and in real-world case studies, Biomni outperformed standalone Claude Sonnet 4 as well as Claude models augmented with literature retrieval. (Source: DeepLearning.AI Blog)
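
In a CodeAct-style agent, each action is a piece of executable code, and the execution result becomes the next observation. The sketch below shows only the shape of that loop with a judge step: `scripted_planner` stands in for the LLM that writes code and `plausibility_judge` for the second model acting as judge. Both are hypothetical, and running model-written code with `exec` would require sandboxing in practice.

```python
import contextlib
import io
from typing import Callable, List, Optional


def run_code(code: str, namespace: dict) -> str:
    """Execute one code action and return its printed output (or the error) as the observation."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, namespace)  # a real agent would run this in a sandbox
    except Exception as exc:
        return f"Error: {exc!r}"
    return buffer.getvalue().strip()


def codeact_loop(propose: Callable[[List[str]], Optional[str]],
                 judge: Callable[[str], bool],
                 max_steps: int = 5) -> List[str]:
    """Plan, write code, execute, judge; stop when the planner returns None."""
    namespace: dict = {}  # shared state, so later actions can reuse earlier variables
    history: List[str] = []
    for _ in range(max_steps):
        code = propose(history)
        if code is None:
            break
        observation = run_code(code, namespace)
        verdict = "ok" if judge(observation) else "rejected"
        history.append(f"{observation} [{verdict}]")
    return history


# Hypothetical stand-ins for the two LLM roles.
script = iter([
    "counts = {'A': 3, 'C': 2, 'G': 2, 'T': 3}; print(sum(counts.values()))",
    "print(round((counts['G'] + counts['C']) / sum(counts.values()), 2))",
])


def scripted_planner(history: List[str]) -> Optional[str]:
    return next(script, None)


def plausibility_judge(observation: str) -> bool:
    return not observation.startswith("Error")


print(codeact_loop(scripted_planner, plausibility_judge))  # ['10 [ok]', '0.4 [ok]']
```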

Anthropic Introduces New Claude Feature: Create and Share AI-Powered Artifacts: Anthropic has introduced a feature in its Claude app that lets users create, host, and share “Artifacts” (small AI applications or tools) with Claude’s intelligence embedded directly in them. Users can therefore go beyond generating code snippets or running analyses and build functional, AI-driven applications. A key detail: when these apps are shared, viewers authenticate with their own Claude accounts, and usage counts against the viewer’s own subscription quota rather than the creator’s. The feature is in beta and available to all Free, Pro, and Max users, with the aim of lowering the barrier to building AI applications and encouraging wider sharing of AI capabilities. (Source: kylebrussell, Reddit r/ClaudeAI)

Maya Research Releases Veena TTS Model, Supporting Hindi and English with More Authentic Indian Accents: Maya Research has launched Veena, a text-to-speech (TTS) model based on a 3B Llama architecture and licensed under Apache 2.0. Veena can generate English and Hindi speech with Indian accents, including code-mixed speech, addressing existing TTS models’ shortcomings in authentic Indian pronunciation. The model has latency under 80 milliseconds, can run in a free Google Colab environment, and is available on Hugging Face Hub. The team says it is actively developing support for other Indian languages such as Tamil, Telugu, and Bengali. (Source: huggingface, huggingface)

HiDream.ai Releases vivago2.0, Integrating Multimodal Generation and Editing Capabilities: HiDream.ai, founded by AI expert Tao Mei, has launched vivago2.0, a multimodal AI creation tool. The product combines image generation, image-to-video conversion, AI podcasting (lip-sync), and special-effect templates, along with a creative community where users share work and find inspiration. Its core technology is HiDream-A1, a new image agent that integrates advanced closed-source versions of the previously open-sourced HiDream-I1 (a 17-billion-parameter foundational image generation model that topped text-to-image leaderboards) and HiDream-E1 (an interactive image editing model). vivago2.0 aims to lower the barrier to multimodal content creation, offering hundreds of special-effect templates and supporting image generation and editing through natural-language dialogue (Image Agent). (Source: 量子位)

Nvidia Releases RTX 5050 Series GPUs, Desktop and Laptop Versions Feature Different VRAM Configurations: Nvidia has officially released the GeForce RTX 5050 series, with desktop and laptop versions scheduled to launch in July. In China, the desktop version has a suggested retail price starting at 2,099 RMB. The desktop RTX 5050 has 2,560 Blackwell CUDA cores, 8GB of GDDR6 VRAM, and a maximum power draw of 130W. The laptop RTX 5050 also has 2,560 CUDA cores but uses more power-efficient 8GB GDDR7 VRAM. Nvidia claims that with DLSS 4, the RTX 5050 can exceed 150 fps with ray tracing in games like Cyberpunk 2077, and that it delivers an average rasterization performance gain of 60% (desktop) and 2.4x (laptop) over the RTX 3050. The differing memory configurations reflect Nvidia’s strategy of balancing cost and performance across market segments. (Source: 量子位)

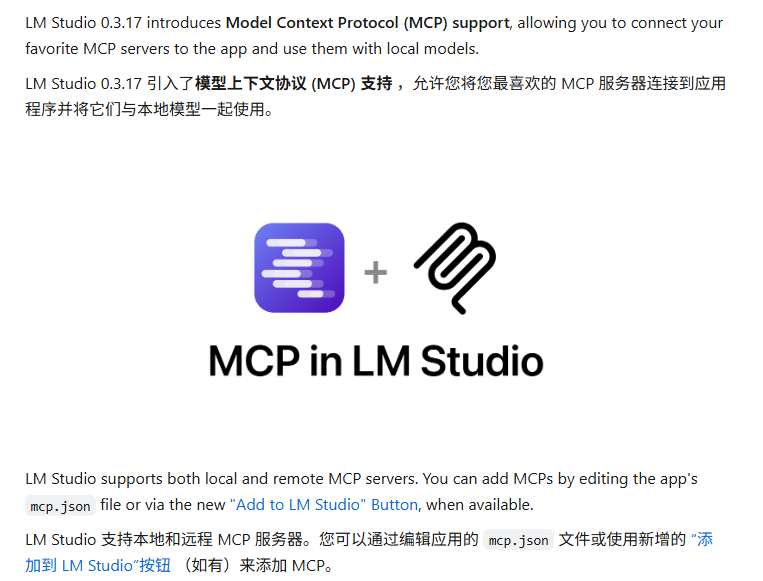

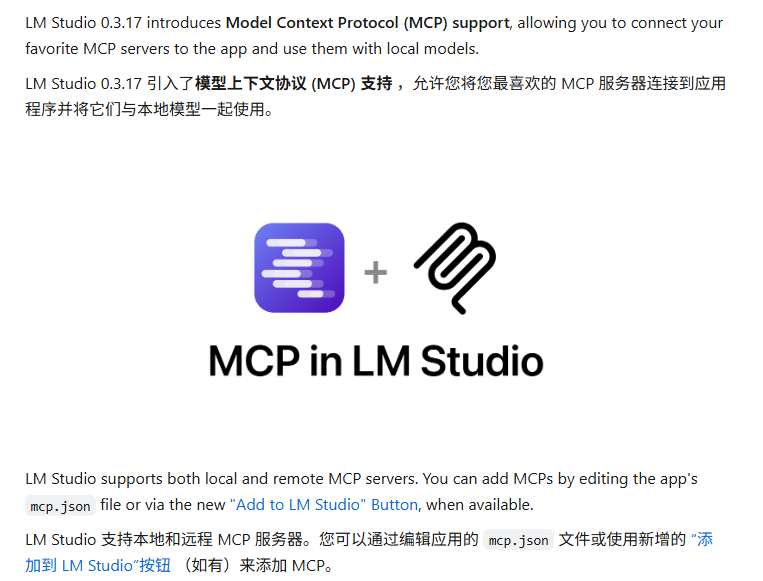

LM Studio Updated to Support MCP Protocol, Enabling Connection of Local LLMs with MCP Servers: LM Studio, a desktop app for running LLMs locally, has released version 0.3.17 with support for the Model Context Protocol (MCP). Users can now connect locally running large language models to MCP-compatible servers, for example to call external tools or services. LM Studio’s interface has been updated so users can install and configure MCP servers, and the app can automatically launch and manage local MCP server processes. To simplify setup, LM Studio also provides an online tool that generates MCP server configuration links, which can be imported with one click. (Source: multimodalart, karminski3)
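
An MCP configuration of this kind is plain JSON. LM Studio’s documentation indicates that its `mcp.json` follows Cursor’s `mcpServers` notation, where each named server is either a local command to launch or a remote URL. The sketch below generates such content, assuming that layout; the server names, script name, and URL are hypothetical, and the exact accepted fields should be checked against the current LM Studio docs.

```python
import json
from typing import Dict, List, Optional


def server_entry(command: Optional[str] = None, args: Optional[List[str]] = None,
                 url: Optional[str] = None) -> Dict[str, object]:
    """One MCP server entry: either a local process (command + args) or a remote URL."""
    if (command is None) == (url is None):
        raise ValueError("give exactly one of `command` or `url`")
    if url is not None:
        return {"url": url}
    return {"command": command, "args": list(args or [])}


config = {
    "mcpServers": {
        # Hypothetical local server that would be launched as a child process.
        "my-local-tools": server_entry(command="python", args=["my_mcp_server.py"]),
        # Hypothetical remote server reached over HTTP.
        "my-remote-tools": server_entry(url="https://example.com/mcp"),
    }
}

print(json.dumps(config, indent=2))
```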

Gradio Launches Lightweight Experiment Tracking Library Trackio: Hugging Face’s Gradio team has released Trackio, a lightweight library for tracking and visualizing experiments. Written in fewer than 1,000 lines of Python, it is fully open source and free, and can be used locally or hosted. Trackio aims to make it easier for developers to log and monitor metrics and results from machine learning experiments, simplifying experiment management. (Source: ClementDelangue, _akhaliq)

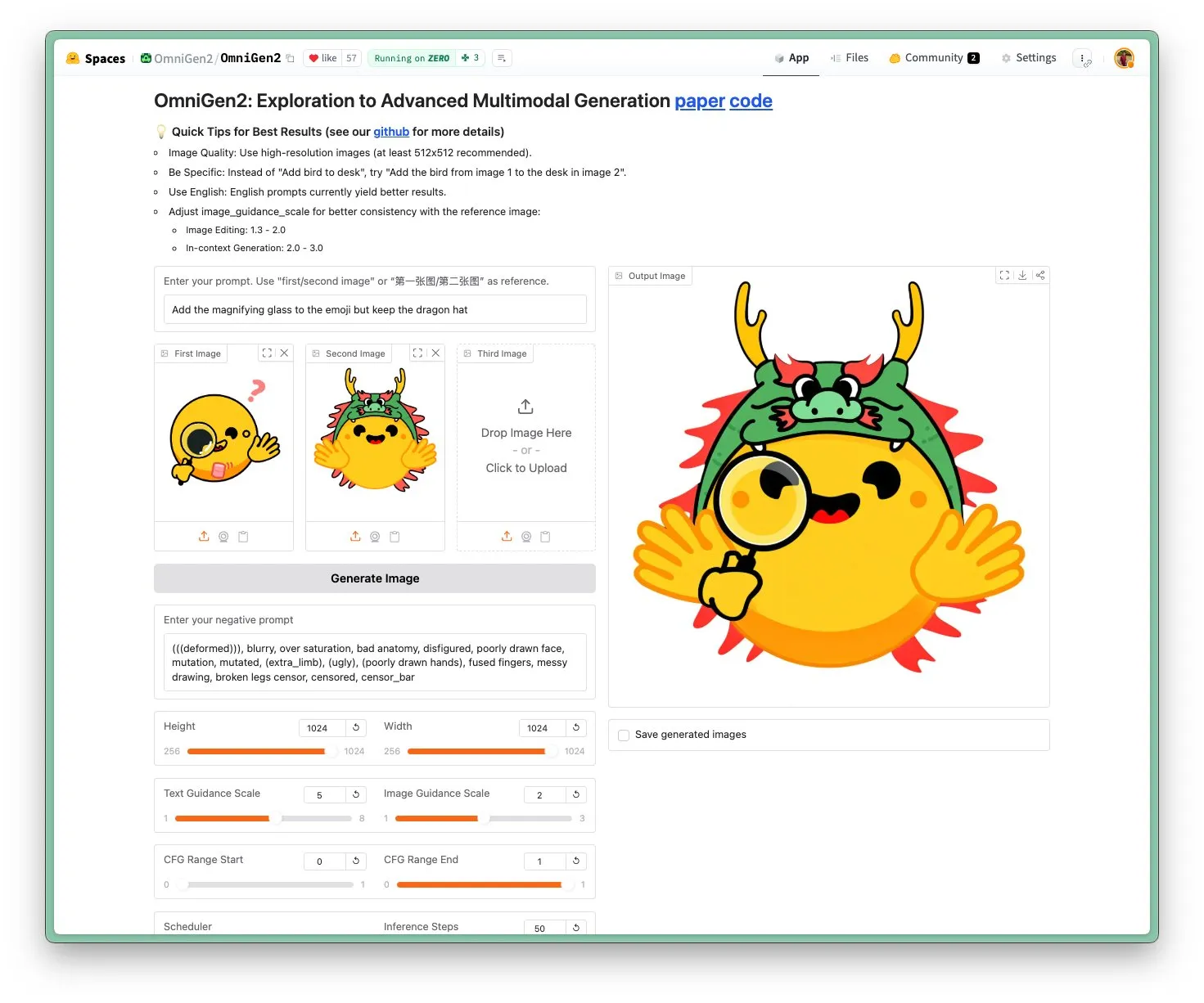

OmniGen 2 Released: Apache 2.0 Licensed SOTA Image Editing and Multifunctional Vision Model: The new OmniGen 2 model achieves state-of-the-art (SOTA) performance in image editing and is released under the Apache 2.0 open-source license. Beyond image editing, it handles in-context generation, text-to-image generation, visual understanding, and other tasks. A demo and the model are available on Hugging Face Hub. (Source: huggingface)

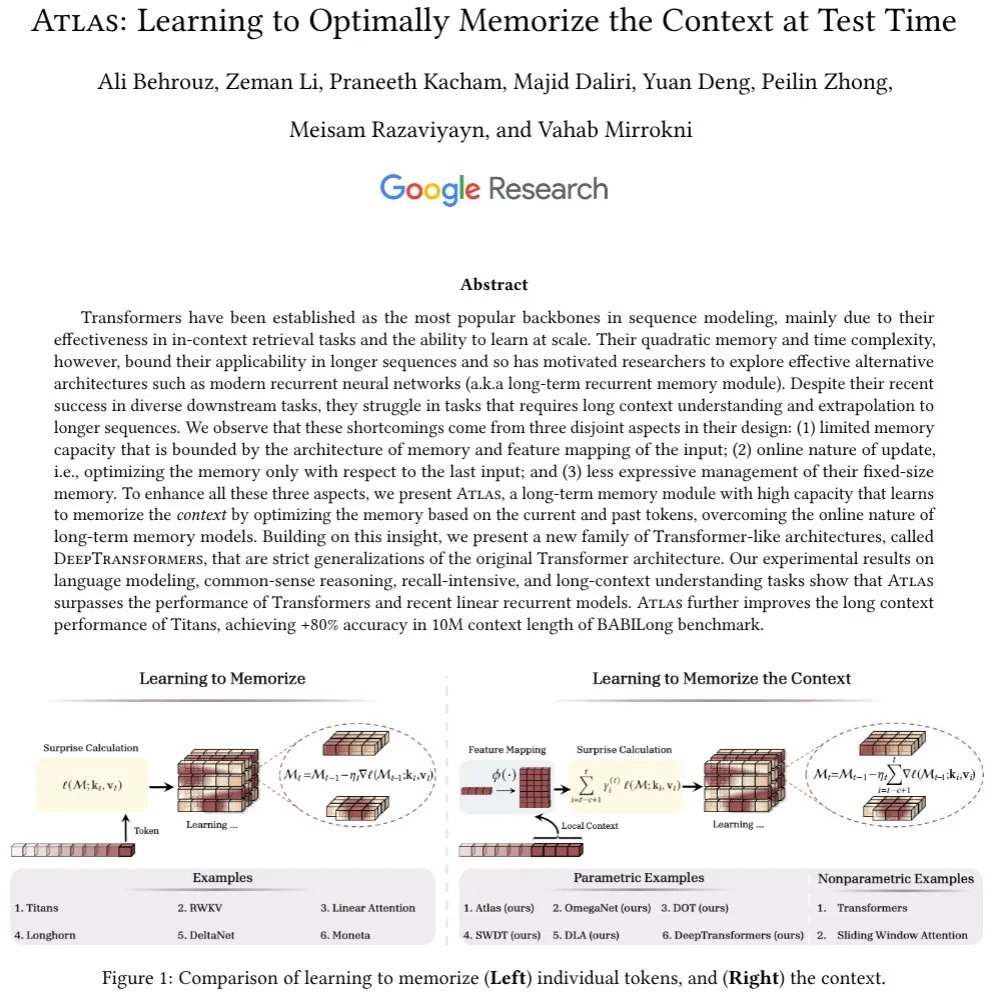

Atlas Architecture Proposed: Features Long-Range Contextual Memory, Challenging Transformers: The newly proposed Atlas architecture targets the long-range memory problem in LLMs and is claimed to outperform Transformers and modern linear RNNs on language modeling tasks. Atlas learns how to memorize context at test time, can extend the effective context length of Titans models, and achieves over 80% accuracy on the BABILong benchmark at a 10-million-token context length. The researchers also present a family of models built on Atlas’s ideas that strictly generalize softmax attention. (Source: behrouz_ali)
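
Atlas belongs to a line of work (including Titans) in which a memory module is optimized while the model reads its input. As a heavily simplified sketch, not Atlas’s actual method, here is a linear associative memory M trained at test time by gradient descent to map keys to values, with the loss taken over a sliding window of the most recent key-value pairs rather than only the current token. The window size, learning rate, step count, and toy vectors are all illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Sequence[float]) -> Vector:
    """Compute M @ x."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def window_step(m: Matrix, pairs: Sequence[Tuple[Vector, Vector]], lr: float) -> Matrix:
    """One gradient-descent step on sum_i ||M k_i - v_i||^2 over the window."""
    rows, cols = len(m), len(m[0])
    grad = [[0.0] * cols for _ in range(rows)]
    for k, v in pairs:
        err = [p - t for p, t in zip(matvec(m, k), v)]  # M k - v
        for r in range(rows):
            for c in range(cols):
                grad[r][c] += 2.0 * err[r] * k[c]  # gradient is 2 (M k - v) k^T
    return [[m[r][c] - lr * grad[r][c] for c in range(cols)] for r in range(rows)]


def memorize_stream(pairs: Sequence[Tuple[Vector, Vector]], dim: int,
                    window: int = 2, lr: float = 0.1, steps: int = 20) -> Matrix:
    """Read pairs in order, refitting the memory to the most recent `window` pairs."""
    m = [[0.0] * dim for _ in range(dim)]
    for t in range(len(pairs)):
        recent = pairs[max(0, t - window + 1): t + 1]
        for _ in range(steps):
            m = window_step(m, recent, lr)
    return m


stream = [([1.0, 0.0], [0.5, -1.0]), ([0.0, 1.0], [2.0, 0.0])]
memory = memorize_stream(stream, dim=2)
print([round(x, 2) for x in matvec(memory, [1.0, 0.0])])  # [0.5, -1.0]
print([round(x, 2) for x in matvec(memory, [0.0, 1.0])])  # [1.98, 0.0]
```

The first key has been refit for more steps than the second, so it is recalled more precisely; querying the memory with a key retrieves the value associated with it without storing the raw token history.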

Moondream 2B Vision Model Updated: Enhanced Visual Reasoning & UI Understanding, 40% Faster Text Generation: A new version of the Moondream 2B vision model has been released, featuring improvements in visual reasoning, object detection, and UI understanding, along with a 40% increase in text generation speed. This indicates continuous optimization of small multimodal models in specific capabilities, aiming to provide more efficient and accurate visual-language interaction. (Source: mervenoyann)

Inworld AI and Modular Collaborate on Low-Cost, High-Quality TTS Model: Inworld AI has announced a new text-to-speech (TTS) model that it says cuts the cost of state-of-the-art TTS by 20 times, to $5 per million characters. The model is based on the Llama architecture, and its training and modeling code will be open-sourced. Partner Modular stated that their technical collaboration produced the lowest-latency, fastest TTS inference platform on NVIDIA B200, and that a joint technical report will be released. (Source: clattner_llvm)

Higgsfield AI Releases Soul Model: Focusing on High-Aesthetic Photo Generation: Higgsfield AI has launched a new photo generation model called Higgsfield Soul, specializing in high aesthetic value and fashion-grade realism. The model offers over 50 carefully curated presets, aiming to generate images comparable to professional photography, challenging traditional mobile phone photography. (Source: _akhaliq)

🧰 Tools

Gemini CLI: Google’s Open-Source Terminal AI Agent, Offering 1,000 Free Daily Calls to Gemini 2.5 Pro: Google has released Gemini CLI, an open-source command-line AI agent that lets users work with the Gemini 2.5 Pro model directly in the terminal. The tool offers a 1-million-token context window, and free users get up to 1,000 requests per day (60 per minute). Gemini CLI supports writing and debugging code, file system I/O, understanding web content, plugins, and the MCP protocol, with the goal of helping developers build and maintain software more efficiently. Its Apache 2.0 license and high free quota make it a strong competitor to existing tools like Claude Code, and its open code may also encourage support for local models. (Source: Reddit r/LocalLLaMA, dotey, yoheinakajima)
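
Scripts that call a quota-limited model in a loop can guard against the free-tier limits quoted above (60 requests per minute, 1,000 per day) on the client side. A minimal sketch, assuming only those two limits; `QuotaLimiter` is a hypothetical helper that does not talk to Gemini CLI or any Google API, and the service enforces its quotas server-side regardless.

```python
import time
from collections import deque
from typing import Callable, Deque


class QuotaLimiter:
    """Hypothetical client-side guard: at most `per_minute` requests in any
    60-second window and at most `per_day` requests in any 24-hour window."""

    def __init__(self, per_minute: int = 60, per_day: int = 1000,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.per_minute = per_minute
        self.per_day = per_day
        self.clock = clock
        self.sent: Deque[float] = deque()  # timestamps of requests in the last 24 h

    def try_acquire(self) -> bool:
        """Record a request and return True if both limits allow it, else False."""
        now = self.clock()
        while self.sent and now - self.sent[0] >= 86_400:  # drop requests older than a day
            self.sent.popleft()
        in_last_minute = sum(1 for t in self.sent if now - t < 60)
        if in_last_minute >= self.per_minute or len(self.sent) >= self.per_day:
            return False
        self.sent.append(now)
        return True


# Usage with a fake clock so the behaviour is easy to see.
fake_now = [0.0]
limiter = QuotaLimiter(clock=lambda: fake_now[0])
print(sum(limiter.try_acquire() for _ in range(70)))  # 60
fake_now[0] = 60.0
print(limiter.try_acquire())  # True
```

In a batch script, a `False` result would be the cue to sleep and retry rather than send the request.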

Superconductor: A Tool to Manage a Team of Claude Code Agents on Mobile or Desktop: Superconductor is a new tool that allows users to manage a team of multiple Claude Code agents via their mobile phone or laptop. Users can write informal tickets, launch multiple agents for each ticket, with each agent having its own live application preview. Developers can generate a Pull Request (PR) from the best-performing agent’s work with a single click. The tool aims to simplify multi-agent collaboration and code generation workflows. (Source: full_stack_dl)

Udio Launches Sessions Feature, Enhancing Precision in AI Music Editing: AI music generation platform Udio has launched a “Sessions” feature for its standard and pro subscription users. This feature introduces a new timeline view for track editing, enabling users to craft music with greater precision and reduce reliance on AI’s random generation. Currently, Sessions supports extending or editing tracks, with more features to be added in the future. (Source: TomLikesRobots)

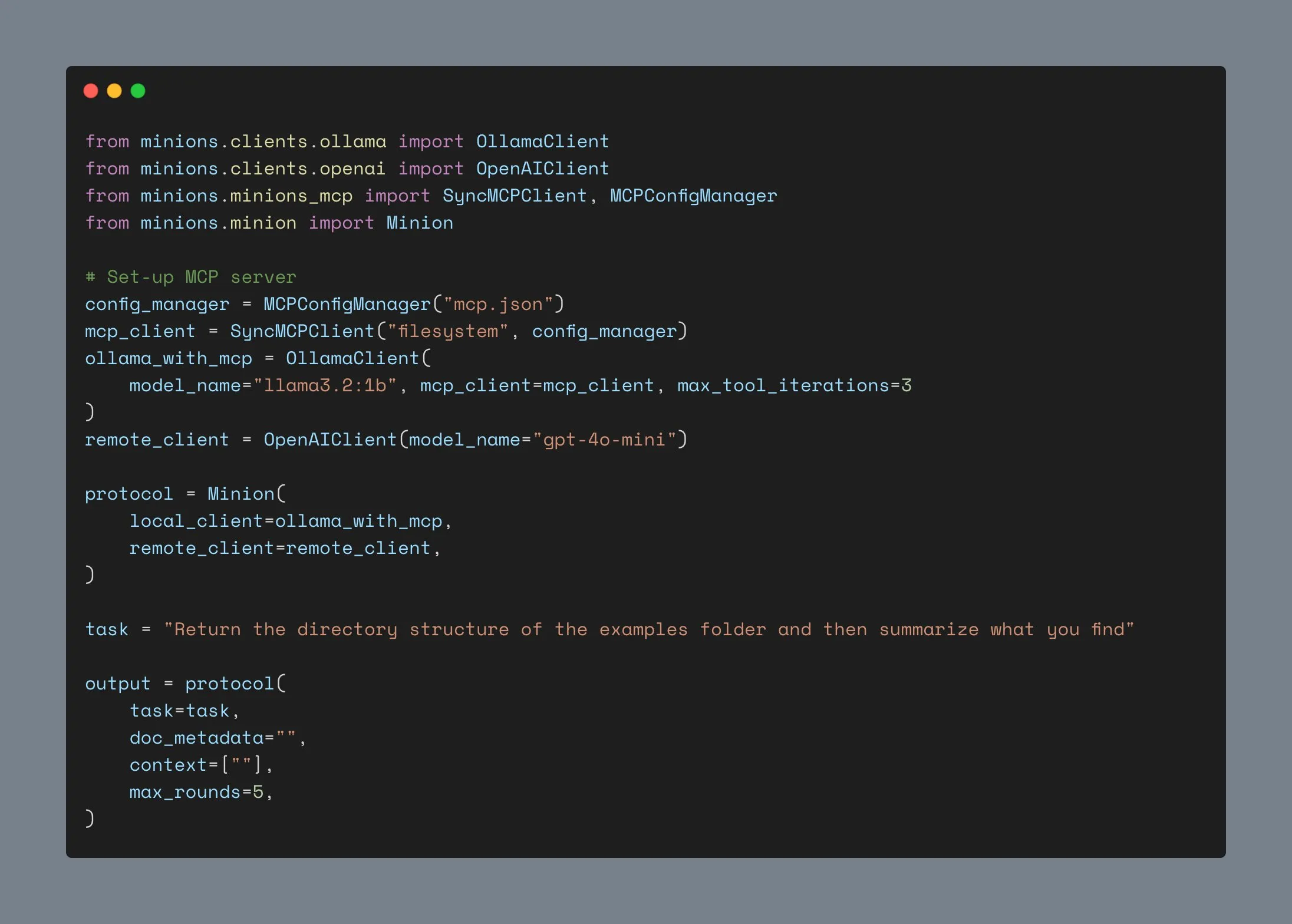

Ollama Client Updated, Supports MCP Integration, GitHub Stars Exceed 1000: The Ollama client has been updated so that its tool-calling capabilities can be connected to any MCP server (MCP being the Model Context Protocol introduced by Anthropic). Users can thus combine the convenience of Ollama’s locally run models with external tools exposed over MCP. Meanwhile, the project has passed 1,000 stars on GitHub. (Source: ollama)

📚 Learning

Andrew Ng Launches New Course: ACP Agent Communication Protocol: DeepLearning.AI, in collaboration with IBM Research, has launched a short course on the Agent Communication Protocol (ACP). ACP is an open protocol that standardizes communication between agents through a unified RESTful interface, aiming to solve integration challenges when building multi-agent systems across different teams and frameworks. The course teaches how to use ACP to connect agents built with different frameworks (such as CrewAI and smolagents), coordinate them in sequential and hierarchical workflows, and import ACP agents into the BeeAI platform (an open-source platform for registering and sharing agents). Students will learn the lifecycle of ACP agents and compare ACP with protocols such as MCP (Model Context Protocol) and A2A (Agent-to-Agent). (Source: AndrewYNg)

Johns Hopkins University Launches DSPy Course: Johns Hopkins University has introduced a course on DSPy. DSPy is a framework for algorithmically optimizing LLM prompts and weights, transforming the traditionally manual process of prompt engineering into a more systematic, programmable workflow of module building and optimization. Shopify CEO Tobi Lutke has also stated that DSPy is his preferred tool for context engineering. (Source: stanfordnlp, lateinteraction)

LM Studio Tutorial: Achieving a Local, Private ChatGPT-like Experience with Open-Source Hugging Face Models: Niels Rogge has released a YouTube tutorial showing how to use LM Studio with open-source models from Hugging Face (such as Mistral Small 3.2) to get a 100% private, offline ChatGPT-like experience locally. The tutorial explains GGUF, quantization, and why models still take up significant space even at 4-bit quantization, and demonstrates LM Studio’s compatibility with the OpenAI API. (Source: _akhaliq)
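
Why a 4-bit model still takes up a lot of disk space and memory comes down to arithmetic: weight size ≈ parameter count × bits per weight ÷ 8 bytes. A quick sketch, assuming Mistral Small 3.2’s roughly 24 billion parameters and counting weights only; real GGUF files are somewhat larger because quantized blocks also store scale factors, and running the model additionally needs memory for the KV cache and activations.

```python
def weights_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Size of the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


PARAMS = 24e9  # Mistral Small 3.2 has roughly 24 billion parameters

for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: {weights_size_gb(PARAMS, bits):.1f} GB")
# 16-bit: 48.0 GB
# 8-bit: 24.0 GB
# 4-bit: 12.0 GB
```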

LlamaIndex to Host Online Workshop on Agent Memory: LlamaIndex will partner with AIMakerspace to host an online discussion on agent memory, covering persisting chat history, implementing long-term memory with static, fact-extraction, and vector memory blocks, writing custom memory logic, and when memory matters most. The session aims to help developers build agents that need real conversational context. (Source: jerryjliu0)

Weaviate Podcast Discusses RAG Benchmarking and Evaluation: Episode 124 of the Weaviate Podcast features Nandan Thakur, who has made significant contributions to search evaluation, discussing benchmarking and evaluation for Retrieval-Augmented Generation (RAG). Topics include benchmarks such as BEIR, MIRACL, TREC, and the recent FreshStack, as well as reasoning in RAG, query rewriting, iterative (looped) search, paginated search results, hybrid retrievers, and more. (Source: lateinteraction)

PyTorch Introduces flux-fast Recipe, Accelerating Flux Models 2.5x on H100: PyTorch has released flux-fast, a simple recipe that runs Flux models 2.5 times faster on H100 GPUs without complex tuning. The recipe aims to make this level of performance easy to reproduce, and the code has been released. (Source: robrombach)

MLSys 2026 Conference Information Announced: MLSys 2026 will take place in May 2026 in Bellevue, near Seattle, with a paper submission deadline of October 30 this year. All MLSys 2025 recordings are now free to watch on the official website. The conference focuses on research and advances in machine learning systems. (Source: JeffDean)

Stanford CS336 Course “Language Models from Scratch” Gains Attention: Stanford University’s CS336 course “Language Models from Scratch,” taught by Percy Liang and others, has received widespread acclaim. The course aims to provide students with an in-depth understanding of the technical details of language models by having them build models firsthand, addressing the disconnect between researchers and technical specifics. The course content and assignments are considered important learning resources for becoming an LLM expert. (Source: nrehiew_, jpt401)

💼 Business

Meta Invests $14.3 Billion in Scale AI and Hires its CEO Alexandr Wang to Accelerate AI R&D: To bolster its AI capabilities, Meta has reached an agreement with data labeling company Scale AI, investing $14.3 billion for a 49% non-voting stake and recruiting its founder and CEO Alexandr Wang and his team. Alexandr Wang will lead a new lab at Meta focused on superintelligence research. This move aims to inject top AI talent and large-scale data operations capabilities into Meta to address the lukewarm reception of its Llama 4 model and personnel turmoil in its AI research department. Scale AI will use the funds to accelerate innovation and distribute part of the capital to shareholders, with its Chief Strategy Officer Jason Droege serving as interim CEO. The deal may circumvent some government scrutiny by avoiding a direct acquisition. (Source: DeepLearning.AI Blog)

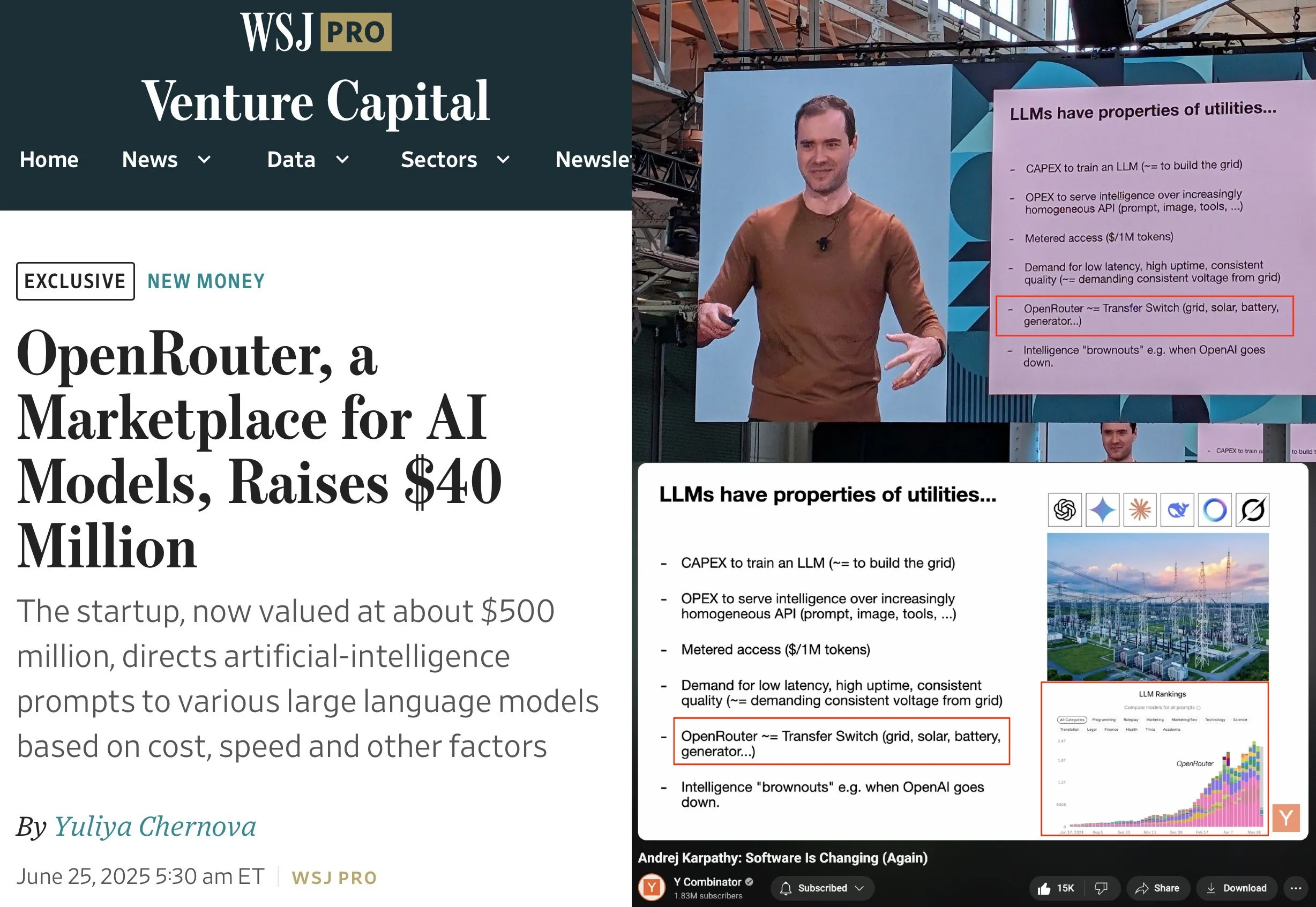

OpenRouter Raises $40 Million in Seed and Series A Funding Led by a16z and Menlo Ventures: OpenRouter, a control plane and model marketplace for LLM inference, announced it has raised a total of $40 million across its seed and Series A rounds, led by a16z and Menlo Ventures. OpenRouter aims to be a unified interface through which developers select and use different LLMs; it currently offers more than 400 models and processes 100 trillion tokens a year. The funding will go toward supporting more model modalities (such as image generation and multimodal interactive models), smarter routing (such as geo-routing and enterprise-grade GPU allocation), and better model discovery. (Source: amasad, swyx)

Humanoid Robot Company “Lingbao CASBOT” Secures Nearly 100 Million Yuan in Angel+ Funding Round, Led by Lens Technology: Humanoid robot brand “Lingbao CASBOT” announced it has closed an Angel+ round of nearly 100 million yuan, led by Lens Technology, with participation from Tianjin Jiayi and existing shareholders SDIC Chuanghe and Henan Asset. The funds will be used to accelerate mass production, R&D, and market expansion. Lingbao CASBOT focuses on general-purpose humanoid robots and embodied intelligence and has launched two bipedal humanoid robots, CASBOT 01 and 02, targeting special operations and broader human-computer interaction scenarios (such as guidance and education), respectively. The company’s core technologies include a hierarchical end-to-end model combined with post-training reinforcement learning, and it has already established collaborations in industrial manufacturing and in the mining and energy sectors with Zhaojin Group, China Minmetals Corporation, and others. (Source: 36氪, 36氪)

🌟 Community

Andrej Karpathy Advocates for “Context Engineering” over “Prompt Engineering,” Emphasizing Complexity of Building LLM Applications: Andrej Karpathy agrees with Tobi Lutke’s view that “context engineering” more accurately describes the core skill for industrial-grade LLM applications than “prompt engineering.” He points out that a prompt usually refers to a short task description input by users daily, whereas context engineering is a fine art and science involving precisely filling the context window with task descriptions, few-shot examples, RAG, multimodal data, tools, state history, etc., to optimize LLM performance. He also emphasizes that LLM applications are far more than this, requiring solutions to problem decomposition, control flow, multi-model scheduling, UI/UX, safety evaluation, and a series of complex software engineering problems, thus dismissing the notion of them being mere “ChatGPT wrappers.” (Source: karpathy, code_star, dotey)

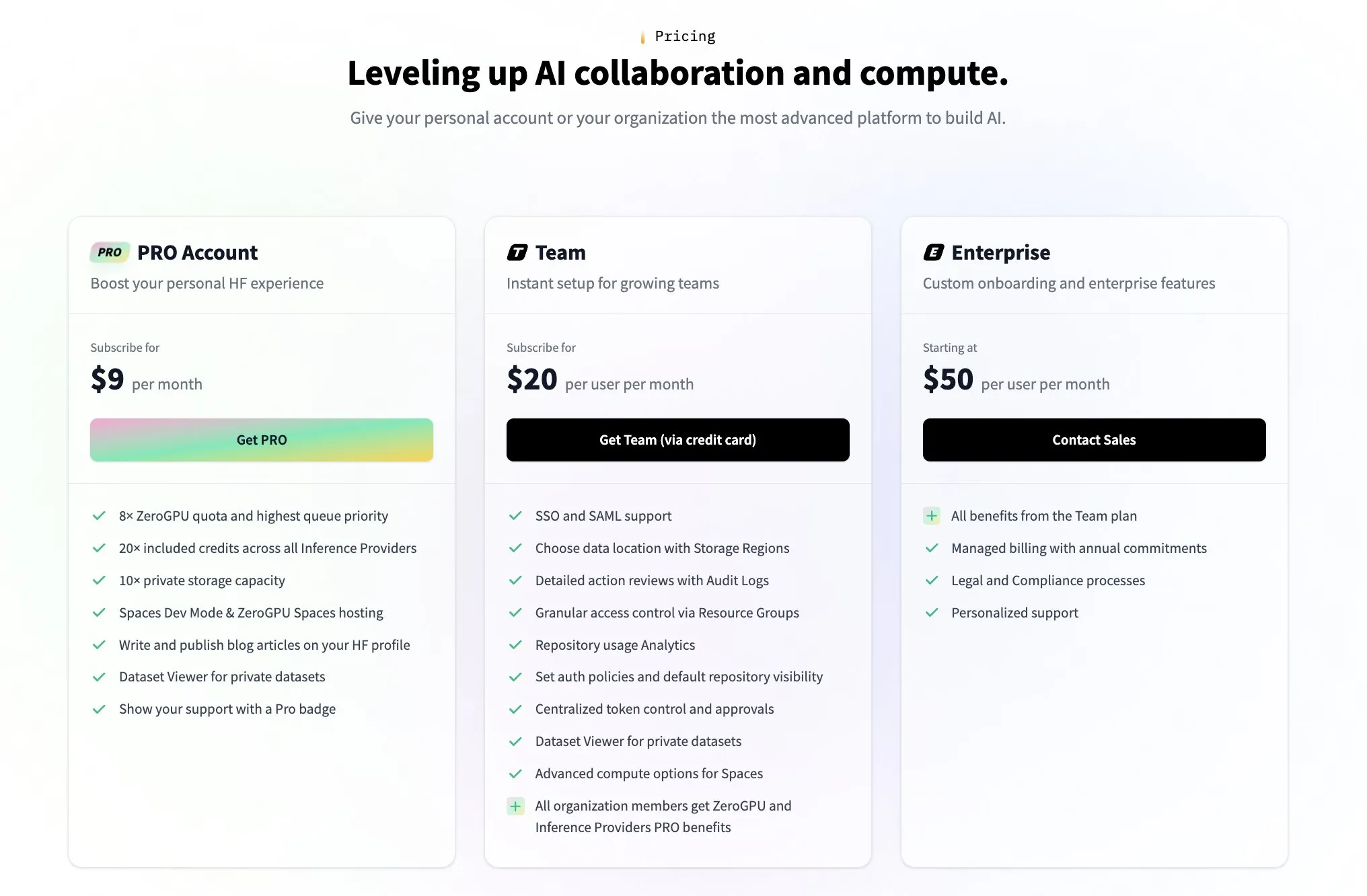
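
Karpathy’s point is that much of the work lies in deciding what goes into a finite context window. As a toy illustration only, the sketch below packs hypothetical context components by priority under a token budget; the component names, priorities, greedy policy, and four-characters-per-token estimate are all assumptions, and real systems would use the model’s tokenizer and richer selection logic.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContextPart:
    name: str
    text: str
    priority: int  # lower number = more important


def approx_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token); an assumption, not a real tokenizer."""
    return max(1, len(text) // 4)


def pack_context(parts: List[ContextPart], budget: int) -> List[str]:
    """Greedily admit parts by priority while they fit, then keep the original order."""
    chosen = []
    used = 0
    for index, part in sorted(enumerate(parts), key=lambda item: item[1].priority):
        cost = approx_tokens(part.text)
        if used + cost <= budget:
            chosen.append((index, part.name))
            used += cost
    return [name for _, name in sorted(chosen)]


parts = [
    ContextPart("task", "Summarize the bug report.", priority=0),  # ~6 tokens
    ContextPart("few_shot_examples", "x" * 400, priority=3),       # ~100 tokens
    ContextPart("retrieved_docs", "y" * 200, priority=1),          # ~50 tokens
    ContextPart("chat_history", "z" * 120, priority=2),            # ~30 tokens
]
print(pack_context(parts, budget=100))  # ['task', 'retrieved_docs', 'chat_history']
```

Restoring the original order after selection keeps the prompt’s layout stable (instructions first, then evidence), which is one reasonable design choice among several.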

Hugging Face Launches Paid Team Plan to Address Community Questions About Its Monetization Model: In response to community questions about how Hugging Face makes money (sparked by tweets such as one from user @levelsio), co-founder Clement Delangue joked about being “triggered by monetization anxiety” and announced a new paid premium Team plan. As a platform that hosts a vast number of AI models, offers free APIs, and does not require API keys, Hugging Face has long had its business model debated by the community. The new plan signals that it is actively exploring and expanding its revenue streams. (Source: huggingface, ClementDelangue)

Community Discusses AI Coding Assistant User Loyalty and Multi-Tool Collaboration: The Information reported that developer loyalty to coding assistants might be higher than expected. Simultaneously, there are instances in the community of developers using multiple AI coding tools like Claude Code, Codex (CLI), and Gemini (CLI) within the same codebase. This reflects developers actively experimenting with different AI tools to enhance efficiency, while also potentially seeking specific feature combinations best suited for their workflow, rather than relying entirely on a single tool. (Source: steph_palazzolo, code_star)

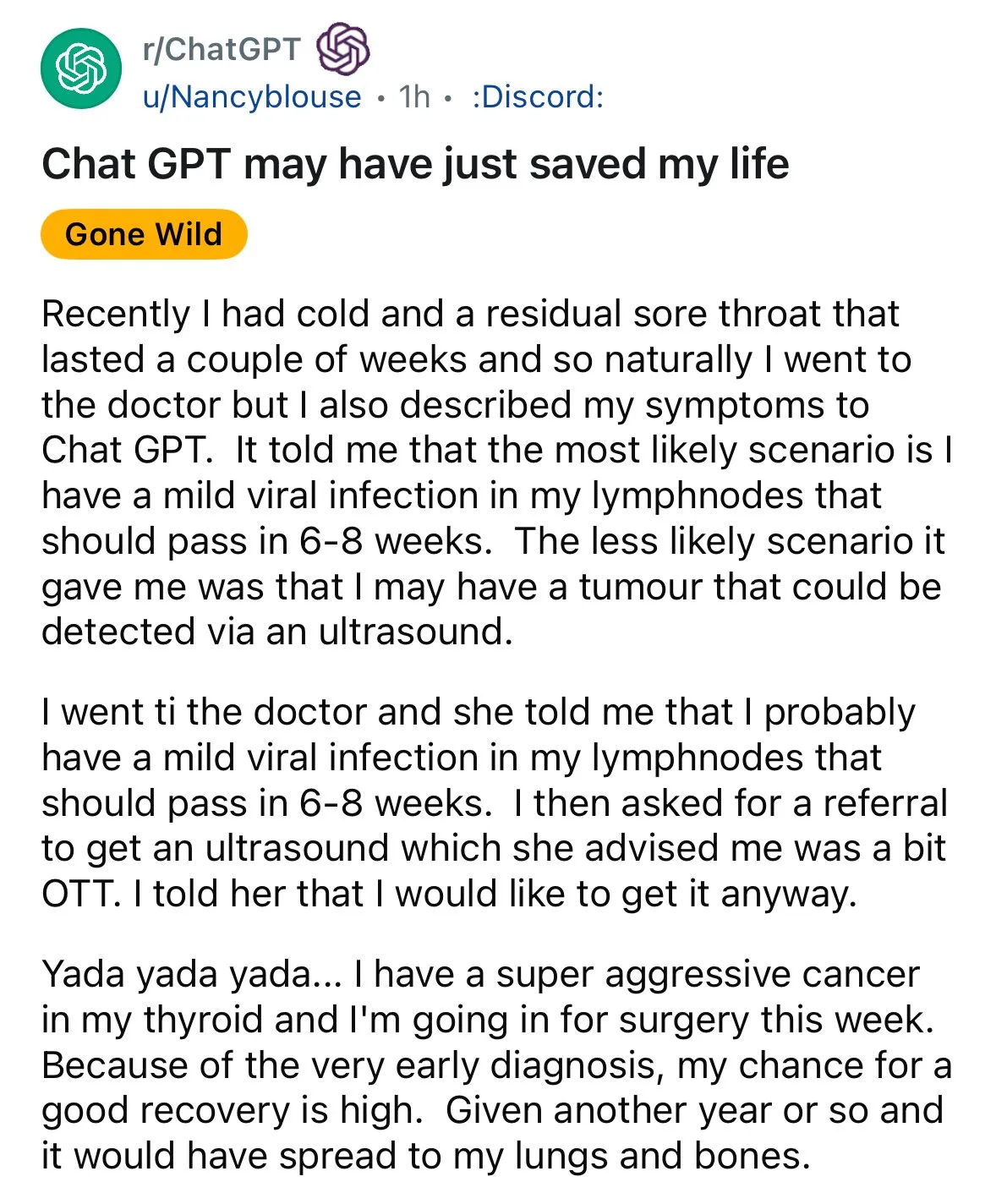

AI Shows Potential in Medical Diagnosis, Sparking “Second Opinion” Discussions: Cases of AI-assisted diagnosis have resurfaced on social media. In one, a patient with a sore throat who had been told by a doctor to wait and observe consulted ChatGPT, then got an ultrasound, and was ultimately diagnosed with thyroid cancer. Such cases have prompted calls for people to use AI as a “second opinion” on medical issues, on the grounds that it could save lives. Meanwhile, Alibaba DAMO Academy’s research on GRAPE, a model that detects early-stage gastric cancer from routine CT scans, was published in Nature Medicine, highlighting AI’s significant potential in early cancer screening. (Source: aidan_mclau, Yuchenj_UW)

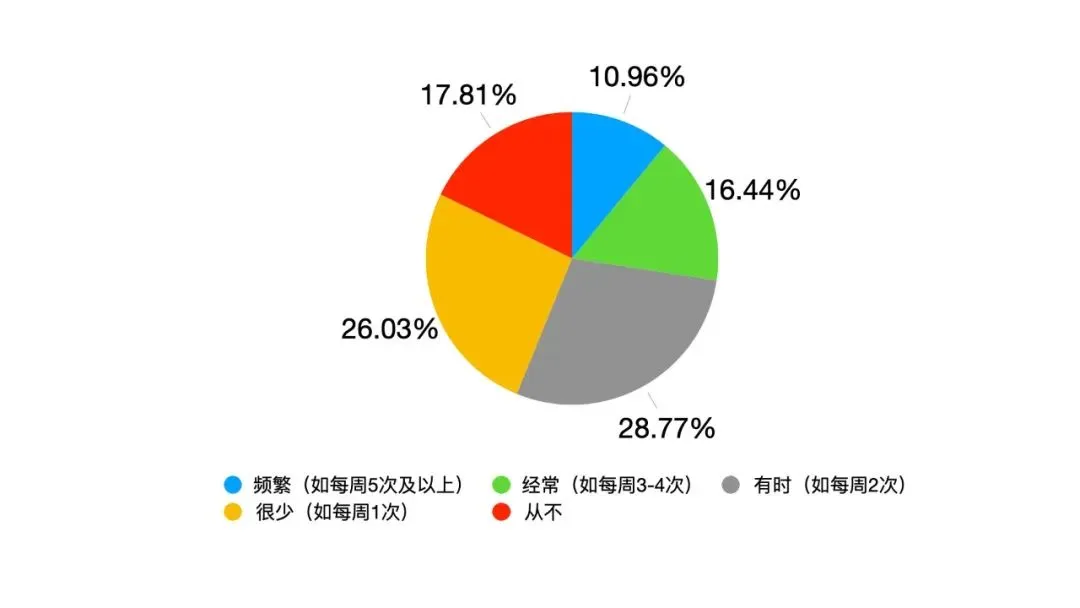

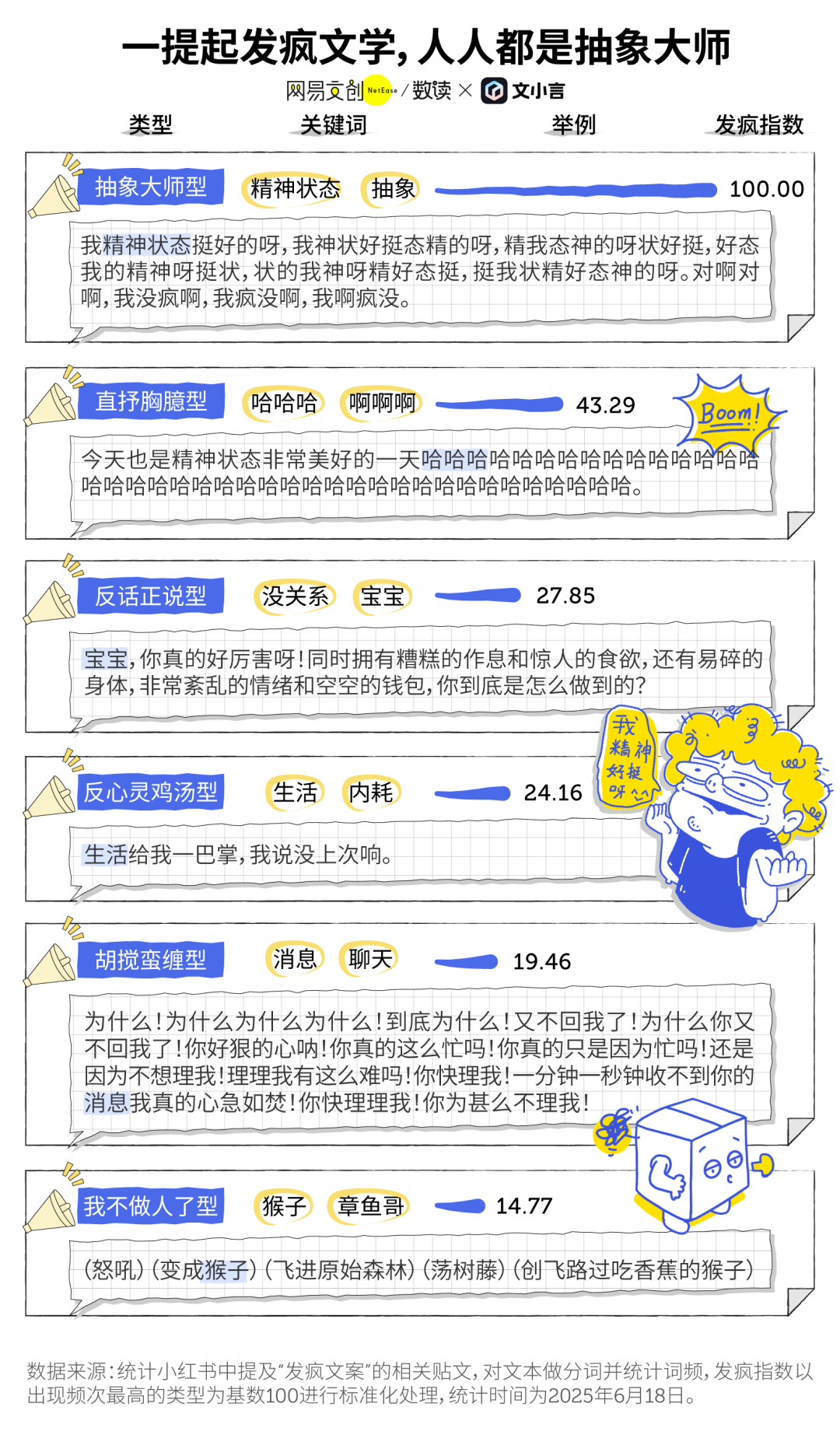

“Fafeng Wenxue” (Mad Literature) and AI Companionship in the AI Era: “Fafeng Wenxue,” popular among young people as a way to vent emotions and express minor resistance, is intersecting with AI tools. Many users turn to generative AI (like Wenxiaoyan’s Danxiaohuang) as an emotional outlet and companion for alleviating loneliness, seeking comfort, and even assisting in decision-making (such as post-argument reviews). Due to its patience, lack of bias, and constant availability, AI has become a low-cost, highly private “electronic friend,” helping users find solace in chaotic moments and is believed to contribute to improving mental well-being. (Source: 36氪)

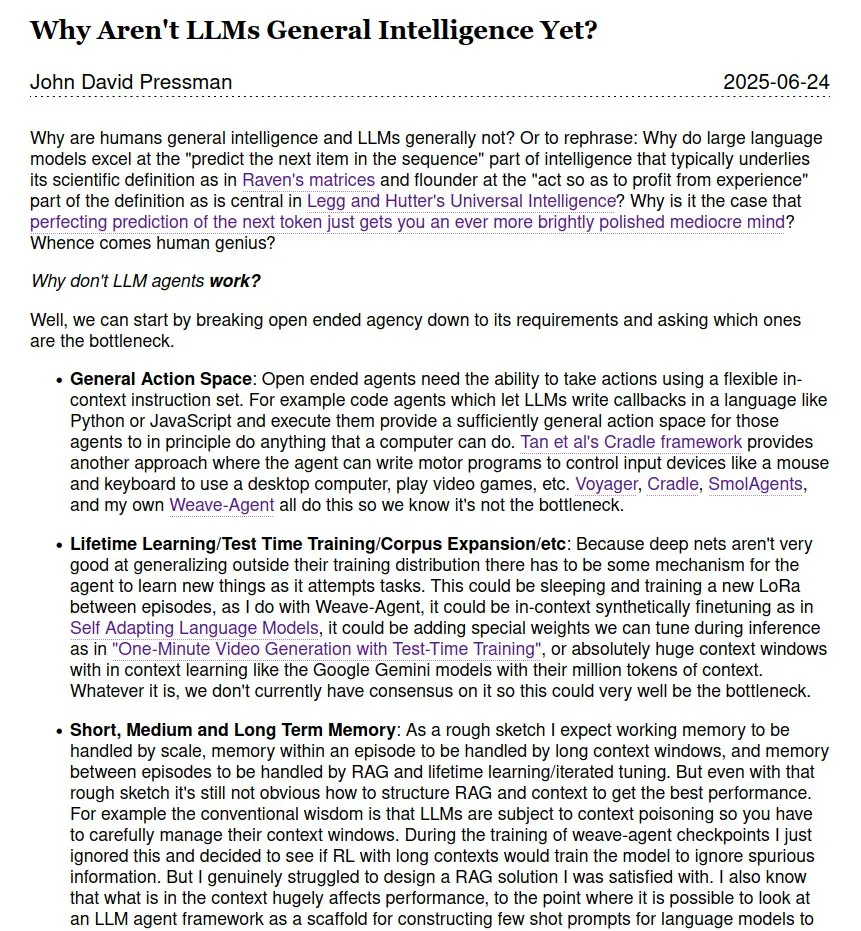

Ongoing Discussion on Whether LLMs Constitute Artificial General Intelligence (AGI): Discussions persist within the community regarding whether and when Large Language Models (LLMs) can achieve Artificial General Intelligence (AGI). Some argue that despite LLMs’ impressive performance on many tasks, they are still far from true AGI, especially lacking the theoretical underpinnings and internal operational data of human scientific genius. Opinions on the timeline for AGI realization also vary, with mentions ranging from the near term, such as 2028, to the more distant 2035-2040. (Source: menhguin)

💡 Others

World’s First Chatbot Eliza Successfully Restored After 60 Years: Eliza, the world’s first chatbot, created by MIT scientist Joseph Weizenbaum in the mid-1960s, has been “revived” 60 years later; its original code had been lost for many years until a printout was rediscovered. Teams from Stanford University and MIT cleaned up and debugged the original code, patched missing functionality, and built an emulation environment to bring Eliza back to life. The original Eliza analyzed the user’s text, extracted keywords, and restructured sentences into responses, playing the role of a Rogerian therapist, and many testers formed emotional attachments to it. The restored code and emulator have been released on GitHub for the public to try. (Source: 36氪)
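
Eliza’s core mechanism (match a keyword pattern, decompose the input around it, and reassemble the pieces into a reply with pronouns swapped) can be illustrated in a few lines. This is a modern toy re-creation, not Weizenbaum’s original MAD-SLIP code or his DOCTOR script; the three rules are invented for illustration.

```python
import re
from typing import List, Tuple

# Pronoun swaps applied to the captured fragment before reassembly.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

# (decomposition pattern, reassembly template); invented rules for illustration.
RULES: List[Tuple[str, str]] = [
    (r".*\bi need (.*)", "Why do you need {0}?"),
    (r".*\bi am (.*)", "How long have you been {0}?"),
    (r".*\bmy (mother|father)\b.*", "Tell me more about your {0}."),
]
DEFAULT = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(text: str) -> str:
    """Apply the first matching rule; fall back to a neutral prompt."""
    cleaned = text.lower().strip(" .!?")
    for pattern, template in RULES:
        match = re.match(pattern, cleaned)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT


print(respond("I am worried about my exams."))  # How long have you been worried about your exams?
print(respond("My father never listens"))       # Tell me more about your father.
```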

AI Image Generation Tool Midjourney Faces Copyright Lawsuits from Disney and Others, Yet Its Unique Creative Model is Highly Sought After: AI image generation platform Midjourney is being sued by companies including Disney and Universal Pictures, which allege that the images it generates infringe their copyrighted visual assets. Even so, the tool is widely popular with creators worldwide for its distinctive creative model: generating highly artistic, stylized images from text prompts within its Discord community. The Midjourney team has fewer than 50 people and has not raised outside funding, yet its annual revenue has reached $200 million. Its product philosophy emphasizes “imagination first,” positioning AI as an engine that expands human thought rather than a simple replacement tool. Through minimalist, “de-toolized” interaction and a culture of community co-creation, it has reshaped the digital creative paradigm. (Source: 36氪)

AI-Driven Leadership Transformation: From Hierarchical Obedience to Human-Machine Symbiosis: As AI becomes deeply integrated into work, traditional leadership faces new challenges. A Google survey shows that 82% of young leaders use AI, and Oracle data indicates that 25% of employees would rather ask AI than their managers. AI is changing the leadership environment: informational experience is no longer an exclusive moat for leaders, transparent decision-making adds pressure, and management is shifting from purely human teams to human-machine hybrids. Fudan University’s School of Management proposes the concept of “Symbiotic Leadership,” emphasizing the symbiotic fusion of the traditional and digital economies, of enterprises and ecosystems, and of human and machine intelligence. Leaders in the AI era need to manage the shift from old to new growth drivers, create value within collaborative networks, and harness the multiplier effect of human-machine collaboration; the core competency is making AI serve people. (Source: 36氪)