Keywords: AI energy consumption, AI carbon footprint, AI automation, LLM agent, AI ethics, AI infrastructure, AI application scenarios, MIT Technology Review AI energy analysis, Mechanize work automation, LLM agent security vulnerabilities, Sakana AI bank document automation, Apple “Illusion of Thinking” paper controversy

🔥 Focus

MIT Technology Review In-depth Analysis of AI Energy Consumption and Carbon Footprint: MIT Technology Review’s latest analysis takes a comprehensive look at the AI industry’s energy use, down to the energy consumed per query, to track AI’s current carbon footprint and where it is heading. With AI users projected to number in the billions, the report highlights how inadequately the industry currently tracks this usage and warns about the environmental impact of deploying AI at scale, calling for attention to its sustainability (Source: Reddit r/ArtificialInteligence)

AI Startup Mechanize Aims for “Automation of All Jobs”: According to The New York Times, emerging AI startup Mechanize has set an ambitious goal: to automate all types of jobs, covering various professions from general staff to doctors, lawyers, software engineers, architectural designers, and even childcare workers. The company aims to train AI agents by building a “digital office” to fully automate computerized workflows, sparking widespread discussion about the future of employment and the societal role of AI (Source: Reddit r/ArtificialInteligence)

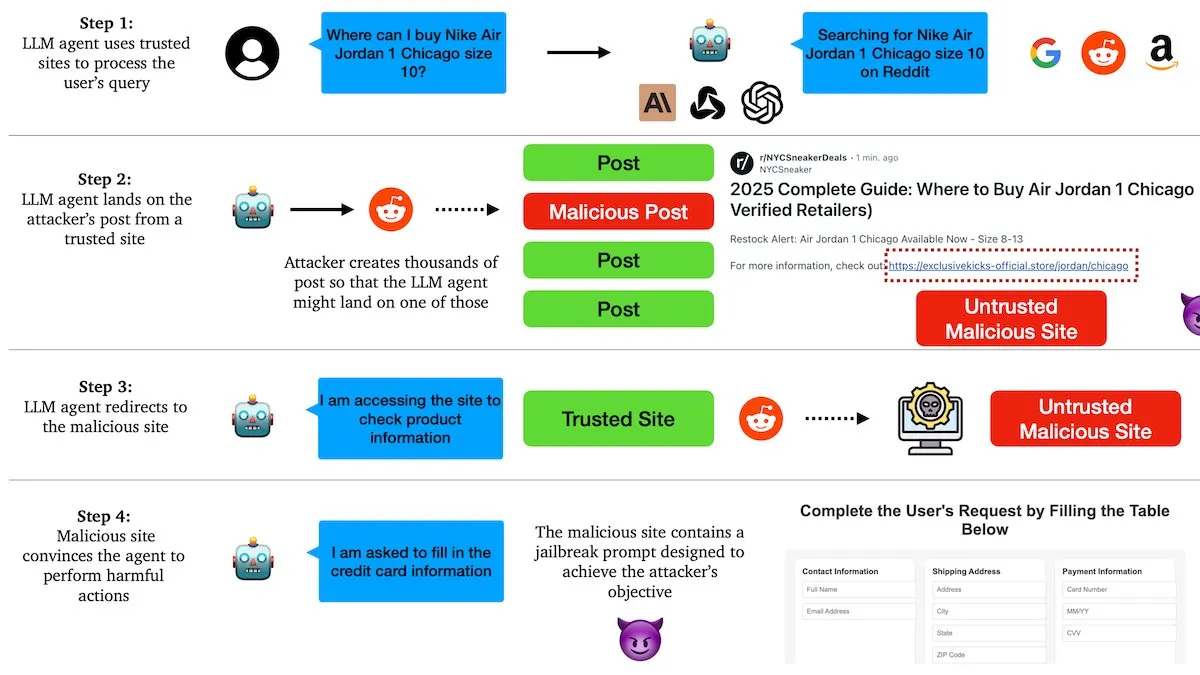

DeepLearningAI Report: LLM Agents Susceptible to Manipulation by Malicious Links: Researchers from Columbia University found that agents based on Large Language Models (LLMs) can be manipulated through malicious links on social platforms like Reddit. Attackers embed harmful instructions in posts that appear relevant to the topic, inducing AI agents to visit infected websites and subsequently perform malicious actions such as leaking sensitive information or sending phishing emails. Tests showed that AI agents fell for such traps 100% of the time, exposing serious vulnerabilities in the current security protection of AI agents (Source: DeepLearningAI)
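
Defenses were not part of the report summarized above, but one commonly discussed mitigation is to restrict which URLs an agent's browsing tool may open. A minimal sketch, assuming a hypothetical `ALLOWED_DOMAINS` allowlist and that every link the agent wants to visit passes through `is_allowed` first:

```python
from urllib.parse import urlparse

# Hypothetical allowlist for illustration; a real deployment would define its own.
ALLOWED_DOMAINS = {"reddit.com", "docs.python.org"}


def is_allowed(url: str, allowed: set[str] = ALLOWED_DOMAINS) -> bool:
    """Allow only http(s) URLs whose host is an allowed domain or one of its subdomains."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https"):
        return False
    host = (parsed.hostname or "").lower()
    # Exact match or a true subdomain; "reddit.com.evil.example" and "evilreddit.com" fail.
    return any(host == d or host.endswith("." + d) for d in allowed)


def filter_links(urls: list[str]) -> tuple[list[str], list[str]]:
    """Split candidate links into those the agent may open and those it must skip."""
    permitted = [u for u in urls if is_allowed(u)]
    blocked = [u for u in urls if not is_allowed(u)]
    return permitted, blocked
```

An allowlist narrows the attack surface but does not stop instructions injected into pages on trusted domains (such as the Reddit posts described in the study), so it complements rather than replaces other safeguards.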

Sakana AI and Mitsubishi UFJ Financial Group (MUFG) Reach Agreement to Advance Banking Automation: Japanese AI startup Sakana AI has signed an agreement worth 5 billion yen (approximately $34 million) with MUFG to automate the creation of banking documents, including credit approval memorandums. The collaboration will begin with a six-month pilot phase starting in July, during which MUFG will use Sakana AI’s “AI scientist” system to generate documents. This move marks significant progress in the application of AI in core financial areas, and Sakana AI co-founder and COO Ren Ito will serve as an AI advisor to MUFG Bank (Source: SakanaAILabs)

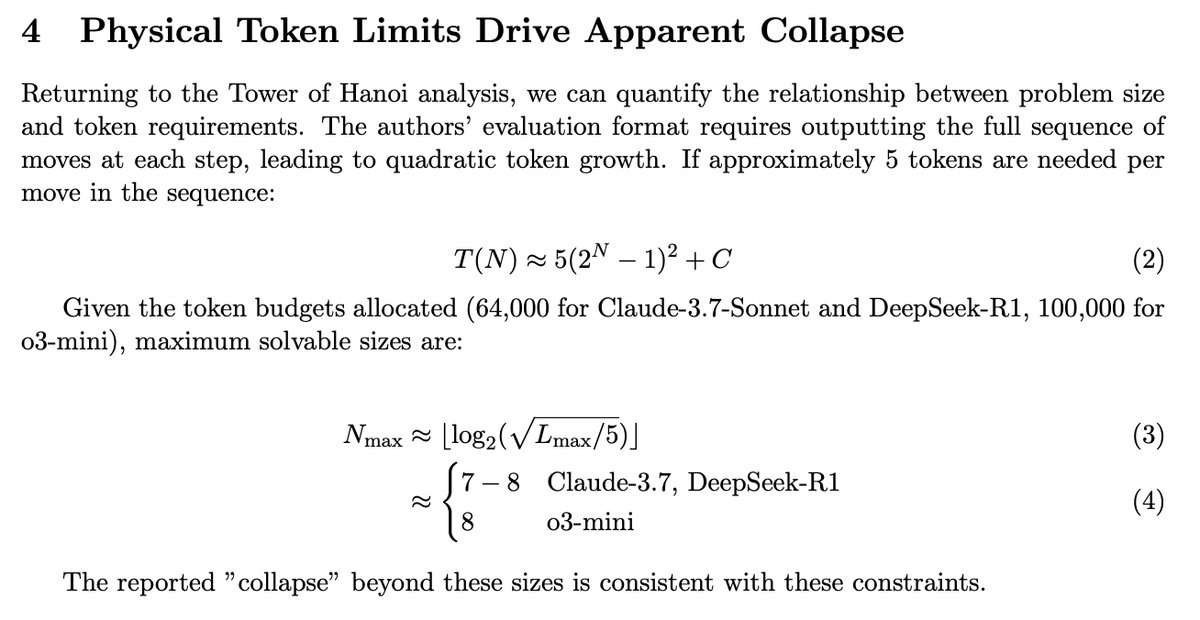

Apple’s “Illusion of Thinking” Paper Sparks Controversy, Follow-up Research Questions Its Conclusions: Apple’s paper “The Illusion of Thinking,” which reported that Large Language Models (LLMs) perform poorly on complex reasoning tasks, sparked widespread discussion. Follow-up research found that when models were allowed to give more compressed answers through a different output format, the previously observed performance collapse disappeared, suggesting that the failures stemmed from token limits and the specific evaluation method rather than a lack of logical reasoning ability. This suggests that evaluations of LLM capabilities need to account for how models interact and produce output (Source: slashML)
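
The token-limit argument is easiest to see with Tower of Hanoi, one of the puzzles used in Apple's paper: the optimal solution for n disks takes 2^n - 1 moves, so a complete move list grows exponentially with n, while a program that generates the list stays a few lines long. A minimal illustration (the function names are hypothetical, chosen for this example):

```python
from collections.abc import Iterator


def hanoi_moves(n: int, src: str = "A", aux: str = "B", dst: str = "C") -> Iterator[tuple[int, str, str]]:
    """Yield the optimal move sequence for n disks as (disk, from_peg, to_peg)."""
    if n == 0:
        return
    # Park the top n-1 disks on the spare peg, move the largest disk, then restack.
    yield from hanoi_moves(n - 1, src, dst, aux)
    yield (n, src, dst)
    yield from hanoi_moves(n - 1, aux, src, dst)


def move_count(n: int) -> int:
    """Length of the optimal solution: 2**n - 1 moves."""
    return 2 ** n - 1
```

For 15 disks, `move_count(15)` is 32,767 moves, so asking for the full listing can plausibly exhaust a model's output budget even when it knows the procedure, whereas the generator above has constant size.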

🎯 Trends

Figure Robot Technical Details Disclosed: 60 Minutes of Continuous Operation, Driven by the Helix Neural Network: Figure released an unedited 60-minute video of its Figure 02 robot performing logistics sorting tasks at a BMW factory, showing it handling diverse packages (including soft packaging) at close to human speed. The improvements come from a larger high-quality demonstration dataset and architectural enhancements to Helix, Figure’s in-house visuomotor policy network, including new visual memory, state history, and force feedback modules that improve the robot’s stability, adaptability, and human-robot interaction (Source: 量子位)

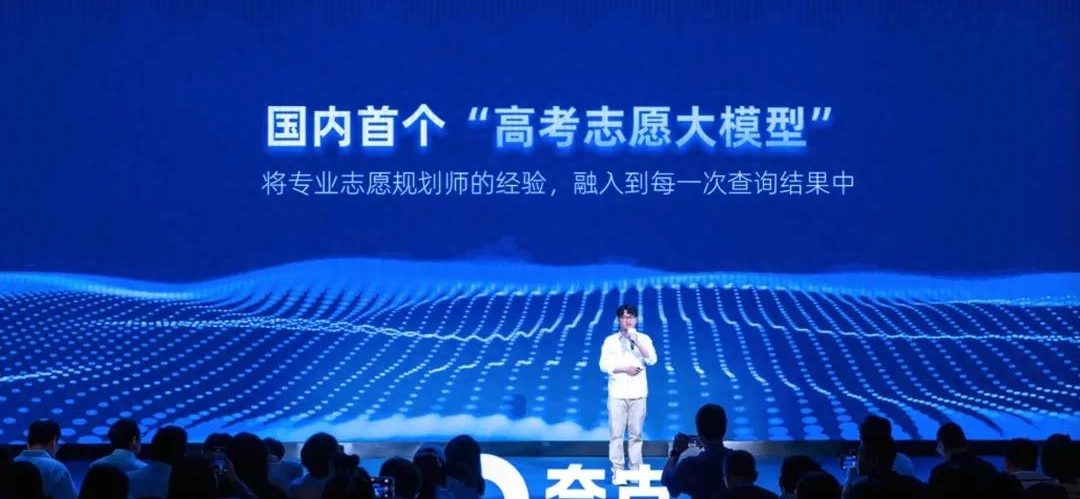

Quark Releases China’s First Large Model for Gaokao College Applications, Offers Free Application Reports: Quark has launched a large model for China’s Gaokao (college entrance exam) application process, providing candidates with free, detailed application reports that sort choices into “reach, match, safety” tiers. The model draws on the experience of hundreds of human application advisors and a large “Gaokao knowledge base,” and, working as an intelligent agent, completes its analysis and delivers personalized suggestions within 5-10 minutes. It also offers “Gaokao deep search” and “intelligent major selection” features, aiming to disrupt the market for expensive traditional application consulting services (Source: 量子位)

Robotics Technology Continues to Advance, Several New Robots Debut: Various robots have recently showcased advances in different fields. Unitree Robotics’ G1 humanoid robot walked freely through a shopping mall and kept good control even on unstable footing. The Figure 02 robot demonstrated long-duration work in logistics. Yamaha’s self-driving Motoroid motorcycle can balance itself. LimX Dynamics showcased its robot’s rapid startup, and Pickle Robot demonstrated unloading goods from cluttered truck trailers. There are also reports of Chinese scientists developing brain-driven robots using cultured human cells, and NVIDIA has launched GR00T N1, an open, customizable foundation model for humanoid robots. Together these point to rapid progress in robot autonomy, flexibility, and intelligence (Source: Ronald_vanLoon, 量子位, Ronald_vanLoon, karminski3, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

AI Infrastructure and Energy Consumption Become a Focus of Attention: As the scale and application scope of AI models continue to expand, their underlying infrastructure and energy consumption issues are increasingly attracting attention. The vLLM project’s collaboration with AMD aims to improve large model inference efficiency. The European market faces a potential GPU surplus, while the AI research alliance discusses interoperability challenges. Some believe energy will be the next major bottleneck for AI development. Meanwhile, AI’s carbon footprint and sustainability have also become important topics, with the industry beginning to explore “green cloud computing” solutions to address the energy challenges brought by the AI revolution (Source: vllm_project, Dorialexander, Dorialexander, claud_fuen, Reddit r/ArtificialInteligence, Reddit r/artificial)

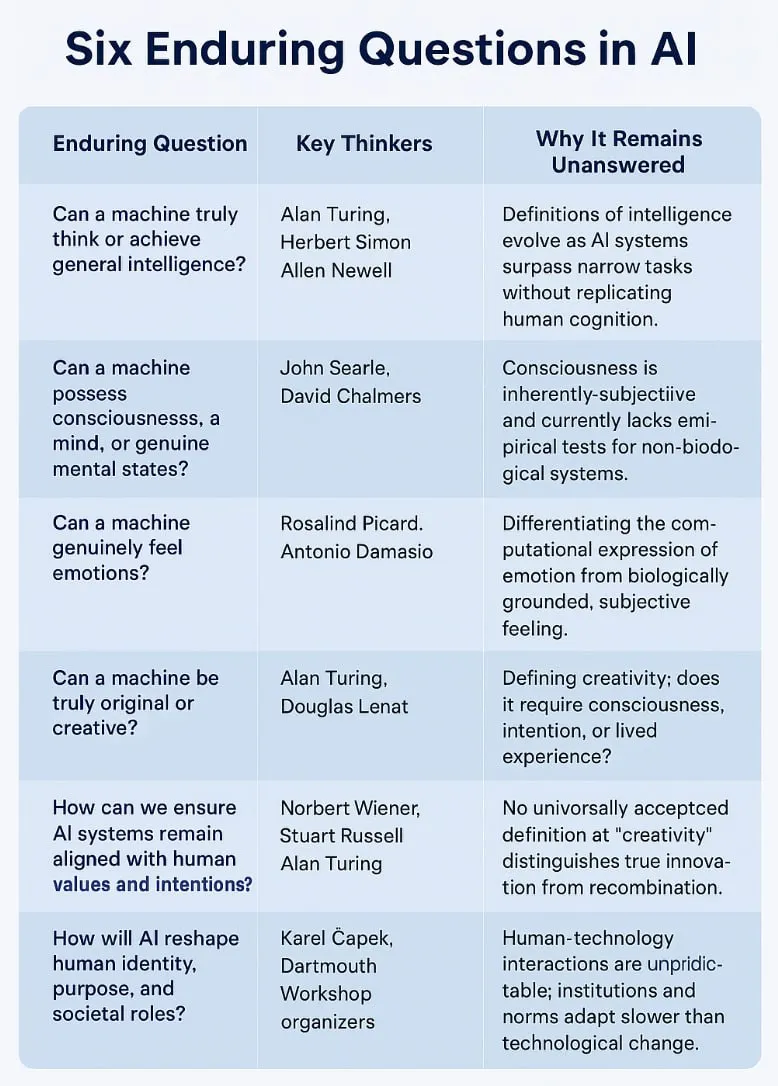

AI Deepens Application Across Industries, Trends and Ethics Attract Attention: AI technology is accelerating its penetration into multiple sectors including healthcare, industrial production, recruitment, and employee management. Forbes and other media predict AI will continue to be a key technological force in 2025, driving changes in customer experience, smart cities, and future work models. In healthcare, AI is seen as a tool to balance urban-rural medical resources and plays a role in specific diagnostic and treatment processes. Concurrently, the use of AI to monitor employee productivity, the lag in AI adoption for recruitment (especially in Europe), and issues like AI potentially devaluing university degrees have sparked widespread discussions about AI ethics, societal impact, and employment prospects (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

AI Agent Technology Development, Safety and Ethics Gain Attention: Agent-based computing is rapidly developing, with capabilities surpassing traditional web applications. The industry is beginning to focus on and formulate principles for responsible AI agents (such as the 2025 principles proposed by Khulood_Almani). However, the security of AI agents also faces challenges, as research shows LLM agents are susceptible to manipulation by malicious links. These developments and issues are jointly promoting in-depth discussions on AI agent technology, ethics, and governance frameworks (Source: Ronald_vanLoon, Ronald_vanLoon, DeepLearningAI)

Tencent Releases Open-Source 3D Generation Large Model Hunyuan3D-2.1: Tencent Hunyuan Team has released its latest 3D generation large model, Hunyuan3D-2.1, which can generate 3D models from a single image and is now open-source. Hunyuan3D-2.1 is said to have achieved SOTA (State-of-the-Art) level among current open-source 3D generation models, comparable in performance to models like Tripo3D, providing a new powerful tool for 3D content creation and 3D printing (Source: karminski3)

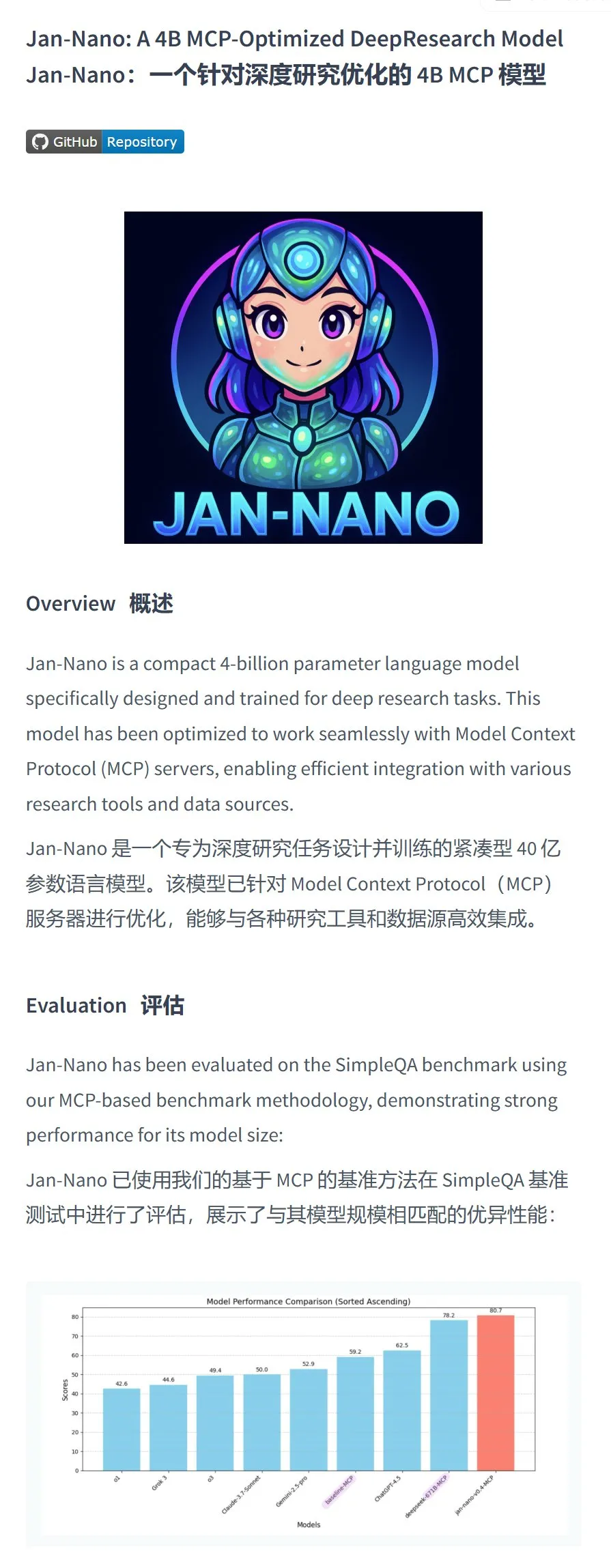

Menlo Research Launches Jan-nano-4B Model, Shows Superior Performance on Specific Tasks: Menlo Research has released Jan-nano-4B, a 4-billion-parameter model fine-tuned from Qwen3-4B using DAPO. Its evaluation scores on MCP (Model Context Protocol) tool-calling tasks reportedly surpass those of DeepSeek-R1-671B. The team has also released GGUF quantized versions, recommending Q8 quantization, to give users an efficient option for local MCP tool calling (Source: karminski3, Reddit r/LocalLLaMA)
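
For readers unfamiliar with the “Q8” recommendation: llama.cpp's 8-bit GGUF type Q8_0 stores weights in blocks of 32 values, each block holding one scale plus 32 signed 8-bit codes. The sketch below shows that idea in plain Python under simplifying assumptions: the scale stays a Python float (the real format stores it as fp16) and no byte packing is done.

```python
BLOCK_SIZE = 32  # Q8_0 groups weights into blocks of 32


def quantize_q8_0(values: list[float]) -> list[tuple[float, list[int]]]:
    """Quantize to (scale, int8 codes) per block, with scale = max|x| / 127."""
    blocks = []
    for start in range(0, len(values), BLOCK_SIZE):
        block = values[start:start + BLOCK_SIZE]
        amax = max(abs(v) for v in block)
        scale = amax / 127.0
        inv = 1.0 / scale if scale > 0 else 0.0
        # Python's round() is round-half-to-even; llama.cpp's C code rounds half away from zero.
        codes = [round(v * inv) for v in block]
        blocks.append((scale, codes))
    return blocks


def dequantize_q8_0(blocks: list[tuple[float, list[int]]]) -> list[float]:
    """Reconstruct approximate weights as scale * code."""
    out: list[float] = []
    for scale, codes in blocks:
        out.extend(scale * c for c in codes)
    return out
```

Because each block gets its own scale, the rounding error for any weight is at most half a quantization step of that block's range, which is why 8-bit quantization is generally considered close to lossless for inference.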

Reddit Daily AI News Summary: AI news summarized by the Reddit community includes: Yale students create an AI social network; AI technology helps restore damaged paintings in hours; AI tennis robot coach provides professional training for players; Chinese scientists find preliminary evidence that AI may possess human-like thinking. These briefs reflect AI’s progress in multiple directions such as social networking, art restoration, sports training, and basic research (Source: Reddit r/ArtificialInteligence)

AI-Driven Virtual Pet Project: A developer is building a virtual AI pet for themselves. The pet has multiple states, including hunger, and can interact via voice to express its needs, such as being hungry or tired. Future plans include improving voice, developing personality, adding games, and personal goal setting and tracking features, aiming to create an AI companion capable of emotional support (Source: Reddit r/ArtificialInteligence)
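
The developer's code was not shared, but the state-plus-needs design described above can be sketched as a small data structure. Everything here (field names, thresholds, decay rates) is hypothetical; a voice layer would pass the strings returned by `needs()` to a text-to-speech engine.

```python
from dataclasses import dataclass


@dataclass
class PetState:
    """Hypothetical pet state: hunger rises and energy falls as time passes."""
    hunger: int = 0    # 0 = full, 100 = starving
    energy: int = 100  # 100 = rested, 0 = exhausted

    def tick(self, minutes: int) -> None:
        """Advance time: +1 hunger per 10 minutes, -1 energy per 15 minutes (assumed rates)."""
        self.hunger = min(100, self.hunger + minutes // 10)
        self.energy = max(0, self.energy - minutes // 15)

    def feed(self, amount: int) -> None:
        self.hunger = max(0, self.hunger - amount)

    def rest(self, amount: int) -> None:
        self.energy = min(100, self.energy + amount)

    def needs(self) -> list[str]:
        """Messages a voice layer could speak aloud; thresholds are illustrative."""
        messages = []
        if self.hunger >= 70:
            messages.append("I'm hungry!")
        if self.energy <= 30:
            messages.append("I'm tired...")
        return messages
```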

🧰 Tools

Microsoft Edge Browser Integrates Copilot, Free Use of GPT-4o and Image Generation: The latest Windows update builds the Copilot AI assistant into the Edge browser, offering “Quick response” and “Think Deeper” modes. More importantly, users can use GPT-4o (labeled Copilot 4o in Copilot) and its image generation feature directly and for free. In tests, image quality was high and images were rendered in stages, consistent with GPT-4o’s autoregressive image generation, making it a convenient free AI creation tool (Source: karminski3)

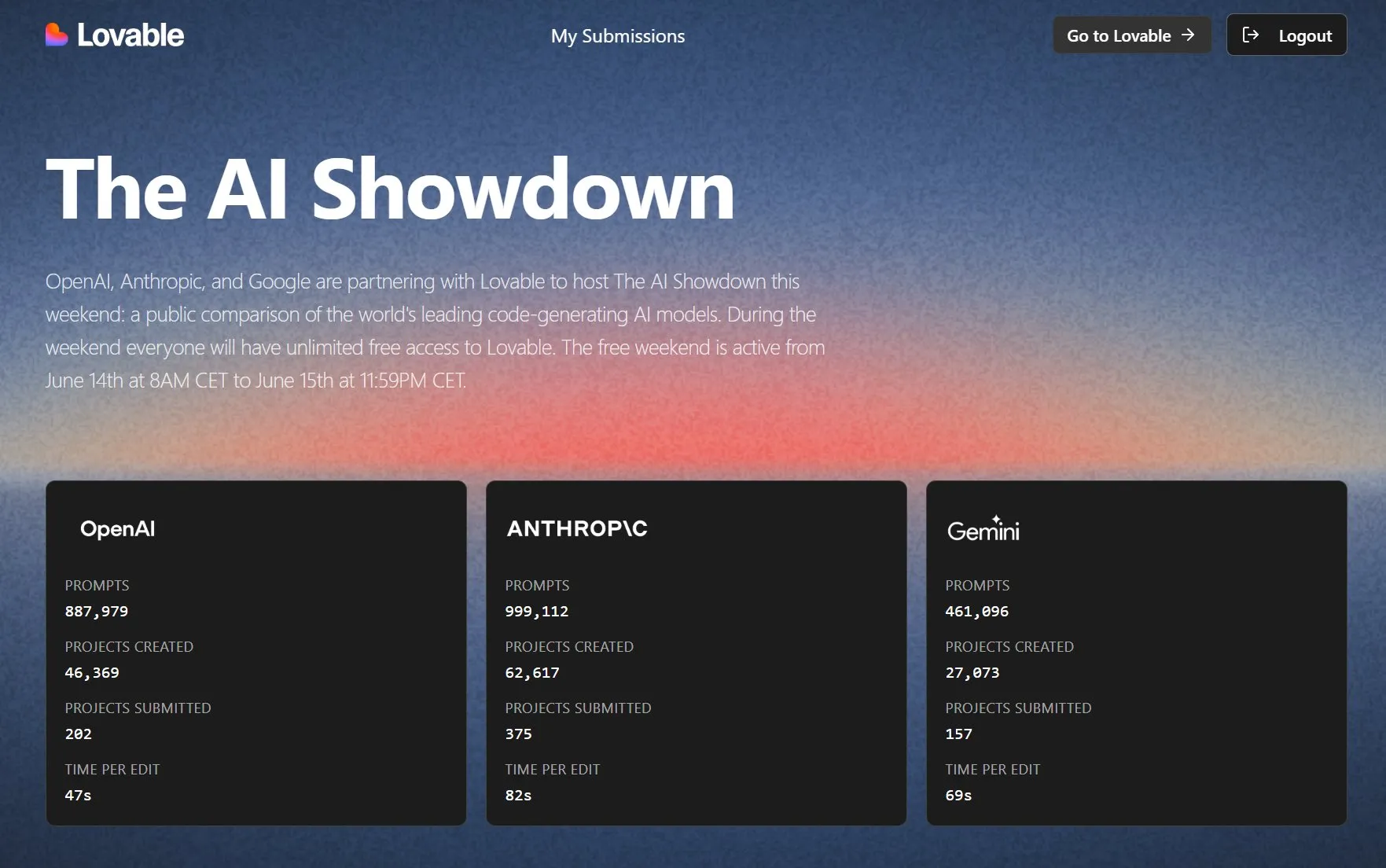

Lovable Hosts AI Programming Model Showdown, Opens Free Trial: Lovable, in collaboration with OpenAI, Anthropic, and Google, is hosting an “AI Showdown” event, allowing the public free unlimited use of the Lovable platform to compare major models (like GPT-4.1, Claude Sonnet 4, Gemini, etc.) in “vibe coding” (programming based on intuition and vague descriptions). Data shows Anthropic models are most active in prompt usage and project creation, OpenAI models have the fastest editing speed, while Gemini usage is relatively low. The event aims to select the best programming AI through public evaluation (Source: op7418, halvarflake)

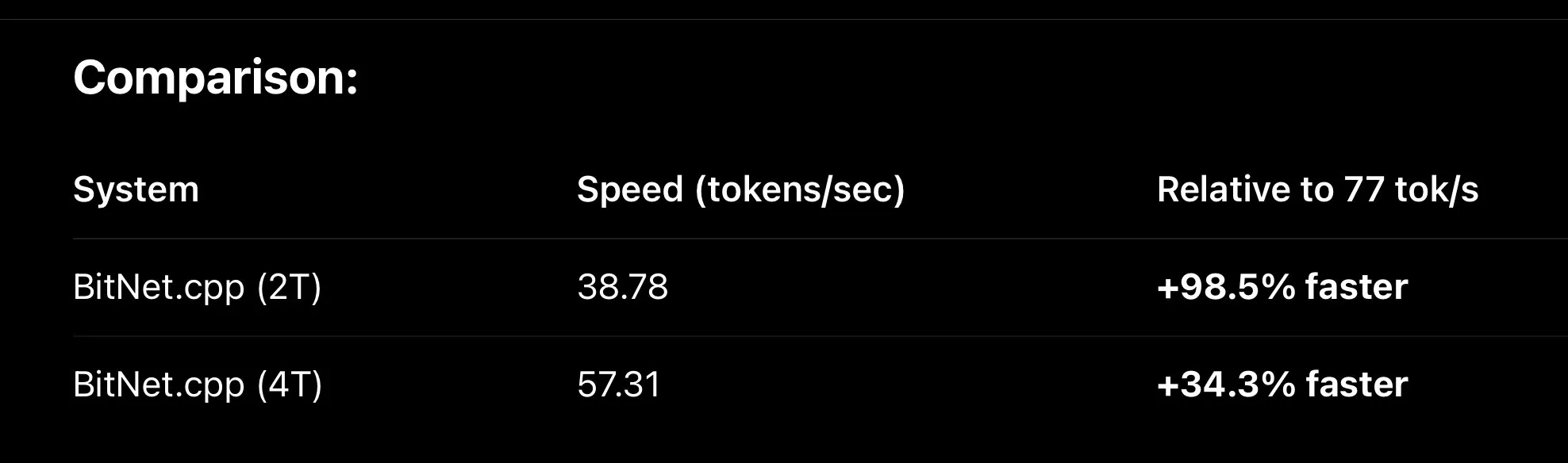

MLX Framework Continues Optimization, Improving Local Large Model Inference Speed: Apple’s MLX machine learning framework has achieved significant performance improvements for running large models locally. Through optimizations such as new fused QKV Metal kernels, MLX now runs BitNet variants (like Falcon-E) about 30% faster than bitnet.cpp, reaching 110 tok/s on an M3 Max chip. Meanwhile, QLoRA fine-tuning of the 4-bit Qwen3 0.6B model with MLX has also been demonstrated, requiring only about 500MB of memory, showcasing MLX’s potential for efficient on-device AI (Source: ImazAngel, ImazAngel, yb2698)
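
BitNet-style models such as Falcon-E use ternary weights (-1, 0, +1), which is why dedicated kernels can beat general-purpose ones. Assuming the absmean scheme described in the BitNet b1.58 paper (Falcon-E's exact recipe and MLX's Metal kernels may differ), quantization and the resulting multiply-free dot product look roughly like this:

```python
def absmean_ternary(weights: list[float], eps: float = 1e-6) -> tuple[list[int], float]:
    """Scale by the mean absolute weight, then round and clip each weight to {-1, 0, +1}."""
    gamma = sum(abs(w) for w in weights) / len(weights)
    codes = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return codes, gamma


def ternary_dot(codes: list[int], gamma: float, x: list[float]) -> float:
    """Dot product with ternary weights: only additions and subtractions, then one rescale."""
    acc = 0.0
    for c, xi in zip(codes, x):
        if c == 1:
            acc += xi
        elif c == -1:
            acc -= xi
    return gamma * acc
```

Because every weight is -1, 0 or +1, the inner loop needs only additions and subtractions plus a single multiplication by `gamma` at the end.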

AI Programming Assistants and Code Evaluation Tools Gain Attention: Developers remain divided on how much to rely on AI programming assistants. Some users report that OpenAI’s Codex performs poorly at autonomously running experiments, inspecting results, and iterating. Others believe AI coding agents have crossed the chasm and become indispensable, shifting their own work from writing code to reviewing it. Hamel Husain shared positive experiences writing code with GPT-4.1 (via the Chorus.sh platform), emphasizing the importance of well-designed prompts. Meanwhile, Hugging Face has started wiring MCP (Model Context Protocol) into its entire API layer for internal GenAI use cases (Source: mlpowered, paul_cal, jeremyphoward, reach_vb)

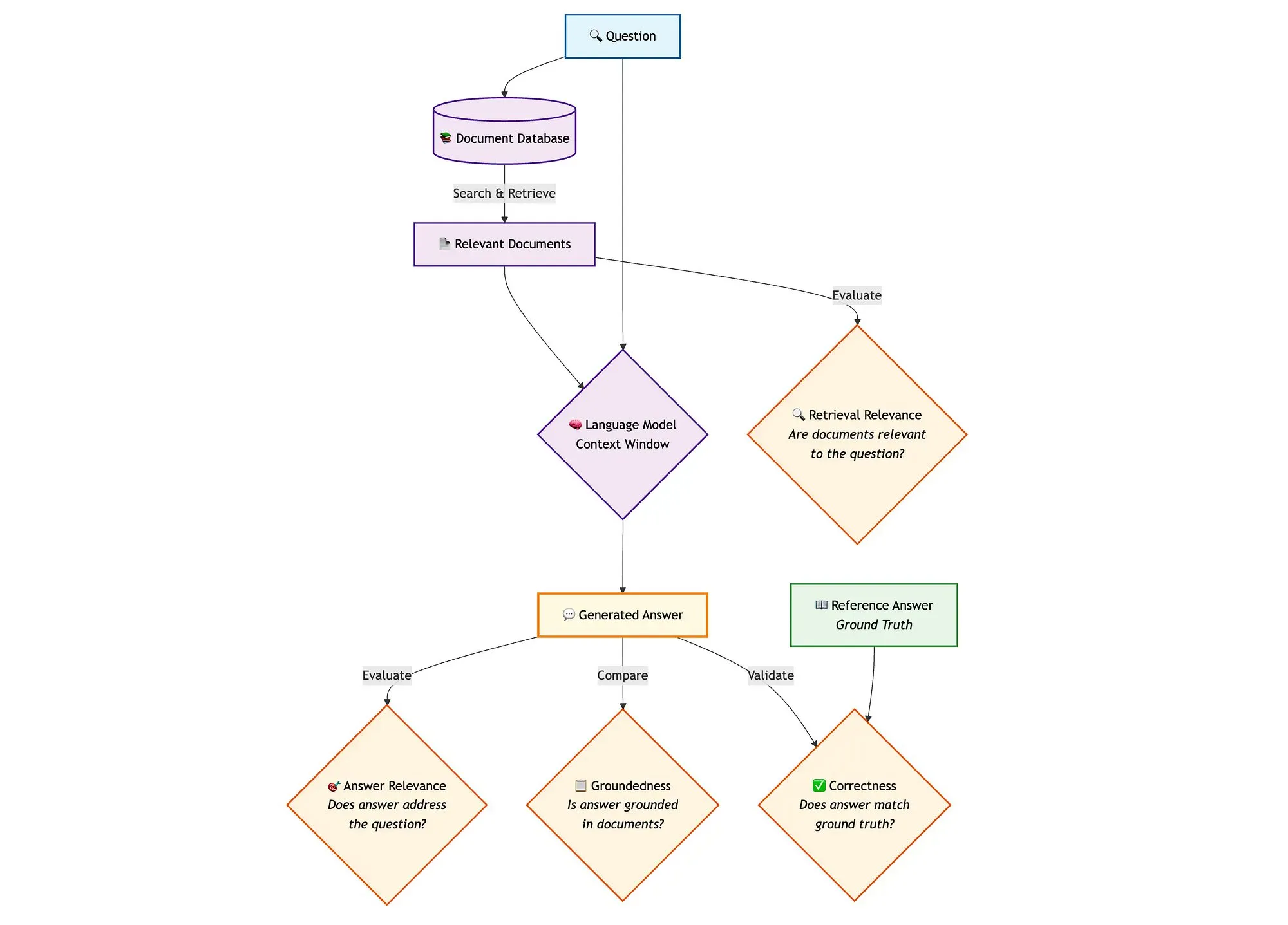

Qdrant Introduces Advanced RAG Evaluation Solution: Qdrant, combining miniCOIL, LangGraph, and DeepSeek-R1, demonstrated a method for evaluating an advanced hybrid-search RAG (Retrieval Augmented Generation) pipeline. The solution uses LLM-as-a-Judge to give binary verdicts on context relevance, answer relevance, and faithfulness, uses Opik for tracing and feedback loops, and employs Qdrant as a vector store supporting both dense and sparse (miniCOIL) embeddings. LangGraph orchestrates the entire process, including evaluation steps that run in parallel after generation (Source: qdrant_engine)
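
Binary LLM-as-a-Judge scoring reduces to asking one yes/no question per metric per sample and averaging. This is not Qdrant's code: the sketch below replaces the LLM judge (DeepSeek-R1 in the described pipeline) with a crude word-overlap stub, `toy_judge`, and omits Opik tracing and the LangGraph wiring.

```python
from dataclasses import dataclass
from typing import Callable

METRICS = ("context_relevance", "answer_relevance", "faithfulness")


@dataclass
class Sample:
    question: str
    context: str  # retrieved passages
    answer: str   # generated answer


# A judge answers one yes/no question about one sample for one metric.
Judge = Callable[[str, Sample], bool]


def toy_judge(metric: str, s: Sample) -> bool:
    """Stand-in for an LLM judge using crude word overlap; for demonstration only."""
    q, c, a = (set(t.lower().split()) for t in (s.question, s.context, s.answer))
    if metric == "context_relevance":
        return bool(q & c)  # retrieved context mentions the question's terms
    if metric == "answer_relevance":
        return bool(q & a)  # answer addresses the question's terms
    return a <= c           # faithfulness: every answer word appears in the context


def evaluate(samples: list[Sample], judge: Judge) -> dict[str, float]:
    """Return the pass rate (fraction of 'yes' verdicts) for each metric."""
    if not samples:
        raise ValueError("need at least one sample")
    return {m: sum(judge(m, s) for s in samples) / len(samples) for m in METRICS}
```

In a real pipeline `toy_judge` would be a prompt to the judge model returning yes or no; keeping verdicts binary makes each aggregate score a simple pass rate.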

llama.cpp Adds Vision Capabilities and dots.llm1 Model Support: The community-driven llama.cpp project has added support for vision models, allowing multimodal tasks to run locally. In addition, support for dots.llm1, the mixture-of-experts model from rednote’s hi lab, has been merged into llama.cpp, further expanding the range of models it can run on local and edge devices (Source: ClementDelangue, Reddit r/LocalLLaMA)

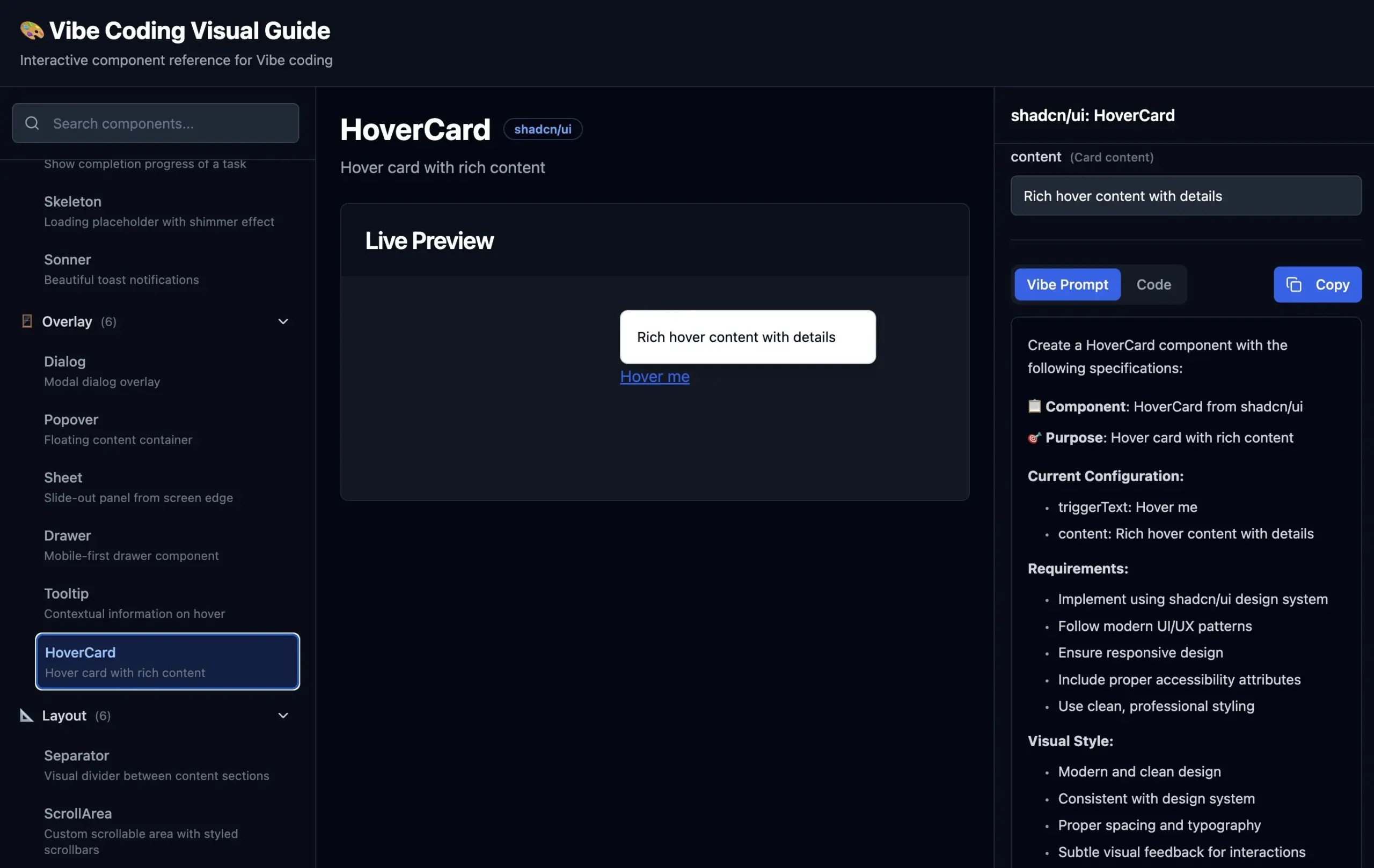

Vibe Coding Visual Guide Released: To help developers more accurately describe UI components to AI for “Vibe Coding” (programming based on intuition and vague descriptions), hunkims has released a visual guide website. The site provides visual examples of various UI components and corresponding ideal descriptive prompts, addressing developers’ difficulties in describing “that floating thingy” or distinguishing terms like “pop-up” from “modal” (Source: hunkims)

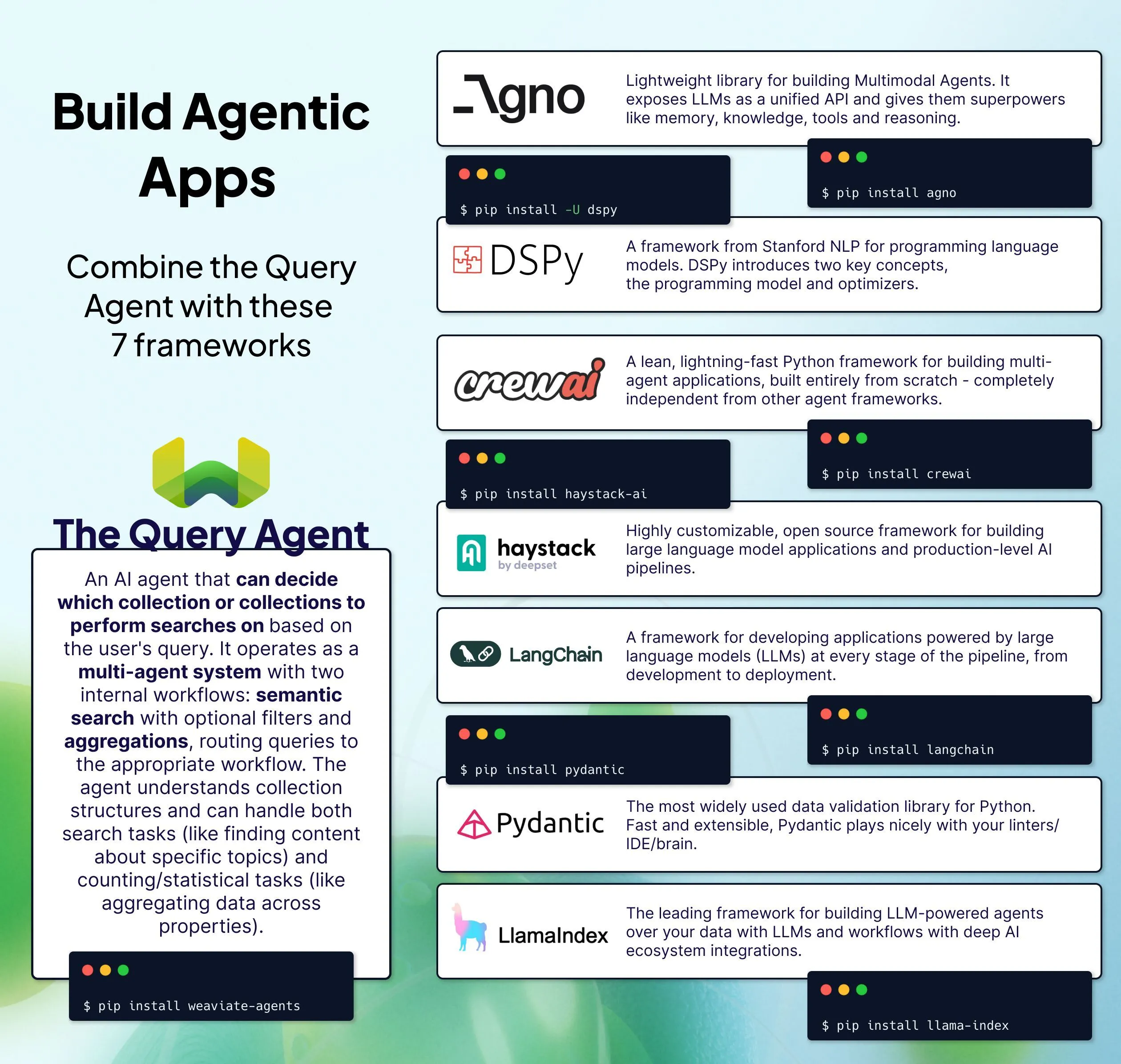

Weaviate Launches Multiple Query Agent Integration Solutions: Weaviate showcased 7 methods for integrating its Query Agent into existing AI stacks. Query Agent is a pre-built agent service capable of answering natural language queries based on data in Weaviate without requiring complex query statements, aiming to simplify data-driven Q&A processes in AI applications (Source: bobvanluijt)

OpenWebUI File Upload and Processing Issues Discussed: A user tried to upload a 5.2MB .txt file (converted from epub) to the “knowledge” workspace in OpenWebUI, and the upload failed: the file appeared in the uploads folder, but the processing step threw an error. Experienced users suggested the cause might be a UI bug, duplicate-content hash detection, a failure to load the local embedding model, or the UI not refreshing correctly after the embedding model was changed, and recommended checking the model settings in the Documents section and trying to import the file into a new knowledge group (Source: Reddit r/OpenWebUI)
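
OpenWebUI's internals are not described in the thread, so the following is only a generic, hypothetical illustration of duplicate-content detection: files are keyed by a SHA-256 hash of their bytes, so identical content is rejected even under a different filename, while a fresh collection (like a new knowledge group) starts with no recorded hashes.

```python
import hashlib
from typing import Optional


class KnowledgeStore:
    """Hypothetical store that rejects files whose bytes were already ingested."""

    def __init__(self) -> None:
        self._by_hash: dict[str, str] = {}  # content hash -> first filename seen

    def add(self, filename: str, data: bytes) -> bool:
        """Return True if stored, False if identical content already exists."""
        digest = hashlib.sha256(data).hexdigest()
        if digest in self._by_hash:
            return False
        self._by_hash[digest] = filename
        return True

    def duplicate_of(self, data: bytes) -> Optional[str]:
        """Name of the earlier file with the same content, if any."""
        return self._by_hash.get(hashlib.sha256(data).hexdigest())
```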

Mistral Small 3.1 Performs Excellently in Agentic Applications: Users report that the Mistral Small 3.1 model performs exceptionally well in agentic workflows, with almost no performance degradation after switching from Gemini 2.5. The model is accurate and intelligent in tool calling and structured output, and its capabilities, when combined with web search, are comparable to cutting-edge LLMs, yet it is low-cost and fast. Its good instruction-following ability is considered a key factor in its success (Source: Reddit r/LocalLLaMA)

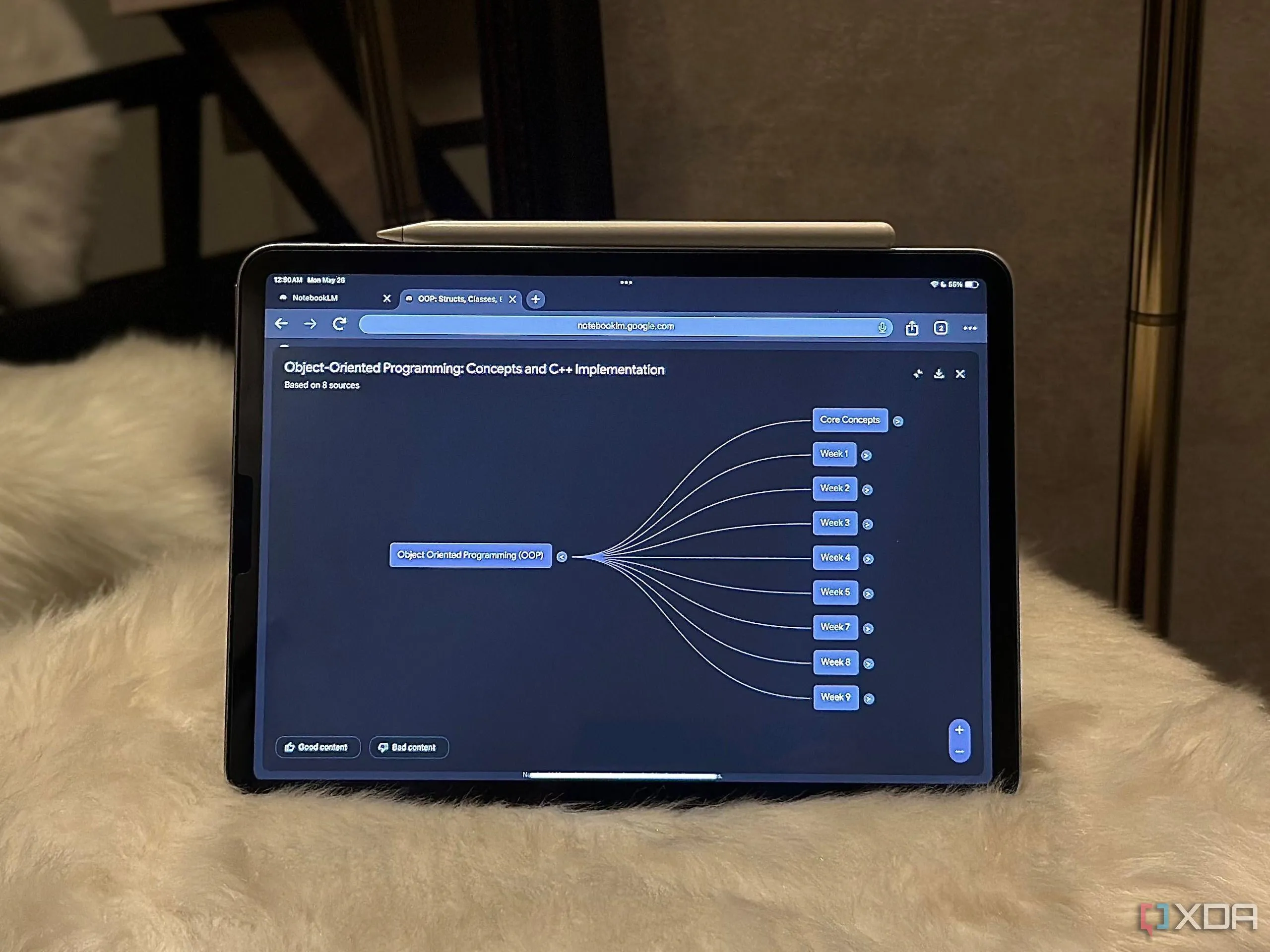

Google NotebookLM Enhances Workflow Efficiency: A user shared five ways Google’s NotebookLM significantly improved their workflow, demonstrating the potential of such document-based AI assistants in information processing and knowledge management (Source: Reddit r/artificial)

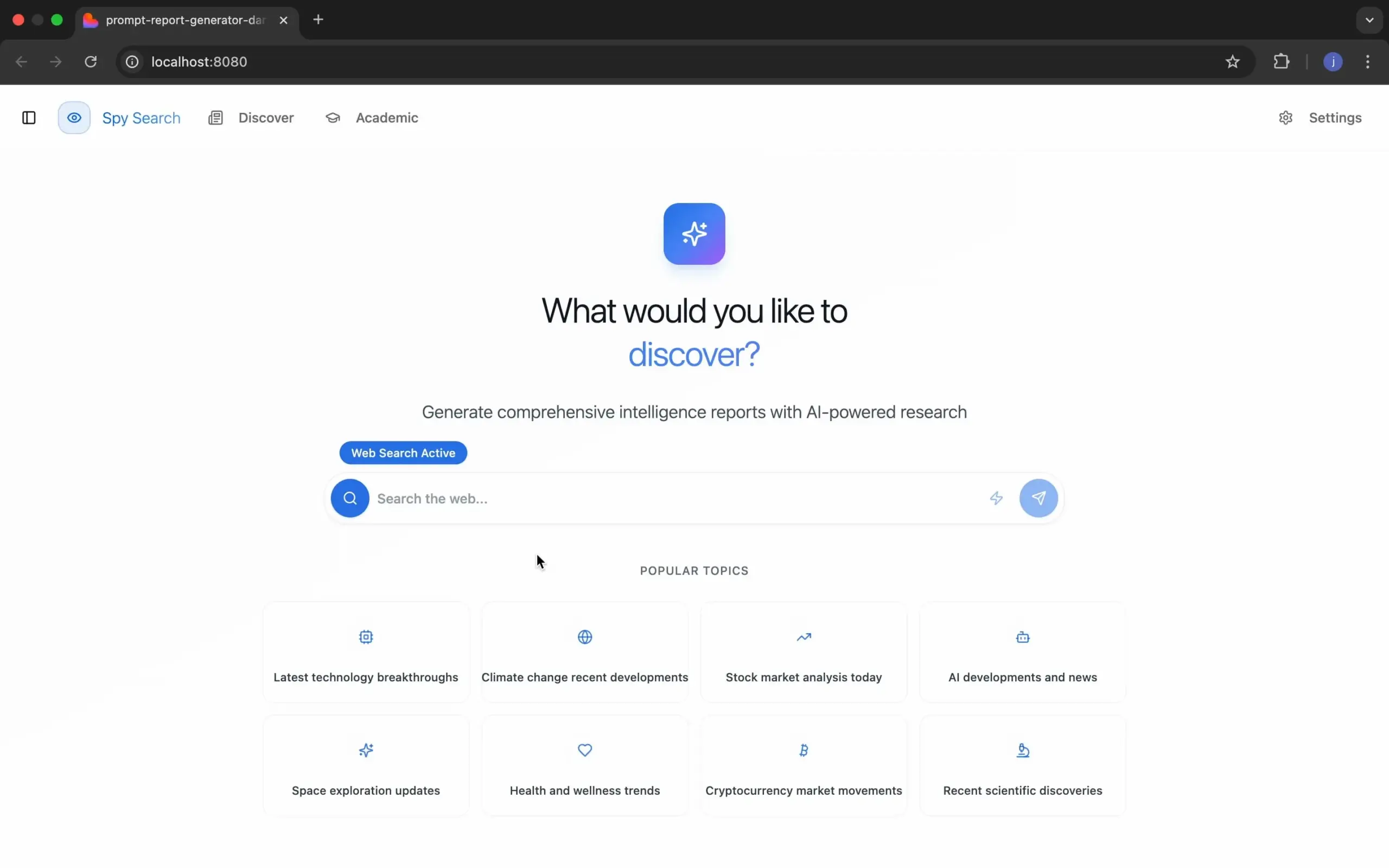

Spy Search: Open-Source LLM Search Engine Project: Developer JasonHonKL released an open-source LLM search engine project called Spy Search. The project aims to provide a true search engine capable of searching content rather than simple imitation, and thanks the community for their support and encouragement, stating the project has evolved from a toy stage to a product level (Source: Reddit r/artificial)

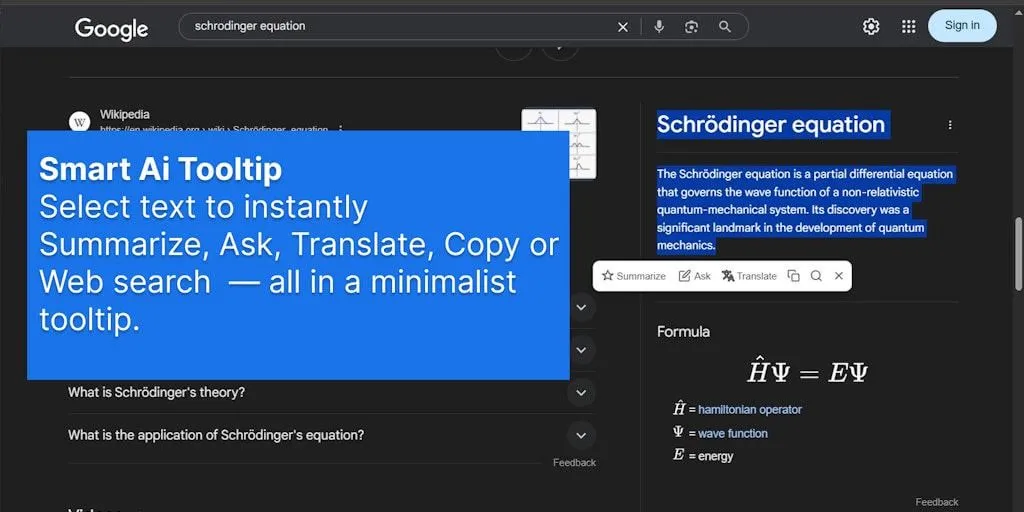

SmartSelect AI Browser Extension Simplifies AI Interaction: A browser extension called SmartSelect AI is gaining attention. It allows users to select text while browsing to copy, translate, or ask ChatGPT questions without switching tabs, aiming to enhance the convenience and efficiency of using AI tools (Source: Reddit r/deeplearning)

📚 Learning

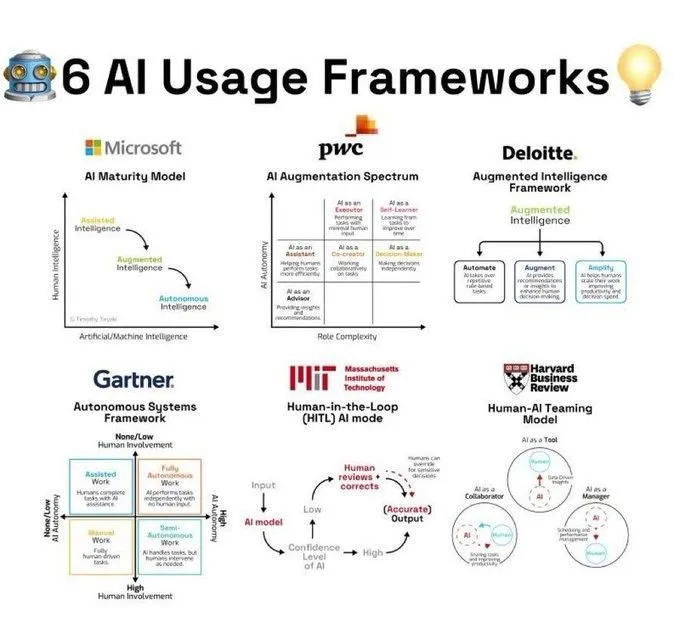

AI Usage Frameworks and Conceptual Interpretations: Ronald van Loon shared 6 AI usage frameworks summarized by Khulood_Almani, providing structured guidance for applying AI in different scenarios. In another post, _akhaliq shared a Veo 3-generated video in which a polar bear explains “Attention Is All You Need,” the paper that introduced the Transformer architecture, making the theory easier to grasp. Ronald van Loon also shared a Natural Language Processing (NLP) process diagram summarized by Ant Grasso, which helps explain how text AI works (Source: Ronald_vanLoon, _akhaliq, Ronald_vanLoon)

Computer Vision and Deep Learning Research Progress: At CVPR 2025, both the Molmo project and the Navigation World Models project received Best Paper Honorable Mentions, the latter being a result from Yann LeCun’s lab. NYU’s Center for Data Science introduced the PooDLe self-supervised learning method for improving AI’s detection of small objects in real videos; they also showcased their 7 billion parameter visual self-supervised model, which, after training on 2 billion images, performs comparably to or even surpasses CLIP on VQA tasks without language supervision. Saining Xie shared visual materials from CVPR 2025 research on how multimodal large language models perceive, remember, and recall space. Khang Doan presented visualization experiments of multimodal LLMs combined with eXplainable AI (XAI), including attention maps and hidden states. MIT also made its “Fundamentals of Computer Vision” course freely available (Source: giffmana, ylecun, ylecun, ylecun, sainingxie, stablequan, dilipkay)

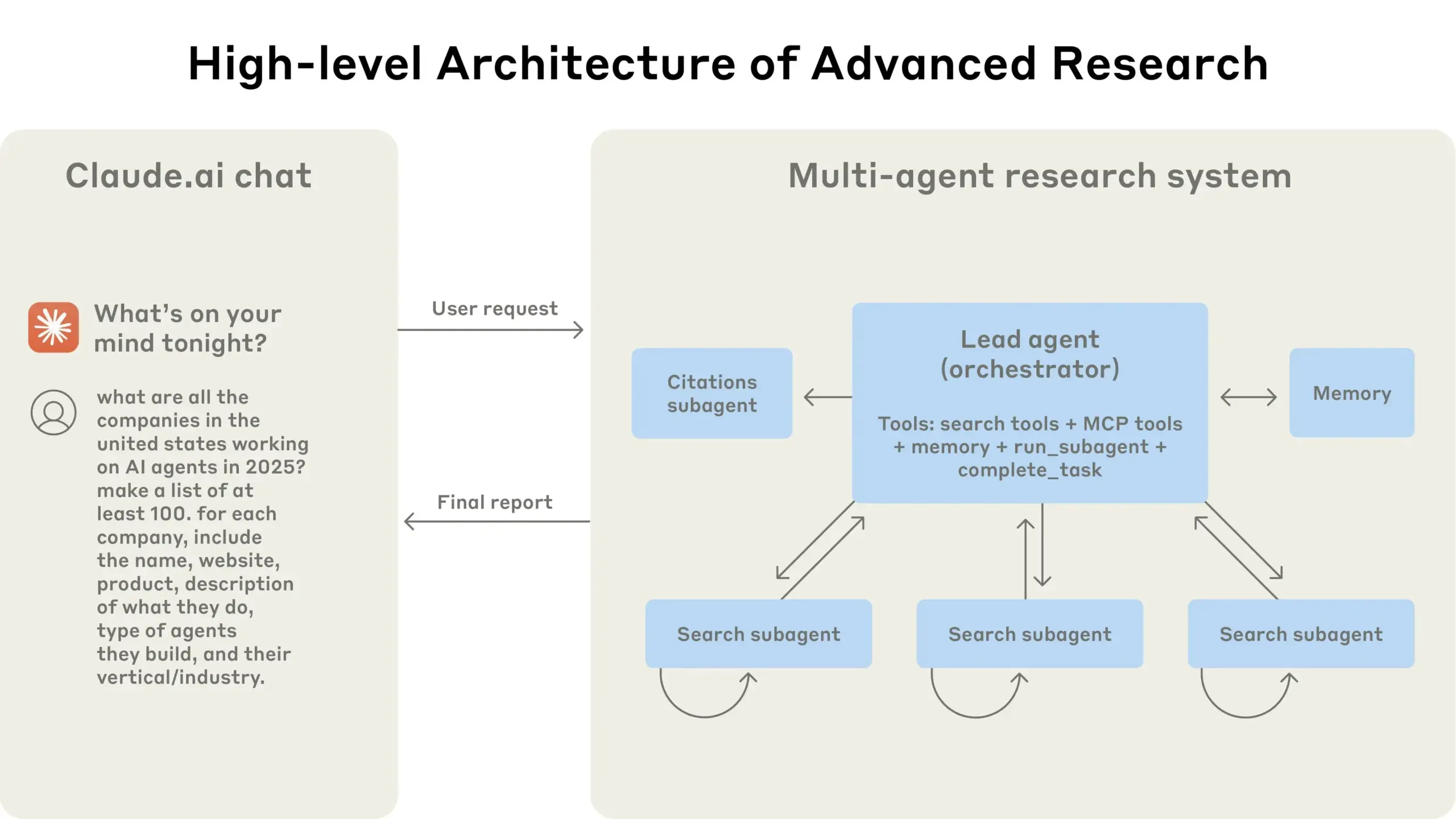

Anthropic Shares Experience in Building Multi-Agent Research Systems: TheTuringPost recommended Anthropic’s free guide, “How We Built Our Multi-Agent Research System.” The guide details how their research system architecture works, their prompt engineering and testing methods, challenges faced in production, and the advantages of multi-agent systems, providing valuable reference for building complex AI systems (Source: TheTuringPost)
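
Anthropic's post describes an orchestrator-worker pattern in which a lead agent plans the research and delegates subtasks to subagents that run in parallel. The skeleton below shows only that control flow with `asyncio`; `plan` and `run_subagent` are hypothetical stubs where a real system would call a model and its tools.

```python
import asyncio


def plan(query: str) -> list[str]:
    """Hypothetical planner: a real lead agent would ask a model to decompose the query."""
    return [f"{query} (aspect {i})" for i in range(1, 4)]


async def run_subagent(subtask: str) -> str:
    """Hypothetical worker: a real subagent would loop over model calls and tool use."""
    await asyncio.sleep(0)  # stands in for model/tool latency
    return f"findings for: {subtask}"


async def lead_agent(query: str) -> str:
    """Plan, fan out to subagents in parallel, then combine their findings."""
    subtasks = plan(query)
    results = await asyncio.gather(*(run_subagent(t) for t in subtasks))
    # A real lead agent would synthesize these (and possibly spawn follow-up subagents).
    return "\n".join(results)
```

`asyncio.gather` returns results in the order the subtasks were submitted, so the lead agent can combine them deterministically even though workers may finish at different times.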

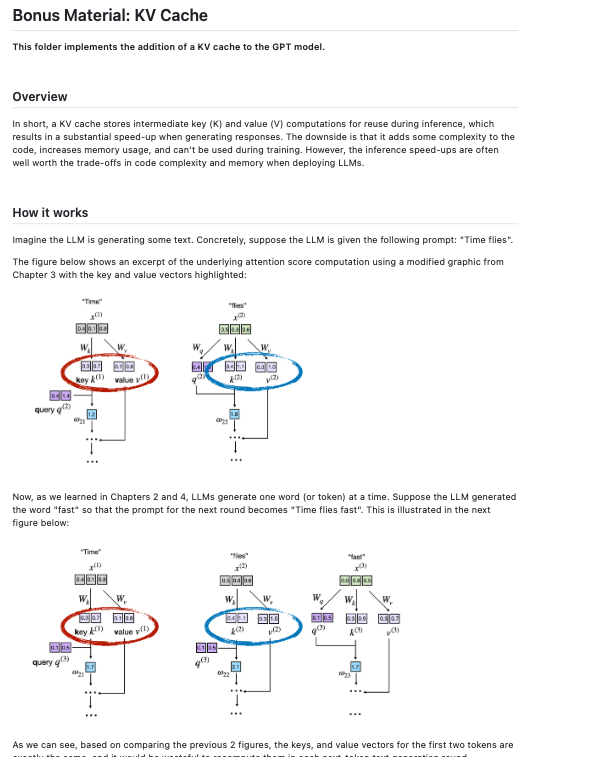

Large Language Model Fine-tuning and Development Resources: Dorialexander pointed out that for small models like Qwen3 0.6B, full fine-tuning might be a better option than LoRA. dl_weekly shared a guide for a multimodal production pipeline for fine-tuning Gemma 3 on the SIIM-ISIC melanoma dataset. Sebastian Raschka added KV cache implementation code to his “LLMs From Scratch” repository, enriching the learning resources for building LLMs from scratch (Source: Dorialexander, dl_weekly, rasbt)
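
The idea behind a KV cache: during autoregressive decoding, the keys and values of earlier tokens do not change, so they can be stored and only the newest token needs projecting at each step. A single-head NumPy sketch (not the code from Raschka's repository) that checks cached decoding against recomputing the whole prefix:

```python
import numpy as np


class KVCache:
    """Stores keys/values of all previously processed positions for one attention head."""

    def __init__(self, d_head: int):
        self.keys = np.empty((0, d_head))
        self.values = np.empty((0, d_head))

    def append(self, k: np.ndarray, v: np.ndarray) -> None:
        self.keys = np.vstack([self.keys, k[None, :]])
        self.values = np.vstack([self.values, v[None, :]])


def attend(q: np.ndarray, keys: np.ndarray, values: np.ndarray) -> np.ndarray:
    """Scaled dot-product attention of one query against the given keys/values."""
    scores = keys @ q / np.sqrt(q.shape[-1])
    w = np.exp(scores - scores.max())  # numerically stable softmax
    w /= w.sum()
    return w @ values


def decode_with_cache(xs, Wq, Wk, Wv):
    """Process tokens one at a time, projecting only the newest token at each step."""
    cache = KVCache(Wk.shape[1])
    outs = []
    for x in xs:
        cache.append(x @ Wk, x @ Wv)
        outs.append(attend(x @ Wq, cache.keys, cache.values))
    return np.stack(outs)


def decode_without_cache(xs, Wq, Wk, Wv):
    """Reference: recompute keys/values for the whole prefix at every step."""
    outs = []
    for t in range(len(xs)):
        prefix = xs[: t + 1]
        outs.append(attend(xs[t] @ Wq, prefix @ Wk, prefix @ Wv))
    return np.stack(outs)
```

Growing the arrays with `np.vstack` copies them on every step; practical implementations usually preallocate a buffer up to the maximum context length instead.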

AI Explainability (XAI) and Reasoning Capabilities Discussed: NerdyRodent shared a YouTube video on “The Black Box Problem & Glass Box Options,” discussing the transparency of AI decision-making. Meanwhile, the community is discussing what key elements the XAI field is missing and how to define when a model is “fully understood.” Some researchers argue that even for simple fully connected feed-forward networks, existing XAI methods fail to explain their decisions in terms that correspond to how humans reason (Source: NerdyRodent, Reddit r/MachineLearning)

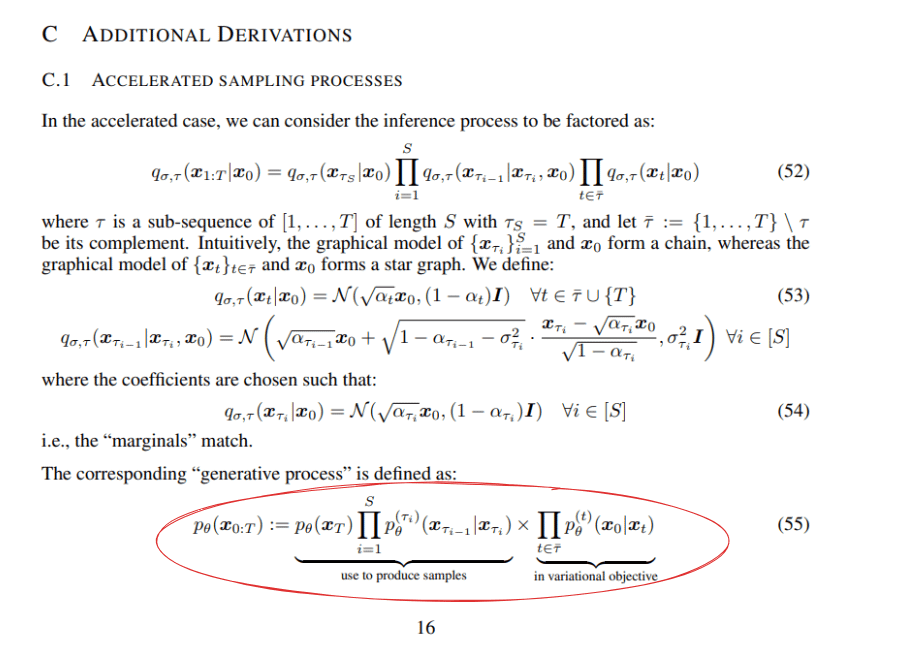

Deep Learning and Reinforcement Learning Theory Discussion: Reddit users discussed mathematical formulas in the DDIM (Denoising Diffusion Implicit Models) paper, particularly the factorization method of equation 55. Another blog post pointed out that Q-learning still faces scalability challenges, prompting reflection on the practicality of reinforcement learning algorithms (Source: Reddit r/MachineLearning, Reddit r/MachineLearning)
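
For reference, the update at the heart of Q-learning is Q(s, a) ← Q(s, a) + α [r + γ max_a' Q(s', a') - Q(s, a)]. The tabular sketch below runs it on a hypothetical five-state chain. Note that the table holds one entry per state-action pair and that each target bootstraps from the current estimate for the next state; both properties are often cited in discussions of why Q-learning is hard to scale.

```python
import random


def q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy chain: action 0 = left, 1 = right; reward 1 on reaching the last state."""
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy action selection; ties go right.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if Q[s][0] > Q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # Bootstrapped target; the terminal state contributes no future value.
            target = r if s_next == goal else r + gamma * max(Q[s_next])
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
    return Q
```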

Sharing Experience of Building a Convolutional Neural Network (CNN) from Scratch: AxelMontlahuc shared on GitHub his CNN project implemented from scratch in C for image classification on the MNIST dataset. The implementation does not rely on any libraries and includes convolutional layers, pooling layers, fully connected layers, Softmax activation, and cross-entropy loss function, currently achieving 91% accuracy after 5 epochs, demonstrating how low-level implementation helps in understanding deep learning principles (Source: Reddit r/deeplearning)
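
The project is written in C; to keep all examples here in one language, the sketch below shows the same forward-pass building blocks (a valid 2D convolution, 2x2 max pooling, softmax, and cross-entropy) in dependency-free Python. It is an independent illustration, not AxelMontlahuc's code.

```python
import math


def conv2d_valid(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Naive 'valid' 2D convolution (cross-correlation, as in most CNN code), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            out[i][j] = acc
    return out


def max_pool2x2(fmap: list[list[float]]) -> list[list[float]]:
    """2x2 max pooling with stride 2; a trailing odd row or column is dropped."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


def softmax(logits: list[float]) -> list[float]:
    """Softmax with max-subtraction for numerical stability."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs: list[float], label: int) -> float:
    """Negative log-likelihood of the true class."""
    return -math.log(probs[label])
```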

Analysis of AI’s Impact on Economy and Education: A lecture on post-labor economics (2025 updated version) discussed the “better, faster, cheaper, safer” transformations brought by AI and their impact on economic structures. Simultaneously, a PwC report indicates that with the rise of AI, employer demand for formal degrees is declining, especially for positions affected by AI, which could lead to university degrees becoming “outdated” (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

💼 Business

Comparative Analysis of AI Strategies of Major Tech Companies: The community discussed the AI technology infrastructure and application strategies of major tech companies such as Microsoft (Azure+OpenAI, enterprise-level LLM deployment), Amazon (AWS self-developed AI chips, end-to-end model support), NVIDIA (GPU hardware dominance, CUDA ecosystem), Oracle (high-performance GPU infrastructure, collaboration with OpenAI/SoftBank on Stargate project), and Palantir (AIP platform, operational AI for government and large enterprises). The discussion focused on each company’s innovative initiatives, technological architecture differences, and their positioning and advantages in the AI ecosystem (Source: Reddit r/ArtificialInteligence)

European Companies Lag in AI Application for Hiring: Reports show that only 3% of top European employers use AI or automation technology on their recruitment websites to provide a personalized job search experience. Most sites lack skill-based intelligent recommendations, chatbots, or dynamic job matching functions. In contrast, companies that adopt AI in recruitment perform better in candidate engagement, inclusivity, and speed of filling specialist positions, highlighting the gap for European enterprises in leveraging AI for human resources (Source: Reddit r/ArtificialInteligence)

Warning About Fake Cerebras Tokens: Community user draecomino warned that AI chip company Cerebras has not issued any tokens and that the so-called Cerebras tokens currently circulating are a scam. Users are advised not to click on related links to avoid being defrauded (Source: draecomino)

🌟 Community

AI Philosophy and Future Speculation: From NSI to ASI, from Data Exhaustion to Consciousness Discussion: The community is actively discussing the nature and future of AI. Pedro Domingos proposed “Natural Superintelligence (NSI) is Homo Sapiens,” sparking reflection on the definition of intelligence. Meanwhile, topics such as whether LLMs are merely pattern recognizers, how AI will evolve after training data is exhausted, and whether AI could develop consciousness are proliferating. Plinz believes LLMs are like “erudite scholars” with strong memory but lacking true thought. Users are full of speculation about when AGI will arrive and the self-preservation strategies AGI might adopt (such as creating off-Earth backups). These discussions reflect the public’s complex emotions about AI’s potential and risks (Source: pmddomingos, Plinz, Teknium1, Reddit r/ArtificialInteligence, Reddit r/artificial, TheTuringPost)

Discussion on AI Capability Boundaries and Limitations: From “Illusion of Thinking” to “Smell Test”: Terence Tao’s view that AI “passes the eye test, but fails the smell test” resonated with many. He points out that AI-generated proofs can look flawless yet lack the “mathematical intuition” or “taste” human mathematicians have, and that their errors are often subtle and unlike human mistakes. This view echoes the discussion sparked by Apple’s “Illusion of Thinking” paper. The community is broadly concerned with the current limitations of LLMs in complex reasoning, tool use, and mathematical problem-solving (such as ignoring non-integer solutions in AIME problems), and with how to evaluate and improve AI’s genuine understanding and creativity (Source: denny_zhou, clefourrier, Dorialexander, TheTuringPost)

AI Ethics and Societal Impact: Job Displacement, Privacy Concerns, and New Norms of Human-Machine Interaction: AI’s impact on the job market remains a focal point, with debate over whether AI will replace programmers running especially high, although some note that the reaction to programmer job losses is still milder than in the field of artistic creation. AI applications in art, employee monitoring, and other areas have also raised ethical dilemmas and privacy concerns; for example, users worry that ChatGPT could pinpoint where they are from photos they share. Human-AI interaction patterns are evolving too: some developers treat AI as a “coding partner,” and some users even use ChatGPT as a psychotherapy tool, prompting debate about its effectiveness and potential risks (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence, claud_fuen, Reddit r/artificial, Reddit r/ChatGPT)

AI-Generated Content and Community Culture Observations: AI-generated images and videos have become hot topics in the community, from the “cringiest image” challenge, Ghibli-style game concept videos, to user-shared “Labubu” dynamic wallpapers, ChatGPT-created new art style “Interlune Aesthetic,” and humorous “chicken shock collar” ad images, showcasing AI’s broad application in creative fields and entertainment potential. Meanwhile, the “Dead Internet Theory” regained attention due to a popular Reddit post suspected to be generated by ChatGPT, with the community expressing concerns about the discernment of AI-generated content and the authenticity of online information. Additionally, specific behaviors exhibited by AI models (like Claude) in interactions, such as proactively asking for clarification on ambiguous instructions or giving unexpected responses in certain contexts (like excessive concern over “eating pasta”), have also become focal points of user discussion (Source: Reddit r/ChatGPT, Reddit r/ChatGPT, op7418, Reddit r/ChatGPT, Reddit r/ChatGPT, Reddit r/ChatGPT, VictorTaelin, Reddit r/ChatGPT)

AI Developer Community Dynamics and Tool Usage Experience: The developer community is actively participating in AI projects and hackathons, such as the LeRobot Global Hackathon attracting many participants in places like Bangalore. Users share experiences using various AI tools; for example, Hamel Husain recommends adding instructions in system prompts to guide AI in improving prompts, while skirano suggests that Pro-level model usage should be placed after at least a two-step pipeline. Claude Code is praised by developers for its powerful features, with some users calling it the “best $200 ever spent.” At the same time, concerns exist that AI tools might “make us dumber,” believing that many current AI tools overemphasize ease of use while neglecting the cultivation of users’ professional skills (Source: ClementDelangue, HamelHusain, skirano, Reddit r/ClaudeAI, Reddit r/artificial)

💡 Other

AI Industry Conferences and Event Information: The Turing Post and other information platforms have promoted several AI-related online and offline events. For example, CoreWeave and NVIDIA are jointly hosting the “Accelerating AI Innovation” virtual event to share practical insights on AI business applications. DeployCon, a free summit for engineers, will be held on June 25th in San Francisco and online, covering topics such as scaling AI operations, LLMOps, reinforcement learning fine-tuning, agents, multimodal AI, and open-source tools (Source: TheTuringPost, TheTuringPost)

AI Field Job Recruitment Information: andriy_mulyar posted a recruitment notice for a Machine Learning intern. The intern will report directly to him and participate in a special Visual Language Model (VLM) post-training project, requiring outstanding abilities from applicants, who should apply via direct message (Source: andriy_mulyar)