Keywords: AI model, dataset, humanoid robot, AI Agent, language model, deep learning, open-source model, inference optimization, Common Pile v0.1 dataset, Helix end-to-end control model, Hugging Face MCP server, Gemini 2.5 Pro update, sparse attention mechanism

🔥 Spotlight

EleutherAI Releases Common Pile v0.1: 8TB Openly Licensed Text Dataset, Challenging Language Model Training with Unauthorized Data : EleutherAI, in collaboration with multiple institutions, has released Common Pile v0.1, a large dataset containing 8TB of openly licensed and public domain text. It aims to explore the feasibility of training high-performance language models without using unauthorized text. The team used this dataset to train 7B parameter models (1T and 2T tokens), achieving performance comparable to similar models like LLaMA 1 and LLaMA 2. The dataset includes document-level metadata such as author attribution, licensing details, and links to original copies, providing researchers with a transparent and compliant data source. This initiative is significant for promoting the development of open and compliant AI models and offers new approaches to addressing copyright issues in AI training data (Source: EleutherAI, percyliang, BlancheMinerva, code_star, ShayneRedford, Tim_Dettmers, jeremyphoward, stanfordnlp, ClementDelangue, tri_dao, andersonbcdefg)

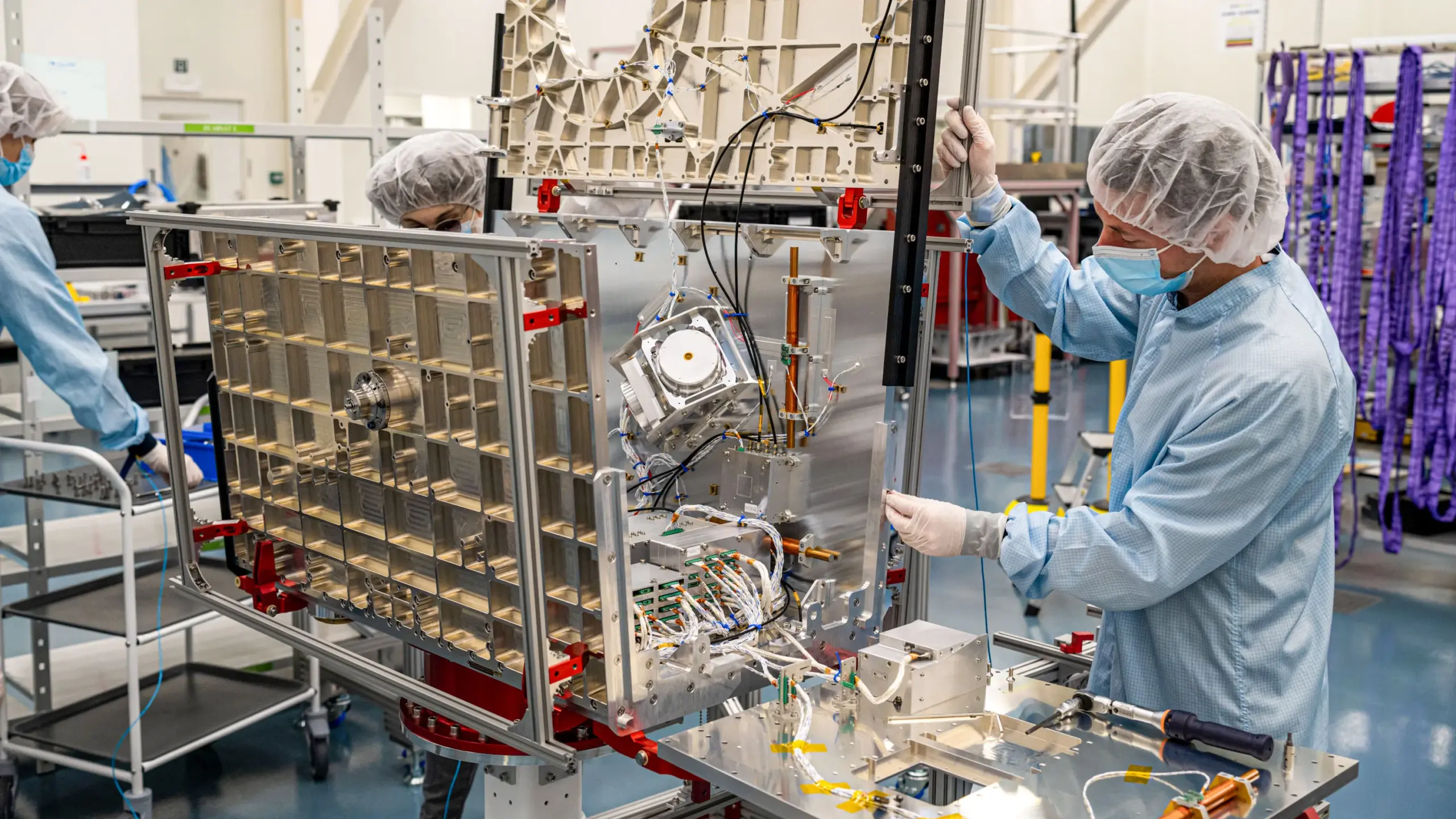

Figure Humanoid Robot Demonstrates High-Speed Parcel Sorting Capabilities Driven by Helix Model, Drawing Attention : Figure CEO Brett Adcock showcased the latest progress of its humanoid robot in parcel sorting within a logistics scenario, driven by the Helix end-to-end general-purpose control model. The video shows the robot handling different types of parcels (rigid cardboard boxes, plastic packaging) with speed and accuracy approaching human levels, including organizing parcels and ensuring barcodes face down for scanning. This capability highlights the generalization and flexibility of the Helix model in complex, dynamic environments, contrasting with previously demonstrated stamping press operations (emphasizing precision and high speed). Figure robots have already achieved 20-hour continuous shifts on BMW production lines, demonstrating their potential in industrial applications. Adcock emphasized that in the humanoid robot field, building the smartest, lowest-cost robot will be key to winning the market, as more robot deployments mean lower costs, more training data, and a smarter Helix model (Source: dotey, _philschmid, adcock_brett, QbitAI)

Hugging Face Releases First Official MCP Server, Building a Collaborative Platform for AI Agents : Hugging Face has launched its first official MCP (Model Context Protocol) server, allowing users to connect LLMs directly to the Hugging Face Hub’s API from Cursor, VSCode, Windsurf, and other MCP-supported applications. The server provides built-in tools such as semantic search over models, datasets, papers, and Spaces, and can dynamically list all MCP-compatible Gradio applications hosted on Spaces. This initiative aims to establish Hugging Face as a collaborative platform for AI Agent builders, fostering the development and interoperability of the AI Agent ecosystem. Approximately 900 MCP Spaces are currently available (Source: ClementDelangue, mervenoyann, reach_vb, ben_burtenshaw, huggingface, code_star, op7418, TheTuringPost, clefourrier)

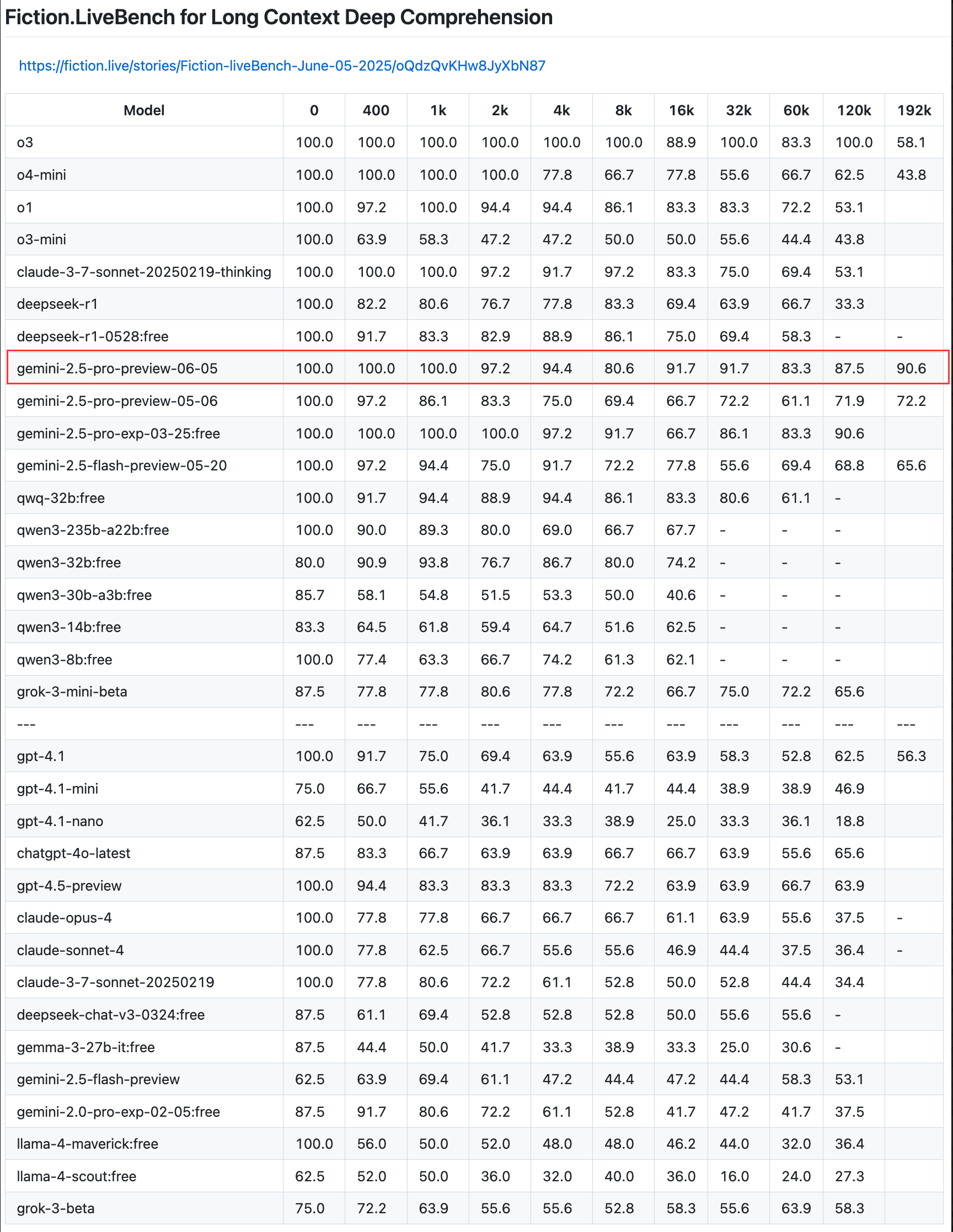

Google Updates Gemini 2.5 Pro Preview, Enhancing Coding, Reasoning, and Creative Capabilities, and Introduces “Thinking Budget” : Google announced an update to the preview version of its most intelligent model, Gemini 2.5 Pro, further enhancing its capabilities in coding, logical reasoning, and creative writing. The new version notably introduces a “thinking budget” feature, allowing developers to better control the model’s computational resource consumption. User feedback indicates that the new version (06-05) performs very well in long-text recall, reaching a recall rate of up to 90.6% at 192K length and surpassing OpenAI-o3. The model has been integrated into LangChain and LangGraph, making it convenient for developers to try out and build applications. Google also showcased Gemini 2.5 Pro’s creative abilities in image understanding and in generating contextualized, witty captions (Source: Teknium1, Google, karminski3, hwchase17)
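
As a rough illustration of how a thinking budget might be set, the sketch below only builds the JSON body for a `generateContent` call and does not send it. The model ID, the endpoint path, and the `thinkingConfig` / `thinkingBudget` field names are assumptions based on the public Gemini REST API and are not taken from the announcement, so check them against the current documentation before use.

```python
import json

# Assumed values: the endpoint base and model ID are illustrative, not from the source.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL_ID = "gemini-2.5-pro-preview-06-05"


def build_generate_request(prompt, thinking_budget):
    """Build (url, body) for a generateContent call with a capped thinking budget.

    thinking_budget is the maximum number of tokens the model may spend on
    internal reasoning before it writes the visible answer.
    """
    url = f"{API_BASE}/models/{MODEL_ID}:generateContent"
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            # Assumed field names for the thinking-budget control.
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }
    return url, body


url, body = build_generate_request("Explain paged attention in two sentences.", 1024)
print(url)
print(json.dumps(body, indent=2))
```

A smaller budget trades reasoning depth for lower latency and cost, which is the control the item describes.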

🎯 Trends

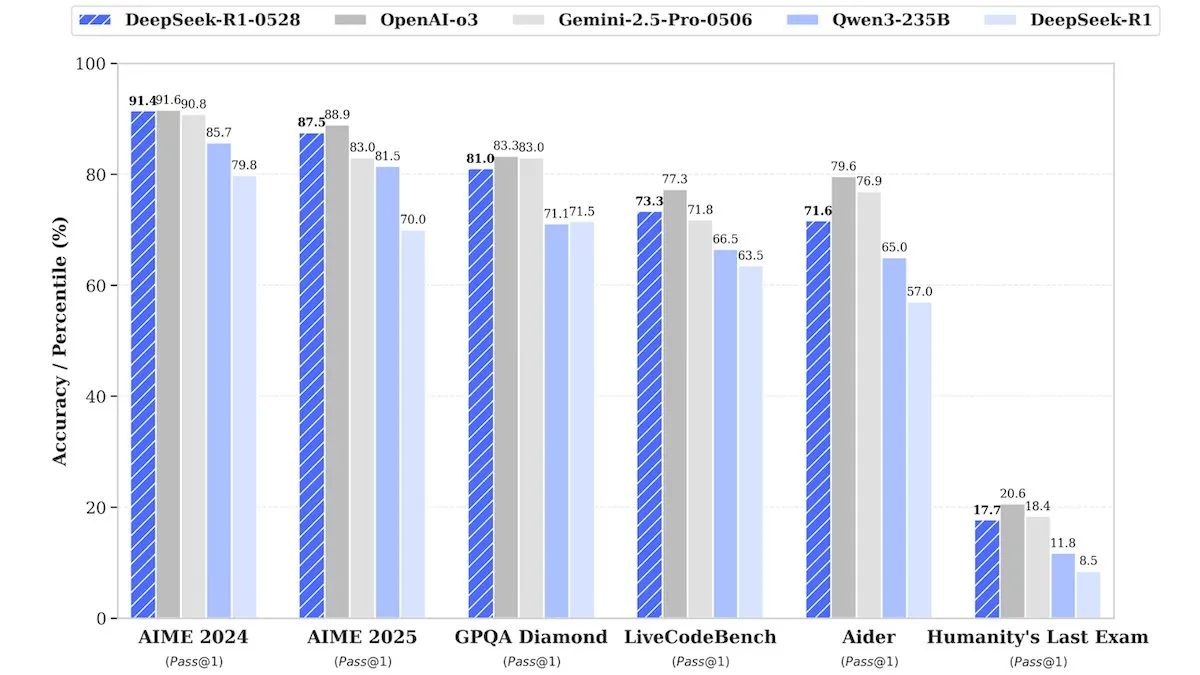

DeepSeek Releases Upgraded DeepSeek-R1-0528, Performance Comparable to Closed-Source Models : DeepSeek has launched an upgraded version of its flagship open-weight model, DeepSeek-R1-0528. It is claimed that this model performs comparably to closed-source models such as OpenAI’s o3 and Google’s Gemini-2.5 Pro in multiple benchmark tests. Although the company has not disclosed training details, reports indicate significant improvements in the new model’s reasoning, task complexity handling, and reduction of hallucinations, once again challenging the traditional notion that top-tier AI requires enormous resources. Unsloth AI has provided a free Notebook for fine-tuning DeepSeek-R1-0528-Qwen3 using GRPO, claiming its new reward function can increase multilingual (or custom domain) response rates by over 40%, and make R1 fine-tuning 2x faster with 70% less VRAM (Source: DeepLearningAI, ImazAngel)
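
For readers unfamiliar with GRPO (Group Relative Policy Optimization), its core idea is to score each sampled response relative to the other responses drawn for the same prompt, instead of relying on a learned value function. The sketch below shows only that group-normalization step in plain Python. The reward values are made up, and this is not Unsloth’s reward function or training loop.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group of responses to the same prompt.

    Each advantage is (reward - group mean) / group std. The population
    standard deviation is used here; implementations differ on this detail.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled answers to one prompt
# (for example, 1.0 if the answer is in the target language, else 0.0).
rewards = [0.0, 1.0, 1.0, 0.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Responses that beat the group average receive a positive advantage and are reinforced; those below it are pushed down.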

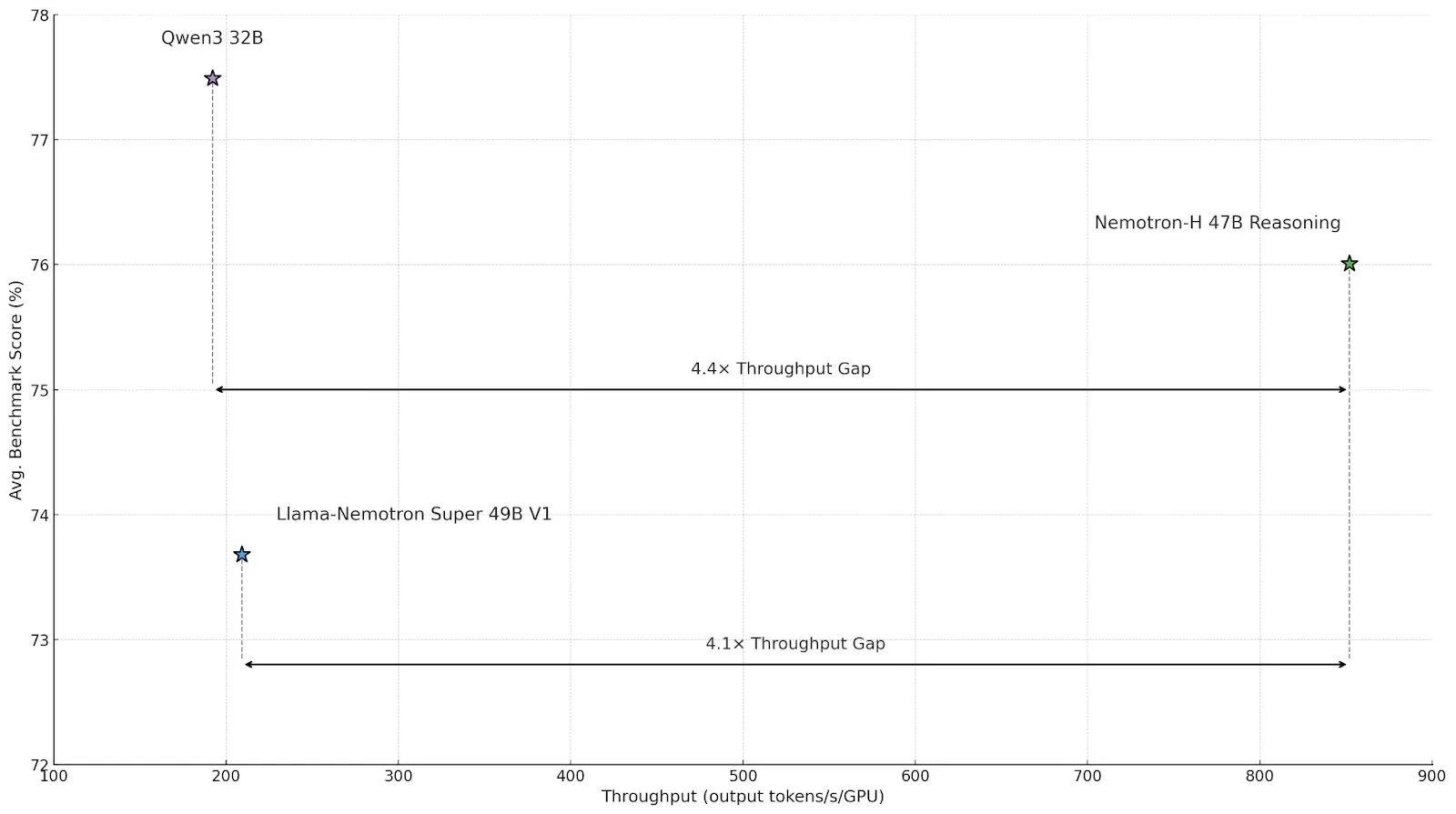

NVIDIA Releases Hybrid Architecture Inference Model Nemotron-H, Enhancing Throughput and Efficiency : NVIDIA has introduced a new inference model, Nemotron-H, available in 47B and 8B versions (supporting BF16 and FP8), featuring a Mamba-Transformer hybrid architecture. The model is designed to address large-scale inference problems while maintaining high speed, reportedly achieving 4 times the throughput of comparable Transformer models. Nemotron-H-47B-Reasoning-128k shows slightly higher accuracy than Llama-Nemotron-Super-49B-1.0 across all benchmarks, but with up to 4 times lower inference cost. Model weights have been released on HuggingFace under a non-production license, with a technical report forthcoming (Source: ClementDelangue, ctnzr)

Anthropic Launches Claude Gov, Designed for US Government and Military Intelligence Agencies : Anthropic has released a new AI service called Claude Gov, specifically designed to meet the needs of the US government, defense, and intelligence agencies. This move marks Anthropic’s formal expansion of its advanced AI technology into government and military applications, potentially for use in data analysis, intelligence processing, decision support, and various other scenarios. Anthropic had also previously joined a long-term benefit trust, aimed at helping the company achieve its public benefit mission (Source: MIT Technology Review, akbirkhan, jeremyphoward)

Hugging Face Collaborates with Google Colab to Simplify Model Trial and Prototyping Workflow : Hugging Face announced a collaboration with Google Colaboratory, adding “Open in Colab” support to all model cards on the Hugging Face Hub. Users can now directly launch a Colab Notebook from any model card, making model experimentation and evaluation easier. Additionally, users can place a custom notebook.ipynb file in their model repository, and Hugging Face will directly provide that Notebook, further enhancing the accessibility and rapid prototyping capabilities for AI models (Source: huggingface, osanseviero, ClementDelangue, mervenoyann)

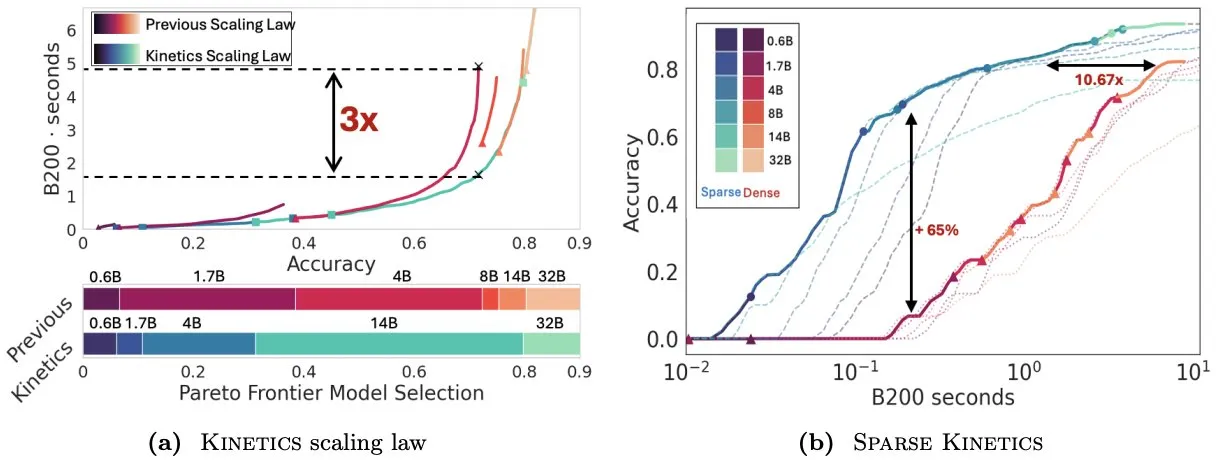

Paper “Kinetics” Rethinks Test-Time Scaling Laws, Emphasizing the Importance of Sparse Attention for Inference Efficiency : Infini-AI-Lab published the paper “Kinetics: Rethinking Test-Time Scaling Laws,” which argues that earlier compute-optimal scaling laws overestimate the effectiveness of small models because they neglect the memory access bottlenecks introduced by inference-time strategies (such as Best-of-N and long CoT). The research proposes new Kinetics scaling laws that account for both computation and memory access costs, arguing that test-time compute is spent more effectively on large models than on small ones because attention, not parameter count, becomes the dominant cost. The paper further proposes a sparse attention-centric scaling paradigm that reduces per-token cost, allowing longer generations and more parallel samples within the same budget. Experiments show sparse attention models outperform dense models across different cost regimes, a result that matters for the inference efficiency of large-scale models (Source: realDanFu, tri_dao, simran_s_arora)
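
To see why attention can dominate at long generation lengths, here is a toy per-token cost estimate that counts both compute and memory access. It is not the paper’s formula: the model shape, the hardware ratio `flops_per_byte`, and the `kv_budget` parameter are hypothetical values chosen only for illustration.

```python
def decode_cost_per_token(n_params, n_layers, kv_dim, context_len,
                          bytes_per_elem=2, flops_per_byte=500.0, kv_budget=None):
    """Return (linear_cost, attention_cost) in FLOP-equivalents for one decoded token.

    Assumptions of this toy model:
    - linear layers cost about 2 FLOPs per parameter, with weight reads
      amortized across a large batch;
    - attention cost is dominated by reading the K and V cache, converted to
      FLOP-equivalents with a hardware FLOPs-per-byte ratio;
    - sparse attention reads at most kv_budget cached tokens per step.
    """
    linear_cost = 2.0 * n_params
    attended = context_len if kv_budget is None else min(context_len, kv_budget)
    kv_bytes = 2 * n_layers * kv_dim * attended * bytes_per_elem  # K and V
    attention_cost = kv_bytes * flops_per_byte
    return linear_cost, attention_cost


# Hypothetical small model with a 32K-token chain of thought.
small_model = dict(n_params=1.7e9, n_layers=28, kv_dim=1024)
dense_linear, dense_attn = decode_cost_per_token(context_len=32768, **small_model)
_, sparse_attn = decode_cost_per_token(context_len=32768, kv_budget=1024, **small_model)

print(f"linear layers:   {dense_linear:.2e}")
print(f"dense attention: {dense_attn:.2e}")
print(f"sparse attention (budget 1024): {sparse_attn:.2e}")
```

Under these assumptions the dense attention term is hundreds of times larger than the parameter term, and capping the attended tokens shrinks it in proportion to the budget.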

China’s AI Agent Market Heats Up, Manus Leads Startup Trend : Following last year’s foundation model boom, the focus in China’s AI sector this year has shifted to AI Agents. AI Agents are more focused on autonomously completing tasks for users rather than simply responding to queries. Manus, a pioneer in general-purpose AI Agents, garnered widespread attention after its limited release in early March and has spurred a wave of startups building general-purpose digital tools capable of handling emails, planning trips, and even designing interactive websites. This trend indicates that China’s tech industry is actively exploring the practical applications and business models of AI Agents (Source: MIT Technology Review)

ElevenLabs Releases Conversational AI 2.0, Enhancing Enterprise-Grade Voice Assistant Performance : ElevenLabs has launched version 2.0 of its conversational AI platform, aimed at building more advanced enterprise-grade voice agents. The new version significantly improves the naturalness and interaction capabilities of voice assistants, enabling them to better understand conversational rhythm, know when to pause, when to speak, and when to handle conversational turn-taking. This upgrade is expected to provide enterprise users with a smoother, more intelligent voice interaction experience for applications such as customer service and virtual assistants (Source: dl_weekly)

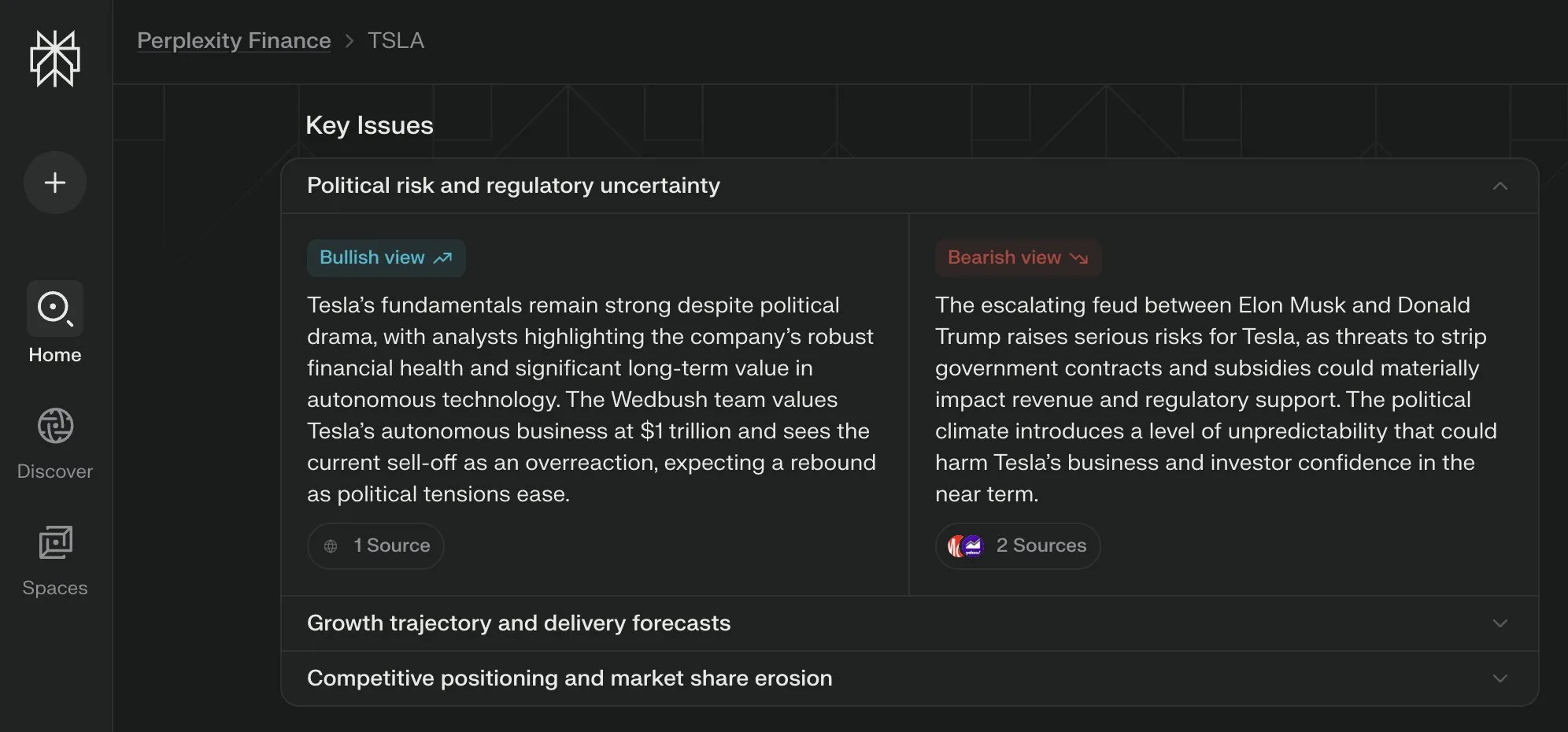

Perplexity Labs Introduces “Key Issues” View for its Finance Pages, Synthesizing Multi-Party Perspectives : Perplexity Labs has added a “Key Issues” view feature to its financial information pages. This function synthesizes opinions from investors, analysts, and commentators across the internet, quickly presenting users with the important factors and main discussion points currently affecting a company. For example, a page about Tesla can integrate various pieces of information about the dynamics between Trump and Musk within hours, helping users quickly grasp the overall picture (Source: AravSrinivas)

PyTorch Distributed Checkpoints Now Support Hugging Face safetensors : PyTorch announced that its distributed checkpointing feature now supports Hugging Face’s safetensors format, which will make saving and loading checkpoints between different ecosystems more convenient. The new API allows users to read and write safetensors via fsspec paths. torchtune is the first library to adopt this feature, thereby simplifying its checkpointing process. This update helps improve interoperability and efficiency in model training and deployment (Source: ClementDelangue)
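
For context on the format itself, a safetensors file is an 8-byte little-endian header length, a JSON header describing each tensor, and then the raw tensor bytes. The sketch below writes and reads that layout with the standard library only. It illustrates the file format, not the PyTorch distributed checkpoint API, and the helper names `write_safetensors` and `read_safetensors` are hypothetical.

```python
import json
import struct


def write_safetensors(tensors):
    """Serialize {name: (dtype, shape, raw_bytes)} into safetensors-style bytes."""
    header = {}
    chunks = []
    offset = 0
    for name, (dtype, shape, raw) in tensors.items():
        # data_offsets are relative to the start of the data section.
        header[name] = {
            "dtype": dtype,
            "shape": list(shape),
            "data_offsets": [offset, offset + len(raw)],
        }
        chunks.append(raw)
        offset += len(raw)
    header_bytes = json.dumps(header, separators=(",", ":")).encode("utf-8")
    return struct.pack("<Q", len(header_bytes)) + header_bytes + b"".join(chunks)


def read_safetensors(blob):
    """Parse safetensors-style bytes back into {name: (dtype, shape, raw_bytes)}."""
    (header_len,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8:8 + header_len])
    data = blob[8 + header_len:]
    tensors = {}
    for name, info in header.items():
        if name == "__metadata__":  # optional free-form metadata entry
            continue
        begin, end = info["data_offsets"]
        tensors[name] = (info["dtype"], tuple(info["shape"]), data[begin:end])
    return tensors


raw = struct.pack("<4f", 1.0, 2.0, 3.0, 4.0)  # a 2x2 float32 tensor
blob = write_safetensors({"w": ("F32", (2, 2), raw)})
restored = read_safetensors(blob)
print(restored["w"][:2], len(blob))
```

Because the header is plain JSON and the payload is raw bytes, any framework can read a tensor without executing code, which is what makes the format convenient for moving checkpoints between ecosystems.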

Paper MARBLE Proposes New Method for Material Recomposition and Blending Based on CLIP Space : A new study titled MARBLE proposes a method for blending and fine-grained attribute recomposition of object materials in images by finding material embeddings in CLIP space and using these embeddings to control pre-trained text-to-image models. This method improves upon sample-based material editing by locating modules in the denoising UNet responsible for material attribution, enabling parameterized control over fine-grained material properties such as roughness, metallicness, transparency, and gloss. Researchers demonstrated the method’s effectiveness through qualitative and quantitative analysis and showcased its applicability in performing multiple edits in a single forward pass and in the domain of inpainting (Source: HuggingFace Daily Papers, ClementDelangue)

Paper FlowDirector: Training-Free Precise Text-to-Video Editing Flow Guidance Method : FlowDirector is a novel inversion-free video editing framework that models the editing process as a direct evolution in data space. It guides the video to smoothly transition along its inherent spatio-temporal manifold via ordinary differential equations (ODEs), thereby preserving temporal coherence and structural details. To achieve locally controllable editing, an attention-guided masking mechanism is introduced. Furthermore, to address incomplete edits and enhance semantic alignment with editing instructions, a guidance-enhanced editing strategy inspired by classifier-free guidance is proposed. Experiments demonstrate FlowDirector’s superior performance in instruction following, temporal consistency, and background preservation (Source: HuggingFace Daily Papers)

Paper RACRO: Scalable Multimodal Reasoning via Reward-Optimized Captioning : To address the high cost of retraining visual-language alignment when upgrading the underlying LLM reasoner, researchers propose RACRO (Reasoning-Aligned Perceptual Decoupling via Caption Reward Optimization). This method converts visual input into language representations (e.g., captions), which are then passed to a text reasoner. RACRO employs a reasoning-guided reinforcement learning strategy, aligning the extractor’s captioning behavior with reasoning objectives through reward optimization, thereby enhancing the visual foundation and extracting reasoning-optimized representations. Experiments show that RACRO achieves SOTA performance on multimodal math and science benchmarks and supports plug-and-play adaptation to more advanced reasoning LLMs without expensive multimodal realignment (Source: HuggingFace Daily Papers)

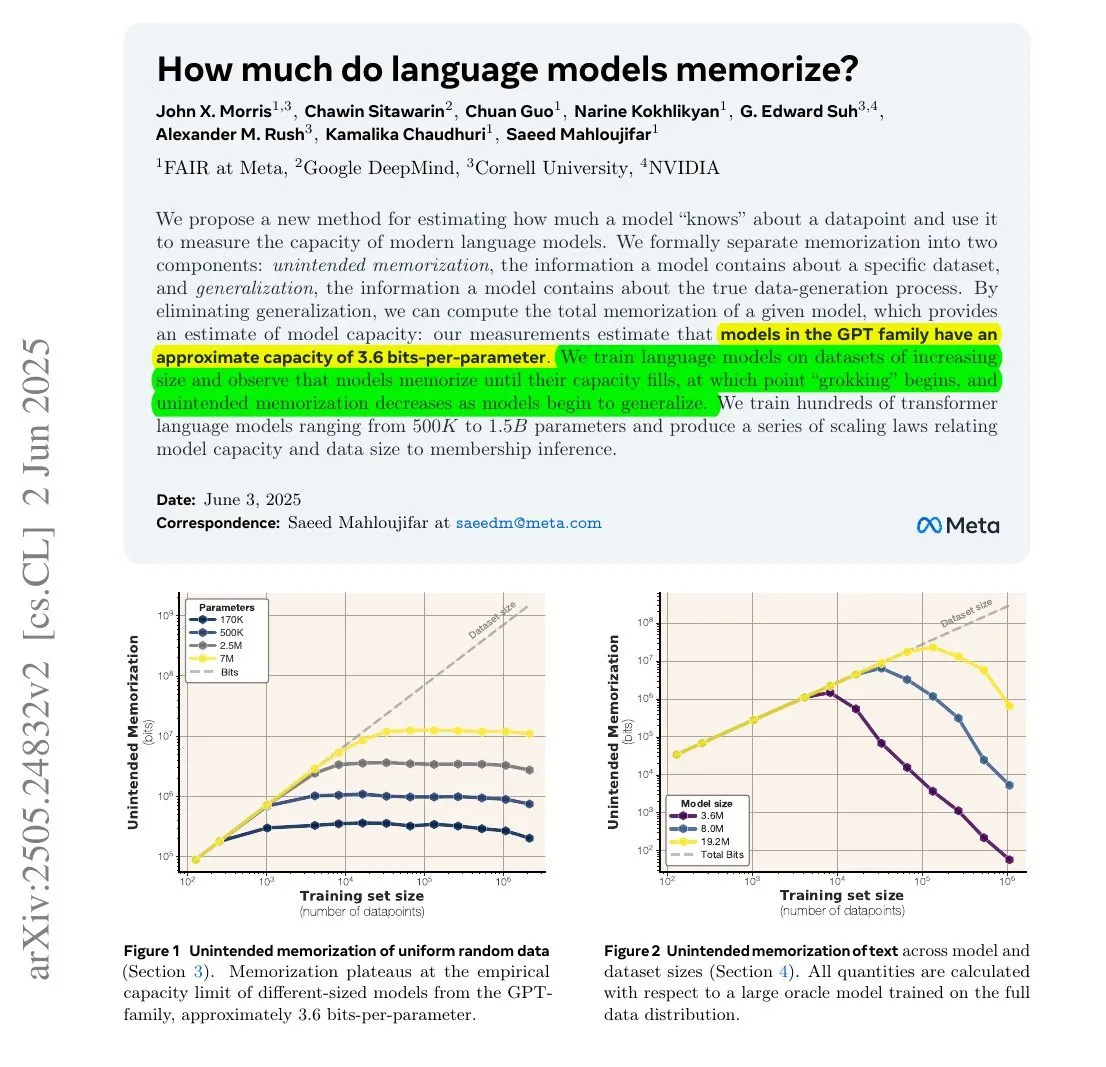

Research Shows: LLM’s Memorized Information Volume May Be Related to its Parameter Count and Information Entropy : A joint study by researchers from Meta, DeepMind, NVIDIA, and Cornell University explores how much information Large Language Models (LLMs) actually memorize. The research found that the volume of information an LLM memorizes may be related to its parameter count and the information entropy of the data. For example, the English Wikipedia has about 29.4 billion characters, with each character carrying approximately 1.5 bits of information, or roughly 44 billion bits in total. A 12B parameter model (assuming 3.6 bits of storage capacity per parameter) has a capacity of about 43 billion bits, so it could in theory memorize roughly the entire English Wikipedia. This research is significant for understanding LLM memory mechanisms and evaluating data copyright issues. François Chollet also highlighted the study’s methodology of training LLMs on random strings and its quantitative findings, considering them valuable for understanding LLM memory mechanisms (Source: fchollet, AymericRoucher)
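
The Wikipedia example can be checked with a few lines of arithmetic. The four input numbers come from the item above; everything else is derived from them.

```python
# Figures quoted in the item above.
wiki_chars = 29.4e9       # characters in English Wikipedia
bits_per_char = 1.5       # estimated information per character
n_params = 12e9           # model parameters
bits_per_param = 3.6      # assumed storage capacity per parameter

wiki_bits = wiki_chars * bits_per_char        # information content of the text
capacity_bits = n_params * bits_per_param     # what the model could store
coverage = capacity_bits / wiki_bits          # fraction of the text that fits

print(f"Wikipedia:      {wiki_bits:.3e} bits ({wiki_bits / 8 / 1e9:.2f} GB)")
print(f"Model capacity: {capacity_bits:.3e} bits ({capacity_bits / 8 / 1e9:.2f} GB)")
print(f"Coverage:       {coverage:.1%}")
```

The two totals are about 44.1 and 43.2 billion bits, so under these assumptions the model’s capacity covers about 98% of the text, which is why the claim is best read as “roughly the entire” Wikipedia.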

Hugging Face Introduces New Feature for Enterprise Hub: Manage Inference Provider Usage and Costs : Hugging Face has added a new feature to its Enterprise Hub, allowing organizations to configure and monitor their team members’ usage of Inference Providers and associated costs. This means enterprise users can better manage and control the use of serverless inference services for over 40,000 models from various providers including TogetherCompute, FireworksAI, Replicate, Cohere, and others, thereby optimizing cost-effectiveness and resource allocation for AI application deployment (Source: huggingface, _akhaliq)

FutureHouse Releases Scientific Reasoning Model ether0, Fine-tuned from Mistral 24B : FutureHouse has released its first scientific reasoning model, ether0. The model was created by training Mistral 24B with reinforcement learning (RL) on multiple molecular design tasks in the field of chemistry. The research found that LLMs are far more data-efficient at learning certain scientific tasks than specialized models trained from scratch, and can significantly outperform state-of-the-art models and humans on these tasks. This suggests that post-training LLMs for a subset of scientific classification, regression, and generation problems may offer a more efficient pathway than traditional machine learning methods (Source: MistralAI)

Dual Consistency Model (DCM) Boosts Video Generation Speed by 10x : Ziwei Liu and other researchers proposed the Dual Consistency Model (DCM), which can increase the speed of video generation models (with parameter counts ranging from 1.3B to 13B) by 10 times without reducing quality. The model currently supports Tencent Hunyuan and Alibaba Tongyi Wanxiang. The introduction of DCM brings a new breakthrough to the field of efficient, high-quality video generation, helping to accelerate video content creation and related application development (Source: _akhaliq)

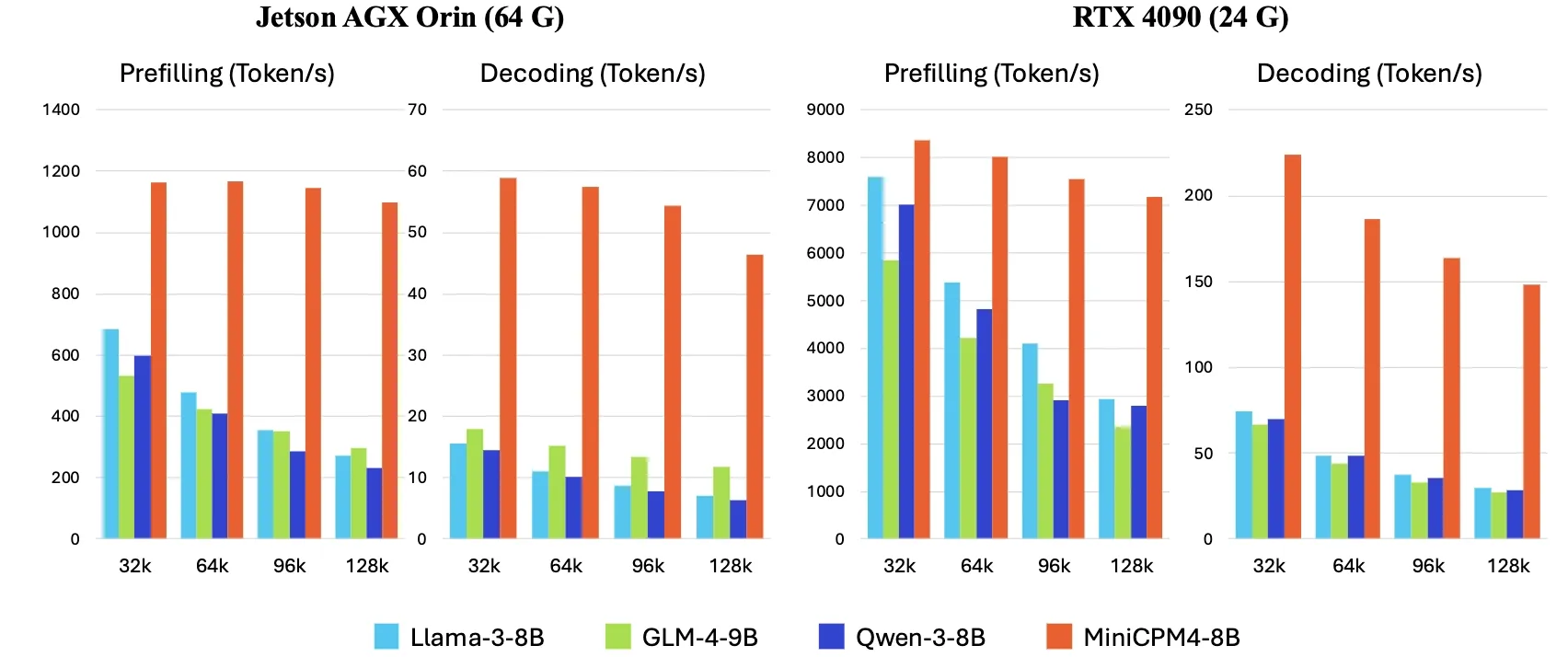

OpenBMB Releases MiniCPM4, Boosting On-Device Inference Speed by 5x : OpenBMB has launched the MiniCPM4 series of models, achieving a 5x increase in inference speed on edge devices through the adoption of efficient model architecture (InfLLM v2 trainable sparse attention mechanism), efficient learning algorithms (Model Wind Tunnel 2.0, BitCPM ternary quantization), high-quality training data (UltraClean, UltraChat v2), and efficient inference systems (CPM.cu, ArkInfer). The flagship model, MiniCPM4-8B (8B parameters, trained on 8T tokens), is now available on Hugging Face. This series of models aims to explore the limits of small, inexpensive LLMs and promote AI applications on resource-constrained devices (Source: eliebakouch, Teortaxes▶️ (DeepSeek 推特🐋铁粉 2023 – ∞))
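
As background on ternary quantization, the sketch below maps each weight to one of {-1, 0, +1} times a shared scale, using the absmean scheme popularized by BitNet b1.58. This is a generic illustration and not necessarily how BitCPM works; the weight values are made up.

```python
def ternary_quantize(weights, eps=1e-8):
    """Quantize weights to codes in {-1, 0, +1} with one shared scale.

    The scale is the mean absolute weight (absmean). Each weight is divided
    by the scale, rounded to the nearest integer, and clipped to [-1, 1].
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    codes = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Reconstruct approximate weights from ternary codes."""
    return [c * scale for c in codes]


weights = [0.9, -0.05, 0.4, -1.2, 0.02]   # hypothetical weights
codes, scale = ternary_quantize(weights)
print(codes, round(scale, 3))
print([round(w, 3) for w in dequantize(codes, scale)])
```

Each weight then needs fewer than two bits of storage, which is one reason ternary models are attractive for on-device inference.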

X Corp Updates Terms of Service, Prohibiting Use of its Posts to “Fine-tune or Train” AI Models Without Agreement : X Corp (formerly Twitter) has updated its terms of service, explicitly prohibiting the use of content from its platform to “fine-tune or train” artificial intelligence models unless a specific agreement is reached with X Corp. This move reflects the increasing emphasis and desire for control by content platforms over the value of their data in the AI era, potentially emulating companies like Reddit and Google who monetize data through licensing agreements. This policy change will impact AI researchers and developers who rely on public social media data for model training (Source: MIT Technology Review)

🧰 Tools

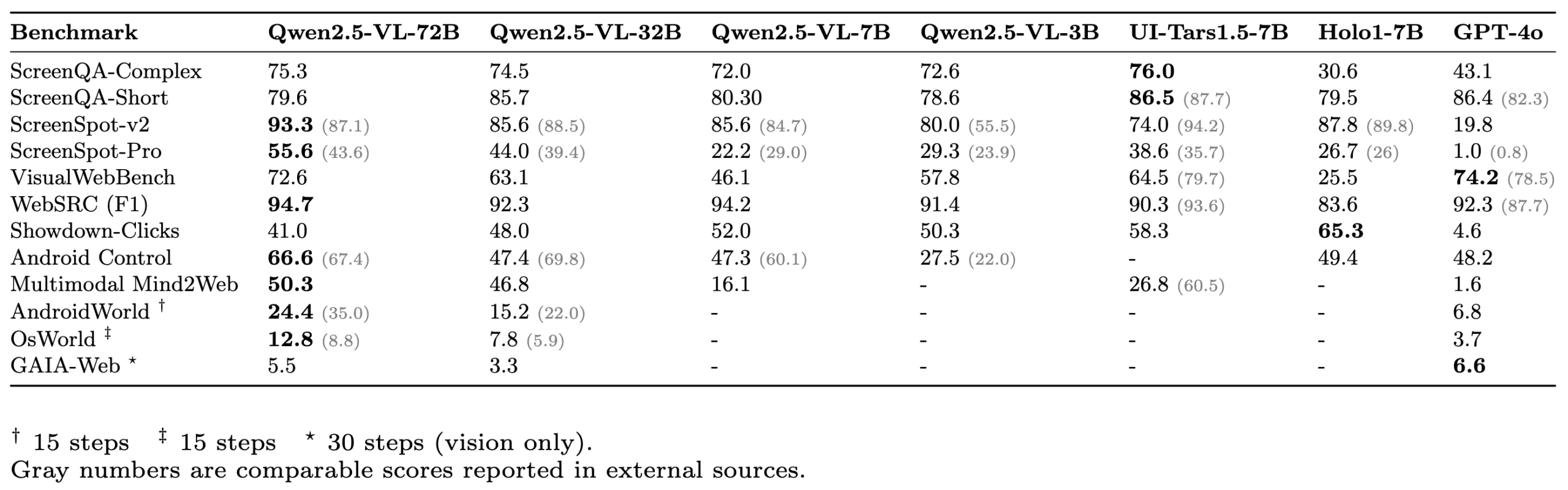

ScreenSuite: Comprehensive GUI Agent Evaluation Suite Released : Hugging Face has released ScreenSuite, a comprehensive Graphical User Interface (GUI) Agent evaluation suite. It integrates key benchmarks from cutting-edge research, supports Dockerized evaluation for Ubuntu and Android environments, and covers mobile, desktop, and Web scenarios. The suite emphasizes pure visual evaluation (no DOM cheating) and aims to provide a unified, easy-to-use platform for measuring the capabilities of Visual Language Models (VLMs) in perception, localization, single-step operations, and multi-step agent tasks. Models such as Qwen-2.5-VL, UI-Tars-1.5-7B, Holo1-7B, and GPT-4o have been evaluated on this suite (Source: huggingface, AymericRoucher, clefourrier, tonywu_71, mervenoyann, HuggingFace Blog)

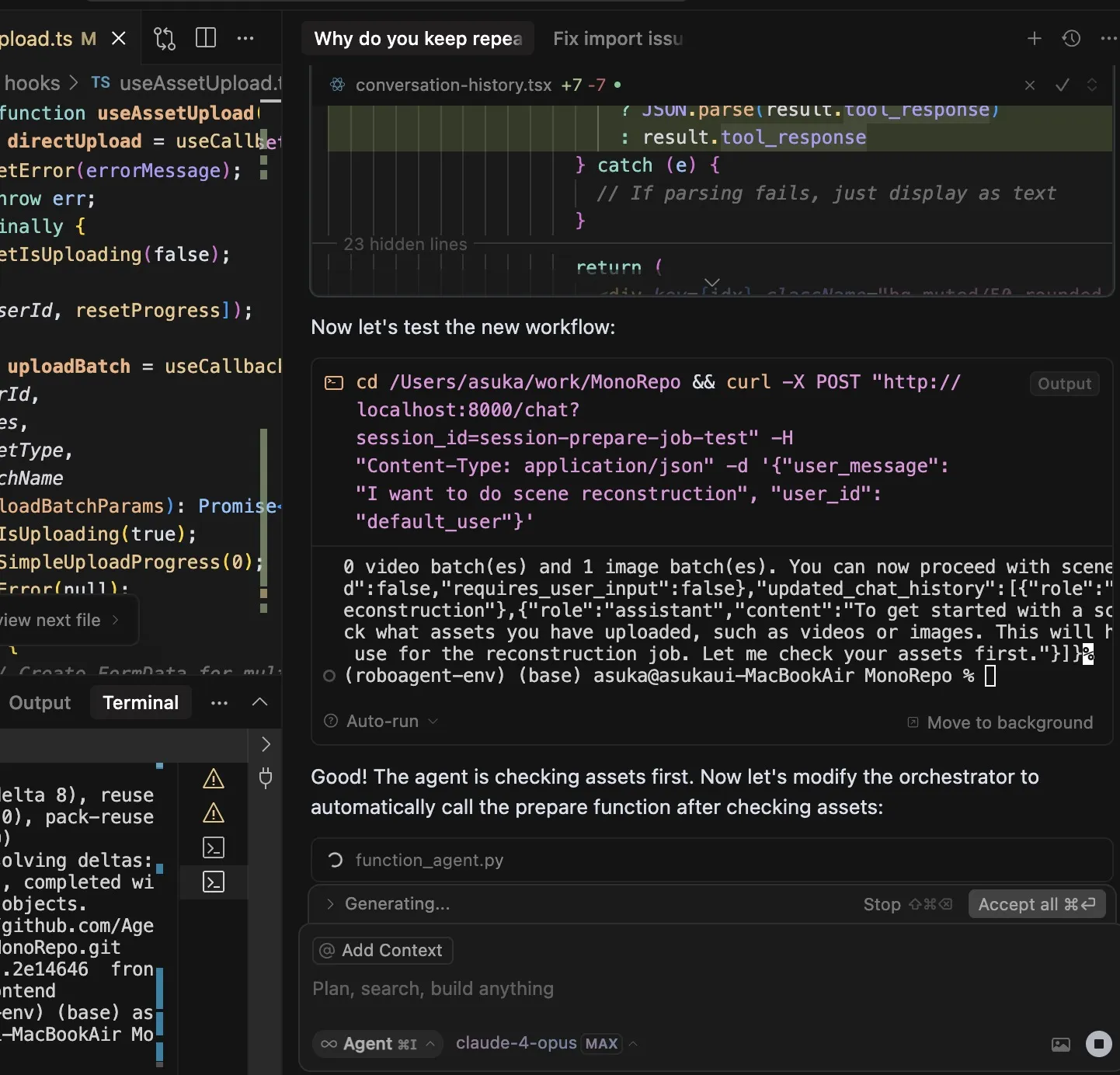

A User’s Experience with Claude Code: Outstanding Instruction Understanding, Task Planning, and Tool Utilization : User dotey shared their experience using Anthropic’s AI programming assistant, Claude Code. They believe Claude Code’s strengths lie in: 1. Excellent understanding of instructions; 2. Reasonable task planning, creating a TODO list for complex tasks and executing the items one by one; 3. Extremely strong tool utilization, especially its use of the grep command to search codebases, which is far more efficient than a human and even extends to analyzing obfuscated JS code; 4. Long execution times, which let it “brute-force” solutions but also consume many tokens, so it is best paired with a Claude Max subscription; 5. Minimal manual intervention throughout the process, especially when the --dangerously-skip-permissions parameter is enabled for unattended programming. The user has gone from being a heavy Cursor user to letting Claude Code complete tasks first and then reviewing and modifying the result in an IDE. Claude Code’s Plan Mode has also been quietly launched, allowing users to have it read and think without editing files (Source: dotey, Reddit r/ClaudeAI)

ClaudeBox: Securely Run Claude Code in Docker, Eliminating Permission Prompts : Developer RchGrav created the ClaudeBox tool, allowing users to run Claude Code in continuous mode (without permission prompts) within a Docker container. This avoids frequent permission confirmations interrupting the workflow while ensuring the security of the host operating system, as all Claude Code operations are confined within the isolated Docker environment. ClaudeBox offers over 15 pre-configured development environments (such as Python+ML, C++/Rust/Go, etc.), which users can quickly set up with simple commands. The tool aims to enhance the Claude Code user experience, allowing users to let the AI attempt various operations without concern (Source: Reddit r/ClaudeAI)

Toolio 0.6.0 Released: GenAI and Agent Toolkit Designed for Mac : Toolio has released version 0.6.0, a toolkit deeply integrated with MLX, designed to provide robust support for Large Language Models (LLMs) on Mac. It implements JSON Schema-guided structured output and tool calling functionalities, using the Python language. The toolkit focuses on enhancing the experience and efficiency of developing GenAI and Agent applications in the Mac environment (Source: awnihannun)

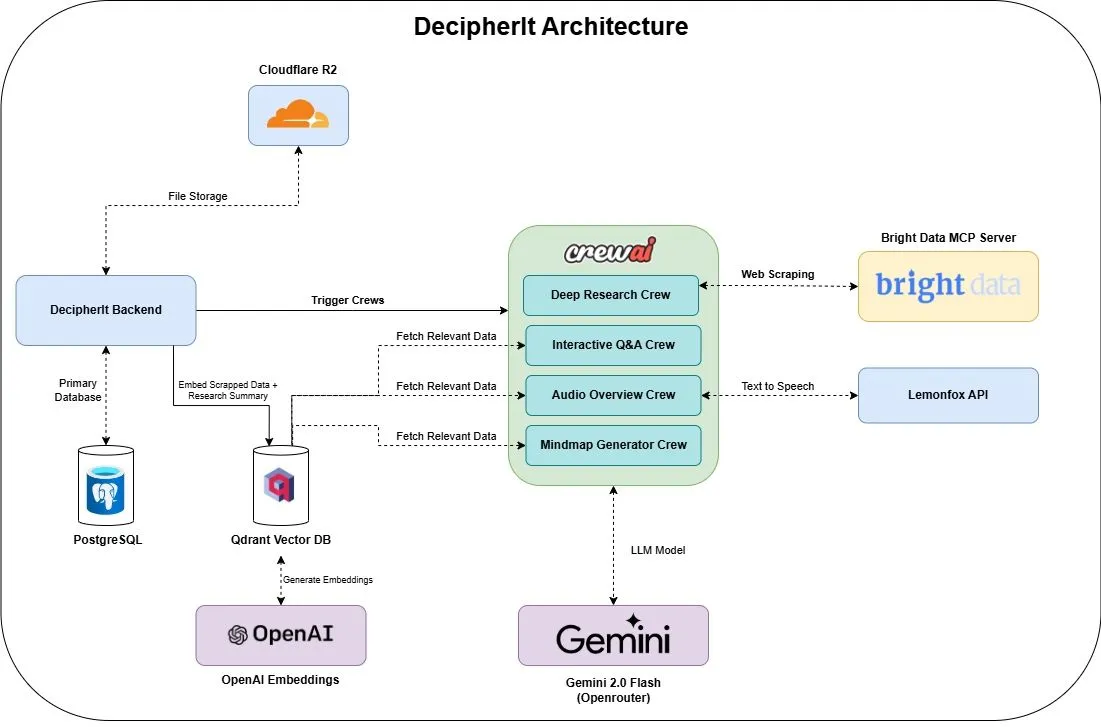

DecipherIt: Open-Source AI Research Assistant Integrating Multi-Agent and Semantic Search : DecipherIt is an open-source AI research assistant, considered an alternative to NotebookLM. It utilizes multi-agent orchestration, semantic search, and real-time web access capabilities to help users process research materials. Users can upload documents, paste URLs, or input topics, and DecipherIt will transform them into a complete research workspace containing summaries, mind maps, audio overviews, FAQs, and semantic Q&A. Its tech stack includes crewAI agents, Bright Data MCP, Qdrant, OpenAI, and LemonFox AI, with a Next.js and React 19 frontend and a FastAPI backend (Source: qdrant_engine)

Search Arena: User Interaction Dataset for Analyzing Search-Augmented LLMs Released : Search Arena is a large-scale crowdsourced dataset of more than 24,000 paired multi-turn user interactions with search-augmented LLMs, annotated with human preferences. The dataset covers multiple intents and languages and includes full system traces for approximately 12,000 human preference votes. Analysis shows that user preference is influenced by the number of citations, even when the cited content does not directly support the attributed claim, and that citations to community-driven platforms are generally preferred. The dataset aims to support future research on search-augmented LLMs, with code and data open-sourced (Source: HuggingFace Daily Papers, jiayi_pirate, lmarena_ai)

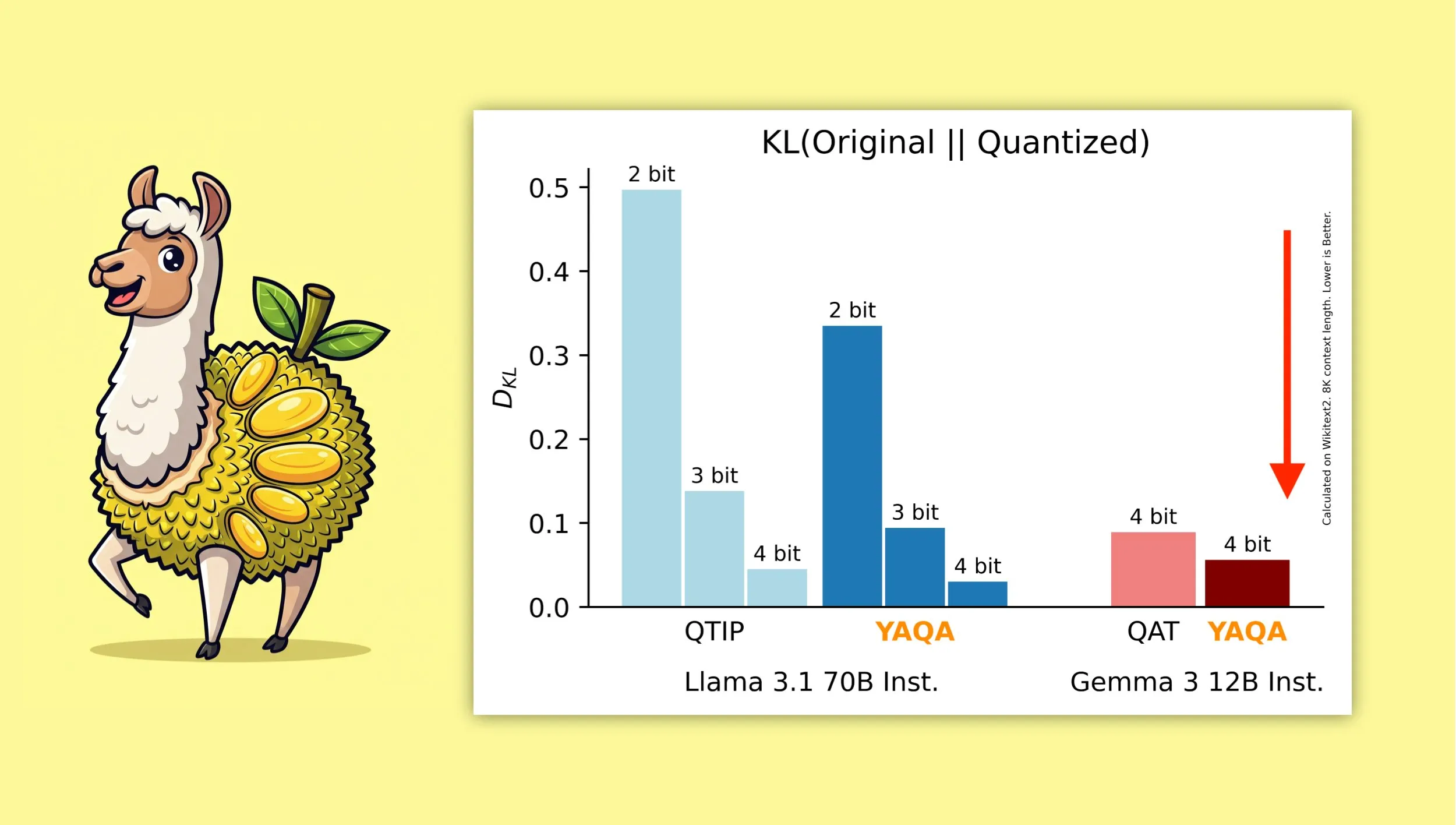

YAQA: A New Quantization Algorithm Aimed at Better Preserving Original Model Output : Researchers from Cornell University have introduced “Yet Another Quantization Algorithm” (YAQA), a new quantization algorithm designed to better preserve the original model’s output after quantization. YAQA is claimed to reduce KL divergence by over 30% compared to QTIP and achieves lower KL divergence on Gemma 3 than Google’s QAT model. This research provides new ideas and tools for the field of model quantization, helping to maximize model performance while reducing model size and computational requirements. The relevant paper and code have been released, along with a pre-quantized Llama 3.1 70B Instruct model (Source: Reddit r/MachineLearning, Reddit r/LocalLLaMA, tri_dao, simran_s_arora)
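
The metric mentioned here, KL divergence between the original and quantized model’s output distributions, can be computed as in the sketch below. The logits are made-up numbers for a three-token vocabulary, and this shows only the evaluation metric, not YAQA’s quantization procedure.

```python
import math


def softmax(logits):
    """Convert logits to probabilities, subtracting the max for stability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in nats: how much q diverges from the reference p."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical next-token logits from the original and a quantized model.
original_logits = [2.0, 1.0, 0.1]
quantized_logits = [1.8, 1.1, 0.2]

p = softmax(original_logits)
q = softmax(quantized_logits)
kl = kl_divergence(p, q)
print(f"KL(original || quantized) = {kl:.5f} nats")
```

A value of zero means the quantized model reproduces the original distribution exactly, so a lower KL divergence indicates output that stays closer to the unquantized model.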

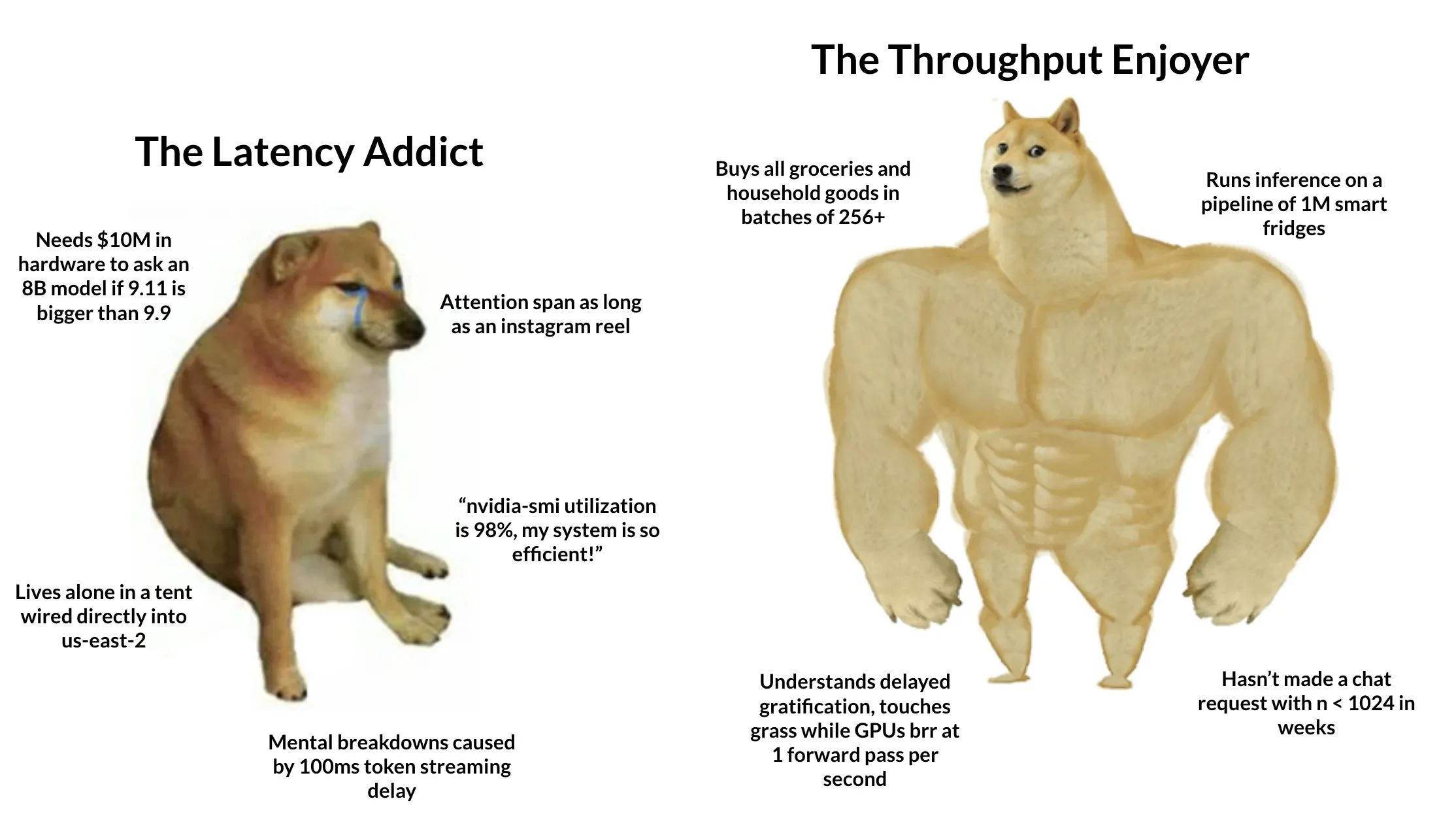

Tokasaurus: Engine Designed for High-Throughput LLM Inference Released : HazyResearch has released Tokasaurus, a new LLM inference engine designed specifically for high-throughput workloads, suitable for both large and small models. The engine aims to optimize the processing efficiency and speed of LLMs in scenarios with large numbers of concurrent requests, potentially employing advanced techniques such as continuous batching and paged attention to enhance performance. The release of Tokasaurus offers a new option for developers and enterprises needing to efficiently handle a large volume of LLM inference tasks (Source: Tim_Dettmers)

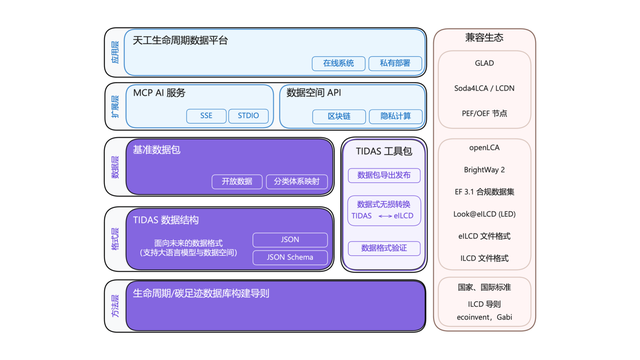

Carbon Footprint “Android” System TIDAS Released, Ant Digital Provides Technical Support : The Carbon Footprint Industry Technology Innovation Alliance has released the “Tiangong LCA Data System” (TIDAS), which provides solutions for Life Cycle Assessment (LCA) and carbon footprint database construction, with the goal of becoming an “Android”-style common platform for LCA and carbon footprint databases in China and globally. Ant Digital, as a core member, provided TIDAS with blockchain technology and a trusted data collaboration platform. Its proprietary blockchain technology handles trusted registration and rights confirmation of carbon data assets, while privacy computing technology keeps data “usable but not visible,” improving data standardization, integration, and interoperability (Source: QbitAI)

📚 Learning

LangChain Hosts Enterprise AI Workshop, Focusing on Multi-Agent Systems : LangChain will host an enterprise AI workshop in San Francisco on June 16th. Jake Broekhuizen from LangChain will guide participants in building production-ready multi-agent systems using LangGraph, covering key aspects such as security and observability. This is a hands-on workshop aimed at helping developers master the skills to build complex, reliable AI Agent applications (Source: LangChainAI, hwchase17)

DeepLearning.AI Launches New Course “DSPy: Build and Optimize Agentic Apps” : DeepLearning.AI has released a new course titled “DSPy: Build and Optimize Agentic Apps.” The course will teach students the fundamentals of DSPy, how to use its signature and module-based programming model to build modular, traceable, and debuggable GenAI Agentic applications. Content includes building applications by linking DSPy modules like Predict, ChainOfThought, and ReAct, using MLflow for tracing and debugging, and leveraging the DSPy Optimizer to automatically tune prompts and improve few-shot examples to enhance answer accuracy and consistency (Source: DeepLearningAI, lateinteraction)

Advanced RAG Technology Tutorial GitHub Project Gains Attention : A RAG (Retrieval-Augmented Generation) technology tutorial project shared by NirDiamant on GitHub has garnered 16.6K stars. The tutorial covers a wide range of topics, including preprocessing for enhanced retrieval, optimization, retrieval patterns, iteration, and engineering steps. This is a valuable advanced learning resource for developers looking to delve deeper into and improve the effectiveness of RAG applications (Source: karminski3)

How OpenAI Customers Use Evals to Build Better AI Products : Hamel Husain promoted a webinar hosted by OpenAI’s Jim Blomo, which will discuss how OpenAI customers leverage evaluation tools (Evals) to build higher-quality AI products. The content will include real-world case studies and results, and showcase OpenAI’s internal evaluation tools (such as tracing, scoring, etc.). The webinar aims to provide developers with practical insights and methods for AI product evaluation (Source: HamelHusain)

LlamaIndex Shares Overview of 13 Agent Protocols, Discussing Interoperability Standards : Seldo from LlamaIndex gave an overview presentation at the MCP Developer Summit on 13 different inter-Agent communication protocols currently in use (including MCP, A2A, ACP, etc.). He analyzed the unique features of each protocol, their positioning in the current technological landscape, and future development trends. The presentation aims to help developers understand and choose suitable communication standards for their Agent applications, promoting interoperability within the Agent ecosystem (Source: jerryjliu0)

Claude Code Architecture Analysis: Control Flow, Orchestration Engine, and Tool Execution : An article provides an in-depth analysis of Claude Code’s architecture, focusing on its control flow and orchestration engine, as well as its tool and execution engine. This analysis is valuable for developers looking to create similar command-line coding assistant tools or make custom modifications, and its design principles are also applicable to the development of other types of Agent tools (Source: karminski3)

AMD GPU FP8 Matrix Multiplication Kernel Competition Second Place Solution Shared : Tim Dettmers shared the solution from the second-place winner of the AMD GPU FP8 matrix multiplication kernel competition. A detailed interpretation of this solution is highly valuable for understanding how to optimize low-precision floating-point operation performance on AMD GPUs, especially as FP8 and other low-precision formats are increasingly adopted in AI model training and inference to improve efficiency (Source: Tim_Dettmers)

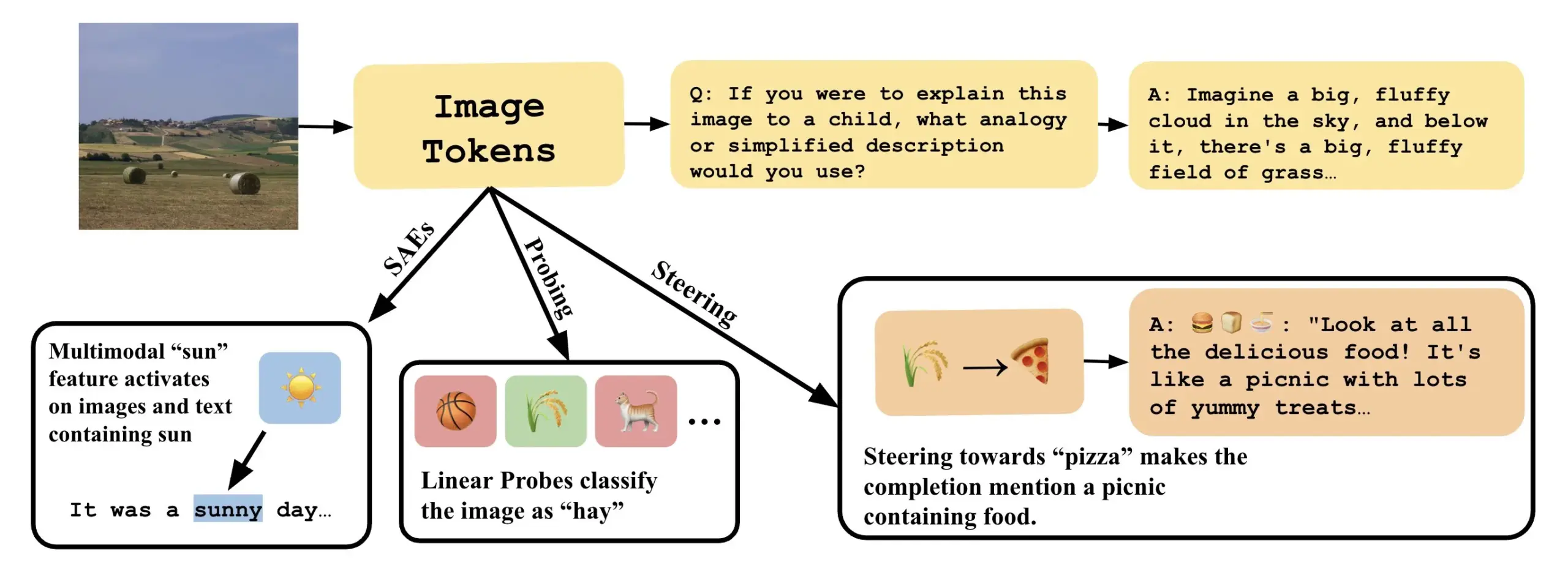

Paper Explores Understanding Visual Language Models by Explaining Linear Directions in VLLMs : A new paper titled “Line of Sight” explores understanding the internal mechanisms of Visual Large Language Models (VLLMs) by explaining linear directions in their latent space. Researchers use tools such as probing, steering, and sparse autoencoders (SAEs) to interpret image representations in VLLMs. This work provides new perspectives and methods for understanding the internal workings of multimodal models (Source: nabla_theta)

💼 Business

AI Startup Vareon Secures $3 Million Pre-Seed Funding from Norck, Focusing on Frontier AI and Autonomous Systems : Norck, founded by Faruk Guney, has committed $3 million in milestone-based pre-seed funding to its newly established AI startup, Vareon. Vareon focuses on frontier AI, causal inference, and autonomous systems, with MALPAC (Multi-Agent Learning Architecture for Planning and Closed-Loop Optimization) at its core. The company aims to become a foundational AI research firm, driving advancements in robotics, LLMs, molecular design, cognitive architectures, and autonomous agents. Also launched are RAPID (differentiable planning framework), CIMO (causal multi-scale coordinator), SCA (bio-inspired cognitive architecture), and Lumon-XAI (explainability layer) (Source: farguney)

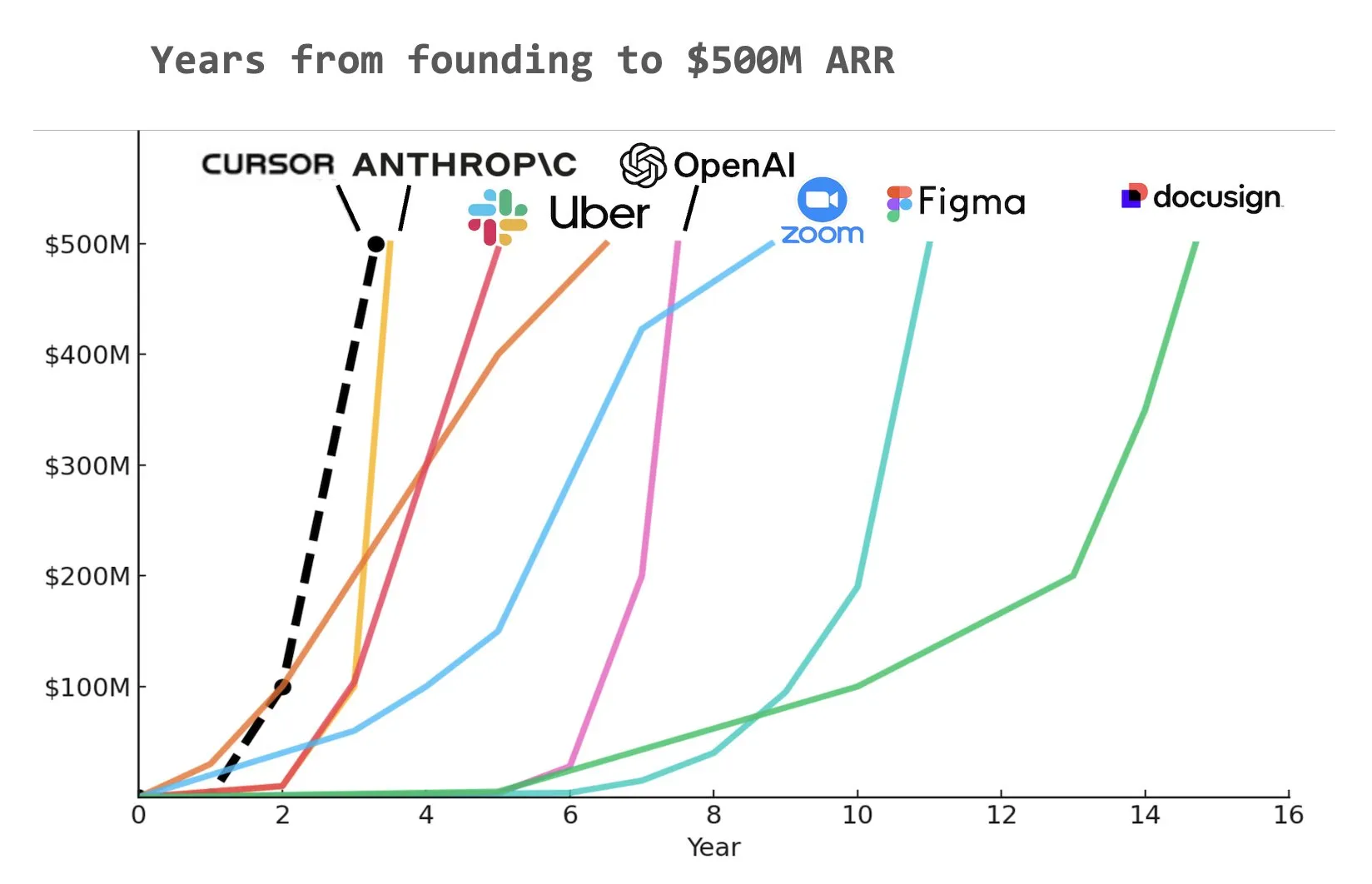

AI Coding Tool Cursor Secures $900 Million Series C Funding, ARR Reaches $500 Million : AI coding tool startup Cursor announced the completion of a $900 million Series C funding round led by Thrive, Accel, Andreessen Horowitz, and DST. The company revealed its Annual Recurring Revenue (ARR) has surpassed $500 million and is used by over half of Fortune 500 companies, including NVIDIA, Uber, and Adobe. This funding round will help Cursor further advance the frontiers of research in the AI coding field. Some analysts note that Cursor may be one of the fastest companies in history to reach $500 million in ARR (Source: cursor_ai, Yuchenj_UW, op7418)

Anthropic Cuts Off Windsurf’s Direct Access to Claude Models, Possibly Due to OpenAI Acquisition Rumors : Anthropic co-founder and Chief Scientist Jared Kaplan stated that the company cut off AI programming assistant Windsurf’s direct access to Claude models primarily due to market rumors that Windsurf was about to be acquired by OpenAI. Kaplan said, “selling Claude to OpenAI would be weird,” and indicated that Anthropic prefers to allocate its computing resources to long-term, stable partners. Nevertheless, Anthropic is actively establishing collaborations with other AI programming tool developers (like Cursor) and emphasized a future focus on developing AI programming products with autonomous decision-making capabilities, such as Claude Code (Source: dotey, vikhyatk, jeremyphoward, swyx)

🌟 Community

OpenAI’s Greg Brockman: Future of AGI More Like Diverse Specialized Agent Collaboration Than a Single Model : OpenAI’s Greg Brockman believes the future form of Artificial General Intelligence (AGI) will be more like a “zoo” of numerous specialized agents rather than a single, omnipotent “monolith” model. These specialized Agents will be able to call upon each other, work collaboratively, and jointly drive economic development. This viewpoint hints at the future trend of AI development: achieving more complex and powerful intelligent systems by building and integrating multiple AI Agents with specific capabilities, aiming to unlock 10x or more activity and output. Clement Delangue commented on this, stating the need for open-source AI robotics to break monopolies and prevent a single company from controlling all robots (Source: natolambert, ClementDelangue, HamelHusain)

LLMs Show Potential in Academic Writing and Content Summarization, Sparking Reflection on Human Writing Quality : Dwarkesh Patel believes LLMs are currently “5/10” writers, but the fact that they can reliably improve explanations in papers and books is itself a huge indictment of the quality of academic writing. Arvind Narayanan further pointed out that much academic writing sacrifices clarity for the sake of appearing profound and complex, whereas good writing should strive for conciseness. This has sparked discussion about the role of LLMs in assisting academic research and improving readability, and about how they might change academic communication in the future (Source: random_walker, jeremyphoward)

AI Coding Tools Spark Discussion on Developer Dependency, Claude Code Noted for Powerful Features and High Token Consumption : User dotey believes that AI programming tools such as Claude Code can easily create strong dependency, to the point of preferring to wait for the AI to finish rather than coding manually as long as credits are available. Although the Claude Max subscription has usage limits, its coding capabilities (such as excellent instruction understanding, task planning, grep tool usage, and long execution times) make it an efficient tool. This has sparked discussion about how AI tools are changing developer work habits and about the balance between efficiency and dependency. Another user, Asuka小能猫, showcased a case of efficiently completing front-end development using Claude-4-Opus and Cursor Max mode, while also noting the high token consumption (Source: dotey, dotey)

AI-Driven Personalized Education Shows Great Potential, But Implementation Challenges Need Attention : Austen Allred shared his child’s five-month experience attending an AI-driven school (without teachers), describing the results as “insane.” Noah Smith commented that one-on-one tutoring is an effective educational intervention, and AI makes it scalable. This has sparked discussions about AI applications in education, including personalized learning paths, the potential of AI tutors, and how to ensure educational equity and overcome technological implementation challenges. Jon Stokes retweeted and is following this trend (Source: jonst0kes, jeremyphoward)

AI Agent and Human Emotional Connection Draws Attention, OpenAI Emphasizes Prioritizing User Well-being Research : OpenAI’s Joanne Jang published a blog post discussing the human-AI relationship and the company’s stance on it. The core idea is that OpenAI builds models to serve people first, and as more people form emotional connections with AI, the company is prioritizing research into the impact on users’ emotional well-being. Corbtt commented that AI companions are the most transformative social technology since the internet, and if companies optimize for engagement rather than mental health, the negative impact on children could be greater than that of social media; however, if optimized for mental health, it could be a boon for humanity. cto_junior humorously envisioned a future scenario of needing to discuss with children “whether it’s appropriate to marry a GPT” (Source: cto_junior, corbtt)

AI Agent Technology Develops Rapidly, But End-to-End Sparse Reinforcement Learning Tasks Remain Challenging : Nathan Lambert believes that current projects like Deep Research and Codex agent are primarily achieved by training models on short-horizon reinforcement learning (RL) tasks and general robustness. However, end-to-end training on very sparse RL tasks seems further off than people imagine. Corbtt commented on this, stating that even humans have not effectively mastered how to train under long-horizon tasks and sparse reward signals. This reflects the current limitations of AI Agent technology in handling complex, long-term planning and autonomous learning (Source: corbtt)

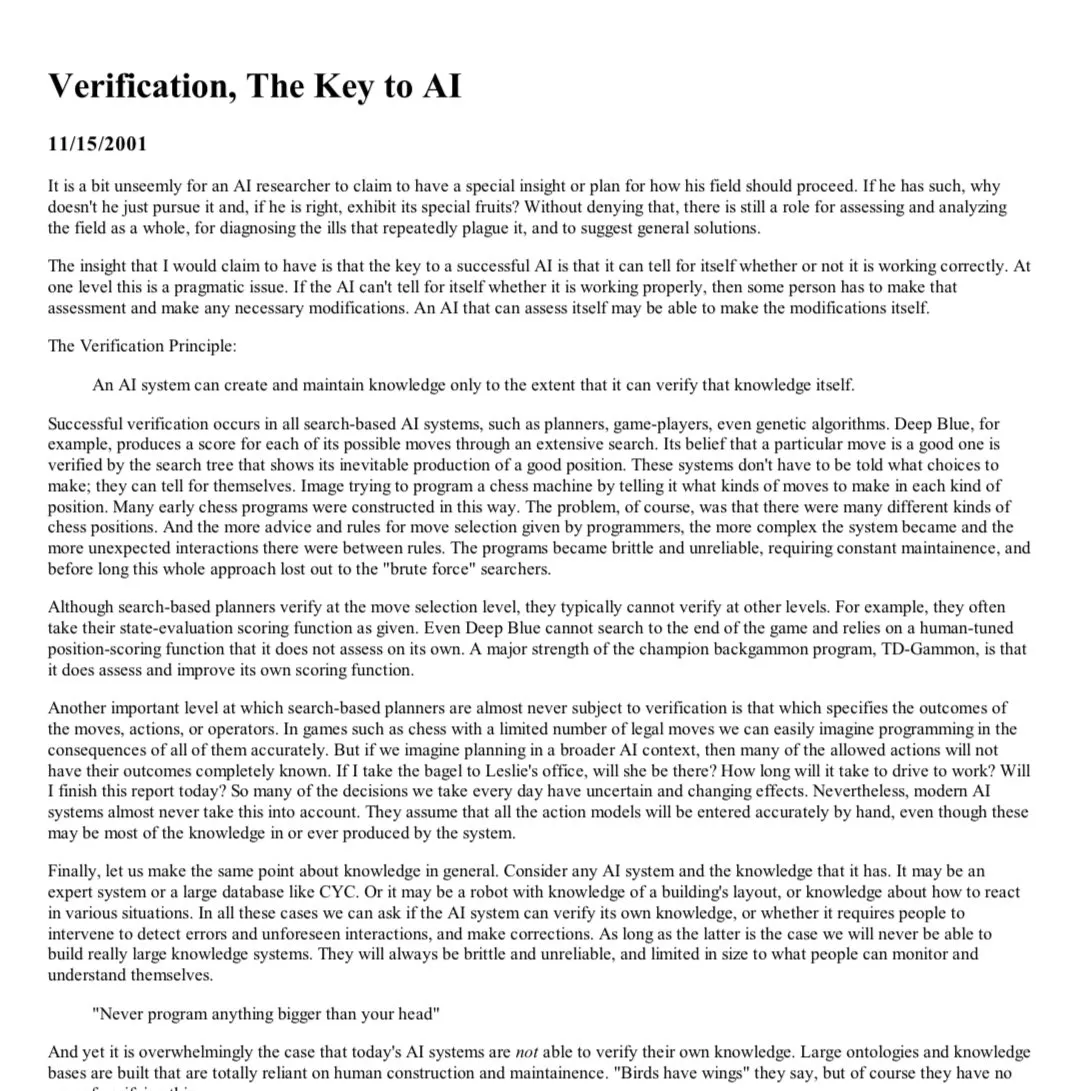

The “Bitter Lesson” in AI: Verification Becomes Key for Reasoning LLMs : Rishabh Agarwal delivered a presentation at the CVPR Multimodal Reasoning Workshop titled “The Bitter Lesson of RL: Verification as the Key for Reasoning LLMs.” Inspired by Rich Sutton’s classic article on “The Bitter Lesson,” the talk explored the importance of verification mechanisms in reinforcement learning and large language model reasoning. This may imply that relying solely on a model’s own generation capabilities is insufficient, and robust verification and feedback mechanisms are crucial for enhancing AI’s reasoning abilities and reliability (Source: jack_w_rae)

AI Development Sparks Job Market Concerns, Experts Hold Diverging Views : Klarna CEO Sebastian Siemiatkowski warned that AI could trigger an economic recession by causing mass unemployment, especially in white-collar jobs. Klarna itself has replaced 700 customer service agents with AI assistants, saving about $40 million annually. Anthropic researcher Sholto Douglas also predicted that AI capabilities will be very powerful by 2027-28. Others argue that AI will increase productivity and create new jobs; Sundar Pichai, for example, has said that AI will be an accelerator and will not lead to layoffs at least before 2026. An AI Explained video analyzed whether current headlines about AI-induced unemployment are justified and discussed the back-and-forth at Duolingo and Klarna over their use of AI. These discussions reflect society’s general anxiety and differing expectations regarding AI’s economic impact (Source: Reddit r/ArtificialInteligence, Reddit r/ClaudeAI, Reddit r/ArtificialInteligence)

Future Paths for AI Agent Interaction with Existing Networks/APIs Explored : As AI Agents become more capable of interacting with the web autonomously, how they interact with existing websites and APIs becomes an infrastructure question. Three possible paths were proposed in discussions: 1. Rebuild from scratch with Agent-native protocols (impractical); 2. Teach Agents to operate websites like humans (high error rate, especially with authentication); 3. Make HTTP “speak Agent language,” for example, by enriching non-success responses like 402 (Payment Required) with machine-readable context, allowing Agents to autonomously authenticate and purchase access. The core idea is that rich contextual information in non-successful Web/API responses will be key for autonomous Agents to perform meaningful work, enabling them to recover from errors automatically and navigate complex processes (Source: Reddit r/ArtificialInteligence)
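
The third path can be sketched in a few lines of Python. This is a minimal illustration rather than an established protocol: the JSON field names (`error`, `price_cents`, `pay_url`), the `HttpResponse` type, and both helper functions are hypothetical and do not come from the discussion or from any HTTP standard. It shows a server attaching machine-readable context to a 402 response, and an Agent deciding from that context whether to pay and retry, escalate to a human, or give up.

```python
import json
from dataclasses import dataclass


@dataclass
class HttpResponse:
    """Simplified stand-in for an HTTP response (no network involved)."""
    status: int
    headers: dict
    body: str


def build_payment_required(resource: str, price_cents: int, pay_url: str) -> HttpResponse:
    """Server side: a 402 whose body tells an Agent how to recover.

    All field names below are hypothetical, chosen for illustration only.
    """
    context = {
        "error": "payment_required",
        "resource": resource,
        "price_cents": price_cents,
        "currency": "USD",
        "pay_url": pay_url,
    }
    return HttpResponse(402, {"Content-Type": "application/json"}, json.dumps(context))


def plan_next_action(resp: HttpResponse, budget_cents: int) -> dict:
    """Agent side: turn a response into the next step."""
    if resp.status == 200:
        return {"action": "done"}
    if resp.status != 402:
        return {"action": "give_up", "reason": f"unhandled status {resp.status}"}

    # A 402 is only recoverable if the body is machine-readable.
    try:
        ctx = json.loads(resp.body)
    except json.JSONDecodeError:
        return {"action": "give_up", "reason": "402 without machine-readable context"}
    if not isinstance(ctx, dict):
        return {"action": "give_up", "reason": "unexpected 402 body shape"}

    price = ctx.get("price_cents")
    pay_url = ctx.get("pay_url")
    if not isinstance(price, int) or not pay_url:
        return {"action": "give_up", "reason": "402 context is incomplete"}

    # Stay within the budget the Agent was given; otherwise escalate.
    if price > budget_cents:
        return {"action": "ask_human", "reason": "price exceeds budget"}
    return {"action": "pay_and_retry", "pay_url": pay_url, "price_cents": price}


if __name__ == "__main__":
    resp = build_payment_required("/reports/42", 250, "https://example.com/pay")
    print(plan_next_action(resp, budget_cents=500))
```

The contrast with path 2 is in the failure branch: a plain HTML "please pay" page gives the Agent nothing to act on, while a structured body turns the same status code into a recoverable step.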

Progress in AI-Assisted Mathematical Research, Terence Tao and Others Focus on its Potential and Limitations : Mathematicians are actively exploring the application of AI to complex mathematical problems. Terence Tao shared a case where AI (AlphaEvolve) and humans together broke the sum-product set exponent record three times in 30 days. He also tackled an “ε-δ” limit problem using the Lean language and GitHub Copilot, demonstrating AI’s ability to assist novices, handle basic tasks, and predict proof structures, while pointing out its shortcomings in complex derivations and in finding mathematical lemmas. Another report claimed that 30 top mathematicians tested OpenAI o4-mini in a secret meeting and found it could solve some extremely difficult problems, exhibiting a level approaching mathematical genius. These advancements suggest AI could become a powerful assistant in mathematical research, but also raise new questions about the role of mathematicians and the cultivation of creativity (Source: 36Kr)

💡 Others

Race for GPS Alternatives Heats Up, Xona Space Systems Plans Low-Orbit PNT Constellation : GPS signals are susceptible to interference (weather, 5G towers, jammers) and offer limited accuracy, a vulnerability highlighted by the Russia-Ukraine conflict, so finding alternatives has become a strategic priority. California startup Xona Space Systems plans to launch a Low Earth Orbit satellite constellation named Pulsar (eventually 258 satellites). Because its satellites orbit lower, they provide signal strength about 100 times that of GPS, making the signals harder to jam and better at penetrating obstacles. The aim is to offer centimeter-level accuracy and highly reliable positioning, navigation, and timing (PNT) services to support emerging technologies like autonomous driving. The first test satellite is scheduled to launch this month aboard SpaceX Transporter 14 (Source: MIT Technology Review)

Research Explores Positive Impact of Hope and Optimism on Cardiac Patients’ Recovery : Recent research indicates that hope and optimism in cardiac patients are associated with better health outcomes, while despair is linked to a higher risk of mortality. This aligns with the phenomena of the placebo effect (positive expectations improving outcomes) and the nocebo effect (negative expectations leading to adverse symptoms). Alexander Montasem and colleagues at the University of Liverpool found that high levels of hope are associated with reduced angina, less fatigue after stroke, improved quality of life, and lower mortality risk. Researchers are exploring how to harness the power of positive thinking in clinical settings, for example, by “prescribing hope” through helping patients set goals and enhance agency, while also emphasizing that non-material goals are more important for well-being (Source: MIT Technology Review)

Apple and Alibaba’s AI Service Rollout in China Delayed, Possibly Due to Trade Frictions : According to the Financial Times, Apple’s and Alibaba’s plans to roll out AI services in China have been delayed, in what is seen as the latest casualty of US-China trade frictions. The collaboration was intended to provide AI feature support for iPhones sold in China. The delay could affect the deployment schedule of Apple’s AI features in the Chinese market and adds uncertainty to the two companies’ cooperation (Source: MIT Technology Review)