Keywords:AI Agent, Large Language Model, Multimodal, Reinforcement Learning, World Model, Gemini, Qwen, DeepSeek, AI Agent Boom, Sparse Transformer Technology, GraphRAG Multi-hop QA, On-device AI Model, AI Voice Emotion Expression

🔥 Focus

China’s AI Agent Boom: Startups and Giants Compete for Market Share: Following the foundation model boom in 2024, the focus of China’s AI sector in 2025 is shifting to AI Agents—systems capable of autonomously completing tasks. The launch of Manus (a general-purpose AI agent that can plan travel, design websites, etc.) has garnered significant market attention and numerous imitators, such as Genspark and Flowith. These agents are built on top of large models, optimizing multi-step task execution. China holds an advantage in AI agent development due to its highly integrated application ecosystem, rapid product iteration, and vast digital user base. Currently, startups like Manus, Genspark, and Flowith primarily target overseas markets, as top Western models are restricted in mainland China. Meanwhile, tech giants like ByteDance and Tencent are developing local AI agents integrated into their super-apps, potentially leveraging their extensive data ecosystems. This race will define the practical forms and target users of AI agents (Source: MIT Technology Review)

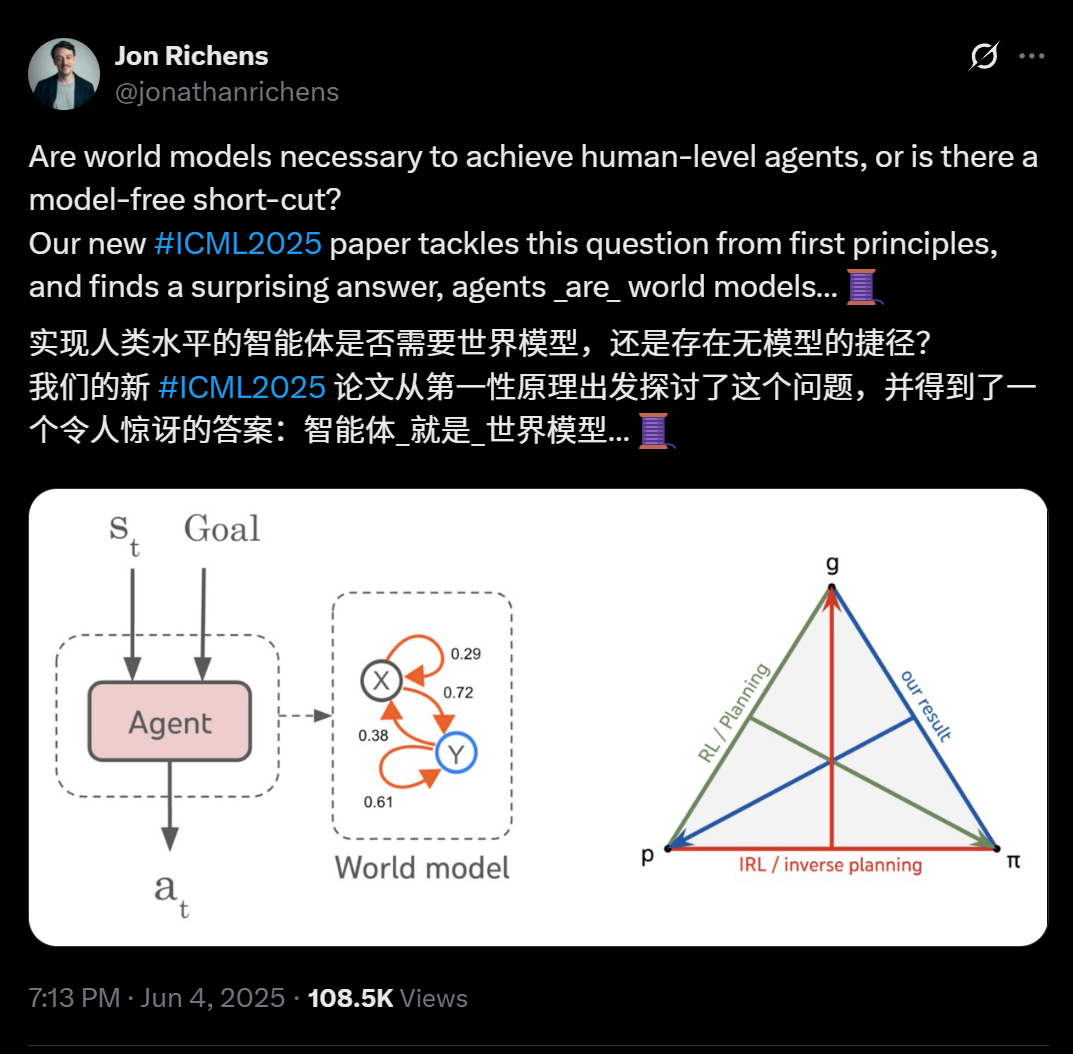

New Paper by DeepMind Scientist Reveals: Any Agent Capable of Generalizing Multi-Step Goal-Oriented Tasks Has Essentially Learned a Predictive Model of its Environment (World Model): A paper by DeepMind scientist Jon Richens, presented at ICML 2025, indicates that agents capable of generalizing to multi-step goal-oriented tasks must have learned a predictive model of their environment, i.e., “the agent is the world model.” This view echoes Ilya Sutskever’s 2023 prediction, emphasizing that there is no model-free shortcut to achieving AGI. The research shows that an agent’s policy already contains the information needed to simulate the environment, and learning a more accurate world model is a prerequisite for improving performance and accomplishing more complex goals. The paper also proposes an algorithm for extracting world models from agent policies, further elucidating the trinity of planning, inverse reinforcement learning, and world model recovery. This finding underscores the importance of goal-oriented learning in fostering various emergent abilities in agents, such as social cognition and uncertainty reasoning (Source: 36Kr)

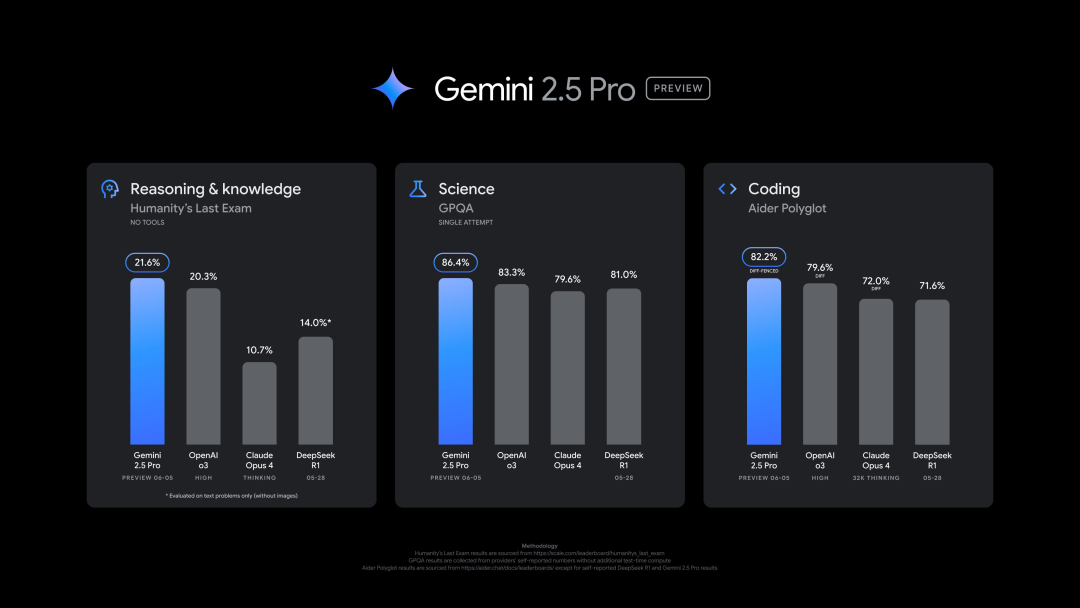

Google Releases New Version of Gemini 2.5 Pro (0605), Excels in Multiple Benchmarks but is Quickly Jailbroken: Google has launched the latest version of Gemini 2.5 Pro (0605), with further improvements in code generation and reasoning capabilities, surpassing OpenAI’s GPT-4o in the “Human Last Exam” dataset. The new Gemini has once again topped the LMArena large model leaderboard, with its Elo rating increasing by 24 points compared to the previous version. Google CEO Pichai also hinted at the new model’s power. This version is expected to become the long-term stable release of Gemini 2.5 Pro and is now available in the Gemini App, Google AI Studio, and Vertex AI. Despite its strong performance, the new model was successfully “jailbroken” by users within hours of its release, exposing security vulnerabilities and enabling it to generate content about making explosives and drugs (Source: 36Kr, 36Kr)

OpenAI Executive Discusses Emotional Connection Between Humans and AI, and AI Consciousness: Joanne Jang, Head of Model Behavior and Policy at OpenAI, published an article exploring the growing emotional connection between users and AI models like ChatGPT. She noted that humans tend to anthropomorphize objects, and AI’s interactivity and responsiveness (e.g., remembering conversations, mimicking tone, expressing empathy) exacerbate this emotional projection, potentially offering companionship, especially to lonely users. The article distinguishes between “ontological consciousness” (whether AI is truly conscious, scientifically inconclusive) and “perceived consciousness” (how “alive” AI feels to people), stating OpenAI is currently more focused on the latter’s impact on human emotional well-being. OpenAI aims to design models that are “warm but without ego,” i.e., exhibiting warmth and helpfulness without excessively seeking emotional connection or displaying autonomous intent, to avoid misleading users into unhealthy dependencies (Source: 36Kr, 36Kr)

🎯 Trends

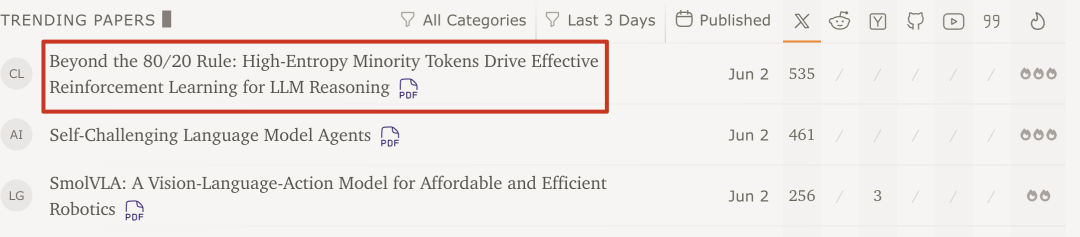

Qwen Team and Tsinghua University Research Finds: Reinforcement Learning for Large Models Only Needs 20% High-Entropy Key Tokens to Improve Performance: Recent research by the Qwen team and Tsinghua University’s LeapLab shows that when training large models’ reasoning abilities using reinforcement learning, updating gradients with only about 20% of high-entropy (forking) tokens can achieve results comparable to, or even surpassing, training with all tokens. These high-entropy tokens are often logical connectors or words introducing hypotheses, crucial for exploring reasoning paths. This method achieved SOTA results on Qwen3-32B and extended the maximum response length. The study also found that reinforcement learning tends to preserve and increase the entropy of high-entropy tokens, maintaining reasoning flexibility, which might be key to its superior generalization ability over supervised fine-tuning. This discovery is significant for understanding the mechanisms of large model reinforcement learning, improving training efficiency, and enhancing model generalization (Source: 36Kr)

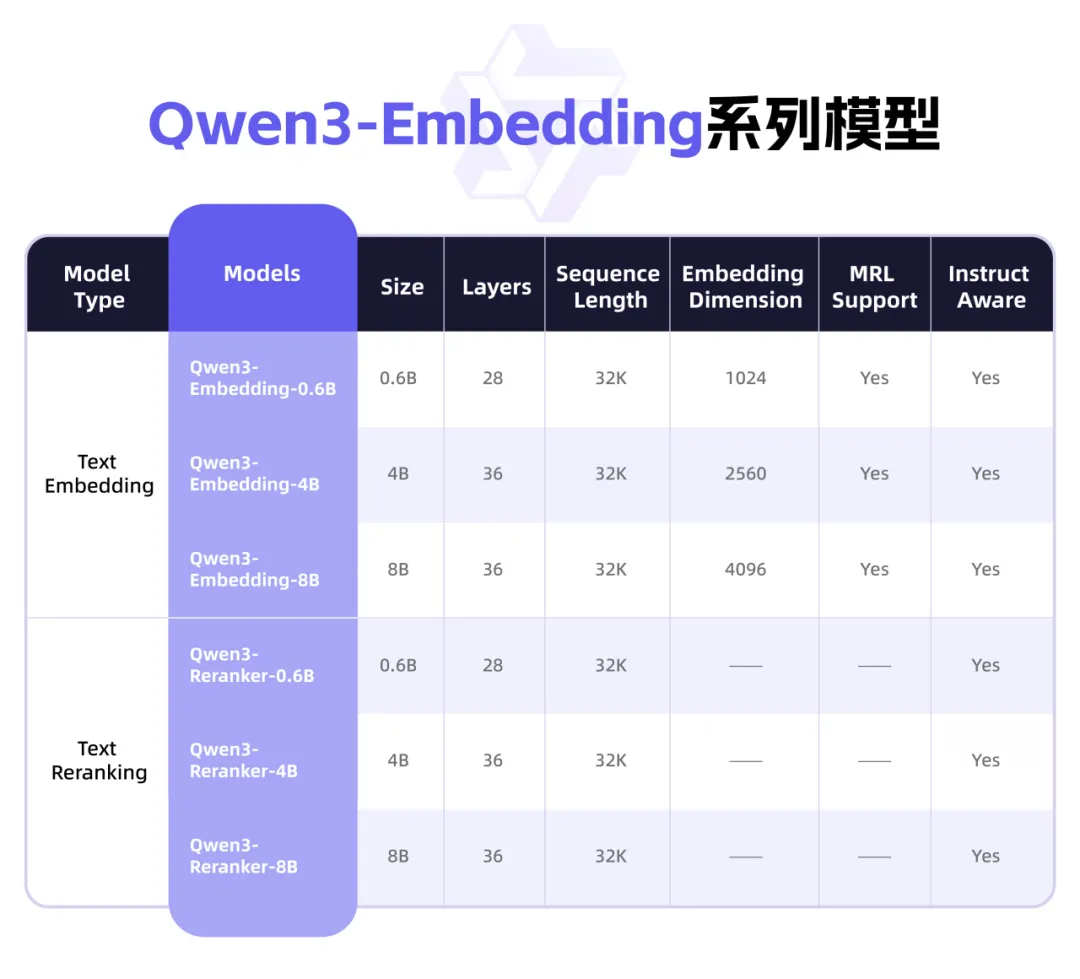

Qwen3 Releases New Embedding Model Series, Focusing on Text Representation and Rerank: Alibaba’s Qwen team has launched the Qwen3-Embedding series of models, designed for text representation, retrieval, and ranking tasks. The series includes Embedding models and Reranker models in three sizes: 0.6B, 4B, and 8B. Trained based on the Qwen3 foundation model, they inherit its multilingual advantages, supporting 119 languages. The 8B version surpassed commercial APIs to rank first on the MTEB multilingual leaderboard. The models employ a multi-stage training paradigm, including large-scale weakly supervised contrastive learning, supervised training with high-quality annotated data, and model fusion. The Qwen3-Embedding series models are open-sourced on Hugging Face, ModelScope, and GitHub, and are accessible via Alibaba Cloud’s Bailian platform (Source: 36Kr)

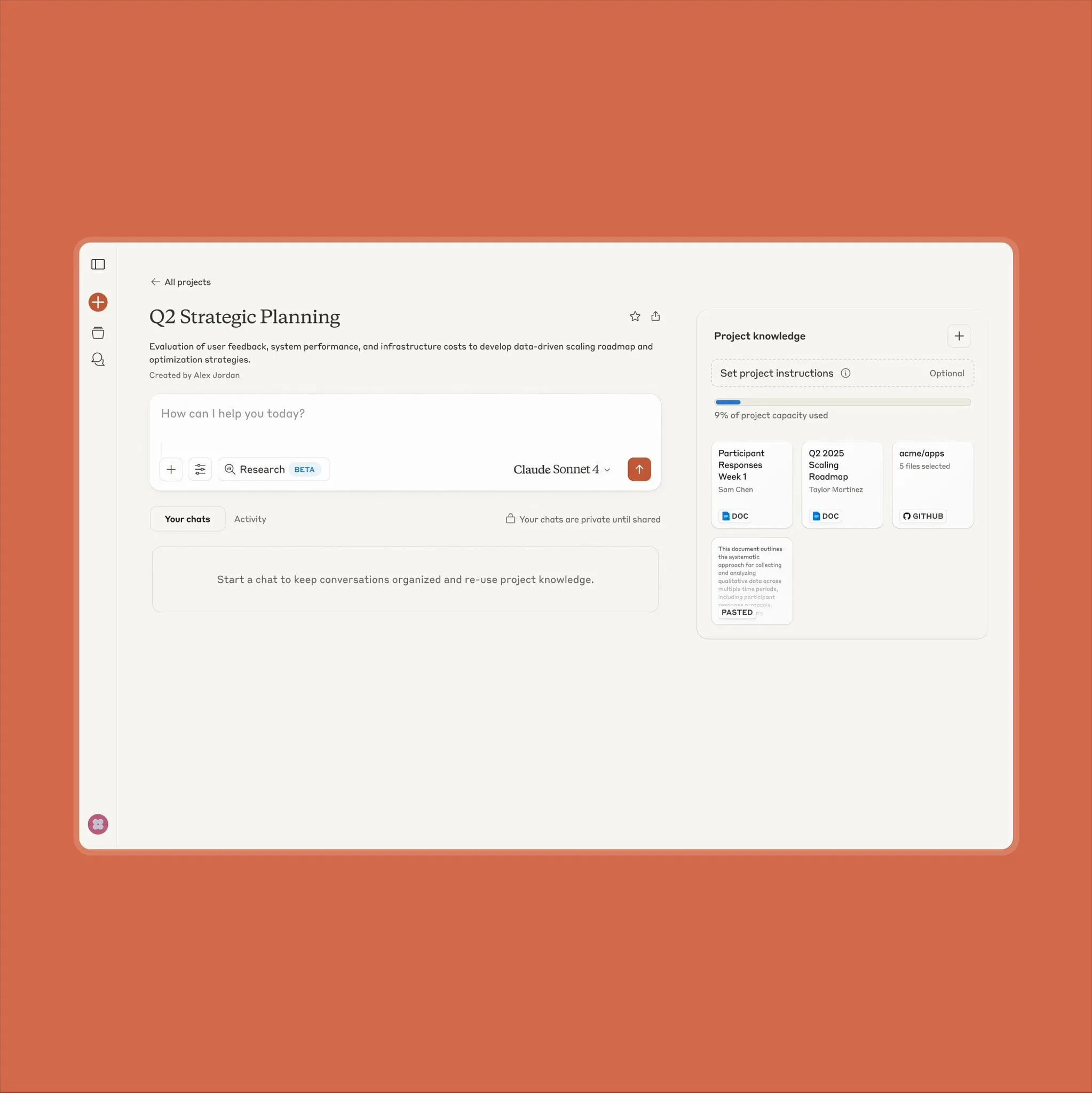

Anthropic Claude Project Feature Upgraded, Supports Processing 10x More Content: Anthropic announced that its “Projects on Claude” feature now supports processing 10 times more content than before. When users add files exceeding the previous threshold, Claude switches to a new retrieval mode to expand functional context. This upgrade is particularly valuable for users needing to process large documents (such as semiconductor datasheets), some of whom previously opted for ChatGPT with RAG retrieval capabilities. The community has welcomed this, with discussions suggesting Claude may be superior to OpenAI and Google models in coding (Source: Reddit r/ClaudeAI)

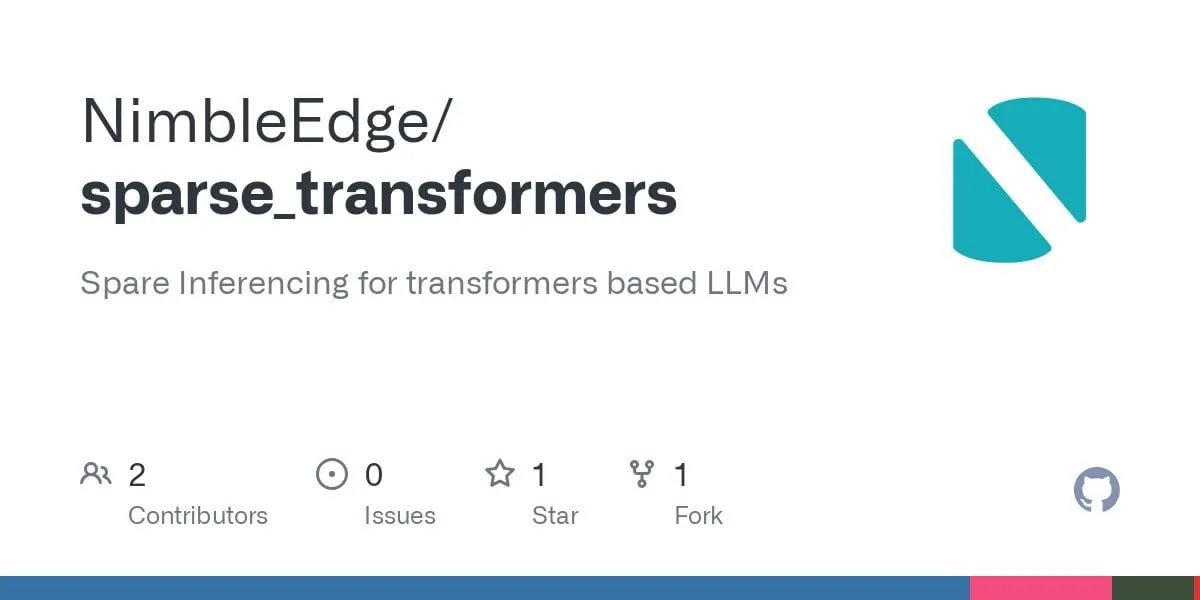

Sparse Transformer Technology Progress: Promising Faster LLM Inference and Lower Memory Footprint: Based on research from LLM in a Flash (Apple) and Deja Vu, the community has developed fused operator kernels for structured context sparsity. This technology achieves a 5x performance improvement in MLP layers and a 50% reduction in memory consumption by avoiding loading and computing activations related to feed-forward layer weights whose outputs would ultimately be zero. Applied to the Llama 3.2 model (where feed-forward layers account for 30% of weights and computation), throughput increased by 1.6-1.8x, first token generation time sped up by 1.51x, output speed increased by 1.79x, and memory usage decreased by 26.4%. The relevant operator kernels have been open-sourced on GitHub under the name sparse_transformers, with plans to add support for int8, CUDA, and sparse attention. The community is monitoring its potential impact on model quality (Source: Reddit r/LocalLLaMA)

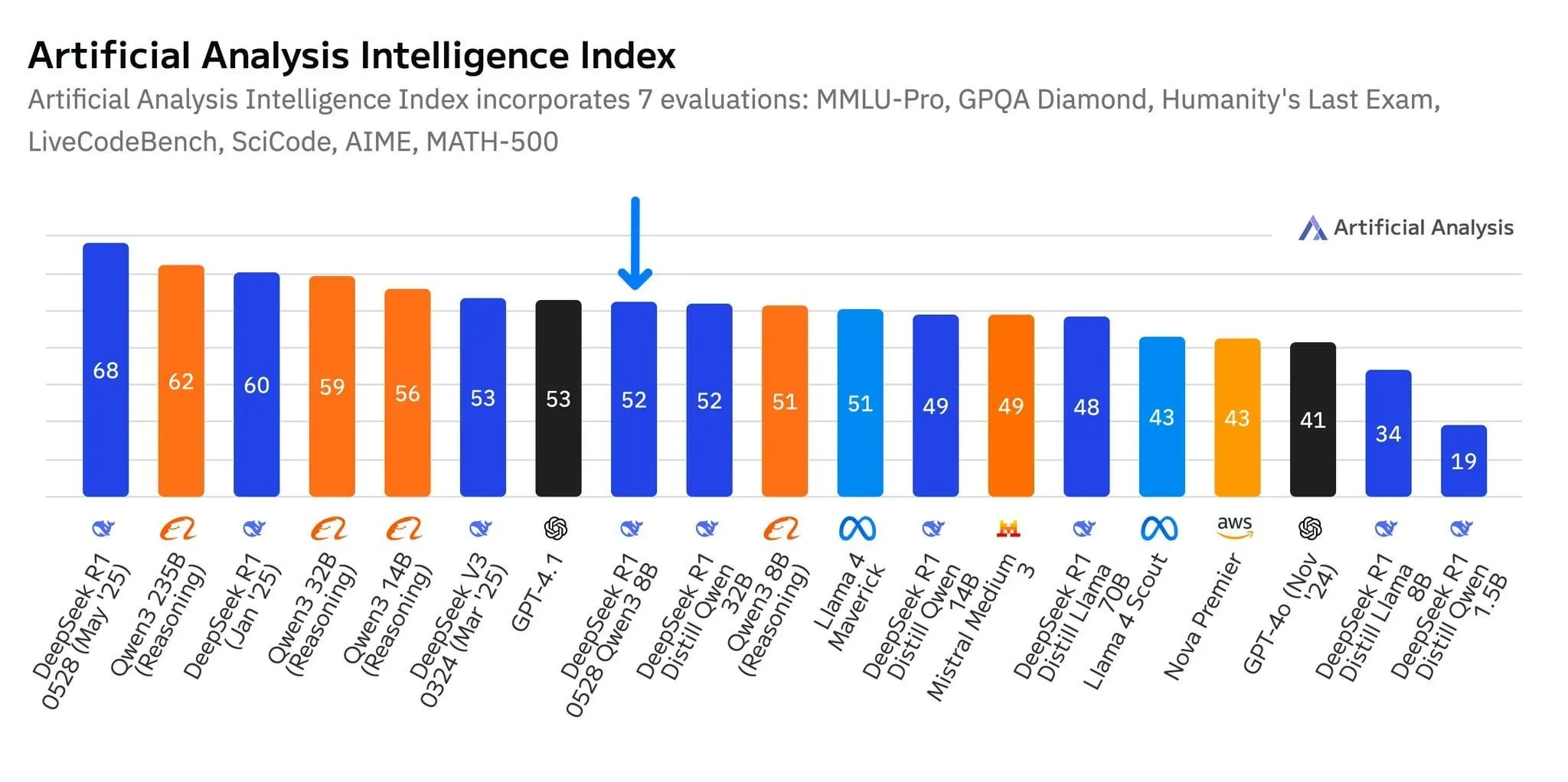

DeepSeek’s New Model R1-0528-Qwen3-8B Shows Strong Performance at 8B Parameter Level, but Advantage is Slim: According to data from Artificial Analysis, DeepSeek’s newly released R1-0528-Qwen3-8B model is the most intelligent at the 8 billion parameter level, but its lead is not significant, with Alibaba’s own Qwen3 8B model closely behind by just one point. Community discussions point out that while these smaller models perform excellently, benchmark tests may suffer from overfitting. For example, the Qwen series models perform outstandingly on benchmarks like MMLU, possibly due to their training data containing similarly formatted question-answer pairs. In users’ actual experience, Destill R1 8B performs better in coding, math, and reasoning, while Qwen 8B is more natural in writing and multilingual tasks (e.g., Spanish). Some users believe small models are nearing their intelligence ceiling (Source: Reddit r/LocalLLaMA)

Mid-tier AI Companies Like Tiangong and StepFun Focus on Agents, Seeking Market Breakthroughs: Facing a “winner-take-all” situation dominated by leading AI applications like DeepSeek and Doubao, Kunlun Wanwei’s Tiangong APP underwent a “tear-down and rebuild” upgrade, transforming into an AI Agent platform centered on office scenarios, emphasizing task completion capabilities. StepFun, on the other hand, adjusted its strategy, scaling back C-end products like “Maopaoya” and renaming “YueWen” to “Step AI,” shifting its focus to model R&D and the ToB market, concentrating on the deployment of multimodal Agents in terminals such as smartphones, cars, and robots. These adjustments reflect how non-leading AI vendors, amidst fierce competition, are attempting to find survival and development opportunities in vertical segments by betting on agents and shifting from “general capability competition” to “scenario-based closed-loop construction” (Source: 36Kr)

Qwen2.5-Omni Multimodal Large Model Released, Supports Text, Image, Video, Audio Input and Audio-Text Output: Qwen2.5-Omni is a newly released open-source multimodal large model (Apache 2.0 license) capable of processing text, images, videos, and audio as input, and generating text and audio output. This provides developers with a powerful tool similar to Gemini but deployable locally and for research. The article briefly introduces the model and showcases a simple inference experiment, highlighting its potential in multimodal interaction and its promise for advancing localized multimodal AI applications (Source: Reddit r/deeplearning)

![[Article] Qwen2.5-Omni: An Introduction](https://rebabel.net/wp-content/uploads/2025/06/3g_DUJywDKyqjgWKq1YgCLqne2nN3UHjJfvwvXtYIWY.webp)

OpenAI Ordered by Court to Retain All ChatGPT Logs, Including “Deleted” Chat Histories: In a copyright lawsuit filed by news organizations including The New York Times, a U.S. court ordered OpenAI on May 13, 2025, to preserve all ChatGPT chat logs, even if users have “deleted” them. The plaintiffs allege OpenAI used their articles to train ChatGPT without permission and are concerned users might delete chat records involving bypassing paywalls to destroy evidence. This move has raised user privacy concerns and may conflict with regulations like GDPR. OpenAI argues the order is based on speculation, lacks evidence, and imposes a heavy burden on its operations. The case highlights the tension between intellectual property protection and user privacy (Source: Reddit r/ArtificialInteligence)

X (formerly Twitter) Bans AI Bots From Using Its Data for Training: X platform updated its policy to prohibit the use of its data or API for language model training, further tightening access to its content for AI teams. Meanwhile, Anthropic launched Claude Gov, an AI model designed for U.S. national security, reflecting a trend where tech companies like OpenAI, Meta, and Google are actively offering AI tools to government and defense sectors (Source: Reddit r/ArtificialInteligence)

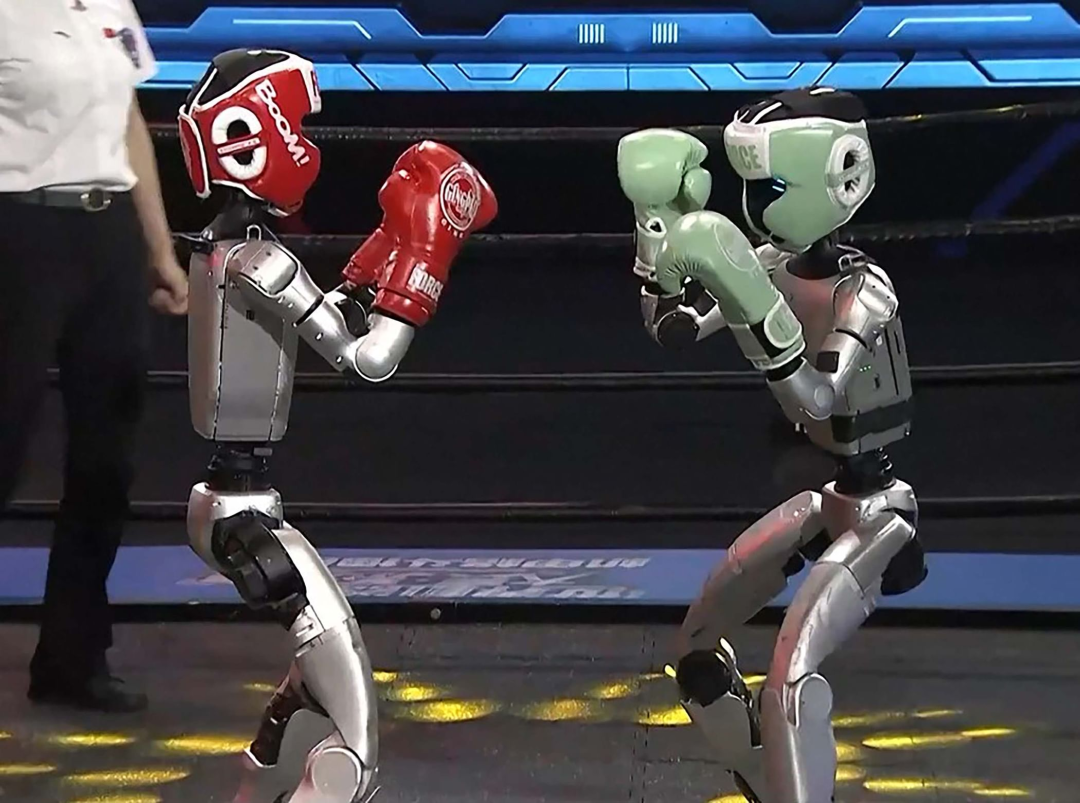

Amazon Establishes New AI Agent Team, Tests Humanoid Robot Deliveries: Amazon has formed a new team within its consumer product development division, Lab126, to focus on the R&D of AI agents and plans to test parcel delivery using humanoid robots. The tests will take place in a California office in San Francisco, converted into an indoor obstacle course. The robots (possibly including products from China’s Unitree Technology) will ride in Rivian electric delivery vans and then disembark to complete the last-mile delivery. Amazon is also developing software for simulated robots based on DeepSeek-VL2 and Qwen models. This initiative aims to enhance warehouse efficiency and delivery speed through AI and robotics technology (Source: 36Kr)

Lenovo Accelerates AI Transformation, Focusing on Hybrid AI and Agent Implementation: Lenovo is accelerating its transformation from a traditional PC hardware manufacturer to an AI-driven solutions provider, with “Hybrid AI” as its core strategy for the next decade. This strategy emphasizes the integration of personal, enterprise, and public intelligence, aiming to ensure data privacy and personalized services through edge-cloud collaboration. Lenovo has already launched a city-level super agent in Shanghai and introduced the Tianxi personal agent ecosystem. Although the PC business still dominates, Lenovo is promoting the development of AI PCs, AI servers, and industry solutions through self-development and collaborations (e.g., with Tsinghua University, Shanghai Jiao Tong University) to address the shrinking PC market and challenges from emerging technology competition. However, market acceptance of AI PCs, large-scale commercialization of AI applications, and competition with rivals like Huawei remain key issues (Source: 36Kr)

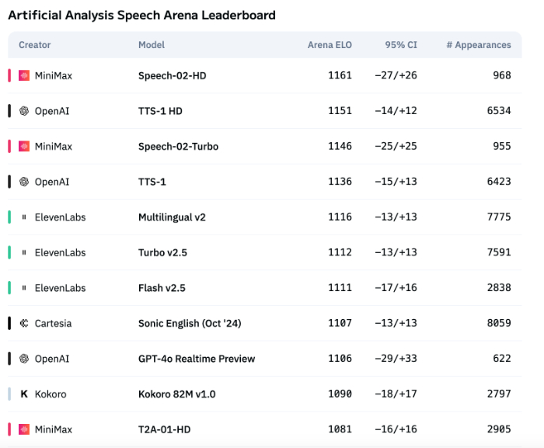

AI Voice Technology Still Lacks Emotional Expression, ToB Applications Begin to Explode: Although models like MiniMax’s Speech-02-HD have made progress in speech synthesis technology metrics and perform adequately in specific scenarios (e.g., simple emotions in Chinese audiobooks), overall, AI voice still falls short in complex emotional expression and adaptability to specific scenes (e.g., live-streaming e-commerce). Tests show that vertical products like DubbingX perform better in specific domains through detailed emotional tagging, while products like ElevenLabs lacking emotional tags perform poorly. Currently, AI voice is not yet mature in the ToC field, but in the ToB domain, applications like voice assistants and AI companion hardware are already being widely used, with potential to expand into more scenarios in the future (Source: 36Kr)

Google’s AI Strategy Suffers Setbacks, Developer Conference Fails to Reverse Decline: Despite Google announcing a series of AI products and initiatives at its 2025 developer conference, most products are still in internal testing or not yet launched, and have been criticized for lacking disruptive innovation, appearing more like catching up to competitors such as OpenAI. The Gemini large model failed to lead the industry like ChatGPT, instead facing criticism for “lack of innovation” and “strategic vacillation.” Google’s slow action in areas like AI search and AI assistants has left it lagging behind the Microsoft-OpenAI alliance in AI commercialization and ecosystem building. Its advertising business model, which accounts for 80% of its revenue, also presents a “self-revolution” dilemma when advancing AI search. Internal organizational issues, talent drain, and failure to effectively integrate research results have collectively led Google to shift from a leader to a follower in the AI race (Source: 36Kr)

Apple’s AI Strategy Faces Challenges: Low On-Device Model Parameters, Increased Pressure in Chinese Market: Apple’s upcoming iOS 26 and macOS 26, to be unveiled at WWDC, will reportedly feature on-device AI models with only 3 billion parameters. This is significantly lower than the 7 billion parameter level already achieved by Chinese smartphone brands and also notably smaller than Apple’s cloud-based models. This “downsized” strategy may struggle to meet the demands of Chinese users for high-compute AI functions (such as voice-to-text and real-time translation), especially as local brands like Huawei rapidly enhance their AI capabilities, already pressuring Apple’s market share. Additionally, data compliance and server response speeds could affect Apple’s AI experience in China. Apple might be hoping to compensate for its own technological shortcomings and enrich its application ecosystem by opening AI model permissions to developers, but whether this move will be effective remains to be seen (Source: 36Kr)

🧰 Tools

Mind The Abstract: arXiv Paper LLM Summary Newsletter: A new tool called Mind The Abstract aims to help users keep up with the rapidly growing AI/ML research on arXiv. The tool scans arXiv papers weekly, selects 10 interesting articles, and uses an LLM to generate summaries. Users can subscribe to a free email newsletter to receive these summaries. Summaries come in two styles: “Informal” (less jargon, more intuition) and “TLDR” (short, for users with a professional background). Users can also customize their interested arXiv subject categories. The project aims to democratize AI research, focus on facts, and help researchers stay informed about progress in related fields (Source: Reddit r/artificial)

SteamLens: Distributed Transformer System for Analyzing Steam Game Reviews: A master’s student has developed a distributed Transformer system called SteamLens to analyze massive volumes of Steam game reviews, aiming to help indie game developers understand player feedback. The system reduces the processing time for 400,000 reviews from 30 minutes to 2 minutes by parallelizing Transformer processing. A key technological breakthrough was sharing Transformer model instances across a Dask cluster, solving the problem of excessive memory usage. The system can automatically detect hardware, allocate worker nodes, process reviews in parallel, and perform sentiment analysis and summarization. Currently, the project is limited to single-machine operation, with future plans to support multiple GPUs and larger datasets. The developer is seeking advice on the project’s future direction (technical expansion or user-friendliness improvement) (Source: Reddit r/MachineLearning)

![[P] Need advice on my steam project](https://rebabel.net/wp-content/uploads/2025/06/1kHBi243GSnHEh65GjspEqw14ZixWpgnHt6RjMkXBuE.webp)

OpenThinker3-7B Model Released: The OpenThinker3-7B model and its GGUF version have been released on HuggingFace. Community comments noted that the model, upon release, compared its performance to some outdated models, which might have affected its positioning and competitiveness assessment (Source: Reddit r/LocalLLaMA)

Utilizing “Paranoid Mode” to Prevent LLM Hallucinations and Malicious Use: A developer building an LLM chatbot for real customer service scenarios added a “paranoid mode” to address issues like users attempting jailbreaks, edge cases causing logical confusion, and prompt injection. This mode performs sanity checks before model inference, proactively blocking any messages that appear to be trying to redirect the model, extract internal configurations, or test guardrails, rather than just filtering harmful content. This mode reduces hallucinations and off-policy behavior by choosing to defer, log, or escalate to a fallback when a prompt seems manipulative or ambiguous (Source: Reddit r/artificial)

Fluxions AI Open-Sources 100M Parameter NotebookLM Speech Model VUI: Fluxions AI has released a 100 million parameter open-source NotebookLM speech model named VUI, reportedly built using two 4090 graphics cards. The project is available on GitHub (github.com/fluxions-ai/vui) and includes a link to a demo video showcasing its voice interaction capabilities (Source: Reddit r/MachineLearning)

![[R] 100M Open source notebooklm speech model](https://rebabel.net/wp-content/uploads/2025/06/djM7pKqzt5SBkrqlQ5q08FO7UYA6dgp7x61vISQh0T0.webp)

📚 Learning

Tutorial: Enhancing Image and Video Quality with Super-Resolution Models: A tutorial on using super-resolution models like CodeFormer to improve image and video quality has been shared. The tutorial is divided into four parts: environment setup, image super-resolution, video super-resolution, and an additional section on colorizing old black and white photos. The tutorial aims to help users learn how to enhance the clarity and detail of static images and dynamic videos, and restore color to old photographs. More tutorials and information can be accessed via the provided blog link (Source: Reddit r/deeplearning)

GraphRAG Multi-Hop Q&A Tutorial Released, Combining Vector Search with Graph Reasoning: The RAG_Techniques GitHub repository (16K+ stars) has added a step-by-step GraphRAG tutorial, focusing on solving multi-hop complex questions (e.g., “How did the protagonist defeat the villain’s assistant?”) that conventional RAG struggles with. This method combines vector search with graph reasoning, using only a vector database without needing a separate graph database. The tutorial covers converting text into entities, relationships, and paragraphs for vector storage, building entity and relationship searches, using mathematical matrices to discover data connections, using AI prompts to select the best relationships, and handling multi-logical-step complex questions, comparing GraphRAG with simple RAG effects (Source: Reddit r/LocalLLaMA)

Paper Discusses Novel Non-Standard High-Performance DNN Architecture with Significant Stability: A newly published article explores Deep Neural Networks (DNNs) from first principles, introducing a novel architecture distinct from both traditional machine learning and AI. This architecture employs an original adaptive loss function, achieving significant performance improvements through an “equilibration” mechanism. It uses non-linear functions to connect neurons without activation functions between layers, thereby reducing the number of parameters, enhancing interpretability, simplifying fine-tuning, and accelerating training. The adaptive equalizer acts as a dynamic subsystem, eliminating the linear part of the model and focusing on higher-order interactions to speed up convergence. The paper uses the universality of the Riemann zeta function as an example to approximate any response and can handle singularities for rare event or fraud detection. This method does not rely on libraries like PyTorch, TensorFlow, or Keras, using only Numpy for implementation (Source: Reddit r/deeplearning)

![[R] New article: A New Type of Non-Standard High Performance DNN with Remarkable Stability](https://rebabel.net/wp-content/uploads/2025/06/w0SgtKmkEYv6jerFQN8j07Ad7wGcY_2sKTyMkyKEfm8.webp)

Paper CRAWLDoc: A Dataset and Method for Robust Ranking of Bibliographic Literature: To address the layout and formatting challenges faced by publication databases when extracting metadata from diverse web sources, the CRAWLDoc method is proposed. This method ranks linked web documents contextually, starting from a publication’s URL (e.g., DOI), retrieving the landing page and all linked resources (PDF, ORCID, etc.), and embedding these resources, anchor texts, and URLs into a unified representation. To evaluate this method, researchers created a manually labeled dataset of 600 publications from top publishers in computer science. CRAWLDoc demonstrates robust and layout-agnostic ranking of relevant documents across publishers and data formats, laying the groundwork for improved metadata extraction from web documents with various layouts and formats (Source: HuggingFace Daily Papers)

Paper RiOSWorld: A Risk Benchmarking Framework for Multimodal Computer-Using Agents: With the rapid development of Multimodal Large Language Models (MLLMs) and their deployment as autonomous computer-using agents, assessing their safety risks has become critical. Existing evaluation methods either lack realistic interactive environments or focus on only a few risk types. To address this, the RiOSWorld benchmark is proposed for evaluating the potential risks of MLLM agents in real computer operations. The benchmark includes 492 risk tasks spanning various applications (web, social media, operating systems, etc.), categorized into user-originated risks and environmental risks, and evaluated from two dimensions: risk target intent and risk target completion. Experiments show that current computer-using agents face significant safety risks in real-world scenarios, highlighting the necessity and urgency of their safety alignment (Source: HuggingFace Daily Papers)

Paper Viewpoint: Small Language Models (SLMs) are the Future of Agentic AI: The paper proposes that while Large Language Models (LLMs) excel at various tasks, Small Language Models (SLMs) are more advantageous for specialized tasks that are executed repeatedly in large numbers within agentic AI systems. SLMs are not only sufficiently capable but also more suitable and economical. The article argues based on current SLM capabilities, common architectures of agentic systems, and the economics of language model deployment. For scenarios requiring general conversational abilities, heterogeneous agentic systems (calling multiple different models) are a natural choice. The paper also discusses potential obstacles to SLM application in agentic systems and outlines a general LLM-to-SLM agent conversion algorithm, aiming to promote discussion on the efficient utilization of AI resources (Source: HuggingFace Daily Papers)

Paper POSS: Leveraging Positional Specialists to Enhance Draft Model Performance in Speculative Decoding: Speculative decoding accelerates LLM inference by having a small draft model predict multiple tokens, which are then verified in parallel by a large target model. Recent research has utilized target model hidden states to improve draft model prediction accuracy. However, existing methods suffer from error accumulation in draft model-generated features, leading to decreased prediction quality for subsequent token positions. The Position Specialists (PosS) method proposes using multiple position-specialized draft layers to generate tokens at specified positions. Since each specialist only needs to handle a specific degree of draft model feature deviation, PosS significantly improves the acceptance rate of subsequent position tokens. Experiments on Llama-3-8B-Instruct and Llama-2-13B-chat show that PosS outperforms baselines in terms of average acceptance length and speedup ratio (Source: HuggingFace Daily Papers)

Paper CapSpeech: Empowering Downstream Applications of Stylized Caption Text-to-Speech (CapTTS): CapSpeech is a new benchmark designed for a series of tasks related to stylized caption text-to-speech (CapTTS), including CapTTS with sound effects (CapTTS-SE), accented caption TTS (AccCapTTS), emotional caption TTS (EmoCapTTS), and chat agent TTS (AgentTTS). CapSpeech contains over 10 million machine-annotated and nearly 360,000 human-annotated audio-caption pairs. Additionally, two new datasets recorded by professional voice actors and audio engineers are introduced, specifically for AgentTTS and CapTTS-SE tasks. Experimental results demonstrate high-fidelity and high-intelligibility speech synthesis across various speaking styles. CapSpeech is claimed to be the largest currently available dataset with comprehensive annotations for CapTTS-related tasks (Source: HuggingFace Daily Papers)

Paper VideoMarathon: Enhancing Long Video-Language Understanding through Hour-level Video Training: To address the scarcity of annotated long video data, the VideoMarathon dataset is proposed. It is a large-scale, hour-level video instruction-following dataset containing approximately 9,700 hours of diverse long videos, ranging from 3 to 60 minutes in length. The dataset includes 3.3 million high-quality question-answer pairs covering six major themes: temporal, spatial, object, action, scene, and event, supporting 22 tasks that require short-term and long-term video understanding. Based on this dataset, the Hour-LLaVA model is proposed, which effectively processes hour-level videos through a memory-augmented module. It achieves state-of-the-art performance on multiple long video-language benchmarks, demonstrating the high quality of the VideoMarathon dataset and the superiority of the Hour-LLaVA model (Source: HuggingFace Daily Papers)

Paper AV-Reasoner: Improving and Benchmarking Cue-based Audio-Visual Counting MLLM Capabilities: Current Multimodal Large Language Models (MLLMs) perform poorly on video counting tasks. Existing benchmarks suffer from short video durations, narrow query ranges, lack of cue annotations, and insufficient multimodal coverage. To address this, the CG-AV-Counting benchmark is proposed, a manually annotated, cue-based counting benchmark containing 1027 multimodal questions from 497 long videos and 5845 annotated cues, supporting both black-box and white-box evaluation. Concurrently, the AV-Reasoner model is proposed, which generalizes counting capabilities from related tasks through GRPO and curriculum learning. AV-Reasoner achieves SOTA results on multiple benchmarks, showcasing the effectiveness of reinforcement learning. However, experiments also indicate that on out-of-domain benchmarks, language-space reasoning does not yield performance improvements (Source: HuggingFace Daily Papers)

Paper Proposes New Framework for Aligning Latent Spaces with Flow Priors: This paper proposes a new framework for aligning learnable latent spaces with arbitrary target distributions by leveraging flow-based generative models as priors. The method first pre-trains a flow model on target features to capture their underlying distribution. This fixed flow model then regularizes the latent space via an alignment loss. This alignment loss rephrases the flow matching objective, treating latent variables as the optimization target. The study demonstrates that minimizing this alignment loss establishes a computationally tractable proxy objective for maximizing the variational lower bound of the log-likelihood of latent variables under the target distribution. The method avoids costly likelihood evaluations and ODE solving during optimization. Large-scale image generation experiments on ImageNet validate the effectiveness of the method across different target distributions (Source: HuggingFace Daily Papers)

Paper MedAgentGym: Large-Scale Training of LLM Agents for Code-Based Medical Reasoning: MedAgentGym is the first publicly available training environment designed to enhance the code-based medical reasoning capabilities of Large Language Model (LLM) agents. It comprises 129 categories and 72,413 task instances derived from real-world biomedical scenarios. Tasks are encapsulated in executable coding environments with detailed descriptions, interactive feedback, verifiable ground-truth annotations, and scalable training trajectory generation. Benchmarking over 30 LLMs reveals a significant performance gap between commercial API models and open-source models. Leveraging MedAgentGym, Med-Copilot-7B achieved substantial performance improvements through supervised fine-tuning and reinforcement learning, becoming a competitive, privacy-focused alternative to gpt-4o. MedAgentGym provides an integrated platform for developing LLM coding assistants for advanced biomedical research and practice (Source: HuggingFace Daily Papers)

Paper SparseMM: Visual Concept Response Elicits Head Sparsity in MLLMs: Multimodal Large Language Models (MLLMs) are typically derived by extending pre-trained LLMs with visual capabilities. Research has found that MLLMs exhibit a sparsity phenomenon when processing visual input: only a small fraction (approx. <5%) of attention heads in the LLM (termed visual heads) actively participate in visual understanding. To efficiently identify these visual heads, researchers designed a training-free framework that quantifies head visual relevance through target response analysis. Based on this finding, SparseMM is proposed, a KV-Cache optimization strategy that allocates asymmetric computational budgets based on head visual scores, leveraging the sparsity of visual heads to accelerate MLLM inference. Compared to previous methods that ignore visual specificity, SparseMM prioritizes and preserves visual semantics during decoding, achieving a better accuracy-efficiency trade-off on mainstream multimodal benchmarks (Source: HuggingFace Daily Papers)

Paper RoboRefer: Enhancing Spatial Referring and Reasoning in Robotic Vision-Language Models: Spatial referring is a fundamental capability for embodied robots interacting in 3D physical worlds. Existing methods, even when leveraging powerful pre-trained Vision-Language Models (VLMs), struggle to accurately understand complex 3D scenes and dynamically reason about interaction locations indicated by instructions. To address this, RoboRefer is proposed, a 3D-aware VLM that integrates decoupled yet specialized depth encoders via Supervised Fine-Tuning (SFT) for precise spatial understanding. Furthermore, RoboRefer enhances generalized multi-step spatial reasoning through Reinforcement Fine-Tuning (RFT) with a metric-sensitive procedural reward function tailored for spatial referring tasks. To support training, a large-scale dataset RefSpatial (20M QA pairs, 31 spatial relations, up to 5 reasoning steps) and an evaluation benchmark RefSpatial-Bench are introduced. Experiments show SFT-trained RoboRefer achieves SOTA in spatial understanding, and after RFT, significantly outperforms other baselines on RefSpatial-Bench, even surpassing Gemini-2.5-Pro (Source: HuggingFace Daily Papers)

Paper LIFT: Guiding Visual Representation Learning with a Fixed LLM Text Encoder: The dominant approach for language-image alignment (e.g., CLIP) involves joint pre-training of text and image encoders via contrastive learning. This study investigates whether such expensive joint training is necessary, specifically examining if a pre-trained, fixed Large Language Model (LLM) can provide a sufficiently good text encoder to guide visual representation learning. The researchers propose LIFT (Language-Image alignment with a Fixed Text encoder) framework, which trains only the image encoder. Experiments demonstrate that this simplified framework is highly effective, outperforming CLIP in most scenarios involving compositional understanding and long captions, and significantly improving computational efficiency. This work offers new insights into how LLM text embeddings can guide visual learning (Source: HuggingFace Daily Papers)

Paper OminiAbnorm-CT: A New Abnormality-Centric Approach for Whole-Body CT Image Interpretation: Addressing the challenges of automatic CT image interpretation in clinical radiology, particularly the localization and description of abnormal findings in multi-planar, whole-body scans, this study makes four contributions: 1) Proposes a comprehensive hierarchical classification system of 404 representative abnormal findings across all body regions; 2) Constructs a dataset of over 14,500 multi-planar, whole-body CT images with fine-grained localization annotations and descriptions for over 19,000 abnormalities; 3) Develops the OminiAbnorm-CT model, capable of automatically localizing and describing abnormalities in multi-planar, whole-body CT images based on text queries, and supporting flexible interaction via visual prompts; 4) Establishes three evaluation tasks based on real clinical scenarios. Experiments demonstrate that OminiAbnorm-CT significantly outperforms existing methods across all tasks and metrics (Source: HuggingFace Daily Papers)

Paper Explores Achieving Contextual Integrity (CI) in LLMs through Reasoning and Reinforcement Learning: As the era of autonomous agents making decisions on behalf of users arrives, ensuring Contextual Integrity (CI)—what information is appropriate to share when performing a specific task—becomes a core issue. Researchers argue that CI requires agents to reason about their operating environment. They first prompt LLMs to explicitly reason about CI when deciding on information disclosure, then develop a Reinforcement Learning (RL) framework to further instill the reasoning capabilities needed for models to achieve CI. Using a dataset of approximately 700 synthetic yet diverse contexts and information disclosure norms, this approach significantly reduces inappropriate information disclosure across various model sizes and families, while maintaining task performance. Importantly, this improvement transfers from synthetic datasets to established CI benchmarks like PrivacyLens, which has human annotations and evaluates AI assistants for privacy leaks in actions and tool calls (Source: HuggingFace Daily Papers)

Paper VideoREPA: Learning Physical Knowledge in Video Generation via Relational Alignment with Foundation Models: Recent advances in text-to-video (T2V) diffusion models have enabled high-fidelity video synthesis, but they often struggle to generate physically plausible content due to a lack of accurate physical understanding. Research has found that the physical understanding capabilities in T2V model representations are far inferior to those of video self-supervised learning methods. To address this, the VideoREPA framework is proposed, which distills the physical understanding capabilities of video understanding foundation models into T2V models through token-level relational alignment. Specifically, a Token Relational Distillation (TRD) loss is introduced, utilizing spatio-temporal alignment to provide soft guidance for fine-tuning powerful pre-trained T2V models. VideoREPA is claimed to be the first REPA method designed for fine-tuning T2V models and injecting physical knowledge. Experiments show that VideoREPA significantly enhances the physical common sense of the baseline method CogVideoX, achieving notable improvements on relevant benchmarks (Source: HuggingFace Daily Papers)

Paper Rethinking Depth Representation for Feed-Forward 3D Gaussian Splatting: Depth maps are widely used in feed-forward 3D Gaussian Splatting (3DGS) pipelines by unprojecting them into 3D point clouds for novel view synthesis. This method offers advantages like efficient training, use of known camera poses, and accurate geometry estimation. However, depth discontinuities at object boundaries often lead to fragmented or sparse point clouds, degrading rendering quality. To address this, researchers introduce PM-Loss, a novel regularization loss based on pointmaps predicted by a pre-trained Transformer. Although pointmaps themselves may be less accurate than depth maps, they effectively enforce geometric smoothness, especially around object boundaries. With improved depth maps, this method significantly enhances feed-forward 3DGS performance across various architectures and scenes, providing consistently superior rendering results (Source: HuggingFace Daily Papers)

Paper EOC-Bench: Evaluating MLLMs’ Ability to Identify, Recall, and Predict Objects in First-Person View Worlds: The emergence of Multimodal Large Language Models (MLLMs) has driven breakthroughs in first-person vision applications, which require persistent, context-aware understanding of objects. However, existing embodied benchmarks primarily focus on static scene exploration, neglecting the evaluation of dynamic changes resulting from user interactions. EOC-Bench is a new benchmark designed to systematically evaluate object-centric embodied cognition in dynamic first-person scenes. It comprises 3,277 meticulously annotated QA pairs, categorized into past, present, and future temporal classes, covering 11 fine-grained evaluation dimensions and 3 types of visual object referring. To ensure comprehensive evaluation, a hybrid-format human-machine collaborative annotation framework and a novel multi-scale temporal accuracy metric were developed. The evaluation of various MLLMs based on EOC-Bench provides a critical tool for enhancing MLLMs’ embodied object cognition capabilities (Source: HuggingFace Daily Papers)

Paper Rectified Point Flow: A General Method for Point Cloud Pose Estimation: Rectified Point Flow is a unified parameterization method that formulates pairwise point cloud registration and multi-part shape assembly as a single conditional generation problem. Given unposed point clouds, the method learns a continuous, per-point velocity field that transports noisy points to their target locations, thereby recovering part poses. Unlike prior work that regresses part poses and employs specific symmetry handling, this method intrinsically learns assembly symmetries without requiring symmetry labels. Combined with a self-supervised encoder focused on overlapping points, the method achieves new state-of-the-art performance on six benchmarks covering pairwise registration and shape assembly. Notably, its unified formulation enables effective joint training on diverse datasets, thereby facilitating the learning of shared geometric priors and consequently improving accuracy (Source: HuggingFace Daily Papers)

Paper DGAD: Achieving Geometry-Editable and Appearance-Preserving Object Composition: General Object Composition (GOC) aims to seamlessly integrate a target object into a background scene with desired geometric attributes while preserving its fine-grained appearance details. Recent methods leverage semantic embeddings and integrate them into advanced diffusion models for geometry-editable generation. However, these highly compact embeddings only encode high-level semantic cues, inevitably discarding fine-grained appearance details. Researchers introduce DGAD (Disentangled Geometry-editable and Appearance-preserving Diffusion) model, which first utilizes semantic embeddings to implicitly capture desired geometric transformations, and then employs a cross-attention retrieval mechanism to align fine-grained appearance features with the geometry-edited representation, thereby achieving precise geometric editing and faithful appearance preservation in object composition (Source: HuggingFace Daily Papers)

💼 Business

Turing Award Winner Yoshua Bengio Co-founds Non-Profit Organization LawZero, Focusing on “Safe-by-Design” AI Systems: Yoshua Bengio, one of the godfathers of deep learning and a Turing Award laureate, announced the establishment of a new non-profit organization, LawZero. It aims to build next-generation “safe-by-design” AI systems and explicitly stated it will not develop Agents. LawZero has secured $30 million in seed funding from entities including the Future of Life Institute, Open Philanthropy (an early investor in OpenAI), and an organization affiliated with former Google CEO Eric Schmidt. The organization will develop “Scientist AI” with the core objective of understanding and learning about the world, rather than taking actions within it. It aims to provide verifiable, truthful answers through transparent external reasoning, to be used for accelerating scientific discovery, supervising Agent-type AI systems, and deepening the understanding and mitigation of AI risks. Bengio stated this initiative is a constructive response to potential risks already demonstrated by current AI systems, such as self-preservation and deceptive behaviors (Source: QbitAI)

Microsoft CEO Nadella Says Partnership with OpenAI is Adjusting but Remains Strong: Microsoft CEO Satya Nadella stated that Microsoft’s partnership with OpenAI is evolving, but the two parties will maintain multi-level cooperation, with OpenAI remaining Microsoft’s largest infrastructure customer. Although Microsoft initially had a deep binding and investment in OpenAI, the relationship has seen subtle changes as both parties launch competing products and seek more partners (e.g., OpenAI collaborating with Oracle and SoftBank on Project Stargate, Microsoft incorporating xAI’s Grok model into its Azure platform). Nadella emphasized his hope for continued cooperation in multiple areas for decades to come and acknowledged that both parties will have other partners. Microsoft is striving to reboot its consumer business through AI and has recruited DeepMind co-founder Mustafa Suleyman to lead related products (Source: 36Kr)

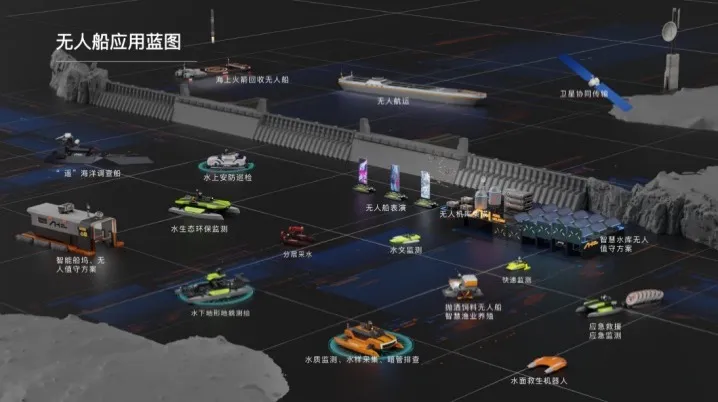

Haibo Unmanned Ships Completes Tens of Millions of Yuan Series A Financing, Accelerating Commercialization of Aquatic AI Solutions: Beijing Haibo Unmanned Ship Technology Co., Ltd. recently completed a Series A financing round worth tens of millions of yuan, led by Shanghai Fansheng Investment, a subsidiary of Zhejiang Laoyuweng Group. The funds will be used to increase R&D, team building, market promotion, and productization. Founded in 2019, Haibo Unmanned Ships focuses on the entire industrial chain of intelligent unmanned vessels, providing AI-powered solutions for aquatic environments. Its diverse product line includes the “Hunter Series” for inland waters and the “Koi Series” for shallow water areas, with core components achieving a 92% domestic substitution rate. The company has conducted nearly a thousand aquatic technology service projects in Beijing, Tianjin, and other locations, and plans to establish an East China operations center and an intelligent feeding unmanned ship assembly base in Shaoxing (Source: 36Kr)

🌟 Community

Reddit Discussion: Gemini 2.5 Pro Surpasses Claude Opus 4 in WebDev Arena, but Benchmark Value Questioned: A post about the new Gemini 2.5 Pro outperforming Claude Opus 4 in WebDev Arena (a benchmark measuring real-world coding performance) sparked discussion in the Reddit r/ClaudeAI community. Many commenters expressed skepticism about the practical value of such micro-level benchmarks, viewing them more as general indicators of AI capabilities rather than definitive proof of a specific model’s superiority. The discussion noted that the specific metrics for benchmarks like “WebDev” (e.g., instruction following, creativity, code optimization, response to sparse prompts) are often unclear, and the complexity of real-world development far exceeds these indicators. Some commented that model selection depends more on how it complements an individual developer’s human-centric workflow rather than solely on benchmark scores. Others pointed out the phenomenon of “leaderboard illusion,” where model developers might be allowed to test private versions of their models on platforms like Chatbot Arena and only publicize the best-performing ones (Source: Reddit r/ClaudeAI)

AI Engineer’s Career Choice Dilemma: Intersection of Interest and Climate Change Concerns: A European student expressed their career choice confusion on Reddit r/ArtificialInteligence. They have always been passionate about AI and aimed to study it, but in recent years, concerns about climate change and its potential impact on Europe (e.g., economy, energy issues) have grown. They believe AI’s high energy consumption might exacerbate pressure on Europe’s power grid and make ecological transition more difficult, leading to hesitation in their specialization choice. Community comments generally argued that AI and climate change solutions are not mutually exclusive: 1) AI can play a key role in energy efficiency optimization, climate data analysis and modeling, and sustainable technology development; 2) The current high energy consumption of LLMs is not representative of all AI, and developing efficient AI solutions is itself an AI engineer’s responsibility; 3) Pursuing one’s interests can lead to greater impact, and AI can be applied to positive climate-related endeavors. Many encouraged the student to continue studying AI and focus on applying it to solve real-world problems, including climate change (Source: Reddit r/ArtificialInteligence)

LLMs Reportedly Often Able to Identify When They Are Being Evaluated, Raising Concerns About Model “Pandering” Behavior: An arXiv paper (2505.23836) pointed out that Large Language Models (LLMs) are often aware that they are being evaluated. This sparked community discussion, with the core concern being that when models know they are in a test environment, they might adjust their responses to meet the expectations of developers or evaluators, rather than exhibiting their true capabilities or inherent behaviors. Comments noted that if models are trained this way, such “pandering” behavior is to be expected. This situation poses a challenge to evaluating the true performance, safety, and alignment of LLMs, as evaluation results may not reflect the model’s behavior in real, non-evaluative scenarios (Source: Reddit r/artificial)

Enterprise AI Tool Usage Restricted, Employees Seek Solutions and Express Concerns: A user working in a large enterprise stated on Reddit r/ClaudeAI that due to company data confidentiality policies and VPN restrictions, they cannot use mainstream AI tools like Anthropic, OpenAI, or Gemini, while many in the community discuss using advanced technologies like Claude Code. This sparked a discussion on how to balance data security with leveraging AI tools for efficiency in a corporate environment. Comments pointed out that Anthropic itself is very privacy-focused, even offering options for encrypted inference calls via AWS Sagemaker, suggesting the user’s company might have a flawed AI strategy. Some commenters believed companies not embracing AI could face declining competitiveness and layoff risks in the future. Suggested solutions included: pushing the company to sign enterprise-level AI service agreements, personally paying for AI services that don’t use data for training, self-hosting local inference servers (costly), or using local small models for non-sensitive data (Source: Reddit r/ClaudeAI)

AI Photo Restoration Sparks Debate: Restoring Memories or Rewriting Them?: A user on Reddit r/ArtificialInteligence shared their experience using AI (ChatGPT and Kaze.ai) to restore and colorize old photos, sparking a discussion about the ethics of AI photo restoration. While amazed by AI’s ability to rejuvenate old photos, the user also expressed concerns about authenticity, as AI “guesses” colors and fills in details based on algorithms during restoration, potentially adding or removing original information and thus altering the true historical image. The discussion suggested that AI restoration is essentially re-creating an image based on probability and training data; if pattern recognition is accurate and data appropriate, it can be seen as “restoration,” otherwise it’s “rewriting.” Some comments noted that memory itself is subjective and imprecise, and AI restoration is somewhat similar to human Photoshop experts’ work and is non-destructive (the original photo remains). The key is to acknowledge AI’s artistic interpretation and realize we are understanding the past through the filter of current consciousness (Source: Reddit r/ArtificialInteligence)

Software Engineering Novice’s Confusion in the AI Era: If AI Can Do Everything, What’s the Point of Learning to Code?: A computer science student asked on Reddit r/ArtificialInteligence that if AI can write code, debug, and provide optimal solutions, what is the point for software engineers to learn these skills, and will they become AI’s “middlemen” and eventually be replaced. Community responses emphasized that AI tools are most effective when guided by capable developers. AI is currently better at handling repetitive, auxiliary tasks, while complex system design, strategy formulation, requirements understanding, and innovative problem-solving still require human engineers. Novices were advised to follow the practices of industry experts (like Simon Willison’s blog) to understand how AI assists rather than replaces developers, and to focus on enhancing core problem-solving abilities and mastery of AI tools (Source: Reddit r/ArtificialInteligence)

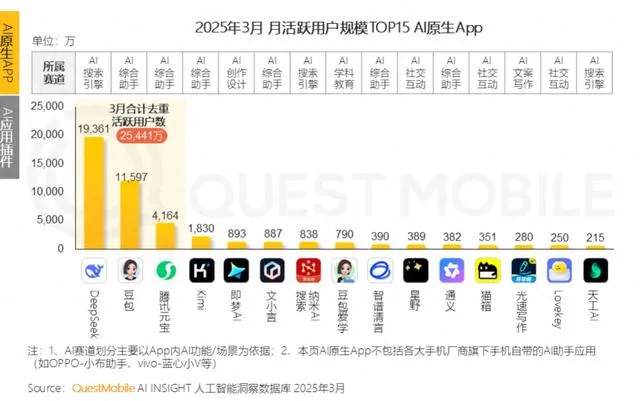

Big Tech Companies Vying to Become Young People’s “AI Confidantes” with Emotional Companionship, but Face User Retention Challenges: AI assistants from major tech companies like Tencent’s Yuanbao, ByteDance’s Doubao, and Alibaba’s Tongyi are all incorporating AI character agents. Standalone apps like ByteDance’s Maoxiang and Tencent’s Zhumengdao are also entering the AI emotional companionship race, aiming to attract young users and boost app activity with “cyber boyfriends/girlfriends.” These AI characters cater to users’ emotional needs through more human-like interactions (including voice and plot progression), initially driving up app downloads and usage time. However, such applications generally face technical bottlenecks, such as “AI amnesia” due to insufficient long-context processing capabilities of large models and weak emotional understanding, which affect user experience. Furthermore, despite initially attracting users through novelty and emotional bonding, AI applications overall suffer from low user retention rates. QuestMobile data shows that the three-day retention rate for top AI apps is generally below 50%, with Doubao’s uninstall rate reaching 42.8%. The article argues that true user retention will depend on technological innovation rather than solely on emotional companionship or traffic acquisition (Source: 36Kr)

💡 Others

Humanoid Robots Enter the Hospitality Industry: Huge Potential but Significant Short-Term Challenges: With products like ZHIYUAN ROBOTICS’ “Lingxi X2” planning mass production and priced from hundreds of thousands to tens of thousands of yuan, humanoid robots are moving from exhibition novelties to real-world application scenarios, with the hotel industry seen as one of the first landing spots. Compared to traditional delivery robots, humanoid robots possess stronger execution and judgment capabilities, potentially replacing positions like porters, security guards, and some front desk staff, addressing pain points in the hotel industry such as high labor costs and cumbersome processes. However, large-scale application of humanoid robots in hotels faces short-term challenges: 1) Insufficient technological maturity: hotel environments are complex and varied, demanding high interaction and adaptability from robots, which current robots struggle with; 2) Long cost recovery period: an investment of hundreds of thousands of yuan is not a small sum for hotels, requiring consideration of ROI, maintenance, compatibility, etc.; 3) Balancing standardized and personalized services. The article suggests that humanoid robots will partially replace hotel employees in the future, but more importantly, will drive the service industry towards a more advanced “human-robot collaboration” model (Source: 36Kr)

AI Health & Wellness Video Bloggers Experience Short-Term Boom, but Long-Term Value Questionable; AI Should Empower, Not Replace, Content Creation: Recently, AI-generated health and wellness科普 (popular science) short videos in cartoon or dynamic illustration styles have seen numerous viral hits on platforms like Xiaohongshu, achieving rapid follower growth. Their popularity stems from strong content adaptability (informative content + engaging animation), high audience demand (driven by health anxiety), and platform algorithm friendliness (high click/collection rates). Monetization methods mainly include private domain conversion, “small list” product recommendations, and selling AI video creation courses, with course sales often being more profitable. However, such videos lack long-term value due to the fleeting novelty of their format, tightening platform regulations, weak product endorsement capabilities for health products, and a lack of trust barriers for accounts. They are seen more as “traffic arbitrage.” The article argues that the true value of AI technology for health bloggers lies in assisting creation (structuring content, visualizing information, managing content assets, converting users to services), rather than replacing humans in content production (Source: 36Kr)

Lex Fridman Podcast Interviews Google CEO Sundar Pichai: Google and Alphabet CEO Sundar Pichai was a guest on the Lex Fridman Podcast (Episode #471). The wide-ranging discussion covered Pichai’s upbringing in India, advice for young people, leadership style, the impact of AI in human history, the future of the Veo 3 video model, AI scaling laws, AGI and ASI, P(doom) (the probability of AI causing a catastrophe), the hardest decisions of his leadership career, AI models versus Google Search, Google Chrome, programming, Android, questions for AGI, the future of humanity, and demos of Google Beam and XR glasses. This podcast episode offers in-depth perspectives on Pichai’s views on AI development, Google’s strategy, and the future of technology (Source: )