Keywords: AI Agent, Microsoft Build 2025, AlphaEvolve, GPT-4, Azure AI Foundry, NVIDIA Computex 2025, AI programming tools, Embodied Intelligence, GitHub Copilot VSCode extension, Model Context Protocol (MCP), Natural Language Web (NLWeb), Meituan NoCode, Tencent QBot Smart Assistant

🔥 Focus

Microsoft Build 2025 Ushers in “Agentic Web” Era, Fully Embracing AI-Native Development: At its Build 2025 developer conference, Microsoft announced its “Open Agentic Web” vision along with more than 50 updates. The core announcements include open-sourcing the GitHub Copilot extension for VS Code, adopting the Model Context Protocol (MCP, originally introduced by Anthropic) across its platforms, launching the open Natural Language Web (NLWeb) project, and bringing xAI’s Grok to Azure AI Foundry, which now offers more than 1,900 models. These initiatives aim to connect the development pipeline from models to agents, enabling AI Agents to operate autonomously and interoperate across scenarios. Microsoft CEO Satya Nadella emphasized that AI Agents will reshape problem-solving, and discussed the future of AI agents in software development, infrastructure, and physical-world applications with OpenAI CEO Sam Altman, Nvidia CEO Jensen Huang, and xAI founder Elon Musk. (Source: 36Kr | GitHub Blog | VS Code Blog | The Verge)

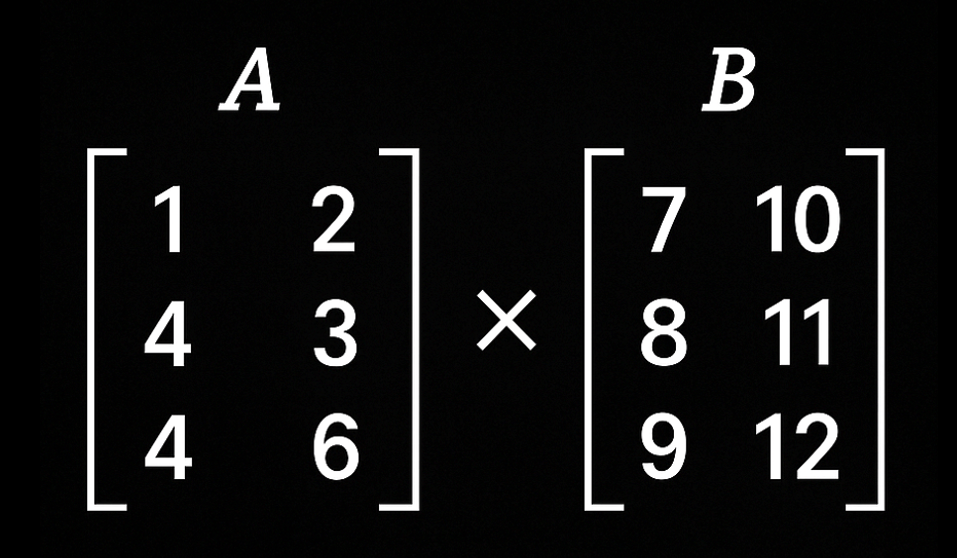

Google DeepMind Releases AlphaEvolve, AI Agent Breaks 56-Year Matrix Multiplication Efficiency Record: Google DeepMind introduced AlphaEvolve, a Gemini-powered coding agent. Combining evolutionary search with automated evaluators, it discovered an algorithm that multiplies two 4×4 complex-valued matrices using 48 scalar multiplications, one fewer than the 49 obtained by applying Strassen’s 1969 algorithm recursively, improving on a result that had stood for 56 years. The breakthrough matters beyond theory: inside Google, AlphaEvolve has sped up a key matrix multiplication kernel in the Gemini architecture by 23% (cutting overall Gemini training time by 1%) and a FlashAttention kernel by up to 32.5%. AlphaEvolve demonstrates AI’s potential in automated scientific discovery and algorithm optimization, addressing problems that range from mathematical puzzles to data center resource scheduling and AI model training acceleration. (Source: Google DeepMind Blog | QbitAI)
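
For context on the 49 figure: Strassen’s scheme multiplies 2×2 matrices with 7 multiplications instead of 8, and a 4×4 product can be treated as a 2×2 product of 2×2 blocks, giving 7 × 7 = 49 scalar multiplications when the scheme is applied recursively. The minimal Python sketch below is a textbook recursive Strassen implementation (for sizes that are powers of two) with a multiplication counter. It illustrates the baseline only and does not reproduce AlphaEvolve’s 48-multiplication algorithm; the helper names are illustrative.

```python
from typing import List

Matrix = List[List[complex]]


def add(A: Matrix, B: Matrix) -> Matrix:
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A: Matrix, B: Matrix) -> Matrix:
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def split(M: Matrix):
    """Split a square matrix of even size into four equal quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def join(C11: Matrix, C12: Matrix, C21: Matrix, C22: Matrix) -> Matrix:
    """Reassemble four quadrants into one matrix."""
    return [a + b for a, b in zip(C11, C12)] + [a + b for a, b in zip(C21, C22)]


def strassen(A: Matrix, B: Matrix, counter: List[int]) -> Matrix:
    """Recursive Strassen product; counter[0] counts scalar multiplications."""
    if len(A) == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    # Seven block products instead of the naive eight
    M1 = strassen(add(A11, A22), add(B11, B22), counter)
    M2 = strassen(add(A21, A22), B11, counter)
    M3 = strassen(A11, sub(B12, B22), counter)
    M4 = strassen(A22, sub(B21, B11), counter)
    M5 = strassen(add(A11, A12), B22, counter)
    M6 = strassen(sub(A21, A11), add(B11, B12), counter)
    M7 = strassen(sub(A12, A22), add(B21, B22), counter)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    return join(C11, C12, C21, C22)


# Usage: multiply two 4x4 complex matrices and compare with the naive product.
A = [[complex(i + 1, j) for j in range(4)] for i in range(4)]
B = [[complex(j - i, 1) for j in range(4)] for i in range(4)]
counter = [0]
C = strassen(A, B, counter)
naive = [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]
max_err = max(abs(C[i][j] - naive[i][j]) for i in range(4) for j in range(4))
print(counter[0], max_err)  # multiplication count (49) and max deviation from naive
```

The recursion explains why improving the 4×4 case matters: any saving at the base size compounds when the scheme is applied to larger block matrices.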

Study Shows GPT-4 Out-Persuades Humans 64% of the Time in Personalized Debates: A study published in Nature Human Behaviour found that when OpenAI’s GPT-4 had access to personal information about its debate opponent, such as gender, age, and educational background, and tailored its arguments accordingly, it was more persuasive than human debaters 64% of the time (in pairings where the two sides were not equally persuasive). The study, a collaboration involving institutions such as EPFL, involved 900 participants and further confirms the strong persuasive capabilities of Large Language Models (LLMs). The researchers warn that AI tools can construct sophisticated, persuasive arguments from only a small amount of user information, a capability that could be exploited in personalized disinformation campaigns. They call on policymakers and platforms to address this risk and to explore using LLMs to generate personalized counter-narratives against false information. (Source: Nature Human Behaviour | MIT Technology Review)

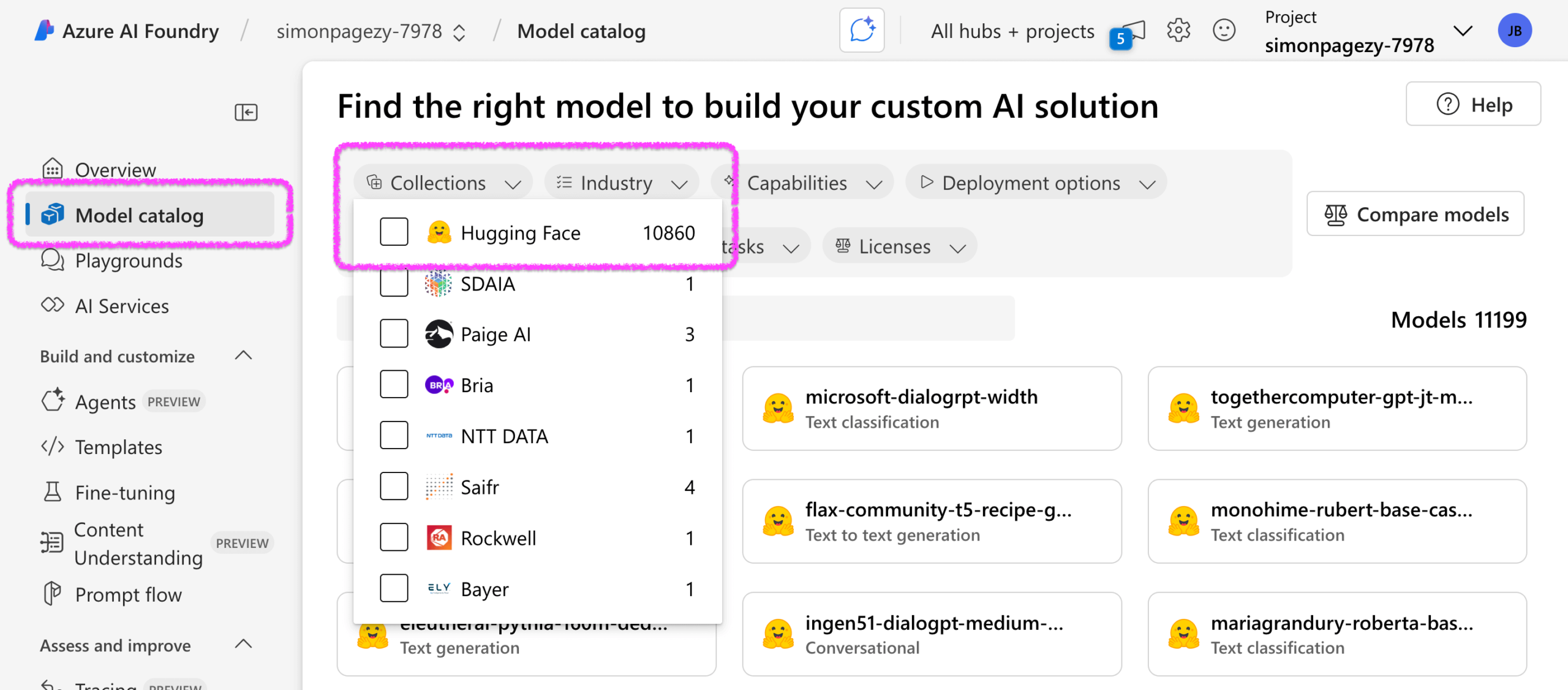

Microsoft and Hugging Face Deepen Collaboration, Azure AI Foundry Integrates Over 10,000 Open-Source Models: At the Microsoft Build conference, Microsoft announced an expansion of its partnership with Hugging Face. Azure AI Foundry now integrates over 10,000 Hugging Face open-source models, covering various modalities and tasks such as text, audio, and image. This initiative aims to allow Azure users to more easily and securely deploy a diverse range of open-source models for building AI applications and agents. All integrated models have passed security tests, use the safetensors format, and contain no remote code, ensuring enterprise-grade application security. The two parties plan to continue introducing the latest and most popular models, support more modalities (like video, 3D), and enhance optimization for AI agents and tools in the future. (Source: HuggingFace Blog)

🎯 Trends

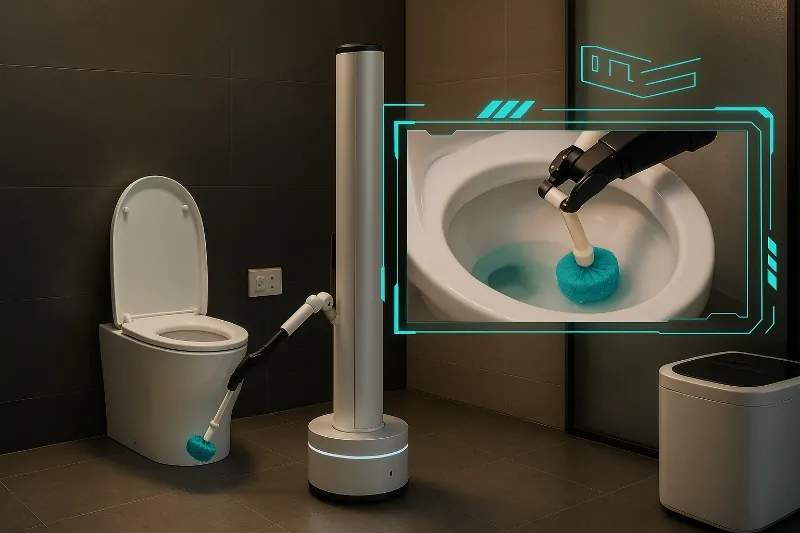

Nvidia Unveils Multiple AI Products at Computex 2025, Accelerating AI Factory Transformation: At Computex 2025, CEO Jensen Huang announced the GeForce RTX 5060 GPU, the Grace Blackwell GB300 supercomputing platform, the personal AI supercomputer DGX Spark (powered by GB10 and available within weeks), and DGX Station (784GB of memory, capable of running DeepSeek R1). Huang emphasized Nvidia’s transformation from a GPU supplier into a global AI infrastructure provider that aims to deliver “out-of-the-box” AI factories. Additionally, Nvidia will open-source Newton, a physics engine developed with DeepMind and Disney, in July, and launched the Isaac GR00T humanoid robot foundation model to advance physical AI. Nvidia also announced a new office in Taiwan, China, and stressed the importance of Chinese AI talent. (Source: 36Kr | 36Kr)

Apple Reportedly Plans to Allow EU Users to Change Default Voice Assistant on iPhones and Other Devices: According to Bloomberg, Apple plans to allow EU users to change the default voice assistant on devices like iPhones, iPads, and Macs from Siri to other options such as Google Assistant or Amazon Alexa. This move is likely in response to antitrust pressure under the EU’s Digital Markets Act (DMA). Siri has been criticized in recent years for outdated features and limited intelligence. Apple reportedly has internal disagreements over Siri’s development direction, and its current architecture is difficult to integrate effectively with Large Language Models (LLMs). Although Apple is developing a new LLM-based Siri and has launched Apple Intelligence, allowing users to change the default assistant could affect its ecosystem. (Source: 36Kr)

Apple Internally Testing Self-Developed AI Chatbot, Potentially on Par with ChatGPT: Bloomberg reporter Mark Gurman revealed that Apple is internally testing its self-developed AI chatbot. Under AI chief John Giannandrea, the project has made significant progress over the last six months, and some executives believe its current version is close in capability to the latest version of ChatGPT. The chatbot may feature real-time web search and information integration. The move could be aimed at reducing reliance on external services like OpenAI and making Siri more competitive. Although WWDC 2025 may not heavily feature Siri upgrades, Apple’s investment in AI continues to grow as it seeks to revitalize its voice assistant in the AI era. (Source: 36Kr)

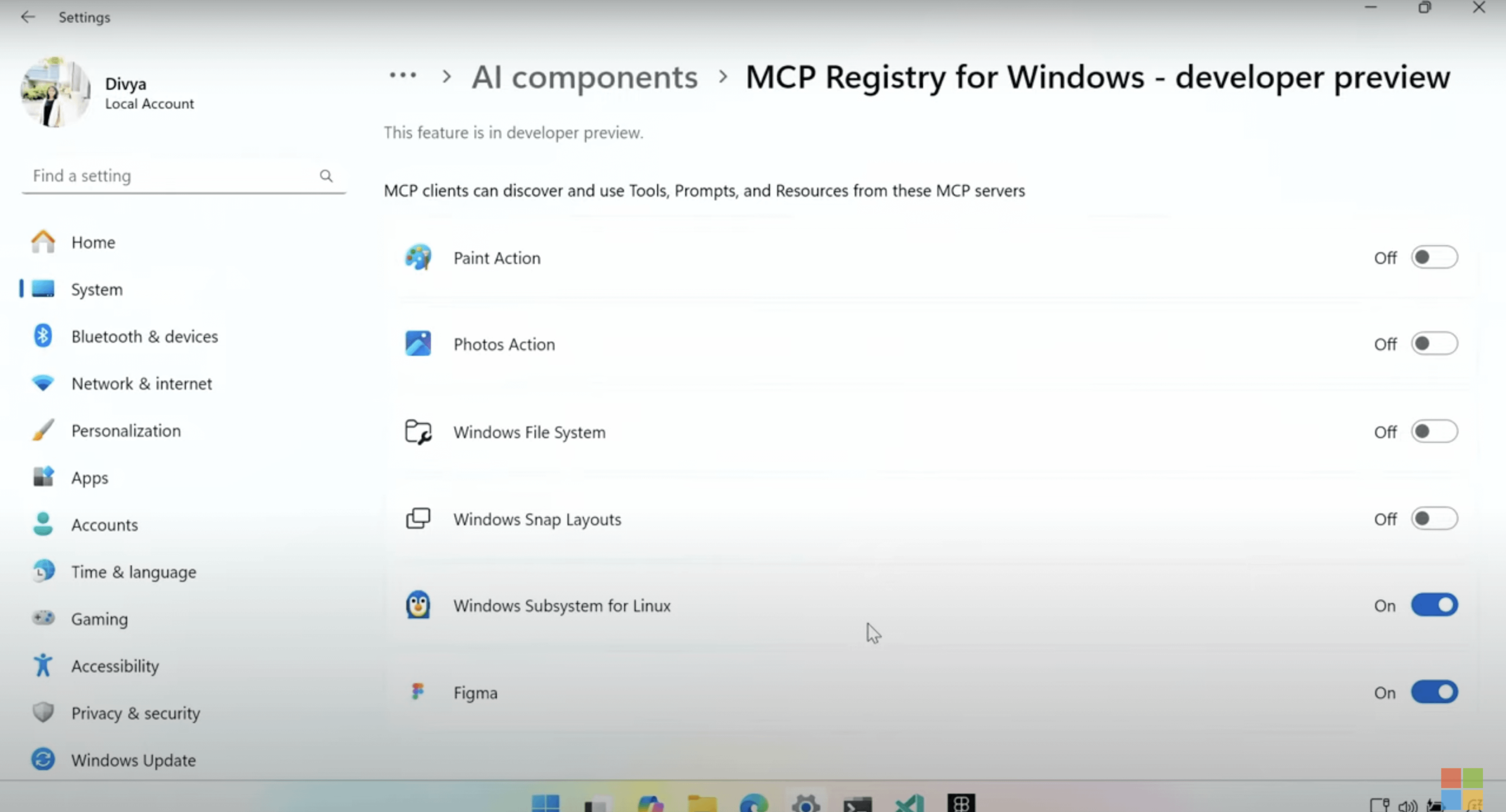

Windows to Natively Support Model Context Protocol (MCP): Microsoft announced at its Build 2025 conference that the Windows operating system will natively support the Model Context Protocol (MCP), aimed at simplifying the development and deployment of AI applications on Windows. MCP is likened to a “USB-C for AI applications,” attempting to provide a standardized interaction method for different AI models and applications. The Windows AI Foundry platform will integrate this support, making it more convenient for developers to run and manage local AI models and agents on Windows devices. (Source: op7418 | Reddit r/LocalLLaMA)
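
Under the hood, MCP messages are framed as JSON-RPC 2.0: a client discovers a server’s tools with the `tools/list` method and invokes one with `tools/call`. The minimal Python sketch below only builds such a request. The tool name `search_files` and its arguments are hypothetical, and nothing here reflects how Windows itself registers or exposes MCP servers.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style JSON-RPC 2.0 request that invokes a server tool."""
    message = {
        "jsonrpc": "2.0",        # MCP uses JSON-RPC 2.0 message framing
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # MCP method for invoking a tool
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool exposed by a local MCP server (illustrative only).
request = make_tool_call(1, "search_files", {"query": "quarterly report", "max_results": 5})
print(request)
```

Because every server speaks the same message shape, a client only needs one integration to reach many tools, which is the point of the “USB-C” analogy.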

Microsoft Azure AI Foundry Introduces xAI’s Grok Large Model: Microsoft announced at its Build 2025 developer conference that Grok 3 and Grok 3 mini, from Elon Musk’s xAI, will join the Azure AI Foundry platform. Azure users will be able to use these models and pay for them directly through the cloud platform. The move further expands Azure’s model catalog (already more than 1,900 models), which previously included models from OpenAI, Meta, and DeepSeek. Speaking via video link, Musk said he hoped for developer feedback and looked forward to offering Grok services to more companies. (Source: 36Kr)

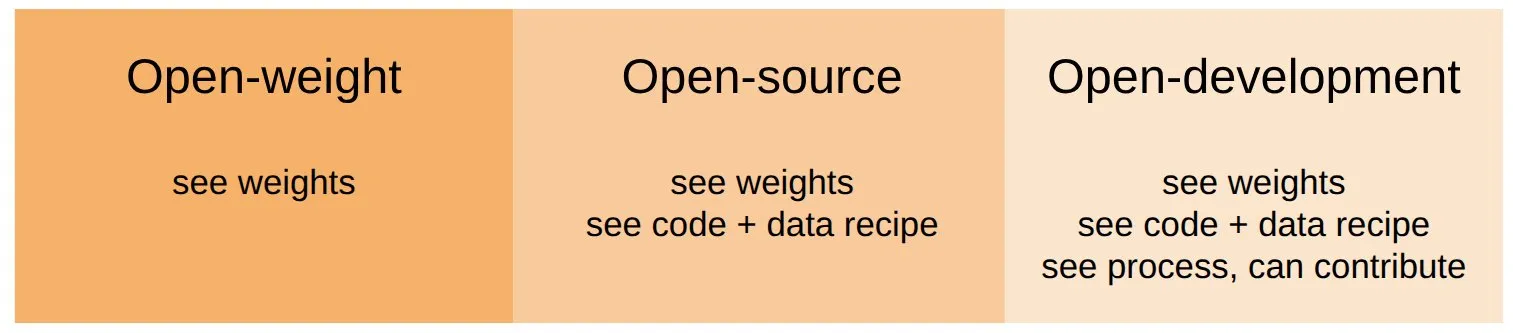

Percy Liang’s Team Launches Marin Project to Promote Open AI Model Development: Stanford University professor Percy Liang has initiated the Marin project, aiming to build open models in a “radically participatory way.” The project emphasizes an open development process, allowing anyone to contribute. The first batch of Marin models has been released, with an 8B model already available on the Together AI platform for testing. This initiative responds to calls for deeper openness in the AI field, not just open-sourcing weights, code, and data, but the entire R&D ecosystem. (Source: vipulved)

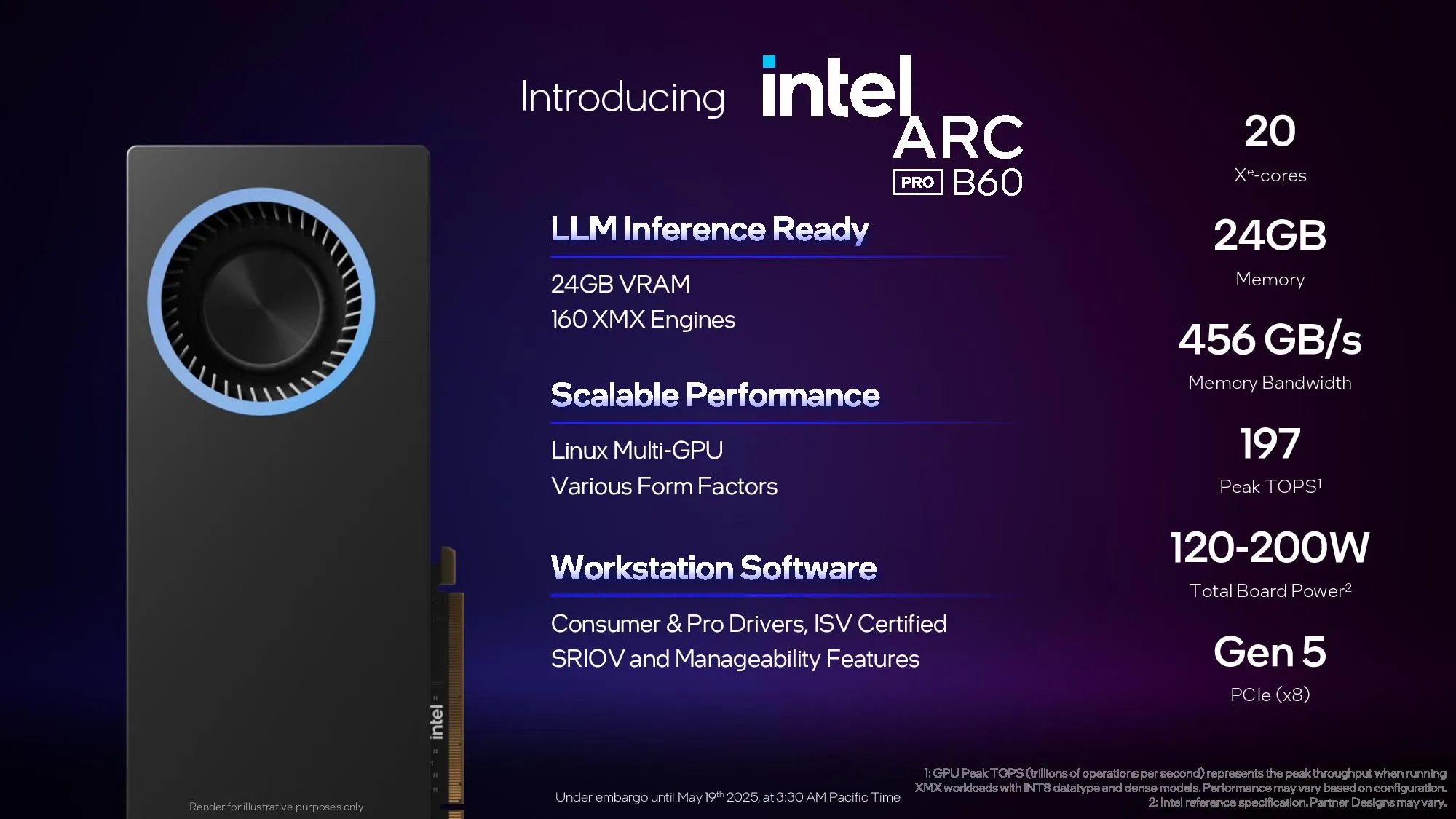

Intel Releases Arc Pro B60 Professional Graphics Card, KTransformers Announces Support for Intel GPUs: Intel has released its new professional-grade graphics card, the Arc Pro B60, featuring 24GB of VRAM and 456GB/s memory bandwidth, priced at approximately $500 per card, offering a new hardware option for AI computing. Simultaneously, the KTransformers framework announced support for Intel GPUs. Tests show that running the DeepSeek-R1 Q4 quantized model on a Xeon 5 + DDR5 + Arc A770 platform can achieve approximately 7.5 tokens/s, providing more hardware possibilities for running large models locally. (Source: karminski3 | karminski3)

DeepMind Teases Google I/O Conference: The official Google DeepMind account teased the upcoming Google I/O conference, scheduled for May 20th (10 AM PT), which will be live-streamed on X (formerly Twitter). The conference is expected to feature a series of major AI-related updates and product announcements, continuing Google’s strong momentum in the AI field. (Source: GoogleDeepMind)

🧰 Tools

AgenticSeek: Purely Local AI Agent, Comparable to Manus AI: AgenticSeek is an open-source project that aims to provide a fully local AI assistant capable of autonomous web browsing, code writing, and task planning. All data stays on the user’s device, ensuring privacy. The tool is designed specifically for locally run inference models, supports voice interaction, and aims to reduce both the cost of running AI agents (electricity is the only expense) and the risk of data leakage. (Source: GitHub Trending)

Meituan Internally Tests AI Programming Tool NoCode, Positioned as “Vibe Coding”: 36Kr exclusively reported that Meituan will soon launch an AI programming tool called “NoCode”; the domain nocode.cn has been registered and the product is in limited gray-release testing. Developed by Meituan’s R&D Quality and Efficiency team, the product is positioned similarly to Lovable as a “vibe coding” tool for non-technical users. Through conversation, it automatically handles coding and deployment for tasks such as data analysis, product prototyping, and generating operational tools. NoCode uses a Code Agent architecture capable of multi-step logical reasoning, and Meituan plans to open it to merchants and the general public, lowering the IT barrier for small and medium-sized businesses. (Source: 36Kr)

Tencent QQ Browser Upgrades to AI Browser, Integrates QBot Intelligent Assistant: QQ Browser announced its upgrade to an AI browser and launched an AI assistant named QBot, powered by both Tencent’s Hunyuan and DeepSeek models. QBot integrates AI search, AI browsing, AI office, AI learning, and AI writing functions, and introduces Manus-like AI Agent capabilities for executing complex tasks. Among the first Agents in gray-release testing is “AI Gaokao Tong,” which generates personalized college application plans for students taking the gaokao (China’s national college entrance exam). QQ Browser has over 400 million users, and this upgrade aims to help them acquire information and complete tasks more efficiently through AI. (Source: 36Kr)

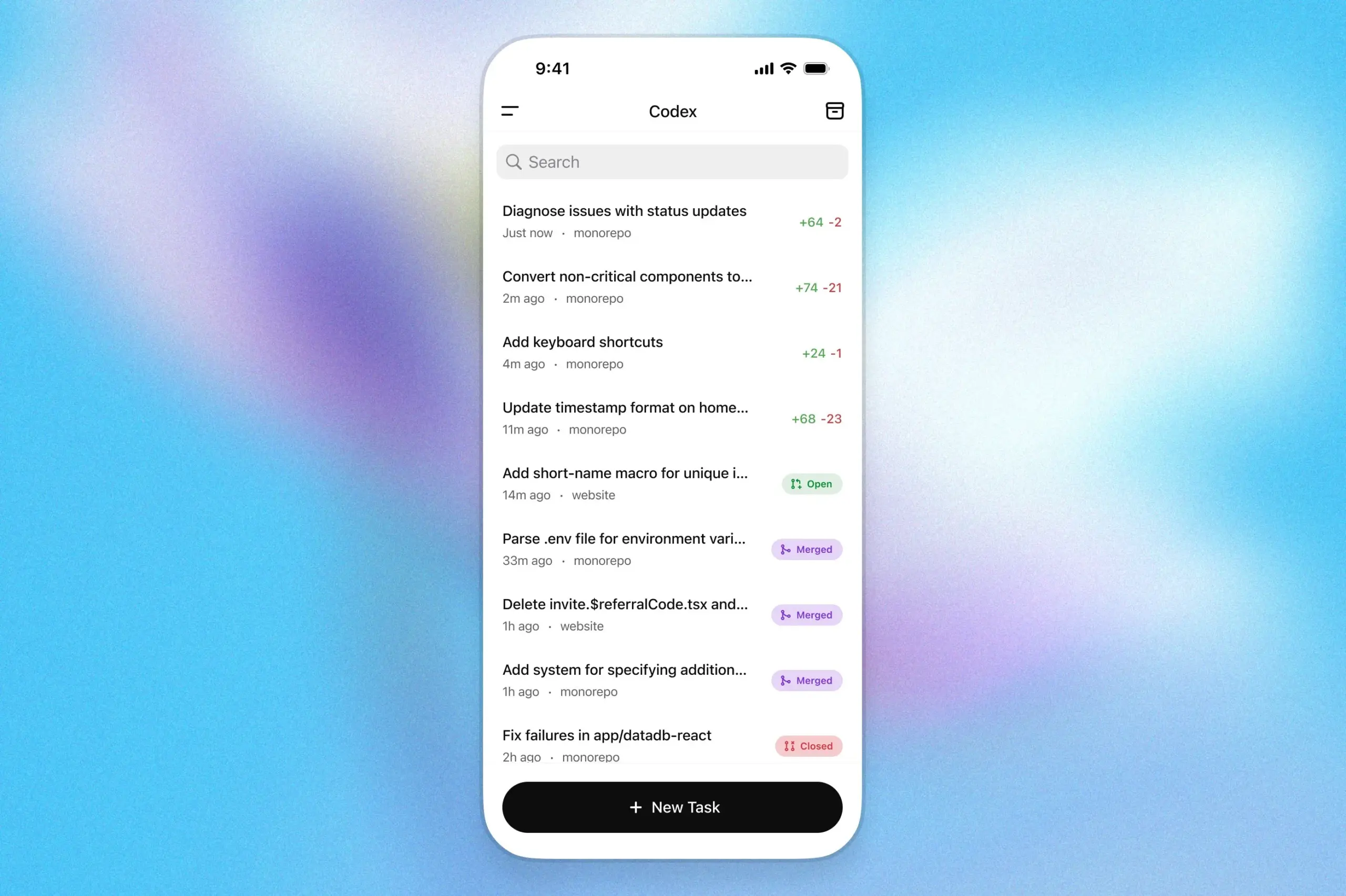

OpenAI Codex Lands on ChatGPT iOS App, Supporting Mobile Programming Tasks: OpenAI announced that its programming assistant Codex is now integrated into the ChatGPT iOS app. Users can start new coding tasks, review code diffs, request changes, and even open pull requests from their phones. The feature also supports Live Activities on the lock screen, so users can track Codex’s progress and pick up unfinished tasks when they return to their computers. This marks an important step for AI programming toward mobile and multi-scenario collaboration. (Source: karinanguyen_ | gdb)

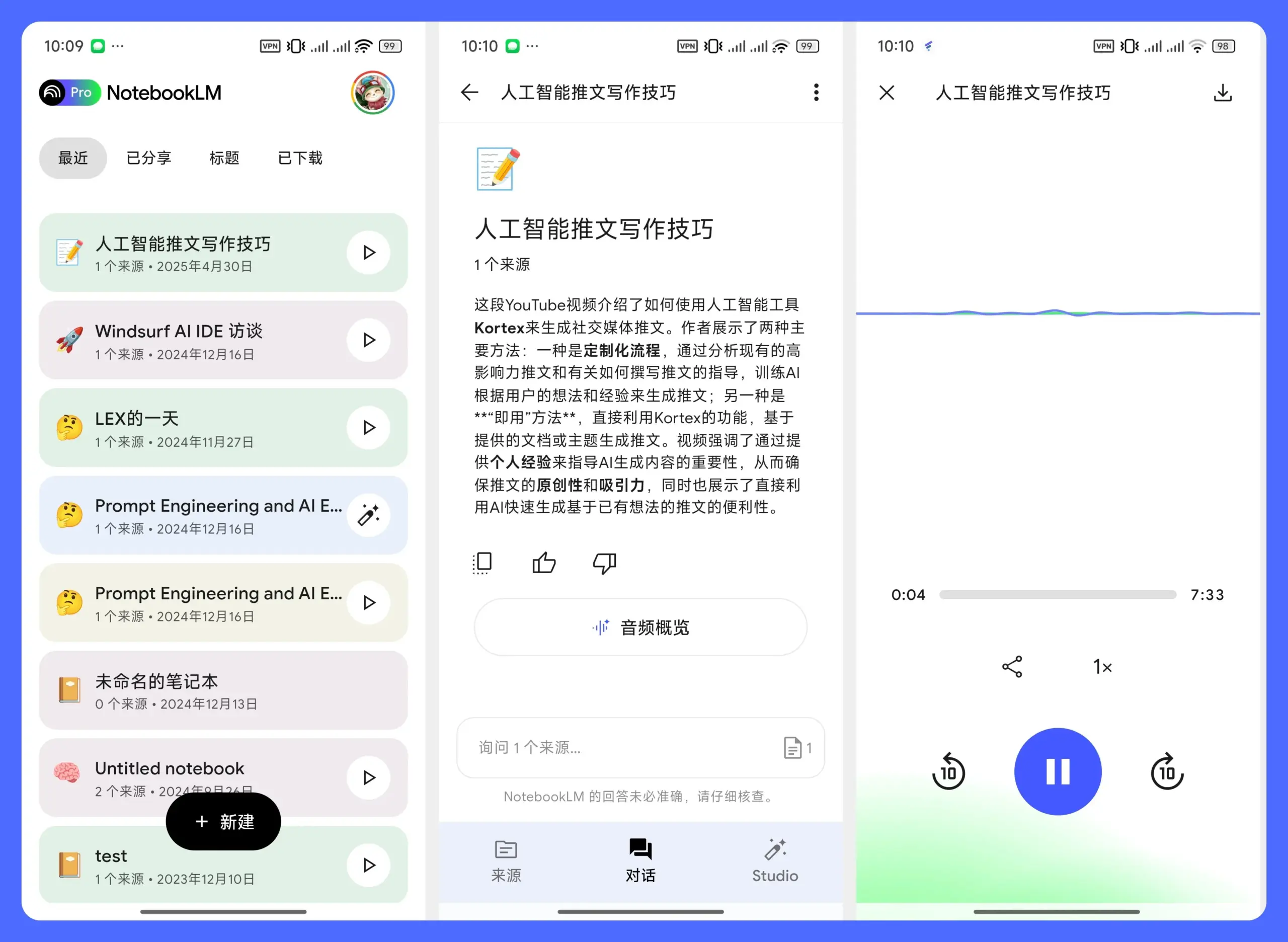

NotebookLM Mobile App Launched, Supporting Android & iOS: Google’s AI note-taking tool, NotebookLM, has officially launched its mobile app, which is gradually rolling out on Android and iOS. The mobile version offers core features such as Audio Overviews and chat, letting users apply AI to content analysis and learning anytime, anywhere. A convenient feature lets users share content they are browsing in other apps (excluding WeChat official account articles) directly to NotebookLM for processing. (Source: op7418)

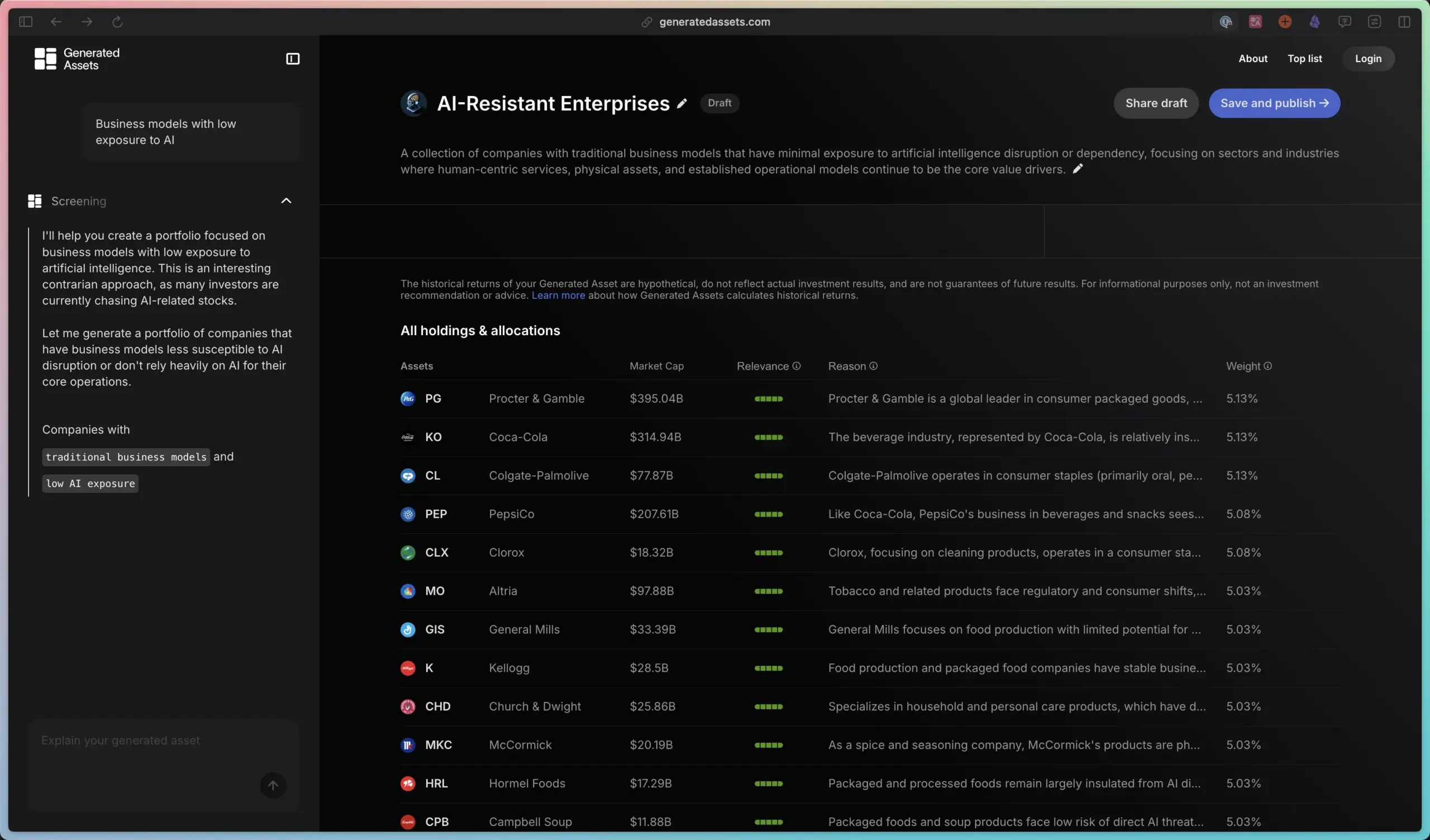

Public Launches AI Investment Tool “Generated Assets”: Investment platform Public has released a new product, “Generated Assets,” allowing users to propose investment ideas to an AI. The AI then returns investment suggestions, custom investment indexes, and can compare historical returns and track real-time performance. This is similar to an AI implementation of “vibe investing” or “thematic investing,” aimed at lowering the barrier for users to build and manage personalized investment portfolios. (Source: op7418)

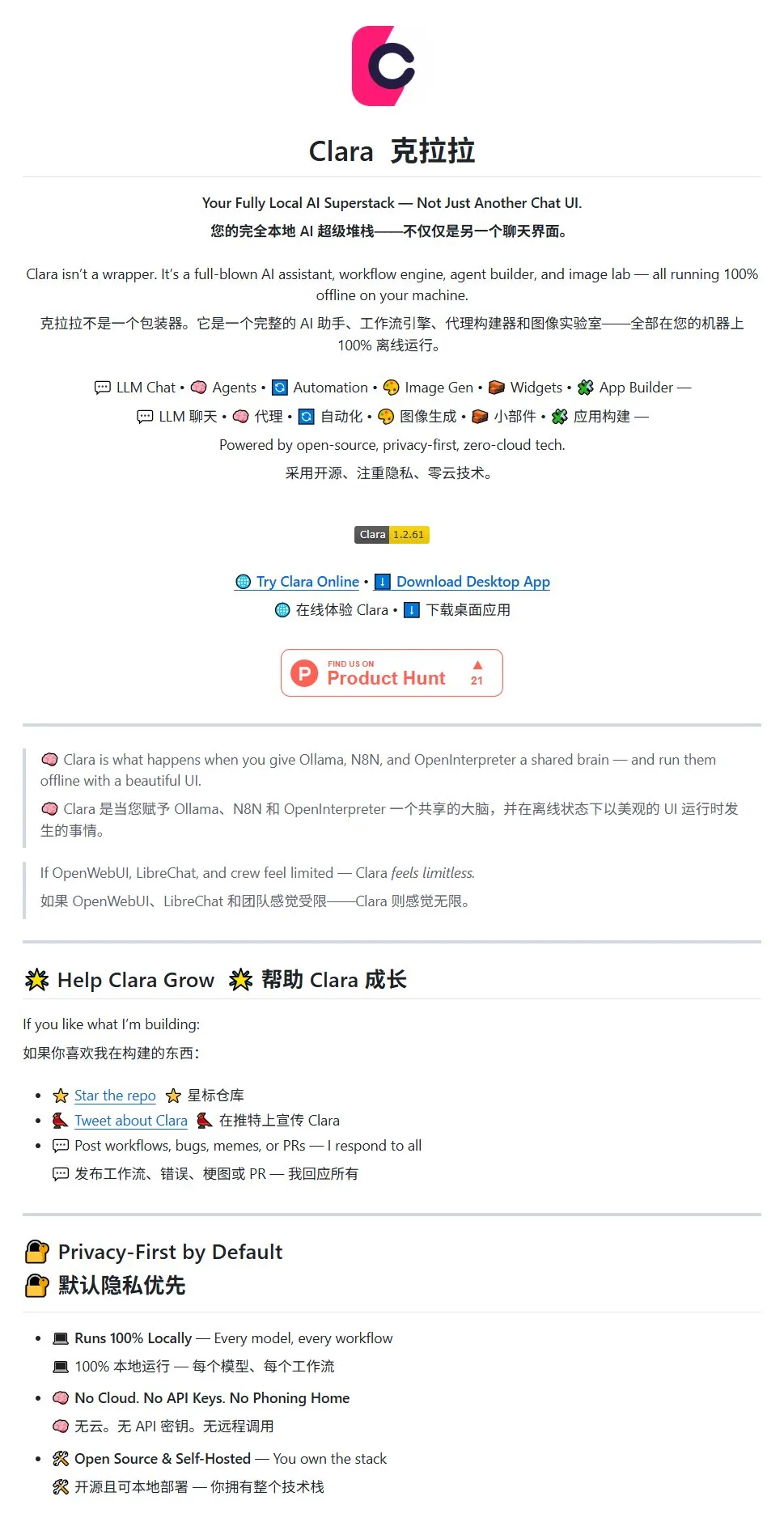

ClaraVerse: An “All-in-One” Application Integrating Multiple AI Tools: An AI tool suite named ClaraVerse has been shared by the community. It integrates a chat interface, AI components, Ollama (for local large model execution), n8n (for workflows/scheduled tasks), AI Agent templates, ComfyUI (for image generation), and an image library with AI indexing. It aims to provide users with a one-stop AI work platform, simplifying the use and switching between different AI tools. (Source: karminski3)

Qdrant Vector Database Integrates Microsoft’s NLWeb Protocol: Vector database Qdrant announced it is one of the first partners for Microsoft’s NLWeb open protocol, unveiled at the Build conference. NLWeb aims to transform traditional search boxes into semantic, intent-aware interfaces based on natural language. By integrating with Qdrant, websites can leverage it for fast, filtered vector searches, providing semantically relevant results without significant modifications to frontend or backend logic. (Source: qdrant_engine)
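
NLWeb’s own schema and Qdrant’s client API are not shown here. Instead, the minimal pure-Python sketch below illustrates what a “filtered vector search” does, assuming toy 3-dimensional embeddings and hypothetical payload fields (`type`, `diet`): restrict candidates by metadata, then rank them by cosine similarity to the query. Qdrant itself applies such filters inside its vector index rather than scanning every point.

```python
import math
from typing import Any, Dict, List, Optional, Tuple

Point = Dict[str, Any]  # {"id": ..., "vector": [...], "payload": {...}}


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def filtered_search(query: List[float], points: List[Point],
                    must: Optional[Dict[str, Any]] = None,
                    top_k: int = 3) -> List[Tuple[Any, float]]:
    """Keep points whose payload matches every `must` condition, then rank by cosine."""
    must = must or {}
    candidates = [p for p in points
                  if all(p["payload"].get(key) == value for key, value in must.items())]
    scored = [(p["id"], cosine(query, p["vector"])) for p in candidates]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Toy "embeddings"; a real site would produce these with an embedding model.
points = [
    {"id": 1, "vector": [0.9, 0.1, 0.0], "payload": {"type": "recipe", "diet": "vegan"}},
    {"id": 2, "vector": [0.8, 0.2, 0.1], "payload": {"type": "recipe", "diet": "omnivore"}},
    {"id": 3, "vector": [0.1, 0.9, 0.2], "payload": {"type": "recipe", "diet": "vegan"}},
    {"id": 4, "vector": [0.95, 0.05, 0.0], "payload": {"type": "article", "diet": "vegan"}},
]
query = [1.0, 0.0, 0.0]  # stands in for the embedding of a query like "vegan pasta recipe"
results = filtered_search(query, points, must={"type": "recipe", "diet": "vegan"}, top_k=2)
print(results)
```

Point 4 is the closest vector overall but is excluded by the `type` filter, which is exactly the behavior a natural-language site search needs when the user’s intent implies constraints.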

📚 Learning

Researchers Propose Visual Planning: A Reasoning Paradigm Based Purely on Image Sequences: Yi Xu and colleagues have proposed a new reasoning paradigm called “Visual Planning.” It aims to let models think and plan entirely through sequences of images, much as humans picture the steps of a task in their minds, without relying on language or textual reasoning. This approach explores whether AI can perform complex reasoning within non-linguistic representations, offering new directions for multimodal AI. (Source: madiator)

Stanford and Other Institutions Launch Terminal-Bench: A Benchmark for Evaluating AI Agents’ Terminal Task Capabilities: Researchers from Stanford University and the Laude Institute have introduced Terminal-Bench, a framework and benchmark for evaluating how well AI agents complete complex tasks in real terminal environments. Since many AI agents (such as Claude Code and Codex CLI) do valuable work by interacting with terminals, the benchmark aims to quantify their practical effectiveness and drive improvements in agent capabilities for real-world deployment. (Source: madiator | andersonbcdefg)

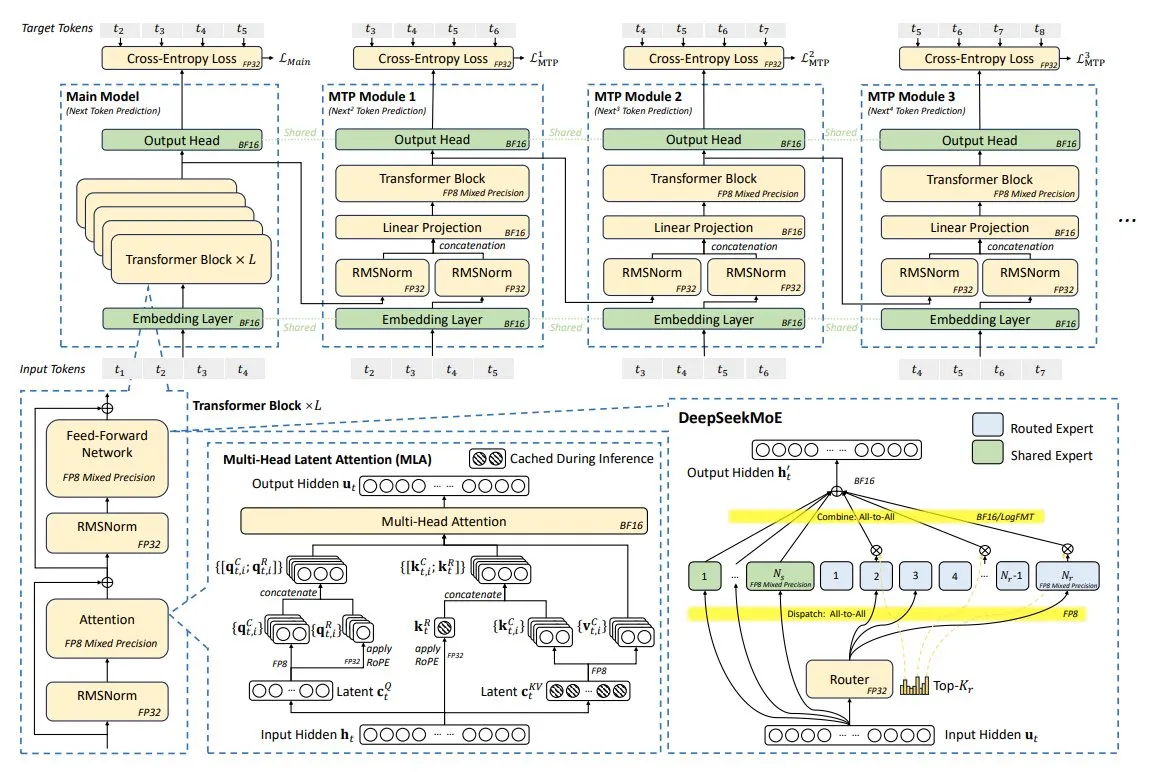

DeepSeek-V3 Technical Deep Dive: Hardware-Software Co-design Achieves Efficient Model: The DeepSeek-V3 model was trained on only 2048 NVIDIA H800 GPUs through hardware-software co-design. Its key innovations include Multi-head Latent Attention (MLA), Mixture of Experts (MoE), FP8 mixed-precision training, and a multi-plane network topology. These technologies work together to achieve superior model performance at a lower cost, representing a new trend in AI model design towards greater cost-effectiveness. (Source: TheTuringPost)
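
To illustrate the MoE idea, the minimal sketch below routes one token to a few experts, loosely following the gating described in DeepSeek-V3’s technical report as we understand it: sigmoid affinity scores, top-k selection, normalization over the selected experts, and an always-active shared expert. The experts are toy scaling functions standing in for feed-forward networks, the router logits are made up, and DeepSeek-V3’s load-balancing bias terms, MLA, and FP8 training are not modeled.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def route_token(logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Select the top-k routed experts for one token and normalize their gates."""
    scores = [sigmoid(z) for z in logits]  # per-expert affinity in (0, 1)
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    total = sum(scores[i] for i in top)
    return [(i, scores[i] / total) for i in top]  # selected gates sum to 1


def moe_forward(x: Vector, logits: List[float], experts: List[Expert],
                shared: Expert, k: int = 2) -> Vector:
    """Shared expert always runs; only the k selected routed experts are evaluated."""
    out = shared(x)
    for idx, gate in route_token(logits, k):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


def make_expert(scale: float) -> Expert:
    """Toy expert: scales its input (stands in for a feed-forward network)."""
    return lambda v: [scale * t for t in v]


experts = [make_expert(s) for s in (1.0, 2.0, 3.0, 4.0)]
shared = make_expert(1.0)
logits = [0.1, 2.0, -1.0, 1.5]  # router logits for one token (made-up values)
routes = route_token(logits, k=2)
y = moe_forward([1.0, 1.0], logits, experts, shared, k=2)
print(routes, y)
```

The cost saving comes from the loop in `moe_forward`: per token, compute scales with k plus the shared expert, not with the total number of experts.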

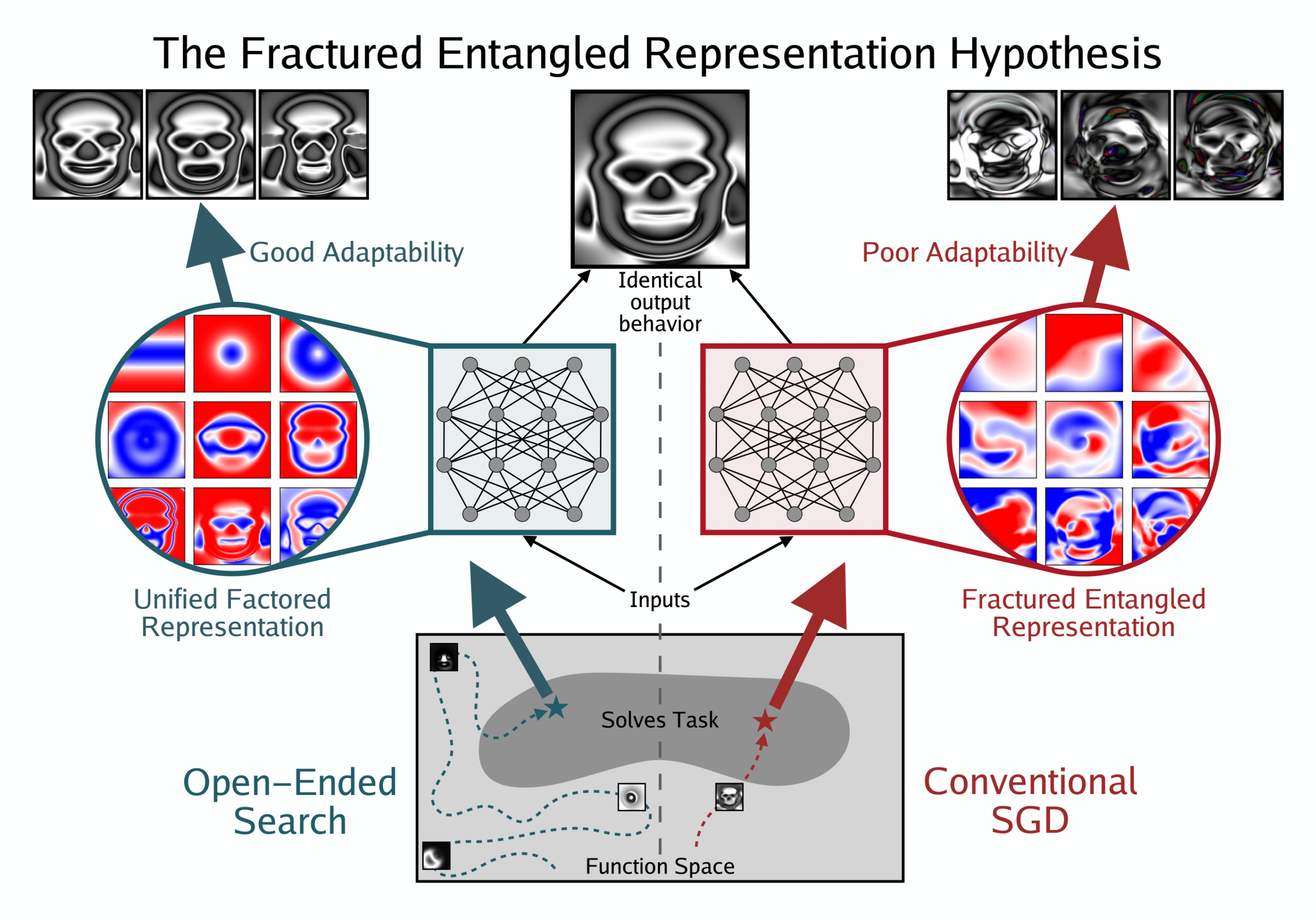

New Paper Explores Representational Optimism in Deep Learning: The Fractured and Entangled Representations Hypothesis: Kenneth Stanley et al. published a position paper, “Questioning Representational Optimism in Deep Learning: The Fractured and Entangled Representations Hypothesis.” The study finds that networks discovered through an unconventional open-ended search process, each producing a single image, have elegant and modular internal representations, whereas networks trained with SGD to produce the same output have disorganized, entangled representations. This suggests that good output behavior can hide poor internal representations, but also that better representations are achievable. The finding has significant implications for model generalization, creativity, and learning ability, and offers new insights for improving foundation models and LLMs. (Source: hardmaru | togelius | bengoertzel)

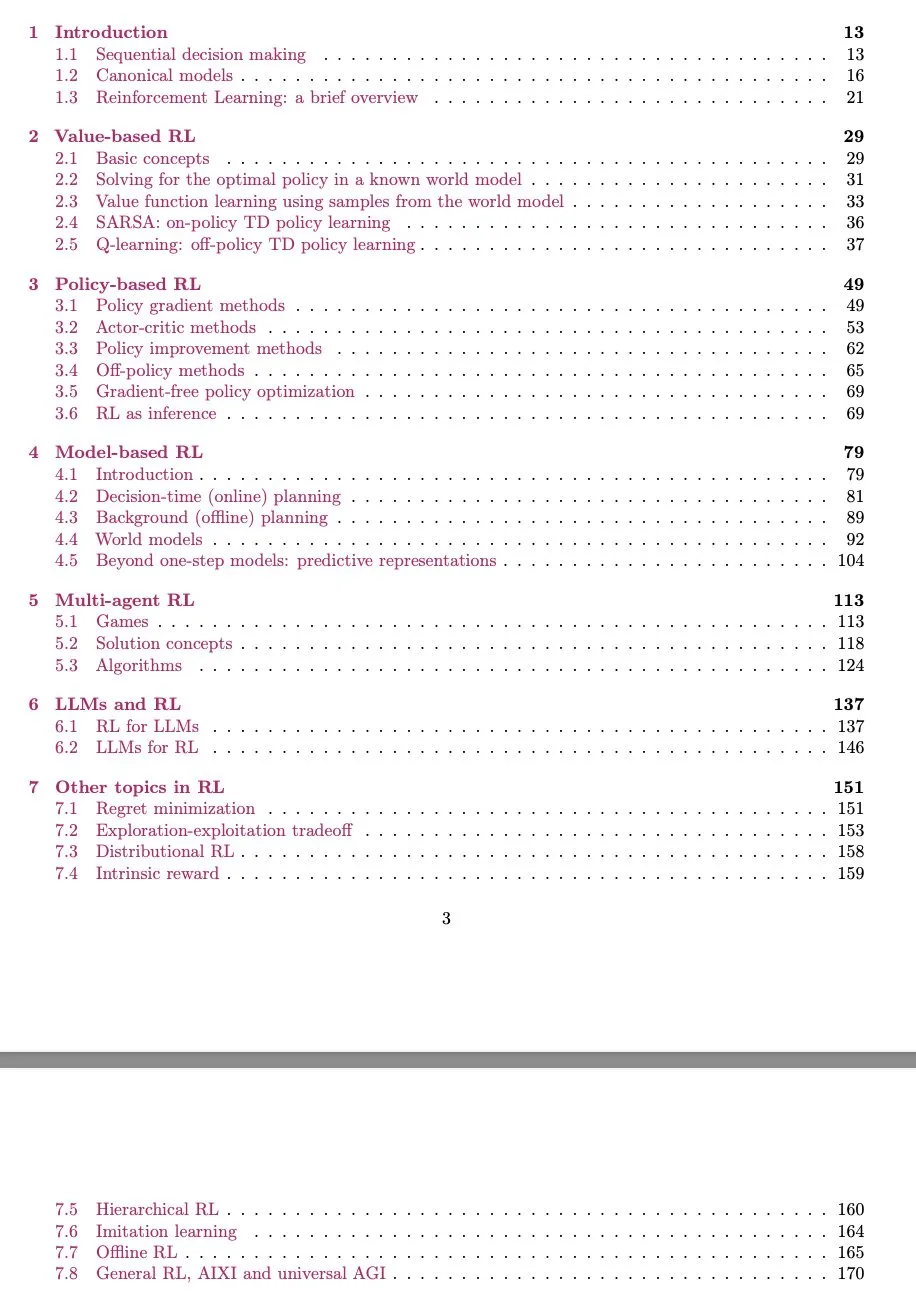

RL Tutorial Updated, Focusing on LLM Chapters (DPO, GRPO, Chain-of-Thought, etc.): Sirbayes has released a new version of their Reinforcement Learning (RL) tutorial. This update mainly expands the Large Language Model (LLM) chapter with recent advances such as DPO (Direct Preference Optimization), GRPO (Group Relative Policy Optimization), and Chain-of-Thought reasoning (“thinking”). Chapters on Multi-Agent Reinforcement Learning (MARL), Model-Based Reinforcement Learning (MBRL), Offline Reinforcement Learning, and DPG (Deterministic Policy Gradient) also received minor updates. (Source: sirbayes)
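
For readers new to the two methods named above, the sketch below writes out their commonly cited core formulas: the DPO loss, −log σ(β·[(log π(y_w) − log π_ref(y_w)) − (log π(y_l) − log π_ref(y_l))]), and GRPO’s group-relative advantage, which standardizes each sampled answer’s reward against the other answers to the same prompt. It is a minimal scalar sketch, not code from the tutorial; using the population standard deviation in GRPO is one common choice among several.

```python
import math
from typing import List


def dpo_loss(logp_chosen: float, logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair from summed response log-probabilities."""
    # Implicit reward margin: how much more the policy prefers the chosen response
    # than the frozen reference model does.
    margin = (logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected)
    z = beta * margin
    # -log(sigmoid(z)) = softplus(-z), split into two branches to avoid overflow
    return math.log1p(math.exp(-z)) if z >= 0 else -z + math.log1p(math.exp(z))


def grpo_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Group-relative advantages: standardize rewards within one prompt's group."""
    mean = sum(rewards) / len(rewards)
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / len(rewards))
    return [(r - mean) / (std + eps) for r in rewards]


# Policy identical to the reference: the margin is 0, so the loss equals log(2).
neutral = dpo_loss(-12.0, -15.0, -12.0, -15.0)
# Policy shifted toward the chosen response: the loss drops below log(2).
improved = dpo_loss(-10.0, -16.0, -12.0, -15.0)
# Four sampled answers to one prompt, rewarded 1 (correct) or 0 (wrong).
advantages = grpo_advantages([1.0, 0.0, 0.0, 1.0])
print(neutral, improved, advantages)
```

GRPO’s group baseline is what lets it drop the separate value network that PPO uses: the other samples for the same prompt serve as the reference point.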

ByteDance Proposes Pre-trained Model Averaging (PMA) Strategy: A research team from ByteDance published a paper proposing a new framework for model merging during large language model pre-training – the Pre-trained Model Averaging (PMA) strategy. The study found that merging checkpoints trained with a constant learning rate can not only achieve performance comparable to or even better than continuous training but also significantly improve training efficiency. This research offers a new approach to optimizing the efficiency of large model pre-training and validates the potential of model merging in enhancing performance and efficiency. (Source: teortaxesTex)
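
As a rough illustration of checkpoint merging, the minimal sketch below uniformly averages parameters from several checkpoints, such as those saved during a constant-learning-rate phase. It assumes checkpoints are plain dictionaries mapping parameter names to flat lists of floats (real training code would average framework tensors), and it uses the simplest uniform weighting rather than any specific scheme from the paper.

```python
from typing import Dict, List

Checkpoint = Dict[str, List[float]]  # parameter name -> flattened values


def average_checkpoints(checkpoints: List[Checkpoint]) -> Checkpoint:
    """Uniformly average every parameter across checkpoints (simple moving average)."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    n = len(checkpoints)
    merged: Checkpoint = {}
    for name in checkpoints[0]:
        tensors = [ckpt[name] for ckpt in checkpoints]  # same parameter in each checkpoint
        merged[name] = [sum(vals) / n for vals in zip(*tensors)]
    return merged


# Three toy checkpoints of a tiny model with parameters "w" and "b".
ckpts = [
    {"w": [1.0, 2.0], "b": [0.0]},
    {"w": [2.0, 4.0], "b": [1.0]},
    {"w": [3.0, 6.0], "b": [2.0]},
]
merged = average_checkpoints(ckpts)
print(merged)
```

Averaging is cheap relative to training: it needs only the saved weights, no extra gradient steps, which is why it is attractive as a substitute for part of a learning-rate decay phase.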

Alibaba’s Tongyi Lab New Research ZeroSearch: LLM Acts as Search Engine, Enhancing Reasoning Without APIs: Alibaba’s Tongyi Lab proposed ZeroSearch, a framework in which an LLM simulates search engine behavior during reinforcement learning instead of calling real search engine APIs, reducing costs and improving training stability. Lightweight fine-tuning teaches the simulation LLM to generate both useful and noisy documents, and a curriculum that gradually increases the amount of noise strengthens the model’s reasoning and robustness in complex retrieval scenarios. Experiments show that even a 3B-parameter LLM serving as the retrieval module can effectively improve search capabilities. (Source: QbitAI)
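
The curriculum idea can be sketched as a schedule that raises the probability of serving noisy documents as training progresses. The exponential-shaped ramp, its default parameters, and the helper names below are assumptions for illustration, not ZeroSearch’s published settings; the actual document generation by the fine-tuned simulation LLM is replaced by a placeholder string.

```python
import random


def noise_probability(step: int, total_steps: int, p_start: float = 0.0,
                      p_end: float = 0.5, base: float = 4.0) -> float:
    """Assumed curriculum: chance of serving noisy documents rises from p_start to p_end."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    ramp = (base ** frac - 1.0) / (base - 1.0)  # 0 at the start, 1 at the end, convex between
    return p_start + ramp * (p_end - p_start)


def simulated_search(query: str, step: int, total_steps: int, rng: random.Random) -> str:
    """Decide whether the simulated engine should return useful or noisy results.

    In ZeroSearch a fine-tuned LLM generates the documents; this placeholder only
    reports which kind of documents it would be asked to produce.
    """
    mode = "noisy" if rng.random() < noise_probability(step, total_steps) else "useful"
    return f"[{mode} documents for: {query}]"


rng = random.Random(0)
schedule = [round(noise_probability(s, 100), 3) for s in (0, 25, 50, 75, 100)]
print(schedule)
print(simulated_search("who proposed ZeroSearch", step=90, total_steps=100, rng=rng))
```

Starting with clean results lets the policy first learn the search-then-answer format; raising the noise later forces it to learn to discount unhelpful documents.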

CUHK’s New Algorithm RXTX Speeds Up XXᵀ Computation: Researchers from The Chinese University of Hong Kong (CUHK) have proposed RXTX, a new algorithm for computing the product of a matrix and its transpose (XXᵀ). The algorithm recursively multiplies 4×4 block matrices and was discovered using machine learning search combined with combinatorial optimization. Compared with existing algorithms based on Strassen recursion, RXTX reduces the asymptotic multiplication constant by about 5% and requires fewer total operations for n ≥ 256. In tests on 6144×6144 matrices, it ran 9% faster than the default BLAS implementation. The research has potential implications for fields such as data analysis, chip design, and LLM training. (Source: QbitAI)
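
Why is XXᵀ a special case? The result is symmetric, so only about half of its entries need to be computed; BLAS exposes this through its SYRK (symmetric rank-k update) routine. The minimal sketch below shows only that symmetry saving by counting scalar multiplications; RXTX’s recursive 4×4 block scheme, which goes further, is not reproduced here.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def gram_naive(X: Matrix) -> Tuple[Matrix, int]:
    """Compute X Xᵀ entry by entry; returns (result, number of multiplications)."""
    n, m = len(X), len(X[0])
    G = [[0.0] * n for _ in range(n)]
    mults = 0
    for i in range(n):
        for j in range(n):
            G[i][j] = sum(X[i][k] * X[j][k] for k in range(m))
            mults += m
    return G, mults


def gram_symmetric(X: Matrix) -> Tuple[Matrix, int]:
    """Same product, but compute only the upper triangle and mirror it."""
    n, m = len(X), len(X[0])
    G = [[0.0] * n for _ in range(n)]
    mults = 0
    for i in range(n):
        for j in range(i, n):  # j >= i: upper triangle including the diagonal
            G[i][j] = G[j][i] = sum(X[i][k] * X[j][k] for k in range(m))
            mults += m
    return G, mults


X = [[1.0, 2.0, 0.5], [0.0, -1.0, 3.0], [2.0, 2.0, 2.0], [1.5, 0.0, -2.0]]
G1, naive_mults = gram_naive(X)
G2, sym_mults = gram_symmetric(X)
print(naive_mults, sym_mults)  # n*n*m = 48 versus n*(n+1)/2*m = 30 for this 4x3 input
```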

Paper AdaptThink: Teaching Reasoning Models When to “Think”: This research proposes AdaptThink, a framework that uses reinforcement learning to teach reasoning models to adaptively choose whether to engage in deep thinking (like Chain-of-Thought) based on problem difficulty. Its core includes a constrained optimization objective (encouraging reduced thinking while maintaining performance) and an importance sampling strategy (balancing samples with and without thinking). Experiments show that AdaptThink can significantly reduce inference costs and improve performance. For example, on math datasets, it reduced the average response length of DeepSeek-R1-Distill-Qwen-1.5B by 53% and increased accuracy by 2.4%. (Source: HuggingFace Daily Papers)

Paper VisionReasoner: Unifying Visual Perception and Reasoning via Reinforcement Learning: VisionReasoner is a unified framework designed to handle multiple visual perception tasks with a shared model. It employs a multi-object cognitive learning strategy and systematic task reconstruction to enhance the model’s ability to analyze visual input and perform structured reasoning for ten different tasks, including detection, segmentation, and counting. Experimental results show that VisionReasoner outperforms models like Qwen2.5VL on benchmarks such as COCO (detection), ReasonSeg (segmentation), and CountBench (counting). (Source: HuggingFace Daily Papers)

Paper AdaCoT: Achieving Pareto-Optimal Adaptive Chain-of-Thought Triggering via Reinforcement Learning: Chain-of-Thought (CoT) reasoning adds unnecessary computational overhead when Large Language Models (LLMs) handle simple queries. To address this, the AdaCoT framework uses reinforcement learning (PPO) to let LLMs decide adaptively whether to invoke CoT based on a query’s implicit complexity, balancing model performance against the cost of invoking CoT. It also applies Selective Loss Masking (SLM) to prevent the decision boundary from collapsing. Experiments show that AdaCoT significantly reduces unnecessary CoT triggering (to as low as 3.18%) and response tokens (by 69.06%) while maintaining high performance on complex tasks. (Source: HuggingFace Daily Papers)
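
To make “Pareto-optimal” concrete, the sketch below filters a set of configurations down to those that no other configuration beats on both axes (lower CoT triggering rate and higher accuracy). The configuration names and numbers are made up for illustration and are not results from the paper.

```python
from typing import List, Tuple

Config = Tuple[str, float, float]  # (name, CoT triggering rate, accuracy)


def dominates(a: Config, b: Config) -> bool:
    """True if a triggers CoT no more often, is no less accurate, and is strictly better once."""
    _, rate_a, acc_a = a
    _, rate_b, acc_b = b
    return rate_a <= rate_b and acc_a >= acc_b and (rate_a < rate_b or acc_a > acc_b)


def pareto_front(configs: List[Config]) -> List[str]:
    """Names of configurations not dominated by any other configuration."""
    return [c[0] for c in configs
            if not any(dominates(other, c) for other in configs if other is not c)]


configs = [
    ("always_cot", 1.00, 0.80),
    ("never_cot", 0.00, 0.60),
    ("adaptive_a", 0.40, 0.79),
    ("adaptive_b", 0.50, 0.75),  # worse than adaptive_a on both axes
]
print(pareto_front(configs))  # ['always_cot', 'never_cot', 'adaptive_a']
```

An adaptive policy is worthwhile when it lands on this frontier near the high-accuracy end while triggering CoT far less often than the always-think baseline.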

Paper GIE-Bench: A Grounded Evaluation Benchmark for Text-Guided Image Editing: GIE-Bench was proposed to evaluate text-guided image editing models more accurately. It scores models along two dimensions: functional correctness, which verifies that an edit succeeded through automatically generated multiple-choice questions, and image content preservation, which uses object-aware masking and a preservation score to check that non-target regions stay consistent. The benchmark includes over 1,000 high-quality editing examples across 20 categories. Evaluations show that GPT-Image-1 leads in instruction following but still needs improvement in preserving regions unrelated to the edit. (Source: HuggingFace Daily Papers)
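
The summary does not spell out GIE-Bench’s exact preservation score, so the sketch below uses an assumed stand-in: PSNR computed only over pixels outside the edit mask, which captures the idea that non-target regions should stay unchanged. Images are tiny grayscale grids with values in [0, 1], and the helper name `masked_psnr` is hypothetical.

```python
import math
from typing import List

Image = List[List[float]]  # grayscale, values in [0, 1]
Mask = List[List[bool]]    # True where the edit is allowed to change pixels


def masked_psnr(original: Image, edited: Image, edit_mask: Mask, max_val: float = 1.0) -> float:
    """PSNR over pixels outside the edit mask; higher means better preservation."""
    squared_error, count = 0.0, 0
    for row_o, row_e, row_m in zip(original, edited, edit_mask):
        for o, e, in_edit in zip(row_o, row_e, row_m):
            if not in_edit:  # only score the region that should be untouched
                squared_error += (o - e) ** 2
                count += 1
    if count == 0:
        raise ValueError("edit mask covers the whole image")
    mse = squared_error / count
    return math.inf if mse == 0 else 10 * math.log10(max_val ** 2 / mse)


original = [[0.0, 0.0], [0.0, 0.0]]
mask = [[True, False], [False, False]]    # only the top-left pixel is the edit target
clean_edit = [[0.9, 0.0], [0.0, 0.0]]     # changes only the target pixel
leaky_edit = [[0.9, 0.1], [0.0, 0.0]]     # also alters a pixel that should be preserved
print(masked_psnr(original, clean_edit, mask), masked_psnr(original, leaky_edit, mask))
```

Masking is what separates the two GIE-Bench dimensions: a large change inside the target region does not count against preservation, while any drift outside it does.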

Paper InstanceGen: Image Generation with Instance-Level Instructions: Addressing the difficulty pre-trained text-to-image models face in accurately capturing semantics for complex prompts involving multiple objects and instance-level attributes, InstanceGen proposes a new technique. This technique combines image-based fine-grained structured initialization (directly provided by contemporary image generation models) with LLM-based instance-level instructions. This enables generated images to better adhere to all parts of the text prompt, including object counts, instance-level attributes, and spatial relationships between instances. (Source: HuggingFace Daily Papers)

💼 Business

Tsinghua-Affiliated Embodied Intelligence Company “Qianjue Technology” Completes Pre-A+ Funding Round, Total Funding Reaches Hundreds of Millions of RMB: Embodied brain company “Qianjue Technology” recently completed a new Pre-A+ funding round, with investments from Junshan Investment, Xiangfeng Investment, and Shixi Capital, bringing its total funding to hundreds of millions of RMB. Incubated by core members from Tsinghua University’s Department of Automation and related AI research institutions, the company focuses on developing general-purpose “embodied brain” systems, emphasizing multi-modal real-time perception, continuous task planning, and autonomous execution capabilities. It has achieved product-level deployment in scenarios like home services and logistics, and collaborates with several leading robotics manufacturers and consumer electronics companies. (Source: 36Kr)

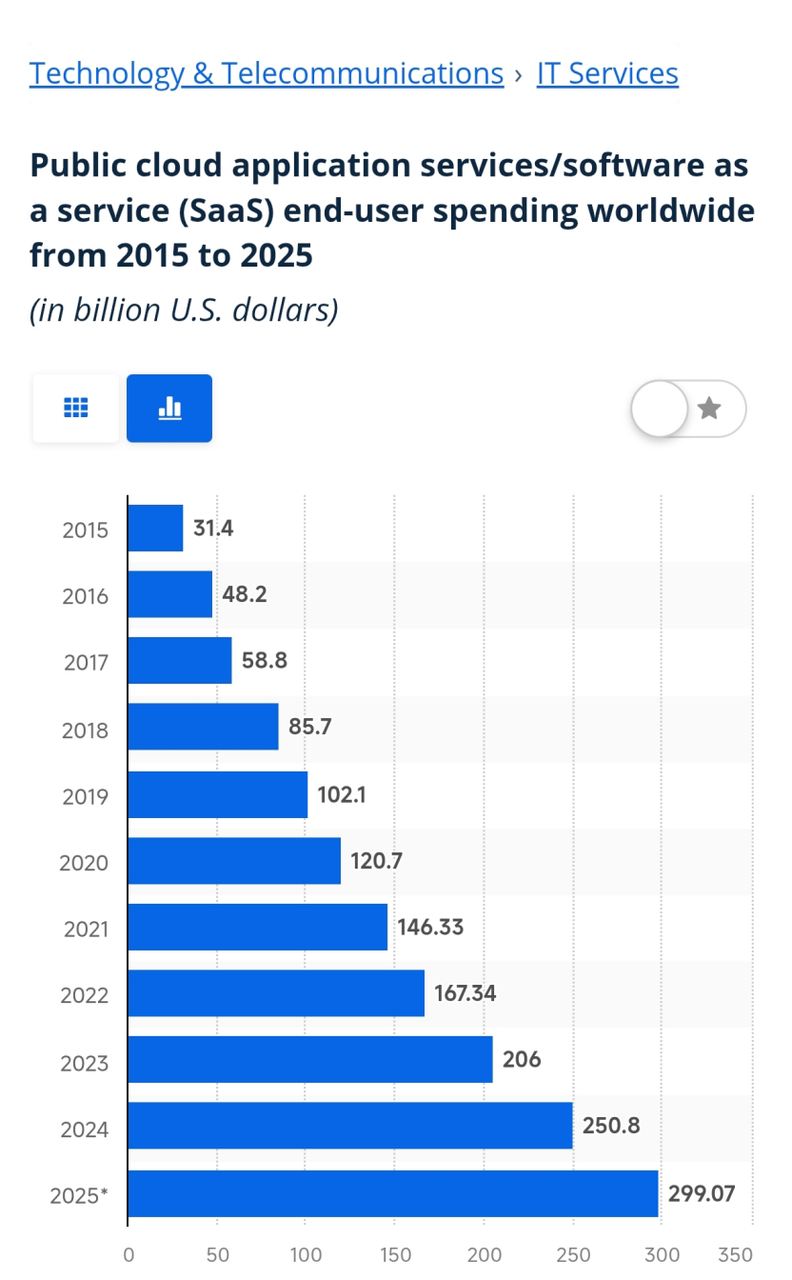

AI Agents May Reshape the SaaS Market Landscape: Microsoft CEO Satya Nadella predicted that SaaS applications will face disruption in the AI Agent era, sparking widespread industry discussion about the future of AI Agents and SaaS. With their ability to autonomously perceive, decide, and act, AI Agents are expected to address pain points of traditional SaaS in customization, data interoperability, and user experience, such as automatically creating workflows through natural language interaction, integrating data across applications, and proactively providing business suggestions. Although AI Agents currently face challenges in enterprise applications, including LLM capability limitations, cost, and data security, vendors like Salesforce, Microsoft, and Yonyou have begun integrating AI Agents into their SaaS products, exploring new models that merge with or disrupt SaaS. (Source: 36Kr)

AI Reshapes Compensation Management: From Data Analysis to Intelligent Decision-Making and Communication: Artificial intelligence is profoundly transforming compensation management. A Korn Ferry report shows increasing application of AI in compensation communication, external benchmarking, and job skill architecture. In the future, AI is expected to transition from data-driven to intelligent decision-making by processing larger-scale, more diverse data (including social platforms, third-party surveys), such as predicting employee attrition risk, evaluating incentive effectiveness, dynamically adjusting salary ranges, and achieving personalized incentives. At the same time, AI also faces challenges like data privacy, algorithmic “black boxes,” and result credibility. Effective compensation communication is even more crucial in the digital intelligence era, and AI tools can assist managers in systematic, personalized communication, enhancing employees’ sense of fairness and satisfaction. (Source: 36Kr)

🌟 Community

Sundar Pichai Posts “Deep Thought” Photo, Teasing Google I/O: Google CEO Sundar Pichai posted a photo of himself in “deep thought” on social media, sparking widespread anticipation in the community for the upcoming Google I/O conference. The photo was reposted and interpreted by several AI KOLs, who generally took it as a hint of major AI announcements from Google, particularly regarding the Gemini model and its applications. Community members are speculating about possible new features, models, or strategies. (Source: demishassabis | YiTayML | zacharynado | lmthang | scaling01 | brickroad7 | jack_w_rae | TheTuringPost | shaneguML | op7418)

AI Agent Programming Capabilities Spark Heated Discussion, Altman Optimistic About Automating Unfinished Projects: OpenAI CEO Sam Altman said he looks forward to AI programming agents (such as Codex) finishing projects that stalled at 80% completion and maintaining them automatically. The community compared and discussed the capabilities of different AI programming agents (such as Codex, Jules, and Claude Code), focusing on task planning, virtual machine environments (e.g., internet connectivity), and performance on complex long-running tasks. There is a general consensus that AI Agents have immense potential in software development, but the various models still differ in their specific implementations and effectiveness. (Source: sama | mathemagic1an)

Universities’ Introduction of AI-Generated Content Detection Sparks Controversy, “Preface to the Tengwang Pavilion” Judged 100% AI-Generated: Several universities in China have included “AI-generated content detection rate” in thesis reviews, leading students to use various methods to evade detection and teachers to struggle between AI judgments and manual assessments. AI detection tools, due to reliance on database comparisons and pattern biases, often misjudge classic works (e.g., “Preface to the Tengwang Pavilion” rated 100% AI, Zhu Ziqing’s “Moonlight over the Lotus Pond” 62.88%) and standardized academic writing as AI-generated. This phenomenon has spawned a gray industry for “reducing AI rate,” prompting profound reflections on the limitations of AI detection technology, academic evaluation standards, and the essence of education. (Source: 36Kr)

Discussion on the Thinking Patterns of the Next Generation Growing Up in the AI Era: A Reddit community discussed how the thinking patterns of the new generation of children growing up in an AI environment will significantly differ from previous generations. They will be accustomed to interacting with AI assistants, and their learning focus may shift from memorizing facts to asking questions and navigating systems, from trial-and-error learning to rapid iteration. This early integration with machine logic could profoundly reshape their curiosity, memory, intuition, and even their definition of intelligence itself, raising questions about their future belief formation, system-building capabilities, and trust in their own thoughts. (Source: Reddit r/ArtificialInteligence)

Rapid Development of AI in Software Engineering Sparks Job Insecurity Among Developers: A 42-year-old software engineer who once earned $150,000 a year lost his job amid AI-driven shifts in the industry. After sending out over 800 resumes and landing few interviews, he now makes a living delivering food. His experience has sparked discussions about whether AI (like GitHub Copilot, Claude, ChatGPT) has begun to replace programmers on a large scale. Anthropic’s CEO once predicted AI would be able to generate the vast majority of code. Although Bureau of Labor Statistics data still shows software engineering as one of the fastest-growing professions, the tech industry’s layoff wave continues, and companies are using AI to cut costs and improve efficiency. This prompts reflection on how society should address AI-induced structural unemployment and build new “human + AI” collaboration paradigms. (Source: 36Kr)

Gender Bias in AI Algorithms: The Invisibility and Absence of “Her Data”: In the development of artificial intelligence, the problem of gender bias in algorithms is increasingly prominent. Due to historical and social reasons, female data is underrepresented in data collection (e.g., clinical trials, Wikipedia entries), leading AI to potentially produce biases against women in areas like medical diagnosis and content recommendation. For example, image recognition systems might misidentify men in kitchens as women, and search engine image results reinforce gender stereotypes. The gender imbalance in the AI industry is also considered a contributing factor. Addressing this issue requires a multi-pronged approach, including raising developer awareness, ensuring fair career opportunities for women, improving laws and regulations, establishing gender audit mechanisms for AI systems, and optimizing algorithms (e.g., data resampling, application of causal inference). (Source: 36Kr)

AI Agents Spark Discussion on SaaS Industry Transformation: Microsoft CEO Satya Nadella predicted that SaaS will face disruption in the AI Agent era. AI Agents, with their autonomous perception, decision-making, and action capabilities, are expected to address SaaS pain points in customization, data interoperability, and user experience. For example, AI Agents can automatically create workflows through natural language interaction, integrate data across applications, and proactively provide business advice. Currently, SaaS vendors like Salesforce, Microsoft, and Yonyou have begun integrating AI Agents, exploring new models that merge with or disrupt SaaS. Although AI Agents still face challenges in enterprise applications, such as LLM capabilities, cost, and data security, their transformative potential has garnered widespread industry attention. (Source: finbarrtimbers)

💡 Other

AI Generates Chinese Opera Style Tarot Cards: User @op7418 used the AI tool Lovart to create a set of Tarot cards in the style of Chinese opera. The design concept combines traditional opera content with the meanings expressed by the corresponding Tarot cards, showcasing AI’s potential in creative design and cultural fusion. (Source: op7418)

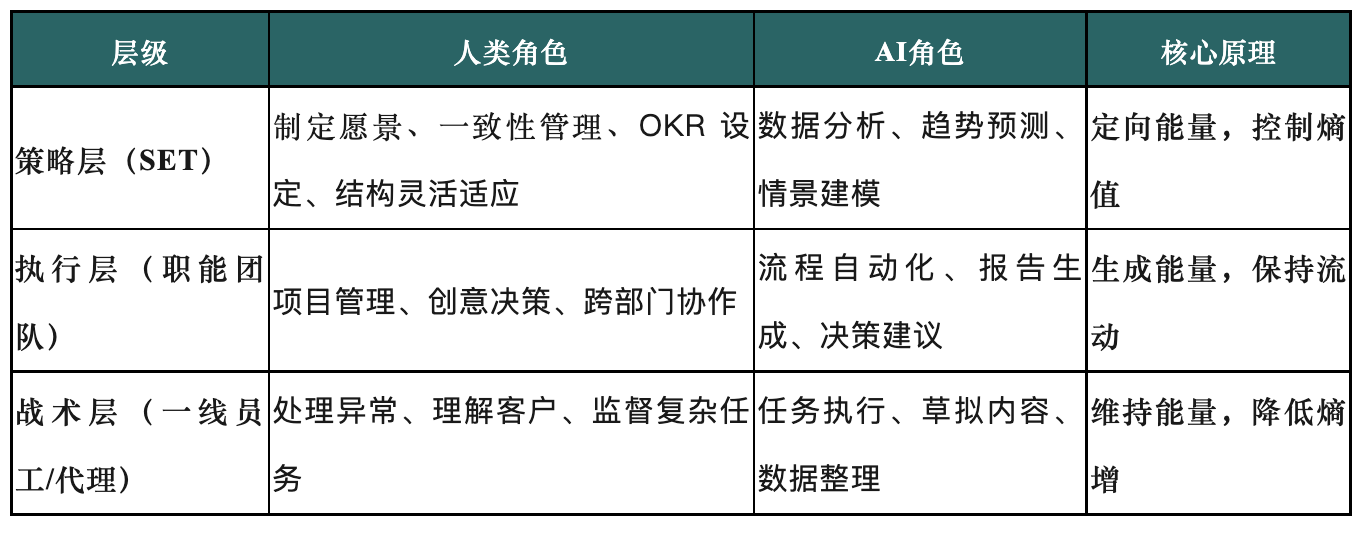

Reshaping Organizational Structure in the AI Era: The Rise of Strategic Execution Teams (SET): The article discusses how traditional organizational structures struggle to adapt to the complexity brought by the accelerating development of AI. It proposes a three-layer organizational model centered on “Strategic Execution Teams” (SET), aiming to make AI part of the team and achieve agile execution and intelligent scaling through reasonable human-machine collaboration mechanisms. SETs are responsible for translating strategy into cross-departmental actions, monitoring organizational entropy, flexibly adjusting strategies, and coordinating the collaboration of people, processes, and AI agents to unleash AI’s potential and drive strategy implementation. (Source: 36Kr)

Can Crowdsourced Fact-Checking Curb Social Media Misinformation?: Preslav Nakov, a professor at Mohamed bin Zayed University of Artificial Intelligence, discusses the impact of Meta replacing third-party fact-checkers with Community Notes. He believes that crowdsourced models like Community Notes (originating from X’s Birdwatch) have potential, but content moderation requires a combination of methods, including automatic filtering, crowdsourcing, and professional fact-checking. Drawing analogies to spam filtering and LLMs handling harmful content, he points out that each method has its pros and cons and should work synergistically. Research shows Community Notes can amplify the impact of professional fact-checkers; their focuses differ but conclusions are similar, making them complementary. (Source: MIT Technology Review)