Keywords: AlphaEvolve, AI algorithm design, multimodal AI, AI programming tools, self-evolving algorithms, large language models, AI agents, AlphaEvolve open source implementation, AI autonomous design of matrix multiplication algorithms, unified interface for multimodal AI, impact of AI programming tools on developers, performance of local large model Qwen 3

🔥 Focus

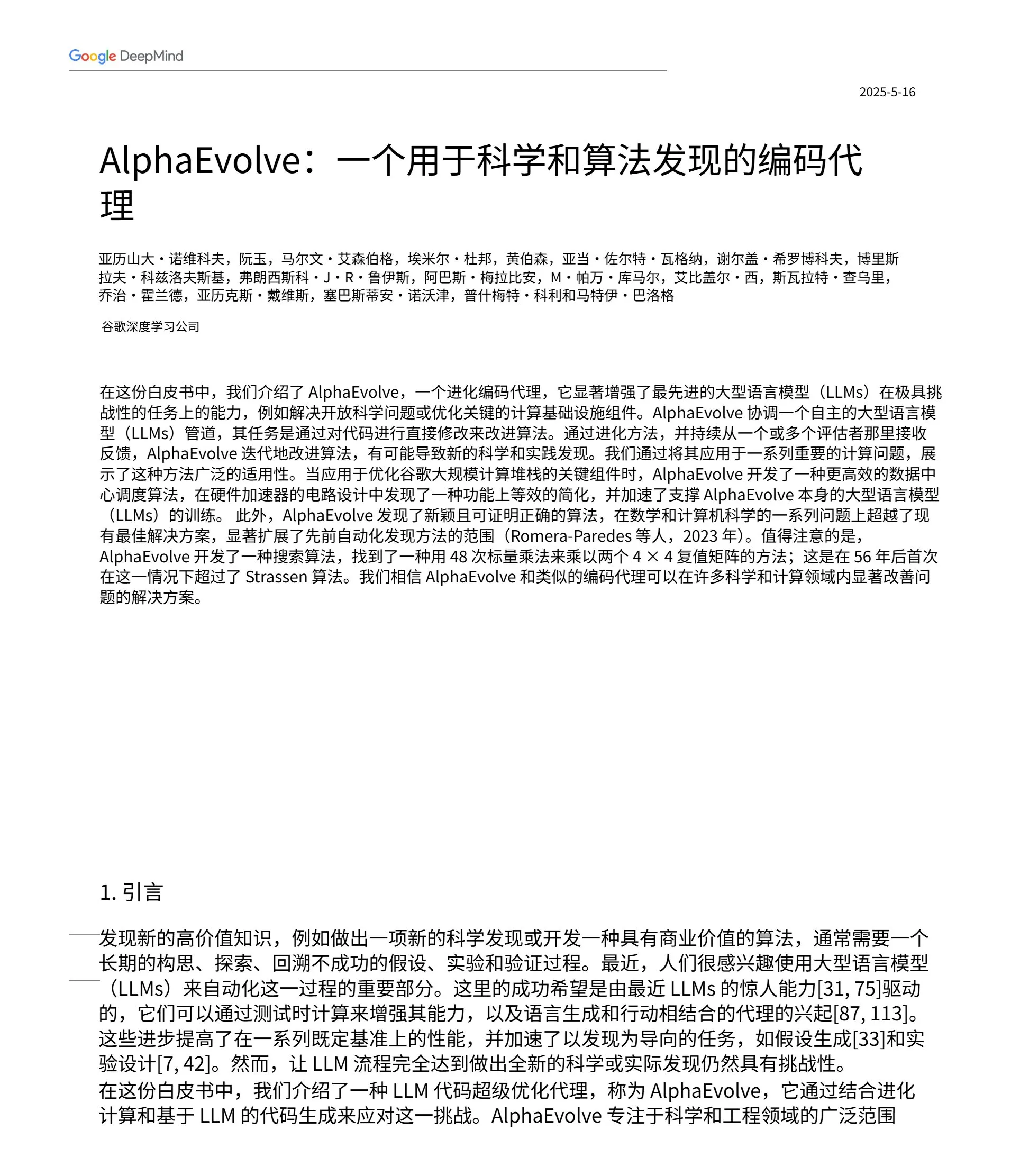

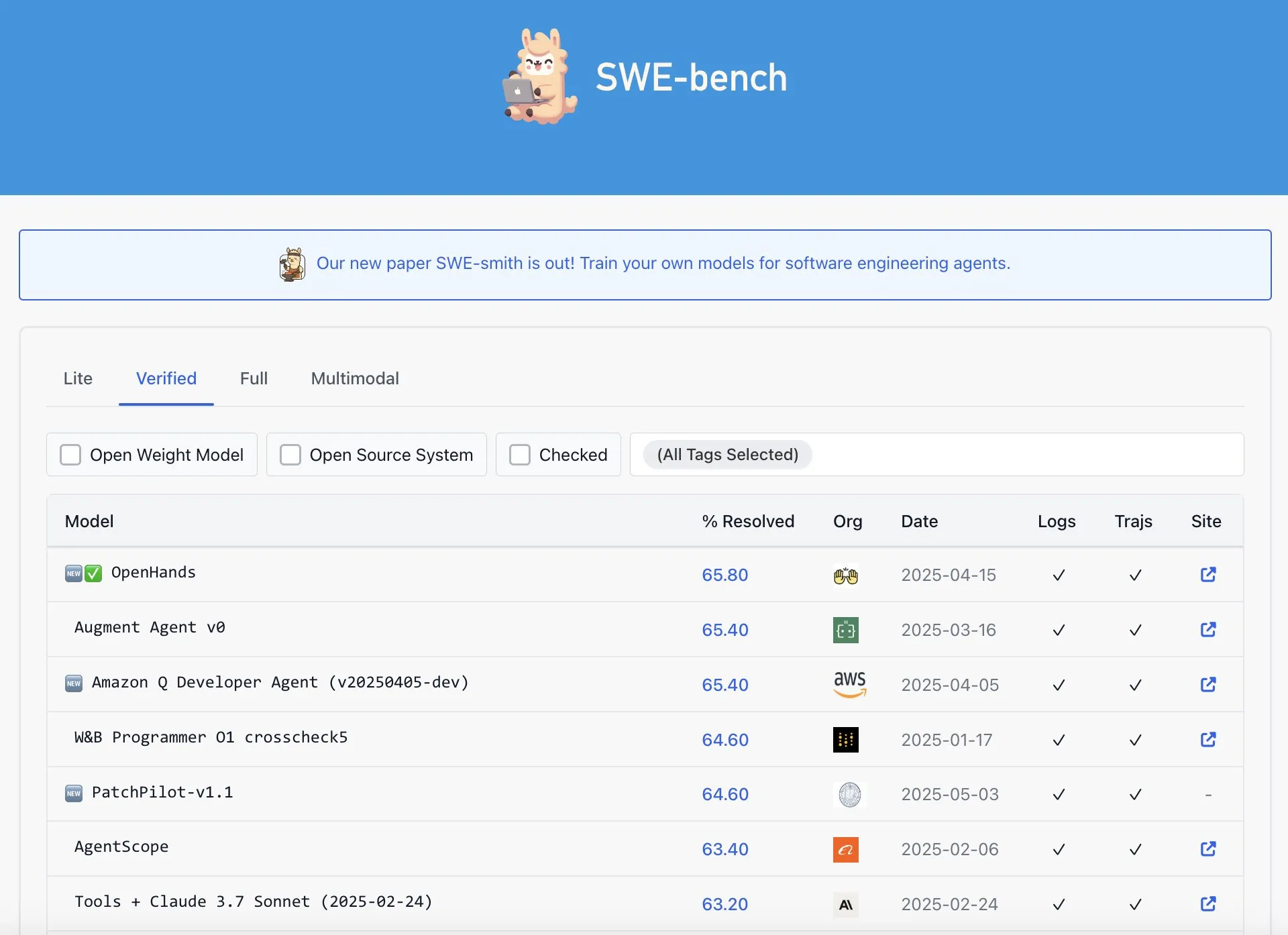

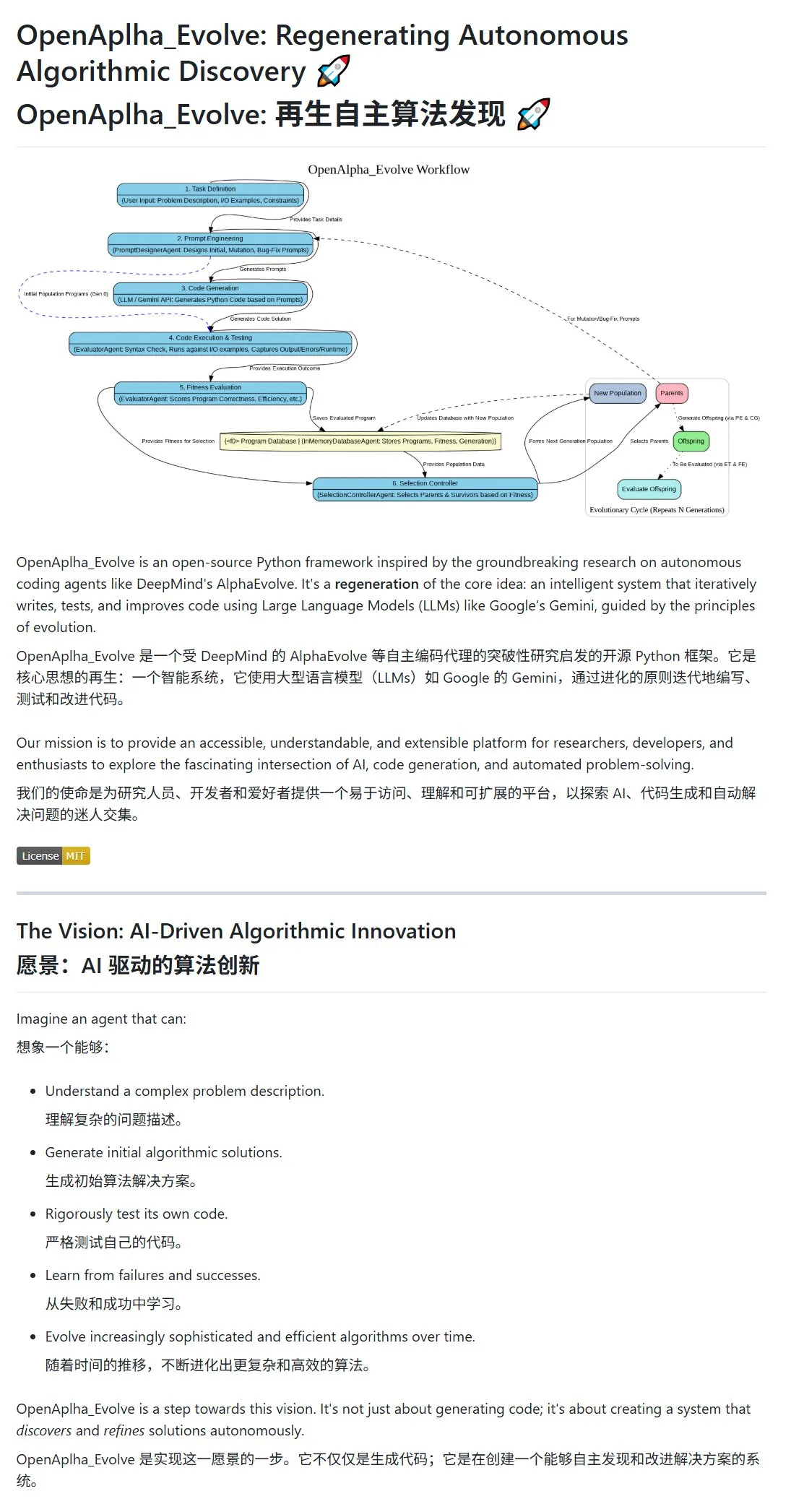

Google DeepMind Releases AlphaEvolve, an AI That Autonomously Designs and Evolves Algorithms: Google DeepMind has unveiled the AlphaEvolve project and its accompanying paper, showcasing an AI agent that autonomously designs, tests, and evolves more efficient algorithms. The system prompts large language models (such as Gemini) to generate candidate algorithmic solutions, then improves them in an evolutionary loop of fitness evaluation and survivor selection. The community responded quickly, and an open-source implementation, OpenAlpha_Evolve, has already emerged. Researchers have also used tools like Claude to verify AlphaEvolve’s results in areas such as matrix multiplication, demonstrating the potential of AI in algorithmic innovation. (Source: karminski3, karminski3, Reddit r/ClaudeAI, Reddit r/artificial)
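
The loop of candidate generation, fitness evaluation, and survivor selection described above can be sketched in a few lines. This is a minimal illustration under loud assumptions, not AlphaEvolve's or OpenAlpha_Evolve's actual code: the candidates are plain lists of numbers rather than programs, and `propose_variant` is a hypothetical stand-in for the LLM call that would rewrite a parent solution.

```python
import random


def fitness(candidate: list[float]) -> float:
    """Toy fitness: higher is better, maximal when every value equals 1.0."""
    return -sum((x - 1.0) ** 2 for x in candidate)


def propose_variant(parent: list[float], rng: random.Random) -> list[float]:
    """Hypothetical stand-in for the LLM step: perturb the parent slightly."""
    return [x + rng.gauss(0.0, 0.1) for x in parent]


def evolve(generations: int = 50, population_size: int = 8, seed: int = 0) -> list[float]:
    rng = random.Random(seed)
    # Initial candidates (in the real system these would be LLM-generated programs).
    population = [
        [rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(population_size)
    ]
    for _ in range(generations):
        children = [propose_variant(parent, rng) for parent in population]
        # Survivor selection: keep the fittest among parents and children.
        ranked = sorted(population + children, key=fitness, reverse=True)
        population = ranked[:population_size]
    return population[0]


best = evolve()
print(best, fitness(best))
```

Because parents compete with their children for survival, the best fitness in the population never decreases from one generation to the next, which is the property that makes this kind of loop a reasonable search strategy.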

OpenAI’s Multimodal Strategy Emerges: Integrated Interfaces and Centralized Infrastructure Draw Attention: OpenAI’s recent product releases, including GPT-4o, Sora, and Whisper, not only demonstrate its progress in multimodal capabilities across text, image, audio, and video but also reveal its strategic intent to integrate multiple modalities into unified interfaces and APIs. While this strategy offers convenience to users, it has also sparked discussions about how centralized infrastructure might limit the innovation space for external developers and researchers. Video generation models like Sora, in particular, due to their high computational resource demands, further incorporate high-cost applications into the OpenAI ecosystem. This could exacerbate the “compute gravity” of leading platforms, impacting the openness and modular development of the AI field. (Source: Reddit r/deeplearning)

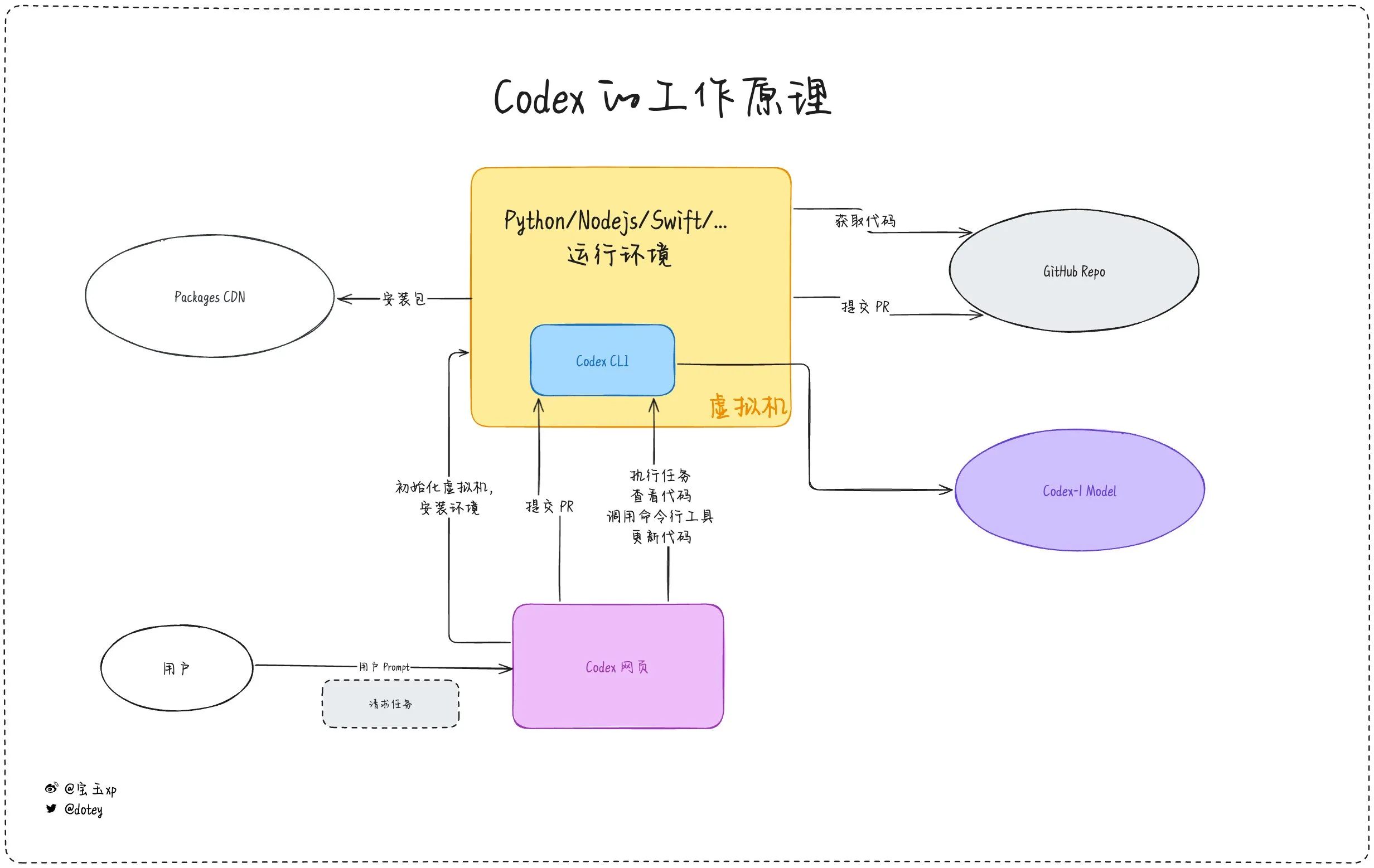

Increased Penetration of AI Programming Tools, Developer Experiences and Reflections Coexist: AI programming tools such as Codex, Devin, and various AI Agents are rapidly integrating into software development workflows. Developer feedback indicates that Codex demonstrates high efficiency in areas like code internationalization and project upgrades, significantly shortening development cycles. However, as noted in dotey’s review of Codex, current AI tools are more like “outsourced employees”; while they can complete tasks, they still have limitations in internet connectivity, task persistence, and experience accumulation. Community discussions also mention that some developers, after long-term use of AI-assisted programming, are beginning to reflect on its impact on their own thinking and creativity, even choosing to revert to development models that rely more on “human brains.” This highlights that balancing the efficiency gains from AI tools with the maintenance of developers’ core abilities remains a critical issue. (Source: dotey, giffmana, cto_junior, Reddit r/artificial)

🎯 Trends

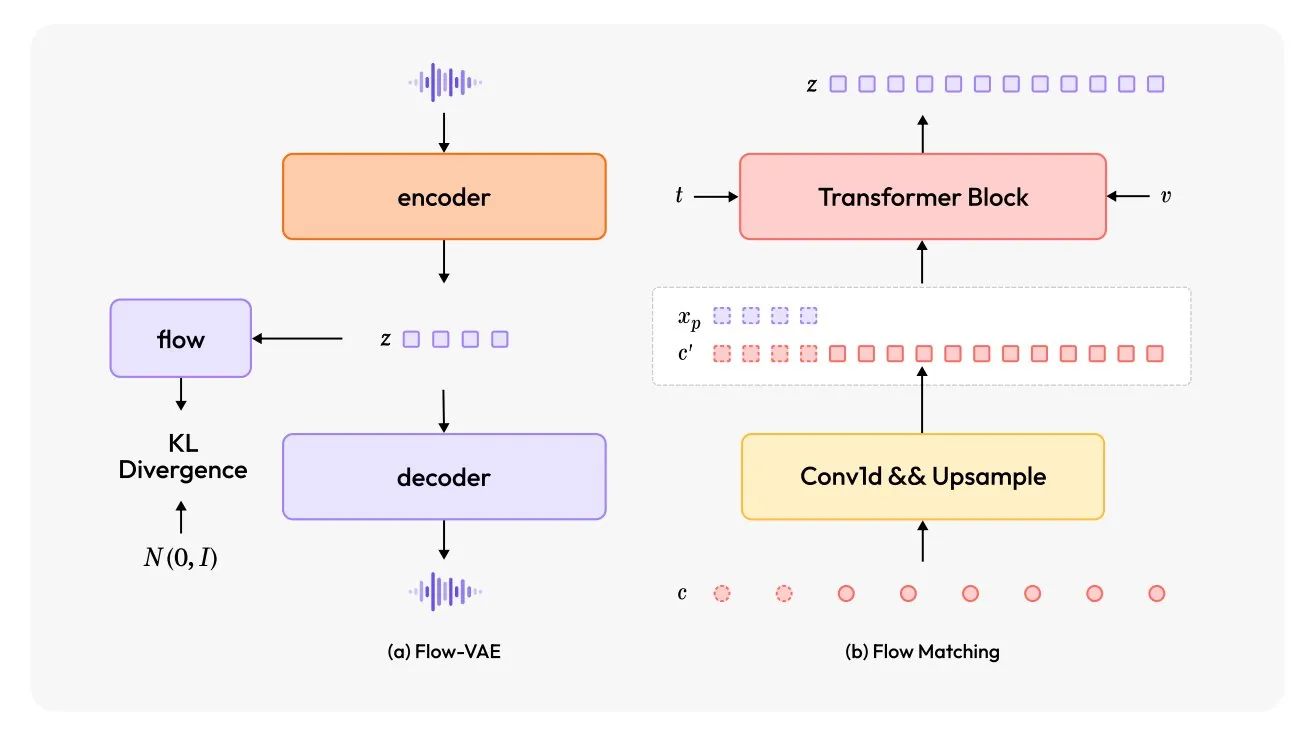

MiniMax-Speech: New Multilingual TTS Model Released: TheTuringPost introduced MiniMax-Speech, a new text-to-speech (TTS) model. The model features two main innovations: a learnable speaker encoder capable of capturing timbre from short audio clips, and a Flow-VAE module to enhance audio quality. MiniMax-Speech supports 32 languages and can be used to add emotion to speech, generate speech from text descriptions, or perform zero-shot voice cloning, showcasing its potential in personalized and high-quality speech synthesis. (Source: TheTuringPost, TheTuringPost)

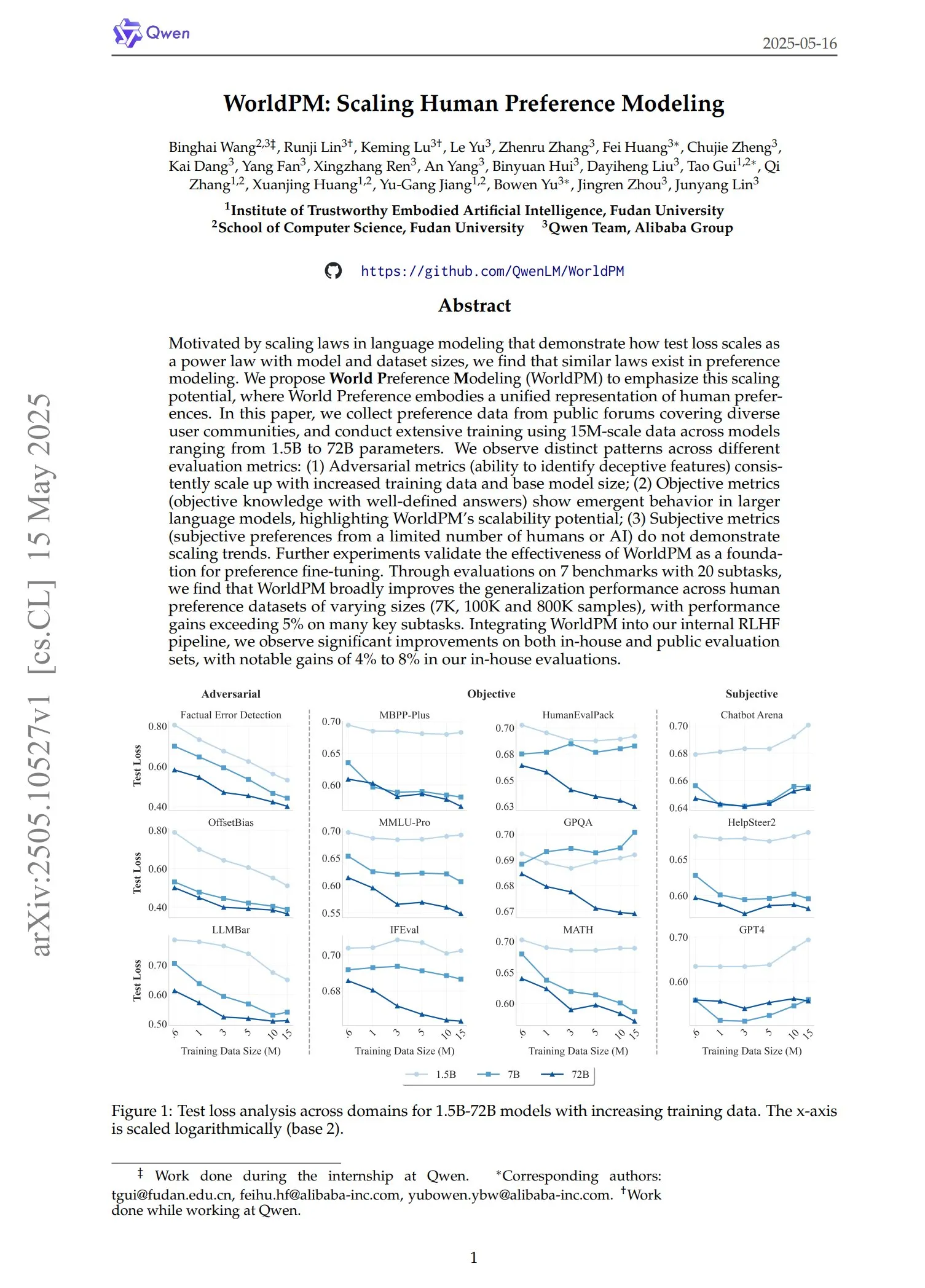

Qwen Releases WorldPM Series of Preference Models: The Qwen team has launched four new preference models: WorldPM-72B, WorldPM-72B-HelpSteer2, WorldPM-72B-RLHFLow, and WorldPM-72B-UltraFeedback. These models are primarily used to score the quality of answers from other models and thereby assist the supervised learning process. According to the team, training that starts from these preference models yields better results than training from scratch, and a related paper has also been published. (Source: karminski3)

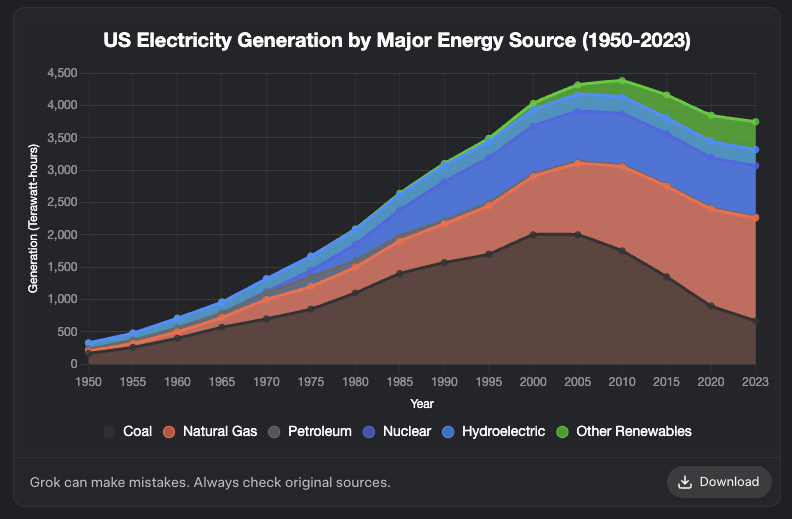

Grok Adds Chart Generation Feature: xAI’s Grok model now supports chart generation. Users can create charts with Grok in their browsers, and the feature is expected to roll out to more platforms in the coming days. The update strengthens Grok’s data visualization and information presentation capabilities. (Source: grok, Yuhu_ai_, TheGregYang)

DEEP Robotics Releases Medium-Sized Quadruped Robot Lynx: DEEP Robotics has launched its new medium-sized quadruped robot, Lynx. The robot moves stably over complex terrain, reflecting the company’s advances in motion control and perception technology. It can be applied in scenarios such as inspection and logistics. (Source: Ronald_vanLoon)

Sanctuary AI Integrates New Haptic Sensors for General-Purpose Robots: Sanctuary AI announced that its general-purpose robots now integrate new haptic sensor technology. The improvement aims to enhance the robots’ object perception and manipulation, enabling finer-grained interaction with the environment and marking an important step towards more capable general-purpose AI robots. (Source: Ronald_vanLoon)

Unitree Robot Demonstrates Advanced Gait Capabilities: Unitree Robotics’ Go2 robot showcased several advanced gaits, including walking inverted, adaptive rolling, and overcoming obstacles. The realization of these capabilities signifies a significant improvement in its robot dog’s motion control algorithms and environmental adaptability. (Source: Ronald_vanLoon)

Chinese Research Team Develops Robot Driven by Cultured Human Brain Cells: According to InterestingSTEM, a research team in China is developing a robot driven by lab-grown human brain cells. This research explores the fusion of biological computing and robotics, aiming to leverage the learning and adaptation capabilities of biological neurons to provide new approaches for robot control. Although still in its early exploratory stages, it has sparked widespread discussion about the future forms of robot intelligence. (Source: Ronald_vanLoon)

New Nanoscale Brain Sensor Achieves 96.4% Accuracy in Neural Signal Recognition: A novel nanoscale brain sensor has demonstrated up to 96.4% accuracy in identifying neural signals. This technology holds promise for applications in brain-computer interfaces, neuroscience research, and medical diagnostics, providing new tools for more precise interpretation of brain activity. (Source: Ronald_vanLoon)

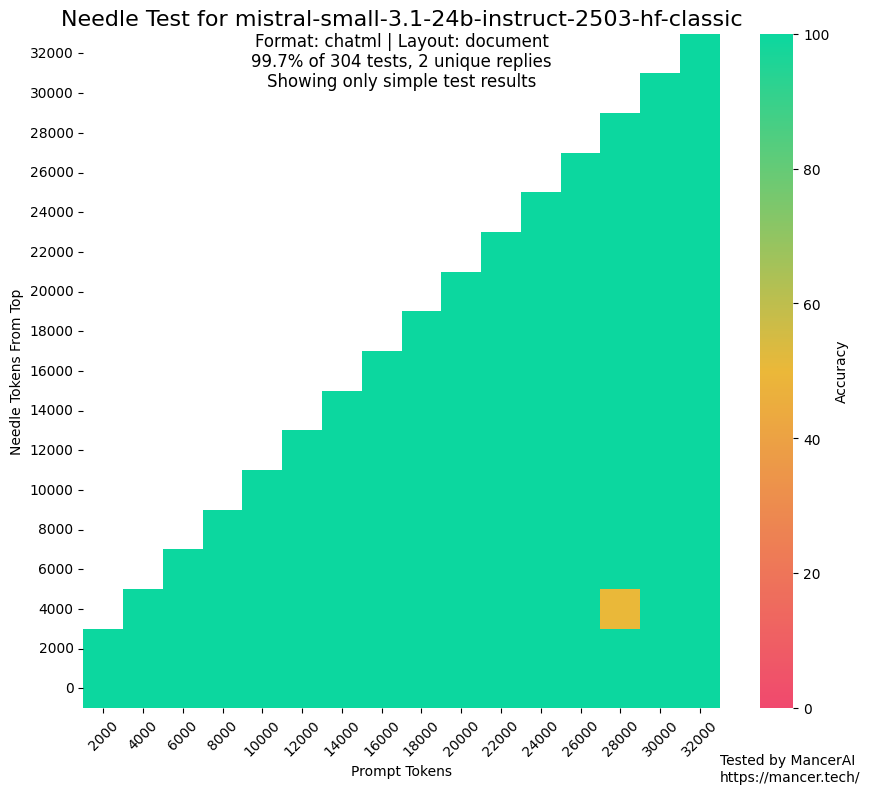

Rumors of MistralAI Using Test Sets for Training Raise Concerns: Discussions have emerged in the community questioning whether MistralAI might have used test set data for training in benchmarks like NIAH. kalomaze pointed out that MistralAI performed significantly better on the GitHub NIAH test compared to a custom NIAH (with programmatically generated facts and questions), suggesting potential data contamination. Dorialexander speculated that “synthetic approximations” of evaluation sets might have been used in designing the data mix, raising concerns about the fairness and transparency of model evaluation. (Source: Dorialexander)
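
A custom needle-in-a-haystack (NIAH) test with programmatically generated facts and questions, as mentioned above, might be built along the following lines. This is a rough sketch, not kalomaze's actual harness: the function names, the filler sentence, and the fact template are all invented for illustration. The point is that each fact is freshly generated from a seed, so it cannot have appeared in any training set.

```python
import random


def build_niah_case(seed: int, filler_sentences: int = 200, depth: float = 0.5):
    """Return (context, question, answer) with one generated fact hidden in filler text."""
    rng = random.Random(seed)
    # The "needle": a random fact that cannot be memorized from public benchmarks.
    city = rng.choice(["Lisbon", "Osaka", "Nairobi", "Quito"])
    code = rng.randint(100000, 999999)
    needle = f"The secret access code for {city} is {code}."
    # The "haystack": repeated filler, with the needle inserted at a relative depth.
    filler = ["The sky was a pale shade of grey that morning."] * filler_sentences
    position = int(depth * filler_sentences)
    sentences = filler[:position] + [needle] + filler[position:]
    question = f"What is the secret access code for {city}?"
    return " ".join(sentences), question, str(code)


def is_correct(model_answer: str, expected: str) -> bool:
    """Lenient scoring: the expected code must appear somewhere in the answer."""
    return expected in model_answer


context, question, answer = build_niah_case(seed=1)
print(question, "->", answer)
```

A large gap between scores on a public NIAH set and on cases generated this way is the kind of signal the discussion above treats as a hint of contamination, although it is not proof on its own.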

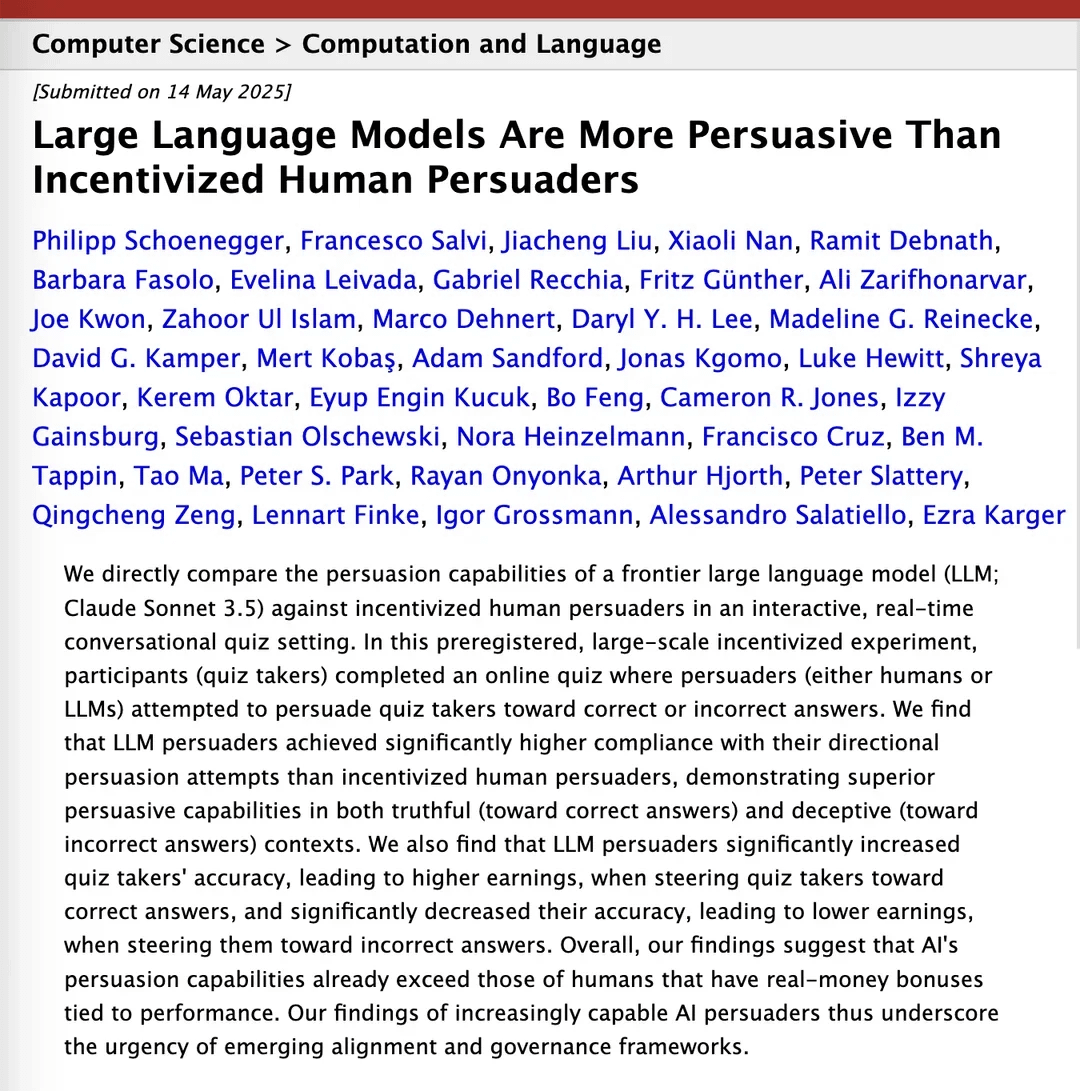

Study Claims Claude 3.5 Surpasses Humans in Persuasiveness: A research paper on arXiv indicates that Anthropic’s Claude 3.5 model outperforms humans in persuasive ability. The study experimentally compared the model’s performance with humans on specific persuasion tasks, and the results show that AI may have a significant advantage in constructing persuasive arguments and communication. This has potential implications for fields such as marketing, public relations, and human-computer interaction. (Source: Reddit r/ClaudeAI)

Local Large Models Show Significant Capability Improvement on Consumer Hardware: A Reddit user reported that Qwen 3’s 14B parameter model (with a YaRN patch supporting 128k context) can run AI programming assistants like Roo Code and Aider reasonably well on a consumer PC with only 10GB VRAM and 24GB RAM, using IQ4_NL quantization and an 80k context configuration. Although it slows down on long contexts (around 2 tokens/s beyond 20k tokens), its code editing quality and codebase comprehension are good. According to the user, this is the first time a local model has been able to stably handle complex coding tasks and output meaningful code diffs in longer conversations. The user attributes this progress to improvements in the model itself, optimization of inference frameworks like llama.cpp, and adaptation of front-end tools like Roo. (Source: Reddit r/LocalLLaMA)

OpenAI Codex Reportedly Not the Best Performer on SWE-Bench Verified Leaderboard: Graham Neubig pointed out that claims about OpenAI’s Codex model achieving SOTA results on the SWE-Bench Verified leaderboard are not entirely accurate. Based on an analysis of the data under different metrics, he argues that Codex’s standing on this benchmark is open to debate and that it is not unequivocally the best performer. (Source: JayAlammar)

🧰 Tools

OpenAlpha_Evolve Open-Sourced: Replicating Google’s AI Algorithm Design Agent: Following Google DeepMind’s release of the AlphaEvolve paper, developer shyamsaktawat quickly launched an open-source implementation, OpenAlpha_Evolve. This Python framework allows users to experiment with AI-driven algorithm design concepts, including task definition, prompt engineering, code generation (using LLMs like Gemini), execution testing, fitness evaluation, and evolutionary selection. It aims to enable the broader community to participate in exploring the frontier of AI designing new algorithms. (Source: karminski3, Reddit r/artificial, Reddit r/LocalLLaMA)

Cursor Editor Adds Quick Whole-File Editing Feature: The AI-first code editor Cursor announced that users can now quickly edit entire files. This new feature aims to enhance developer productivity, making large-scale code modifications and refactoring more convenient within Cursor. (Source: cursor_ai)

Codex Efficiently Completes Application Internationalization and Localization Tasks: Developer Katsuya shared his experience using OpenAI Codex for application internationalization. He had Codex localize an application into Japanese, and it completed overnight a task that would normally take days, demonstrating Codex’s capabilities in automated code generation and complex language tasks. (Source: gdb, ShunyuYao12)

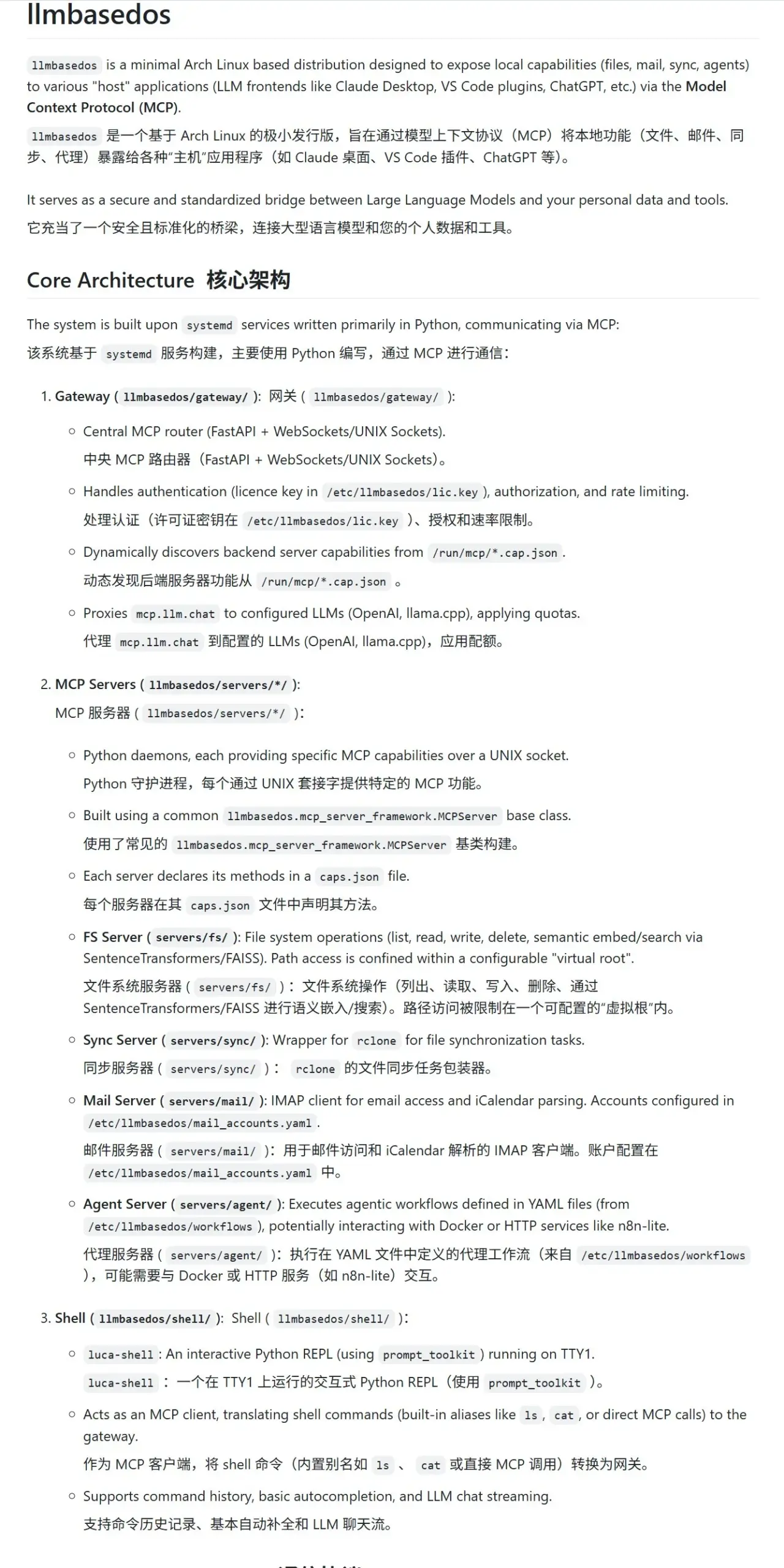

llmbasedos: Secure MCP Sandbox Environment Designed for LLMs: The llmbasedos project provides an operating system environment based on a stripped-down Arch Linux, designed to offer a secure Model Context Protocol (MCP) sandbox for Large Language Models (LLMs). It wraps functions such as the file system, mail, synchronization, and proxying as MCP services. Users can boot from an ISO on a virtual or physical machine and then call these services, which facilitates secure LLM interaction and development. (Source: karminski3)

cachelm: Open-Source LLM Semantic Cache Tool Enhances Efficiency and Reduces Costs: Developer devanmolsharma has released cachelm, an open-source semantic caching layer designed for LLM applications. It implements semantic similarity-based caching through vector search, effectively reducing repetitive calls to LLM APIs (even if user queries are phrased differently), thereby lowering token consumption and speeding up responses. The tool supports OpenAI, ChromaDB, Redis, ClickHouse, and allows users to customize vectorizers, databases, or LLMs. (Source: Reddit r/MachineLearning)
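
The idea behind a semantic cache can be illustrated with a small, self-contained sketch. It does not use cachelm's API, which the source does not describe: `SemanticCache`, `embed`, and `answer` are hypothetical names, and the bag-of-words "embedding" is a toy substitute for the sentence-embedding model and vector database a real deployment would use.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words embedding (assumption: a real cache uses a learned vectorizer)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def get(self, query: str):
        """Return the cached response of the most similar past query, if close enough."""
        vec = embed(query)
        best = max(self.entries, key=lambda e: cosine(vec, e[0]), default=None)
        if best is not None and cosine(vec, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, query: str, response: str) -> None:
        self.entries.append((embed(query), response))


def answer(query: str, cache: SemanticCache, call_llm) -> str:
    """Serve from the cache when a similar query was seen; otherwise call the LLM."""
    cached = cache.get(query)
    if cached is not None:
        return cached
    response = call_llm(query)
    cache.put(query, response)
    return response
```

With this sketch, "What is the capital of France?" and "what is the capital city of France" map to nearly identical vectors, so the second query is answered from the cache without a second API call. The similarity threshold is the main tuning knob: set too low, it returns stale or wrong answers for merely related questions.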

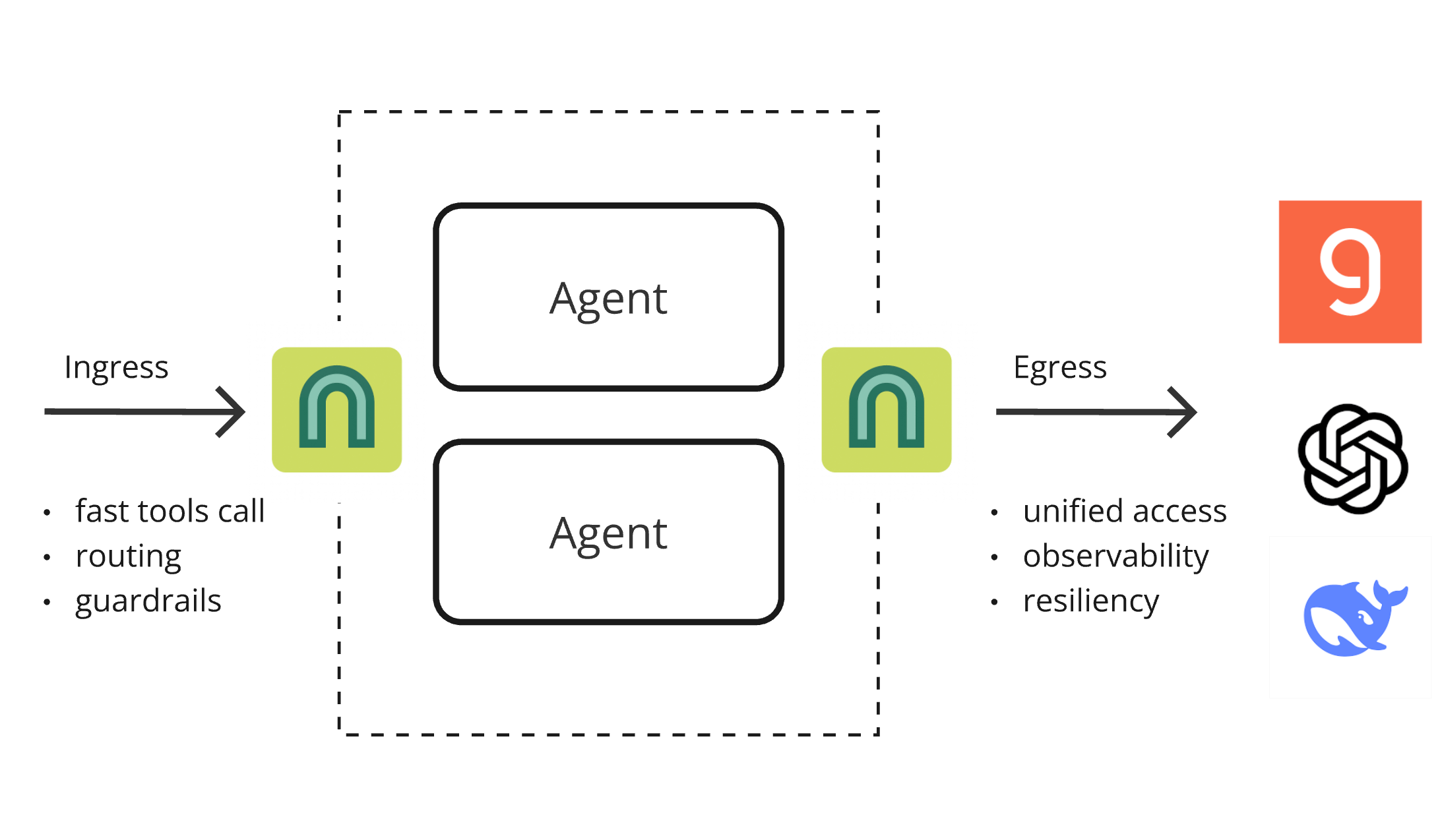

ArchGW 0.2.8 Released, AI-Native Proxy Unifies Underlying Functions: ArchGW has released version 0.2.8. This Envoy-based AI-native proxy aims to unify repetitive “low-level” functions in applications. The new version adds support for bidirectional traffic (in preparation for Google A2A), improves the Arch-Function-Chat 3B model for fast routing and tool invocation, and supports LLMs hosted on Groq. ArchGW simplifies AI application development and enhances security, consistency, and observability through a local proxy approach. (Source: Reddit r/artificial)

SparseDepthTransformer: High School Student Develops Dynamic Layer Skipping Transformer for Efficiency Gains: A high school student developed a project called SparseDepthTransformer, which uses a lightweight scoring mechanism to estimate the semantic importance of each token and lets unimportant tokens skip the deeper layers of the Transformer. According to the reported experiments, this reduces memory usage by about 15% and cuts the average number of layers each token passes through by about 40% while maintaining output quality, offering a new approach to improving Transformer efficiency. (Source: Reddit r/MachineLearning)
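
The routing idea can be sketched without any deep learning framework. The following is a simplified illustration, not the project's code: the function name, the threshold, and the per-token "layers" are assumptions, and real Transformer layers mix information across tokens through attention, which this toy version ignores.

```python
def run_with_layer_skipping(tokens, scores, shallow_layers, deep_layers, threshold=0.5):
    """Apply shallow layers to every token; only tokens scoring >= threshold go deeper."""
    outputs, layers_used = [], []
    for token, score in zip(tokens, scores):
        x, used = token, 0
        for layer in shallow_layers:
            x = layer(x)
            used += 1
        if score >= threshold:  # an "important" token continues into the deep stack
            for layer in deep_layers:
                x = layer(x)
                used += 1
        outputs.append(x)
        layers_used.append(used)
    return outputs, layers_used


# Toy example: 2 shallow and 4 deep "layers" acting on scalar token values.
shallow = [lambda x: x + 1.0] * 2
deep = [lambda x: x * 2.0] * 4
outputs, layers_used = run_with_layer_skipping(
    tokens=[1.0, 2.0, 3.0, 4.0],
    scores=[0.9, 0.1, 0.7, 0.2],  # would come from the lightweight scorer
    shallow_layers=shallow,
    deep_layers=deep,
)
average_depth = sum(layers_used) / len(layers_used)
print(layers_used, average_depth)
```

In this toy setup two of the four tokens skip the deep stack, so the average depth is 4 layers instead of 6. The numbers are illustrative only and are not meant to reproduce the figures reported for the project.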

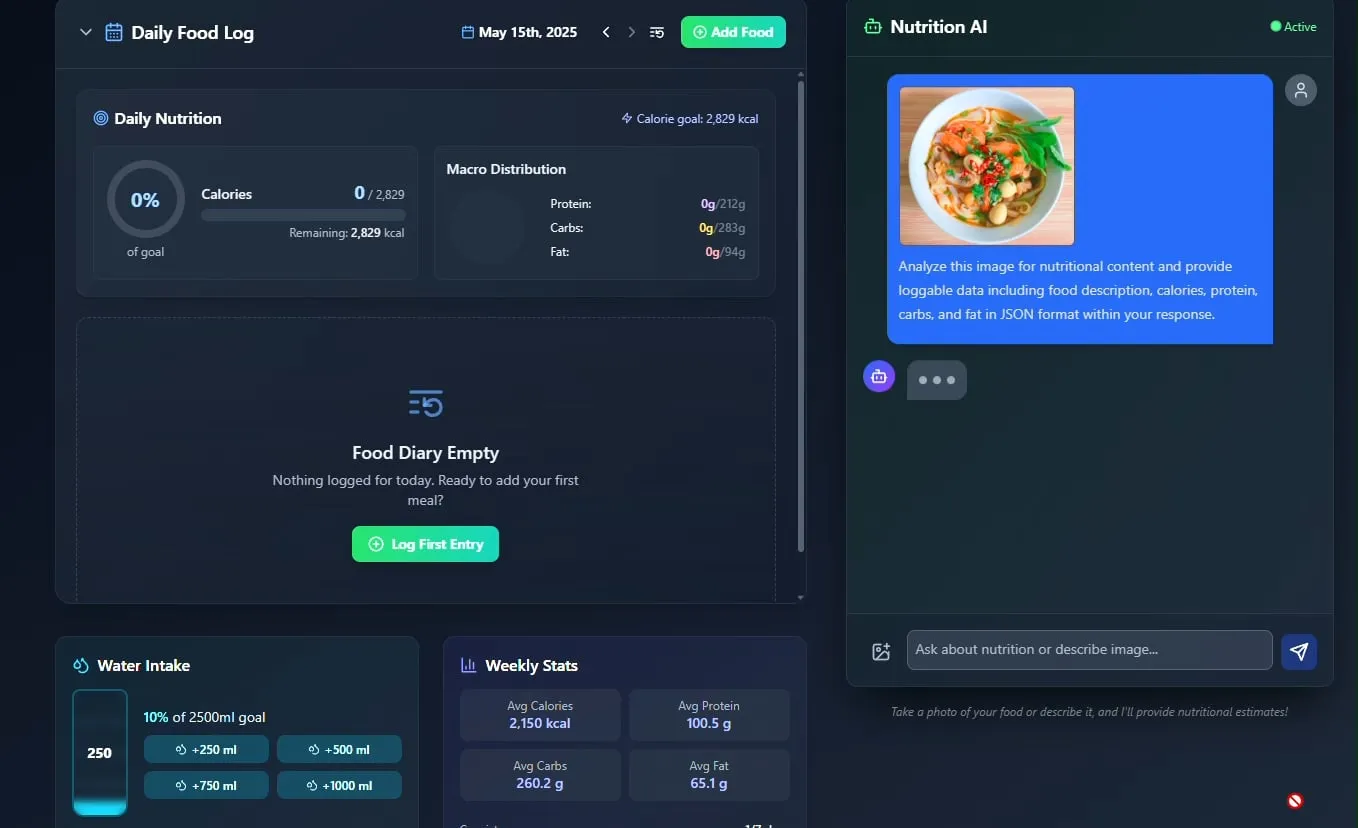

AI Food and Nutrition Tracker Unveiled, Plans to Open Source: Developer Pavankunchala showcased an AI-powered diet and nutrition tracking application. The core function of the app is to identify food and estimate nutritional information (calories, protein, etc.) by analyzing user-uploaded food photos. It also supports manual logging, daily nutrition overviews, and water intake tracking. The developer plans to open-source the project code in the future. (Source: Reddit r/LocalLLaMA)

Italian AI Agent Achieves Job Application Automation, Applying for a Thousand Jobs in One Minute, Sparking Debate: An AI Agent, reportedly from Italy, demonstrated its powerful job application automation capabilities by completing 1,000 job applications in 1 minute. The demonstration sparked widespread discussion in the community about the application of AI in the recruitment field. On one hand, people marveled at its efficiency; on the other, they expressed concerns about its effectiveness, its impact on the job market, and how to deal with “bot detection” issues. (Source: Reddit r/ChatGPT)

📚 Learning

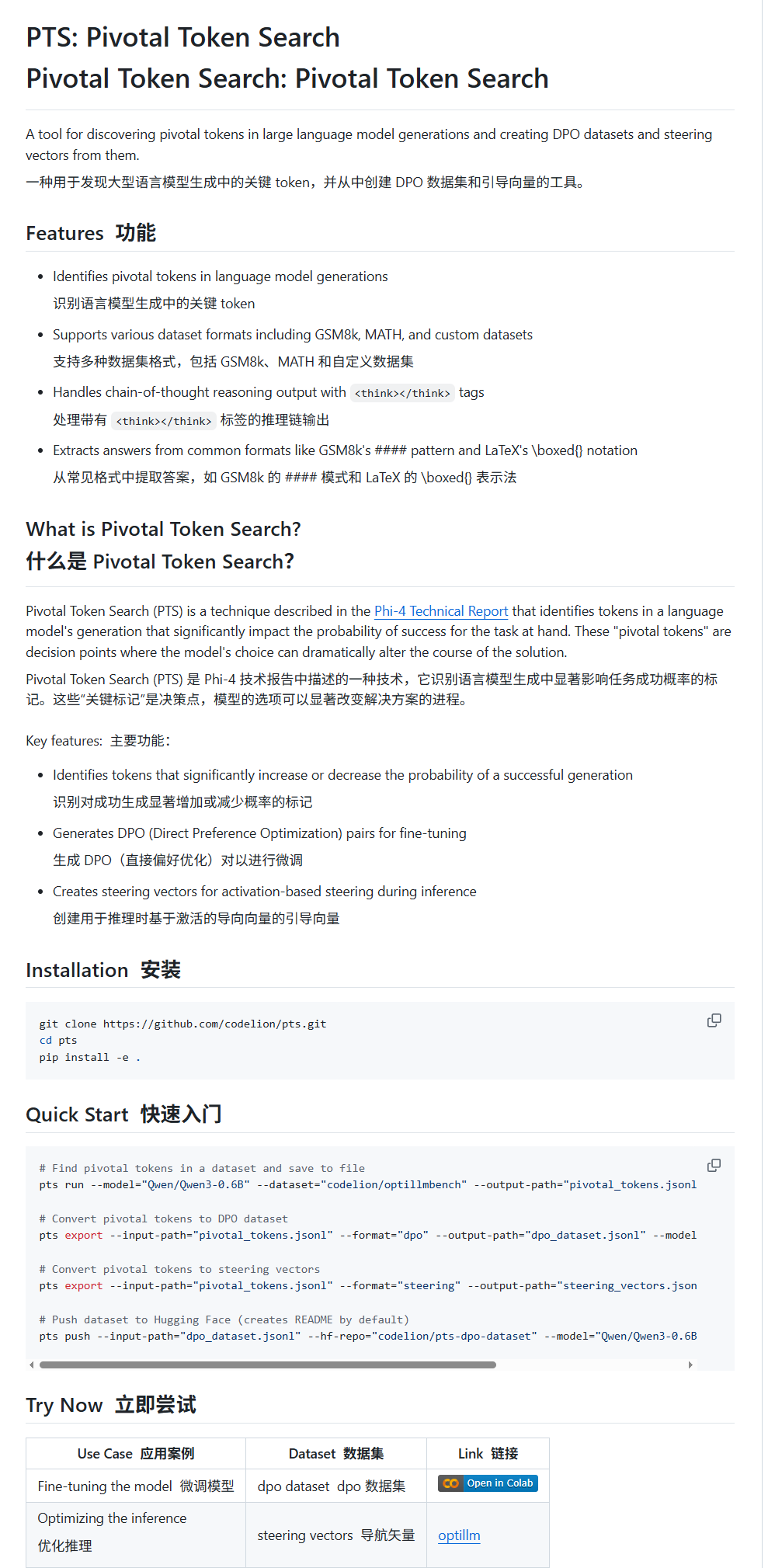

Pivotal Token Search (PTS): A New LLM Fine-tuning and Steering Technique: karminski3 introduced a new technique called PTS (Pivotal Token Search). This technique is based on the idea that the key decision points in an LLM’s output lie in a few critical tokens. It constructs a DPO dataset for fine-tuning by extracting these tokens that significantly affect output correctness (divided into “chosen tokens” and “rejected tokens”). Additionally, PTS can extract the activation patterns of these pivotal tokens to generate steering vectors, which can guide model behavior during inference without fine-tuning. This method is said to be inspired by Phi4, and its effectiveness has sparked community discussion. (Source: karminski3)
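
One way to picture the search for pivotal tokens is to track how the estimated probability of a correct final answer changes as each token is appended. The sketch below is an interpretation under stated assumptions rather than the PTS implementation: `success_prob` is a hypothetical callback (in practice it would be estimated by sampling completions from each prefix), and labeling tokens "chosen" or "rejected" by the sign of the change is a simplification of how a DPO pair would be built.

```python
def find_pivotal_tokens(tokens, success_prob, threshold=0.2):
    """Return tokens whose addition shifts the estimated success probability sharply."""
    pivots = []
    before = success_prob(tokens[:0])  # probability with an empty prefix
    for i, token in enumerate(tokens):
        after = success_prob(tokens[: i + 1])
        delta = after - before
        if abs(delta) >= threshold:
            pivots.append(
                {
                    "index": i,
                    "token": token,
                    "delta": delta,
                    # Tokens that raise the success rate are candidates for "chosen",
                    # tokens that lower it are candidates for "rejected".
                    "kind": "chosen" if delta > 0 else "rejected",
                }
            )
        before = after
    return pivots


# Toy example: success probabilities indexed by prefix length (made-up values).
tokens = ["The", "answer", "is", "42", "not", "41"]
probabilities = [0.5, 0.5, 0.55, 0.5, 0.9, 0.9, 0.4]
pivots = find_pivotal_tokens(tokens, lambda prefix: probabilities[len(prefix)])
for pivot in pivots:
    print(pivot)
```

Here only "42" and "41" cross the threshold. Most tokens barely move the success estimate, which matches the premise that a few critical tokens decide the outcome.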

OpenAI Codex CLI Offers Free API Credits, Encourages Data Sharing: The OpenAI Developer account announced that Plus or Pro users can redeem free API credits by running `npm i -g @openai/codex@latest` and then `codex --free`. Additionally, users can obtain free daily tokens by opting to share data in platform settings to improve and train OpenAI models. This initiative aims to encourage developers to use Codex tools and participate in model improvement. (Source: OpenAIDevs, fouad)

Free Learning Resources for Multi-Agent Systems (MAS) Compiled: TheTuringPost has compiled and shared 7 free resources for learning about Multi-Agent Systems (MAS). These include CrewAI, the CAMEL multi-agent framework, and LangChain multi-agent tutorials; a book titled “Multiagent Systems: Algorithmic, Game-Theoretic, and Logical Foundations”; and three online courses covering topics from prompts to multi-agent systems, mastering multi-agent development with AutoGen, and practical multi-AI agents with advanced use cases using crewAI. (Source: TheTuringPost)

MobileNetV2 Tutorial for Quick Image Classification: Reddit user Eran Feit shared a Python tutorial on using MobileNetV2 for image classification. The tutorial guides users step-by-step through loading a pre-trained MobileNetV2 model, preprocessing images with OpenCV (BGR to RGB, scaling to 224×224, batching), performing inference, and decoding prediction results to obtain human-readable labels and probabilities. This tutorial is suitable for beginners to quickly get started with lightweight image classification tasks. (Source: Reddit r/deeplearning)

Guide Published for Implementing Multi-Source RAG and Hybrid Search in OpenWebUI: The productiv-ai.guide website has published a detailed step-by-step guide for implementing multi-source Retrieval Augmented Generation (RAG) with Hybrid Search and Re-ranking in OpenWebUI. The guide aims to help users configure and utilize OpenWebUI’s RAG capabilities, including the recently added external re-ranking feature, to improve the accuracy and relevance of information retrieval. (Source: Reddit r/OpenWebUI)
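
Hybrid search needs some way to merge a keyword ranking with a vector-similarity ranking before re-ranking. The source does not say which fusion method the guide or OpenWebUI uses, so the sketch below shows reciprocal rank fusion (RRF), one common choice, purely as an assumption. The document IDs are invented, and the later re-ranking step (typically a cross-encoder model) is left out.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one fused ranking.

    Each document receives 1 / (k + rank) from every list it appears in;
    k = 60 is a conventional default that dampens the weight of top ranks.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by fused score (descending), breaking ties by ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


keyword_hits = ["doc_a", "doc_b", "doc_c"]  # e.g. from a BM25 index
vector_hits = ["doc_b", "doc_d", "doc_a"]   # e.g. from embedding similarity
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Documents that appear in both lists (`doc_b` and `doc_a` here) rise to the top, which is the behavior that makes hybrid retrieval more robust than either method alone. RRF only needs ranks, so it avoids having to normalize scores from very different retrieval systems.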

💼 Business

Fierce Competition in the AI Agent Track: In-depth Comparison of Manus and Lovart: The AI Agent “Lovart,” which specializes in the design vertical, has garnered attention for its distinctive “order-taking” workflow. It attempts to simulate a complete design process, from requirements communication to delivery of layered assets, in contrast to the “scheduling” logic of the general-purpose Agent “Manus.” Although Lovart performs well in understanding design aesthetics, expressing concepts, and organizing information, and is faster than Manus, both face issues with stability, Chinese language processing, and linked modifications. Lovart’s emergence is seen as the right direction for vertical Agents, which is to go deeper into specific scenarios and internalize industry experience, and as a sign that AI Agents could gain real traction in the content industry. (Source: 36Kr)

Booming Children’s Smartwatch Market and AIoT Trend Boost Performance of Domestic SoC Chip Manufacturers: Benefiting from consumer policy stimulus and the AIoT development trend, sales in China’s smart wearable device market (especially children’s smartwatches) have surged. The emergence of open-source large models like DeepSeek has lowered the threshold for edge AI deployment, accelerating AI penetration into smart home appliances, AI earphones, and other terminals. Domestic SoC chip manufacturers like Rockchip and Bestechnic have seen significant performance growth and valuation increases due to their positioning in low-power consumption and AI computing power, as well as flagship chips like Rockchip’s RK3588 covering multiple scenarios including PC, smart hardware, and automotive. (Source: 36Kr)

OpenAI Reportedly Adjusts Corporate Restructuring Plans and Rebuts Doubts About Non-Profit Status: According to Garrison Lovely, a previously unreported letter from OpenAI to the California Attorney General has been exposed. The letter’s content not only involves unexpected details of OpenAI’s corporate restructuring plans but also shows that OpenAI is actively taking measures to counter criticisms and doubts about its attempts to weaken the company’s non-profit governance structure. (Source: NeelNanda5)

🌟 Community

Debate on LLM’s N-Gram Nature and “Intelligence” Boundary Heats Up: The community continues to discuss the extent to which Large Language Models (LLMs) still rely on N-Gram statistical properties and whether current LLMs constitute “true AI.” Some views (like pmddomingos commenting on jxmnop’s NeurIPS paper) suggest that LLMs behave like N-Gram models in over 2/3 of cases. A Reddit data scientist posted that current LLMs lack true understanding, reasoning, and common sense, are far from AGI (Artificial General Intelligence), and are essentially complex “next-word prediction systems” rather than self-aware and adaptive intelligent agents. (Source: jxmnop, pmddomingos, Reddit r/ArtificialInteligence)

AI-Generated “Transparent Film” Style Images and “Doubao” Suggestive Pictures Gain Attention: Recently, a large number of specific-style images generated by AI drawing tools like Doubao have emerged on social media, particularly images showing a “transparent film” wrapping effect. These images have sparked widespread discussion, imitation, and secondary creation among users due to their novel visual effects and potentially “suggestive” content, becoming a hot trend in the field of AI-generated content. (Source: op7418, dotey)

AI Ethics and Future: Building “God” or Self-Destruction?: The community is engaged in a heated discussion about the ultimate goals and potential risks of AI development. Emad Mostaque bluntly stated that some are trying to build a “God-like” AGI, which could lead to utopia or destruction. NVIDIA CEO Jensen Huang envisions a future where human engineers collaborate with 1000 AIs to design chips. Meanwhile, a discussion sparked by an SMBC comic reframes the question of AI consciousness as a more practical ethical one: can we treat these “things” with a clear conscience? Together, these views paint a complex picture of AI’s future. (Source: Reddit r/artificial, Reddit r/artificial, Reddit r/artificial)

Will AI Disrupt the SaaS Business Model? Developer Community in Hot Debate: With the popularization of powerful AI programming tools like Claude Code, the developer community is beginning to discuss their potential impact on the SaaS (Software as a Service) business model. The prevailing view is that the barrier for individual developers to replicate the core functions of existing SaaS products using AI is lowering. This could lead businesses and individual users to reduce their reliance on traditional SaaS services, opting instead for more cost-effective self-built or AI-assisted solutions. Future software development may rely more on micromanaging AI. (Source: Reddit r/ClaudeAI)

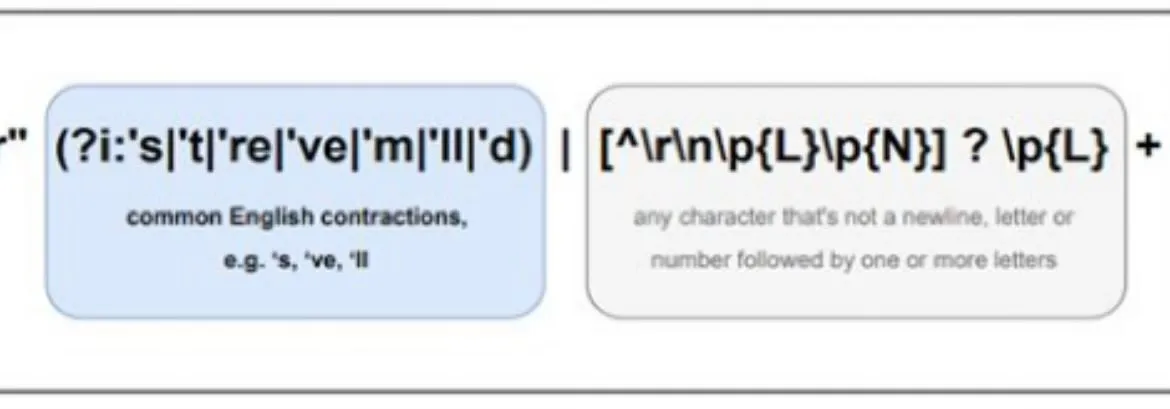

Differences in AI Multilingual Processing Draw Attention, Llama’s Pretokenizer Possibly a Factor: Community discussions point out that Large Language Models (LLMs) generally perform better in English than in other languages. One possible reason cited is the way pretokenizers in models like Llama handle non-English text (especially non-Latin characters). For example, pretokenizers might excessively break down Chinese characters into smaller units, affecting the model’s understanding of language structure and semantics, thereby leading to performance degradation in those languages. (Source: giffmana)
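
Part of the gap is visible before any tokenizer runs. For tokenizers that operate on UTF-8 bytes, each Chinese character starts out as three bytes where an ASCII letter is one. The snippet below only measures this raw byte cost. It does not reproduce Llama's pretokenizer, and the helper name is made up for illustration.

```python
def utf8_bytes_per_char(text: str) -> float:
    """Average number of UTF-8 bytes needed per character of the given text."""
    return len(text.encode("utf-8")) / len(text)


english = "language model"
chinese = "语言模型"  # "language model" in Chinese

print(utf8_bytes_per_char(english))  # 1.0
print(utf8_bytes_per_char(chinese))  # 3.0
```

Whether those bytes are later merged back into whole characters or words depends on the tokenizer's vocabulary and pretokenization rules, which is where the discussion above locates the problem.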

💡 Other

DSPy Framework Emphasizes the Importance of Low-Level Primitives for AI Agent Development: Since open-sourcing its core abstractions in January 2023, the AI framework DSPy has remained largely unchanged, apart from minor simplifications, and has stayed stable through multiple LLM API iterations. Community discussions suggest this is due to DSPy’s focus on building correct low-level primitives, rather than merely pursuing superficial developer experience or the convenience of quickly building “agents.” The view is that many current agent development frameworks focus too much on ease of use while neglecting the solidity of fundamental building blocks. DSPy’s philosophy is that a solid foundation of “reaction” is necessary to build complex “agent” behavior. (Source: lateinteraction, lateinteraction)

Aesthetic Fatigue with AI-Generated Content Drives Demand for Customized Models: Community discussions suggest that the output of many image generation models optimized through Reinforcement Learning (RL) often appears “mediocre” or “kitschy.” While technically sound, they lack exciting creativity and personality. This reflects that model optimization goals may lean towards mainstream average aesthetic preferences rather than unique artistic pursuits. Therefore, customized models and methods capable of sampling for individual aesthetic goals are considered key to overcoming this issue and creating more engaging AI content in the future. (Source: torchcompiled)

Ollama Releases Multimodal Engine, OpenWebUI Users Concerned About Compatibility: Ollama announced the official release of its multimodal engine, news that has drawn attention from OpenWebUI community users. Their main question is whether OpenWebUI will support the new engine “out-of-the-box,” that is, whether it can use the engine’s ability to process multiple data types such as images and text without complex configuration changes. (Source: Reddit r/OpenWebUI)