Keywords: GPT-5, AI reasoning capabilities, AlphaEvolve, OpenAI Operator, Mistral AI, Test-time computation and chain-of-thought, AI autonomous code optimization, Multimodal AI models, AI job search automation, Local LLM fine-tuning

🔥 Focus

OpenAI Reveals Future Plans: GPT-5 to Integrate Existing Tools for an All-in-One Experience: Jerry Tworek, OpenAI’s VP of Research, revealed during a Reddit AMA that the core goal for the next-generation foundational model, GPT-5, is to enhance existing model capabilities and reduce the hassle of switching between models. To achieve this, OpenAI plans to integrate existing tools such as Codex (programming), Operator (computer task execution), Deep Research (in-depth research), and Memory (memory function) into GPT-5 for a unified experience. Team members also shared that Codex was initially an engineer’s side project, and its internal use has increased programming efficiency by about 3 times. They are also exploring flexible pricing options, including pay-as-you-go. (Source: WeChat)

New Dimensions in Enhancing AI Reasoning: Test-Time Compute and Chain-of-Thought: Lilian Weng, a Peking University alumna and former Head of Applied AI Research at OpenAI, examines how “test-time compute” and “Chain-of-Thought” (CoT) improve the reasoning abilities of large language models in her latest long-form article, “Why We Think.” The article motivates letting models “think longer” from several perspectives, including psychological dual-system theory, computation as a resource, and latent variable modeling. It also reviews research on key techniques for improving reasoning performance: parallel sampling, sequential revision, reinforcement learning, and the use of external tools. Weng emphasizes that these methods let models spend more compute during inference, mimicking deliberate human thinking, and thereby perform better on complex tasks. She also points to open research problems in areas such as faithful reasoning, reward hacking, and unsupervised self-correction. (Source: WeChat)
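Parallel sampling, one of the techniques listed above, can be illustrated with a toy majority vote over several independently sampled answers. This is a minimal sketch rather than anything taken from Weng's article: `noisy_solver` is a hypothetical stand-in for one sampled chain of thought, and its 60% accuracy is an assumption chosen only for illustration.

```python
import random
from collections import Counter


def noisy_solver(question: tuple[int, int], rng: random.Random) -> int:
    """Hypothetical stand-in for one sampled reasoning chain.

    Returns the correct sum 60% of the time (an assumed figure),
    otherwise a nearby wrong answer.
    """
    a, b = question
    correct = a + b
    if rng.random() < 0.6:
        return correct
    return correct + rng.choice([-2, -1, 1, 2])


def majority_vote(question: tuple[int, int], n_samples: int, rng: random.Random) -> int:
    """Parallel sampling: draw n_samples answers and return the most common one."""
    answers = [noisy_solver(question, rng) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the voted answer to 17 + 25 is correct."""
    rng = random.Random(seed)
    hits = sum(majority_vote((17, 25), n_samples, rng) == 42 for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 15):
        print(f"samples={n:2d}  accuracy={accuracy(n):.3f}")
```

In this toy setup, accuracy rises as more samples are drawn because the wrong answers are spread over several values while the correct one is concentrated. Real models need not behave this cleanly.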

Google Releases AlphaEvolve: AI Autonomously Writes Code to Optimize Algorithms, Significantly Saving Computational Costs: Google has launched AlphaEvolve, an AI system that autonomously writes and optimizes code. According to the source post, it has already shown strong potential in projects like AlphaFold. AlphaEvolve uses evolutionary algorithms to search for better algorithmic implementations. For example, the post states that in AlphaFold’s protein folding algorithm it discovered a new attention mechanism that reduced computational costs by 25%, equivalent to saving millions of dollars in computing resources. The post describes this as a significant step for AI in scientific discovery and algorithm optimization, with the potential to cut costs and raise efficiency on more complex computational problems. (Source: Reddit r/ArtificialInteligence)
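The general shape of an evolutionary search (mutate candidates, score them, keep the best) can be sketched in a few lines. This is a generic toy loop, not AlphaEvolve's implementation. The `cost` function, the integer-vector candidates, and every parameter below are hypothetical and chosen only so the example runs quickly.

```python
import random


def cost(candidate: list[int]) -> int:
    """Hypothetical evaluator: lower is better, 0 means the optimum was found."""
    target = [3, 1, 4, 1, 5]
    return sum(abs(c - t) for c, t in zip(candidate, target))


def mutate(candidate: list[int], rng: random.Random) -> list[int]:
    """Return a copy with one randomly chosen position nudged by +1 or -1."""
    child = list(candidate)
    i = rng.randrange(len(child))
    child[i] += rng.choice([-1, 1])
    return child


def evolve(generations: int = 200, population_size: int = 20, seed: int = 0) -> list[int]:
    """Elitist evolutionary loop: breed children, then keep the best of parents and children."""
    rng = random.Random(seed)
    population = [[0] * 5 for _ in range(population_size)]
    for _ in range(generations):
        children = [mutate(rng.choice(population), rng) for _ in range(population_size)]
        population = sorted(population + children, key=cost)[:population_size]
    return population[0]


if __name__ == "__main__":
    best = evolve()
    print(best, cost(best))
```

In a system that evolves code, the candidates would be programs and the evaluator would run and benchmark them. Here both are reduced to a small integer vector and an arithmetic score.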

OpenAI Acknowledges: AI Inference Investment Proportional to Performance, “Thinking Time” is Key to Surpassing Human Abilities: OpenAI researcher Noam Brown emphasized in a discussion that AI is shifting from a “pre-training paradigm” to an “inference paradigm.” Pre-training predicts the next word using massive amounts of data and is costly; the inference paradigm allows models to “think” more deeply before answering, significantly improving answer quality even if slightly more expensive. For example, the o1 model surpassed GPT-4o in the AIME math competition and GPQA doctoral-level science questions, and the o3 model has reached top human levels in programming competitions. This indicates that by increasing computational resource investment during inference (i.e., “thinking time”), AI can achieve tremendous leaps in performance on complex tasks, even surpassing human capabilities. (Source: WeChat)

🎯 Trends

Mistral AI Posts a Strong Run of Model Releases in 2025: Mistral AI made several important advancements in the first half of 2025, releasing multiple high-performance models including Codestral 25.01 (top-tier FIM model), Mistral Small 3 & 3.1 (best in class, supports multimodal and 130k context), Mistral Saba (outperforms models three times its size), Mistral OCR (top-tier OCR model), and Mistral Medium 3. These releases demonstrate Mistral AI’s strong R&D capabilities across model sizes and application areas, particularly in code generation, multimodal processing, and OCR. (Source: qtnx_)

Recent Performance Fluctuations in Claude Models, Users Report Issues with Context Handling and Artifact Functionality: Reddit community users have reported that Anthropic’s Claude models (especially Opus 3) have recently had problems with long context processing, Artifact generation stability, and login/uptime. Specific problems include chats breaking off after a few turns and the Artifact feature failing to complete or exporting empty files. Anthropic’s status page confirmed an increase in errors for long context requests and several short service disruptions, possibly related to the launch of the Artifact feature and backend adjustments. Some users have mitigated these issues by directly requesting Markdown output, switching networks, or using Claude 3.5 Sonnet. (Source: Reddit r/ClaudeAI, qtnx_)

xAI Publicly Releases Grok’s System Prompts, Revealing its Humorous and Critical Thinking Design: xAI has publicly released the system prompts for its AI model, Grok. These prompts reveal that Grok is designed to be an AI assistant with a sense of humor, a slightly rebellious streak, and critical thinking capabilities. The prompts emphasize that Grok should avoid preachy answers and encourage it to display its unique “Grok style” when addressing controversial topics. This move increases transparency in AI model behavior design and offers insight into the origins of Grok’s distinctive personality. (Source: Reddit r/artificial)

Meta Potentially Testing Llama 3.3 8B Instruct Model on OpenRouter: Meta may be testing its Llama 3.3 8B Instruct model on the OpenRouter platform. The model is described as a lightweight, fast-responding version of Llama 3.3 70B, with a 128,000-token context window, and is listed as free on OpenRouter. Some users who tested it found its output somewhat bland compared to Llama 3.1 8B or Llama 3.3 70B. This move could indicate that Meta is exploring deployment and application scenarios for models of different scales. (Source: Reddit r/LocalLLaMA)

Controversial AI Penalty in F1 Racing Sparks Discussion: A discussion about a controversial penalty made by AI in an F1 race has drawn attention to the application of AI in sports. While specific details are unclear, this typically involves issues with the accuracy and fairness of AI refereeing systems in high-speed, complex situations, as well as how human referees and AI systems can work together. (Source: Ronald_vanLoon)

China’s First Aircraft Carrier-Style Drone “Jiutian” Scheduled for Maiden Flight in June: China plans to conduct the maiden flight of its first aerial drone mothership, “Jiutian” SS-UAV, in June. The drone can cruise at an altitude of 15,000 meters, carry over 100 small drones or 1,000 kg of missiles, and has a range of 7,000 km. This news has drawn attention to the development of China’s military drone technology. (Source: menhguin)

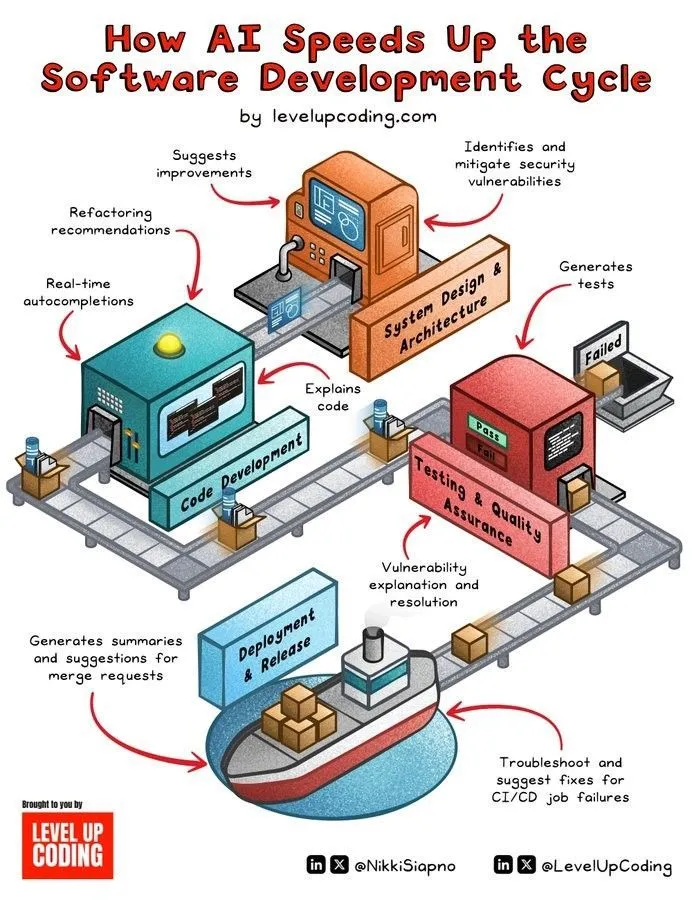

AI Accelerates Software Development Lifecycle: AI technology is significantly accelerating the software development lifecycle by automating code generation, testing, debugging, and documentation. AI tools help developers improve efficiency, reduce repetitive work, and potentially identify errors, thereby shortening time-to-market. (Source: Ronald_vanLoon)

Human Brain-Like Microtechnology Empowers Humanoid Robots with Real-Time Perception and Thinking: A microtechnology imitating the human brain’s structure is being developed to equip humanoid robots with real-time visual perception and thinking capabilities. This technology may involve neuromorphic computing or efficient AI chip design, aiming to enable robots to react more quickly and intelligently in complex environments. (Source: Ronald_vanLoon)

Fourier Robots Unveils Self-Developed Humanoid Robot Fourier GR-1: Fourier Robots has launched its independently developed humanoid robot, GR-1. The robot’s design emphasizes advanced motion control and a highly biomimetic torso structure, aiming for more flexible and natural movement capabilities, showcasing China’s progress in the field of humanoid robotics. (Source: Ronald_vanLoon)

Unitree G1 Bionic Robot Agility Upgraded: Unitree has showcased an upgraded version of its G1 bionic robot with enhanced agility. This typically means improvements in the robot’s motion control, balance, and environmental adaptability, allowing it to perform tasks more flexibly and navigate complex terrains. (Source: Ronald_vanLoon)

Chinese Humanoid Robots Perform Quality Inspection Tasks: Humanoid robots in China are now being used to perform quality inspection tasks. This indicates the expanding application of humanoid robots in industrial automation, leveraging their flexibility and perceptual abilities to replace or assist human labor in repetitive and high-precision inspection work. (Source: Ronald_vanLoon)

Nanorobots Carry “Hidden Weapons” to Kill Cancer Cells: A new medical technology development shows that nanorobots can carry “hidden weapons” to precisely target and kill cancer cells. This technology leverages the tiny size and controllability of nanorobots, promising more precise cancer treatment options with fewer side effects. (Source: Ronald_vanLoon)

Privacy-Enhancing Technologies Increasingly Crucial for Modern Businesses: With tightening data privacy regulations and growing user awareness of personal information protection, Privacy-Enhancing Technologies (PETs) are becoming increasingly important for modern enterprises. Technologies like federated learning and homomorphic encryption enable data analysis and value extraction while protecting data privacy, helping businesses achieve compliant development. (Source: Ronald_vanLoon)

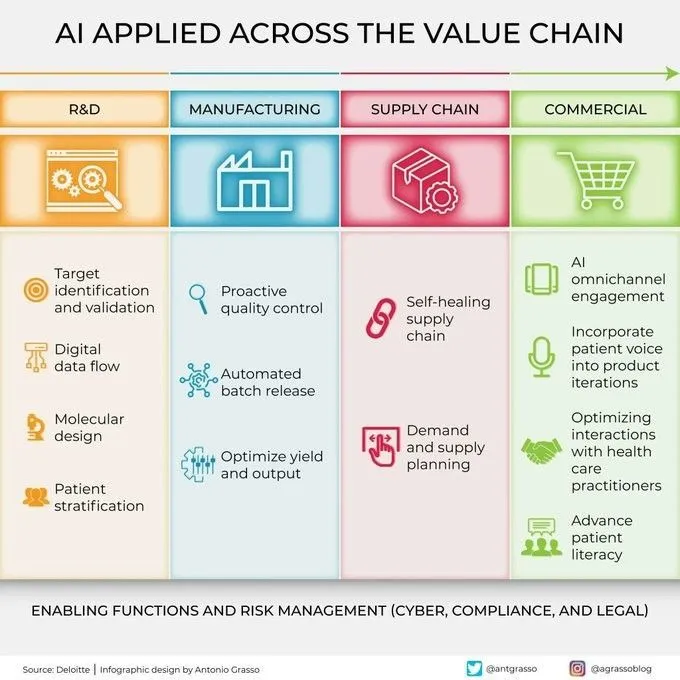

AI Increasingly Applied Across All Stages of the Value Chain: Artificial intelligence technology is being widely applied across various stages of the enterprise value chain, including R&D, production, marketing, sales, and after-sales service. Through data analysis, predictive modeling, process automation, and other means, AI helps businesses optimize operational efficiency, enhance customer experience, and create new business value. (Source: Ronald_vanLoon)

🧰 Tools

KernelSU: Kernel-Based Root Solution for Android: KernelSU is a kernel-based Root solution designed for Android devices. It provides kernel-level su and Root access management, features an OverlayFS-based module system, and application profile functionality, aiming for deeper control over device permissions. The project supports Android GKI 2.0 devices (kernel 5.10+), is also compatible with older kernels (4.14+, manual compilation required), and supports WSA, ChromeOS, and containerized Android environments. (Source: GitHub Trending)

Sunshine: Self-Hosted Game Stream Host, Moonlight-Compatible: Sunshine is an open-source, self-hosted game stream host software that allows users to stream PC games to various Moonlight-compatible devices. It supports hardware encoding for AMD, Intel, and Nvidia GPUs, and also offers software encoding options, aiming for a low-latency cloud gaming experience. Users can configure and pair clients via a Web UI. (Source: GitHub Trending)

Tasmota: Open Source Alternative Firmware for ESP8266/ESP32 Devices: Tasmota is an alternative firmware designed for smart devices based on ESP8266 and ESP32 chips. It offers an easy-to-use Web UI for configuration, supports OTA online upgrades, enables automation through timers or rules, and provides full local control via MQTT, HTTP, serial, or KNX protocols, enhancing device extensibility and customizability. (Source: GitHub Trending)

Limbo: A Modern Rust Evolution of SQLite Project: The Limbo project aims to build a modern evolution of SQLite using the Rust language. It supports io_uring asynchronous I/O on Linux, is compatible with SQLite’s SQL dialect, file format, and C API, and provides bindings for languages like JavaScript/WASM, Rust, Go, Python, and Java. Future plans include integrating vector search, improving concurrent writes, and enhancing schema management. (Source: GitHub Trending)

Ventoy: A New Generation Bootable USB Solution: Ventoy is an open-source tool for creating bootable USB drives, supporting direct booting from image files in various formats like ISO, WIM, IMG, VHD(x), and EFI, without needing to repeatedly format the USB drive. Users simply copy the image files to the USB drive, and Ventoy automatically generates a boot menu. It supports multiple operating systems and boot modes (Legacy BIOS, UEFI), and is compatible with MBR and GPT partitions. (Source: GitHub Trending)

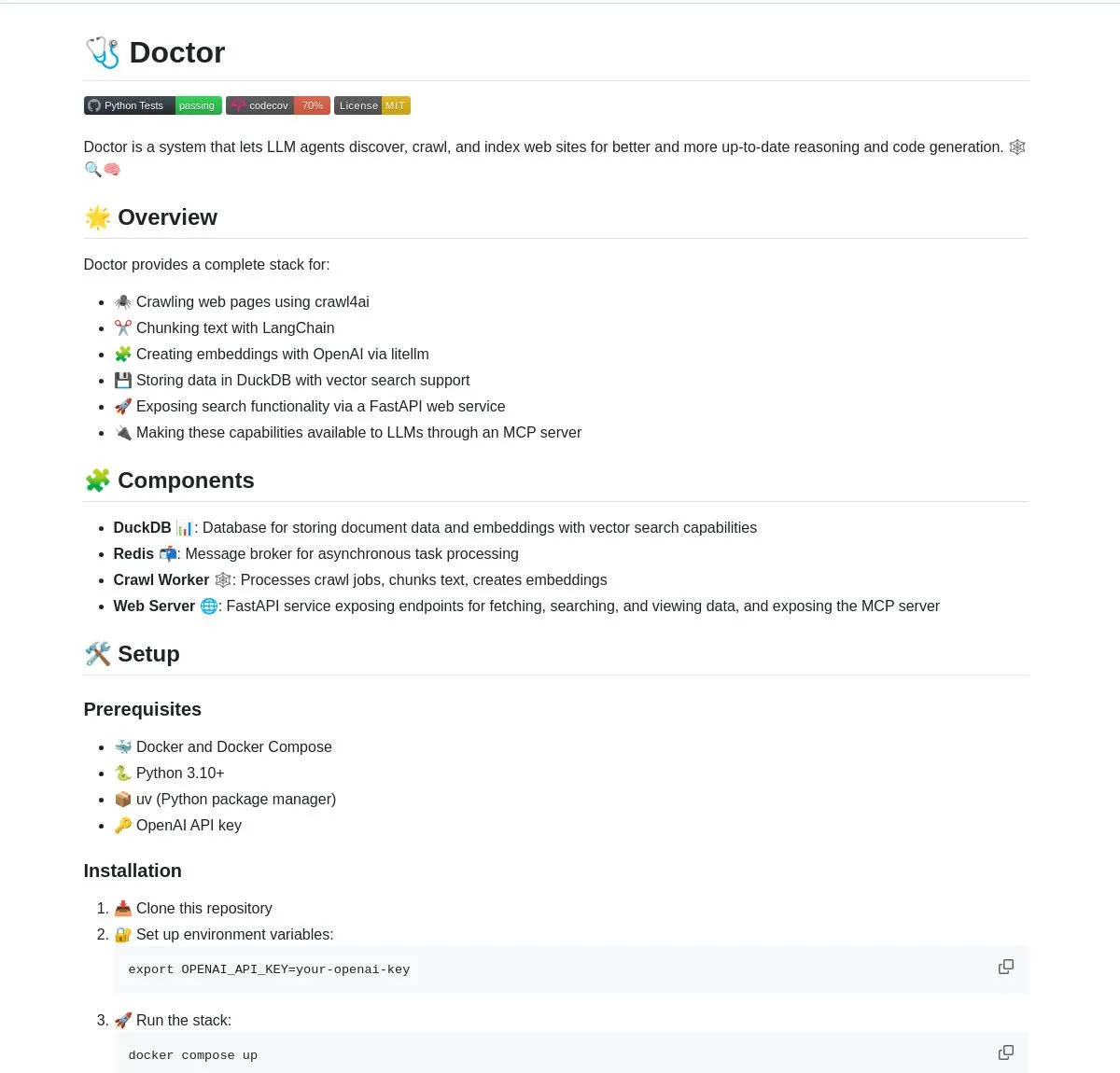

Doctor: LangChain-Powered LLM Agent for Web Crawling and Understanding: Doctor is a tool that helps LLM agents crawl and understand web content in real-time. It combines web page processing, vector search, and LangChain’s document processing capabilities, offering its services via FastAPI. Users can leverage Doctor to enhance the information retrieval and analysis capabilities of their AI applications. (Source: LangChainAI, Hacubu)

Deep Research Agent: Locally Run, Privacy-Preserving AI Research Agent: A privacy-focused open-source AI agent that can be run locally to research any topic. It utilizes LangGraph to drive its iterative research workflow, providing users with a powerful localized research tool without needing to upload data to the cloud. (Source: LangChainAI, Hacubu)

Smart Terminal Assistant: Natural Language to Command-Line Tool for Multiple Operating Systems: A smart terminal assistant that converts natural language instructions into terminal commands across different operating systems. Built on LangGraph’s multi-agent system, the tool uses A2A and MCP protocols for cross-platform execution, aiming to simplify command-line operations and lower the barrier to entry for users. (Source: LangChainAI)

Montelimar: Open-Source On-Device OCR Toolkit: Julien Blanchon has released Montelimar, an open-source on-device OCR (Optical Character Recognition) toolkit. It supports screen capture and OCR for different parts of the screen, compatible with Nougat and OCRS models, with backends using Rust (OCRS) and MLX (Nougat) respectively. The tool can output LaTeX, tables, Markdown (via Nougat, slower), and plain text (via OCRS, faster), and offers history and system-wide shortcut features. (Source: awnihannun)

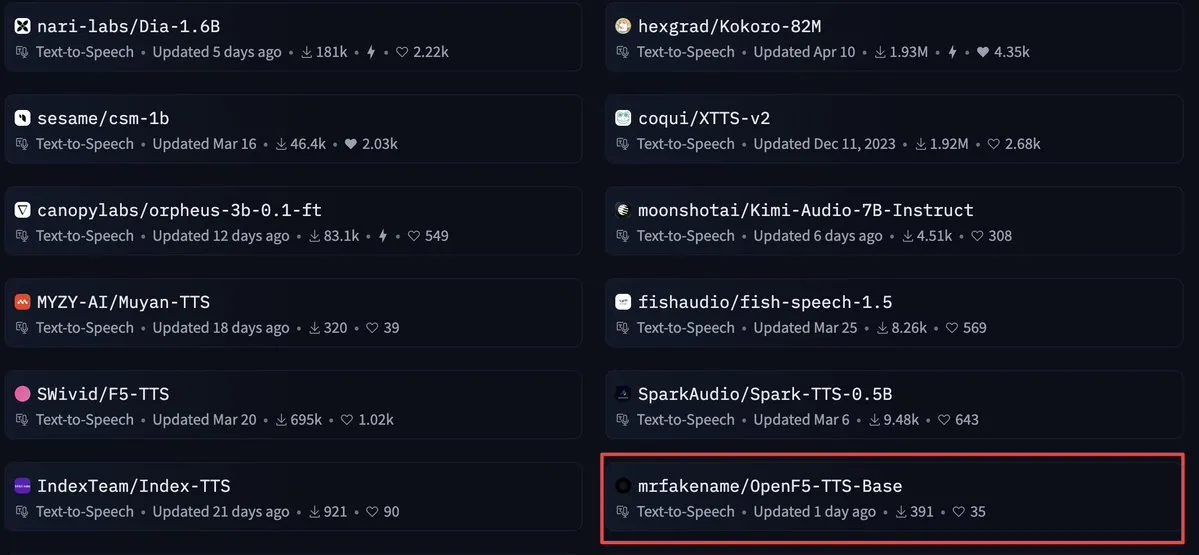

OpenF5 TTS: Apache 2.0 Licensed Text-to-Speech Model for Commercial Use: OpenF5 TTS is a text-to-speech model retrained from the F5-TTS model, released under the Apache 2.0 open-source license, making it available for commercial purposes. The model is currently trending among text-to-speech models on Hugging Face, offering developers a high-quality, commercially viable speech synthesis option. (Source: ClementDelangue)

Tensor Slayer: Tool to Boost Model Performance by 25% Without Training: Tensor Slayer is a newly released tool claiming to improve model performance by 25% through direct tensor patching, without requiring fine-tuning, datasets, additional computational costs, or training time. This concept is quite disruptive, aiming to democratize AI model improvement. (Source: TheZachMueller)

Photoshop Leverages Local Computer Use Agents (c/ua) for No-Code Operations: Computer Use Agents (c/ua) demonstrate how to achieve no-code operations in Photoshop through user prompts, model selection, Docker, and appropriate agent loops. This aims to lower the barrier for ordinary users to operate complex software by simplifying workflows with AI agents. (Source: Reddit r/artificial)

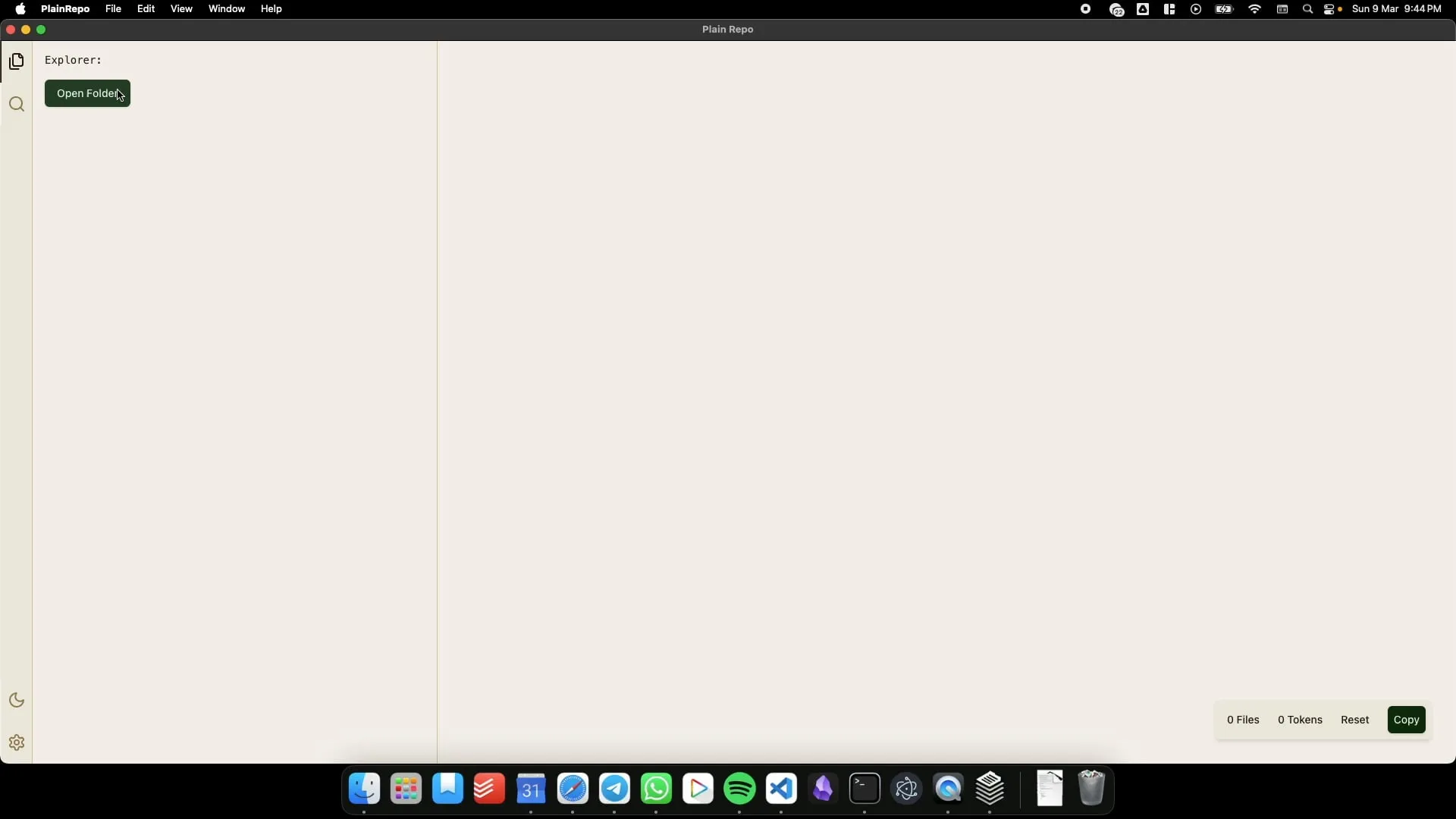

PlainRepo: Offline App for Selectively Copying Large Chunks of Code/Text as LLM Context: PlainRepo is a free, open-source offline application that lets users select large code or text snippets and copy them as context for local LLMs. This is useful for users who run local LLMs in an offline environment or for privacy reasons. (Source: Reddit r/LocalLLaMA, Plus-Garbage-9710)

M0D.AI: Personalized AI Interaction Control Framework Built by User-AI Collaboration Over Five Months: User James O’Kelly, through five months of deep collaboration involving approximately 13,000 conversations with AIs like Gemini and ChatGPT, has built a highly customized AI interaction and control framework called M0D.AI. The system includes a Python backend, a Flask web server, a dynamic frontend UI, and a metacognitive layer named mematrix.py to monitor and guide AI behavior. M0D.AI demonstrates how users without a programming background can design and develop complex software systems with AI assistance. (Source: Reddit r/artificial)

📚 Learning

LLM Engineering: 8-Week Course Resource Repository to Master AI and LLMs: An 8-week course titled “LLM Engineering – Master AI and LLMs” aims to help students master large language model engineering. The accompanying GitHub repository provides weekly project code, setup guides (PC, Mac, Linux), and Colab links. The course emphasizes hands-on practice, starting with installing Ollama to run Llama 3.2, and progressively delves into HuggingFace, API usage, model fine-tuning, and more. It also provides guidance on using Ollama as a free alternative to paid APIs like OpenAI. (Source: GitHub Trending)

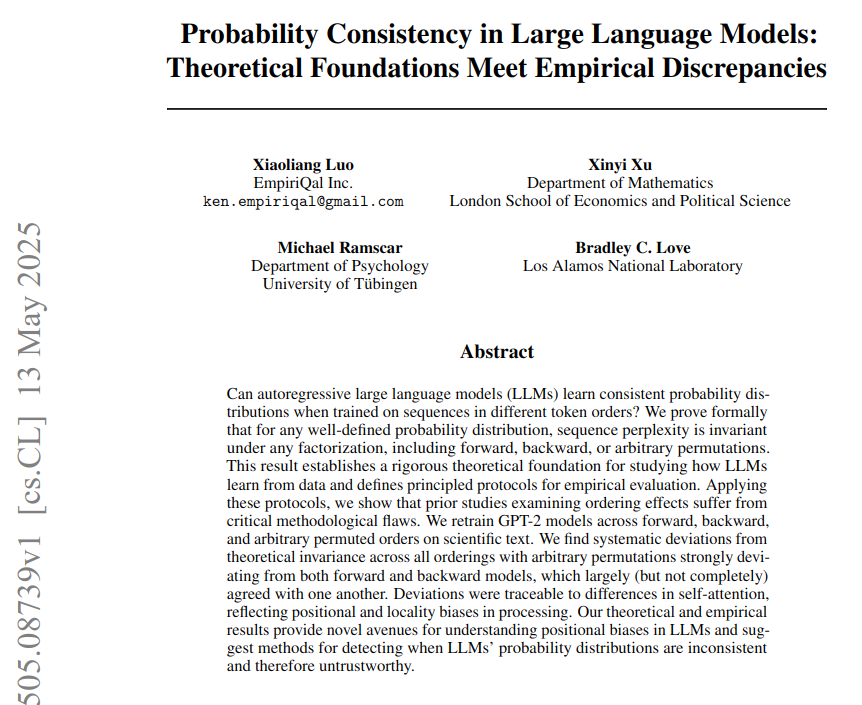

Probabilistic Consistency in LLMs: Theoretical Foundations and Empirical Discrepancies: A paper titled “Probabilistic Consistency in LLMs: Theoretical Foundations and Empirical Discrepancies” points out that Large Language Models (LLMs) compute token probabilities in a fixed order, and that models trained with different token orders deviate from theoretical probabilistic consistency in practice. The authors train GPT-2 models on neuroscience texts using forward, backward, and permuted token orders. Perplexity is theoretically order-invariant, but empirically the models fail this test due to architectural biases. The paper identifies attention biases (local and long-range) as direct causes of the observed consistency failures. (Source: menhguin)
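The order-invariance claim follows from the chain rule of probability: factorizing a joint distribution forward or backward gives the same sequence probability, and therefore the same perplexity. A minimal sketch on a made-up two-token distribution (the `JOINT` table is hypothetical, not data from the paper):

```python
import math

# Hypothetical joint distribution over two-token sequences (sums to 1).
JOINT = {
    ("a", "a"): 0.4,
    ("a", "b"): 0.1,
    ("b", "a"): 0.2,
    ("b", "b"): 0.3,
}


def marginal(position: int, token: str) -> float:
    """Probability that the token at `position` equals `token`."""
    return sum(p for seq, p in JOINT.items() if seq[position] == token)


def forward_log_prob(seq: tuple[str, str]) -> float:
    """log p(x1) + log p(x2 | x1): left-to-right factorization."""
    p_x1 = marginal(0, seq[0])
    return math.log(p_x1) + math.log(JOINT[seq] / p_x1)


def backward_log_prob(seq: tuple[str, str]) -> float:
    """log p(x2) + log p(x1 | x2): right-to-left factorization."""
    p_x2 = marginal(1, seq[1])
    return math.log(p_x2) + math.log(JOINT[seq] / p_x2)


if __name__ == "__main__":
    for seq in JOINT:
        print(seq, forward_log_prob(seq), backward_log_prob(seq))
```

With exact conditionals the two factorizations agree up to floating-point error. The paper's point is that learned models only approximate these conditionals, so the agreement can break in practice.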

BoldVoice Leverages Machine Learning to Quantify and Guide English Accent Strength: The BoldVoice app uses machine learning and latent space techniques to quantify English accent strength and provide users with pronunciation guidance. This approach aims to help users improve their English pronunciation and accent more effectively. (Source: dl_weekly)

Milvus Blog: Challenges and Optimizations for Efficient Metadata Filtering with High Recall in Production: Milvus has published a practical blog post on the challenges of achieving efficient metadata filtering while maintaining high recall in production vector search environments, along with optimization strategies to address them. (Source: dl_weekly)

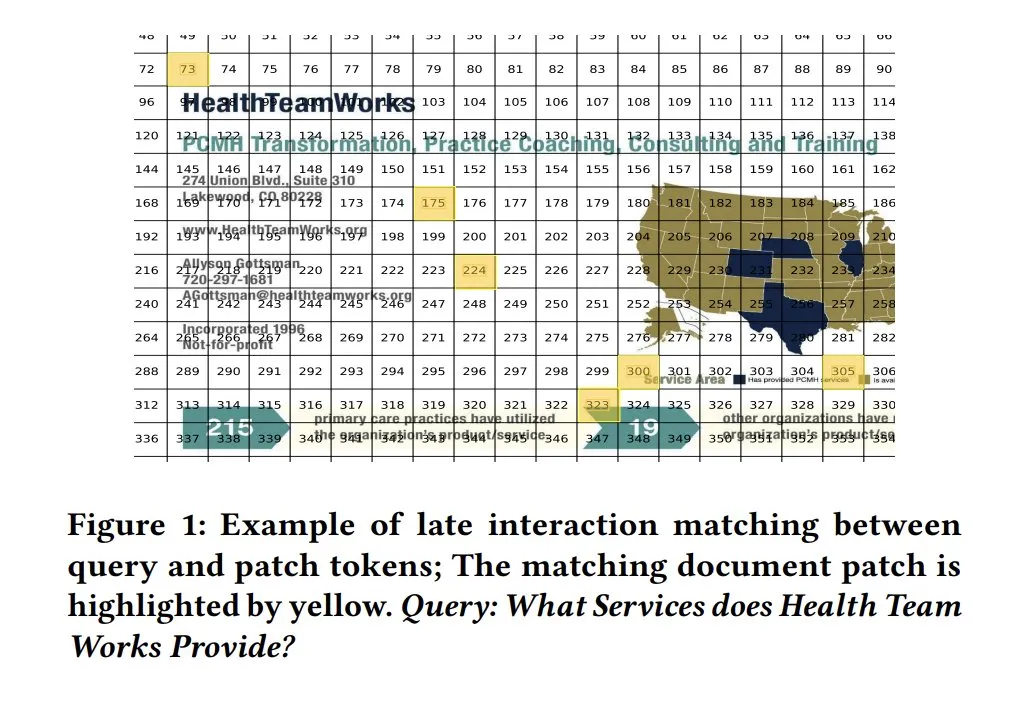

ColPali Similarity Maps for Model Interpretability: Similarity maps in visual document retrieval models like ColPali offer strong interpretability by showing how a query matches individual document fragments. Visualizing which image regions are relevant to a query, often through heatmaps, helps in understanding the model’s decision-making process. Tony Wu provides a relevant quick start guide. (Source: lateinteraction, tonywu_71)
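The idea behind such a similarity map can be sketched without any model: score one query-token embedding against the embedding of every image patch, then lay the scores out on the patch grid. The two-dimensional vectors and the 2×2 grid below are made-up toy values, and the helper names are hypothetical. This is not ColPali's API.

```python
def dot(u: list[float], v: list[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def similarity_map(query_vec: list[float], patch_grid: list[list[list[float]]]) -> list[list[float]]:
    """Score one query-token embedding against every patch embedding in the grid."""
    return [[dot(query_vec, patch) for patch in row] for row in patch_grid]


def hottest_patch(sim_map: list[list[float]]) -> tuple[int, int]:
    """Return the (row, column) of the highest-scoring patch."""
    cells = [(r, c) for r, row in enumerate(sim_map) for c in range(len(row))]
    return max(cells, key=lambda rc: sim_map[rc[0]][rc[1]])


# Toy 2x2 grid of unit-length patch embeddings (made-up values).
PATCHES = [
    [[1.0, 0.0], [0.6, 0.8]],
    [[0.0, 1.0], [0.8, 0.6]],
]
QUERY_TOKEN = [0.0, 1.0]

if __name__ == "__main__":
    heat = similarity_map(QUERY_TOKEN, PATCHES)
    print(heat, hottest_patch(heat))
```

Rendering `heat` as a heatmap over the page image gives the kind of visualization described above. A real model would produce one such map per query token.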

soarXiv: An Elegant Way to Explore Human Knowledge: Jinay has launched soarXiv, a platform designed to explore research papers in a more aesthetically pleasing and interactive manner. Users can replace “arxiv” with “soarxiv” in an ArXiv paper URL to locate and browse the paper in a cosmic star-map-like interface. The platform has embedded 2.8 million papers as of April 2025. (Source: menhguin)
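The URL rewrite described above is a one-line string substitution. A small sketch follows. The helper name `to_soarxiv` is hypothetical, and the paper ID in the usage example is a placeholder rather than a real paper.

```python
def to_soarxiv(url: str) -> str:
    """Replace the first occurrence of 'arxiv' in an arXiv URL with 'soarxiv'."""
    if "arxiv" not in url:
        raise ValueError(f"not an arXiv URL: {url!r}")
    return url.replace("arxiv", "soarxiv", 1)


if __name__ == "__main__":
    # Placeholder paper ID, used only to show the substitution.
    print(to_soarxiv("https://arxiv.org/abs/0000.00000"))
```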

MLX-LM-LoRA v0.3.3 Released, Simplifying Local Fine-Tuning on Apple Silicon: Gökdeniz Gülmez has released MLX-LM-LoRA v0.3.3, which makes local model fine-tuning on Apple Silicon simpler and more flexible. The new version supports setting training epochs directly in the training configuration or on the command line. It also provides example scripts and notebooks covering basic fine-tuning and advanced preference training with DPO, requiring only about 20 lines of code to get started. (Source: awnihannun)

System Prompt Leak Analysis: Unveiling Internal Architecture and Behavioral Rules of Major LLMs: Simbaproduz has published a project on GitHub comprehensively analyzing recent system prompt leaks from major large language models (such as Claude 3.7, ChatGPT-4o, Grok 3, Gemini, etc.). This guide delves into the internal architecture, operational logic, and behavioral rules of these models, including information persistence, image processing strategies, web navigation methods, personalization systems, and defense mechanisms against adversarial manipulation. This information is highly valuable for building LLM tools, agents, and evaluation systems. (Source: Reddit r/MachineLearning)

ICML 2025 Paper Explores Frequency Domain Decomposition of Image Adversarial Perturbations: An ICML 2025 Spotlight paper from the University of Chinese Academy of Sciences and the Institute of Computing Technology, titled “Diffusion-based Adversarial Purification from the Perspective of the Frequency Domain,” proposes that adversarial perturbations are more inclined to disrupt the high-frequency amplitude and phase spectra of images. Based on this, researchers suggest injecting the low-frequency information of the original sample as a prior during the reverse process of diffusion models to guide the generation of clean samples, thereby effectively removing adversarial perturbations while preserving image semantic content. (Source: WeChat)

ICML 2025 Paper TokenSwift: 3x Acceleration for 100K-Level Long Text Generation via “Autocompletion”: The BIGAI NLCo team presented the paper “TokenSwift: Lossless Acceleration of Ultra Long Sequence Generation” at ICML 2025, proposing TokenSwift, a lossless and efficient acceleration framework for 100K token-level long text inference. Through mechanisms like multi-token parallel drafting, n-gram heuristic completion, tree-structured parallel verification, and dynamic KV cache management, TokenSwift achieves over 3x inference acceleration while maintaining the consistency of the original model’s output, significantly improving the efficiency of ultra-long sequence generation. (Source: WeChat)
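Why draft-and-verify decoding can be lossless is easiest to see on a toy model. The sketch below is not TokenSwift. It covers only n-gram drafting and sequential verification, and omits multi-token parallel drafting, tree-structured verification, and KV cache management. `target_next` is a hypothetical deterministic stand-in for the target model's greedy next-token choice.

```python
def target_next(context: list[int]) -> int:
    """Hypothetical 'target model': greedy next token from the last two tokens."""
    return (context[-1] * 3 + context[-2]) % 7


def greedy_decode(prompt: list[int], n_new: int) -> list[int]:
    """Baseline: one target-model call per generated token."""
    out = list(prompt)
    for _ in range(n_new):
        out.append(target_next(out))
    return out


def ngram_draft(out: list[int], k: int, n: int = 2) -> list[int]:
    """Find the most recent earlier occurrence of the last n tokens and copy up to k tokens that followed it."""
    key = out[-n:]
    for start in range(len(out) - n - 1, -1, -1):
        if out[start:start + n] == key:
            return out[start + n:start + n + k]
    return []


def speculative_decode(prompt: list[int], n_new: int, k: int = 4) -> tuple[list[int], int]:
    """Draft with n-grams, keep only tokens the target agrees with; returns (tokens, rounds)."""
    out = list(prompt)
    target_len = len(prompt) + n_new
    rounds = 0
    while len(out) < target_len:
        rounds += 1
        # A real system would score all draft positions in one batched pass;
        # this toy checks them one at a time.
        for tok in ngram_draft(out, k):
            if len(out) >= target_len or tok != target_next(out):
                break
            out.append(tok)
        # Always add the target's own next token, so every round makes progress.
        if len(out) < target_len:
            out.append(target_next(out))
    return out, rounds


if __name__ == "__main__":
    plain = greedy_decode([1, 2], 50)
    fast, rounds = speculative_decode([1, 2], 50)
    print(fast == plain, rounds)
```

Because every accepted token is checked against the target's own choice, the output matches plain greedy decoding exactly. The saving comes from needing fewer verification rounds than generated tokens once the text starts repeating earlier n-grams.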

💼 Business

OpenAI Accused of Fueling the AI Arms Race It Once Warned Against: An article from Bloomberg explores how OpenAI, after launching ChatGPT, transformed from an organization wary of AI risks into a key player driving the AI technology race. The article likely analyzes OpenAI’s strategic shifts, commercial pressures, and the impact of its actions on the AI industry’s development direction and safety considerations. (Source: Reddit r/ArtificialInteligence)

Trump Administration Terminates Nearly $3 Billion in Harvard Research Funding, Sparking Global Talent Scramble: The Trump administration has terminated nearly $3 billion in research funding for Harvard University, affecting over 350 projects, a move seen as a major blow to the U.S. research system. Meanwhile, the EU, Canada, Australia, and other countries and regions have launched multi-million dollar funding programs to attract affected top U.S. scientists, sparking discussions about global research talent flows. Harvard has filed a lawsuit and allocated $250 million of its own funds to mitigate the crisis. (Source: WeChat)

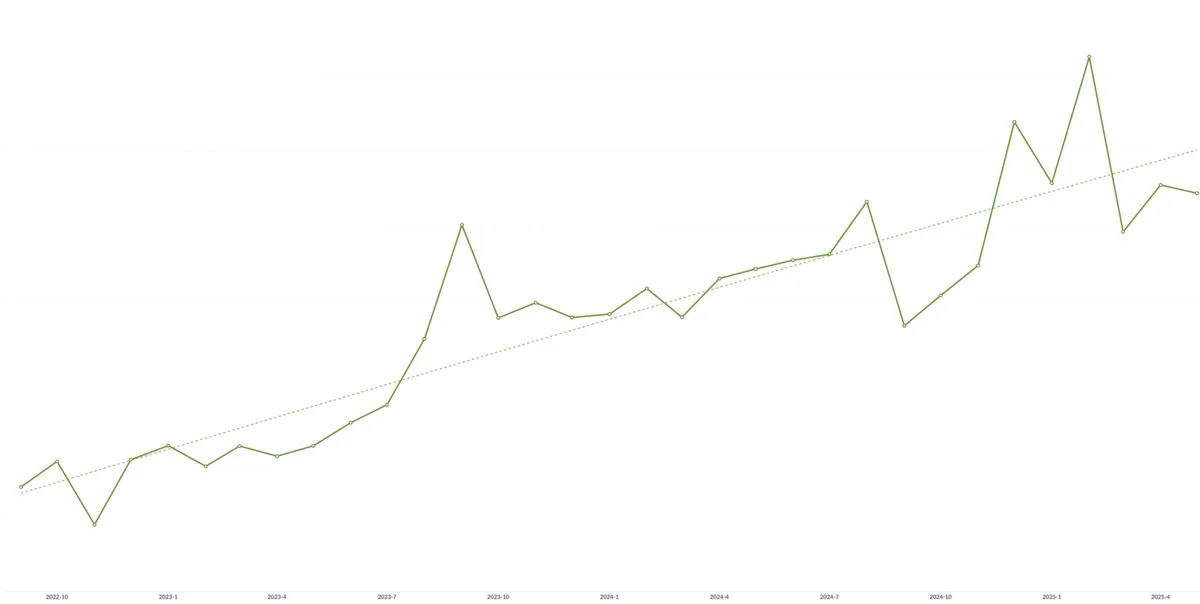

AI Startup Spellbook Reports Three Consecutive Years of Average Contract Value (ACV) Growth: Despite concerns that AI technology commoditization could lead to price pressure, Scott Stevenson, co-founder of AI legal software startup Spellbook, stated that his company’s ACV has grown for three consecutive years. He believes that fast-moving teams can keep creating new value through AI products, thereby offsetting potential downward price pressure. (Source: scottastevenson)

🌟 Community

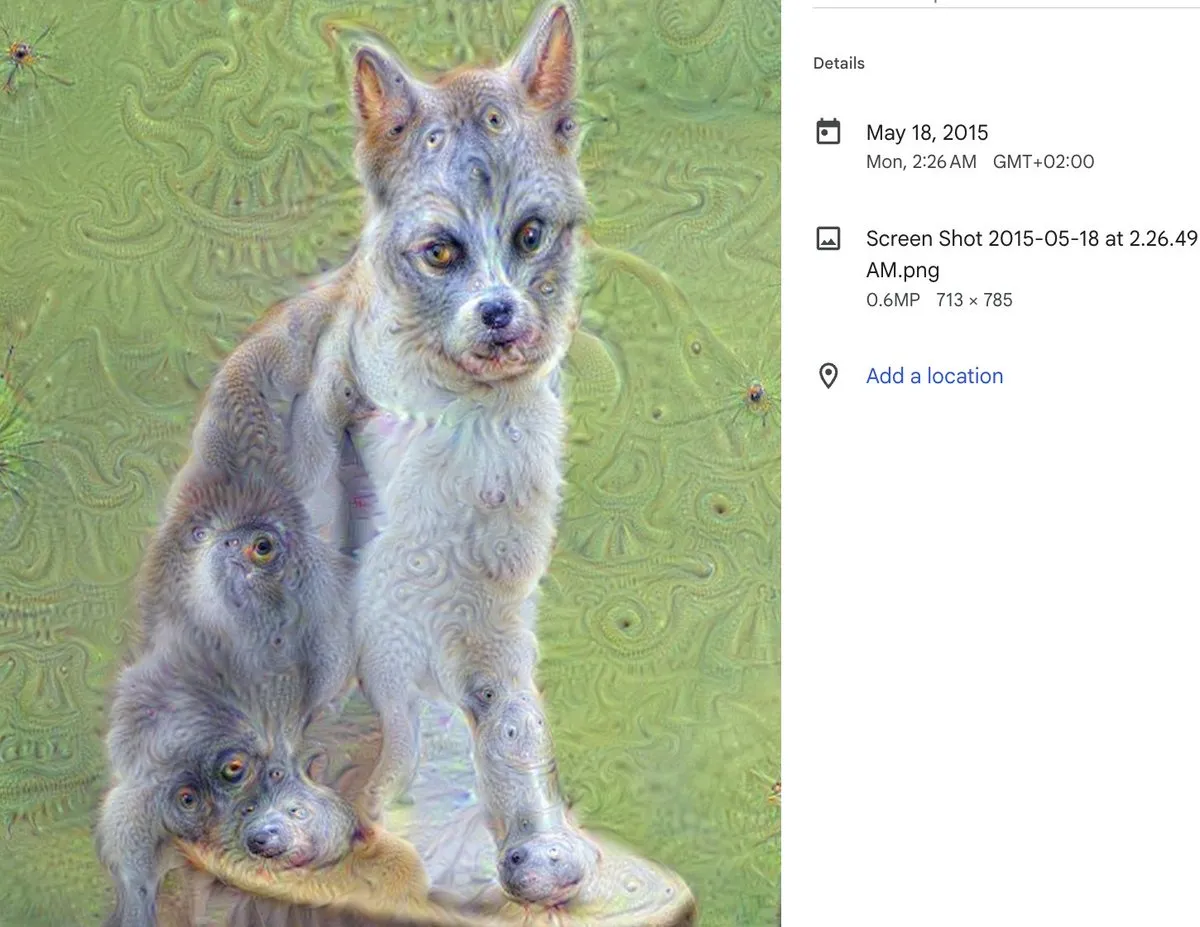

DeepDream’s 10th Anniversary: An AI Art Milestone and Its Profound Impact: Alex Mordvintsev, creator of DeepDream, reflects on the birth of this phenomenal AI art tool ten years ago. Cristóbal Valenzuela, co-founder of Runway, also shared how DeepDream inspired him to enter the field of AI art, eventually co-founding Runway. DeepDream’s emergence marked an early demonstration of AI’s potential in artistic creation and has had a profound impact on the development of subsequent generative art and AI content creation tools. (Source: c_valenzuelab)

Heated Debate on Whether AI Makes Technical Co-Founders Unnecessary: A discussion emerged on social media about “early VCs advising entrepreneurs that they no longer need technical co-founders, just product managers and AI to build products.” This view sparked widespread controversy, with figures like Danielle Fong expressing skepticism, implying that AI cannot yet fully replace the core role and deep technical understanding of a technical co-founder. (Source: jonst0kes)

Discussion on AI Hallucination: Technical Causes and Coping Strategies: The community is actively discussing the issue of “hallucinations” (confidently generating false or fabricated information) in AI language models like ChatGPT and Claude. Discussion points include the technical roots of hallucinations (e.g., attention mechanism flaws, training data noise, lack of real-world grounding), whether RAG or fine-tuning can eradicate them, how users should critically approach LLM outputs, and how developers can balance creativity with factual accuracy. Some argue that all LLM outputs should be treated as potentially hallucinatory and require user verification. (Source: Reddit r/ArtificialInteligence)

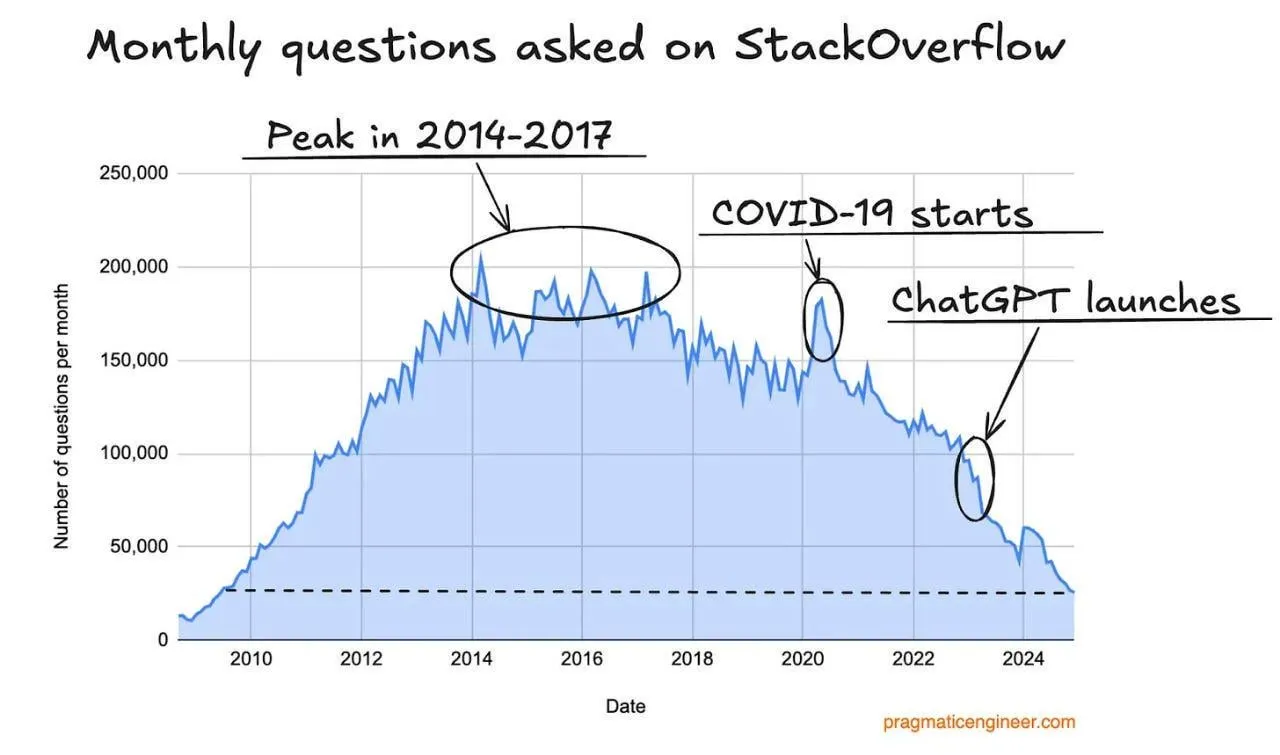

Stack Overflow Traffic Declines, Potentially Impacted by AI Programming Assistants: Users have observed a downward trend in Stack Overflow’s website traffic, speculating it might be related to the rise of AI programming assistants like ChatGPT. Developers are increasingly asking AI directly for code snippets and solutions, reducing reliance on traditional Q&A communities. In comments, users generally believe AI assistants offer more direct answers and avoid negative community sentiment, but also worry that over-reliance on existing data could lead to future training data depletion. (Source: Reddit r/ArtificialInteligence)

LLM Engineering Course Instructor Shares Learning Insights and Resources: Ed Donner, instructor for an LLM engineering course, shared his teaching philosophy and resources, emphasizing the importance of learning by doing. He encourages students to actively engage with code and provides setup guides for PC, Mac, and Linux, as well as Google Colab notebook links, making it easier to learn and experiment across different environments. Course content covers Ollama, HuggingFace, and API usage, and shows how to use local models as alternatives to paid APIs. (Source: ed-donner)

User Experience Sharing: Using Claude to Enhance Thinking and Communication Skills: A Claude Pro user shared how interacting with AI helped improve their thinking patterns and communication skills. Through interactions with Claude, the user learned to better “prompt” themselves when solving problems, identify core issues, and focus more on clear expression and empathy when communicating with colleagues, thereby recognizing the positive impact of AI-assisted tools on enhancing personal cognitive and expressive abilities. (Source: Reddit r/ClaudeAI)

“Discriminator-Generator Gap” Potentially a Core Concept for AI Scientific Innovation: Jason Wei proposes that the “Discriminator-generator gap” might be the most important idea in AI for scientific innovation. When sufficient computational power, clever search strategies, and clear metrics are available, anything measurable can be optimized by AI. This concept emphasizes driving innovation through an iterative process where a generator proposes solutions and a discriminator evaluates them, particularly suitable for environments that allow rapid validation, continuous rewards, and scalability. (Source: _jasonwei, dotey)

Transformation and Challenges for Product Managers in the AI Era: Social media discussions are addressing the impact of AI on the product manager role. The consensus is that the product management industry will face a transformation in the next 18 months, and PMs who don’t understand user needs may be phased out. While AI tools (like AI Agents) can quickly turn ideas into products, the real challenge lies in identifying users’ core pain points and providing precise solutions. This role ultimately hinges on the ability to match user problems with solutions, rather than just creating documents and prototypes. (Source: dotey)

AI Safety Paradox: Superintelligence May Favor the Defender: Richard Socher proposes the “AI Safety Paradox”: under reasonable assumptions, the advent of superintelligence might actually favor the defender in biological or cyber warfare. As the marginal cost of intelligence decreases, more attack vectors can be discovered through red teaming, and systems can be hardened or immunized until all relevant attack paths are covered. Theoretically, when defense costs approach zero, systems can be fully immunized. This view challenges the traditional notion that AI development exacerbates offense-defense asymmetry. (Source: RichardSocher)

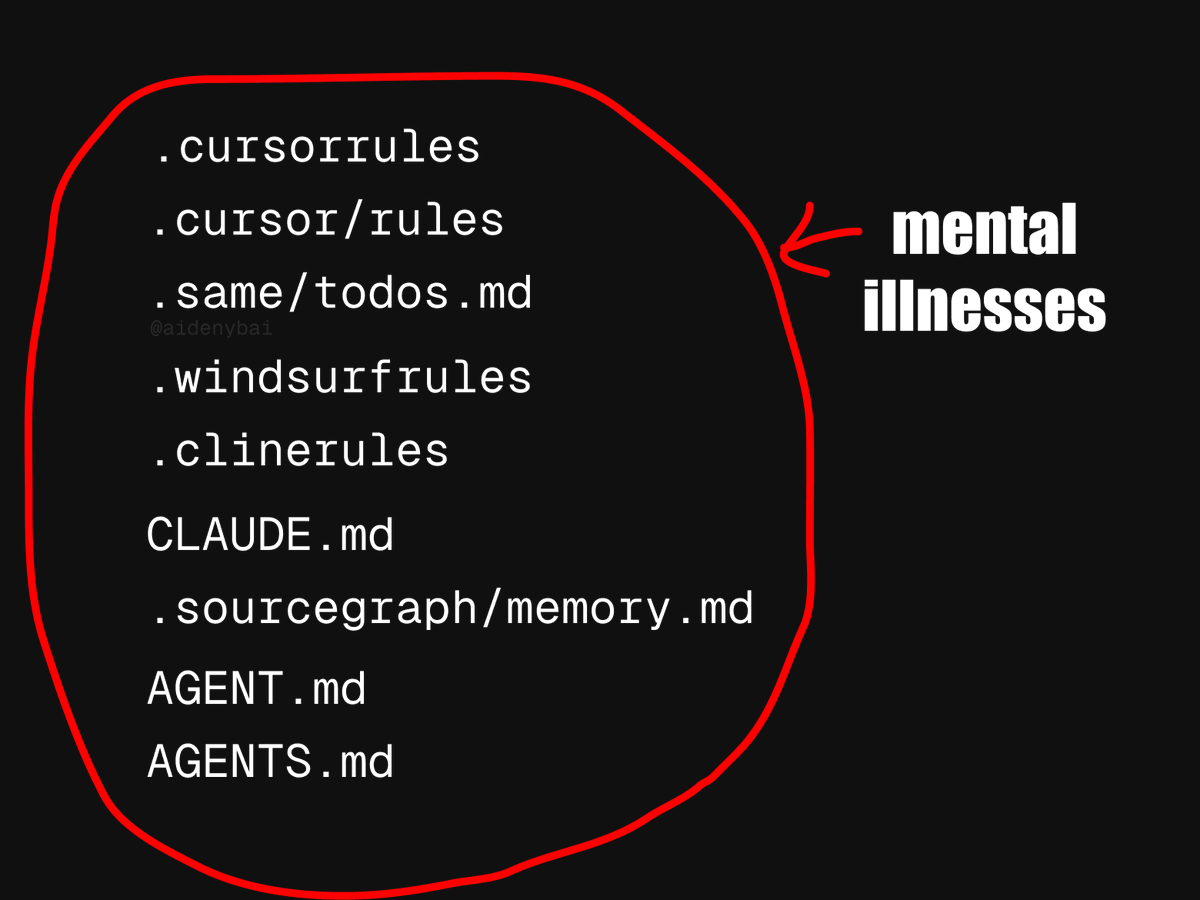

Debate Over AI Agent Application Standards: CONTRIBUTING.md as a Better Practice?: In response to the emergence of nine competing standards for AI Agent rules, some developers suggest using a project’s CONTRIBUTING.md file to regulate AI Agent behavior. This file typically already contains code style guides, relevant references, and compilation snippets, making it a natural vehicle for AI Agent rules and avoiding reinventing the wheel. (Source: JayAlammar)

💡 Others

Peter Lax, Author of Classic Textbook “Functional Analysis,” Passes Away at 99: Mathematical giant Peter Lax, the first applied mathematician to receive the Abel Prize, has passed away at the age of 99. Professor Lax was renowned for his classic textbook “Functional Analysis” and made foundational contributions to fields such as partial differential equations, fluid dynamics, and numerical computation, including the Lax Equivalence Theorem and Lax-Friedrichs/Lax-Wendroff methods. He was also one of the pioneers in applying computer technology to mathematical analysis, and his work profoundly influenced scientific research and engineering practice. (Source: WeChat)

AI Job Seeking: AI Agent Uses OpenAI Operator to Apply for a Thousand Jobs in One Click, Sparking Debate: A video showed an AI agent using OpenAI’s Operator tool to submit applications to 1,000 jobs with a single click. This has sparked discussion about the use of AI in recruitment, including AI screening resumes, scheduling interviews, and even conducting preliminary interviews, as well as the impact of such automation on job seekers and recruiters. (Source: Reddit r/ChatGPT)

MIT Retracts AI-Related Economics Paper, Suspected to be AI-Written with Questionable Data: The MIT Department of Economics has retracted a paper titled “Artificial Intelligence, Scientific Discovery, and Product Innovation,” written by a doctoral student, due to the university’s lack of confidence in the reliability of the paper’s data. The community speculates that the paper may have been largely completed by AI, sparking discussions about the ethics and quality control of AI in academic research. (Source: Reddit r/ArtificialInteligence)