Keywords: OpenAI Codex, AI software development, multimodal models, AI voice generation, data filtering, Codex research preview, MiniMax Speech-02, BLIP3-o multimodal model, PreSelect data filtering, SWE-1 model series

🔥 Focus

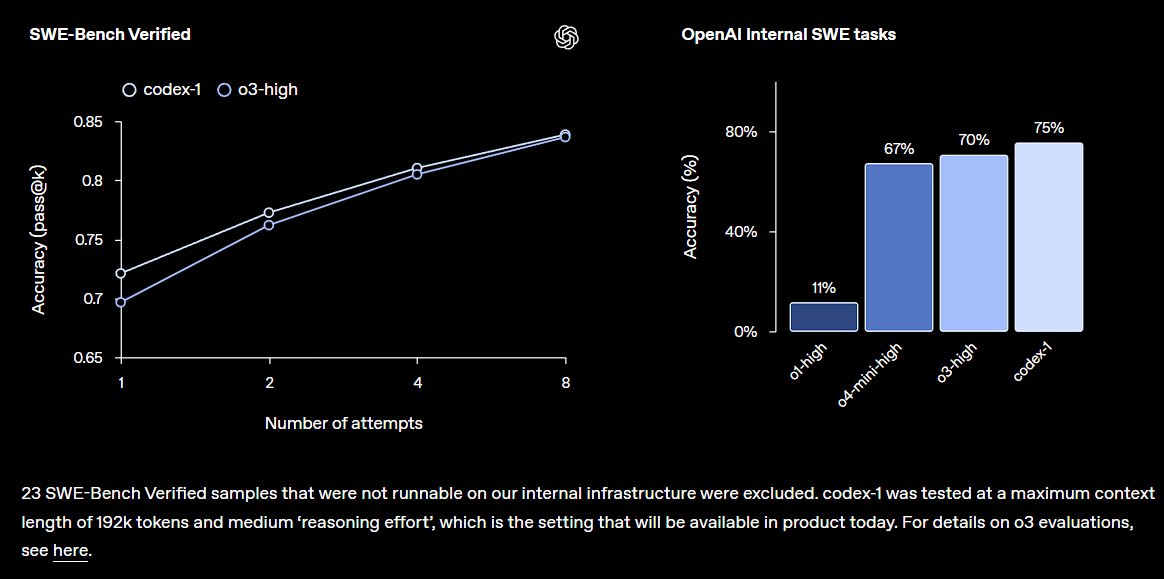

OpenAI Releases Codex Research Preview, Integrated into ChatGPT: OpenAI has introduced Codex, a cloud-based software engineering agent that can understand large codebases, write new features, fix bugs, and handle multiple tasks in parallel. Codex is powered by codex-1, a version of o3 fine-tuned for software engineering, and performs strongly on SWE-bench. The feature is rolling out gradually to ChatGPT Pro, Team, and Enterprise users; it aims to significantly boost developer productivity and signals a more central role for AI in software development. The community has reacted positively but has also raised concerns about its practical effectiveness and potential bugs (Source: OpenAI, OpenAI Developers, scaling01, dotey)

Microsoft’s Large-Scale Layoffs Send Shockwaves Through the Industry, AI-Driven Organizational Change Accelerates: Microsoft announced global layoffs of approximately 6,000 employees, aiming to reduce management layers and increase the proportion of programmers. Those laid off included veterans with 25 years of service and significant contributions, as well as core TypeScript developers. The layoffs are widely believed to be linked to AI improving efficiency and automating some job tasks, and they reflect a trend of tech giants controlling costs and restructuring their workforces in the AI era. The event has sparked widespread discussion about the impact of AI on the job market, corporate loyalty, and future work models (Source: WeChat, NeelNanda5)

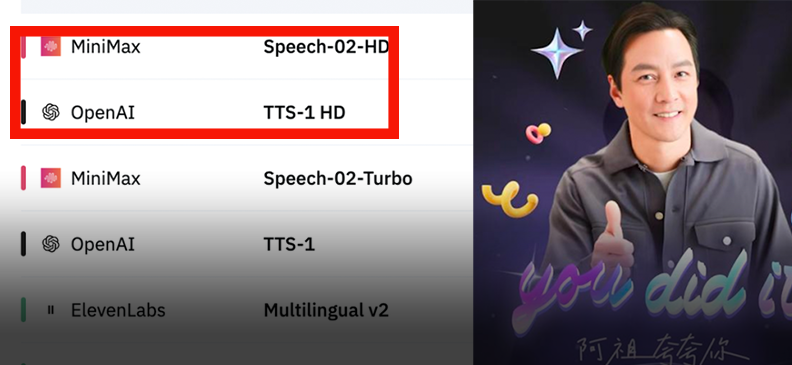

MiniMax Releases Speech-02 Voice Model, Topping Global Charts: MiniMax launched its new-generation voice model, Speech-02, which took first place on both the Artificial Analysis Speech Arena and the Hugging Face TTS Arena, two widely followed speech evaluation leaderboards, surpassing OpenAI and ElevenLabs. The model excels at ultra-realistic human-like speech, personalized voice customization (supporting 32 languages and accents, with a voice cloned from just a few seconds of reference audio), and diversity. It uses Flow-VAE technology to capture finer detail in voice cloning. The technology has been applied in scenarios such as “AI Ah Zu” for learning English and the Forbidden City AI guide, demonstrating the leading position of Chinese large models in AI speech generation (Source: WeChat, WeChat)

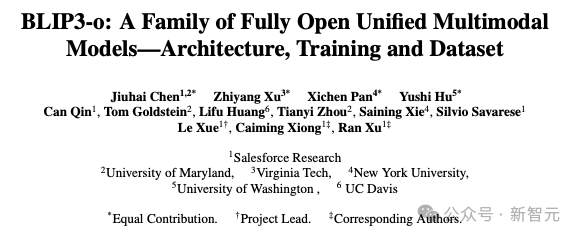

Salesforce and Other Institutions Release Unified Multimodal Model BLIP3-o: Salesforce Research, in collaboration with several universities, released the fully open-source unified multimodal model BLIP3-o. It adopts an “understand then generate” strategy, combining autoregressive and diffusion architectures. The model is trained with CLIP features and Flow Matching, which significantly improves the quality, diversity, and prompt alignment of generated images. BLIP3-o performs strongly on multiple benchmarks and is being extended to complex multimodal tasks such as image editing and visual dialogue (Source: 36Kr)

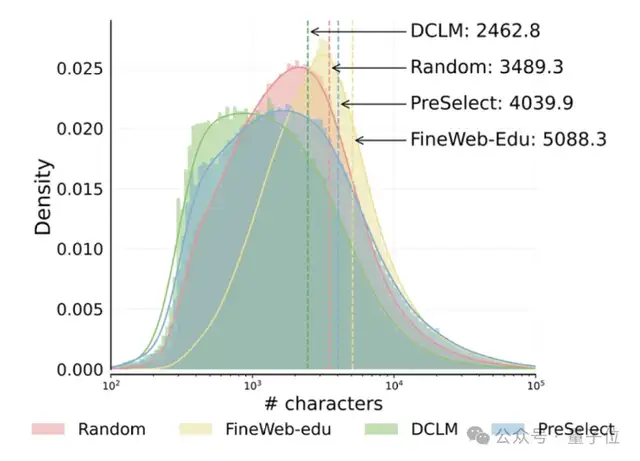

HKUST and vivo Propose PreSelect Data Filtering Scheme, Boosting Pre-training Efficiency 10x: The Hong Kong University of Science and Technology (HKUST) and vivo AI Lab have jointly proposed a lightweight and efficient data selection method called PreSelect, which has been accepted by ICML 2025. The method quantifies how much a piece of data contributes to specific model capabilities through a “prediction strength” metric. It then uses a fastText scorer to filter the entire training dataset, achieving an average 3% improvement in model performance while reducing computational requirements tenfold. PreSelect aims to select high-quality, diverse data more objectively and more generally than traditional rule-based or model-based filtering methods (Source: QbitAI)
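
The scoring idea in the PreSelect item can be illustrated with a rough sketch. The source does not give the formula, so this assumes the metric is a pairwise rank agreement: a document scores high when models that do better on a benchmark also have lower loss on that document. The function name and all numbers below are hypothetical, and the fastText scorer that the item says is applied to the full dataset is not shown.

```python
from itertools import combinations

def rank_agreement(losses, scores):
    """Fraction of model pairs where the model with the higher benchmark
    score also has the lower loss on this document (assumed metric)."""
    agree = 0
    total = 0
    for i, j in combinations(range(len(losses)), 2):
        if scores[i] == scores[j]:
            continue  # tied models give no ordering to predict
        total += 1
        better, worse = (i, j) if scores[i] > scores[j] else (j, i)
        if losses[better] < losses[worse]:
            agree += 1
    return agree / total if total else 0.0

# Hypothetical benchmark scores for three reference models (weak to strong).
scores = [0.30, 0.45, 0.60]
# Hypothetical per-model losses on two documents.
doc_a = [2.9, 2.4, 2.0]  # loss falls as models get stronger
doc_b = [2.1, 2.6, 2.3]  # loss is mostly unrelated to model strength

print(rank_agreement(doc_a, scores))
print(rank_agreement(doc_b, scores))
```

Under this assumption, documents like `doc_a` would serve as positive examples and documents like `doc_b` as negative examples when training a cheap classifier to score the rest of the corpus.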

🎯 Trends

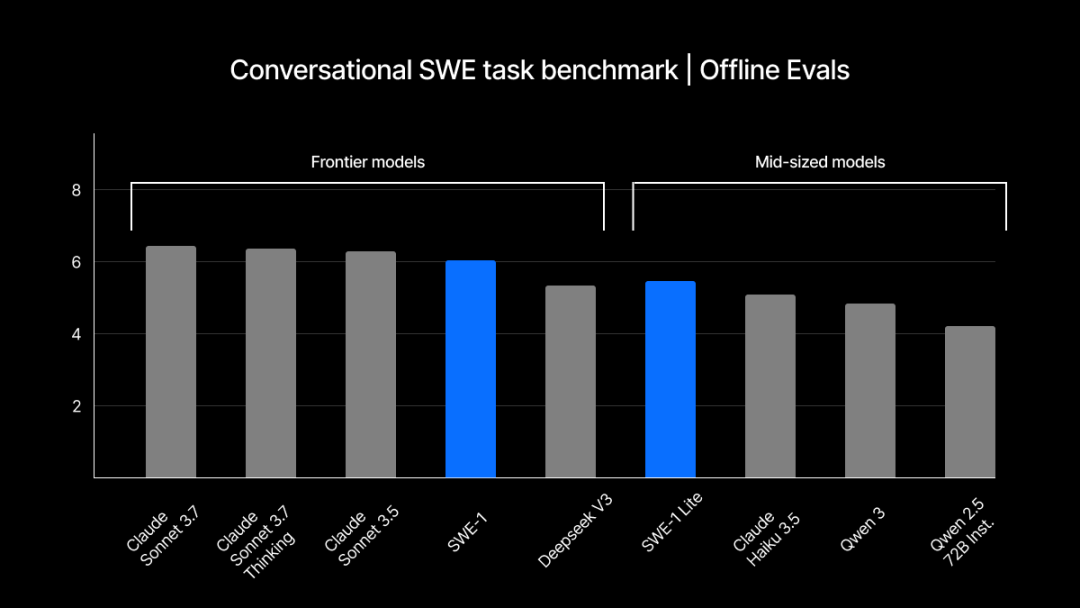

Windsurf Releases Self-Developed SWE-1 Model Series, Optimizing Software Engineering Processes: Windsurf launched its first model series optimized for software engineering, SWE-1, aiming to improve development efficiency by 99%. The series includes SWE-1 (tool-calling capabilities close to Claude 3.5 Sonnet, but at a lower cost), SWE-1-lite (high quality, replacing Cascade Base), and SWE-1-mini (small and fast, for low-latency scenarios). Its core innovation is the “Flow Awareness” system, where the AI shares an operational timeline with the user, enabling efficient collaboration and understanding of unfinished states (Source: WeChat, WeChat)

ChatGPT’s Memory Mechanism Reverse-Engineered, Revealing Three Memory Subsystems: Tech enthusiasts have analyzed the “chat history” memory feature that OpenAI introduced for ChatGPT and concluded that it may consist of three subsystems: the current conversation history, records of past conversations (retrieved via summarization and content search), and user insights (generated by analyzing multiple conversations, each with a confidence score). These mechanisms aim to provide a more personalized and efficient experience and appear to be implemented with techniques such as RAG and vector search. Although OpenAI says the feature enhances the user experience, community feedback is mixed, with some users reporting instability or bugs (Source: WeChat, QbitAI)

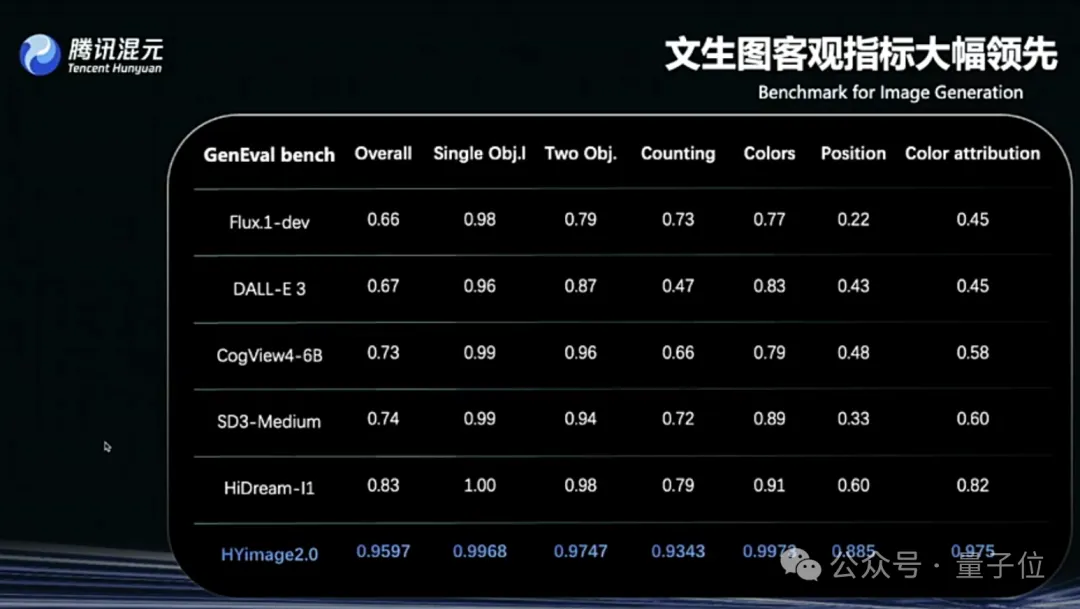

Tencent Hunyuan Image 2.0 Released, Supports Real-Time “Paint as You Speak”: Tencent Hunyuan launched the Hunyuan Image 2.0 model, achieving real-time text-to-image generation with millisecond-level response. As users type or speak a description, images are generated and adjusted in real time. The new model also supports a real-time drawing board, allowing users to generate images by combining hand-drawn sketches with text descriptions. The model shows significant improvements in realism, semantic adherence (it uses a multimodal large language model as its text encoder), and image codec compression rate, and it is post-trained with reinforcement learning (Source: QbitAI)

TII Releases Falcon-Edge BitNet Model Series and onebitllms Fine-tuning Library: TII introduced Falcon-Edge, a series of compact language models with 1B and 3B parameters, sized at only 600MB and 900MB respectively. These models use the BitNet architecture and can be restored to bfloat16 with almost no performance loss. Preliminary results show their performance surpasses other small models and is comparable to Qwen3-1.7B, but with only 1/4 of the memory footprint. The concurrently released onebitllms library is specifically for fine-tuning BitNet models (Source: Reddit r/LocalLLaMA, winglian)
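
As a back-of-the-envelope check on the “1/4 of the memory footprint” claim, the sketch below compares the stated Falcon-Edge sizes with a bfloat16 copy of Qwen3-1.7B. It assumes 2 bytes per parameter and a nominal 1.7 billion parameters, and the source does not say which Falcon-Edge model the comparison refers to, so both ratios are shown.

```python
BYTES_PER_BF16_PARAM = 2  # bfloat16 stores each weight in 16 bits

def bf16_size_gb(num_params):
    """Approximate weight size in GB (10^9 bytes) for a bfloat16 model."""
    return num_params * BYTES_PER_BF16_PARAM / 1e9

qwen_gb = bf16_size_gb(1.7e9)  # nominal parameter count, an assumption

# Falcon-Edge sizes as stated in the item above, in GB.
ratio_1b = 0.6 / qwen_gb
ratio_3b = 0.9 / qwen_gb

print(f"Qwen3-1.7B in bf16: ~{qwen_gb:.1f} GB")
print(f"Falcon-Edge 1B / Qwen3-1.7B: {ratio_1b:.2f}")
print(f"Falcon-Edge 3B / Qwen3-1.7B: {ratio_3b:.2f}")
```

Under these assumptions the 3B model lands close to one quarter of the bfloat16 baseline, which is consistent with the stated figure.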

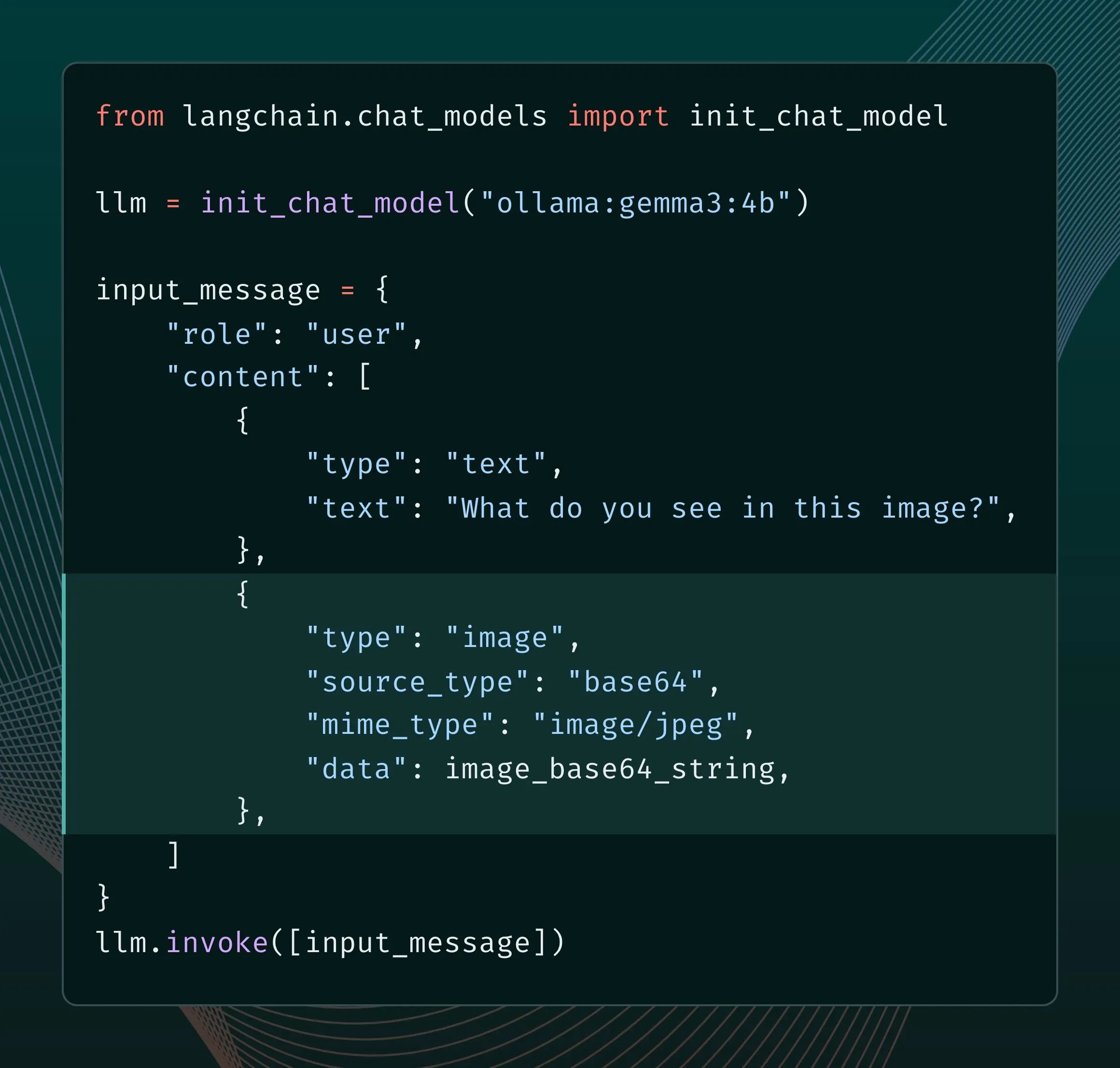

Ollama’s New Engine Enhances Multimodal Support: Ollama updated its engine to provide native support for multimodal models, allowing for model-specific optimizations and improved memory management. Users can try multimodal models like Llama 4 and Gemma 3 through LangChain integration. Google AI developers also released a guide on using Ollama and Gemma 3 for function calling to achieve features like real-time search (Source: LangChainAI, ollama)

Grok Adds Aspect Ratio Control for Image Generation: xAI’s Grok model now allows users to specify the desired aspect ratio when generating images, providing greater flexibility and control for image creation (Source: grok)

Google AI Studio Updates with New Generative Media Page and Usage Dashboard: Google’s ai.studio platform has received a series of updates, including a new landing page design, a built-in usage dashboard, and a new generative media (gen media) page, hinting at more related announcements at the upcoming I/O conference (Source: matvelloso)

LatitudeGames Releases New Model Harbinger-24B (New Wayfarer): LatitudeGames has released a new model named Harbinger-24B, codenamed New Wayfarer, on Hugging Face. The community has taken notice and is discussing why other models like fine-tuned Qwen3 32B or Llama 4 Scout were not chosen (Source: Reddit r/LocalLLaMA)

🧰 Tools

Adopt AI Secures $6M Funding, Aims to Reconstruct Software Interaction with AI Agents: Startup Adopt AI has secured $6 million in seed funding, dedicated to enabling traditional enterprise software to quickly integrate natural language interaction capabilities in a no-code manner through its Agent Builder and Agent Experience features. Its technology can automatically learn application structures and APIs, generating operations callable by natural language, and ensures data security through a Pass-through architecture. It aims to improve software adoption rates and efficiency, reducing enterprise costs (Source: WeChat)

ByteDance Volcengine Launches Mini AI Hardware Demo with High DIY Potential: Volcengine has released a demo of mini AI hardware and open-sourced its client/server code. The hardware supports a high degree of customization and can connect to Volcengine’s large models, Coze intelligent agents, as well as third-party large models compatible with the OpenAI API (like FastGPT) and various TTS voices (including MiniMax). Users can DIY applications like conversing with specific characters (e.g., a young Jay Chou, He Jiong) or creating AI voice customer service, offering rich AI interaction experiences (Source: WeChat)

Runway Releases Gen-4 References API, Empowering Developers to Build Image Generation Applications: Runway has opened up its popular Gen-4 References image generation model to developers via an API. The model is known for its versatility and flexibility, capable of generating new, stylistically consistent images based on reference images. The API release will enable developers to integrate this powerful image generation capability into their own applications and workflows (Source: c_valenzuelab)

Zencoder Launches Zen Agents, an AI Agent Platform Optimized for Coding: AI startup Zencoder (officially For Good AI Inc.) has released a cloud platform called Zen Agents, designed for creating AI agents optimized for coding tasks, aiming to improve the efficiency and quality of software development (Source: dl_weekly)

llmbasedos: A Minimalist Linux Distribution Based on MCP, Optimized for Local LLMs: A developer has built llmbasedos, a minimal distribution based on Arch Linux, designed to transform the local environment into a first-class citizen for LLM frontends (like Claude Desktop, VS Code). It exposes local capabilities (files, email, proxies, etc.) via the MCP (Model Context Protocol), supporting offline mode (with llama.cpp) or connecting to cloud models like GPT-4o and Claude, allowing developers to quickly add new features (Source: Reddit r/LocalLLaMA)

PDF Files Capable of Running LLMs and Linux Systems Draw Attention: Tech enthusiast Aiden Bai demonstrated “llm.pdf,” a project that runs small language models (like TinyStories, Pythia, TinyLLM) within a PDF file by compiling the model into JavaScript and leveraging PDF’s JS support. Commenters pointed out prior instances of running Linux systems in PDFs (via a RISC-V emulator). This reveals the potential of PDF as a dynamic content container but also sparks discussions about security and practicality (Source: WeChat)

OpenAI Codex CLI Tool Updated, Supports ChatGPT Login and New mini Model: The OpenAI developer team announced improvements to the Codex CLI tool, including support for logging in via ChatGPT accounts for quick connection to API organizations, and the addition of the codex-mini model, which is optimized for low-latency code Q&A and editing tasks (Source: openai, dotey)

SenseTime’s Large Model All-in-One Machine Recommended by IDC, Supports SenseNova, DeepSeek, and Other Models: In IDC’s “China AI Large Model All-in-One Machine Market Analysis and Brand Recommendation, 2025” report, SenseTime’s large model all-in-one machine was selected. The machine is based on SenseTime’s AI infrastructure, equipped with high-performance computing chips and inference acceleration engines. It supports SenseTime’s “SenseNova V6” and mainstream large models like DeepSeek, providing a full-stack, independently controllable solution, optimizing Total Cost of Ownership (TCO), and has been implemented in various industries including healthcare and finance (Source: QbitAI)

Open-Source Workflow Automation Tool n8n Adds Chinese Support: The popular open-source workflow automation tool n8n now supports a Chinese interface through a community-contributed localization pack. Users can download the localization file that matches their n8n version and, with simple Docker configuration changes, use n8n in Chinese, lowering the barrier to entry for Chinese-speaking users (Source: WeChat)

git-bug: Distributed, Offline-First Bug Tracker Embedded in Git: git-bug is an open-source tool that embeds issues, comments, etc., as objects within a Git repository (rather than plain files), enabling distributed, offline-first bug tracking. It supports syncing issues with platforms like GitHub and GitLab via bridges and offers CLI, TUI, and web interfaces (Source: GitHub Trending)

PyLate Integrates PLAID Index, Enhancing Model Benchmarking Efficiency on Large-Scale Datasets: Antoine Chaffin announced that PyLate (an ecosystem for training and inference with ColBERT models) has merged the PLAID index. This integration enables users to efficiently benchmark top models on their very large datasets, facilitating SOTA achievements on various retrieval leaderboards (Source: lateinteraction, tonywu_71)

Neon: Open-Source Serverless PostgreSQL Database: Neon is an open-source serverless PostgreSQL alternative that separates storage and compute to enable auto-scaling, database branching as code, and scale-to-zero features. The project is gaining attention on GitHub, offering a new option for AI and other application developers needing elastic, scalable database solutions (Source: GitHub Trending)

Unmute.sh: New AI Voice Chat Tool with Customizable Prompts and Voices: Unmute.sh is a newly launched AI voice chat tool that lets users customize prompts and choose among different voices, providing a more personalized and flexible voice interaction experience (Source: Reddit r/artificial)

📚 Learning

World’s First Multimodal Generalist Model Evaluation Framework General-Level and Benchmark General-Bench Released: Research accepted to ICML’25 (Spotlight) introduces General-Level, a novel evaluation framework for Multimodal Large Language Models (MLLMs), and its accompanying dataset, General-Bench. The framework defines a five-tier ranking system whose core focus is the model’s “Synergistic Generalization Effect” (Synergy): the ability of knowledge to transfer and enhance performance across different modalities or tasks. General-Bench is described as the largest and most comprehensive MLLM evaluation benchmark to date, containing over 700 tasks and more than 320,000 test samples and covering five major modalities (image, video, audio, 3D, and language) and 29 domains. The leaderboard shows that models like GPT-4V currently reach only Level-2 (no synergy), and no model has yet achieved Level-5 (full-modality complete synergy) (Source: WeChat)

Paper J1 Proposes Incentivizing Thinking in LLM-as-a-Judge via Reinforcement Learning: A new paper titled “J1: Incentivizing Thinking in LLM-as-a-Judge via RL” (arxiv:2505.10320) explores how to use Reinforcement Learning (RL) to incentivize Large Language Models as Judges (LLM-as-a-Judge) to engage in deeper “thinking” rather than just providing superficial judgments. This approach could enhance the accuracy and reliability of LLMs in evaluating complex tasks (Source: jaseweston)

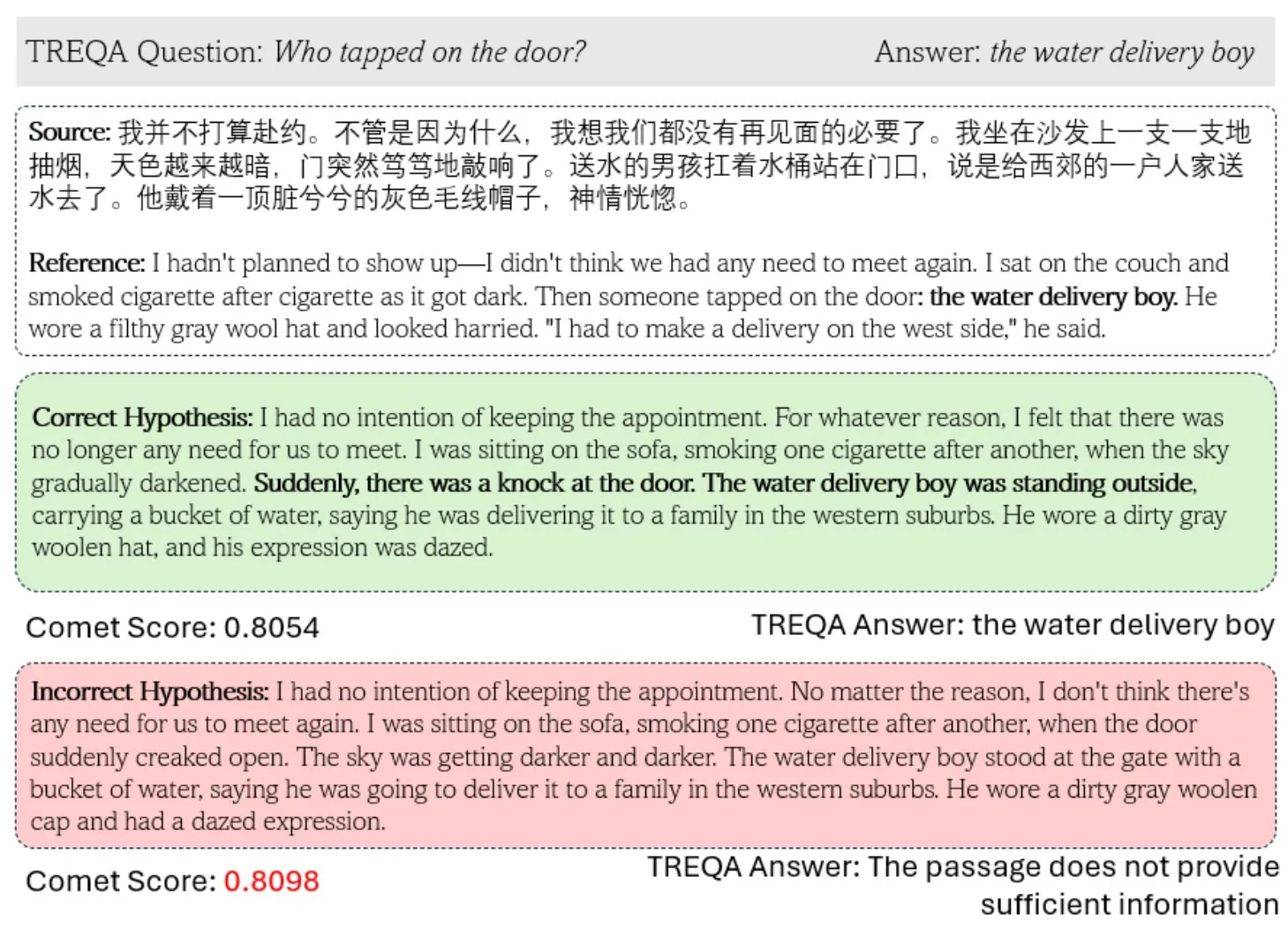

New Framework TREQA Utilizes LLMs to Evaluate Complex Text Translation Quality: Addressing the shortcomings of existing machine translation (MT) metrics in evaluating complex texts, researchers have proposed the TREQA framework. This framework uses Large Language Models (LLMs) to generate questions about the source and translated texts and compares the answers to these questions to assess whether key information has been preserved in the translation. This method aims to more comprehensively measure the quality of long-text translations (Source: gneubig)
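
The question-and-answer comparison described for TREQA can be sketched as follows. This is a minimal illustration, not the authors' implementation: `ask_questions` and `answer` stand in for LLM calls and are hypothetical callables, and exact string matching is a placeholder for whatever answer comparison the framework actually uses.

```python
def qa_preservation_score(source, translation, ask_questions, answer):
    """Share of questions whose answer is the same whether it is read
    from the source text or from the translation."""
    questions = ask_questions(source, translation)
    if not questions:
        return 0.0
    matches = sum(
        answer(source, q).strip().lower() == answer(translation, q).strip().lower()
        for q in questions
    )
    return matches / len(questions)

# Toy stand-ins for the LLM calls: a fixed question list and a lookup table.
facts = {
    ("SRC", "who?"): "Marie",
    ("SRC", "when?"): "1903",
    ("MT", "who?"): "Marie",
    ("MT", "when?"): "1908",  # the translation got the year wrong
}

score = qa_preservation_score(
    "SRC",
    "MT",
    ask_questions=lambda src, mt: ["who?", "when?"],
    answer=lambda text, question: facts[(text, question)],
)
print(score)  # 0.5
```

A question whose answer changes between source and translation points to a specific piece of lost information, which is easier to inspect than a single opaque score.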

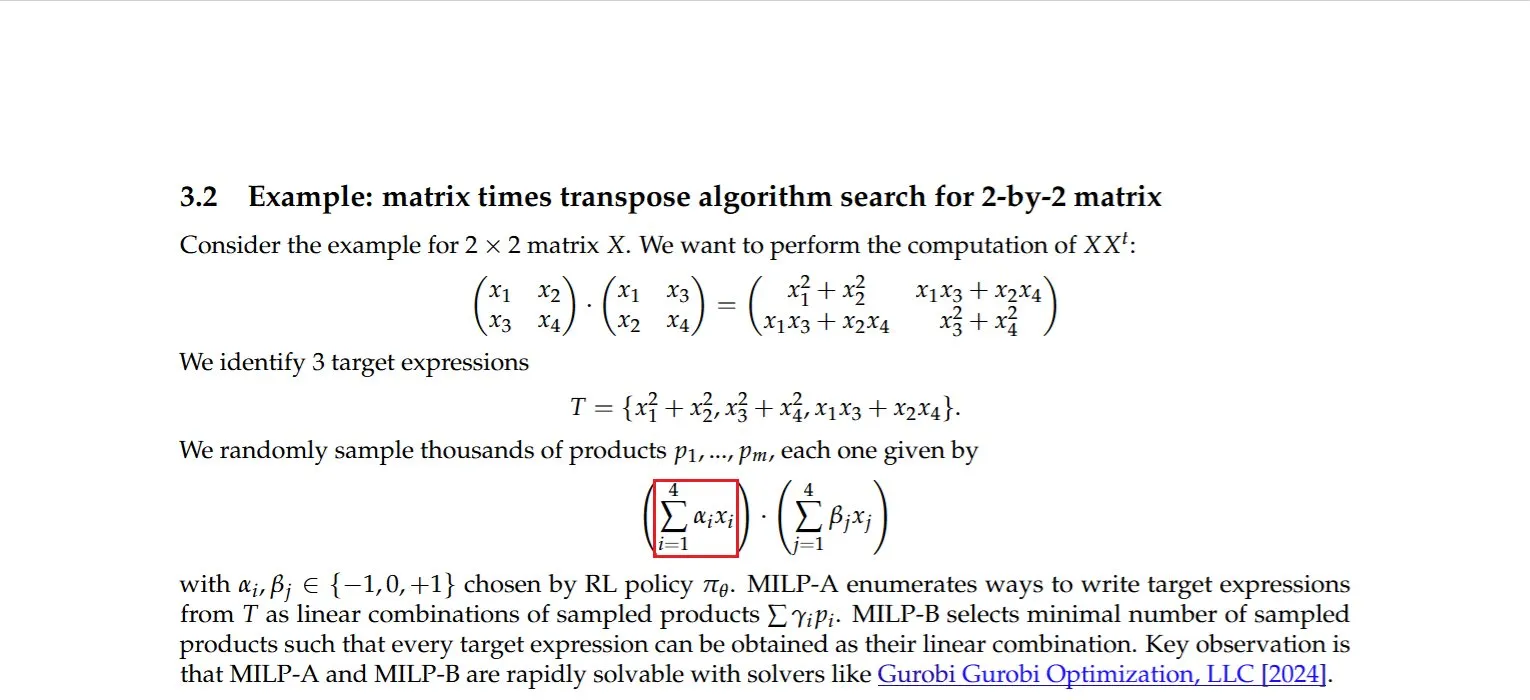

Research Discovers Efficient Method for Computing the Product of a Matrix and Its Transpose: Dmitry Rybin et al. have discovered a faster algorithm for computing the product of a matrix and its transpose (arxiv:2505.09814). Because this product is a common operation in data analysis, chip design, wireless communications, and LLM training, the result could benefit all of these fields. It also shows that there is still room for improvement even in the mature field of computational linear algebra (Source: teortaxesTex, Ar_Douillard)
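
To see why this product is a natural target for specialized algorithms, note that the result is always symmetric. The sketch below is not the paper's algorithm; it is only the textbook baseline, which computes the upper triangle and mirrors it, so roughly half of the dot products of a general matrix multiplication are needed.

```python
def gram_matrix(rows):
    """Compute X @ X^T for X given as a list of rows, using symmetry."""
    n = len(rows)
    out = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):  # upper triangle, including the diagonal
            dot = sum(a * b for a, b in zip(rows[i], rows[j]))
            out[i][j] = dot
            out[j][i] = dot  # mirror into the lower triangle
    return out

X = [[1, 2], [3, 4], [5, 6]]
G = gram_matrix(X)
print(G)  # [[5, 11, 17], [11, 25, 39], [17, 39, 61]]
```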

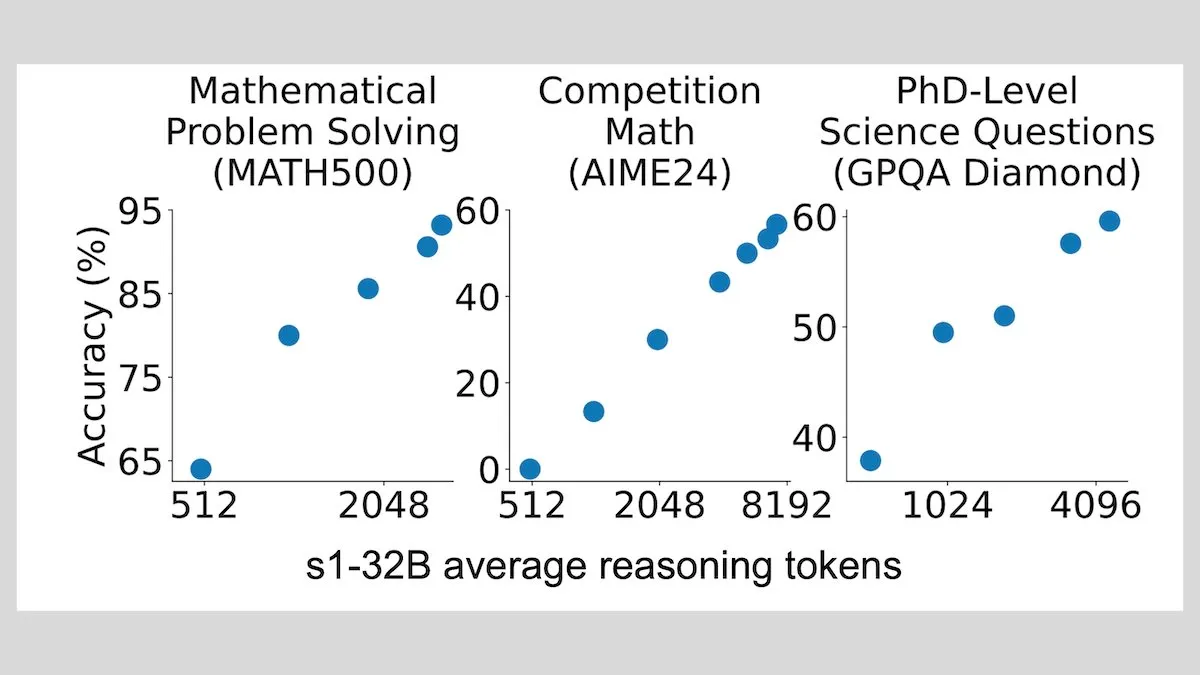

DeepLearningAI: Few-Shot Fine-Tuning Can Significantly Improve LLM Reasoning Ability: Research shows that fine-tuning a large language model on just 1,000 samples can significantly enhance its reasoning capabilities. The experimental model, s1, extends its reasoning process by appending the word “Wait” during inference, and it achieves good performance on benchmarks like AIME and MATH 500. This low-resource method suggests that advanced reasoning can be taught with minimal data, without requiring reinforcement learning (Source: DeepLearningAI)
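
The “Wait” trick can be sketched as a small decoding loop. This is an illustration under assumptions, not the s1 code: `generate_step` is a hypothetical callable wrapping a model, and the `</think>` end marker is an assumed delimiter that the source does not specify.

```python
def generate_with_wait(generate_step, prompt, extra_rounds=2, end_marker="</think>"):
    """Extend reasoning by replacing the end-of-thinking marker with "Wait".

    generate_step: hypothetical callable mapping the text so far to a
    continuation that ends with end_marker when the model wants to stop.
    """
    text = prompt
    for round_idx in range(extra_rounds + 1):
        continuation = generate_step(text)
        # On every round except the last, suppress the stop marker and
        # nudge the model to keep reasoning.
        if round_idx < extra_rounds and continuation.endswith(end_marker):
            continuation = continuation[: -len(end_marker)] + "Wait"
        text += continuation
    return text

def toy_model(text):
    """Stand-in for a real model: emits one numbered step, then tries to stop."""
    return f" step{text.count('step') + 1}.</think>"

out = generate_with_wait(toy_model, "Q: 2+2?", extra_rounds=2)
print(out)  # Q: 2+2? step1.Wait step2.Wait step3.</think>
```

Raising `extra_rounds` spends more inference compute on the same question, which is the knob this technique exposes.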

Hugging Face Launches Free MCP Course to Help Build Rich-Context AI Applications: Hugging Face, in collaboration with Anthropic, has launched a free course titled “MCP: Build Rich-Context AI Apps with Anthropic.” The course aims to help developers understand the MCP (Model Context Protocol) architecture, learn how to build and deploy MCP servers and compatible applications, thereby simplifying the integration of AI applications with tools and data sources. Over 3,000 students have already enrolled (Source: DeepLearningAI, huggingface, ClementDelangue)

awesome-gpt4o-images Project Collects Exciting GPT-4o Image Generation Cases: The GitHub project awesome-gpt4o-images, created by Jamez Bondos, has garnered over 5,700 stars in 33 days. The project collects and showcases excellent image examples generated using GPT-4o along with their prompts. It currently features nearly a hundred cases and plans to continue updating after organization and verification, providing valuable creative resources for the AIGC community (Source: dotey)

Yann LeCun Shares Self-Supervised Learning (SSL) Lecture: Yann LeCun shared the content of his lecture on Self-Supervised Learning (SSL). As an important machine learning paradigm, SSL aims to enable models to learn effective representations from unlabeled data, which is significant for reducing reliance on large-scale labeled data and improving model generalization capabilities (Source: ylecun)

Hugging Face Papers Forum Becomes a Quality Resource for AI Paper Screening: Dwarkesh Patel recommends the Hugging Face Papers forum as an excellent resource for screening the best AI papers from the past month. The platform provides researchers with a convenient channel to discover and discuss the latest AI research advancements (Source: dwarkesh_sp, huggingface)

ACL 2025 Acceptance Results Announced, Multiple Papers from Alibaba International AIB Team Selected: The top natural language processing conference, ACL 2025, has announced its acceptance results. This year saw a record high number of submissions, making competition fierce. Several papers from the Alibaba International AI Business team were accepted, with some achievements like Marco-o1 V2, Marco-Bench-IF, and HD-NDEs (Neural Differential Equations for Hallucination Detection) receiving high praise and being accepted as main conference long papers. This reflects Alibaba International’s continued investment in AI and the initial success of its talent development (Source: QbitAI)

dstack Releases Guide for Fast Interconnect Setup for Distributed Training: dstack has published a concise guide showing users who run distributed training on NVIDIA or AMD clusters how to set up fast interconnects with dstack. The guide aims to help users optimize network performance when scaling AI workloads in the cloud or on-premises (Source: algo_diver)

AssemblyAI Shares 10 Video Tips to Improve LLM Prompting: AssemblyAI shared 10 tips via a YouTube video to improve the effectiveness of prompting Large Language Models (LLMs), aiming to help users interact more effectively with LLMs to obtain desired outputs (Source: AssemblyAI)

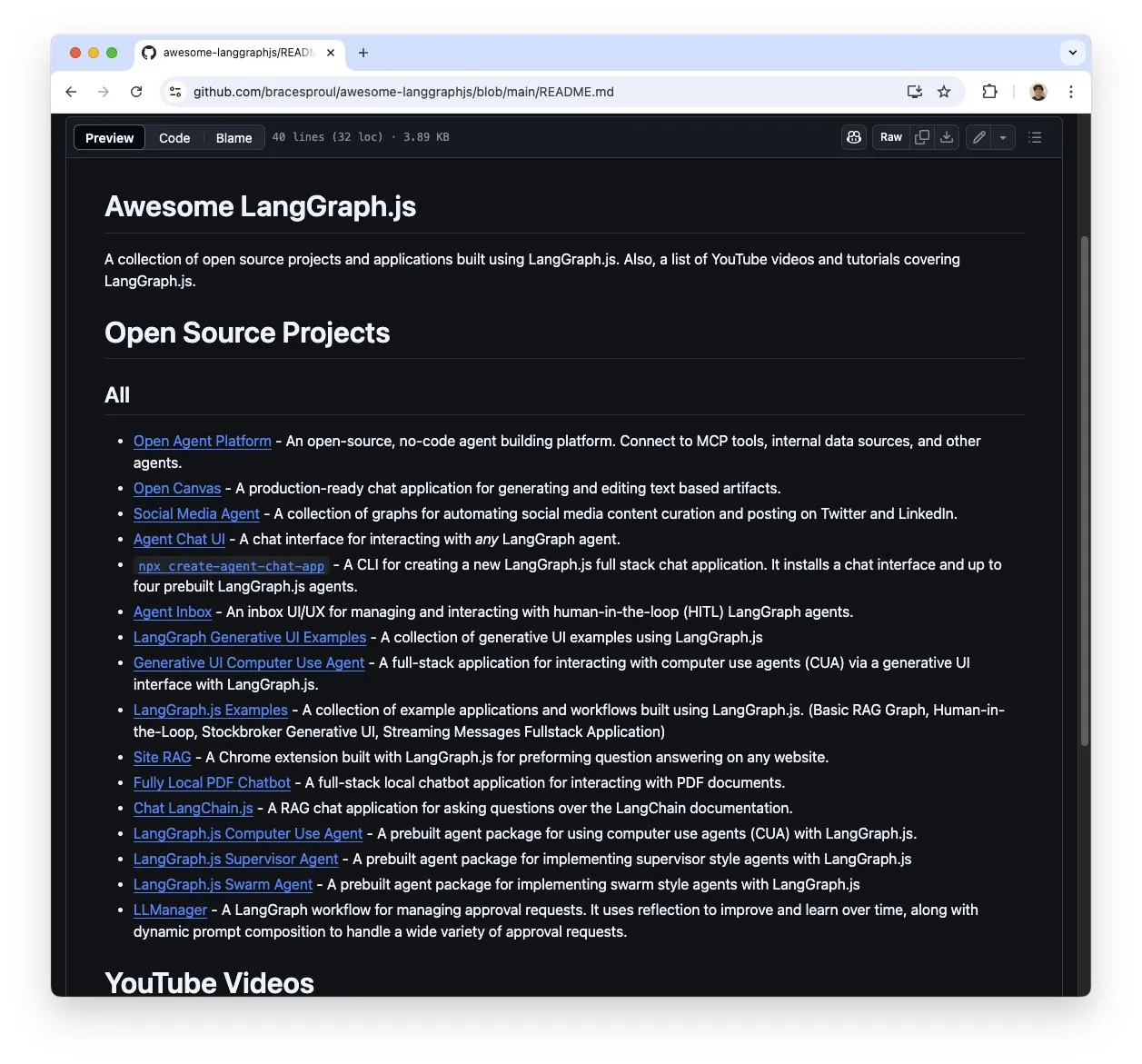

“awesome-langgraphjs” Collection of LangGraph.js Learning Resources Gains Attention: Brace created and maintains a GitHub repository called “awesome-langgraphjs,” which collects open-source projects and YouTube video tutorials built using LangGraph.js. This resource provides convenience for developers looking to learn and use LangGraph.js to build various applications, from multi-agent systems to full-stack chat applications (Source: LangChainAI)

💼 Business

Alibaba’s AI Strategy Transformation Shows Results, Cloud Business and AI Product Revenue Grow Significantly: Alibaba’s fiscal Q4 2025 financial report shows that, excluding certain businesses, overall revenue increased by 10% year-over-year. Cloud Intelligence business revenue grew by 18%, with AI-related product revenue maintaining triple-digit year-over-year growth for seven consecutive quarters. Alibaba treats AI as a core strategy and plans to invest over 380 billion RMB in the next three years to upgrade its cloud computing and AI infrastructure. Its open-source Qwen3 model has topped multiple global leaderboards, and the Qwen family has spawned over 100,000 derivative models, demonstrating its technological strength and the vitality of its open-source ecosystem. Alibaba is accelerating the adoption of AI in industries such as automotive, communications, and finance (Source: 36Kr)

Video Editing App Mojo Acquired by Dailymotion: Video editing app Mojo (@mojo_video_app) has been acquired by Dailymotion. Mojo’s video editing technology will be integrated into Dailymotion’s social app and B2B products, with both parties aiming to jointly build Europe’s next-generation social video platform (Source: ClementDelangue)

Cohere Acquires Ottogrid, Enhancing Enterprise AI Capabilities: AI company Cohere announced the acquisition of startup Ottogrid. The acquisition is expected to strengthen Cohere’s enterprise-grade AI solutions, though the terms of the deal and Ottogrid’s technological focus were not disclosed (Source: aidangomez, nickfrosst)

🌟 Community

AI Agents Spark Discussion on Work Transformation, Future May Resemble Real-Time Strategy Games: Will Depue proposed that future work might evolve into a model similar to StarCraft or Age of Empires, where humans command about 200 micro-agents to handle tasks, gather information, design systems, etc. Sam Altman retweeted in agreement. Fabian Stelzer jokingly called this “Zerg rush coded.” This viewpoint reflects the community’s imagination and discussion on how AI agents will reshape workflows and human-computer collaboration models (Source: willdepue, sama, fabianstelzer)

xAI’s Grok Bot Replies Spark Controversy, Prompt Allegedly Modified Without Authorization: xAI admitted that the prompt for its Grok response bot on the X platform was modified without authorization on the morning of May 14th, causing its analysis of certain events (such as those involving Trump) to appear unusual or inconsistent with mainstream information. The community is highly concerned about this matter, with figures like Clement Delangue calling for Grok to be open-sourced to increase transparency. Users like Colin Fraser are attempting to reverse-engineer the modification history of its system prompt by comparing Grok’s replies at different times (Source: ClementDelangue, menhguin, colin_fraser)

Rumors of Mass Departures from Meta Llama 4 Team Spark Community Concerns About Open-Source AI Future: Community reports suggest that about 80% of Meta’s Llama 4 team (11 of the original 14 members) have resigned and that the release of its flagship model Behemoth has been postponed. The news has drawn widespread attention, with industry insiders like Nathan Lambert expressing regret. Scaling01 commented that Meta might need a new Llama marketing director. Users like TeortaxesTex worry about the potential negative impact on open-source AI development and are even discussing whether China will become the last hope for open source (Source: teortaxesTex, Dorialexander, scaling01)

AI’s Application in Warfare and Ethical Issues Draw Attention: The Reddit community discussed AI’s application in warfare, noting its use for surveillance and locating combatants by analyzing information to provide military intelligence. The discussion mentioned that the U.S. military has used AI tools like DART since 1991. Users expressed concern about the lethal risks of AI weaponization and potential threats to humanity, and are paying attention to the development of relevant international treaties and measures. OpenAI’s usage guidelines also removed the clause prohibiting military use, prompting further reflection (Source: Reddit r/ArtificialInteligence)

Large Language Models Perform Poorly in CCPC Programming Contest, Exposing Current Limitations: In the 10th China Collegiate Programming Contest (CCPC) Finals, several well-known large language models, including ByteDance’s Seed-Thinking as well as o3/o4, Gemini 2.5 Pro, and DeepSeek R1, performed poorly, mostly solving only the “sign-in” (easiest) problem or scoring zero. The organizers explained that the models attempted the tasks fully autonomously, without human intervention. Community analysis suggests this exposes the current shortcomings of large models on highly novel and complex algorithmic problems, especially in non-agentic mode (i.e., without tool-assisted execution and debugging). This contrasts with OpenAI’s o3 achieving a gold medal in the IOI competition through agentic training (Source: WeChat)

DSPy Framework and “The Bitter Lesson” Spark Discussion, Emphasizing Standardized Design and Automated Prompting: Discussions related to DSPy emphasize that while AI scaling can bypass many engineering challenges (“The Bitter Lesson”), it cannot replace careful design of the core problem specification (requirements and information flow). However, scaling can elevate the level of abstraction at which problems are defined. Automated prompting (e.g., prompt optimizers) is seen as a way to leverage computational power in line with “The Bitter Lesson,” whereas manual prompting might contradict it by injecting human intuition rather than letting the model learn (Source: lateinteraction, lateinteraction)

Computational Cost of AI Agents’ Self-Checking/Tool Exploration During Inference Gains Attention: Paul Calcraft asked whether anyone is investing significant compute at inference time (e.g., $200+ to solve a single problem) so that AI agents can perform active self-checking, tool use, and exploratory workflows. He noted that agents like Devin and its competitors might do this for PR demos, but it is unclear whether anyone does so in scenarios that seek novel solutions (similar to FunSearch but less constrained) (Source: paul_cal)

AI-Assisted “Vibe Coding” Sparks Discussion: Tools like GitHub Copilot have made “Vibe Coding” (a programming style relying more on intuition and AI assistance than strict planning) possible, with even a 16-year-old student using Copilot to complete a school project. Community opinions on this phenomenon are mixed, with some viewing it as a new programming paradigm, while others emphasize the importance of fundamentals and formal methods (Source: Reddit r/ArtificialInteligence, nrehiew_)

Hugging Face Transformers Library Launches New Community Board: Hugging Face has opened a new community board for its core library, Transformers. It will be used for announcements, new feature introductions, roadmap updates, and welcomes users to ask questions and discuss issues related to library usage or models, aiming to strengthen interaction and support with developers (Source: TheZachMueller, ClementDelangue)

AI Developers Call for Top Conferences to Add “Findings” Paper Track: Given the surge in submissions to top AI conferences (e.g., about 25,000 for NeurIPS), Dan Roy and others are calling for a “Findings” paper track similar to the one at ACL. Such a track would give research that falls short of main-conference standards but still holds value a publication venue, thereby alleviating reviewer pressure and promoting broader academic exchange. Proposals include lightweight reviews focused on improving paper clarity (Source: AndrewLampinen)

💡 Other

AI-Powered Exoskeleton Helps Wheelchair Users Stand and Walk: An AI-powered exoskeleton device demonstrated its ability to help wheelchair users stand and walk again. Such technology integrates robotics, sensors, and AI algorithms, perceiving user intent and providing power assistance, bringing hope for rehabilitation and improved quality of life for individuals with mobility impairments (Source: Ronald_vanLoon)

Using AI to Visualize User Name Ideas: A small trend has emerged in Reddit and X communities where users create concept images based on their social media usernames with AI image generation tools (like ChatGPT’s built-in DALL-E 3). They share these imaginative creations, showcasing the fun applications of AI in personalized creative expression (Source: Reddit r/ChatGPT, Reddit r/ChatGPT)

Amazon Ads Leverages AI to Enhance Brand Marketing Efficiency for Going Global: Amazon Ads introduced the “World Screen Lab” concept, showcasing how it uses AI technology to empower Chinese brands going global. It expands brand reach through media matrices like Prime Video, lowers content production barriers with AI creative studios (such as video generation tools), and optimizes ad delivery and conversion through tools like Amazon DSP and Performance+. AI plays a full-funnel role from creative generation to performance measurement, aiming to help brand owners, especially SMEs, build global brands more efficiently (Source: 36Kr)