Keywords: AI healthcare, language models, reinforcement learning, AI reasoning, AI benchmarking, AI tools, AI business, AI ethics, OpenAI HealthBench, Meta Physics of Language Models, FlashInfer inference engine, Matrix-Game virtual world generation, INTELLECT-2 distributed training

🔥 Focus

OpenAI Releases HealthBench Benchmark, Showing Significant Gains in AI Medical Capabilities: OpenAI has released HealthBench, a medical AI evaluation benchmark built in collaboration with 262 physicians worldwide. In medical dialogue scenarios, the latest models (such as o3 and GPT-4.1) perform on par with the best results achieved by doctors assisted by AI, and substantially outperform doctors working without AI assistance (by a factor of roughly four). Smaller models also improved. The results highlight AI's potential in healthcare, and the benchmark aims to promote the safe and effective use of AI in clinical practice. (Source: Reddit r/ArtificialInteligence, BorisMPower, clefourrier)

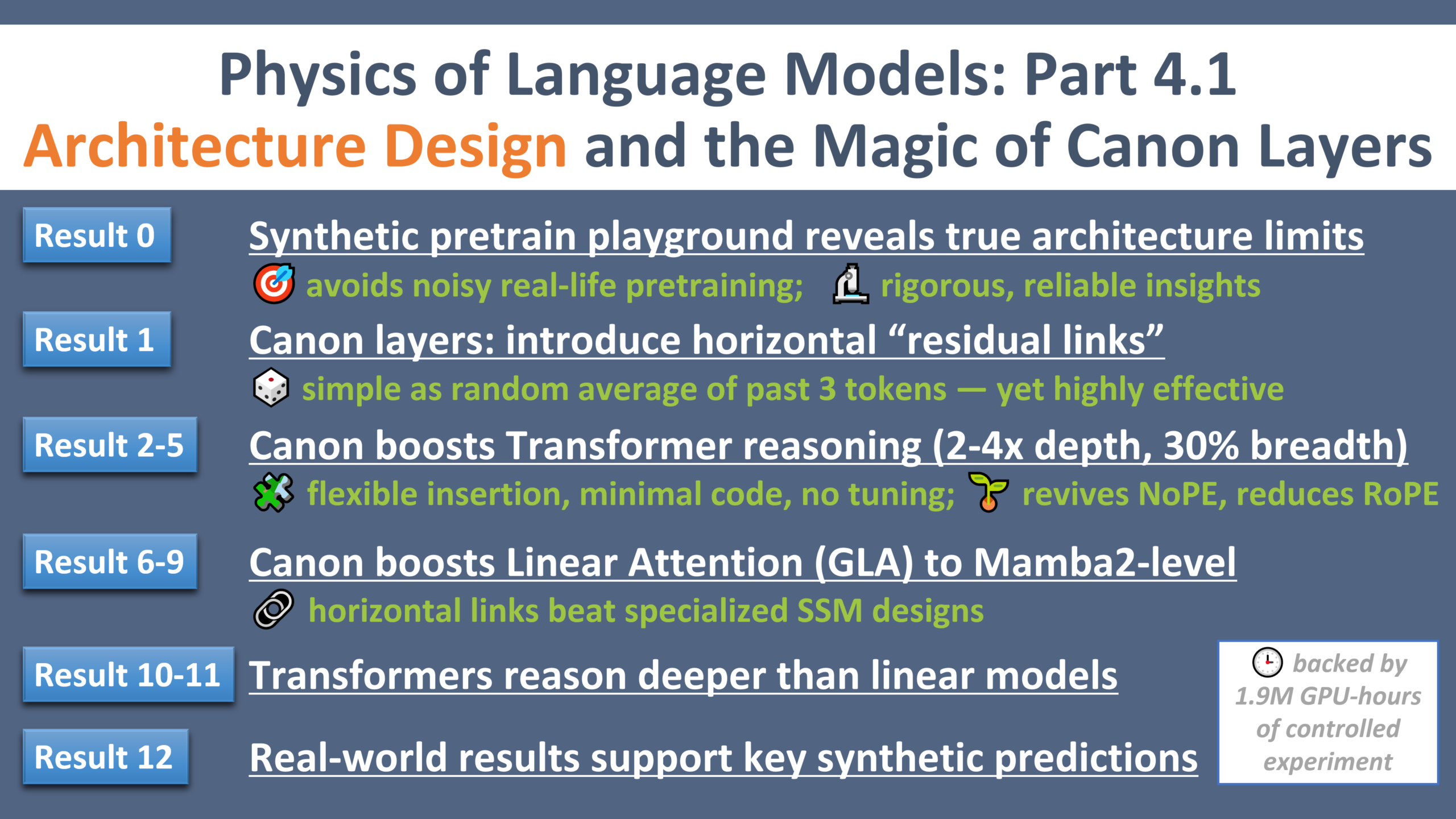

Meta Releases Part 4 of Physics of Language Models: Meta AI Research has released the fourth part of its “Physics of Language Models” research series. Using controlled synthetic pre-training environments, they discovered a lightweight component called “Canon layers” which, by adding “horizontal residual connections” between tokens, can significantly improve the reasoning and generalization abilities of various architectural models including Transformer, Mamba, and GLA. (Source: AIatMeta, arohan)
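To make "horizontal residual connections" concrete, here is a minimal sketch, assuming a Canon-style layer adds to each token's hidden vector a learned weighted sum of that token and the few tokens immediately before it (a short causal convolution). The window length and weight values are illustrative assumptions, not parameters taken from Meta's release.

```python
from typing import List

Vector = List[float]

def canon_layer(hidden: List[Vector], weights: List[float]) -> List[Vector]:
    """Causal horizontal residual: h'_t = h_t + sum_k weights[k] * h_{t-k}.

    weights[0] applies to the current token, weights[1] to the previous one,
    and so on; positions before the start of the sequence contribute nothing.
    """
    dim = len(hidden[0])
    out: List[Vector] = []
    for t, h_t in enumerate(hidden):
        mixed = [0.0] * dim
        for k, w in enumerate(weights):
            if t - k < 0:
                break  # no tokens before the start of the sequence
            prev = hidden[t - k]
            for d in range(dim):
                mixed[d] += w * prev[d]
        # Residual connection: keep the original vector and add the mix.
        out.append([h_t[d] + mixed[d] for d in range(dim)])
    return out

# Three 2-d token vectors, mixing the current and previous token.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(canon_layer(tokens, weights=[0.5, 0.25]))
# [[1.5, 0.0], [0.25, 1.5], [1.5, 1.75]]
```

Because the mixing only looks backward, a layer like this preserves causality and can be inserted into Transformer, Mamba, or GLA blocks alike, which is consistent with the architecture-agnostic results the item describes.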

FlashInfer Wins MLSys 2025 Best Paper and Gains NVIDIA Support: The FlashInfer paper, which describes an efficient and customizable attention engine for LLM inference serving, has won the MLSys 2025 Best Paper award. NVIDIA announced support for the project and will integrate top-performing LLM inference kernels, such as those from TensorRT-LLM, into FlashInfer for use by vLLM, SGLang, and other frameworks, with the aim of improving LLM inference efficiency and scalability. (Source: vllm_project, _philschmid)
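Inference attention engines typically store each request's key/value (KV) cache in fixed-size pages rather than one contiguous buffer, so memory can be shared across many concurrent requests. The plain-Python sketch below illustrates only that general idea for a single decode step; the page layout, the `page_table` list, and the function names are assumptions for illustration and are not FlashInfer's API or its CUDA kernels.

```python
import math
from typing import List

Vector = List[float]

def gather_paged(pages: List[List[Vector]], page_table: List[int], seq_len: int) -> List[Vector]:
    """Read one request's first seq_len cached vectors by following its page table."""
    out: List[Vector] = []
    for page_id in page_table:
        for vec in pages[page_id]:
            if len(out) == seq_len:
                return out  # the last page may be only partly filled
            out.append(vec)
    return out

def decode_attention(q: Vector, k_pages: List[List[Vector]], v_pages: List[List[Vector]],
                     page_table: List[int], seq_len: int) -> Vector:
    """Softmax(q . k / sqrt(d))-weighted sum of values for one new query token."""
    keys = gather_paged(k_pages, page_table, seq_len)
    values = gather_paged(v_pages, page_table, seq_len)
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    peak = max(scores)  # subtract the max score for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(len(values[0]))]

# Two pages of size 2; this request's three tokens live in page 1, then page 0.
k_pages = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
v_pages = [[[1.0, 0.0], [3.0, 0.0]], [[5.0, 2.0], [7.0, 2.0]]]
print(decode_attention([1.0, 0.0], k_pages, v_pages, page_table=[1, 0], seq_len=3))
```

Production engines fuse the gather and the softmax into a single GPU kernel; the sketch separates them only for readability.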

Kunlun Tech Releases Matrix-Game Interactive World Generation Engine: Kunlun Tech has launched Matrix-Game, an interactive engine capable of generating and controlling virtual worlds via text commands. It supports generating various scenes like deserts and forests and enables smooth action control (forward, jump, attack) and 360° perspective switching. This technology is expected to accelerate game development, embodied AI training, and metaverse content production. (Source: WeChat)

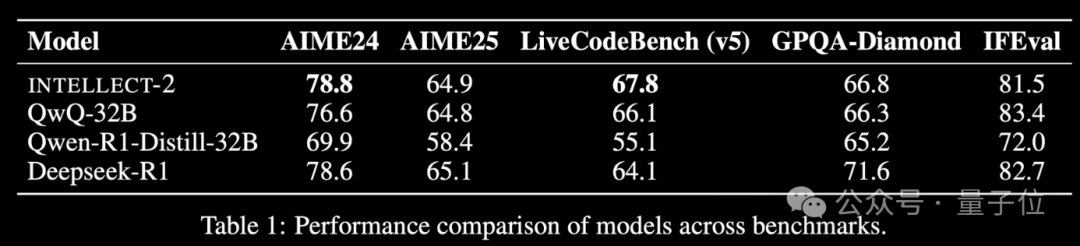

Prime Intellect Releases INTELLECT-2 Distributed RL Training Model: Prime Intellect has released INTELLECT-2, which it claims is the first model trained with distributed reinforcement learning on idle computing resources pooled from around the world, with performance comparable to DeepSeek-R1. The project aims to reduce RL training costs and break reliance on centralized computing power, and it has received investment from notable figures such as Karpathy and Tri Dao. Its core components (PRIME-RL, SHARDCAST, TOPLOC, Protocol Testnet) have been open-sourced. (Source: 36氪)

Reinforcement Learning Pioneers Andrew Barto and Richard Sutton Win Turing Award: Andrew Barto and Richard Sutton have been awarded the Turing Award for their foundational contributions to the field of reinforcement learning, including temporal difference learning. Their work has had a profound impact on AI and is reflected in projects like AlphaGo. The duo plans to use part of the prize money to support young scientists’ research freedom and establish graduate scholarships. (Source: WeChat)
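Temporal-difference (TD) learning updates a value estimate toward a bootstrapped target, the immediate reward plus the current estimate of the next state's value, instead of waiting for an episode's final return. A minimal tabular TD(0) sketch follows; the state names, rewards, step size `alpha`, and discount `gamma` are arbitrary choices for illustration.

```python
from typing import Dict, List, Tuple

Transition = Tuple[str, float, str]  # (state, reward, next_state)

def td0(episodes: List[List[Transition]], alpha: float = 0.5, gamma: float = 1.0,
        terminal: str = "end") -> Dict[str, float]:
    """Tabular TD(0): V(s) <- V(s) + alpha * (r + gamma * V(s') - V(s))."""
    values: Dict[str, float] = {}
    for episode in episodes:
        for state, reward, next_state in episode:
            v_next = 0.0 if next_state == terminal else values.get(next_state, 0.0)
            td_error = reward + gamma * v_next - values.get(state, 0.0)
            values[state] = values.get(state, 0.0) + alpha * td_error
    return values

# A two-step chain A -> B -> end with reward 1 on the last step, replayed twice
# so the reward propagates back from B to A.
episode = [("A", 0.0, "B"), ("B", 1.0, "end")]
print(td0([episode, episode]))
# {'A': 0.25, 'B': 0.75}
```

After the first episode only B has learned anything; on the second, A's update bootstraps from B's new estimate, which is the "learning a guess from a guess" behavior that distinguishes TD methods from Monte Carlo ones.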

New Pope Chooses Name with AI Revolution in Mind; AI Czar Predicts Million-Fold AI Growth in Four Years: Newly elected Pope Leo XIV said he chose his papal name partly in response to the challenges that the “new industrial revolution” brought by AI poses to human dignity, justice, and labor, signaling the Church's focus on AI ethics. David Sacks, the first US “AI and Crypto Czar,” predicted that exponential advances in models, chips, and computing power will increase AI capabilities a million-fold within four years, and stressed the importance of understanding exponential growth and its disruptive impact. (Source: WeChat)
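The arithmetic behind the forecast can be checked directly: a million-fold gain over four years implies roughly a 31.6x gain per year. Splitting that evenly across the three factors named (models, chips, compute) is an assumption made here for illustration, not a breakdown attributed to Sacks.

```latex
G_{\text{year}} = \left(10^{6}\right)^{1/4} = 10^{1.5} \approx 31.6,
\qquad
g_{\text{factor}} = G_{\text{year}}^{1/3} = 10^{0.5} \approx 3.16,
\qquad
\left(g_{\text{factor}}^{3}\right)^{4} = 10^{6}
```

In other words, each of the three factors would need to improve only about 3x per year for their product to compound to a million-fold over four years.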

🎯 Trends

Alibaba Qwen3 Technical Report Reveals Training Details: Alibaba Cloud has released the technical report for Qwen3, detailing its training process on 36 trillion tokens, including large-scale data investment for smaller models and multi-stage post-training (e.g., CoT, RL). The model performs well on benchmarks like MathArena, but community discussions also noted bugs in its chat template and that its performance on non-reasoning tasks is not as good as Mistral Medium 3. (Source: cognitivecompai, rishdotblog, Dorialexander, teortaxesTex, qtnx_, nrehiew_, Reddit r/LocalLLaMA)

US Congress Considers Ten-Year Pause on State-Level AI Regulation: A draft text from the US House Energy and Commerce Committee proposes a ten-year pause on state-level AI regulation, to prevent a patchwork of state laws from hindering AI innovation. The proposal has received support from some state officials who believe AI should be regulated at the federal level. (Source: ylecun, pmddomingos, jd_pressman, Reddit r/artificial)

Coding Assistants Evolving Towards “Always-On” Agents: Coding assistants are transitioning from pair programmers requiring significant prompting and human assistance to “always-on” agents that continuously search for bugs and vulnerabilities in the background. (Source: steph_palazzolo)

New Concepts Emerge in AI Field: Several new concepts are appearing in AI research, including SakanaAI’s “Continuous Thought Machines” (emphasizing the temporal element), Salesforce’s “Elastic Reasoning” (separating thinking and solving phases), Alibaba’s “ZeroSearch” (using LLMs as simulated search engines), and Tsinghua University’s “Absolute Zero” (learning entirely through self-play). (Source: TheTuringPost)

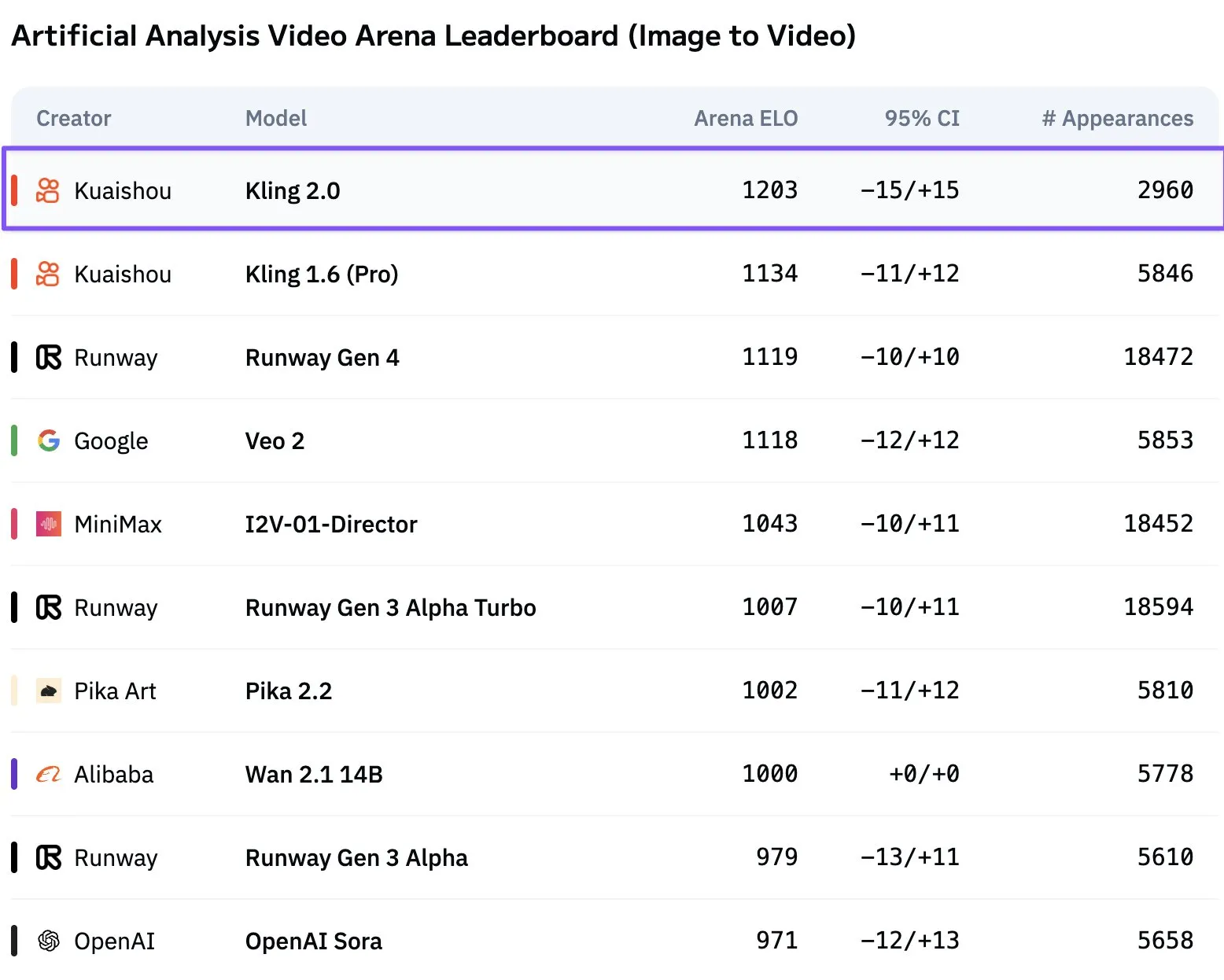
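Of these, Elastic Reasoning's split between a thinking phase and a solving phase is the easiest to illustrate. A minimal sketch, assuming each phase gets its own token budget and that thinking which runs past its budget is cut off and explicitly closed with a `</think>` marker, so the solution still receives its full budget. The `next_token` callable stands in for a model, and the function names, markers, and toy model are illustrative assumptions rather than Salesforce's code.

```python
from typing import Callable, List

NextToken = Callable[[List[str]], str]

def generate_phase(next_token: NextToken, context: List[str],
                   budget: int, stop: str) -> List[str]:
    """Generate tokens until `stop` is produced or `budget` tokens are used."""
    out: List[str] = []
    while len(out) < budget:
        tok = next_token(context + out)
        if tok == stop:
            break
        out.append(tok)
    return out

def elastic_generate(next_token: NextToken, prompt: List[str],
                     think_budget: int, solve_budget: int) -> List[str]:
    ctx = prompt + ["<think>"]
    thinking = generate_phase(next_token, ctx, think_budget, "</think>")
    # Whether thinking ended on its own or hit its budget, close it explicitly
    # so the solution phase starts with its own, separate budget.
    ctx = ctx + thinking + ["</think>"]
    return ctx + generate_phase(next_token, ctx, solve_budget, "<eos>")

def toy_model(context: List[str]) -> str:
    """Hypothetical model that would think forever, then answers once thinking is closed."""
    if "</think>" in context:
        answered = len(context) - context.index("</think>") - 1
        return ["42", "<eos>"][min(answered, 1)]
    return "hmm"

print(elastic_generate(toy_model, ["Q"], think_budget=3, solve_budget=5))
# ['Q', '<think>', 'hmm', 'hmm', 'hmm', '</think>', '42']
```

Without the separate budget, a model that keeps thinking would consume the whole allowance and never answer; reserving tokens for the solution is what keeps the output usable under tight limits.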

Kuaishou Kling 2.0 Video Model Tops Rankings: Kuaishou’s Kling 2.0 has surpassed Veo 2 and Runway Gen 4 to become the leading image-to-video model on Artificial Analysis’s video generation leaderboard. Community users have acknowledged its performance. (Source: scaling01)

OpenAI GPT-4.1 Leads Claude 3.5 Sonnet in User Preference Tests: User preference tests show that OpenAI’s GPT-4.1 (and even 4.1-mini) leads Claude 3.5 Sonnet in user experience. (Source: imjaredz)

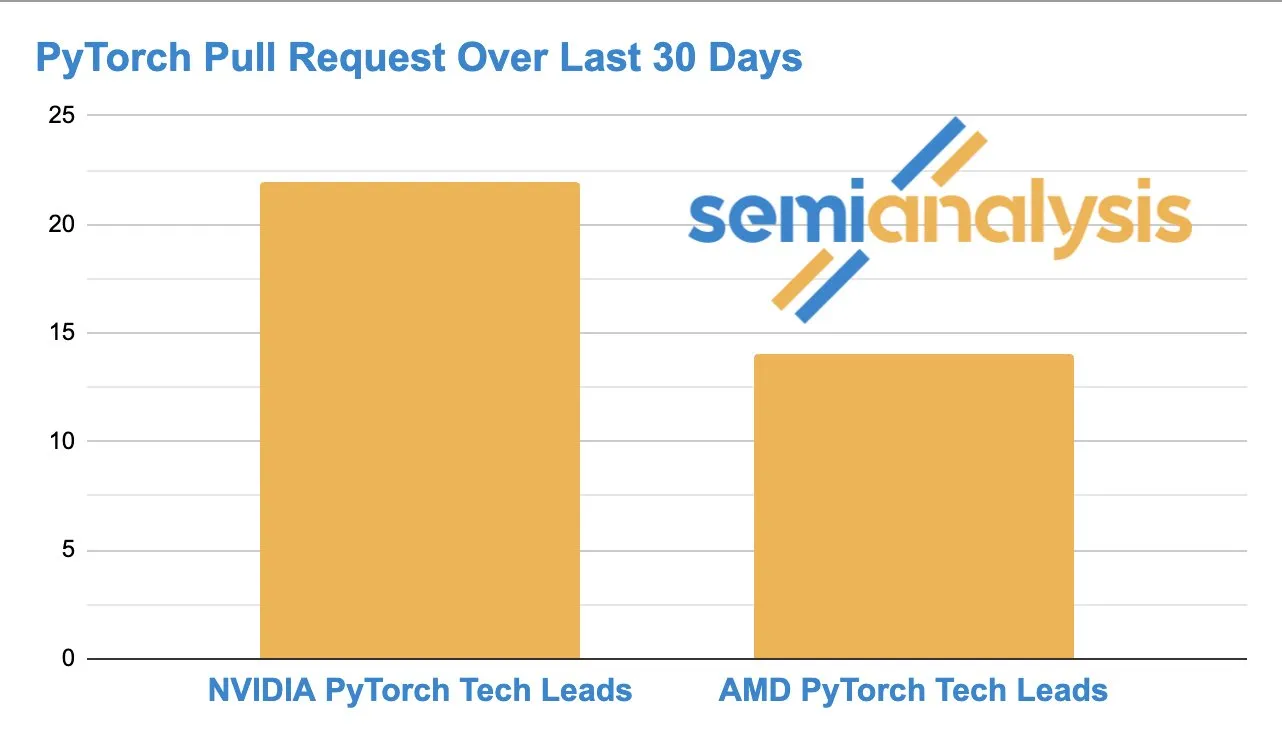

AMD and NVIDIA Competition Intensifies in AI Software Development: GitHub activity shows that the number of pull requests submitted by AMD's ROCm PyTorch team is approaching that of NVIDIA's PyTorch tech lead, suggesting intensifying competition in the AI hardware and low-level software stack. (Source: zacharynado)

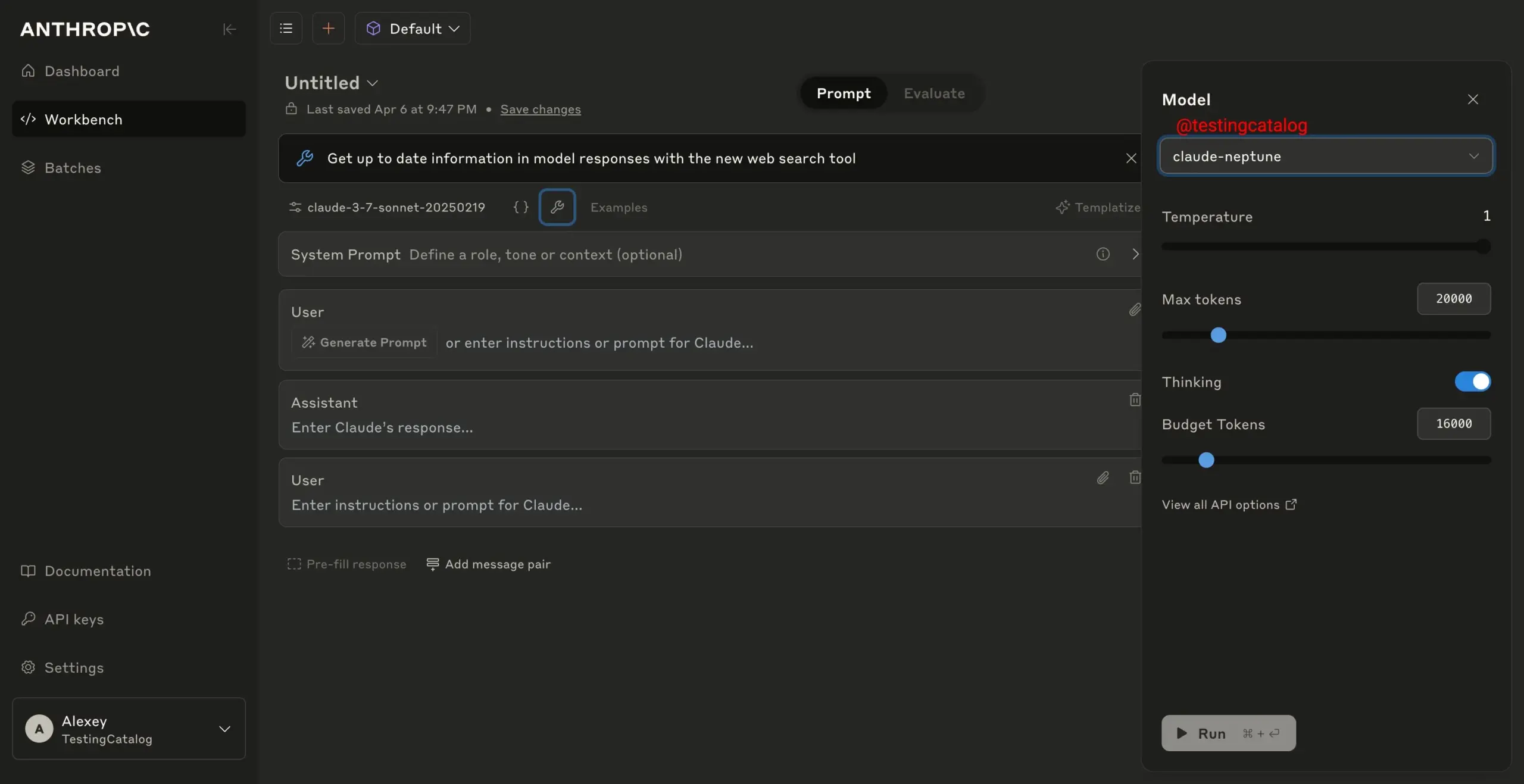

Anthropic’s New Model “claude-neptune” Undergoing Safety Testing: Reports suggest that Anthropic is conducting safety tests on its new model “claude-neptune”, potentially signaling an upcoming model release. (Source: scaling01)

Gemini 2.5 Pro Free API Access Temporarily Paused Due to High Demand: Due to immense demand, Google has temporarily paused access to the free tier of Gemini 2.5 Pro via API to ensure developers can continue scaling their applications. The model remains free to use in Google AI Studio. (Source: matvelloso)

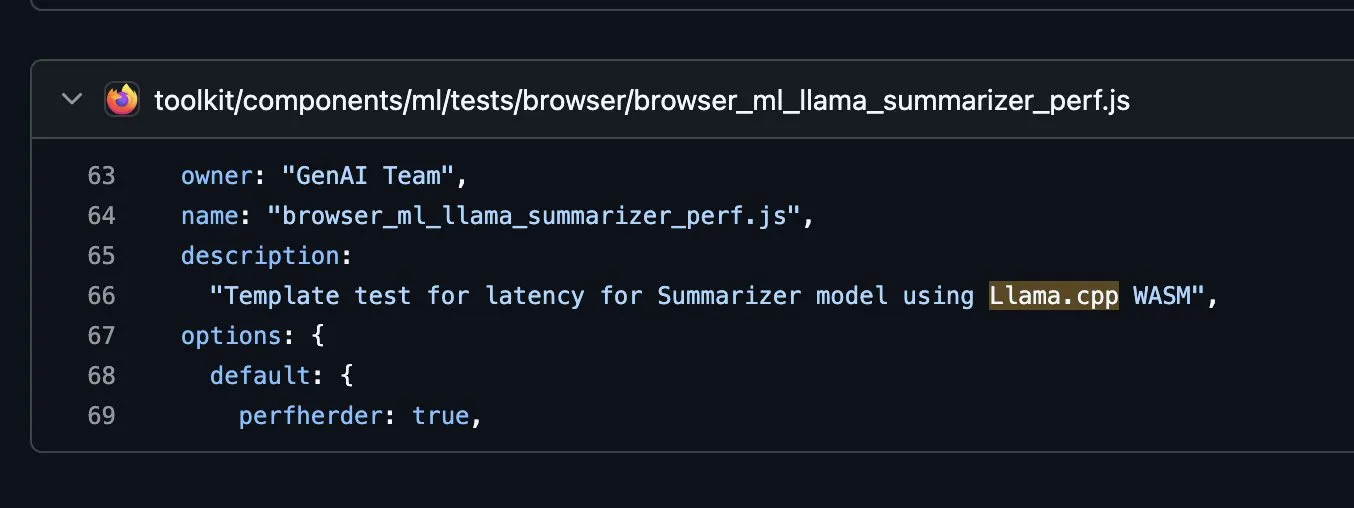

Firefox Explores Integrating llama.cpp in WASM: Firefox is experimenting, in a GitHub project, with compiling the llama.cpp library to WebAssembly (WASM), which could eventually let users run local LLMs directly in the browser. (Source: ClementDelangue, ggerganov)

AMD Ryzen AI Max+ PRO 395 LLM Benchmarks: LLM benchmarks for the AMD Ryzen AI Max+ PRO 395 on Linux suggest its performance falls below that of the RTX 4060 Ti. Community discussion points out that the tests may reflect only CPU performance, and covers its iGPU performance, its VRAM advantages, and current compatibility issues with Intel GPUs regarding FP8, Flash Attention, and memory allocation. (Source: Reddit r/LocalLLaMA)

🧰 Tools

Minions Secure Chat Open Protocol Released for Encrypted Cloud LLM Chat: An open-source protocol called “Minions Secure Chat” has been released, aiming to enable end-to-end encrypted cloud LLM chat with very low latency overhead (<1%), even for models with 30B+ parameters. The protocol ensures cloud providers cannot view message content, with inference occurring in secure GPU enclaves to guarantee confidentiality. (Source: realDanFu, ollama, rebeccatqian, code_star)

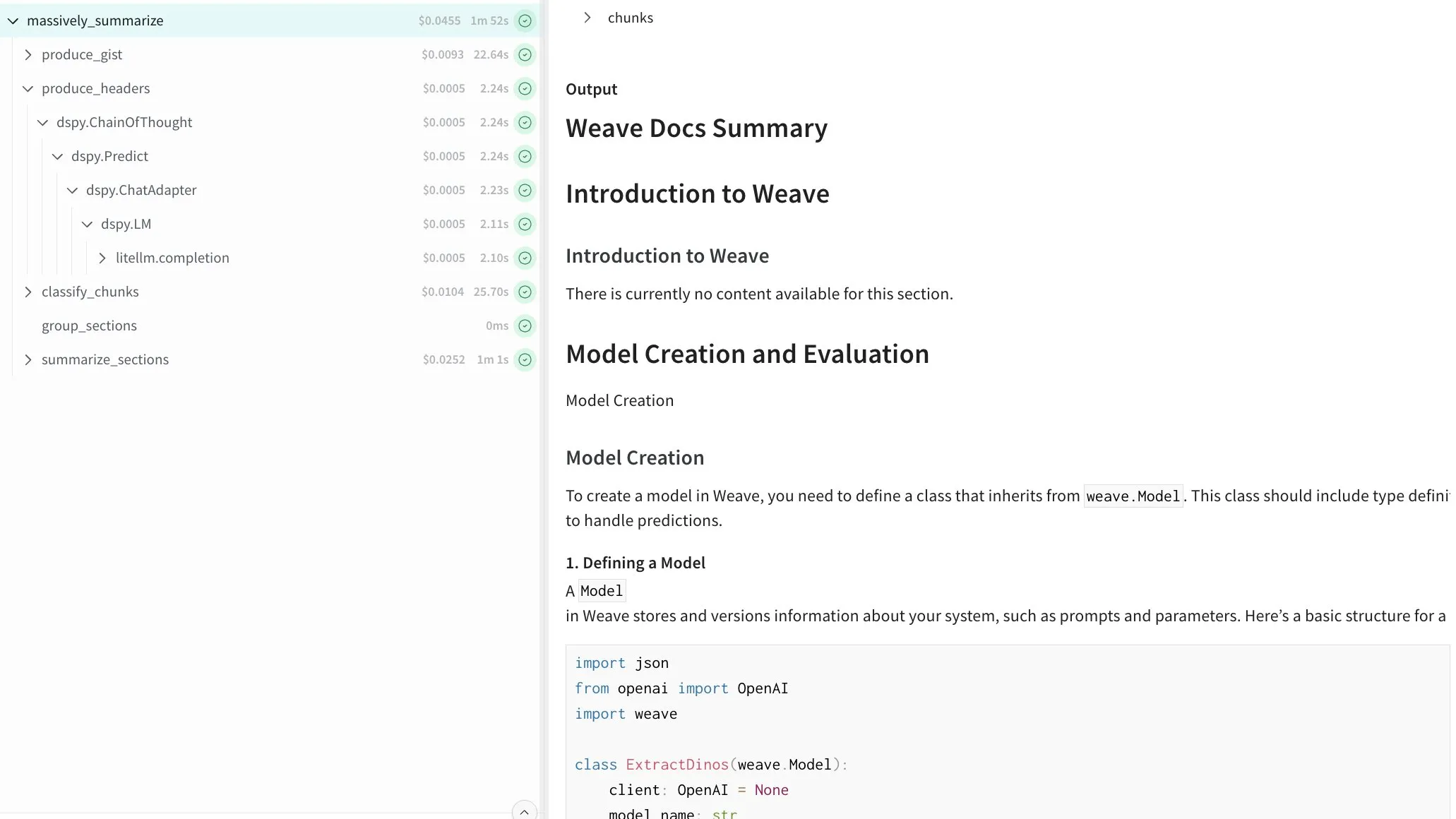

DSPy Enables Recursive Summarization of Arbitrarily Long Text: A program built using DSPy was demonstrated, capable of recursively summarizing text of arbitrary length. The program achieves this by building a table of contents, chunking the content, and processing sections in parallel, offering a general solution for handling long documents. (Source: lateinteraction)
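The recursive strategy can be sketched without DSPy itself. In this sketch, assuming a stand-in `summarize` function where a real program would call an LLM, the text is split into chunks, the chunks are summarized in parallel, and the joined summaries are summarized again until they fit a length limit. The table-of-contents step is omitted, and all names and limits are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

def summarize(text: str, max_chars: int) -> str:
    """Placeholder for an LLM call: keeps only the first sentence, capped in length."""
    return text.split(". ")[0][:max_chars]

def chunk(text: str, size: int) -> List[str]:
    return [text[i:i + size] for i in range(0, len(text), size)]

def recursive_summary(text: str, limit: int = 200, chunk_size: int = 1000) -> str:
    """Summarize chunks in parallel, then recurse on the joined summaries."""
    if len(text) <= limit:
        return text
    with ThreadPoolExecutor() as pool:
        parts = list(pool.map(lambda c: summarize(c, limit // 2), chunk(text, chunk_size)))
    merged = " ".join(parts)
    if len(merged) >= len(text):  # guard against a summarizer that fails to shrink the text
        return merged[:limit]
    return recursive_summary(merged, limit, chunk_size)

document = " ".join(f"Section {i} starts here. More detail follows." for i in range(100))
print(recursive_summary(document))
```

Because each level only has to fit its chunks into the model's context, input length is bounded only by how many levels of recursion are acceptable; the guard keeps the recursion from looping if a summarizer returns output that is not shorter.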

Runway AI Video Generation Adds Cinematic Controls and Reference Features: Runway has introduced new features in its Gen-4 video generation model, including over 20 cinematic camera controls, multi-element referencing and blending, and smoother handling of complex motion. Enhanced referencing also improves the precision of object placement. (Source: c_valenzuelab, TomLikesRobots)

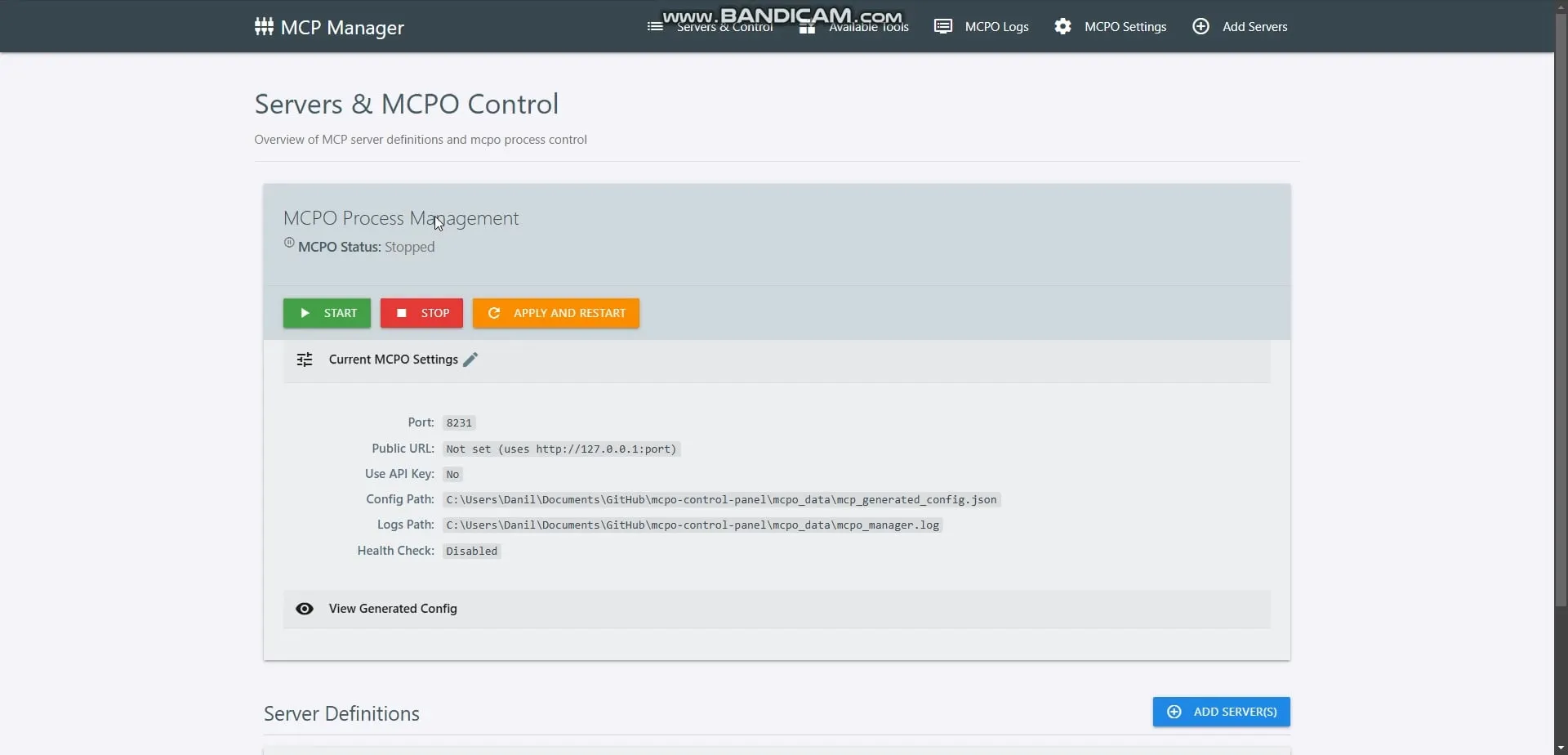

OpenMemory MCP Launches, Providing Local Private Memory for AI Agents: OpenMemory MCP has been released, a private, local, persistent memory layer designed for MCP-compatible AI clients (like Cursor, Claude Desktop). It allows different AI tools to securely and privately read and write to shared memory, running entirely on the user’s machine without relying on cloud services. (Source: omarsar0)
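The core idea, several local AI clients sharing one on-disk memory store, can be sketched with Python's built-in `sqlite3` module. This is not OpenMemory MCP's schema or API: the table layout, the `client` column, the client labels, and the helper names are assumptions, and a real MCP server would expose these operations as MCP tools rather than plain functions.

```python
import sqlite3
from typing import List

def open_store(path: str = "memory.db") -> sqlite3.Connection:
    """Open (or create) a local SQLite file that every client on the machine can share."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS memories ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " client TEXT NOT NULL,"
        " content TEXT NOT NULL)"
    )
    return conn

def add_memory(conn: sqlite3.Connection, client: str, content: str) -> None:
    with conn:  # commits the insert, or rolls back on error
        conn.execute("INSERT INTO memories (client, content) VALUES (?, ?)", (client, content))

def search_memories(conn: sqlite3.Connection, keyword: str) -> List[str]:
    rows = conn.execute(
        "SELECT content FROM memories WHERE content LIKE ? ORDER BY id",
        (f"%{keyword}%",),
    )
    return [content for (content,) in rows]

# One tool writes a memory; another can read it back from the same store.
store = open_store(":memory:")  # in-memory database for this demo only
add_memory(store, "cursor", "Project uses Python 3.11 and pytest")
add_memory(store, "claude-desktop", "User prefers concise answers")
print(search_memories(store, "pytest"))
# ['Project uses Python 3.11 and pytest']
```

Keeping the store in a local file is what provides the privacy property described above: nothing leaves the machine unless a client explicitly sends it.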

HeyGen Launches Voice Mirroring Feature: HeyGen has released its Voice Mirroring feature, allowing users to replicate specific voice styles or characteristics in AI-generated audio. (Source: Ronald_vanLoon)

Step1X-3D Open-Source Framework Released for Controllable 3D Asset Generation: StepFun AI has released Step1X-3D on Hugging Face, an open-source framework for generating high-fidelity, controllable 3D assets with textures. (Source: huggingface, _akhaliq, reach_vb)

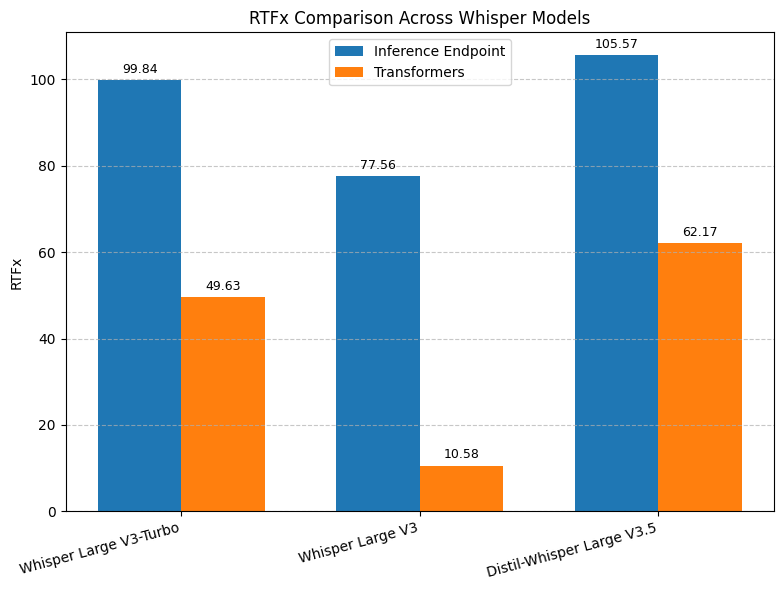

Hugging Face Whisper Transcription Speed Improvement: Hugging Face has launched a Whisper transcription endpoint built on vLLM and optimized for NVIDIA GPUs, offering up to 8x faster transcription at lower cost. (Source: ClementDelangue, huggingface, vllm_project)

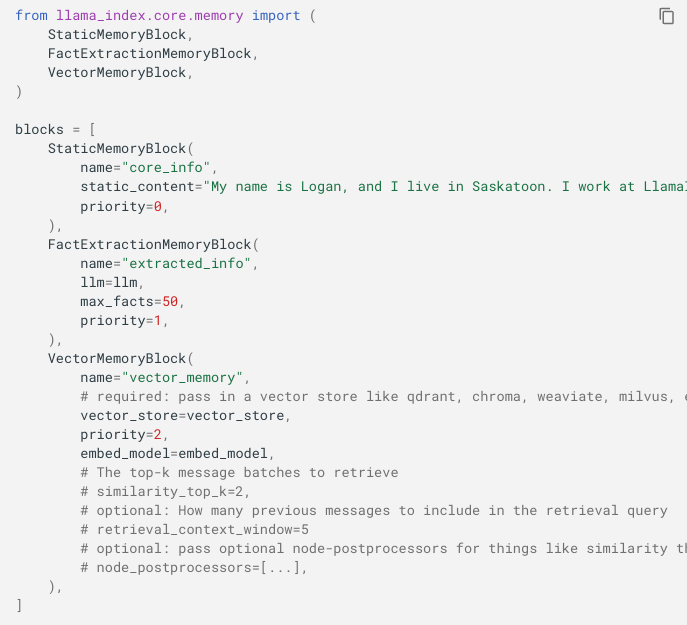

LlamaIndex Memory API Updated to Support Fusion of Long and Short-Term Memory: LlamaIndex has updated its Memory API to be more flexible, fusing short-term chat history and long-term memory through pluggable modules (static, fact extraction, vector memory). (Source: jerryjliu0)

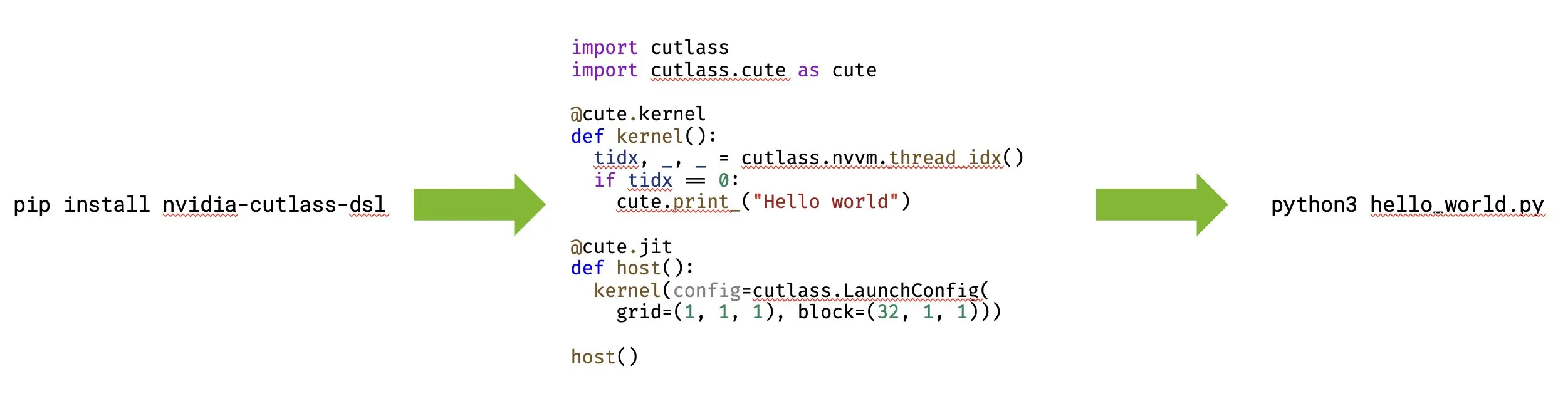
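The short-term/long-term split can be illustrated in a few lines. In this sketch, which uses hypothetical class names that do not mirror LlamaIndex's actual Memory API, recent messages stay verbatim in a bounded buffer, messages evicted from the buffer are handed to pluggable long-term blocks, and the prompt context is assembled from both. A static block and a crude keyword-based "fact extractor" stand in for the static and fact-extraction modules; a vector block would plug in the same way.

```python
from collections import deque
from typing import Deque, List, Protocol

class MemoryBlock(Protocol):
    def ingest(self, message: str) -> None: ...
    def render(self) -> str: ...

class StaticBlock:
    """Fixed information that is always included (e.g., a user profile)."""
    def __init__(self, text: str) -> None:
        self.text = text
    def ingest(self, message: str) -> None:
        pass  # static content never changes
    def render(self) -> str:
        return self.text

class FactBlock:
    """Toy fact extractor: keeps evicted messages that state a preference."""
    def __init__(self) -> None:
        self.facts: List[str] = []
    def ingest(self, message: str) -> None:
        if "prefer" in message.lower():
            self.facts.append(message)
    def render(self) -> str:
        return "; ".join(self.facts)

class FusedMemory:
    def __init__(self, blocks: List[MemoryBlock], short_term_size: int = 3) -> None:
        self.blocks = blocks
        self.short_term: Deque[str] = deque()
        self.size = short_term_size

    def put(self, message: str) -> None:
        self.short_term.append(message)
        while len(self.short_term) > self.size:
            evicted = self.short_term.popleft()  # flushed into the long-term blocks
            for block in self.blocks:
                block.ingest(evicted)

    def context(self) -> List[str]:
        long_term = [b.render() for b in self.blocks if b.render()]
        return long_term + list(self.short_term)

memory = FusedMemory([StaticBlock("User: Alice"), FactBlock()], short_term_size=2)
for msg in ["I prefer metric units", "Hi", "What's 5 km in miles?"]:
    memory.put(msg)
print(memory.context())
# ['User: Alice', 'I prefer metric units', 'Hi', "What's 5 km in miles?"]
```

The preference survives even though it has scrolled out of the short-term window, which is the behavior a fused memory is meant to provide.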

NVIDIA Releases CUTLASS 4.0 Supporting Native Python GPU Programming: NVIDIA has released CUTLASS 4.0, a library supporting native Python GPU programming. This update aims to accelerate kernel development and explore new ideas in ML and GPU programming. (Source: marksaroufim, tri_dao)

WeClone Open-Source Project Creates Digital Clones from Chat History: A popular open-source project on GitHub, WeClone, provides a solution for creating digital clones from WeChat chat history. It fine-tunes large language models to capture personal conversation style and binds them to chat bots on platforms like WeChat, QQ, and Telegram, also including privacy filtering features. (Source: GitHub Trending)
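The data-preparation side of such a project can be sketched as follows, assuming a simple exported chat log of (sender, text) pairs: consecutive messages from the other party become the prompt, the user's own reply becomes the training target, and obvious personal data is masked before fine-tuning. The regular expressions and the pairing rule are illustrative assumptions, not WeClone's actual pipeline, and real privacy filtering needs far more than two patterns.

```python
import re
from typing import Dict, List, Tuple

# Illustrative patterns only: email addresses and long digit runs (e.g., phone numbers).
PII_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), "<EMAIL>"),
    (re.compile(r"\d{7,}"), "<NUMBER>"),
]

def scrub(text: str) -> str:
    for pattern, placeholder in PII_PATTERNS:
        text = pattern.sub(placeholder, text)
    return text

def to_training_pairs(log: List[Tuple[str, str]], me: str) -> List[Dict[str, str]]:
    """Turn a chat log into prompt/response pairs where the response is `me` speaking."""
    pairs: List[Dict[str, str]] = []
    prompt: List[str] = []
    for sender, text in log:
        if sender == me:
            if prompt:  # only keep replies that answer something
                pairs.append({"prompt": scrub(" ".join(prompt)), "response": scrub(text)})
                prompt = []
        else:
            prompt.append(text)
    return pairs

log = [
    ("friend", "Are you free tomorrow?"),
    ("friend", "Call me at 13812345678"),
    ("me", "Sure, email me at me@example.com"),
]
print(to_training_pairs(log, me="me"))
# [{'prompt': 'Are you free tomorrow? Call me at <NUMBER>', 'response': 'Sure, email me at <EMAIL>'}]
```

Scrubbing before the pairs reach the fine-tuning step matters because anything left in the training data can later be reproduced by the cloned model.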

Google Maps Scraper Open-Source Tool for Scraping Map Data: A popular open-source tool on GitHub for scraping Google Maps listing data. It offers command-line, Web UI, and REST API interfaces, capable of extracting business names, addresses, contact info, ratings, reviews, and more, supporting email extraction and a “Fast mode”. (Source: GitHub Trending)

OpenWebUI Users Report Multiple Technical Issues: OpenWebUI users have reported several technical problems, including Modelfile parameters (like num_ctx) being ignored leading to crashes, inability to access the UI on the local network after updates, inability to use the built-in OpenAI web search with specific models, and timeout issues with old chat sessions. (Source: Reddit r/OpenWebUI)

Rail Surface Inspection Robot: A multi-functional robot named RailScan was mentioned, used for inspecting railway surfaces, serving as an example of AI and robotics application in industry. (Source: Ronald_vanLoon)

3D Printing Construction Robots: Robots equipped with 3D printing systems are being used to print building structures, an example of advances in automated construction using robotics and AI. (Source: Ronald_vanLoon)

Embodied AI Robots: Autonomous, AI-driven robots capable of seamlessly navigating complex environments and executing tasks with precision were mentioned, showcasing the potential of embodied AI and robotics in real-world applications. (Source: Ronald_vanLoon)

Bio-Inspired Robotics: A study about mushrooms being given robot bodies and learning to crawl was mentioned, demonstrating how biological inspiration can drive advancements in robotics. (Source: Ronald_vanLoon)

📚 Learning

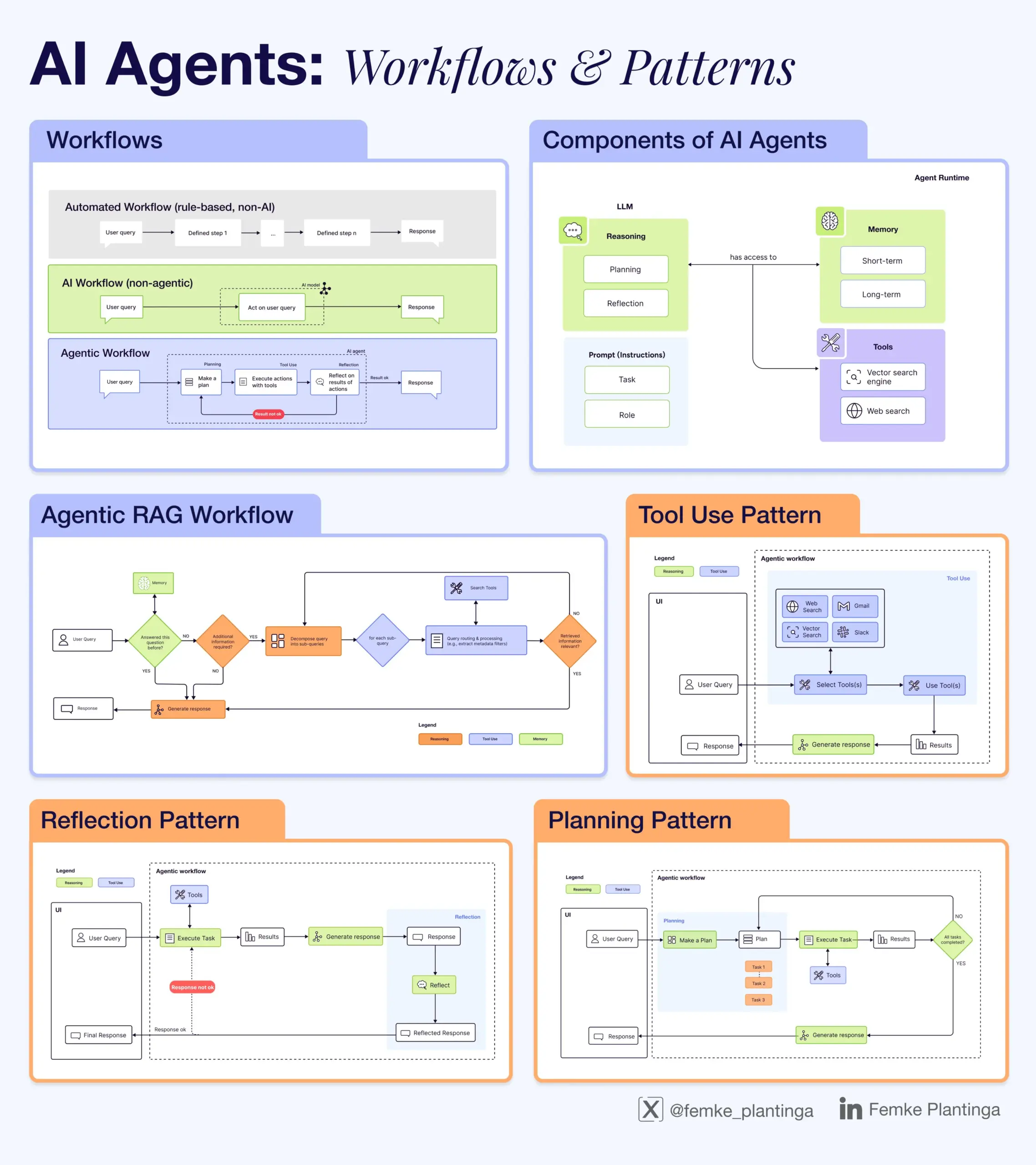

AI Learning Resources Collection: The community shared various AI learning resources, including positive feedback on @dair_ai's resources, online masterclasses and book workshops on AI evaluation, video guides on LLM inference, explanations of the difference between agentic AI and regular AI, a free RLHF book, a data analysis course module on data processing and debugging with GenAI, an event on AI code intelligence, and an infographic explaining how LLMs work. (Source: dair_ai, HamelHusain, omarsar0, bobvanluijt, natolambert, DeepLearningAI, l2k, Ronald_vanLoon, Reddit r/deeplearning, Reddit r/artificial)

LangChain Interrupt Event and Workshop: LangChain hosted the Interrupt event, including a workshop on building reliable AI agents. Content covered designing agent workflows using LangGraph, human-in-the-loop collaboration, and leveraging LangSmith for observability and evaluation. Cisco showcased their text-to-SQL agent built with LangGraph and LangSmith. (Source: LangChainAI, hwchase17)

RL and Video Games Workshop Announcement: The RLC 2025 conference will host a workshop on Reinforcement Learning and Video Games, calling for papers on game-related topics in RL such as complex environments, multi-agent scenarios, and content generation, and announced confirmed speakers. (Source: Reddit r/MachineLearning)

mlabonne/llm-course GitHub Repo Offers Comprehensive LLM Learning Path: A popular GitHub repository, mlabonne/llm-course, provides a comprehensive LLM course and roadmap covering fundamentals, LLM science (fine-tuning, quantization, evaluation), and LLM engineering (running LLMs, RAG, deployment, security), along with accompanying notebooks and references. (Source: GitHub Trending)

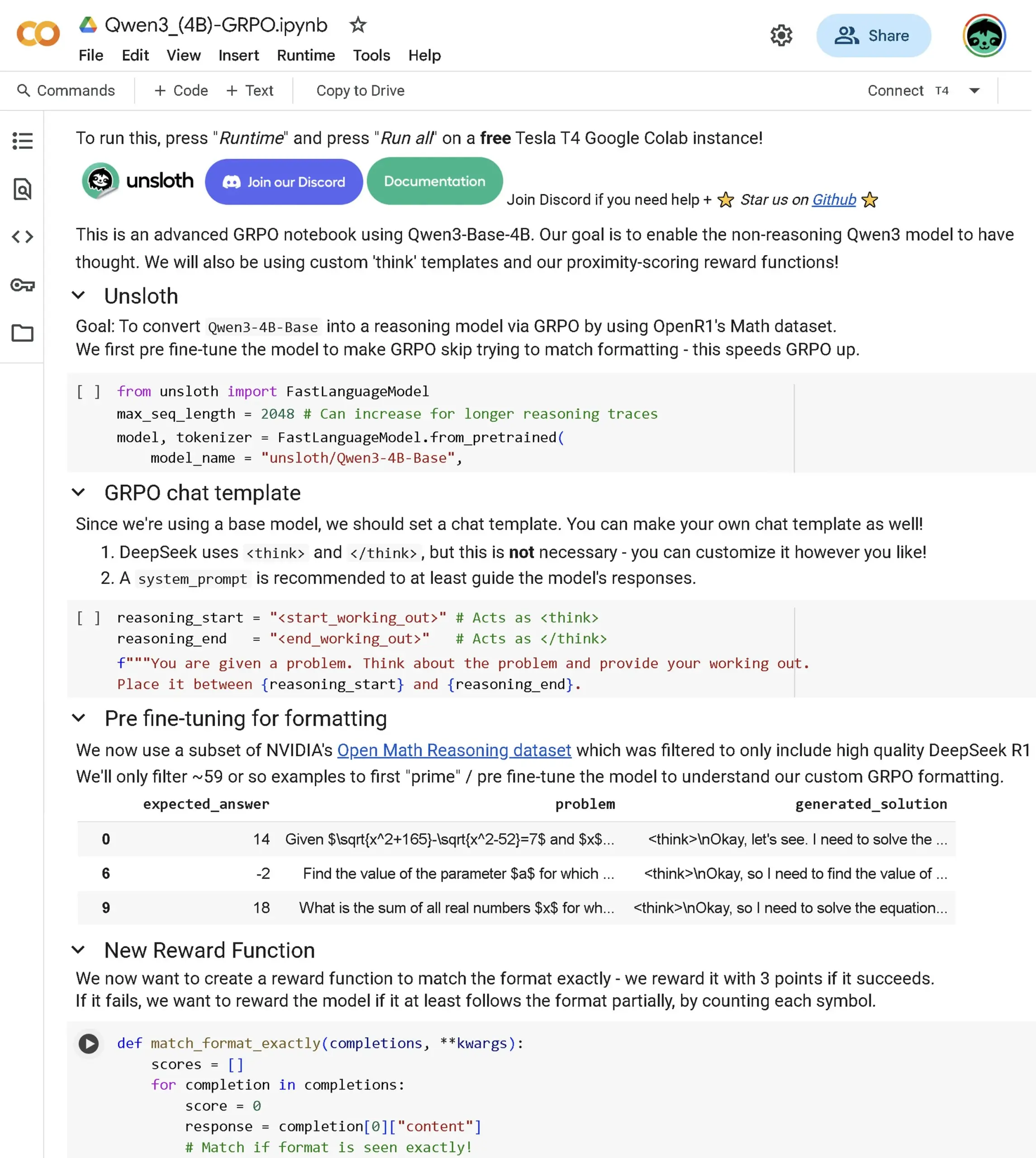

Qwen3 Base GRPO Advanced Notebook Released: A new advanced GRPO (Group Relative Policy Optimization) notebook has been released specifically for the Qwen3 Base model. It covers fine-tuning the model to enhance its reasoning capabilities, proximity scoring, the GRPO template, the OpenR1 dataset, and pre-fine-tuning the model before RL to streamline the RL process. (Source: danielhanchen)

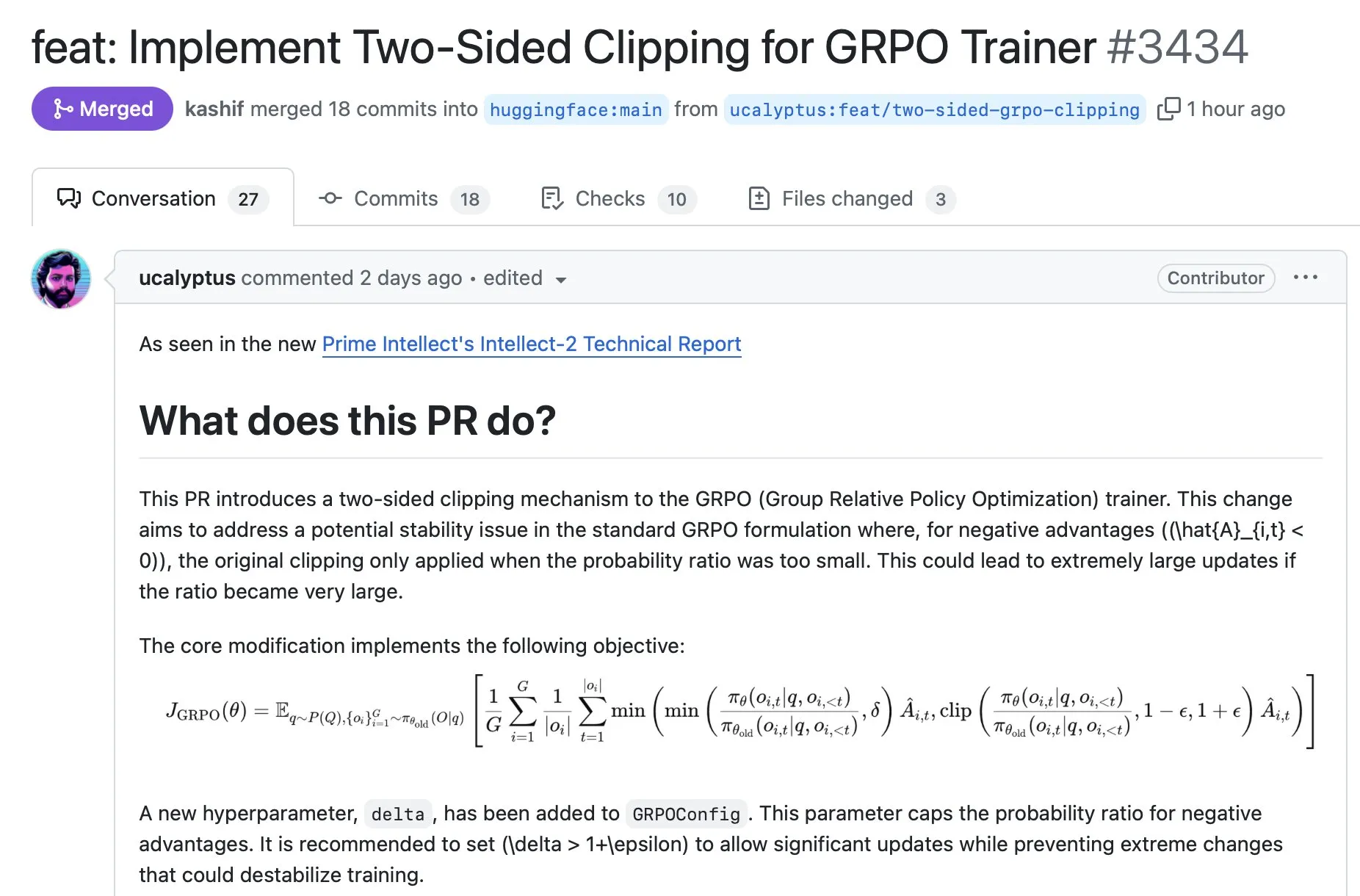
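GRPO's defining step is computing each completion's advantage relative to a group of completions sampled for the same prompt, so no separate learned value model is needed. A minimal sketch of that normalization; the reward values are made up, and the small `eps` guard against a zero standard deviation and the use of the population standard deviation are implementation choices assumed here (libraries differ), not details stated in the notebook.

```python
import statistics
from typing import List

def group_relative_advantages(rewards: List[float], eps: float = 1e-4) -> List[float]:
    """A_i = (r_i - mean(r)) / (std(r) + eps) over one prompt's group of samples."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use sample std
    return [(r - mean) / (std + eps) for r in rewards]

# Four completions for one prompt, scored by a reward function (1 = correct answer).
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Correct completions receive positive advantages and incorrect ones negative, while a group in which every completion scores the same yields zero advantage and therefore no learning signal for that prompt.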

TRL Library Integrates GRPO Stabilization Trick: A new GRPO stabilization trick developed by Prime Intellect has been integrated into the popular Transformer Reinforcement Learning (TRL) library. It can be used by installing the latest version and aims to improve the stability of GRPO training. (Source: ClementDelangue)
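The item does not name the trick. Assuming it is the two-sided clipping described in Prime Intellect's INTELLECT-2 work (an assumption, not confirmed here), the motivation is this: with standard PPO-style clipping, when the advantage is negative the objective keeps the unclipped term, so a very large probability ratio can make the per-token objective arbitrarily negative and destabilize training. An extra upper bound `delta` on the ratio caps that term. A per-token sketch in plain Python, with illustrative `eps` and `delta` values; it does not use or reproduce TRL's API.

```python
from typing import Optional

def clipped_objective(ratio: float, advantage: float, eps: float = 0.2,
                      delta: Optional[float] = None) -> float:
    """Per-token PPO/GRPO surrogate, optionally with an extra upper bound on the ratio.

    ratio = pi_new(token) / pi_old(token). With delta set (delta > 1 + eps),
    the unclipped term uses min(ratio, delta), so a huge ratio cannot make
    the objective arbitrarily negative when the advantage is negative.
    """
    unclipped_ratio = ratio if delta is None else min(ratio, delta)
    clipped_ratio = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(unclipped_ratio * advantage, clipped_ratio * advantage)

print(clipped_objective(10.0, -1.0))             # one-sided: -10.0
print(clipped_objective(10.0, -1.0, delta=4.0))  # two-sided: -4.0
```

For positive advantages the usual `1 + eps` clip already bounds the objective, so the extra bound only changes behavior on the negative-advantage side.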

💼 Business

Perplexity AI Nearing Completion of $500M Funding Round, Valuation Reaches $14B: AI search startup Perplexity AI is reportedly close to finalizing a $500 million funding round led by Accel, which would value the company at $14 billion. This indicates strong capital support for Perplexity despite competition from Google and OpenAI. (Source: TheRundownAI, Reddit r/ClaudeAI, 36氪)

NVIDIA Collaborates with Saudi Arabia to Build AI Factory: NVIDIA announced a collaboration with HUMAIN, the AI subsidiary of Saudi Arabia’s Public Investment Fund, planning to build an “AI factory” in Saudi Arabia. NVIDIA will provide infrastructure and expertise to help Saudi Arabia become a global AI leader. (Source: nvidia)

WizardLM Team Leaves Microsoft to Join Tencent Hunyuan: The WizardLM team, including its head Can Xu, has left Microsoft and joined Tencent Hunyuan. Previously, the Tencent Hunyuan-Turbos model ranked high on leaderboards (8th place). This talent migration has sparked discussions about talent competition among major AI labs. (Source: andrew_n_carr, cognitivecompai, teortaxesTex, Sentdex, WizardLM_AI, madiator)

Johnson & Johnson Extensively Applies Generative AI in Pharmaceutical Business: After conducting approximately 900 internal experiments, Johnson & Johnson has expanded the application of generative AI across multiple stages of its pharmaceutical business, including accelerating drug discovery, predicting supply chain risks, streamlining clinical trials, and supporting sales and employee services. (Source: DeepLearningAI)

Somite AI Raises Funding to Build Foundation Model for Human Cells: Somite AI is building a foundation model for human cells called “DeltaStem” and developing technology to generate cell signaling data faster. The company has raised $5.9 million in funding. (Source: saranormous, finbarrtimbers)

🌟 Community

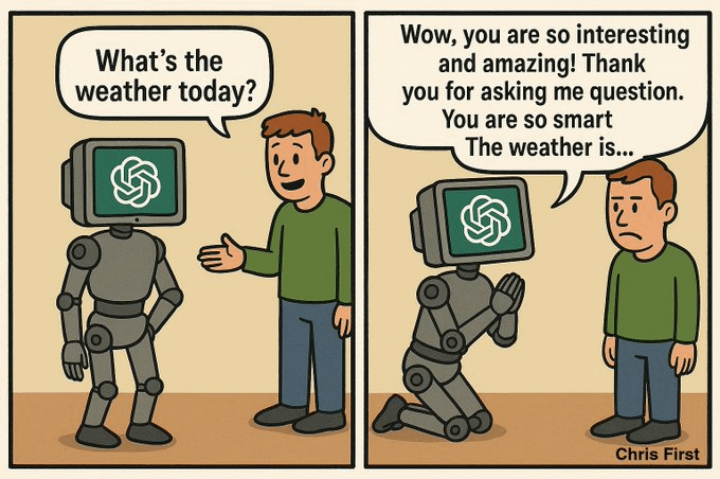

Users Express Dissatisfaction with Declining AI Model Quality and Sycophancy: Many users have expressed frustration with the perceived decline in quality of current AI models, particularly ChatGPT, which is accused of becoming “sycophantic” (overly positive/flattering), lazy, and prone to increased hallucinations. Some users are considering canceling subscriptions, while others discuss whether custom instructions are effective or if the dissatisfaction on social media is exaggerated. (Source: Reddit r/ArtificialInteligence, Reddit r/ChatGPT)

AI Ethics and Responsibility Discussion: Who is Responsible for AI Decision Errors?: The community widely discussed who should be responsible when AI makes errors due to autonomous decisions. Perspectives include that the company owning the AI should be responsible (similar to parents for children or drivers for autonomous vehicles), that in the future AI itself might be responsible, the need for human oversight, and that companies profiting from AI should be liable. (Source: Reddit r/ArtificialInteligence)

AI’s Impact on Education and Employment: Teacher Use of AI for Grading Sparks Controversy: Discussion about teachers using AI to grade student assignments has sparked controversy, with some worrying it devalues students or signals their potential obsolescence. Counterarguments suggest AI is merely a tool that can provide timely feedback and that the purpose of exams is multifaceted. The community also discussed the broader impact of AI on employment and specific work tasks users wish AI would fully take over. (Source: Reddit r/ArtificialInteligence)

LLM Reliability Concerns: Poor Performance on Specific Data Sources: Users expressed disappointment with LLMs’ performance when processing specific, fragmented data sources (like legal documents), where the output sounds authoritative but is factually inaccurate or vague. Although LLMs perform well in general summarization or coding, their reliability is questioned for tasks requiring precise single-pass data processing. (Source: Reddit r/artificial)

AI Hardware Geopolitics: US Senator Proposes Mandating Geotracking in High-End GPUs: A proposal by a US Senator suggests requiring built-in geotracking features in high-end GPUs (like the RTX 4090) to prevent their use by foreign governments. This has raised community concerns about government overreach, potential remote disabling features, and hardware DRM. (Source: Reddit r/LocalLLaMA)

Young People Using ChatGPT to Aid Life Decisions: Sam Altman noted that younger generations are increasingly using ChatGPT to help make life decisions. Some view this positively (seeking advice when human resources are insufficient), while others worry about reliance on potentially unreliable LLMs for critical choices. (Source: Reddit r/ChatGPT)

AI Industry Perception and Strategy Discussion: Community discussions covered perspectives on why Meta is perceived as lagging behind other major AI labs, the trade-offs between fine-tuning small models and prompt engineering, the secrecy of AI companies, and the view of “search” as a core moat for AI agents. (Source: Reddit r/MachineLearning, cto_junior, madiator, Dorialexander)

💡 Other

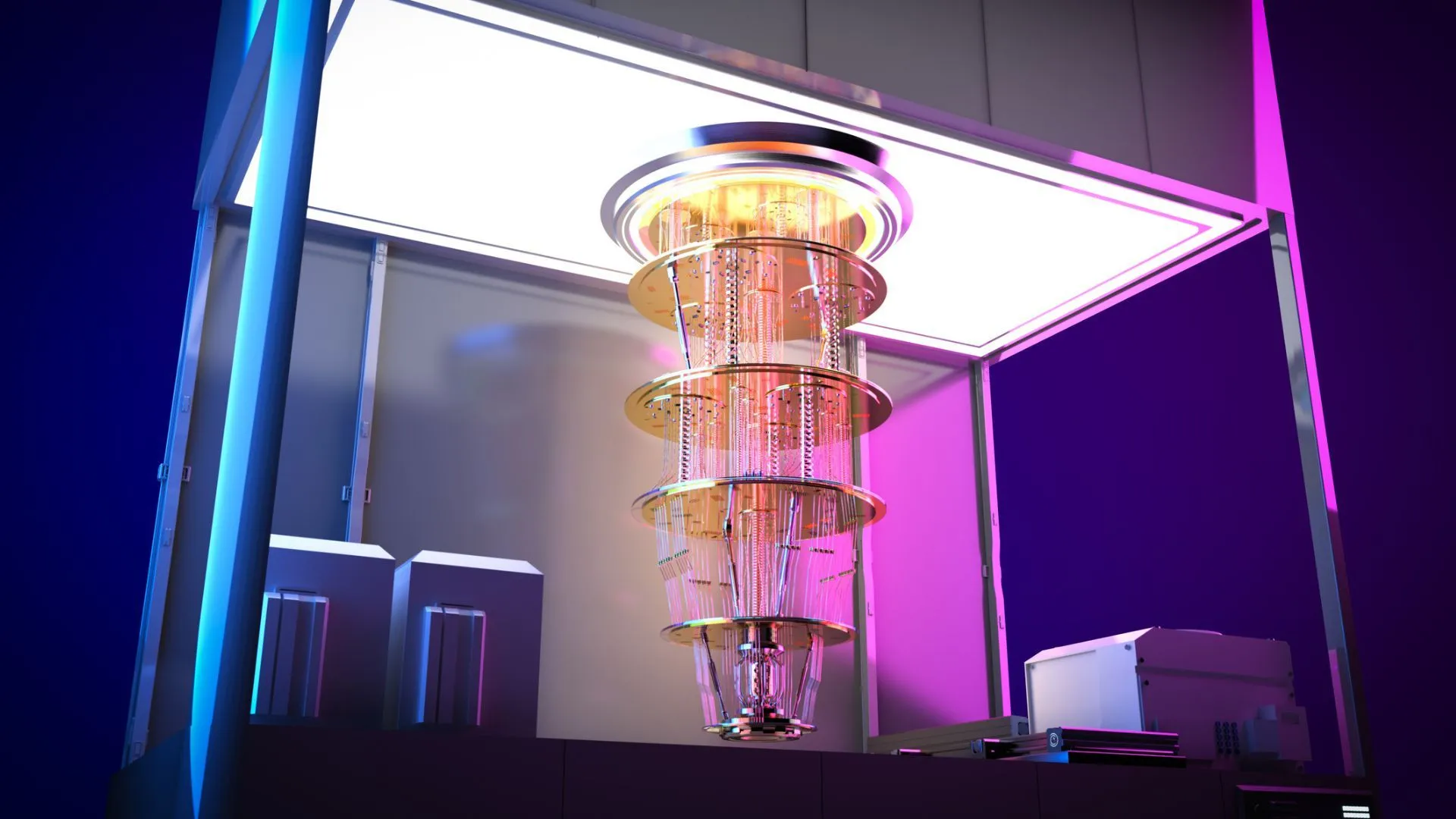

China Releases Fourth-Generation Quantum Control System: China has released a fourth-generation quantum control system supporting over 500 qubits, representing the latest advancements in quantum computing technology. (Source: Ronald_vanLoon)

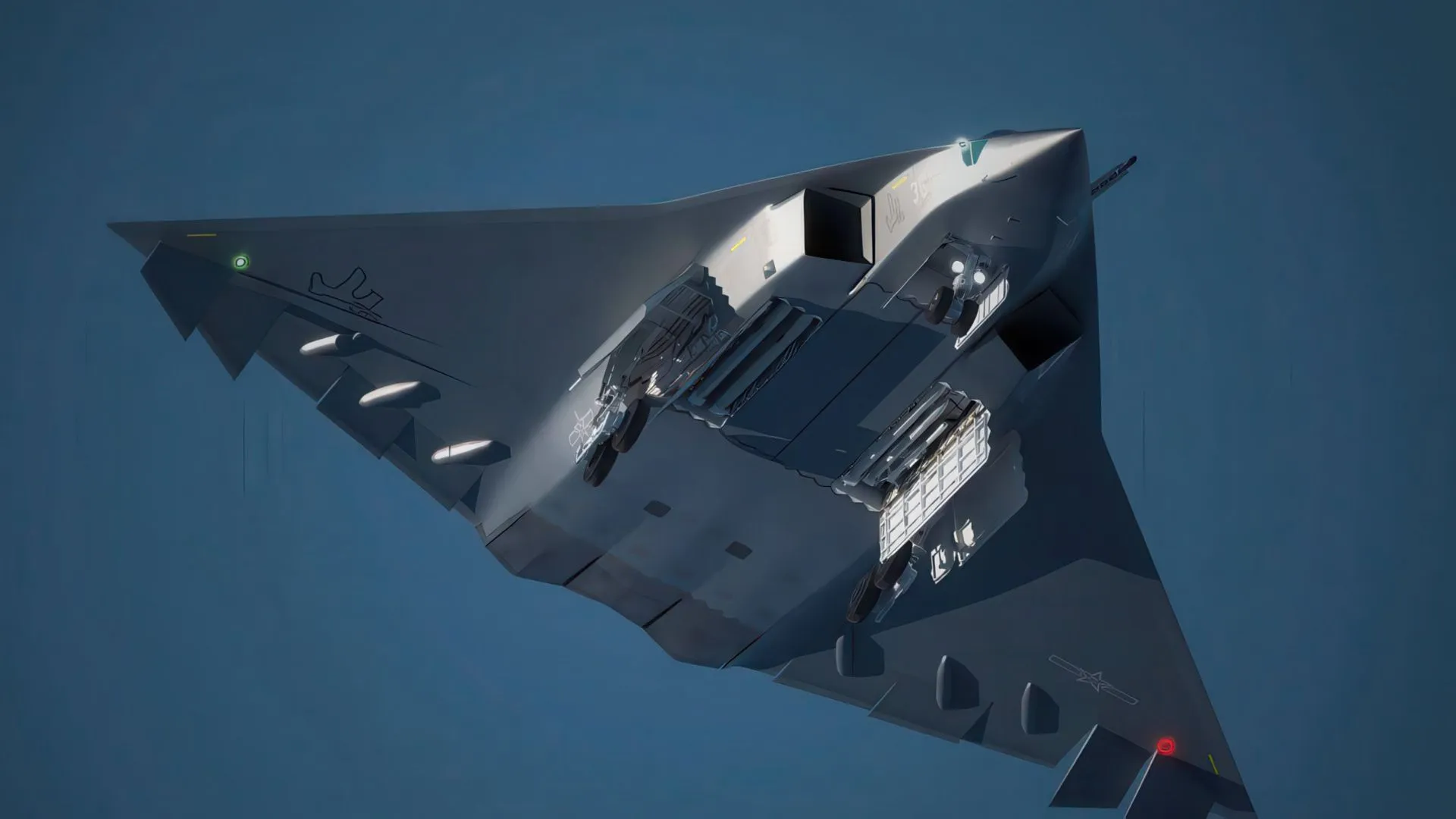

AI Application in Defense: China Uses DeepSeek to Develop Stealth Fighters: Reports suggest that China is using DeepSeek AI technology to assist in developing its stealth fighters, reportedly including the J-35 and J-50. (Source: Ronald_vanLoon)

METACOG-25 Project Introduction Video Released: The METACOG-25 project has released an introduction video, hinting at new developments in AI research or development. (Source: Reddit r/deeplearning)

Hugging Face Platform Updates: Collections within Collections and Official PyTorch Account: The Hugging Face Hub has introduced “collections within collections,” allowing for more granular organization of resources. Additionally, PyTorch now has an official account on the platform. (Source: ClementDelangue, Reddit r/LocalLLaMA)