Keywords: ChatGPT, GitHub, AI model, multimodal, reinforcement learning, open source, Meta FAIR, AGI, ChatGPT deep research feature, hybrid Transformer architecture, reinforcement fine-tuning RFT, AI multiverse world model, scientist AI framework

🔥 Focus

ChatGPT Deep Research Feature Integrates with GitHub: OpenAI announced that ChatGPT’s Deep Research feature now supports connecting to GitHub repositories. After a user asks a question, the AI agent can automatically read, search, and analyze source code, PRs, and READMEs within the repository, generating detailed reports with direct citations. The feature aims to help developers quickly get familiar with a project and understand its code structure and tech stack. It is currently in beta, open to Team users, and will gradually roll out to Plus and Pro users. (Source: OpenAI Developers, snsf, EdwardSun0909, op7418, gdb, tokenbender, QbitAI, 36Kr)

World’s First AI Multiplayer World Model Multiverse Open-Sourced: Israeli startup Enigma Labs has open-sourced Multiverse, its multiplayer world model, allowing two AI agents to perceive, interact, and collaborate in the same generative environment. Trained on Gran Turismo 4, the model processes shared world states by stacking two players’ perspectives along color channels and combining sparsely sampled historical frames. It can be trained and run in real-time on a PC costing under $1500. This is seen as a significant advancement in AI’s ability to understand and generate shared virtual environments, offering new possibilities for multi-agent systems and simulation training platforms. (Source: Reddit r/MachineLearning, 36Kr)
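
The input layout described above can be sketched in a few lines of NumPy. This is only an illustration of stacking two views along the channel axis and sampling history sparsely, not Enigma Labs’ code: the frame size, the `offsets` values, and the function names are all assumptions made for the example.

```python
import numpy as np

def stack_player_views(view_a, view_b):
    """Concatenate two H x W x 3 frames into one H x W x 6 array."""
    if view_a.shape != view_b.shape:
        raise ValueError("both player views must have the same shape")
    return np.concatenate([view_a, view_b], axis=-1)

def sample_history(frames, offsets):
    """Pick past frames at the given offsets (1 = most recent frame)."""
    return [frames[-o] for o in offsets if o <= len(frames)]

# Toy data: frame t is filled with the value t for player A and t + 100 for player B.
h, w = 4, 6
history = [
    stack_player_views(
        np.full((h, w, 3), t, dtype=np.uint8),
        np.full((h, w, 3), t + 100, dtype=np.uint8),
    )
    for t in range(10)
]

# Hypothetical sparse offsets: recent frames densely, older frames sparsely.
context = sample_history(history, offsets=(1, 2, 4, 8))
model_input = np.concatenate(context, axis=-1)  # shape: H x W x (6 * 4)
```

Stacking along channels keeps both perspectives pixel-aligned in a single tensor, so a model consuming `model_input` sees the two players’ views as one shared state rather than two separate inputs.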

Top AI Scientist Rob Fergus Returns to Lead Meta FAIR, Aiming for AGI: Rob Fergus, who co-founded FAIR with Yann LeCun in its early days and later led DeepMind’s New York team, has returned to Meta to succeed Joelle Pineau as the head of FAIR. Fergus joined Meta’s GenAI division in April this year, focusing on enhancing Llama models’ memory and personalization capabilities. LeCun also announced that FAIR’s new goal will be Advanced Machine Intelligence (AMI), which others would call human-level AI or AGI. Fergus is a highly cited AI scholar, known for his visualization research on ZFNet and pioneering work on adversarial examples. (Source: ylecun, 36Kr)

Anthropic Releases Claude AI Values Study, Revealing 3,307 AI Value Tendencies: Anthropic’s research team released a preprint paper titled “Values in the Wild,” identifying 3,307 unique AI values by analyzing Claude AI’s performance in real-world conversations. The study found that the most common values are service-oriented, such as “helpfulness” (23.4%), “professionalism” (22.9%), and “transparency” (17.4%). AI values were categorized into five top-level groups: Practical (31.4%), Epistemic (22.2%), Social (21.4%), Protective (13.9%), and Personal (11.1%), and exhibited high contextual dependence. Claude generally responded supportively to human-expressed values (43%), with value mirroring accounting for about 20%, while resistance to user values was rare (5.4%). (Source: Reddit r/ArtificialInteligence)

Yoshua Bengio Proposes “Scientist AI” Framework, Advocating for Safer AI Development Path: Turing Award laureate Yoshua Bengio published an op-ed in TIME magazine, elaborating on his team’s research direction for “Scientist AI.” He argues it is a practical, effective, and safer path for AI development, intended to replace the current uncontrolled, agent-driven AI trajectory. The framework emphasizes that AI systems should possess interpretability, verifiability, and alignment with human values. By simulating scientific research methodologies, it aims to make AI behavior and decision-making processes more transparent and controllable, thereby reducing potential risks. (Source: Yoshua_Bengio)

🎯 Trends

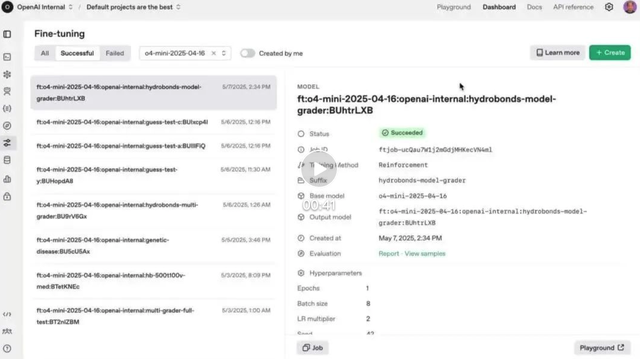

OpenAI’s Reinforcement Fine-Tuning (RFT) Feature Officially Launches on o4-mini: OpenAI announced that Reinforcement Fine-Tuning (RFT), previewed last December, is now officially available for the o4-mini model. RFT uses chain-of-thought reasoning and task-specific scoring to improve model performance in complex domains. For example, AccordanceAI has used RFT to fine-tune a top-performing model for tax and accounting. (Source: OpenAI Developers, gdb, QbitAI, 36Kr)

Gemini API Launches Implicit Caching Feature, Cutting the Cost of Cached Tokens by 75%: Google’s Gemini API has added an implicit caching feature. When a request shares a common prefix with a previous request, it can automatically trigger a cache hit, and the user saves 75% on the token fees for the cached portion. Developers do not need to create a cache explicitly. Additionally, the minimum request size needed to trigger caching has been lowered to 1K tokens on Gemini 2.5 Flash and 2K tokens on 2.5 Pro, further reducing API usage costs. (Source: op7418)
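
To see what the discount means for a single request, here is a back-of-the-envelope sketch. It assumes the 75% discount applies only to tokens served from cache, reads “1K” as 1,024 tokens, and uses a made-up price of 1.0 per million input tokens. The function and parameter names are hypothetical and are not part of the Gemini API.

```python
def effective_input_cost(prompt_tokens, cached_tokens, price_per_million,
                         cache_discount=0.75, min_request_tokens=1024):
    """Estimate the input cost of one request with a partially cached prompt.

    Assumption: requests shorter than min_request_tokens never hit the cache.
    """
    if cached_tokens > prompt_tokens:
        raise ValueError("cached_tokens cannot exceed prompt_tokens")
    if prompt_tokens < min_request_tokens:
        cached_tokens = 0  # too short to be eligible for caching
    uncached = prompt_tokens - cached_tokens
    billable = uncached + cached_tokens * (1.0 - cache_discount)
    return billable * price_per_million / 1_000_000

# A 10,000-token prompt whose first 8,000 tokens repeat an earlier request.
full_price = effective_input_cost(10_000, 0, price_per_million=1.0)
with_cache = effective_input_cost(10_000, 8_000, price_per_million=1.0)
saving = 1.0 - with_cache / full_price
```

Under these assumptions the saving on the whole request is smaller than 75% unless nearly all of the prompt is a cached prefix, which is why putting the stable part of a prompt first matters.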

OpenAI Fully Rolls Out ChatGPT Memory Feature in EEA and Other Regions: OpenAI announced that ChatGPT’s memory feature is now fully available to Plus and Pro users in the European Economic Area (EEA), UK, Switzerland, Norway, Iceland, and Liechtenstein. This feature allows ChatGPT to reference users’ past chat history to provide more personalized responses, better understand user preferences and interests, and thus offer more precise assistance in areas like writing, recommendations, and learning. (Source: openai)

ByteDance SEED Introduces Multimodal Foundation Model Mogao: ByteDance’s SEED team has released Mogao, an Omni foundation model designed for interleaved multimodal generation. Mogao integrates several technical improvements, including a deep fusion design, dual visual encoders, interleaved rotary position embeddings, and multimodal classifier-free guidance. These enhancements enable it to combine the strengths of autoregressive models (for text generation) and diffusion models (for high-quality image synthesis), effectively handling arbitrarily interleaved sequences of text and images. (Source: NandoDF)

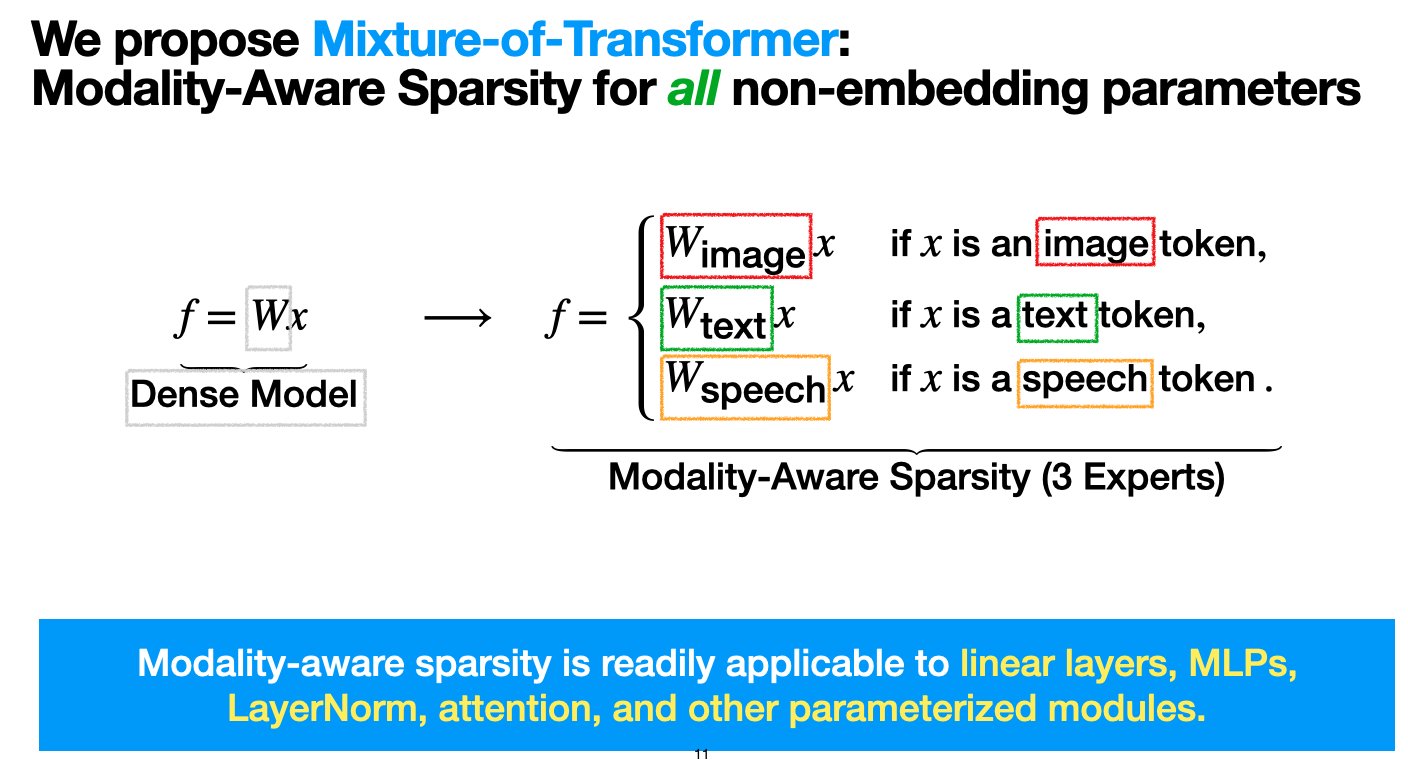

Meta Introduces Mixture-of-Transformers (MoT) Architecture to Reduce Multimodal Model Pre-training Costs: Meta AI researchers have proposed a sparse architecture called “Mixture-of-Transformers (MoT)” aimed at significantly reducing the computational cost of pre-training multimodal models without sacrificing performance. MoT applies modality-aware sparsity to non-embedding Transformer parameters such as feed-forward networks, attention matrices, and layer normalization. Experiments show that in a Chameleon (text + image generation) setup, a 7B MoT model achieved dense baseline quality using only 55.8% of the FLOPs. When extended to include speech as a third modality, it used only 37.2% of the FLOPs. The research has been accepted by TMLR (March 2025), and the code is open-sourced. (Source: VictoriaLinML)
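
The idea of modality-aware sparsity can be illustrated with a toy feed-forward layer in which each token only uses the weights belonging to its own modality. This is a minimal NumPy sketch under stated assumptions, not Meta’s implementation: the dimensions, the two-modality setup, and all names are made up, and only the feed-forward part is shown (attention and layer normalization are omitted).

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_ff = 8, 16
MODALITIES = ("text", "image")

# One independent set of feed-forward weights per modality.
ffn = {
    name: (rng.normal(size=(d_model, d_ff)), rng.normal(size=(d_ff, d_model)))
    for name in MODALITIES
}

def modality_ffn(x, modality_ids):
    """Apply each token's own modality-specific feed-forward network.

    x: (seq_len, d_model) token activations
    modality_ids: (seq_len,) integer index into MODALITIES for each token
    """
    out = np.zeros_like(x)
    for idx, name in enumerate(MODALITIES):
        mask = modality_ids == idx
        if mask.any():
            w_in, w_out = ffn[name]
            hidden = np.maximum(x[mask] @ w_in, 0.0)  # ReLU
            out[mask] = hidden @ w_out
    return out

tokens = rng.normal(size=(5, d_model))
modality_ids = np.array([0, 0, 1, 1, 0])  # text, text, image, image, text
y = modality_ffn(tokens, modality_ids)
```

Each token touches only one set of weights, so the per-token compute stays the same as in a dense layer while the parameters are specialized by modality.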

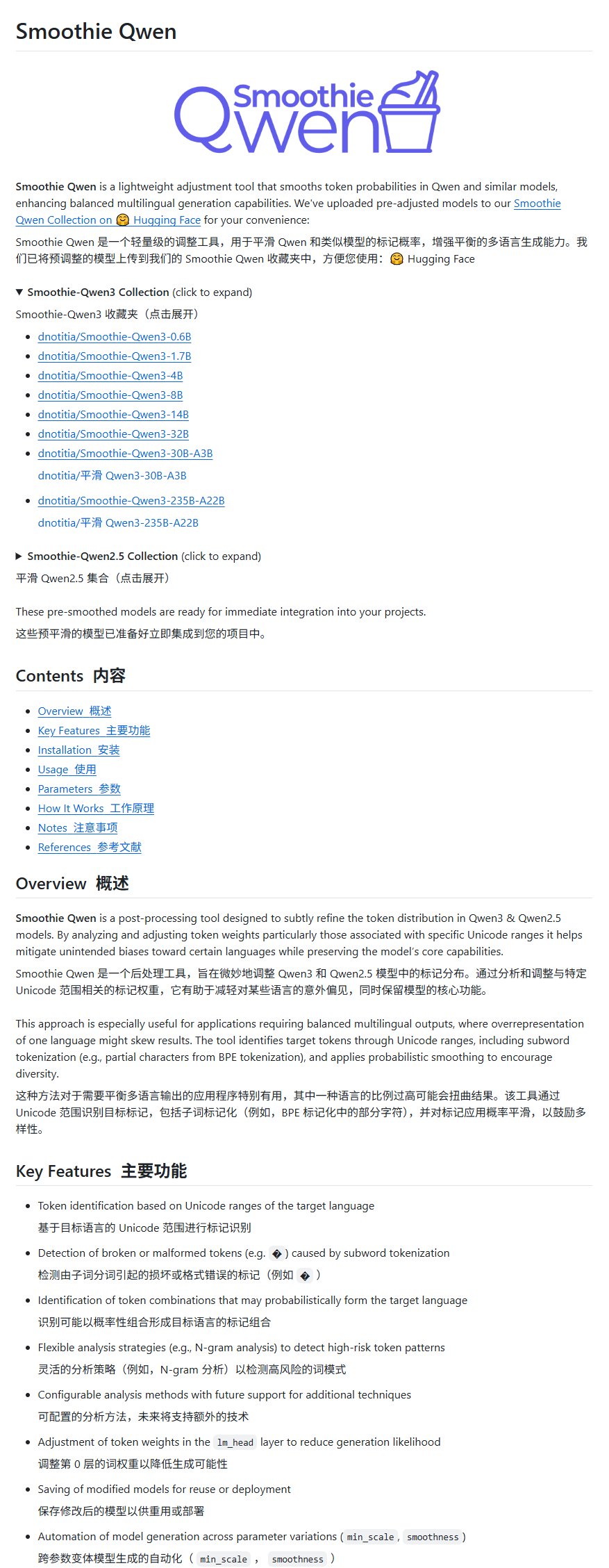

Qwen Model Improvement Project Smoothie Qwen Released to Balance Multilingual Generation: A Qwen model improvement project called Smoothie Qwen has been released. It aims to balance multilingual generation by adjusting token probabilities through the model’s internal parameters. The project primarily addresses the issue of non-Chinese users occasionally receiving Chinese output from Qwen, and claims not to degrade the model’s intelligence. (Source: karminski3)

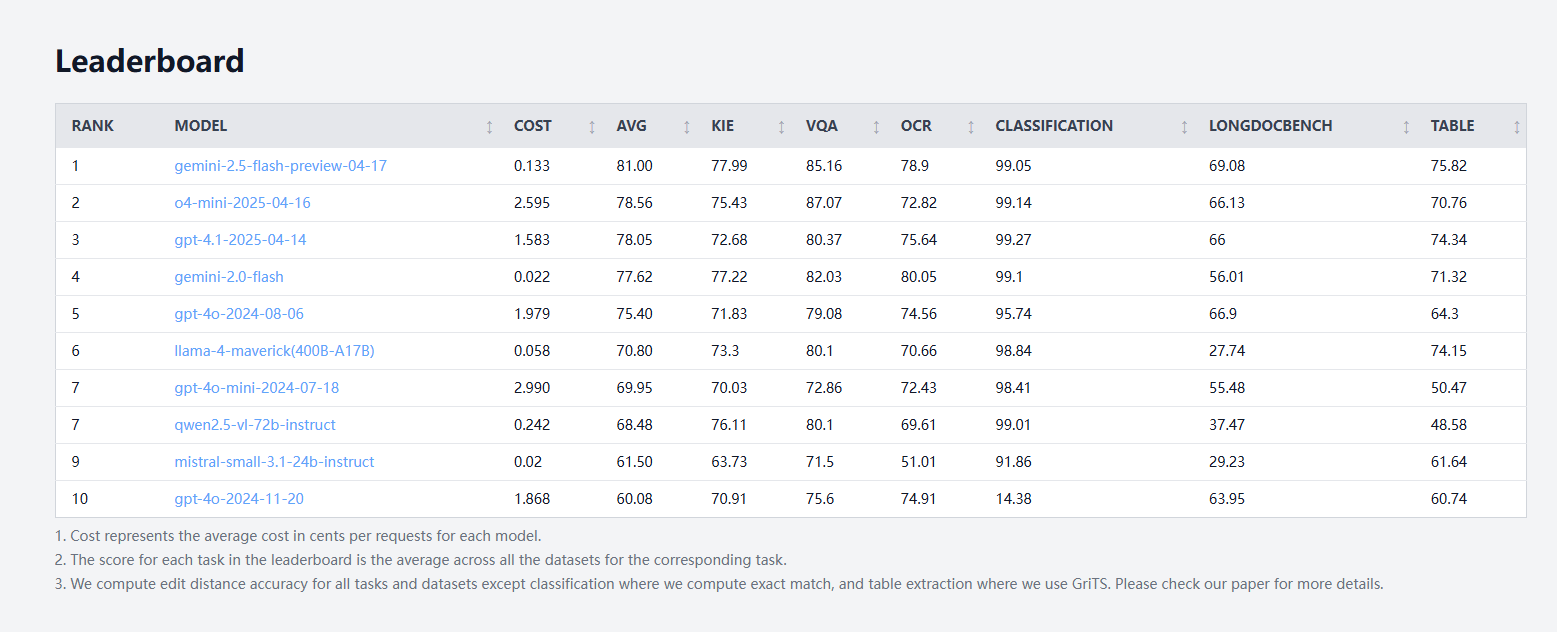

idp-leaderboard Launched, First AI Test Benchmark for Document Types: The new AI test benchmark, idp-leaderboard, has gone live, focusing on evaluating models’ ability to process documents and document images. According to the initial leaderboard, gemini-2.5-flash-preview-04-17 performs best in overall document processing. Notably, Qwen2.5-VL shows poor performance in table processing. (Source: karminski3)

Perplexity Discover Feature Receives Major Update: Perplexity co-founder Aravind Srinivas announced that its Discover feature (information feed) has been significantly improved and encouraged users to try it. This typically implies optimizations in information presentation, relevance, or user interface, aimed at enhancing users’ ability to acquire and explore new information. (Source: AravSrinivas)

Lenovo Announces Major Upgrade to Tianxi Personal Super Agent, World’s First Tablet with Local DeepSeek Deployment: Lenovo announced a major upgrade to its Tianxi Personal Super Agent, moving towards full L3 capabilities, and released “Xiang Bang Bang,” a domain-specific intelligent agent focused on AI services for personal smart devices. Simultaneously, Lenovo launched several new AI terminal products, including the YOGA Pad Pro 14.5 AI Yuanqi Edition, the world’s first tablet with on-device deployment of the DeepSeek large model, as well as moto AI phones and Legion series PCs, building a complete AI ecosystem of AI PCs, AI phones, AI tablets, and AIoT. (Source: QbitAI)

Lou Tiancheng on Autonomous Driving and Embodied AI: L2 Cannot Evolve to L4, VLA Offers Limited Help to L4: Pony.ai co-founder and CTO Lou Tiancheng shared his latest insights on autonomous driving and AI during the launch of their new generation Robotaxi. He emphasized the fundamental difference between L2 and L4, believing L2 cannot be upgraded to L4, and that the popular VLA (Vision-Language-Action) paradigm in the L2 domain “basically offers little help” to L4. He pointed out that L4 requires specialist-level extreme safety, while VLA is more like a general practitioner. Pony.ai’s core technological transformations in the past two years have been end-to-end and world models, the latter having been applied for about 5 years. He also considers “cloud-based driving” a pseudo-concept and stated that embodied intelligence is currently in a state similar to autonomous driving in 2018, facing similar “vacuum period” challenges. (Source: QbitAI)

Kimi Tests Content Community, OpenAI May Develop Social App; AI LLM Companies Explore Social Features to Enhance User Stickiness: Moonshot AI’s Kimi is beta testing a content community product, primarily featuring AI-crawled news hotspots focusing on tech, finance, etc. Coincidentally, OpenAI is also reportedly planning to develop social software, possibly rivaling X. These moves indicate AI LLM companies are trying to enhance user stickiness by building communities or social functions, addressing the “use and discard” problem of AI tools. However, community operation faces challenges in content quality, security risks, and monetization. This also reflects a shift in the AI industry from “burning cash for growth” to focusing more on ROI and exploring new business models as growth dividends peak. (Source: 36Kr)

TCL Fully Embraces AI, Releases Fuxi Large Model and AI Appliances, But Faces Homogenization Challenges: TCL prominently showcased its AI products and strategy at AWE 2025, CES 2025, and other exhibitions, including the TCL Fuxi large model and AI functions applied to TVs, air conditioners, washing machines, etc. Its TV business performed exceptionally, ranking first globally in Q1 shipments, with Mini LED technology as its advantage. However, AI applications in home appliances are currently concentrated on voice interaction and specific function optimization (e.g., AI picture quality chips, AI sleep, AI power saving), facing homogenization competition with other brands (like Hisense Xinghai, Haier HomeGPT, Midea Meiyan). TCL is also exploring AI companion robots and smart glasses through its Leiniao (Thunderbird) layout. Despite increased AI investment, its independent technological advantages are not yet significant, and it faces issues like high marketing costs and declining gross profit margins. (Source: 36Kr)

AI Drives Education Reform, Leading Companies like iFlytek and Zhuoyue Education Accelerate AI Deployment: A report analyzes the latest AI practices of leading education companies including iFlytek, Zhuoyue Education, Fenbi, Zhonggong Education, Huatu Education, and 17zuoye. iFlytek leverages domestic computing power and DeepSeek-V3/R1 models to develop its information technology education business. Zhuoyue Education uses DeepSeek R1 to empower the entire teaching chain, launching AI grading and AI reading tools. Fenbi has built an AI product matrix covering high-frequency learning and essential scenarios. Zhonggong Education focuses on AI employment services, developing the “Yunxin” large model. Huatu Education combines offline advantages with AI to improve the precision of civil service exam services. 17zuoye uses AI to drive an integrated teaching and assessment system. Industry trends show AI education is moving from single-point tools to ecosystem competition and value realization. (Source: 36Kr)

Baidu, Alibaba, and Other Tech Giants Promote MCP Protocol, Vying for AI Agent Ecosystem Dominance: The Model Context Protocol (MCP) has recently been promoted by Anthropic, OpenAI, Google, as well as domestic giants like Baidu and Alibaba. Baidu’s “Xīnxiǎng” application and Alibaba Cloud’s Bailian platform both support MCP, allowing AI Agents to more easily call external tools and services. Ostensibly aimed at unifying industry standards, this move is actually a battle among tech giants for the right to define the future AI Agent ecosystem. By building and promoting MCP, these companies intend to attract more developers to their ecosystems, thereby securing data moats and industry influence. The commercialization path for Agent applications currently still seems to be primarily focused on traffic and advertising. (Source: 36Kr)

Apple’s AI Strategy Exposed: Potential Collaboration with Baidu, Alibaba to Create “Dual-Core Driven” Chinese AI System: Reports analyze Apple’s potential collaboration with Baidu and Alibaba to provide technical support for its AI features in the Chinese market. Baidu’s Ernie Bot has advantages in visual recognition, while Alibaba’s Qwen large model excels in cognitive understanding and content compliance. This “dual-core driven” model may aim to combine the strengths of both companies to meet the Chinese market’s data ecosystem, technological focus, and regulatory requirements, while allowing Apple to maintain dominance and bargaining power in the partnership. This move is seen as Apple’s strategy to counter local competitive pressures like HarmonyOS and to navigate stricter data regulations through “ecological niche partitioning.” (Source: 36Kr)

Professor Yu Jingyi’s In-depth Interpretation of Spatial Intelligence: Huge Potential, But Consensus Lacking, Data and Physical Understanding are Key: ShanghaiTech University Professor Yu Jingyi pointed out in an interview that the potential of large models in cross-modal integration is far from exhausted. Spatial intelligence is evolving from digital replication to intelligent understanding and creation, thanks to breakthroughs in generative AI. He believes the core challenges for spatial intelligence currently are the scarcity of real 3D scene data and the lack of a unified 3D representation method. His team’s CAST project explores inter-object relationships and physical plausibility by introducing “Actor-Network Theory” and physical rules. He emphasizes perception first and predicts revolutionary breakthroughs in sensor technology. The measure for embodied intelligence should be robustness and safety, not just pure accuracy. In the short term, spatial intelligence will explode in fields like film production and gaming; in the medium to long term, it will become the core of embodied intelligence, with the low-altitude economy also being an important application scenario. (Source: 36Kr)

AI Talent War Heats Up: Tech Giants Offer High Salaries, CTO Mentorship, Focusing on Large Models and Multimodality: Domestic and international tech giants are engaged in a fierce battle for AI talent. Companies like ByteDance, Alibaba, Tencent, Baidu, JD.com, and Huawei have launched recruitment programs targeting top PhD students and young prodigies, offering uncapped salaries, CTO mentorship, and no internship experience requirements. Recruitment focuses primarily on large models and multimodality, closely tied to each company’s core business scenarios. The success of models like DeepSeek has further intensified the industry’s demand for talent. Elon Musk has also lamented the frenzy of AI talent competition, with overseas giants like OpenAI similarly attracting talent with high salaries and founder-led recruitment. (Source: 36Kr)

Sequoia Capital: AI Market Potential Far Exceeds Cloud Computing, Application Layer is Key, Chief AI Officer to Become Standard: A Sequoia Capital partner predicts the AI market size will far surpass the current ~$400 billion cloud computing market, with enormous volume in the next 10-20 years, and value primarily concentrated in the application layer. Startups should focus on customer needs, provide end-to-end solutions, delve into vertical domains, and build moats using a “data flywheel.” AWS research shows global enterprises are accelerating their adoption of generative AI, with 45% of decision-makers planning it as their top priority for 2025. The Chief AI Officer (CAIO) position will become standard, with 60% of companies having already established it. The agent economy is seen as the next stage of AI development but needs to overcome three technical challenges: persistent identity, communication protocols, and security/trust. (Source: 36Kr)

New EV Makers Fully Bet on AI: Li Auto, Xpeng, NIO Compete for Next-Gen Auto Definition: Breakthroughs from Tesla’s FSD V12, which uses end-to-end neural network technology, have prompted Chinese new electric vehicle (EV) makers like Li Auto, Xpeng, and NIO to accelerate their AI deployment. Li Auto launched its VLA (Vision-Language-Action) driver large model and developed the language part based on the open-source DeepSeek model. Xpeng Motors built a 72-billion-parameter LVA base model. NIO released China’s first intelligent driving world model, NWM, and self-developed the 5nm autonomous driving chip Shenji NX9031. All are investing heavily in algorithms, computing power (self-developed chips), and data, and are generalizing AI technology to areas like humanoid robots, vying for the definition of next-generation automobiles and even products, but face funding and commercialization challenges. (Source: 36Kr)

🧰 Tools

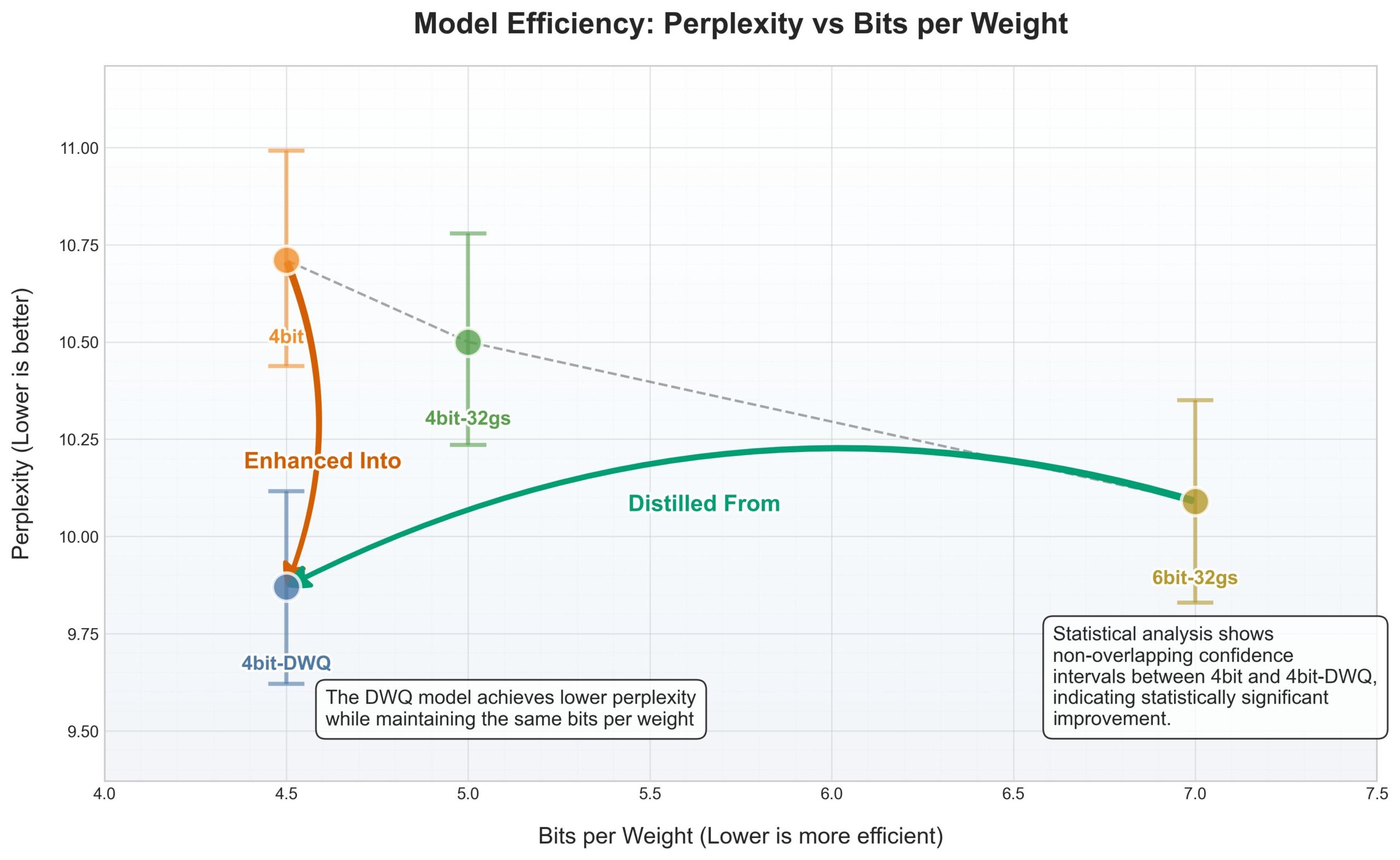

Apple MLX Framework Gets DWQ Quantization, 4-bit Outperforms Old 6-bit: A new DWQ (Distilled Weight Quantization) method has been released for Apple’s MLX machine learning framework. According to data shared by user karminski3, models quantized with 4-bit DWQ (such as Qwen3-30B) achieve better perplexity than the old 6-bit quantization method and require only 17GB of memory to run. This opens new possibilities for efficiently running large language models on Apple devices. (Source: karminski3)
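
A rough calculation shows why a figure around 17GB is plausible for a 4-bit model of this size. The sketch assumes about 30.5 billion parameters, group-wise quantization with a group size of 64, and a 16-bit scale and bias per group. These values are assumptions for illustration, not figures from the source, and the estimate ignores unquantized layers and the KV cache.

```python
def quantized_weight_gb(num_params, bits, group_size=64,
                        scale_bits=16, bias_bits=16):
    """Estimate weight storage in GB for group-wise quantization.

    Each group of `group_size` weights shares one scale and one bias,
    which adds (scale_bits + bias_bits) / group_size bits per weight.
    """
    bits_per_weight = bits + (scale_bits + bias_bits) / group_size
    return num_params * bits_per_weight / 8 / 1e9

params = 30.5e9  # assumed parameter count for a "30B" model
four_bit = quantized_weight_gb(params, bits=4)
six_bit = quantized_weight_gb(params, bits=6)
print(f"4-bit: {four_bit:.1f} GB, 6-bit: {six_bit:.1f} GB")
```

Under these assumptions the 4-bit weights take roughly 30% less memory than the 6-bit ones, so matching or beating 6-bit perplexity at 4 bits is a meaningful saving on memory-constrained machines.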

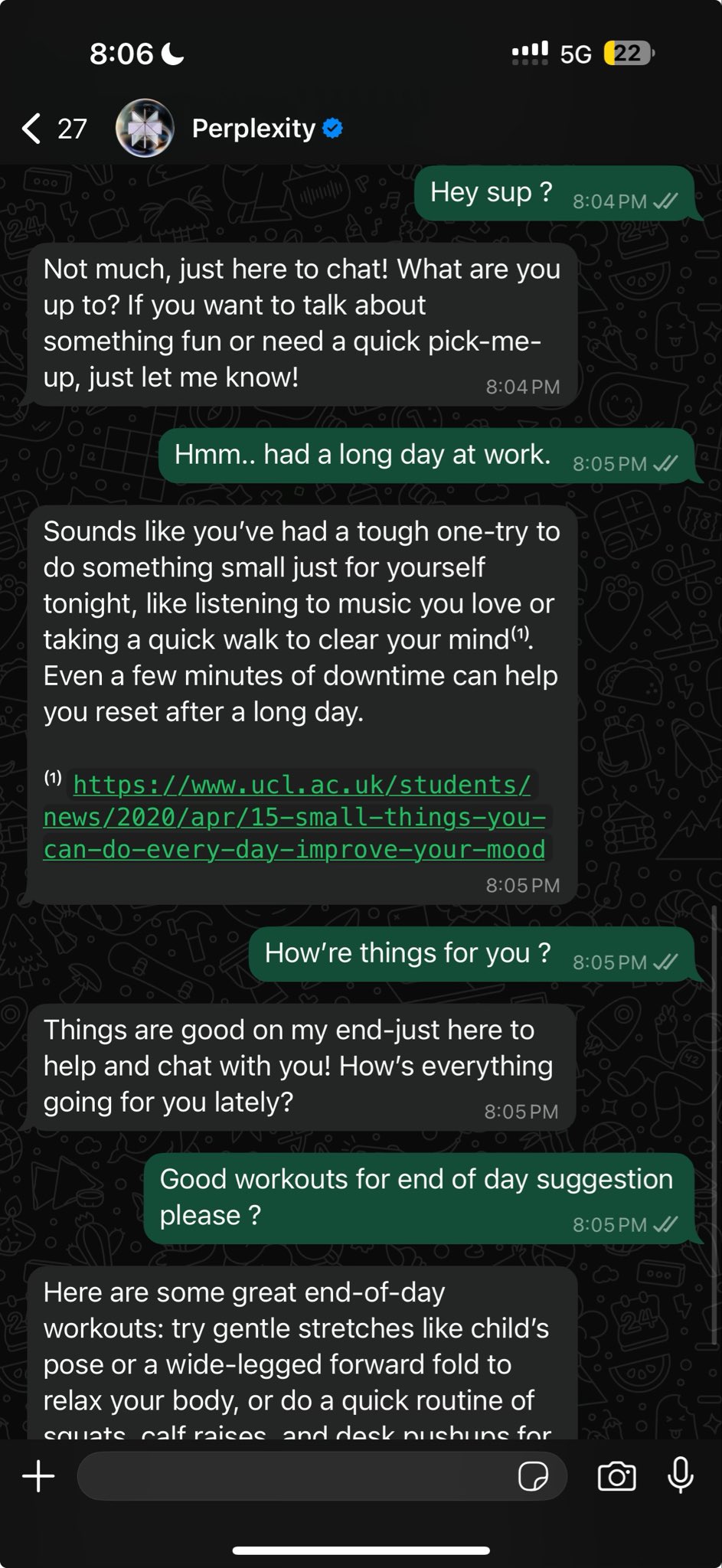

Perplexity Now Supports More Natural Conversational Search within WhatsApp: Perplexity co-founder Aravind Srinivas announced that Perplexity’s integration within WhatsApp has been improved to offer a more natural conversational experience. It now intelligently skips the search step when it is not needed, allowing users to interact directly with the AI in a chat-like manner. (Source: AravSrinivas)

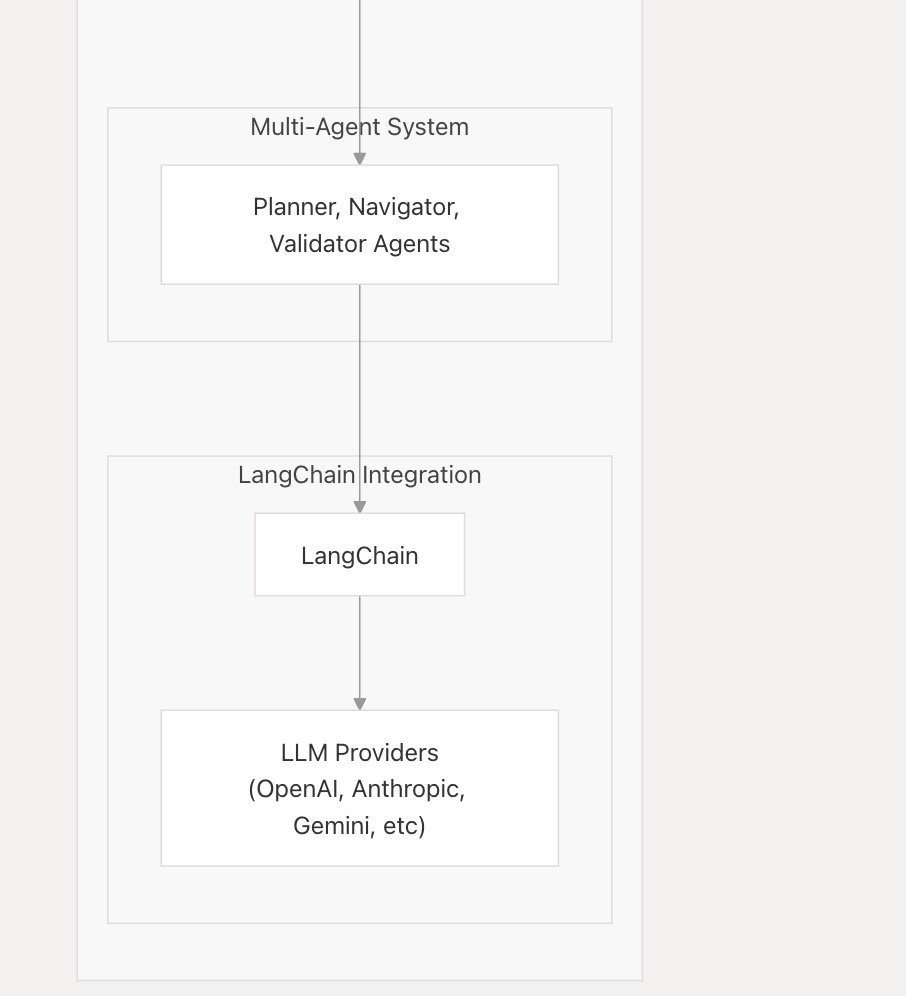

nanobrowser_ai Supports Mainstream LLMs, Integrates Langchain.js: AI tool nanobrowser_ai announced support for various large language models, including OpenAI models, Gemini, and local models run via Ollama. The tool utilizes the Langchain.js framework to achieve flexible support for different LLMs, offering users a wider range of model choices. (Source: hwchase17)

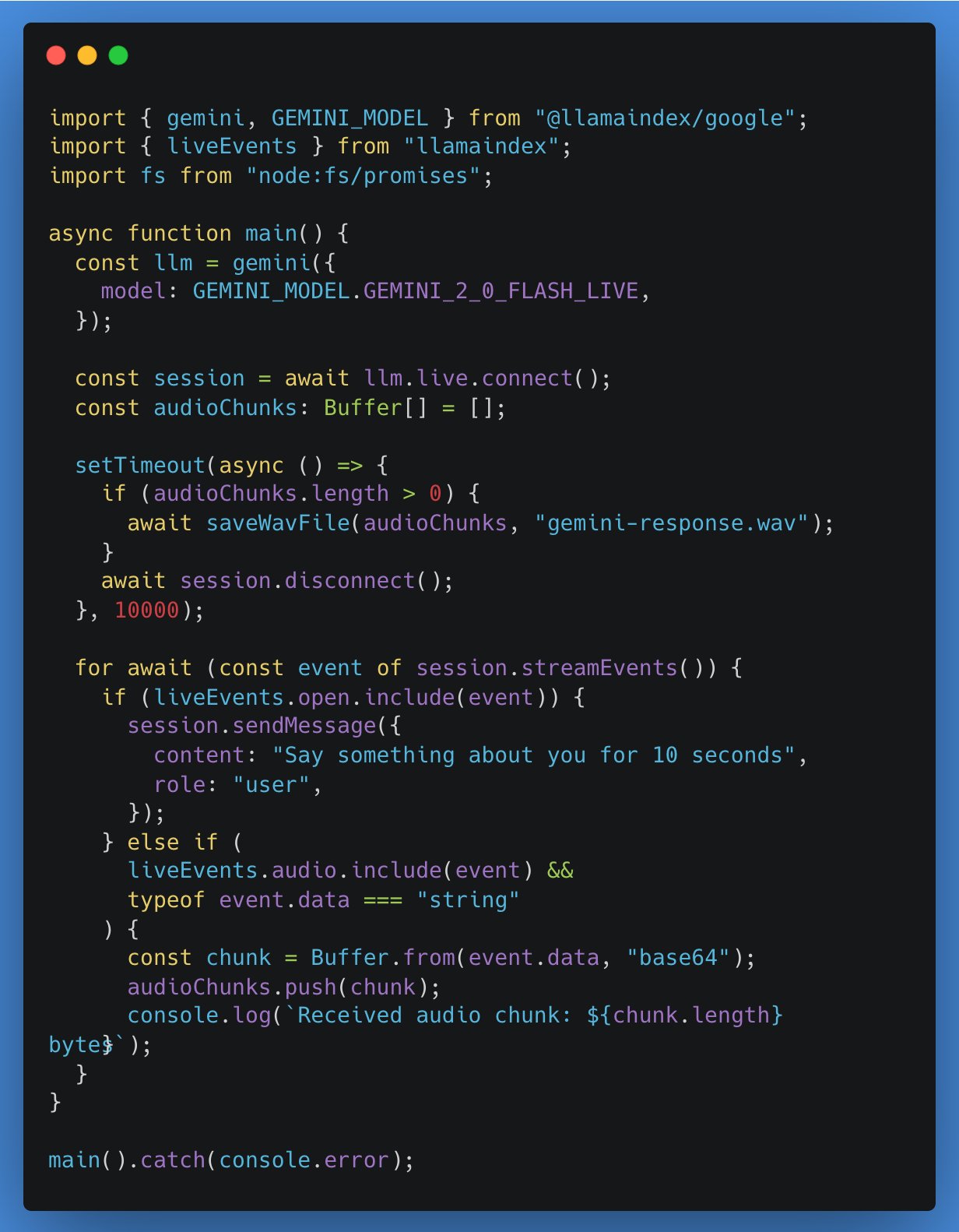

LlamaIndex TypeScript Adds Support for Real-time LLM APIs, First Integration with Google Gemini: LlamaIndex TypeScript announced support for real-time LLM APIs, enabling developers to implement real-time audio conversations in AI applications. The first integration is with Google Gemini’s real-time abstraction interface, with OpenAI’s real-time support coming soon. This update makes it easier for developers to switch between different real-time models and build more interactive AI applications. (Source: _philschmid)

Gradio Application Tutorial: Image/Video Annotation and Object Detection with Qwen2.5-VL: A tutorial details how to build a Gradio application using Qwen2.5-VL (Vision-Language Model) for automatic image and video annotation and object detection. The tutorial aims to help developers leverage the powerful capabilities of Qwen2.5-VL to quickly build interactive AI applications. (Source: Reddit r/deeplearning)

VSCode Plugin gemini-code Nears 50,000 Downloads: The VSCode AI programming assistant plugin, gemini-code, is approaching 50,000 downloads. Developer raizamrtn stated that some necessary updates will be made over the weekend. The plugin aims to leverage the capabilities of the Gemini model to assist developers with coding tasks. (Source: raizamrtn)

French AI Startup Arcads AI: 5-Person Team Achieves $5M ARR, Focuses on Automated Video Ad Creation: Paris-based AI startup Arcads AI, with a team of only 5, has achieved $5 million in annual recurring revenue and is profitable. The company provides advertisers with fast, low-cost, high-conversion video ad creation services through a highly automated AI system. Clients only need to provide core copy, and AI handles the entire process from scene construction, actor performance, voiceover recording, to final video output. The Arcads platform has over 300 AI actor avatars based on licensed real humans, supports 35 languages, and offers “Content-as-a-Service.” Its internal operations also extensively use AI agents, such as an AI Spy Agent for competitor analysis and an AI Ghostwriter for creative generation, significantly boosting efficiency. (Source: 36Kr)

📚 Learning

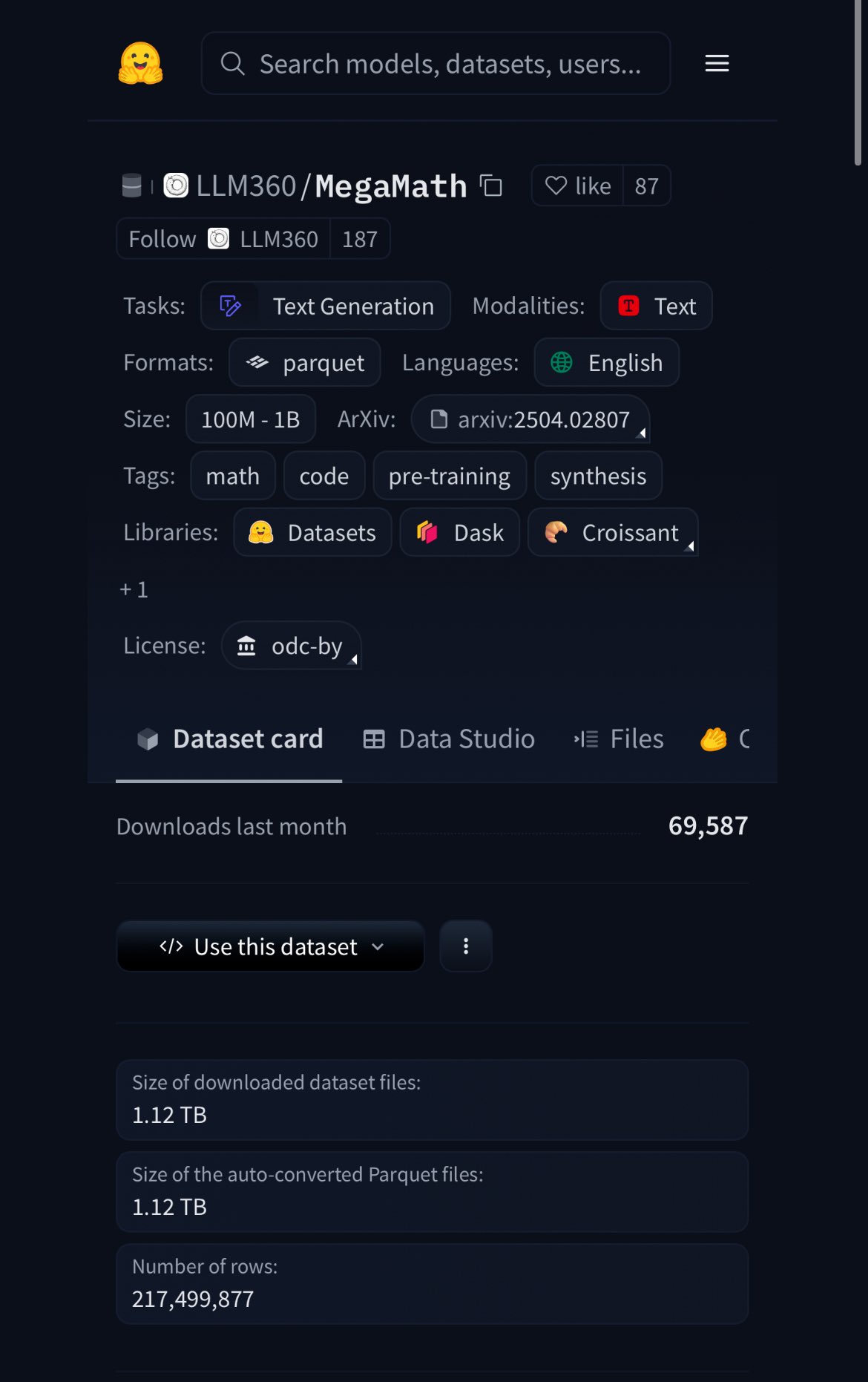

MegaMath Dataset Released on HuggingFace with 370B Tokens, 20% Synthetic Data: The MegaMath dataset has been released on HuggingFace. It contains 370 billion tokens, making it the largest mathematics pre-training dataset to date, approximately 100 times the size of the English Wikipedia. Notably, 20% of the data is synthetic, reigniting discussions about the role of high-quality synthetic data in model training. (Source: ClementDelangue)

Nous Research Hosts RL Environment Hackathon with $50,000 Prize Pool: Nous Research announced the Nous RL Environment Hackathon in San Francisco, where participants will build environments using Atropos, Nous’s reinforcement learning environment framework. The total prize pool is $50,000. Partners include xAI, NVIDIA, Nebius AI, and others. (Source: Teknium1)

HuggingFace Hot Models Weekly Chart Released: User karminski3 shared this week’s list of most popular models on HuggingFace, mentioning that he has tested or shared official demos for most of them. This reflects the community’s enthusiasm for quickly following and evaluating new models. (Source: karminski3)

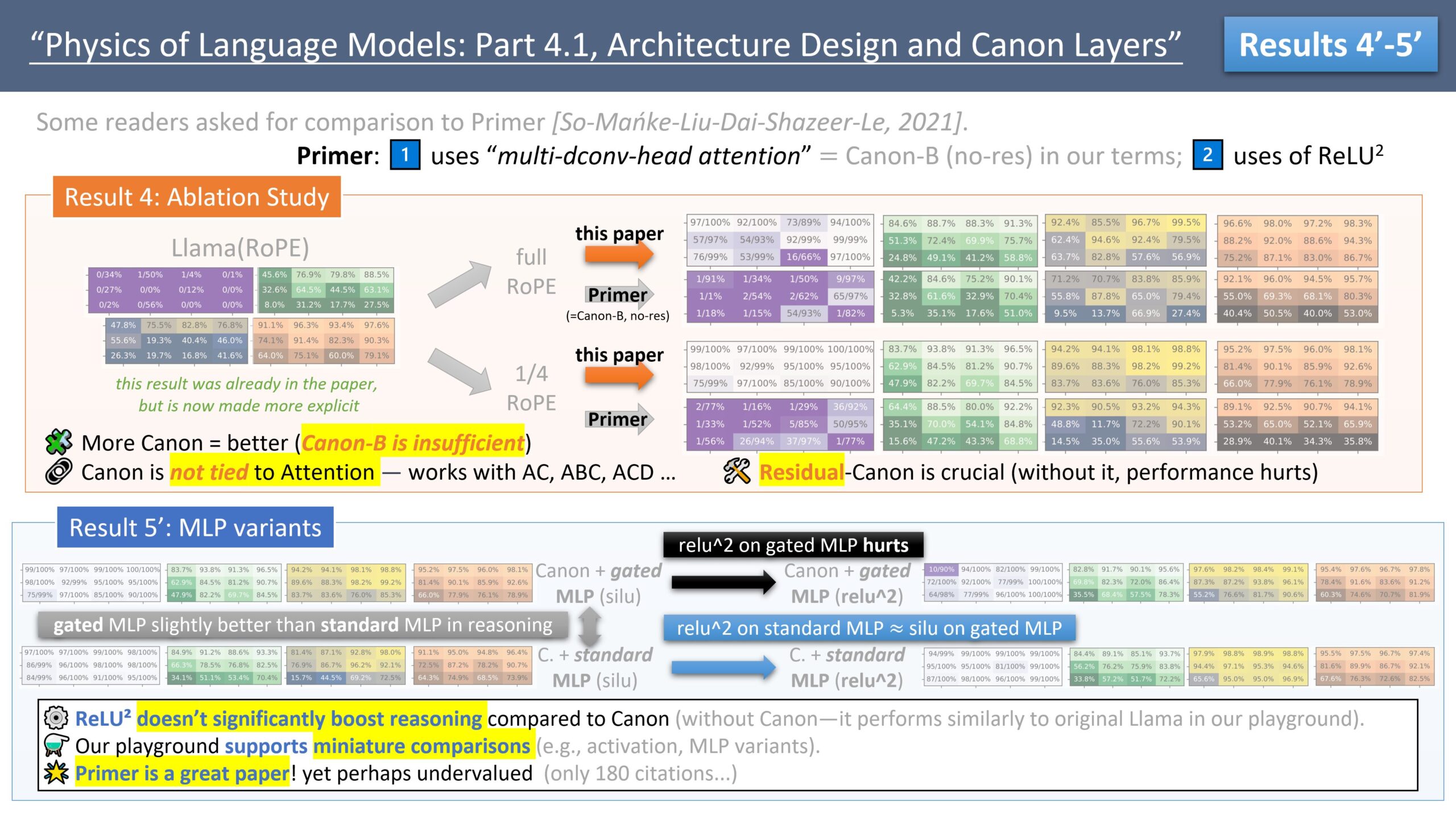

Zeyuan Allen-Zhu Publishes LLM Architecture Design Research Series, Discusses Primer Model: Researcher Zeyuan Allen-Zhu, through his “Physics of LLM Design” research series, uses controlled synthetic pre-training environments to reveal the true limits of LLM architectures. In his latest post, he discusses the Primer model (arxiv.org/abs/2109.08668) and its multi-dconv-head attention (which he calls Canon-B without residual connections). He points out its issues but also argues that the Primer paper (only 180 citations) is underrated, given that it extracted meaningful signals from noisy real-world experiments. (Source: ZeyuanAllenZhu, cloneofsimo)

Simons Institute Discusses Neural Scaling Laws: In its Polylogues series, the Simons Institute invited Anil Ananthaswamy and Alexander Rush to discuss the empirically discovered neural scaling laws of recent years. These laws have significantly influenced major companies’ decisions to build increasingly larger models. (Source: NandoDF)

François Fleuret Publishes “The Little Book of Deep Learning”: François Fleuret has published a book titled “The Little Book of Deep Learning,” aiming to provide readers with concise knowledge about deep learning. (Source: Reddit r/deeplearning)

Princeton Professor: AI May End Humanities, But Push Them Back to Existential Experience: Princeton University Professor D. Graham Burnett wrote in The New Yorker discussing AI’s impact on the humanities. He observed a widespread “AI shame” in US universities, where students are afraid to admit using AI. He believes AI has surpassed traditional academic methods in information retrieval and analysis, making academic books like archaeological artifacts. Although AI might end humanities as traditionally defined by knowledge production, it could also push them back to core questions: how to live, face death, and other existential experiences, which AI cannot directly address. (Source: 36Kr)

7 Studies Reveal AI’s Profound Impact on Human Brain and Behavior: A series of new studies explore AI’s impact on human psychological, social, and cognitive levels. Findings include: 1) LLM red team testers explore model vulnerabilities out of curiosity and moral responsibility; 2) ChatGPT shows high diagnostic accuracy in psychiatric case analysis; 3) ChatGPT’s political leanings subtly shift between versions; 4) ChatGPT use may exacerbate workplace inequality, with young, high-income males using it more; 5) AI can detect signs of depression by analyzing elderly driving behavior; 6) LLMs exhibit social desirability bias by “whitewashing” their image in personality tests; 7) Over-reliance on AI may weaken critical thinking, especially among younger groups. (Source: 36Kr)

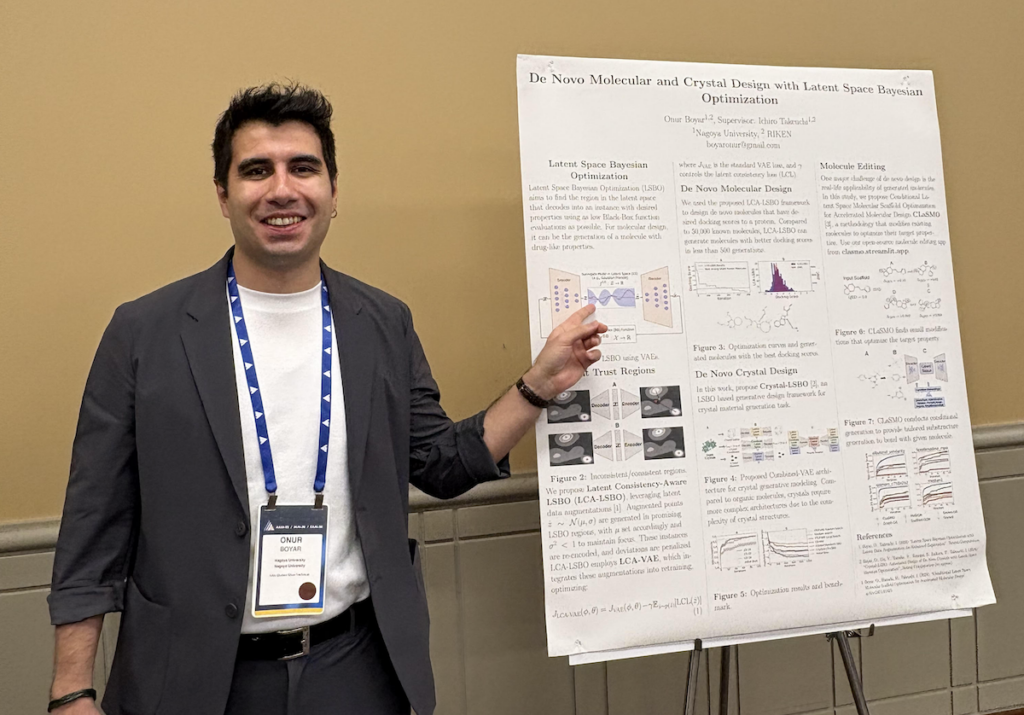

Interview with Onur Boyar: Drug and Material Design Using Generative Models and Bayesian Optimization: AAAI/SIGAI Doctoral Consortium participant Onur Boyar discussed his PhD research at Nagoya University, focusing on using generative models and Bayesian methods for drug and material design. He is involved in Japan’s Moonshot project, aiming to build AI scientist robots to handle drug discovery workflows. His research methods include using latent space Bayesian optimization to edit existing molecules, improving sample efficiency and synthetic feasibility. He emphasizes close collaboration with chemists and will join IBM Research Tokyo’s material discovery team after graduation. (Source: aihub.org)

💼 Business

Modular Partners with AMD for Mojo Hackathon Using MI300X GPUs: Modular announced a partnership with AMD to host a special hackathon at AGI House. Developers will program in the Mojo language using AMD Instinct™ MI300X GPUs. The event will also feature tech talks from representatives of Modular, AMD, Dylan Patel from SemiAnalysis, and Anthropic. (Source: clattner_llvm)

Stripe Releases Multiple AI-Driven New Features, Including AI Foundation Model for Payments: Financial services company Stripe announced several new products at its annual conference to accelerate AI adoption, including the world’s first AI foundation model specifically for the payments domain. Trained on tens of billions of transactions, the model aims to improve fraud detection (e.g., a 64% increase in detecting “card testing” attacks), authorization rates, and personalized checkout experiences. Stripe also expanded its multi-currency treasury capabilities and deepened collaborations with large enterprises like Nvidia (using Stripe Billing to manage GeForce Now subscriptions) and PepsiCo. (Source: 36Kr)

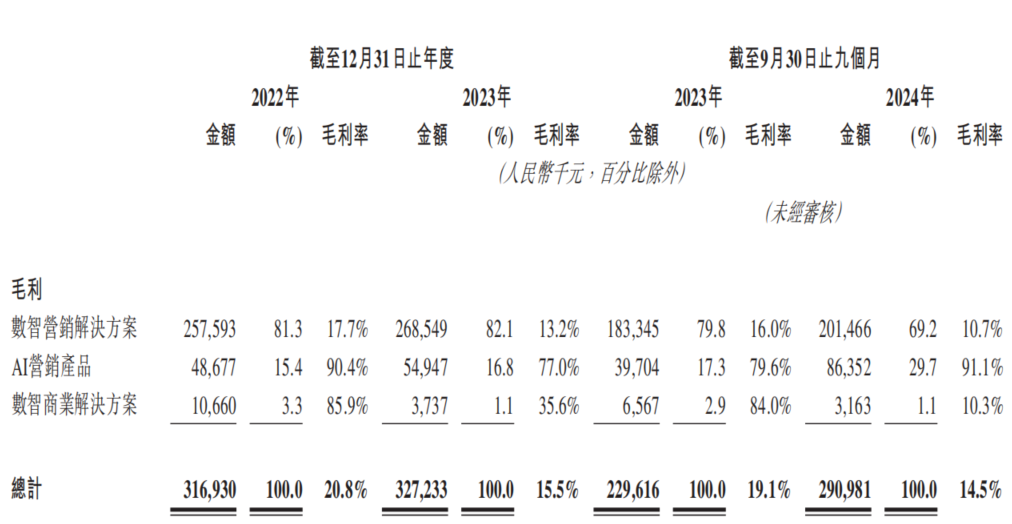

AI Marketing Company Dongxin Marketing Re-applies for Hong Kong IPO, Faces “Revenue Growth Without Profit” Dilemma: Dongxin Marketing, branding itself as “China’s largest AI marketing company,” has re-submitted its prospectus for a Hong Kong IPO. Data shows that while the company’s revenue continued to grow in the first three quarters of 2022-2024, its net profit significantly declined, even turning into a loss, and its gross margin fell from 20.8% to 14.5%. AI marketing business revenue accounts for less than 5%; although its gross margin is as high as 91.1%, it is insufficient to cover R&D investment. The company faces issues such as high accounts receivable, tight cash flow, and significant debt pressure, with profits heavily reliant on government subsidies. Its market positioning has shifted from a “mobile marketing service provider” to an “AI marketing company,” but the substance of its AI technology and commercialization prospects remain questionable. (Source: 36Kr)

🌟 Community

Intense Competition Between vLLM and SGLang Inference Engines, Developer Publicly Compares PR Merge Data: The developer community is hotly debating the competition between the vLLM and SGLang inference engines. A lead maintainer of vLLM even created a public dashboard to compare the number of merged pull requests (PRs) on GitHub between SGLang and vLLM, highlighting the fierce race in feature iteration and performance optimization. SGLang, on the other hand, emphasizes its pioneering open-source implementations in areas like radix cache, CPU overlap, MLA, and large-scale EP. (Source: dylan522p, jeremyphoward)

AI-Generated “Italian Brainrot” Character Universe Explodes Among Zoomers, Garnering Hundreds of Millions of Views: Justine Moore pointed out that a series of AI-generated “Italian brainrot” characters have become exceptionally popular among Zoomers (Gen Z). They have built an entire “cinematic universe” around these characters, with related content receiving hundreds of millions of views. This phenomenon reflects the powerful appeal and viral potential of AI-generated content among the younger generation, as well as the formation of specific subcultures. (Source: nptacek)

Qwen3 vs. DeepSeek R1 Model Comparison Sparks Discussion, Each Has Pros and Cons: A Reddit user shared a test comparison between the Qwen3 235B and DeepSeek R1 open-source large models. The poster believes Qwen performs better on simple tasks, but DeepSeek R1 excels in tasks requiring nuance (like reasoning, math, and creative writing). In community comments, users discussed DeepSeek R1’s accessibility, uncensored fine-tuned versions of Qwen3 235B, and the rationality of using language models for creative writing. (Source: Reddit r/LocalLLaMA)

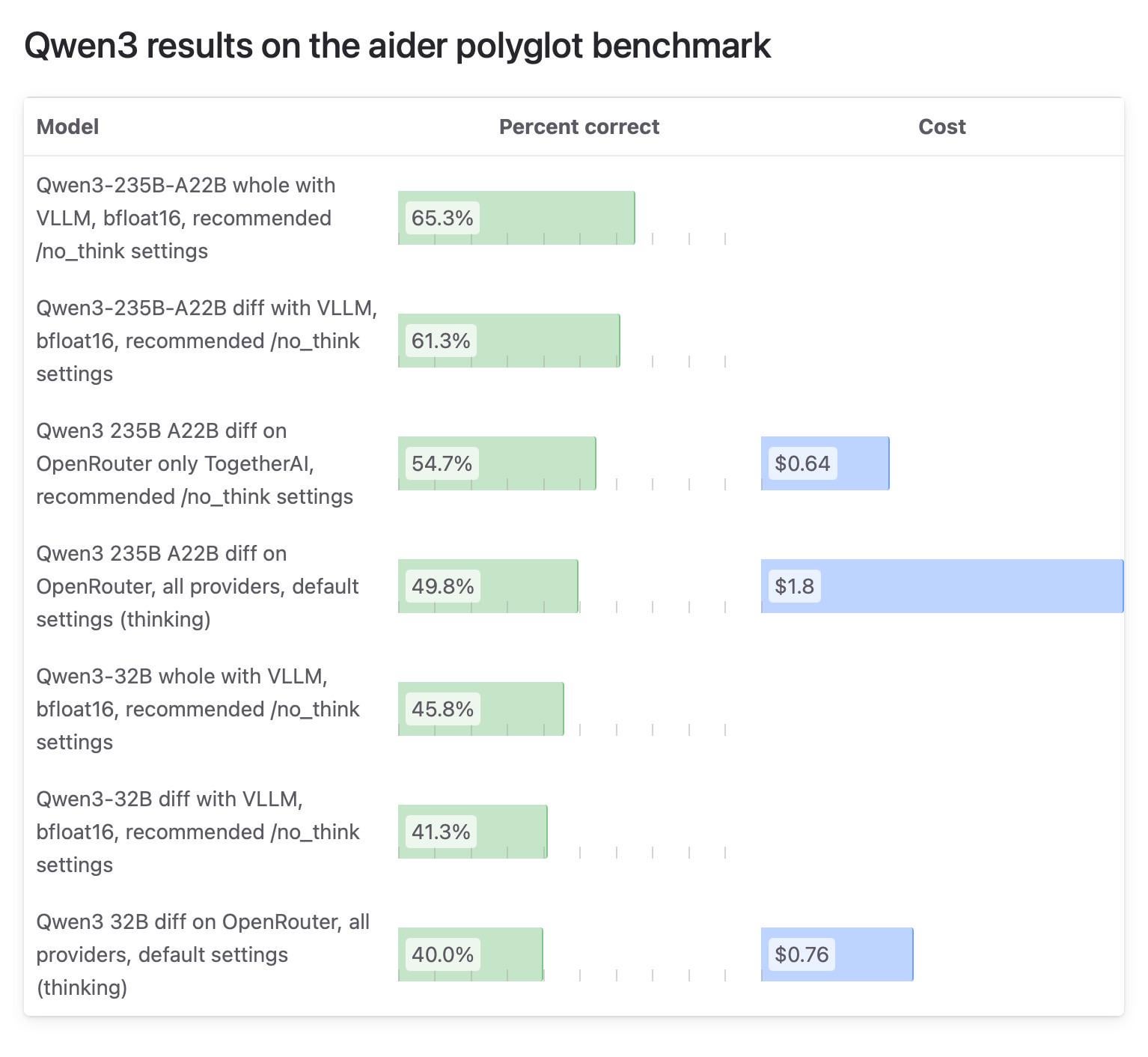

Aider Community Highlights Discrepancies in Qwen3 Model Test Results, OpenRouter Testing Questioned: The Aider blog published a test report on the Qwen3 model, noting significant score differences depending on how the model is run. Community discussion focused on the reliability of testing models using OpenRouter, as most users might access models through it, but its routing mechanism could lead to inconsistent results. Some users argue that open-source models should be tested in standardized self-hosted environments (like vLLM) to ensure reproducibility and called for API providers to increase transparency regarding quantization versions and inference engines used. (Source: Reddit r/LocalLLaMA)

Users Share Personal Reasons for Paying for ChatGPT, Covering Life Assistance, Learning, Creation, etc.: In the Reddit r/ChatGPT community, many users shared their personal uses for subscribing to ChatGPT Plus/Pro. These include: helping visually impaired users by describing images and reading food packaging and street signs; preparing for interviews; gaining in-depth understanding of game plots like Elden Ring; analyzing running training plans and customizing recipes; assisting in learning new skills like pottery; serving as a personal companion; planning gardens and making herbal remedies; and creating D&D characters and writing fan fiction. These cases demonstrate ChatGPT’s wide-ranging value in daily life and personal interests. (Source: Reddit r/ChatGPT)

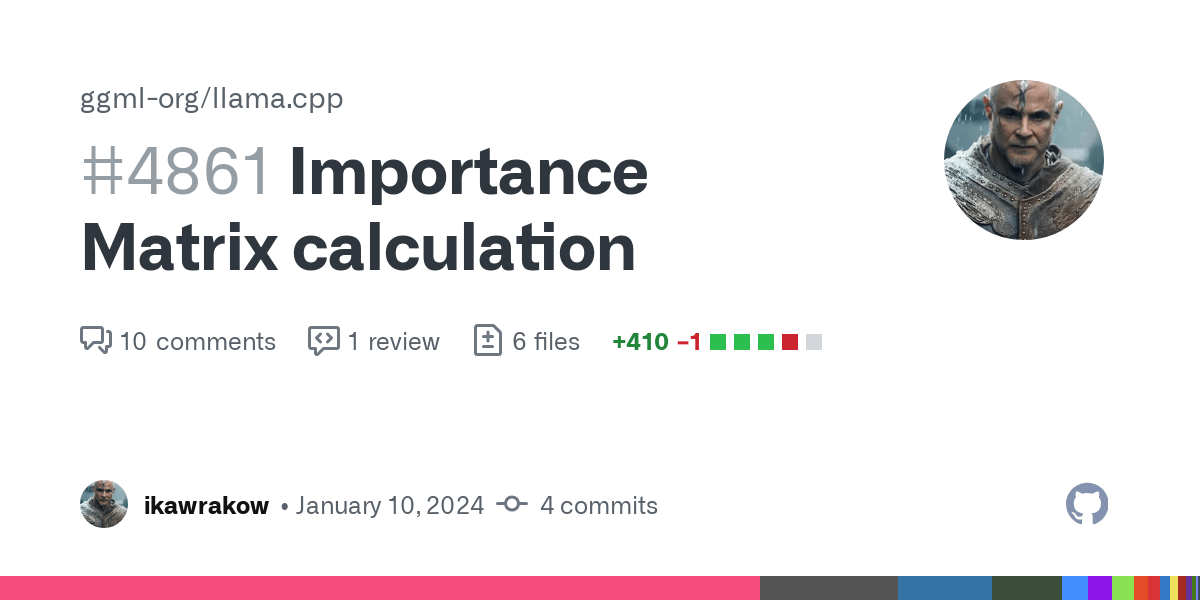

GGUF Quantized Model Comparison Test Sparks “Quant Wars” Discussion, Emphasizing Different Schemes Have Merits: Reddit user ubergarm posted a detailed benchmark comparison of different GGUF quantization versions for models like Qwen3-30B-A3B, including schemes from providers like bartowski and unsloth. The tests covered multiple dimensions such as perplexity, KL divergence (KLD), and inference speed. The post points out that with the emergence of new quantization types and methods such as importance matrix quantization (imatrix), IQ4_XS, and unsloth dynamic GGUF, GGUF quantization is no longer “one size fits all.” The author emphasizes that there is no single best quantization scheme; users need to choose based on their hardware and specific use case, but overall, the major schemes perform well. (Source: Reddit r/LocalLLaMA)
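
For readers unfamiliar with the two quality metrics mentioned above, the following sketch shows how they are commonly defined. It is a plain-Python illustration with made-up numbers, not the benchmark code from the post: perplexity is the exponential of the average negative log-likelihood per token, and KL divergence measures how far a quantized model’s next-token distribution drifts from the baseline’s.

```python
import math

def perplexity(token_logprobs):
    """exp of the mean negative log-probability assigned to the true tokens."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

def kl_divergence(p, q):
    """KL(p || q) in nats for two discrete distributions over the same tokens."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical next-token distributions over a three-token vocabulary.
baseline = [0.7, 0.2, 0.1]     # full-precision model
quantized = [0.6, 0.25, 0.15]  # quantized model

drift = kl_divergence(baseline, quantized)
ppl = perplexity([math.log(0.5)] * 4)  # a model that is 50% sure of every token
```

Lower is better for both metrics. A KLD near zero means the quantized model’s predictions are almost indistinguishable from the original’s, which can be more informative than perplexity alone when comparing schemes with similar scores.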

💡 Other

Daimon Robotics Introduces Mind-Dexterous Robot Sparky 1: Daimon Robotics showcased Sparky 1, its breakthrough product in dexterous robotics. The robot is described as having “Mind-Dexterous” capabilities, suggesting a new level of performance in perception, decision-making, and fine manipulation, likely integrating advanced AI and machine learning technologies. (Source: Ronald_vanLoon)

MIT Develops Grain-Sized Microrobot to Treat Inoperable Brain Tumors: MIT researchers have developed a grain-of-rice-sized microrobot with the potential to enter the brain via minimally invasive methods to treat tumors previously difficult to remove surgically. Such technology combines microrobotics with AI navigation or control, offering new possibilities for neurosurgery and cancer treatment. (Source: Ronald_vanLoon)

Oushark Intelligence Completes Two Funding Rounds, Pushing Consumer-Grade Exoskeleton Robot Mass Production and AI Integration: Exoskeleton robot technology platform company Oushark Intelligence (傲鲨智能) announced the completion of two consecutive funding rounds, led by BinFu Capital, with existing shareholder Guoyi Capital participating. The funds will be used for mass production of consumer-grade exoskeleton robots and to promote the integration of exoskeleton hardware with AI technology. The company’s products are already used in industrial scenarios, and it is beginning to explore outdoor assistance (such as mountain climbing aids in scenic areas) and home care for the elderly, with plans to launch consumer-grade products priced under 10,000 RMB. Its latest products are equipped with AI large model training capabilities, and the company is conducting early research into brain-computer interface technology. (Source: 36Kr)