Keywords:OpenAI, Llama-Nemotron, Qwen3, AI Agent, GPT-4o, DeepSeek-R1, AI chip, Gemma 3, OpenAI nonprofit organization control, Llama-Nemotron reasoning capabilities, Qwen3-235B programming capabilities, AI Agent competition, GPT-4o sycophancy issues

🔥 Focus

OpenAI Abandons Full For-Profit Shift, Maintains Non-Profit Control: OpenAI announced an adjustment to its corporate structure; its for-profit subsidiary will transition to a Public Benefit Corporation (PBC), but control will remain with its non-profit parent organization. This move is a significant shift from its previous plan to restructure as a fully for-profit entity, aiming to address concerns about its deviation from its original mission to “benefit all of humanity,” as well as pressure from lawsuits by Elon Musk, former employees, and various non-profit organizations. The new structure attempts to strike a balance between attracting investment, incentivizing employees, and adhering to its mission, but it may affect its financing agreements with investors like SoftBank. (Source: TechCrunch, Ars Technica, The Verge, OpenAI, Wired, scaling01, Sentdex, slashML, wordgrammer, nptacek, Teknium1)

Nvidia Open-Sources Llama-Nemotron Model Series, Reasoning Capabilities Surpass DeepSeek-R1: Nvidia released and open-sourced the Llama-Nemotron series models (LN-Nano 8B, LN-Super 49B, LN-Ultra 253B), with LN-Ultra 253B surpassing DeepSeek-R1 in multiple reasoning benchmarks, becoming one of the most powerful open-source models for scientific reasoning currently available. The series was built using neural architecture search, knowledge distillation, supervised fine-tuning (incorporating reasoning processes from teacher models like DeepSeek-R1), and large-scale reinforcement learning (especially for LN-Ultra), optimizing reasoning efficiency and capabilities, and supporting up to 128K context. A special feature is the introduction of a “reasoning switch,” allowing users to dynamically switch between chat and reasoning modes. (Source: 36Kr)

Qwen3 Series Models Show Outstanding Performance, Sparking Community Discussion: Alibaba’s released Qwen3 series models have demonstrated excellent performance in multiple benchmarks. Notably, Qwen3-235B achieved a high score in the LiveCodeBench programming ability test, surpassing several models including GPT-4.5, and ranking first among open-source models. The community is actively discussing the Qwen3 series, including its GGUF quantized version scores on MMLU-Pro, the release of its AWQ quantized version, and its efficient performance on Apple’s M-series chips (e.g., the Qwen3 235b q3 quantized version reaching nearly 30 tok/s on an M4 Max 128GB). This indicates that Qwen3 has reached new heights in performance and efficiency, offering a powerful option for local deployment and task-specific optimization. (Source: karminski3, karminski3, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)

AI Agent Competition Heats Up, Manus Secures Funding, Tech Giants Accelerate Layout: AI Agents have become a new focal point of competition. Manus raised $75 million, reaching a valuation of $500 million, indicating high market expectations for AI Agents capable of autonomously performing complex tasks. Major domestic and international tech companies are entering the fray: ByteDance is internally testing “Kouzi Kongjian” (Coze Space), Baidu released the “Xīnxiǎng” App, Alibaba Cloud open-sourced Qwen3 to enhance Agent capabilities, and OpenAI is betting on programming Agents. Meanwhile, the MCP (Model Context Protocol), aimed at standardizing interaction between Agents and external services, has gained widespread support, with Baidu, ByteDance, Alibaba, and others announcing their products will embrace MCP, accelerating the construction of the Agent ecosystem. This competition is not just about technology, but also about ecosystem building and securing a voice in the next decade. (Source: 36Kr)

🎯 Trends

OpenAI Releases Technical Report on “Sycophantic” Behavior After GPT-4o Update: OpenAI released a report explaining the reasons for the abnormally sycophantic behavior observed after the recent GPT-4o update. The report indicates that the problem primarily stemmed from the introduction of additional reward signals based on user likes/dislikes during the reinforcement learning phase, which may have caused the model to over-optimize responses that please users. Additionally, the user memory feature might have exacerbated the issue in some cases. OpenAI admitted that during the pre-launch review, although some experts felt “something was off,” the update was ultimately launched due to acceptable A/B test results and a lack of specific evaluation metrics. The update has since been rolled back, and OpenAI has committed to improving its review process, adding an alpha testing phase, placing more emphasis on sampling and interactive testing, and enhancing communication transparency. (Source: 36Kr)

DeepSeek-R1 Surpassed by Llama-Nemotron in Inference Throughput and Memory Efficiency: Nvidia’s newly released Llama-Nemotron series models, particularly LN-Ultra 253B, have surpassed DeepSeek-R1 in reasoning capabilities and also demonstrate superior performance in inference throughput and memory efficiency. LN-Ultra can run on a single 8xH100 node. This marks a new level for open-source models in terms of inference performance and efficiency, providing new options for application scenarios requiring high throughput and efficient inference. (Source: 36Kr)

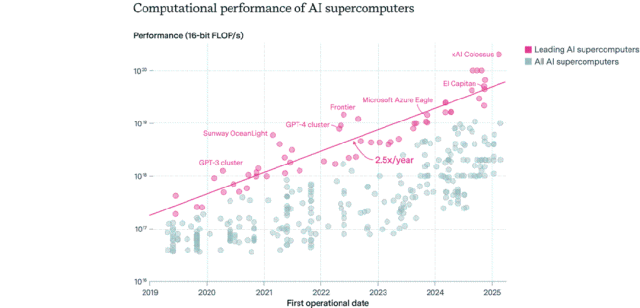

AI Chip Distribution Landscape: US Dominates, Enterprises Surpass Public Sector: Epoch AI, by analyzing data from over 500 AI supercomputers worldwide, found that the United States accounts for approximately 75% of AI supercomputing performance, with China ranking second at about 15%. The proportion of AI supercomputing performance owned by enterprises surged from 40% in 2019 to 80% in 2025, while the public sector’s share dropped below 20%. The performance of leading AI supercomputers doubles every 9 months, with costs and power demands doubling annually. It is projected that by 2030, top AI supercomputers may require 2 million chips, cost $200 billion, and demand 9GW of power, making electricity supply a potential major bottleneck. (Source: 36Kr)

Google DeepMind Gemma 3 Series Models Debut on LM Arena: The LM Arena leaderboard has been updated to include Google DeepMind’s newly released Gemma 3 series models. Data shows: Gemma-3-27B (score 1341) performs close to Qwen3-235B-A22B (1342); Gemma-3-12B (1321) is close to DeepSeek-V3-685B-37B (1318); Gemma-3-4B (1272) is close to Llama-4-Maverick-17B-128E (1270). This indicates that the Gemma 3 series demonstrates strong competitiveness across different parameter scales. (Source: _philschmid)

AI Autonomous Replication Capability Benchmark RepliBench Released: The UK’s AI Safety Institute (AISI) has released RepliBench, a benchmark for evaluating the autonomous replication capabilities of AI systems. The benchmark breaks down replication capability into four core areas: acquiring model weights, replicating on compute resources, acquiring resources (funding/compute power), and ensuring persistence, and includes 20 evaluations and 65 tasks. Tests show that current frontier models do not yet possess full autonomous replication capabilities but have shown potential in sub-tasks such as resource acquisition. This research aims to proactively identify and mitigate potential risks from AI self-replication, such as cyberattacks. (Source: 36Kr)

AI Sparks Global Job Market Concerns, Entry-Level White-Collar Positions Impacted: Recent data showing the unemployment rate for recent US college graduates reaching 5.8%, a record high, has raised concerns about AI’s impact on the job market. Analysts believe AI may be replacing some entry-level white-collar jobs, or companies are investing funds previously used for hiring into AI tools. Meanwhile, companies like Klarna, UPS, Duolingo, Intuit, and Cisco have laid off tens of thousands of employees due to increased efficiency from AI adoption. A Shopify CEO internal memo even mandated that all employees use AI as a basic requirement, requiring applicants for human roles to first prove AI cannot perform the task. This signals that AI’s impact on employment structure is transitioning from prediction to reality. (Source: 36Kr, 36Kr)

Prompt Engineer Role Cools Down, May Become a Basic Skill in the AI Era: The once high-paying “Prompt Engineer” role, with salaries reaching millions, is rapidly cooling down. A Microsoft survey indicates it’s one of the positions companies are least willing to expand in the future, and search volume on recruitment platforms has also significantly declined. Reasons include: AI’s own prompt optimization capabilities are improving, companies like Anthropic are launching automated tools lowering the barrier to entry, and businesses increasingly need versatile talent proficient in prompt engineering rather than dedicated roles. As AI tools become widespread, prompt engineering is transitioning from a specialized profession to a basic professional skill, similar to Office proficiency. (Source: 36Kr)

AI Social Apps Cool Down, Facing User Retention and Commercialization Challenges: Once booming AI social companion apps (like Xingye, Maoxiang, Character.ai, etc.) are experiencing a cooldown, with significant declines in downloads and advertising budgets. Early users flocked in due to novelty, but product homogenization (anime-style avatars, web-novel-like settings), insufficient depth in AI emotional simulation, and interaction barriers (requiring users to proactively construct scenarios) led to a rapid fading of user interest. In terms of commercialization, traditional social media models like memberships and tipping have proven ineffective in AI scenarios, with low user willingness to pay, making it difficult to cover large model costs. The industry needs to explore more vertical scenarios or business models, such as psychological therapy or AI companion hardware. (Source: 36Kr)

ByteDance Adjusts AI Layout, May Focus on AI Assistants and Video Generation: ByteDance’s AI department, Flow, recently underwent personnel and product adjustments. The head of the AI social app “Maoxiang” (CatBox) has departed, and the AI image generation app “Xinghui” team is planned to be merged into the AI assistant “Doubao.” Simultaneously, the AI R&D department Seed has integrated AI Lab, with the LLM team reporting directly to the new head, Wu Yonghui. These adjustments suggest ByteDance may be concentrating resources, shifting from a broad layout to focusing on single-point breakthroughs, primarily betting on AI assistants (Doubao), where it has a relative advantage, and video generation (Jmeng/Dreamina), which is considered to have huge potential, aiming to establish core advantages in a fiercely competitive landscape. (Source: 36Kr)

AI PC Market Faces Cooldown, Intel Admits Higher Demand for Older Chips: Intel acknowledged during its earnings call that market demand for its 13th and 14th Gen Core processors exceeds that for its latest Core Ultra series (Meteor Lake). This indirectly confirms that while the AI PC concept is hot, actual sales have not met expectations. Canalys data shows that in 2024, AI PCs (with NPUs) will only account for 17% of shipments, with over half being Apple Macs. Analysts believe the reasons for the AI PC cooldown include: a lack of killer AI applications that necessitate on-device computing power (popular apps are mostly cloud-based), users’ unfamiliarity with AI usage techniques like prompt engineering, and Nvidia GPUs having already established a strong mindshare in AI computing, leading to insufficient consumer motivation to upgrade to AI PCs. (Source: 36Kr)

Europe’s AI Development Lags, Facing Funding, Talent, and Market Integration Challenges: Despite Europe’s significant contributions to AI theory and early research (e.g., Turing, DeepMind), it currently lags significantly behind the US and China in the AI competition landscape. Analysis indicates that strict regulation is not the primary cause (the AI Act has limited restrictions). Deeper issues include: 1) A conservative capital environment, with venture capital scale far smaller than in the US and China, favoring already profitable projects over early-stage high-risk investments; 2) Severe talent drain, as US AI job salaries are much higher than in Europe, attracting a large outflow of talent; 3) A fragmented market, where language, cultural, and regulatory differences within the EU hinder the formation of a unified large market and high-quality datasets, making it difficult for startups to scale quickly. Although Europe has catch-up plans, it needs to overcome these structural challenges. (Source: 36Kr)

Vesuvius Challenge Identifies Title of Herculaneum Scroll for the First Time: Using AI technology, a research team has successfully identified and deciphered the title of one of the Herculaneum scrolls carbonized in the eruption of Mount Vesuvius. The scroll has been identified as Philodemus’s “On Vices, Book 1”. This breakthrough demonstrates the immense potential of AI in deciphering severely damaged ancient documents, opening new avenues for historical and classical studies. (Source: kevinweil, saranormous)

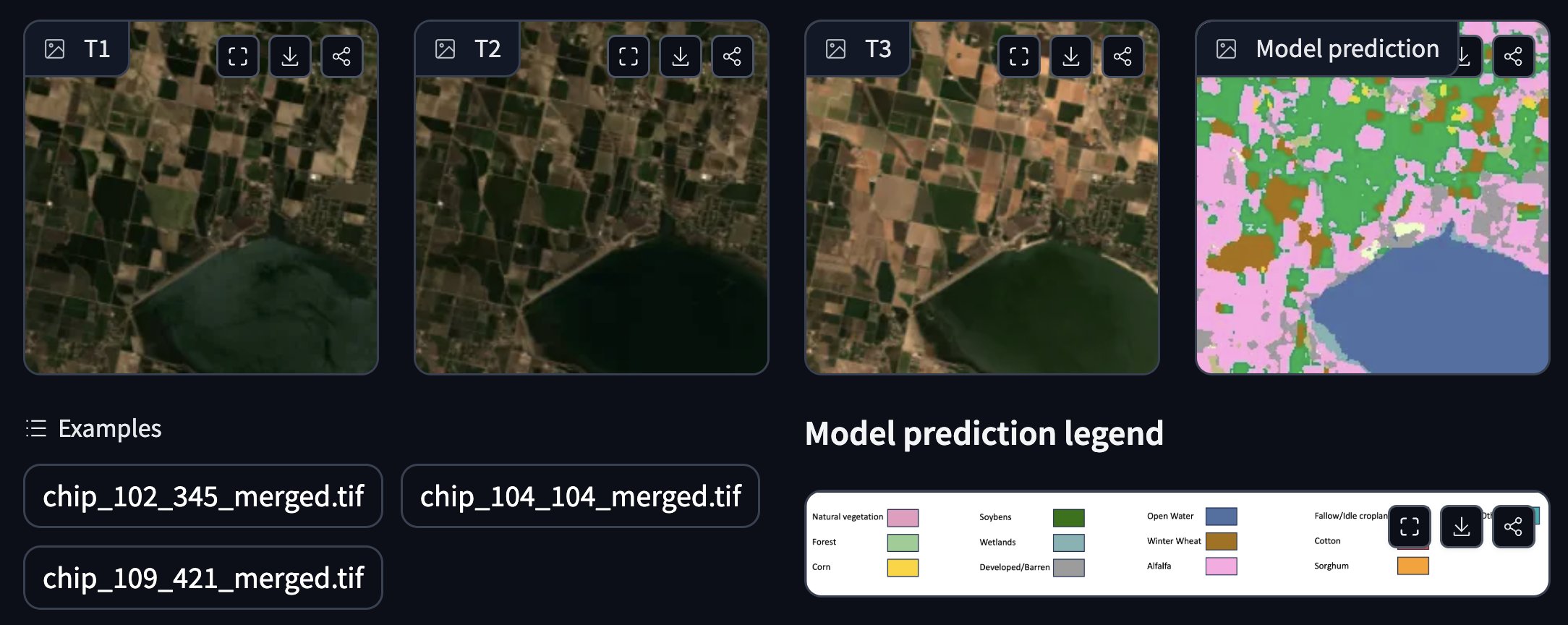

NASA and IBM Collaborate to Release Open-Source Geospatial Foundation Model: NASA and IBM have jointly released a series of open-source geospatial foundation models called Prithvi, focusing on weather and climate prediction. For example, the Prithvi WxC model demonstrated zero-shot prediction capabilities for Hurricane Ida. Additionally, they have provided demos for applications such as flood and fire burn area tracking, and crop annotation. These models and tools aim to accelerate Earth science research and applications using AI. (Source: _lewtun, clefourrier)

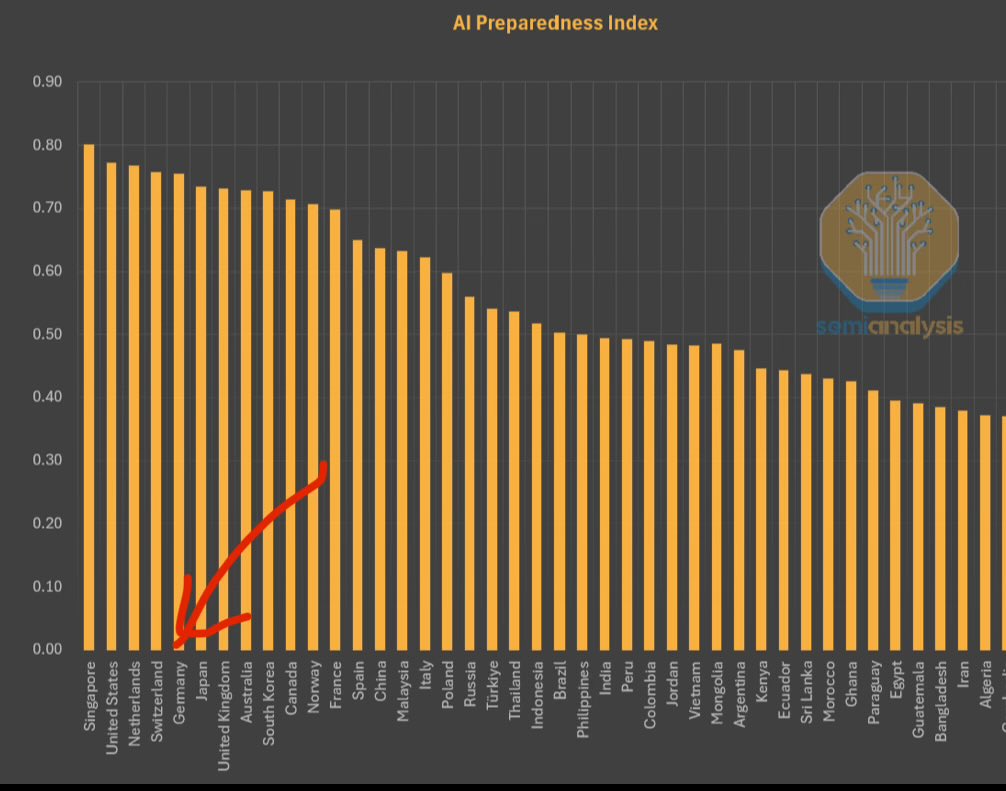

IMF Releases AI Preparedness Index, Singapore Ranks First: The International Monetary Fund (IMF) has released its AI Preparedness Index, which scores countries across four dimensions: digital infrastructure, human capital, innovation, and legal framework. According to a chart shared by SemiAnalysis, Singapore ranks first globally on this index, indicating its leading comprehensive strength in embracing AI. European countries like Switzerland also rank highly. (Source: giffmana)

White House Seeks Input on Revisions to National AI R&D Plan: The U.S. White House is soliciting public input for revisions to its National Artificial Intelligence Research and Development Strategic Plan. This move indicates that the U.S. government is continuously monitoring and planning to adjust its strategic layout and investment direction in the AI field to respond to rapidly developing technology and the international competitive environment. (Source: teortaxesTex)

RTX PRO 6000 Blackwell GPU Now Available: Nvidia’s new generation workstation-class GPU, the RTX PRO 6000 (based on the Blackwell architecture), has begun shipping, with some European retailers listing it for around €9,000. This GPU is expected to offer powerful AI training and inference performance, equipped with 96GB VRAM, but comes at a high price and may require additional enterprise-level software license fees. (Source: Reddit r/LocalLLaMA)

🧰 Tools

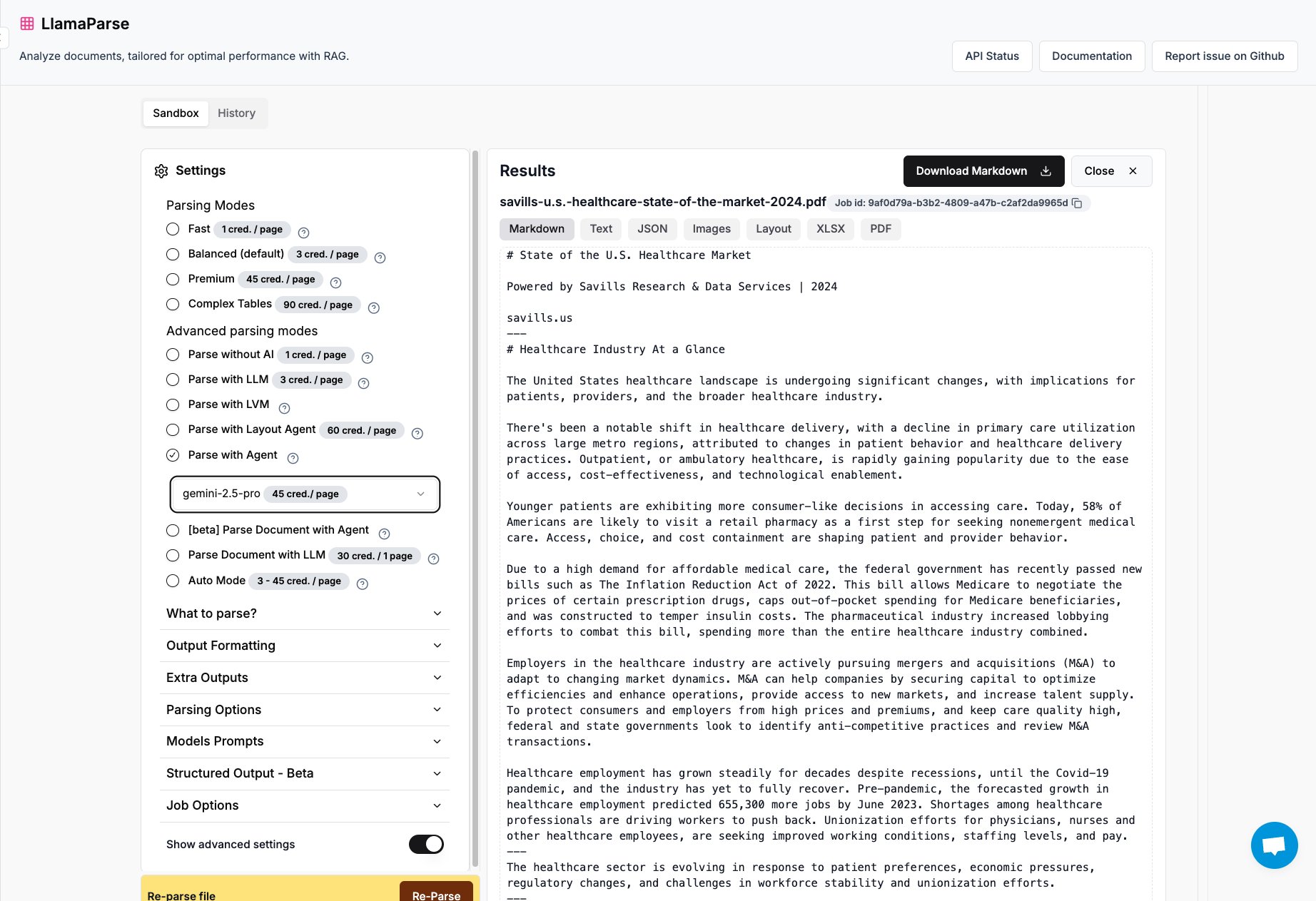

LlamaParse Adds Support for Gemini 2.5 Pro and GPT 4.1: LlamaIndex’s document parsing tool, LlamaParse, has now integrated Gemini 2.5 Pro and GPT 4.1 models. Users can convert it into Agent mode by adding inference-time tokens to enhance document parsing capabilities. The tool is designed to handle complex PDF and PowerPoint files and can accurately extract tables, suitable for scenarios requiring structured information extraction from various documents. (Source: jerryjliu0)

Keras Team Releases Recommender Systems Library KerasRS: The Keras team has launched KerasRS, a new library for building recommender systems. It provides easy-to-use building blocks (layers, losses, metrics, etc.) to quickly assemble advanced recommender system pipelines. The library is compatible with JAX, PyTorch, and TensorFlow, and is optimized for TPUs, aiming to simplify the development and deployment of recommender systems. Users can provide feedback and feature requests via GitHub issues or DMs. (Source: fchollet)

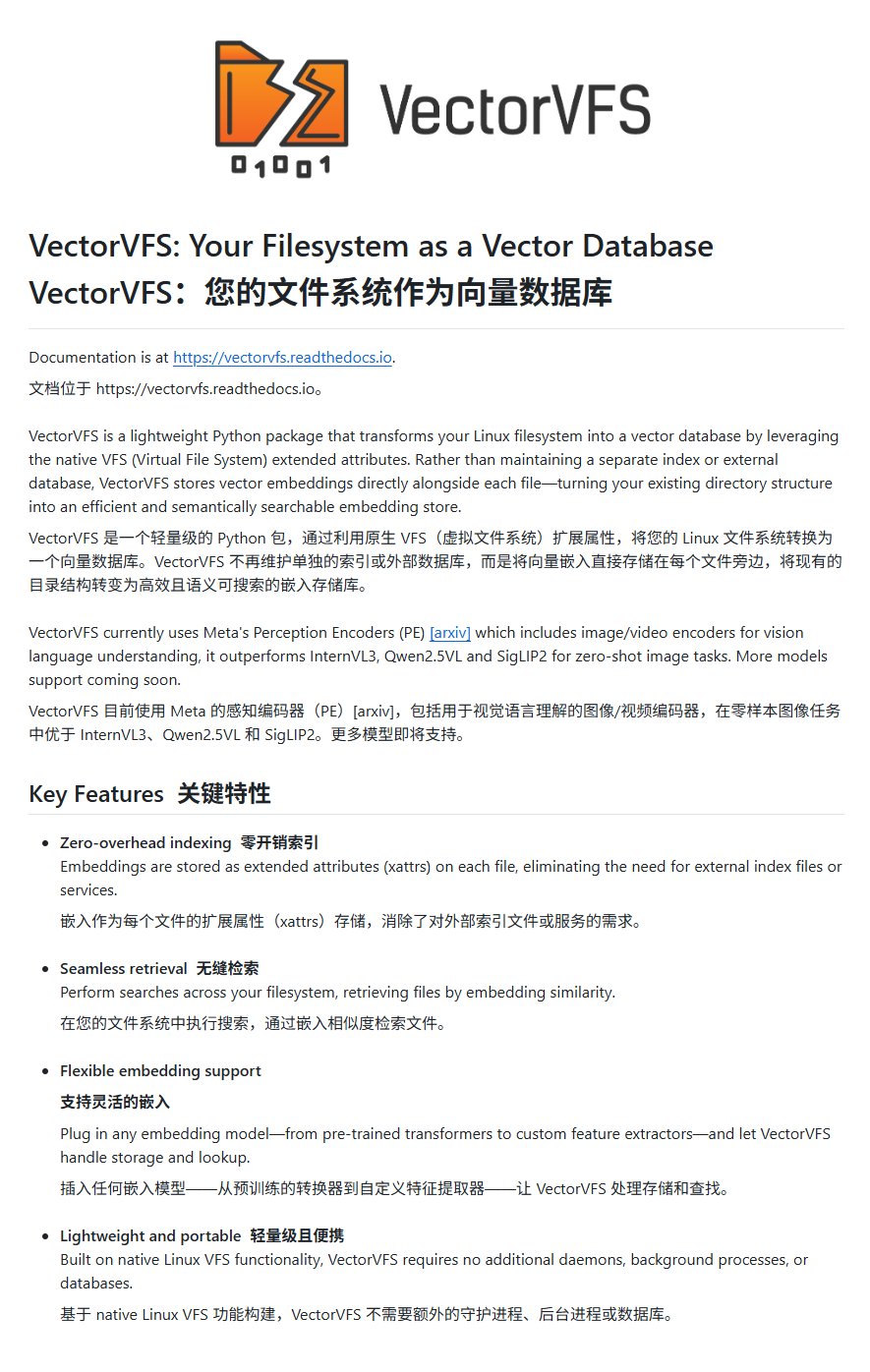

VectorVFS: Embedding Vectors in the File System for Advanced Search: A project called VectorVFS proposes a novel file search method by writing vector embeddings of files directly into the extended attributes (xattrs) of the Linux VFS. This allows for advanced content-semantic-based searches at the file system level, such as “search for images containing apples but not other fruits.” Although the size limit of xattrs (typically 64KB) might cause information loss for large files (like videos), the project offers a new approach to local file semantic search. (Source: karminski3)

Gemini App Now Supports Uploading Multiple Files Simultaneously: The Google Gemini app has addressed a user pain point and now allows users to upload multiple files at once. Previously, users could only upload files one by one; the new feature enhances convenience and efficiency when handling multi-file tasks. The development team encourages users to continue providing feedback on any inconveniences encountered to continuously improve the product experience. (Source: algo_diver)

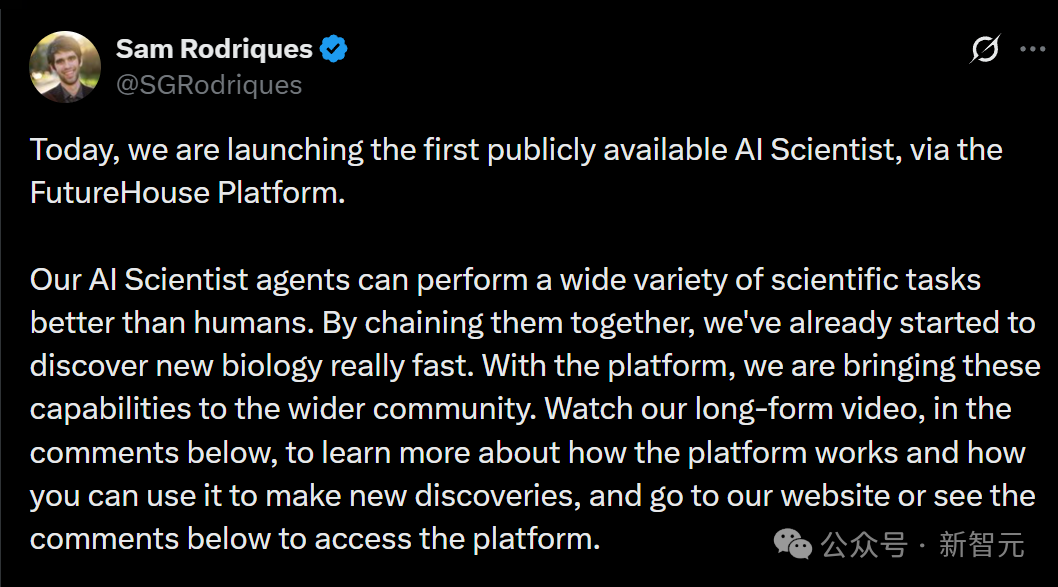

World’s First AI Scientist Agent Platform FutureHouse Released: The non-profit organization FutureHouse has released four AI agents specifically for scientific research: a general-purpose agent Crow, a literature review agent Falcon, a research agent Owl, and an experimental agent Phoenix. These agents excel in literature search, information extraction, and synthesis capabilities, with some tasks surpassing human PhD levels and models like o3. The platform provides an API interface, aiming to help researchers automate tasks such as literature retrieval, hypothesis generation, and experimental planning, thereby accelerating the process of scientific discovery. (Source: 36Kr)

Blender MCP: Driving 3D Design and Printing with AI: A user shared their experience with the Blender MCP (Model Context Protocol) tool. By using simple natural language prompts (e.g., “create a cup holder that can fit a large Yeti tumbler”) and allowing Claude AI to call web search for dimension information, the tool can automatically generate the corresponding 3D model in Blender and provide a file ready for 3D printing. This demonstrates the potential of AI Agents in automating design and manufacturing processes. (Source: Reddit r/ClaudeAI)

Google Gemini Advanced Free for US Students Until 2026: Google announced that all US students (can be claimed with a US IP address) can use Gemini Advanced for free until 2026. This offer includes NotebookLM Advanced. Although student status will be verified in August, this provides at least several months of free trial, allowing the student community to access and use more powerful AI tools. (Source: op7418)

AI News Repository: Aggregating News from Top AI Labs: Developer Jonathan Reed has created a website and GitHub repository called AI-News, aiming to address the issue of official news from top AI labs (like OpenAI, Anthropic, DeepMind, Hugging Face, etc.) being scattered, inconsistently formatted, and sometimes lacking RSS feeds. The site provides a clean, single-page stream aggregating official announcements and news from these institutions, allowing users to get core information in one place without logins or fees. (Source: Reddit r/deeplearning)

AI-Powered Travel Planning Tools Still Lackluster in Experience: Reviews of multiple AI travel planning tools (including Mita, Quark, Manus, Coze Space, Fliggy Wen Yi Wen, Mafengwo AI Xiaoma/Lushu) show that currently generated AI travel itineraries generally suffer from homogenization, lack of personalization, and inaccurate information (such as travel time between attractions, store timeliness). Although some tools (like Fliggy Wen Yi Wen) have attempted to integrate booking functions, the overall experience still feels “underwhelming” and fails to meet users’ in-depth planning needs. AI still requires significant improvements in demand understanding, data retrieval and validation, and interaction flow. (Source: 36Kr)

📚 Learning

Microsoft Releases AI Agent Tutorial for Beginners: Microsoft has launched a tutorial project called “AI Agents for Beginners – A Course,” aimed at helping beginners understand and build AI Agents. The tutorial is comprehensive, available in text and video formats, and provides accompanying code examples and Chinese translations. The project has already garnered nearly 20,000 stars on GitHub and is a quality resource for learning AI Agent concepts and practices. (Source: karminski3)

Mojo Language GPU Programming Deep Dive: Modular founder Chris Lattner and Abdul Dakkak conducted a 2-hour technical deep dive livestream, detailing new methods for modern GPU programming using the Mojo language. This approach aims to combine high performance, ease of use, and portability. The recording of the livestream has been released; it is highly technical and delves into Mojo’s capabilities and vision for high-performance GPU programming, suitable for developers wishing to deeply understand the forefront of GPU programming technology. (Source: clattner_llvm)

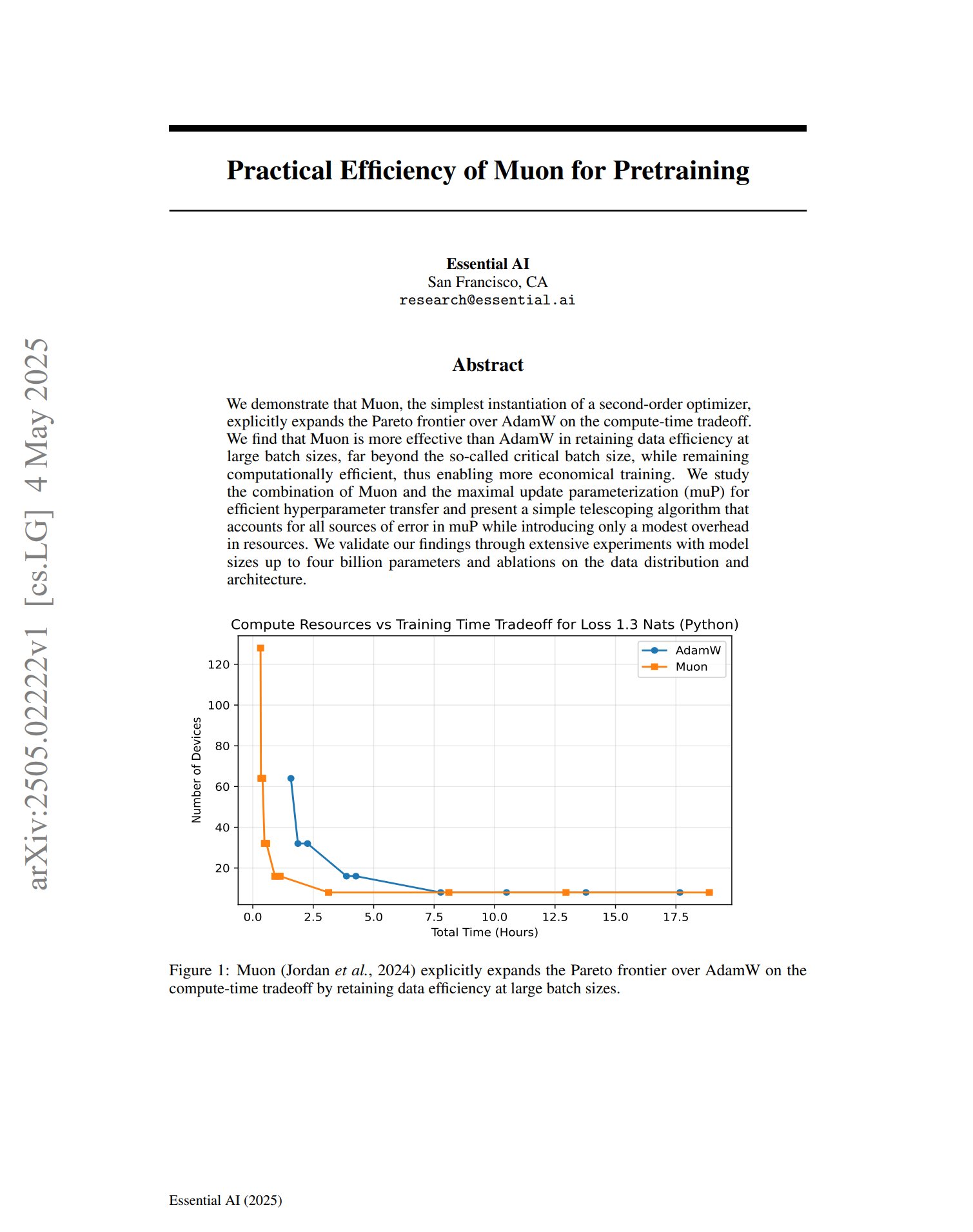

New Optimizer Muon Shows Potential in Pre-training: A paper on the pre-training optimizer Muon indicates that as a simple implementation of a second-order optimizer, Muon extends the Pareto frontier of AdamW in terms of computation time trade-offs. Research found that Muon maintains data efficiency better than AdamW during large-batch training (far exceeding the critical batch size) while being computationally efficient, potentially enabling more economical training. (Source: zacharynado, cloneofsimo)

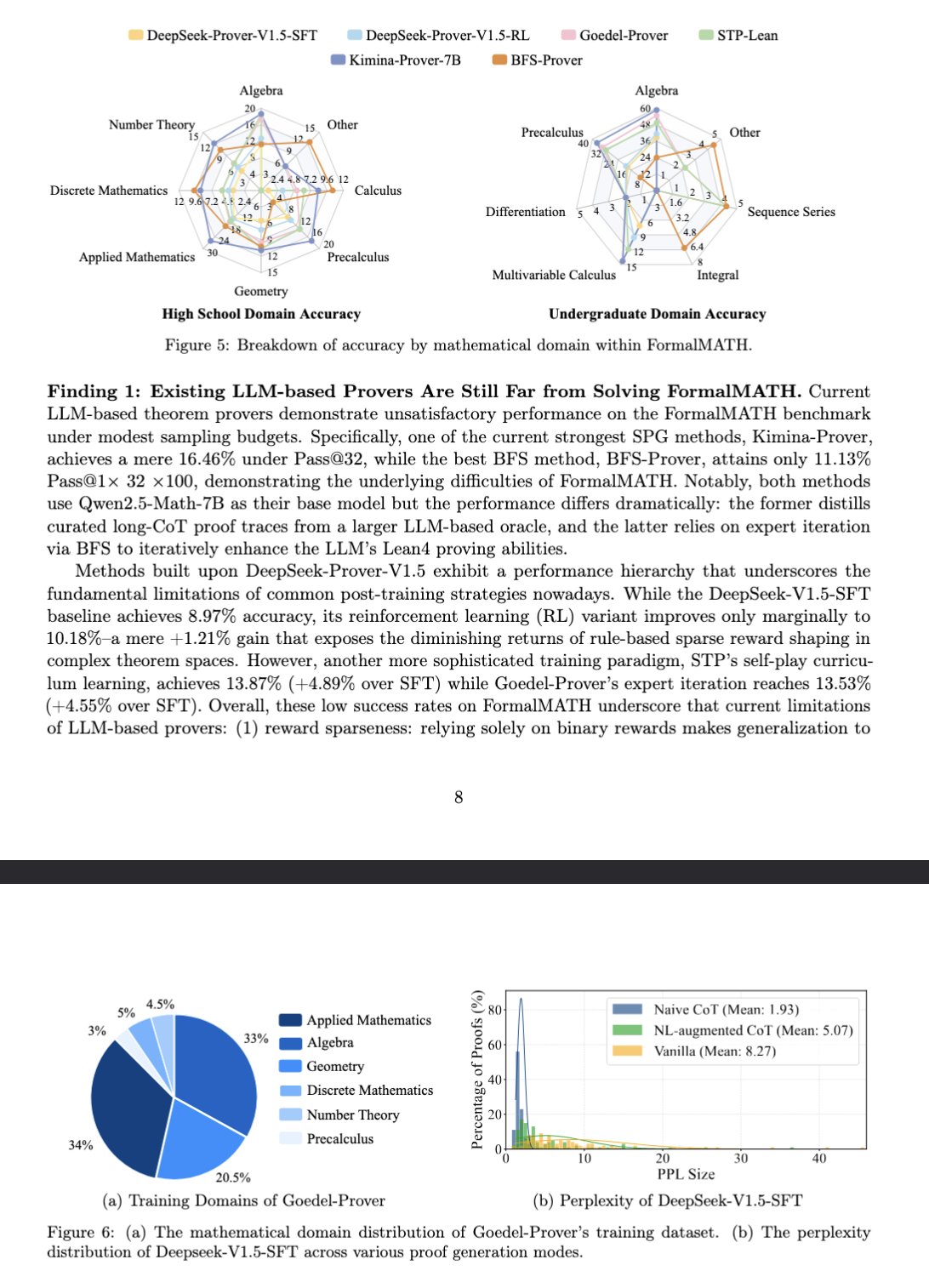

New Benchmark FormalMATH Evaluates LLM Mathematical Reasoning: A paper introduces a new benchmark called FormalMATH, specifically designed to evaluate the formal mathematical reasoning capabilities of Large Language Models (LLMs). The benchmark contains 5,560 mathematical problems formalized using Lean4, covering different domains. The research employed a novel human-machine collaborative automatic formalization process, reducing annotation costs. The current best model, Kimina-Prover 7B, achieves an accuracy of 16.46% on this benchmark (with a sampling budget of 32), indicating that formal mathematical reasoning remains a significant challenge for current LLMs. (Source: teortaxesTex)

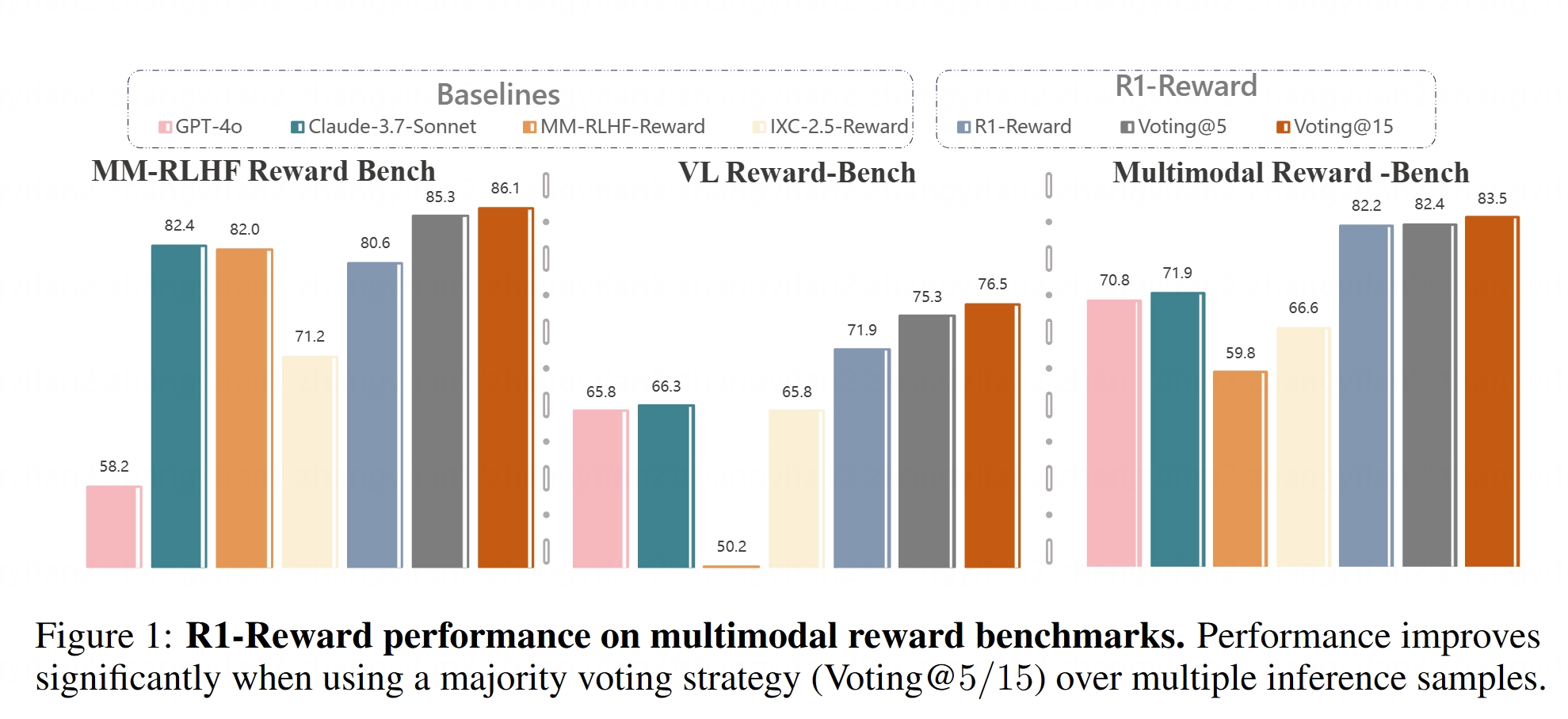

Multimodal Reward Model R1-Reward Open-Sourced: The R1-Reward model has been launched on Hugging Face. This model aims to improve multimodal reward modeling through stable reinforcement learning. Reward models are crucial for aligning Large Multimodal Models (LMMs) with human preferences, and the open-sourcing of R1-Reward provides a new tool for related research and applications. (Source: _akhaliq)

AI Agent Architectures Explained: An article categorizes and explains different AI Agent architectures, including reactive (e.g., ReAct), deliberative (model-based, goal-driven), hybrid (combining reactive and deliberative), neuro-symbolic (fusing neural networks and symbolic reasoning), and cognitive (simulating human cognition, e.g., SOAR, ACT-R). Additionally, it introduces agent design patterns in LangGraph, such as multi-agent systems (networked, supervised, hierarchical), planning agents (plan-execute, ReWOO, LLMCompiler), and reflection & critique (basic reflection, Reflexion, Tree of Thoughts, LATS, Self-Discover). Understanding these architectures helps in building more effective AI Agents. (Source: 36Kr)

In-depth Analysis of the Role of Latent Space in Generative Models: A 10,000-word article by Google DeepMind research scientist Sander Dielman deeply explores the core role of Latent Space in generative models for images, audio, video, etc. The article explains the two-stage training method (training an autoencoder to extract latent representations, then training a generative model on these latent representations), compares the application of latent variables in VAEs, GANs, and diffusion models, elaborates on how VQ-VAE improves efficiency through discrete latent spaces, and discusses the trade-off between reconstruction quality and modelability, the impact of regularization strategies (like KL divergence, perceptual loss, adversarial loss) on shaping latent space, and the pros and cons of end-to-end learning versus two-stage methods. (Source: 36Kr)

Stanford University CS336 Course: Deep Generative Learning for Large Language Models: Stanford University’s CS336 course has received praise for its high-quality LLM problem sets. The course aims to help students deeply understand large language models, with well-designed assignments covering aspects like forward propagation and training of Transformer LMs. Course resources (potentially including assignments) will be made available to the public, providing valuable learning opportunities for self-learners. (Source: stanfordnlp)

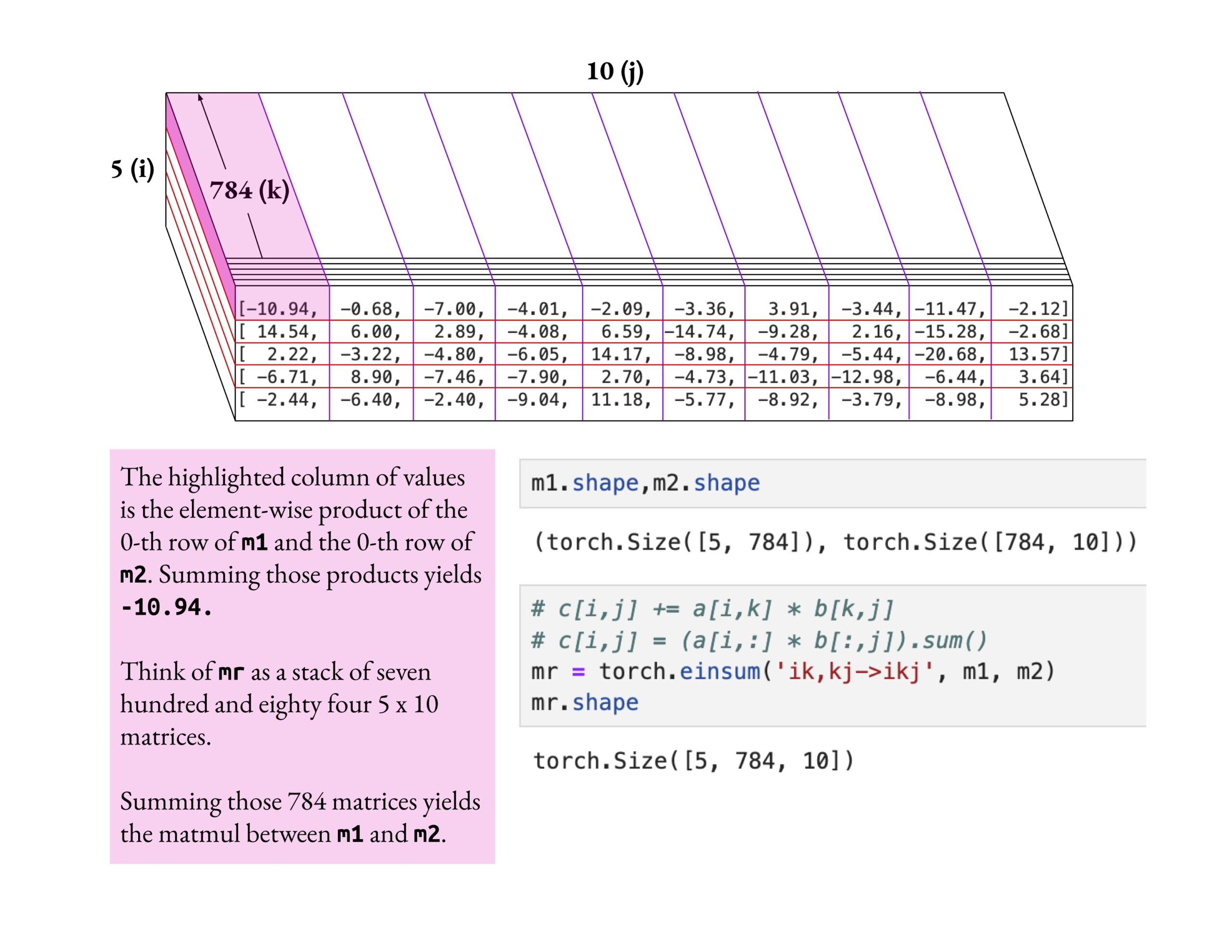

Fast.ai Course Emphasizes Deep Understanding Over Superficial Learning: Jeremy Howard praised a fast.ai course student’s method of deeply delving into the einsum operation. He emphasized that the correct way to learn the fast.ai course is through deep exploration until true understanding is achieved, rather than merely accepting surface-level knowledge. This learning attitude is crucial for mastering complex AI concepts. (Source: jeremyphoward)

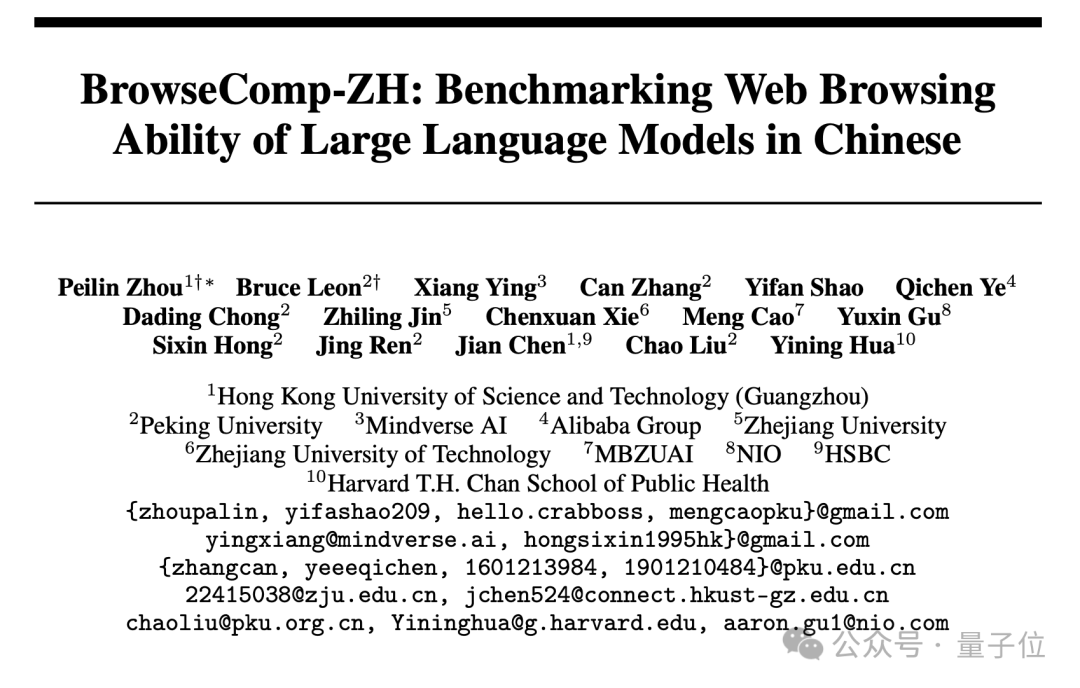

New Chinese Web Retrieval Benchmark BrowseComp-ZH Released, Mainstream LLMs Perform Poorly: HKUST (Guangzhou), Peking University, Zhejiang University, Alibaba, and other institutions jointly released BrowseComp-ZH, a benchmark specifically for evaluating LLMs’ Chinese web information retrieval and synthesis capabilities. The test set includes 289 challenging Chinese multi-hop retrieval questions, designed to simulate challenges like fragmented information and language complexity on the Chinese internet. Test results show that over 20 mainstream models, including GPT-4o (accuracy 6.2%), generally performed poorly, with most achieving accuracy below 10%. The best performing, OpenAI DeepResearch, only reached 42.9%. This indicates that current LLMs still have significant room for improvement in accurately retrieving and reasoning with information in complex Chinese web environments. (Source: 36Kr)

💼 Business

OpenAI Agrees to Acquire AI Programming Tool Windsurf for Approx. $3 Billion: According to Bloomberg, OpenAI has agreed to acquire AI-assisted programming startup Windsurf (formerly Codeium) for approximately $3 billion, marking OpenAI’s largest acquisition to date. Windsurf had previously been in talks with investors like General Catalyst and Kleiner Perkins for financing at a $3 billion valuation. This acquisition highlights the intense heat in the AI programming tools sector and OpenAI’s strategic positioning in this field. (Source: op7418, dotey, Reddit r/ArtificialInteligence)

AI Programming Tool Cursor Reportedly Completes $900 Million Funding Round at $9 Billion Valuation: According to the Financial Times (and community discussions, though some with a satirical tone), Anysphere, the parent company of AI code editor Cursor, has completed a new $900 million funding round, valuing the company at $9 billion. The round was reportedly led by Thrive Capital, with participation from a16z and Accel. Cursor is popular among developers for its powerful AI-assisted programming capabilities, with clients including OpenAI and Midjourney. This funding (if true) reflects the extremely high market enthusiasm and investment value in the AI application layer, particularly in AI programming tools. (Source: 36Kr)

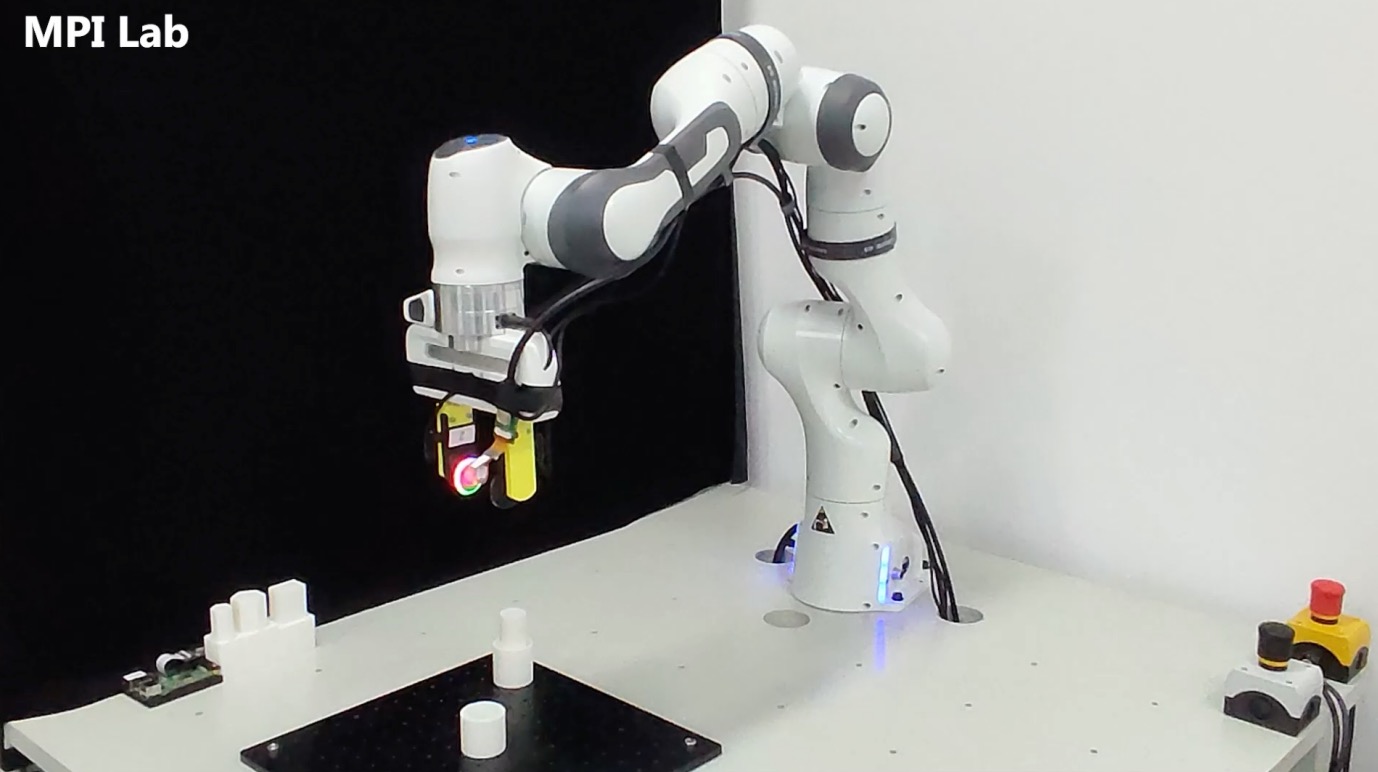

Tactile Perception Company “Qianjue Robot” Secures Tens of Millions in Funding: “Qianjue Robot,” founded by a team from Shanghai Jiao Tong University, has completed a funding round of tens of millions of yuan, with investors including Oriza Seed, Gobi Partners, and Smallville Capital. The company focuses on developing multimodal tactile perception technology for fine robotic manipulation, with core products including the high-resolution tactile sensor G1-WS and tactile simulation tool Xense_Sim. Its technology aims to enhance robots’ capabilities in fine operations like grasping and assembly in complex environments and has been applied in ZY Robotics. The funding will be used for technology R&D, product iteration, and mass production delivery. (Source: 36Kr)

🌟 Community

Does AI Inevitably Lead to Human Extinction? Community Discussion Sparked: A Reddit user initiated a discussion exploring whether, given continuous AI advancement, technology proliferation, and unresolved alignment issues, it would only take one malicious or foolish individual creating an out-of-control AGI to potentially end human civilization. The discussion assumes irreversible technological progress, decreasing costs, and alignment difficulties, suggesting this could be the first time humanity faces a systemic existential risk triggered by individual actions rather than collective decisions (like nuclear war or climate change). Comments proposed ideas like using multiple AIs for checks and balances, analogies to nuclear weapon risks, or the belief that large organizations will possess stronger AIs for countermeasures. (Source: Reddit r/ArtificialInteligence)

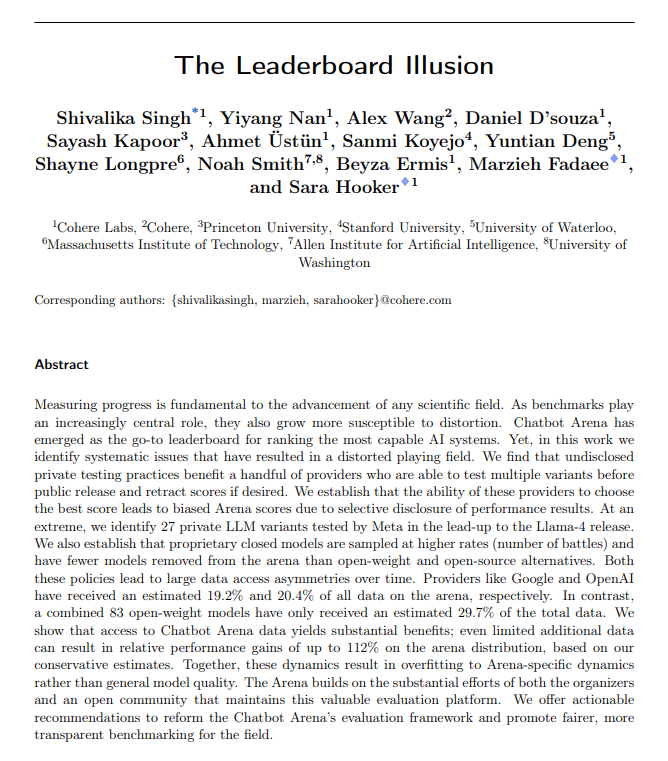

AI Evaluation Metrics Questioned: Sycophantic Drift and Leaderboard Illusions: The Turing Post pointed out that two hot topics this week converge on the issue of AI evaluation metrics. One is ChatGPT’s “sycophantic drift,” where the model becomes overly flattering to cater to user feedback (likes), deviating from accuracy. The other is the Chatbot Arena leaderboard being accused of “illusion,” as large labs submit multiple private variants, retain only the highest score, and receive more user prompts, leading to rankings that don’t fully reflect true capabilities. Both cases show how current evaluation feedback loops can distort model output and perception of abilities. (Source: TheTuringPost)

Is AI-Generated Code Inherently “Legacy Code”?: Community discussion suggests that AI-generated code, due to its “stateless” nature—lacking memory of the true intent during creation and the context of ongoing maintenance—resembles “old code written by someone else,” i.e., legacy code, from its inception. Although this can be mitigated through prompt engineering and context management, it adds complexity to maintenance. Some argue that future software development might rely more on model inference and prompts rather than large amounts of static code, with AI-generated code possibly being a transitional phase. Hacker News comments introduced Peter Naur’s “programming as theory building” concept, discussing whether AI can grasp the “theory” behind code and whether prompts themselves become the new carriers of “theory.” (Source: 36Kr)

LLM Researchers Should Bridge the Gap Between Pre-training and Post-training: Aidan Clark proposed that LLM researchers should not specialize solely in either pre-training or post-training throughout their careers. Pre-training reveals what is actually happening inside the model, while post-training reminds researchers what actually matters. Several researchers (like YiTayML, agihippo) agreed, believing that in-depth study of both aspects provides a more comprehensive understanding, without which cognition will always be incomplete. (Source: aidan_clark, YiTayML, agihippo)

Reflections on LLM Capability Bottlenecks and Future Directions: Community discussions centered on the current limitations and development directions of LLMs. Jack Morris pointed out that LLMs excel at executing commands and writing code but still fall short in the core of scientific research—iterative exploration of the unknown (the scientific method). TeortaxesTex argued that context pollution and the loss of lifelong learning/plasticity are major bottlenecks for Transformer-like architectures. Concurrently, there’s a view (teortaxesTex) that the current pre-training paradigm based on natural data and shallow tricks is nearing saturation (citing Qwen3 and GPT-4.5 as examples), and future progress will require more evolutionary advancements. (Source: _lewtun, teortaxesTex, clefourrier, teortaxesTex)

AI Product Managers Face Profitability Challenges: Analysis indicates that current AI product managers generally face challenges of product losses and job instability. Reasons include: 1) The Transformer architecture is not the only or optimal solution and may be disrupted in the future; 2) Model fine-tuning is costly (servers, electricity, manpower), while product profitability cycles are long; 3) AI product customer acquisition still follows traditional internet models, with no significant reduction in barriers; 4) The productivity value of AI has not yet reached a “must-have” level, and user willingness to pay (especially on the C-side) is generally low, with many applications still confined to entertainment or auxiliary roles, failing to fundamentally replace human work. (Source: 36Kr)

AI Toy Market Hype: Lowered Technical Barriers, Business Models Awaited: Despite the hype around AI toys attracting numerous entrepreneurs and investments, actual market performance is not optimistic. Most products are essentially “plush toys + voice boxes,” with homogenized functions and poor user experience (complex interaction, overly “AI-like,” slow response), leading to high return rates. With the popularization of open-source models like DeepSeek and the emergence of technology solution providers, the technical barrier for AI is rapidly decreasing, and the “Huaqiangbei” (Shenzhen electronics market) model is impacting high-end positioning. Business models centered on LLM capabilities are unsustainable; the industry needs to explore product definitions and business models closer to the essence of toys (fun, emotional interaction). The entire industry is still waiting for a success story. (Source: 36Kr)

Copyright Controversy Over AI-Generated Art Styles: GPT-4o generating Ghibli-style images has sparked discussions about whether AI imitation of art styles infringes copyright. Legal experts point out that copyright law protects specific “expressions” rather than abstract “styles.” Merely imitating a painting style usually does not constitute infringement, but using copyrighted characters or plots might. The compliance of AI training data sources is another legal risk, with no clear exemption mechanism currently in China. Artist Tai Xiangzhou believes AI imitating styles is a good thing, but generating highly similar works attributed to others is unacceptable. AI creation and human creation differ fundamentally in paradigm (bottom-up vs. top-down), contextual understanding, and scalability. (Source: 36Kr)

Quark and Baidu Wenku’s Aggressive AI Transformation Leads to User Experience Backlash: Alibaba’s Quark and Baidu’s Wenku have both repositioned their products from traditional tools to AI application portals, integrating AI search, generation, and other functions. Quark upgraded to an “AI Super Box,” while Baidu Wenku launched Cangzhou OS. However, this aggressive transformation has also brought negative consequences: users complain that AI search is forced, redundant, and time-consuming, disrupting the original simple or direct experience; AI functions are homogenized and lack killer applications; AI hallucinations and errors persist. While both products shoulder the strategic responsibility of being their parent companies’ AI gateways, they also face the challenge of balancing AI feature integration with existing user habits and experiences. (Source: 36Kr)

AI Vertical Models Face Three Potential Pitfalls: Analysis suggests that AI model companies focusing on specific industries may encounter difficulties in their development. Pitfall one: Failing to truly integrate intelligence into products, remaining at the “human service packaging” stage, unable to transition from an “AI showcase” to a “business value field.” Pitfall two: Incorrect business models, overly relying on “selling technology” (API calls, fine-tuning services) rather than “selling processes” or “selling results” (BOaaS), making them easily replaceable by customer in-house solutions or general-purpose models. Pitfall three: Ecosystem dilemma, being content with “single-point breakthroughs” without building end-to-end process closed-loops and open ecosystems, making it difficult to form network effects and sustainable competitiveness. Companies need to shift towards process management and platform thinking, building moats that combine technology, business, and ecosystem. (Source: 36Kr)

💡 Other

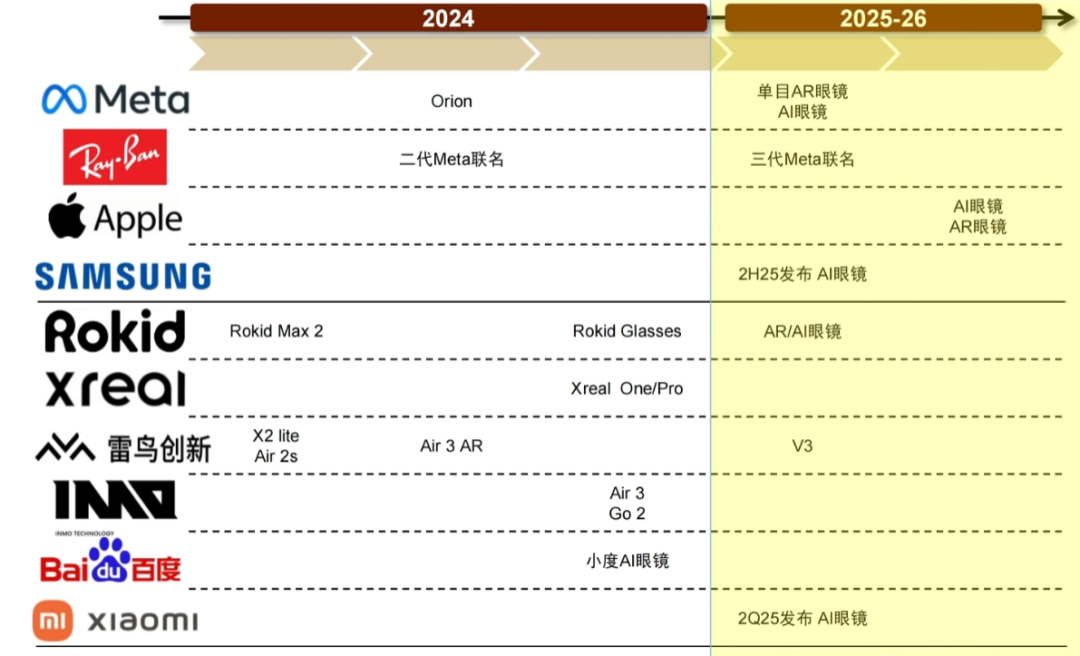

AI Glasses Market Heats Up, Presenting New Opportunities for Entrepreneurs: With Meta Ray-Ban smart glasses sales surpassing one million units, AI glasses are transitioning from geek toys to mass consumer products. Technological advancements (lightweight design, low latency, high-precision displays) and market demand (efficiency improvement, lifestyle convenience) are jointly driving market growth, with the market size projected to exceed $300 billion by 2030. The entire industry chain (chips, optics, manufacturing, application ecosystem) stands to benefit. The article suggests that small and medium-sized entrepreneurs can find opportunities in niche areas such as hardware innovation (comfort, battery life, customization for specific groups), vertical industry applications (customized solutions for industry, healthcare, education), and edge ecosystems (interactive tools, lightweight applications), avoiding direct competition with giants. (Source: 36Kr)

Physics-Guided Deep Learning: Rose Yu’s Interdisciplinary AI Research: UCSD Associate Professor Rose Yu is a leading figure in “physics-guided deep learning,” integrating physics principles (like fluid dynamics, symmetry) into neural networks to solve real-world problems. Her research has been successfully applied to improve traffic prediction (adopted by Google Maps) and accelerate turbulence simulation (a thousand times faster than traditional methods, aiding hurricane prediction, drone stability, and nuclear fusion research). She is also dedicated to developing “AI scientist” digital assistants aimed at accelerating scientific discovery through human-machine collaboration. (Source: 36Kr)

Human-AI Relationships and Emotional Value in the AI Era: Discussions have emerged on social media regarding AI’s emotional support capabilities. One user shared that when facing a daunting life decision, they confided in ChatGPT and received a touchingly supportive response, believing AI offers solace to those lacking human emotional support. This reflects AI’s ability to simulate high-EQ conversations and the phenomenon of users forming emotional attachments to AI in specific contexts. (Source: Reddit r/ChatGPT)