Keywords: Gemini 2.5 Pro, Kevin-32B, AI Agent, RAG technology, Digital twin, Gemini 2.5 Pro coding capabilities, Kevin-32B CUDA kernels, Agentic Search, GraphRAG knowledge graph, AI and digital twin integration

🔥 Focus

Google Releases Gemini 2.5 Pro I/O Edition: Google has released Gemini 2.5 Pro I/O Edition, significantly boosting its coding capabilities and sweeping the top spots on LMArena’s Programming, Vision, and WebDev leaderboards, marking the first time a single model has topped all three. The new version enhances frontend and UI development, capable of generating applications from hand-drawn sketches, and fixes function calling issues, demonstrating Google’s rapid progress in AI model capabilities. (Source: JeffDean, lmarena.ai, dotey)

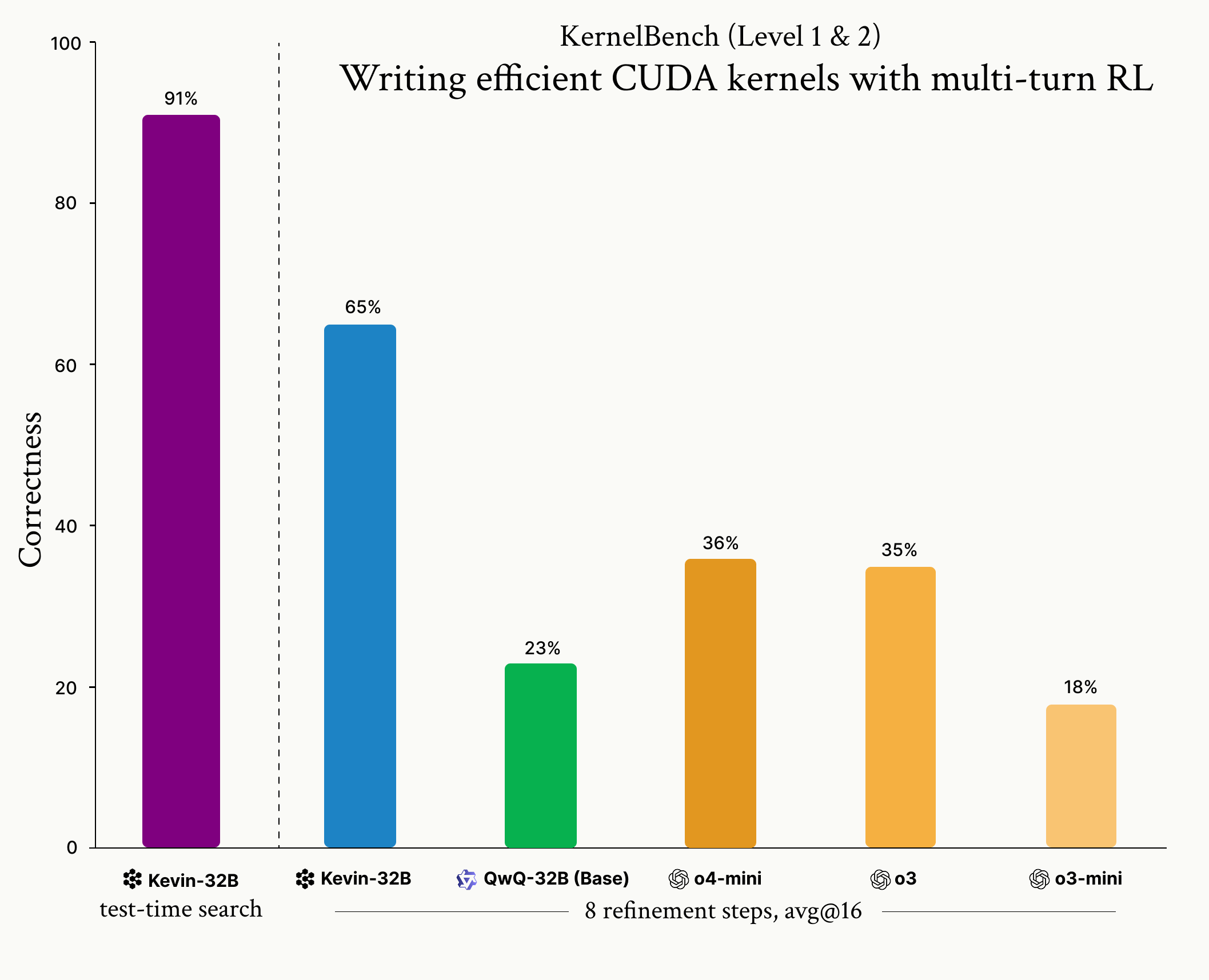

Cognition Releases Kevin-32B Model: Cognition has released Kevin-32B, the first open-source model trained using reinforcement learning (GRPO algorithm) specifically for writing CUDA kernels. The model performs exceptionally well on the KernelBench dataset, surpassing top reasoning models like o3 and o4-mini in correctness and performance, showcasing the potential of RL in low-level programming optimization. (Source: Cognition, Dorialexander, vllm_project)
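
For readers unfamiliar with GRPO: its core idea is to score each sampled completion against the other completions drawn for the same prompt, rather than against a learned value function. The sketch below shows only that group-relative normalization step. The reward values, the function name, and the choice of population standard deviation are illustrative assumptions, and this is not Cognition's training code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and spread of its own group.

    `rewards` holds one scalar per completion sampled for the same prompt.
    Population standard deviation is an assumption made for this sketch.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps keeps the division defined when every sample earns the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four kernels sampled for one task
# (e.g. 0 for an incorrect kernel, larger values for faster correct ones).
rewards = [0.0, 0.0, 1.0, 1.6]
advantages = group_relative_advantages(rewards)
```

Completions that beat their group's average get a positive advantage and are reinforced, while below-average ones are pushed down, so no separate critic model is needed.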

Meta Releases Perception Encoder: Meta has released a new visual encoder, Meta Perception Encoder, setting new standards for image and video tasks. The model excels in zero-shot classification and retrieval, outperforming existing models and providing a powerful new foundation for image and video understanding research and applications. (Source: AIatMeta)

LTX-Video 13B Open-Source Video Generation Model Released: LTX-Video 13B has been released, one of the most powerful open-source video generation models to date. The model boasts 13 billion parameters, supports multi-scale rendering for enhanced detail, improved motion and scene understanding, can run on local GPUs, and supports keyframe, camera/character motion control. (Source: teortaxesTex, Yoav HaCohen)

🎯 Trends

AssemblyAI LeMUR Supports New Claude Models: AssemblyAI announced that its LeMUR framework now supports Anthropic’s Claude 3.7 Sonnet and Claude 3.5 Haiku models. Sonnet enhances reasoning for complex audio analysis, while Haiku optimizes response speed, bringing significant improvements to tasks like audio content analysis and meeting summarization. (Source: AssemblyAI)

Nvidia and ServiceNow Launch Enterprise AI Model Apriel Nemotron 15B: Nvidia and ServiceNow have partnered to launch Apriel Nemotron 15B, a compact, cost-effective enterprise AI model built on Nvidia NeMo. The model is designed to provide real-time responses, handle complex workflows, and offer scalability for areas like IT, HR, and customer service. (Source: nvidia)

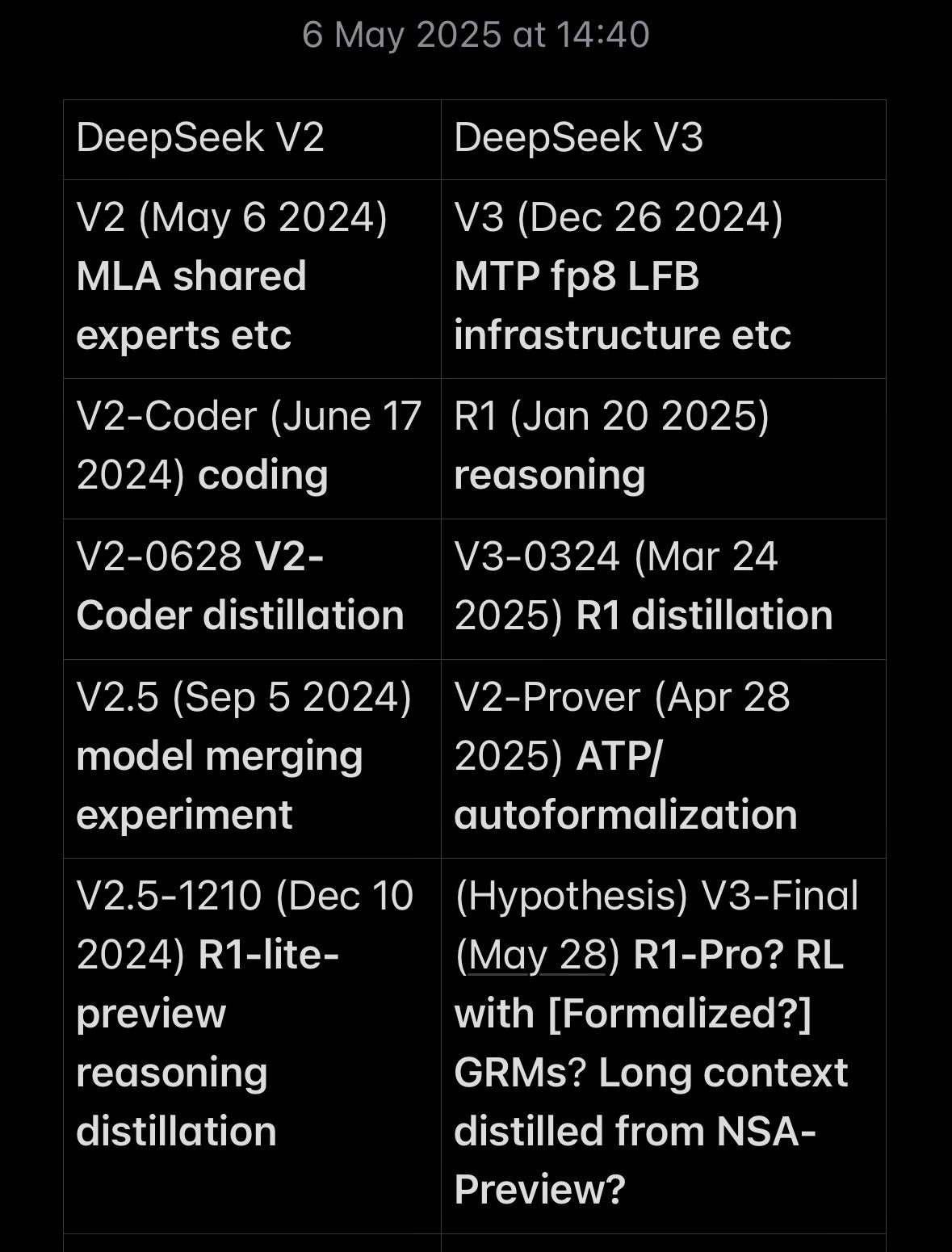

DeepSeek Model Updates and Development Timeline: DeepSeek V3 and V3-0324 models continue to be updated, showing progress in reasoning capabilities and new features. Community discussions highlight their timeline and characteristics, suggesting that DeepSeek has made significant strides in catching up with frontier models through innovative architecture and training methods. (Source: teortaxesTex, dylan522p)

GraphRAG and Agentic Search Drive RAG Technology Advancement: Cohere discusses GraphRAG and Agentic Search as next-generation RAG technologies. GraphRAG improves accuracy and reliability through knowledge graphs, while Agentic Search utilizes AI Agents for deep iterative search, providing more precise and contextually rich answers for enterprise AI applications. (Source: cohere)
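
To make the two ideas concrete, here is a toy sketch: a lookup that returns facts from a small knowledge graph (the GraphRAG side) and a loop that keeps retrieving and following up until it finds the relation it needs (the agentic side). The graph data, function names, and breadth-first follow-up rule are all invented for illustration. In a real system an LLM would decide the follow-up queries, and this is not Cohere's implementation.

```python
# Toy knowledge graph: entity -> list of (relation, entity) edges.
# Every entity and relation here is made up for the example.
GRAPH = {
    "Acme": [("acquired", "Beta Labs")],
    "Beta Labs": [("builds", "VectorDB")],
    "VectorDB": [("written_in", "Rust")],
}


def graph_retrieve(entity):
    """GraphRAG-style lookup: return the facts attached to one entity."""
    return [(entity, rel, obj) for rel, obj in GRAPH.get(entity, [])]


def agentic_search(start, target_relation, max_steps=5):
    """Retrieve, inspect, and follow up until the wanted relation turns up.

    A breadth-first walk stands in for the agent's decision about what
    to look up next; max_steps caps the number of retrieval rounds.
    """
    frontier, seen, trail = [start], set(), []
    for _ in range(max_steps):
        if not frontier:
            break
        entity = frontier.pop(0)
        if entity in seen:
            continue
        seen.add(entity)
        for fact in graph_retrieve(entity):
            trail.append(fact)  # keep the evidence chain for the final answer
            if fact[1] == target_relation:
                return fact[2], trail
            frontier.append(fact[2])
    return None, trail


# "What language is the product of the company Acme acquired written in?"
answer, evidence = agentic_search("Acme", "written_in")
```

The returned evidence trail is the point of the exercise: a multi-hop question is answered with the chain of facts that supports it, which a single-shot vector lookup would not provide.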

AI Agent Concept Hype and Deployment Challenges: Institutions like Gartner point out that the current AI Agent field suffers from excessive hype (“Agent Washing”), with many existing technologies being repackaged. Despite a surge in market inquiries, the success rate of enterprise-level Agent deployment remains low, with technical bottlenecks, reliability, cost, and scenario applicability still being major limiting factors. (Source: 36氪, Gartner)

AI Reshapes EdTech Landscape, Chinese Companies Rise: The list of the world’s top EdTech companies published by Time magazine and Statista shows Chinese companies taking the top three spots for the first time (Codemao, NetEase Youdao, TAL Education Group), upending the previously US-dominated landscape. AI has become the key infrastructure driving the transformation of EdTech, and the success of Chinese companies is attributed to policy support and the deep integration of AI technology in educational scenarios. (Source: 36氪)

Meta and Microsoft CEOs Discuss the Future of AI: Meta founder Mark Zuckerberg and Microsoft CEO Satya Nadella discussed the impact of AI on enterprise productivity and future application development. Nadella believes AI is leading to a “deep application” phase with an increasing proportion of code written by AI; Zuckerberg predicts future engineers will lead teams of agents, with AI completing most development work. (Source: 36氪)

Digital Human Technology Evolves from “Likeness” to “Spirit”: Digital human technology is evolving from static images to intelligent interaction. Leveraging architectures such as Transformers and diffusion models, it achieves more realistic expressions, movements, and lip synchronization. The technology has broad application potential among consumers, small and medium-sized enterprises, and large enterprises, but still faces challenges such as technical coherence, interactivity, and industry chain collaboration. (Source: 36氪)

AI Successfully Reads Title of Herculaneum Scroll: The Vesuvius Challenge achieved a historic breakthrough, with researchers using AI technology to non-invasively read the title of a Herculaneum scroll carbonized by the volcano for the first time. This achievement, made possible by AI image segmentation and ink detection, demonstrates AI’s ability to “see through” ancient documents and paves the way for deciphering more dormant scrolls. (Source: 36氪)

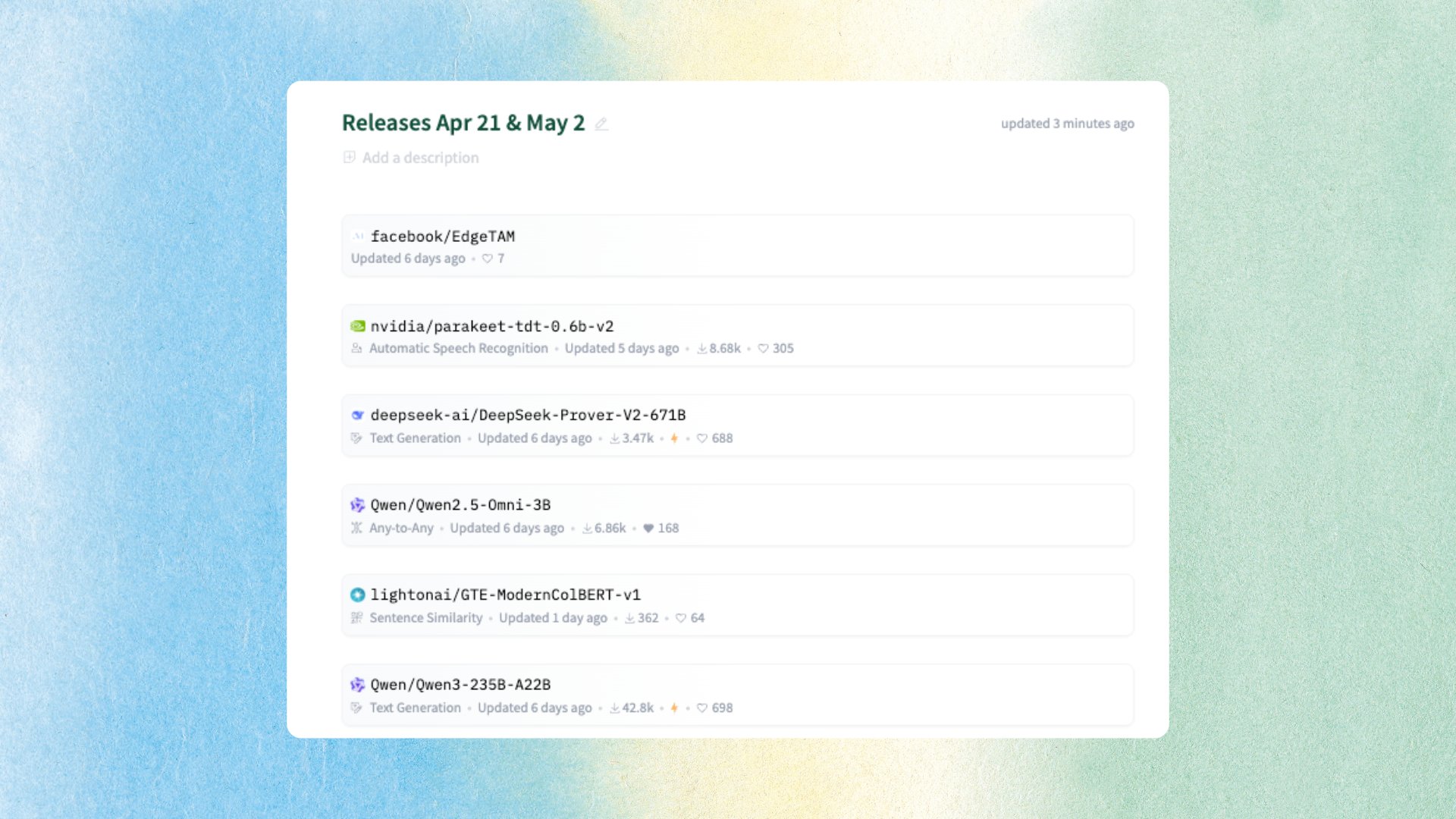

Multiple Open-Source AI Models and Datasets Released: The community summarizes recent progress in the open-source AI field, including Alibaba Qwen releasing the Qwen3 series models and the Qwen2.5-Omni multimodal model, Microsoft releasing the Phi-4 reasoning model, NVIDIA releasing a CoT reasoning dataset and the Parakeet speech recognition model, and Meta’s EdgeTAM, among others. (Source: mervenoyann)

ACE-Step Releases Open-Source Music Generation Model: StepFun AI and ACE Studio have collaborated to release ACE-Step 3.5B, an open-source music generation model. The model supports multiple languages, various instrument styles, and vocal techniques, and can quickly generate songs on an A100 GPU, bringing a new AI tool to the music creation field. (Source: Teknium1, Reddit r/LocalLLaMA)

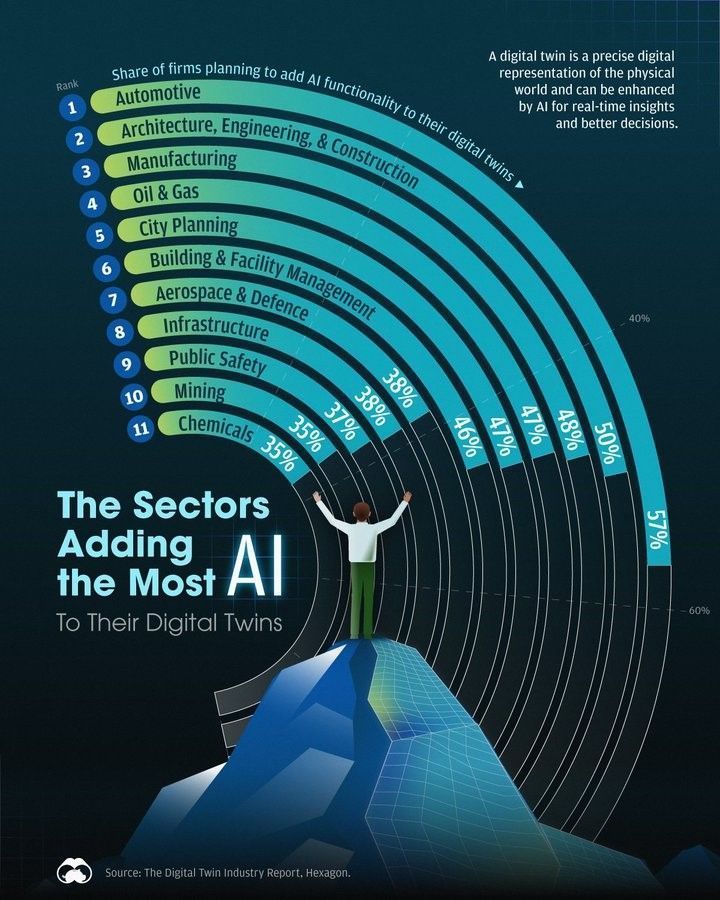

Growth in AI Application in Digital Twin Field: Reports indicate that an increasing number of industries are combining their digital twins with AI to improve efficiency and insights. The integration of AI and digital twins is becoming a significant technological trend, driving digital transformation and innovative applications across various sectors. (Source: Ronald_vanLoon)

🧰 Tools

Smolagents Adds Computer Use Capability: The Smolagents framework introduces computer use functionality. Leveraging vision models like Qwen-VL, AI Agents can now understand screenshots and locate on-screen elements, enabling actions such as clicking and advancing the development of complex Agent workflows. (Source: huggingface)

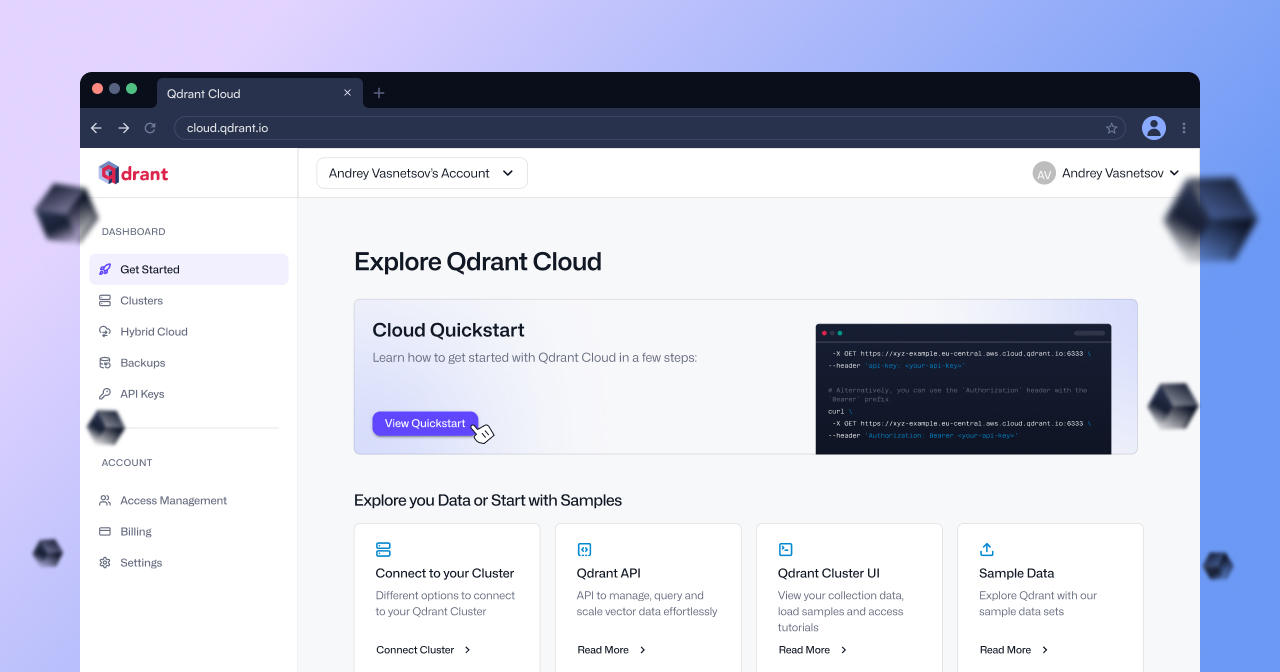

Qdrant Cloud Upgrade Enhances Vector Search Efficiency: Qdrant Cloud has undergone a major upgrade aimed at helping users move from prototype to production faster. The new version optimizes the user interface and experience, making it easier and more efficient to build semantic search and embedding-based vector search applications. (Source: qdrant_engine)

AI Hair Washing Service Emerges as New Business Model: AI hair washing salons are appearing in multiple cities like Shanghai and Shenzhen, offering standardized services via intelligent hair washing machines to attract customers with low prices. Although consumer feedback is mixed and challenges remain regarding technological maturity, safety, and profitability, AI hair washing represents an attempt to apply AI in the service industry, showcasing a new direction for business exploration. (Source: 36氪)

Open-Source LLM Evaluation Tool Opik Released: Opik is an open-source LLM evaluation tool for debugging, evaluating, and monitoring LLM applications, RAG systems, and Agent workflows. It provides comprehensive tracing, automated evaluation, and production-grade dashboards, helping developers improve the performance and reliability of AI applications. (Source: dl_weekly)

Python Chain-of-Thought Toolkit Cogitator: An open-source Python toolkit called Cogitator has been released, designed to simplify the use and experimentation of Chain-of-Thought (CoT) reasoning methods. The library supports OpenAI and Ollama models and includes implementations of CoT strategies such as Self-Consistency, Tree of Thoughts, and Graph of Thoughts. (Source: Reddit r/MachineLearning)
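
Of the strategies listed, Self-Consistency is the simplest to illustrate: sample several independent reasoning chains, keep only each chain's final answer, and return the most common one. The sketch below shows that voting step on hard-coded answers. The function name and sample data are assumptions for illustration and do not reflect Cogitator's actual API.

```python
from collections import Counter


def self_consistency(final_answers):
    """Majority-vote over the final answers of independently sampled chains.

    Returns the winning answer and the fraction of chains that agreed.
    """
    if not final_answers:
        raise ValueError("need at least one sampled answer")
    # Light normalization so "42" and "42 " count as the same answer.
    counts = Counter(a.strip().lower() for a in final_answers)
    answer, votes = counts.most_common(1)[0]
    return answer, votes / len(final_answers)


# Hypothetical final answers extracted from five sampled reasoning chains.
samples = ["42", "42 ", "41", "42", "40"]
answer, agreement = self_consistency(samples)
```

The agreement ratio doubles as a rough confidence signal: a low value suggests the chains disagree and the question may deserve more samples or a different strategy.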

ComfyUI Rebrands and Launches Native API Nodes: ComfyUI has rebranded and launched native API nodes, supporting integration with 11 online visual AI models including Flux, Kling, and Luma. Users can log in directly within ComfyUI without needing separate API keys, greatly simplifying the setup of multi-model workflows. (Source: op7418)

Cursor and Spellbook Offer Free Access to Students: AI coding assistant Cursor announced it will provide its Pro version free to students, and legal AI tool Spellbook will offer free service to law students. This lowers the barrier for students to access and use advanced AI tools, helping to popularize AI technology in education. (Source: scaling01, scottastevenson)

📚 Learning

Unsloth Framework Enables Efficient LLM Fine-tuning: The LearnOpenCV blog provides an in-depth analysis of the Unsloth framework, demonstrating how to fine-tune large language models and vision-language models (like Qwen2.5-VL) faster and with a smaller memory footprint. Unsloth significantly reduces GPU memory usage and training time through optimization techniques, making it particularly suitable for users with limited resources. (Source: LearnOpenCV)

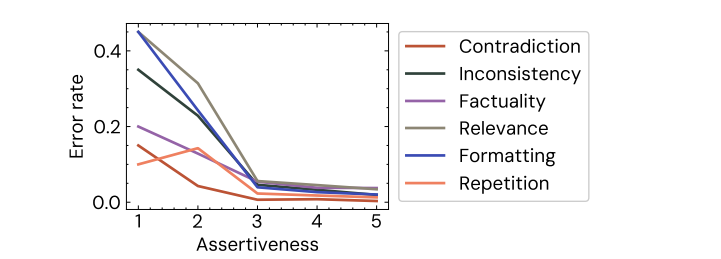

Cohere Research Reveals Bias in Human Evaluation of LLMs: A Cohere study found that even small biases (like more confident phrasing) systematically distort human evaluation of LLM outputs. Models giving more assertive answers are often rated as “better,” even if the content is the same, highlighting the irrationality of human evaluation and the challenges in evaluating models. (Source: Shahules786, clefourrier)

SWE-bench Introduces Multilingual Coding Capability Evaluation: The SWE-bench library has released a new version, introducing SWE-bench Multilingual, which tests LLMs’ coding abilities across 9 programming languages. Claude 3.7’s performance on this multilingual evaluation is lower than its score on the original SWE-bench, indicating that LLMs’ coding capabilities across programming languages still need improvement. (Source: OfirPress)

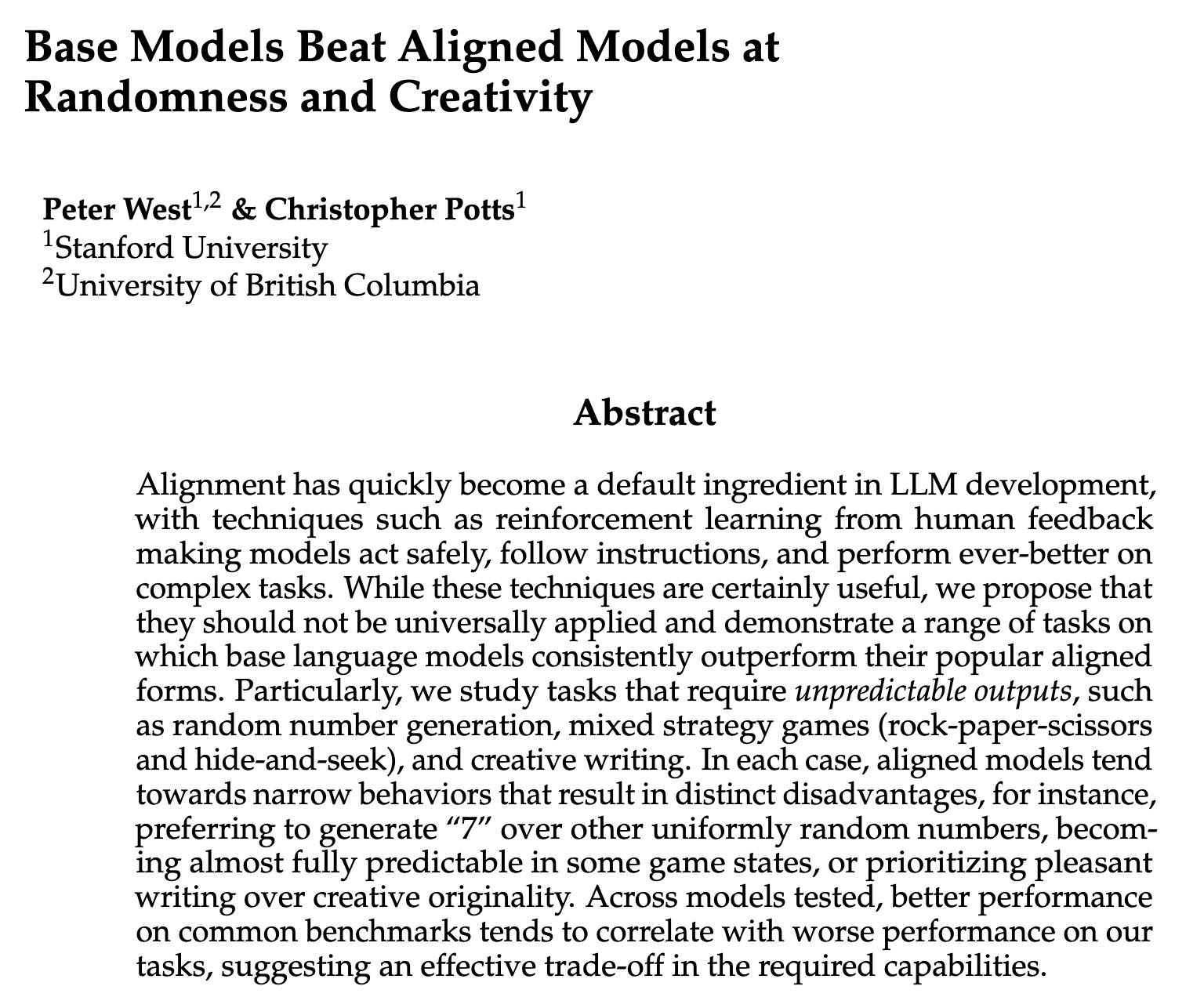

Research Explores Capabilities Potentially Lost During LLM Alignment: Researchers are exploring certain capabilities that large language models might lose during Alignment training, such as randomness and creativity. This sparks discussion on how to preserve models’ original potential while improving their safety and usefulness. (Source: lateinteraction, Peter West)

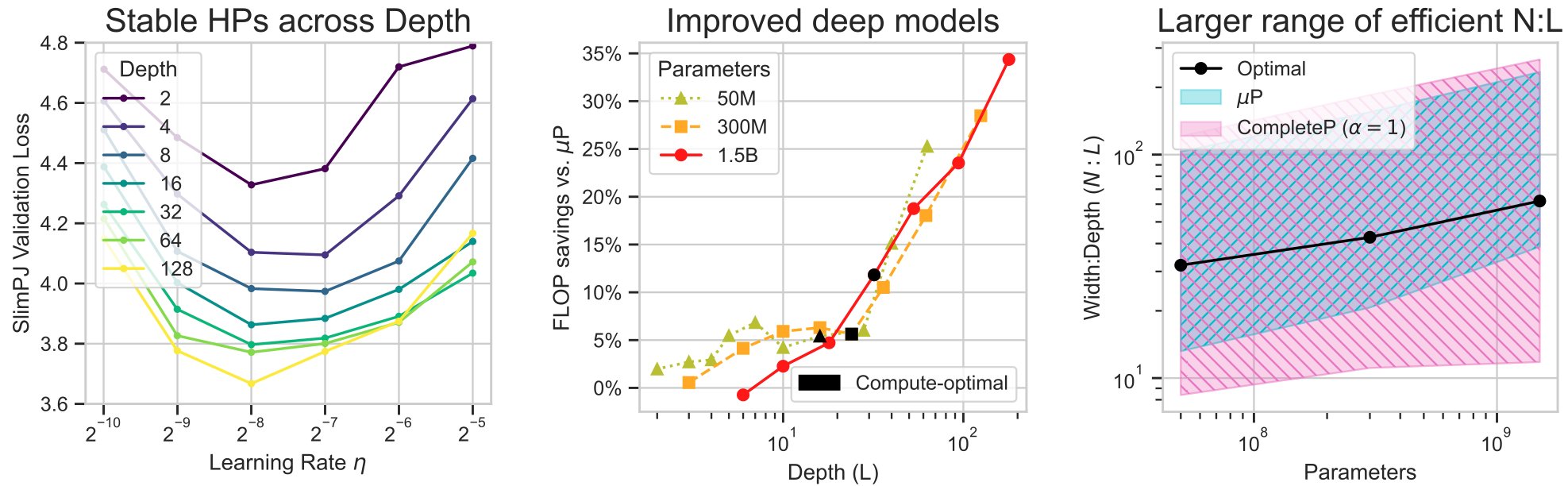

Muon Optimizer Research Shows Efficiency Advantages: Essential AI has published research exploring the practical efficiency of the Muon optimizer in LLM pre-training. The study shows that, as a second-order optimizer, Muon offers a better compute-time trade-off than AdamW, especially in large-batch training, where it retains data efficiency more effectively. (Source: cloneofsimo, Essential AI)

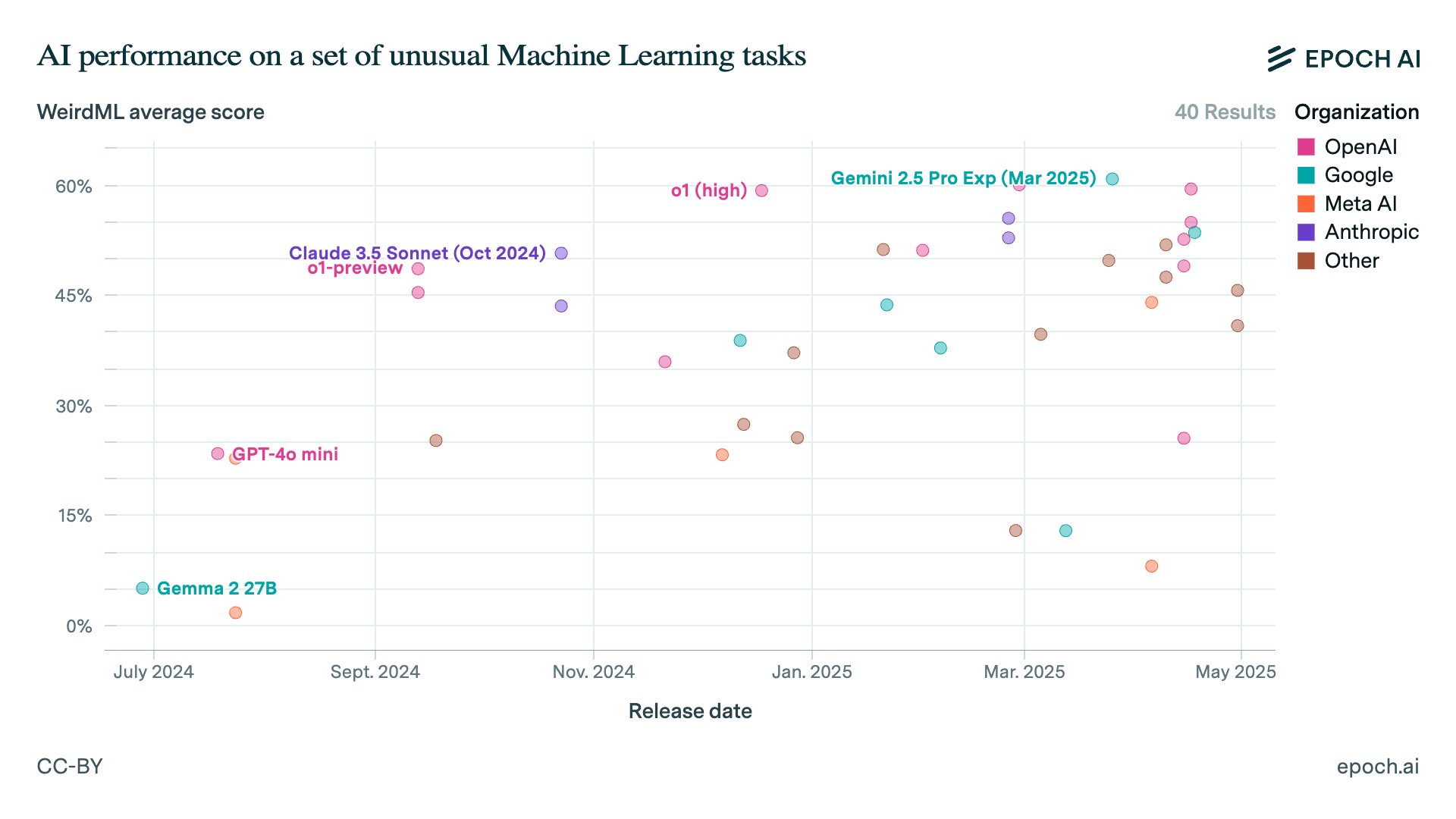

Epoch AI Benchmark Platform Updated: Epoch AI has updated its benchmark platform, adding evaluation items such as Aider Polyglot, WeirdML, Balrog, and Factorio Learning Environment. These new benchmarks introduce external leaderboard data, providing a more comprehensive perspective for evaluating LLM performance. (Source: scaling01)

Hugging Face Releases AI Agent Course: Hugging Face has released an AI Agent course covering Agent fundamentals, LLMs, model families, frameworks (smolagents, LangGraph, LlamaIndex), observability, evaluation, and Agentic RAG use cases. It includes a final project and benchmarks, providing a systematic resource for learning to build AI Agents. (Source: GitHub Trending, huggingface)

💼 Business

OpenAI Acquires AI Coding Assistant Windsurf: OpenAI has agreed to acquire Windsurf (formerly Codeium), a developer of AI coding assistants, for approximately $3 billion, marking OpenAI’s largest acquisition to date. This move aims to solidify OpenAI’s position in the AI coding field, gain Windsurf’s user base and code evolution data, and lay the groundwork for future AI coding Agent development. (Source: 36氪, Bloomberg, 智东西)

OpenAI Abandons Plan for Full Commercialization: OpenAI announced it is abandoning its plan to fully convert its parent company into a for-profit entity. It will maintain the structure where the non-profit parent controls the for-profit subsidiary and will convert the subsidiary into a “public benefit corporation.” This move is a compromise following discussions with regulators and various parties, impacting corporate governance and future financing strategies, and is also related to opposition from figures like Elon Musk. (Source: steph_palazzolo, 36氪)

CloudWalk Technology Faces Layoffs and Losses: Financial reports from veteran AI company CloudWalk Technology show a significant decline in revenue and expanded losses, along with layoffs and executive pay cuts. This reflects the profitability challenges and market competition pressure faced by AI startups, where “survival” has become the primary goal for many AI companies at this stage, potentially signaling the bursting of an AI startup bubble. (Source: 36氪)

🌟 Community

AI Deepfakes Cause Trust Crisis and “Plausible Deniability” Risk: The community discusses the increasing realism of AI deepfake technology, making it difficult for the public to distinguish real from fake information and causing a trust crisis. A greater concern is that individuals or institutions might use AI deepfakes as a “plausible deniability” excuse for their misconduct, posing challenges for fact-checking and legal accountability. (Source: Reddit r/ArtificialInteligence)

OpenAI Internal Tests Show Worsening ChatGPT Hallucination Problem: Reports indicate that OpenAI’s internal tests show ChatGPT’s hallucination problem is worsening, and the reason is unclear. This finding raises community concerns about model reliability and interpretability and suggests that even leading models still face fundamental challenges. (Source: Reddit r/artificial)

Community Concerns About Potential Ad Injection in AI Model Training Data: The community discusses the possibility of advertisements or biased information being intentionally injected into future AI model training data, leading to model outputs containing implicit promotions or specific viewpoints. This raises concerns about model transparency, safety, and business models, as well as the advantages of open-source models in this regard. (Source: Reddit r/LocalLLaMA)

Discussion on AI Agent Concept Hype vs. Practical Deployment Difficulty: The community is actively discussing the gap between the hype surrounding the AI Agent concept and the reality of practical deployment. Discussions point out that many “Agents” are merely repackaged existing technologies, and enterprises face challenges in building and deploying true Agents, including technical reliability, cost control, and complexity, requiring a pragmatic assessment of their business value. (Source: 36氪, Reddit r/ArtificialInteligence)

Controversy Surrounding Open-Source Tools like Ollama and OpenWebUI: The community discusses the pros and cons of Ollama as a tool for running LLMs locally, including its model storage format, synchronization issues with llama.cpp, and default configurations. Meanwhile, OpenWebUI’s license change, which adds restrictions for commercial users, has sparked community discussion about the spirit of open source and project sustainability. (Source: Reddit r/LocalLLaMA)

Machine Learning Practitioners’ Anxiety About Dataset Acquisition: Machine learning practitioners express anxiety on social media about acquiring high-quality datasets, believing data is the “ceiling” for model performance, while non-technical managers often underestimate the complexity of data work, viewing AI as a “magic wand.” (Source: Reddit r/MachineLearning)

Challenges in Managing and Reviewing AI-Generated Code: With the popularization of AI-generated code, the community discusses how to effectively manage and review the large volume of code produced by AI. Developers need to establish processes and tools to ensure the quality and correctness of AI code, and the focus of work may shift from writing code to reviewing and verifying it. (Source: matvelloso, finbarrtimbers)

Gap Between Actual RAG Application Performance and User Expectations: Some users report that when using RAG to process personal documents, the model’s performance falls short of expectations and it cannot accurately answer questions whose answers are contained in the documents. This indicates that RAG still faces challenges when dealing with specific, non-public datasets, and that actual performance differs from users’ experience with general-purpose models. (Source: Reddit r/OpenWebUI)

💡 Others

Microsoft PowerToys Updated with New Features like Command Palette: Microsoft has released PowerToys version 0.90, adding the Command Palette (CmdPal) module as an evolution of PowerToys Run, enhancing quick launching and extensibility. It also includes improvements to Color Picker, Peek file deletion, New+ template variables, and other features to boost Windows user productivity. (Source: GitHub Trending)

Nvidia Plans to End CUDA Support for Older GPUs: Nvidia announced plans to end CUDA support for Maxwell, Pascal, and Volta series GPUs in the next major Toolkit version. This move will affect users still relying on this older hardware for AI/ML work, potentially driving infrastructure upgrades while also sparking community discussion about hardware obsolescence and compatibility. (Source: Reddit r/LocalLLaMA)

Google Nest Hub Devices Fail to Integrate Gemini: Users complain that Google Nest Hub smart display devices still use the outdated Google Assistant and have not integrated the more powerful Gemini model. Although devices like Pixel phones support Gemini, the Nest Hub series lacks an upgrade roadmap, raising user questions about the fragmentation of Google’s product ecosystem and its commitment to AI popularization. (Source: Reddit r/ArtificialInteligence, Reddit r/artificial)