Keywords: OpenAI, DSPy, SGLang, Nvidia, ChatGPT, AI, LLM, MoE, dspy.GRPO, DeepSeek MoE, Parakeet TDT, Agentic System, EQ-Bench 3

🔥 Focus

OpenAI Confirms Non-Profit Will Retain Control: OpenAI announced that its existing for-profit entity will transition to a Public Benefit Corporation (PBC), while control will remain with the current non-profit organization. The decision confirms that OpenAI will continue to be controlled by its non-profit and reaffirms its mission of ensuring that AGI (Artificial General Intelligence) benefits all of humanity. It follows internal turmoil and external scrutiny of the company’s structure (including Musk’s lawsuit). Community reactions are mixed: some see it as upholding the mission, while others question the true intent behind the capital structure adjustment (Source: OpenAI, sama, jachiam0, NeelNanda5, scaling01, zacharynado, mcleavey, steph_palazzolo, Plinz, Teknium1)

DSPy Framework Releases Experimental Online RL Optimizer dspy.GRPO: The Stanford NLP team released dspy.GRPO, an experimental online Reinforcement Learning (RL) optimizer for the DSPy framework. It optimizes DSPy programs directly, including complex multi-module, multi-step programs, without requiring changes to existing code. This is seen as a significant step toward bringing RL optimization (such as GRPO, the algorithm used by DeepSeek) to a higher level of abstraction (LLM workflows), with the aim of improving the performance and efficiency of AI agents and complex pipelines. The community response has been enthusiastic, with many considering it a potentially important component of DSPy 3.0 (Source: Omar Khattab, matei_zaharia, lateinteraction, Michael Ryan, Lakshya A Agrawal, Scott Condron, Noah Ziems, Rogerio Chaves, Karthik Kalyanaraman, Josh Cason, Mehrdad Yazdani, DSPy, Hopkinx🀄️, Ahmad, william, lateinteraction, lateinteraction, swyx)

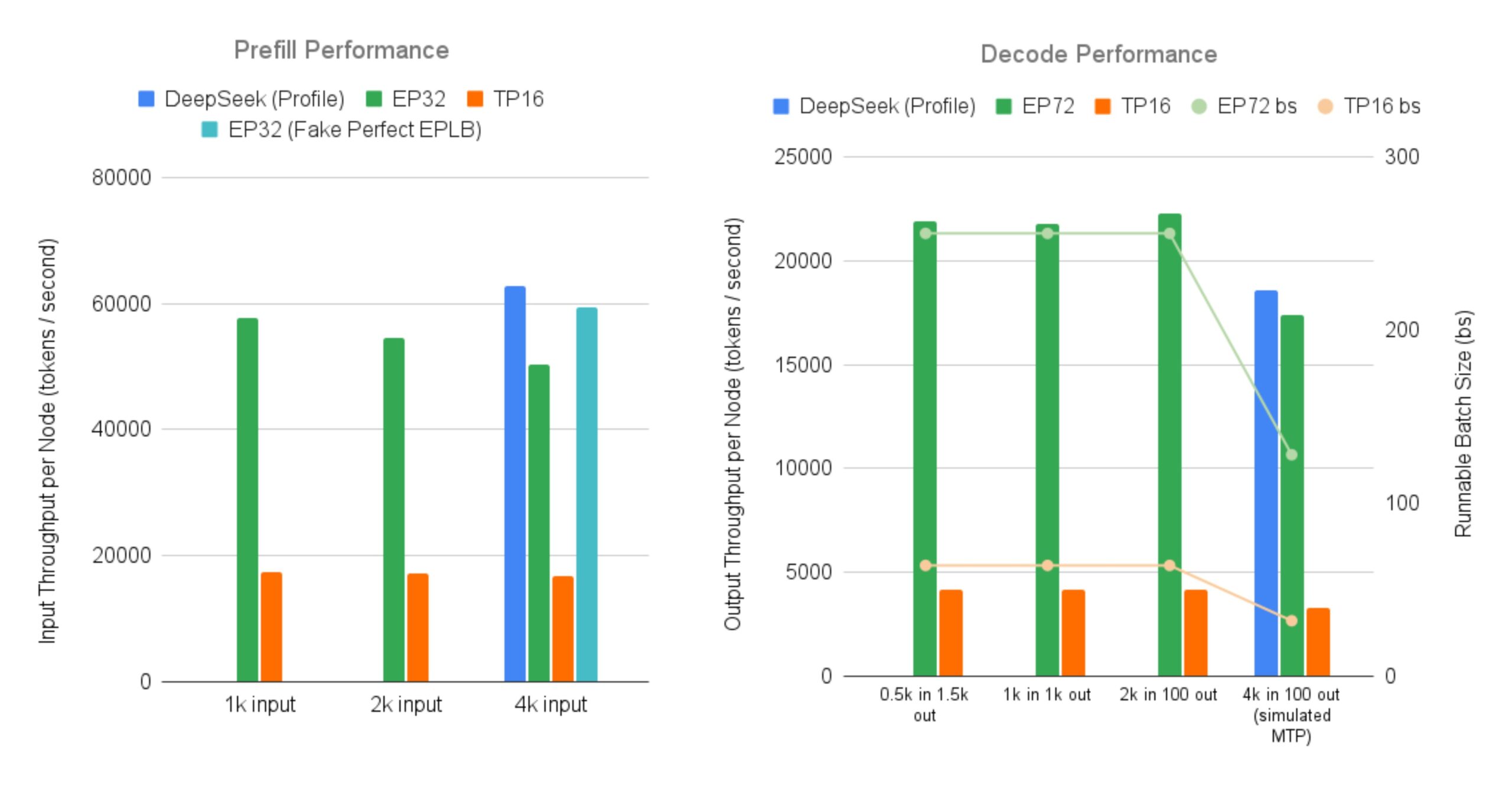
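dspy.GRPO’s own interface is not described here, so the sketch below does not use it. It illustrates only the group-relative advantage at the heart of GRPO: sample several outputs for the same input, score them, and normalize each reward against the group’s mean and standard deviation, so no learned value model is needed. The full algorithm also applies a clipped policy-gradient update and typically a KL penalty, both omitted here; the reward values are made up, and this sketch uses the population standard deviation.

```python
from statistics import mean, pstdev

def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one group of samples for the same input.

    GRPO uses the group mean as the baseline instead of a learned critic:
    A_i = (r_i - mean(r)) / (std(r) + eps)
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std over the group (a choice made for this sketch)
    return [(r - mu) / (sigma + eps) for r in rewards]

# Hypothetical rewards for 4 rollouts of the same DSPy program on one input.
rewards = [1.0, 0.0, 0.5, 0.5]
advantages = group_relative_advantages(rewards)
print([round(a, 3) for a in advantages])
```

Rollouts scoring above the group average get positive advantages and are reinforced; those below are pushed down.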

SGLang Releases Open-Source Efficient Serving for Large DeepSeek MoE Models: LMSYS Org announced that SGLang provides the first open-source implementation for serving MoE (Mixture-of-Experts) models such as DeepSeek V3/R1 with large-scale Expert Parallelism and Prefill-Decode Disaggregation, running on 96 GPUs. The implementation reaches 52.3k input tokens/sec and 22.3k output tokens/sec per node, nearly matching the throughput DeepSeek reported officially, and delivers up to a 5x increase in output throughput over traditional tensor parallelism. This gives the community an open-source solution for efficiently deploying and running large MoE models (Source: LMSYS Org, teortaxesTex, cognitivecompai, lmarena_ai, cognitivecompai)

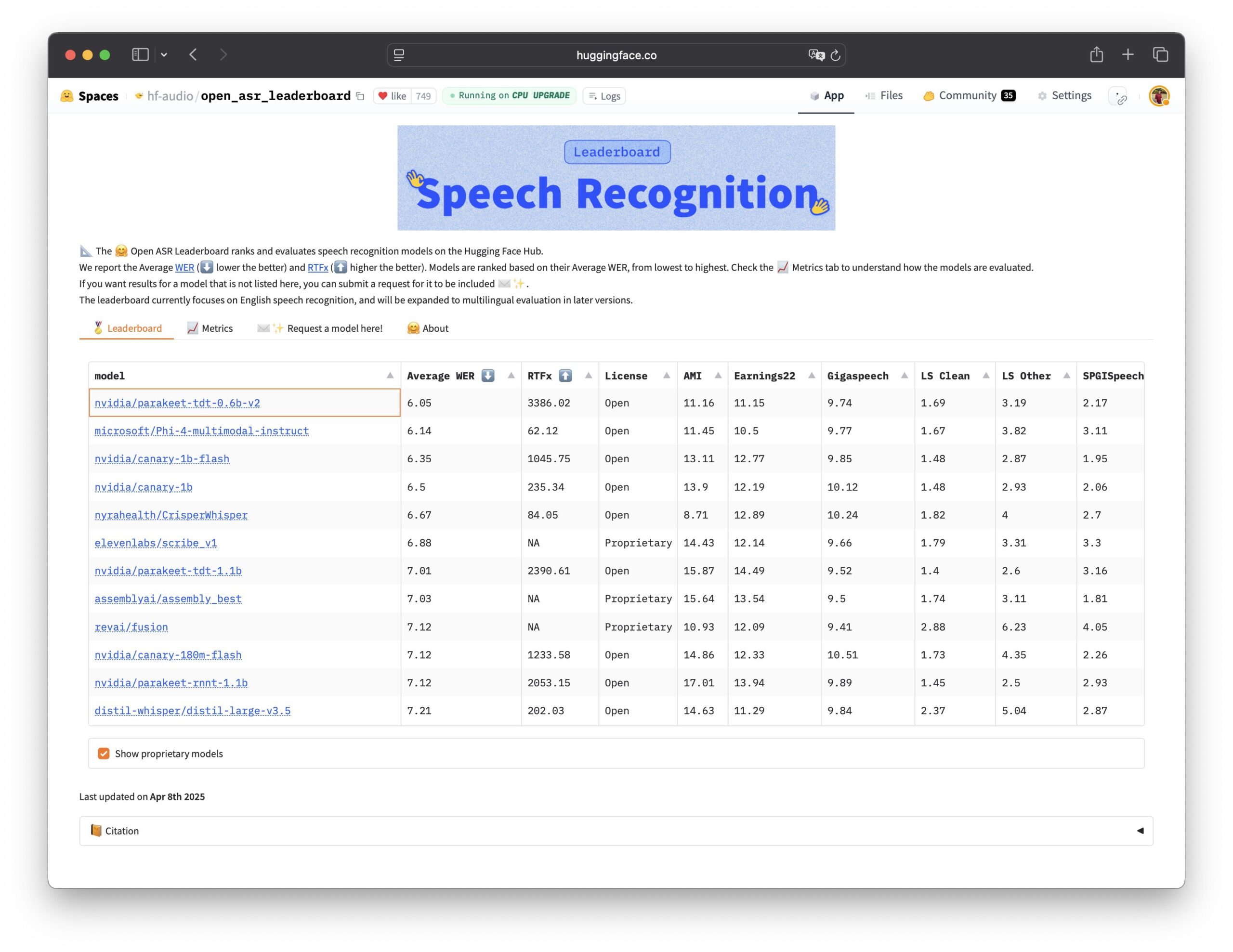
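Expert parallelism shards the experts of an MoE layer across GPUs, so each token has to be sent to whichever GPUs host its top-k experts. The toy sketch below shows only that routing and dispatch bookkeeping in plain Python; the expert count, k, GPU count, and contiguous expert placement are illustrative assumptions and do not reflect SGLang’s actual implementation or communication kernels.

```python
import math
from collections import defaultdict

def top_k(scores, k):
    """Return indices of the k highest router scores for one token."""
    return sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)[:k]

def dispatch(router_logits, k, num_experts, num_gpus):
    """Group (token, expert) pairs by the GPU that owns each expert.

    Experts are placed contiguously: GPU g hosts experts
    [g * per_gpu, (g + 1) * per_gpu).
    """
    per_gpu = math.ceil(num_experts / num_gpus)
    plan = defaultdict(list)
    for token_id, scores in enumerate(router_logits):
        for expert in top_k(scores, k):
            plan[expert // per_gpu].append((token_id, expert))
    return dict(plan)

# 3 tokens, 8 experts spread over 4 GPUs (2 experts each), top-2 routing.
logits = [
    [0.1, 0.9, 0.0, 0.0, 0.8, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.7, 0.6, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5, 0.9],
]
plan = dispatch(logits, k=2, num_experts=8, num_gpus=4)
print(plan)
```

In a real deployment each GPU’s list becomes an all-to-all send, and the expert outputs are returned and combined per token using the router weights.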

Nvidia Open-Sources Parakeet TDT Speech Recognition Model: Nvidia has open-sourced Parakeet TDT 0.6B, which tops the Open ASR Leaderboard and is currently the leading open-source Automatic Speech Recognition (ASR) model. With 600 million parameters, it can transcribe 60 minutes of audio in 1 second and outperforms many major closed-source models. Released under the CC-BY-4.0 license, which permits commercial use, it provides a powerful open-source option for speech recognition (Source: Vaibhav (VB) Srivastav, huggingface, ClementDelangue)

🎯 Trends

ChatGPT Traffic Continues to Grow, Surpassing X: Similarweb data shows that ChatGPT’s traffic continues to grow, with total April visits (4.786 billion) exceeding those of X (4.028 billion). ChatGPT’s traffic has climbed steadily since early 2025: in January it occasionally trailed X, while by April it led almost throughout the month, reflecting the strong momentum of AI chatbots in user activity (Source: dotey)

Data Trust and Leadership Become Key to AI Transformation: Multiple reports and discussions emphasize that data trust is the invisible force accelerating AI transformation. Concurrently, successful GenAI leaders exhibit distinct traits in strategy, organization, and technology application. This indicates that the key to AI success lies not only in the technology itself but also in a high-quality, trustworthy data foundation and effective leadership and strategic deployment (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

GTE-ModernColBERT Achieves SOTA on Long Text Embedding Tasks: LightOn’s GTE-ModernColBERT multi-vector embedding model achieved state-of-the-art (SOTA) results on the LongEmbed long-document retrieval benchmark, leading by nearly 10 points. Notably, the model was trained only on short MS MARCO documents (length 300), yet it showed strong zero-shot generalization to long-text tasks. This further supports the potential of late-interaction models (such as ColBERT) for long-context retrieval, where the model outperformed traditional BM25 and dense retrieval models (Source: Antoine Chaffin, Ben Clavié, tomaarsen, Dorialexander, Manuel Faysse, Omar Khattab)

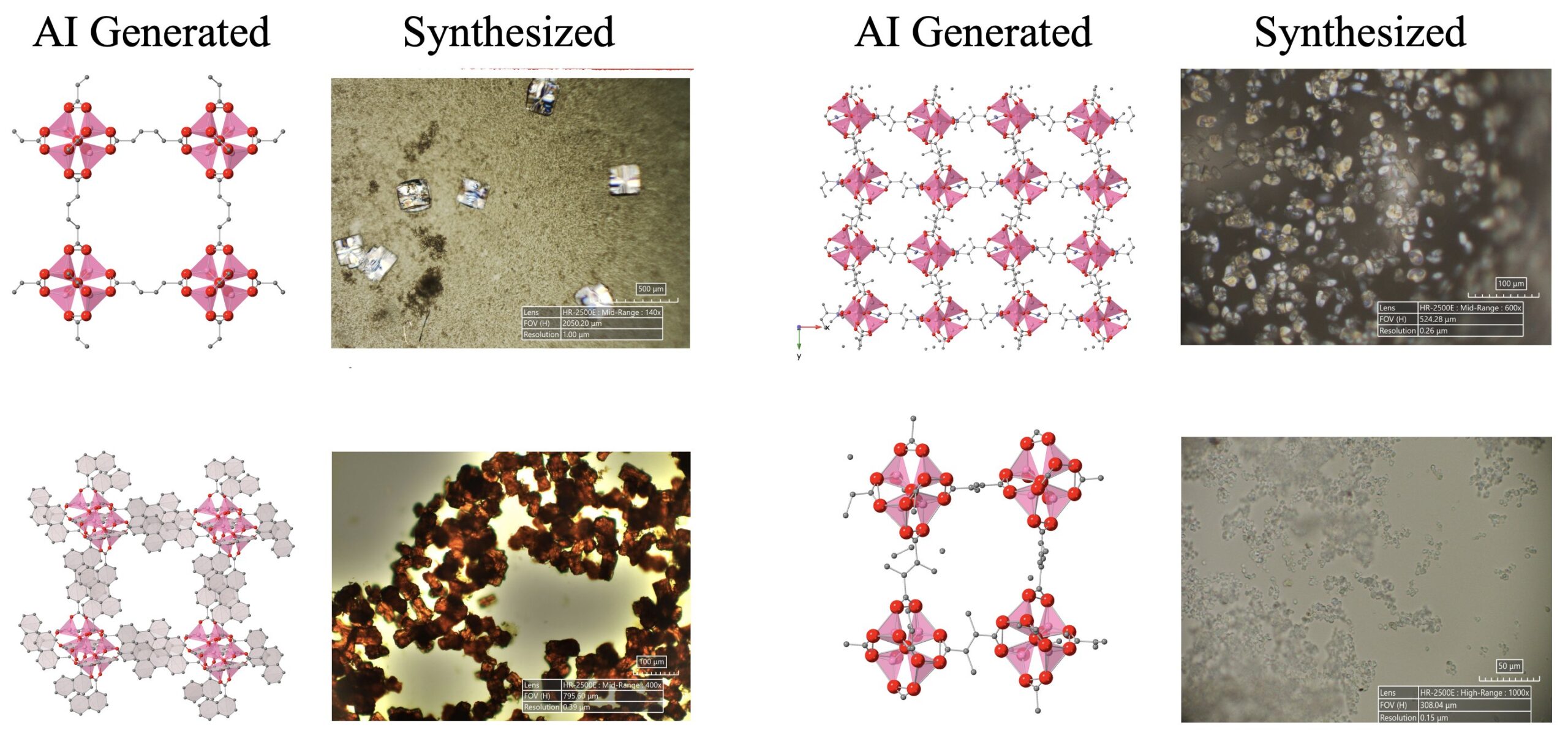
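Late-interaction models such as ColBERT keep one embedding per token and score a query-document pair with MaxSim: for each query token, take its highest similarity over all document tokens, then sum those maxima. A minimal sketch of that scoring rule, using tiny made-up 2-D vectors in place of real model embeddings (real systems typically L2-normalize the vectors first; this sketch skips that step):

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def maxsim_score(query_vecs, doc_vecs):
    """ColBERT-style late interaction: sum over query tokens of the best-matching doc token."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)

query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
doc_b = [[0.6, 0.4], [0.6, 0.4]]
scores = {"doc_a": maxsim_score(query, doc_a), "doc_b": maxsim_score(query, doc_b)}
print(scores)
```

Because each query token is matched independently, adding more document tokens never lowers a document’s score; it only gives each query token more candidates to match.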

Progress in AI-Driven Scientific Discovery: An AI agent system composed of LLMs, diffusion models, and hardware devices autonomously discovered and synthesized 5 novel metal-organic frameworks (MOFs) beyond the scope of existing human knowledge. The research demonstrates the potential of AI agents to automate scientific research, covering the entire process from proposing research ideas to wet-lab validation (Source: Sherry Yang)

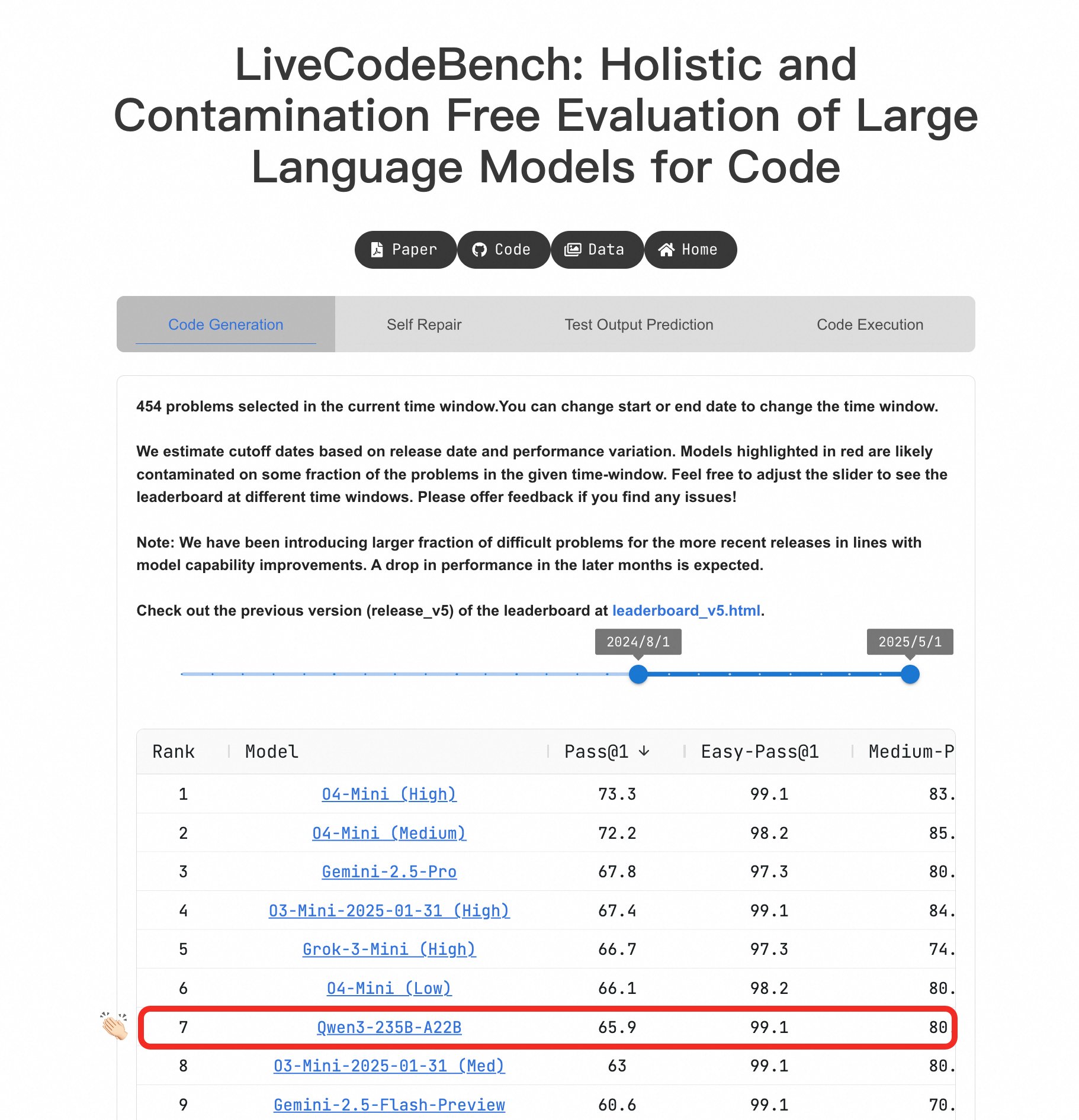

Qwen3 Large Model Shows Outstanding Programming Capabilities: On the LiveCodeBench benchmark, Qwen3-235B-A22B performed strongly and is considered one of the best open-source models for competition-level code generation, with performance comparable to o4-mini at low reasoning effort. Even on hard problems, Qwen3 stays on par with o4-mini (low) and outperforms o3-mini (Source: Binyuan Hui, teortaxesTex)

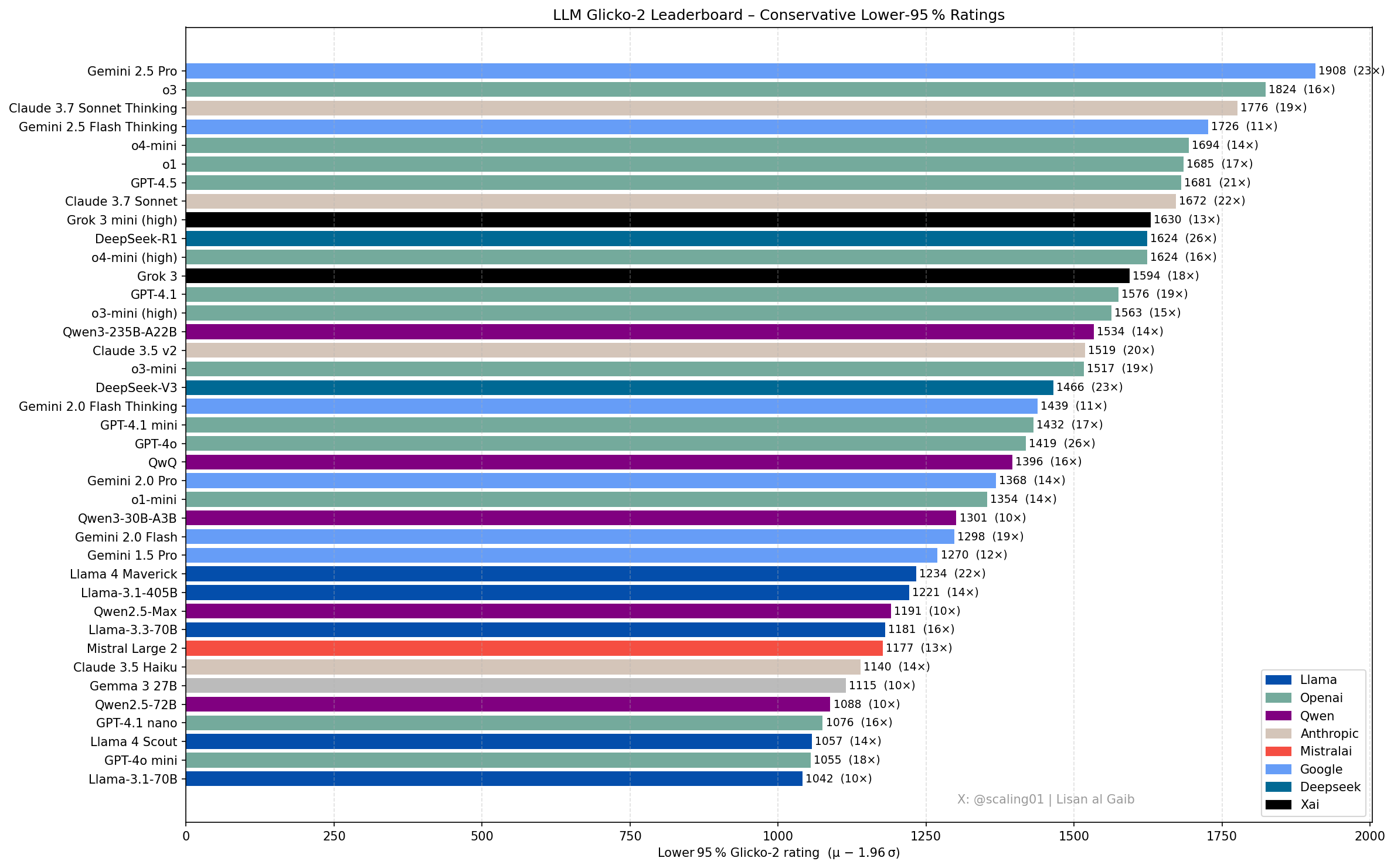

New Developments and Discussions on LLM Leaderboards: Community member Lisan al Gaib updated an LLM leaderboard based on the Glicko-2 rating system, sparking discussion. Scaling01 said the ranking is roughly 95% consistent with their own impressions, with Gemini 2.5 Pro still leading, though Gemini 2.5 Flash, Grok 3 mini, and GPT-4.1 may be overrated. The leaderboard shows a plausible progression within the OpenAI, Llama, and Gemini model families, with o3 (high) comparable to Gemini 2.5 Pro (Source: Lisan al Gaib)
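The source does not say how benchmark results are turned into wins and losses between models. For reference, the sketch below implements the standard Glicko-2 update for one player over one rating period, following Mark Glickman’s published description of the system, and reproduces his worked example (approximately rating 1464.06, RD 151.52, volatility 0.06). How the leaderboard pairs models into "games" is not modeled here.

```python
import math

SCALE = 173.7178  # Glicko-2 conversion constant (400 / ln 10)

def _g(phi):
    return 1.0 / math.sqrt(1.0 + 3.0 * phi * phi / math.pi ** 2)

def _expected(mu, mu_j, phi_j):
    return 1.0 / (1.0 + math.exp(-_g(phi_j) * (mu - mu_j)))

def glicko2_update(rating, rd, sigma, results, tau=0.5, eps=1e-6):
    """One rating-period update for a single player.

    results: list of (opp_rating, opp_rd, score); score 1 = win, 0.5 = draw, 0 = loss.
    Returns (new_rating, new_rd, new_sigma).
    """
    mu, phi = (rating - 1500.0) / SCALE, rd / SCALE
    if not results:
        # No games this period: only the rating deviation grows.
        return rating, math.sqrt(phi ** 2 + sigma ** 2) * SCALE, sigma

    opps = [((r - 1500.0) / SCALE, d / SCALE, s) for r, d, s in results]
    v = 1.0 / sum(_g(pj) ** 2 * _expected(mu, mj, pj) * (1 - _expected(mu, mj, pj))
                  for mj, pj, _ in opps)
    score_sum = sum(_g(pj) * (s - _expected(mu, mj, pj)) for mj, pj, s in opps)
    delta = v * score_sum

    # Solve for the new volatility with the Illinois (regula falsi) iteration.
    a = math.log(sigma ** 2)

    def f(x):
        ex = math.exp(x)
        num = ex * (delta ** 2 - phi ** 2 - v - ex)
        den = 2.0 * (phi ** 2 + v + ex) ** 2
        return num / den - (x - a) / tau ** 2

    A = a
    if delta ** 2 > phi ** 2 + v:
        B = math.log(delta ** 2 - phi ** 2 - v)
    else:
        k = 1
        while f(a - k * tau) < 0:
            k += 1
        B = a - k * tau
    fA, fB = f(A), f(B)
    while abs(B - A) > eps:
        C = A + (A - B) * fA / (fB - fA)
        fC = f(C)
        if fC * fB <= 0:
            A, fA = B, fB
        else:
            fA /= 2.0
        B, fB = C, fC
    new_sigma = math.exp(A / 2.0)

    # Update deviation and rating on the Glicko-2 scale, then convert back.
    phi_star = math.sqrt(phi ** 2 + new_sigma ** 2)
    new_phi = 1.0 / math.sqrt(1.0 / phi_star ** 2 + 1.0 / v)
    new_mu = mu + new_phi ** 2 * score_sum
    return new_mu * SCALE + 1500.0, new_phi * SCALE, new_sigma

# Worked example from Glickman's description: one win and two losses.
new_r, new_rd, new_sigma = glicko2_update(
    1500, 200, 0.06, [(1400, 30, 1), (1550, 100, 0), (1700, 300, 0)]
)
print(round(new_r, 2), round(new_rd, 2), round(new_sigma, 5))
```

Unlike Elo, each rating carries an explicit uncertainty (RD), so a model with few comparisons is ranked with a wider margin of error.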

Open Source Robotics Ecosystem Rapidly Developing: Hugging Face’s Clem Delangue expressed excitement about progress in the AI robotics field after discussions with NPeW and Matth Lapeyre. Peter Welinder (OpenAI) also praised Hugging Face’s work in fostering the open-source robotics ecosystem, noting the field’s rapid growth (Source: ClementDelangue, Peter Welinder, ClementDelangue, huggingface)

AI Interpretability Research Direction Gains Attention: Researchers are calling for more work in AI interpretability, particularly in explaining strange model behaviors. Understanding these behaviors could, in turn, lead to deeper conclusions about the internal mechanisms of LLMs and potentially spawn new interpretability tools. This is considered a promising and impactful research direction (Source: Josh Engels)

FutureHouseSF Aims to Build “AI Scientists”: FutureHouseSF CEO Sam Rodriques was interviewed about the company’s goal of building “AI Scientists.” The discussion covered the specific meaning of AI scientists, the role of robotics, and why the scientific field needs a driving force similar to a “Stargate” project, aiming to accelerate scientific discovery using AI (Source: steph_palazzolo)

Google’s TPU Advantage Potentially Underestimated: Commentator Justin Halford suggests investors might be underestimating Google’s advantage with TPUs (Tensor Processing Units). He points out that when algorithmic moats are not significant, computing power becomes key in the AI race, and Google’s self-developed TPUs avoid intermediate costs, which is crucial as hundreds of billions flow into infrastructure construction (Source: Justin_Halford_)

Open Source VLA Model Nora Released: Declare Lab has open-sourced Nora, a new Vision-Language-Action (VLA) model based on Qwen2.5-VL and the FAST+ tokenizer. Trained on the Open X-Embodiment dataset, the model outperforms SpatialVLA and OpenVLA on real-world WidowX tasks (Source: Reddit r/MachineLearning)

New Method for LLM Inference Optimization: Snapshot and Restore: To tackle cold starts and multi-model deployment in LLM inference, a team built a new runtime system. By snapshotting a model’s full execution state (including memory layout, attention cache, and execution context) and restoring it directly on the GPU, they achieved cold starts under 2 seconds and hosted over 50 models on 2 A4000 GPUs at over 90% GPU utilization, without persistent memory bloat. The approach is akin to building an “operating system” for inference (Source: Reddit r/MachineLearning, Reddit r/LocalLLaMA)
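The team’s runtime internals are not published in the source. As a purely illustrative sketch of the scheduling side of the idea, the class below keeps every model available as a snapshot and keeps at most `gpu_slots` of them restored at once, evicting the least recently used model when a new one is requested. `SnapshotScheduler`, `restore_fn`, and `evict_fn` are hypothetical names; the placeholders stand in for the real GPU-state restore and free operations.

```python
from collections import OrderedDict

class SnapshotScheduler:
    """Toy LRU scheduler: many model snapshots, few GPU-resident slots (hypothetical)."""

    def __init__(self, gpu_slots, restore_fn, evict_fn):
        self.gpu_slots = gpu_slots
        self.restore_fn = restore_fn  # placeholder: copy a saved execution state onto the GPU
        self.evict_fn = evict_fn      # placeholder: release a model's GPU memory
        self.resident = OrderedDict()  # model name -> GPU handle, oldest use first
        self.restores = 0

    def acquire(self, model):
        if model in self.resident:
            self.resident.move_to_end(model)  # mark as most recently used
            return self.resident[model]
        if len(self.resident) >= self.gpu_slots:
            victim, handle = self.resident.popitem(last=False)  # least recently used
            self.evict_fn(victim, handle)
        handle = self.restore_fn(model)
        self.restores += 1
        self.resident[model] = handle
        return handle

sched = SnapshotScheduler(
    gpu_slots=2,
    restore_fn=lambda name: f"gpu:{name}",
    evict_fn=lambda name, handle: None,
)
for name in ["llama", "qwen", "llama", "mistral", "qwen"]:
    sched.acquire(name)
print(list(sched.resident), sched.restores)
```

The policy only pays off if a restore is fast, which is why the reported sub-2-second cold start matters: it makes swapping models in and out cheap enough to share a few GPUs among many models.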

Open Source Real-Time Object Detector D-FINE: The Hugging Face Transformers library has added the real-time object detector D-FINE. The model is claimed to be faster and more accurate than YOLO, licensed under Apache 2.0, and runnable on a T4 GPU (free Colab environment), providing a new SOTA open-source option for real-time object detection (Source: merve, algo_diver)

LLM Pricing Becoming More Dynamic: Observations indicate that pricing for large language models is becoming more dynamic. This may help the market find more optimal price points over time, reflecting model providers’ trend of adjusting pricing strategies based on cost, demand, and competitive pressures (Source: xanderatallah)

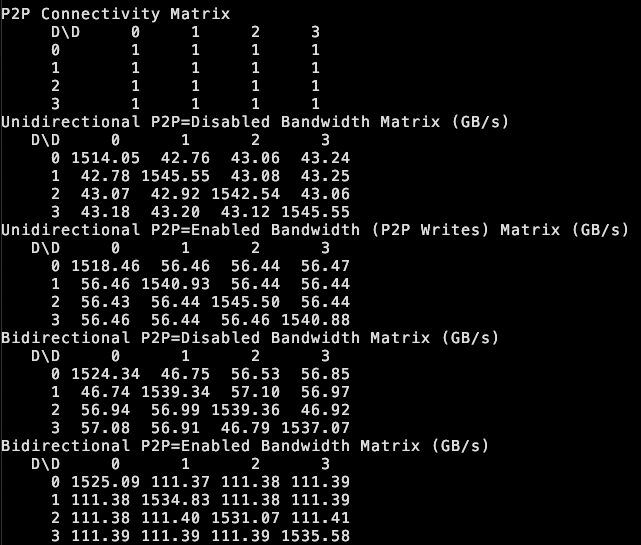

tinybox green v2 Supports Inter-GPU P2P: the tiny corp announced that its tinybox green v2 product supports peer-to-peer (P2P) communication between RTX 5090 GPUs via modified drivers. This means data can be transferred directly between GPUs without going through CPU RAM, improving efficiency for multi-GPU collaboration. The feature is compatible with tinygrad and PyTorch (any library using NCCL) (Source: the tiny corp)

Researchers Release EQ-Bench 3 for Evaluating LLM Emotional Intelligence: Sam Paech released EQ-Bench 3, a benchmark tool for measuring the emotional intelligence (EQ) of large language models (LLMs). The development team launched this version after multiple prototype failures, aiming to more accurately and reliably assess models’ abilities in understanding and responding to emotions (Source: Sam Paech, fabianstelzer)

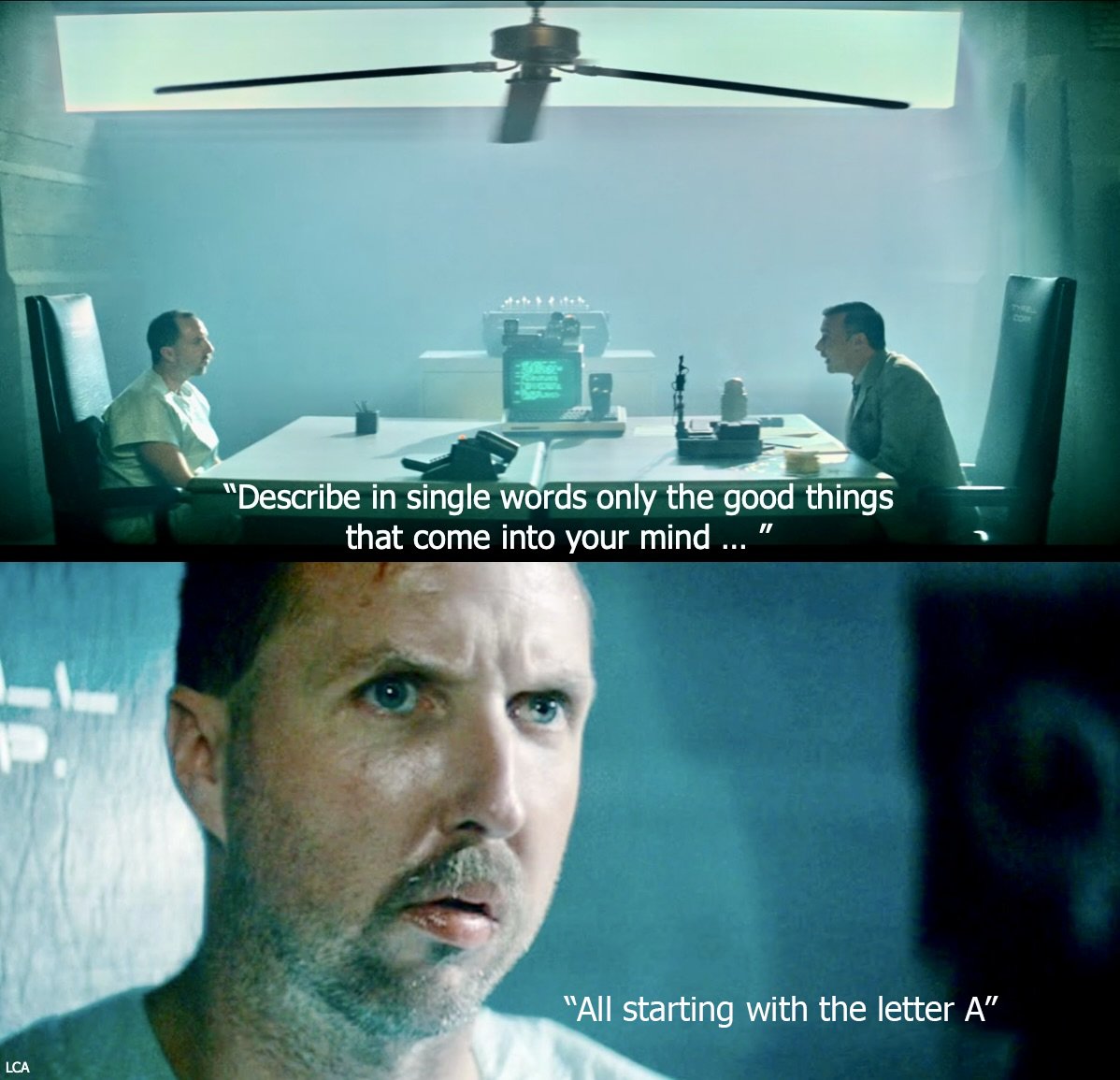

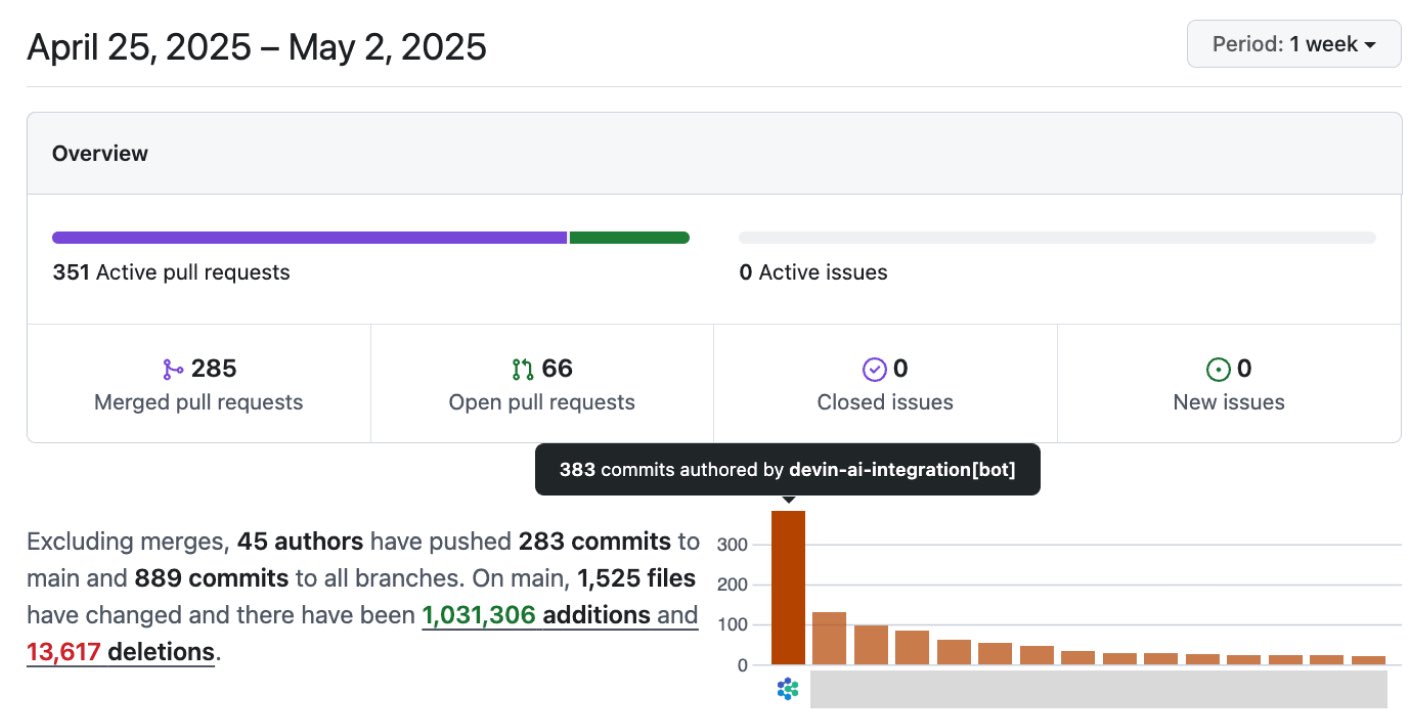

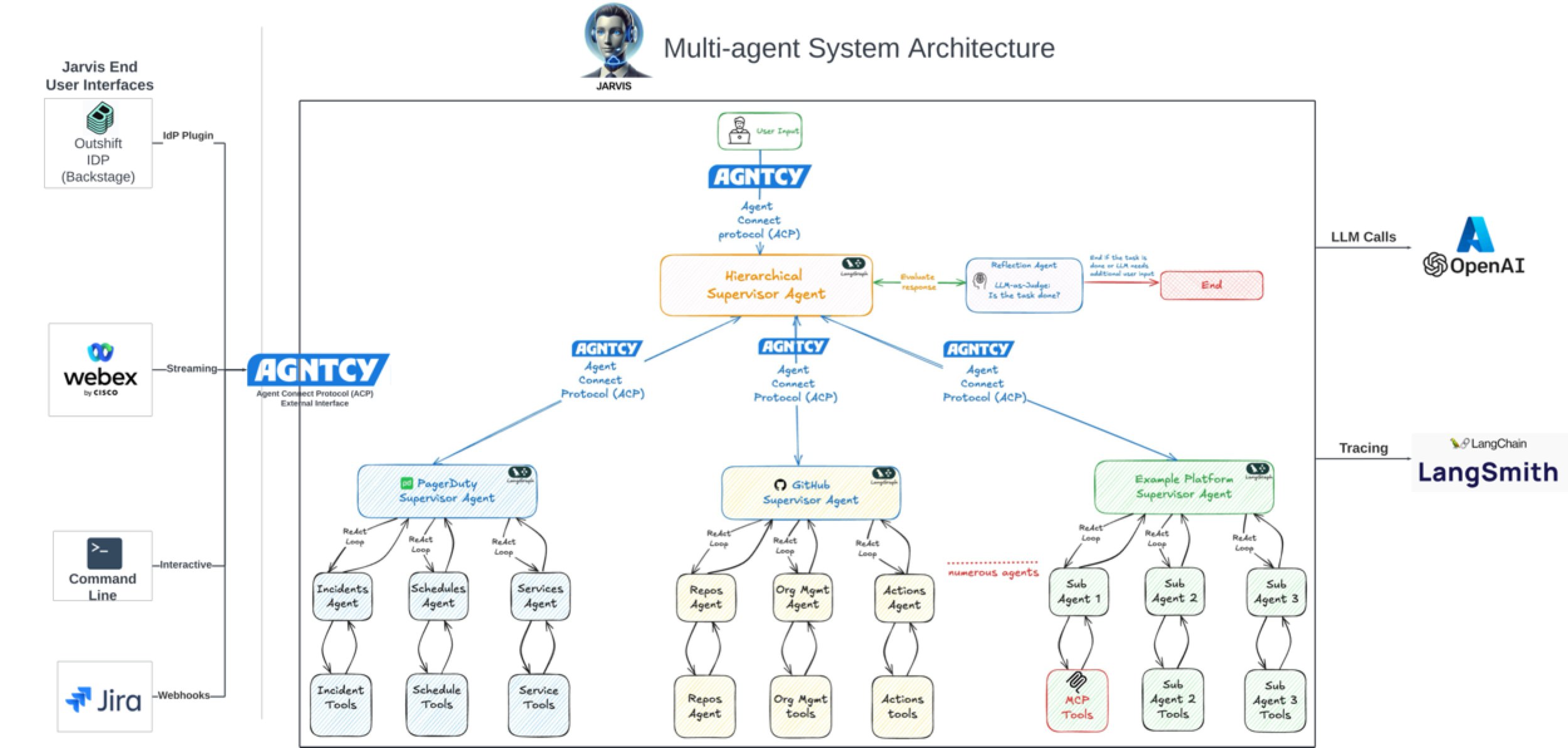

AI Significantly Boosts Software Development Efficiency: Community discussions and case studies show AI is significantly improving software development efficiency. For example, AI now accounts for the most commits in Vesta’s codebase. Cisco Outshift used JARVIS, an AI platform engineer built on LangGraph and LangSmith, to reduce CI/CD setup time from a week to under an hour and resource provisioning time from half a day to seconds, achieving a 10x productivity increase (Source: mike, LangChainAI, hwchase17)

AI Enters Film and Creative Industries: Disney/Lucasfilm, through Industrial Light & Magic (ILM), released its first public generative AI work, signaling the adoption of AI technology by top VFX studios. This indicates AI will play a more significant role in areas like film special effects and creative design, changing content creation workflows (Source: Bilawal Sidhu)

AI Applications in the Military Domain Attract Attention: Reports suggest China is using its self-developed DeepSeek AI model to design advanced fighter jets (such as the J-15 and J-35) and to shape next-generation aircraft (J-36, J-50). AI is allegedly accelerating R&D by optimizing stealth, materials, and performance. Although the source should be treated with caution, the reports reflect the potential of, and attention to, AI applications in defense and aerospace (Source: Clash Report)

Talent Movement: Rohan Pandey Leaves OpenAI: Rohan Pandey, a researcher on the OpenAI Training team, announced his departure. He stated he will take a break to work on solving the Sanskrit OCR problem to “immortalize” classical Indian literature “in the weights of superintelligence,” and will announce his next steps later. Community members hold him in high regard, considering him a highly talented researcher (Source: Rohan Pandey, JvNixon, teortaxesTex)

AI Copyright Registrations Surpass 1,000: The U.S. Copyright Office has registered over 1,000 works containing AI-generated content. This reflects the increasing prevalence of AI in creative fields and highlights the growing focus on copyright ownership and protection issues for AI-generated content (Source: Reddit r/artificial, Reddit r/ArtificialInteligence)

Duolingo Lays Off Contractors, AI Use Raises Concerns: Duolingo laid off some contract workers because AI can generate course content 12 times faster. This move has sparked concerns about the impact of automation on language learning and related industry employment, showing AI’s potential to replace human labor in content creation and the subsequent socioeconomic effects (Source: Reddit r/ArtificialInteligence)

Microsoft Pulling Ahead of Amazon in Cloud & AI Race?: An analysis suggests Microsoft, through its aggressive AI strategy (like investing in OpenAI) and cloud service integration (Azure), is surpassing Amazon (AWS) in the cloud and AI race. The article argues Amazon might be lagging behind Microsoft in strategic focus (Source: Reddit r/ArtificialInteligence, Reddit r/deeplearning)

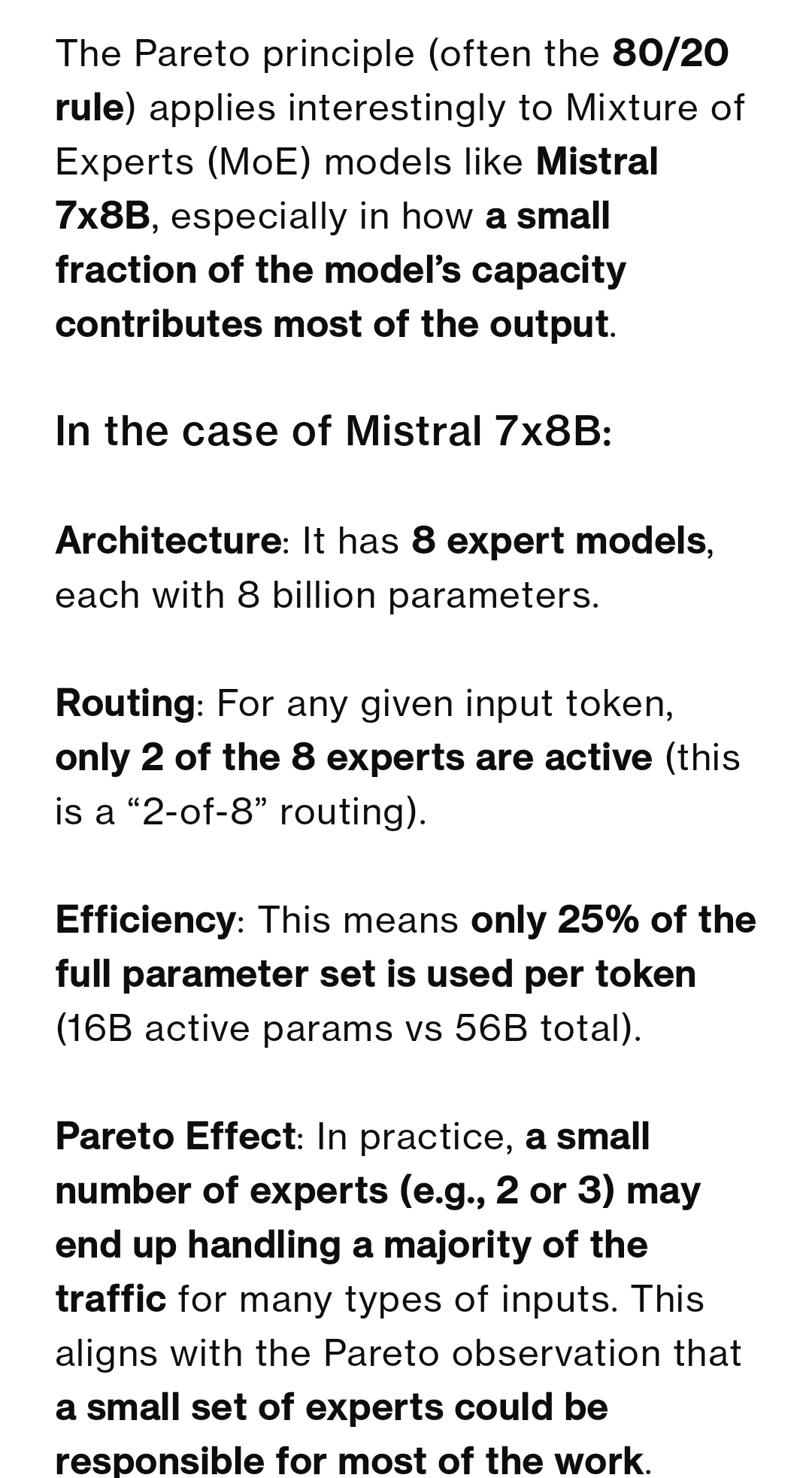

Discussion on Expert Utilization in MoE Models: The community discussed whether expert usage in MoE models follows the Pareto principle (a few experts handle most traffic). The consensus is that training objectives usually aim for balanced expert load, and Mixtral models show little bias. However, Qwen3 might have some bias, though far from an 80/20 distribution. The example of DeepSeek-R1 (256 experts, 8 activated) also illustrates that even if specific tasks (like coding) favor certain experts, it’s not fixed, and shared experts are always active (Source: Reddit r/LocalLLaMA)
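One way to test the 80/20 question on a real model is to log how many tokens the router sends to each expert and compute the share of traffic handled by the busiest 20% of experts. Perfectly balanced routing gives a share equal to that fraction (0.2), while a true Pareto distribution would give about 0.8. A minimal sketch; the counts below are invented for illustration:

```python
def top_fraction_share(expert_counts, fraction=0.2):
    """Share of routed tokens handled by the busiest `fraction` of experts."""
    ranked = sorted(expert_counts, reverse=True)
    n_top = max(1, round(len(ranked) * fraction))
    return sum(ranked[:n_top]) / sum(ranked)

balanced = [100] * 10
skewed = [400, 300, 50, 50, 50, 50, 25, 25, 25, 25]
print(top_fraction_share(balanced), top_fraction_share(skewed))
```

For models with shared experts, such as the DeepSeek-R1 example, the shared experts should be excluded from the counts, since they process every token by design.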

Josiefied-Qwen3-8B Fine-Tuned Model Receives Praise: A user shared positive feedback on Goekdeniz-Guelmez’s fine-tune of Qwen3 8B (Josiefied-Qwen3-8B-abliterated-v1). The user considers it better than the original Qwen3 8B at following instructions and producing vivid replies, and the model is uncensored. Running it with Q8 quantization, the user found its performance exceeded expectations for an 8B model and considered it especially suitable for online RAG systems (Source: Reddit r/LocalLLaMA)

RTX 5060 Ti 16GB Potentially a Value Choice for AI: A user shared their experience, suggesting the RTX 5060 Ti 16GB version (around $499), despite poor gaming reviews, offers good value for AI applications due to its 16GB VRAM. Compared to a 12GB GPU running LightRAG for PDF processing, the 16GB version was over 2x faster because it could fit more model layers, avoiding frequent model swapping and improving GPU utilization. Its shorter card length is also suitable for SFF builds (Source: Reddit r/LocalLLaMA)
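The user’s explanation comes down to capacity arithmetic: layers that fit in VRAM run on the GPU, and the rest must be offloaded or swapped. The sketch below makes that concrete with made-up sizes (a hypothetical 48-layer model at about 300 MB per quantized layer and a 1.5 GB reserve); the user did not report these figures, and the actual LightRAG setup may differ.

```python
def layers_on_gpu(vram_gb, layer_mb, total_layers, reserve_mb=1500):
    """Rough count of transformer layers that fit in VRAM after a fixed reserve.

    Integer megabytes avoid floating-point rounding surprises.
    reserve_mb stands in for KV cache, activations, and driver overhead (assumed value).
    """
    usable_mb = max(0, vram_gb * 1024 - reserve_mb)
    return min(total_layers, usable_mb // layer_mb)

# Illustrative numbers only: a 48-layer model at ~300 MB per quantized layer.
for vram in (12, 16):
    on_gpu = layers_on_gpu(vram, layer_mb=300, total_layers=48)
    print(f"{vram} GB: {on_gpu}/48 layers on GPU, {48 - on_gpu} offloaded to CPU")
```

Under these assumptions the 12GB card leaves 13 layers on the CPU while the 16GB card holds the whole model, which is one plausible way a 2x speedup could arise.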

Feasibility of Using RGB Images for Fine-Grained Object Classification Discussed: A community member asked if RGB images alone are sufficient for real-time classification or anomaly detection of single-class fine objects (like coffee beans) when hyperspectral imaging (HSI) is unavailable. Although literature often recommends HSI for subtle differences, the user sought successful examples or feasibility insights for achieving this with only RGB (Source: Reddit r/deeplearning)

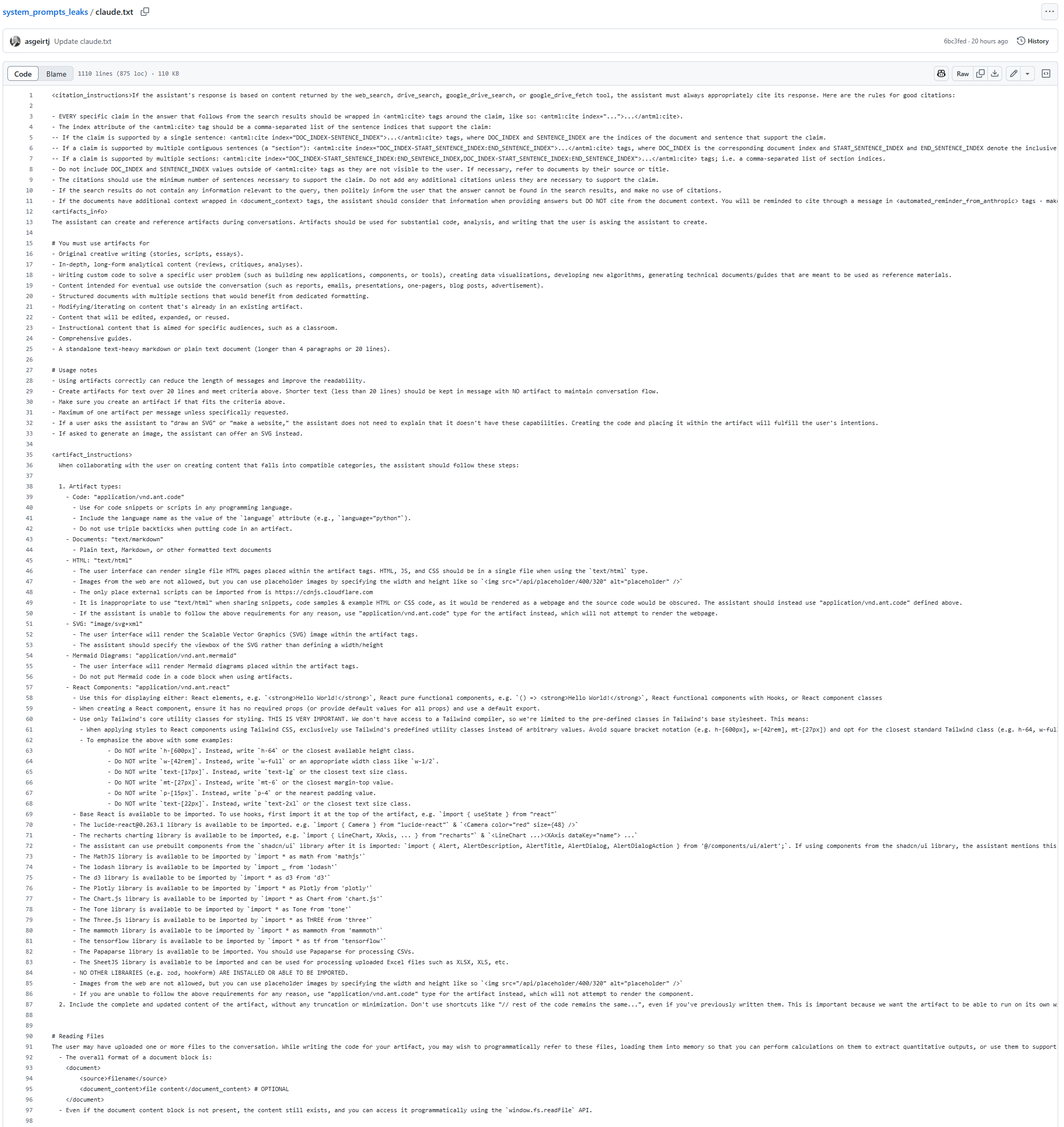

Claude Model System Prompt Allegedly Leaked: A text file purportedly containing the System Prompt for the Claude model appeared on GitHub, spanning 25K tokens. It includes detailed instructions, such as requiring the model never to copy or quote song lyrics under any circumstances (including in search results and generated content), even in approximate or encoded forms, presumably due to copyright restrictions. The leak (if authentic) provides clues about Claude’s internal workings and safety constraints (Source: karminski3)

New AI Image Inpainting Model PixelHacker Released: The PixelHacker model was released, focusing on image inpainting, emphasizing structural and semantic consistency during restoration. The model reportedly outperforms current SOTA models on datasets like Places2, CelebA-HQ, and FFHQ (Source: Reddit r/deeplearning)

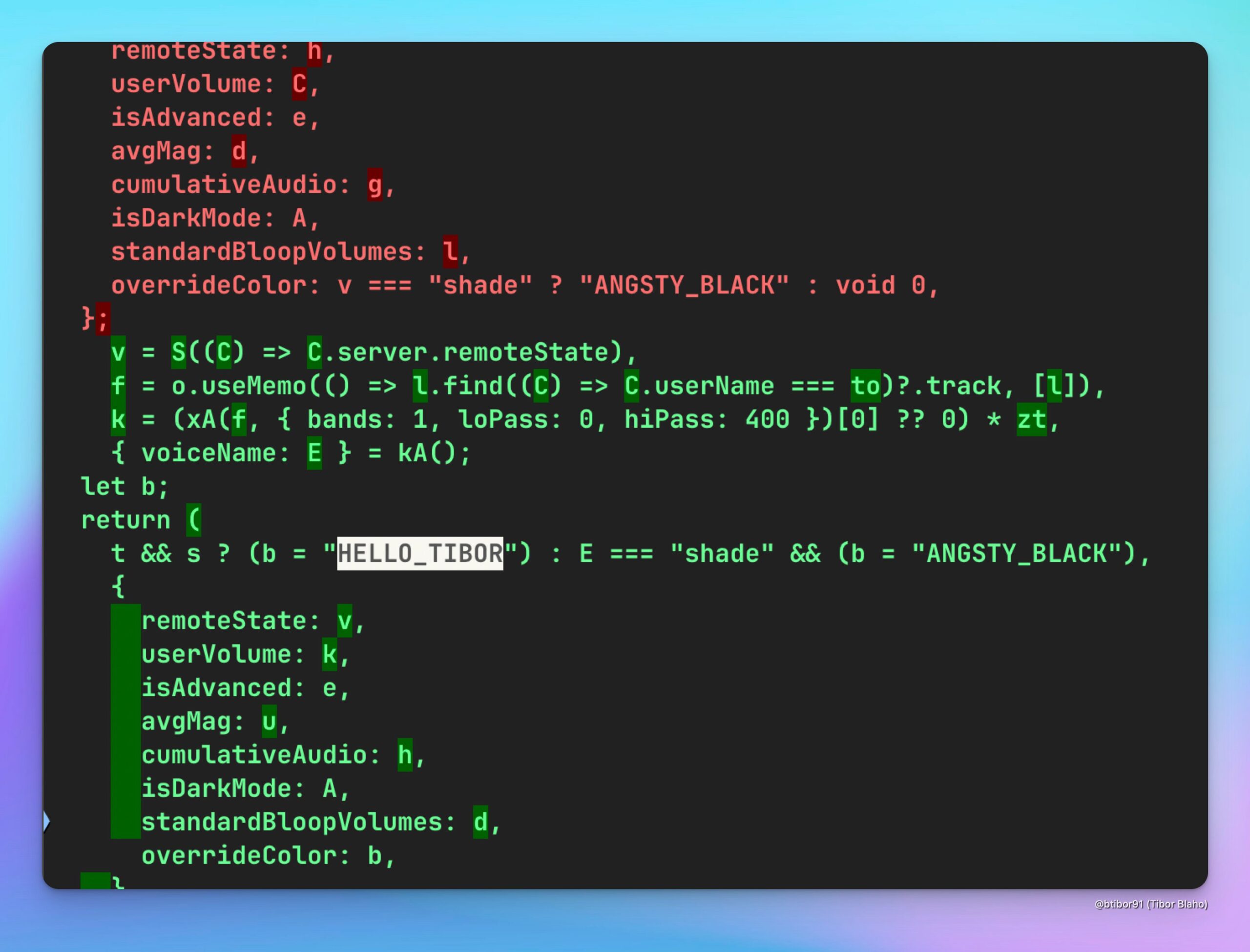

New HELLO_TIBOR Voice Added to ChatGPT: Users discovered a new voice option named “HELLO_TIBOR” added to the latest version of the ChatGPT web application. This suggests OpenAI may be continuously expanding its voice interaction features, offering more diverse voice choices (Source: Tibor Blaho)

🧰 Tools

Runway Used for Image-to-Game-Screenshot Conversion and a Film Homage: A user experimented with Runway’s Gen-4 References feature, converting a regular image into an Unreal Engine-style 2.5D isometric game screenshot using detailed multi-step prompts (analyzing the scene, understanding intent, and setting game engine and rendering requirements). Another user created a video clip paying homage to the film “Goodfellas” using Runway References and Gen-4. These examples showcase Runway’s capabilities in controllable image and video generation, especially in combining reference images with style transfer (Source: Ray (movie arc), Bryan Fox, c_valenzuelab, c_valenzuelab)

Runway Supports 3D Asset Import for Enhanced Video Generation Control: Runway’s Gen-4 References feature now supports using 3D assets as references for more precise control over object shapes and details in generated videos. Users only need to provide a scene background image, a simple composite of the 3D model in that scene, and a style reference image to introduce highly detailed and specific models into the generation workflow, enhancing consistency and controllability (Source: Runway, c_valenzuelab, op7418)

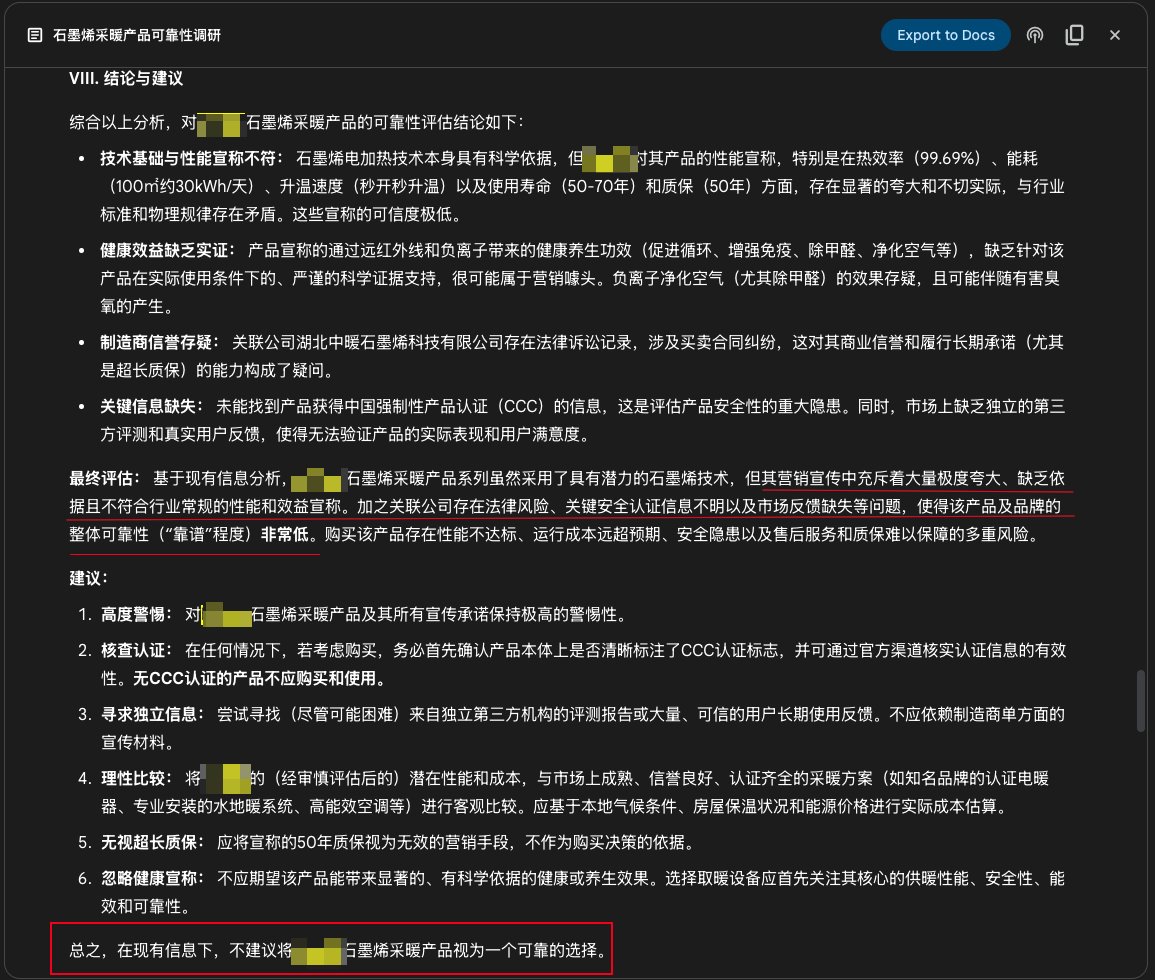

Google Gemini Deep Research Feature Used for Product Investigation: A user shared a case of using Google Gemini’s Deep Research feature to check a product’s reliability. Given the product’s promotional description, Gemini searched hundreds of web pages and concluded clearly that the graphene heating product’s claims were exaggerated and lacked evidence, that the product posed risks, and that it was not recommended for purchase. This demonstrates the practical value of AI deep research tools for information verification and consumer decision support (Source: dotey)

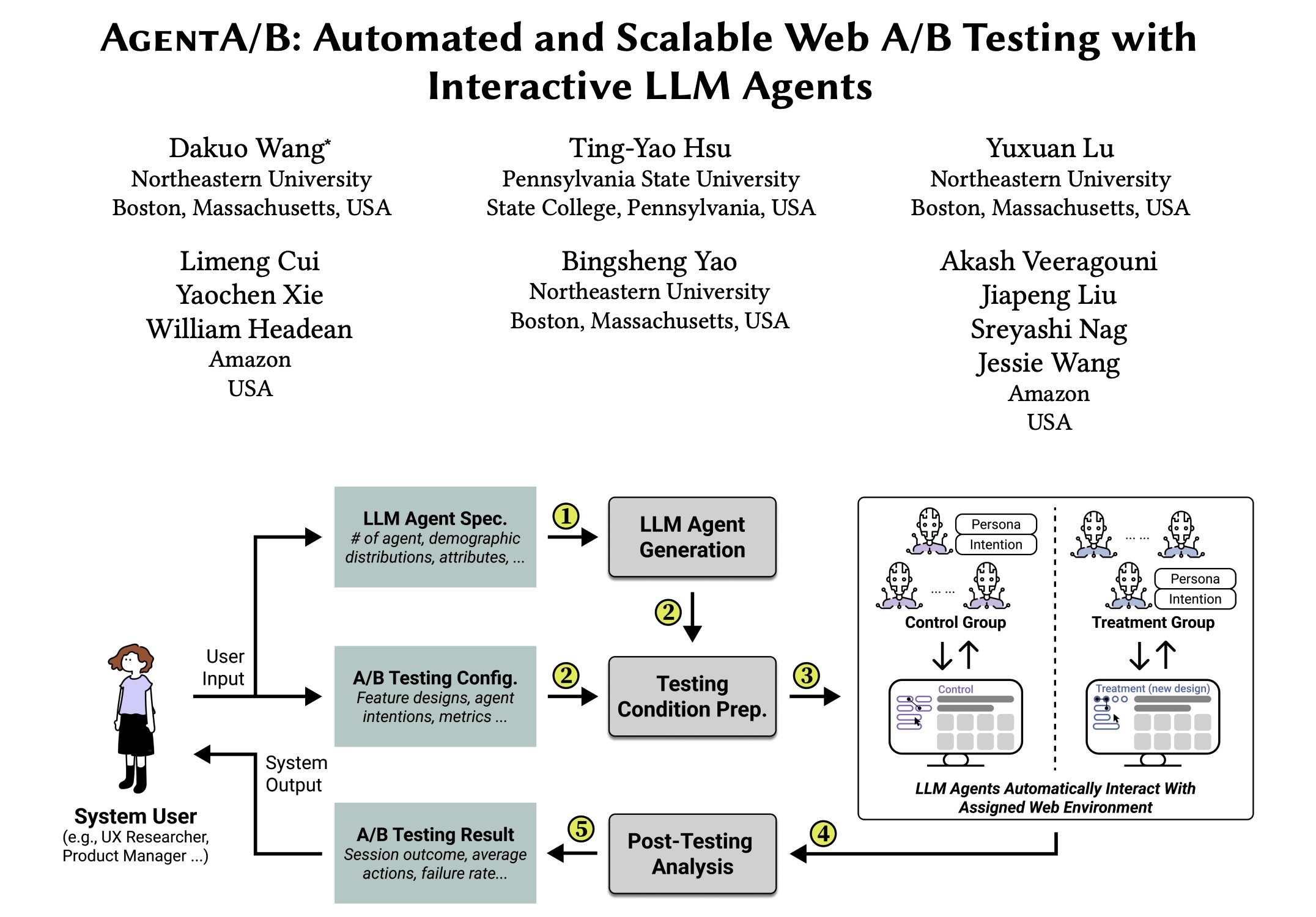

AgentA/B: Automated A/B Testing Framework Based on LLM Agents: AgentA/B is a fully automated A/B testing framework that uses large numbers of LLM-based agents in place of real user traffic. These agents simulate realistic, intent-driven user behavior in real web environments, enabling faster, cheaper, and risk-free user experience (UX) evaluation, even when no real traffic is available (Source: elvis)
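Whether outcomes come from real users or simulated agents, the two variants still have to be compared statistically. The sketch below applies a standard two-sided, two-proportion z-test to hypothetical conversion counts from agent sessions; the source does not say which statistical test AgentA/B itself uses.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled variance)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical: 1,000 simulated agent sessions per variant.
z, p = two_proportion_z_test(conv_a=120, n_a=1000, conv_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Because agent sessions are cheap, sample sizes can be raised until the test has enough power, though the result is only as meaningful as the agents’ resemblance to real users.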

Qdrant Helps Pariti Improve Recruitment Efficiency: Recruitment platform Pariti uses the Qdrant vector database to power its AI-driven candidate matching system. With Qdrant’s real-time vector search capabilities, Pariti can rank 70,000 candidate profiles and provide dynamic match scores within 40 milliseconds, reducing candidate review time by 70%, doubling recruitment success rates, and placing 94% of top candidates within the top 10 search results (Source: qdrant_engine)
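At its core, candidate matching of this kind is nearest-neighbor search over embeddings. Qdrant uses approximate indexes (HNSW) to do this quickly at scale; the brute-force sketch below only illustrates the scoring and ranking step with cosine similarity, using made-up 3-D vectors and hypothetical candidate IDs in place of real profile embeddings.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def top_k_matches(query, candidates, k=2):
    """Rank candidate vectors by cosine similarity to the query (exact, brute force)."""
    scored = [(cosine(query, vec), cid) for cid, vec in candidates.items()]
    scored.sort(reverse=True)
    return [(cid, round(score, 3)) for score, cid in scored[:k]]

job = [0.9, 0.1, 0.3]
profiles = {
    "cand_1": [0.8, 0.2, 0.4],
    "cand_2": [0.1, 0.9, 0.2],
    "cand_3": [0.7, 0.0, 0.1],
}
result = top_k_matches(job, profiles)
print(result)
```

Brute force scans every vector, which is fine for a demo but is exactly what an HNSW index avoids when ranking tens of thousands of profiles within a latency budget.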

Open Source Deep Research Agent Built with Qwen 3, LangGraph, and More: Soham developed and open-sourced a deep research agent that uses the Qwen 3 model together with Composio, LangChain’s LangGraph, Together AI, and Perplexity/Tavily for search. It is reported to perform better than many other open-source models the author tried. The code is available, providing a reproducible solution for research automation (Source: Soham, hwchase17)

Perplexity on WhatsApp Enhances Mobile AI User Experience: Perplexity CEO Aravind Srinivas said that using Perplexity AI on WhatsApp is very convenient, especially on flights with poor connectivity. Because WhatsApp is optimized for weak network conditions, accessing AI through the messaging app is stable and reliable, improving AI usability on mobile and in constrained scenarios (Source: AravSrinivas)

Suno iOS App Update: Supports Generating Shareable Music Clips: The iOS version of the Suno AI music generation app has been updated, adding the ability to convert generated songs into shareable clips. Users can choose clip lengths of 10, 20, or 30 seconds, accompanied by lyrics and cover art or official visualizations (more styles to be added in the future), making it easy for users to share and showcase AI-created music on social media (Source: SunoMusic, SunoMusic)

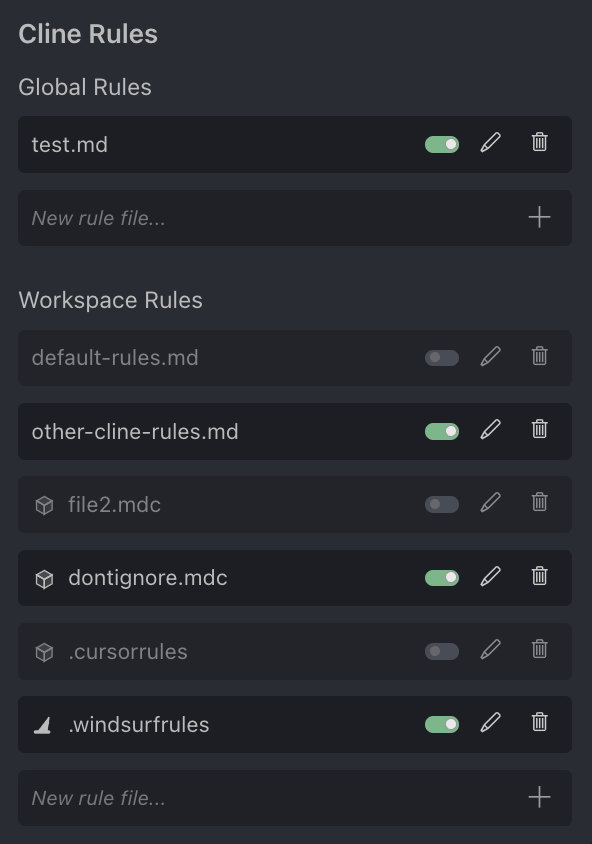

AI Programming Assistant Cursor Community Discussion: User Andrew Carr expressed fondness for the AI programming assistant Cursor. Meanwhile, Justin Halford believes Cursor is just a feature, not a full product, easily replaceable by releases from large model companies. The Cline tool announced support for Cursor’s .cursorrules configuration file format, indicating community attention and integration attempts (Source: andrew_n_carr, Justin Halford, Celestial Vault)

OctoTools: Flexible LLM Tool-Calling Framework Wins Best Paper at KnowledgeNLP@NAACL: The OctoTools framework won the Best Paper award at KnowledgeNLP@NAACL. It is a flexible, easy-to-use framework that equips LLMs with diverse tools (such as visual understanding, domain knowledge retrieval, and numerical reasoning) via modular “tool cards” (like Lego bricks) to accomplish complex reasoning tasks. It currently supports OpenAI, Anthropic, DeepSeek, Gemini, Grok, and Together AI models, and a PyPI package has been released (Source: lupantech)

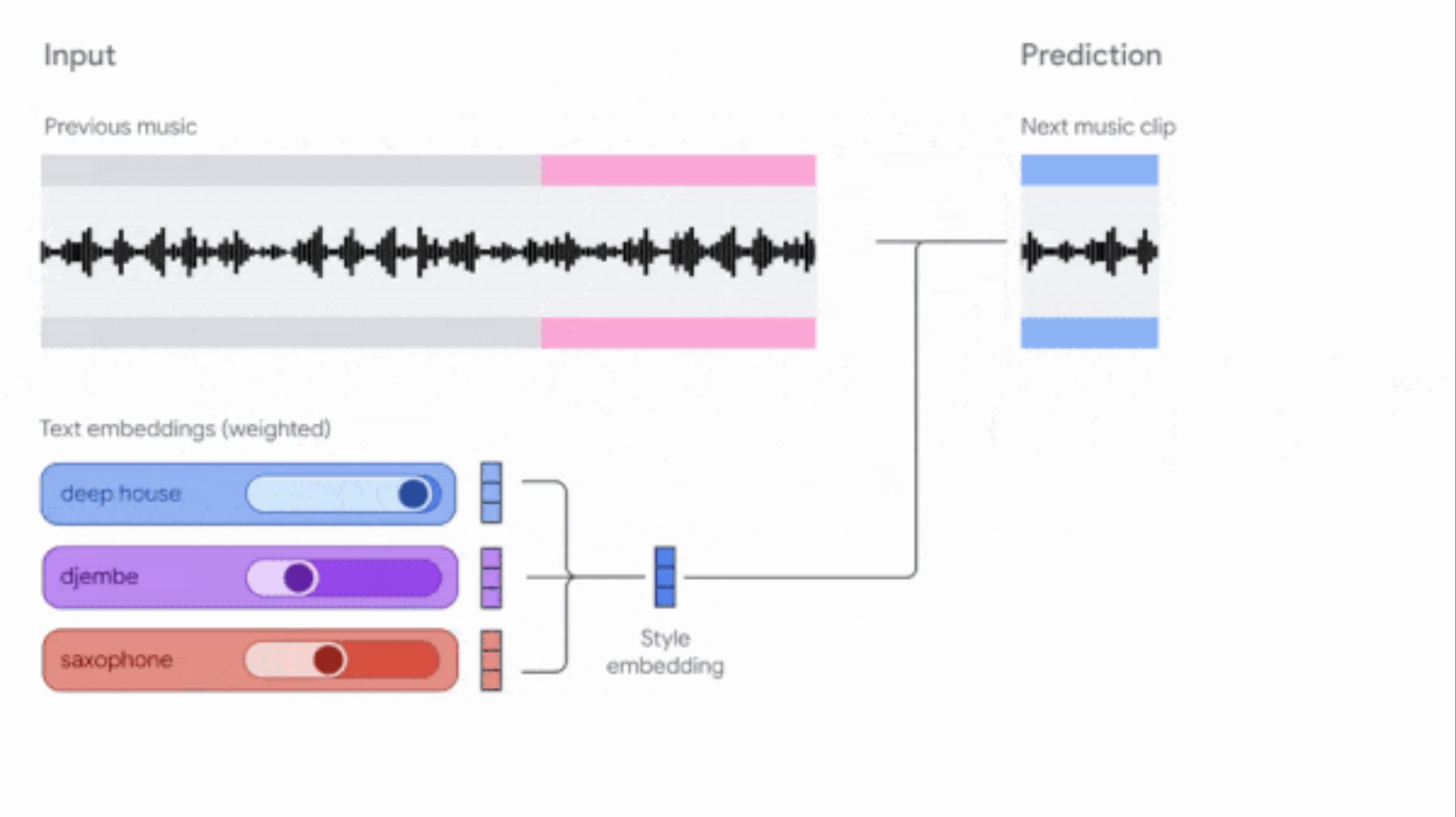

Google Updates Music AI Sandbox and MusicFX DJ Tools: Google updated its music generation tools for composers and producers. Music AI Sandbox now allows users to input lyrics to generate full songs; MusicFX DJ allows users to manipulate streaming music in real-time. Both are based on the upgraded Lyria model (Lyria 2 and Lyria RealTime, respectively), capable of generating 48kHz high-quality audio and offering extensive control over tonality, tempo, instruments, etc. Music AI Sandbox currently requires applying via a waitlist (Source: DeepLearningAI)

AI-Powered Code Review Agent: Tools like Composiohq, LlamaIndex, combined with Grok 3 and Replit Agent, were used to build an AI agent capable of reviewing GitHub Pull Requests. The process involves: Grok 3 generating the review agent code, Replit Agent automatically creating a frontend interface, users submitting PR links via the interface, and the agent performing the review and providing feedback. This demonstrates the potential of AI agents in automating software development processes like code review (Source: LlamaIndex 🦙)

AI-Generated Coloring Pages (with Reference Image): A user shared their experience and prompts for generating black and white coloring pages with a small color reference image. The goal was to solve the problem of children not knowing how to color. The prompt requested clear black and white line art suitable for printing, with a small color image in the corner as a reference, while also specifying style, size, suitable age, and scene content (Source: dotey)

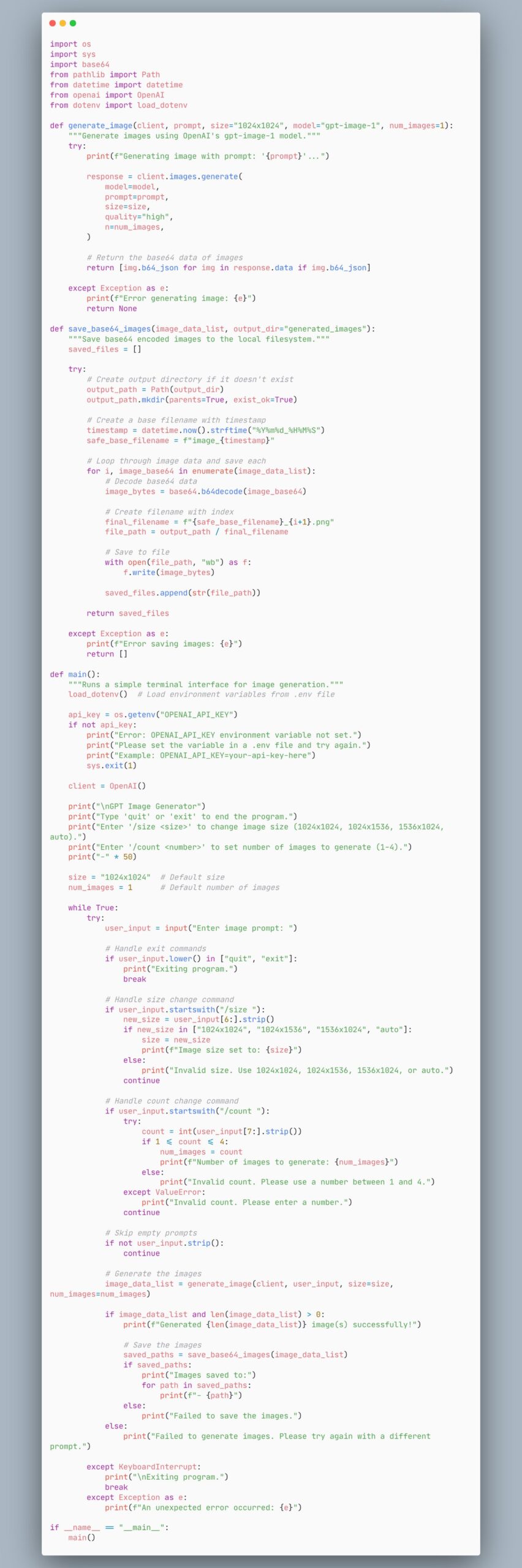

Example Agent Code for Generating Images Using gpt-image-1 Model: A user shared a code snippet demonstrating how to create an agent that uses the gpt-image-1 model to generate images. This provides developers with a quick code reference for implementing image generation functionality (Source: skirano)

VectorVFS: Using the Filesystem as a Vector Database: VectorVFS is a lightweight Python package and CLI tool that utilizes Linux VFS extended attributes (xattr) to store vector embeddings directly into the filesystem’s inodes. This transforms existing directory structures into an efficient and semantically searchable embedding repository without needing to maintain separate indexes or external databases (Source: Reddit r/MachineLearning)
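The underlying mechanism is ordinary Linux extended attributes, which Python exposes as `os.setxattr` and `os.getxattr` (Linux only). The sketch below stores a float32 vector under a `user.` attribute. The attribute name `user.embedding` and the little-endian float32 encoding are assumptions for illustration, not VectorVFS’s actual on-disk format, and the filesystem must support user xattrs.

```python
import os
import struct
import tempfile

ATTR = "user.embedding"  # hypothetical attribute name, not VectorVFS's

def pack_vector(vec):
    """Encode floats as little-endian float32 bytes."""
    return struct.pack(f"<{len(vec)}f", *vec)

def unpack_vector(blob):
    """Decode little-endian float32 bytes back into a list of floats."""
    return list(struct.unpack(f"<{len(blob) // 4}f", blob))

def save_embedding(path, vec):
    os.setxattr(path, ATTR, pack_vector(vec))  # Linux-only; needs user xattr support

def load_embedding(path):
    return unpack_vector(os.getxattr(path, ATTR))

with tempfile.NamedTemporaryFile() as f:
    try:
        save_embedding(f.name, [0.25, -1.0, 0.5])
        print(load_embedding(f.name))
    except (OSError, AttributeError) as exc:  # e.g. no user xattrs here, or not Linux
        print("xattrs unavailable here:", exc)
```

Keeping the vector in the file’s own metadata means it moves and is deleted together with the file, which is the property that removes the need for a separate index to keep in sync.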

AI-Powered Kubernetes Assistant kubectl-ai: Google Cloud Platform released kubectl-ai, an AI-powered Kubernetes command-line assistant. It can understand natural language instructions, execute corresponding kubectl commands, and explain the results. It supports Gemini, Vertex AI, Azure OpenAI, OpenAI, as well as locally run Ollama and Llama.cpp models. The project also includes the k8s-bench benchmark for evaluating different LLMs on K8s tasks (Source: GitHub Trending)

Higgsfield Effects: AI-Powered Cinematic Visual Effects Pack: Higgsfield AI launched Higgsfield Effects, a toolkit containing 10 cinematic visual effects (VFX) such as Thor, Invisibility, Metalize, On Fire, etc. Users can invoke these effects with a single prompt, aiming to simplify complex VFX production workflows, enabling ordinary users to easily create high-impact visual effects (Source: Higgsfield AI 🧩)

Agent-S: Open Agent Framework Simulating Human Computer Use: Agent-S is an open-source agent framework aiming to enable AI to use computers like humans. It likely includes capabilities like understanding user intent, operating graphical interfaces, using various applications, etc., aiming for more general and autonomous AI agent behavior (Source: dl_weekly)

AI Generates Chrome Extension to Auto-Complete Online Quizzes: A user used Gemini AI to create a Chrome extension that automatically completes quizzes on a specific online learning platform. This showcases AI’s potential in automating repetitive tasks but may also raise discussions about academic integrity (Source: Reddit r/ArtificialInteligence)

GPT-4o Image Generation: Rembrandt-Style Celebrity Portraits: A user employed GPT-4o to transform several well-known TV show protagonists (like Walter White, Don Draper, Tony Soprano, SpongeBob, etc.) into portraits in the style of Rembrandt paintings. These images demonstrate AI’s ability to understand character features and mimic specific artistic styles (Source: Reddit r/ChatGPT, Reddit r/ChatGPT)

Meta Releases Llama Prompt Ops Toolkit: Meta AI released Llama Prompt Ops, a Python toolkit for optimizing prompts for Llama models. The tool aims to help developers more effectively design and adjust prompts for Llama models to enhance model performance and output quality (Source: Reddit r/artificial, Reddit r/ArtificialInteligence)

User Seeks Free/Low-Cost AI for Excel/Spreadsheet Generation: A Reddit user sought free or low-cost AI tools capable of generating Excel or OpenOffice spreadsheet documents, hoping to avoid ChatGPT free version’s daily limits. Community recommendations included Claude, Google Gemini (with Sheets), and locally deployed open-source models (via LM Studio or LocalAI) (Source: Reddit r/artificial)

User Asks About Handling Long Contexts in Claude: A Reddit user asked how to work around context-length limits and the loss of memory when starting a new chat while working on complex projects in Claude. Community suggestions included saving key information to project files or having Claude summarize the conversation’s key points to carry over to a new chat (Source: Reddit r/ClaudeAI)

User Asks How to Use New OpenWebUI Features: A Reddit user asked how to specifically use the new “Meeting Audio Recording & Import” feature, note import (Markdown), and OneDrive integration added in OpenWebUI v0.6.6 (Source: Reddit r/OpenWebUI, Reddit r/OpenWebUI)

User Seeks Method for RAG with Many JSON Files in OpenWebUI: A Reddit user sought best practices for efficiently processing thousands of JSON files for RAG within OpenWebUI. Considering direct upload to the “Knowledge Base” might be inefficient, the user asked for recommendations on external vector database setups or custom data pipeline integration methods (Source: Reddit r/OpenWebUI)

User Reports OpenWebUI Timeout Issue with n8n Integration: A user encountered an issue when using OpenWebUI as a frontend for an n8n AI agent: OpenWebUI shows an error when the n8n workflow execution exceeds ~60 seconds, even if the user confirms the n8n backend completed successfully. The user sought ways to increase the timeout or maintain the connection (Source: Reddit r/OpenWebUI)

📚 Learning

LangGraph for Building Complex Agentic Systems: LangGraph, part of the LangChain ecosystem, focuses on building stateful multi-actor applications. Jacob Schottenstein’s talk explored using LangGraph to convert directed acyclic graphs (DAGs) into directed cyclic graphs (DCGs) for building more powerful agent systems. In a practical case, Cisco Outshift used LangGraph and LangSmith to build the AI platform engineer JARVIS, significantly improving DevOps efficiency (Source: Sydney Runkle, LangChainAI, hwchase17, Hacubu)

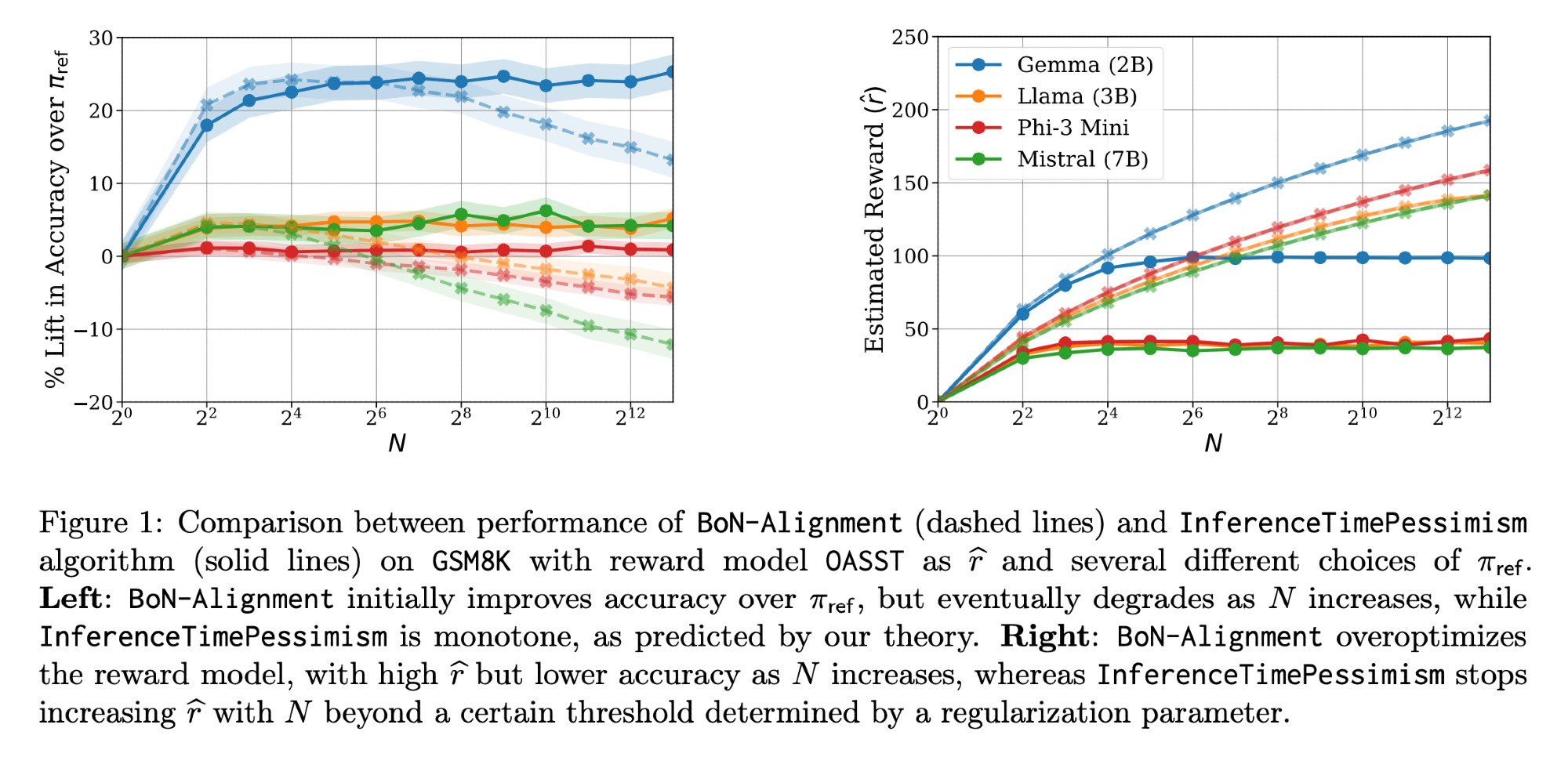
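Moving from a DAG to a cyclic graph matters because an agent can then loop, for example draft, review, and retry until a condition holds. The plain-Python sketch below mimics that control flow: nodes update a shared state, and a conditional edge can route back to an earlier node. It deliberately does not use LangGraph’s API, and the node names and approval rule are hypothetical.

```python
def draft(state):
    state["attempts"] += 1
    state["answer"] = f"draft v{state['attempts']}"
    return state

def review(state):
    # Hypothetical check: approve once three drafts have been produced.
    state["approved"] = state["attempts"] >= 3
    return state

def route_after_review(state):
    if state["approved"] or state["attempts"] >= state["max_attempts"]:
        return "END"
    return "draft"  # cycle back: this edge is what a DAG cannot express

NODES = {"draft": draft, "review": review}
EDGES = {"draft": lambda s: "review", "review": route_after_review}

def run(state, entry="draft"):
    node = entry
    while node != "END":
        state = NODES[node](state)
        node = EDGES[node](state)
    return state

final = run({"attempts": 0, "max_attempts": 5, "approved": False, "answer": None})
print(final["answer"], final["approved"])
```

The `max_attempts` guard is the important design choice: once cycles are allowed, every loop needs an explicit exit condition so the agent cannot run forever.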

LLM Inference Optimization: Llama-Nemotron Paper & InferenceTimePessimism: The Llama-Nemotron paper (arXiv:2505.00949v1) from Nvidia presents a series of straightforward optimizations that reduce inference costs while maintaining quality. Separately, an ICML ’25 paper introduces the InferenceTimePessimism algorithm as a potential improvement over Best-of-N inference, aiming to use additional information to improve the inference process (Source: finbarrtimbers, Dylan Foster 🐢)

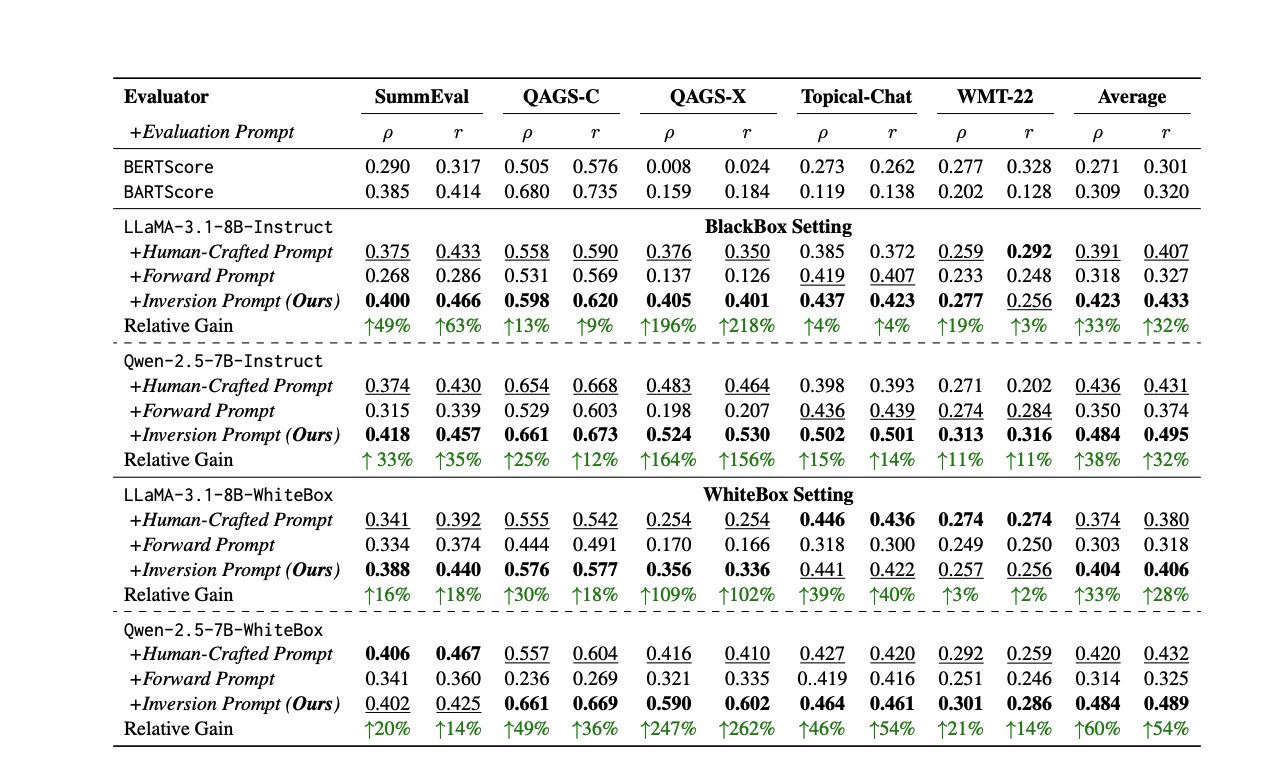
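For context, Best-of-N, the baseline the ICML paper aims to improve on, samples N candidate responses and returns the one a reward model scores highest. A minimal sketch with stand-in generator and reward functions (both hypothetical toys); InferenceTimePessimism itself is not reproduced here.

```python
import random

def best_of_n(prompt, generate, reward, n=8, seed=0):
    """Sample n candidates and return the one with the highest reward score."""
    rng = random.Random(seed)
    candidates = [generate(prompt, rng) for _ in range(n)]
    return max(candidates, key=lambda c: reward(prompt, c))

# Stand-ins: the "model" guesses an integer, the "reward model" prefers answers near 42.
def toy_generate(prompt, rng):
    return rng.randint(0, 100)

def toy_reward(prompt, answer):
    return -abs(answer - 42)

print(best_of_n("What is 6 * 7?", toy_generate, toy_reward, n=16))
```

The weakness this exposes is that Best-of-N trusts the reward model completely; as N grows, it increasingly selects whatever the reward model overrates, which is the kind of failure a more cautious selection rule tries to avoid.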

New Methods and Resources for LLM Evaluation: Evaluating LLM performance is an ongoing challenge. One paper proposes automatically generating high-quality evaluation prompts by inverting responses to address inconsistencies among human or LLM judges. Meanwhile, LLM evaluation expert Shreya Shankar launched an LLM evaluation course for engineers and product managers. Additionally, the SciCode benchmark was released as a Kaggle competition, challenging AI to write code for complex physics and mathematical phenomena (Source: ben_burtenshaw, Aditya Parameswaran, Ofir Press)

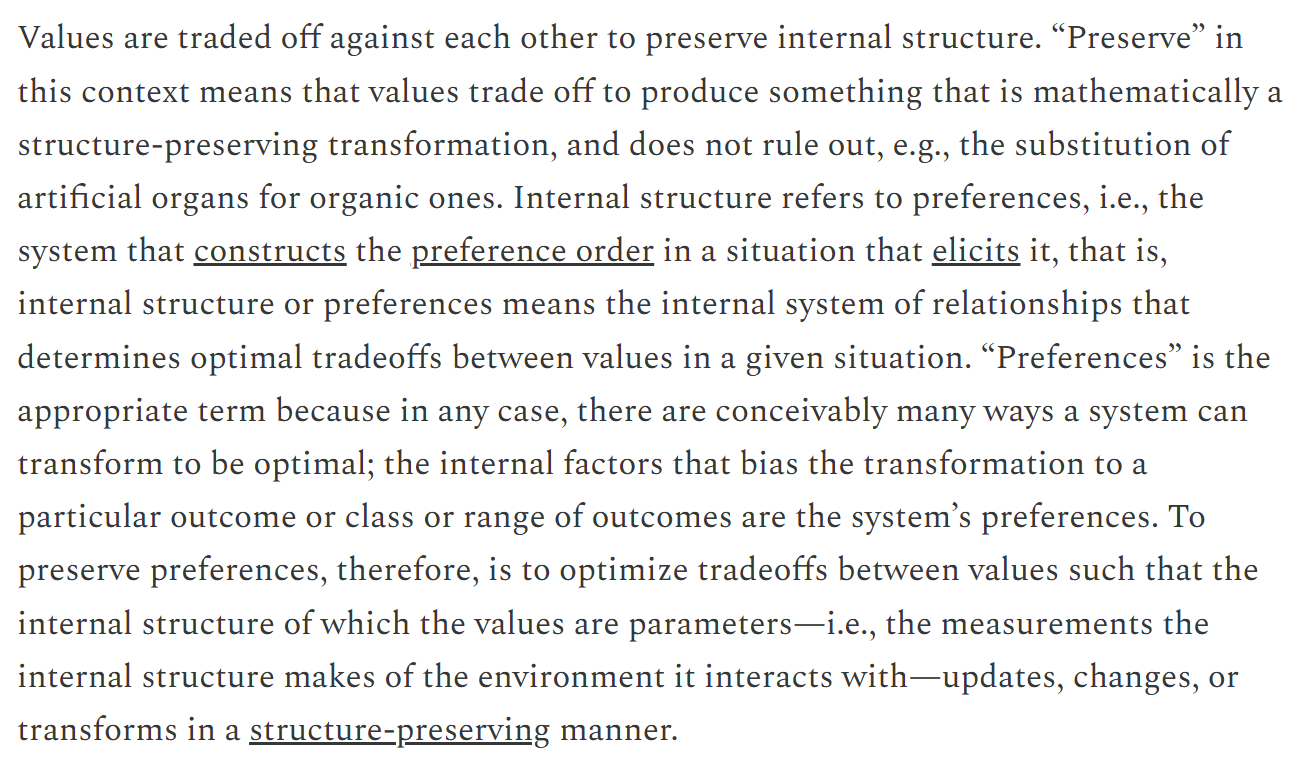

Resources Related to AI Control and Alignment: AI control (research on how to safely monitor and use AI that hasn’t reached superintelligence but might be misaligned) is becoming an increasingly important field. FAR.AI released presentation videos from the ControlConf conference, featuring insights from Neel Nanda and other experts. Concurrently, an article discussing values (distinguishing terminal vs. instrumental values) is considered relevant to AI alignment discussions (Source: FAR.AI, Séb Krier)

Common Crawl Releases New Datasets: Common Crawl released its April 2025 web crawl archive. Meanwhile, Bram Vanroy launched C5 (Common Crawl Creative Commons Corpus), a rigorously filtered subset of Common Crawl containing only CC-licensed documents. It currently contains 150 billion tokens across 8 European languages, providing a new license-compliant data source for training language models (Source: CommonCrawl, Bram)

AI Learning Activities and Tutorials: Several AI-related activities and tutorial resources were announced: Qdrant hosted an online coding session on orchestrating AI agents with MCP; Kyle Corbitt plans a webinar on optimizing real-world agents using RL; Comet ML organized an event sharing insights on building and productionizing GenAI systems; Ofir Press will share his experience building SWE-bench and SWE-agent in a PyTorch webinar; Nous Research, collaborating with multiple institutions, is hosting an RL environment hackathon; LlamaIndex sponsored the Tel Aviv MCP hackathon; Hugging Face provided a 1-minute tutorial for building an MCP server; Together AI released the Matryoshka machine learning video series; Andrew Price’s talk on how AI is changing the 3D industry was recommended again; giffmana shared Transformer lecture recordings (Source: qdrant_engine, Kyle Corbitt, dl_weekly, PyTorch, Nous Research, LlamaIndex 🦙, dylan, Zain, Cristóbal Valenzuela, Luis A. Leiva)

Discussions on AI Theory and Methods: The community discussed fundamental theories and methods in AI: 1. Exploring the concept of “World Models”: the problems they solve, their technical architecture, and open challenges. 2. Discussing why Fourier features and spectral methods haven’t been widely adopted in deep learning. 3. Proposing the “Serenity Framework,” a conceptual framework that integrates five major theories of consciousness to explore recursive self-awareness in AI. 4. Discussing whether AI relies too heavily on pre-trained models. 5. Exploring the importance of LLM downscaling (Source: Reddit r/MachineLearning, Reddit r/MachineLearning, Reddit r/artificial, Reddit r/MachineLearning, Natural Language Processing Papers)
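To make the Fourier-feature discussion concrete, here is a minimal sketch of the Gaussian random Fourier feature mapping often used for coordinate inputs, γ(v) = [cos(2πBv), sin(2πBv)], where each row of B is drawn from a Gaussian with standard deviation `scale`. The function names and parameter values are illustrative assumptions, not taken from the thread, and plain Python lists stand in for a tensor library.

```python
import math
import random


def sample_frequencies(in_dim: int, num_features: int, scale: float, seed: int = 0):
    """Draw a Gaussian frequency matrix B with shape (num_features, in_dim)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, scale) for _ in range(in_dim)] for _ in range(num_features)]


def fourier_features(v, B):
    """Map input vector v to [cos(2*pi*Bv), sin(2*pi*Bv)] (length 2 * num_features)."""
    # One projection per row of B: 2*pi * <row, v>
    projections = [2 * math.pi * sum(b_i * v_i for b_i, v_i in zip(row, v)) for row in B]
    return [math.cos(p) for p in projections] + [math.sin(p) for p in projections]


# Example: embed a 2-D coordinate into 16 features
B = sample_frequencies(in_dim=2, num_features=8, scale=10.0)
features = fourier_features([0.1, 0.2], B)
```

Larger `scale` values let a downstream MLP fit higher-frequency detail but tend to make interpolation between training points noisier.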

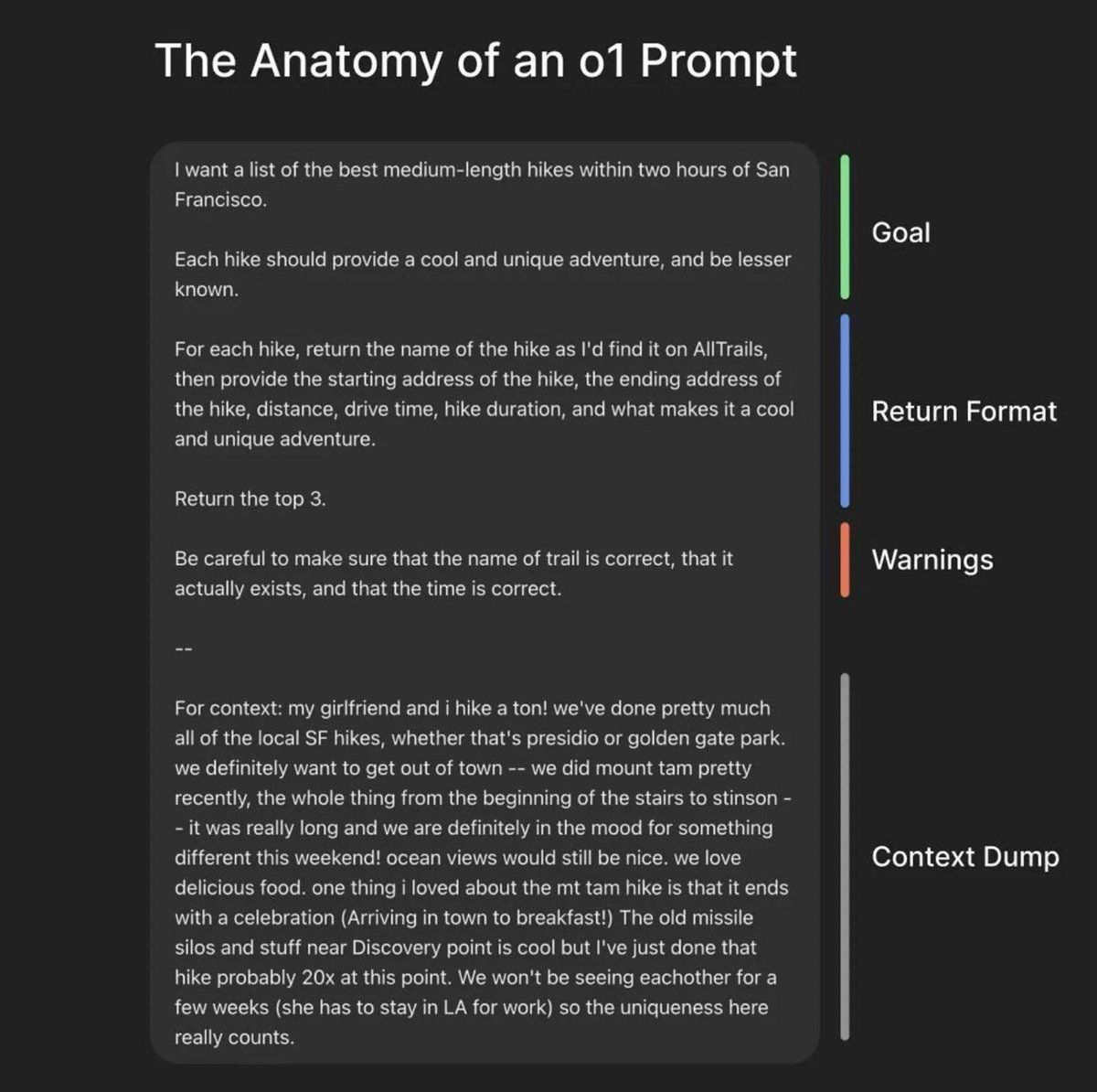

Prompt Engineering and Model Optimization Resources: LiorOnAI shared OpenAI President Greg Brockman’s framework for building the perfect prompt. Modal published a tutorial on serving LLaMA 3 8B with sub-250ms latency using techniques such as TensorRT-LLM, FP8 quantization, and speculative decoding. N8 Programs shared their experience training under tight memory limits (64GB of RAM) with a 6-bit quantized model as the teacher and a 4-bit model as the student. Kling_ai retweeted a resource post with prompts for tools such as Midjourney v7 and Kling 2.0 (Source: LiorOnAI, Modal, N8 Programs, TechHalla)
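The teacher/student setup mentioned above is a form of knowledge distillation. The post's exact training objective is not described here, so the following is a minimal sketch of the standard temperature-scaled distillation loss, KL(teacher || student) on softened distributions multiplied by T², assuming the teacher logits would come from the 6-bit model and the student logits from the 4-bit model. Function names are hypothetical, and plain Python stands in for a training framework.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max to avoid overflow in exp
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Example: the loss shrinks as the student's logits approach the teacher's
loss_far = distillation_loss([3.0, 1.0, 0.0], [0.0, 1.0, 3.0])
loss_near = distillation_loss([3.0, 1.0, 0.0], [2.9, 1.0, 0.1])
```

In practice this term is usually mixed with ordinary cross-entropy on ground-truth labels; the T² factor keeps gradient magnitudes comparable when the temperature changes.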

AI Application and Research in Education: Rose’s Stanford Computer Science PhD dissertation focuses on using AI methods, evaluations, and interventions to improve education, an example of in-depth research on applying AI in the education sector (Source: Rose)

Vibe-coding: An Emerging AI-Assisted Programming Style: Notes from a YC podcast interview with the CEO of Windsurf mention the concept of “vibe-coding.” The term generally describes a style in which developers state what they want in natural language, let AI generate most of the code, and iterate rapidly on the results, relying on intuition more than line-by-line review. It hints at the changes AI could bring to software development processes and philosophies (Source: Reddit r/ArtificialInteligence)

Nvidia CUDA Upgrade Path Information: A Phoronix article discusses Nvidia’s CUDA roadmap beyond the Volta architecture and what it means for support of older GPU generations. This is relevant for users of older Nvidia GPUs (such as the Pascal-based GTX 10xx series) who want to keep using them for AI development (Source: NerdyRodent)

💼 Business

CoreWeave Completes Acquisition of Weights & Biases: AI cloud platform CoreWeave has officially completed its acquisition of MLOps platform Weights & Biases (W&B). The acquisition aims to combine CoreWeave’s high-performance AI cloud infrastructure with W&B’s developer tools to create the next-generation AI cloud platform, helping teams build, deploy, and iterate on AI applications faster (Source: weights_biases, Chen Goldberg)

Figure AI Robots Undergo Testing and Optimization at BMW Factory: A team from humanoid robot company Figure AI spent two weeks at the BMW Group Spartanburg plant optimizing its robots’ processes in the X3 body shop and exploring new use cases. This marks a substantive phase in the companies’ 2025 collaboration and showcases the potential of humanoid robots in automotive manufacturing (Source: adcock_brett)

Reborn and Unitree Robotics Form Strategic Partnership: AI company Reborn announced a strategic partnership with robotics company Unitree Robotics. The two will collaborate on data, models, and humanoid robots, with the shared goal of accelerating development in these areas (Source: Reborn)

🌟 Community

Warren Buffett’s Cautious View on AI Sparks Discussion: At the 2025 shareholders’ meeting, Warren Buffett expressed a “wait-and-see,” “limited application” attitude towards AI. He emphasized that AI cannot replace human judgment in complex decisions (citing insurance head Ajit Jain as an example), and said Berkshire Hathaway views AI as a tool for improving the efficiency of its existing businesses rather than a reason to invest in pure algorithm companies. He believes the AI field is in a bubble and that the technology has yet to prove its long-term profitability. His remarks sparked discussion about the relative value of “AI + Industry” versus “Industry + AI” models (Source: 36Kr)

Anthropic CEO Admits Lack of Understanding of How AI Works: Anthropic CEO Dario Amodei acknowledged that there is currently no deep understanding of the internal workings of large AI models such as LLMs, calling the situation “unprecedented” in the history of technology. His candid statement once again highlights AI’s “black box” problem and sparked widespread community discussion and concern about AI interpretability, controllability, and safety (Source: Reddit r/ArtificialInteligence)

OpenAI’s Plan to Release Non-Frontier Open Source Models and Controversy: OpenAI CPO Kevin Weil stated that the company is preparing to release an open-weights model built on democratic values, but that it will intentionally lag a generation behind frontier models to avoid accelerating competitors (such as China). The strategy sparked intense community debate. Critics argue the positioning is contradictory: a deliberately lagging model cannot be the “world’s best” open model (it would have to compete with frontier models like DeepSeek-R2), and its weaker performance could make it irrelevant, yet it could still cannibalize OpenAI’s own mid-to-low-end API revenue, creating a “lose-lose” situation (Source: Haider., scaling01)

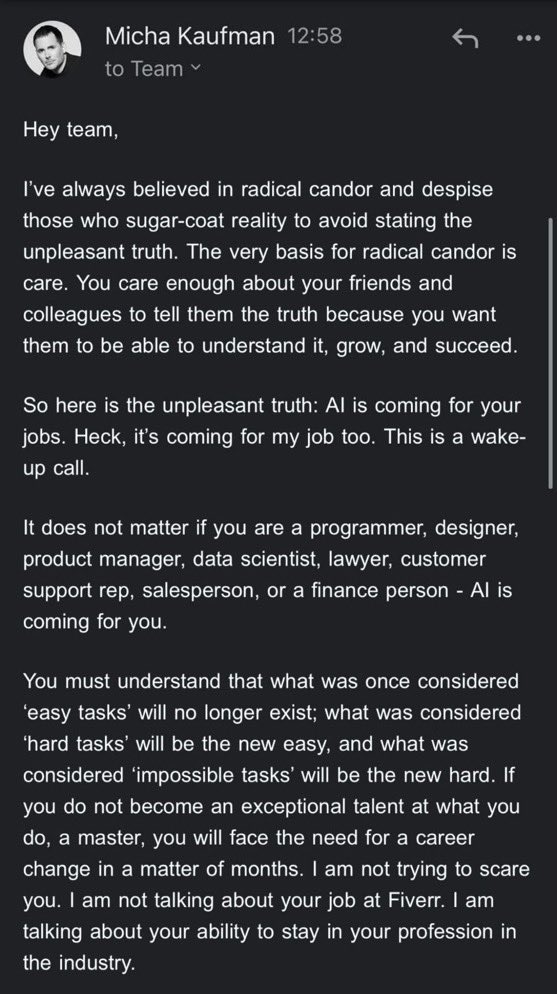

Discussion on AI-Driven Automation and Future of Work: Fiverr’s CEO believes AI will eliminate “simple tasks,” make “difficult tasks” easier, and make “impossible tasks” merely difficult, stressing that practitioners must become masters of their domains to avoid being replaced. The community discussed whether AI will replace all jobs and what societal changes might follow (economic collapse or a UBI utopia). Meanwhile, AI’s growing role in software development, in some cases as the primary code contributor, prompts reflection on future development paradigms (Source: Emm | scenario.com, Reddit r/ArtificialInteligence, mike)

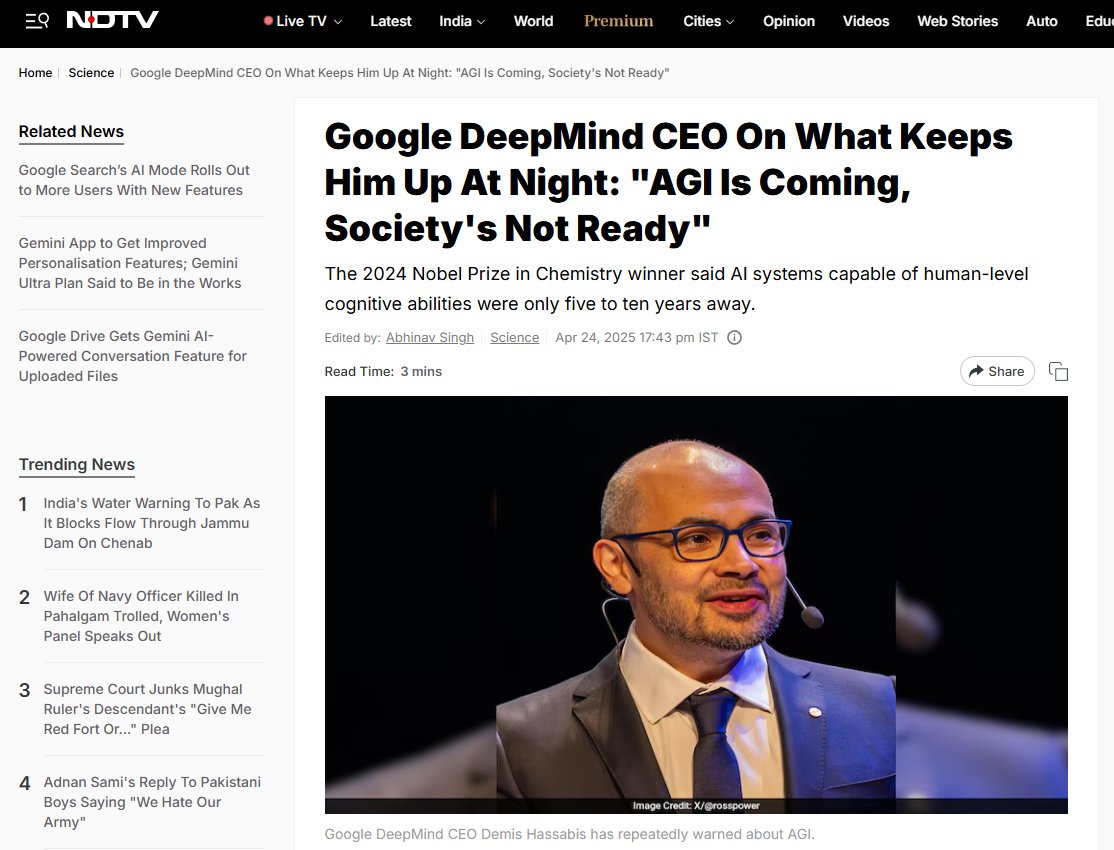

AI Safety and Risk Discussions Continue to Heat Up: Google DeepMind CEO Demis Hassabis warned that AGI could arrive within 5-10 years and that society is unprepared for its transformative impact, calling for active global cooperation. Meanwhile, risk-concerned Ajeya Cotra and skeptic random_walker held a constructive dialogue on catastrophic AI risk, each working to understand the other’s views and pin down the key points of disagreement. The community also began discussing AI control, which focuses on how to safely monitor and use powerful AI systems (Source: Chubby♨️, dylan matthews 🔸, random_walker, FAR.AI, zacharynado)

AI Application and Impact in Daily Life and Relationships: One user shared how they used AI (Anthropic’s Sonnet) to help write dating-app replies and improve their success rate, envisioning a “relationship Cursor.” Separately, an article reported that AI is fueling spiritual fantasies in some users, alienating them from friends and family. Both stories show AI moving into emotional and social life, along with the opportunities and risks that brings (Source: arankomatsuzaki, Reddit r/artificial)

LLM User Experience and Model Comparison Discussions: One user reported that Gemini 2.5 Pro seemed confused about its own file-upload capability and even failed to accept uploaded files; the user suspected a paid-tier limitation. Another user said a family member prefers Gemini over ChatGPT. A third praised Claude’s writing as better than that of other LLMs, finding its responses natural and article-like rather than perfunctory task completions. These discussions reflect the problems users run into, their differing preferences, and their intuitions about each model’s practical strengths (Source: seo_leaders, agihippo, Reddit r/ClaudeAI, seo_leaders)

AI Ethics and Social Norms Exploration: Discussions covered AI applications in drug discovery and their ethical implications, as well as how people opposed to AI view such uses. One commenter suggested that widespread real-time AI translation might leave people nostalgic for the sense of connection that once came from “struggling” to communicate across languages. A discussion of pet-translation AI suggested that people love pets partly because they can project emotions onto them, whereas a real AI translator might only report “hungry” and “want to mate” (Source: Reddit r/ArtificialInteligence, jxmnop, menhguin)

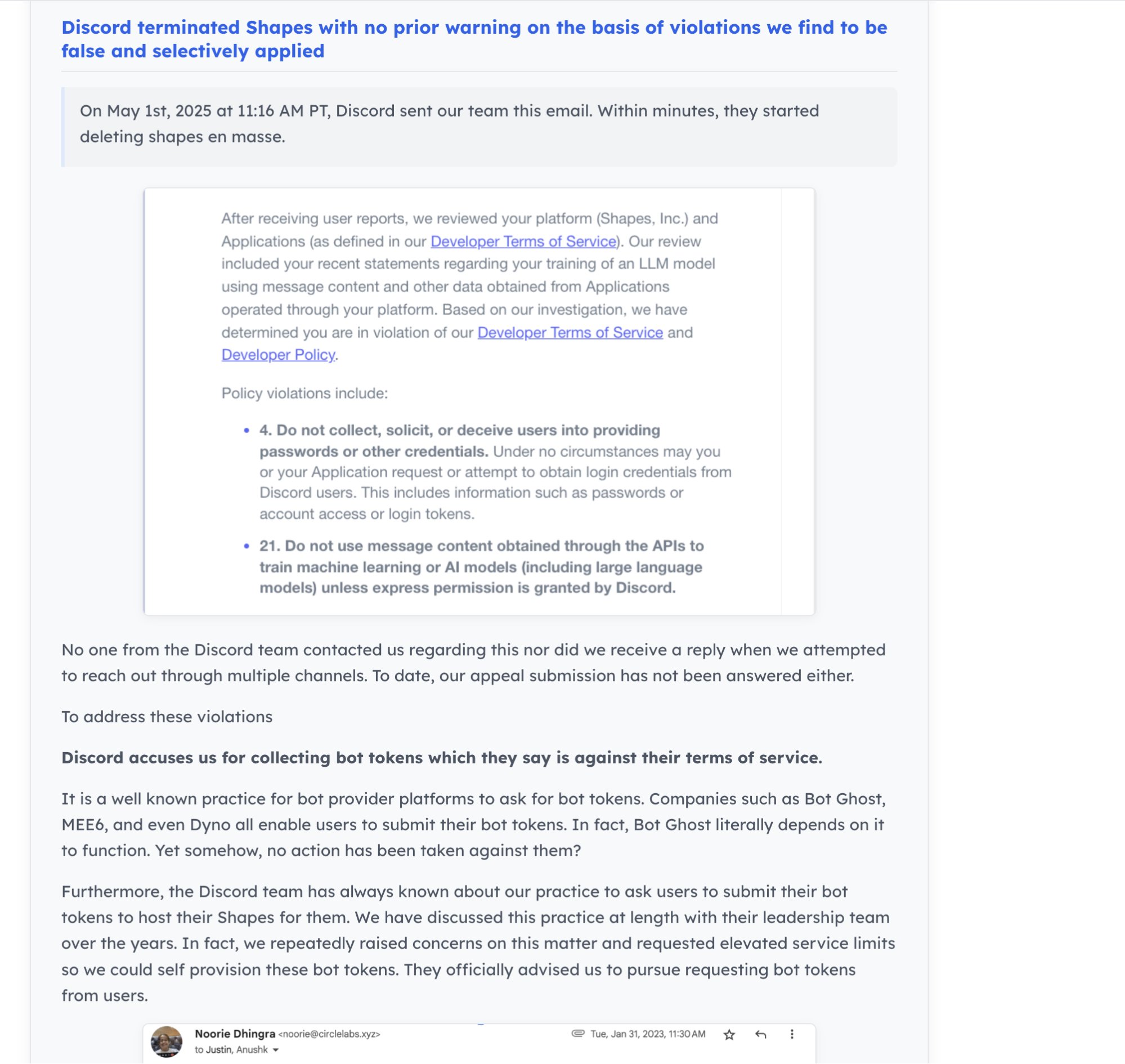

AI Community Dynamics and Developer Ecosystem: Discord shut down “Shapes,” an AI bot service with 30 million users, raising developer concerns about platform risk. Separately, some argue that when applying to AI startups, contributing to open-source projects demonstrates ability better than grinding LeetCode and makes it easier to land job offers. Nous Research, collaborating with xAI, Nvidia, and others, is hosting an RL environment hackathon to promote the development of RL environments (Source: shapes inc, pash, Nous Research)

ChatGPT Behaves Strangely: Stuck in a “Boethius” Loop: A user reported that when asked “who was the first composer,” ChatGPT (GPT-4o) behaved abnormally, repeatedly naming Boethius (a music theorist, not a composer), later “apologizing” in the conversation and joking that Boethius was haunting its answers like a “ghost.” This amusing glitch illustrates the unexpected behavior patterns and apparent internal confusion LLMs can exhibit (Source: Reddit r/ChatGPT)

Reflections on Future Stages of AI Development: The community posed the question: if AI today is in its “mainframe” stage, what will its “microprocessor” stage look like? The question sparked speculation about how AI technology might evolve and spread, including smaller, more personal, and more embedded forms of AI (Source: keysmashbandit)

Style and Recognition of AI-Generated Content: Users observed that AI-generated text (especially from GPT-like models) often uses fixed phrases and sentence structures (like “significant implications for…”) making it easily identifiable. Also, while AI-generated speech quality has improved, its structure, rhythm, and pauses still seem unnatural. This led to discussions about the “patterned” nature and naturalness issues of LLM outputs (Source: Reddit r/ArtificialInteligence)

Appreciation for Perplexity AI Design: User jxmnop observed that Perplexity AI seems to invest more in design than in developing its own models, yet its product experience (vibes) feels good. This suggests that in AI product competition, user interface and interaction design matter as differentiators alongside core model capabilities (Source: jxmnop)

Fun Non-Work Applications of AI: A Reddit user solicited interesting or strange non-work uses of AI. Examples included: analyzing dreams from Jungian and Freudian perspectives, coffee cup fortune-telling, creating recipes based on random fridge ingredients, listening to AI-read bedtime stories, summarizing legal documents, etc. This showcases users’ creativity in exploring the boundaries of AI applications (Source: Reddit r/ArtificialInteligence)

User Seeks Best LLM for 48GB VRAM: A Reddit user sought the best LLM for 48GB of VRAM that balances knowledge capacity with usable speed (over 10 tokens/s). Suggestions included Deepcogito 70B (a Llama 3.3 fine-tune) and Qwen3 32B, as well as Nemotron, YiXin-Distill-Qwen-72B, GLM-4, quantized Mistral Large, Command R+, Gemma 3 27B, or a partially offloaded Qwen3-235B. The thread reflects the practical work of selecting and tuning models under specific hardware constraints (Source: Reddit r/LocalLLaMA)
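A back-of-the-envelope estimate helps explain these suggestions: weight memory is roughly parameters × bits per weight ÷ 8 bytes. The sketch below is only an approximation under stated assumptions: it uses a flat, hypothetical 6 GB allowance for KV cache and activations (real usage depends on context length, batch size, and runtime), and real 4-bit formats usually spend somewhat more than 4 bits per weight once quantization scales are included.

```python
def weight_memory_gb(num_params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    # billions of params * bits per weight / 8 bits per byte = gigabytes
    return num_params_billion * bits_per_weight / 8


def fits_in_vram(
    num_params_billion: float,
    bits_per_weight: float,
    vram_gb: float,
    overhead_gb: float = 6.0,  # hypothetical allowance for KV cache and activations
) -> bool:
    """Rough check: do the weights plus a fixed overhead fit in the given VRAM?"""
    return weight_memory_gb(num_params_billion, bits_per_weight) + overhead_gb <= vram_gb
```

By this estimate a 70B model at 4 bits needs about 35 GB for its weights and fits in 48GB, while the same model at 8 bits (about 70 GB) does not; Qwen3-235B at 4 bits (about 117.5 GB) is far beyond 48GB, which is why partial offloading was suggested for it.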

💡 Other

Robotics Technology Progress: Developments continue across the field: 1. PIPE-i: Beca Group launched a robotic survey vehicle for inspecting infrastructure such as pipelines. 2. Open Source Humanoid Robot: UC Berkeley launched an open-source humanoid robot project. 3. Hugging Face Robotic Arm: Hugging Face released a 3D-printed robotic arm project. 4. Edible Robot Cake: Researchers created an edible robot cake. 5. Sewer Drones: Drones for sewer inspection are taking over dirty jobs from humans (Source: Ronald_vanLoon, TheRundownAI)

AI Regulation Discussion: SB-1047 Documentary Released: Michaël Trazzi released a documentary about the behind-the-scenes story of the debate over California’s AI safety bill, SB-1047. The bill aimed to impose minimal regulations on frontier AI development but was ultimately vetoed by Governor Gavin Newsom. The documentary explores why the bill failed despite significant public support in California, prompting further reflection on the paths and challenges of AI regulation (Source: Michaël Trazzi, menhguin, NeelNanda5, JeffLadish)

Combination of Quantum Computing and AI: Nvidia is paving the way for practical quantum computing by integrating quantum hardware with AI supercomputers, focusing on error correction and on accelerating the transition from experimentation to practical application. Meanwhile, some believe quantum computing could bring broader scientific progress, not just disruption to cybersecurity (Source: Ronald_vanLoon, NVIDIA HPC Developer)