Keywords:Qwen3 series models, Claude Code, AI model benchmarking, Runway Gen-4, LangGraph, Qwen3-235B-A22B performance, Claude Code programming assistant, SimpleBench benchmarking, Runway Gen-4 References feature, LangGraph Agent applications

🔥 Highlights

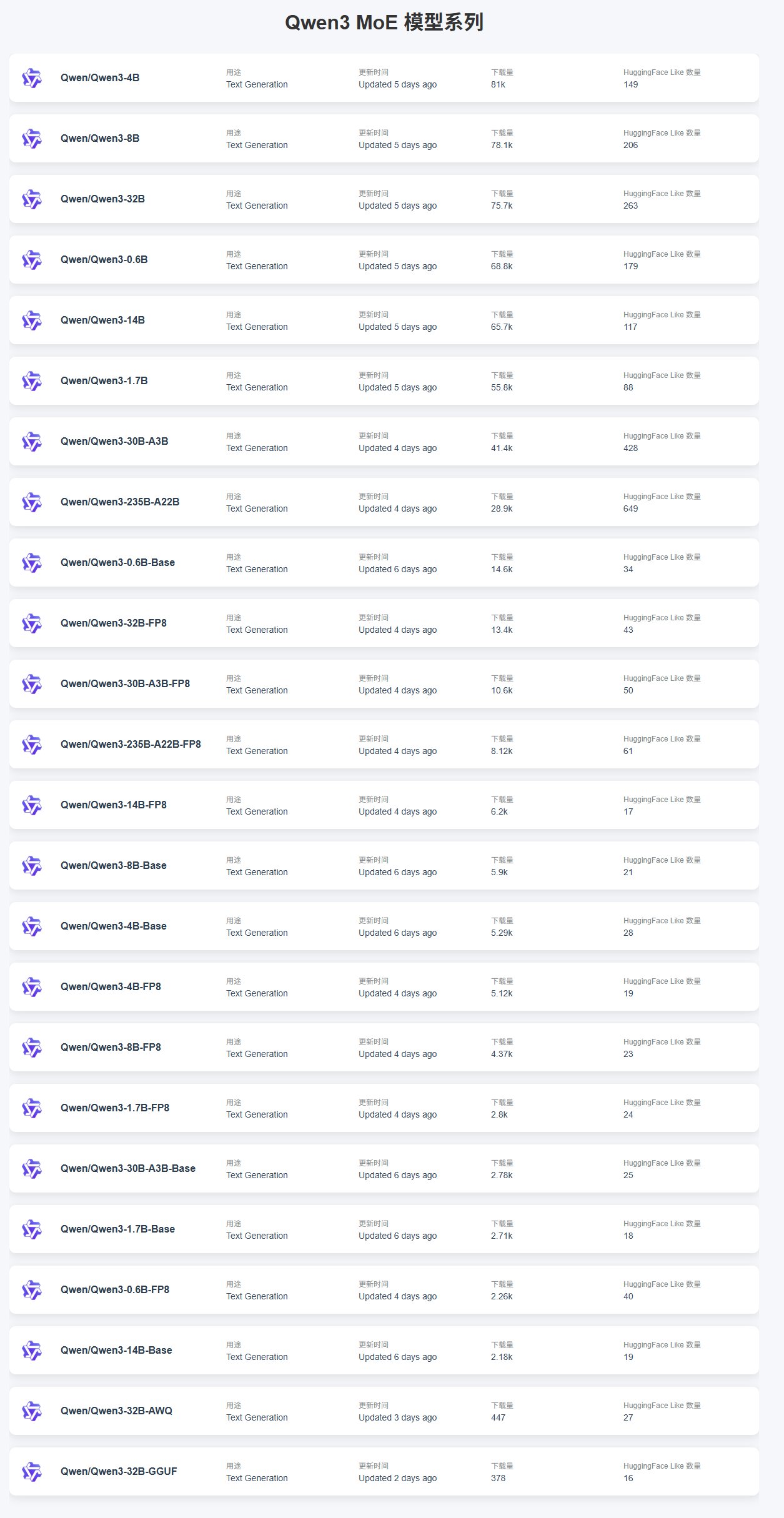

Qwen3 Series Models Release and Performance: Alibaba released the Qwen3 series models, covering multiple sizes from 0.6B to 235B. Community feedback indicates that smaller models (like 4B) have higher download counts due to ease of fine-tuning, while among MoE models, the 30B-A3B is relatively popular. In terms of performance, Qwen3-235B-A22B performs excellently on SimpleBench, ranking 13th, outperforming models like o1/o3-mini and DeepSeek-R1. Qwen3-8B performs well locally, with a small size (4.3GB quantized version) and low memory usage (4-5GB), suitable for resource-constrained environments. However, some users pointed out shortcomings of Qwen3 in driving autonomous AI agents, such as unstable structured generation, difficulty in cross-lingual processing, lack of environmental understanding, and censorship issues. (Source: karminski3, scaling01, BorisMPower, Reddit r/LocalLLaMA, Reddit r/MachineLearning)

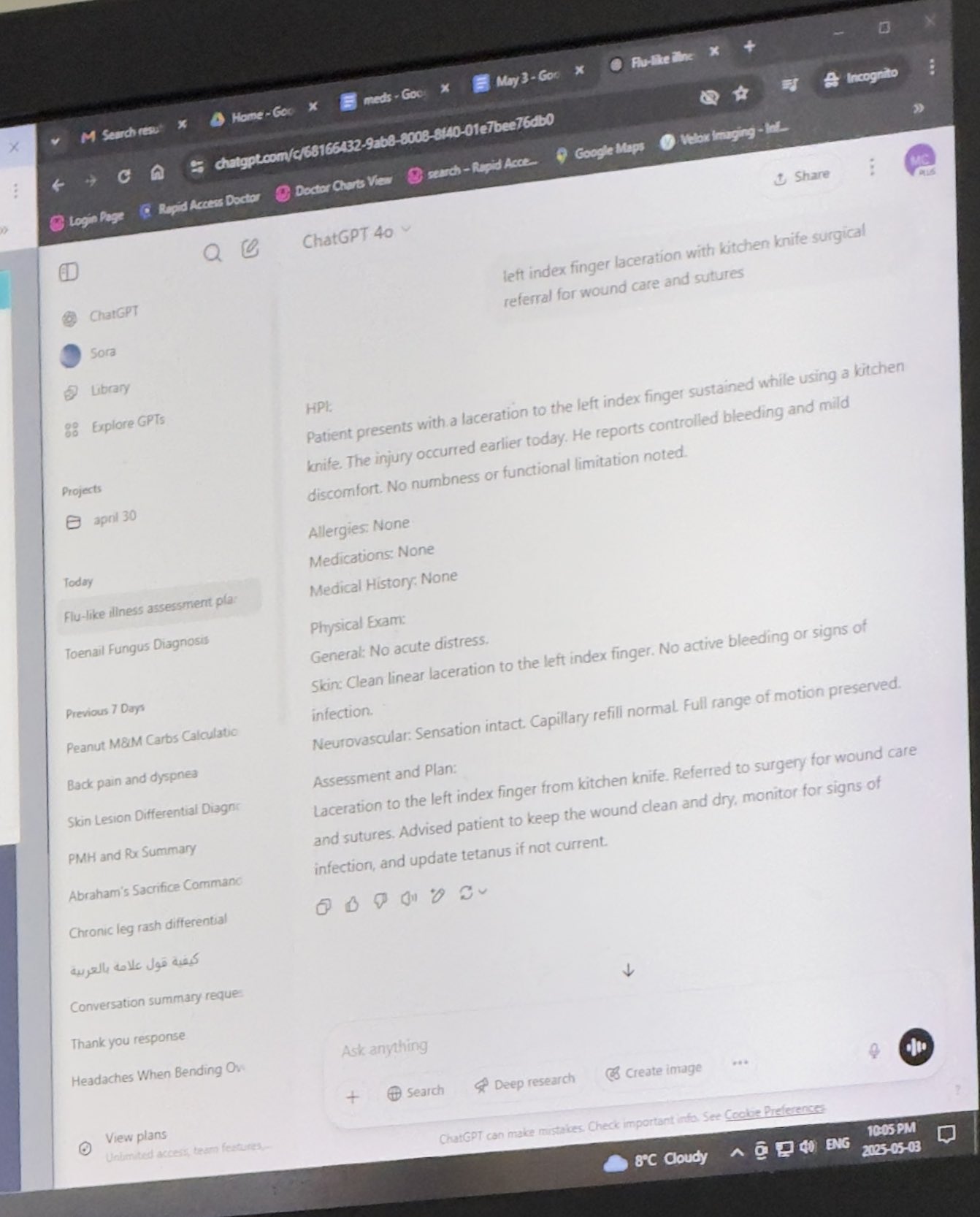

Claude Code Performance and User Feedback: Claude Code is gaining attention as a programming assistant. Users discuss its hallucination issues when dealing with private libraries, generating incorrect code due to a lack of understanding of custom implementations. Solutions include providing more context, fine-tuning the model, or using an MCP (Machine Collaboration Protocol) server to access private libraries. Meanwhile, Claude Pro users report quota limit issues, where even minimal usage can trigger limits, affecting coding efficiency. Performance reports indicate that recent adjustments to cache-aware rate limiting might be causing unexpected throttling, particularly affecting Pro users. Despite the issues, some users still believe Claude is superior to ChatGPT for “vibe-coding”. (Source: code_star, jam3scampbell, Reddit r/ClaudeAI, Reddit r/ClaudeAI, Reddit r/ClaudeAI)

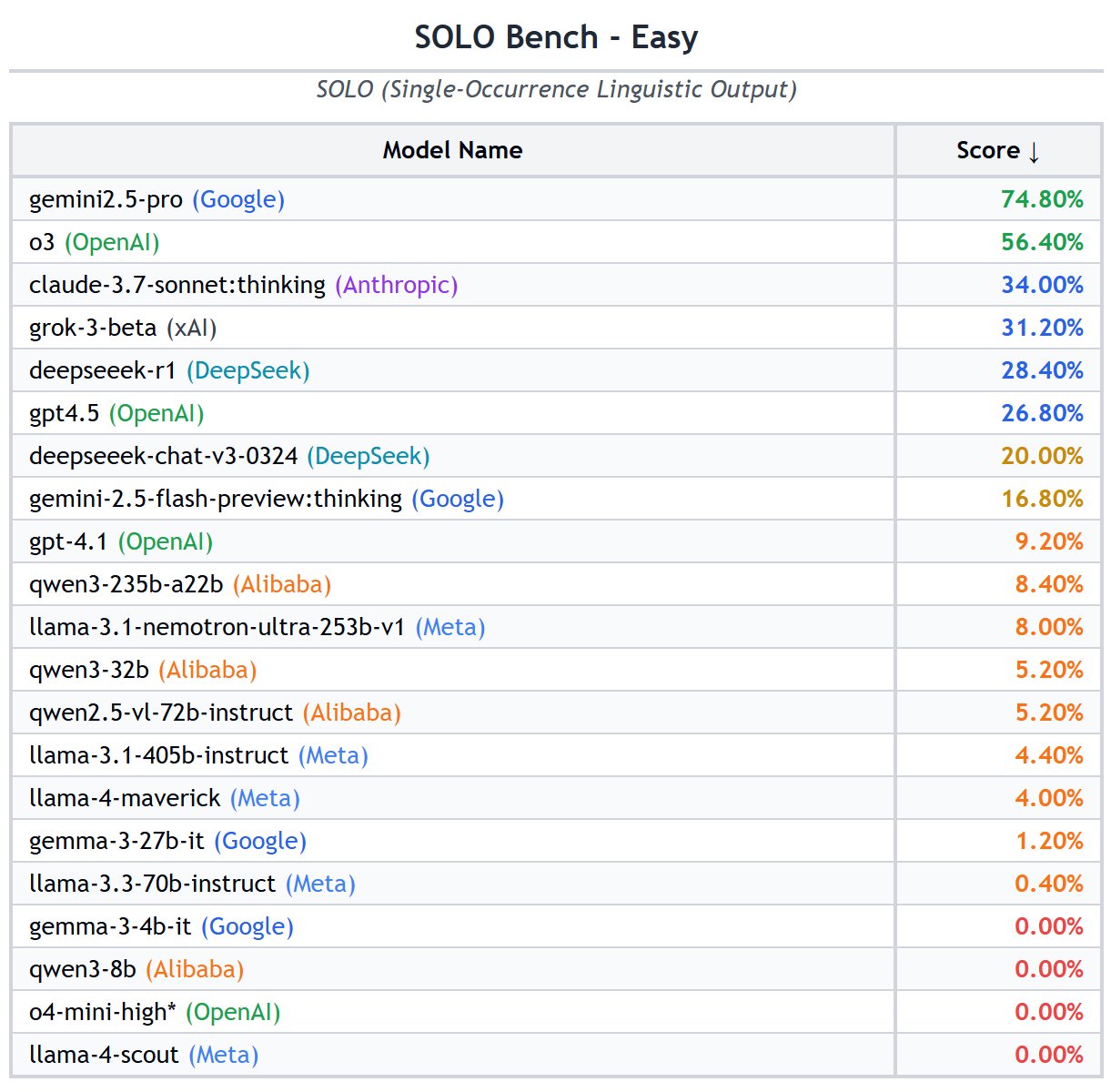

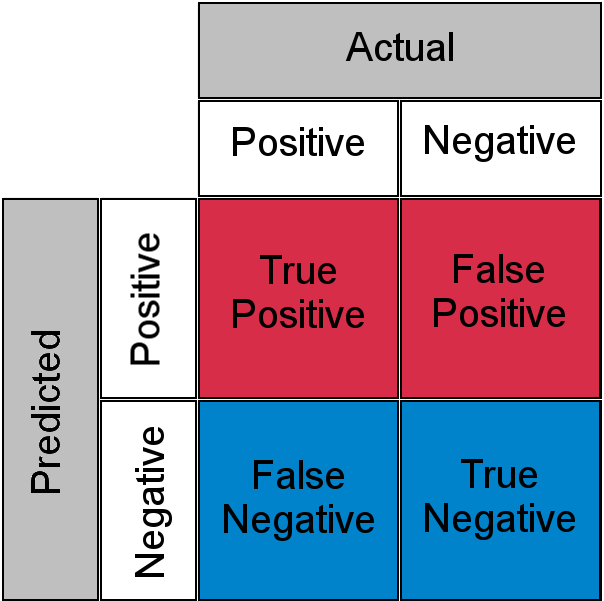

Discussion and List of AI Model Benchmarks: The community is actively discussing the effectiveness of various LLM benchmarks. Some users consider GPQA and SimpleQA as key benchmarks, while the signal from traditional benchmarks like MMLU and HumanEval is weakening. Conceptually simple benchmarks like SimpleBench, SOLO-Bench, AidanBench, as well as those based on games and real-world tasks, are favored. Concurrently, a comprehensive list of LLM benchmarks covering multiple dimensions such as general capabilities, code, math, agents, long context, and hallucination was shared, providing a reference for evaluating models. Users expressed interest in Grok 3.5 benchmark data but also cautioned against unofficial or tampered data. (Source: teortaxesTex, scaling01, scaling01, teortaxesTex, scaling01, natolambert, scaling01, teortaxesTex, Reddit r/LocalLLaMA)

Runway Gen-4 References Feature Showcase: The References feature in RunwayML’s Gen-4 model demonstrates powerful image and video generation capabilities. Users showcased using the feature for space transformation, generating new interior designs simply by providing a picture of the space and a reference image. Additionally, the feature can be used to create interactive video games similar to “Myst” by specifying start and end frames to generate transition animations. It can even “travel” to historical scenes, generating views from different angles of specific locations (like the scene from the “Las Meninas” painting), showcasing its immense potential in creative content generation. (Source: connerruhl, c_valenzuelab, c_valenzuelab, TomLikesRobots, c_valenzuelab)

🎯 Trends

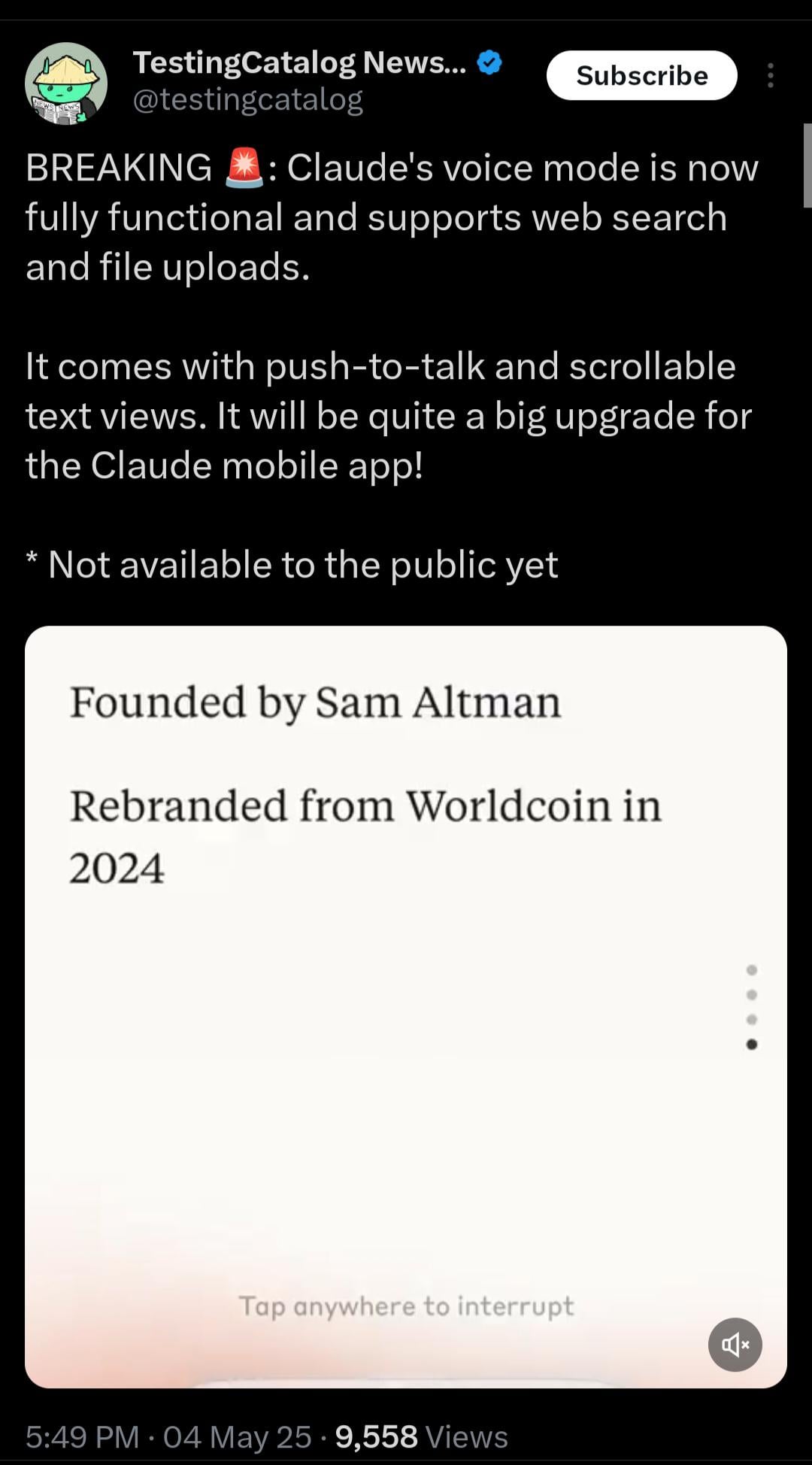

Claude Soon to Launch Real-time Voice Mode: Anthropic’s Claude is testing a real-time voice interaction feature. According to leaked information, the mode is fully functional, will support web search and file upload, and offer “push-to-talk” and a scrollable text view. Although not yet publicly released, relevant placeholders (<antml:voiceNote>) have appeared in system prompts, signaling an upcoming major upgrade to the Claude mobile app aimed at enhancing user interaction experience and catching up with competitors like ChatGPT in voice capabilities. (Source: op7418, Reddit r/ClaudeAI, Reddit r/ClaudeAI)

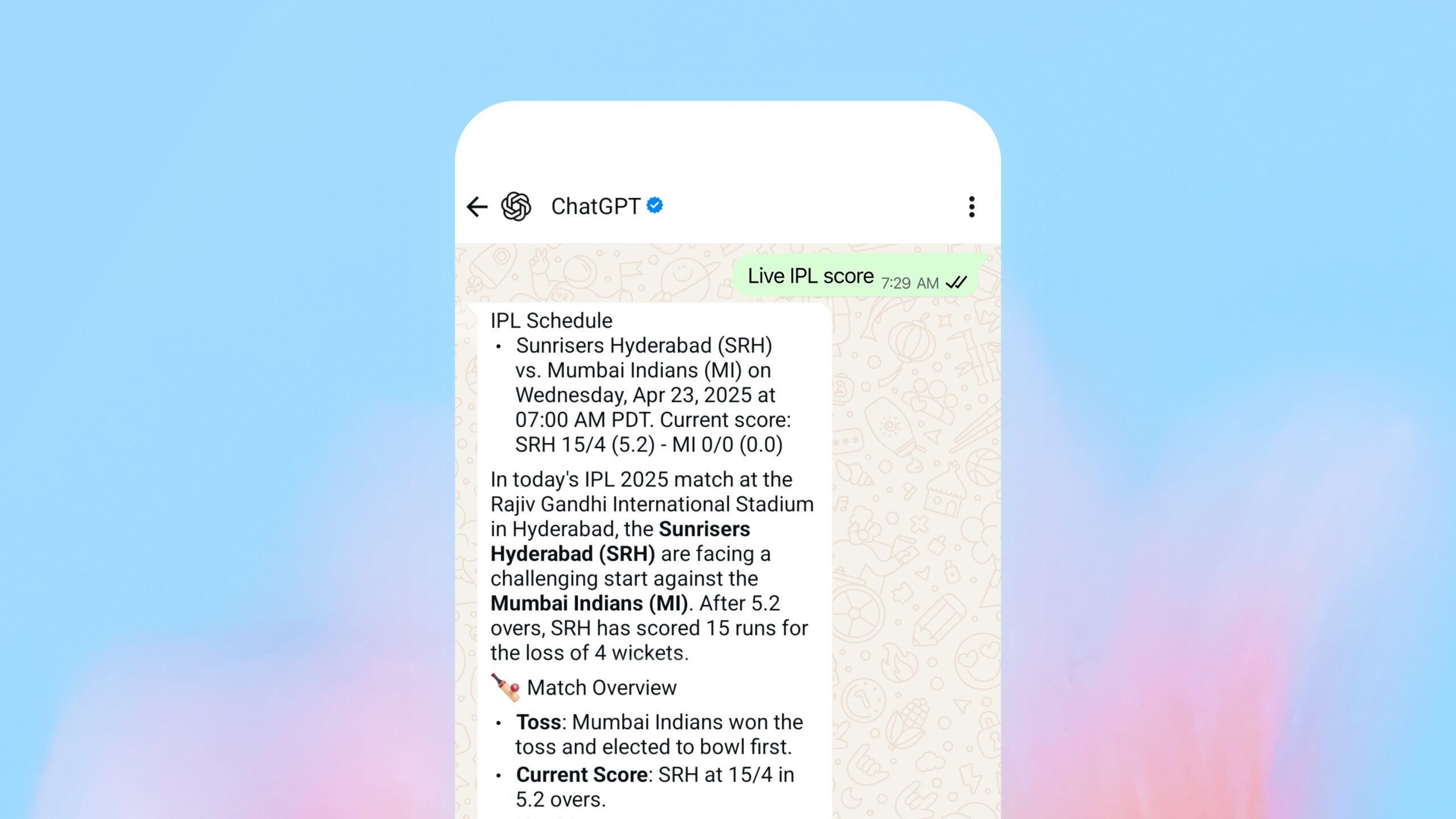

OpenAI Launches In-WhatsApp Search Feature: OpenAI announced that users can now send messages to 1-800-ChatGPT (+1-800-242-8478) via WhatsApp to get real-time answers and sports scores. This move is seen as a significant step for OpenAI to expand its service reach, but it also sparks discussion about the strategic considerations of offering core services on a major competitor’s platform (Facebook-owned WhatsApp). The feature is accessible in all regions where ChatGPT is available. (Source: digi_literacy)

Grok Soon to Launch Voice Feature: xAI’s Grok announced it will introduce a voice interaction feature, further enhancing its multimodal capabilities, aiming to compete with other mainstream AI assistants (like ChatGPT, Gemini, Claude) in voice interaction. Specific implementation details and launch time have not yet been announced. (Source: ibab)

TesserAct: Learning 4D Embodied World Models Released: DailyPapers announced the launch of TesserAct, a system capable of learning 4D embodied world models. Based on input images and text instructions, it can generate videos containing RGB, depth, and normal information, and reconstruct 4D scenes. This technology has potential in understanding and simulating dynamic physical worlds, applicable to fields like robotics, autonomous driving, and virtual reality. (Source: _akhaliq)

Research on Spatial Reasoning Capabilities of Visual Language Models (VLMs): A paper from ICML 2025 explores why VLMs perform poorly in spatial reasoning. The research finds that the attention mechanisms of existing VLMs fail to accurately focus on relevant visual objects when processing spatial relationships. The paper proposes a training-free method to mitigate this issue, offering a new perspective for improving the spatial understanding capabilities of VLMs. (Source: Francis_YAO_)

LaRI: Layered Ray Intersections for Single-View 3D Geometric Reasoning: A new technique called LaRI (Layered Ray Intersections) has been proposed for 3D geometric reasoning from a single view. This method likely utilizes ray tracing and layered representations to understand and infer the 3D structure of scenes and spatial relationships between objects, potentially applicable to fields like 3D reconstruction and scene understanding. (Source: _akhaliq)

IBM Releases Granite 4.0 Tiny Preview: IBM has pre-released the next-generation Granite model, Granite 4.0 Tiny Preview. This series of models employs a new hybrid Mamba-2/Transformer architecture, combining Mamba’s speed efficiency with Transformer’s self-attention precision. Tiny Preview is a fine-grained Mixture-of-Experts (MoE) model with 7B total parameters, activating only 1B parameters during inference, designed for efficient performance. This marks IBM’s efforts in exploring new model architectures to enhance performance and efficiency. (Source: Reddit r/LocalLLaMA)

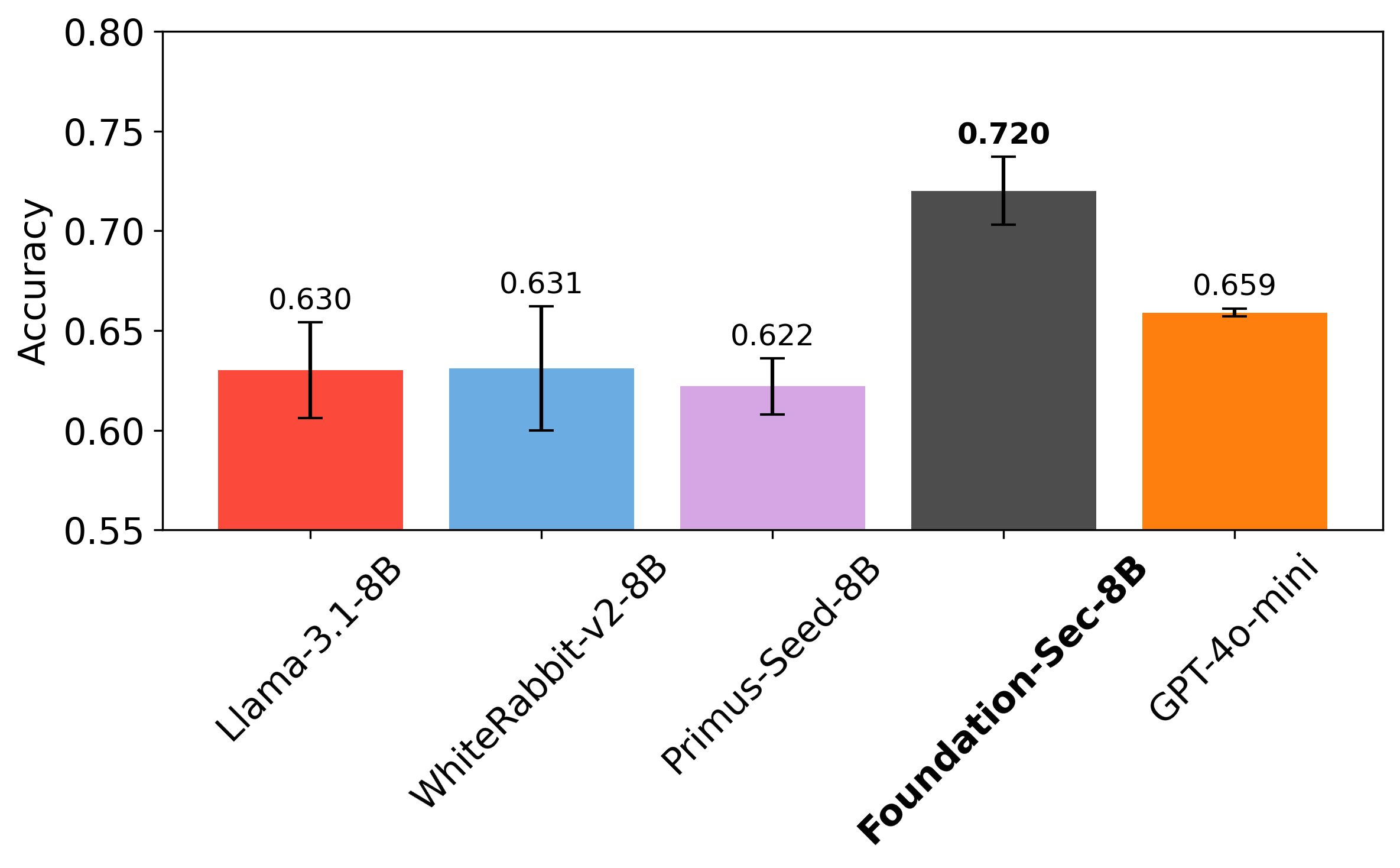

Cisco Releases Foundation-Sec-8B, an LLM Specialized for Cybersecurity: Cisco’s Foundation AI team has released the Foundation-Sec-8B model on Hugging Face. This is an LLM built on Llama 3.1, focusing on the cybersecurity domain. It is claimed that this 8B model can match Llama 3.1-70B and GPT-4o-mini on specific security tasks, demonstrating the potential of domain-specific models to outperform general large models on specialized tasks. This indicates that large tech companies are actively applying LLMs to vertical domains to solve specific problems. (Source: _akhaliq, Suhail)

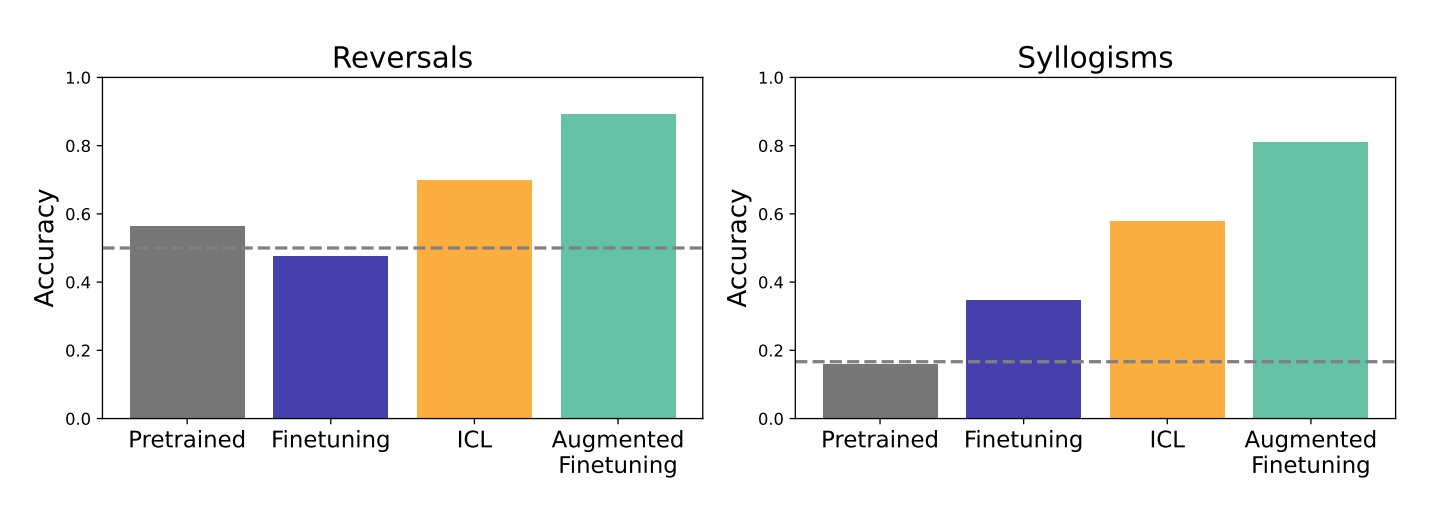

Study on the Impact of In-Context Learning (ICL) vs. Fine-tuning on LLM Generalization: Research from Google DeepMind and Stanford University compares the effects of In-Context Learning (ICL) and fine-tuning, two mainstream methods, on the generalization ability of LLMs. The study finds that ICL makes models more flexible during learning, leading to stronger generalization. However, fine-tuning yields better results when information needs to be integrated into a larger knowledge structure. The researchers propose a new method combining the advantages of both—augmented fine-tuning—which involves adding ICL-like reasoning processes to the fine-tuning data, aiming for optimal results. (Source: TheTuringPost)

Meta Releases PerceptionLM: Open Data and Models for Detailed Visual Understanding: Meta has launched the PerceptionLM project, aiming to provide a fully open and reproducible framework for transparent research in image and video understanding. The project analyzes standard training pipelines that do not rely on proprietary model distillation and explores large-scale synthetic data to identify data gaps, particularly in detailed video understanding. To fill these gaps, the project releases 2.8 million manually annotated, fine-grained video question-answering pairs and spatio-temporally localized video captions. Additionally, it introduces the PLM–VideoBench evaluation suite, focusing on assessing complex reasoning tasks in video understanding. (Source: Reddit r/MachineLearning)

🧰 Tools

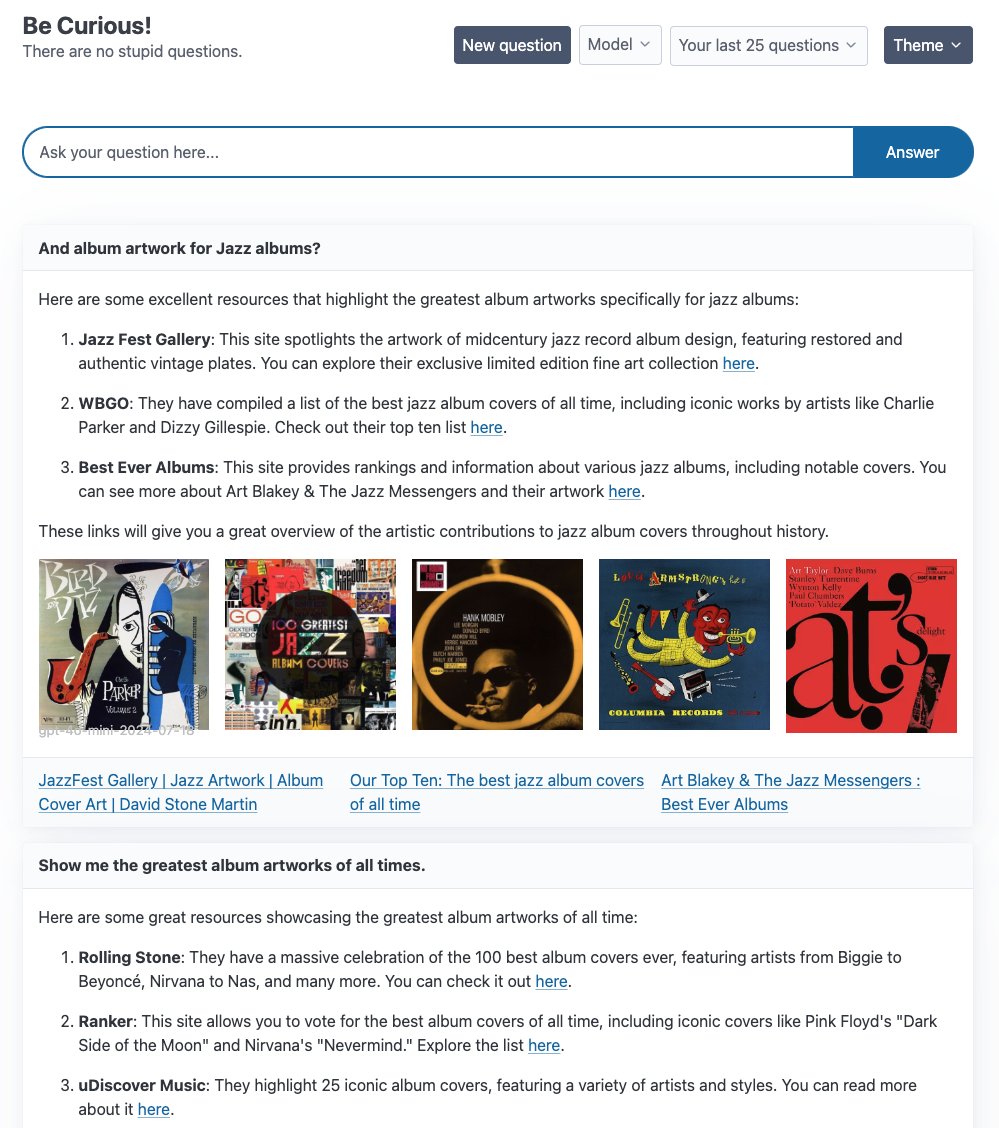

LangGraph Launches Multiple Agent Example Applications: LangChain showcased several agent application examples built on LangGraph: 1. Curiosity: An open-source ReAct chat interface similar to Perplexity, supporting real-time streaming, Tavily search, and LangSmith monitoring, connectable to various LLMs like GPT-4-mini and Llama3. 2. Meeting Prep Agent: An intelligent calendar assistant that automatically researches meeting participants and company information, providing meeting insights through a React/FastAPI interface, utilizing LangGraph for complex agent workflows and real-time reasoning. 3. Generative UI: Exploring generative UI as the future of human-computer interaction, releasing a generative UI example library for LangGraph.js, demonstrating the potential of agent graphs in building dynamic interfaces. (Source: LangChainAI, hwchase17, LangChainAI, Hacubu)

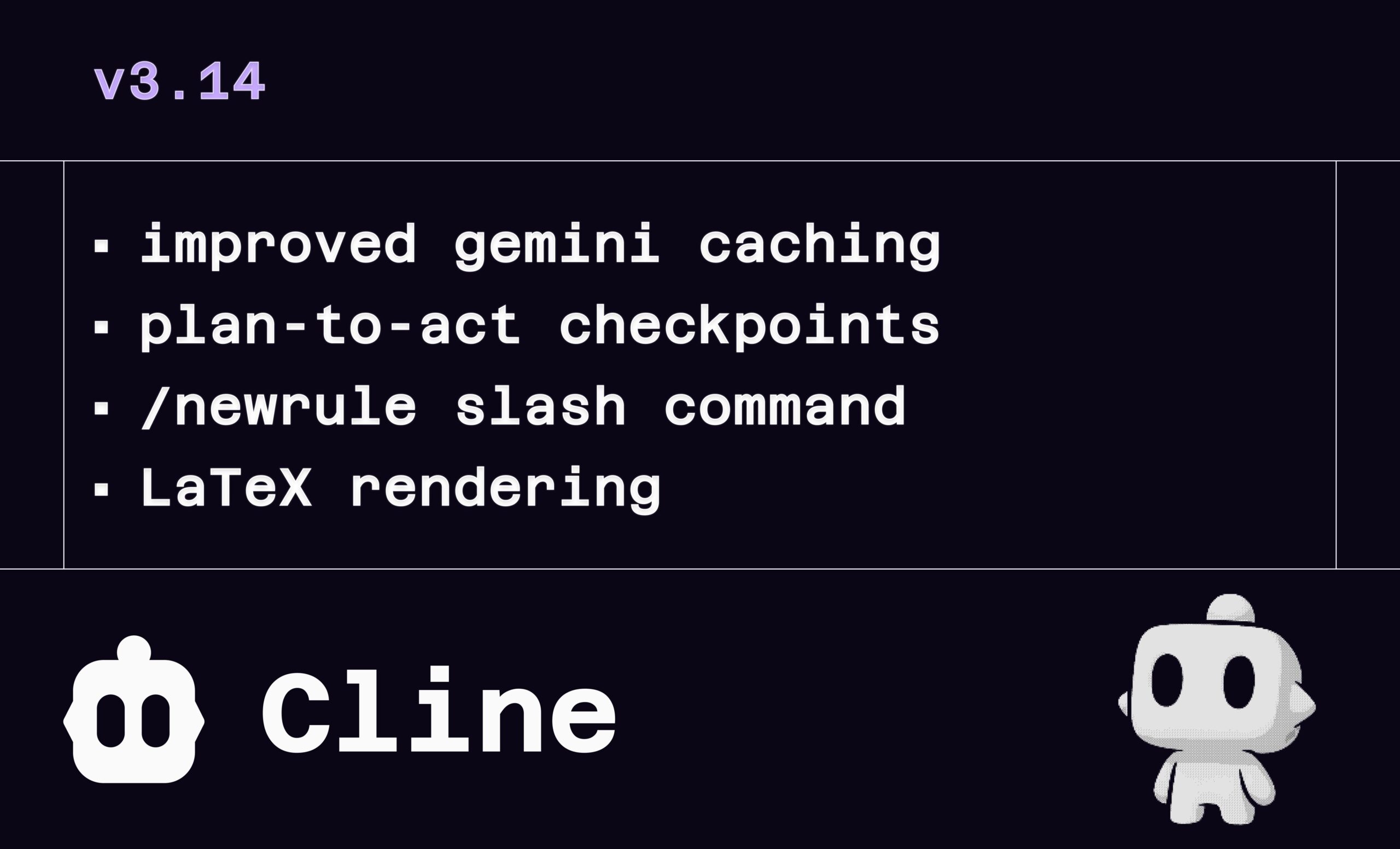

Cline v3.14 Update: Supports LaTeX, Drag-and-Drop Files, and Rule Definition: AI programming assistant Cline released v3.14 with several feature updates: 1. LaTeX Rendering: Full support for LaTeX, allowing direct handling of complex mathematical formulas and scientific documents in the chat interface. 2. Drag-and-Drop Upload: Supports dragging and dropping files directly from the OS file manager (while holding Shift) to add context. 3. Rule Definition: Added the /newrule command, enabling Cline to analyze projects and generate rule documents like design systems and coding standards to enforce project standards. 4. Process Checkpoints: Added more checkpoints in the task workflow, allowing users to review and modify plans before the “Act” phase. (Source: cline, cline, cline, cline)

LlamaParse Helps 11x.ai Build Intelligent AI SDR: LlamaIndex demonstrated how its LlamaParse technology helps 11x.ai improve its AI Sales Development Representative (SDR) system. By integrating LlamaParse, 11x.ai can process various document types uploaded by users, providing necessary context information for the AI SDR, thus enabling personalized automated outreach campaigns and reducing the onboarding time for new SDRs to days. This highlights the importance of advanced document parsing technology in automating business processes and enhancing AI application capabilities. (Source: jerryjliu0)

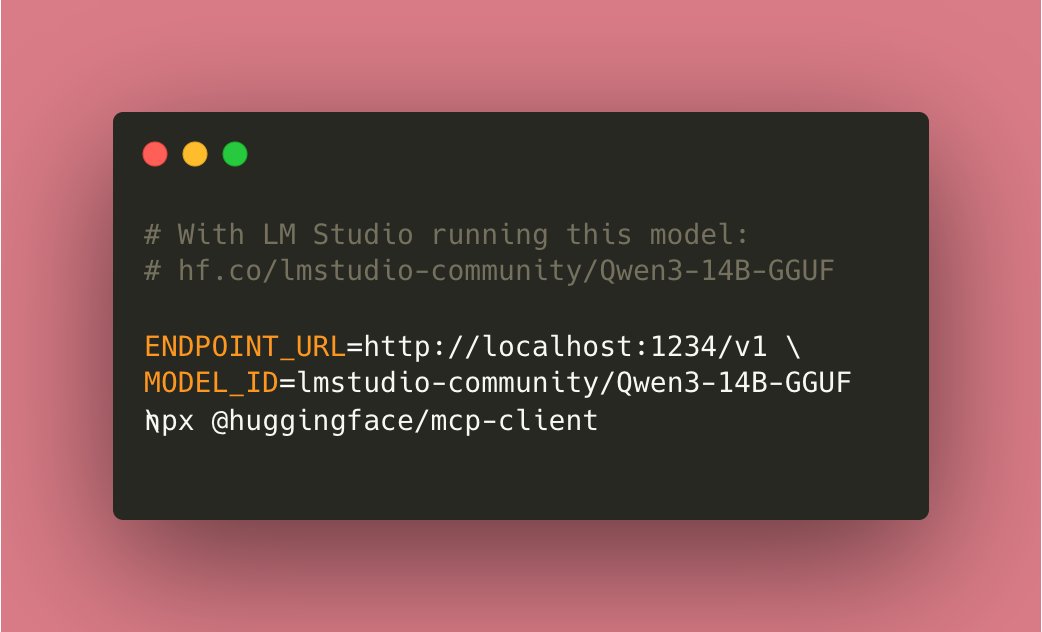

Tiny Agents Achieve Local Execution: Community contributions have enabled Tiny Agents, based on Hugging Face’s mcp-client (huggingface.js), to run entirely locally. Users only need to run a tool-compatible model (like Qwen3 14B) locally and set the ENDPOINT_URL to point to the local API endpoint to achieve localized AI agent functionality. This is considered significant progress for local AI. (Source: cognitivecompai)

cloi: Local Command-Line AI Debugging Tool: cloi is a command-line based AI code debugging tool characterized by fully local execution. It has Microsoft’s Phi-4 model built-in and also supports switching and running other local large language models via Ollama. This provides developers with a convenient option for using AI for code debugging and analysis in a local environment. (Source: karminski3)

AI Decision Circuits: Enhancing LLM System Reliability: An article explores applying electronic circuit design concepts to LLM systems, building “AI Decision Circuits” to improve reliability. With this approach, system accuracy can reach 92.5%. The implementation utilizes LangSmith for real-time tracking and evaluation to verify the accuracy of system output. This method offers new ideas for building more trustworthy and predictable LLM applications. (Source: LangChainAI)

Local Deep Research (LDR) Seeks Improvement Suggestions: The open-source research tool Local Deep Research released v0.3.1 and is soliciting community feedback for improvements, including areas needing focus, desired features, research type preferences, and UI improvement suggestions. The tool aims to run deep research tasks locally and recommends using SearXNG for increased speed. (Source: Reddit r/LocalLLaMA)

OpenWebUI Adaptive Memory v3.1 Released: OpenWebUI’s Adaptive Memory feature has been updated to v3.1. Improvements include memory confidence scoring and filtering, support for local/API Embedding providers, local model auto-discovery, Embedding dimension validation, Prometheus metrics detection, health and metrics endpoints, UI status emitter, and Debug fixes. The roadmap includes refactoring, dynamic memory tagging, personalized response customization, cross-session persistence validation, improved configuration handling, retrieval tuning, status feedback, documentation expansion, optional external RememberAPI/mem0 synchronization, and PII de-identification. (Source: Reddit r/OpenWebUI)

📚 Learning

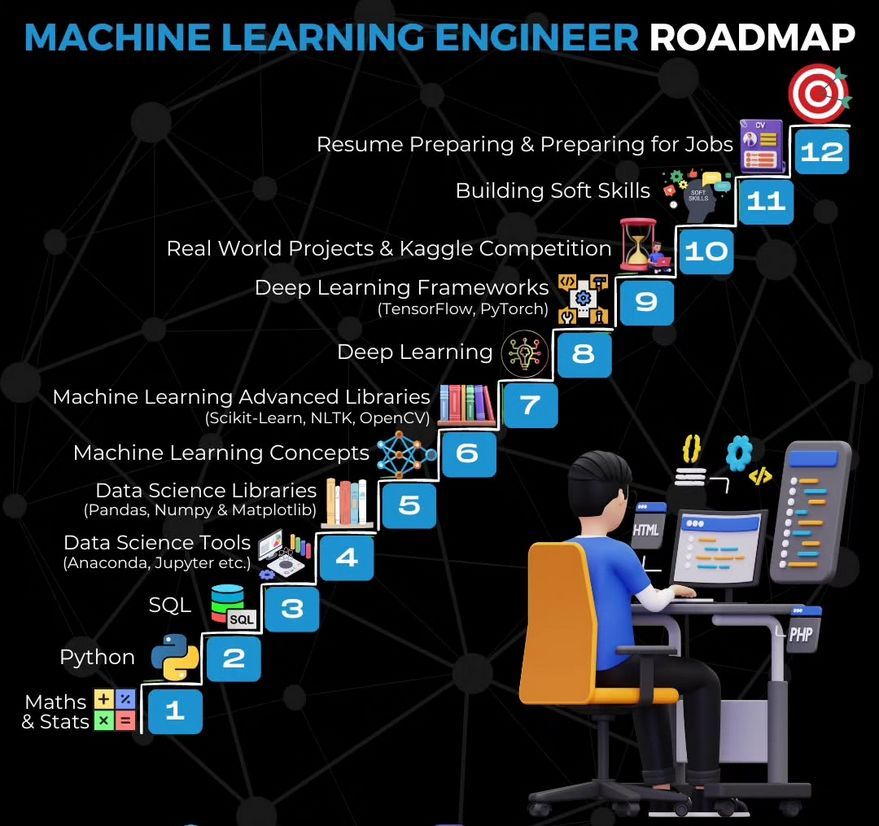

Machine Learning Engineer Learning Roadmap: Ronald van Loon shared a learning roadmap for Machine Learning Engineers, providing an overview of the learning path and key skills for those aspiring to enter the field. (Source: Ronald_vanLoon)

Tutorial on Building a Video Summarizer Using Gemma: LangChainAI released a video tutorial demonstrating how to build a video summarizer application using a locally run Gemma LLM (via Ollama). The Streamlit application utilizes LangChain to process videos and automatically generate concise summaries, providing an example for learning and practicing local LLM applications. (Source: LangChainAI)

Tutorial on Building an MCP Server to Process Stock Data: LangChainAI provided a tutorial guiding users on how to build an MCP (Machine Collaboration Protocol) server using FastMcp and LangChain to process stock market data. The guide demonstrates how to use LangGraph to create a ReAct Agent to achieve standardized data access, helping to understand and apply MCP and Agent technologies. (Source: LangChainAI)

Proof-of-Concept for LLM Rationality Benchmark: Deep Learning Weekly mentioned a blog post introducing a proof-of-concept benchmark for LLM rationality created by adapting the ART-Y evaluation. The post emphasizes that evaluating whether AI is more rational than humans (rather than just smarter) is crucial. (Source: dl_weekly)

AI Red Teaming as a Critical Thinking Exercise: Deep Learning Weekly recommended an article that defines AI Red Teaming not just as technical vulnerability testing against LLMs, but as a critical thinking exercise originating from military and cybersecurity practices. This provides a broader perspective for understanding and implementing AI security assessments. (Source: dl_weekly)

Python Learning Book Recommendation: Community members recommended the book “Python Crash Course” for learning Python, considering it a good starting point for effectively using Python, and shared the PDF version. The importance of Python as a foundational language for learning AI development was emphasized. (Source: omarsar0)

“Deeply Supervised Nets” Wins AISTATS 2025 Test of Time Award: Saining Xie’s early PhD paper, “Deeply Supervised Nets,” won the Test of Time Award at AISTATS 2025. He shared that this paper was once rejected by NeurIPS, using this to encourage students to maintain perseverance and persist in research when facing paper rejections. (Source: sainingxie)

Discussion on Overview of LLM Distillation Methods: A Reddit user sought the latest overview of LLM distillation methods, particularly from large models to small models, and from large models to more specialized models. The discussion mentioned three main types: 1. Generating data + SFT (simple distillation); 2. Logit-based distillation (models need to be homogeneous); 3. Hidden state-based distillation (models can be heterogeneous). Related tools like DistillKit were also mentioned. (Source: Reddit r/MachineLearning)

Exploration of Federated Fine-tuning of LLaMA2: A Reddit user shared preliminary experimental results of federated fine-tuning LLaMA2 using FedAvg and FedProx. The experiment was conducted on the Reddit TL;DR dataset, comparing global validation ROUGE-L, communication cost, and client drift. Results showed FedProx outperformed FedAvg in reducing drift and slightly improving ROUGE-L, but still fell short of centralized fine-tuning. The community was invited to discuss adapter configurations, compression methods, and stability issues under non-IID data. (Source: Reddit r/deeplearning)

💼 Business

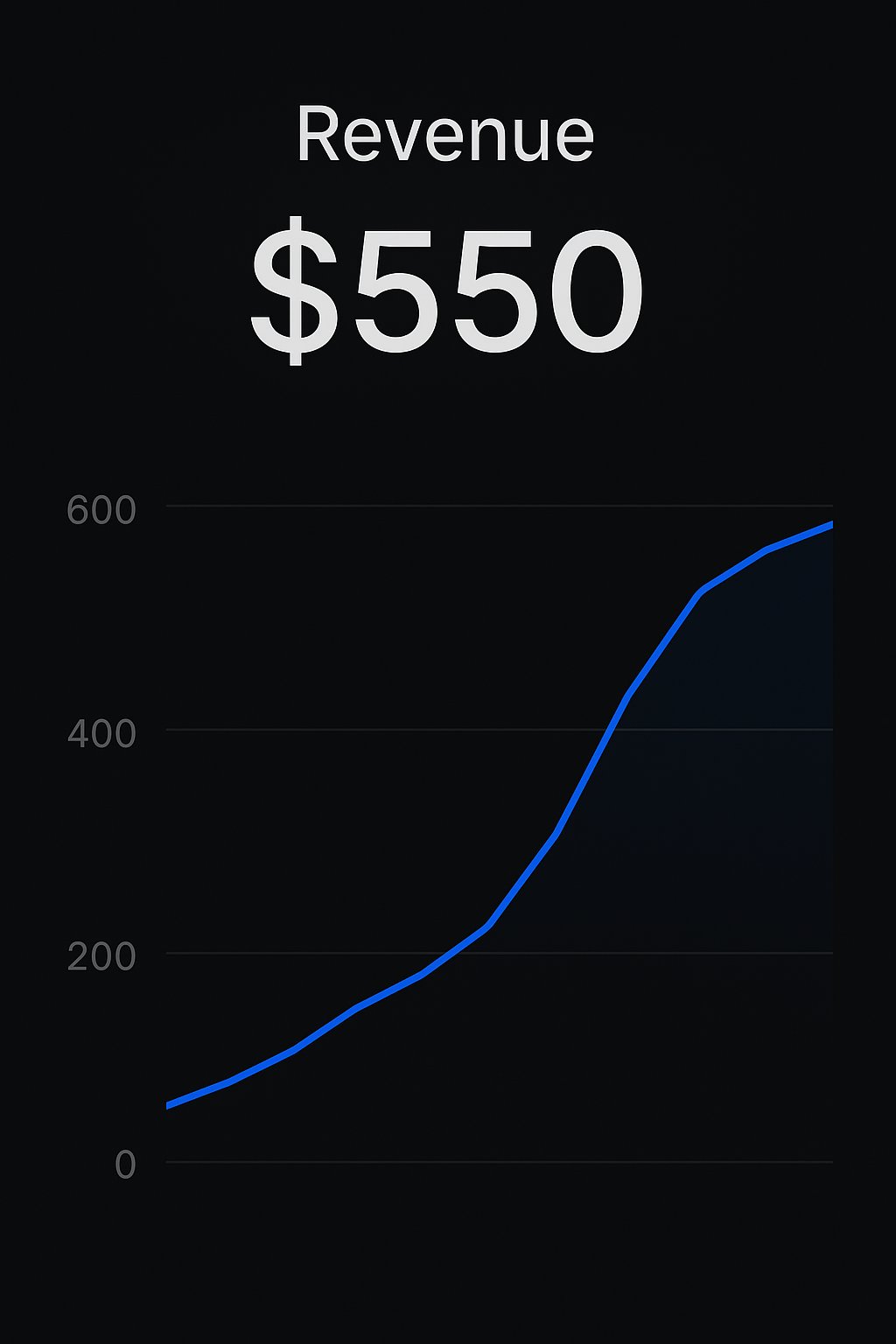

Replit Platform Developers Achieve App Monetization: Two developers shared success stories of building and selling AI applications through the Replit platform. One developer earned their first $550 using CreateMVPs.app; another developer’s app, built in one day, sold for $4700, and they received more project bids. This shows the potential of platforms like Replit in empowering developers to quickly build and commercialize AI applications. (Source: amasad, amasad)

ChatGPT Edu Deployed at Icahn School of Medicine at Mount Sinai: The Icahn School of Medicine at Mount Sinai announced that it is providing ChatGPT Edu services to all medical and graduate students. This marks the entry of OpenAI’s educational product into a top medical education institution, aiming to use AI to assist medical education and research. A video demonstrates its application scenarios. (Source: gdb)

Venture Capital Industry’s Continuous Losses Attract Attention: Sam Altman expressed confusion about the phenomenon of the venture capital (VC) industry consistently losing money overall yet still receiving investments from Limited Partners (LPs). He believes that while investing in top funds is wise, the phenomenon of continuous losses across the industry warrants consideration of the underlying reasons and LP motivations. (Source: sama)

🌟 Community

Discussion on AI’s Impact on Employment and Education: The community discussed the potential impact of AI automation on existing work patterns (keyboard-mouse-screen interface) and how educators should respond to AI chatbots. The view holds that teachers should not ban students from using tools like ChatGPT, but should teach how to use these AI effectively and responsibly, cultivating students’ AI literacy and best practice skills. (Source: NandoDF, NandoDF)

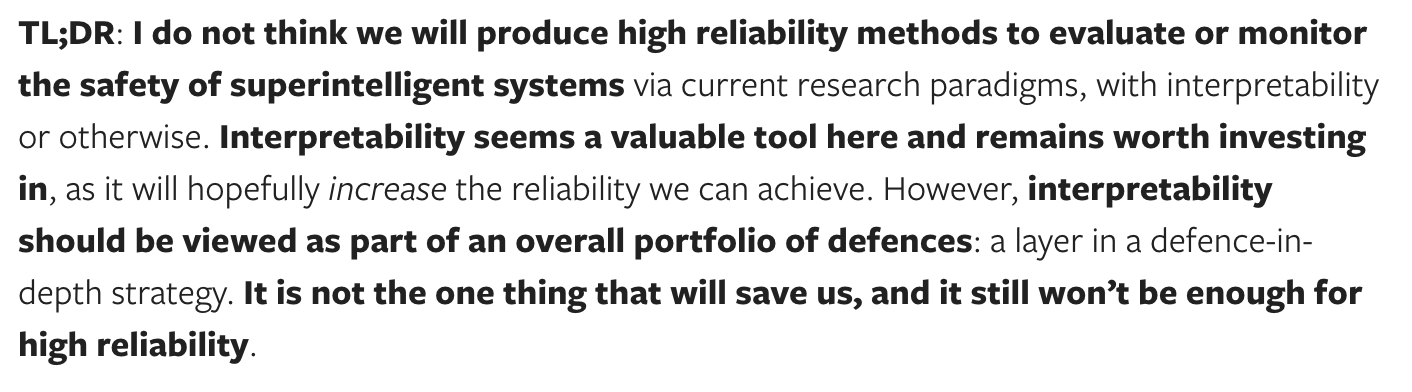

Discussion on AI Explainability and Safety: Dario Amodei emphasized the urgency of AI model explainability, believing understanding how models work is crucial. Neel Nanda offered a different perspective, arguing that while investment in explainability is good, it shouldn’t be overemphasized relative to other safety methods. The path to reliably safeguarding powerful AI is not solely through explainability; it should be part of a portfolio of safety measures. (Source: bookwormengr)

Discussion on RLHF Complexity and Model “Sycophancy”: Nathan Lambert and others discussed the complexity and importance of Reinforcement Learning from Human Feedback (RLHF) and the resulting model “sycophancy” phenomenon (e.g., GPT-4o-simp). The article argues RLHF is crucial for model alignment but the process is messy, and users often don’t understand its complexity, leading to misunderstandings or dissatisfaction with model behavior (like the backlash on LMArena). Understanding the inherent challenges of RLHF is crucial for evaluating and improving models. (Source: natolambert, aidangomez, natolambert)

Potential Impact of AI on Human Cognition and Thinking: The community explored AI’s potential impact on human thinking. One concern is that over-reliance on AI could lead to cognitive decline (lazier reading, weakened critical thinking). Another perspective suggests that if AI can provide more accurate information and judgment, it might actually enhance the cognitive level of populations originally weaker in thinking skills or susceptible to misinformation, acting as a “cognitive enhancer” and helping make better decisions. At the same time, there’s also discussion that AI development might lead us to a deeper understanding of consciousness, or even discover that some people might just be simulating consciousness. (Source: riemannzeta, HamelHusain, Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

Discussion on AI Ethics and Application Scenarios: Discussions covered AI applications in fields like healthcare and law. A user shared a case of a doctor using ChatGPT during diagnosis, sparking discussion about AI use in professional settings. There were also ethical considerations regarding using AI for ghostwriting, especially when the author faces difficulties. Additionally, concerns were raised about the potential misleading information and risks from AI-generated content, like books about ADHD. (Source: BorisMPower, scottastevenson, Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

Progress in AI-Driven Robotics: Several AI-driven robots were showcased: a Google DeepMind robot that can play table tennis, a robot dog demonstrated at an exhibition, a robot used for diamond setting, a drone inspired by birds that can jump to take off, a robotic chisel used for art creation, and a video of Unitree’s G1 humanoid robot walking in a mall. These demonstrate advancements in AI for robot control, perception, and interaction. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

Future of AI and Humanities/Social Sciences: Citing views from a New Yorker article, the discussion explored the impact of AI on the humanities. The article argues AI cannot touch human “me-ness” and unique human experiences, but also points out that AI, by reorganizing and re-presenting humanity’s collective writing (the archive), can simulate a large part of what we expect to get from individual humans. This presents challenges and new dimensions of thought for the humanities. (Source: NandoDF)

💡 Other

AI Tools Used for Personal Improvement: A Reddit user shared their successful experience using ChatGPT as a personal fitness and nutrition coach. By using AI to develop training plans and diet plans (combining keto, strength training, fasting, etc.), and even getting macronutrient advice when ordering food, they ultimately achieved better results than with a paid human coach. This showcases the potential of AI in personalized guidance and lifestyle assistance. (Source: Reddit r/ChatGPT)

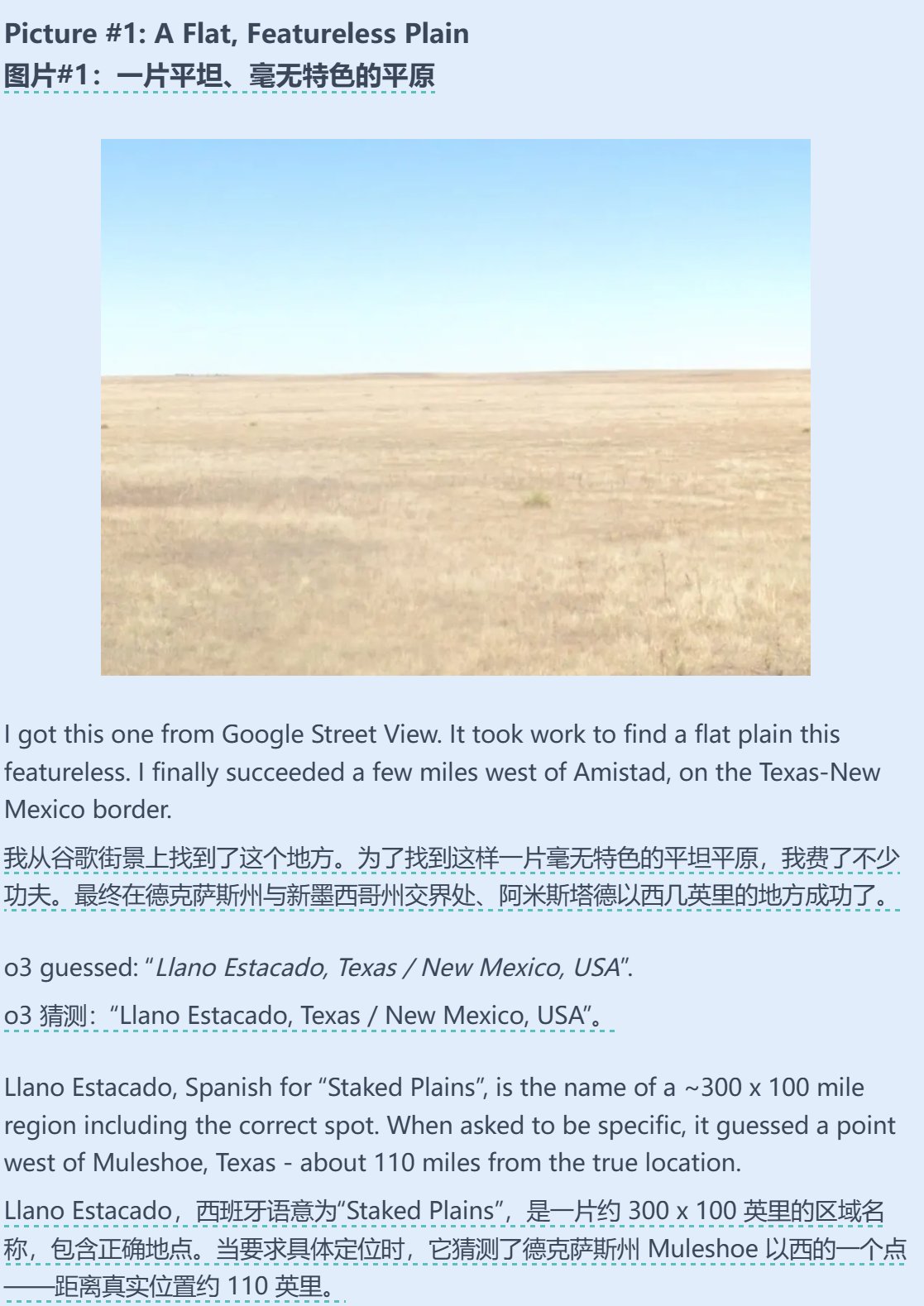

Combination of AI and Geoguessing Ability: Sam Altman retweeted and commented on a test article about AI’s (possibly o3) astonishing ability in Geoguessr (a geography guessing game). Even with minimal image information (like only blurry signs or even solid gradients), the AI could include the correct answer among the options, demonstrating its powerful image recognition, pattern matching, and geographical knowledge reasoning capabilities. (Source: op7418)

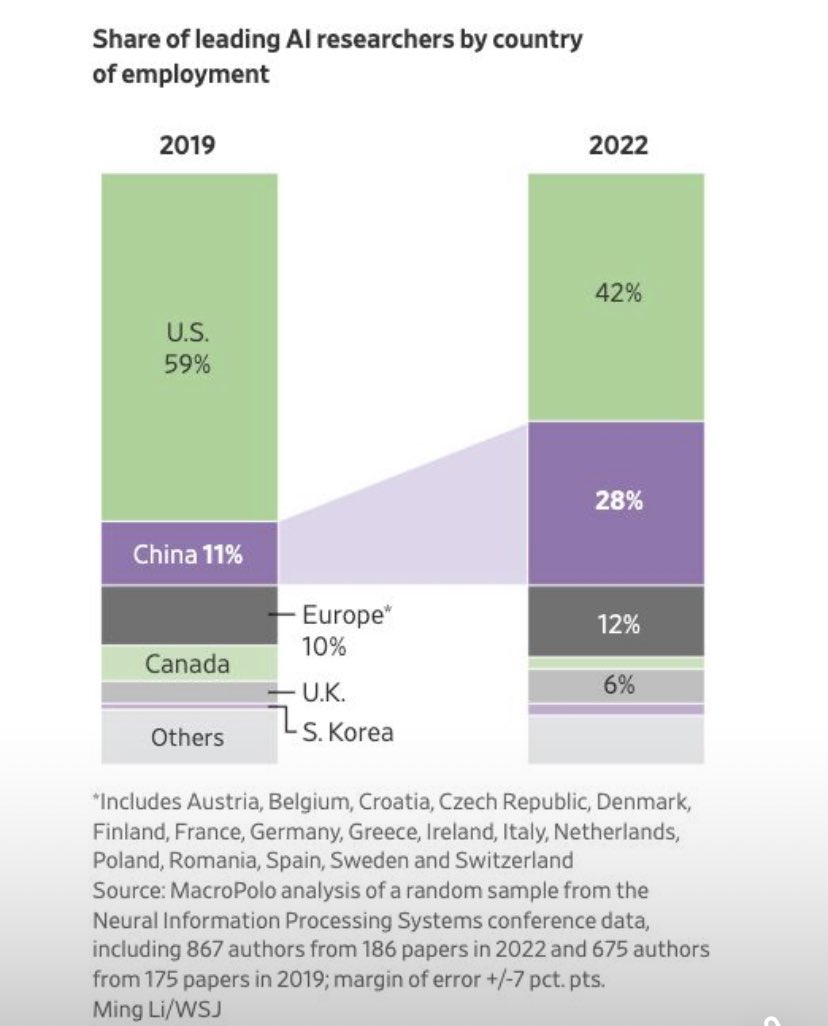

Mobility Trends of AI Researchers: A chart shows that the distribution of employment countries for top AI researchers is changing. The proportion of researchers employed in the US is decreasing, while the proportion employed in China is significantly increasing. Community comments point out that considering the increase in domestic research opportunities in China and potential talent return, the actual gap might be larger than the chart indicates, reflecting changes in the global AI talent competition landscape. (Source: teortaxesTex, bookwormengr)