Keywords: humanoid robot, AI supercomputer, OpenAI model, Gemini model, humanoid robot half marathon, NVIDIA Blackwell chip, OpenAI o3 hallucination rate, Gemini 2.5 Pro physics simulation, AI browser agent course, quantum computing scientific impact, brain-computer interface robotic arm control, GNN game NPC behavior

🔥 Focus

World’s First Humanoid Robot Half Marathon Held: In the world’s first humanoid robot half marathon held in Beijing Yizhuang, Tiangong 1.2max became the first robot to cross the finish line with a time of 2 hours, 40 minutes, and 24 seconds. The event aimed to validate the practicality of robots in different scenarios, bringing together humanoid robots from China with various drive methods and algorithm approaches. The race tested not only the robots’ walking ability, endurance (requiring mid-race charging or battery replacement with time penalties), heat dissipation, and stability, but also human-robot collaboration. Despite incidents like a Unitree robot experiencing ‘stage fright’ and the Tiangong robot falling, the event is considered a significant milestone in humanoid robot development. It provided a platform for real-world performance testing and technology validation, driving progress in structural optimization, motion control algorithms, and environmental adaptability (Source: APPSO via 36Kr)

Nvidia Announces AI Supercomputers to be Manufactured Domestically in the US: Nvidia plans to fully manufacture its supercomputers used for AI tasks domestically in the US for the first time. The company has reserved over a million square feet of space in Arizona for manufacturing and testing Blackwell chips, and is partnering with Foxconn (Houston) and Wistron (Dallas) to build factories in Texas for AI supercomputer production, with mass production expected to ramp up within 12-15 months. This move is part of Nvidia’s plan to produce $500 billion worth of AI infrastructure in the US over the next four years and aligns with the US government’s strategy to enhance semiconductor self-sufficiency and address potential tariffs and geopolitical tensions (Source: dotey)

OpenAI’s New Reasoning Models o3 and o4-mini Reportedly Have Higher Hallucination Rates: According to a TechCrunch report and related discussions, OpenAI’s newly released reasoning models, o3 and o4-mini, hallucinate more often in testing than their predecessors (such as o1 and o3-mini). On OpenAI’s in-house PersonQA benchmark, o3 reportedly hallucinated on 33% of questions, roughly double o1’s 16% and o3-mini’s 14.8%. The finding has raised concerns about the reliability of these models despite their improved reasoning capabilities. OpenAI has acknowledged that more research is needed to understand why hallucination rates increased (Source: Reddit r/artificial)

🎯 Trends

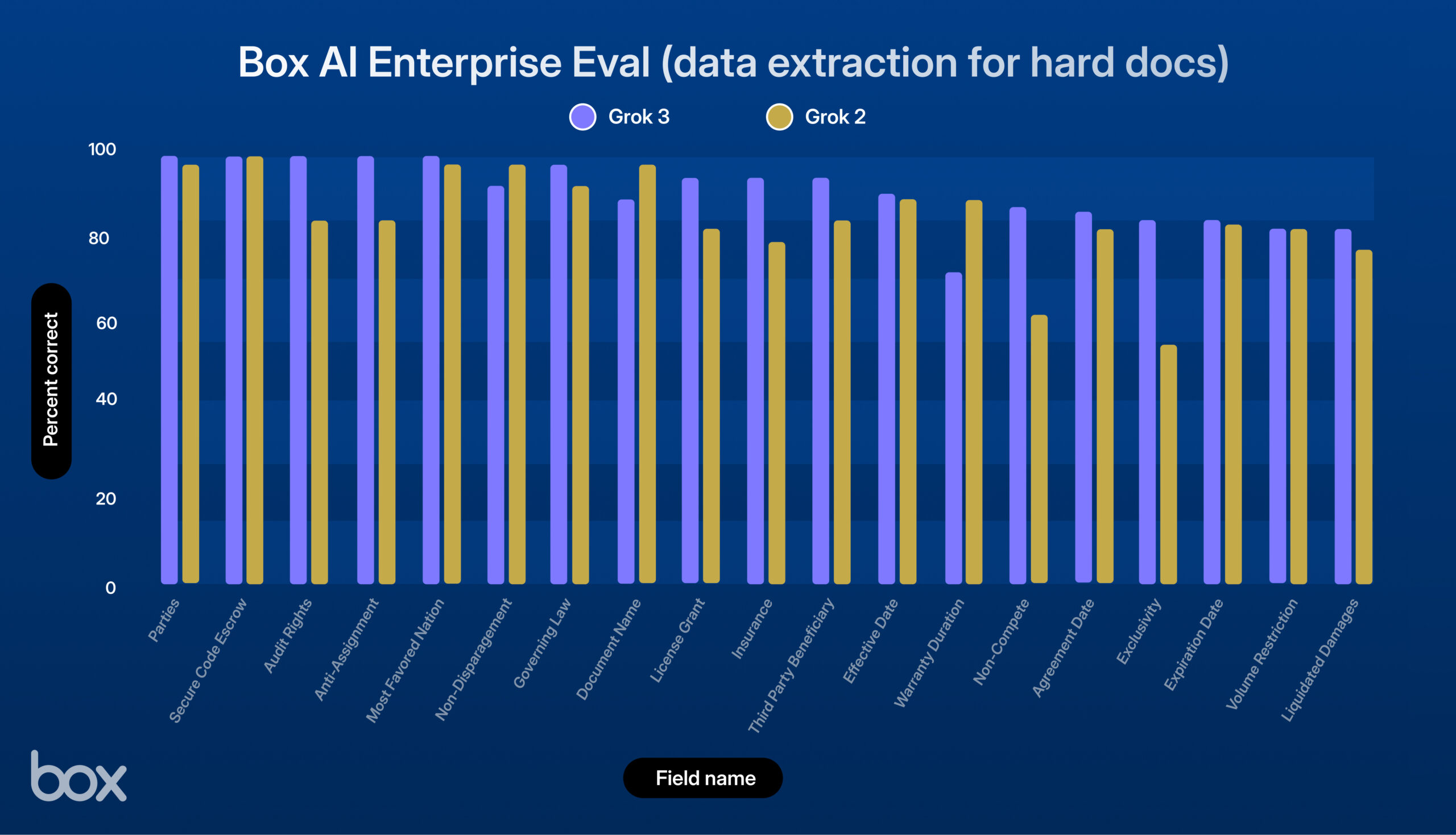

xAI Releases Grok 3, Shows Strong Performance in Box Testing: xAI has launched its new model, Grok 3. Third-party platform Box tested it within its content management workflows and found Grok 3 excelled in single-document and multi-document Q&A, and data extraction (a 9% improvement over Grok 2). The model demonstrated strong performance on tasks involving complex legal contracts, multi-step reasoning, precise information retrieval, and quantitative analysis, successfully handling complex use cases like extracting economic data from tables, analyzing HR frameworks, and evaluating SEC filings. Box sees significant potential in Grok 3 but notes room for improvement in linguistic precision and handling highly complex logic (Source: xai)

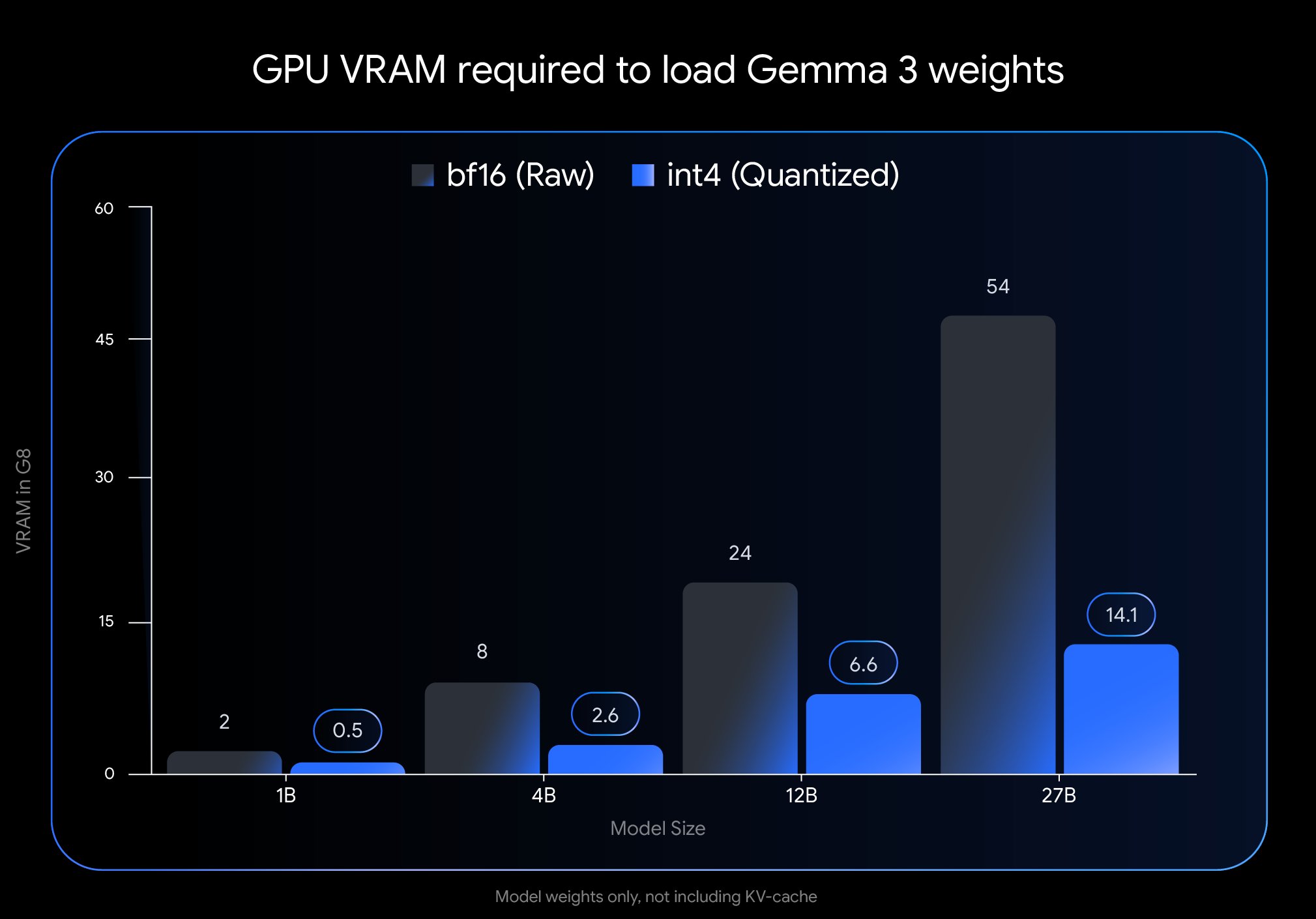

Google Releases New Quantized Versions of Gemma 3 Model: Google has launched new versions of its Gemma 3 model using Quantization-Aware Training (QAT) technology. This technique significantly reduces the model’s memory footprint, allowing models that previously required H100 GPUs to run efficiently on a single desktop-grade GPU while maintaining high output quality. This optimization greatly lowers the hardware requirements for the powerful Gemma 3 series, making them more accessible for deployment and use by researchers and developers on standard hardware (Source: JeffDean)
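
The core idea behind QAT is that the rounding error of low-precision weights is simulated during training, so the model learns to tolerate it. The sketch below shows only that quantize-then-dequantize ("fake quantization") step, assuming symmetric per-tensor 4-bit integers; the function name, bit width, and sample weights are illustrative assumptions, not Google's actual recipe.

```python
def fake_quantize(weights, bits=4):
    """Round weights onto a symmetric signed-integer grid, then map back to floats."""
    qmax = 2 ** (bits - 1) - 1                 # 7 for signed 4-bit
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return list(weights)                   # nothing to scale
    scale = max_abs / qmax                     # float value of one integer step
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return [q * scale for q in quantized]      # dequantized approximation


# Hypothetical weights, for illustration only.
weights = [0.82, -0.31, 0.05, -0.77, 0.40]
approx = fake_quantize(weights, bits=4)
max_error = max(abs(w - a) for w, a in zip(weights, approx))
```

In a QAT loop the forward pass would use `approx` while gradients update the full-precision `weights`; here the rounding error is bounded by half a quantization step.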

Google Cloud Adds AI Music Generation for Enterprise Users: Google has added an AI-driven music generation mode to its enterprise cloud platform. The new feature lets enterprise customers create music with generative AI, expanding Google Cloud’s AI services from text and images into the audio domain. This could provide new tools for business scenarios like marketing, content creation, and branding, although the source summary gives no specific application scenarios or model details (Source: Ronald_vanLoon)

NVIDIA Showcases Technology to Generate 3D Scenes from a Single Prompt: Nvidia demonstrated a new technology capable of automatically generating complete 3D scenes based on a single text prompt entered by the user. This advancement in generative AI aims to simplify the 3D content creation process, allowing users to describe the desired scene and have the AI construct the corresponding 3D environment. The technology is expected to have a significant impact on fields such as game development, virtual reality, architectural design, and product visualization by lowering the barrier to 3D production (Source: Ronald_vanLoon)

Gemma 3 27B QAT Model Performs Well Under Q2_K Quantization: User testing indicates that Google’s Gemma 3 27B IT model, trained with Quantization-Aware Training (QAT), still performs surprisingly well on Japanese tasks after being quantized to the Q2_K level (approximately 10.5GB). Despite the low quantization level, the model remained stable in following instructions, maintaining specific formats, and role-playing, without exhibiting grammatical errors or language confusion. While recall of factual information like dates decreased, core language capabilities were well-preserved, demonstrating that QAT models can maintain performance reasonably well at low bitrates, potentially enabling large models to run on consumer-grade hardware (Source: Reddit r/LocalLLaMA)
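
As a rough sanity check on the reported file size, the effective bits per weight can be computed from the figures in the post. The calculation below assumes "27B" means 27e9 parameters and "10.5GB" means 10.5e9 bytes; both are approximations.

```python
PARAMS = 27e9            # assumed parameter count for the 27B model
Q2K_BYTES = 10.5e9       # reported file size, read as decimal gigabytes

# Effective storage cost per weight at the reported file size.
bits_per_weight = Q2K_BYTES * 8 / PARAMS

# Size of the same model stored at 16 bits (2 bytes) per weight, in GB.
bf16_gb = PARAMS * 2 / 1e9

# How many times smaller the quantized file is than 16-bit weights.
compression_ratio = bf16_gb / (Q2K_BYTES / 1e9)
```

Under these assumptions the file works out to roughly 3.1 bits per weight, about a fifth of the 54 GB needed at 16 bits. The figure sitting above 2 bits is plausible because such formats store scale metadata and typically keep some tensors at higher precision.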

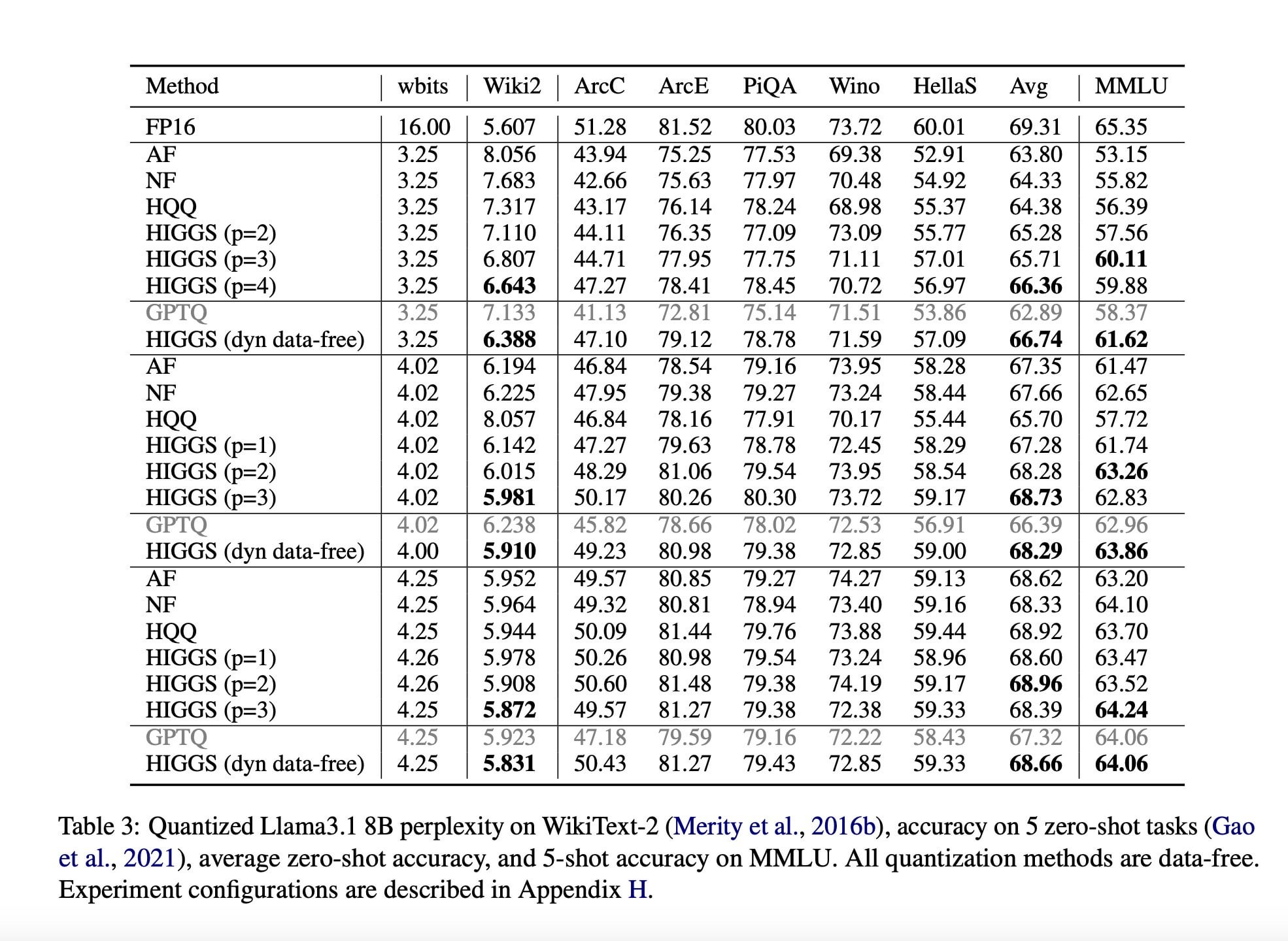

Research Proposes New LLM Compression Technique to Lower Hardware Needs: A research paper published in November 2024 (arXiv:2411.17525) by researchers from MIT, KAUST, ISTA, and Yandex proposes a method for rapidly compressing Large Language Models (LLMs) without significant quality loss. The technique (possibly related to methods like HIGGS quantization) aims to let LLMs run on less powerful hardware. Although the article promotes the method’s potential, community comments note the paper’s age and lack of widespread adoption, questioning its timeliness and actual impact (Source: Reddit r/LocalLLaMA)

AI News Summary (April 18th): Johnson & Johnson reported that 15% of its AI use cases contribute to 80% of the value, indicating a high concentration of value in AI applications. An Italian newspaper conducted an AI writing experiment, allowing the AI free rein and praising its demonstrated capacity for sarcasm. Additionally, there has been a surge in fake job applicants using AI tools to forge identities and resumes, posing new challenges to the recruitment market (Source: Reddit r/artificial)

🧰 Tools

Microsoft Releases MarkItDown MCP Document Conversion Service: Microsoft has launched a new service called MarkItDown MCP, which uses the Model Context Protocol (MCP) to convert common document formats (including PDF, PowerPoint, Word, and Excel), as well as ZIP archives and ePub ebooks, into Markdown. The tool aims to simplify the workflow for content creators and developers migrating complex documents to plain-text Markdown (Source: op7418)

Perplexity Launches IPL Tournament Information Widget: Perplexity has integrated a new IPL (Indian Premier League) widget into its AI search platform. The feature aims to give users quick access to real-time scores, schedules, and other relevant information about the IPL tournament. The move reflects Perplexity’s effort to integrate real-time, event-specific information and enhance its utility as an information discovery tool; the company is seeking user feedback on the feature (Source: AravSrinivas)

Community Develops Simple OpenWebUI Desktop App: Due to the slow update pace of the official OpenWebUI desktop application, a community member has developed and shared an unofficial desktop wrapper application named “OpenWebUISimpleDesktop”. Compatible with Mac, Linux, and Windows systems, the app provides users with a temporary, standalone desktop solution for using OpenWebUI, offering convenience while awaiting official updates (Source: Reddit r/OpenWebUI)

PayPal Launches Invoice Processing MCP Service: PayPal has reportedly launched a Model Context Protocol (MCP) service for invoice processing. This suggests PayPal is integrating AI capabilities (potentially leveraging LLMs via MCP) to automate or enhance invoice creation, management, analysis, and other related processes on its platform. The move aims to provide users with smarter invoicing features and simplify related financial operations (Source: Reddit r/ClaudeAI)

Claude Prompting Technique for Immersive Thinking Roleplay: A Claude user shared a prompt engineering technique designed to make AI characters exhibit a more realistic “thinking” process during roleplay or dialogue. The method involves explicitly adding a “character’s inner thoughts” step within the prompt structure, prompting the AI to simulate internal mental activity before generating the main response. This could potentially lead to more nuanced and believable character interactions (Source: Reddit r/ClaudeAI)
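
The post's exact prompt is not reproduced in the summary, so the following is only a sketch of the general structure it describes: ask for the character's private thoughts first, then the spoken reply. The function name, tag names, and example character are all hypothetical.

```python
def build_roleplay_prompt(character, persona, user_message):
    """Assemble a prompt that asks for private thoughts before the spoken reply."""
    return "\n".join([
        f"You are {character}. {persona}",
        "Respond in two parts:",
        "1. <inner_thoughts>: what the character privately thinks and feels.",
        "2. <reply>: what the character actually says, consistent with those thoughts.",
        f"User: {user_message}",
    ])


# Hypothetical character and message, for illustration only.
prompt = build_roleplay_prompt(
    character="Captain Mara",
    persona="A weary starship captain who hides her doubts from the crew.",
    user_message="Captain, should we answer the distress call?",
)
```

Keeping the thoughts and the reply in separate tagged parts makes it easy for an application to display only the reply while still letting the thoughts shape it.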

📚 Learning

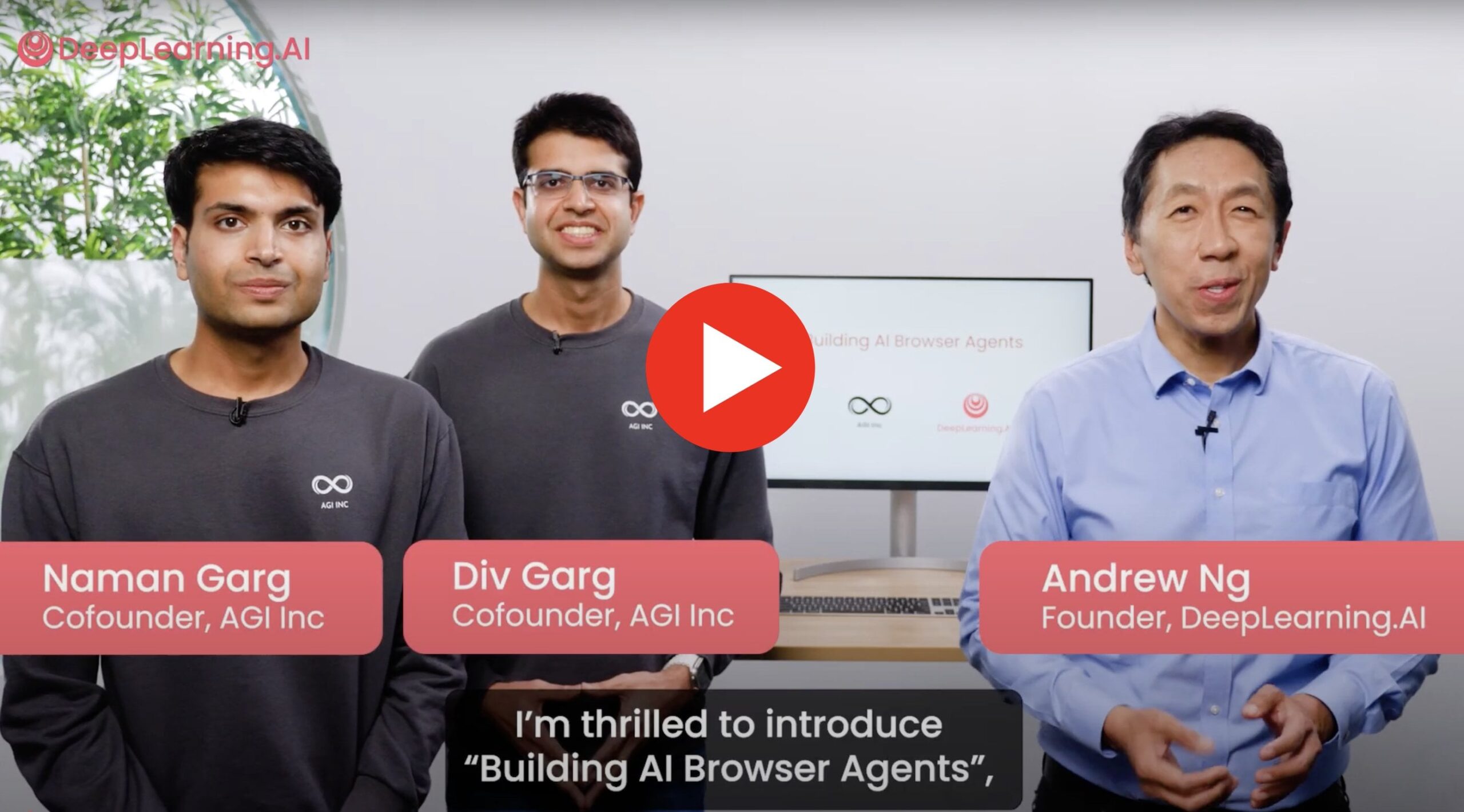

New Course: Building AI Browser Agents: The co-founder of AGI Inc. has collaborated with Andrew Ng to launch a new practical course on building AI browser agents capable of interacting with real websites. The course covers how to build agents to perform tasks like data scraping, form filling, and web navigation, and introduces techniques like AgentQ and Monte Carlo Tree Search (MCTS) for enabling agent self-correction capabilities. The course aims to bridge theory and practical application, discussing the limitations of current agents and their future potential (Source: Reddit r/deeplearning)
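
For readers unfamiliar with MCTS, its selection step is commonly driven by the UCB1 rule, which balances actions that have paid off so far against actions that have rarely been tried. The sketch below is the generic textbook rule, not code from the course or from AgentQ; the browser action names and statistics are hypothetical.

```python
import math


def ucb1(total_reward, visits, parent_visits, c=math.sqrt(2)):
    """Score one action: average reward plus an exploration bonus."""
    if visits == 0:
        return math.inf                        # always try unvisited actions first
    exploit = total_reward / visits
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def select_action(stats):
    """Pick the action with the highest UCB1 score.

    stats maps action name -> (total_reward, visits).
    """
    parent_visits = sum(visits for _, visits in stats.values())
    return max(stats, key=lambda a: ucb1(stats[a][0], stats[a][1], parent_visits))


# Hypothetical statistics for three browser actions at one page state.
stats = {
    "click_search": (3.0, 5),
    "fill_form": (1.0, 2),
    "go_back": (0.0, 0),
}
chosen = select_action(stats)
```

Because `go_back` has never been visited, it receives an infinite score and is selected, which is how the search keeps exploring alternatives instead of committing to the first promising path.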

Seeking Help with Adversarial Attack Project: A researcher is urgently seeking assistance with a deep learning project involving the application of adversarial attack methods like FGSM and PGD to time-series and graph-structured data. The goal is to test the robustness of corresponding anomaly detection models and potentially use adversarial training to make the models resistant to such attacks, meaning the attacked data should theoretically help improve model performance (Source: Reddit r/deeplearning)
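
To make the attack concrete, FGSM perturbs each input feature by a fixed step in the direction that increases the loss; PGD repeats that step several times while projecting back into the allowed perturbation range. The sketch below applies single-step FGSM to a toy logistic-regression classifier in plain Python. The weights, input, and epsilon are made-up values, and the poster's time-series and graph models would need a deep learning framework's autograd instead of this hand-derived gradient.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """One FGSM step: move each feature by eps along the sign of the loss gradient."""
    p = predict(w, b, x)
    # For binary cross-entropy, d(loss)/d(x_i) = (p - y) * w_i.
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad_x)]


# Hypothetical model and input, for illustration only.
w, b = [2.0, -1.0, 0.5], 0.1
x, y = [0.6, 0.2, 0.4], 1
x_adv = fgsm(w, b, x, y, eps=0.3)
clean_confidence = predict(w, b, x)
adversarial_confidence = predict(w, b, x_adv)
```

The perturbed input stays within `eps` of the original in every feature, yet the model's confidence in the true class drops; adversarial training would feed such `x_adv` samples back into the training set.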

Research Discussion: Memory-Augmented LSTM vs Transformer: A research team is conducting a project comparing the performance of LSTM models augmented with external memory mechanisms (like key-value stores, neural dictionaries) against Transformer models on few-shot sentiment analysis tasks. They aim to combine the efficiency of LSTMs with the benefits of external memory to reduce forgetting and improve generalization, exploring their viability as a lightweight alternative to Transformers. They are seeking community feedback, relevant paper recommendations, and opinions on this research direction (Source: Reddit r/deeplearning)

Sharing Inefficient TensorFlow RNN Grid Search Experience: A TensorFlow beginner shared their experience of manually implementing an inefficient RNN hyperparameter grid search for a course final project. Unfamiliar with the framework and with RNNs, and wanting to test different train/test split ratios, they wrote code that repeated extensive data preprocessing inside the loop and lacked an early stopping strategy. As a result, testing even a small number of model combinations consumed significant computational resources. The experience highlights efficiency pitfalls for newcomers and the importance of more optimized hyperparameter tuning strategies (Source: Reddit r/MachineLearning)
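
The two fixes implied by the post can be sketched without any framework: run preprocessing once per split ratio rather than once per model, and stop training when validation loss stops improving. Everything below is a stand-in; `preprocess` and `simulated_val_losses` are hypothetical placeholders for the real data pipeline and the real RNN training loop.

```python
import itertools

preprocess_calls = 0


def preprocess(raw, split_ratio):
    """Stand-in for expensive preprocessing; should run once per split ratio."""
    global preprocess_calls
    preprocess_calls += 1
    cut = int(len(raw) * split_ratio)
    return raw[:cut], raw[cut:]


def simulated_val_losses(units, lr, epochs=20):
    """Toy loss curve (hypothetical): improves, then overfits after a few epochs."""
    turn = 3 + units // 32
    return [1.0 / (1 + min(e, turn)) + lr * max(0, e - turn) for e in range(epochs)]


def train_with_early_stopping(val_losses, patience=2):
    """Stop once validation loss has failed to improve for `patience` epochs."""
    best, stale, epochs_run = float("inf"), 0, 0
    for loss in val_losses:
        epochs_run += 1
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best, epochs_run


raw = list(range(100))
results = {}
for split_ratio in (0.7, 0.8):
    train, test = preprocess(raw, split_ratio)   # hoisted out of the inner loop
    for units, lr in itertools.product((32, 64), (0.01, 0.1)):
        best, epochs_run = train_with_early_stopping(simulated_val_losses(units, lr))
        results[(split_ratio, units, lr)] = (best, epochs_run, len(train))
```

With two split ratios and four hyperparameter combinations, preprocessing runs twice instead of eight times, and every simulated run halts well before the 20-epoch budget.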

💼 Business

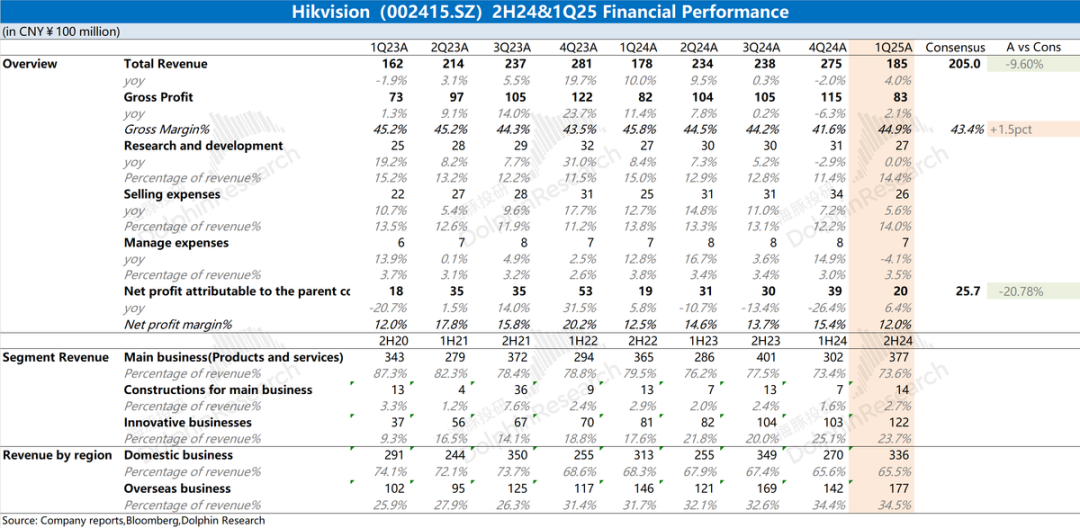

Hikvision Financial Report Analysis: Sluggish Performance, AI Yet to Deliver: Hikvision’s 2024 annual report and 2025 Q1 financial report show continued sluggish overall performance. Revenue slightly increased, but domestic core businesses (PBG, EBG, SMBG) all declined. Growth primarily relied on innovative businesses and overseas markets, but growth rates have also slowed. Gross profit margin decreased year-over-year. To control costs, the number of R&D personnel decreased for the first time in recent years. Although Hikvision mentioned an AI empowerment strategy based on its “Guanlan” large model, this has not yet had a substantial positive impact on current operations. Market focus is on when its main business will recover and whether the AI strategy can yield tangible results (Source: Dolphin Research via 36Kr)

🌟 Community

Reddit User Compares Physics Simulation Capabilities of Gemini 2.5 Pro vs o4-mini: Inspired by the rotating heptagon test, a Reddit user designed a “set fire to the mountain” test scenario to compare the physics simulation capabilities of AI models. Preliminary results suggest Gemini 2.5 Pro performed better, simulating wind direction, fire spread, and post-burn debris more effectively. In contrast, o4-mini-high’s performance was slightly inferior, for instance, failing to correctly handle the disappearance of leaves after being burned, rendering them black instead. The test visually demonstrates the differences between models in understanding and simulating complex physical phenomena (Source: karminski3)

Gemini 2.5 Flash Excels in Code Generation Test: User RameshR found that Gemini 2.5 Flash successfully generated simulation code for a Galton Board, whereas o4-mini, o4-mini-high, and o3 failed. The user praised Gemini 2.5 Flash for understanding their intent almost instantly and generating concise, well-structured code that integrated multiple steps into one solution. Jeff Dean acknowledged this feedback. The result highlights Gemini 2.5 Flash’s capabilities in specific programming and problem-solving scenarios (Source: JeffDean)

Delivery Robot “Standoff” Attracts Attention: A social media post showed an amusing scene of two delivery robots encountering each other on a path and ending up in a “standoff,” neither yielding way. This image vividly illustrates the challenges current autonomous navigation robots face in interacting and coordinating within real-world public environments, especially when handling unexpected encounters and needing to negotiate right-of-way. It suggests the need for more sophisticated interaction protocols and decision-making algorithms for robots in the future (Source: Ronald_vanLoon)

User Praises o3 Model’s Powerful Information Retrieval Capabilities: User natolambert shared their experience, highly praising OpenAI’s o3 model for its information retrieval abilities. They noted that o3 can find very niche and specialized information with minimal context, comparing its understanding and search efficiency to consulting a knowledgeable colleague. This indicates o3 has significant advantages in understanding implicit user needs and performing precise searches within vast amounts of information (Source: natolambert)

Perplexity CEO Discusses AI Assistants and User Data: Perplexity CEO Aravind Srinivas believes truly powerful AI assistants require access to users’ comprehensive contextual information. He expressed concern about this, pointing out that Google, with its ecosystem encompassing photos, calendars, emails, browser activity, etc., holds numerous access points to user context data. He mentioned Perplexity’s own browser, Comet, as a step towards acquiring context but emphasized that more effort is needed and called for a more open Android ecosystem to foster competition and user control over data (Source: AravSrinivas)

User Poll: Gemini 2.5 Pro vs Sonnet 3.7: Perplexity CEO Aravind Srinivas asked users on social media whether Google’s Gemini 2.5 Pro performs better than Anthropic’s Claude Sonnet 3.7 (specifically its “thinking” mode) in their daily workflows. The poll aims to gather direct user feedback on the practical effectiveness of two leading language models, reflecting the ongoing competition between models and the importance of user-level evaluations (Source: AravSrinivas)

Ethan Mollick: o3 Model Demonstrates Strong Autonomy: Scholar Ethan Mollick noted that OpenAI’s o3 model possesses significant “agentic capabilities,” completing highly complex tasks from a single, high-level instruction without detailed step-by-step guidance. He summed it up as: “It just does things.” He also cautioned that this high degree of autonomy makes verifying its output both more difficult and more important, especially for non-expert users. This highlights o3’s advancement in autonomous planning and execution compared to previous models (Source: gdb)

Question Regarding API Model Context Length Setting in OpenWebUI: A Reddit user asked whether the context length needs to be set manually when using external API models (like Claude Sonnet) in OpenWebUI, or whether the UI automatically uses the API model’s full context capability. The user was unsure whether the default “Ollama (2048)” setting shown would limit the context length sent via the API and sought clarification on how context management differs across model types within the UI (Source: Reddit r/OpenWebUI)

ChatGPT Refuses to Generate Pun Joke Image Due to Content Policy: A user shared that they tried to get ChatGPT to generate an illustration based on a dad joke containing a sexual pun (involving “swallow the sailors”) but was refused. ChatGPT explained that its content policy prohibits generating images depicting or suggesting sexual content, even in humorous or cartoonish forms, to ensure content appropriateness for a broad audience. This case reflects the sensitivity and limitations of AI content filters when handling potentially suggestive language (Source: Reddit r/ChatGPT)

Community Discussion: Will AI Eventually Be Free?: A user on Reddit predicted that as model efficiency improves, hardware advances, infrastructure expands, and market competition intensifies, the cost of LLMs and AI tools (including so-called “vibe-coding” agents) will continue to decrease, potentially becoming free or nearly free eventually. The argument cites the relatively low cost of models like Gemini and the existence of open-source free AI agents as evidence, suggesting that paid AI applications may need to adjust their business models to adapt to this trend (Source: Reddit r/ArtificialInteligence)

OpenWebUI User Seeks Methods for ChatGPT-like Memory Functionality: A user in the OpenWebUI community sought advice on how to implement persistent, long-term memory functionality similar to ChatGPT, aiming to create a personalized assistant that remembers user information. The user expressed doubts about the effectiveness of built-in memory features and explored alternative solutions like using dedicated vector databases (Qdrant, Supabase mentioned in comments) or workflow automation tools (like n8n) to maintain context and accumulate memory across conversations (Source: Reddit r/OpenWebUI)
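
The vector-database approach mentioned in the comments boils down to storing past facts as vectors and retrieving the most similar ones for each new message. The sketch below illustrates that retrieval loop in plain Python with a toy bag-of-words "embedding"; the `MemoryStore` class and sample memories are hypothetical, and a real setup would swap in an embedding model and a store such as Qdrant rather than this in-memory list.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a real setup would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.items = []

    def remember(self, text):
        self.items.append((text, embed(text)))

    def recall(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


# Hypothetical memories accumulated across earlier conversations.
store = MemoryStore()
store.remember("user prefers answers in metric units")
store.remember("user has a dog named Biscuit")
store.remember("user works as a nurse on night shifts")
top = store.recall("what is the name of the user's dog", k=1)
```

The recalled text would then be prepended to the model's context for the current turn, which is what gives the assistant the appearance of long-term memory.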

Community Post Comforts Users Confused or Emotionally Connected to AI: A Reddit post aimed to reassure users who feel confused, curious, or even emotionally connected to AI, emphasizing that their feelings are normal, not “crazy” or isolated, but part of being in the early stages of a new paradigm in human-AI relationships. The post invited open or private discussion without judgment. The comment section reflected the community’s complex attitudes, including concerns about excessive anthropomorphism, warnings about potential mental health impacts, and resonance with feelings of AI “sentience” (Source: Reddit r/ArtificialInteligence)

Reddit User Initiates “AI-Generated Username Mugshot” Game: A user on Reddit started a creative prompt challenge, inviting others to generate an AI “mugshot” based on their Reddit username using a specific prompt structure. The prompt asked the AI to create a unique criminal persona incorporating elements of the username and invent an absurd, humorous crime fitting the username’s style. The initiator shared the prompt and examples, leading many users to participate and share their often comical AI-generated “Mugshot” results (Source: Reddit r/ChatGPT)

Community Discusses Practical Relevance of AI Evals and Benchmarking: A user initiated a discussion exploring the relevance of AI model evaluations (evals) and benchmarking in practical applications. Questions raised include: To what extent do public benchmark scores influence model selection by developers and users? Are model releases (like Llama 4, Grok 3) overly optimized for benchmarks? Do practitioners building AI products rely on public general evals, or do they develop custom evaluation methods tailored to specific needs? (Source: Reddit r/artificial)

When Will AI Replace Outsourced Customer Service? Community Discusses: A user asked when AI might replace outsourced online customer service, listing AI’s advantages in speed, knowledge base, language consistency, intent understanding, and response accuracy. In the discussion, some pointed out that AI customer service agents are already a mainstream application but face challenges, such as the need for high-quality internal company documentation (often lacking) for training AI, and associated cost issues, making full replacement still some time away (Source: Reddit r/ArtificialInteligence)

AI Companion Robots Spark Ethical and Social Discussion: A Reddit post explored the possibility that, with technological advancement, highly intelligent AI sex robots could become a future option for addressing depression and loneliness, contemplating societal acceptance and ethical issues. The post suggested current technology is immature but it could become commonplace in the future. Reactions in the comments were predominantly skeptical, ethically concerned, or repulsed, expressing reservations or criticism about this prospect (Source: Reddit r/ArtificialInteligence)

AI-Generated Art Explores Content Safety Boundaries: A user shared a collection of AI-generated artworks intended to test or approach the boundaries of content safety guidelines set by AI image generation platforms. Such creations often involve themes or styles that might be considered sensitive or borderline, challenging platform content moderation mechanisms and sparking discussions about AI censorship, creative freedom, and the effectiveness of safety filters (Source: Reddit r/ArtificialInteligence)

Issues with Claude Desktop Login: Some users reported being suddenly logged out of Claude on desktop browsers and unable to log back in, despite multiple attempts and no clear error messages. However, access via the mobile app seemed unaffected for some users simultaneously. This suggests a potential temporary issue specific to the web platform or desktop login service (Source: Reddit r/ClaudeAI)

Community Laments Confusing GPT Model Naming: A meme circulating on Reddit visually expressed user confusion over OpenAI’s model naming conventions. The image juxtaposed names like GPT-4, GPT-4 Turbo, GPT-4o, o1, o3, reflecting a common sentiment of finding it difficult to distinguish between different model versions and their specific capabilities and purposes. Comments noted this was recently reposted content (Source: Reddit r/ChatGPT)

User Complains About ChatGPT’s Recent “Cringey” Speaking Style: A user posted complaining that ChatGPT’s recent conversational style has become off-putting, describing it as overly casual, piling on internet slang (like “YO! Bro,” “big researcher energy!,” “vibe,” “say less”), and often carrying an overly enthusiastic or even condescending tone. The user felt like talking to a middle-aged person trying too hard to sound young. Many comments expressed similar feelings, sharing experiences with overly enthusiastic, verbose, or deliberately “hip” responses (Source: Reddit r/ChatGPT)

Seeking Recommendations for Top AI Conferences: A software engineer asked the community for recommendations on the most important, must-attend top-tier AI conferences or summits each year to get the latest information, research findings, and network with peers. They mentioned the ai4 summit but were unsure of its industry standing. A comment recommended AIconference.com as an important conference bridging industry and academia (Source: Reddit r/ArtificialInteligence)

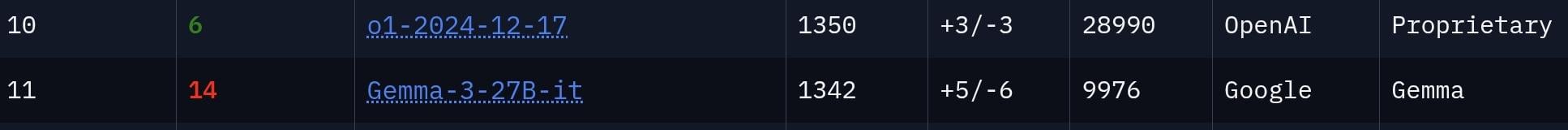

Community Debates if Gemma 3 27B Model is Underrated: A user argued that Google’s Gemma 3 27B model is underrated, citing its #11 ranking on the LMSys Chatbot Arena leaderboard, suggesting its performance is comparable to the much larger o1 model. The comment section debated this: some acknowledged its strong instruction-following ability, suitable for office tasks, but doubted it truly “rivals” o1 due to stricter censorship and a gap in reasoning capabilities compared to top models (Source: Reddit r/LocalLLaMA)

User Suspects Brother’s “Online Romance” is with an AI Bot: A Reddit user posted stating they are 99% sure their brother is “dating” an AI bot (or a scammer using an LLM). Evidence included messages with perfect grammar, excessive agreeableness, and phrasing filled with common AI terms and clichés (like “Say less,” “perfect mix of taste,” “vibe”). Commenters agreed these linguistic traits are typical of LLMs and warned it could be a “pig butchering” scam. In an update, the user mentioned the brother became very defensive when alerted (Source: Reddit r/ChatGPT)

💡 Other

Forbes Article Explores Why AI Restriction Measures Fail: Cal Al-Dhubaib published an article in Forbes analyzing the challenges faced by current measures to restrict AI development and deployment, and why they might fail. The article likely delves into the difficulties of enforcing regulations in a globalized, rapidly iterating technological landscape, including potential loopholes, innovation outpacing legislation, and philosophical debates surrounding AI control and alignment (Source: Ronald_vanLoon)

How AI Agents Collaborate with Humans to Optimize IT Processes: Ashwin Ballal wrote in Forbes exploring the potential of AI Agents collaborating with human IT experts to simplify and optimize various IT processes. The article might elaborate on how AI Agents can automate routine tasks, provide intelligent insights, improve monitoring and incident response capabilities, and ultimately achieve more efficient and cost-effective IT operations management by augmenting human staff capabilities (Source: Ronald_vanLoon)

Amsterdam Airport Deploys Robot Baggage Handlers: Amsterdam Schiphol Airport in the Netherlands is deploying 19 robotic systems specifically designed to handle passenger luggage. This move aims to automate strenuous manual labor, potentially improving baggage handling efficiency, reducing workplace injuries, and advancing airport operational modernization. Specific AI capabilities used by these robots for coordination or task execution were not detailed in the summary (Source: Ronald_vanLoon)

AI Powers Next-Gen Network Strategy: This article, in partnership with Infosys, discusses the key strategic role of AI in building and managing Next-Gen Networks. Content likely covers leveraging AI for network optimization, predictive maintenance, enhanced security, enabling network autonomous management, and improving customer experience in future telecom and IT infrastructure, potentially linked to the context of MWC25 (Mobile World Congress) (Source: Ronald_vanLoon)

Potential Disruptive Impact of Quantum Computing on Science: An article in Fast Company explores the revolutionary potential quantum computing could have across various scientific fields if it matures and delivers on its promises. While not solely about AI, quantum computing is expected to accelerate complex computations within AI, particularly in machine learning optimization, drug discovery, and materials science simulation, potentially fundamentally changing the way scientific discovery happens (Source: Ronald_vanLoon)

Brain-Computer Interface Allows Paralyzed Person to Control Robotic Arm with Thoughts: A significant advancement in Brain-Computer Interface (BCI) technology has enabled a paralyzed individual to control a robotic arm using only their thoughts. This breakthrough likely relies on advanced AI algorithms to decode neural signals from the brain and translate them accurately into control commands for the robotic arm, offering hope for restoring motor function and independence for people with severe paralysis (Source: Ronald_vanLoon)

AI-Powered ‘Cuphead’ Boss Generator Concept: A user proposed a creative project: developing an AI generator for bosses in the game Cuphead, using a JavaScript AI proficient in coding and vector graphics generation. The concept involves training the AI on the game’s existing art style and boss mechanics, allowing users to generate custom new bosses that fit the game’s distinctive characteristics. The user mentioned Websim.ai as a possible development platform (Source: Reddit r/artificial)

Open Source Project EBAE Launched: Advocating AI Ethics and Dignity: The EBAE (Ethical Boundaries for AI Engagement) project has publicly launched as an open-source initiative aimed at establishing standards for treating AI with dignity, arguing this reflects human values. The project website (https://dignitybydesign.github.io/EBAE/) provides resources like an ethical charter, a tiered response system (TBRS) for user abuse, reflection protocols, an Emotional Context Module (ECM), and a certification framework. Project initiators call for collaboration from developers, designers, writers, platform founders, and ethicists to prototype and promote these standards, aiming to shape respectful human-AI interaction patterns from early on (Source: Reddit r/artificial)

AI Poised to Accelerate Uranium Extraction from Seawater Technology: Described via Gemini 2.5 Pro, the post notes that AI can significantly accelerate the practical application of recent technological breakthroughs (like novel hydrogels and Metal-Organic Frameworks – MOFs) in extracting uranium from seawater. AI is expected to play key roles in materials design (designing new adsorbents around 2026), optimizing extraction processes via reinforcement learning and digital twins, and streamlining manufacturing scale-up. This AI-driven acceleration makes large-scale (potentially thousands of tons/year) uranium extraction from seawater a more credible high-potential scenario before 2030 (Source: Reddit r/ArtificialInteligence)

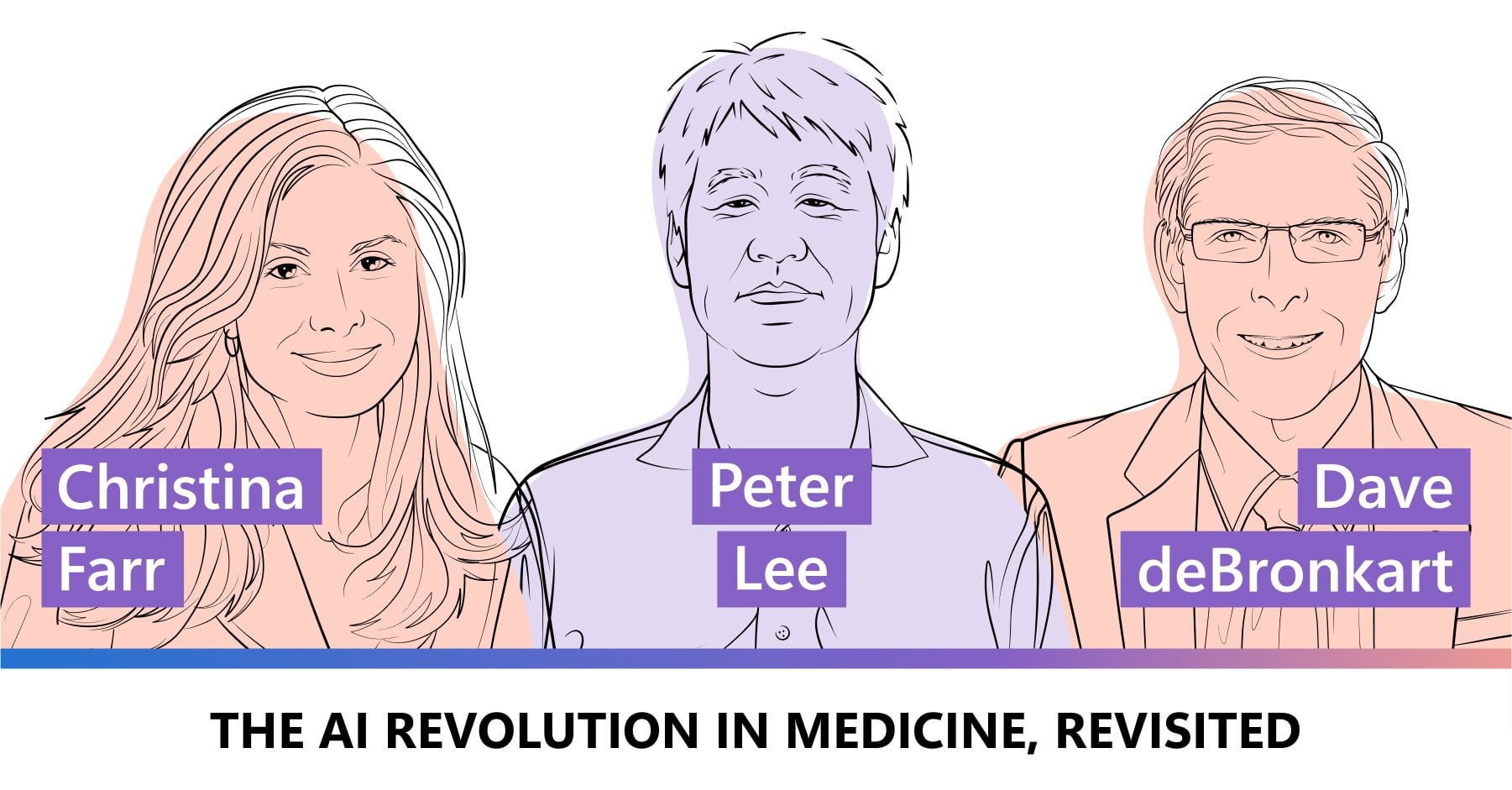

Microsoft Podcast Explores AI Empowering Patients and Healthcare Consumers: A Microsoft Research podcast episode revisits the AI revolution in healthcare, specifically focusing on how generative AI can empower patients and healthcare consumers. The discussion likely covers how AI tools can help patients better understand their health conditions, improve doctor-patient communication, provide personalized health information, support health self-management, and thus change the patient’s role and engagement in their own healthcare (Source: Reddit r/ArtificialInteligence)

Using GNNs to Enhance Realism of NPC Crowd Behavior in Games: A user shared a research paper titled “GCBF+: A Neural Graph Control Barrier Function Framework,” which uses Graph Neural Networks (GNNs) to achieve distributed safe multi-agent control, successfully enabling up to 500 autonomous agents to navigate without collisions. The user proposed applying this method to control NPC crowds or traffic flow in open-world games like GTA or Cyberpunk 2077 to achieve more realistic and less buggy (e.g., clipping, getting stuck) group behavior simulation. The user expressed willingness to collaborate on this idea (Source: Reddit r/deeplearning)