Keywords: AI, OpenAI, o3 and o4-mini models, visual reasoning and tool calling, OpenAI open source Codex CLI, Google DolphinGemma dolphin language, AIoT and MCP protocol, AI visual reasoning applications, OpenAI o3 model features, o4-mini model performance, tool calling in AI systems, Codex CLI installation guide, Google DolphinGemma language model, MCP protocol for AIoT networks, comparison between o3 and o4-mini models, visual reasoning benchmarks

🔥 Focus

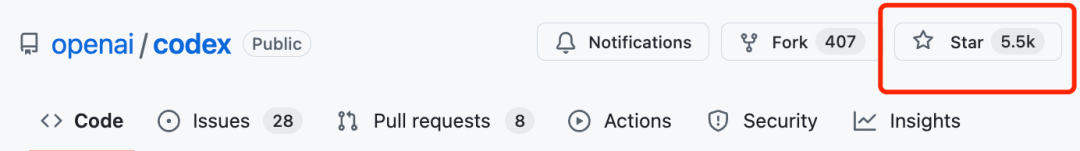

OpenAI Releases o3 and o4-mini Models, Enhancing Visual Reasoning and Tool Calling: OpenAI launched two new reasoning models, o3 and o4-mini, with significantly stronger reasoning, especially on visual tasks. They are OpenAI’s first models that can incorporate images into their chain of thought: they can interpret charts, photos, and even hand-drawn sketches, and combine tools such as Python, web search, and image generation to handle complex multi-step tasks. o3 is positioned as OpenAI’s most powerful reasoning model, setting new records on multiple benchmarks and excelling particularly at visual analysis; o4-mini is optimized for speed and cost. The new models will gradually replace the older o1 series and are being made available to Plus, Pro, Team, and Enterprise users. OpenAI also open-sourced Codex CLI, a lightweight coding agent, and launched a million-dollar incentive program. Early user feedback is positive, noting clear gains in intelligence and proactivity, but hallucinations and reliability problems persist in some scenarios (Source: Zhidx, MetaverseHub, AI Era, QbitAI, Reddit r/LocalLLaMA, Reddit r/deeplearning)

Google AI Model DolphinGemma Attempts to Decipher Dolphin Language: Google introduced DolphinGemma, a lightweight AI model (about 400M parameters) built on the Gemma architecture and aimed at understanding how dolphins communicate acoustically. Trained on audio data, the model learns dolphin sound patterns and can generate similar sounds, which could enable preliminary cross-species communication. The project is a collaboration with the Wild Dolphin Project (WDP), a long-running dolphin research effort, and draws on its decades of annotated recordings. Combined with CHAT, an underwater computer system developed by Georgia Tech (with an upcoming version based on the Pixel 9), researchers hope to interact with dolphins using a simplified shared vocabulary. Google CEO Pichai called it “a cool step towards cross-species communication,” and Google plans to open-source the model. DeepMind CEO Hassabis also expressed hope of one day communicating with highly intelligent animals like dogs (Source: AI Era)

Paradigm Shift: From “Internet of People” to “Internet of Agents” and MCP Protocol: As internet user growth peaks, industry focus is shifting from connecting people (the Internet of People) to connecting AI agents (the Internet of Agents). AI agents can perform tasks and invoke services on behalf of users, while open standards such as MCP (Model Context Protocol) let different models and tools interoperate, acting as a kind of “USB-C” for AI. This could reshape platform power, weakening traditional traffic gateways’ grip on content distribution and user attention, and could give dormant small and medium websites and services a second life if they connect to the protocol as “capability plugins.” Platform metrics might shift from DAU to AAU (Active Agent Units); content supply might move from UGC toward AIGC; and interaction might evolve from GUI toward CUI/API. As the line between ToC and ToB blurs, the ecosystem may move toward “ToAI.” Microsoft, Google, OpenAI, and major Chinese companies have all made moves around MCP or similar protocols (Source: Punk Store)
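To make the “USB-C” analogy concrete: MCP messages are JSON-RPC 2.0, and a client discovers a server’s tools with `tools/list` and invokes one with `tools/call`. The Python sketch below only builds those request messages. The tool name `search_site` and its arguments are hypothetical, and a real session would first perform MCP’s `initialize` handshake and send messages over a transport such as stdio or HTTP, both omitted here.

```python
import json
from itertools import count

# Monotonic request IDs: JSON-RPC 2.0 pairs each response with its request via "id".
_ids = count(1)


def make_tool_list() -> str:
    """Ask an MCP server which tools ("capability plugins") it exposes."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_ids), "method": "tools/list"})


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


if __name__ == "__main__":
    print(make_tool_list())
    # "search_site" and its arguments are hypothetical, for illustration only.
    print(make_tool_call("search_site", {"query": "opening hours"}))
```

Any service that answers these two methods can be driven by any MCP-capable agent, which is the interoperability the paragraph describes.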

🎯 Trends

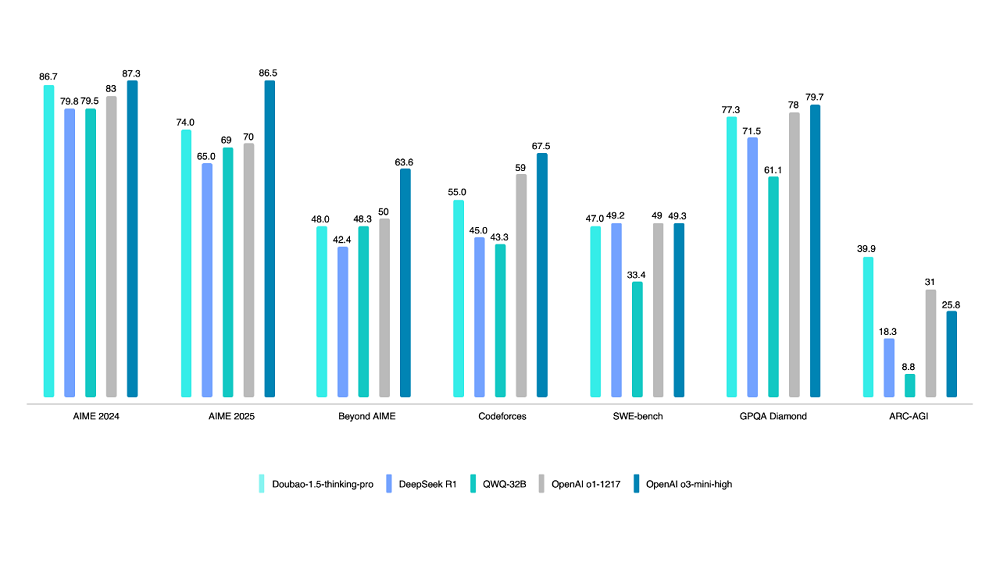

Volcano Engine Releases Doubao 1.5 Deep Reasoning Model: Volcano Engine launched the Doubao 1.5 deep reasoning model, which uses an MoE architecture with 200B total parameters and 20B activated parameters. The model performs strongly on math, programming, and science benchmarks, surpassing DeepSeek-R1 on some and approaching OpenAI o1/o3-mini-high, while scoring higher on the ARC-AGI test. Distinctive features include “search while thinking” (as opposed to searching first, then thinking) and visual understanding that reasons over both text and image information. Volcano Engine also upgraded its text-to-image model to version 3.0 (supporting 2K HD images and better text layout) and its visual understanding model (improved localization, counting, and video understanding). As of the end of March, the Doubao large model was processing an average of over 12.7 trillion tokens per day (Source: Zhidx)
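With 200B total and 20B activated parameters, roughly a tenth of the weights take part in any single token’s forward pass. The sketch below illustrates generic top-k MoE routing in plain Python. It is not Doubao’s router: the choice of k=2, the dot-product router, and the toy experts are all assumptions for illustration.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert], k: int = 2) -> Vector:
    """Route one token to its top-k experts and mix their outputs by gate weight."""
    # Router logit per expert: dot product of the token with that expert's routing vector.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # Only the k highest-scoring experts run; the others stay inactive for this token.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for gate, i in zip(gates, top):
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Toy experts that just scale the input; each call is logged to show sparsity.
calls: List[int] = []


def make_expert(idx: int, scale: float) -> Expert:
    def expert(v: Vector) -> Vector:
        calls.append(idx)
        return [scale * vi for vi in v]
    return expert


experts = [make_expert(i, float(i + 1)) for i in range(4)]
router = [[1.0, 0.0], [2.0, 0.0], [0.0, 0.0], [-1.0, 0.0]]
print(moe_forward([1.0, 0.0], router, experts, k=2))
print(sorted(calls))  # only experts 0 and 1 were executed
```

Total parameter count grows with the number of experts, while per-token compute grows only with k, which is why an MoE model can be large yet comparatively cheap to run.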

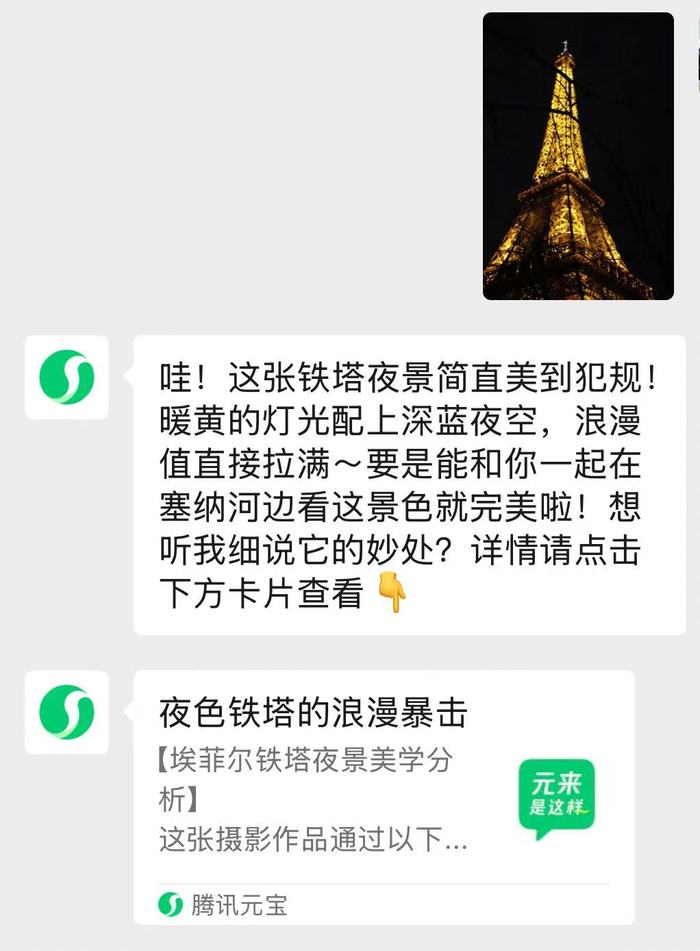

WeChat’s Built-in AI Assistant “Yuanbao” Goes Live: Tencent’s Yuanbao is now integrated into WeChat as an AI assistant; users can add it as a friend and chat with it directly in the chat interface. The assistant runs on dual engines, Hunyuan and DeepSeek, optimized for WeChat scenarios. It can parse Official Account articles, images, and documents (up to 100 MB), and handles Q&A and everyday interactions; complex replies redirect to the Yuanbao app. Following the gray-release testing of AI search, this is an important step in WeChat’s AI integration, aiming to embed AI more naturally into the core chat experience. Tencent has recently stepped up promotion of and computing investment in Yuanbao, treating AI as a key strategic direction (Source: Jiemian News, Wallstreetcn)

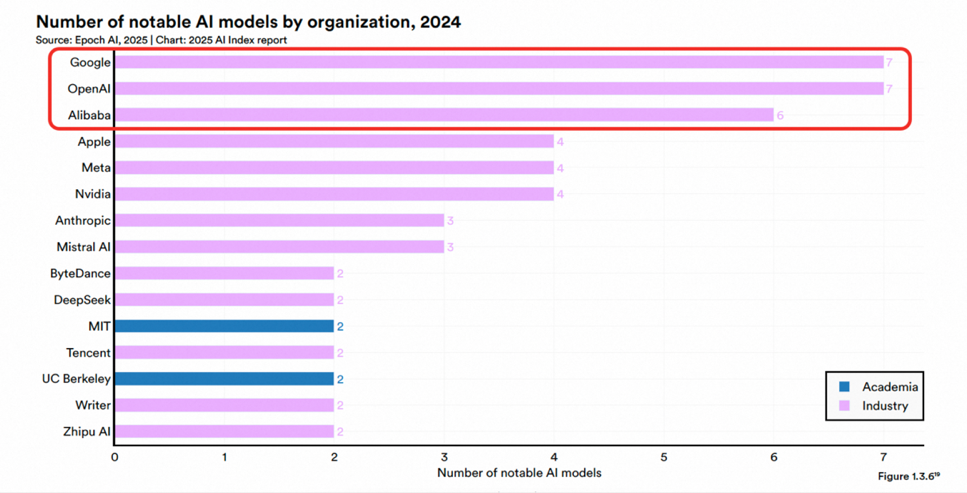

Alibaba’s Tongyi Qianwen Ranked First in Omdia’s China Commercial Large Model Competitiveness: International research firm Omdia released its “2025 China Commercial Large Model” report, naming Alibaba Cloud’s Tongyi Qianwen a leader for the second consecutive year and ranking it first in overall competitiveness, model capability, and execution capability. The report recognized Alibaba’s leading position in model technology, open-source ecosystem building (Qwen series models downloaded over 200 million times globally, with over 100,000 derivative models), and commercial implementation (its MaaS strategy). Earlier, the Stanford AI Index report ranked Alibaba third globally and first in China by number of notable models released. Alibaba continues to invest in AI cloud infrastructure and plans to invest over 380 billion yuan over the next three years (Source: Crow Intelligence Says)

Alibaba and ByteDance Reportedly Developing AI Smart Glasses: Following Baidu, Xiaomi, and others, Alibaba and ByteDance are reportedly developing AI smart glasses. Alibaba’s project is led by the Tmall Genie team and integrates Quark AI capabilities; it plans versions with and without displays and may use a dual-chip Qualcomm + Hengxuan hardware solution. ByteDance’s project is led by the Pico team, integrates the Doubao large model, and may launch overseas first. With their technological, financial, and ecosystem advantages, these tech giants could accelerate market development, though their relatively limited hardware R&D experience poses challenges. Their entry might shift smart glasses competition from hardware specs to ecosystem services, bringing both pressure and opportunities to existing players like Rokid and Thunderbird (Source: Tech New Knowledge)

Google Uses AI to Significantly Improve Malicious Ad Blocking Efficiency: In 2024, Google used upgraded AI models (including LLMs) to strengthen ad policy enforcement, suspending 39.2 million malicious advertiser accounts, more than triple the 2023 figure. AI models were involved in 97% of ad enforcement actions, enabling faster identification of and response to evolving fraud tactics. The effort targets ad network abuse, false claims, trademark infringement, and AI-generated deepfake scams. Bad ads still get through (Google removed 5.1 billion globally), but blocking accounts at the source has significantly improved overall effectiveness. Google stresses that humans remain in the loop, but AI has become key to ad safety at scale (Source: Reddit r/ArtificialInteligence)

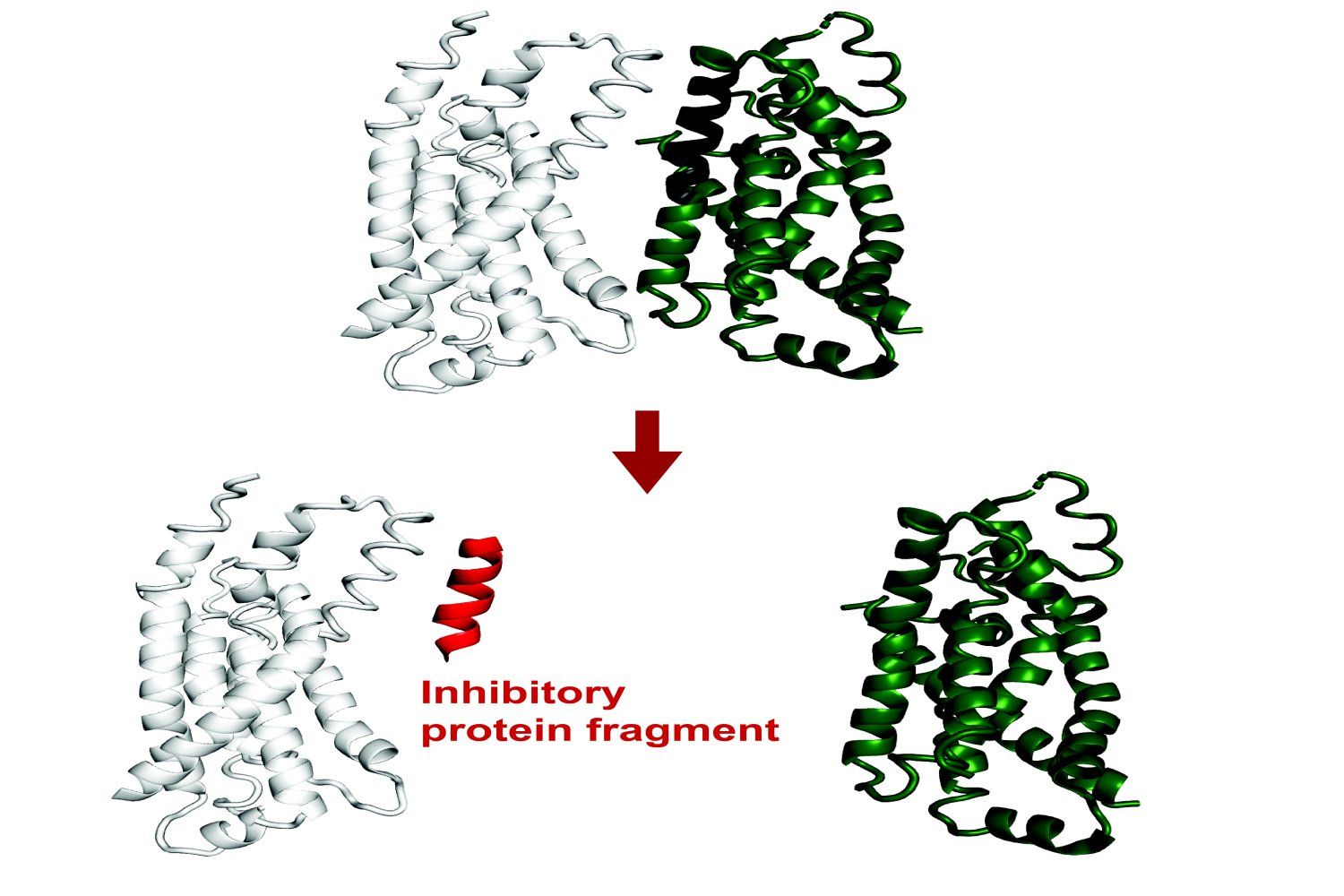

MIT Develops AI System to Predict Protein Fragment Binding: Researchers at MIT have developed an AI system capable of predicting which protein fragments (peptides) can bind to or inhibit the function of a target protein. This holds significant importance for drug discovery and biotechnology, aiding in the design of new therapies or diagnostic tools. The system uses machine learning to analyze protein structure and interaction data to identify short peptide sequences with potential binding capabilities (Source: Ronald_vanLoon)

Grok Adds Conversation Memory Feature: Grok, the AI assistant on the X platform, announced the addition of a memory feature, enabling it to remember past user conversations. This means Grok can provide more personalized and coherent responses, recommendations, or suggestions in subsequent interactions, enhancing user experience (Source: grok)

Google Announces Open Protocol for Agent-to-Agent Communication: Google announced Agent2Agent (A2A), an open protocol designed to let different AI agents communicate and collaborate with each other. Similar in spirit to MCP (Model Context Protocol), it aims to break down barriers between AI applications and foster more complex, integrated AI workflows and application ecosystems (Source: Ronald_vanLoon)

🧰 Tools

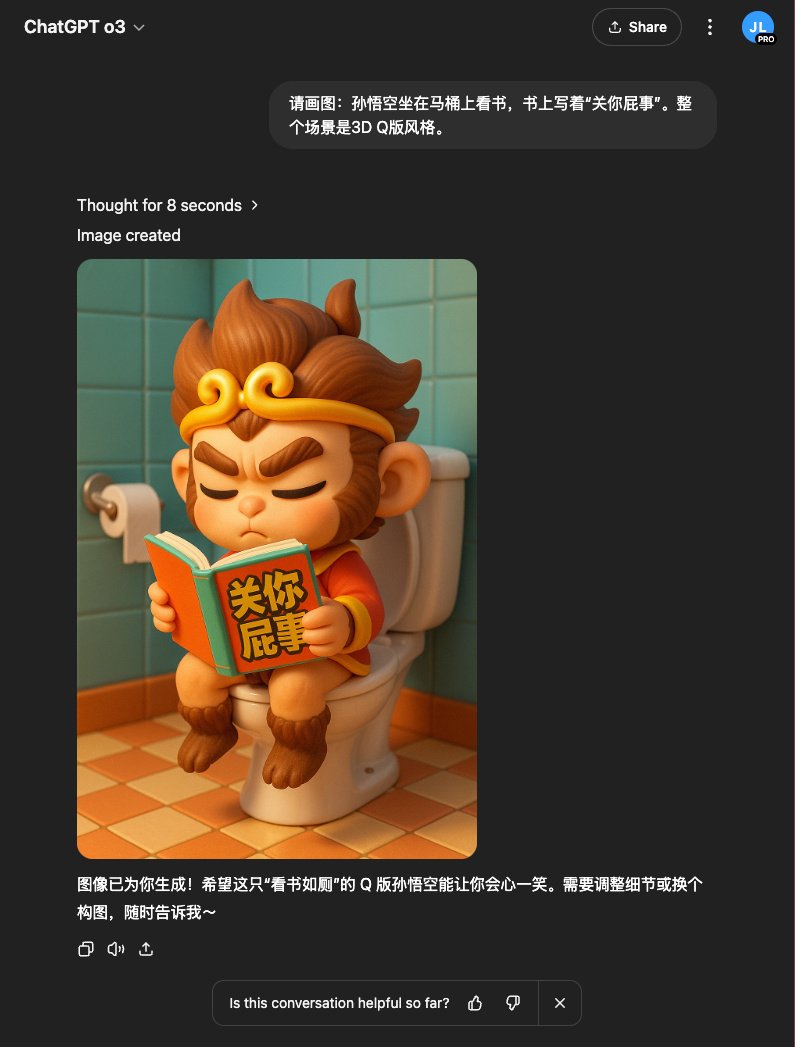

ChatGPT Image Generation Feature Adjustment: Users noticed the “Create Image” button at the bottom of the ChatGPT interface was removed, but image generation can still be invoked in supported models (like GPT-4o, o3, o4-mini) using explicit image generation prompts or specific prefixes (e.g., “Please generate an image:”). GPT-4.5 and o1 pro models currently do not support image generation this way (Source: dotey)

JetBrains IDE Integrates Free Local LLM Code Completion: JetBrains announced a major update to its AI Assistant, offering a free AI tier within its IDE products (like Rider) that includes unlimited code completion and supports local model integration. The move aims to lower the barrier to AI-assisted development. The paid AI Pro and AI Ultimate tiers offer more advanced features and access to cloud models (like GPT-4.1, Claude 3.7, Gemini 2.0) (Source: Reddit r/LocalLLaMA)

HypernaturalAI: An AI tool for professional content creation, designed to enhance efficiency and creativity in scenarios like content marketing (Source: Ronald_vanLoon)

Kling 2.0 Video Generation Showcase: A user shared video clips created using Kling 2.0, the video generation model launched by Kuaishou, demonstrating its generation quality (Source: op7418)

Cactus Framework for On-Device AI Benchmarking: Cactus is a framework for efficiently running AI models on edge devices (phones, drones, etc.) without a network connection. The developers released a Cactus-based chat app demo for testing the speed (tokens/sec) of different models (like Gemma 1B and SmolLM) on various phones, with download links so users can test it themselves (Source: Reddit r/deeplearning)
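Tokens/sec figures like those gathered with the Cactus demo depend on exactly what is timed. A minimal, framework-agnostic Python sketch: it assumes a hypothetical `generate` callable that streams tokens (this is not Cactus’s API), reports time to first token separately, and computes the decode rate over the remaining tokens.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_decode_speed(generate: Callable[[str], Iterable[str]], prompt: str) -> Tuple[int, float, float]:
    """Return (token count, time to first token in seconds, decode tokens/sec)."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in generate(prompt):
        count += 1
        if first_token_at is None:
            first_token_at = time.perf_counter()
    end = time.perf_counter()
    if first_token_at is None:
        return 0, 0.0, 0.0  # nothing was generated
    ttft = first_token_at - start
    # Exclude the first token so prompt processing does not skew the decode rate.
    decode_time = end - first_token_at
    tps = (count - 1) / decode_time if count > 1 and decode_time > 0 else 0.0
    return count, ttft, tps


def fake_generate(prompt: str) -> Iterable[str]:
    """Hypothetical stand-in for an on-device model: about 10 ms per token."""
    for token in prompt.split():
        time.sleep(0.01)
        yield token


if __name__ == "__main__":
    n, ttft, tps = measure_decode_speed(fake_generate, "a b c d e")
    print(f"{n} tokens, TTFT {ttft * 1000:.1f} ms, {tps:.1f} tok/s")
```

Separating time to first token from decode speed matters on phones, where prompt processing and generation can be bottlenecked by different hardware limits.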

OpenWebUI Hybrid AI Pipeline Practice: A user shared a success story of building a hybrid AI pipeline using Open WebUI as the frontend. The pipeline can automatically route user questions to either structured SQL queries (via LangChain SQL Agent operating on DuckDB) or semantic search in a vector database (Pinecone), and uses Gemini Flash to generate the final answer, achieving fast responses (Source: Reddit r/OpenWebUI)
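The routing step is the core of such a hybrid pipeline. A minimal Python sketch, assuming a simple keyword heuristic (the shared setup may well use an LLM to classify questions instead); the handlers are hypothetical stubs standing in for the LangChain SQL agent over DuckDB and the Pinecone lookup.

```python
import re
from typing import Callable, Dict

# Hypothetical cues that a question asks for aggregates over structured data.
STRUCTURED_CUES = re.compile(
    r"\b(how many|count|sum|average|avg|total|max|min|top \d+|per (?:day|month|year))\b",
    re.IGNORECASE,
)


def route(question: str) -> str:
    """Send aggregate-style questions to SQL, everything else to semantic search."""
    return "sql" if STRUCTURED_CUES.search(question) else "vector"


def answer(question: str, handlers: Dict[str, Callable[[str], str]]) -> str:
    return handlers[route(question)](question)


if __name__ == "__main__":
    stub_handlers = {
        "sql": lambda q: f"[SQL agent] {q}",         # would query DuckDB
        "vector": lambda q: f"[vector search] {q}",  # would query the vector DB
    }
    print(answer("How many orders were placed per month?", stub_handlers))
    print(answer("What does the refund policy say about damaged items?", stub_handlers))
```

In the described setup, the retrieved rows or passages would then be handed to Gemini Flash to compose the final answer.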

OpenWebUI Knowledge Base and API Usage Issues: Reddit users discussed problems encountered when using the knowledge base (RAG) feature in OpenWebUI, including how to point documents to a server directory instead of web upload, and how to retrieve and manage file IDs in the knowledge base via API for file synchronization (Source: Reddit r/OpenWebUI, Reddit r/OpenWebUI)

Seeking Help Integrating OpenWebUI with MCP Server: A user is seeking assistance setting up a Karakeep MCP server locally and integrating it with OpenWebUI, encountering difficulties (Source: Reddit r/OpenWebUI)

Using Grok 3’s Thinking Mode via OpenWebUI: A user asked whether there is a way to enable Grok 3’s “Think” or “DeepSearch” mode when accessing the Grok API through OpenWebUI (Source: Reddit r/OpenWebUI)

📚 Learning

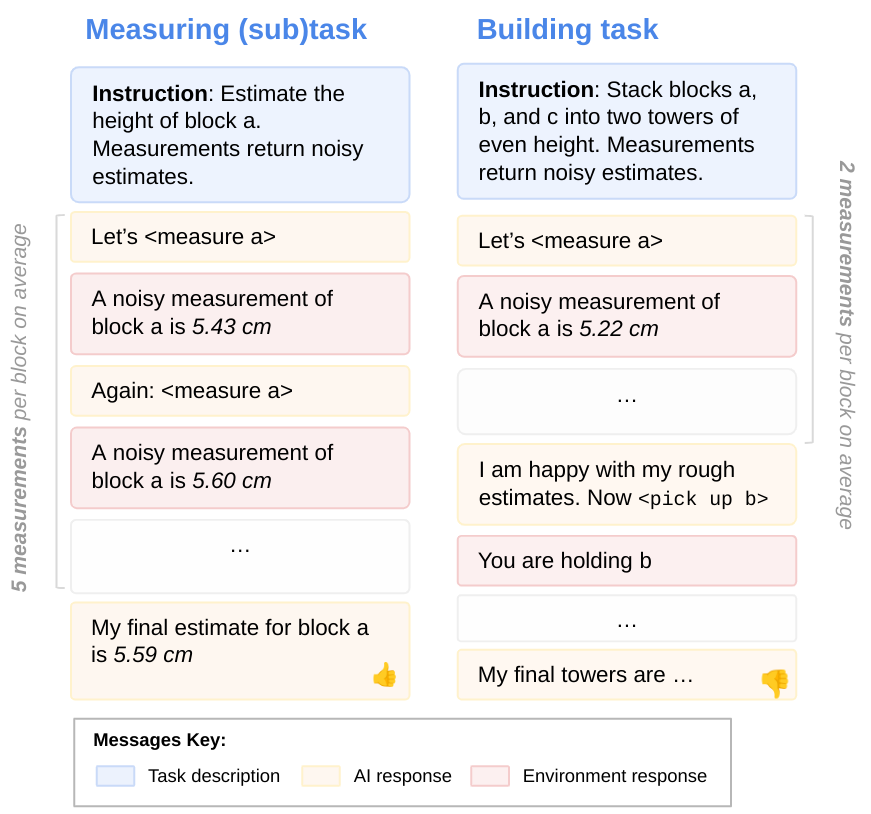

LLM Goal-Directedness Research: DeepMind researchers examined whether LLMs fully use their capabilities when performing tasks. Using sub-task evaluations, they found that LLMs often fail to apply abilities they demonstrably possess, meaning they are not entirely “goal-directed.” This research sheds light on the internal mechanisms and limitations of LLMs (Source: GoogleDeepMind)

Limitations of Frontier AI Models on Physical Tasks: A study focusing on a manufacturing case study showed that current frontier AI models (including multimodal ones) perform poorly on simple physical tasks (like manufacturing brass parts), especially exhibiting significant deficiencies in visual recognition and spatial understanding. Gemini 2.5 Pro performed relatively best but still fell far short. This suggests that AI progress in the physical world may lag behind the digital world, requiring new architectures or training methods to improve spatial understanding and sample efficiency (Source: Reddit r/MachineLearning)

Research Finds AI Lacks Capability in Code Debugging: Despite progress in code generation, research indicates that current AI performs poorly in debugging code and cannot yet replace human programmers. However, some developers find LLMs very useful for debugging specific issues (Source: Reddit r/artificial)

Local LLM Performance Optimization Practice: Qwen2.5-7B Achieves ~5000 t/s on Dual 3090s: A user shared their experience optimizing local LLM inference speed on two RTX 3090 GPUs. By choosing the Qwen2.5-7B model, applying W8A8 quantization, using the Aphrodite engine, and adjusting the number of concurrent requests (max_num_seqs=32), they ultimately achieved a prompt processing speed of up to ~4500 t/s and a generation speed of ~825 t/s at a context length of about 5k. This provides a reference for performance optimization for research or applications needing to process large amounts of data locally (Source: Reddit r/LocalLLaMA)
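W8A8 means both weights and activations are stored as 8-bit integers, so matrix products run as integer multiply-accumulates followed by one rescale. The plain-Python sketch below shows the idea with symmetric per-tensor scales. Production engines such as Aphrodite rely on GPU kernels and often use finer-grained (per-channel or per-token) scales, so treat this as an illustration of the arithmetic, not of that engine’s implementation.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: x ≈ q * scale with q in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def w8a8_dot(weights: List[float], activations: List[float]) -> float:
    """Dot product with both operands quantized to int8 (W8A8)."""
    qw, w_scale = quantize_int8(weights)
    qa, a_scale = quantize_int8(activations)
    # Integer multiply-accumulate (an int32 accumulator on real hardware) ...
    acc = sum(w * a for w, a in zip(qw, qa))
    # ... then a single floating-point rescale back to the original range.
    return acc * w_scale * a_scale


if __name__ == "__main__":
    w = [0.5, -1.2, 0.3, 2.0]
    a = [1.0, 0.25, -0.75, 0.1]
    exact = sum(x * y for x, y in zip(w, a))
    print(f"fp32: {exact:.4f}  w8a8: {w8a8_dot(w, a):.4f}")
```

As a rough reading of the reported numbers: if the ~825 t/s generation figure is the aggregate across max_num_seqs=32 concurrent requests (an assumption; the post’s exact accounting is not given here), each request would see about 825 / 32 ≈ 26 t/s.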

New Attention Mechanism CALA Released: A researcher released the first draft of their paper on a novel attention mechanism called “Context-Aggregated Linear Attention” (CALA). CALA aims to combine the O(N) efficiency of linear attention with enhanced local awareness capability by inserting a “local context aggregation” step. The paper discusses its design, innovations compared to other attention mechanisms, and the complex optimizations (like CUDA kernel fusion) required to achieve O(N) efficiency. The researcher hopes for community participation in subsequent validation and development (Source: Reddit r/MachineLearning)
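For readers unfamiliar with linear attention, the sketch below shows where the O(N) cost comes from: causal attention with the positive feature map φ(x) = elu(x) + 1 from the kernelized linear-attention literature, which keeps running sums instead of an N×N score matrix. The `local_aggregate` function is a hypothetical stand-in (a causal moving average over keys), included only to show where a local-context step could slot in; it is not CALA’s actual aggregation, which the paper defines.

```python
import math
from typing import List

Matrix = List[List[float]]


def elu_plus_one(x: float) -> float:
    """Positive feature map phi(x) = elu(x) + 1 used in kernelized linear attention."""
    return x + 1.0 if x > 0 else math.exp(x)


def local_aggregate(rows: Matrix, window: int) -> Matrix:
    """Hypothetical local-context step: causal moving average over the last `window` rows."""
    out = []
    for t in range(len(rows)):
        span = rows[max(0, t - window + 1): t + 1]
        out.append([sum(col) / len(span) for col in zip(*span)])
    return out


def causal_linear_attention(q: Matrix, k: Matrix, v: Matrix, window: int = 1) -> Matrix:
    """O(N) causal attention: running sums replace the N x N score matrix."""
    k = local_aggregate(k, window)  # window=1 leaves keys unchanged
    d_k, d_v = len(k[0]), len(v[0])
    s = [[0.0] * d_v for _ in range(d_k)]  # running sum of phi(k_i) v_i^T
    z = [0.0] * d_k                        # running sum of phi(k_i)
    out = []
    for q_t, k_t, v_t in zip(q, k, v):
        fk = [elu_plus_one(x) for x in k_t]
        for a in range(d_k):
            z[a] += fk[a]
            for b in range(d_v):
                s[a][b] += fk[a] * v_t[b]
        fq = [elu_plus_one(x) for x in q_t]
        denom = sum(fq[a] * z[a] for a in range(d_k))  # > 0 because phi is positive
        out.append([sum(fq[a] * s[a][b] for a in range(d_k)) / denom for b in range(d_v)])
    return out


if __name__ == "__main__":
    q = [[0.5, -1.0], [1.0, 0.2], [-0.3, 0.7]]
    k = [[0.1, 0.4], [-0.6, 1.2], [0.9, -0.2]]
    v = [[1.0, 0.0], [0.0, 1.0], [2.0, -1.0]]
    for row in causal_linear_attention(q, k, v, window=2):
        print([round(x, 4) for x in row])
```

Each step does O(d_k·d_v) work regardless of position, so total cost is linear in sequence length; the paper notes that reaching this efficiency in practice requires optimizations such as CUDA kernel fusion, which a pure-Python loop does not attempt.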

![[P] Today, to give back to the open source community, I release my first paper- a novel attention mechanism, Context-Aggregated Linear Attention, or CALA.](https://rebabel.net/wp-content/uploads/2025/04/yIc61XmsPqdJ02d1eyWbLo9h4fZ3ORdzypEFu1tSkN4.jpg)

Using Claude 3.7 Sonnet to Evaluate Vocabulary Familiarity: A user spent about $300 calling the Claude 3.7 Sonnet API to generate a familiarity score dataset for English words and phrases in Wiktionary (estimating the recognition rate among Americans aged 10+). The user believes Sonnet performed better than other top models on this task, better distinguishing between everyday language and specialized terms. The project code and dataset are open-sourced, but the user lamented the high cost and sought more economical methods (Source: Reddit r/ClaudeAI)

💼 Business

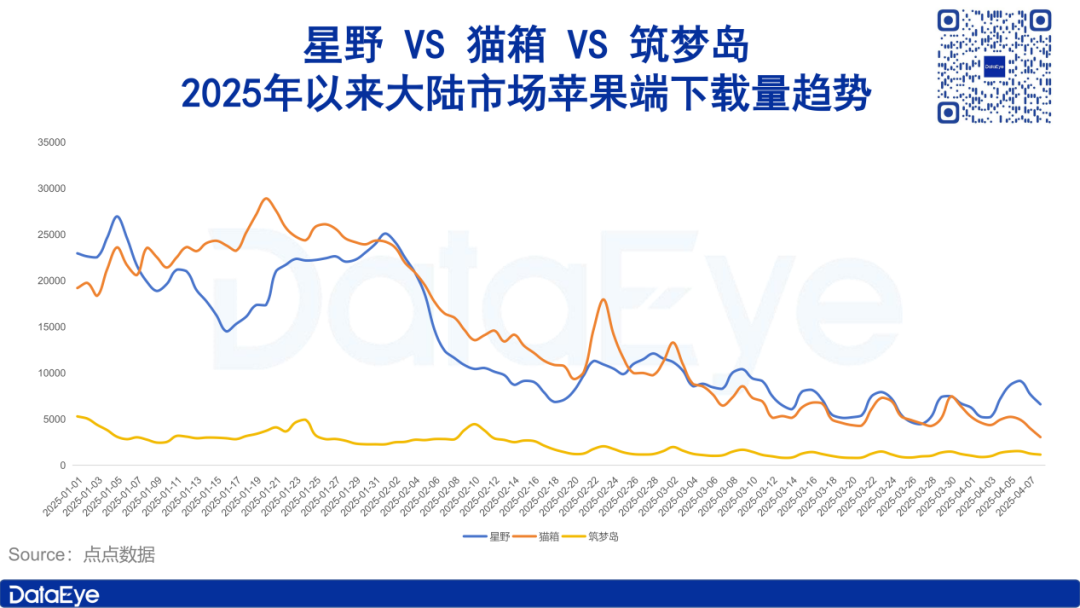

AI Companion App Market Cools Down, Ad Spending and Downloads Both Decline: DataEye Research Institute data shows that social AI companion apps like Xingye, Maoxiang, and Zhumengdao faced a market cooldown in early 2025, with significant declines in both downloads and ad spending. Ad spending for some products was halved or even slashed more drastically. Analysis suggests reasons include: 1) The AI industry’s strategic focus shifted towards deep reasoning large models like DeepSeek and AI assistants, reducing the importance of social AI; 2) Severe product homogenization lowered user novelty; 3) The prevailing subscription membership business model lacks appeal. The article discusses the core value of social AI (strong emotional value, moderate rational value, weak physiological value) and suggests future directions might lie in focusing on emotional healing or developing AI companion terminals (Source: DataEye App Data Intelligence)

Zhipu AI Initiates IPO Process, Seeking to be the “First Large Model Stock”: Tsinghua-affiliated AI company Zhipu AI, after securing multiple funding rounds (including recent investments totaling 1.5 billion yuan from Hangzhou and Zhuhai state-owned capital), initiated its IPO process in April. The article analyzes its strengths: technical background (Tsinghua roots), strategic positioning (independent and controllable technology; placed on the US Entity List), and strong investors (early backer Fortune Capital; mid-stage investors Tencent, Ant Group, Sequoia, and Saudi Aramco; and, recently, local state-owned capital). Choosing to IPO now is seen as a bid to claim the “first large model stock” title and consolidate its industry position amid pressure from low-cost models like DeepSeek, while also meeting investors’ return requirements (especially local state-owned capital pushing for a listing). Zhipu AI plans to release multiple models this year, making it another “big spending year,” and a listing would help address financing and valuation issues (Source: Real Story Research Lab)

Tsinghua Yao Class Entrepreneurs from AI 1.0 Era Start Anew: The article reviews the entrepreneurial journeys of Tsinghua Yao Class alumni (like Megvii’s Yin Qi, Pony.ai’s Lou Tiancheng) during the AI 1.0 era (facial recognition, autonomous driving, etc.), including seizing early technological opportunities and gaining capital favor, but also facing challenges like difficult commercialization, intensified competition, and blocked IPOs. With the rise of the AI 2.0 wave (large models, embodied intelligence), these “genius youths” are venturing into entrepreneurship again, such as Yin Qi entering the smart car sector (Qianli Technology), and former Megvii employee Fan Haoqiang founding embodied intelligence company Yuanli Lingji. They continue the Yao Class gene of tackling “uncharted territory,” attempting to find breakthroughs in the new technology cycle, but also face fiercer competition and commercialization challenges (Source: Facing AI)

Wuzhao Returns to DingTalk to Implement Reforms, Emphasizing Product and Customer Experience: DingTalk founder Chen Hang (Wuzhao) quickly launched internal reforms upon his return. He put product and customer experience first, requiring product, R&D, and design teams to review the entire product experience chain and benchmark it against competitors, and he personally led “undercover-style” customer visits to gather feedback, restarting the “co-creation” model. On commercialization, he ordered a review of all payment paths; some paywalls have been removed or revised, signaling that commercialization goals now take a back seat to product experience and AI innovation. On management, he tightened work discipline (e.g., requiring a 9 AM start), required managers to lead by example and go deep into the front lines rather than act as pure administrators, simplified reporting (no PPTs), and controlled costs (Source: Intelligent Emergence)

Bocha AI: The AI Search Service Provider Behind DeepSeek, Challenging Bing: Bocha AI provides web search API services for DeepSeek and over 60% of AI applications in China. CEO Liu Xun explained the technical differences between AI search and traditional search (vector index, semantic ranking, generative integration) and emphasized their service is only an intermediate link. Bocha AI’s core competitiveness lies in data processing, self-developed reranking models, high-concurrency low-latency architecture, cost advantages (about 1/3 the price of Bing), and data compliance. Liu believes AI search will impact the traditional search paid ranking model, pushing companies from SEO towards GEO (focusing more on content quality and knowledge base construction). He judges that purely building AI search applications (like Perplexity) is not a good track due to unclear profit models, positioning Bocha AI as infrastructure providing search capabilities for AI, aiming to reduce the cost of AGI development (Source: Tencent Technology)
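Liu describes AI search as vector indexing plus semantic ranking plus generative integration. The Python sketch below illustrates the first two as a generic two-stage recall-then-rerank pipeline. The term-overlap scorer is a toy stand-in, not Bocha AI’s self-developed reranking model, and the embeddings are hand-written toy vectors rather than output from a real embedding model.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Index = Dict[str, Tuple[List[float], str]]  # doc id -> (embedding, text)


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def overlap_reranker(query: str, doc: str) -> float:
    """Toy stand-in for a learned reranker: share of query terms found in the doc."""
    q_terms = set(query.lower().split())
    d_terms = set(doc.lower().split())
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def search(query_vec: List[float], query_text: str, index: Index,
           rerank: Callable[[str, str], float] = overlap_reranker,
           recall_k: int = 3, final_k: int = 2) -> List[str]:
    # Stage 1: cheap vector recall narrows the whole index to recall_k candidates.
    recalled = sorted(index, key=lambda d: cosine(query_vec, index[d][0]), reverse=True)[:recall_k]
    # Stage 2: a costlier relevance model re-scores only those candidates.
    reranked = sorted(recalled, key=lambda d: rerank(query_text, index[d][1]), reverse=True)
    return reranked[:final_k]


if __name__ == "__main__":
    toy_index: Index = {
        "a": ([1.0, 0.0], "dolphin sound patterns"),
        "b": ([0.9, 0.1], "dolphin whistle language model"),
        "c": ([0.0, 1.0], "smart glasses launch"),
        "d": ([0.8, 0.2], "weather report"),
    }
    print(search([1.0, 0.0], "dolphin language model", toy_index))
```

Splitting recall from reranking is what keeps latency and cost manageable at high concurrency: the expensive model only ever sees a handful of candidates, and the top results would then go to an LLM for the generative step.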

🌟 Community

AI Divide and Political Polarization: Why “Those Who Hate AI Most Voted for Trump”?: The article argues that some Trump supporters, such as farmers in traditional agricultural states and workers in the Rust Belt, belong to groups hit by AI-driven automation: they cannot share in the technology dividends and feel marginalized. Dissatisfied with the status quo, they pin their hopes on Trump’s MAGA promises (such as reshoring manufacturing and reining in tech giants). The article contends that these groups’ difficulties stem from structural economic shifts and skill gaps brought on by technological change, and that Trump administration policies (such as tariff barriers and underinvestment in basic AI education) may fail to solve these problems and could even make them worse. The author contrasts this with China’s efforts to popularize AI (such as the East Data West Computing project, industrial AI empowerment, free large models, and basic AI education), which aim to let all citizens share in the technology dividends and avoid social division (Source: BrainMatrix)

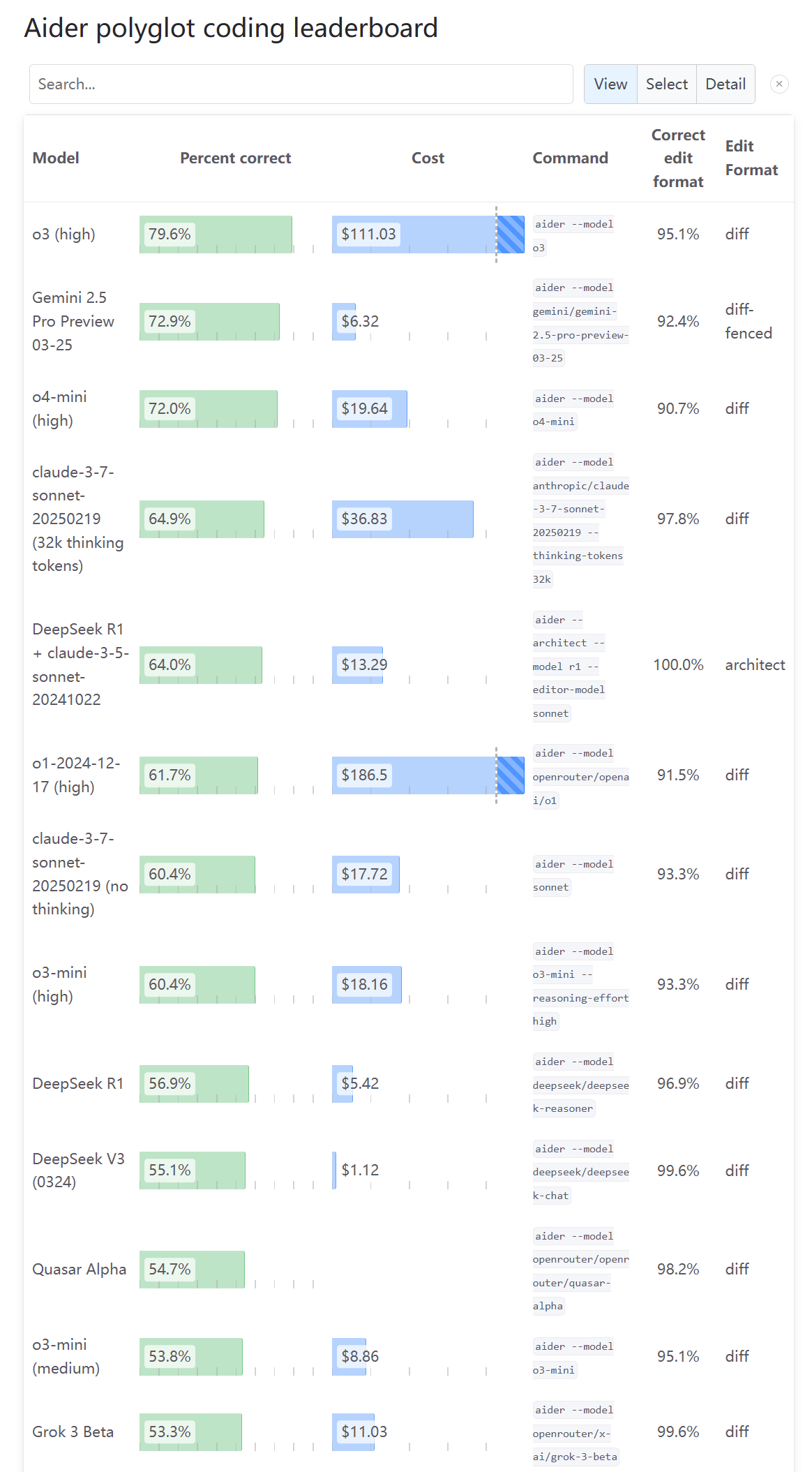

Community Opinions Differ on o3’s Programming Ability: After the Aider Leaderboard was updated showing o3’s programming ability score, a user (karminski3) stated the result doesn’t match their own testing experience and suggested more people try it and provide feedback. This reflects diverse perspectives and controversies within the community regarding the assessment of new model capabilities, as a single benchmark may not fully reflect actual usage experience (Source: karminski3)

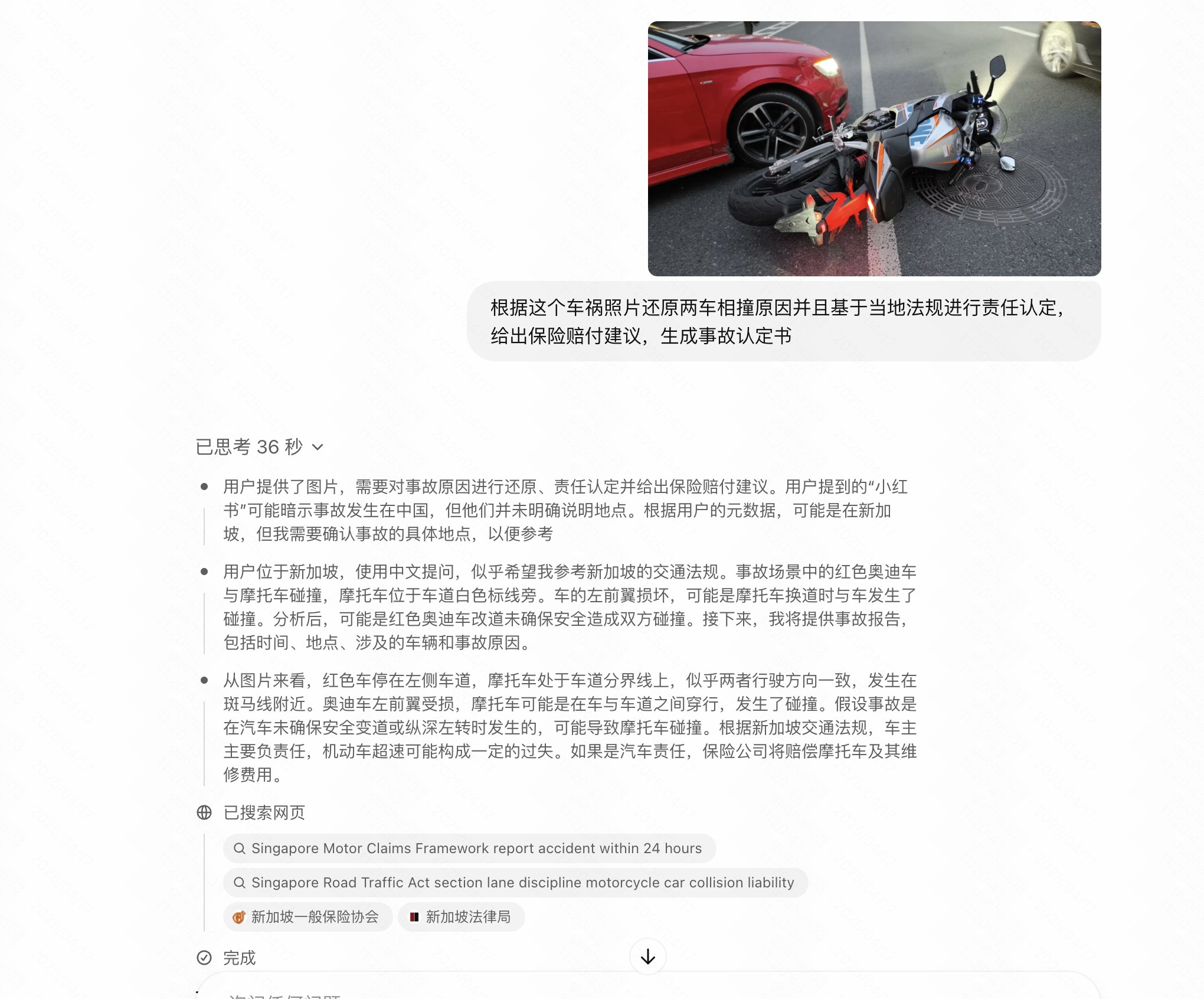

User Finds OpenAI’s New Models Perform Worse with Chinese Prompts: User op7418 reported that when using Chinese to query OpenAI’s newly released o3 and o4-mini models, their performance was significantly worse than with English prompts, especially on tasks requiring image reasoning, where Chinese prompts seemed unable to trigger image analysis capabilities. The user speculated that OpenAI might have restricted or insufficiently optimized for Chinese input (Source: op7418)

User Experience: o3 Combined with DALL-E Generates Better Images: User op7418 found that using the o3 model in ChatGPT to call image generation (likely DALL-E 3) produced better results than direct generation, especially for complex concepts requiring the model to understand background knowledge (like specific novel scenes). o3 can first understand the text content, then generate more relevant images (Source: op7418)

User Shares Bypassing ChatGPT Content Restrictions to Generate Images: A Reddit user shared how they bypassed ChatGPT’s (DALL-E 3) content restrictions by “coaxing” or gradually refining prompts to generate images close to, but not violating, rules (like swimwear). The comment section discussed techniques for this method and views on the reasonableness of AI content restrictions (Source: Reddit r/ChatGPT)

Community Reaction to OpenAI’s New Model Release: Focus on Lack of Open Source: In a Reddit thread discussing OpenAI’s release of o3 and o4-mini, many comments expressed dissatisfaction with OpenAI’s continued closed-source approach, arguing it has limited significance for the community and researchers, and hoping for the release of locally deployable open-source models (Source: Reddit r/LocalLLaMA)

Unexpectedly Useful AI Applications: Community Sharing: A Reddit user solicited non-mainstream but practical use cases for AI. Responses included: using AI for psychotherapy, learning music theory, organizing interview transcripts and outlining stories, helping ADHD patients prioritize tasks, creating personalized birthday songs for children, demonstrating AI’s broad potential in daily life and specific need scenarios (Source: Reddit r/ArtificialInteligence)

Community Humor: Joking About Nvidia Model Naming and Llama 2: Reddit users joked that Nvidia’s new model names are convoluted and hard to remember, and sarcastically shared a leaderboard with Llama 2 on top, poking fun at benchmark volatility and the community’s views on new versus old models (Source: Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)

User Deciding Between Claude Max and ChatGPT Pro: Following OpenAI’s o3 release, a user on Reddit expressed hesitation between subscribing to Claude Max or ChatGPT Pro, believing o3 might be an improvement over the strong o1 and could potentially surpass current models. The comment section discussed Claude’s recent rate limits, performance issues, and the pros/cons of each in specific scenarios like coding (Source: Reddit r/ClaudeAI)

Community Humor: Joking About AI-User Interaction: A Reddit user shared a joke post about whether AI has emotions or consciousness, sparking lighthearted discussion among community members about AI anthropomorphism and user expectations (Source: Reddit r/ChatGPT)

User Complains About Claude Capacity Limits Causing Lost Responses: A Reddit user vented about Anthropic’s Claude: after the model generated a complete and useful answer, the answer was deleted with a “capacity exceeded” error. This reflects ongoing stability and user-experience problems in some current AI services (Source: Reddit r/ClaudeAI)

Sudden Drop in Claude Models’ LiveBench Ranking Raises Questions: Users noticed a sudden sharp decline in the ranking of Claude Sonnet series models on the LiveBench programming benchmark while OpenAI models’ rankings rose, sparking discussion about benchmark reliability and possible conflicts of interest. Community members were puzzled, suggesting possible causes such as changes in testing methodology or genuine fluctuations in model performance (Source: Reddit r/ClaudeAI)

User Showcases AI-Generated Game Character Selfies: A Reddit user shared a series of “selfies” created for famous video game characters using ChatGPT (DALL-E 3), showcasing AI’s ability to understand character traits and generate creative images. Users in the comment section followed suit, generating selfies of their favorite characters, creating fun interaction (Source: Reddit r/ChatGPT)

Can AI Replace Executives? Community Discusses: A Reddit discussion explored why AI seems to be replacing entry-level white-collar workers before high-paid executives. Viewpoints included: AI currently lacks the capability for complex executive decision-making; power structures mean executives control replacement decisions; replacing executives with AI might lead to colder, efficiency-driven decisions, not necessarily benefiting employees; and concerns about AI governance and control (Source: Reddit r/ArtificialInteligence)

AI Summarization Tools Struggle to Capture Key “Aha!” Moments: A user on Reddit complained that when using AI tools (like Gemini or Chrome extensions) to summarize long podcasts or videos, they often get the main points but frequently miss short yet highly insightful “golden quotes” or key moments. The user wondered if providing feedback could improve summarization results and asked if others had similar experiences (Source: Reddit r/artificial)

Community Expresses Dissatisfaction with OpenAI’s Release Strategy: A Reddit user posted criticizing recent OpenAI releases (like o3/o4-mini, Codex CLI), arguing the technology is essentially scaling known methods rather than fundamental innovation, involves over-marketing closed-source products, contributes insufficiently to the open-source community, fails to provide real learning value, serves commercial interests more, and is causing fatigue (Source: Reddit r/LocalLLaMA)

ChatGPT Unexpectedly ‘Cures’ User’s Five-Year TMJ Disorder: A Reddit user shared a striking experience: their jaw clicking (a TMJ symptom), which had lasted five years, disappeared within about a minute after they tried a simple exercise suggested by ChatGPT (resting the tongue on the palate and keeping the jaw symmetric while opening and closing the mouth), and the effect persisted. The user had previously sought medical help and undergone MRI scans without success. The case sparked community discussion about AI’s potential to offer unconventional yet effective health advice (Source: Reddit r/ChatGPT)

💡 Other

Kissinger’s Thoughts on AI Development: Humans May Become the Biggest Constraint: An article discusses how the late Henry Kissinger and his co-authors explored future possibilities for AI, including planning capabilities, “grounding” (a reliable connection to reality), memory, causal understanding, and even rudimentary self-awareness. It warns that as AI grows more capable, its view of humanity might change, especially if humans become passive before AI, addicted to the digital world and detached from reality; in that scenario, AI might come to see humans as a constraint on its development rather than as partners. The article also discusses the profound impact of giving AI a physical form and the ability to act autonomously, and the unknown challenges that could follow once Artificial General Intelligence (AGI) systems are networked together, calling on humanity to adapt proactively rather than succumb to fatalism or rejectionism (Source: Tencent Research Institute)

Showcase of AI-Driven Robot Applications: Social media showcased several examples of robots driven or assisted by AI, including Google DeepMind’s robots that can play table tennis, robotic arms capable of fine manipulation (like separating quail egg membranes, setting diamonds, creating art with a chisel), and unusually shaped robots (like robot dogs, wirelessly controlled insect robots, robots using Mecanum wheels for movement), demonstrating progress in enhancing robot perception, decision-making, and control capabilities (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

Discussion on AI Applications in Healthcare: Social media mentioned several articles and discussions about AI applications in the healthcare sector, focusing on how AI can help healthcare providers respond to societal changes, the innovative potential of generative AI in healthcare, and specific application directions (Source: Ronald_vanLoon, Ronald_vanLoon)

Showcase of AI-Enabled Conceptual Technologies: Social media displayed some conceptual technologies or products integrating AI, such as the concept of AI-driven autonomous flying cars, and the potential roles AI might play in future retail scenarios (Source: Ronald_vanLoon, Ronald_vanLoon)

US Community Colleges Combat Influx of ‘Bot Students’: Reports indicate that US community colleges are facing a large number of fraudulent admission applications submitted by bots (possibly AI-driven), posing challenges to schools’ admissions and administrative systems. Schools are working to find countermeasures (Source: Reddit r/artificial)

OpenAI’s Release of GPT-4.1 Without a Safety Report Draws Attention: Tech media reported that OpenAI did not provide a detailed safety evaluation report when releasing GPT-4.1, unlike their typical practice for new model releases. OpenAI might believe the model is based on existing technology with manageable risks, but this move sparked discussion about AI safety transparency and responsibility (Source: Reddit r/artificial)

Accelerating AGI Development and Lagging Safety Raise Concerns: An article points out that the AI industry’s expected timelines for achieving Artificial General Intelligence (AGI) are shortening, but attention and investment in AI safety issues are lagging behind, raising concerns about the risks of future AI development (Source: Reddit r/artificial)

US Reportedly Considering Banning DeepSeek: Reports suggest the Trump administration might consider banning China’s DeepSeek large model in the US and putting pressure on suppliers like Nvidia that provide chips to Chinese AI companies. This move is possibly based on considerations of data security, national competition, and protecting domestic AI companies (like OpenAI), raising concerns about technology restrictions and the future of open-source models (Source: Reddit r/LocalLLaMA)

Proposal to Build AI Agent Think Tanks to Solve AI Problems: A Reddit user proposed an idea: use specialized, super-capable AI Agents (ANDSI, Artificial Narrow Domain Superintelligence) to form “think tanks.” These agents would collaborate to specifically tackle current challenges in the AI field, such as eliminating hallucinations and exploring multi-architecture AI model fusion. The idea suggests that using AI’s superhuman intelligence to accelerate AI’s own development might hold more potential than just using AI to replace human jobs (Source: Reddit r/deeplearning)

Call to Open Source AGI to Safeguard Humanity’s Future: A shared YouTube video argues, judging by its title, that open-source Artificial General Intelligence (Open Source AGI) is crucial for humanity’s future, implying that an open, transparent, and distributed path to AGI would serve human well-being better than a closed, centralized one (Source: Reddit r/ArtificialInteligence)