Keywords: GPT-4.5, Large language models, Huawei Pangu Ultra performance, GPT-4.5 training details, Impact of RLHF on reasoning capabilities, 4GB human learning limit research, Open-source math dataset MegaMath

🔥 Focus

OpenAI Reveals GPT-4.5 Training Details and Challenges: OpenAI CEO Sam Altman and the GPT-4.5 core technical team discussed the model’s development details. The project started two years ago, mobilized nearly the entire company, and took longer than expected. Training encountered “catastrophic issues” like the failure of a 100,000-GPU cluster and hidden bugs, exposing infrastructure bottlenecks but also prompting tech stack upgrades. Now, only 5-10 people are needed to replicate a GPT-4 level model. The team believes future performance gains hinge on data efficiency rather than compute power, requiring new algorithms to learn more from the same amount of data. The system architecture is shifting towards multi-cluster setups, potentially involving collaboration among tens of millions of GPUs in the future, demanding higher fault tolerance. The conversation also touched upon Scaling Laws, machine learning and system co-design, and the nature of unsupervised learning, showcasing OpenAI’s thinking and practices in advancing cutting-edge large model development (Source: 36Kr)

Huawei Releases Ascend-Native 135B Dense Large Model Pangu Ultra: The Huawei Pangu team released Pangu Ultra, a 135B-parameter dense general-purpose language model trained on domestically produced Ascend NPUs. The model uses a 94-layer Transformer architecture and introduces Depth-scaled sandwich-norm (DSSN) and TinyInit initialization techniques to address training stability issues for ultra-deep models, achieving stable training without loss spikes on 13.2T tokens of high-quality data. At the system level, optimizations like hybrid parallelism, operator fusion, and sub-sequence splitting increased Model FLOPs Utilization (MFU) to over 50% on an 8192-card Ascend cluster. Evaluations show Pangu Ultra surpasses dense models like Llama 405B and Mistral Large 2 on multiple benchmarks and competes with larger MoE models like DeepSeek-R1, demonstrating the feasibility of developing top-tier large models based on domestic computing power (Source: Jiqizhixin)
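To make the MFU figure concrete, here is a minimal sketch of how the metric is commonly estimated for a dense Transformer, using the widely used approximation of about 6 FLOPs per parameter per training token. The throughput and per-device peak values below are hypothetical placeholders chosen only for illustration; they are not figures from the Pangu Ultra report, and the function name is an assumption.

```python
def model_flops_utilization(num_params, tokens_per_second, num_devices, peak_flops_per_device):
    """Estimate MFU as achieved training FLOPs/s divided by aggregate peak FLOPs/s.

    Uses the common ~6 * N FLOPs-per-token approximation for a dense
    Transformer (forward + backward), which ignores attention FLOPs.
    """
    achieved_flops_per_second = 6 * num_params * tokens_per_second
    peak_flops_per_second = num_devices * peak_flops_per_device
    return achieved_flops_per_second / peak_flops_per_second


# 135B parameters and 8192 devices come from the article.
# The throughput and per-device peak are HYPOTHETICAL illustration values.
mfu = model_flops_utilization(
    num_params=135e9,
    tokens_per_second=1.6e6,        # hypothetical cluster-wide throughput
    num_devices=8192,
    peak_flops_per_device=3.0e14,   # hypothetical peak FLOPs/s per device
)
print(f"Estimated MFU: {mfu:.1%}")
```

Under this approximation, MFU rises with either higher token throughput or fewer idle device cycles, which is what optimizations such as operator fusion and better parallelism target.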

Study Questions Significance of Reinforcement Learning for LLM Reasoning Enhancement: Researchers from the University of Tübingen and the University of Cambridge question recent claims that Reinforcement Learning (RL) significantly enhances language model reasoning abilities. Through rigorous investigation of common reasoning benchmarks (like AIME24), the study found results exhibit high instability, where simply changing the random seed can cause significant score fluctuations. Under standardized evaluation, performance improvements from RL are much smaller than originally reported, often lack statistical significance, are even weaker than the effects of Supervised Fine-Tuning (SFT), and show poor generalization ability. The study identifies sampling differences, decoding configurations, evaluation frameworks, and hardware heterogeneity as primary causes of instability, calling for stricter, reproducible evaluation standards to soberly assess and measure the true progress of model reasoning capabilities (Source: Jiqizhixin)
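The seed-sensitivity point can be illustrated with a small simulation. AIME24 has only 30 problems, so a single problem is worth about 3.3 percentage points. The sketch below is not the study's methodology: it assumes each problem is an independent coin flip with a fixed success probability (a hypothetical 40%), and shows how much the score can move across seeds from sampling noise alone. All function names are illustrative.

```python
import random
import statistics


def simulate_benchmark_scores(true_accuracy, num_questions, num_seeds, base_seed=0):
    """Simulate accuracy (in %) on a small benchmark across random seeds.

    Each question is modeled as an independent Bernoulli trial, which is a
    simplifying assumption made for illustration only.
    """
    scores = []
    for seed in range(base_seed, base_seed + num_seeds):
        rng = random.Random(seed)
        correct = sum(rng.random() < true_accuracy for _ in range(num_questions))
        scores.append(100.0 * correct / num_questions)
    return scores


def summarize(scores):
    """Return the mean and sample standard deviation of the scores."""
    return statistics.mean(scores), statistics.stdev(scores)


# Hypothetical model with a 40% per-problem success rate on a 30-problem benchmark.
scores = simulate_benchmark_scores(true_accuracy=0.4, num_questions=30, num_seeds=20)
mean, std = summarize(scores)
print(f"mean={mean:.1f}%  std={std:.1f}  min={min(scores):.1f}%  max={max(scores):.1f}%")
```

Reporting the mean and spread over many seeds, rather than a single run, is the kind of standardized practice the study calls for.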

Altman’s TED Talk: Will Release Powerful Open-Source Model, Believes ChatGPT is Not AGI: OpenAI CEO Sam Altman stated at the TED conference that the company is developing a powerful open-source model whose performance will surpass all existing open-source models, directly responding to competitors like DeepSeek. He emphasized that ChatGPT’s user base continues to grow rapidly and the new memory feature will enhance personalized experiences. He believes AI will see breakthroughs in scientific discovery and software development (with huge efficiency gains), but current models like ChatGPT lack the ability for self-sustained learning and cross-domain generalization, and are thus not AGI. He also discussed copyright and “style rights” issues raised by GPT-4o’s creative capabilities and reiterated OpenAI’s confidence in model safety and its risk control mechanisms (Source: AI Era)

Study Estimating Human Lifetime Learning Capacity at ~4GB Sparks Debate on BCIs and AI Development: A study published in the Cell Press journal Neuron by Caltech researchers estimates human brain information processing speed at about 10 bits per second, far below the sensory system’s data collection rate of 1 billion bits per second. Based on this, the study infers that the upper limit of knowledge accumulation in a human lifetime (assuming 100 years of continuous learning without forgetting) is about 4GB, far less than the parameter storage capacity of large models (e.g., a 7B model can store 14 billion bits). The study suggests this bottleneck stems from the central nervous system’s serial processing mechanism and predicts that machine intelligence surpassing human intelligence is only a matter of time. The research also questions Musk’s Neuralink, arguing it cannot overcome the fundamental structural limitations of the brain and that optimizing existing communication methods might be better. This study has sparked widespread discussion on human cognitive limits, AI development potential, and the direction of brain-computer interfaces (Source: QbitAI)
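The ~4GB figure follows from simple arithmetic on the numbers quoted above. A quick check, assuming a 365.25-day year and decimal gigabytes (1 GB = 10^9 bytes); the function name is illustrative:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # assumes an average year of 365.25 days


def lifetime_information_bytes(bits_per_second, years):
    """Total information processed at a constant rate, converted to bytes."""
    total_bits = bits_per_second * years * SECONDS_PER_YEAR
    return total_bits / 8


# 10 bits per second sustained for 100 years, as stated in the article.
lifetime_gb = lifetime_information_bytes(bits_per_second=10, years=100) / 1e9
print(f"Lifetime upper bound: {lifetime_gb:.2f} GB")

# Ratio between the quoted sensory input rate and the processing rate.
sensory_to_processing_ratio = 1e9 / 10
print(f"Sensory input exceeds processing by a factor of {sensory_to_processing_ratio:.0e}")
```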

🎯 Trends

GPT-4 to be Retired Soon, GPT-4.1 and Mysterious New Models May Debut: OpenAI announced it will fully replace the two-year-old GPT-4 with GPT-4o in ChatGPT starting April 30th; GPT-4 will still be available via API. Meanwhile, community and code leaks suggest OpenAI may soon release a series of new models, including GPT-4.1 (and its mini/nano versions), the full o3 reasoning model, and a new o4 series (like o4-mini). A mysterious model named Optimus Alpha has launched on OpenRouter, performing excellently (especially in programming) and supporting million-token context. It’s widely speculated to be one of OpenAI’s upcoming new models (possibly GPT-4.1 or o4-mini), as it shares many similarities with OpenAI models (like specific bugs). This indicates OpenAI is accelerating its model iteration and actively consolidating its technological leadership (Source: source, source)

Alibaba’s Qwen3 Large Model Gearing Up for Release: Reports suggest Alibaba is expected to release the Qwen3 large model soon. The R&D team confirms the model is in the final preparation stage, but the specific release date is undetermined. Qwen3 is reportedly an important model product for Alibaba in the first half of 2025, with development starting after Qwen2.5. Influenced by competing models like DeepSeek-R1, Alibaba Cloud’s foundational model team is further shifting its strategic focus towards enhancing the model’s reasoning capabilities, a sign of how vendors are prioritizing specific capabilities amidst the competitive large model landscape (Source: InfoQ)

Kimi Open Platform Lowers Prices and Open-Sources Lightweight Visual Models: Moonshot AI’s Kimi Open Platform announced reduced prices for model inference services and context caching, aiming to reduce user costs through technological optimization. Simultaneously, Kimi open-sourced two lightweight vision-language models based on MoE architecture, Kimi-VL and Kimi-VL-Thinking, supporting 128K context with only about 3 billion activated parameters. They reportedly significantly outperform large models with 10x the parameters in multimodal reasoning capabilities, aiming to promote the development and application of small, efficient multimodal models (Source: InfoQ)

Google Releases Agent Interoperability Protocol A2A and Multiple New AI Products: At the Google Cloud Next ’25 conference, Google, along with over 50 partners, launched the open protocol Agent2Agent (A2A) to enable interoperability and collaboration between AI agents developed by different companies and platforms. It also released several AI models and applications, including Gemini 2.5 Flash (efficient flagship model), Lyria (text-to-music), Veo 2 (video creation), Imagen 3 (image generation), Chirp 3 (custom voice), and introduced the seventh-generation TPU chip Ironwood, optimized specifically for inference. This series of releases reflects Google’s comprehensive layout and open strategy in AI infrastructure, models, platforms, and agents (Source: InfoQ)

ByteDance Releases 200B-Parameter Reasoning Model Seed-Thinking-v1.5: The ByteDance Doubao team released a technical report introducing its MoE reasoning model Seed-Thinking-v1.5 with 200B total parameters. The model activates 20B parameters per inference and performs excellently on multiple benchmarks, reportedly surpassing DeepSeek-R1, which has 671B total parameters. The community speculates this might be the model used in the ‘Deep Thinking’ mode of the current ByteDance Doubao App, showcasing ByteDance’s progress in developing efficient reasoning models (Source: InfoQ)

Midjourney Releases V7 Model, Improving Image Quality and Generation Efficiency: AI image generation tool Midjourney released its new model V7 (alpha version). The new version improves the coherence and consistency of image generation, especially performing better on hands, body parts, and object details, and can generate more realistic and rich textures. V7 introduced Draft Mode, achieving ten times the rendering speed at half the cost, suitable for rapid iterative exploration. It also offers turbo (faster but more expensive) and relax (slower but cheaper) generation modes, catering to different user needs (Source: InfoQ)

Amazon Launches AI Voice Model Nova Sonic: Amazon released Nova Sonic, a new generation generative AI model that natively processes speech. Reportedly, the model rivals top voice models from OpenAI and Google on key metrics like speed, speech recognition, and conversation quality. Nova Sonic is available through the Amazon Bedrock developer platform, uses a new bidirectional streaming API, and is priced about 80% cheaper than GPT-4o, aiming to provide cost-effective natural voice interaction capabilities for enterprise AI applications (Source: InfoQ)

Apple’s China Version iPhone AI Features May Launch Mid-Year, Integrating Baidu and Alibaba Technologies: Reports indicate Apple plans to introduce Apple Intelligence services for iPhones in the Chinese market (possibly in iOS 18.5) before mid-2025. The feature will utilize Baidu’s ERNIE large model to provide intelligent capabilities and integrate Alibaba’s censorship engine to comply with content regulation requirements. Apple has not signed exclusive agreements with Baidu or Alibaba, indicating its strategy of localized cooperation in key markets to rapidly deploy AI features (Source: InfoQ)

🧰 Tools

Volcano Engine Releases Enterprise Data Agent: Volcano Engine launched the enterprise-level Data Agent. This tool leverages the reasoning, analysis, and tool-calling capabilities of large models, aiming to deeply understand enterprise business needs and automate complex data analysis and application tasks such as writing in-depth research reports and designing marketing campaigns, enhancing enterprise data utilization efficiency and decision-making levels (Source: InfoQ)

GPT-4o Image Generation’s New Styles Gain Attention: Social media users are showcasing new styles created using GPT-4o’s image generation capabilities, for example, combining Windows 2000 retro interface elements with character images to create unique collage effects. Users shared prompt techniques, such as using reference images for guidance and combining style with content descriptions, sparking community interest in exploring GPT-4o’s creative potential (Source: source, source)

📚 Learning

Largest Open-Source Math Pre-training Dataset MegaMath Released: LLM360 launched MegaMath, an open-source math reasoning pre-training dataset containing 371 billion tokens, surpassing the scale of DeepSeek-Math Corpus. The dataset covers math-intensive web pages (279B), math-related code (28B), and high-quality synthetic data (64B). The team used a refined data processing pipeline, including HTML structure optimization, two-stage extraction, LLM-assisted filtering and refinement, ensuring the dataset’s scale, quality, and diversity. Pre-training validation on the Llama-3.2 model shows that using MegaMath can bring an absolute improvement of 15-20% on benchmarks like GSM8K and MATH, providing the open-source community with a powerful foundation for training mathematical reasoning capabilities (Source: Jiqizhixin)
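The component sizes quoted above add up to the 371B-token headline figure. A short check of the composition, using only the numbers in the article (the dictionary keys are informal labels, not official subset names):

```python
# Token counts in billions, as quoted in the article.
megamath_tokens_b = {
    "math-intensive web pages": 279,
    "math-related code": 28,
    "synthetic data": 64,
}

total_tokens_b = sum(megamath_tokens_b.values())
print(f"Total: {total_tokens_b}B tokens")

# Share of each component in the full dataset.
shares = {name: count / total_tokens_b for name, count in megamath_tokens_b.items()}
for name, share in shares.items():
    print(f"{name}: {share:.1%}")
```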

Nabla-GFlowNet: Balancing Diversity and Efficiency in Diffusion Model Fine-tuning: Researchers from CUHK (Shenzhen) and other institutions proposed Nabla-GFlowNet, a new diffusion model reward fine-tuning method based on Generative Flow Networks (GFlowNet). It aims to address the slow convergence of traditional RL fine-tuning and the issues of overfitting and loss of diversity in direct reward optimization. By deriving a new flow balance condition (Nabla-DB) and designing specific loss functions and log-flow gradient parameterization, Nabla-GFlowNet can efficiently align the model with reward functions (such as aesthetic scores, instruction following) while maintaining the diversity of generated samples. Experiments on Stable Diffusion demonstrate its advantages over methods like DDPO, ReFL, and DRaFT (Source: Jiqizhixin)
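For background, the standard GFlowNet detailed balance (DB) condition, which flow-balance objectives of this kind build on, can be written as follows. Here $F$ is the state flow, $P_F$ the forward policy, and $P_B$ the backward policy for a transition $s \to s'$. This is the textbook condition only; the paper's Nabla-DB variant and its loss functions are not reproduced here.

$$
F(s)\, P_F(s' \mid s) = F(s')\, P_B(s \mid s')
$$

Training typically minimizes the squared difference between the logarithms of the two sides, so that terminal states end up sampled in proportion to their reward.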

Llama.cpp Fixes Llama 4 Related Issues: The llama.cpp project merged two fixes for Llama 4 models, involving RoPE (Rotary Position Embedding) and incorrect norm calculations. These fixes aim to improve model output quality, but users may need to re-download GGUF model files generated with the updated conversion tools for the fixes to take effect (Source: source)

💼 Business

Nvidia Completes Acquisition of Lepton AI: Nvidia has reportedly acquired Lepton AI, an AI infrastructure startup founded by former Alibaba VP Yangqing Jia, with the deal value potentially reaching hundreds of millions of dollars. Lepton AI’s main business is renting out Nvidia GPU servers and providing software to help enterprises build and manage AI applications. Yangqing Jia, co-founder Junjie Bai, and about 20 other employees have joined Nvidia. This move is seen as a strategic deployment by Nvidia to expand into cloud services and enterprise software markets, countering competition from self-developed chips by AWS, Google Cloud, etc. (Source: InfoQ)

Anxiety Pervades US Tech Sector as AI Impacts Job Market: Reports indicate the US tech industry is facing job cuts, shrinking salaries, and longer job search cycles. Mass layoffs, along with companies (like Salesforce, Meta, Google) using AI to replace human labor or pausing hiring (especially for engineering and entry-level positions), are exacerbating career anxiety among professionals. Data shows a rising share of people reporting salary decreases or moving from management to individual contributor roles. AI is reshaping the job market, forcing job seekers to broaden their horizons to non-tech industries or turn to entrepreneurship. Experts advise looking for job opportunities beyond the ‘Big Seven’ and mastering AI tools to enhance competitiveness (Source: InfoQ)

Rumor: OpenAI Considers Acquiring AI Hardware Company Co-founded by Altman and Jony Ive: Reports suggest OpenAI is in talks to acquire io Products, the AI company co-founded by its CEO Sam Altman and former Apple design chief Jony Ive, for no less than $500 million. The company aims to develop AI-driven personal devices, with possible forms including screenless ‘phones’ or home devices. io Products has an engineering team building the devices, OpenAI provides the technology, Ive’s studio handles design, and Altman is deeply involved. If the acquisition completes, it would integrate the hardware team into OpenAI, accelerating its expansion in the AI hardware sector (Source: InfoQ)

Former OpenAI CTO’s Startup Hires Again from Old Employer: The AI company ‘Thinking Machines Lab’ founded by former OpenAI CTO Mira Murati has attracted two key former OpenAI figures to its advisory team: former Chief Research Officer Bob McGrew and former researcher Alec Radford. Radford was the lead author on core technical papers for the GPT series. This recruitment further strengthens the startup’s technical capabilities and reflects the intense talent competition in the AI field (Source: InfoQ)

Baichuan Intelligence Adjusts Business Focus to Healthcare: Baichuan Intelligence founder Wang Xiaochuan issued an all-hands letter on the company’s second anniversary, reiterating the company will focus on the healthcare sector, developing application services like Baixiaoying, AI Pediatrics, AI General Practice, and Precision Medicine. He emphasized the need to reduce redundant actions and flatten the organizational structure. Previously, the company reportedly laid off its B2B team for the financial industry, business partner Deng Jiang departed, and several other co-founders have left or are about to leave, indicating the company is undergoing strategic refocusing and organizational adjustments (Source: InfoQ)

Alibaba Cloud Launches “Blossoms” Plan for AI Ecosystem Partners: Alibaba Cloud announced the “Blossoms” (Fan Hua) plan, aimed at supporting AI ecosystem partners. The plan will provide cloud resources, computing power support, product packaging, commercialization planning, and full lifecycle services based on partner product maturity. Additionally, Alibaba Cloud launched an AI Application and Service Market, aiming to build a prosperous AI ecosystem and accelerate the implementation of AI technology and applications (Source: InfoQ)

Kugou Music Reaches Deep Cooperation with DeepSeek: Kugou Music announced a partnership with AI company DeepSeek to launch a series of innovative AI features. These include generating personalized listening reports using multimodal analysis, AI daily recommendations, smart search, AI playlist management, AI-generated dynamic covers, and an ‘AI Commentator’ with character settings, aiming to enhance user music experience and community interaction through AI technology (Source: InfoQ)

Rumor: Google Uses ‘Aggressive’ Non-Compete Agreements to Retain AI Talent: Reports claim Google’s DeepMind is implementing one-year non-compete agreements for some UK employees to prevent talent from moving to competitors. During this period, employees do not need to work and still receive their salary (effectively paid leave), but the arrangement leaves some researchers feeling marginalized and unable to participate in the rapidly evolving industry. Such a practice might be banned by the FTC in the US but can be applied at the London headquarters, sparking discussions about talent competition and restrictions on innovation (Source: InfoQ)

Former OpenAI Employees File Legal Document Supporting Musk’s Lawsuit: 12 former OpenAI employees have filed a legal document supporting Elon Musk’s lawsuit against OpenAI. They argue that OpenAI’s restructuring plan (shifting to a for-profit structure) may fundamentally violate the company’s original non-profit mission, a mission that was a key factor in attracting them to join. OpenAI responded that its mission will not change, even with the structural shift (Source: InfoQ)

🌟 Community

Anthropic Study Reveals AI Application Patterns and Challenges in Higher Education: Anthropic analyzed millions of anonymized student conversations on the Claude.ai platform, finding that STEM (especially computer science) students are early adopters of AI. Student interaction patterns with AI include four types with roughly equal distribution: direct problem-solving, direct content generation, collaborative problem-solving, and collaborative content generation. AI is primarily used for higher-order cognitive tasks like creation (e.g., programming, writing exercises) and analysis (e.g., explaining concepts). The study also reveals potential academic misconduct (e.g., obtaining answers, circumventing plagiarism detection), raising concerns about academic integrity, critical thinking development, and assessment methods (Source: AI Era)

GPT-4o Image Generation Sparks New Trends: From Ghibli Style to AI Celebrity Cards: GPT-4o’s powerful image generation capabilities continue to fuel a creative frenzy on social media. Following the viral ‘Ghibli-style family portraits’ (driven by former Amazon engineer Grant Slatton), users are now creating ‘Magic: The Gathering’-style cards featuring AI celebrities (e.g., Altman depicted as ‘AGI Overlord’) and personalized tarot cards. These examples showcase AI’s potential in artistic style imitation and creative generation but also spark discussions about originality, copyright, aesthetic value, and the impact of AI on designers’ careers (Source: AI Era)

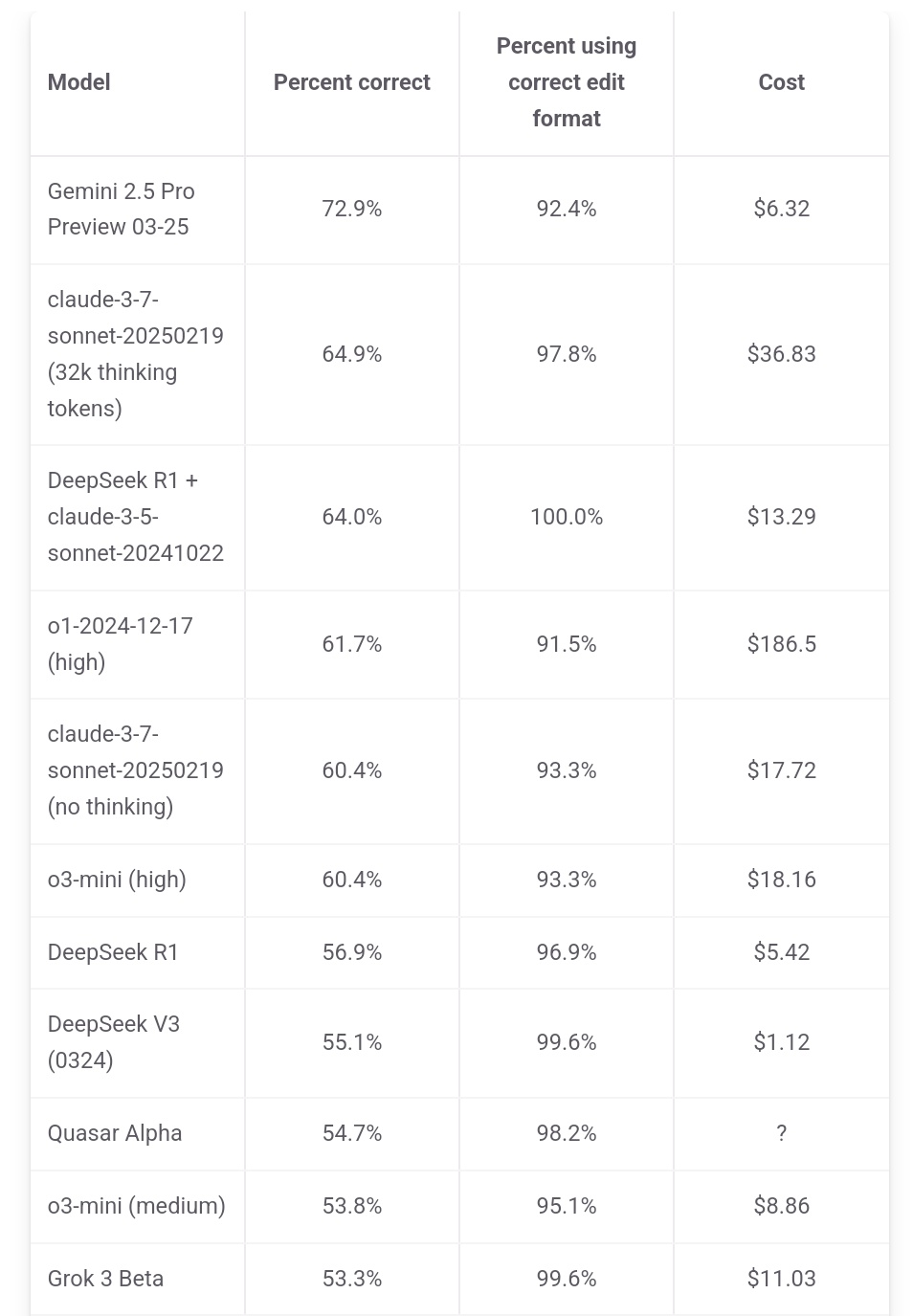

Jeff Dean Highlights Gemini 2.5 Pro Cost Advantage: Google AI lead Jeff Dean retweeted leaderboard data from aider.chat, pointing out that Gemini 2.5 Pro not only leads in performance on the Polyglot programming benchmark but its cost ($6) is significantly lower than other Top 10 models except DeepSeek, emphasizing its cost-effectiveness advantage. Some competing models cost 2x, 3x, or even 30x more than Gemini 2.5 Pro (Source: JeffDean)

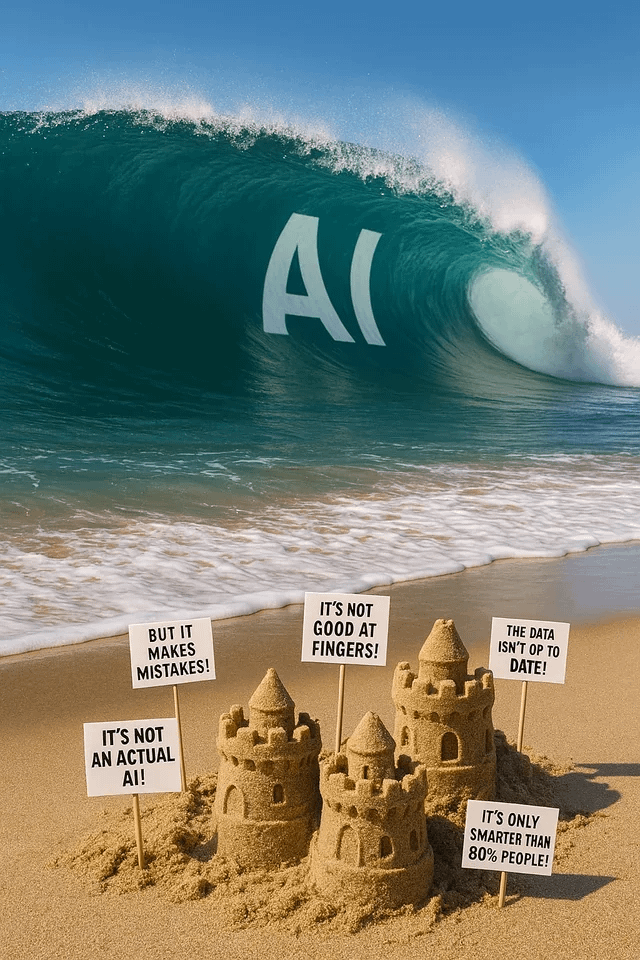

Reddit Discusses AI’s Impact on Job Market, Especially Entry-Level Roles: A Reddit forum post sparked heated discussion. The poster (a CIS master’s student) expressed deep concern about AI replacing entry-level non-manual jobs (especially software engineering, data analysis, IT support), arguing that the narrative ‘AI won’t take jobs’ ignores the plight of new graduates. He noted that large companies are already reducing campus recruitment, suggesting a potentially grim future job market. Comments showed divided opinions: some agreed with the crisis, others saw it as a normal part of technological change requiring adaptation to new roles (like managing AI teams), and some questioned the ‘90% of jobs disappearing’ claim, citing economic cycles, national differences, and AI’s current limitations (Source: source)

Claude Users Complain About Performance Decline and Tighter Restrictions: Concentrated discussion emerged on the Reddit r/ClaudeAI subreddit. Multiple users (including Pro subscribers) reported encountering stricter usage limits (quotas) recently, frequently hitting the cap even with normal usage. Some users believe Anthropic is secretly tightening quotas and expressed dissatisfaction, believing this will push users towards competitors. Additionally, some users reported that Claude’s ‘personality’ seems to have changed, becoming more ‘cold’ and ‘robotic’, losing the philosophical and poetic feel of earlier versions, leading some users to cancel their subscriptions (Source: source, source, source, source)

ChatGPT Image Generation Sparks Fun and Discussion: Reddit users shared various attempts and results using ChatGPT for image generation. One user asked to ‘transform’ a dog into a human, resulting in ‘anthro/furry’-like images, sparking discussion about prompt understanding and potential biases. Another user asked to be drawn as a multiverse version in stained glass, with stunning results. Other users requested metaphorical images about AI or asked about AI’s ‘nightmares’, showcasing the capabilities and limitations of AI image generation in creative expression and visualizing abstract concepts (Source: source, source, source, source, source)

Community Discusses LLM Selection and Usage Strategies: A user on the Reddit r/LocalLLaMA subreddit proposed a monthly discussion on model usage, in which participants share the best models (open-source and closed-source) they use for different scenarios (coding, writing, research, etc.) and their reasons. Users in the comments shared their current model combinations, such as DeepSeek V3.1/Gemini 2.5 Pro/4o/R1/Qwen 2.5 Max/Sonnet 3.7/Gemma 3/Claude 3.7/Mistral Nemo, etc., mentioning specific uses (like tool calling, classification, role-playing), reflecting the practical trend of users selecting and combining different models based on task requirements (Source: source)

💡 Other

China AIGC Industry Summit Upcoming: The 3rd China AIGC Industry Summit will be held in Beijing on April 16th. The summit will gather over 20 industry leaders from companies like Baidu, Huawei, Microsoft Research Asia, AWS, ModelBest, ShengShu Technology, etc., to discuss topics such as AI technological breakthroughs (compute power, large models), industry applications (education, entertainment, research, enterprise services), and ecosystem building (security, control, implementation challenges). The summit will also release AIGC enterprise/product rankings and a panoramic map of China’s AIGC applications (Source: QbitAI)

Stanford Report: Performance Gap Between Top US and Chinese AI Models Narrows to 0.3%: Stanford University’s 2025 AI Index Report shows the performance gap between top US and Chinese AI models has narrowed sharply, from 20% in 2023 to 0.3%. Although the US still leads in the number of notable models (40 vs 15) and industry-leading companies, Chinese models are catching up quickly. The report also notes that the performance gap among the very top models is shrinking, down from 12% in 2024 to 5%, indicating a clear trend of convergence (Source: InfoQ)